PLearn 0.1


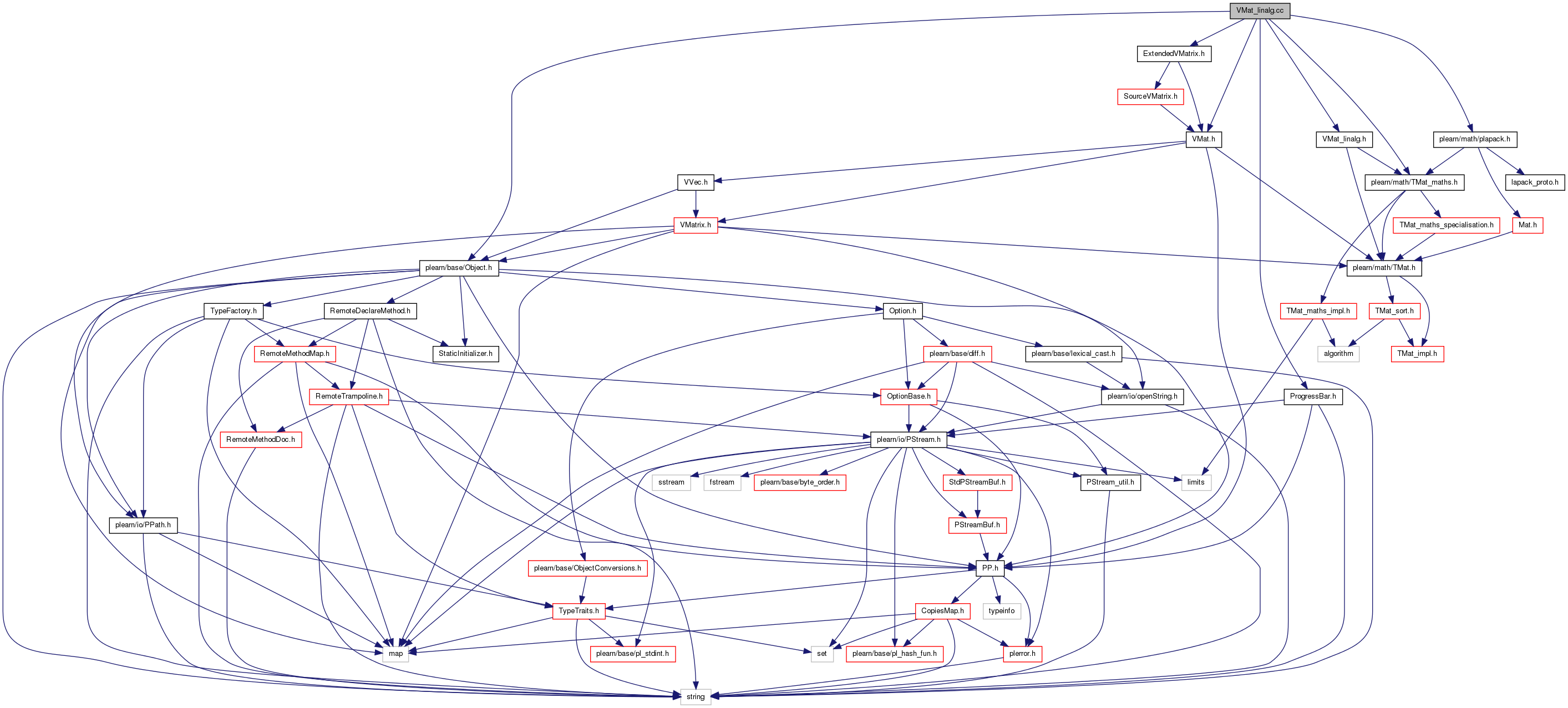

`#include <plearn/base/Object.h>`

`#include <plearn/base/ProgressBar.h>`

`#include "VMat_linalg.h"`

`#include <plearn/math/TMat_maths.h>`

`#include "VMat.h"`

`#include "ExtendedVMatrix.h"`

`#include <plearn/math/plapack.h>`


Namespaces | |

| namespace | PLearn |

| for swap | |

Functions | |

| Mat | PLearn::transposeProduct (VMat m) |

| computes M'.M | |

| Mat | PLearn::transposeProduct (VMat m1, VMat m2) |

| computes M1'.M2 | |

| Vec | PLearn::transposeProduct (VMat m1, Vec v2) |

| computes M1'.V2 | |

| Mat | PLearn::productTranspose (VMat m1, VMat m2) |

| computes M1.M2' | |

| Mat | PLearn::product (Mat m1, VMat m2) |

| computes M1.M2 | |

| VMat | PLearn::transpose (VMat m1) |

| returns M1' | |

| real | PLearn::linearRegression (VMat inputs, VMat outputs, real weight_decay, Mat theta_t, bool use_precomputed_XtX_XtY, Mat XtX, Mat XtY, real &sum_squared_Y, Vec &outputwise_sum_squared_Y, bool return_squared_loss, int verbose_every, bool cholesky, int apply_decay_from) |

| Mat | PLearn::linearRegression (VMat inputs, VMat outputs, real weight_decay, bool include_bias=false) |

| Version that does all the memory allocations of XtX, XtY and theta_t. | |

| real | PLearn::weightedLinearRegression (VMat inputs, VMat outputs, VMat gammas, real weight_decay, Mat theta_t, bool use_precomputed_XtX_XtY, Mat XtX, Mat XtY, real &sum_squared_Y, Vec &outputwise_sum_squared_Y, real &sum_gammas, bool return_squared_loss=false, int verbose_computation_every=0, bool cholesky=true, int apply_decay_from=1) |

| Linear regression where each input point is given a different importance weight (the gammas). Returns the weighted average of the squared loss. This regression is performed with no added bias. | |

| Mat | PLearn::weightedLinearRegression (VMat inputs, VMat outputs, VMat gammas, real weight_decay, bool include_bias) |

| Version that does all the memory allocations of XtX, XtY and theta_t. | |
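As an illustration of what `transposeProduct (VMat m)` is documented to compute (M'.M), here is a minimal standalone sketch. It does not use PLearn: `Matrix` and `transposeProductSketch` are hypothetical names introduced for this example, and the row-by-row accumulation is an assumption about how such a product can be formed when rows are visited one at a time, not a description of the code in VMat_linalg.cc.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a dense matrix; this is not PLearn's Mat or VMat.
using Matrix = std::vector<std::vector<double>>;

// Computes M'.M by adding the outer product of each row with itself,
// so only one row of M has to be looked at per step.
Matrix transposeProductSketch(const Matrix& m)
{
    const std::size_t width = m.empty() ? 0 : m[0].size();
    Matrix result(width, std::vector<double>(width, 0.0));
    for (const std::vector<double>& row : m)
        for (std::size_t i = 0; i < width; ++i)
            for (std::size_t j = 0; j < width; ++j)
                result[i][j] += row[i] * row[j];
    return result;
}
```

The result is always a square, symmetric matrix whose side equals the number of columns of M, regardless of how many rows M has.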

Definition in file VMat_linalg.cc.
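The regression functions listed above take a `weight_decay`, optional per-example `gammas`, and a `cholesky` flag. The sketch below shows the standard weighted ridge-regression normal equations that such parameters usually correspond to, (X'.G.X + weight_decay.I).theta = X'.G.y with G = diag(gammas), solved by a Cholesky factorization. It is a standalone illustration under stated assumptions: it handles a single output, adds no bias, applies the decay to every coefficient, and returns only theta. `weightedRidgeSketch` and `choleskySolveSketch` are hypothetical names, and PLearn's actual handling of `apply_decay_from`, `theta_t`, and the returned loss is not modeled here.

```cpp
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-ins; these are not PLearn's Mat and Vec.
using Matrix = std::vector<std::vector<double>>;
using Vector = std::vector<double>;

// Solves A.x = b for a symmetric positive definite A using A = L.L'.
// Only the lower triangle of a is read and overwritten with L.
Vector choleskySolveSketch(Matrix a, Vector b)
{
    const std::size_t n = a.size();
    for (std::size_t j = 0; j < n; ++j) {
        for (std::size_t k = 0; k < j; ++k)
            a[j][j] -= a[j][k] * a[j][k];
        if (a[j][j] <= 0.0)
            throw std::runtime_error("matrix is not positive definite");
        a[j][j] = std::sqrt(a[j][j]);
        for (std::size_t i = j + 1; i < n; ++i) {
            for (std::size_t k = 0; k < j; ++k)
                a[i][j] -= a[i][k] * a[j][k];
            a[i][j] /= a[j][j];
        }
    }
    // Forward substitution: L.y = b (y stored in b).
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t k = 0; k < i; ++k)
            b[i] -= a[i][k] * b[k];
        b[i] /= a[i][i];
    }
    // Back substitution: L'.x = y (x stored in b).
    for (std::size_t i = n; i-- > 0;) {
        for (std::size_t k = i + 1; k < n; ++k)
            b[i] -= a[k][i] * b[k];
        b[i] /= a[i][i];
    }
    return b;
}

// Weighted ridge regression for one output and no bias term.
// Accumulates X'.G.X and X'.G.y one example at a time, adds the decay
// to the diagonal, then solves for theta.
Vector weightedRidgeSketch(const Matrix& x, const Vector& y,
                           const Vector& gammas, double weight_decay)
{
    const std::size_t d = x.empty() ? 0 : x[0].size();
    Matrix xtx(d, Vector(d, 0.0));
    Vector xty(d, 0.0);
    for (std::size_t t = 0; t < x.size(); ++t) {
        for (std::size_t i = 0; i < d; ++i) {
            xty[i] += gammas[t] * x[t][i] * y[t];
            for (std::size_t j = 0; j < d; ++j)
                xtx[i][j] += gammas[t] * x[t][i] * x[t][j];
        }
    }
    for (std::size_t i = 0; i < d; ++i)
        xtx[i][i] += weight_decay;
    return choleskySolveSketch(xtx, xty);
}
```

Setting every gamma to 1 reduces this to the unweighted case, and a `weight_decay` of 0 gives ordinary least squares, provided X'.G.X is positive definite.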

1.7.4
