PLearn 0.1

Namespaces | |

| namespace | PLearn |

Functions | |

| void | PLearn::lapack_Xsyevx_ (char *JOBZ, char *RANGE, char *UPLO, int *N, double *A, int *LDA, double *VL, double *VU, int *IL, int *IU, double *ABSTOL, int *M, double *W, double *Z, int *LDZ, double *WORK, int *LWORK, int *IWORK, int *IFAIL, int *INFO) |

| void | PLearn::lapack_Xsyevx_ (char *JOBZ, char *RANGE, char *UPLO, int *N, float *A, int *LDA, float *VL, float *VU, int *IL, int *IU, float *ABSTOL, int *M, float *W, float *Z, int *LDZ, float *WORK, int *LWORK, int *IWORK, int *IFAIL, int *INFO) |

| void | PLearn::lapack_Xgesdd_ (char *JOBZ, int *M, int *N, double *A, int *LDA, double *S, double *U, int *LDU, double *VT, int *LDVT, double *WORK, int *LWORK, int *IWORK, int *INFO) |

| void | PLearn::lapack_Xgesdd_ (char *JOBZ, int *M, int *N, float *A, int *LDA, float *S, float *U, int *LDU, float *VT, int *LDVT, float *WORK, int *LWORK, int *IWORK, int *INFO) |

| void | PLearn::lapack_Xsyevr_ (char *JOBZ, char *RANGE, char *UPLO, int *N, float *A, int *LDA, float *VL, float *VU, int *IL, int *IU, float *ABSTOL, int *M, float *W, float *Z, int *LDZ, int *ISUPPZ, float *WORK, int *LWORK, int *IWORK, int *LIWORK, int *INFO) |

| void | PLearn::lapack_Xsyevr_ (char *JOBZ, char *RANGE, char *UPLO, int *N, double *A, int *LDA, double *VL, double *VU, int *IL, int *IU, double *ABSTOL, int *M, double *W, double *Z, int *LDZ, int *ISUPPZ, double *WORK, int *LWORK, int *IWORK, int *LIWORK, int *INFO) |

| void | PLearn::lapack_Xsygvx_ (int *ITYPE, char *JOBZ, char *RANGE, char *UPLO, int *N, double *A, int *LDA, double *B, int *LDB, double *VL, double *VU, int *IL, int *IU, double *ABSTOL, int *M, double *W, double *Z, int *LDZ, double *WORK, int *LWORK, int *IWORK, int *IFAIL, int *INFO) |

| void | PLearn::lapack_Xsygvx_ (int *ITYPE, char *JOBZ, char *RANGE, char *UPLO, int *N, float *A, int *LDA, float *B, int *LDB, float *VL, float *VU, int *IL, int *IU, float *ABSTOL, int *M, float *W, float *Z, int *LDZ, float *WORK, int *LWORK, int *IWORK, int *IFAIL, int *INFO) |

| void | PLearn::lapack_Xpotrf_ (char *UPLO, int *N, float *A, int *LDA, int *INFO) |

| void | PLearn::lapack_Xpotrf_ (char *UPLO, int *N, double *A, int *LDA, int *INFO) |

| void | PLearn::lapack_Xpotrs_ (char *UPLO, int *N, int *NRHS, float *A, int *LDA, float *B, int *LDB, int *INFO) |

| void | PLearn::lapack_Xpotrs_ (char *UPLO, int *N, int *NRHS, double *A, int *LDA, double *B, int *LDB, int *INFO) |

| void | PLearn::lapack_Xposvx_ (char *FACT, char *UPLO, int *N, int *NRHS, float *A, int *LDA, float *AF, int *LDAF, char *EQUED, float *S, float *B, int *LDB, float *X, int *LDX, float *RCOND, float *FERR, float *BERR, float *WORK, int *IWORK, int *INFO) |

| void | PLearn::lapack_Xposvx_ (char *FACT, char *UPLO, int *N, int *NRHS, double *A, int *LDA, double *AF, int *LDAF, char *EQUED, double *S, double *B, int *LDB, double *X, int *LDX, double *RCOND, double *FERR, double *BERR, double *WORK, int *IWORK, int *INFO) |

| template<class num_t > | |

| void | PLearn::lapackEIGEN (const TMat< num_t > &A, TVec< num_t > &eigenvals, TMat< num_t > &eigenvecs, char RANGE='A', num_t low=0, num_t high=0, num_t ABSTOL=0) |

| Computes the eigenvalues and eigenvectors of a symmetric (NxN) matrix A. | |

| template<class num_t > | |

| void | PLearn::lapackGeneralizedEIGEN (const TMat< num_t > &A, const TMat< num_t > &B, int ITYPE, TVec< num_t > &eigenvals, TMat< num_t > &eigenvecs, char RANGE='A', num_t low=0, num_t high=0, num_t ABSTOL=0) |

| Computes the eigenvalues and eigenvectors of a real generalized symmetric-definite eigenproblem, of the form A*x=(lambda)*B*x, A*B*x=(lambda)*x, or B*A*x=(lambda)*x. A and B are assumed to be symmetric and B is also positive definite. | |

| template<class num_t > | |

| void | PLearn::eigenVecOfSymmMat (TMat< num_t > &m, int k, TVec< num_t > &eigen_values, TMat< num_t > &eigen_vectors, bool verbose=true) |

| Computes up to k largest eigen_values and corresponding eigen_vectors of symmetric matrix m. | |

| template<class num_t > | |

| void | PLearn::generalizedEigenVecOfSymmMat (TMat< num_t > &m1, TMat< num_t > &m2, int itype, int k, TVec< num_t > &eigen_values, TMat< num_t > &eigen_vectors) |

| Computes up to k largest eigen_values and corresponding eigen_vectors of a real generalized symmetric-definite eigenproblem, of the form m1*x=(lambda)*m2*x (itype = 1), m1*m2*x=(lambda)*x (itype = 2) or m2*m1*x=(lambda)*x (itype = 3). m1 and m2 are assumed to be symmetric and m2 is also positive definite. | |

| template<class num_t > | |

| void | PLearn::lapackSVD (const TMat< num_t > &At, TMat< num_t > &Ut, TVec< num_t > &S, TMat< num_t > &V, char JOBZ='A', real safeguard=1) |

| template<class num_t > | |

| void | PLearn::SVD (const TMat< num_t > &A, TMat< num_t > &U, TVec< num_t > &S, TMat< num_t > &Vt, char JOBZ='A', real safeguard=1) |

| Performs the singular value decomposition A = U.S.Vt, where U and Vt are orthonormal matrices. | |

| int | PLearn::eigen_SymmMat (Mat &in, Vec &e_value, Mat &e_vector, int &n_evalues_found, bool compute_all, int nb_eigen, bool compute_vectors, bool largest_evalues) |

| int | PLearn::eigen_SymmMat_decreasing (Mat &in, Vec &e_value, Mat &e_vector, int &n_evalues_found, bool compute_all, int nb_eigen, bool compute_vectors=true, bool largest_evalues=true) |

| Same as the previous call, but eigenvalues/vectors are sorted largest first (in decreasing order). | |

| int | PLearn::matInvert (Mat &in, Mat &inverse) |

| This function computes the inverse of a matrix. | |

| Mat | PLearn::multivariate_normal (const Vec &mu, const Mat &A, int N) |

| generate N vectors sampled from the normal with mean vector mu and covariance matrix A | |

| Vec | PLearn::multivariate_normal (const Vec &mu, const Mat &A) |

| generate a vector sampled from the normal with mean vector mu and covariance matrix A | |

| Vec | PLearn::multivariate_normal (const Vec &mu, const Vec &e_values, const Mat &e_vectors) |

| generate 1 vector sampled from the normal with mean mu and covariance matrix A = e_vectors * diagonal(e_values) * e_vectors' | |

| void | PLearn::multivariate_normal (Vec &x, const Vec &mu, const Vec &e_values, const Mat &e_vectors, Vec &z) |

| generate a vector x sampled from the normal with mean mu and covariance matrix A = e_vectors * diagonal(e_values) * e_vectors' (the normal(0,I) originally sampled to obtain x is stored in z). | |

| int | PLearn::lapackSolveLinearSystem (Mat &At, Mat &Bt, TVec< int > &pivots) |

| Mat | PLearn::solveLinearSystem (const Mat &A, const Mat &B) |

| Vec | PLearn::solveLinearSystem (const Mat &A, const Vec &b) |

| Returns solution x of Ax = b (same as above, except b and x are vectors) | |

| void | PLearn::solveLinearSystem (const Mat &A, const Mat &Y, Mat &X) |

| for matrices A such that A.length() <= A.width(), find X s.t. | |

| void | PLearn::solveTransposeLinearSystem (const Mat &A, const Mat &Y, Mat &X) |

| for matrices A such that A.length() >= A.width(), find X s.t. | |

| Vec | PLearn::constrainedLinearRegression (const Mat &Xt, const Vec &Y, real lambda) |

| void | PLearn::lapackCholeskyDecompositionInPlace (Mat &A, char uplo='L') |

| Call LAPACK to perform in-place Cholesky Decomposition of a square SYMMETRIC matrix A. | |

| void | PLearn::lapackCholeskySolveInPlace (Mat &A, Mat &B, bool B_is_column_major=false, char uplo='L') |

| Call LAPACK to solve in-place a linear system given its previously-computed Cholesky decomposition. | |

| real | PLearn::GCV (Mat X, Mat Y, real weight_decay, bool X_is_transposed, Mat *W) |

| Compute the generalization error estimator called Generalized Cross-Validation (Craven & Wahba 1979), and the corresponding ridge regression weights in min ||Y - X*W'||^2 + weight_decay ||W||^2. | |

| real | PLearn::GCVfromSVD (real n, real Y2minusZ2, Vec Z, Vec s) |

| Generalization error estimator called Generalized Cross-Validation (Craven & Wahba 1979), computed from the SVD of the input matrix X in the ridge regression. | |

| real | PLearn::ridgeRegressionByGCV (Mat X, Mat Y, Mat W, real &best_GCV, bool X_is_transposed=false, real initial_weight_decay_guess=-1, int explore_threshold=5, real min_weight_decay=0) |

| Perform ridge regression WITH model selection (i.e. | |

| real | PLearn::weightedRidgeRegressionByGCV (Mat X, Mat Y, Vec gamma, Mat W, real &best_gcv, real min_weight_decay=0) |

| Similar to ridgeRegressionByGCV, but with support for sample weights gamma. | |

| void | PLearn::affineNormalization (Mat data, Mat W, Vec bias, real regularizer) |

| Vec | PLearn::closestPointOnHyperplane (const Vec &x, const Mat &points, real weight_decay=0.) |

| closest point to x on hyperplane that passes through all points (with weight decay) | |

| real | PLearn::hyperplaneDistance (const Vec &x, const Mat &points, real weight_decay=0.) |

| Distance between point x and closest point on hyperplane that passes through all points. | |

| template<class MatT > | |

| void | PLearn::diagonalizeSubspace (MatT &A, Mat &X, Vec &Ax, Mat &solutions, Vec &evalues, Mat &evectors) |
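The two Cholesky entries listed above (lapackCholeskyDecompositionInPlace and lapackCholeskySolveInPlace) delegate to LAPACK. As a rough illustration of the underlying math only, the following minimal sketch factors a small symmetric positive-definite matrix as A = L * L^T in place and then solves A x = b by forward and back substitution. It is not PLearn's implementation: the helper names `choleskyLowerInPlace` and `choleskySolveInPlace`, the row-major `std::vector<double>` storage, and the single right-hand side are all assumptions made for this example.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn): overwrite the lower triangle of
// the n x n row-major symmetric matrix `a` with its Cholesky factor L, so
// that A = L * L^T. Only the lower triangle of `a` is read. Returns false if
// a non-positive pivot shows the matrix is not positive definite.
bool choleskyLowerInPlace(std::vector<double>& a, std::size_t n)
{
    for (std::size_t j = 0; j < n; ++j) {
        // Diagonal entry: L(j,j) = sqrt(A(j,j) - sum_k L(j,k)^2).
        double d = a[j * n + j];
        for (std::size_t k = 0; k < j; ++k)
            d -= a[j * n + k] * a[j * n + k];
        if (d <= 0.0)
            return false;
        a[j * n + j] = std::sqrt(d);

        // Entries below the diagonal in column j.
        for (std::size_t i = j + 1; i < n; ++i) {
            double s = a[i * n + j];
            for (std::size_t k = 0; k < j; ++k)
                s -= a[i * n + k] * a[j * n + k];
            a[i * n + j] = s / a[j * n + j];
        }
    }
    return true;
}

// Hypothetical helper (not part of PLearn): given the factor L stored in the
// lower triangle of `l`, overwrite `b` with the solution x of (L * L^T) x = b.
void choleskySolveInPlace(const std::vector<double>& l, std::size_t n,
                          std::vector<double>& b)
{
    // Forward substitution: solve L y = b.
    for (std::size_t i = 0; i < n; ++i) {
        double s = b[i];
        for (std::size_t k = 0; k < i; ++k)
            s -= l[i * n + k] * b[k];
        b[i] = s / l[i * n + i];
    }
    // Back substitution: solve L^T x = y, using L^T(i,k) = L(k,i).
    for (std::size_t i = n; i-- > 0;) {
        double s = b[i];
        for (std::size_t k = i + 1; k < n; ++k)
            s -= l[k * n + i] * b[k];
        b[i] = s / l[i * n + i];
    }
}
```

For example, factoring the 2x2 matrix with rows (4, 2) and (2, 3) and then solving with right-hand side (2, 5) yields x = (-0.5, 2). Overwriting the inputs mirrors the "in-place" wording of the entries above, but the storage layout and the handling of the `uplo` and `B_is_column_major` arguments in PLearn itself are not shown here.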

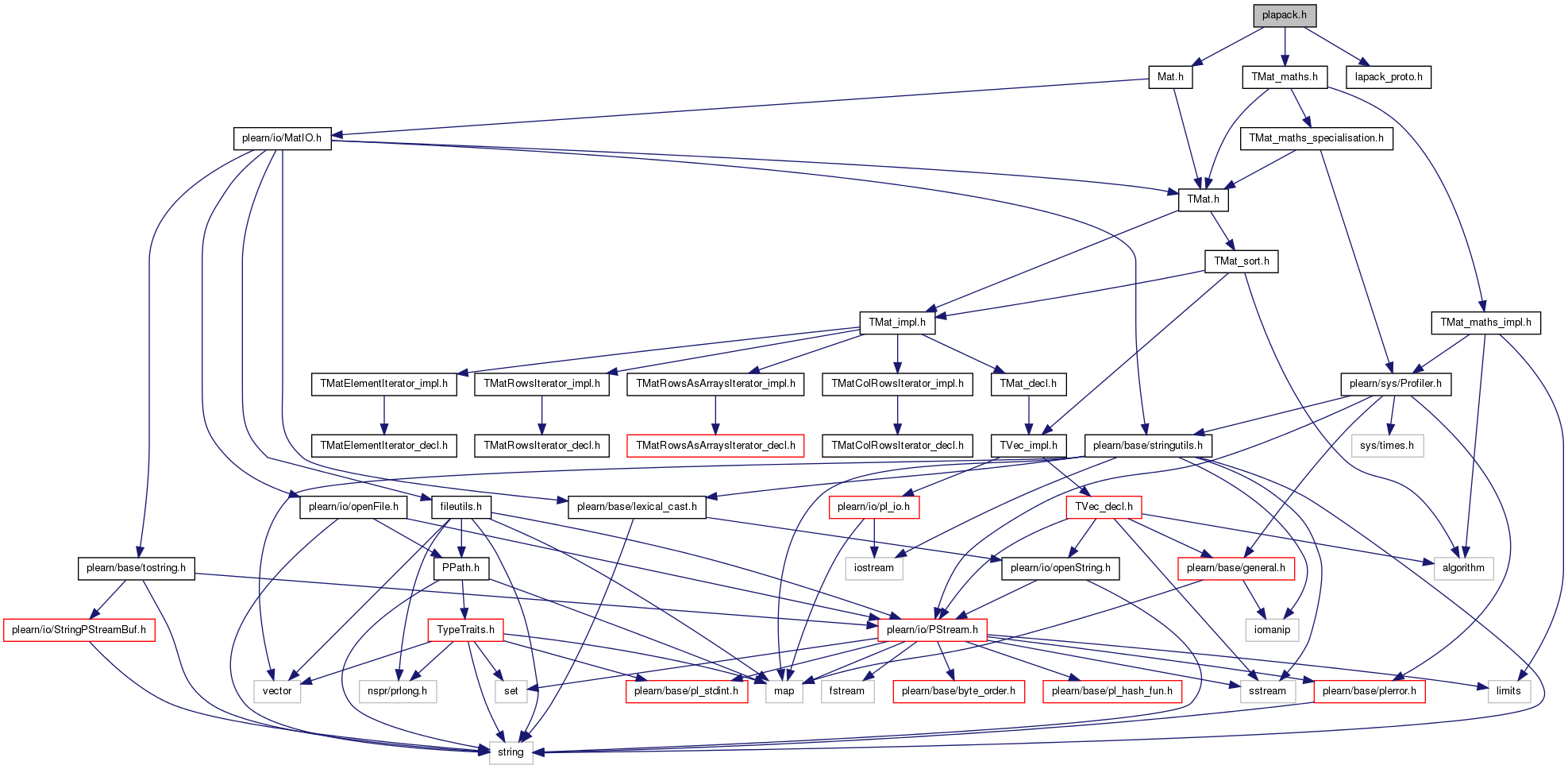

Definition in file plapack.h.
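The eigen-decomposition overloads of multivariate_normal declared in this file describe sampling from a normal with covariance A = e_vectors * diagonal(e_values) * e_vectors', keeping the underlying normal(0,I) draw in z. The sketch below shows one way such a draw can be computed, as x = mu + E * diag(sqrt(e_values)) * z. It is an illustration under stated assumptions rather than PLearn's code: the function names are hypothetical, matrices are row-major `std::vector<double>`, the eigenvectors are taken to be the columns of `e_vectors`, and the eigenvalues are assumed non-negative.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper (not PLearn's API): map a standard-normal draw z to
// x = mu + E * diag(sqrt(e_values)) * z. The columns of the n x n row-major
// matrix e_vectors (E) are assumed to be orthonormal eigenvectors, so the
// covariance of x is E * diag(e_values) * E^T.
std::vector<double> colorStandardNormal(const std::vector<double>& mu,
                                        const std::vector<double>& e_values,
                                        const std::vector<double>& e_vectors,
                                        const std::vector<double>& z)
{
    const std::size_t n = mu.size();
    std::vector<double> x(mu);
    for (std::size_t j = 0; j < n; ++j) {
        // Scale the j-th coordinate of z by the j-th standard deviation.
        const double scale = std::sqrt(e_values[j]) * z[j];
        // Add the scaled j-th eigenvector (column j of E) to x.
        for (std::size_t i = 0; i < n; ++i)
            x[i] += e_vectors[i * n + j] * scale;
    }
    return x;
}

// Hypothetical helper: draw z ~ normal(0, I) with the standard library,
// store it in z, and return the corresponding sample x.
std::vector<double> sampleMultivariateNormal(const std::vector<double>& mu,
                                             const std::vector<double>& e_values,
                                             const std::vector<double>& e_vectors,
                                             std::vector<double>& z,
                                             std::mt19937& rng)
{
    std::normal_distribution<double> standard_normal(0.0, 1.0);
    z.resize(mu.size());
    for (double& zi : z)
        zi = standard_normal(rng);
    return colorStandardNormal(mu, e_values, e_vectors, z);
}
```

Separating the deterministic transform from the random draw makes the mapping easy to check by hand: with mu = (1, 2), e_values = (4, 9), identity eigenvectors and z = (0.5, -1), the result is x = (2, -1). Whether PLearn stores eigenvectors as rows or columns is not stated in this listing, so the column convention above should be checked against the source before reuse.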
