Paper

Don't Just Assume; Look and Answer: Overcoming Priors for Visual Question Answering

Aishwarya Agrawal,

Dhruv Batra,

Devi Parikh,

Aniruddha Kembhavi

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018

[ ArXiv | Camera Ready | Supplementary Material ]

@InProceedings{vqa-cp,
  author = {Aishwarya Agrawal and Dhruv Batra and Devi Parikh and Aniruddha Kembhavi},
  title = {Don't Just Assume; Look and Answer: Overcoming Priors for Visual Question Answering},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2018},
}

Data

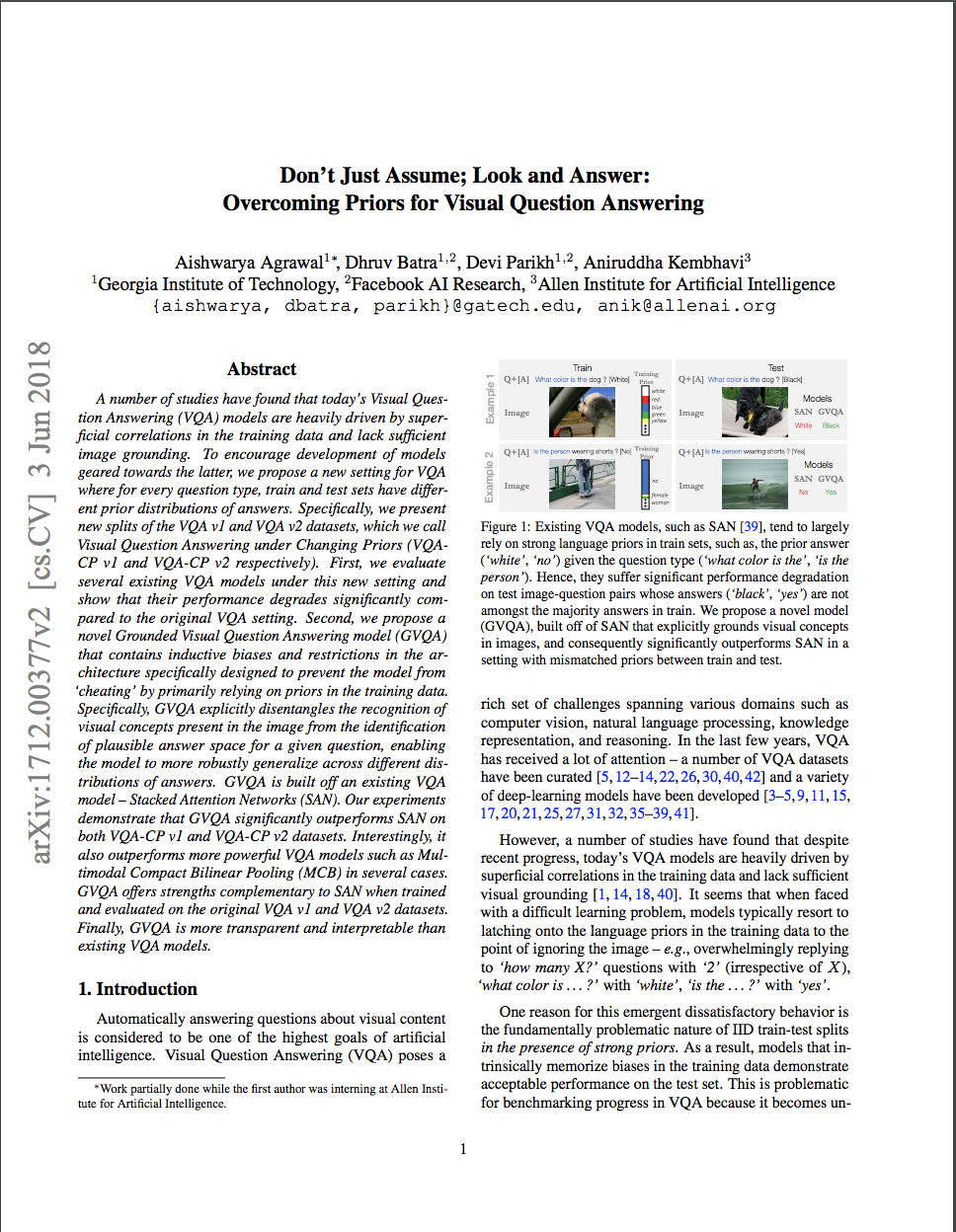

The Visual Question Answering under Changing Priors (VQA-CP) v1 and v2 datasets are created by re-organizing the train and val splits of the VQA v1 and VQA v2 datasets, respectively, so that the distribution of answers per question type (such as "how many" or "what color is") differs by design between the train split and the test split. The VQA-CP v1 and VQA-CP v2 datasets are provided below.
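To make the idea of a changed prior concrete, the sketch below counts answers per question type in two splits and reports the most frequent answer in each. It relies only on the "question_type" and "multiple_choice_answer" fields of the annotation items; the function names and the toy records are invented for illustration and are not taken from the dataset or the paper.

```python
from collections import Counter, defaultdict


def answer_priors(annotations):
    """Map each question_type to a Counter over multiple_choice_answer values."""
    priors = defaultdict(Counter)
    for ann in annotations:
        priors[ann["question_type"]][ann["multiple_choice_answer"]] += 1
    return priors


def top_answer_shift(train_annotations, test_annotations):
    """For every question type present in both splits, return
    (most frequent train answer, most frequent test answer)."""
    train = answer_priors(train_annotations)
    test = answer_priors(test_annotations)
    shift = {}
    for qtype in train.keys() & test.keys():
        train_top = train[qtype].most_common(1)[0][0]
        test_top = test[qtype].most_common(1)[0][0]
        shift[qtype] = (train_top, test_top)
    return shift


# Toy records (hypothetical, not real VQA-CP data).
toy_train = [
    {"question_type": "what color is", "multiple_choice_answer": "white"},
    {"question_type": "what color is", "multiple_choice_answer": "white"},
    {"question_type": "what color is", "multiple_choice_answer": "red"},
]
toy_test = [
    {"question_type": "what color is", "multiple_choice_answer": "black"},
    {"question_type": "what color is", "multiple_choice_answer": "black"},
    {"question_type": "what color is", "multiple_choice_answer": "white"},
]

shift = top_answer_shift(toy_train, toy_test)
```

A model that simply memorizes the most frequent train answer for a question type would be wrong on most test questions of that type in this toy setup, which is the behaviour the re-organized splits are meant to expose.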

VQA-CP Annotations

VQA-CP Input Questions

VQA-CP Input Images

The images in the VQA-CP v1 and v2 datasets (both train and test) come from the training and validation sets of the COCO dataset ("train2014" and "val2014"):

Input Questions Format

The format of the questions is the same as that of the VQA v1 dataset, except that this file contains only the "questions" field and each question item has an additional field, "coco_split", which takes one of two values, "train2014" or "val2014", depending on whether the image is from the COCO train split or val split. More details about each field are provided below.

question{
    "question_id" : int,
    "image_id" : int,
    "coco_split" : str,
    "question" : str
}
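As a rough illustration of how the question items might be consumed, the sketch below loads a questions file and uses "coco_split" together with "image_id" to build the path of the corresponding COCO image. Several things here are assumptions rather than facts from this page: the helper names, the directory layout `<coco_root>/<split>/`, and the handling of the top-level JSON (the loader accepts either a bare list of items or a dict that stores the list under the given field). The file-name pattern follows the usual COCO 2014 convention of a 12-digit zero-padded image id.

```python
import json
from pathlib import Path


def load_items(path, field):
    """Load a VQA-CP JSON file and return its list of items.

    Assumption: the file is either a bare list of items or a dict
    holding the list under `field` (for example "questions").
    """
    with open(path, "r", encoding="utf-8") as f:
        data = json.load(f)
    return data[field] if isinstance(data, dict) else data


def coco_image_path(item, coco_root):
    """Build the image path for a question or annotation item.

    Assumption: images are stored as <coco_root>/<split>/<file name>,
    with COCO 2014 style file names.
    """
    split = item["coco_split"]  # "train2014" or "val2014"
    name = f"COCO_{split}_{item['image_id']:012d}.jpg"
    return Path(coco_root) / split / name
```

Because VQA-CP train and test both mix images from the two COCO splits, the split has to be read per item from "coco_split" rather than inferred from which VQA-CP file the item came from.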

Annotations Format

The format of the annotations is the same as that of the VQA v1 dataset, except that this file contains only the "annotations" field and the annotation item for each question has an additional field, "coco_split", which takes one of two values, "train2014" or "val2014", depending on whether the image is from the COCO train split or val split. More details about each field are provided below.

annotation{
    "question_id" : int,
    "image_id" : int,
    "coco_split" : str,
    "question_type" : str,
    "answer_type" : str,
    "answers" : [answer],
    "multiple_choice_answer" : str
}

answer{
    "answer_id" : int,
    "answer" : str,
    "answer_confidence": str
}

question_type: type of the question, determined by the first few words of the question. For details, please see the README.

answer_type: type of the answer, one of "yes/no", "number", or "other".

multiple_choice_answer: correct multiple choice answer.

answer_confidence: subject's confidence in answering the question. For details, please see the VQA paper.
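Since questions and annotations live in separate files and share "question_id", a common first step is to join them. The sketch below does that on already-loaded lists and also tallies the individual human answers stored under "answers". The function names and the toy records are hypothetical; only the field names come from the formats described above.

```python
from collections import Counter


def join_by_question_id(questions, annotations):
    """Merge each question item with the annotation sharing its question_id.

    Questions without a matching annotation are skipped.
    """
    ann_by_id = {ann["question_id"]: ann for ann in annotations}
    joined = []
    for q in questions:
        ann = ann_by_id.get(q["question_id"])
        if ann is not None:
            joined.append({**q, **ann})
    return joined


def answer_counts(annotation):
    """Count how often each answer string occurs among the human answers."""
    return Counter(a["answer"] for a in annotation["answers"])


# Toy records (hypothetical, not real VQA-CP data).
toy_questions = [
    {"question_id": 1, "image_id": 9, "coco_split": "train2014",
     "question": "What color is the plate?"},
    {"question_id": 2, "image_id": 9, "coco_split": "train2014",
     "question": "How many forks are there?"},
]
toy_annotations = [
    {"question_id": 1, "image_id": 9, "coco_split": "train2014",
     "question_type": "what color is", "answer_type": "other",
     "answers": [
         {"answer_id": 1, "answer": "white", "answer_confidence": "yes"},
         {"answer_id": 2, "answer": "white", "answer_confidence": "yes"},
         {"answer_id": 3, "answer": "blue", "answer_confidence": "maybe"},
     ],
     "multiple_choice_answer": "white"},
]

examples = join_by_question_id(toy_questions, toy_annotations)
counts = answer_counts(examples[0])
```

Each joined record carries the question text, the image reference, and all answer fields, so it can be passed directly to whatever training or evaluation code consumes the dataset.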

Code and Trained Models

The code and trained models for the Grounded Visual Question Answering (GVQA) model are available here.