
PLearn 0.1


Shunting-inhibition neural network layer module, with stochastic gradient descent updates.

#include <ShuntingNNetLayerModule.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | `ShuntingNNetLayerModule()` | Default constructor. |
| `virtual void` | `fprop(const Vec &input, Vec &output) const` | Given the input, compute the output (resizing it if needed). SOON TO BE DEPRECATED, use `fprop(const TVec<Mat*>& ports_value)`. |
| `virtual void` | `fprop(const Mat &inputs, Mat &outputs)` | Overridden. |
| `virtual void` | `bpropUpdate(const Vec &input, const Vec &output, const Vec &output_gradient)` | SOON TO BE DEPRECATED, use `bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient)`. Adapt based on the output gradient: this method should only be called just after a corresponding fprop, with the same first two arguments as that fprop (and output should not have been modified since then). |
| `virtual void` | `bpropUpdate(const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false)` | SOON TO BE DEPRECATED, use `bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient)`. |
| `virtual void` | `forget()` | Reset the parameters to the state they would be in BEFORE starting training. |
| `virtual void` | `setLearningRate(real dynamic_learning_rate)` | Set `start_learning_rate` and restart the learning-rate schedule. |
| `virtual string` | `classname() const` | |
| `virtual OptionList &` | `getOptionList() const` | |
| `virtual OptionMap &` | `getOptionMap() const` | |
| `virtual RemoteMethodMap &` | `getRemoteMethodMap() const` | |
| `virtual ShuntingNNetLayerModule *` | `deepCopy(CopiesMap &copies) const` | |
| `virtual void` | `build()` | Post-constructor. |
| `virtual void` | `makeDeepCopyFromShallowCopy(CopiesMap &copies)` | Transforms a shallow copy into a deep copy. |

Static Public Member Functions

| Type | Member | Description |
|---|---|---|
| `static string` | `_classname_()` | |
| `static OptionList &` | `_getOptionList_()` | |
| `static RemoteMethodMap &` | `_getRemoteMethodMap_()` | |
| `static Object *` | `_new_instance_for_typemap_()` | |
| `static bool` | `_isa_(const Object *o)` | |
| `static void` | `_static_initialize_()` | |
| `static const PPath &` | `declaringFile()` | |

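Public Attributes

| Type | Member | Description |
|---|---|---|
| `real` | `start_learning_rate` | Starting learning rate, by which the gradient step is multiplied. |
| `real` | `decrease_constant` | `learning_rate = start_learning_rate / (1 + decrease_constant*t)`, where t is the number of samples processed by `bpropUpdate()` since the last `forget()` or `setLearningRate()`. |
| `real` | `init_weights_random_scale` | The excitation (softplus-part) weights are initialized randomly from a uniform in [-r,r], with r = init_weights_random_scale/input_size; if this is 0, they are initialized to 0. |
| `real` | `init_quad_weights_random_scale` | Same as above for the quadratic excitation and inhibition weights, with r = init_quad_weights_random_scale/input_size. |
| `int` | `n_filters` | Number of quadratic excitation filters per neuron (also the maximum number of inhibition filters). |
| `int` | `n_filters_inhib` | Number of quadratic inhibition filters per neuron; must not exceed n_filters. If -1, it is set to n_filters. |
| `TVec< Mat >` | `excit_quad_weights` | Quadratic excitation weights: one matrix per filter, one neuron per row. |
| `TVec< Mat >` | `inhib_quad_weights` | Quadratic inhibition weights, with the same layout. |
| `Mat` | `excit_weights` | Excitation weights of the softplus part, one neuron per row. |
| `Vec` | `bias` | The bias (inside the softplus of the excitation). |
| `Vec` | `excit_num_coeff` | The multiplicative coefficient of the excitation in the numerator of the output activation. |
| `Vec` | `inhib_num_coeff` | The multiplicative coefficient of the inhibition in the numerator of the output activation. |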

Static Public Attributes

| Type | Member | Description |
|---|---|---|
| `static StaticInitializer` | `_static_initializer_` | |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| `static void` | `declareOptions(OptionList &ol)` | Declares the class options. |

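Protected Attributes

| Type | Member | Description |
|---|---|---|
| `Vec` | `ones` | A vector filled with all ones. |
| `Mat` | `batch_excitations` | Excitations saved by `fprop()` during training, used by `bpropUpdate()`. |
| `Mat` | `batch_inhibitions` | Inhibitions saved by `fprop()` during training, used by `bpropUpdate()`. |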

Private Types

| Type | Member | Description |
|---|---|---|
| `typedef OnlineLearningModule` | `inherited` | |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| `void` | `build_()` | This does the actual building. |
| `void` | `resizeOnes(int n) const` | Resize vector 'ones'. |

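Private Attributes

| Type | Member | Description |
|---|---|---|
| `real` | `learning_rate` | Current learning rate, recomputed at each `bpropUpdate()`. |
| `int` | `step_number` | Number of samples processed since the last `forget()` or `setLearningRate()`. |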

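Shunting-inhibition neural network layer module, with stochastic gradient descent updates.

Neural network layer with shunting inhibition, using stochastic gradient descent to update the neuron weights. For each output neuron i and input x, the excitation E and the inhibition S are

`E = sqrt( sum_k (excit_quad_weights[k](i) . x)^2 + softplus(excit_weights(i) . x + bias[i]) )`

`S = sqrt( sum_k (inhib_quad_weights[k](i) . x)^2 )`

and the output is `output[i] = (excit_num_coeff[i]*E - inhib_num_coeff[i]*S) / (1 + E + S)`, where the softplus is computed with `tabulated_softplus()`. The weights and bias are updated by online gradient descent, with a learning rate that decreases as start_learning_rate / (1 + decrease_constant * t), t being the number of samples processed so far. The softplus-part and quadratic weights are initialized randomly from uniform distributions, or to 0 when the corresponding random scale option is 0.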

Definition at line 65 of file ShuntingNNetLayerModule.h.

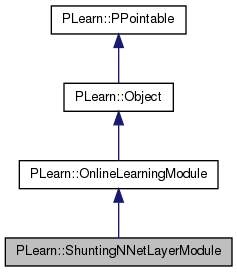

typedef OnlineLearningModule PLearn::ShuntingNNetLayerModule::inherited [private]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 67 of file ShuntingNNetLayerModule.h.

| PLearn::ShuntingNNetLayerModule::ShuntingNNetLayerModule | ( | ) |

Default constructor.

Definition at line 65 of file ShuntingNNetLayerModule.cc.

```cpp
ShuntingNNetLayerModule::ShuntingNNetLayerModule() :
    start_learning_rate( .001 ),
    decrease_constant( 0. ),
    init_weights_random_scale( 1. ),
    init_quad_weights_random_scale( 1. ),
    n_filters( 1 ),
    n_filters_inhib( -1 ),
    step_number( 0 )
{}
```

| string PLearn::ShuntingNNetLayerModule::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

| OptionList & PLearn::ShuntingNNetLayerModule::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

| RemoteMethodMap & PLearn::ShuntingNNetLayerModule::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

| bool PLearn::ShuntingNNetLayerModule::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

| Object * PLearn::ShuntingNNetLayerModule::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

| void PLearn::ShuntingNNetLayerModule::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

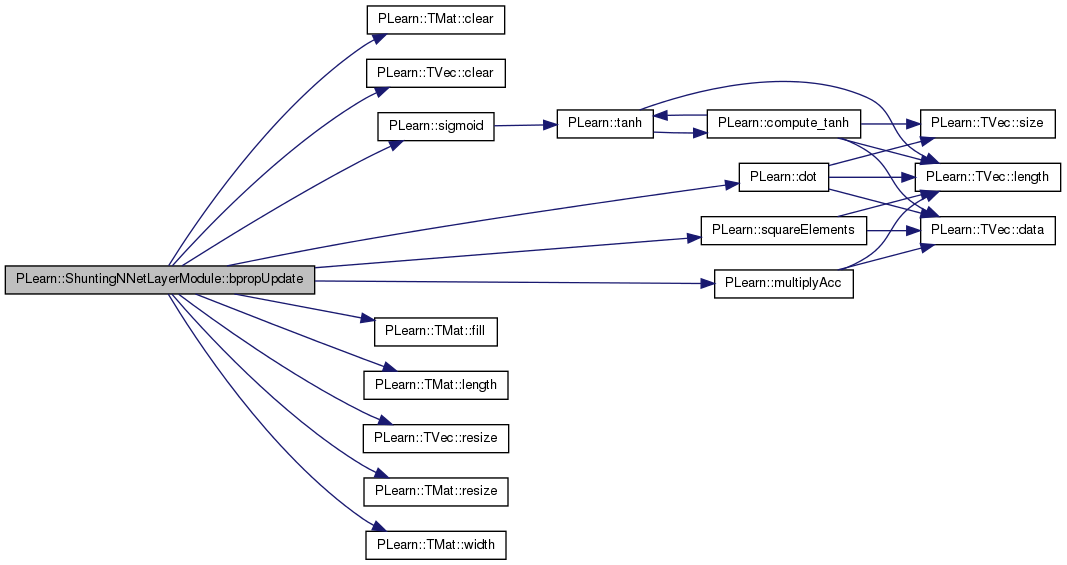

| void PLearn::ShuntingNNetLayerModule::bpropUpdate | ( | const Mat & inputs, const Mat & outputs, Mat & input_gradients, const Mat & output_gradients, bool accumulate = false | ) | [virtual] |

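SOON TO BE DEPRECATED, USE bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient)

This implementation does not compute input gradients: when accumulate is false, input_gradients is resized and filled with zeros, and it is otherwise left unchanged.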

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 380 of file ShuntingNNetLayerModule.cc.

References batch_excitations, batch_inhibitions, bias, PLearn::TMat< T >::clear(), PLearn::TVec< T >::clear(), decrease_constant, PLearn::dot(), excit_num_coeff, excit_quad_weights, excit_weights, PLearn::TMat< T >::fill(), inhib_num_coeff, inhib_quad_weights, PLearn::OnlineLearningModule::input_size, learning_rate, PLearn::TMat< T >::length(), PLearn::multiplyAcc(), n_filters, n_filters_inhib, PLearn::OnlineLearningModule::output_size, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), PLearn::sigmoid(), PLearn::squareElements(), start_learning_rate, step_number, and PLearn::TMat< T >::width().

```cpp
{
    PLASSERT( inputs.width() == input_size );
    PLASSERT( outputs.width() == output_size );
    PLASSERT( output_gradients.width() == output_size );
    //fprop(inputs);

    int n = inputs.length();
    if( accumulate )
    {
        PLASSERT_MSG( input_gradients.width() == input_size &&
                      input_gradients.length() == n,
                      "Cannot resize input_gradients and accumulate into it" );
    }
    else
    {
        input_gradients.resize(n, input_size);
        input_gradients.fill(0);
    }

    learning_rate = start_learning_rate / (1+decrease_constant*step_number);
    real avg_lr = learning_rate / n; // To obtain an average on a mini-batch.

    if ( avg_lr == 0. )
        return ;

    Mat tmp(n, output_size);
    // tmp = (1 + E + S ).^2;
    tmp.fill(1.);
    multiplyAcc(tmp, batch_excitations, (real)1);
    multiplyAcc(tmp, batch_inhibitions, (real)1);
    squareElements(tmp);

    Vec bias_updates(output_size);
    Mat excit_weights_updates( output_size, input_size);
    TVec<Mat> excit_quad_weights_updates(n_filters);
    TVec<Mat> inhib_quad_weights_updates(n_filters_inhib);

    // Initialisation
    bias_updates.clear();
    excit_weights_updates.clear();
    for( int k=0; k < n_filters; k++ )
    {
        excit_quad_weights_updates[k].resize( output_size, input_size);
        excit_quad_weights_updates[k].clear();
        if (k < n_filters_inhib) {
            inhib_quad_weights_updates[k].resize( output_size, input_size);
            inhib_quad_weights_updates[k].clear();
        }
    }

    for( int i_sample = 0; i_sample < n; i_sample++ )
        for( int i=0; i<output_size; i++ )
        {
            real Dactivation_Dexcit = ( excit_num_coeff[i] + batch_inhibitions(i_sample,i)*(excit_num_coeff[i] + inhib_num_coeff[i]) ) / tmp(i_sample,i);
            real Dactivation_Dinhib = - ( inhib_num_coeff[i] + batch_excitations(i_sample,i)*(excit_num_coeff[i] + inhib_num_coeff[i]) ) / tmp(i_sample,i);

            real lr_og_excit = avg_lr * output_gradients(i_sample,i);
            PLASSERT( batch_excitations(i_sample,i)>0. );
            PLASSERT( n_filters_inhib==0 || batch_inhibitions(i_sample,i)>0. );
            real lr_og_inhib = lr_og_excit * Dactivation_Dinhib / batch_inhibitions(i_sample,i);
            lr_og_excit *= Dactivation_Dexcit / batch_excitations(i_sample,i);

            real tmp2 = lr_og_excit * sigmoid( dot( excit_weights(i), inputs(i_sample) ) + bias[i] ) * .5;
            bias_updates[i] -= tmp2;
            multiplyAcc( excit_weights_updates(i), inputs(i_sample), -tmp2);

            for( int k = 0; k < n_filters; k++ )
            {
                real tmp_excit2 = lr_og_excit * dot( excit_quad_weights[k](i), inputs(i_sample) );
                real tmp_inhib2 = 0;
                if (k < n_filters_inhib)
                    tmp_inhib2 = lr_og_inhib * dot( inhib_quad_weights[k](i), inputs(i_sample) );
                //for( int j=0; j<input_size; j++ )
                //{
                //    excit_quad_weights_updates[k](i,j) -= tmp_excit2 * inputs(i_sample,j);
                //    if (k < n_filters_inhib)
                //        inhib_quad_weights_updates[k](i,j) -= tmp_inhib2 * inputs(i_sample,j);
                //}
                multiplyAcc( excit_quad_weights_updates[k](i), inputs(i_sample), -tmp_excit2);
                if (k < n_filters_inhib)
                    multiplyAcc( inhib_quad_weights_updates[k](i), inputs(i_sample), -tmp_inhib2);
            }
        }

    multiplyAcc( bias, bias_updates, 1.);
    multiplyAcc( excit_weights, excit_weights_updates, 1.);
    for( int k = 0; k < n_filters; k++ )
    {
        multiplyAcc( excit_quad_weights[k], excit_quad_weights_updates[k], 1.);
        if (k < n_filters_inhib)
            multiplyAcc( inhib_quad_weights[k], inhib_quad_weights_updates[k], 1.);
    }

    batch_excitations.clear();
    batch_inhibitions.clear();

    step_number += n;
}
```
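The updates above rely on the chain rule through the square root of the excitation: for a quadratic filter q_k, dE/dq_k = (q_k . x) x / E, and for the softplus part, dE/d(bias) = sigmoid(w . x + bias) / (2E), which is where the `* .5` factor comes from. The following standalone sketch checks both formulas against central finite differences. It uses `std::vector<double>` instead of PLearn's `Vec`/`Mat`, an exact softplus instead of `tabulated_softplus()`, and hypothetical helper names (`excitation`, `dE_dq`, `dE_dbias`); it is an illustration of the math, not PLearn code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;

double dot(const Vector& u, const Vector& v) {
    assert(u.size() == v.size());
    double s = 0.0;
    for (std::size_t j = 0; j < u.size(); ++j) s += u[j] * v[j];
    return s;
}

// Exact softplus and its derivative (the module itself uses a tabulated softplus).
double softplus(double z) { return std::log1p(std::exp(z)); }
double sigmoid(double z) { return 1.0 / (1.0 + std::exp(-z)); }

// Excitation of one neuron: E = sqrt( sum_k (q[k].x)^2 + softplus(w.x + c) ).
double excitation(const std::vector<Vector>& q, const Vector& w, double c, const Vector& x) {
    double acc = softplus(dot(w, x) + c);
    for (const Vector& qk : q) {
        double p = dot(qk, x);
        acc += p * p;
    }
    return std::sqrt(acc);
}

// dE/dq[k][j] = (q[k].x) * x[j] / E
double dE_dq(const std::vector<Vector>& q, const Vector& w, double c, const Vector& x,
             std::size_t k, std::size_t j) {
    return dot(q[k], x) * x[j] / excitation(q, w, c, x);
}

// dE/dc = sigmoid(w.x + c) / (2E); dE/dw[j] is this value times x[j].
double dE_dbias(const std::vector<Vector>& q, const Vector& w, double c, const Vector& x) {
    return sigmoid(dot(w, x) + c) / (2.0 * excitation(q, w, c, x));
}
```

Multiplying these derivatives by the learning rate, the output gradient and `Dactivation_Dexcit` gives the per-sample steps accumulated in `excit_quad_weights_updates`, `excit_weights_updates` and `bias_updates`.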

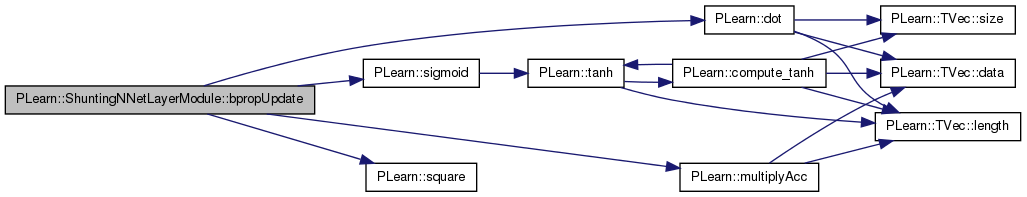

| void PLearn::ShuntingNNetLayerModule::bpropUpdate | ( | const Vec & input, const Vec & output, const Vec & output_gradient | ) | [virtual] |

SOON TO BE DEPRECATED, USE bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient). Adapt based on the output gradient: this method should only be called just after a corresponding fprop, with the same first two arguments as that fprop (and output should not have been modified since then).

Since sub-classes are supposed to learn ONLINE, the object is 'ready-to-be-used' just after any bpropUpdate. N.B. The default implementation in OnlineLearningModule just calls bpropUpdate(input, output, input_gradient, output_gradient) and ignores the input gradient; this class overrides it with the update below.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 339 of file ShuntingNNetLayerModule.cc.

References batch_excitations, batch_inhibitions, bias, decrease_constant, PLearn::dot(), excit_num_coeff, excit_quad_weights, excit_weights, inhib_num_coeff, inhib_quad_weights, PLearn::OnlineLearningModule::input_size, learning_rate, PLearn::multiplyAcc(), n_filters, n_filters_inhib, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::sigmoid(), PLearn::square(), start_learning_rate, and step_number.

```cpp
{
    learning_rate = start_learning_rate / (1+decrease_constant*step_number);

    for( int i=0; i<output_size; i++ )
    {
        real tmp = square(1 + batch_excitations(0,i) + batch_inhibitions(0,i) );

        real Dactivation_Dexcit = ( excit_num_coeff[i] + batch_inhibitions(0,i)*(excit_num_coeff[i] + inhib_num_coeff[i]) ) / tmp;
        real Dactivation_Dinhib = - ( inhib_num_coeff[i] + batch_excitations(0,i)*(excit_num_coeff[i] + inhib_num_coeff[i]) ) / tmp;

        real lr_og_excit = learning_rate * output_gradient[i];
        PLASSERT( batch_excitations(0,i)>0. );
        // The inhibition is 0 when there are no inhibition filters
        // (same check as in the mini-batch version).
        PLASSERT( n_filters_inhib==0 || batch_inhibitions(0,i)>0. );
        real lr_og_inhib = lr_og_excit * Dactivation_Dinhib / batch_inhibitions(0,i);
        lr_og_excit *= Dactivation_Dexcit / batch_excitations(0,i);

        tmp = lr_og_excit * sigmoid( dot( excit_weights(i), input ) + bias[i] ) * .5;
        bias[i] -= tmp;
        multiplyAcc( excit_weights(i), input, -tmp);

        for( int k = 0; k < n_filters; k++ )
        {
            real tmp_excit2 = lr_og_excit * dot( excit_quad_weights[k](i), input );
            real tmp_inhib2 = 0;
            if (k < n_filters_inhib)
                tmp_inhib2 = lr_og_inhib * dot( inhib_quad_weights[k](i), input );
            for( int j=0; j<input_size; j++ )
            {
                excit_quad_weights[k](i,j) -= tmp_excit2 * input[j];
                if (k < n_filters_inhib)
                    inhib_quad_weights[k](i,j) -= tmp_inhib2 * input[j];
            }
        }
    }

    step_number++;
}
```
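`Dactivation_Dexcit` and `Dactivation_Dinhib` are the partial derivatives of the output `(a*E - b*S) / (1 + E + S)` with respect to E and S, with a = excit_num_coeff[i] and b = inhib_num_coeff[i]. The standalone sketch below (plain doubles, hypothetical function names) writes out both closed forms and compares them with central finite differences.

```cpp
#include <cassert>
#include <cmath>

// Shunting activation of one neuron, as computed in fprop():
//   out = (a*E - b*S) / (1 + E + S)
double shunting_output(double E, double S, double a, double b) {
    return (a * E - b * S) / (1.0 + E + S);
}

// Closed form matching Dactivation_Dexcit: (a + S*(a + b)) / (1 + E + S)^2
double d_output_dE(double E, double S, double a, double b) {
    double denom = (1.0 + E + S) * (1.0 + E + S);
    return (a + S * (a + b)) / denom;
}

// Closed form matching Dactivation_Dinhib: -(b + E*(a + b)) / (1 + E + S)^2
double d_output_dS(double E, double S, double a, double b) {
    double denom = (1.0 + E + S) * (1.0 + E + S);
    return -(b + E * (a + b)) / denom;
}

// Central finite differences, used only to check the closed forms.
double fd_dE(double E, double S, double a, double b, double h = 1e-6) {
    return (shunting_output(E + h, S, a, b) - shunting_output(E - h, S, a, b)) / (2.0 * h);
}

double fd_dS(double E, double S, double a, double b, double h = 1e-6) {
    return (shunting_output(E, S + h, a, b) - shunting_output(E, S - h, a, b)) / (2.0 * h);
}
```

With positive coefficients, increasing the excitation always raises the output and increasing the inhibition always lowers it, which the signs of the two closed forms make explicit.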

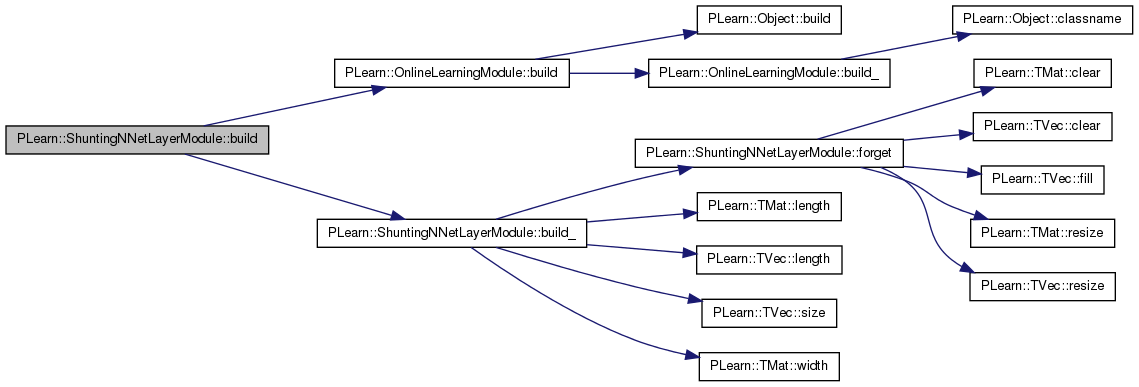

| void PLearn::ShuntingNNetLayerModule::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 173 of file ShuntingNNetLayerModule.cc.

References PLearn::OnlineLearningModule::build(), and build_().

```cpp
{
    inherited::build();
    build_();
}
```

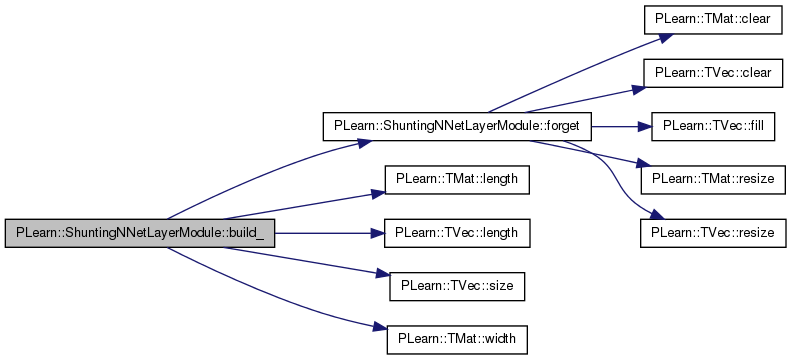

| void PLearn::ShuntingNNetLayerModule::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 150 of file ShuntingNNetLayerModule.cc.

References bias, excit_quad_weights, excit_weights, forget(), inhib_quad_weights, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), n_filters, n_filters_inhib, PLearn::OnlineLearningModule::output_size, PLASSERT, PLERROR, PLearn::TVec< T >::size(), and PLearn::TMat< T >::width().

Referenced by build().

```cpp
{
    if( input_size < 0 ) // has not been initialized
        return;

    if( output_size < 0 )
        PLERROR("ShuntingNNetLayerModule::build_: 'output_size' is < 0 (%i),\n"
                " you should set it to a positive integer (the number of"
                " neurons).\n", output_size);

    if (n_filters_inhib < 0)
        n_filters_inhib= n_filters;
    PLASSERT( n_filters>0 );

    if( excit_quad_weights.length() != n_filters
        || inhib_quad_weights.length() != n_filters_inhib
        || excit_weights.length() != output_size
        || excit_weights.width() != input_size
        || bias.size() != output_size )
    {
        forget();
    }
}
```
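The option handling in build_() can be summarized as: do nothing until input_size is known, require output_size, and resolve n_filters_inhib = -1 to n_filters. The sketch below reproduces that logic on a hypothetical plain struct (`ShuntingOptions` is not a PLearn type), throwing a standard exception where PLearn uses PLERROR/PLASSERT; it also enforces the `n_filters_inhib <= n_filters` constraint that forget() asserts.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical stand-in for the options inspected by build_().
struct ShuntingOptions {
    int input_size = -1;
    int output_size = -1;
    int n_filters = 1;
    int n_filters_inhib = -1;   // -1 means "same as n_filters"
};

// Returns false when input_size is not set yet (build_() simply returns then).
bool resolve_options(ShuntingOptions& opt) {
    if (opt.input_size < 0)
        return false;
    if (opt.output_size < 0)
        throw std::invalid_argument("output_size must be set to the number of neurons");
    if (opt.n_filters_inhib < 0)
        opt.n_filters_inhib = opt.n_filters;
    if (opt.n_filters <= 0 || opt.n_filters_inhib > opt.n_filters)
        throw std::invalid_argument("need n_filters > 0 and n_filters_inhib <= n_filters");
    return true;
}
```

With the constructor defaults (n_filters = 1, n_filters_inhib = -1), each neuron ends up with one excitation filter and one inhibition filter.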

| string PLearn::ShuntingNNetLayerModule::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

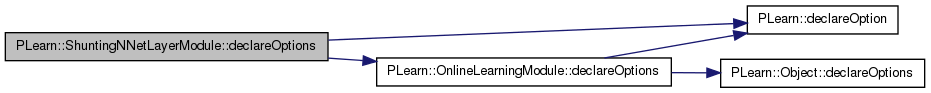

| void PLearn::ShuntingNNetLayerModule::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 79 of file ShuntingNNetLayerModule.cc.

References bias, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::OnlineLearningModule::declareOptions(), decrease_constant, excit_num_coeff, excit_quad_weights, excit_weights, inhib_num_coeff, inhib_quad_weights, init_quad_weights_random_scale, init_weights_random_scale, PLearn::OptionBase::learntoption, n_filters, n_filters_inhib, and start_learning_rate.

```cpp
{
    declareOption(ol, "start_learning_rate",
                  &ShuntingNNetLayerModule::start_learning_rate,
                  OptionBase::buildoption,
                  "Learning-rate of stochastic gradient optimization");

    declareOption(ol, "decrease_constant",
                  &ShuntingNNetLayerModule::decrease_constant,
                  OptionBase::buildoption,
                  "Decrease constant of stochastic gradient optimization");

    declareOption(ol, "init_weights_random_scale",
                  &ShuntingNNetLayerModule::init_weights_random_scale,
                  OptionBase::buildoption,
                  "Weights of the excitation (softplus part) are initialized randomly\n"
                  "from a uniform in [-r,r], with r = init_weights_random_scale/input_size.\n"
                  "To clear the weights initially, just set this option to 0.");

    declareOption(ol, "init_quad_weights_random_scale",
                  &ShuntingNNetLayerModule::init_quad_weights_random_scale,
                  OptionBase::buildoption,
                  "Weights of the quadratic part (of excitation, as well as inhibition) are initialized randomly\n"
                  "from a uniform in [-r,r], with r = init_quad_weights_random_scale/input_size.\n"
                  "To clear the weights initially, just set this option to 0.");

    declareOption(ol, "n_filters",
                  &ShuntingNNetLayerModule::n_filters,
                  OptionBase::buildoption,
                  "Number of synapses per neuron for excitation.\n");

    declareOption(ol, "n_filters_inhib",
                  &ShuntingNNetLayerModule::n_filters_inhib,
                  OptionBase::buildoption,
                  "Number of synapses per neuron for inhibition.\n"
                  "Must be lower than or equal to n_filters in the current implementation (!).\n"
                  "If -1, then it is taken equal to n_filters.");

    declareOption(ol, "excit_quad_weights", &ShuntingNNetLayerModule::excit_quad_weights,
                  OptionBase::learntoption,
                  "List of weight vectors of the neurons\n"
                  "(excitation -- quadratic part)\n");

    declareOption(ol, "inhib_quad_weights", &ShuntingNNetLayerModule::inhib_quad_weights,
                  OptionBase::learntoption,
                  "List of weight vectors of the neurons (inhibition -- quadratic part)\n");

    declareOption(ol, "excit_weights", &ShuntingNNetLayerModule::excit_weights,
                  OptionBase::learntoption,
                  "Input weights vectors of the neurons (excitation -- softplus part)\n");

    declareOption(ol, "bias", &ShuntingNNetLayerModule::bias,
                  OptionBase::learntoption,
                  "Bias of the neurons (in the softplus of the excitations)\n");

    declareOption(ol, "excit_num_coeff", &ShuntingNNetLayerModule::excit_num_coeff,
                  OptionBase::learntoption,
                  "Multiplicative coefficient applied on the excitation\n"
                  "in the numerator of the activation closed form.\n");

    declareOption(ol, "inhib_num_coeff", &ShuntingNNetLayerModule::inhib_num_coeff,
                  OptionBase::learntoption,
                  "Multiplicative coefficient applied on the inhibition\n"
                  "in the numerator of the activation closed form.\n");

    inherited::declareOptions(ol);
}
```

| static const PPath& PLearn::ShuntingNNetLayerModule::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 130 of file ShuntingNNetLayerModule.h.


| ShuntingNNetLayerModule * PLearn::ShuntingNNetLayerModule::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

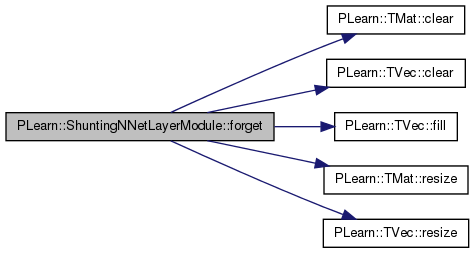

| void PLearn::ShuntingNNetLayerModule::forget | ( | ) | [virtual] |

Reset the parameters to the state they would be in BEFORE starting training.

Note that this method is necessarily called from build().

Implements PLearn::OnlineLearningModule.

Definition at line 183 of file ShuntingNNetLayerModule.cc.

References bias, PLearn::TMat< T >::clear(), PLearn::TVec< T >::clear(), excit_num_coeff, excit_quad_weights, excit_weights, PLearn::TVec< T >::fill(), inhib_num_coeff, inhib_quad_weights, init_quad_weights_random_scale, init_weights_random_scale, PLearn::OnlineLearningModule::input_size, learning_rate, n_filters, n_filters_inhib, PLearn::OnlineLearningModule::output_size, PLASSERT, PLWARNING, PLearn::OnlineLearningModule::random_gen, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), start_learning_rate, and step_number.

Referenced by build_().

```cpp
{
    learning_rate = start_learning_rate;
    step_number = 0;

    bias.resize( output_size );
    bias.clear();

    excit_num_coeff.resize( output_size );
    inhib_num_coeff.resize( output_size );
    excit_num_coeff.fill(1.);
    inhib_num_coeff.fill(1.);

    excit_weights.resize( output_size, input_size );
    excit_quad_weights.resize( n_filters );
    PLASSERT( n_filters_inhib >= 0 && n_filters_inhib <= n_filters );
    inhib_quad_weights.resize( n_filters_inhib );

    if( !random_gen )
    {
        PLWARNING( "ShuntingNNetLayerModule: cannot forget() without random_gen" );
        return;
    }

    real r = init_weights_random_scale / (real)input_size;
    if( r > 0. )
        random_gen->fill_random_uniform(excit_weights, -r, r);
    else
        excit_weights.clear();

    r = init_quad_weights_random_scale / (real)input_size;
    if( r > 0. )
        for( int k = 0; k < n_filters; k++ )
        {
            excit_quad_weights[k].resize( output_size, input_size );
            random_gen->fill_random_uniform(excit_quad_weights[k], -r, r);
            if ( k < n_filters_inhib ) {
                inhib_quad_weights[k].resize( output_size, input_size );
                random_gen->fill_random_uniform(inhib_quad_weights[k], -r, r);
            }
        }
    else
        for( int k = 0; k < n_filters; k++ )
        {
            excit_quad_weights[k].resize(output_size, input_size );
            excit_quad_weights[k].clear();
            if ( k < n_filters_inhib ) {
                inhib_quad_weights[k].resize(output_size, input_size );
                inhib_quad_weights[k].clear();
            }
        }
}
```
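The initialization rule in forget() (uniform in [-r, r] with r = scale / input_size, or all zeros when r is not positive) can be sketched with the C++ standard library. This assumes `std::mt19937` and `std::uniform_real_distribution` as stand-ins for PLearn's `random_gen->fill_random_uniform()`, and `init_weights` is a hypothetical helper; the two generators will not produce the same numbers.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Hypothetical helper mirroring forget(): one neuron per row, every weight drawn
// uniformly from [-r, r) with r = scale / input_size, or 0 when r <= 0.
std::vector<std::vector<double>> init_weights(int output_size, int input_size,
                                              double scale, std::mt19937& rng) {
    std::vector<std::vector<double>> W(output_size, std::vector<double>(input_size, 0.0));
    double r = scale / static_cast<double>(input_size);
    if (r > 0.0) {
        std::uniform_real_distribution<double> U(-r, r);
        for (auto& row : W)
            for (double& v : row)
                v = U(rng);
    }
    return W;
}
```

Dividing the scale by input_size keeps the initial dot products w . x of roughly comparable size as the number of inputs grows.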

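| void PLearn::ShuntingNNetLayerModule::fprop | ( | const Mat & inputs, Mat & outputs | ) | [virtual] |

Overridden.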

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 278 of file ShuntingNNetLayerModule.cc.

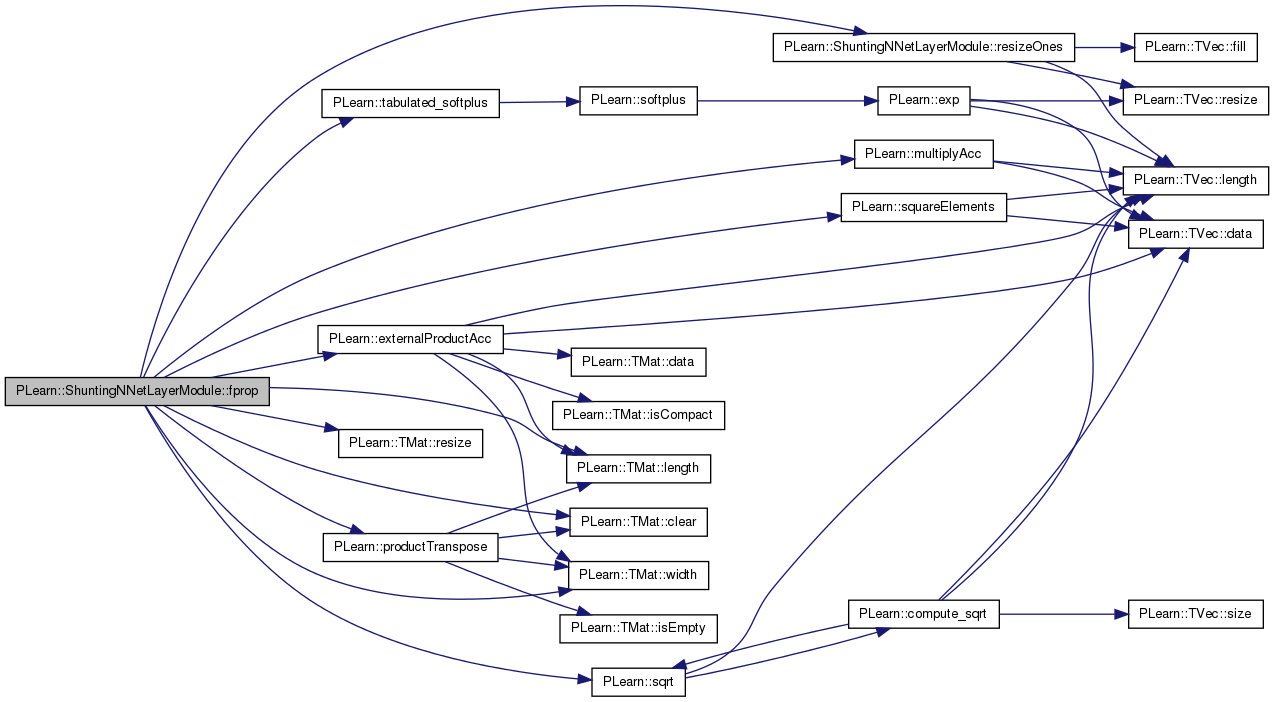

References batch_excitations, batch_inhibitions, bias, PLearn::TMat< T >::clear(), PLearn::OnlineLearningModule::during_training, excit_num_coeff, excit_quad_weights, excit_weights, PLearn::externalProductAcc(), inhib_num_coeff, inhib_quad_weights, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), PLearn::multiplyAcc(), n_filters, n_filters_inhib, ones, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::productTranspose(), PLearn::TMat< T >::resize(), resizeOnes(), PLearn::sqrt(), PLearn::squareElements(), PLearn::tabulated_softplus(), and PLearn::TMat< T >::width().

```cpp
{
    PLASSERT( inputs.width() == input_size );
    int n = inputs.length();
    outputs.resize(n, output_size);

    Mat excitations_part2(n, output_size);
    excitations_part2.clear();
    productTranspose(excitations_part2, inputs, excit_weights);
    resizeOnes(n);
    externalProductAcc(excitations_part2, ones, bias);

    Mat excitations(n, output_size), inhibitions(n, output_size);
    excitations.clear();
    inhibitions.clear();
    for ( int k=0; k < n_filters; k++ )
    {
        Mat tmp_sample_output(n, output_size);
        tmp_sample_output.clear();
        productTranspose(tmp_sample_output, inputs, excit_quad_weights[k]);
        squareElements(tmp_sample_output);
        multiplyAcc(excitations, tmp_sample_output, 1.);
        if ( k < n_filters_inhib ) {
            tmp_sample_output.clear();
            productTranspose(tmp_sample_output, inputs, inhib_quad_weights[k]);
            squareElements(tmp_sample_output);
            multiplyAcc(inhibitions, tmp_sample_output, 1.);
        }
    }

    for( int i_sample = 0; i_sample < n; i_sample ++)
    {
        for( int i = 0; i < output_size; i++ )
        {
            excitations(i_sample,i) = sqrt( excitations(i_sample,i) + tabulated_softplus( excitations_part2(i_sample,i) ) );
            inhibitions(i_sample,i) = sqrt( inhibitions(i_sample,i) );
            real E = excitations(i_sample,i);
            real S = inhibitions(i_sample,i);
            outputs(i_sample,i) = ( excit_num_coeff[i]* E - inhib_num_coeff[i]* S ) /
                                  (1. + E + S );
        }
    }

    if( during_training )
    {
        batch_excitations.resize(n, output_size);
        batch_inhibitions.resize(n, output_size);
        batch_excitations << excitations;
        batch_inhibitions << inhibitions;
    }
}
```
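The mini-batch fprop builds the softplus pre-activations as `inputs * excit_weights^T` (productTranspose) and then adds the bias to every row through the outer product `ones * bias^T` (externalProductAcc). The following standalone sketch reproduces those two steps with nested `std::vector`s; `product_transpose` and `external_product_acc` are hypothetical stand-ins written for this example, not the PLearn functions themselves.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// pre = inputs * W^T, with W stored one neuron per row (like excit_weights).
Matrix product_transpose(const Matrix& inputs, const Matrix& W) {
    Matrix pre(inputs.size(), std::vector<double>(W.size(), 0.0));
    for (std::size_t s = 0; s < inputs.size(); ++s)
        for (std::size_t i = 0; i < W.size(); ++i)
            for (std::size_t j = 0; j < W[i].size(); ++j)
                pre[s][i] += inputs[s][j] * W[i][j];
    return pre;
}

// out += ones * bias^T: adds bias[i] to column i of every row (sample).
void external_product_acc(Matrix& out, const std::vector<double>& ones,
                          const std::vector<double>& bias) {
    assert(out.size() == ones.size());
    for (std::size_t s = 0; s < out.size(); ++s) {
        assert(out[s].size() == bias.size());
        for (std::size_t i = 0; i < bias.size(); ++i)
            out[s][i] += ones[s] * bias[i];
    }
}
```

Keeping `ones` as a member (resized by resizeOnes()) avoids reallocating it on every call when successive mini-batches have the same size.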

| void PLearn::ShuntingNNetLayerModule::fprop | ( | const Vec & input, Vec & output | ) | const [virtual] |

Given the input, compute the output (resizing it if needed). SOON TO BE DEPRECATED, USE fprop(const TVec<Mat*>& ports_value)

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 240 of file ShuntingNNetLayerModule.cc.

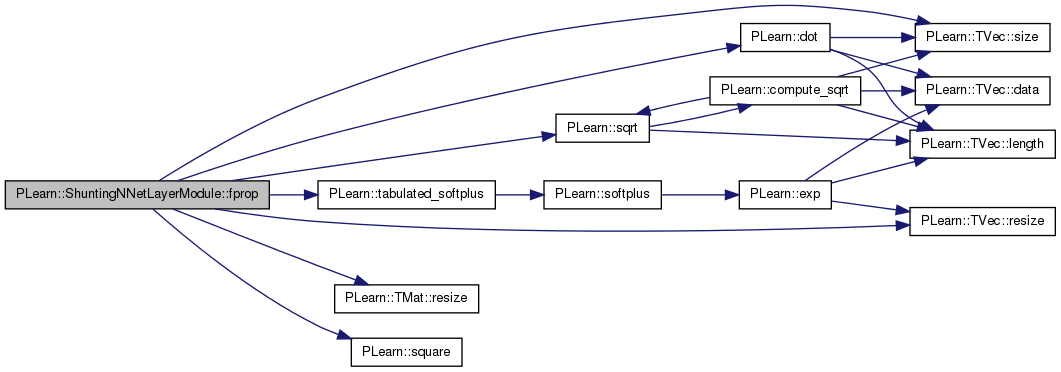

References batch_excitations, batch_inhibitions, bias, PLearn::dot(), PLearn::OnlineLearningModule::during_training, excit_num_coeff, excit_quad_weights, excit_weights, inhib_num_coeff, inhib_quad_weights, PLearn::OnlineLearningModule::input_size, n_filters, n_filters_inhib, PLearn::OnlineLearningModule::output_size, PLASSERT_MSG, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::sqrt(), PLearn::square(), and PLearn::tabulated_softplus().

```cpp
{
    PLASSERT_MSG( input.size() == input_size,
                  "input.size() should be equal to this->input_size" );

    output.resize( output_size );

    if( during_training )
    {
        batch_excitations.resize(1, output_size);
        batch_inhibitions.resize(1, output_size);
    }

    // if( use_fast_approximations )
    for( int i = 0; i < output_size; i++ )
    {
        real excitation = 0.;
        real inhibition = 0.;
        for ( int k=0; k < n_filters; k++ )
        {
            excitation += square( dot( excit_quad_weights[k](i), input ) );
            if ( k < n_filters_inhib )
                inhibition += square( dot( inhib_quad_weights[k](i), input ) );
        }
        excitation = sqrt( excitation + tabulated_softplus( dot( excit_weights(i), input ) + bias[i] ) );
        inhibition = sqrt( inhibition );
        if( during_training )
        {
            batch_excitations(0,i) = excitation;
            batch_inhibitions(0,i) = inhibition;
        }
        output[i] = ( excit_num_coeff[i]* excitation - inhib_num_coeff[i]* inhibition ) /
                    (1. + excitation + inhibition );
    }
    // else
}
```
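For a single output neuron, the computation above reduces to a few lines. The sketch below is a standalone restatement with `std::vector<double>` (the name `shunting_unit` is hypothetical) and assumes an exact softplus, log(1 + exp(z)), where the module uses `tabulated_softplus()`. Because |a*E - b*S| <= max(a, b) * (E + S) < max(a, b) * (1 + E + S), the output always stays strictly inside (-max(a, b), max(a, b)).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;

double dot(const Vector& u, const Vector& v) {
    assert(u.size() == v.size());
    double s = 0.0;
    for (std::size_t j = 0; j < u.size(); ++j) s += u[j] * v[j];
    return s;
}

double softplus(double z) { return std::log1p(std::exp(z)); }   // exact, not tabulated

// One output unit: excit_q / inhib_q hold row i of each quadratic filter matrix,
// w is row i of excit_weights, a and b are the numerator coefficients.
double shunting_unit(const std::vector<Vector>& excit_q, const std::vector<Vector>& inhib_q,
                     const Vector& w, double bias, double a, double b, const Vector& x) {
    double E = 0.0, S = 0.0;
    for (const Vector& q : excit_q) { double p = dot(q, x); E += p * p; }
    for (const Vector& r : inhib_q) { double p = dot(r, x); S += p * p; }
    E = std::sqrt(E + softplus(dot(w, x) + bias));
    S = std::sqrt(S);
    return (a * E - b * S) / (1.0 + E + S);
}
```

With all weights and the bias at 0, E = sqrt(log 2) and S = 0, so the output is sqrt(log 2) / (1 + sqrt(log 2)) for a = 1; a strong inhibition filter drives the output negative.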

| OptionList & PLearn::ShuntingNNetLayerModule::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

| OptionMap & PLearn::ShuntingNNetLayerModule::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

| RemoteMethodMap & PLearn::ShuntingNNetLayerModule::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 60 of file ShuntingNNetLayerModule.cc.

| void PLearn::ShuntingNNetLayerModule::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 499 of file ShuntingNNetLayerModule.cc.

References bias, PLearn::deepCopyField(), excit_num_coeff, excit_quad_weights, excit_weights, inhib_num_coeff, inhib_quad_weights, PLearn::OnlineLearningModule::makeDeepCopyFromShallowCopy(), and ones.

```cpp
{
    inherited::makeDeepCopyFromShallowCopy(copies);

    deepCopyField(excit_weights, copies);
    deepCopyField(excit_quad_weights, copies);
    deepCopyField(inhib_quad_weights, copies);
    deepCopyField(bias, copies);
    deepCopyField(excit_num_coeff, copies);
    deepCopyField(inhib_num_coeff, copies);
    deepCopyField(ones, copies);
}
```

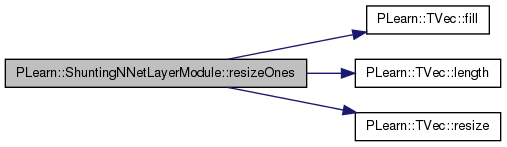

| void PLearn::ShuntingNNetLayerModule::resizeOnes | ( | int | n | ) | const [private] |

Resize vector 'ones'.

Definition at line 518 of file ShuntingNNetLayerModule.cc.

References PLearn::TVec< T >::fill(), PLearn::TVec< T >::length(), ones, and PLearn::TVec< T >::resize().

Referenced by fprop().

```cpp
{
    if (ones.length() < n) {
        ones.resize(n);
        ones.fill(1);
    } else if (ones.length() > n)
        ones.resize(n);
}
```
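resizeOnes() only refills the vector when it grows; when it shrinks, the remaining entries are already ones. A standalone sketch of the same policy with `std::vector` (the name `resize_ones` is hypothetical) looks like this:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Make `ones` exactly n long with every entry equal to 1.
void resize_ones(std::vector<double>& ones, std::size_t n) {
    if (ones.size() < n)
        ones.assign(n, 1.0);    // grow: (re)fill everything with 1
    else if (ones.size() > n)
        ones.resize(n);         // shrink: the kept entries are already 1
}
```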

| void PLearn::ShuntingNNetLayerModule::setLearningRate | ( | real | dynamic_learning_rate | ) | [virtual] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 487 of file ShuntingNNetLayerModule.cc.

References start_learning_rate, and step_number.

```cpp
{
    start_learning_rate = dynamic_learning_rate;
    step_number = 0;
    // learning_rate will automatically be set in bpropUpdate()
}
```
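Taken together, the constructor, bpropUpdate() and setLearningRate() implement the schedule learning_rate = start_learning_rate / (1 + decrease_constant * step_number), where step_number grows by the mini-batch size (or by 1 in the Vec version) and is reset to 0 by setLearningRate() and forget(). The hypothetical struct below is a standalone sketch of that bookkeeping, not part of PLearn.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for the learning-rate bookkeeping of the module.
struct LearningRateSchedule {
    double start_learning_rate = 0.001;   // constructor default
    double decrease_constant = 0.0;       // constructor default
    long step_number = 0;

    // Value recomputed at the start of each bpropUpdate().
    double current() const {
        return start_learning_rate / (1.0 + decrease_constant * step_number);
    }
    // Mini-batch bpropUpdate adds n; the Vec version adds 1.
    void advance(long n_samples) { step_number += n_samples; }
    // Same effect as setLearningRate(): new start value, schedule restarts.
    void setLearningRate(double lr) {
        start_learning_rate = lr;
        step_number = 0;
    }
};
```

Within one mini-batch, the Mat version of bpropUpdate() further divides this rate by n, so each update is an average over the batch.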

StaticInitializer PLearn::ShuntingNNetLayerModule::_static_initializer_ [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 130 of file ShuntingNNetLayerModule.h.

Mat PLearn::ShuntingNNetLayerModule::batch_excitations [mutable, protected]

Definition at line 143 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), and fprop().

Mat PLearn::ShuntingNNetLayerModule::batch_inhibitions [mutable, protected]

Definition at line 144 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), and fprop().

Vec PLearn::ShuntingNNetLayerModule::bias

The bias.

Definition at line 94 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), build_(), declareOptions(), forget(), fprop(), and makeDeepCopyFromShallowCopy().

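real PLearn::ShuntingNNetLayerModule::decrease_constant

learning_rate = start_learning_rate / (1 + decrease_constant*t), where t is the number of samples processed by bpropUpdate() since the last forget() or setLearningRate().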

Definition at line 77 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), and declareOptions().

Vec PLearn::ShuntingNNetLayerModule::excit_num_coeff

The multiplicative coefficients of excitation and inhibition (in the numerator of the output activation).

Definition at line 98 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), declareOptions(), forget(), fprop(), and makeDeepCopyFromShallowCopy().

TVec< Mat > PLearn::ShuntingNNetLayerModule::excit_quad_weights

The weights, one neuron per line.

Definition at line 89 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), build_(), declareOptions(), forget(), fprop(), and makeDeepCopyFromShallowCopy().

Mat PLearn::ShuntingNNetLayerModule::excit_weights

Definition at line 91 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), build_(), declareOptions(), forget(), fprop(), and makeDeepCopyFromShallowCopy().

Vec PLearn::ShuntingNNetLayerModule::inhib_num_coeff

Definition at line 99 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), declareOptions(), forget(), fprop(), and makeDeepCopyFromShallowCopy().

TVec< Mat > PLearn::ShuntingNNetLayerModule::inhib_quad_weights

Definition at line 90 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), build_(), declareOptions(), forget(), fprop(), and makeDeepCopyFromShallowCopy().

real PLearn::ShuntingNNetLayerModule::init_quad_weights_random_scale

Definition at line 82 of file ShuntingNNetLayerModule.h.

Referenced by declareOptions(), and forget().

real PLearn::ShuntingNNetLayerModule::init_weights_random_scale

The excitation (softplus-part) weights are initialized randomly from a uniform in [-r,r], with r = init_weights_random_scale/input_size; if this is 0, they are initialized to 0.

Definition at line 81 of file ShuntingNNetLayerModule.h.

Referenced by declareOptions(), and forget().

real PLearn::ShuntingNNetLayerModule::learning_rate [private]

Definition at line 167 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), and forget().

int PLearn::ShuntingNNetLayerModule::n_filters

Number of excitation/inhibition quadratic weights.

Definition at line 85 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), build_(), declareOptions(), forget(), and fprop().

int PLearn::ShuntingNNetLayerModule::n_filters_inhib

Definition at line 86 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), build_(), declareOptions(), forget(), and fprop().

Vec PLearn::ShuntingNNetLayerModule::ones [mutable, protected]

A vector filled with all ones.

Definition at line 141 of file ShuntingNNetLayerModule.h.

Referenced by fprop(), makeDeepCopyFromShallowCopy(), and resizeOnes().

real PLearn::ShuntingNNetLayerModule::start_learning_rate

Starting learning rate, by which the gradient step is multiplied.

Definition at line 73 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), declareOptions(), forget(), and setLearningRate().

int PLearn::ShuntingNNetLayerModule::step_number [private]

Definition at line 168 of file ShuntingNNetLayerModule.h.

Referenced by bpropUpdate(), forget(), and setLearningRate().

1.7.4
