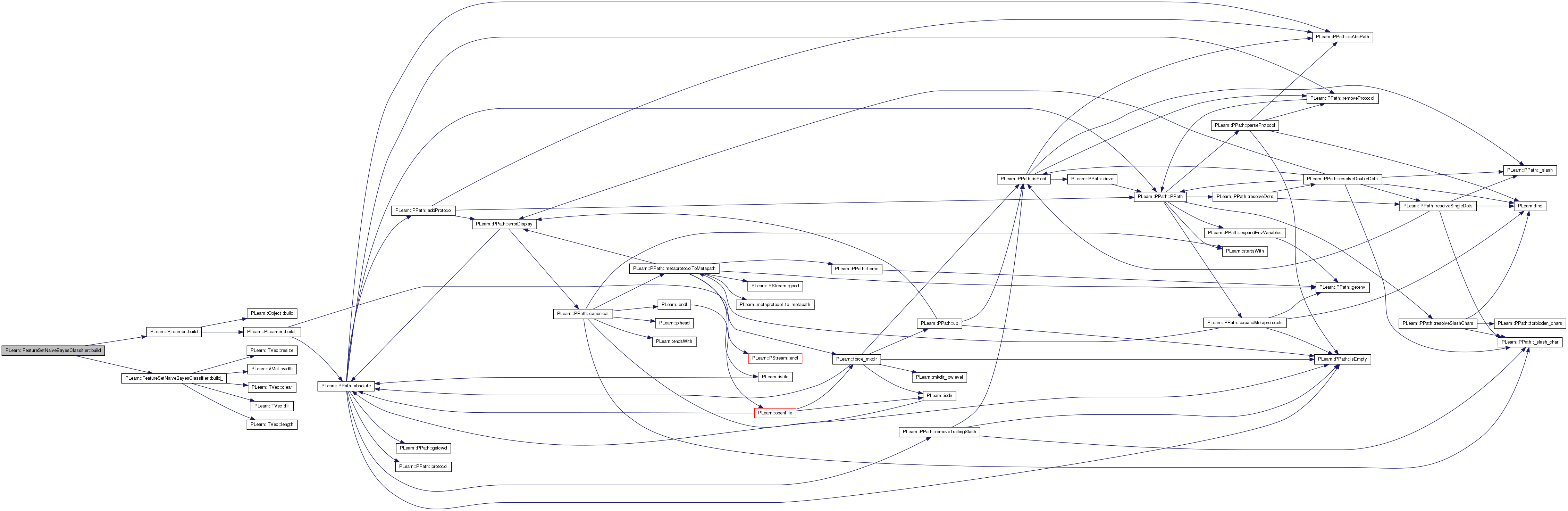

PLearn 0.1

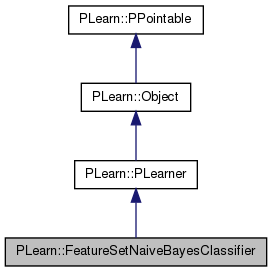

Naive Bayes classifier on a feature set space. More...

#include <FeatureSetNaiveBayesClassifier.h>

Public Member Functions | |

| FeatureSetNaiveBayesClassifier () | |

| virtual | ~FeatureSetNaiveBayesClassifier () |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual FeatureSetNaiveBayesClassifier * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state and sets 'stage' back to 0. | |

| virtual int | outputsize () const |

| SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options. | |

| virtual TVec< string > | getTrainCostNames () const |

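| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |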

| virtual TVec< string > | getTestCostNames () const |

| Returns the names of the test costs; this class returns the same names as getTrainCostNames(). | |

| virtual void | train () |

| Brings the learner up to stage==nstages, updating the stats with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Not supported by this class: always raises a PLERROR, because the output alone is not enough to compute the costs. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| TVec< TVec< hash_map< int, int > > > | feature_class_counts |

| Feature-class pair counts. | |

| TVec< TVec< int > > | sum_feature_class_counts |

| Sums of feature-class pair counts, over features. | |

| TVec< int > | class_counts |

| Class counts. | |

| bool | possible_targets_vary |

| Indicates whether the set of possible targets varies from one input vector to another. | |

| TVec< PP< FeatureSet > > | feat_sets |

| FeatureSets to apply on input. | |

| bool | input_dependent_posterior_estimation |

| Indication that different estimations of the posterior probability of a feature given a class should be used for different inputs. | |

| real | smoothing_constant |

| Add-delta smoothing constant. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| void | getProbs (const Vec &inputv, Vec &outputv) const |

| void | batchComputeOutputAndConfidence (VMat inputs, real probability, VMat outputs_and_confidence) const |

| Changes the reference_set and then calls the parent's class method. | |

| virtual void | use (VMat testset, VMat outputs) const |

| Changes the reference_set and then calls the parent's class method. | |

| virtual void | test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs=0, VMat testcosts=0) const |

| Changes the reference_set and then calls the parent's class method. | |

| virtual VMat | processDataSet (VMat dataset) const |

| Changes the reference_set and then calls the parent's class method. | |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes | |

| int | total_output_size |

| Total output size. | |

| int | total_feats_per_token |

| Number of features per input token for which a distributed representation is computed. | |

| int | n_feat_sets |

| Number of feature sets. | |

| Vec | feat_input |

| Feature input. | |

| VMat | val_string_reference_set |

| VMatrix used to get values to string mapping for input tokens. | |

| VMat | target_values_reference_set |

| Possible target values mapping. | |

| PP< PRandom > | rgen |

| Random number generator for parameters initialization. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| Finishes building the object according to the set options. | |

| int | my_argmax (const Vec &vec, int default_compare=0) const |

Private Attributes | |

| Vec | target_values |

| Vector of possible target values. | |

| Vec | output_comp |

| Vector for output computations. | |

| Vec | row |

| Row vector. | |

| TVec< TVec< int > > | feats |

| Features for each token. | |

| string | str |

| Temporary computation variable, used in fprop() and bprop(). Care must be taken when using these variables, since they are used by many different functions. | |

| int | nfeats |

Naive Bayes classifier on a feature set space.

Definition at line 50 of file FeatureSetNaiveBayesClassifier.h.

typedef PLearner PLearn::FeatureSetNaiveBayesClassifier::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 55 of file FeatureSetNaiveBayesClassifier.h.

| PLearn::FeatureSetNaiveBayesClassifier::FeatureSetNaiveBayesClassifier | ( | ) |

Definition at line 56 of file FeatureSetNaiveBayesClassifier.cc.

: rgen(new PRandom()), possible_targets_vary(0), input_dependent_posterior_estimation(0), smoothing_constant(0) {}

| PLearn::FeatureSetNaiveBayesClassifier::~FeatureSetNaiveBayesClassifier | ( | ) | [virtual] |

Definition at line 64 of file FeatureSetNaiveBayesClassifier.cc.

{

}

| string PLearn::FeatureSetNaiveBayesClassifier::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

| OptionList & PLearn::FeatureSetNaiveBayesClassifier::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

| RemoteMethodMap & PLearn::FeatureSetNaiveBayesClassifier::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

| bool PLearn::FeatureSetNaiveBayesClassifier::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

| Object * PLearn::FeatureSetNaiveBayesClassifier::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

| void PLearn::FeatureSetNaiveBayesClassifier::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

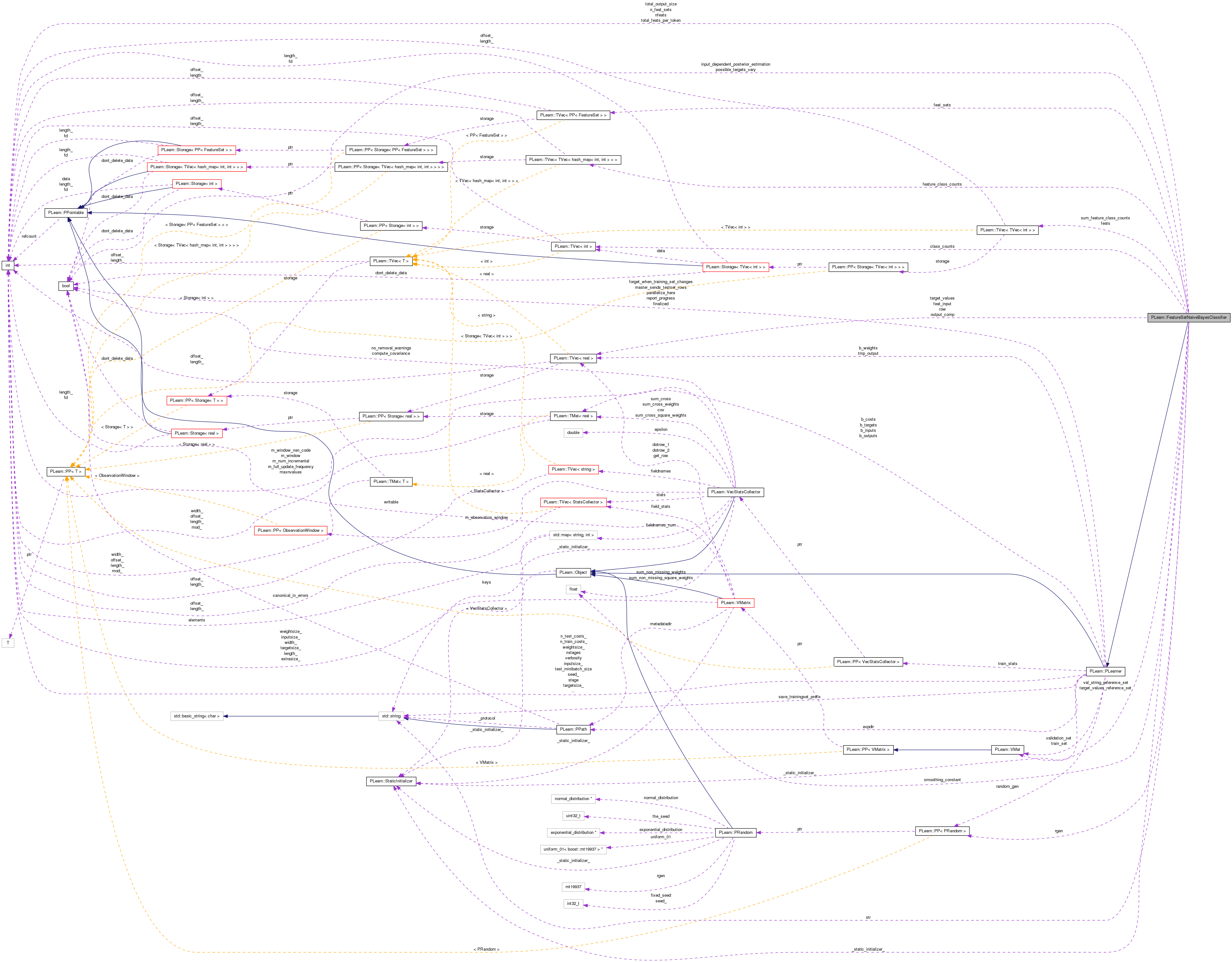

| void PLearn::FeatureSetNaiveBayesClassifier::batchComputeOutputAndConfidence | ( | VMat | inputs, |

| real | probability, | ||

| VMat | outputs_and_confidence | ||

| ) | const [protected, virtual] |

Changes the reference_set and then calls the parent's class method.

Reimplemented from PLearn::PLearner.

Definition at line 464 of file FeatureSetNaiveBayesClassifier.cc.

References PLearn::PLearner::batchComputeOutputAndConfidence(), PLearn::PLearner::train_set, and val_string_reference_set.

{

val_string_reference_set = inputs;

inherited::batchComputeOutputAndConfidence(inputs,probability,outputs_and_confidence);

val_string_reference_set = train_set;

}
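The listing above swaps a mutable reference member, calls the parent method, and then restores the member by hand. One way to make such a swap robust to early exits is a small scope guard. The sketch below is only an illustration under stated assumptions: `ScopedAssign` and `read_reference_during_call` are hypothetical names, not part of PLearn, and an `int` stands in for the `VMat` member. Note also that the listing restores `train_set` explicitly, whereas this sketch restores whatever value the slot held on entry; the two coincide only if the slot held `train_set` before the call.

```cpp
#include <cassert>
#include <utility>

// Hypothetical helper (not part of PLearn): assigns `temporary` to `slot`
// for the lifetime of the guard, then puts the previous value back.
template <typename T>
class ScopedAssign {
public:
    ScopedAssign(T& slot, T temporary)
        : slot_(slot), saved_(std::move(slot)) {
        slot_ = std::move(temporary);
    }
    // Runs on normal return and during stack unwinding alike.
    ~ScopedAssign() { slot_ = std::move(saved_); }

    ScopedAssign(const ScopedAssign&) = delete;
    ScopedAssign& operator=(const ScopedAssign&) = delete;

private:
    T& slot_;
    T saved_;
};

// Stand-in for a call such as inherited::batchComputeOutputAndConfidence():
// the "callee" reads the reference slot while the guard is active.
inline int read_reference_during_call(int& reference_slot, int call_argument) {
    ScopedAssign<int> guard(reference_slot, call_argument);
    return reference_slot;  // the temporary value is visible here
}
```

With such a guard the restore step cannot be skipped by an early return or an exception thrown inside the parent call.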

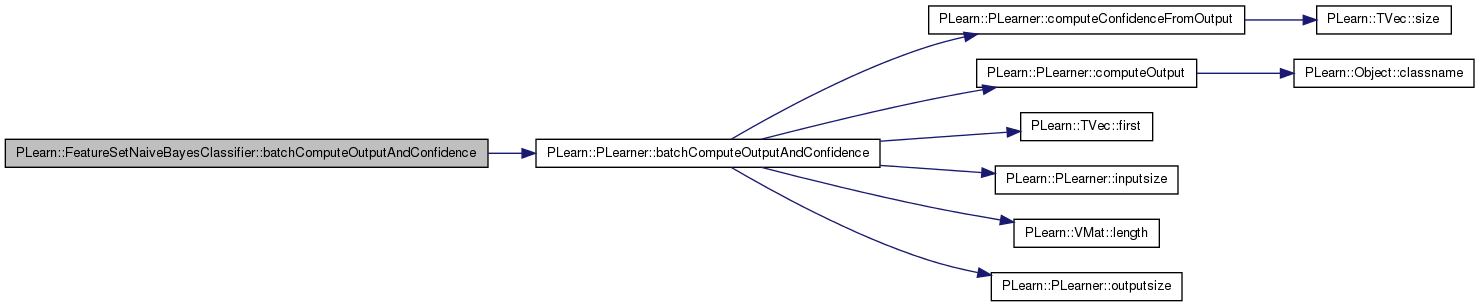

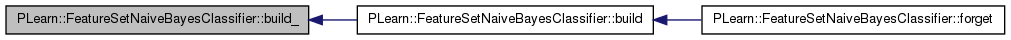

| void PLearn::FeatureSetNaiveBayesClassifier::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 108 of file FeatureSetNaiveBayesClassifier.cc.

References PLearn::PLearner::build(), and build_().

Referenced by forget().

{

inherited::build();

build_();

}

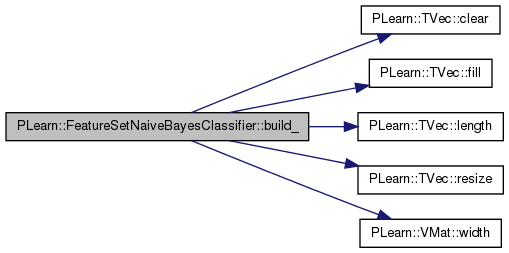

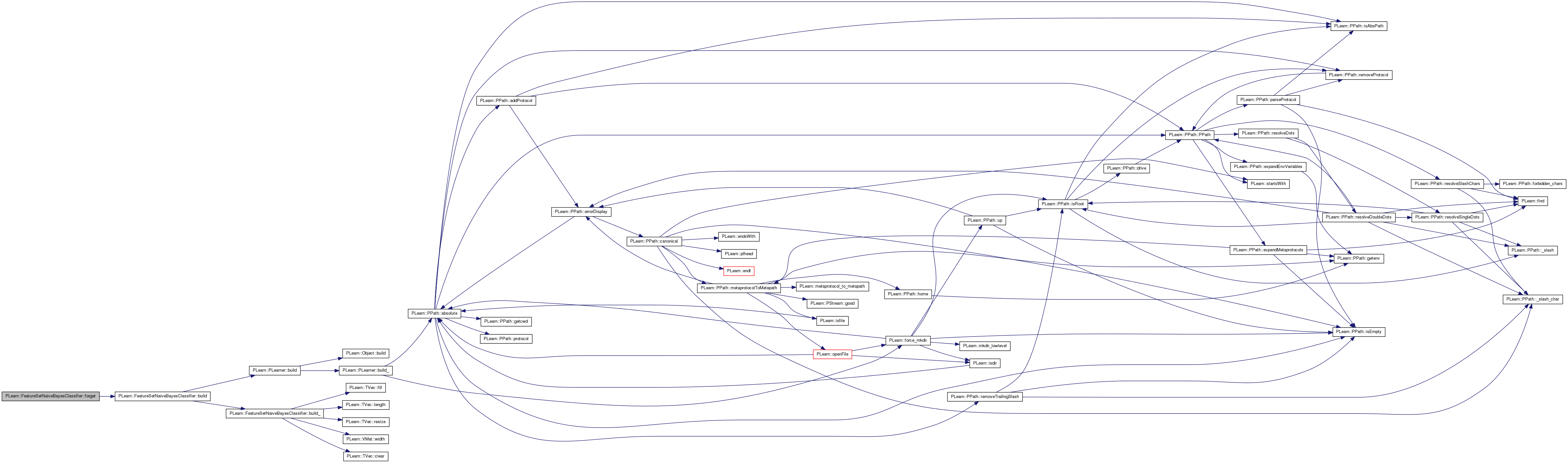

| void PLearn::FeatureSetNaiveBayesClassifier::build_ | ( | ) | [private] |

**** SUBCLASS WRITING: ****

This method should finish building of the object, according to set 'options', in *any* situation.

Typical situations include:

You can assume that the parent class' build_() has already been called.

A typical build method needs to know the inputsize(), targetsize() and outputsize(), and may also want to check whether train_set->hasWeights(). All these methods require a train_set to be set, so the first thing to do is check if(train_set) before doing any heavy building.

Note: build() is always called by setTrainingSet.

Reimplemented from PLearn::PLearner.

Definition at line 118 of file FeatureSetNaiveBayesClassifier.cc.

References class_counts, PLearn::TVec< T >::clear(), feat_sets, feats, feature_class_counts, PLearn::TVec< T >::fill(), i, input_dependent_posterior_estimation, PLearn::PLearner::inputsize_, j, PLearn::TVec< T >::length(), MISSING_VALUE, n_feat_sets, output_comp, PLERROR, PLearn::TVec< T >::resize(), rgen, row, PLearn::PLearner::seed_, PLearn::PLearner::stage, sum_feature_class_counts, target_values_reference_set, PLearn::PLearner::targetsize_, total_feats_per_token, total_output_size, PLearn::PLearner::train_set, val_string_reference_set, PLearn::PLearner::weightsize_, and PLearn::VMat::width().

Referenced by build().

{

// Don't do anything if we don't have a train_set

// It's the only one who knows the inputsize, targetsize and weightsize

if(inputsize_>=0 && targetsize_>=0 && weightsize_>=0)

{

if(targetsize_ != 1)

PLERROR("In FeatureSetNaiveBayesClassifier::build_(): targetsize_ must be 1, not %d",targetsize_);

if(weightsize_ > 0)

PLERROR("In FeatureSetNaiveBayesClassifier::build_(): weightsize_ > 0 is not supported");

n_feat_sets = feat_sets.length();

if(n_feat_sets == 0)

PLERROR("In FeatureSetNaiveBayesClassifier::build_(): at least one FeatureSet must be provided\n");

if(inputsize_ % n_feat_sets != 0)

PLERROR("In FeatureSetNaiveBayesClassifier::build_(): feat_sets.length() must be a divisor of inputsize()");

PP<Dictionary> dict = train_set->getDictionary(inputsize_);

total_output_size = dict->size();

total_feats_per_token = 0;

for(int i=0; i<n_feat_sets; i++)

total_feats_per_token += feat_sets[i]->size();

if(stage <= 0)

{

if(input_dependent_posterior_estimation)

{

feature_class_counts.resize(inputsize_/n_feat_sets);

sum_feature_class_counts.resize(inputsize_/n_feat_sets);

for(int i=0; i<feature_class_counts.length(); i++)

{

feature_class_counts[i].resize(total_output_size);

sum_feature_class_counts[i].resize(total_output_size);

for(int j=0; j<total_output_size; j++)

{

feature_class_counts[i][j].clear();

sum_feature_class_counts[i][j] = 0;

}

}

class_counts.resize(total_output_size);

class_counts.fill(0);

}

else

{

feature_class_counts.resize(1);

sum_feature_class_counts.resize(1);

feature_class_counts[0].resize(total_output_size);

sum_feature_class_counts[0].resize(total_output_size);

for(int j=0; j<total_output_size; j++)

{

feature_class_counts[0][j].clear();

sum_feature_class_counts[0][j] = 0;

}

class_counts.resize(total_output_size);

class_counts.fill(0);

}

}

output_comp.resize(total_output_size);

row.resize(train_set->width());

row.fill(MISSING_VALUE);

feats.resize(inputsize_);

// Making sure that all feats[i] have non null storage...

for(int i=0; i<feats.length(); i++)

{

feats[i].resize(1);

feats[i].resize(0);

}

val_string_reference_set = train_set;

target_values_reference_set = train_set;

if (seed_>=0)

rgen->manual_seed(seed_);

}

}
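The sizing logic in the listing above can be summarized as follows: the first dimension of `feature_class_counts` and `sum_feature_class_counts` is `inputsize_/n_feat_sets` when `input_dependent_posterior_estimation` is set, and 1 otherwise. The sketch below restates that rule as a standalone function. The function name and the use of exceptions in place of `PLERROR` are assumptions made for illustration; it is not PLearn code.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical free function following the checks in build_():
// returns the size of the first dimension of the count tables.
inline int count_table_positions(int inputsize, int n_feat_sets,
                                 bool input_dependent_posterior_estimation) {
    if (inputsize < 0)
        throw std::invalid_argument("inputsize must be known (>= 0)");
    if (n_feat_sets <= 0)
        throw std::invalid_argument("at least one FeatureSet must be provided");
    if (inputsize % n_feat_sets != 0)
        throw std::invalid_argument("n_feat_sets must be a divisor of inputsize");
    // One table per input token position, or a single shared table.
    return input_dependent_posterior_estimation ? inputsize / n_feat_sets : 1;
}
```

For example, with 6 input columns and 2 feature sets, the sketch yields 3 tables in the input-dependent case and 1 table otherwise.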

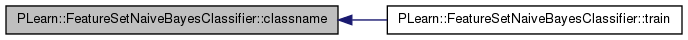

| string PLearn::FeatureSetNaiveBayesClassifier::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

Referenced by train().

| void PLearn::FeatureSetNaiveBayesClassifier::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the weighted costs from already computed output. The costs should correspond to the cost names returned by getTestCostNames().

NOTE: In exotic cases, the cost may also depend on some information in the input, which is why the method also gets to see it.

Implements PLearn::PLearner.

Definition at line 203 of file FeatureSetNaiveBayesClassifier.cc.

References PLERROR.

{

PLERROR("In FeatureSetNaiveBayesClassifier::computeCostsFromOutputs(): output is not enough to compute costs");

}

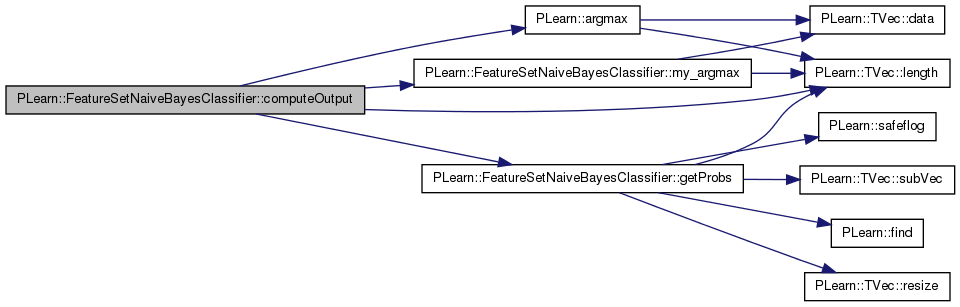

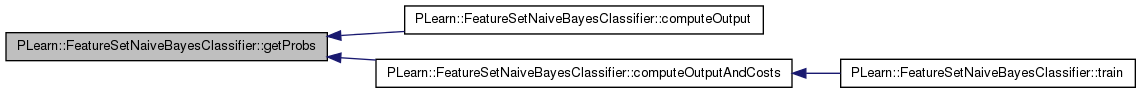

| void PLearn::FeatureSetNaiveBayesClassifier::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 231 of file FeatureSetNaiveBayesClassifier.cc.

References PLearn::argmax(), getProbs(), PLearn::TVec< T >::length(), my_argmax(), output_comp, possible_targets_vary, rgen, and target_values.

{

getProbs(inputv,output_comp);

if(possible_targets_vary)

outputv[0] = target_values[my_argmax(output_comp,rgen->uniform_multinomial_sample(output_comp.length()))];

else

outputv[0] = argmax(output_comp);

}

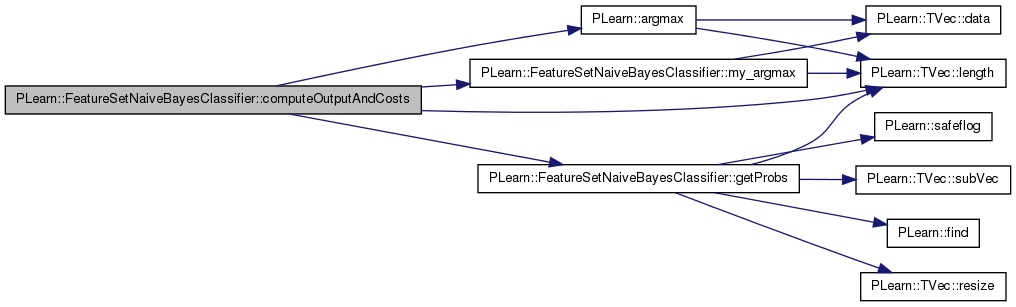

| void PLearn::FeatureSetNaiveBayesClassifier::computeOutputAndCosts | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | output, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Default calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 243 of file FeatureSetNaiveBayesClassifier.cc.

References PLearn::argmax(), getProbs(), PLearn::TVec< T >::length(), my_argmax(), output_comp, possible_targets_vary, rgen, and target_values.

Referenced by train().

{

getProbs(inputv,output_comp);

if(possible_targets_vary)

outputv[0] = target_values[my_argmax(output_comp,rgen->uniform_multinomial_sample(output_comp.length()))];

else

outputv[0] = argmax(output_comp);

costsv[0] = (outputv[0] == targetv[0] ? 0 : 1);

}
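The single cost written to `costsv[0]` above is a 0/1 classification error, reported under the name "class_error". As a minimal sketch of how that cost aggregates over a data set, the following standalone functions compute it per example and as a mean. Both function names are hypothetical; in PLearn the averaging is done by the `VecStatsCollector`, not by code like this.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// 0/1 cost for one example, matching (outputv[0] == targetv[0] ? 0 : 1).
inline double class_error(double predicted, double target) {
    return predicted == target ? 0.0 : 1.0;
}

// Mean of the 0/1 cost over a batch of predictions (illustrative only).
inline double mean_class_error(const std::vector<double>& predicted,
                               const std::vector<double>& targets) {
    if (predicted.empty() || predicted.size() != targets.size())
        throw std::invalid_argument("need two non-empty vectors of equal size");
    double total = 0.0;
    for (std::size_t i = 0; i < predicted.size(); ++i)
        total += class_error(predicted[i], targets[i]);
    return total / static_cast<double>(predicted.size());
}
```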

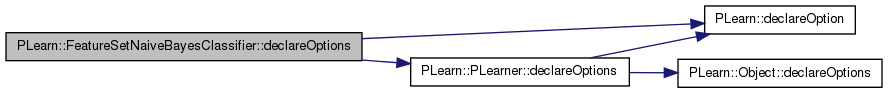

| void PLearn::FeatureSetNaiveBayesClassifier::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 68 of file FeatureSetNaiveBayesClassifier.cc.

References PLearn::OptionBase::buildoption, class_counts, PLearn::declareOption(), PLearn::PLearner::declareOptions(), feat_sets, feature_class_counts, input_dependent_posterior_estimation, PLearn::OptionBase::learntoption, possible_targets_vary, smoothing_constant, and sum_feature_class_counts.

{

declareOption(ol, "possible_targets_vary", &FeatureSetNaiveBayesClassifier::possible_targets_vary,

OptionBase::buildoption,

"Indication that the set of possible targets vary from\n"

"one input vector to another.\n");

declareOption(ol, "feat_sets", &FeatureSetNaiveBayesClassifier::feat_sets,

OptionBase::buildoption,

"FeatureSets to apply on input.\n");

declareOption(ol, "input_dependent_posterior_estimation", &FeatureSetNaiveBayesClassifier::input_dependent_posterior_estimation,

OptionBase::buildoption,

"Indication that different estimations of\n"

"the posterior probability of a feature given a class\n"

"should be used for different inputs.\n");

declareOption(ol, "smoothing_constant", &FeatureSetNaiveBayesClassifier::smoothing_constant,

OptionBase::buildoption,

"Add-delta smoothing constant.\n");

declareOption(ol, "feature_class_counts", &FeatureSetNaiveBayesClassifier::feature_class_counts,

OptionBase::learntoption,

"Feature-class pair counts.\n");

declareOption(ol, "sum_feature_class_counts", &FeatureSetNaiveBayesClassifier::sum_feature_class_counts,

OptionBase::learntoption,

"Sums of feature-class pair counts, over features.\n");

declareOption(ol, "class_counts", &FeatureSetNaiveBayesClassifier::class_counts,

OptionBase::learntoption,

"Class counts.\n");

inherited::declareOptions(ol);

}

| static const PPath& PLearn::FeatureSetNaiveBayesClassifier::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 124 of file FeatureSetNaiveBayesClassifier.h.

:

static void declareOptions(OptionList& ol);

| FeatureSetNaiveBayesClassifier * PLearn::FeatureSetNaiveBayesClassifier::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

| void PLearn::FeatureSetNaiveBayesClassifier::forget | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!)

A typical forget() method should do the following:

This method is typically called by the build_() method, after it has finished setting up the parameters, and if it deemed useful to set or reset the learner in its fresh state. (remember build may be called after modifying options that do not necessarily require the learner to restart from a fresh state...) forget is also called by the setTrainingSet method, after calling build(), so it will generally be called TWICE during setTrainingSet!

Reimplemented from PLearn::PLearner.

Definition at line 257 of file FeatureSetNaiveBayesClassifier.cc.

References build(), PLearn::PLearner::stage, and PLearn::PLearner::train_set.

| OptionList & PLearn::FeatureSetNaiveBayesClassifier::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

| OptionMap & PLearn::FeatureSetNaiveBayesClassifier::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

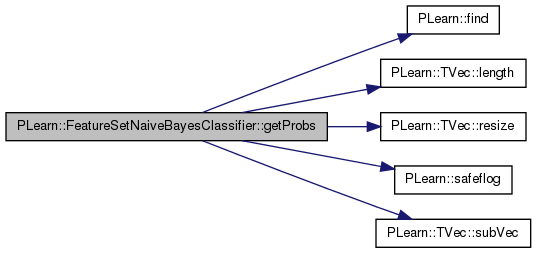

| void PLearn::FeatureSetNaiveBayesClassifier::getProbs | ( | const Vec & | inputv, |

| Vec & | outputv | ||

| ) | const [protected] |

Definition at line 395 of file FeatureSetNaiveBayesClassifier.cc.

References class_counts, feat_sets, feats, feature_class_counts, PLearn::find(), i, input_dependent_posterior_estimation, PLearn::PLearner::inputsize_, j, PLearn::TVec< T >::length(), n_feat_sets, nfeats, possible_targets_vary, PLearn::TVec< T >::resize(), row, PLearn::safeflog(), smoothing_constant, str, PLearn::TVec< T >::subVec(), sum_feature_class_counts, target_values, target_values_reference_set, total_feats_per_token, total_output_size, and val_string_reference_set.

Referenced by computeOutput(), and computeOutputAndCosts().

{

// Get possible target values

if(possible_targets_vary)

{

row.subVec(0,inputsize_) << inputv;

target_values_reference_set->getValues(row,inputsize_,

target_values);

outputv.resize(target_values.length());

}

// Get features

nfeats = 0;

for(int i=0; i<inputsize_; i++)

{

str = val_string_reference_set->getValString(i,inputv[i]);

feat_sets[i%n_feat_sets]->getFeatures(str,feats[i]);

nfeats += feats[i].length();

}

int id=0;

if(possible_targets_vary)

{

for(int i=0; i<target_values.length(); i++)

{

outputv[i] = safeflog(class_counts[(int)target_values[i]]);

for(int k=0; k<inputsize_; k++)

{

if(input_dependent_posterior_estimation)

id = k/n_feat_sets;

else

id = 0;

for(int j=0; j<feats[k].length(); j++)

{

outputv[i] -= safeflog(sum_feature_class_counts[id][(int)target_values[i]] + smoothing_constant*total_feats_per_token);

if(feature_class_counts[id][(int)target_values[i]].find(feats[k][j]) == feature_class_counts[id][(int)target_values[i]].end())

outputv[i] += safeflog(smoothing_constant);

else

outputv[i] += safeflog(feature_class_counts[id][(int)target_values[i]][feats[k][j]]+smoothing_constant);

}

}

}

}

else

{

for(int i=0; i<total_output_size; i++)

{

outputv[i] = safeflog(class_counts[i]);

for(int k=0; k<inputsize_; k++)

{

if(input_dependent_posterior_estimation)

id = k/n_feat_sets;

else

id = 0;

for(int j=0; j<feats[k].length(); j++)

{

outputv[i] -= safeflog(sum_feature_class_counts[id][i] + smoothing_constant*total_feats_per_token);

if(feature_class_counts[id][i].find(feats[k][j]) == feature_class_counts[id][i].end())

outputv[i] += safeflog(smoothing_constant);

else

outputv[i] += safeflog(feature_class_counts[id][i][feats[k][j]]+smoothing_constant);

}

}

}

}

}
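For each candidate class, the listing above accumulates a log-score: the log of the class count, plus, for every extracted feature, the log of the smoothed feature-class count minus the log of the smoothed total. The sketch below reproduces that arithmetic for a single class and a single count table (the case where `input_dependent_posterior_estimation` is false). `ClassCounts`, `log_score` and `safe_log` are hypothetical names; in particular `safe_log` is only a stand-in and is not claimed to match the behavior of PLearn's `safeflog`.

```cpp
#include <cassert>
#include <cmath>
#include <unordered_map>
#include <vector>

// Assumed stand-in for safeflog: maps non-positive arguments to a very
// negative finite value instead of producing -inf or NaN.
inline double safe_log(double x) {
    return x > 0.0 ? std::log(x) : -1e30;
}

// Counts gathered for one class (hypothetical container).
struct ClassCounts {
    int class_count = 0;                         // examples seen with this class
    int sum_feature_count = 0;                   // feature occurrences for this class
    std::unordered_map<int, int> feature_count;  // occurrences per feature id
};

// Add-delta smoothed log-score of one class for a list of feature ids.
inline double log_score(const ClassCounts& c, const std::vector<int>& features,
                        double smoothing_constant, int total_feats_per_token) {
    double score = safe_log(c.class_count);
    const double log_denominator =
        safe_log(c.sum_feature_count + smoothing_constant * total_feats_per_token);
    for (int f : features) {
        auto it = c.feature_count.find(f);
        const int count = (it == c.feature_count.end()) ? 0 : it->second;
        score += safe_log(count + smoothing_constant) - log_denominator;
    }
    return score;
}
```

Since the constructor shown earlier initializes `smoothing_constant` to 0, a feature that was never seen with a class contributes the log of zero unless the option is set to a positive value, so the result then depends entirely on how the safe logarithm treats that argument.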

| RemoteMethodMap & PLearn::FeatureSetNaiveBayesClassifier::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 54 of file FeatureSetNaiveBayesClassifier.cc.

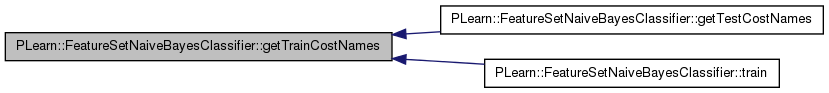

| TVec< string > PLearn::FeatureSetNaiveBayesClassifier::getTestCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the costs computed by computeCostsFromOutputs.

Implements PLearn::PLearner.

Definition at line 276 of file FeatureSetNaiveBayesClassifier.cc.

References getTrainCostNames().

{

return getTrainCostNames();

}

| TVec< string > PLearn::FeatureSetNaiveBayesClassifier::getTrainCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 266 of file FeatureSetNaiveBayesClassifier.cc.

Referenced by getTestCostNames(), and train().

{

TVec<string> ret(1);

ret[0] = "class_error";

return ret;

}

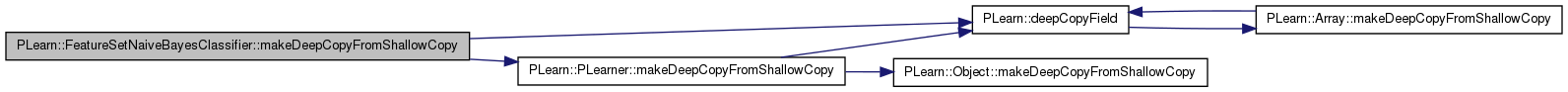

| void PLearn::FeatureSetNaiveBayesClassifier::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 284 of file FeatureSetNaiveBayesClassifier.cc.

References class_counts, PLearn::deepCopyField(), feat_sets, feats, feature_class_counts, PLearn::PLearner::makeDeepCopyFromShallowCopy(), output_comp, rgen, row, sum_feature_class_counts, target_values, target_values_reference_set, and val_string_reference_set.

{

inherited::makeDeepCopyFromShallowCopy(copies);

// Private variables

deepCopyField(target_values,copies);

deepCopyField(output_comp,copies);

deepCopyField(row,copies);

deepCopyField(feats,copies);

// Protected variables

deepCopyField(val_string_reference_set,copies);

deepCopyField(target_values_reference_set,copies);

deepCopyField(rgen,copies);

// Public variables

deepCopyField(feature_class_counts,copies);

deepCopyField(sum_feature_class_counts,copies);

deepCopyField(class_counts,copies);

// Public build options

deepCopyField(feat_sets,copies);

}

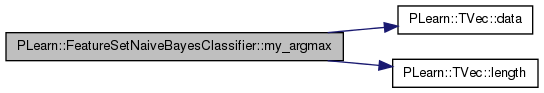

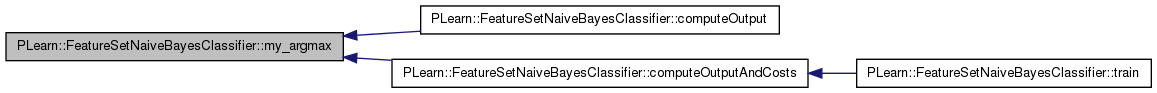

| int PLearn::FeatureSetNaiveBayesClassifier::my_argmax | ( | const Vec & | vec, |

| int | default_compare = 0 |

||

| ) | const [private] |

Definition at line 209 of file FeatureSetNaiveBayesClassifier.cc.

References PLearn::TVec< T >::data(), i, PLearn::TVec< T >::length(), and PLERROR.

Referenced by computeOutput(), and computeOutputAndCosts().

{

#ifdef BOUNDCHECK

if(vec.length()==0)

PLERROR("IN int argmax(const TVec<T>& vec) vec has zero length");

#endif

real* v = vec.data();

int indexmax = default_compare;

real maxval = v[default_compare];

for(int i=0; i<vec.length(); i++)

if(v[i]>maxval)

{

maxval = v[i];

indexmax = i;

}

return indexmax;

}
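Because the comparison in the listing above is strict (`v[i]>maxval`), the `default_compare` index acts as a tie-breaker: if it already points at a maximal entry, it is returned; otherwise the first maximal index is returned. The standalone sketch below shows the same logic on a `std::vector`. The function name and the bounds check via an exception are assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Argmax that starts from a caller-chosen index; ties are resolved in
// favour of that index because only strictly larger values replace it.
inline std::size_t argmax_with_default(const std::vector<double>& values,
                                       std::size_t default_index = 0) {
    if (default_index >= values.size())
        throw std::out_of_range("default_index must index into values");
    std::size_t index_max = default_index;
    double max_value = values[default_index];
    for (std::size_t i = 0; i < values.size(); ++i) {
        if (values[i] > max_value) {
            max_value = values[i];
            index_max = i;
        }
    }
    return index_max;
}
```

In `computeOutput()` the default index comes from `rgen->uniform_multinomial_sample(...)`, which appears to make the choice among tied classes depend on the random generator rather than always favoring the lowest index.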

| int PLearn::FeatureSetNaiveBayesClassifier::outputsize | ( | ) | const [virtual] |

SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options.

Implements PLearn::PLearner.

Definition at line 311 of file FeatureSetNaiveBayesClassifier.cc.

References PLearn::PLearner::targetsize_.

Referenced by train().

{

return targetsize_;

}

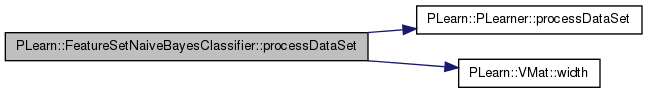

| VMat PLearn::FeatureSetNaiveBayesClassifier::processDataSet | ( | VMat | dataset | ) | const [protected, virtual] |

Changes the reference_set and then calls the parent's class method.

Reimplemented from PLearn::PLearner.

Definition at line 494 of file FeatureSetNaiveBayesClassifier.cc.

References PLearn::PLearner::processDataSet(), target_values_reference_set, PLearn::PLearner::train_set, val_string_reference_set, and PLearn::VMat::width().

{

VMat ret;

val_string_reference_set = dataset;

// Assumes it contains the target part information

if(dataset->width() > train_set->inputsize())

target_values_reference_set = dataset;

ret = inherited::processDataSet(dataset);

val_string_reference_set = train_set;

if(dataset->width() > train_set->inputsize())

target_values_reference_set = train_set;

return ret;

}

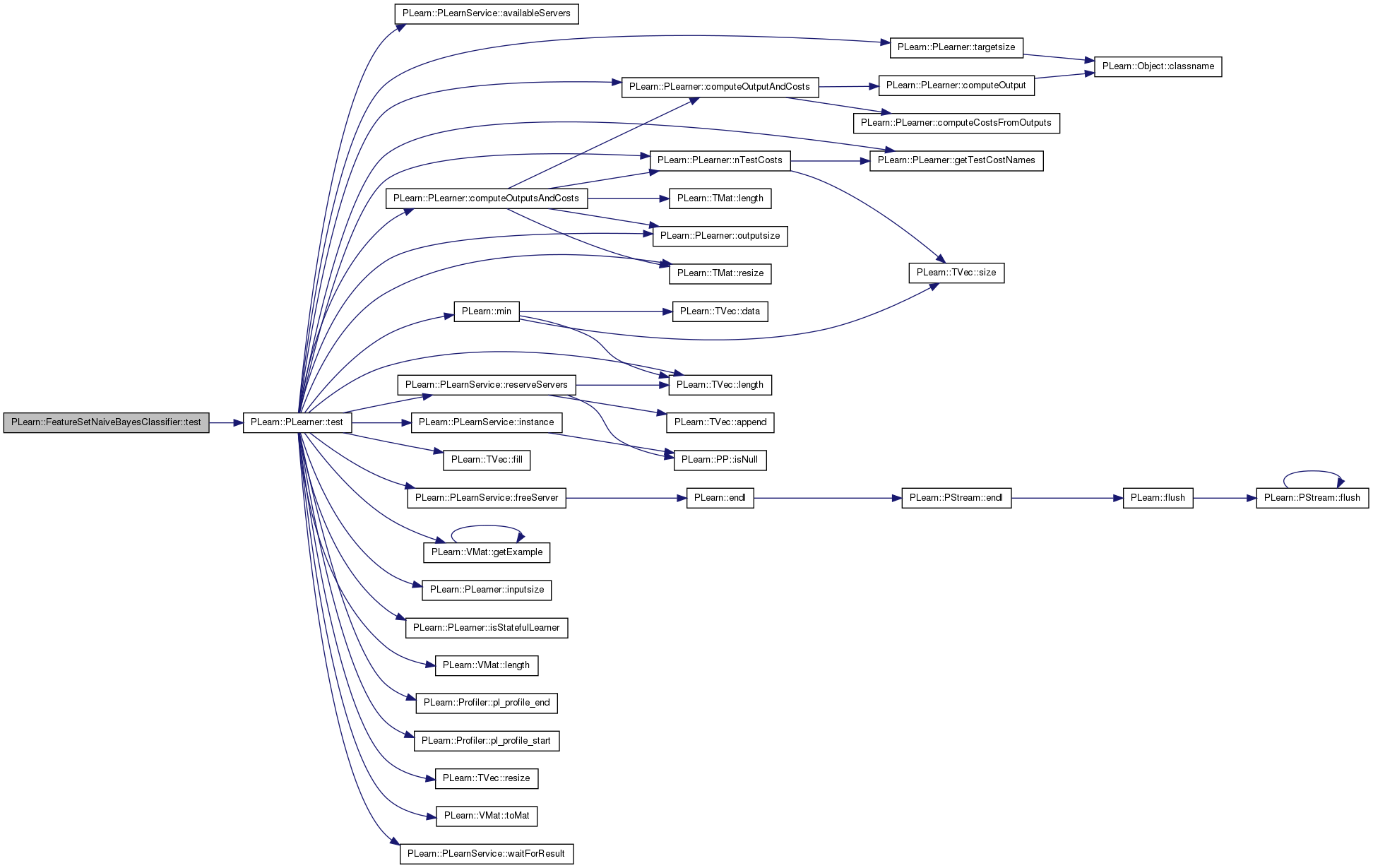

| void PLearn::FeatureSetNaiveBayesClassifier::test | ( | VMat | testset, |

| PP< VecStatsCollector > | test_stats, | ||

| VMat | testoutputs = 0, |

||

| VMat | testcosts = 0 |

||

| ) | const [protected, virtual] |

Changes the reference_set and then calls the parent's class method.

Reimplemented from PLearn::PLearner.

Definition at line 484 of file FeatureSetNaiveBayesClassifier.cc.

References target_values_reference_set, PLearn::PLearner::test(), PLearn::PLearner::train_set, and val_string_reference_set.

{

val_string_reference_set = testset;

target_values_reference_set = testset;

inherited::test(testset,test_stats,testoutputs,testcosts);

val_string_reference_set = train_set;

target_values_reference_set = train_set;

}

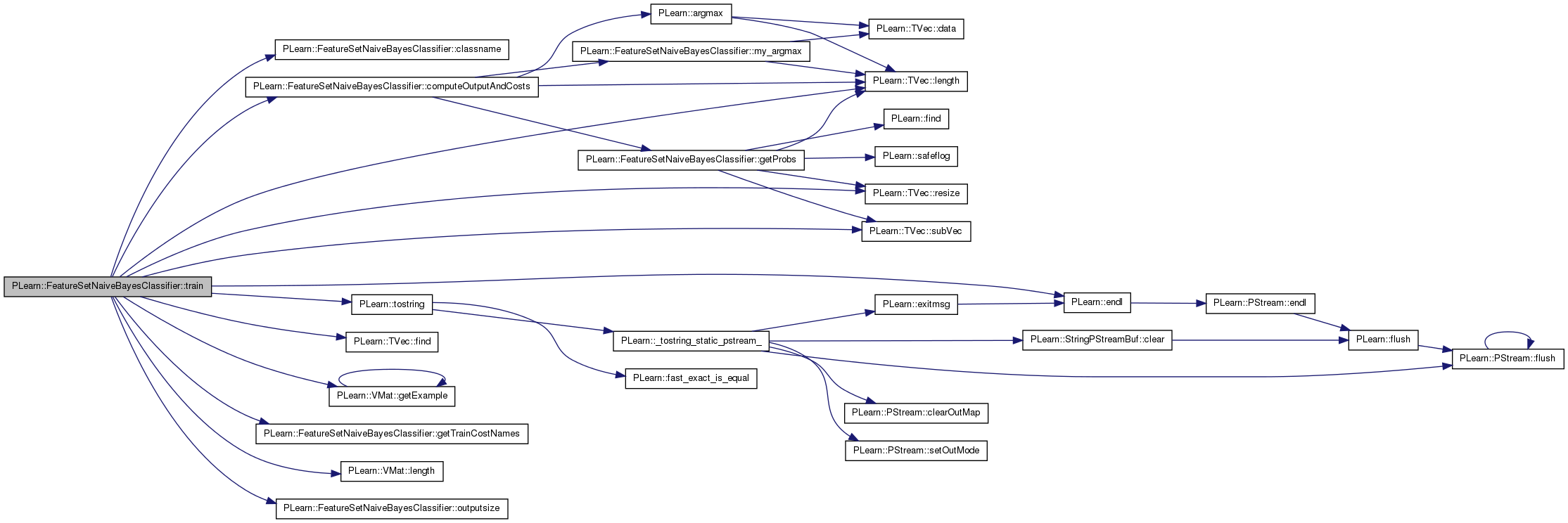

| void PLearn::FeatureSetNaiveBayesClassifier::train | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

The role of the train method is to bring the learner up to stage==nstages, updating the stats with training costs measured on-line in the process.

TYPICAL CODE:

static Vec input; // static so we don't reallocate/deallocate memory each time...

static Vec target; // (but be careful that static means shared!)

input.resize(inputsize()); // the train_set's inputsize()

target.resize(targetsize()); // the train_set's targetsize()

real weight;

if(!train_stats) // make a default stats collector, in case there's none

train_stats = new VecStatsCollector();

if(nstages<stage) // asking to revert to a previous stage!

forget(); // reset the learner to stage=0

while(stage<nstages)

{

// clear statistics of previous epoch

train_stats->forget();

//... train for 1 stage, and update train_stats,

// using train_set->getSample(input, target, weight);

// and train_stats->update(train_costs)

++stage;

train_stats->finalize(); // finalize statistics for this epoch

}

Implements PLearn::PLearner.

Definition at line 318 of file FeatureSetNaiveBayesClassifier.cc.

References class_counts, classname(), computeOutputAndCosts(), PLearn::endl(), feat_sets, feats, feature_class_counts, PLearn::TVec< T >::find(), PLearn::VMat::getExample(), getTrainCostNames(), i, input_dependent_posterior_estimation, PLearn::PLearner::inputsize_, j, PLearn::TVec< T >::length(), PLearn::VMat::length(), n_feat_sets, nfeats, output_comp, outputsize(), PLERROR, possible_targets_vary, PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), row, PLearn::PLearner::stage, str, PLearn::TVec< T >::subVec(), sum_feature_class_counts, target_values, PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, and PLearn::PLearner::verbosity.

{

if(!train_set)

PLERROR("In FeatureSetNaiveBayesClassifier::train, you did not setTrainingSet");

//if(!train_stats)

// PLERROR("In FeatureSetNaiveBayesClassifier::train, you did not setTrainStatsCollector");

Vec outputv(outputsize());

Vec costsv(getTrainCostNames().length());

Vec inputv(train_set->inputsize());

Vec targetv(train_set->targetsize());

real sample_weight=1;

int l = train_set->length();

if(stage == 0)

{

PP<ProgressBar> pb;

if(report_progress)

pb = new ProgressBar("Training " + classname()

+ " from stage 0 to " + tostring(l), l);

int id = 0;

for(int t=0; t<l;t++)

{

train_set->getExample(t,inputv,targetv,sample_weight);

// Get possible target values

if(possible_targets_vary)

{

row.subVec(0,inputsize_) << inputv;

train_set->getValues(row,inputsize_,

target_values);

output_comp.resize(target_values.length());

}

// Get features

nfeats = 0;

for(int i=0; i<inputsize_; i++)

{

str = train_set->getValString(i,inputv[i]);

feat_sets[i%n_feat_sets]->getFeatures(str,feats[i]);

nfeats += feats[i].length();

}

for(int i=0; i<inputsize_; i++)

{

for(int j=0; j<feats[i].length(); j++)

{

if(input_dependent_posterior_estimation)

id = i/n_feat_sets;

else

id = 0;

if(feature_class_counts[id][(int)targetv[0]].find(feats[i][j]) == feature_class_counts[id][(int)targetv[0]].end())

feature_class_counts[id][(int)targetv[0]][feats[i][j]] = 1;

else

feature_class_counts[id][(int)targetv[0]][feats[i][j]] += 1;

sum_feature_class_counts[id][(int)targetv[0]] += 1;

}

}

class_counts[(int)targetv[0]] += 1;

computeOutputAndCosts(inputv, targetv, outputv, costsv);

train_stats->update(costsv);

if(pb) pb->update(t);

}

stage = 1;

train_stats->finalize();

if(verbosity>1)

cout << "Epoch " << stage << " train objective: "

<< train_stats->getMean() << endl;

}

}
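Training, as listed above, is a single counting pass: every feature extracted from an example increments the feature-class count and the per-class total, and the example's class count is incremented once. The following standalone sketch shows that bookkeeping for the case of a single shared table (`id = 0`, that is, `input_dependent_posterior_estimation` false). `NaiveBayesCounts` and `add_example` are hypothetical names, and `std::unordered_map` replaces the `hash_map` used by the class.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Hypothetical container for the three count tables, indexed by class.
struct NaiveBayesCounts {
    std::vector<std::unordered_map<int, int> > feature_class_counts;  // [class][feature]
    std::vector<int> sum_feature_class_counts;                        // [class]
    std::vector<int> class_counts;                                    // [class]

    explicit NaiveBayesCounts(std::size_t n_classes)
        : feature_class_counts(n_classes),
          sum_feature_class_counts(n_classes, 0),
          class_counts(n_classes, 0) {}

    // Accumulate one training example given its feature ids and class index.
    void add_example(const std::vector<int>& features, std::size_t target) {
        for (int f : features) {
            // operator[] value-initialises a missing entry to 0 before ++.
            ++feature_class_counts.at(target)[f];
            ++sum_feature_class_counts.at(target);
        }
        ++class_counts.at(target);
    }
};
```

Note that the listing calls `computeOutputAndCosts()` on each example right after its counts have been added, so the reported training error is measured with the counts accumulated up to and including that example.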

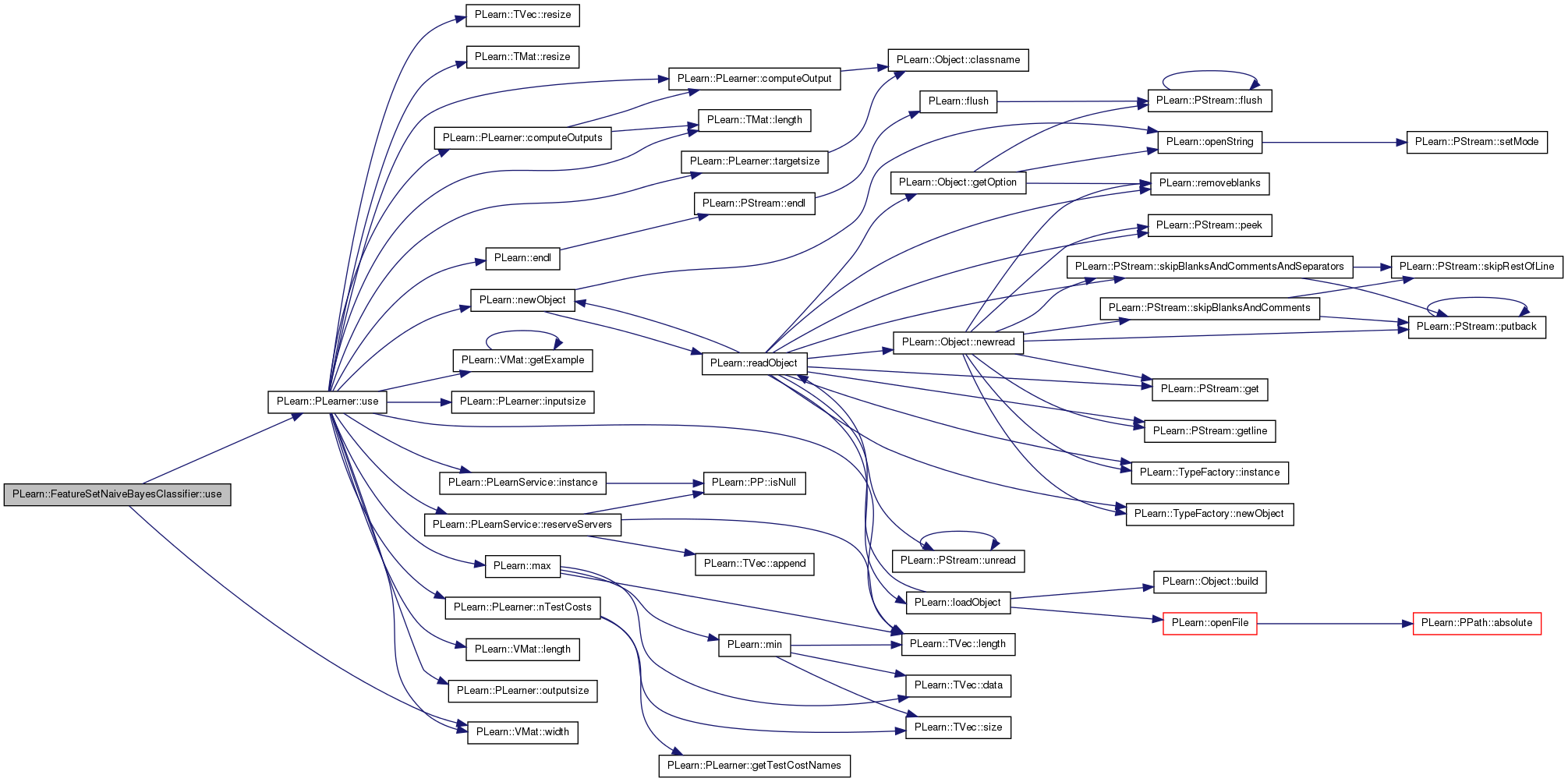

| void PLearn::FeatureSetNaiveBayesClassifier::use | ( | VMat | testset, |

| VMat | outputs | ||

| ) | const [protected, virtual] |

Changes the reference_set and then calls the parent's class method.

Reimplemented from PLearn::PLearner.

Definition at line 472 of file FeatureSetNaiveBayesClassifier.cc.

References target_values_reference_set, PLearn::PLearner::train_set, PLearn::PLearner::use(), val_string_reference_set, and PLearn::VMat::width().

{

val_string_reference_set = testset;

if(testset->width() > train_set->inputsize())

target_values_reference_set = testset;

target_values_reference_set = testset;

inherited::use(testset,outputs);

val_string_reference_set = train_set;

if(testset->width() > train_set->inputsize())

target_values_reference_set = train_set;

}

StaticInitializer PLearn::FeatureSetNaiveBayesClassifier::_static_initializer_ [static] |

Reimplemented from PLearn::PLearner.

Definition at line 124 of file FeatureSetNaiveBayesClassifier.h.

TVec<int> PLearn::FeatureSetNaiveBayesClassifier::class_counts |

Class counts.

Definition at line 97 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), declareOptions(), getProbs(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::FeatureSetNaiveBayesClassifier::feat_input [mutable, protected] |

Feature input.

Definition at line 83 of file FeatureSetNaiveBayesClassifier.h.

TVec< PP<FeatureSet> > PLearn::FeatureSetNaiveBayesClassifier::feat_sets |

FeatureSets to apply on input.

Definition at line 107 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), declareOptions(), getProbs(), makeDeepCopyFromShallowCopy(), and train().

TVec< TVec<int> > PLearn::FeatureSetNaiveBayesClassifier::feats [mutable, private] |

Features for each token.

Definition at line 64 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), getProbs(), makeDeepCopyFromShallowCopy(), and train().

| TVec< TVec< hash_map<int,int> > > PLearn::FeatureSetNaiveBayesClassifier::feature_class_counts |

Feature-class pair counts.

Definition at line 93 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), declareOptions(), getProbs(), makeDeepCopyFromShallowCopy(), and train().

bool PLearn::FeatureSetNaiveBayesClassifier::input_dependent_posterior_estimation |

Indication that different estimations of the posterior probability of a feature given a class should be used for different inputs.

Definition at line 111 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), declareOptions(), getProbs(), and train().

int PLearn::FeatureSetNaiveBayesClassifier::n_feat_sets [protected] |

Number of feature sets.

Definition at line 81 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), getProbs(), and train().

int PLearn::FeatureSetNaiveBayesClassifier::nfeats [mutable, private] |

Definition at line 70 of file FeatureSetNaiveBayesClassifier.h.

Referenced by getProbs(), and train().

Vec PLearn::FeatureSetNaiveBayesClassifier::output_comp [mutable, private] |

Vector for output computations.

Definition at line 60 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), computeOutput(), computeOutputAndCosts(), makeDeepCopyFromShallowCopy(), and train().

bool PLearn::FeatureSetNaiveBayesClassifier::possible_targets_vary |

Indicates whether the set of possible targets varies from one input vector to another.

Definition at line 105 of file FeatureSetNaiveBayesClassifier.h.

Referenced by computeOutput(), computeOutputAndCosts(), declareOptions(), getProbs(), and train().

PP<PRandom> PLearn::FeatureSetNaiveBayesClassifier::rgen [protected] |

Random number generator for parameters initialization.

Definition at line 89 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), computeOutput(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

Vec PLearn::FeatureSetNaiveBayesClassifier::row [mutable, private] |

Row vector.

Definition at line 62 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), getProbs(), makeDeepCopyFromShallowCopy(), and train().

real PLearn::FeatureSetNaiveBayesClassifier::smoothing_constant |

Add-delta smoothing constant.

Definition at line 113 of file FeatureSetNaiveBayesClassifier.h.

Referenced by declareOptions(), and getProbs().

string PLearn::FeatureSetNaiveBayesClassifier::str [mutable, private] |

Temporary computation variable, used in fprop() and bprop(). Care must be taken when using these variables, since they are used by many different functions.

Definition at line 69 of file FeatureSetNaiveBayesClassifier.h.

Referenced by getProbs(), and train().

TVec< TVec<int> > PLearn::FeatureSetNaiveBayesClassifier::sum_feature_class_counts |

Sums of feature-class pair counts, over features.

Definition at line 95 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), declareOptions(), getProbs(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::FeatureSetNaiveBayesClassifier::target_values [mutable, private] |

Vector of possible target values.

Definition at line 58 of file FeatureSetNaiveBayesClassifier.h.

Referenced by computeOutput(), computeOutputAndCosts(), getProbs(), makeDeepCopyFromShallowCopy(), and train().

VMat PLearn::FeatureSetNaiveBayesClassifier::target_values_reference_set [mutable, protected] |

Possible target values mapping.

Definition at line 87 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), getProbs(), makeDeepCopyFromShallowCopy(), processDataSet(), test(), and use().

int PLearn::FeatureSetNaiveBayesClassifier::total_feats_per_token [protected] |

Number of features per input token for which a distributed representation is computed.

Definition at line 79 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), and getProbs().

int PLearn::FeatureSetNaiveBayesClassifier::total_output_size [protected] |

Total output size.

Definition at line 75 of file FeatureSetNaiveBayesClassifier.h.

Referenced by build_(), and getProbs().

VMat PLearn::FeatureSetNaiveBayesClassifier::val_string_reference_set [mutable, protected] |

VMatrix used to get values to string mapping for input tokens.

Definition at line 85 of file FeatureSetNaiveBayesClassifier.h.

Referenced by batchComputeOutputAndConfidence(), build_(), getProbs(), makeDeepCopyFromShallowCopy(), processDataSet(), test(), and use().

1.7.4
