PLearn 0.1

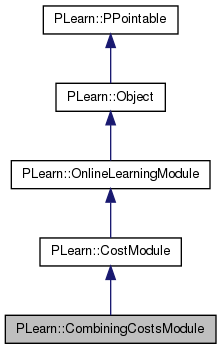

Combine several CostModules with the same input and target.

#include <CombiningCostsModule.h>

Public Member Functions | |

| CombiningCostsModule () | |

| Default constructor. | |

| virtual void | bpropAccUpdate (const TVec< Mat * > &ports_value, const TVec< Mat * > &ports_gradient) |

| Overridden so that sub-costs values can be taken into account. | |

| virtual void | fprop (const Vec &input, const Vec &target, Vec &cost) const |

| Given the input and target, compute the cost. | |

| virtual void | fprop (const Mat &inputs, const Mat &targets, Mat &costs) const |

| Overridden from parent class. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &target, real cost, Vec &input_gradient, bool accumulate=false) |

| Adapt based on the output gradient: this method should only be called just after a corresponding fprop. | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &targets, const Vec &costs, Mat &input_gradients, bool accumulate=false) |

| Overridden. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &target, real cost) |

| Calls this method on the sub_costs. | |

| virtual void | bbpropUpdate (const Vec &input, const Vec &target, real cost, Vec &input_gradient, Vec &input_diag_hessian, bool accumulate=false) |

| Similar to bpropUpdate, but also adapts based on the estimated diagonal of the Hessian matrix, and propagates it back. | |

| virtual void | bbpropUpdate (const Vec &input, const Vec &target, real cost) |

| Calls this method on the sub_costs. | |

| virtual void | forget () |

| Reset the parameters to the state they would be in BEFORE starting training. | |

| virtual void | setLearningRate (real dynamic_learning_rate) |

| Sets the sub_costs' learning rates. | |

| virtual void | finalize () |

| Optionally perform some processing after training, or after a series of fprop/bpropUpdate calls, to prepare the model for truly out-of-sample operation. | |

| virtual bool | bpropDoesNothing () |

| Indicates whether bpropUpdate does nothing (true only if it does nothing for every sub-cost). | |

| virtual TVec< string > | costNames () |

| Indicates the name of the computed costs. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual CombiningCostsModule * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| TVec< PP< CostModule > > | sub_costs |

| Vector containing the different sub_costs. | |

| Vec | cost_weights |

| The weights associated with each of the sub_costs. | |

| int | n_sub_costs |

| Number of sub-costs. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef CostModule | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Private Attributes | |

| Vec | sub_costs_values |

| Stores the output values of the sub_costs. | |

| Mat | sub_costs_mbatch_values |

| Stores the mini-batch output values of the sub_costs. | |

| Vec | partial_gradient |

| Stores intermediate values of the input gradient. | |

| Mat | partial_gradients |

| Used to store intermediate values of input gradient in mini-batch setting. | |

| Vec | partial_diag_hessian |

| Stores intermediate values of the diagonal of the Hessian with respect to the input. | |

Combine several CostModules with the same input and target.

Each sub-cost can be assigned a weight, so that the back-propagated gradient is the weighted sum of the sub-costs' gradients. The first output is the weighted sum of the costs; the following outputs are the original costs.
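The output layout described above can be sketched in plain C++. This is only an illustration using `std::vector` rather than PLearn's `Vec`; the helper name `combineCosts` is hypothetical, and each sub-cost is assumed to produce a single scalar value.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper, not part of PLearn: builds the output vector
// [weighted_sum, cost_0, cost_1, ...] from scalar sub-cost values.
std::vector<double> combineCosts(const std::vector<double>& weights,
                                 const std::vector<double>& sub_costs)
{
    assert(weights.size() == sub_costs.size());
    std::vector<double> out(1, 0.0);
    for (std::size_t i = 0; i < sub_costs.size(); ++i)
    {
        out[0] += weights[i] * sub_costs[i];  // first output: weighted sum
        out.push_back(sub_costs[i]);          // following outputs: originals
    }
    return out;
}
```

With weights `{1.0, 0.5}` and sub-cost values `{2.0, 4.0}`, the first entry is `1.0*2.0 + 0.5*4.0`, followed by the two original values.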

Definition at line 54 of file CombiningCostsModule.h.

typedef CostModule PLearn::CombiningCostsModule::inherited [private] |

Reimplemented from PLearn::CostModule.

Definition at line 56 of file CombiningCostsModule.h.

| PLearn::CombiningCostsModule::CombiningCostsModule | ( | ) |

| string PLearn::CombiningCostsModule::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

| OptionList & PLearn::CombiningCostsModule::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

| RemoteMethodMap & PLearn::CombiningCostsModule::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

| bool PLearn::CombiningCostsModule::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

| Object * PLearn::CombiningCostsModule::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

| void PLearn::CombiningCostsModule::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

| void PLearn::CombiningCostsModule::bbpropUpdate | ( | const Vec & | input, |

| const Vec & | target, | ||

| real | cost | ||

| ) | [virtual] |

Calls this method on the sub_costs.

Reimplemented from PLearn::CostModule.

Definition at line 428 of file CombiningCostsModule.cc.

References bbpropUpdate(), i, PLearn::OnlineLearningModule::input_size, n_sub_costs, PLASSERT, PLearn::TVec< T >::size(), sub_costs, sub_costs_values, and PLearn::CostModule::target_size.

```cpp
{
    PLASSERT( input.size() == input_size );
    PLASSERT( target.size() == target_size );

    for( int i=0 ; i<n_sub_costs ; i++ )
        sub_costs[i]->bbpropUpdate( input, target, sub_costs_values[i] );
}
```

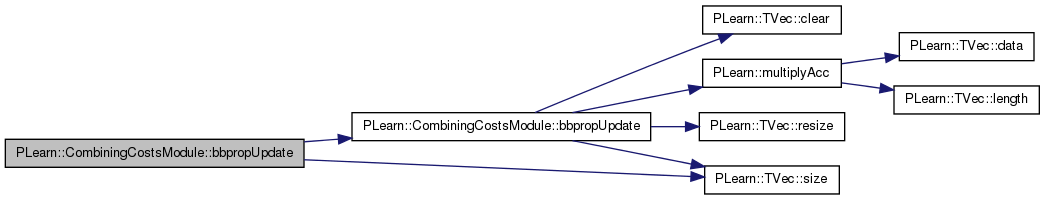

| void PLearn::CombiningCostsModule::bbpropUpdate | ( | const Vec & | input, |

| const Vec & | target, | ||

| real | cost, | ||

| Vec & | input_gradient, | ||

| Vec & | input_diag_hessian, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

Similar to bpropUpdate, but also adapts based on the estimated diagonal of the Hessian matrix, and propagates it back.

Reimplemented from PLearn::CostModule.

Definition at line 375 of file CombiningCostsModule.cc.

References PLearn::TVec< T >::clear(), cost_weights, i, PLearn::OnlineLearningModule::input_size, PLearn::multiplyAcc(), n_sub_costs, partial_diag_hessian, partial_gradient, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sub_costs, sub_costs_values, and PLearn::CostModule::target_size.

Referenced by bbpropUpdate().

```cpp
{
    PLASSERT( input.size() == input_size );
    PLASSERT( target.size() == target_size );

    if( accumulate )
    {
        PLASSERT_MSG( input_gradient.size() == input_size,
                      "Cannot resize input_gradient AND accumulate into it" );
        PLASSERT_MSG( input_diag_hessian.size() == input_size,
                      "Cannot resize input_diag_hessian AND accumulate into it"
                    );
    }
    else
    {
        input_gradient.resize( input_size );
        input_gradient.clear();
        input_diag_hessian.resize( input_size );
        input_diag_hessian.clear();
    }

    for( int i=0 ; i<n_sub_costs ; i++ )
    {
        if( cost_weights[i] == 0. )
        {
            // Don't compute input_gradient nor input_diag_hessian
            sub_costs[i]->bbpropUpdate( input, target, sub_costs_values[i] );
        }
        else if( cost_weights[i] == 1. )
        {
            // Accumulate directly into input_gradient and input_diag_hessian
            sub_costs[i]->bbpropUpdate( input, target, sub_costs_values[i],
                                        input_gradient, input_diag_hessian,
                                        true );
        }
        else
        {
            // Put temporary results into partial_*, then multiply and add to
            // input_*
            sub_costs[i]->bbpropUpdate( input, target, sub_costs_values[i],
                                        partial_gradient, partial_diag_hessian,
                                        false );
            multiplyAcc( input_gradient, partial_gradient, cost_weights[i] );
            multiplyAcc( input_diag_hessian, partial_diag_hessian,
                         cost_weights[i] );
        }
    }
}
```
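The accumulation performed by this method can be summarized as follows. The notation is an assumption introduced here for illustration: $w_i$ stands for `cost_weights[i]`, $C_i$ for the scalar value of `sub_costs[i]`, and $x_k$ for the $k$-th input component. Because the combined cost is linear in the sub-costs, both the gradient and the Hessian diagonal are weighted sums:

$$
C(x,t) = \sum_i w_i\, C_i(x,t), \qquad
\frac{\partial C}{\partial x_k} = \sum_i w_i\, \frac{\partial C_i}{\partial x_k}, \qquad
\frac{\partial^2 C}{\partial x_k^2} = \sum_i w_i\, \frac{\partial^2 C_i}{\partial x_k^2}
$$

The three branches in the loop appear to be shortcuts for this sum: a weight of 0 contributes nothing to the input terms, a weight of 1 can be accumulated directly, and any other weight goes through the `partial_*` buffers before `multiplyAcc`.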

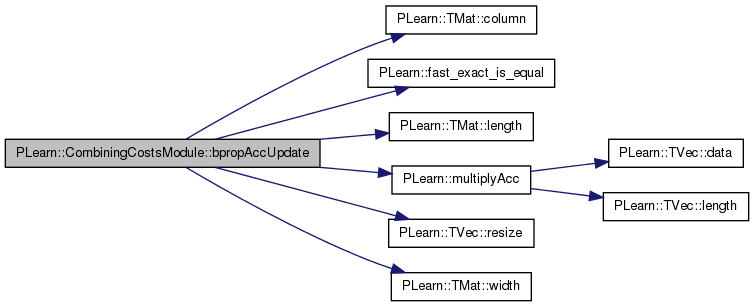

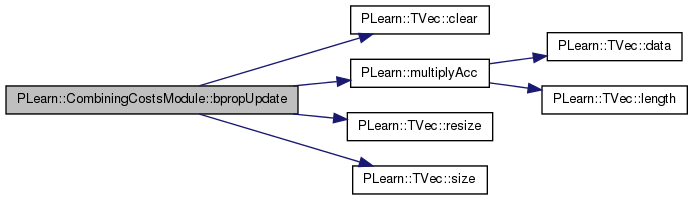

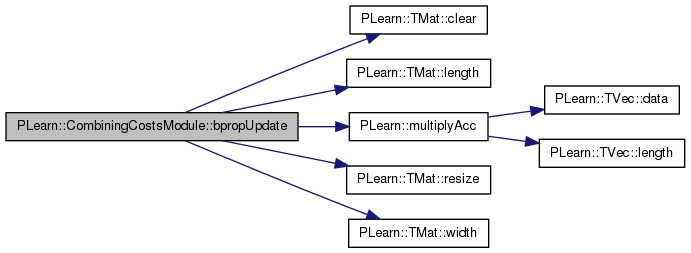

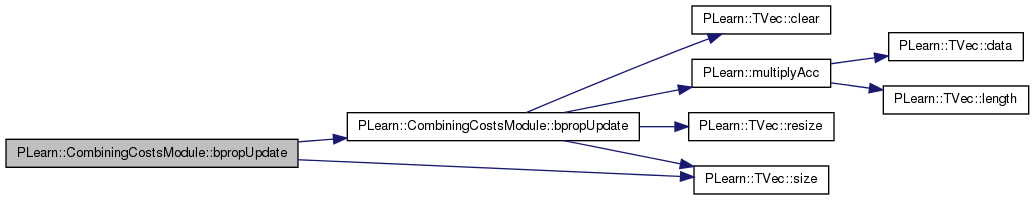

| void PLearn::CombiningCostsModule::bpropAccUpdate | ( | const TVec< Mat * > & | ports_value, |

| const TVec< Mat * > & | ports_gradient | ||

| ) | [virtual] |

Overridden so that sub-costs values can be taken into account.

Reimplemented from PLearn::CostModule.

Definition at line 233 of file CombiningCostsModule.cc.

References PLearn::TMat< T >::column(), cost_weights, PLearn::fast_exact_is_equal(), i, PLearn::TMat< T >::length(), PLearn::multiplyAcc(), n_sub_costs, partial_gradients, PLASSERT, PLearn::TVec< T >::resize(), sub_costs, sub_costs_values, and PLearn::TMat< T >::width().

```cpp
{
    Mat* inputs = ports_value[0];
    Mat* targets = ports_value[1];
    Mat* costs = ports_value[2];
    PLASSERT( costs && costs->width() == n_sub_costs + 1 );
    Mat* input_gradients = ports_gradient[0];
    PLASSERT( input_gradients && input_gradients->isEmpty() &&
              input_gradients->width() > 0 );
    input_gradients->resize(inputs->length(), input_gradients->width());
    sub_costs_values.resize(costs->length());

    for( int i=0 ; i<n_sub_costs ; i++ )
    {
        sub_costs_values << costs->column(i + 1);
        if (fast_exact_is_equal(cost_weights[i], 0))
        {
            // Do not compute input_gradients.
            sub_costs[i]->bpropUpdate( *inputs, *targets, sub_costs_values);
        }
        else if (fast_exact_is_equal(cost_weights[i], 1))
        {
            // Accumulate directly into input_gradients.
            sub_costs[i]->bpropUpdate(*inputs, *targets, sub_costs_values,
                                      *input_gradients, true);
        }
        else
        {
            // Put the result into partial_gradients, then accumulate into
            // input_gradients with the appropriate weight.
            sub_costs[i]->bpropUpdate(*inputs, *targets, sub_costs_values,
                                      partial_gradients, false);
            multiplyAcc(*input_gradients, partial_gradients, cost_weights[i]);
        }
    }
}
```

| bool PLearn::CombiningCostsModule::bpropDoesNothing | ( | ) | [virtual] |

Indicates whether bpropUpdate does nothing (true only if it does nothing for every sub-cost).

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 480 of file CombiningCostsModule.cc.

References i, n_sub_costs, and sub_costs.

```cpp
{
    for( int i=0 ; i<n_sub_costs ; i++ )
        if( !(sub_costs[i]->bpropDoesNothing()) )
            return false;

    return true;
}
```

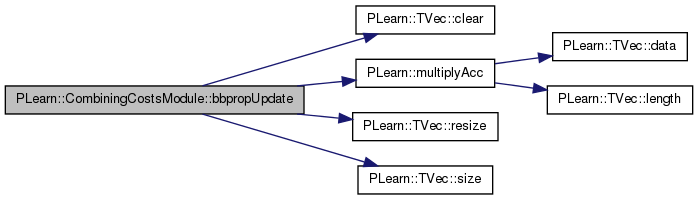

| void PLearn::CombiningCostsModule::bpropUpdate | ( | const Vec & | input, |

| const Vec & | target, | ||

| real | cost, | ||

| Vec & | input_gradient, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

Adapt based on the output gradient: this method should only be called just after a corresponding fprop.

Reimplemented from PLearn::CostModule.

Definition at line 273 of file CombiningCostsModule.cc.

References PLearn::TVec< T >::clear(), cost_weights, i, PLearn::OnlineLearningModule::input_size, PLearn::multiplyAcc(), n_sub_costs, partial_gradient, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sub_costs, sub_costs_values, and PLearn::CostModule::target_size.

Referenced by bpropUpdate().

```cpp
{
    PLASSERT( input.size() == input_size );
    PLASSERT( target.size() == target_size );

    if( accumulate )
    {
        PLASSERT_MSG( input_gradient.size() == input_size,
                      "Cannot resize input_gradient AND accumulate into it" );
    }
    else
    {
        input_gradient.resize( input_size );
        input_gradient.clear();
    }

    for( int i=0 ; i<n_sub_costs ; i++ )
    {
        if( cost_weights[i] == 0. )
        {
            // Don't compute input_gradient
            sub_costs[i]->bpropUpdate( input, target, sub_costs_values[i] );
        }
        else if( cost_weights[i] == 1. )
        {
            // Accumulate directly into input_gradient
            sub_costs[i]->bpropUpdate( input, target, sub_costs_values[i],
                                       input_gradient, true );
        }
        else
        {
            // Put the result into partial_gradient, then accumulate into
            // input_gradient with the appropriate weight
            sub_costs[i]->bpropUpdate( input, target, sub_costs_values[i],
                                       partial_gradient, false );
            multiplyAcc( input_gradient, partial_gradient, cost_weights[i] );
        }
    }
}
```
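The weighted accumulation above can be reproduced outside PLearn as a minimal sketch. Everything here is an assumption made for illustration: `Vec` is a `std::vector<double>`, `GradFn` is a hypothetical stand-in for a sub-cost that returns its input gradient, and `multiplyAccSketch` only mimics the `dest += scale * src` behaviour that the listing relies on.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
// Hypothetical stand-in for a sub-cost: returns its gradient w.r.t. the input.
using GradFn = std::function<Vec(const Vec&)>;

// dest += scale * src
void multiplyAccSketch(Vec& dest, const Vec& src, double scale)
{
    assert(dest.size() == src.size());
    for (std::size_t k = 0; k < dest.size(); ++k)
        dest[k] += scale * src[k];
}

// Weighted sum of the sub-costs' input gradients.
Vec weightedInputGradient(const Vec& input,
                          const std::vector<GradFn>& grads,
                          const Vec& weights)
{
    assert(grads.size() == weights.size());
    Vec total(input.size(), 0.0);
    for (std::size_t i = 0; i < grads.size(); ++i)
    {
        // A zero weight contributes nothing to the input gradient. (The
        // listing above still calls bpropUpdate on that sub-cost, without
        // asking for a gradient.)
        if (weights[i] == 0.0)
            continue;
        multiplyAccSketch(total, grads[i](input), weights[i]);
    }
    return total;
}
```

The sketch folds the weight-1 case into the general case; the original code treats it separately, which avoids a temporary buffer.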

| void PLearn::CombiningCostsModule::bpropUpdate | ( | const Mat & | inputs, |

| const Mat & | targets, | ||

| const Vec & | costs, | ||

| Mat & | input_gradients, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

Overridden.

Reimplemented from PLearn::CostModule.

Definition at line 315 of file CombiningCostsModule.cc.

References PLearn::TMat< T >::clear(), cost_weights, i, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), PLearn::multiplyAcc(), n_sub_costs, partial_gradients, PLASSERT, PLASSERT_MSG, PLearn::TMat< T >::resize(), sub_costs, sub_costs_mbatch_values, PLearn::CostModule::target_size, and PLearn::TMat< T >::width().

```cpp
{
    PLASSERT( inputs.width() == input_size );
    PLASSERT( targets.width() == target_size );

    if( accumulate )
    {
        PLASSERT_MSG( input_gradients.width() == input_size &&
                      input_gradients.length() == inputs.length(),
                      "Cannot resize input_gradients and accumulate into it" );
    }
    else
    {
        input_gradients.resize(inputs.length(), input_size );
        input_gradients.clear();
    }

    Vec sub;
    for( int i=0 ; i<n_sub_costs ; i++ )
    {
        sub = sub_costs_mbatch_values(i);
        if( cost_weights[i] == 0. )
        {
            // Do not compute input_gradients.
            sub_costs[i]->bpropUpdate( inputs, targets, sub );
        }
        else if( cost_weights[i] == 1. )
        {
            // Accumulate directly into input_gradients.
            sub_costs[i]->bpropUpdate( inputs, targets, sub, input_gradients,
                                       true );
        }
        else
        {
            // Put the result into partial_gradients, then accumulate into
            // input_gradients with the appropriate weight.
            sub_costs[i]->bpropUpdate( inputs, targets, sub, partial_gradients,
                                       false);
            multiplyAcc( input_gradients, partial_gradients, cost_weights[i] );
        }
    }
}
```

| void PLearn::CombiningCostsModule::bpropUpdate | ( | const Vec & | input, |

| const Vec & | target, | ||

| real | cost | ||

| ) | [virtual] |

Calls this method on the sub_costs.

Reimplemented from PLearn::CostModule.

Definition at line 362 of file CombiningCostsModule.cc.

References bpropUpdate(), i, PLearn::OnlineLearningModule::input_size, n_sub_costs, PLASSERT, PLearn::TVec< T >::size(), sub_costs, sub_costs_values, and PLearn::CostModule::target_size.

```cpp
{
    PLASSERT( input.size() == input_size );
    PLASSERT( target.size() == target_size );

    for( int i=0 ; i<n_sub_costs ; i++ )
        sub_costs[i]->bpropUpdate( input, target, sub_costs_values[i] );
}
```

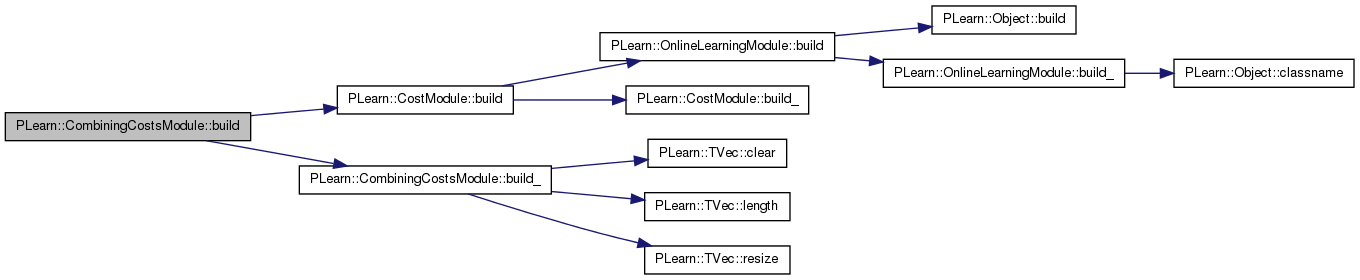

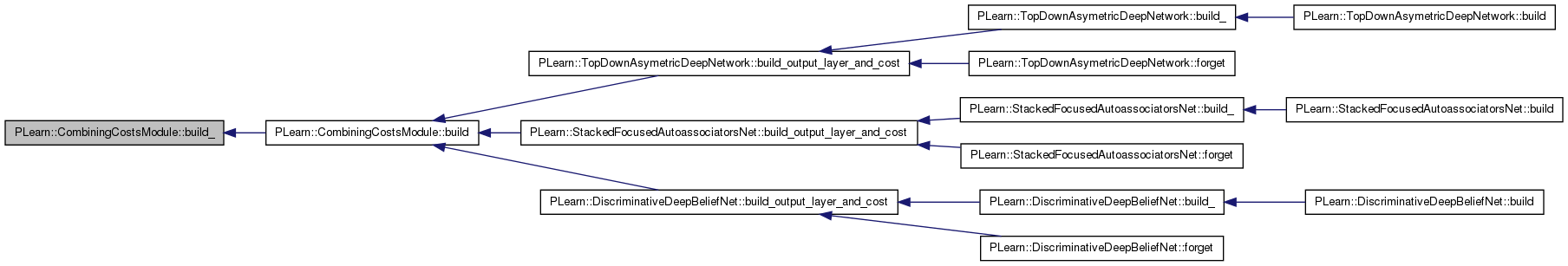

| void PLearn::CombiningCostsModule::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::CostModule.

Definition at line 159 of file CombiningCostsModule.cc.

References PLearn::CostModule::build(), and build_().

Referenced by PLearn::TopDownAsymetricDeepNetwork::build_output_layer_and_cost(), PLearn::StackedFocusedAutoassociatorsNet::build_output_layer_and_cost(), and PLearn::DiscriminativeDeepBeliefNet::build_output_layer_and_cost().

```cpp
{
    inherited::build();
    build_();
}
```

| void PLearn::CombiningCostsModule::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::CostModule.

Definition at line 96 of file CombiningCostsModule.cc.

References PLearn::TVec< T >::clear(), cost_weights, i, PLearn::OnlineLearningModule::input_size, PLearn::TVec< T >::length(), n_sub_costs, PLearn::OnlineLearningModule::output_size, PLERROR, PLearn::OnlineLearningModule::random_gen, PLearn::TVec< T >::resize(), sub_costs, sub_costs_values, and PLearn::CostModule::target_size.

Referenced by build().

```cpp
{
    n_sub_costs = sub_costs.length();

    if( n_sub_costs == 0 )
    {
        //PLWARNING("In CombiningCostsModule::build_ - sub_costs is empty (length 0)");
        return;
    }

    // Default value: sub_cost[0] has weight 1, the other ones have weight 0.
    if( cost_weights.length() == 0 )
    {
        cost_weights.resize( n_sub_costs );
        cost_weights.clear();
        cost_weights[0] = 1;
    }

    if( cost_weights.length() != n_sub_costs )
        PLERROR( "CombiningCostsModule::build_(): cost_weights.length()\n"
                 "should be equal to n_sub_costs (%d != %d).\n",
                 cost_weights.length(), n_sub_costs );

    if(sub_costs.length() == 0)
        PLERROR( "CombiningCostsModule::build_(): sub_costs.length()\n"
                 "should be > 0.\n");

    input_size = sub_costs[0]->input_size;
    target_size = sub_costs[0]->target_size;
    for(int i=1; i<sub_costs.length(); i++)
    {
        if(sub_costs[i]->input_size != input_size)
            PLERROR( "CombiningCostsModule::build_(): sub_costs[%d]->input_size"
                     " (%d)\n"
                     "should be equal to %d.\n",
                     i,sub_costs[i]->input_size, input_size);

        if(sub_costs[i]->target_size != target_size)
            PLERROR( "CombiningCostsModule::build_(): sub_costs[%d]->target_size"
                     " (%d)\n"
                     "should be equal to %d.\n",
                     i,sub_costs[i]->target_size, target_size);
    }

    sub_costs_values.resize( n_sub_costs );
    output_size = 1;
    for (int i=0; i<n_sub_costs; i++)
        output_size += sub_costs[i]->output_size;

    // If we have a random_gen and some sub_costs do not, share it with them
    if( random_gen )
        for( int i=0; i<n_sub_costs; i++ )
        {
            if( !(sub_costs[i]->random_gen) )
            {
                sub_costs[i]->random_gen = random_gen;
                sub_costs[i]->forget();
            }
        }
}
```
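The default-weight rule in `build_()` can be illustrated with a small standalone sketch. The function name `resolveCostWeights` is hypothetical, `std::vector<double>` stands in for PLearn's `Vec`, and a plain `assert` replaces the `PLERROR` raised by the real code on a length mismatch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: when no weights are given, the first sub-cost gets
// weight 1 and all the others get weight 0; otherwise the weights are kept.
std::vector<double> resolveCostWeights(std::vector<double> cost_weights,
                                       std::size_t n_sub_costs)
{
    if (cost_weights.empty())
    {
        cost_weights.assign(n_sub_costs, 0.0);
        if (n_sub_costs > 0)
            cost_weights[0] = 1.0;
    }
    // The real code raises PLERROR here instead of asserting.
    assert(cost_weights.size() == n_sub_costs);
    return cost_weights;
}
```

Under this default only the first sub-cost drives the combined cost and the input gradient; the remaining sub-costs are still evaluated and reported.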

| string PLearn::CombiningCostsModule::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

Referenced by costNames().

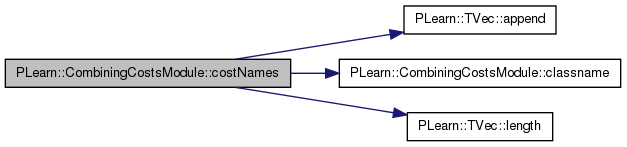

| TVec< string > PLearn::CombiningCostsModule::costNames | ( | ) | [virtual] |

Indicates the name of the computed costs.

Reimplemented from PLearn::CostModule.

Definition at line 490 of file CombiningCostsModule.cc.

References PLearn::TVec< T >::append(), classname(), i, j, PLearn::TVec< T >::length(), n_sub_costs, PLearn::OnlineLearningModule::name, and sub_costs.

```cpp
{
    TVec<string> names(1, "combined_cost");
    for( int i=0 ; i<n_sub_costs ; i++ )
        names.append( sub_costs[i]->costNames() );

    if (name != "" && name != classname())
        for (int j=0; j<names.length(); j++)
            names[j] = name + "." + names[j];

    return names;
}
```
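The naming scheme can be tried in isolation with the sketch below. It is not PLearn code: `prefixedCostNames` is a hypothetical free function, and the sub-cost names are passed in directly instead of being queried from the sub-costs.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical helper mirroring the listing above: "combined_cost" comes
// first, then every sub-cost name; all names are prefixed with "<name>."
// when the module has a non-empty name that differs from its class name.
std::vector<std::string> prefixedCostNames(
    const std::string& name,
    const std::string& class_name,
    const std::vector<std::vector<std::string>>& sub_names)
{
    std::vector<std::string> names(1, "combined_cost");
    for (const auto& s : sub_names)
        names.insert(names.end(), s.begin(), s.end());
    assert(names.size() >= 1);  // the combined cost always comes first

    if (!name.empty() && name != class_name)
        for (auto& n : names)
            n = name + "." + n;
    return names;
}
```

For a module named `joint` with two sub-costs reporting the illustrative names `NLL` and `class_error`, this yields `joint.combined_cost`, `joint.NLL` and `joint.class_error`.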

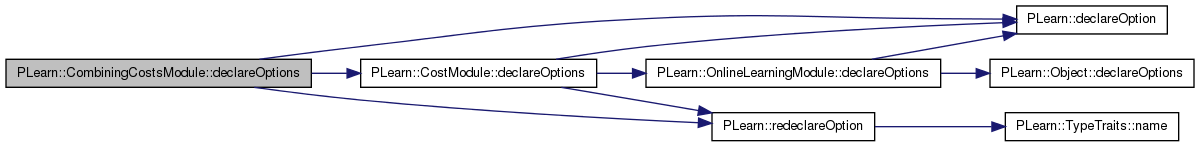

| void PLearn::CombiningCostsModule::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::CostModule.

Definition at line 68 of file CombiningCostsModule.cc.

References PLearn::OptionBase::buildoption, cost_weights, PLearn::declareOption(), PLearn::CostModule::declareOptions(), PLearn::OnlineLearningModule::input_size, PLearn::OptionBase::learntoption, n_sub_costs, PLearn::redeclareOption(), sub_costs, and PLearn::CostModule::target_size.

```cpp
{
    declareOption(ol, "sub_costs", &CombiningCostsModule::sub_costs,
                  OptionBase::buildoption,
                  "Vector containing the different sub_costs");

    declareOption(ol, "cost_weights", &CombiningCostsModule::cost_weights,
                  OptionBase::buildoption,
                  "The weights associated to each of the sub_costs");

    declareOption(ol, "n_sub_costs", &CombiningCostsModule::n_sub_costs,
                  OptionBase::learntoption,
                  "Number of sub_costs");

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);

    redeclareOption(ol, "input_size", &CombiningCostsModule::input_size,
                    OptionBase::learntoption,
                    "Is set to sub_costs[0]->input_size.");

    redeclareOption(ol, "target_size", &CombiningCostsModule::target_size,
                    OptionBase::learntoption,
                    "Is set to sub_costs[0]->target_size.");
}
```

| static const PPath& PLearn::CombiningCostsModule::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::CostModule.

Definition at line 132 of file CombiningCostsModule.h.


| CombiningCostsModule * PLearn::CombiningCostsModule::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

| void PLearn::CombiningCostsModule::finalize | ( | ) | [virtual] |

Optionally performs some processing after training, or after a series of fprop/bpropUpdate calls, to prepare the model for truly out-of-sample operation.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 473 of file CombiningCostsModule.cc.

References i, n_sub_costs, and sub_costs.

| void PLearn::CombiningCostsModule::forget | ( | ) | [virtual] |

Resets the parameters to the state they would be in BEFORE starting training.

Note that this method is necessarily called from build().

Reimplemented from PLearn::CostModule.

Definition at line 442 of file CombiningCostsModule.cc.

References i, n_sub_costs, PLWARNING, PLearn::OnlineLearningModule::random_gen, and sub_costs.

```cpp
{
    if( !random_gen )
    {
        PLWARNING("CombiningCostsModule: cannot forget() without random_gen");
        return;
    }

    for( int i=0 ; i<n_sub_costs ; i++ )
    {
        // Ensure sub_costs[i] can forget
        if( !(sub_costs[i]->random_gen) )
            sub_costs[i]->random_gen = random_gen;
        sub_costs[i]->forget();
    }
}
```
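The generator-sharing logic of `forget()` can be sketched with standard smart pointers. The types `RandomGen` and `SubCost` and the function `forgetAll` are hypothetical stand-ins introduced here, and `std::shared_ptr` only approximates the reference-counted pointers PLearn uses.

```cpp
#include <cassert>
#include <memory>
#include <vector>

struct RandomGen {};  // hypothetical stand-in for a random generator

struct SubCost        // hypothetical stand-in for a CostModule
{
    std::shared_ptr<RandomGen> random_gen;
    int forget_calls = 0;

    void forget()
    {
        assert(random_gen != nullptr);  // a generator is needed to re-initialize
        ++forget_calls;
    }
};

// Returns false (and leaves the sub-costs untouched) when the parent has no
// generator; otherwise shares the generator where missing and calls forget().
bool forgetAll(const std::shared_ptr<RandomGen>& parent_gen,
               std::vector<SubCost>& sub_costs)
{
    if (!parent_gen)
        return false;

    for (SubCost& sc : sub_costs)
    {
        if (!sc.random_gen)
            sc.random_gen = parent_gen;  // share only when missing
        sc.forget();
    }
    return true;
}
```

A sub-cost that already owns a generator keeps it, so only sub-costs without one end up sharing the parent's random stream.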

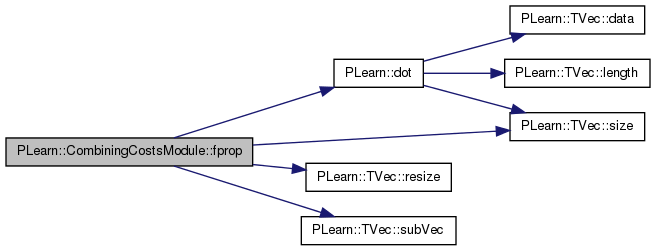

| void PLearn::CombiningCostsModule::fprop | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | cost | ||

| ) | const [virtual] |

Given the input and target, computes the cost.

Reimplemented from PLearn::CostModule.

Definition at line 186 of file CombiningCostsModule.cc.

References cost_weights, PLearn::dot(), i, PLearn::OnlineLearningModule::input_size, n_sub_costs, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sub_costs, sub_costs_values, PLearn::TVec< T >::subVec(), and PLearn::CostModule::target_size.

```cpp
{
    PLASSERT( input.size() == input_size );
    PLASSERT( target.size() == target_size );
    cost.resize( output_size );

    int cost_index = 1;
    for( int i=0 ; i<n_sub_costs ; i++ )
    {
        Vec sub_costs_val_i_all = cost.subVec(cost_index,
                                              sub_costs[i]->output_size);
        sub_costs[i]->fprop(input, target, sub_costs_val_i_all);
        sub_costs_values[i] = cost[cost_index];

        cost_index += sub_costs[i]->output_size;
    }

    cost[0] = dot( cost_weights, sub_costs_values );
}
```
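The indexing in this method implies a particular layout of the `cost` vector: slot 0 holds the combined cost, and each sub-cost then occupies a contiguous block whose first entry is the value used in the weighted sum. The sketch below computes the start of each block; `subCostOffsets` is a hypothetical helper, not a PLearn function.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: start index of each sub-cost's block in the output
// vector, given the output_size of every sub-cost.
std::vector<int> subCostOffsets(const std::vector<int>& sub_output_sizes)
{
    std::vector<int> offsets;
    int cost_index = 1;  // slot 0 is reserved for the combined cost
    for (int size : sub_output_sizes)
    {
        assert(size >= 1);  // each sub-cost outputs at least its main cost
        offsets.push_back(cost_index);
        cost_index += size;
    }
    return offsets;
}
```

For sub-costs with output sizes 1, 3 and 2, the blocks start at indices 1, 2 and 5, and the full output has 1 + 1 + 3 + 2 = 7 entries.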

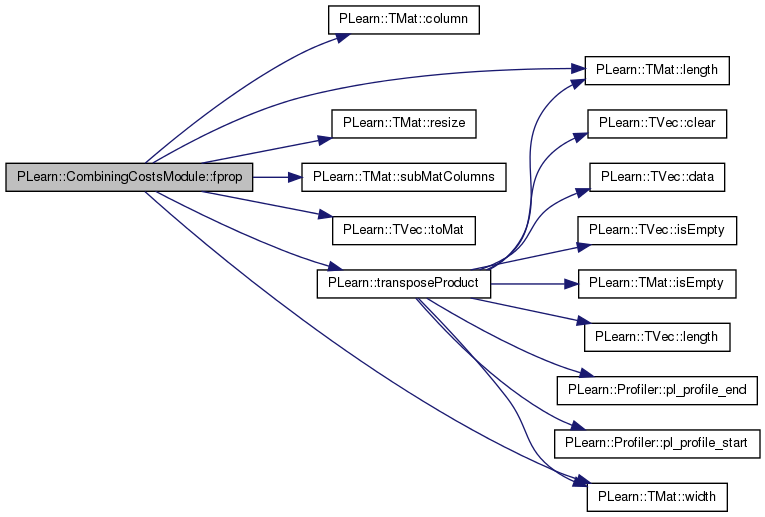

| void PLearn::CombiningCostsModule::fprop | ( | const Mat & | inputs, |

| const Mat & | targets, | ||

| Mat & | costs | ||

| ) | const [virtual] |

Overridden from parent class.

Reimplemented from PLearn::CostModule.

Definition at line 206 of file CombiningCostsModule.cc.

References PLearn::TMat< T >::column(), cost_weights, i, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), n_sub_costs, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TMat< T >::resize(), sub_costs, sub_costs_mbatch_values, PLearn::TMat< T >::subMatColumns(), PLearn::CostModule::target_size, PLearn::TVec< T >::toMat(), PLearn::transposeProduct(), and PLearn::TMat< T >::width().

```cpp
{
    PLASSERT( inputs.width() == input_size );
    PLASSERT( targets.width() == target_size );
    costs.resize(inputs.length(), output_size);
    sub_costs_mbatch_values.resize(n_sub_costs, inputs.length());

    int cost_index = 1;
    for (int i=0; i<n_sub_costs; i++)
    {
        Mat sub_costs_val_i_all =
            costs.subMatColumns(cost_index, sub_costs[i]->output_size);
        sub_costs[i]->fprop(inputs, targets, sub_costs_val_i_all);
        sub_costs_mbatch_values(i) << costs.column(cost_index);

        cost_index += sub_costs[i]->output_size;
    }

    // final_cost = \sum weight_i * cost_i
    Mat final_cost = costs.column(0);
    Mat m_cost_weights = cost_weights.toMat(n_sub_costs, 1);
    transposeProduct(final_cost, sub_costs_mbatch_values, m_cost_weights);
}
```
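In matrix form, the last three lines appear to compute the following, assuming `transposeProduct(C, A, B)` stores $A^\top B$ in `C` (consistent with the comment and the matrix shapes in the listing). The symbols are introduced here for illustration: $S \in \mathbb{R}^{n \times B}$ is `sub_costs_mbatch_values` (one row per sub-cost, one column per example), $w \in \mathbb{R}^{n}$ is `cost_weights`, and $c \in \mathbb{R}^{B}$ is the first column of `costs`.

$$
c = S^\top w, \qquad c_b = \sum_{i=1}^{n} w_i\, S_{i,b} \quad \text{for each example } b = 1, \dots, B
$$

This is the mini-batch counterpart of the `dot( cost_weights, sub_costs_values )` call in the single-example `fprop`.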

| OptionList & PLearn::CombiningCostsModule::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

| OptionMap & PLearn::CombiningCostsModule::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

| RemoteMethodMap & PLearn::CombiningCostsModule::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CombiningCostsModule.cc.

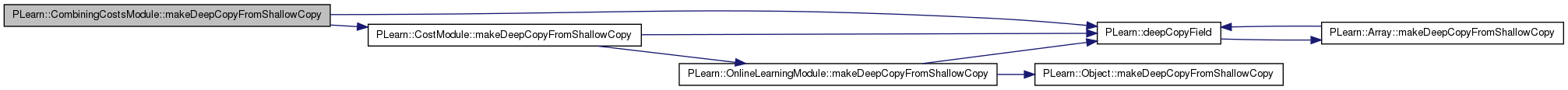

| void PLearn::CombiningCostsModule::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::CostModule.

Definition at line 169 of file CombiningCostsModule.cc.

References cost_weights, PLearn::deepCopyField(), PLearn::CostModule::makeDeepCopyFromShallowCopy(), partial_diag_hessian, partial_gradient, partial_gradients, sub_costs, sub_costs_mbatch_values, and sub_costs_values.

```cpp
{
    inherited::makeDeepCopyFromShallowCopy(copies);

    deepCopyField(sub_costs, copies);
    deepCopyField(cost_weights, copies);
    deepCopyField(sub_costs_values, copies);
    deepCopyField(sub_costs_mbatch_values, copies);
    deepCopyField(partial_gradient, copies);
    deepCopyField(partial_gradients, copies);
    deepCopyField(partial_diag_hessian, copies);
}
```

| void PLearn::CombiningCostsModule::setLearningRate | ( | real | dynamic_learning_rate | ) | [virtual] |

Sets the sub_costs' learning rates.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 462 of file CombiningCostsModule.cc.

References i, n_sub_costs, and sub_costs.

```cpp
{
    for( int i=0 ; i<n_sub_costs ; i++ )
        sub_costs[i]->setLearningRate(dynamic_learning_rate);
}
```

StaticInitializer PLearn::CombiningCostsModule::_static_initializer_ [static] |

Reimplemented from PLearn::CostModule.

Definition at line 132 of file CombiningCostsModule.h.

Vec PLearn::CombiningCostsModule::cost_weights |

The weights associated with each of the sub_costs.

Definition at line 65 of file CombiningCostsModule.h.

Referenced by bbpropUpdate(), bpropAccUpdate(), bpropUpdate(), build_(), PLearn::TopDownAsymetricDeepNetwork::build_output_layer_and_cost(), PLearn::StackedFocusedAutoassociatorsNet::build_output_layer_and_cost(), PLearn::DiscriminativeDeepBeliefNet::build_output_layer_and_cost(), declareOptions(), fprop(), and makeDeepCopyFromShallowCopy().

int PLearn::CombiningCostsModule::n_sub_costs |

Number of sub-costs.

Definition at line 68 of file CombiningCostsModule.h.

Referenced by bbpropUpdate(), bpropAccUpdate(), bpropDoesNothing(), bpropUpdate(), build_(), costNames(), declareOptions(), finalize(), forget(), fprop(), and setLearningRate().

Vec PLearn::CombiningCostsModule::partial_diag_hessian [mutable, private] |

Stores intermediate values of the diagonal of the Hessian with respect to the input.

Definition at line 171 of file CombiningCostsModule.h.

Referenced by bbpropUpdate(), and makeDeepCopyFromShallowCopy().

Vec PLearn::CombiningCostsModule::partial_gradient [mutable, private] |

Stores intermediate values of the input gradient.

Definition at line 164 of file CombiningCostsModule.h.

Referenced by bbpropUpdate(), bpropUpdate(), and makeDeepCopyFromShallowCopy().

Mat PLearn::CombiningCostsModule::partial_gradients [private] |

Stores intermediate values of the input gradient in the mini-batch setting.

Definition at line 168 of file CombiningCostsModule.h.

Referenced by bpropAccUpdate(), bpropUpdate(), and makeDeepCopyFromShallowCopy().

TVec< PP< CostModule > > PLearn::CombiningCostsModule::sub_costs |

Vector containing the different sub_costs.

Definition at line 62 of file CombiningCostsModule.h.

Referenced by bbpropUpdate(), bpropAccUpdate(), bpropDoesNothing(), bpropUpdate(), build_(), PLearn::TopDownAsymetricDeepNetwork::build_output_layer_and_cost(), PLearn::StackedFocusedAutoassociatorsNet::build_output_layer_and_cost(), PLearn::DiscriminativeDeepBeliefNet::build_output_layer_and_cost(), costNames(), declareOptions(), finalize(), forget(), fprop(), makeDeepCopyFromShallowCopy(), and setLearningRate().

Mat PLearn::CombiningCostsModule::sub_costs_mbatch_values [mutable, private] |

Stores the mini-batch output values of the sub_costs.

Definition at line 161 of file CombiningCostsModule.h.

Referenced by bpropUpdate(), fprop(), and makeDeepCopyFromShallowCopy().

Vec PLearn::CombiningCostsModule::sub_costs_values [mutable, private] |

Stores the output values of the sub_costs.

Definition at line 158 of file CombiningCostsModule.h.

Referenced by bbpropUpdate(), bpropAccUpdate(), bpropUpdate(), build_(), fprop(), and makeDeepCopyFromShallowCopy().
