PLearn 0.1

#include <PLS.h>

Public Types | |

| typedef PLearner | inherited |

Public Member Functions | |

| PLS () | |

| virtual void | build () |

| Simply calls inherited::build() then build_(). | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual PLS * | deepCopy (CopiesMap &copies) const |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual bool | computeConfidenceFromOutput (const Vec &, const Vec &output, real probability, TVec< pair< real, real > > &intervals) const |

| Compute confidence intervals from already-computed outputs. | |

| virtual TVec< string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| virtual TVec< string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

| static void | NIPALSEigenvector (const Mat &m, Vec &v, real precision) |

| Computes the dominant eigenvector of m (the one associated with its largest eigenvalue) with the NIPALS algorithm: (1) v <- first column of m, normalized; (2) v = m.v, normalize v; (3) if any v[i] has changed by more than 'precision', go to (2), otherwise return v. | |

Public Attributes | |

| int | k |

| string | method |

| real | precision |

| bool | output_the_score |

| bool | output_the_target |

| bool | compute_confidence |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| void | computeResidVariance (VMat dataset, Vec &resid_variance) |

| Compute the variance of residuals on the specified dataset. | |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes | |

| Mat | B |

| int | m |

| Vec | mean_input |

| Vec | mean_target |

| int | p |

| Vec | stddev_input |

| Vec | stddev_target |

| Mat | W |

| Vec | resid_variance |

| Estimate of the residual variance for each output variable. | |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

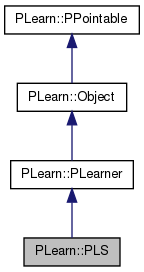

| typedef PLearner PLearn::PLS::inherited |

Reimplemented from PLearn::PLearner.

| PLearn::PLS::PLS | ( | ) |

Definition at line 58 of file PLS.cc.

: m(-1), p(-1), k(1), method("kernel"), precision(1e-6), output_the_score(false), output_the_target(true), compute_confidence(false) {}

| string PLearn::PLS::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

| OptionList & PLearn::PLS::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

| RemoteMethodMap & PLearn::PLS::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

| bool PLearn::PLS::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

| Object * PLearn::PLS::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

| void PLearn::PLS::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

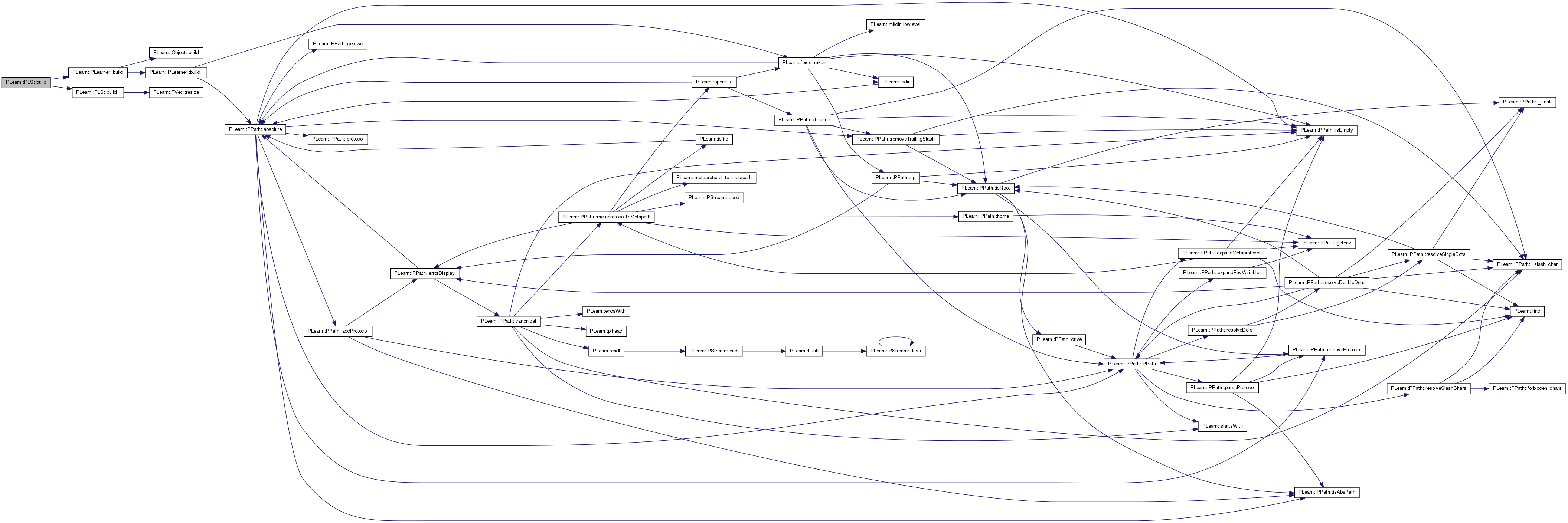

| void PLearn::PLS::build | ( | ) | [virtual] |

Simply calls inherited::build() then build_().

Reimplemented from PLearn::PLearner.

Definition at line 200 of file PLS.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

| void PLearn::PLS::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 209 of file PLS.cc.

References m, mean_input, mean_target, method, output_the_score, output_the_target, p, PLERROR, PLWARNING, PLearn::TVec< T >::resize(), stddev_input, stddev_target, and PLearn::PLearner::train_set.

Referenced by build().

{

if (train_set) {

this->m = train_set->targetsize();

this->p = train_set->inputsize();

mean_input.resize(p);

stddev_input.resize(p);

mean_target.resize(m);

stddev_target.resize(m);

if (train_set->weightsize() > 0) {

PLWARNING("In PLS::build_ - The train set has weights, but the optimization algorithm won't use them");

}

// Check method consistency.

if (method == "pls1") {

// Make sure the target is 1-dimensional.

if (m != 1) {

PLERROR("In PLS::build_ - With the 'pls1' method, target should be 1-dimensional");

}

} else if (method == "kernel") {

// Everything should be ok.

} else {

PLERROR("In PLS::build_ - Unknown value for option 'method'");

}

}

if (!output_the_score && !output_the_target) {

// Weird, we don't want any output ??

PLWARNING("In PLS::build_ - There will be no output");

}

}

| string PLearn::PLS::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

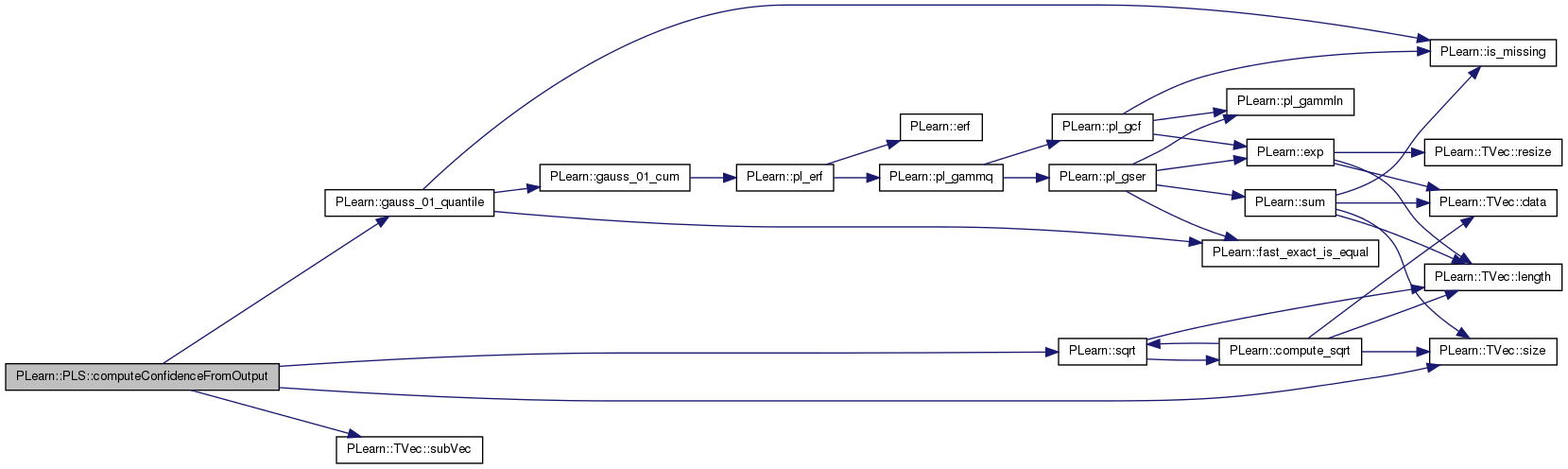

| bool PLearn::PLS::computeConfidenceFromOutput | ( | const Vec & | , |

| const Vec & | output, | ||

| real | probability, | ||

| TVec< pair< real, real > > & | intervals | ||

| ) | const [virtual] |

Compute confidence intervals from already-computed outputs.

Reimplemented from PLearn::PLearner.

Definition at line 287 of file PLS.cc.

References PLearn::gauss_01_quantile(), i, k, m, output_the_score, output_the_target, PLERROR, resid_variance, PLearn::TVec< T >::size(), PLearn::sqrt(), and PLearn::TVec< T >::subVec().

{

// Must figure out where the real output starts within the output vector

if (! output_the_target)

PLERROR("PLS::computeConfidenceFromOutput: the option 'output_the_target' "

"must be enabled in order to compute confidence intervals");

int ostart = (output_the_score? k : 0);

Vec regr_output = output.subVec(ostart, m);

if (m != resid_variance.size())

PLERROR("PLS::computeConfidenceFromOutput: residual variance not yet computed "

"or its size (= %d) does not match the output size (= %d)",

resid_variance.size(), m);

// two-tailed

const real multiplier = gauss_01_quantile((1+probability)/2);

intervals.resize(m);

for (int i=0; i<m; ++i) {

real half_width = multiplier * sqrt(resid_variance[i]);

intervals[i] = std::make_pair(regr_output[i] - half_width,

regr_output[i] + half_width);

}

return true;

}
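The interval computation above amounts to `prediction ± z * sqrt(residual variance)`, where `z` is the standard normal quantile at `(1 + probability) / 2`. The following is a minimal standalone sketch of that arithmetic, not PLearn code: `normal_cdf`, `normal_quantile` and `confidence_intervals` are hypothetical names, and the bisection-based quantile is only an illustrative stand-in for `gauss_01_quantile`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Standard normal CDF, expressed with the complementary error function.
double normal_cdf(double x) {
    return 0.5 * std::erfc(-x / std::sqrt(2.0));
}

// Inverse of the standard normal CDF, found by bisection on [-10, 10].
// Illustrative stand-in for gauss_01_quantile (an assumption, not PLearn's code).
double normal_quantile(double prob) {
    assert(prob > 0.0 && prob < 1.0);
    double lo = -10.0, hi = 10.0;
    for (int iter = 0; iter < 200; ++iter) {
        const double mid = 0.5 * (lo + hi);
        if (normal_cdf(mid) < prob) lo = mid; else hi = mid;
    }
    return 0.5 * (lo + hi);
}

// Two-sided intervals: prediction[i] +/- z * sqrt(resid_variance[i]).
std::vector<std::pair<double, double>> confidence_intervals(
        const std::vector<double>& prediction,
        const std::vector<double>& resid_variance,
        double probability) {
    assert(prediction.size() == resid_variance.size());
    const double z = normal_quantile((1.0 + probability) / 2.0);
    std::vector<std::pair<double, double>> intervals(prediction.size());
    for (std::size_t i = 0; i < prediction.size(); ++i) {
        const double half_width = z * std::sqrt(resid_variance[i]);
        intervals[i] = std::make_pair(prediction[i] - half_width,
                                      prediction[i] + half_width);
    }
    return intervals;
}
```

For a prediction of 1.0 with residual variance 4.0 and probability 0.95, the half-width is about 1.96 * 2, so the interval is roughly [-2.92, 4.92].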

| void PLearn::PLS::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 242 of file PLS.cc.

{

// No cost computed.

}

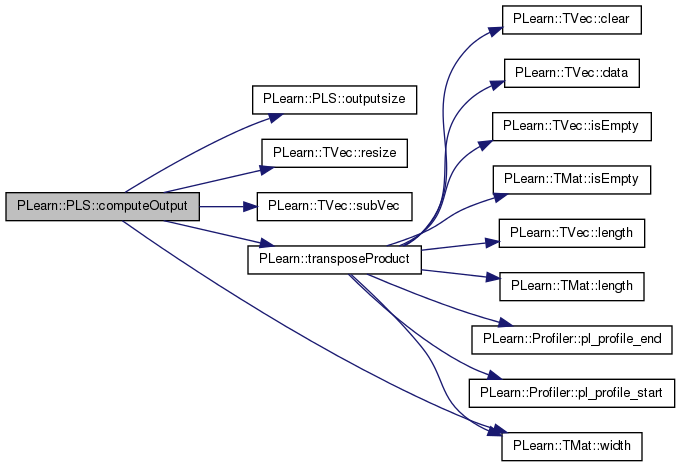

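| void PLearn::PLS::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.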

Reimplemented from PLearn::PLearner.

Definition at line 251 of file PLS.cc.

References B, k, m, mean_input, mean_target, output_the_score, output_the_target, outputsize(), p, PLERROR, PLWARNING, PLearn::TVec< T >::resize(), stddev_input, stddev_target, PLearn::TVec< T >::subVec(), PLearn::transposeProduct(), W, and PLearn::TMat< T >::width().

Referenced by computeResidVariance().

{

static Vec input_copy;

if (W.width()==0)

PLERROR("PLS::computeOutput but model was not trained!");

// Compute the output from the input

int nout = outputsize();

output.resize(nout);

// First normalize the input.

input_copy.resize(this->p);

input_copy << input;

input_copy -= mean_input;

input_copy /= stddev_input;

int target_start = 0;

if (output_the_score) {

transposeProduct(output.subVec(0, this->k), W, input_copy);

target_start = this->k;

}

if (output_the_target) {

if (this->m > 0) {

Vec target = output.subVec(target_start, this->m);

transposeProduct(target, B, input_copy);

target *= stddev_target;

target += mean_target;

} else {

// This is just a safety check, since it should never happen.

PLWARNING("In PLS::computeOutput - You ask to output the target but the target size is <= 0");

}

}

}
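The listing above standardizes the input, optionally projects it through `W` to obtain the score, then projects it through `B` and undoes the target scaling. A minimal standalone sketch of the same sequence, using `std::vector` in place of PLearn's `Vec` and `Mat`, could look as follows. `PlsModel`, `transpose_product` and `compute_output` are hypothetical names introduced for illustration, and the struct assumes that `mean_*` and `stddev_*` hold the plain means and standard deviations.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: mat[row][col]

// Hypothetical container for the learnt quantities (not a PLearn type).
struct PlsModel {
    Vec mean_input, stddev_input;    // length p
    Vec mean_target, stddev_target;  // length m
    Mat W;                           // p x k, maps inputs to scores
    Mat B;                           // p x m, maps inputs to targets
};

// Returns mat^T * v, i.e. out[j] = sum_i mat[i][j] * v[i].
Vec transpose_product(const Mat& mat, const Vec& v) {
    assert(mat.size() == v.size());
    Vec out(mat.empty() ? 0 : mat[0].size(), 0.0);
    for (std::size_t i = 0; i < mat.size(); ++i)
        for (std::size_t j = 0; j < out.size(); ++j)
            out[j] += mat[i][j] * v[i];
    return out;
}

Vec compute_output(const PlsModel& model, const Vec& input,
                   bool output_the_score, bool output_the_target) {
    assert(input.size() == model.mean_input.size());
    // Standardize the input with the training-set statistics.
    Vec x(input.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        x[i] = (input[i] - model.mean_input[i]) / model.stddev_input[i];

    Vec output;
    if (output_the_score) {
        const Vec score = transpose_product(model.W, x);
        output.insert(output.end(), score.begin(), score.end());
    }
    if (output_the_target) {
        Vec target = transpose_product(model.B, x);
        // Map the prediction back to the original target scale.
        for (std::size_t j = 0; j < target.size(); ++j)
            target[j] = target[j] * model.stddev_target[j] + model.mean_target[j];
        output.insert(output.end(), target.begin(), target.end());
    }
    return output;
}
```

When both flags are set, the score occupies the first k entries of the result and the predicted target the following m entries.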

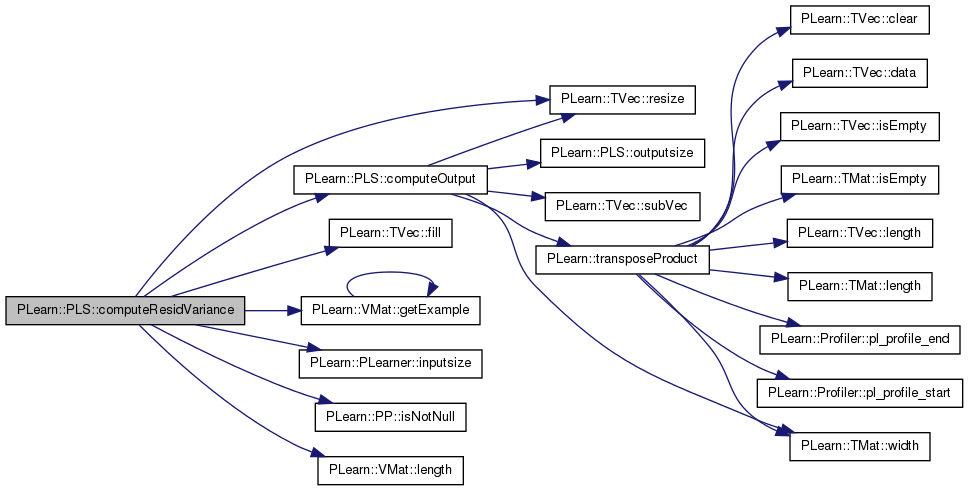

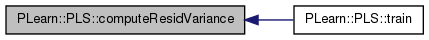

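| void PLearn::PLS::computeResidVariance | ( | VMat | dataset, |

| Vec & | resid_variance | ||

| ) | [protected] |

Compute the variance of residuals on the specified dataset.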

Definition at line 596 of file PLS.cc.

References computeOutput(), PLearn::TVec< T >::fill(), PLearn::VMat::getExample(), i, PLearn::PLearner::inputsize(), PLearn::PP< T >::isNotNull(), PLearn::VMat::length(), m, n, output_the_score, output_the_target, PLASSERT, and PLearn::TVec< T >::resize().

Referenced by train().

{

PLASSERT( dataset.isNotNull() && m >= 0 );

bool old_output_score = output_the_score;

bool old_output_target= output_the_target;

output_the_score = false;

output_the_target = true;

resid_variance.resize(m);

resid_variance.fill(0.0);

Vec input, target, output(m);

real weight;

for (int i=0, n=dataset.length() ; i<n ; ++i) {

dataset->getExample(i, input, target, weight);

computeOutput(input, output);

target -= output;

target *= target; // Square of residual

resid_variance += target;

}

resid_variance /= (dataset.length() - inputsize());

output_the_score = old_output_score;

output_the_target = old_output_target;

}
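The estimate above is the sum of squared residuals per target column divided by `n - inputsize()`, where `n` is the number of examples. A small standalone sketch of that formula is given below. `residual_variance` is a hypothetical helper, and the predictions are assumed to be already computed, one row per example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // one row per example

// Per-target residual variance with the (n - input_size) denominator
// used in the listing above.
Vec residual_variance(const Mat& predictions, const Mat& targets,
                      std::size_t input_size) {
    const std::size_t n = targets.size();
    assert(predictions.size() == n && n > input_size);
    const std::size_t m = targets[0].size();
    Vec variance(m, 0.0);
    for (std::size_t i = 0; i < n; ++i) {
        assert(predictions[i].size() == m && targets[i].size() == m);
        for (std::size_t j = 0; j < m; ++j) {
            const double residual = targets[i][j] - predictions[i][j];
            variance[j] += residual * residual;  // accumulate squared residuals
        }
    }
    for (std::size_t j = 0; j < m; ++j)
        variance[j] /= static_cast<double>(n - input_size);
    return variance;
}
```

With three examples, one input variable and residuals 1, 0 and 2, the sum of squares is 5 and the estimate is 5 / (3 - 1) = 2.5.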

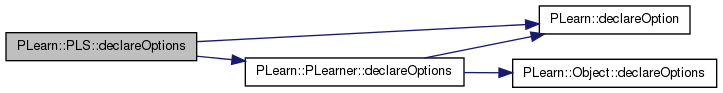

| void PLearn::PLS::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 133 of file PLS.cc.

References B, PLearn::OptionBase::buildoption, compute_confidence, PLearn::declareOption(), PLearn::PLearner::declareOptions(), k, PLearn::OptionBase::learntoption, m, mean_input, mean_target, method, output_the_score, output_the_target, p, precision, resid_variance, stddev_input, stddev_target, and W.

{

// Build options.

declareOption(ol, "k", &PLS::k, OptionBase::buildoption,

"The number of components (factors) computed.");

declareOption(ol, "method", &PLS::method, OptionBase::buildoption,

"The PLS algorithm used ('pls1' or 'kernel', see help for more details).\n");

declareOption(ol, "output_the_score", &PLS::output_the_score, OptionBase::buildoption,

"If set to 1, then the score (the low-dimensional representation of the input)\n"

"will be included in the output (before the target).");

declareOption(ol, "output_the_target", &PLS::output_the_target, OptionBase::buildoption,

"If set to 1, then (the prediction of) the target will be included in the\n"

"output (after the score).");

declareOption(ol, "compute_confidence", &PLS::compute_confidence,

OptionBase::buildoption,

"If set to 1, the variance of the residuals on the training set is\n"

"computed after training in order to allow the computation of confidence\n"

"intervals. In the current implementation, this entails performing another\n"

"traversal of the training set.");

// Learnt options.

declareOption(ol, "B", &PLS::B, OptionBase::learntoption,

"The regression matrix in Y = X.B + E.");

declareOption(ol, "m", &PLS::m, OptionBase::learntoption,

"Used to store the target size.");

declareOption(ol, "mean_input", &PLS::mean_input, OptionBase::learntoption,

"The mean of the input data X.");

declareOption(ol, "mean_target", &PLS::mean_target, OptionBase::learntoption,

"The mean of the target data Y.");

declareOption(ol, "p", &PLS::p, OptionBase::learntoption,

"Used to store the input size.");

declareOption(ol, "precision", &PLS::precision, OptionBase::buildoption,

"The precision to which we compute the eigenvectors.");

declareOption(ol, "stddev_input", &PLS::stddev_input, OptionBase::learntoption,

"The standard deviation of the input data X.");

declareOption(ol, "stddev_target", &PLS::stddev_target, OptionBase::learntoption,

"The standard deviation of the target data Y.");

declareOption(ol, "W", &PLS::W, OptionBase::learntoption,

"The regression matrix in T = X.W.");

declareOption(ol, "resid_variance", &PLS::resid_variance, OptionBase::learntoption,

"Estimate of the residual variance for each output variable. Saved as a\n"

"learned option to allow outputting confidence intervals when model is\n"

"reloaded and used in test mode. These are saved only if the option\n"

"'compute_confidence' is true at train-time.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::PLS::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

| PLS * PLearn::PLS::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

| void PLearn::PLS::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 317 of file PLS.cc.

References B, PLearn::PLearner::stage, and W.

| OptionList & PLearn::PLS::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| OptionMap & PLearn::PLS::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| RemoteMethodMap & PLearn::PLS::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| TVec< string > PLearn::PLS::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 328 of file PLS.cc.

{

// No cost computed.

TVec<string> t;

return t;

}

| TVec< string > PLearn::PLS::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 338 of file PLS.cc.

{

// No cost computed.

TVec<string> t;

return t;

}

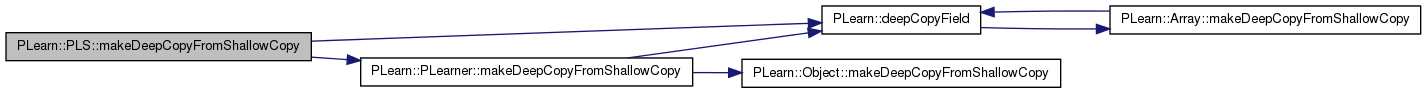

| void PLearn::PLS::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 348 of file PLS.cc.

References B, PLearn::deepCopyField(), PLearn::PLearner::makeDeepCopyFromShallowCopy(), mean_input, mean_target, resid_variance, stddev_input, stddev_target, and W.

{

inherited::makeDeepCopyFromShallowCopy(copies);

// ### Call deepCopyField on all "pointer-like" fields

// ### that you wish to be deepCopied rather than

// ### shallow-copied.

// ### ex:

deepCopyField(B, copies);

deepCopyField(mean_input, copies);

deepCopyField(mean_target, copies);

deepCopyField(stddev_input, copies);

deepCopyField(stddev_target, copies);

deepCopyField(W, copies);

deepCopyField(resid_variance, copies);

}

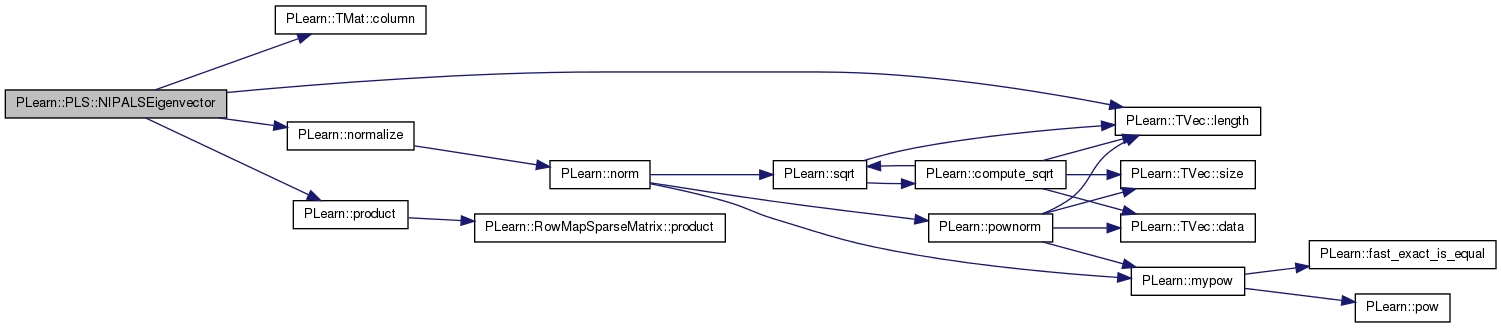

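| void PLearn::PLS::NIPALSEigenvector | ( | const Mat & | m, |

| Vec & | v, | ||

| real | precision | ||

| ) | [static] |

Computes the dominant eigenvector of m (the one associated with its largest eigenvalue) with the NIPALS algorithm: (1) v <- first column of m, normalized; (2) v = m.v, normalize v; (3) if any v[i] has changed by more than 'precision', go to (2), otherwise return v.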

Definition at line 368 of file PLS.cc.

References PLearn::TMat< T >::column(), i, PLearn::TVec< T >::length(), n, PLearn::normalize(), PLearn::product(), and w.

Referenced by train().

{

int n = v.length();

Vec w(n);

v << m.column(0);

normalize(v, 2.0);

bool ok = false;

while (!ok) {

w << v;

product(v, m, w);

normalize(v, 2.0);

ok = true;

for (int i = 0; i < n && ok; i++) {

if (fabs(v[i] - w[i]) > precision) {

ok = false;

}

}

}

}
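The loop above is a power iteration: multiply, renormalize, and stop once no component moves by more than `precision`. The sketch below reproduces it on `std::vector` types so it can be tried outside PLearn. `normalize_l2` and `dominant_eigenvector` are hypothetical names, and the `max_iter` guard is an addition that the listing above does not have.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // square, row-major

// Scales v to unit Euclidean length.
void normalize_l2(Vec& v) {
    double norm2 = 0.0;
    for (double x : v) norm2 += x * x;
    assert(norm2 > 0.0);
    const double norm = std::sqrt(norm2);
    for (double& x : v) x /= norm;
}

// Power iteration started from the first column of m.
// max_iter is a safety guard added for this sketch (an assumption).
Vec dominant_eigenvector(const Mat& m, double precision, int max_iter = 1000) {
    const std::size_t n = m.size();
    assert(n > 0);
    Vec v(n), w(n);
    for (std::size_t i = 0; i < n; ++i) {
        assert(m[i].size() == n);
        v[i] = m[i][0];
    }
    normalize_l2(v);
    for (int iter = 0; iter < max_iter; ++iter) {
        w = v;
        for (std::size_t i = 0; i < n; ++i) {
            v[i] = 0.0;
            for (std::size_t j = 0; j < n; ++j) v[i] += m[i][j] * w[j];
        }
        normalize_l2(v);
        bool converged = true;
        for (std::size_t i = 0; i < n && converged; ++i)
            if (std::fabs(v[i] - w[i]) > precision) converged = false;
        if (converged) break;
    }
    return v;
}
```

For the symmetric matrix {{2, 1}, {1, 2}}, whose largest eigenvalue is 3, the iteration converges towards (1, 1) / sqrt(2).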

| int PLearn::PLS::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 390 of file PLS.cc.

References k, m, output_the_score, and output_the_target.

Referenced by computeOutput().

{

int os = 0;

if (output_the_score) {

os += this->k;

}

if (output_the_target && m >= 0) {

// If m < 0, this means we don't know yet the target size, thus we

// shouldn't report it here.

os += this->m;

}

return os;

}
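To make the resulting layout concrete, the sketch below computes where the score and the predicted target sit inside the output vector for given option values. `OutputLayout` and `output_layout` are hypothetical names used only for this illustration.

```cpp
#include <cassert>

// Positions of the score and target blocks inside the output vector.
struct OutputLayout {
    int score_size;    // k if the score is output, else 0
    int target_start;  // index of the first target entry
    int target_size;   // m if the target is output and known, else 0
    int total;         // value outputsize() would report
};

OutputLayout output_layout(int k, int m,
                           bool output_the_score, bool output_the_target) {
    assert(k >= 0);
    OutputLayout layout{0, 0, 0, 0};
    if (output_the_score) layout.score_size = k;
    layout.target_start = layout.score_size;  // the target follows the score
    // m < 0 means the target size is not known yet, so it is not counted.
    if (output_the_target && m >= 0) layout.target_size = m;
    layout.total = layout.score_size + layout.target_size;
    return layout;
}
```

With k = 3 and m = 2 and both options enabled, the score fills indices 0 to 2 and the target indices 3 and 4, for a total size of 5.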

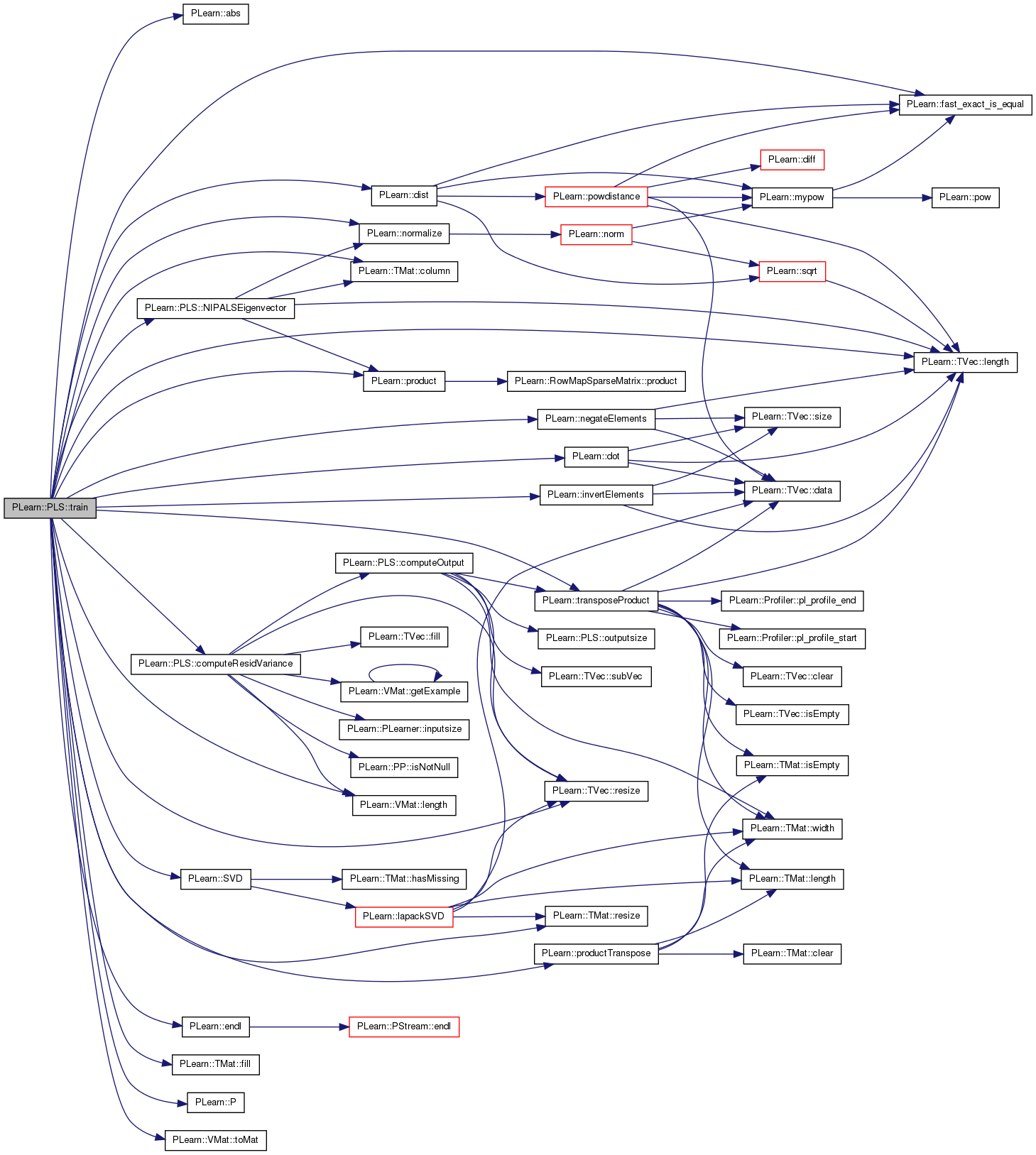

| void PLearn::PLS::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 407 of file PLS.cc.

References PLearn::abs(), B, PLearn::TMat< T >::column(), compute_confidence, computeResidVariance(), PLearn::dist(), PLearn::dot(), PLearn::endl(), PLearn::fast_exact_is_equal(), PLearn::TMat< T >::fill(), i, PLearn::invertElements(), j, k, PLearn::TVec< T >::length(), PLearn::VMat::length(), m, mean_input, mean_target, method, n, PLearn::negateElements(), NIPALSEigenvector(), PLearn::normalize(), PLearn::P(), p, precision, PLearn::product(), PLearn::productTranspose(), PLearn::PLearner::report_progress, resid_variance, PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), PLearn::PLearner::stage, stddev_input, stddev_target, PLearn::SVD(), PLearn::VMat::toMat(), PLearn::PLearner::train_set, PLearn::transposeProduct(), PLearn::PLearner::verbosity, and W.

{

if (stage == 1) {

// Already trained.

MODULE_LOG << "Skipping PLS training" << endl;

return;

}

MODULE_LOG << "PLS training started" << endl;

// Construct the centered and normalized training set, for the input

// as well as the target part.

DBG_MODULE_LOG << "Normalizing of the data" << endl;

VMat input_part = new SubVMatrix(train_set,

0, 0,

train_set->length(),

train_set->inputsize());

VMat target_part = new SubVMatrix( train_set,

0, train_set->inputsize(),

train_set->length(),

train_set->targetsize());

PP<ShiftAndRescaleVMatrix> X_vmat =

new ShiftAndRescaleVMatrix(input_part, true);

X_vmat->verbosity = this->verbosity;

mean_input << X_vmat->shift;

stddev_input << X_vmat->scale;

negateElements(mean_input);

invertElements(stddev_input);

PP<ShiftAndRescaleVMatrix> Y_vmat =

new ShiftAndRescaleVMatrix(target_part, target_part->width(), true);

Y_vmat->verbosity = this->verbosity;

mean_target << Y_vmat->shift;

stddev_target << Y_vmat->scale;

negateElements(mean_target);

invertElements(stddev_target);

// Some common initialization.

W.resize(p, k);

Mat P(p, k);

Mat Q(m, k);

int n = X_vmat->length();

VMat X_vmatrix = static_cast<ShiftAndRescaleVMatrix*>(X_vmat);

VMat Y_vmatrix = static_cast<ShiftAndRescaleVMatrix*>(Y_vmat);

if (method == "kernel") {

// Initialize the various coefficients.

DBG_MODULE_LOG << "Initialization of the coefficients" << endl;

Vec ph(p);

Vec qh(m);

Vec wh(p);

Vec tmp(p);

real ch;

Mat Ah = transposeProduct(X_vmatrix, Y_vmatrix);

Mat Mh = transposeProduct(X_vmatrix, X_vmatrix);

Mat Ch(p,p); // Initialized to Identity(p).

Mat Ah_t_Ah;

Mat update_Ah(p,m);

Mat update_Mh(p,p);

Mat update_Ch(p,p);

for (int i = 0; i < p; i++) {

for (int j = i+1; j < p; j++) {

Ch(i,j) = Ch(j,i) = 0;

}

Ch(i,i) = 1;

}

// Iterate k times to find the k first factors.

PP<ProgressBar> pb(

report_progress? new ProgressBar("Computing the PLS components", k)

: 0);

for (int h = 0; h < this->k; h++) {

Ah_t_Ah = transposeProduct(Ah,Ah);

if (m == 1) {

// No need to compute the eigenvector.

qh[0] = 1;

} else {

NIPALSEigenvector(Ah_t_Ah, qh, precision);

}

product(tmp, Ah, qh);

product(wh, Ch, tmp);

normalize(wh, 2.0);

W.column(h) << wh;

product(ph, Mh, wh);

ch = dot(wh, ph);

ph /= ch;

P.column(h) << ph;

transposeProduct(qh, Ah, wh);

qh /= ch;

Q.column(h) << qh;

Mat ph_mat(p, 1, ph);

Mat qh_mat(m, 1, qh);

Mat wh_mat(p, 1, wh);

update_Ah = productTranspose(ph_mat, qh_mat);

update_Ah *= ch;

Ah -= update_Ah;

update_Mh = productTranspose(ph_mat, ph_mat);

update_Mh *= ch;

Mh -= update_Mh;

update_Ch = productTranspose(wh_mat, ph_mat);

Ch -= update_Ch;

if (pb)

pb->update(h + 1);

}

} else if (method == "pls1") {

Vec s(n);

Vec old_s(n);

Vec y(n);

Vec lx(p);

Vec ly(1);

Mat T(n,k);

Mat X = X_vmatrix->toMat();

y << Y_vmatrix->toMat();

PP<ProgressBar> pb(

report_progress? new ProgressBar("Computing the PLS components", k)

: 0);

for (int h = 0; h < k; h++) {

if (pb)

pb->update(h);

s << y;

normalize(s, 2.0);

bool finished = false;

while (!finished) {

old_s << s;

transposeProduct(lx, X, s);

product(s, X, lx);

normalize(s, 2.0);

if (dist(old_s, s, 2) < precision) {

finished = true;

}

}

ly[0] = dot(s, y);

transposeProduct(lx, X, s);

T.column(h) << s;

P.column(h) << lx;

Q.column(h) << ly;

// X = X - s lx'

// y = y - s ly

for (int i = 0; i < n; i++) {

for (int j = 0; j < p; j++) {

X(i,j) -= s[i] * lx[j];

}

y[i] -= s[i] * ly[0];

}

}

DBG_MODULE_LOG << " Computation of the corresponding coefficients" << endl;

Mat tmp(n, p);

productTranspose(tmp, T, P);

Mat U, Vt;

Vec D;

real safeguard = 1.1; // Because the SVD may crash otherwise.

SVD(tmp, U, D, Vt, 'A', safeguard);

for (int i = 0; i < D.length(); i++) {

if (abs(D[i]) < precision) {

D[i] = 0;

} else {

D[i] = 1.0 / D[i];

}

}

Mat tmp2(n,p);

tmp2.fill(0);

for (int i = 0; i < D.length(); i++) {

if (!fast_exact_is_equal(D[i], 0)) {

tmp2(i) << D[i] * Vt(i);

}

}

product(tmp, U, tmp2);

transposeProduct(W, tmp, T);

}

B.resize(p,m);

productTranspose(B, W, Q);

// If we requested confidence intervals, compute the variance of the

// residuals on the training set

if (compute_confidence)

computeResidVariance(train_set, resid_variance);

else

resid_variance.resize(0);

MODULE_LOG << "PLS training ended" << endl;

stage = 1;

}
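The 'kernel' branch above works only on the cross-product matrices A = X'Y and M = X'X, which it deflates after each component. As a rough illustration, here is a standalone sketch of the same update sequence restricted to a single target column (m == 1), where the eigenvector step reduces to qh = 1. `kernel_pls1` is a hypothetical name, the inputs are assumed to be already centered and scaled, and the sketch accumulates B = W.Q' one component at a time instead of storing W and Q.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major

// Kernel PLS for one target column. X is n x p, y has length n,
// both assumed standardized. Returns the regression vector B (length p).
Vec kernel_pls1(const Mat& X, const Vec& y, int k) {
    const std::size_t n = X.size();
    assert(n > 0 && y.size() == n && k > 0);
    const std::size_t p = X[0].size();

    Vec A(p, 0.0);          // A = X^T y
    Mat M(p, Vec(p, 0.0));  // M = X^T X
    Mat C(p, Vec(p, 0.0));  // C starts as the identity
    for (std::size_t i = 0; i < p; ++i) {
        C[i][i] = 1.0;
        for (std::size_t r = 0; r < n; ++r) {
            A[i] += X[r][i] * y[r];
            for (std::size_t j = 0; j < p; ++j) M[i][j] += X[r][i] * X[r][j];
        }
    }

    Vec B(p, 0.0);
    for (int h = 0; h < k; ++h) {
        // w = C A, scaled to unit length.
        Vec w(p, 0.0);
        double norm2 = 0.0;
        for (std::size_t i = 0; i < p; ++i)
            for (std::size_t j = 0; j < p; ++j) w[i] += C[i][j] * A[j];
        for (std::size_t i = 0; i < p; ++i) norm2 += w[i] * w[i];
        assert(norm2 > 0.0);  // fails once A has been fully deflated
        for (std::size_t i = 0; i < p; ++i) w[i] /= std::sqrt(norm2);

        // ph = M w / c, with c = w^T M w.
        Vec ph(p, 0.0);
        double c = 0.0;
        for (std::size_t i = 0; i < p; ++i)
            for (std::size_t j = 0; j < p; ++j) ph[i] += M[i][j] * w[j];
        for (std::size_t i = 0; i < p; ++i) c += w[i] * ph[i];
        for (std::size_t i = 0; i < p; ++i) ph[i] /= c;

        // q = A^T w / c.
        double q = 0.0;
        for (std::size_t i = 0; i < p; ++i) q += A[i] * w[i];
        q /= c;

        // Accumulate this component's contribution to B = W Q^T.
        for (std::size_t i = 0; i < p; ++i) B[i] += w[i] * q;

        // Deflate A, M and C as in the listing above.
        for (std::size_t i = 0; i < p; ++i) {
            A[i] -= c * ph[i] * q;
            for (std::size_t j = 0; j < p; ++j) {
                M[i][j] -= c * ph[i] * ph[j];
                C[i][j] -= w[i] * ph[j];
            }
        }
    }
    return B;
}
```

For X with rows (1, 0), (0, 1), (-1, 0), (0, -1) and y = (1, 2, -1, -2), a single component (k = 1) yields B close to (1, 2).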

StaticInitializer PLearn::PLS::_static_initializer_ [static] |

Reimplemented from PLearn::PLearner.

Mat PLearn::PLS::B [protected] |

Definition at line 64 of file PLS.h.

Referenced by computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

| bool PLearn::PLS::compute_confidence |

Definition at line 89 of file PLS.h.

Referenced by declareOptions(), and train().

| int PLearn::PLS::k |

Definition at line 84 of file PLS.h.

Referenced by computeConfidenceFromOutput(), computeOutput(), declareOptions(), outputsize(), and train().

int PLearn::PLS::m [protected] |

Definition at line 65 of file PLS.h.

Referenced by build_(), computeConfidenceFromOutput(), computeOutput(), computeResidVariance(), declareOptions(), outputsize(), and train().

Vec PLearn::PLS::mean_input [protected] |

Definition at line 66 of file PLS.h.

Referenced by build_(), computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::PLS::mean_target [protected] |

Definition at line 67 of file PLS.h.

Referenced by build_(), computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

| string PLearn::PLS::method |

Definition at line 85 of file PLS.h.

Referenced by build_(), declareOptions(), and train().

| bool PLearn::PLS::output_the_score |

Definition at line 87 of file PLS.h.

Referenced by build_(), computeConfidenceFromOutput(), computeOutput(), computeResidVariance(), declareOptions(), and outputsize().

| bool PLearn::PLS::output_the_target |

Definition at line 88 of file PLS.h.

Referenced by build_(), computeConfidenceFromOutput(), computeOutput(), computeResidVariance(), declareOptions(), and outputsize().

int PLearn::PLS::p [protected] |

Definition at line 68 of file PLS.h.

Referenced by build_(), computeOutput(), declareOptions(), and train().

| real PLearn::PLS::precision |

Definition at line 86 of file PLS.h.

Referenced by declareOptions(), and train().

Vec PLearn::PLS::resid_variance [protected] |

Estimate of the residual variance for each output variable.

Saved as a learned option to allow outputting confidence intervals when model is reloaded and used in test mode.

Definition at line 76 of file PLS.h.

Referenced by computeConfidenceFromOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::PLS::stddev_input [protected] |

Definition at line 69 of file PLS.h.

Referenced by build_(), computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::PLS::stddev_target [protected] |

Definition at line 70 of file PLS.h.

Referenced by build_(), computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Mat PLearn::PLS::W [protected] |

Definition at line 71 of file PLS.h.

Referenced by computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().
