|

PLearn 0.1


#include <SecondIterationWrapper.h>

Public Member Functions | |

| SecondIterationWrapper () | |

| virtual | ~SecondIterationWrapper () |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual SecondIterationWrapper * | deepCopy (CopiesMap &copies) const |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | train () |

| *** SUBCLASS WRITING: *** | |

| virtual void | forget () |

| *** SUBCLASS WRITING: *** | |

| virtual int | outputsize () const |

| SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options. | |

| virtual TVec< string > | getTrainCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual TVec< string > | getTestCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| *** SUBCLASS WRITING: *** | |

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| *** SUBCLASS WRITING: *** | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| **** SUBCLASS WRITING: **** | |

| void | computeClassStatistics () |

| void | computeSalesStatistics () |

| real | deGaussianize (real prediction) |

Private Attributes | |

| int | class_prediction |

| VMat | ref_train |

| VMat | ref_test |

| VMat | ref_sales |

| VMat | train_dataset |

| VMat | test_dataset |

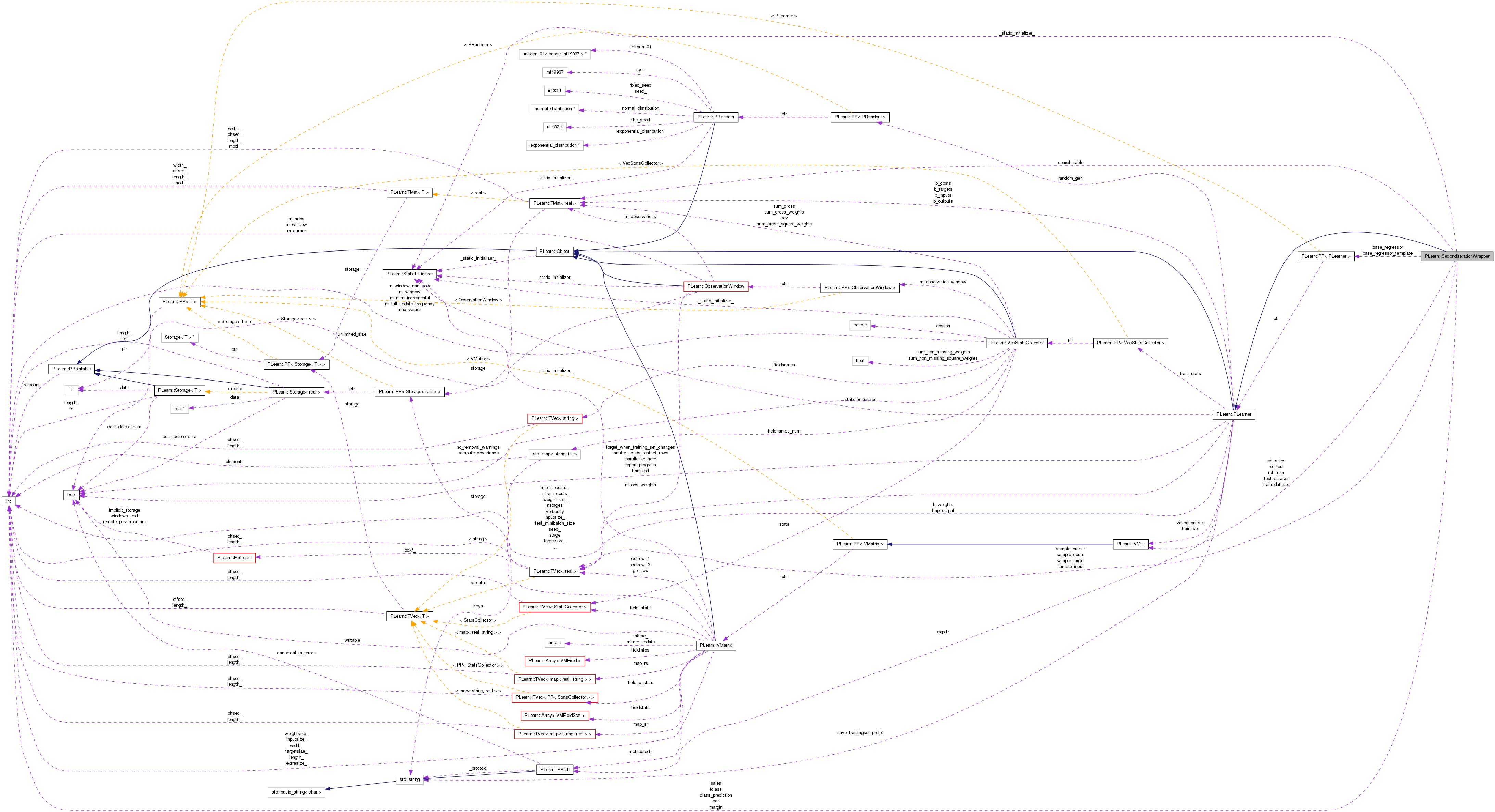

| PP< PLearner > | base_regressor_template |

| PP< PLearner > | base_regressor |

| int | margin |

| int | loan |

| int | sales |

| int | tclass |

| Mat | search_table |

| Vec | sample_input |

| Vec | sample_target |

| Vec | sample_output |

| Vec | sample_costs |

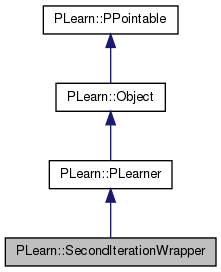

Definition at line 53 of file SecondIterationWrapper.h.

typedef PLearner PLearn::SecondIterationWrapper::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 55 of file SecondIterationWrapper.h.

| PLearn::SecondIterationWrapper::SecondIterationWrapper | ( | ) |

Definition at line 55 of file SecondIterationWrapper.cc.

: class_prediction(1) { }

| PLearn::SecondIterationWrapper::~SecondIterationWrapper | ( | ) | [virtual] |

Definition at line 60 of file SecondIterationWrapper.cc.

{

}

| string PLearn::SecondIterationWrapper::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file SecondIterationWrapper.cc.

| OptionList & PLearn::SecondIterationWrapper::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file SecondIterationWrapper.cc.

| RemoteMethodMap & PLearn::SecondIterationWrapper::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file SecondIterationWrapper.cc.

| bool PLearn::SecondIterationWrapper::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file SecondIterationWrapper.cc.

| Object * PLearn::SecondIterationWrapper::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SecondIterationWrapper.cc.

| void PLearn::SecondIterationWrapper::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file SecondIterationWrapper.cc.

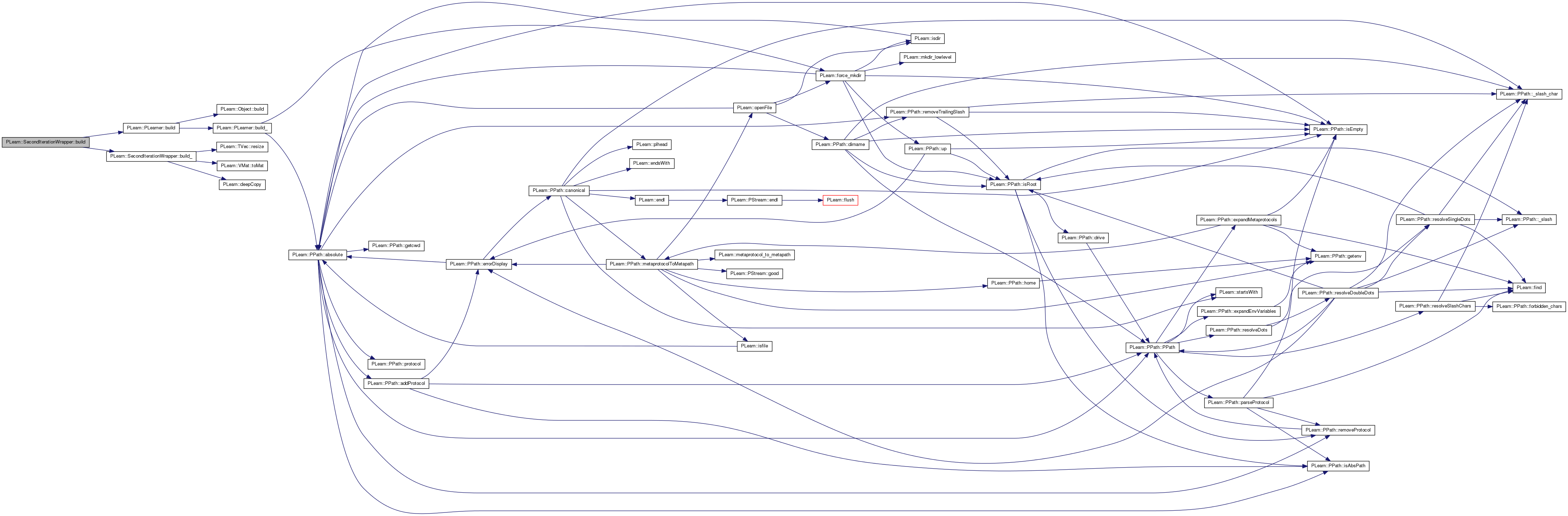

| void PLearn::SecondIterationWrapper::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 105 of file SecondIterationWrapper.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

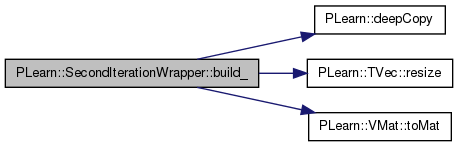

| void PLearn::SecondIterationWrapper::build_ | ( | ) | [private] |

**** SUBCLASS WRITING: ****

This method should finish building of the object, according to set 'options', in *any* situation.

Typical situations include:

You can assume that the parent class' build_() has already been called.

A typical build method will want to know the inputsize(), targetsize() and outputsize(), and may also want to check whether train_set->hasWeights(). All these methods require a train_set to be set, so the first thing you may want to do is check if(train_set) before doing any heavy building.

Note: build() is always called by setTrainingSet.

Reimplemented from PLearn::PLearner.

Definition at line 111 of file SecondIterationWrapper.cc.

References base_regressor, base_regressor_template, class_prediction, PLearn::deepCopy(), loan, margin, PLERROR, ref_sales, ref_test, ref_train, PLearn::TVec< T >::resize(), sales, sample_costs, sample_input, sample_output, sample_target, search_table, tclass, test_dataset, PLearn::VMat::toMat(), train_dataset, and PLearn::PLearner::train_set.

Referenced by build().

{

if (train_set)

{

if (class_prediction == 0)

if(!ref_train || !ref_test || !ref_sales || !train_dataset || !test_dataset)

PLERROR("In SecondIterationWrapper: missing reference data sets to compute statistics");

margin = 0;

loan = 1;

sales = 2;

tclass = 3;

base_regressor = ::PLearn::deepCopy(base_regressor_template);

base_regressor->setTrainingSet(train_set, true);

base_regressor->setTrainStatsCollector(new VecStatsCollector);

if (class_prediction == 0) search_table = ref_sales->toMat();

sample_input.resize(train_set->inputsize());

sample_target.resize(train_set->targetsize());

sample_output.resize(base_regressor->outputsize());

sample_costs.resize(4);

}

}

| string PLearn::SecondIterationWrapper::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SecondIterationWrapper.cc.

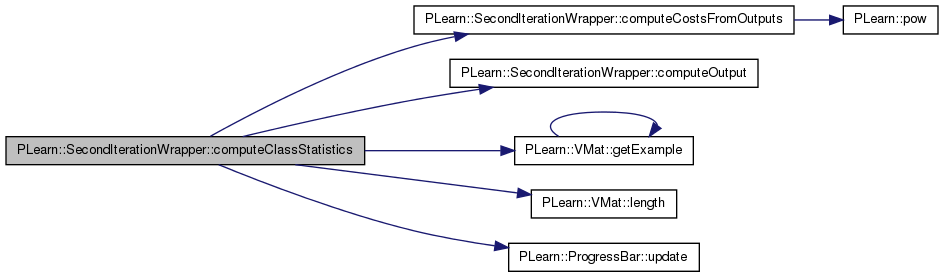

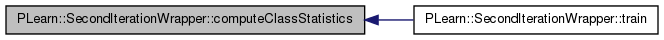

| void PLearn::SecondIterationWrapper::computeClassStatistics | ( | ) | [private] |

Definition at line 141 of file SecondIterationWrapper.cc.

References computeCostsFromOutputs(), computeOutput(), PLearn::VMat::getExample(), PLearn::VMat::length(), PLearn::PLearner::report_progress, sample_costs, sample_input, sample_output, sample_target, PLearn::PLearner::train_set, PLearn::PLearner::train_stats, and PLearn::ProgressBar::update().

Referenced by train().

{

real sample_weight;

ProgressBar* pb = NULL;

if (report_progress)

pb = new ProgressBar("Second Iteration : computing the train statistics: ",

train_set->length());

train_stats->forget();

for (int row = 0; row < train_set->length(); row++)

{

train_set->getExample(row, sample_input, sample_target, sample_weight);

computeOutput(sample_input, sample_output);

computeCostsFromOutputs(sample_input, sample_output, sample_target, sample_costs);

train_stats->update(sample_costs);

if (report_progress) pb->update(row);

}

train_stats->finalize();

if (report_progress) delete pb;

}
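The loop above follows a forget / update / finalize cycle on train_stats and later reads back a mean and a standard error per cost. As a minimal sketch of that cycle, the class below accumulates sums and squared sums for each cost column. `RunningStats` and its methods are hypothetical stand-ins, not the PLearn `VecStatsCollector` API, and the standard error is assumed here to be the sample standard deviation divided by the square root of the number of samples.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a per-column statistics collector.
// It is NOT PLearn's VecStatsCollector; it only mirrors the
// forget / update / read-back cycle used in the listing above.
class RunningStats
{
public:
    explicit RunningStats(std::size_t width)
        : count_(0), sum_(width, 0.0), sum_sq_(width, 0.0) {}

    // Discard everything accumulated so far.
    void forget()
    {
        count_ = 0;
        std::fill(sum_.begin(), sum_.end(), 0.0);
        std::fill(sum_sq_.begin(), sum_sq_.end(), 0.0);
    }

    // Accumulate one vector of costs (one value per column).
    void update(const std::vector<double>& costs)
    {
        for (std::size_t i = 0; i < sum_.size() && i < costs.size(); ++i)
        {
            sum_[i] += costs[i];
            sum_sq_[i] += costs[i] * costs[i];
        }
        ++count_;
    }

    std::size_t count() const { return count_; }

    double mean(std::size_t i) const
    {
        return count_ == 0 ? 0.0 : sum_[i] / static_cast<double>(count_);
    }

    // Assumed definition: sample standard deviation / sqrt(n).
    double stdError(std::size_t i) const
    {
        if (count_ < 2) return 0.0;
        const double n = static_cast<double>(count_);
        const double variance = (sum_sq_[i] - sum_[i] * sum_[i] / n) / (n - 1.0);
        return std::sqrt(std::max(variance, 0.0) / n);
    }

private:
    std::size_t count_;
    std::vector<double> sum_;
    std::vector<double> sum_sq_;
};
```

A caller would construct `RunningStats stats(4)`, call `stats.update(costs)` once per row, and read `stats.mean(i)` and `stats.stdError(i)` after the loop.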

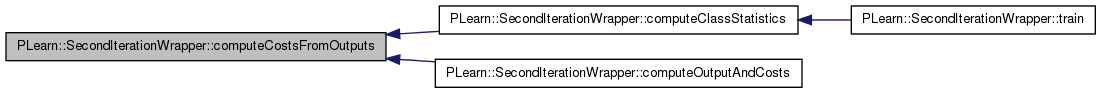

| void PLearn::SecondIterationWrapper::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the weighted costs from already computed output. The costs should correspond to the cost names returned by getTestCostNames().

NOTE: In exotic cases, the cost may also depend on some information in the input, which is why the method also gets to see it.

Implements PLearn::PLearner.

Definition at line 350 of file SecondIterationWrapper.cc.

References class_prediction, and PLearn::pow().

Referenced by computeClassStatistics(), and computeOutputAndCosts().

{

costsv[0] = pow((outputv[0] - targetv[0]), 2);

if (class_prediction == 1)

{

real class_pred;

if (outputv[0] <= 0.5) class_pred = 0.;

else if (outputv[0] <= 1.5) class_pred = 1.0;

// else if (outputv[0] <= 2.5) class_pred = 2.0;

else class_pred = 2.0;

costsv[1] = pow((class_pred - targetv[0]), 2);

costsv[2] = fabs(class_pred - targetv[0]);

costsv[3] = class_pred == targetv[0]?0:1;

return;

}

costsv[1] = 0.0;

costsv[2] = 0.0;

costsv[3] = 0.0;

}
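The class-prediction branch above can be read as two steps: round the scalar regression output to a class label with fixed cut points, then derive four costs from it. The sketch below restates that logic as free functions on plain doubles. `roundToClass` and `classCosts` are hypothetical names introduced for illustration and are not part of PLearn.

```cpp
#include <array>
#include <cmath>

// Hypothetical helper: maps a regression output to a class label
// using the same cut points (0.5 and 1.5) as the listing above.
double roundToClass(double output)
{
    if (output <= 0.5) return 0.0;
    if (output <= 1.5) return 1.0;
    return 2.0;
}

// Hypothetical helper: returns the four costs in the order
// {mse, square_class_error, linear_class_error, class_error}.
std::array<double, 4> classCosts(double output, double target)
{
    const double cls = roundToClass(output);
    const double diff = output - target;
    const double cls_diff = cls - target;
    return { diff * diff,
             cls_diff * cls_diff,
             std::fabs(cls_diff),
             cls == target ? 0.0 : 1.0 };
}
```

For example, `classCosts(0.5, 2.0)` rounds the output to class 0, so the squared class error is 4, the linear class error is 2, and the class error is 1.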

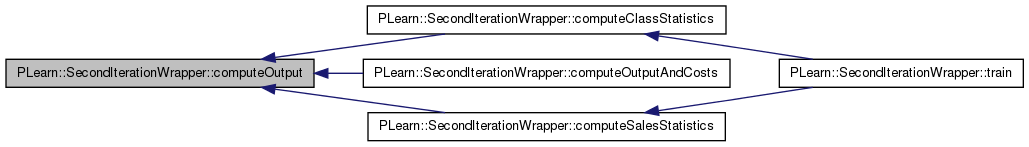

| void PLearn::SecondIterationWrapper::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 339 of file SecondIterationWrapper.cc.

References base_regressor.

Referenced by computeClassStatistics(), computeOutputAndCosts(), and computeSalesStatistics().

{

base_regressor->computeOutput(inputv, outputv);

}

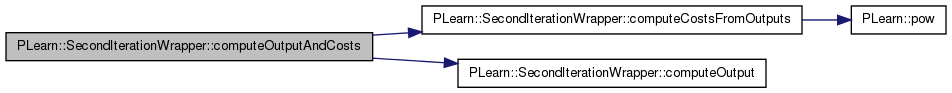

| void PLearn::SecondIterationWrapper::computeOutputAndCosts | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | output, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Default calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 344 of file SecondIterationWrapper.cc.

References computeCostsFromOutputs(), and computeOutput().

{

computeOutput(inputv, outputv);

computeCostsFromOutputs(inputv, outputv, targetv, costsv);

}

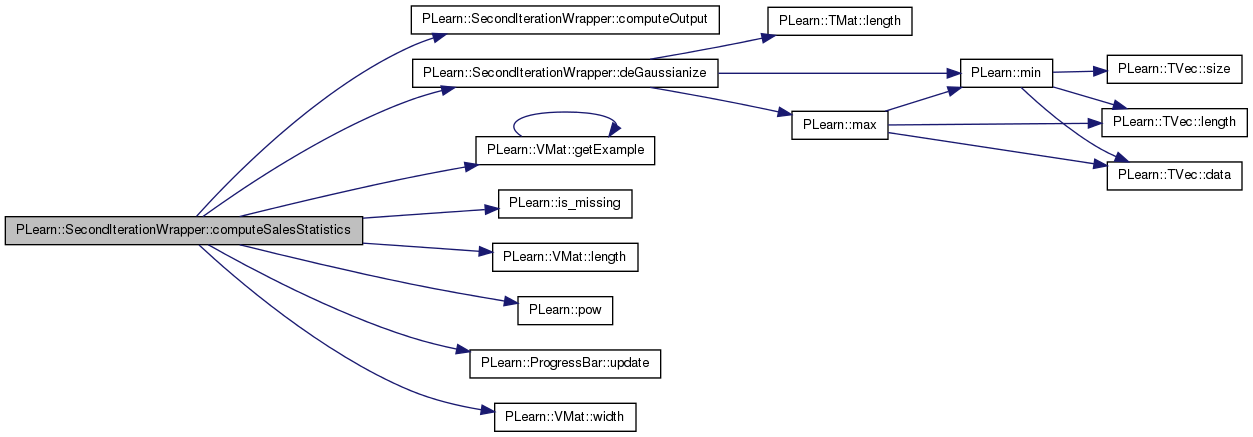

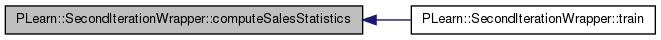

| void PLearn::SecondIterationWrapper::computeSalesStatistics | ( | ) | [private] |

Definition at line 162 of file SecondIterationWrapper.cc.

References base_regressor, computeOutput(), deGaussianize(), PLearn::PLearner::expdir, PLearn::VMat::getExample(), PLearn::is_missing(), PLearn::VMat::length(), loan, margin, PLearn::pow(), ref_test, ref_train, PLearn::PLearner::report_progress, sales, sample_costs, sample_input, sample_output, sample_target, tclass, test_dataset, train_dataset, PLearn::PLearner::train_set, PLearn::PLearner::train_stats, PLearn::ProgressBar::update(), and PLearn::VMat::width().

Referenced by train().

{

int row;

Vec sample_input(train_set->inputsize());

Vec sample_target(train_set->targetsize());

Vec reference_vector(ref_train->width());

real sample_weight;

Vec sample_output(base_regressor->outputsize());

Vec sample_costs(4);

Vec train_mean(3);

Vec train_std_error(3);

Vec valid_mean(3);

Vec valid_std_error(3);

Vec test_mean(3);

Vec test_std_error(3);

real sales_prediction;

real commitment;

real predicted_class;

ProgressBar* pb = NULL;

if (report_progress)

{

pb = new ProgressBar("Second Iteration : computing the train statistics: ", train_set->length());

}

train_stats->forget();

for (row = 0; row < train_set->length(); row++)

{

train_set->getExample(row, sample_input, sample_target, sample_weight);

ref_train->getRow(row, reference_vector);

computeOutput(sample_input, sample_output);

sales_prediction = deGaussianize(sample_output[0]);

commitment = 0.0;

if (!is_missing(reference_vector[margin])) commitment += reference_vector[margin];

if (!is_missing(reference_vector[loan])) commitment += reference_vector[loan];

if (sales_prediction < 1000000.0 && commitment < 200000.0) predicted_class = 1.0;

else if (sales_prediction < 10000000.0 && commitment < 1000000.0) predicted_class = 2.0;

else if (sales_prediction < 100000000.0 && commitment < 20000000.0) predicted_class = 3.0;

else predicted_class = 4.0;

sample_costs[0] = pow((sales_prediction - reference_vector[sales]), 2);

sample_costs[1] = pow((predicted_class - reference_vector[tclass]), 2);

if (predicted_class == reference_vector[tclass]) sample_costs[2] = 0.0;

else sample_costs[2] = 1.0;

train_stats->update(sample_costs);

if (report_progress) pb->update(row);

}

train_stats->finalize();

train_mean << train_stats->getMean();

train_std_error << train_stats->getStdError();

if (report_progress) delete pb;

if (report_progress)

{

pb = new ProgressBar("Second Iteration : computing the valid statistics: ", train_dataset->length() - train_set->length());

}

train_stats->forget();

for (row = train_set->length(); row < train_dataset->length(); row++)

{

train_dataset->getExample(row, sample_input, sample_target, sample_weight);

ref_train->getRow(row, reference_vector);

computeOutput(sample_input, sample_output);

sales_prediction = deGaussianize(sample_output[0]);

commitment = 0.0;

if (!is_missing(reference_vector[margin])) commitment += reference_vector[margin];

if (!is_missing(reference_vector[loan])) commitment += reference_vector[loan];

if (sales_prediction < 1000000.0 && commitment < 200000.0) predicted_class = 1.0;

else if (sales_prediction < 10000000.0 && commitment < 1000000.0) predicted_class = 2.0;

else if (sales_prediction < 100000000.0 && commitment < 20000000.0) predicted_class = 3.0;

else predicted_class = 4.0;

sample_costs[0] = pow((sales_prediction - reference_vector[sales]), 2);

sample_costs[1] = pow((predicted_class - reference_vector[tclass]), 2);

if (predicted_class == reference_vector[tclass]) sample_costs[2] = 0.0;

else sample_costs[2] = 1.0;

train_stats->update(sample_costs);

if (report_progress) pb->update(row);

}

train_stats->finalize();

valid_mean << train_stats->getMean();

valid_std_error << train_stats->getStdError();

if (report_progress) delete pb;

if (report_progress)

{

pb = new ProgressBar("Second Iteration : computing the test statistics: ", test_dataset->length());

}

train_stats->forget();

for (row = 0; row < test_dataset->length(); row++)

{

test_dataset->getExample(row, sample_input, sample_target, sample_weight);

ref_test->getRow(row, reference_vector);

computeOutput(sample_input, sample_output);

sales_prediction = deGaussianize(sample_output[0]);

commitment = 0.0;

if (!is_missing(reference_vector[margin])) commitment += reference_vector[margin];

if (!is_missing(reference_vector[loan])) commitment += reference_vector[loan];

if (sales_prediction < 1000000.0 && commitment < 200000.0) predicted_class = 1.0;

else if (sales_prediction < 10000000.0 && commitment < 1000000.0) predicted_class = 2.0;

else if (sales_prediction < 100000000.0 && commitment < 20000000.0) predicted_class = 3.0;

else predicted_class = 4.0;

sample_costs[0] = pow((sales_prediction - reference_vector[sales]), 2);

sample_costs[1] = pow((predicted_class - reference_vector[tclass]), 2);

if (predicted_class == reference_vector[tclass]) sample_costs[2] = 0.0;

else sample_costs[2] = 1.0;

train_stats->update(sample_costs);

if (report_progress) pb->update(row);

}

train_stats->finalize();

test_mean << train_stats->getMean();

test_std_error << train_stats->getStdError();

if (report_progress) delete pb;

TVec<string> stat_names(6);

stat_names[0] = "mse";

stat_names[1] = "mse_stderr";

stat_names[2] = "cse";

stat_names[3] = "cse_stderr";

stat_names[4] = "cle";

stat_names[5] = "cle_stderr";

VMat stat_file = new FileVMatrix(expdir + "class_stats.pmat", 3, stat_names);

stat_file->put(0, 0, train_mean[0]);

stat_file->put(0, 1, train_std_error[0]);

stat_file->put(0, 2, train_mean[1]);

stat_file->put(0, 3, train_std_error[1]);

stat_file->put(0, 4, train_mean[2]);

stat_file->put(0, 5, train_std_error[2]);

stat_file->put(1, 0, valid_mean[0]);

stat_file->put(1, 1, valid_std_error[0]);

stat_file->put(1, 2, valid_mean[1]);

stat_file->put(1, 3, valid_std_error[1]);

stat_file->put(1, 4, valid_mean[2]);

stat_file->put(1, 5, valid_std_error[2]);

stat_file->put(2, 0, test_mean[0]);

stat_file->put(2, 1, test_std_error[0]);

stat_file->put(2, 2, test_mean[1]);

stat_file->put(2, 3, test_std_error[1]);

stat_file->put(2, 4, test_mean[2]);

stat_file->put(2, 5, test_std_error[2]);

}
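The three loops above (train, valid, test) repeat the same per-row rule: sum the non-missing margin and loan into a commitment, then assign a class from the sales prediction and that commitment. A minimal sketch of that rule as two free functions follows. The names `commitmentOf` and `predictedClass` are hypothetical, and missing values are assumed to be represented as NaN, which is how `is_missing` is treated in this sketch.

```cpp
#include <cmath>

// Hypothetical helper: adds up margin and loan, skipping missing values.
// Assumption: a missing value is encoded as NaN.
double commitmentOf(double margin, double loan)
{
    double commitment = 0.0;
    if (!std::isnan(margin)) commitment += margin;
    if (!std::isnan(loan)) commitment += loan;
    return commitment;
}

// Hypothetical helper: same thresholds as the listing above.
double predictedClass(double sales_prediction, double commitment)
{
    if (sales_prediction < 1000000.0 && commitment < 200000.0) return 1.0;
    if (sales_prediction < 10000000.0 && commitment < 1000000.0) return 2.0;
    if (sales_prediction < 100000000.0 && commitment < 20000000.0) return 3.0;
    return 4.0;
}
```

Factoring the rule out this way would let each of the three loops share one implementation, so a threshold change cannot be applied to one data split and missed in another.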

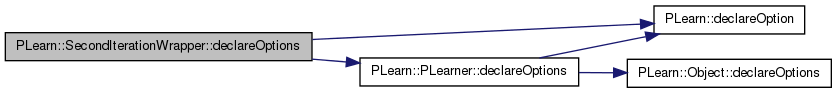

| void PLearn::SecondIterationWrapper::declareOptions | ( | OptionList & | ol | ) | [static] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 64 of file SecondIterationWrapper.cc.

References base_regressor, base_regressor_template, PLearn::OptionBase::buildoption, class_prediction, PLearn::declareOption(), PLearn::PLearner::declareOptions(), PLearn::OptionBase::learntoption, ref_sales, ref_test, ref_train, test_dataset, and train_dataset.

{

declareOption(ol, "class_prediction", &SecondIterationWrapper::class_prediction, OptionBase::buildoption,

"When set to 1 (default), indicates the base regression is on the class target.\n"

"Otherwise, we assume the regression is on the sales target.\n");

declareOption(ol, "base_regressor_template", &SecondIterationWrapper::base_regressor_template, OptionBase::buildoption,

"The template for the base regressor to be used.\n");

declareOption(ol, "ref_train", &SecondIterationWrapper::ref_train, OptionBase::buildoption,

"The reference set to compute train statistics.\n");

declareOption(ol, "ref_test", &SecondIterationWrapper::ref_test, OptionBase::buildoption,

"The reference set to compute test statistics.\n");

declareOption(ol, "ref_sales", &SecondIterationWrapper::ref_sales, OptionBase::buildoption,

"The reference set to de-gaussianize the prediction.\n");

declareOption(ol, "train_dataset", &SecondIterationWrapper::train_dataset, OptionBase::buildoption,

"The train data set.\n");

declareOption(ol, "test_dataset", &SecondIterationWrapper::test_dataset, OptionBase::buildoption,

"The test data set.\n");

declareOption(ol, "base_regressor", &SecondIterationWrapper::base_regressor, OptionBase::learntoption,

"The base regressor built from the template.\n");

inherited::declareOptions(ol);

}

| static const PPath& PLearn::SecondIterationWrapper::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 97 of file SecondIterationWrapper.h.

:

void build_();

| SecondIterationWrapper * PLearn::SecondIterationWrapper::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file SecondIterationWrapper.cc.

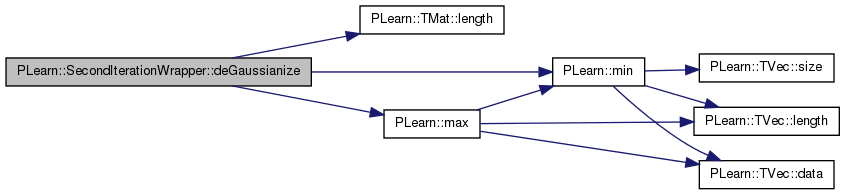

| real PLearn::SecondIterationWrapper::deGaussianize | ( | real | prediction | ) | [private] |

Definition at line 296 of file SecondIterationWrapper.cc.

References PLearn::TMat< T >::length(), PLearn::max(), PLearn::min(), and search_table.

Referenced by computeSalesStatistics().

{

if (prediction < search_table(0, 0)) return search_table(0, 1);

if (prediction > search_table(search_table.length() - 1, 0)) return search_table(search_table.length() - 1, 1);

int mid;

int min = 0;

int max = search_table.length() - 1;

while (max - min > 1)

{

mid = (max + min) / 2;

real mid_val = search_table(mid, 0);

if (prediction < mid_val) max = mid;

else if (prediction > mid_val) min = mid;

else min = max = mid;

}

if (min == max) return search_table(min, 1);

return (search_table(min, 1) + search_table(max, 1)) / 2.0;

}
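The function above is a bisection over a two-column lookup table: column 0 holds the gaussianized value and column 1 the value to return. The sketch below reproduces the same control flow on a `std::vector` of pairs so that it can be tried outside PLearn. `SearchTable` and `deGaussianizeSketch` are hypothetical names, and the table is assumed to be non-empty and sorted by its first component.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical table type: (gaussianized value, original value),
// assumed non-empty and sorted by the first component.
using SearchTable = std::vector<std::pair<double, double>>;

double deGaussianizeSketch(const SearchTable& table, double prediction)
{
    // Clamp predictions that fall outside the table range.
    if (prediction < table.front().first) return table.front().second;
    if (prediction > table.back().first) return table.back().second;

    std::size_t lo = 0;
    std::size_t hi = table.size() - 1;
    // Narrow [lo, hi] until the bounds are adjacent or equal.
    while (hi - lo > 1)
    {
        const std::size_t mid = lo + (hi - lo) / 2;
        const double mid_val = table[mid].first;
        if (prediction < mid_val) hi = mid;
        else if (prediction > mid_val) lo = mid;
        else lo = hi = mid;  // exact hit on an interior probe
    }
    if (lo == hi) return table[lo].second;
    // Otherwise return the midpoint of the two bracketing entries.
    return (table[lo].second + table[hi].second) / 2.0;
}
```

With the table `{(-1, 10), (0, 20), (1, 40)}`, a prediction of 0.5 falls between the last two entries and yields their midpoint, 30. As in the listing, the result between two entries is the plain average of the bracketing values rather than a linear interpolation, and a prediction equal to the first key returns the average of the first two entries because the first row is never probed as `mid`.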

| void PLearn::SecondIterationWrapper::forget | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!)

A typical forget() method should do the following:

This method is typically called by the build_() method, after it has finished setting up the parameters, and if it deemed useful to set or reset the learner in its fresh state. (remember build may be called after modifying options that do not necessarily require the learner to restart from a fresh state...) forget is also called by the setTrainingSet method, after calling build(), so it will generally be called TWICE during setTrainingSet!

Reimplemented from PLearn::PLearner.

Definition at line 315 of file SecondIterationWrapper.cc.

{

}

| OptionList & PLearn::SecondIterationWrapper::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SecondIterationWrapper.cc.

| OptionMap & PLearn::SecondIterationWrapper::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SecondIterationWrapper.cc.

| RemoteMethodMap & PLearn::SecondIterationWrapper::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SecondIterationWrapper.cc.

| TVec< string > PLearn::SecondIterationWrapper::getTestCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the costs computed by computeCostsFromOutputs.

Implements PLearn::PLearner.

Definition at line 334 of file SecondIterationWrapper.cc.

References getTrainCostNames().

{

return getTrainCostNames();

}

| TVec< string > PLearn::SecondIterationWrapper::getTrainCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 324 of file SecondIterationWrapper.cc.

Referenced by getTestCostNames().

{

TVec<string> return_msg(4);

return_msg[0] = "mse";

return_msg[1] = "square_class_error";

return_msg[2] = "linear_class_error";

return_msg[3] = "class_error";

return return_msg;

}

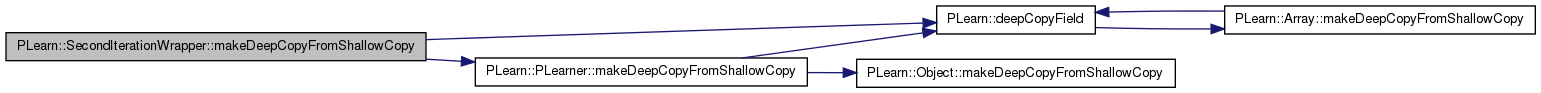

| void PLearn::SecondIterationWrapper::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 93 of file SecondIterationWrapper.cc.

References base_regressor, base_regressor_template, class_prediction, PLearn::deepCopyField(), PLearn::PLearner::makeDeepCopyFromShallowCopy(), ref_sales, ref_test, ref_train, and test_dataset.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(class_prediction, copies);

deepCopyField(ref_train, copies);

deepCopyField(ref_test, copies);

deepCopyField(ref_sales, copies);

deepCopyField(test_dataset, copies);

deepCopyField(base_regressor_template, copies);

deepCopyField(base_regressor, copies);

}

| int PLearn::SecondIterationWrapper::outputsize | ( | ) | const [virtual] |

SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options.

Implements PLearn::PLearner.

Definition at line 319 of file SecondIterationWrapper.cc.

References base_regressor.

{

return base_regressor?base_regressor->outputsize():-1;

}

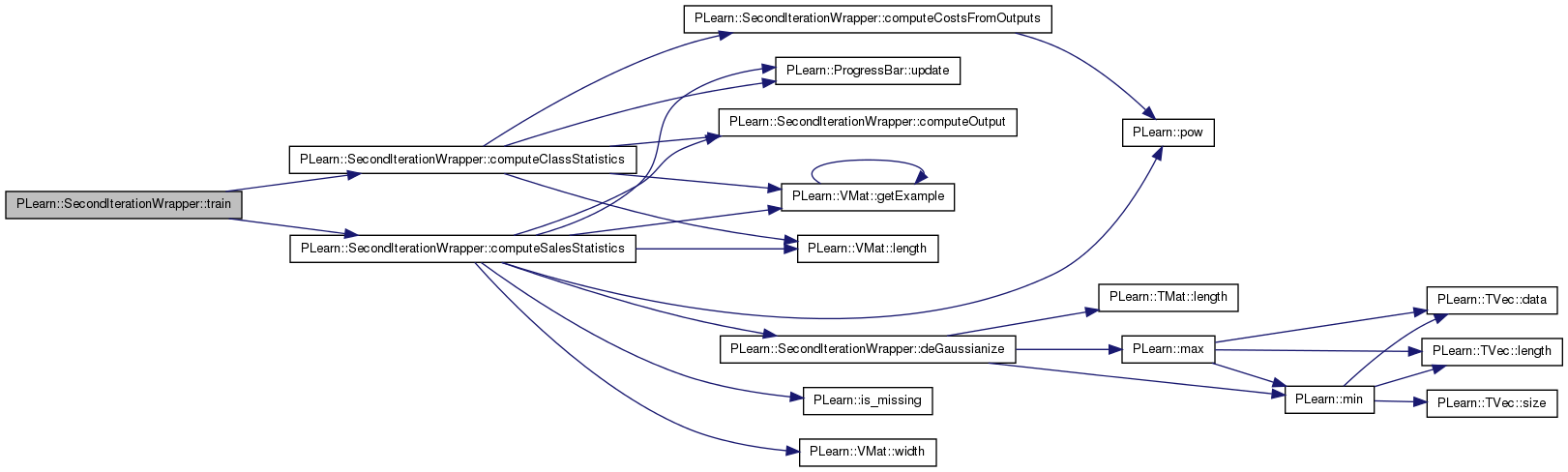

| void PLearn::SecondIterationWrapper::train | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

The role of the train method is to bring the learner up to stage==nstages, updating the stats with training costs measured on-line in the process.

TYPICAL CODE:

static Vec input; // static so we don't reallocate/deallocate memory each time...

static Vec target; // (but be careful that static means shared!)

input.resize(inputsize()); // the train_set's inputsize()

target.resize(targetsize()); // the train_set's targetsize()

real weight;

if(!train_stats) // make a default stats collector, in case there's none

train_stats = new VecStatsCollector();

if(nstages<stage) // asking to revert to a previous stage!

forget(); // reset the learner to stage=0

while(stage<nstages)

{

// clear statistics of previous epoch

train_stats->forget();

//... train for 1 stage, and update train_stats,

// using train_set->getSample(input, target, weight);

// and train_stats->update(train_costs)

++stage;

train_stats->finalize(); // finalize statistics for this epoch

}

Implements PLearn::PLearner.

Definition at line 133 of file SecondIterationWrapper.cc.

References base_regressor, class_prediction, computeClassStatistics(), computeSalesStatistics(), and PLearn::PLearner::nstages.

{

base_regressor->nstages = nstages;

base_regressor->train();

if (class_prediction == 1) computeClassStatistics();

else computeSalesStatistics();

}

StaticInitializer PLearn::SecondIterationWrapper::_static_initializer_ [static] |

Reimplemented from PLearn::PLearner.

Definition at line 97 of file SecondIterationWrapper.h.

PP< PLearner > PLearn::SecondIterationWrapper::base_regressor [private] |

Definition at line 76 of file SecondIterationWrapper.h.

Referenced by build_(), computeOutput(), computeSalesStatistics(), declareOptions(), makeDeepCopyFromShallowCopy(), outputsize(), and train().

PP< PLearner > PLearn::SecondIterationWrapper::base_regressor_template [private] |

Definition at line 69 of file SecondIterationWrapper.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

int PLearn::SecondIterationWrapper::class_prediction [private] |

Definition at line 63 of file SecondIterationWrapper.h.

Referenced by build_(), computeCostsFromOutputs(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

int PLearn::SecondIterationWrapper::loan [private] |

Definition at line 82 of file SecondIterationWrapper.h.

Referenced by build_(), and computeSalesStatistics().

int PLearn::SecondIterationWrapper::margin [private] |

Definition at line 81 of file SecondIterationWrapper.h.

Referenced by build_(), and computeSalesStatistics().

VMat PLearn::SecondIterationWrapper::ref_sales [private] |

Definition at line 66 of file SecondIterationWrapper.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

VMat PLearn::SecondIterationWrapper::ref_test [private] |

Definition at line 65 of file SecondIterationWrapper.h.

Referenced by build_(), computeSalesStatistics(), declareOptions(), and makeDeepCopyFromShallowCopy().

VMat PLearn::SecondIterationWrapper::ref_train [private] |

Definition at line 64 of file SecondIterationWrapper.h.

Referenced by build_(), computeSalesStatistics(), declareOptions(), and makeDeepCopyFromShallowCopy().

int PLearn::SecondIterationWrapper::sales [private] |

Definition at line 83 of file SecondIterationWrapper.h.

Referenced by build_(), and computeSalesStatistics().

Vec PLearn::SecondIterationWrapper::sample_costs [private] |

Definition at line 90 of file SecondIterationWrapper.h.

Referenced by build_(), computeClassStatistics(), and computeSalesStatistics().

Vec PLearn::SecondIterationWrapper::sample_input [private] |

Definition at line 87 of file SecondIterationWrapper.h.

Referenced by build_(), computeClassStatistics(), and computeSalesStatistics().

Vec PLearn::SecondIterationWrapper::sample_output [private] |

Definition at line 89 of file SecondIterationWrapper.h.

Referenced by build_(), computeClassStatistics(), and computeSalesStatistics().

Vec PLearn::SecondIterationWrapper::sample_target [private] |

Definition at line 88 of file SecondIterationWrapper.h.

Referenced by build_(), computeClassStatistics(), and computeSalesStatistics().

Mat PLearn::SecondIterationWrapper::search_table [private] |

Definition at line 85 of file SecondIterationWrapper.h.

Referenced by build_(), and deGaussianize().

int PLearn::SecondIterationWrapper::tclass [private] |

Definition at line 84 of file SecondIterationWrapper.h.

Referenced by build_(), and computeSalesStatistics().

VMat PLearn::SecondIterationWrapper::test_dataset [private] |

Definition at line 68 of file SecondIterationWrapper.h.

Referenced by build_(), computeSalesStatistics(), declareOptions(), and makeDeepCopyFromShallowCopy().

VMat PLearn::SecondIterationWrapper::train_dataset [private] |

Definition at line 67 of file SecondIterationWrapper.h.

Referenced by build_(), computeSalesStatistics(), and declareOptions().

1.7.4
