
PLearn 0.1


#include <DeepNNet.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | `DeepNNet ()` | Default constructor. |
| virtual void | `build ()` | Simply calls inherited::build() then build_(). |
| virtual void | `makeDeepCopyFromShallowCopy (CopiesMap &copies)` | Transforms a shallow copy into a deep copy. |
| virtual string | `classname () const` | |
| virtual OptionList & | `getOptionList () const` | |
| virtual OptionMap & | `getOptionMap () const` | |
| virtual RemoteMethodMap & | `getRemoteMethodMap () const` | |
| virtual DeepNNet * | `deepCopy (CopiesMap &copies) const` | |
| virtual int | `outputsize () const` | Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). |
| virtual void | `forget ()` | (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). |
| virtual void | `train ()` | The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. |
| virtual void | `computeOutput (const Vec &input, Vec &output) const` | Computes the output from the input. |
| virtual void | `computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const` | Computes the costs from already computed output. |
| virtual TVec< std::string > | `getTestCostNames () const` | Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). |
| virtual TVec< std::string > | `getTrainCostNames () const` | Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. |
| void | `fprop () const` | |
| void | `initializeParams (bool set_seed=true)` | |

Static Public Member Functions

| Type | Member |
|---|---|
| static string | `_classname_ ()` |
| static OptionList & | `_getOptionList_ ()` |
| static RemoteMethodMap & | `_getRemoteMethodMap_ ()` |
| static Object * | `_new_instance_for_typemap_ ()` |
| static bool | `_isa_ (const Object *o)` |
| static void | `_static_initialize_ ()` |
| static const PPath & | `declaringFile ()` |

Public Attributes

| Type | Member |
|---|---|
| int | `n_layers` |
| int | `n_outputs` |
| int | `default_n_units_per_hidden_layer` |
| TVec< int > | `n_units_per_layer` |
| real | `L1_regularizer` |
| real | `initial_learning_rate` |
| real | `learning_rate_decay` |
| real | `layerwise_learning_rate_adaptation` |
| bool | `normalize_per_unit` |
| bool | `normalize_percentage` |
| bool | `normalize_activations` |
| string | `output_cost` |
| bool | `add_connections` |
| bool | `remove_connections` |
| real | `initial_sparsity` |
| int | `connections_adaptation_frequency` |
| real | `init_scale` |

Static Public Attributes

| Type | Member |
|---|---|
| static StaticInitializer | `_static_initializer_` |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| static void | `declareOptions (OptionList &ol)` | Declares this class' options. |

Protected Attributes

| Type | Member |
|---|---|
| TVec< TVec< TVec< int > > > | `sources` |
| TVec< TVec< Vec > > | `weights` |
| TVec< Vec > | `biases` |
| Vec | `layerwise_lr_factor` |
| real | `training_time` |
| TVec< Vec > | `activations` |
| TVec< Vec > | `activations_gradients` |
| TVec< Mat > | `avg_weight_gradients` |
| Vec | `layerwise_gradient_norm_ma` |
| Vec | `layerwise_gradient_norm` |
| TVec< int > | `n_weights_of_layer` |
| real | `learning_rate` |

Private Types | |

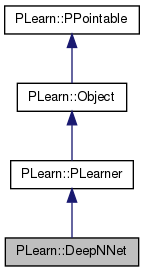

| typedef PLearner | inherited |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| void | `build_ ()` | This does the actual building. |

Definition at line 51 of file DeepNNet.h.

`typedef PLearner PLearn::DeepNNet::inherited` [private]

Reimplemented from PLearn::PLearner.

Definition at line 56 of file DeepNNet.h.

`PLearn::DeepNNet::DeepNNet ()`

Default constructor.

Definition at line 52 of file DeepNNet.cc.

```cpp
: training_time(0),
  n_layers(3),
  n_outputs(1),
  default_n_units_per_hidden_layer(10),
  L1_regularizer(1e-5),
  initial_learning_rate(1e-4),
  learning_rate_decay(1e-6),
  layerwise_learning_rate_adaptation(0),
  normalize_per_unit(0),
  normalize_percentage(0),
  normalize_activations(0),
  output_cost("mse"),
  add_connections(true),
  remove_connections(true),
  initial_sparsity(0.9),
  connections_adaptation_frequency(0),
  init_scale(1)
{ }
```

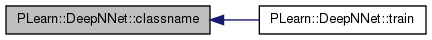

`string PLearn::DeepNNet::_classname_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 78 of file DeepNNet.cc.

`OptionList & PLearn::DeepNNet::_getOptionList_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 78 of file DeepNNet.cc.

`RemoteMethodMap & PLearn::DeepNNet::_getRemoteMethodMap_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 78 of file DeepNNet.cc.

`bool PLearn::DeepNNet::_isa_ (const Object * o)` [static]

Reimplemented from PLearn::PLearner.

Definition at line 78 of file DeepNNet.cc.

`Object * PLearn::DeepNNet::_new_instance_for_typemap_ ()` [static]

Reimplemented from PLearn::Object.

Definition at line 78 of file DeepNNet.cc.

`void PLearn::DeepNNet::_static_initialize_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 78 of file DeepNNet.cc.

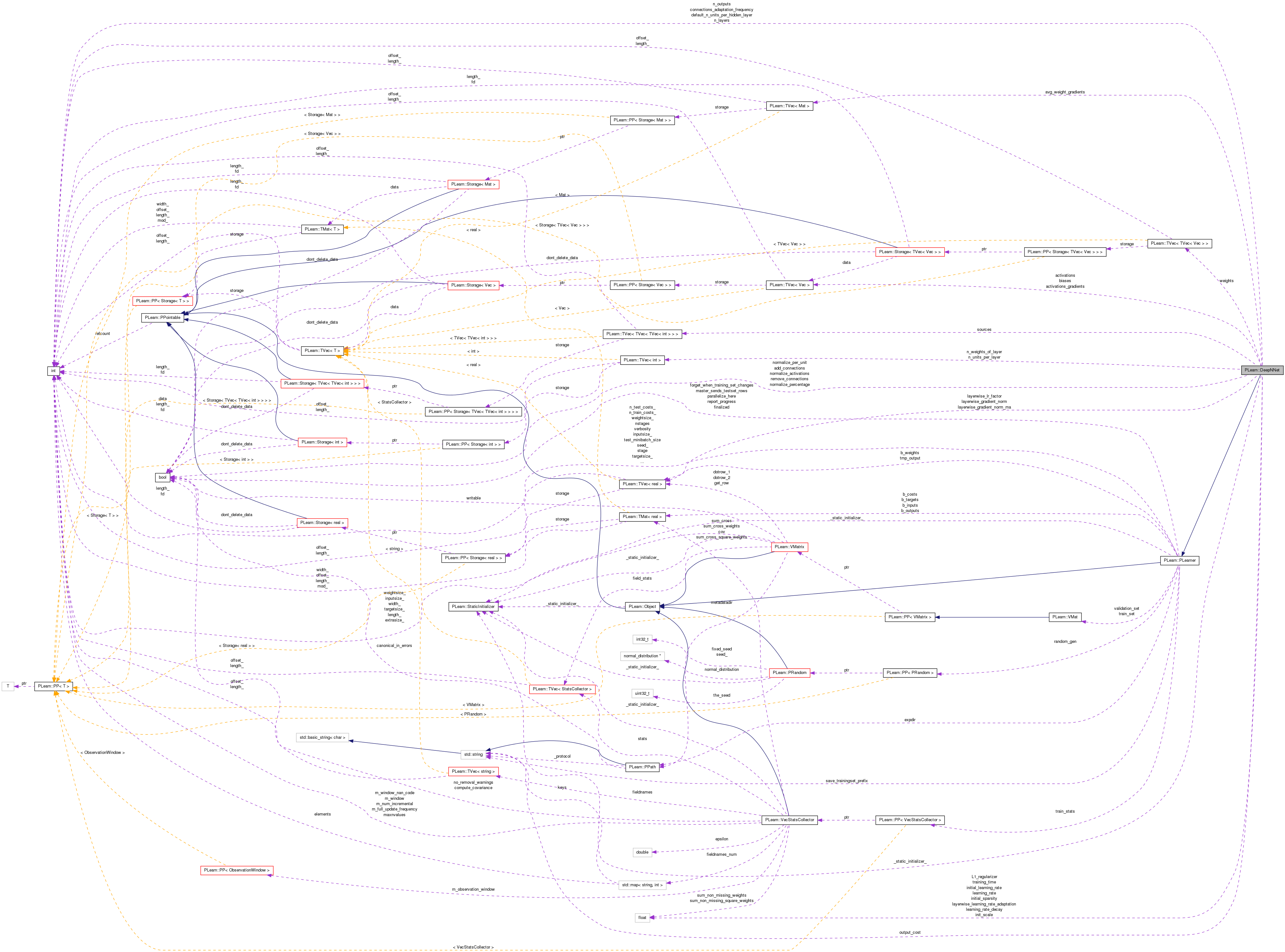

`void PLearn::DeepNNet::build ()` [virtual]

Simply calls inherited::build() then build_().

Reimplemented from PLearn::PLearner.

Definition at line 307 of file DeepNNet.cc.

References PLearn::PLearner::build(), and build_().

```cpp
{
    inherited::build();
    build_();
}
```

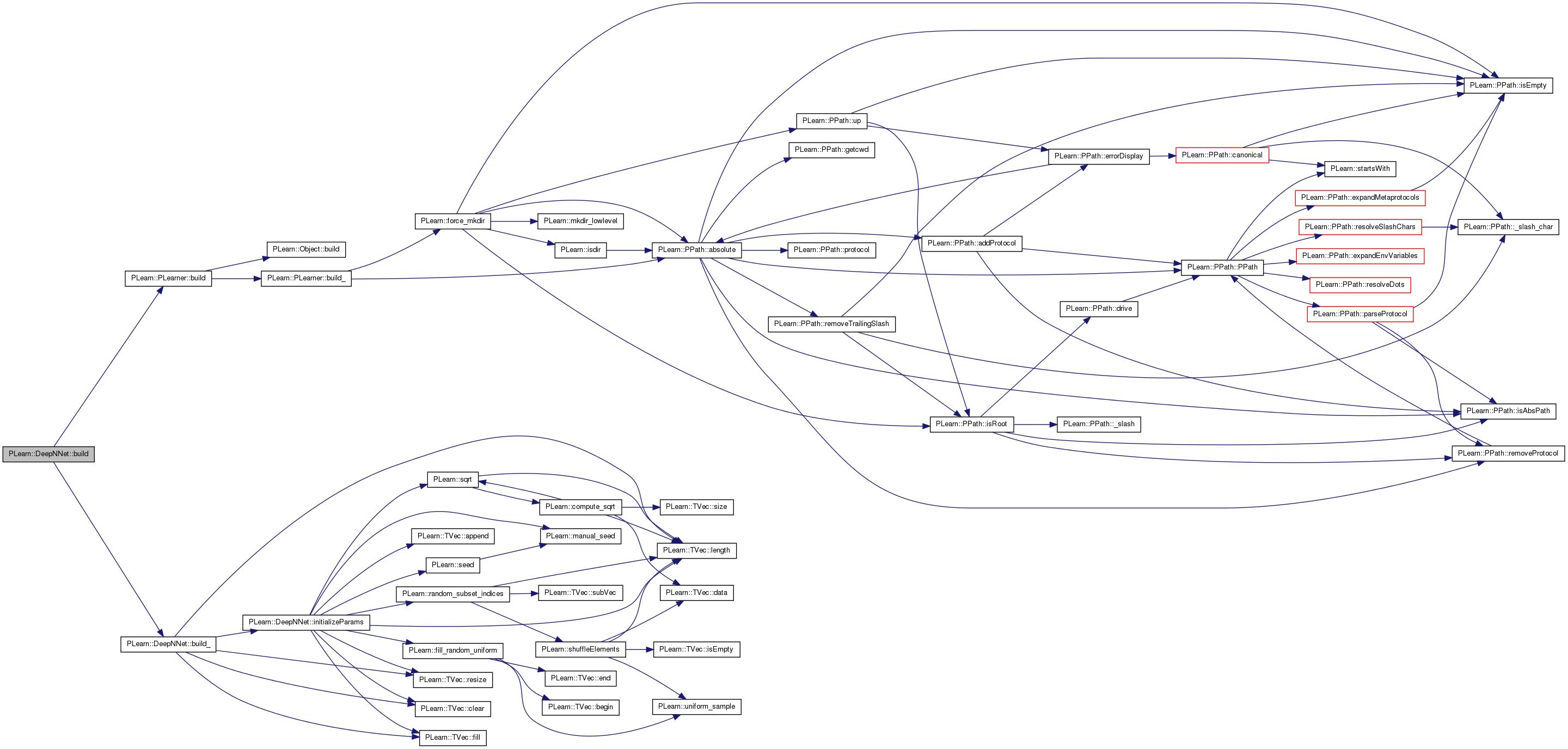

`void PLearn::DeepNNet::build_ ()` [private]

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 166 of file DeepNNet.cc.

References activations, activations_gradients, add_connections, avg_weight_gradients, biases, PLearn::TVec< T >::clear(), default_n_units_per_hidden_layer, PLearn::TVec< T >::fill(), i, initial_sparsity, initializeParams(), PLearn::PLearner::inputsize_, layerwise_gradient_norm, layerwise_gradient_norm_ma, layerwise_learning_rate_adaptation, layerwise_lr_factor, PLearn::TVec< T >::length(), n_layers, n_outputs, n_units_per_layer, n_weights_of_layer, PLearn::TVec< T >::resize(), sources, PLearn::PLearner::targetsize_, weights, and PLearn::PLearner::weightsize_.

Referenced by build().

```cpp
{
    // ### This method should do the real building of the object,
    // ### according to set 'options', in *any* situation.
    // ### Typical situations include:
    // ###  - Initial building of an object from a few user-specified options
    // ###  - Building of a "reloaded" object: i.e. from the complete set of all serialised options.
    // ###  - Updating or "re-building" of an object after a few "tuning" options have been modified.
    // ### You should assume that the parent class' build_() has already been called.

    // these would be -1 if a train_set has not been set already
    if (inputsize_>=0 && targetsize_>=0 && weightsize_>=0)
    {
        if (layerwise_learning_rate_adaptation>0)
        {
            layerwise_lr_factor.resize(n_layers);
            layerwise_gradient_norm_ma.resize(n_layers);
            layerwise_gradient_norm.resize(n_layers);
            n_weights_of_layer.resize(n_layers);
            layerwise_lr_factor.fill(1.0);
            layerwise_gradient_norm_ma.clear();
        }
        bool do_initialize = false;
        if (sources.length() != n_layers) // in case we are called after loading the object we don't need to do this:
        {
            if (n_units_per_layer.length()==0)
            {
                n_units_per_layer.resize(n_layers);
                for (int l=0;l<n_layers-1;l++)
                    n_units_per_layer[l] = default_n_units_per_hidden_layer;
                n_units_per_layer[n_layers-1] = n_outputs;
            }
            sources.resize(n_layers);
            weights.resize(n_layers);
            biases.resize(n_layers);
            for (int l=0;l<n_layers;l++)
            {
                sources[l].resize(n_units_per_layer[l]);
                weights[l].resize(n_units_per_layer[l]);
                biases[l].resize(n_units_per_layer[l]);
                int n_previous = (l==0)? int((1-initial_sparsity)*inputsize_) :
                                         int((1-initial_sparsity)*n_units_per_layer[l-1]);
                for (int i=0;i<n_units_per_layer[l];i++)
                {
                    sources[l][i].resize(n_previous);
                    weights[l][i].resize(n_previous);
                }
            }
            do_initialize = true;
        }
        if (add_connections)
        {
            // Done once n_units_per_layer is known. It has n_layers entries (output included,
            // input excluded), so layer l has n_units_per_layer[l] units fed by
            // inputsize_ inputs (l==0) or n_units_per_layer[l-1] inputs (l>0).
            avg_weight_gradients.resize(n_layers);
            for (int l=0;l<n_layers;l++)
            {
                int n_inputs_l = (l==0)? inputsize_ : n_units_per_layer[l-1];
                avg_weight_gradients[l].resize(n_units_per_layer[l], n_inputs_l);
            }
        }
        activations.resize(n_layers+1);
        activations[0].resize(inputsize_);
        activations_gradients.resize(n_layers);
        for (int l=0;l<n_layers;l++)
        {
            activations[l+1].resize(n_units_per_layer[l]);
            activations_gradients[l].resize(n_units_per_layer[l]);
        }
        if (do_initialize)
            initializeParams();
    }
}
```
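The layer-sizing rule in build_() can be sketched on plain `std::vector` as below. This is an illustration, not PLearn code: `default_layer_sizes` and `initial_fan_in` are hypothetical helper names, and the sketch assumes, as the option documentation states, that n_units_per_layer counts the output layer but not the input layer. The provisional fan-in only sizes the containers; when initial_sparsity > 0, initializeParams() resizes sources and weights again.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper: layer sizes used when n_units_per_layer is empty.
// Every hidden layer gets default_n_units; the last (output) layer gets n_outputs.
std::vector<int> default_layer_sizes(int n_layers, int default_n_units, int n_outputs) {
    std::vector<int> sizes(n_layers, default_n_units);
    if (n_layers > 0)
        sizes[n_layers - 1] = n_outputs;
    return sizes;
}

// Hypothetical helper: provisional fan-in per unit, int((1 - initial_sparsity) * n_previous),
// where n_previous is the input size for layer 0 and the previous layer's size otherwise.
std::vector<int> initial_fan_in(const std::vector<int>& sizes, int inputsize, double initial_sparsity) {
    std::vector<int> fan_in;
    for (std::size_t l = 0; l < sizes.size(); ++l) {
        int n_previous = (l == 0) ? inputsize : sizes[l - 1];
        fan_in.push_back(static_cast<int>((1.0 - initial_sparsity) * n_previous));
    }
    return fan_in;
}
```

With the constructor defaults (n_layers = 3, default_n_units_per_hidden_layer = 10, n_outputs = 1), `default_layer_sizes(3, 10, 1)` gives the sizes 10, 10, 1.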

`string PLearn::DeepNNet::classname () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 78 of file DeepNNet.cc.

Referenced by train().

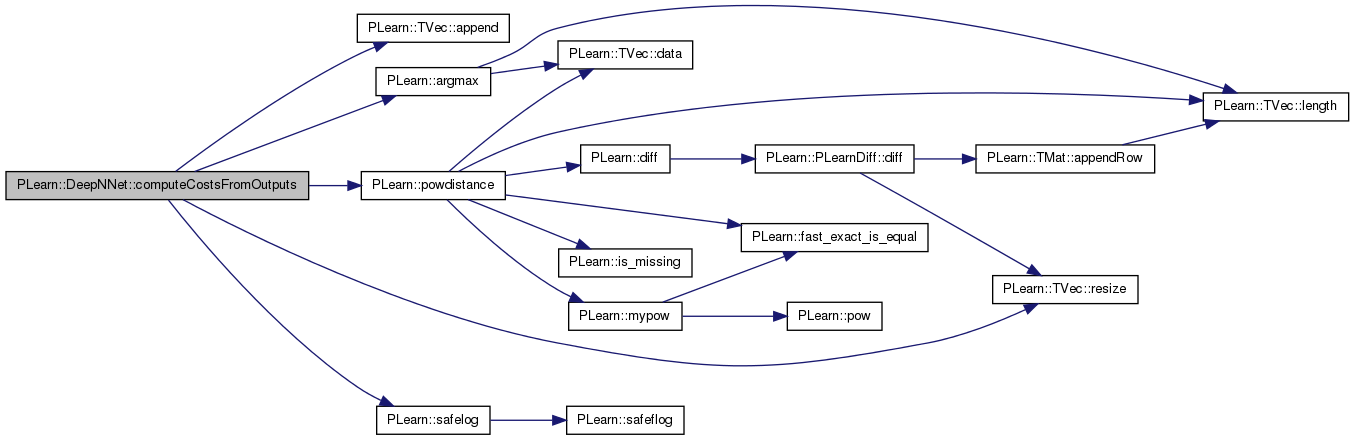

`void PLearn::DeepNNet::computeCostsFromOutputs (const Vec & input, const Vec & output, const Vec & target, Vec & costs) const` [virtual]

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 542 of file DeepNNet.cc.

References PLearn::TVec< T >::append(), PLearn::argmax(), PLearn::PLearner::nstages, output_cost, PLERROR, PLearn::powdistance(), PLearn::TVec< T >::resize(), PLearn::safelog(), and training_time.

```cpp
{
    costs.resize(0);
    if (output_cost == "mse")
    {
        costs.append(powdistance(output,target));
    }
    else if (output_cost == "NLL")
    {
        int target_class = int(target[0]);
        real p_target = output[target_class];
        costs.append(-safelog(p_target));
        int recognized_class = argmax(output);
        costs.append(recognized_class!=target_class);
    }
    else PLERROR("DeepNNet: unknown output_cost = %s, expected mse or NLL",output_cost.c_str());
    costs.append(real(nstages));
    costs.append(training_time);
}
```
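The cost vector built by computeCostsFromOutputs() can be mirrored on `std::vector<double>` as a sketch. Assumptions: powdistance() with its default arguments returns the sum of squared differences (so the "mse" entry is summed, not averaged, over outputs), and `safe_log` is a hypothetical stand-in for PLearn's safelog() that clamps its argument instead of returning -inf. The order matches getTestCostNames(): the cost(s), then nstages, then training_time.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <iterator>
#include <limits>
#include <stdexcept>
#include <string>
#include <vector>

// Sum of squared differences between output and target.
double squared_error(const std::vector<double>& output, const std::vector<double>& target) {
    double s = 0.0;
    for (std::size_t i = 0; i < output.size(); ++i) {
        double d = output[i] - target[i];
        s += d * d;
    }
    return s;
}

// Hypothetical stand-in for safelog(): clamp p so log(0) does not give -inf.
double safe_log(double p) {
    return std::log(std::max(p, std::numeric_limits<double>::min()));
}

// Returns {cost(s)..., nstages, training_time}.
std::vector<double> costs_from_outputs(const std::string& output_cost,
                                       const std::vector<double>& output,
                                       const std::vector<double>& target,
                                       double nstages, double training_time) {
    std::vector<double> costs;
    if (output_cost == "mse") {
        costs.push_back(squared_error(output, target));
    } else if (output_cost == "NLL") {
        // target[0] holds the class index; output holds softmax probabilities.
        int target_class = static_cast<int>(target[0]);
        costs.push_back(-safe_log(output[target_class]));
        int recognized = static_cast<int>(std::distance(
            output.begin(), std::max_element(output.begin(), output.end())));
        costs.push_back(recognized != target_class ? 1.0 : 0.0);  // class_error
    } else {
        throw std::invalid_argument("unknown output_cost: " + output_cost);
    }
    costs.push_back(nstages);
    costs.push_back(training_time);
    return costs;
}
```

For example, an "mse" learner with output (1, 2) and target (0, 0) reports a first cost of 5, not 2.5.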

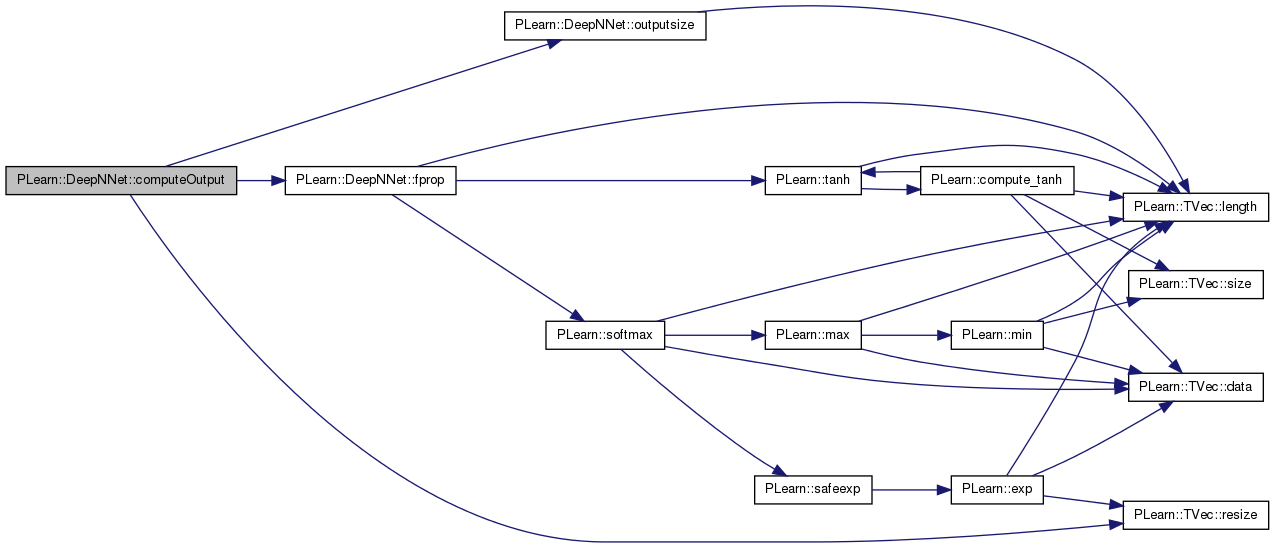

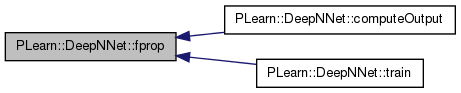

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 534 of file DeepNNet.cc.

References activations, fprop(), n_layers, outputsize(), and PLearn::TVec< T >::resize().

```cpp
{
    output.resize(outputsize());
    activations[0] << input;
    fprop();
    output << activations[n_layers];
}
```

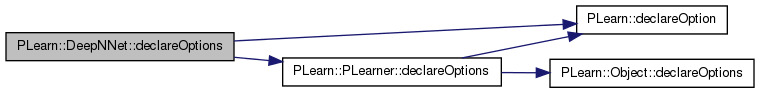

`void PLearn::DeepNNet::declareOptions (OptionList & ol)` [static, protected]

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 80 of file DeepNNet.cc.

References add_connections, biases, PLearn::OptionBase::buildoption, connections_adaptation_frequency, PLearn::declareOption(), PLearn::PLearner::declareOptions(), default_n_units_per_hidden_layer, init_scale, initial_learning_rate, initial_sparsity, L1_regularizer, layerwise_gradient_norm_ma, layerwise_learning_rate_adaptation, layerwise_lr_factor, learning_rate_decay, PLearn::OptionBase::learntoption, n_layers, n_outputs, n_units_per_layer, normalize_activations, normalize_per_unit, normalize_percentage, output_cost, remove_connections, sources, training_time, and weights.

```cpp
{
    declareOption(ol, "n_layers", &DeepNNet::n_layers, OptionBase::buildoption,
                  "Number of layers, including the output but not input layer");

    declareOption(ol, "n_outputs", &DeepNNet::n_outputs, OptionBase::buildoption,
                  "Number of units of output layer");

    declareOption(ol, "default_n_units_per_hidden_layer", &DeepNNet::default_n_units_per_hidden_layer,
                  OptionBase::buildoption, "If n_units_per_layer is not specified, it is given by this value for all hidden layers");

    declareOption(ol, "n_units_per_layer", &DeepNNet::n_units_per_layer, OptionBase::buildoption,
                  "Number of units per layer, including the output but not input layer.\n"
                  "The last (output) layer number of units is the output size.");

    declareOption(ol, "L1_regularizer", &DeepNNet::L1_regularizer, OptionBase::buildoption,
                  "amount of penalty on sum_{l,i,j} |weights[l][i][j]|");

    declareOption(ol, "initial_learning_rate", &DeepNNet::initial_learning_rate, OptionBase::buildoption,
                  "learning_rate = initial_learning_rate/(1 + iteration*learning_rate_decay)\n"
                  "where iteration is incremented after each example is presented");

    declareOption(ol, "learning_rate_decay", &DeepNNet::learning_rate_decay, OptionBase::buildoption,
                  "see the comment for initial_learning_rate.");

    declareOption(ol, "layerwise_learning_rate_adaptation", &DeepNNet::layerwise_learning_rate_adaptation,
                  OptionBase::buildoption, "if 0 use stochastic gradient as usual, otherwise correct the\n"
                  "learning rates layerwise by multiplying by the ratio of the average gradient norm\n"
                  "of the top layer to that of the i-th layer, to the power layerwise_learning_rate_adaptation.");

    declareOption(ol, "normalize_per_unit", &DeepNNet::normalize_per_unit,
                  OptionBase::buildoption, "Try balancing the norm of the weight gradient vectors per unit, rather than per weight\n");

    declareOption(ol, "normalize_percentage", &DeepNNet::normalize_percentage,
                  OptionBase::buildoption, "Try balancing the ratio of the squared gradient to the squared weight, rather than the norm of the gradient\n");

    declareOption(ol, "normalize_activations", &DeepNNet::normalize_activations,
                  OptionBase::buildoption, "Try balancing the norm of the gradient on the activations, per layer\n");

    declareOption(ol, "output_cost", &DeepNNet::output_cost, OptionBase::buildoption,
                  "String-valued option specifies output non-linearity and cost:\n"
                  "  'mse': mean squared error for regression with linear outputs\n"
                  "  'NLL': negative log-likelihood of P(class|input) with softmax outputs");

    declareOption(ol, "add_connections", &DeepNNet::add_connections, OptionBase::buildoption,
                  "whether to add connections when the potential connections' average "
                  "gradient becomes larger in magnitude than that of existing connections");

    declareOption(ol, "remove_connections", &DeepNNet::remove_connections, OptionBase::buildoption,
                  "whether to remove connections when their weight becomes too small");

    declareOption(ol, "initial_sparsity", &DeepNNet::initial_sparsity, OptionBase::buildoption,
                  "initial fraction of weights that are set to 0.");

    declareOption(ol, "connections_adaptation_frequency", &DeepNNet::connections_adaptation_frequency,
                  OptionBase::buildoption, "after how many examples do we try to adapt connections?\n"
                  "if set to 0, this is interpreted as the training set size.");

    declareOption(ol, "init_scale", &DeepNNet::init_scale, OptionBase::buildoption,
                  "scaling factor of random initial weights range.");

    declareOption(ol, "sources", &DeepNNet::sources, OptionBase::learntoption,
                  "The learned connectivity matrix at each layer\n"
                  "(sources[l][i] = vector of indices of inputs of neuron i at layer l)");

    declareOption(ol, "weights", &DeepNNet::weights, OptionBase::learntoption,
                  "The learned weights at each layer\n"
                  "(weights[l][i] = vector of weights of inputs of neuron i at layer l)");

    declareOption(ol, "biases", &DeepNNet::biases, OptionBase::learntoption,
                  "The learned biases at each layer\n"
                  "(biases[l] = vector of biases of neurons at layer l)");

    declareOption(ol, "layerwise_lr_factor", &DeepNNet::layerwise_lr_factor, OptionBase::learntoption,
                  "The multiplicative factor for the learning rate at each layer");

    declareOption(ol, "layerwise_gradient_norm_ma", &DeepNNet::layerwise_gradient_norm_ma, OptionBase::learntoption,
                  "The (moving) average of squared gradients at each layer");

    declareOption(ol, "training_time", &DeepNNet::training_time, OptionBase::learntoption,
                  "The time spent during training (in seconds)");

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
```
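The learning-rate options above combine as in train(): a global rate that decays with the number of examples seen, optionally multiplied per layer by a factor derived from moving averages of squared gradient norms. The sketch below restates those formulas on plain values; the function names are hypothetical. Because layerwise_gradient_norm_ma stores averages of *squared* norms, the exponent 0.5 * layerwise_learning_rate_adaptation is what turns the ratio into the "ratio of gradient norms to the power layerwise_learning_rate_adaptation" described in the option help.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// learning_rate = initial_learning_rate / (1 + t * learning_rate_decay),
// where t counts the examples presented so far (across epochs).
double decayed_learning_rate(double initial_learning_rate, double learning_rate_decay, long t) {
    return initial_learning_rate / (1.0 + static_cast<double>(t) * learning_rate_decay);
}

// Per-layer multiplier: (ma[top] / ma[l]) ^ (0.5 * adaptation).
// Assumes grad_norm_ma is non-empty and strictly positive.
std::vector<double> layerwise_lr_factors(const std::vector<double>& grad_norm_ma, double adaptation) {
    std::vector<double> factor(grad_norm_ma.size());
    const double top = grad_norm_ma.back();
    for (std::size_t l = 0; l < grad_norm_ma.size(); ++l)
        factor[l] = std::pow(top / grad_norm_ma[l], 0.5 * adaptation);
    return factor;
}

// Moving-average update used in train(), with the current learning rate as mixing weight.
double update_moving_average(double ma, double current, double learning_rate) {
    return (1.0 - learning_rate) * ma + learning_rate * current;
}
```

With the constructor defaults (initial_learning_rate = 1e-4, learning_rate_decay = 1e-6), the rate is halved after one million examples.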

`static const PPath& PLearn::DeepNNet::declaringFile ()` [inline, static]

Reimplemented from PLearn::PLearner.

Definition at line 149 of file DeepNNet.h.

`DeepNNet * PLearn::DeepNNet::deepCopy (CopiesMap & copies) const` [virtual]

Reimplemented from PLearn::PLearner.

Definition at line 78 of file DeepNNet.cc.

`void PLearn::DeepNNet::forget ()` [virtual]

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 338 of file DeepNNet.cc.

References initializeParams(), PLearn::PLearner::stage, PLearn::PLearner::train_set, and training_time.

Referenced by train().

```cpp
{
    if (train_set) initializeParams();
    stage = 0;
    training_time = 0;
}
```

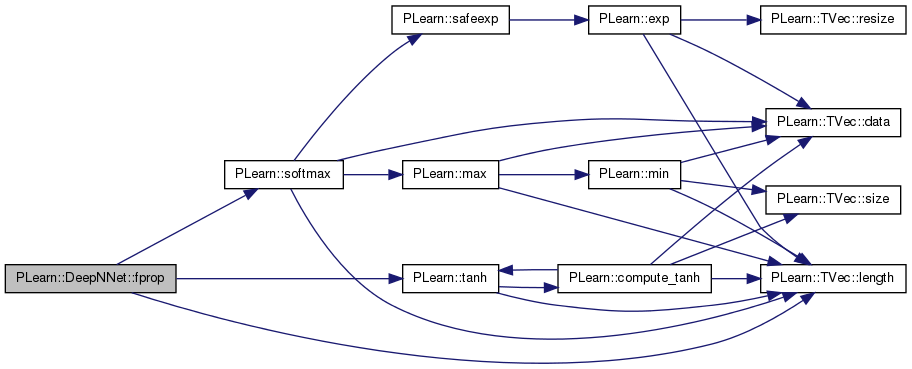

`void PLearn::DeepNNet::fprop () const`

Definition at line 345 of file DeepNNet.cc.

References activations, biases, i, PLearn::TVec< T >::length(), n_layers, n_units_per_layer, output_cost, PLearn::softmax(), sources, PLearn::tanh(), and weights.

Referenced by computeOutput(), and train().

```cpp
{
    for (int l=0;l<n_layers;l++)
    {
        int n_u = n_units_per_layer[l];
        Vec biases_l = biases[l];
        Vec previous_layer = activations[l];
        Vec next_layer = activations[l+1];
        for (int i=0;i<n_u;i++)
        {
            TVec<int> sources_i = sources[l][i];
            Vec weights_i = weights[l][i];
            int n_sources = sources_i.length();
            real s=biases_l[i];
            for (int k=0;k<n_sources;k++)
                s += previous_layer[sources_i[k]] * weights_i[k];
            if (l+1<n_layers)
                next_layer[i] = tanh(s);
            else next_layer[i] = s;
        }
    }
    if (output_cost == "NLL")
    {
        Vec output = activations[n_layers];
        softmax(output,output);
    }
}
```
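fprop() walks the sparse connectivity: each unit i of layer l sums only the inputs listed in sources[l][i], applies tanh on hidden layers, leaves the last layer linear, and applies a softmax to the output when output_cost is "NLL". The sketch below reproduces that on nested `std::vector`s; `SparseNet` and `sparse_forward` are hypothetical names, and subtracting the maximum before exponentiating is a standard stability choice made here, not something this page shows PLearn's softmax() doing.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical plain-C++ mirror of DeepNNet's sparse parameters.
struct SparseNet {
    std::vector<std::vector<std::vector<int>>> sources;     // sources[l][i][k]: input index
    std::vector<std::vector<std::vector<double>>> weights;  // weights[l][i][k]: matching weight
    std::vector<std::vector<double>> biases;                // biases[l][i]
};

// tanh on hidden layers, identity on the last layer, optional softmax on the output.
std::vector<double> sparse_forward(const SparseNet& net, std::vector<double> x, bool softmax_output) {
    const std::size_t n_layers = net.biases.size();
    for (std::size_t l = 0; l < n_layers; ++l) {
        std::vector<double> next(net.biases[l].size());
        for (std::size_t i = 0; i < next.size(); ++i) {
            double s = net.biases[l][i];
            const std::vector<int>& src = net.sources[l][i];
            for (std::size_t k = 0; k < src.size(); ++k)
                s += x[src[k]] * net.weights[l][i][k];
            next[i] = (l + 1 < n_layers) ? std::tanh(s) : s;  // linear output layer
        }
        x = next;
    }
    if (softmax_output && !x.empty()) {
        // Softmax with the maximum subtracted first to avoid overflow in exp().
        const double mx = *std::max_element(x.begin(), x.end());
        double z = 0.0;
        for (double& v : x) { v = std::exp(v - mx); z += v; }
        for (double& v : x) v /= z;
    }
    return x;
}
```

A unit whose sources list is {0, 2} ignores input 1 entirely, which is how the sparse representation saves work compared with a dense weight matrix.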

`OptionList & PLearn::DeepNNet::getOptionList () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 78 of file DeepNNet.cc.

`OptionMap & PLearn::DeepNNet::getOptionMap () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 78 of file DeepNNet.cc.

`RemoteMethodMap & PLearn::DeepNNet::getRemoteMethodMap () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 78 of file DeepNNet.cc.

`TVec< string > PLearn::DeepNNet::getTestCostNames () const` [virtual]

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 563 of file DeepNNet.cc.

References PLearn::TVec< T >::append(), and output_cost.

Referenced by getTrainCostNames().

```cpp
{
    TVec<string> names;
    if (output_cost == "mse")
    {
        names.append("mse");
    } else // "NLL"
    {
        names.append("NLL");
        names.append("class_error");
    }
    names.append("nstages");
    names.append("training_time");
    return names;
}
```

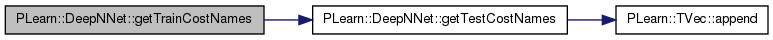

`TVec< string > PLearn::DeepNNet::getTrainCostNames () const` [virtual]

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 579 of file DeepNNet.cc.

References getTestCostNames().

```cpp
{
    return getTestCostNames();
}
```

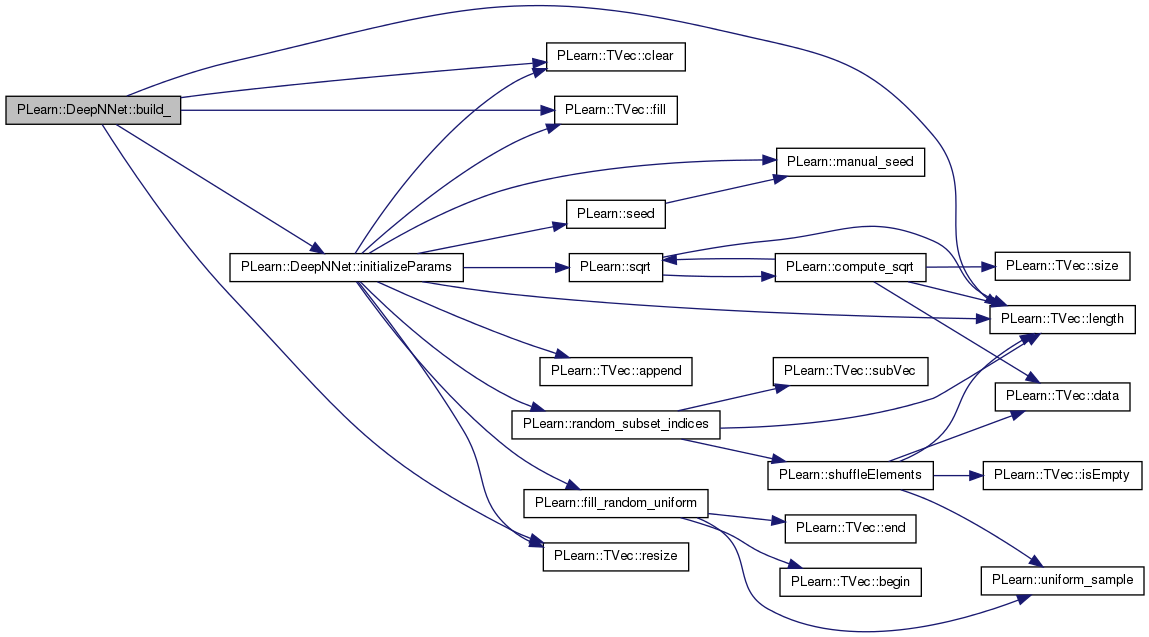

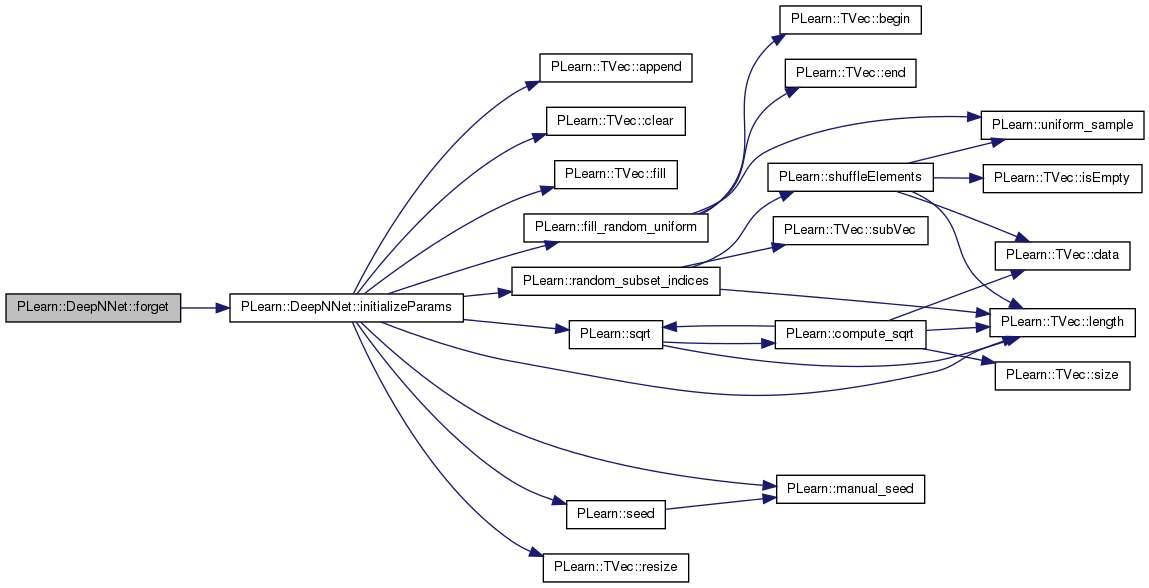

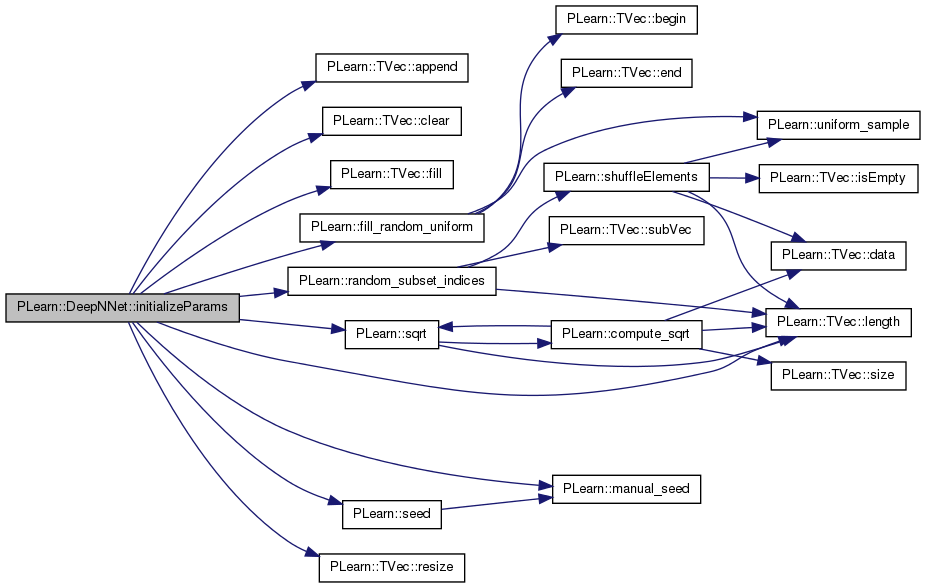

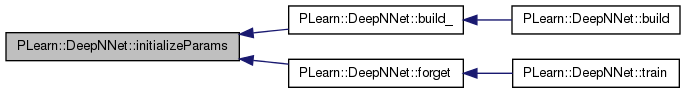

`void PLearn::DeepNNet::initializeParams (bool set_seed = true)`

Definition at line 239 of file DeepNNet.cc.

References PLearn::TVec< T >::append(), biases, PLearn::TVec< T >::clear(), PLearn::TVec< T >::fill(), PLearn::fill_random_uniform(), i, init_scale, initial_sparsity, PLearn::PLearner::inputsize_, j, layerwise_learning_rate_adaptation, PLearn::TVec< T >::length(), PLearn::manual_seed(), n_layers, n_units_per_layer, n_weights_of_layer, PLearn::random_subset_indices(), PLearn::TVec< T >::resize(), PLearn::seed(), PLearn::PLearner::seed_, sources, PLearn::sqrt(), and weights.

Referenced by build_(), and forget().

```cpp
{
    if (set_seed) {
        if (seed_>=0)
            manual_seed(seed_);
        else
            PLearn::seed();
    }
    for (int l=0;l<n_layers;l++)
    {
        biases[l].clear();
        int n_previous = (l==0)?inputsize_:n_units_per_layer[l-1];
        int n_next = n_units_per_layer[l];
        if (initial_sparsity>0)
        {
            // first randomly assign some incoming connections to each unit of the next layer
            int n_in = 1+int(0.66 * (1-initial_sparsity) * n_previous);
            if (n_in>n_previous) n_in=n_previous;
            int n_out = 1+int(0.66 * (1-initial_sparsity) * n_next);
            if (n_out>n_next) n_out=n_next;
            for (int i=0;i<n_next;i++)
            {
                sources[l][i].resize(n_in);
                random_subset_indices(sources[l][i],n_previous);
            }
            // then randomly assign some outgoing connections from each unit of the previous layer
            TVec<int> dest(n_out);
            for (int j=0;j<n_previous;j++)
            {
                random_subset_indices(dest,n_next);
                for (int k=0;k<n_out;k++)
                    if (!sources[l][dest[k]].contains(j))
                        sources[l][dest[k]].append(j);
            }
            for (int i=0;i<n_next;i++)
            {
                int n_in = sources[l][i].length();
                real delta = init_scale/sqrt((real)n_in);
                weights[l][i].resize(n_in);
                if (n_layers==1)
                    weights[l][i].fill(0);
                else
                    fill_random_uniform(weights[l][i],-delta,delta);
            }
        }
        else // fully connected, mostly for debugging
        {
            // real delta = 1.0/sqrt((real)n_previous);
            real delta = init_scale/n_previous;
            for (int i=0;i<n_next;i++)
            {
                sources[l][i].resize(n_previous);
                weights[l][i].resize(n_previous);
                for (int j=0;j<n_previous;j++)
                    sources[l][i][j] = j;
                fill_random_uniform(weights[l][i],-delta,delta);
            }
        }
        if (layerwise_learning_rate_adaptation>0)
        {
            n_weights_of_layer[l]=0;
            for (int i=0;i<n_next;i++)
                n_weights_of_layer[l] += sources[l][i].length();
        }
    }
}
```
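The sparse branch of initializeParams() builds connectivity in two passes (random fan-in for every next-layer unit, then random fan-out for every previous-layer unit, skipping duplicates) and draws each unit's weights uniformly with a range scaled by 1/sqrt(fan-in). The sketch below reproduces that scheme with `<random>`; `random_subset`, `sparse_sources` and `init_weights` are hypothetical names, and `random_subset` is only an assumed equivalent of PLearn's random_subset_indices(), whose sampling procedure is not shown on this page. It omits the special case where PLearn sets weights to 0 when n_layers == 1.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Hypothetical stand-in for random_subset_indices(): k distinct indices from {0, ..., n-1}.
// Assumes 0 <= k <= n.
std::vector<int> random_subset(int k, int n, std::mt19937& rng) {
    std::vector<int> all(n);
    std::iota(all.begin(), all.end(), 0);
    std::shuffle(all.begin(), all.end(), rng);
    all.resize(k);
    return all;
}

// Sparse connectivity for one layer: returns sources[i] for each of n_next units.
std::vector<std::vector<int>> sparse_sources(int n_previous, int n_next,
                                             double initial_sparsity, std::mt19937& rng) {
    const int n_in = std::min(n_previous, 1 + int(0.66 * (1 - initial_sparsity) * n_previous));
    const int n_out = std::min(n_next, 1 + int(0.66 * (1 - initial_sparsity) * n_next));
    std::vector<std::vector<int>> sources(n_next);
    // 1) every unit of the next layer receives n_in random inputs
    for (int i = 0; i < n_next; ++i)
        sources[i] = random_subset(n_in, n_previous, rng);
    // 2) every unit of the previous layer feeds n_out random units, skipping duplicates
    for (int j = 0; j < n_previous; ++j)
        for (int dest : random_subset(n_out, n_next, rng))
            if (std::find(sources[dest].begin(), sources[dest].end(), j) == sources[dest].end())
                sources[dest].push_back(j);
    return sources;
}

// Uniform weights in [-delta, delta) with delta = init_scale / sqrt(n_in).
std::vector<double> init_weights(std::size_t n_in, double init_scale, std::mt19937& rng) {
    const double delta = init_scale / std::sqrt(static_cast<double>(n_in));
    std::uniform_real_distribution<double> uniform(-delta, delta);
    std::vector<double> w(n_in);
    for (double& x : w)
        x = uniform(rng);
    return w;
}
```

The second pass guarantees that every previous-layer unit feeds at least one next-layer unit, so no input is disconnected even when initial_sparsity is high.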

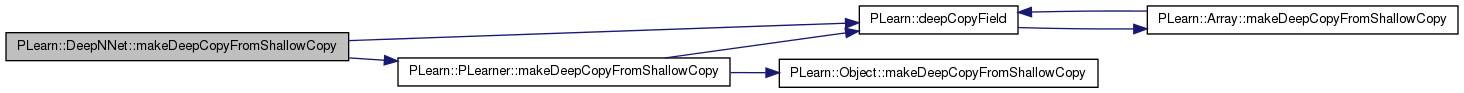

`void PLearn::DeepNNet::makeDeepCopyFromShallowCopy (CopiesMap & copies)` [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 314 of file DeepNNet.cc.

References activations, activations_gradients, avg_weight_gradients, biases, PLearn::deepCopyField(), layerwise_gradient_norm, layerwise_gradient_norm_ma, layerwise_lr_factor, PLearn::PLearner::makeDeepCopyFromShallowCopy(), n_units_per_layer, n_weights_of_layer, output_cost, sources, and weights.

```cpp
{
    inherited::makeDeepCopyFromShallowCopy(copies);
    deepCopyField(sources, copies);
    deepCopyField(weights, copies);
    deepCopyField(biases, copies);
    deepCopyField(layerwise_lr_factor, copies);
    deepCopyField(activations, copies);
    deepCopyField(activations_gradients, copies);
    deepCopyField(avg_weight_gradients, copies);
    deepCopyField(layerwise_gradient_norm_ma, copies);
    deepCopyField(layerwise_gradient_norm, copies);
    deepCopyField(n_weights_of_layer, copies);
    deepCopyField(n_units_per_layer, copies);
    deepCopyField(output_cost, copies);
}
```
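The pattern above (shallow copy first, then deepCopyField() on each field with a shared `copies` map) is the memoized deep-copy technique: the map records what has already been copied, so fields that alias the same storage still alias one new buffer afterwards. The sketch below illustrates the idea with `std::shared_ptr`; it assumes, without this page confirming the details, that PLearn's CopiesMap plays this memo role. `Buffer`, `CopyMemo`, `deep_copy_field`, `Model` and `deep_copy` are all hypothetical names.

```cpp
#include <map>
#include <memory>
#include <vector>

using Buffer = std::vector<double>;
// Memo from an original buffer to its copy (hypothetical analogue of a copies map).
using CopyMemo = std::map<const Buffer*, std::shared_ptr<Buffer>>;

std::shared_ptr<Buffer> deep_copy_field(const std::shared_ptr<Buffer>& field, CopyMemo& copies) {
    if (!field)
        return nullptr;
    auto it = copies.find(field.get());
    if (it != copies.end())
        return it->second;                       // already copied: reuse the same copy
    auto copy = std::make_shared<Buffer>(*field);
    copies[field.get()] = copy;
    return copy;
}

struct Model {
    std::shared_ptr<Buffer> weights;
    std::shared_ptr<Buffer> weights_view;  // may alias the same storage as weights
};

// Shallow copy first (buffers shared), then replace each field by its deep copy.
Model deep_copy(const Model& m) {
    Model c = m;
    CopyMemo copies;
    c.weights = deep_copy_field(m.weights, copies);
    c.weights_view = deep_copy_field(m.weights_view, copies);
    return c;
}
```

Without the memo, `weights_view` would get its own private copy and silently stop tracking `weights` in the copied object.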

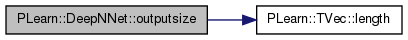

`int PLearn::DeepNNet::outputsize () const` [virtual]

Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 333 of file DeepNNet.cc.

References PLearn::TVec< T >::length(), and n_units_per_layer.

Referenced by computeOutput().

```cpp
{
    return n_units_per_layer[n_units_per_layer.length()-1];
}
```

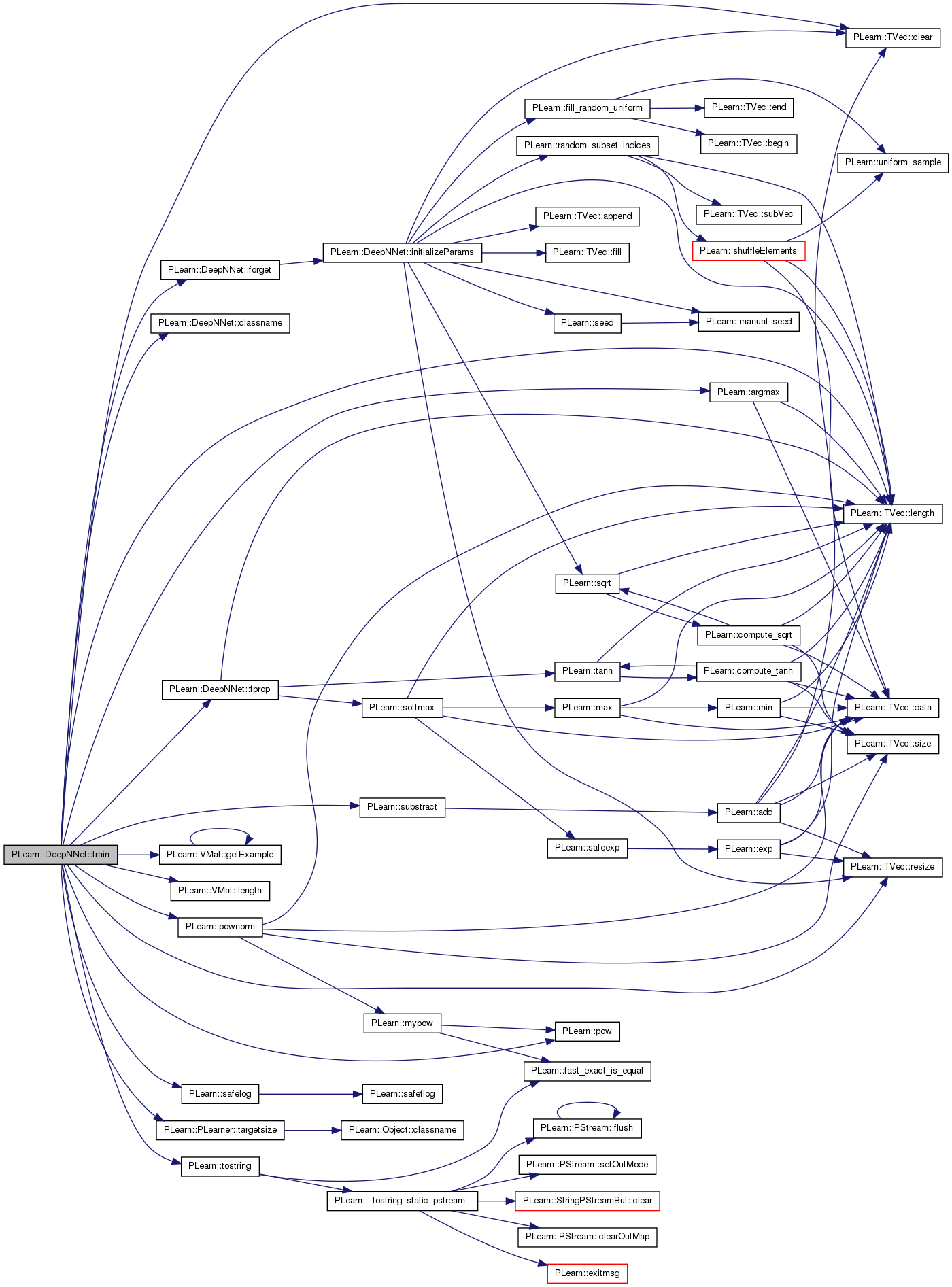

`void PLearn::DeepNNet::train ()` [virtual]

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 373 of file DeepNNet.cc.

References a, activations, activations_gradients, PLearn::argmax(), biases, classname(), PLearn::TVec< T >::clear(), forget(), fprop(), g, PLearn::VMat::getExample(), grad, i, initial_learning_rate, j, L1_regularizer, layerwise_gradient_norm, layerwise_gradient_norm_ma, layerwise_learning_rate_adaptation, layerwise_lr_factor, learning_rate, learning_rate_decay, PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::PLearner::n_examples, n_layers, n_outputs, n_units_per_layer, n_weights_of_layer, normalize_activations, normalize_per_unit, normalize_percentage, PLearn::PLearner::nstages, output_cost, PLERROR, PLearn::pow(), PLearn::pownorm(), PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), PLearn::safelog(), sources, PLearn::PLearner::stage, PLearn::substract(), PLearn::PLearner::targetsize(), PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_time, w, and weights.

```cpp
{
    // The role of the train method is to bring the learner up to stage==nstages,
    // updating train_stats with training costs measured on-line in the process.
    clock_t start_train = clock();
    static Vec target;
    static Vec train_costs;
    target.resize(targetsize());
    if (output_cost=="mse")
        train_costs.resize(1);
    else
        train_costs.resize(2);
    real example_weight;

    if(!train_stats)  // make a default stats collector, in case there's none
        train_stats = new VecStatsCollector();

    if(nstages<stage) // asking to revert to a previous stage!
        forget();     // reset the learner to stage=0

    int initial_stage = stage;
    PP<ProgressBar> pb;
    if (report_progress) {
        pb = new ProgressBar("Training " + classname() + " from stage " + tostring(stage) + " to " + tostring(nstages), nstages - stage);
    }

    int n_examples = train_set->length();
    int t=stage*n_examples;
    while(stage<nstages)
    {
        // clear statistics of previous epoch
        train_stats->forget();
        if (layerwise_learning_rate_adaptation>0)
            layerwise_gradient_norm.clear();

        // train for 1 stage, and update train_stats,
        for (int ex=0;ex<n_examples;ex++, t++)
        {
            // get the (input,target) pair
            train_set->getExample(ex, activations[0], target, example_weight);

            // fprop
            fprop();

            // compute cost
            if (output_cost == "mse")
            {
                substract(activations[n_layers],target,activations_gradients[n_layers-1]);
                train_costs[0] = example_weight*pownorm(activations_gradients[n_layers-1]);
                activations_gradients[n_layers-1] *= 2*example_weight; // 2 from the square
            }
            else if (output_cost == "NLL")
            {
                Vec output = activations[n_layers];
                int target_class = int(target[0]);
                real p_target = output[target_class];
                train_costs[0] = example_weight*(-safelog(p_target));
                int recognized_class = argmax(output);
                train_costs[1] = example_weight*(recognized_class!=target_class);
                activations_gradients[n_layers-1] << output;
                activations_gradients[n_layers-1][target_class] -= 1;
            }
            else PLERROR("DeepNNet: unknown output_cost = %s, expected mse or NLL",output_cost.c_str());

            // bprop + update + track avg gradient
            learning_rate = initial_learning_rate / (1 + t*learning_rate_decay);
            if (layerwise_learning_rate_adaptation>0 && normalize_activations)
            {
                int l=n_layers-1;
                Vec ag = activations_gradients[n_layers-1];
                real& gn = layerwise_gradient_norm[l];
                for (int i=0;i<n_outputs;i++)
                {
                    real g = ag[i];
                    gn += g*g;
                }
            }
            for (int l=n_layers-1;l>=0;l--)
            {
                Vec biases_l = biases[l];
                Vec next_layer = activations[l+1];
                Vec previous_layer = activations[l];
                int n_next = next_layer.length();
                int n_previous = previous_layer.length();
                Vec next_layer_gradient = activations_gradients[l];
                Vec previous_layer_gradient;
                if (l>0)
                {
                    previous_layer_gradient = activations_gradients[l-1];
                    previous_layer_gradient.clear();
                }
                real layer_learning_rate = learning_rate;
                if (layerwise_learning_rate_adaptation>0)
                    layer_learning_rate *= layerwise_lr_factor[l];
                for (int i=0;i<n_next;i++)
                {
                    TVec<int> sources_i = sources[l][i];
                    Vec weights_i = weights[l][i];
                    int n_sources = sources_i.length();
                    real g_i = next_layer_gradient[i];
                    biases_l[i] -= learning_rate * g_i;
                    for (int k=0;k<n_sources;k++)
                    {
                        real w = weights_i[k];
                        int j=sources_i[k];
                        real sign_w = (w>0)?1:-1;
                        real grad = g_i * previous_layer[j];
                        weights_i[k] -= layer_learning_rate * (grad + L1_regularizer*sign_w);
                        if (l>0) // THE IF COULD BE FACTORED OUT (more ugly but more efficient)
                            previous_layer_gradient[j] += g_i * w;
                        if (layerwise_learning_rate_adaptation>0 && !normalize_activations) // THE IF COULD BE FACTORED OUT (more ugly but more efficient)
                        {
                            if (normalize_percentage)
                                layerwise_gradient_norm[l] += grad*grad/(w*w);
                            else
                                layerwise_gradient_norm[l] += grad*grad;
                        }
                    }
                }
                if (l>0)
                    for (int j=0;j<n_previous;j++)
                    {
                        real a = previous_layer[j];
                        real& g = previous_layer_gradient[j];
                        g *= (1 - a*a);
                        if (layerwise_learning_rate_adaptation>0 && normalize_activations)
                            layerwise_gradient_norm[l-1] += g*g;
                    }
            }
            if (layerwise_learning_rate_adaptation>0)
            {
                for (int l=0;l<n_layers;l++)
                {
                    if (normalize_activations || normalize_per_unit)
                        layerwise_gradient_norm[l] /= n_units_per_layer[l]; // maybe we want larger weights, hence larger gradients where there are less terms in the sum, i.e. less weights
                    else // normalize per weight
                        layerwise_gradient_norm[l] /= n_weights_of_layer[l];
                    layerwise_gradient_norm_ma[l] = (1-learning_rate) * layerwise_gradient_norm_ma[l] + learning_rate * layerwise_gradient_norm[l];
                    layerwise_lr_factor[l] = pow(layerwise_gradient_norm_ma[n_layers-1]/layerwise_gradient_norm_ma[l],
                                                 0.5*layerwise_learning_rate_adaptation);
                }
            }
            train_stats->update(train_costs);
        }
        ++stage;
        train_stats->finalize(); // finalize statistics for this epoch
        if (report_progress)
            pb->update(stage - initial_stage);
    }
    training_time += real(clock() - start_train) / real(CLOCKS_PER_SEC);
}
```
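The inner loop of train() is plain stochastic gradient descent on a sparse layer: the output gradient is 2 * example_weight * (output - target) for "mse" and softmax(output) - onehot(target) for "NLL" (as listed, the NLL branch does not scale this gradient by example_weight), each weight takes a step on its data gradient plus the L1 subgradient L1_regularizer * sign(w), and the gradient flows back through tanh via 1 - a^2. The sketch below restates one layer's step on `std::vector`; all names are hypothetical. It keeps two rates because train() updates weights with layer_learning_rate but biases with the plain learning_rate.

```cpp
#include <cstddef>
#include <vector>

// One layer's SGD step. next_grad[i] = dC/ds_i for the pre-activation of unit i;
// prev_act = this layer's input activations. Returns dC/ds for the previous layer
// (train() skips this part for the first layer, whose inputs are the raw features).
std::vector<double> sgd_layer_step(const std::vector<std::vector<int>>& sources,
                                   std::vector<std::vector<double>>& weights,
                                   std::vector<double>& biases,
                                   const std::vector<double>& prev_act,
                                   const std::vector<double>& next_grad,
                                   double lr_w, double lr_b, double l1) {
    std::vector<double> prev_grad(prev_act.size(), 0.0);
    for (std::size_t i = 0; i < next_grad.size(); ++i) {
        const double g_i = next_grad[i];
        biases[i] -= lr_b * g_i;
        for (std::size_t k = 0; k < sources[i].size(); ++k) {
            const int j = sources[i][k];
            const double w = weights[i][k];              // pre-update weight, used for backprop
            const double sign_w = (w > 0) ? 1.0 : -1.0;  // same convention as train(): w == 0 gives -1
            weights[i][k] -= lr_w * (g_i * prev_act[j] + l1 * sign_w);
            prev_grad[j] += g_i * w;
        }
    }
    // Chain rule through tanh: d tanh(s)/ds = 1 - tanh(s)^2 = 1 - a^2.
    for (std::size_t j = 0; j < prev_grad.size(); ++j)
        prev_grad[j] *= 1.0 - prev_act[j] * prev_act[j];
    return prev_grad;
}

// "mse" output gradient: 2 * example_weight * (output - target).
std::vector<double> mse_output_gradient(const std::vector<double>& output,
                                        const std::vector<double>& target, double example_weight) {
    std::vector<double> g(output.size());
    for (std::size_t i = 0; i < output.size(); ++i)
        g[i] = 2.0 * example_weight * (output[i] - target[i]);  // 2 from the square
    return g;
}

// "NLL" output gradient with softmax outputs: probs - onehot(target_class).
std::vector<double> nll_output_gradient(const std::vector<double>& probs, int target_class) {
    std::vector<double> g = probs;
    g[target_class] -= 1.0;
    return g;
}
```

With a zero data gradient, the L1 term alone moves every weight toward zero by lr_w * l1 per example, which is what drives weights small enough for remove_connections to matter.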

`StaticInitializer PLearn::DeepNNet::_static_initializer_` [static]

Reimplemented from PLearn::PLearner.

Definition at line 149 of file DeepNNet.h.

`TVec<Vec> PLearn::DeepNNet::activations` [mutable, protected]

Definition at line 73 of file DeepNNet.h.

Referenced by build_(), computeOutput(), fprop(), makeDeepCopyFromShallowCopy(), and train().

`TVec<Vec> PLearn::DeepNNet::activations_gradients` [protected]

Definition at line 74 of file DeepNNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

`bool PLearn::DeepNNet::add_connections`

Definition at line 101 of file DeepNNet.h.

Referenced by build_(), and declareOptions().

`TVec<Mat> PLearn::DeepNNet::avg_weight_gradients` [protected]

Definition at line 75 of file DeepNNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

`TVec<Vec> PLearn::DeepNNet::biases` [protected]

Definition at line 68 of file DeepNNet.h.

Referenced by build_(), declareOptions(), fprop(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

`int PLearn::DeepNNet::connections_adaptation_frequency`

Definition at line 105 of file DeepNNet.h.

Referenced by declareOptions().

`int PLearn::DeepNNet::default_n_units_per_hidden_layer`

Definition at line 91 of file DeepNNet.h.

Referenced by build_(), and declareOptions().

`real PLearn::DeepNNet::init_scale`

Definition at line 106 of file DeepNNet.h.

Referenced by declareOptions(), and initializeParams().

`real PLearn::DeepNNet::initial_learning_rate`

Definition at line 94 of file DeepNNet.h.

Referenced by declareOptions(), and train().

`real PLearn::DeepNNet::initial_sparsity`

Definition at line 104 of file DeepNNet.h.

Referenced by build_(), declareOptions(), and initializeParams().

`real PLearn::DeepNNet::L1_regularizer`

Definition at line 93 of file DeepNNet.h.

Referenced by declareOptions(), and train().

`Vec PLearn::DeepNNet::layerwise_gradient_norm` [protected]

Definition at line 77 of file DeepNNet.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and train().

`Vec PLearn::DeepNNet::layerwise_gradient_norm_ma` [protected]

Definition at line 76 of file DeepNNet.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

`real PLearn::DeepNNet::layerwise_learning_rate_adaptation`

Definition at line 96 of file DeepNNet.h.

Referenced by build_(), declareOptions(), initializeParams(), and train().

`Vec PLearn::DeepNNet::layerwise_lr_factor` [protected]

Definition at line 69 of file DeepNNet.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

`real PLearn::DeepNNet::learning_rate` [protected]

Definition at line 79 of file DeepNNet.h.

Referenced by train().

`real PLearn::DeepNNet::learning_rate_decay`

Definition at line 95 of file DeepNNet.h.

Referenced by declareOptions(), and train().

`int PLearn::DeepNNet::n_layers`

Definition at line 89 of file DeepNNet.h.

Referenced by build_(), computeOutput(), declareOptions(), fprop(), initializeParams(), and train().

`int PLearn::DeepNNet::n_outputs`

Definition at line 90 of file DeepNNet.h.

Referenced by build_(), declareOptions(), and train().

`TVec<int> PLearn::DeepNNet::n_units_per_layer`

Definition at line 92 of file DeepNNet.h.

Referenced by build_(), declareOptions(), fprop(), initializeParams(), makeDeepCopyFromShallowCopy(), outputsize(), and train().

`TVec<int> PLearn::DeepNNet::n_weights_of_layer` [protected]

Definition at line 78 of file DeepNNet.h.

Referenced by build_(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

`bool PLearn::DeepNNet::normalize_activations`

Definition at line 99 of file DeepNNet.h.

Referenced by declareOptions(), and train().

`bool PLearn::DeepNNet::normalize_per_unit`

Definition at line 97 of file DeepNNet.h.

Referenced by declareOptions(), and train().

`bool PLearn::DeepNNet::normalize_percentage`

Definition at line 98 of file DeepNNet.h.

Referenced by declareOptions(), and train().

`string PLearn::DeepNNet::output_cost`

Definition at line 100 of file DeepNNet.h.

Referenced by computeCostsFromOutputs(), declareOptions(), fprop(), getTestCostNames(), makeDeepCopyFromShallowCopy(), and train().

`bool PLearn::DeepNNet::remove_connections`

Definition at line 103 of file DeepNNet.h.

Referenced by declareOptions().

`TVec<TVec<TVec<int> > > PLearn::DeepNNet::sources` [protected]

Definition at line 66 of file DeepNNet.h.

Referenced by build_(), declareOptions(), fprop(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

`real PLearn::DeepNNet::training_time` [protected]

Definition at line 70 of file DeepNNet.h.

Referenced by computeCostsFromOutputs(), declareOptions(), forget(), and train().

`TVec<TVec<Vec > > PLearn::DeepNNet::weights` [protected]

Definition at line 67 of file DeepNNet.h.

Referenced by build_(), declareOptions(), fprop(), initializeParams(), makeDeepCopyFromShallowCopy(), and train().

1.7.4
