
PLearn 0.1


#include <LinearRegressor.h>

Public Member Functions | |

| LinearRegressor () | |

| virtual void | build () |

| simply calls inherited::build() then build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual LinearRegressor * | deepCopy (CopiesMap &copies) const |

| virtual int | outputsize () const |

| returns the size of this learner's output, (which typically may depend on its inputsize(), targetsize() and set options) | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) And sets 'stage' back to 0 (this is the stage of a fresh learner!) This resizes the XtX and XtY matrices to signify that their content needs to be recomputed. | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

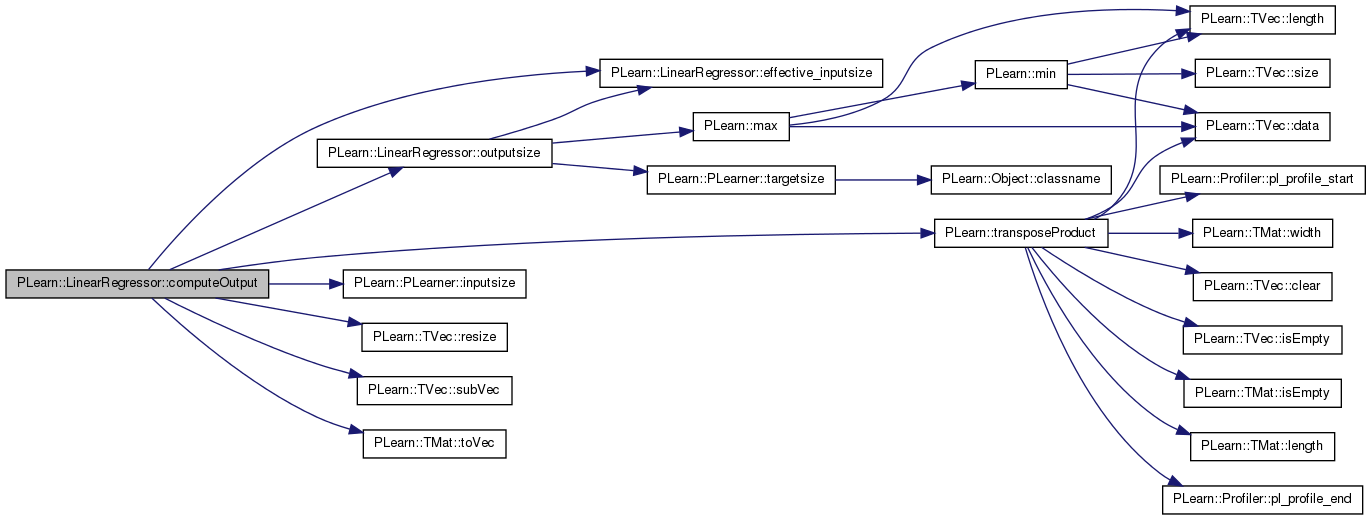

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input output = weights * (1, input) | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual bool | computeConfidenceFromOutput (const Vec &input, const Vec &output, real probability, TVec< pair< real, real > > &intervals) const |

| Compute confidence intervals based on the NORMAL distribution. | |

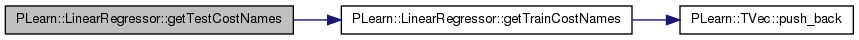

| virtual TVec< string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutpus (and thus the test method) | |

| virtual TVec< string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

Static Public Member Functions

| Return type | Member |
|---|---|
| static string | _classname_ () |
| static OptionList & | _getOptionList_ () |
| static RemoteMethodMap & | _getRemoteMethodMap_ () |
| static Object * | _new_instance_for_typemap_ () |
| static bool | _isa_ (const Object *o) |
| static void | _static_initialize_ () |
| static const PPath & | declaringFile () |

Public Attributes

| Type | Member | Description |
|---|---|---|
| bool | include_bias | Whether to include a bias term in the regression (true by default). |
| bool | cholesky | Whether to use Cholesky decomposition for computing the solution (true by default). |
| real | weight_decay | Factor on the squared-norm penalty of the parameters (zero by default). |
| bool | output_learned_weights | If true, the result of the computeOutput*() functions is not the result of the regression, but the learned regression parameters. |

Static Public Attributes

| Type | Member |
|---|---|
| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

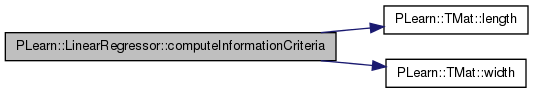

| void | computeInformationCriteria (real squared_error, int n) |

| Utility function to compute the AIC, BIC criteria from the squared error of the trained model. | |

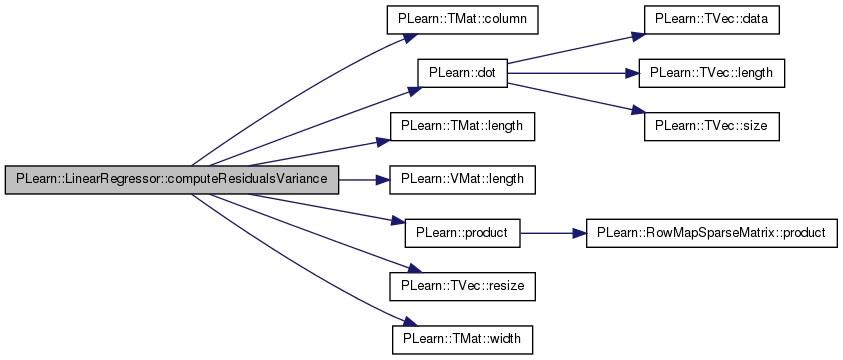

| void | computeResidualsVariance (const Vec &outputwise_sum_squared_Y) |

| Utility function to compute the variance of the residuals of the regression. | |

| int | effective_inputsize () const |

| Inputsize that takes into account optional bias term. | |

Static Protected Member Functions

| Return type | Member | Description |
|---|---|---|
| static void | declareOptions (OptionList &ol) | Declares this class' options. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| Mat | XtX | Can be re-used if train() is called several times on the same data set. |
| Mat | XtY | Can be re-used if train() is called several times on the same data set. |
| real | sum_squared_y | Can be re-used if train() is called several times on the same data set. |
| real | sum_gammas | Sum of the sample weights when the error is weighted; also kept for re-using the training set with different weight decays. |
| real | weights_norm | Sum of squares of the weights, excluding the bias row when include_bias is true. |
| Mat | weights | The weight matrix computed by the regressor: effective_inputsize() x outputsize, i.e. (inputsize+1) x outputsize when include_bias is true. |
| real | AIC | The Akaike Information Criterion computed at training time; saved as a learned option to allow outputting AIC as a test cost. |
| real | BIC | The Bayesian Information Criterion computed at training time; saved as a learned option to allow outputting BIC as a test cost. |
| Vec | resid_variance | Estimate of the residual variance for each output variable. |

Private Types

| Type | Member |
|---|---|
| typedef PLearner | inherited |

Private Member Functions

| Return type | Member | Description |
|---|---|---|
| void | build_ () | This does the actual building. |
| void | resetAccumulators () | |

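Private Attributes

| Type | Member | Description |
|---|---|---|
| Vec | extendedinput | Buffer of length effective_inputsize() (1+inputsize() when include_bias is true, in which case its first element is 1.0); used by computeOutput(). |
| Vec | input | View on extendedinput.subVec(1, inputsize()) when include_bias is true, on extendedinput itself otherwise. |
| Vec | train_costs | |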

Definition at line 48 of file LinearRegressor.h.

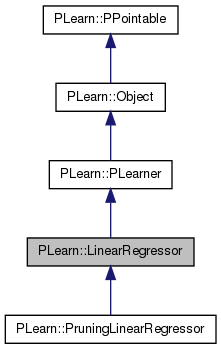

typedef PLearner PLearn::LinearRegressor::inherited [private] |

Reimplemented from PLearn::PLearner.

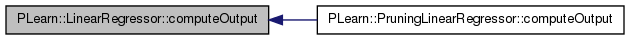

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 50 of file LinearRegressor.h.

| PLearn::LinearRegressor::LinearRegressor | ( | ) |

Definition at line 50 of file LinearRegressor.cc.

```cpp
    : sum_squared_y(MISSING_VALUE),
      sum_gammas(MISSING_VALUE),
      weights_norm(MISSING_VALUE),
      weights(),
      AIC(MISSING_VALUE),
      BIC(MISSING_VALUE),
      resid_variance(),
      include_bias(true),
      cholesky(true),
      weight_decay(0.0),
      output_learned_weights(false)
{ }
```

| string PLearn::LinearRegressor::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

| OptionList & PLearn::LinearRegressor::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

| RemoteMethodMap & PLearn::LinearRegressor::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

| bool PLearn::LinearRegressor::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

| Object * PLearn::LinearRegressor::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

| void PLearn::LinearRegressor::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

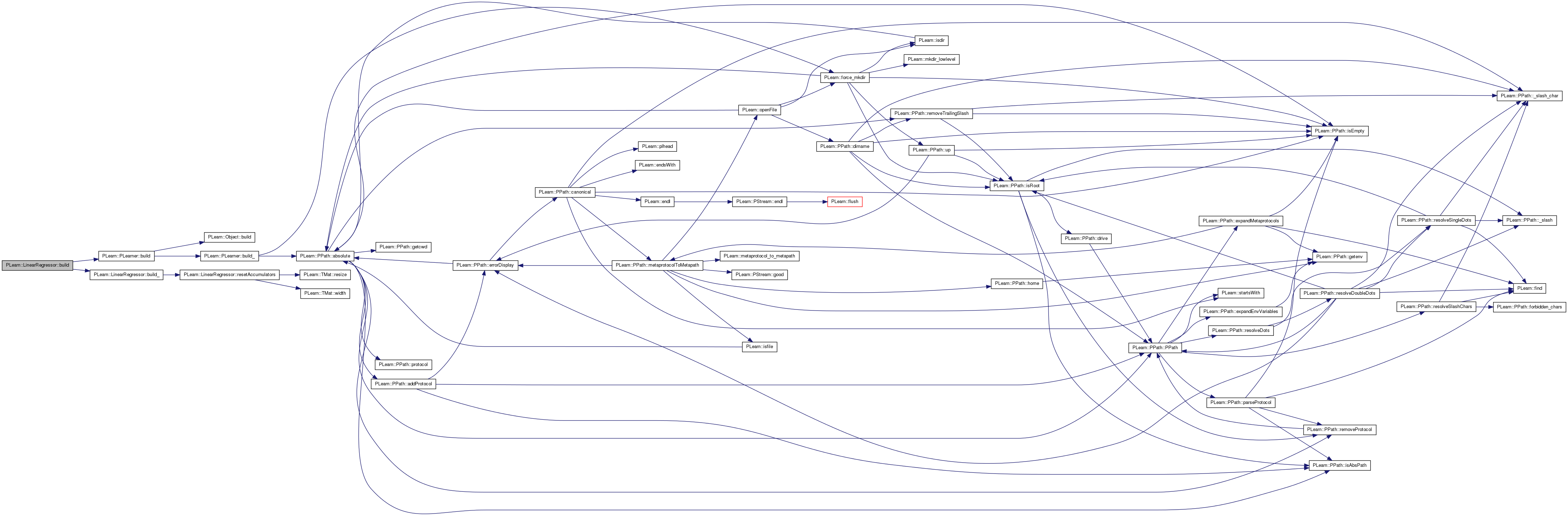

| void PLearn::LinearRegressor::build | ( | ) | [virtual] |

Simply calls inherited::build(), then build_().

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 168 of file LinearRegressor.cc.

References PLearn::PLearner::build(), and build_().

Referenced by PLearn::PruningLinearRegressor::build().

```cpp
{
    inherited::build();
    build_();
}
```

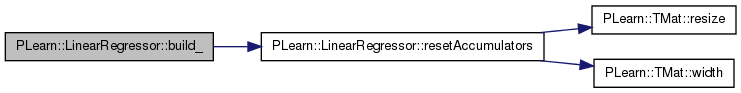

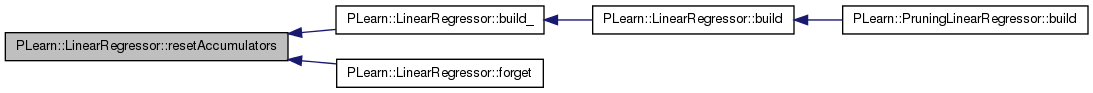

| void PLearn::LinearRegressor::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 160 of file LinearRegressor.cc.

References resetAccumulators().

Referenced by build().

```cpp
{
    // This resets various accumulators to speed up successive iterations of
    // training in the case the training set has not changed.
    resetAccumulators();
}
```

| string PLearn::LinearRegressor::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

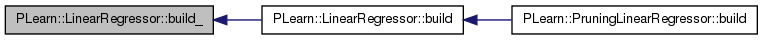

| bool PLearn::LinearRegressor::computeConfidenceFromOutput | ( | const Vec & input, const Vec & output, real probability, TVec< pair< real, real > > & intervals | ) | const [virtual] |

Compute confidence intervals based on the NORMAL distribution.

Reimplemented from PLearn::PLearner.

Definition at line 338 of file LinearRegressor.cc.

References PLearn::gauss_01_quantile(), i, n, output_learned_weights, PLERROR, resid_variance, PLearn::TVec< T >::size(), and PLearn::sqrt().

```cpp
{
    // The option 'output_learned_weights' is incompatible with confidence...
    if (output_learned_weights)
        PLERROR("LinearRegressor::computeConfidenceFromOutput: the option "
                "'output_learned_weights' is incompatible with confidence.");

    const int n = output.size();
    if (n != resid_variance.size())
        PLERROR("LinearRegressor::computeConfidenceFromOutput: output vector "
                "size (=%d) is incorrect or residuals variance (=%d) not yet computed",
                n, resid_variance.size());

    // two-tailed
    const real multiplier = gauss_01_quantile((1+probability)/2);
    intervals.resize(n);
    for (int i=0; i<n; ++i) {
        real half_width = multiplier * sqrt(resid_variance[i]);
        intervals[i] = std::make_pair(output[i] - half_width,
                                      output[i] + half_width);
    }
    return true;
}
```
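The interval rule above can be sketched in standard C++ to show where the multiplier comes from. This is a minimal sketch, not PLearn code: normalQuantile() is a hypothetical bisection-based stand-in for PLearn's gauss_01_quantile(), and std::vector replaces Vec and TVec. A two-tailed interval of coverage probability uses the normal quantile at (1+probability)/2, scaled by the residual standard deviation of each output.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Standard normal CDF, Phi(x) = 0.5 * erfc(-x / sqrt(2)).
double normalCdf(double x) { return 0.5 * std::erfc(-x / std::sqrt(2.0)); }

// Hypothetical stand-in for gauss_01_quantile(): inverts normalCdf by
// bisection on [-40, 40]. Simple and accurate, but not fast.
double normalQuantile(double p) {
    assert(p > 0.0 && p < 1.0);
    double lo = -40.0, hi = 40.0;
    for (int it = 0; it < 200; ++it) {
        const double mid = 0.5 * (lo + hi);
        if (normalCdf(mid) < p) lo = mid; else hi = mid;
    }
    return 0.5 * (lo + hi);
}

// Two-tailed interval per output:
// output[i] +/- z_{(1+probability)/2} * sqrt(resid_variance[i]).
std::vector<std::pair<double, double>> confidenceIntervals(
    const std::vector<double>& output, const std::vector<double>& resid_variance,
    double probability) {
    assert(output.size() == resid_variance.size());
    const double multiplier = normalQuantile((1.0 + probability) / 2.0);
    std::vector<std::pair<double, double>> intervals(output.size());
    for (std::size_t i = 0; i < output.size(); ++i) {
        const double half_width = multiplier * std::sqrt(resid_variance[i]);
        intervals[i] = {output[i] - half_width, output[i] + half_width};
    }
    return intervals;
}
```

For probability = 0.95 the multiplier is about 1.96, so an output of 10 with residual variance 4 gets roughly the interval [6.08, 13.92].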

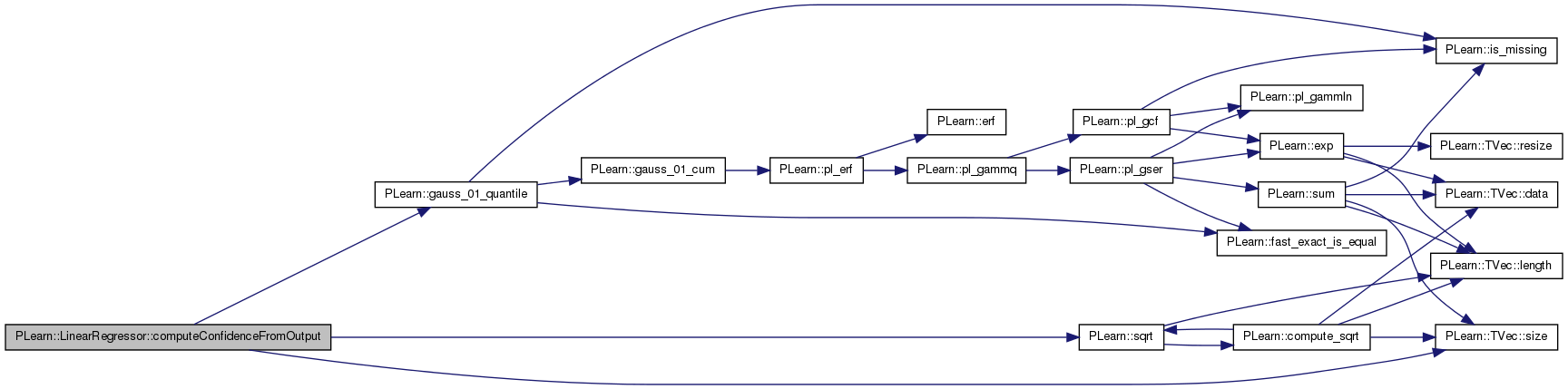

| void PLearn::LinearRegressor::computeCostsFromOutputs | ( | const Vec & input, const Vec & output, const Vec & target, Vec & costs | ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 318 of file LinearRegressor.cc.

References AIC, BIC, output_learned_weights, PLearn::powdistance(), PLearn::TVec< T >::resize(), weight_decay, and weights_norm.

```cpp
{
    // If 'output_learned_weights', there is no test cost
    if (output_learned_weights)
        return;

    // Compute the costs from *already* computed output.
    costs.resize(5);
    real squared_loss = powdistance(output,target);
    costs[0] = squared_loss + weight_decay*weights_norm;
    costs[1] = squared_loss;

    // The AIC/BIC/MABIC costs are computed at TRAINING-TIME and remain
    // constant thereafter. Simply append the already-computed costs.
    costs[2] = AIC;
    costs[3] = BIC;
    costs[4] = (AIC+BIC)/2;
}
```

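| void PLearn::LinearRegressor::computeInformationCriteria | ( | real squared_error, int n | ) | [protected] |

Utility function to compute the AIC and BIC criteria from the squared error of the trained model.

Stores the results in the AIC and BIC members of the object.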

Definition at line 385 of file LinearRegressor.cc.

References AIC, BIC, PLearn::TMat< T >::length(), n, pl_log, weights, and PLearn::TMat< T >::width().

Referenced by train().

```cpp
{
    // AIC = ln(squared_error/n) + 2*M/n
    // BIC = ln(squared_error/n) + M*ln(n)/n,
    // where M is the number of parameters
    // NOTE the change in semantics: squared_error is now a MEAN squared error
    real M = weights.length() * weights.width();
    real lnsqerr = pl_log(squared_error);
    AIC = lnsqerr + 2*M/n;
    BIC = lnsqerr + M*pl_log(real(n))/n;
}
```
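The two criteria can be written as a small standalone function. This is a sketch under the same conventions as the code above: the first argument is already a mean squared error, so its logarithm is taken directly, and M is the total number of entries in weights. informationCriteria() is a hypothetical name, and std::log stands in for pl_log.

```cpp
#include <cassert>
#include <cmath>
#include <utility>

// Returns {AIC, BIC}, with M = weight_rows * weight_cols parameters and
// n training examples; mean_squared_error must be positive for the log.
std::pair<double, double> informationCriteria(double mean_squared_error, int n,
                                              int weight_rows, int weight_cols) {
    assert(n > 0 && mean_squared_error > 0.0);
    const double M = static_cast<double>(weight_rows) * weight_cols;
    const double ln_err = std::log(mean_squared_error);
    const double aic = ln_err + 2.0 * M / n;
    const double bic = ln_err + M * std::log(static_cast<double>(n)) / n;
    return {aic, bic};
}
```

With a mean squared error of 1 (so ln_err = 0), 100 examples and a 2 x 2 weight matrix, AIC = 8/100 = 0.08 and BIC = 4 ln(100)/100, about 0.184: BIC penalizes parameters more heavily as soon as ln(n) > 2.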

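| void PLearn::LinearRegressor::computeOutput | ( | const Vec & actual_input, Vec & output | ) | const [virtual] |

Computes the output from the input: output = weights' * (1, input), where the leading 1 is prepended only when include_bias is true.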

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 297 of file LinearRegressor.cc.

References effective_inputsize(), extendedinput, include_bias, input, PLearn::PLearner::inputsize(), output_learned_weights, outputsize(), PLearn::TVec< T >::resize(), PLearn::TVec< T >::subVec(), PLearn::TMat< T >::toVec(), PLearn::transposeProduct(), and weights.

Referenced by PLearn::PruningLinearRegressor::computeOutput().

```cpp
{
    // If 'output_learned_weights', don't compute the linear regression at
    // all, but instead flatten the weights matrix and output it
    if (output_learned_weights) {
        output << weights.toVec();
        return;
    }

    // Compute the output from the input
    extendedinput.resize(effective_inputsize());
    input = extendedinput;
    if (include_bias) {
        input = extendedinput.subVec(1,inputsize());
        extendedinput[0] = 1.0;
    }
    input << actual_input;
    output.resize(outputsize());
    transposeProduct(output,weights,extendedinput);
}
```
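The same computation can be sketched with standard containers to make the shapes explicit: weights has one row per extended input (effective_inputsize() rows) and one column per output, so the output is the transposed product weights' * extendedinput. linearOutput() and the Matrix alias are hypothetical; this is an illustration, not the PLearn implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical row-major matrix: weights[row][output_column].
using Matrix = std::vector<std::vector<double>>;

// output[c] = sum_r weights[r][c] * extended[r], where extended is
// (1, input) when include_bias is true and input itself otherwise.
std::vector<double> linearOutput(const Matrix& weights, const std::vector<double>& input,
                                 bool include_bias) {
    std::vector<double> extended;
    if (include_bias) extended.push_back(1.0);  // constant regressor for the bias row
    extended.insert(extended.end(), input.begin(), input.end());
    assert(weights.size() == extended.size());
    const std::size_t outputsize = weights.empty() ? 0 : weights[0].size();
    std::vector<double> output(outputsize, 0.0);
    for (std::size_t r = 0; r < extended.size(); ++r)
        for (std::size_t c = 0; c < outputsize; ++c)
            output[c] += weights[r][c] * extended[r];
    return output;
}
```

With include_bias true, the first row of weights holds the intercept of each output, which is why it is multiplied by the constant 1.0.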

| void PLearn::LinearRegressor::computeResidualsVariance | ( | const Vec & | outputwise_sum_squared_Y | ) | [protected] |

Utility function to compute the variance of the residuals of the regression.

Definition at line 398 of file LinearRegressor.cc.

References b, PLearn::TMat< T >::column(), PLearn::dot(), i, PLearn::TMat< T >::length(), PLearn::VMat::length(), N, PLearn::product(), resid_variance, PLearn::TVec< T >::resize(), PLearn::PLearner::train_set, weights, PLearn::TMat< T >::width(), and XtX.

Referenced by train().

```cpp
{
    // The following formula (for the unweighted case) is used:
    //
    //     e'e = y'y - b'X'Xb
    //
    // where e is the residuals of the regression (for a single output), y
    // is a column of targets (for a single output), b is the weights
    // vector, and X is the matrix of regressors. From this point, use the
    // fact that an estimator of sigma^2 (the residual variance) is given by
    //
    //     sigma_squared = e'e / (N-K),
    //
    // where N is the size of the training set and K is the extended input
    // size (i.e. the length of the b vector).
    const int ninputs  = weights.length();
    const int ntargets = weights.width();
    const int N = train_set.length();
    Vec b(ninputs);
    Vec XtXb(ninputs);
    resid_variance.resize(ntargets);
    for (int i=0; i<ntargets; ++i) {
        b << weights.column(i);
        product(XtXb, XtX, b);
        resid_variance[i] =
            (outputwise_sum_squared_Y[i] - dot(b,XtXb)) / (N-ninputs);
    }
}
```

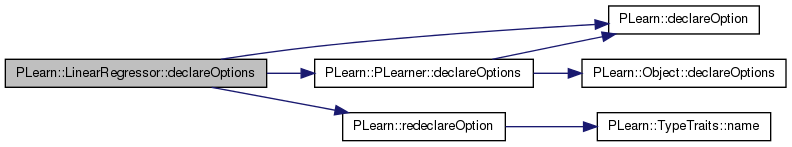

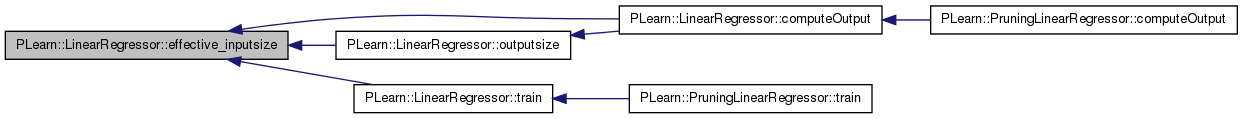

| void PLearn::LinearRegressor::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 94 of file LinearRegressor.cc.

References AIC, BIC, PLearn::OptionBase::buildoption, cholesky, PLearn::declareOption(), PLearn::PLearner::declareOptions(), include_bias, PLearn::OptionBase::learntoption, PLearn::OptionBase::nosave, output_learned_weights, PLearn::redeclareOption(), resid_variance, PLearn::PLearner::seed_, weight_decay, and weights.

Referenced by PLearn::PruningLinearRegressor::declareOptions().

```cpp
{
    // ### Declare all of this object's options here
    // ### For the "flags" of each option, you should typically specify
    // ### one of OptionBase::buildoption, OptionBase::learntoption or
    // ### OptionBase::tuningoption. Another possible flag to be combined with
    // ### is OptionBase::nosave

    //##### Build Options ####################################################

    declareOption(ol, "include_bias", &LinearRegressor::include_bias,
                  OptionBase::buildoption,
                  "Whether to include a bias term in the regression (true by default)");

    declareOption(ol, "cholesky", &LinearRegressor::cholesky,
                  OptionBase::buildoption,
                  "Whether to use the Cholesky decomposition or not, "
                  "when solving the linear system. Default=1 (true)");

    declareOption(ol, "weight_decay", &LinearRegressor::weight_decay,
                  OptionBase::buildoption,
                  "The weight decay is the factor that multiplies the "
                  "squared norm of the parameters in the loss function");

    declareOption(ol, "output_learned_weights",
                  &LinearRegressor::output_learned_weights,
                  OptionBase::buildoption,
                  "If true, the result of computeOutput*() functions is not the\n"
                  "result of the regression, but the learned regression parameters.\n"
                  "(i.e. the matrix 'weights'). The matrix is flattened by rows.\n"
                  "NOTE by Nicolas Chapados: this option is a bit of a hack and might\n"
                  "be removed in the future. Let me know if you come to rely on it.");

    //##### Learnt Options ###################################################

    declareOption(ol, "weights", &LinearRegressor::weights,
                  OptionBase::learntoption,
                  "The weight matrix, which are the parameters computed by "
                  "training the regressor.\n");

    declareOption(ol, "AIC", &LinearRegressor::AIC,
                  OptionBase::learntoption,
                  "The Akaike Information Criterion computed at training time;\n"
                  "Saved as a learned option to allow outputting AIC as a test cost.");

    declareOption(ol, "BIC", &LinearRegressor::BIC,
                  OptionBase::learntoption,
                  "The Bayesian Information Criterion computed at training time;\n"
                  "Saved as a learned option to allow outputting BIC as a test cost.");

    declareOption(ol, "resid_variance", &LinearRegressor::resid_variance,
                  OptionBase::learntoption,
                  "Estimate of the residual variance for each output variable\n"
                  "Saved as a learned option to allow outputting confidence intervals\n"
                  "when model is reloaded and used in test mode.\n");

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);

    // Unused options.
    redeclareOption(ol, "seed", &LinearRegressor::seed_, OptionBase::nosave,
                    "The random seed is not used in a linear regressor.");
}
```

| static const PPath& PLearn::LinearRegressor::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 145 of file LinearRegressor.h.


| LinearRegressor * PLearn::LinearRegressor::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

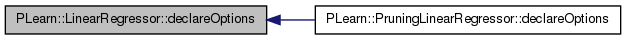

| int PLearn::LinearRegressor::effective_inputsize | ( | ) | const [inline, protected] |

Inputsize that takes into account optional bias term.

Definition at line 205 of file LinearRegressor.h.

Referenced by computeOutput(), outputsize(), and train().

```cpp
{ return inputsize() + (include_bias? 1 : 0); }
```

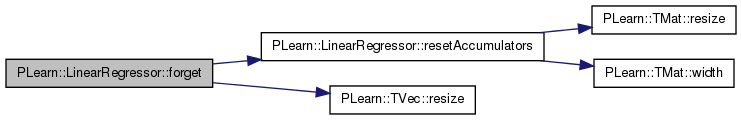

| void PLearn::LinearRegressor::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner). For LinearRegressor, this resizes the XtX and XtY matrices to zero rows, to signify that their content needs to be recomputed, and empties resid_variance.

When train() is called repeatedly without an intervening call to forget(), it is assumed that the training set has not changed, so XtX and XtY are not recomputed, avoiding a possibly lengthy pass through the data. This is particularly useful when doing hyper-parameter optimization of weight_decay. Note that build() also calls resetAccumulators() (through build_()), so rebuilding the learner forces the same recomputation.

Reimplemented from PLearn::PLearner.

Definition at line 217 of file LinearRegressor.cc.

References resetAccumulators(), resid_variance, and PLearn::TVec< T >::resize().

```cpp
{
    resetAccumulators();
    resid_variance.resize(0);
}
```
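The saving that forget() and train() preserve can be sketched outside PLearn: X'X and X'Y are accumulated in one pass over the data, and each new weight decay only re-solves a small linear system, here with a Cholesky factorization as the cholesky option suggests. This is a minimal single-output sketch, not PLearn's linearRegression(); NormalEquations, accumulate() and solveRidge() are hypothetical names, and the assumption that the bias row is left unpenalized (the skip parameter) is our reading of the include_bias?1:0 argument passed in train(), not something this page confirms.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Hypothetical cache playing the role of XtX and XtY for one output.
struct NormalEquations {
    Matrix xtx;
    std::vector<double> xty;
};

// One pass over the data; a constant 1.0 is prepended when include_bias is true.
NormalEquations accumulate(const Matrix& inputs, const std::vector<double>& targets,
                           bool include_bias) {
    const std::size_t d = inputs.at(0).size() + (include_bias ? 1 : 0);
    NormalEquations ne{Matrix(d, std::vector<double>(d, 0.0)), std::vector<double>(d, 0.0)};
    std::vector<double> row(d);
    for (std::size_t n = 0; n < inputs.size(); ++n) {
        std::size_t k = 0;
        if (include_bias) row[k++] = 1.0;
        for (double v : inputs[n]) row[k++] = v;
        for (std::size_t i = 0; i < d; ++i) {
            ne.xty[i] += row[i] * targets[n];
            for (std::size_t j = 0; j < d; ++j) ne.xtx[i][j] += row[i] * row[j];
        }
    }
    return ne;
}

// Solves (X'X + lambda*D) w = X'y, where D is the identity except that its
// first `skip` diagonal entries are 0 (assumed: bias not penalized).
std::vector<double> solveRidge(const NormalEquations& ne, double lambda, std::size_t skip) {
    const std::size_t d = ne.xty.size();
    Matrix a = ne.xtx;  // copy, so the cached X'X is reusable for the next lambda
    for (std::size_t i = skip; i < d; ++i) a[i][i] += lambda;
    // In-place Cholesky factorization a = L L' (L stored in the lower triangle).
    for (std::size_t j = 0; j < d; ++j) {
        double s = a[j][j];
        for (std::size_t k = 0; k < j; ++k) s -= a[j][k] * a[j][k];
        assert(s > 0.0 && "matrix must be positive definite");
        a[j][j] = std::sqrt(s);
        for (std::size_t i = j + 1; i < d; ++i) {
            double t = a[i][j];
            for (std::size_t k = 0; k < j; ++k) t -= a[i][k] * a[j][k];
            a[i][j] = t / a[j][j];
        }
    }
    // Forward substitution L z = X'y, then back substitution L' w = z.
    std::vector<double> z(d), w(d);
    for (std::size_t i = 0; i < d; ++i) {
        double t = ne.xty[i];
        for (std::size_t k = 0; k < i; ++k) t -= a[i][k] * z[k];
        z[i] = t / a[i][i];
    }
    for (std::size_t i = d; i-- > 0;) {
        double t = z[i];
        for (std::size_t k = i + 1; k < d; ++k) t -= a[k][i] * w[k];
        w[i] = t / a[i][i];
    }
    return w;
}
```

For data on the line y = 2 + 3x, accumulate() is called once; solveRidge(ne, 0.0, 1) recovers (2, 3), and solveRidge(ne, 1.0, 1) shrinks the slope while leaving the intercept unpenalized. Each new weight decay costs one d x d factorization instead of another pass over the N training rows.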

| OptionList & PLearn::LinearRegressor::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

| OptionMap & PLearn::LinearRegressor::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

| RemoteMethodMap & PLearn::LinearRegressor::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 92 of file LinearRegressor.cc.

| TVec< string > PLearn::LinearRegressor::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 363 of file LinearRegressor.cc.

References getTrainCostNames(), and output_learned_weights.

```cpp
{
    // If 'output_learned_weights', there is no test cost
    if (output_learned_weights)
        return TVec<string>();
    else
        return getTrainCostNames();
}
```

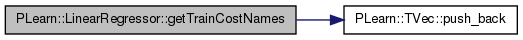

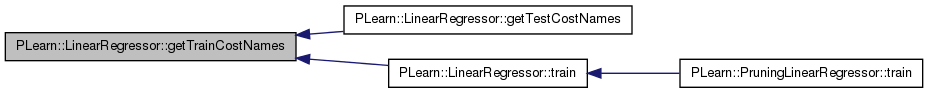

| TVec< string > PLearn::LinearRegressor::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 372 of file LinearRegressor.cc.

References PLearn::TVec< T >::push_back().

Referenced by getTestCostNames(), and train().

```cpp
{
    // Return the names of the objective costs that the train method computes
    // and for which it updates the VecStatsCollector train_stats
    TVec<string> names;
    names.push_back("mse+penalty");
    names.push_back("mse");
    names.push_back("aic");
    names.push_back("bic");
    names.push_back("mabic");
    return names;
}
```

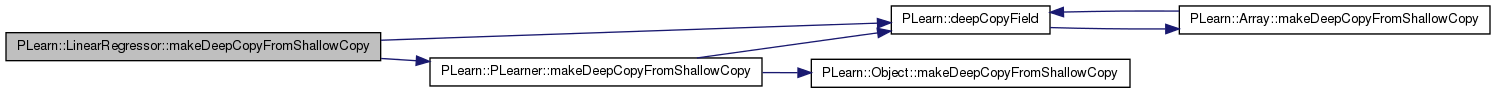

| void PLearn::LinearRegressor::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 175 of file LinearRegressor.cc.

References PLearn::deepCopyField(), extendedinput, input, PLearn::PLearner::makeDeepCopyFromShallowCopy(), resid_variance, train_costs, weights, XtX, and XtY.

Referenced by PLearn::PruningLinearRegressor::makeDeepCopyFromShallowCopy().

```cpp
{
    inherited::makeDeepCopyFromShallowCopy(copies);

    // ### Call deepCopyField on all "pointer-like" fields
    // ### that you wish to be deepCopied rather than
    // ### shallow-copied.
    // ### ex:
    deepCopyField(extendedinput, copies);
    deepCopyField(input, copies);
    deepCopyField(train_costs, copies);
    deepCopyField(XtX, copies);
    deepCopyField(XtY, copies);
    deepCopyField(weights, copies);
    deepCopyField(resid_variance, copies);
}
```

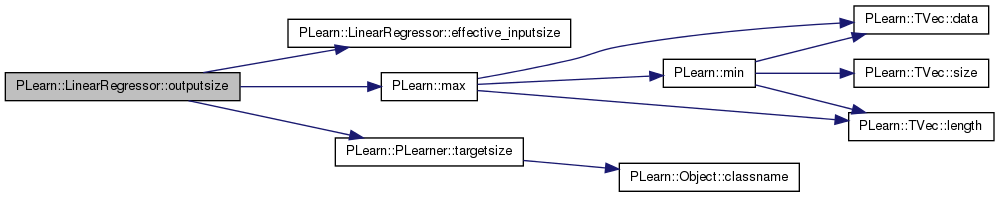

| int PLearn::LinearRegressor::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 192 of file LinearRegressor.cc.

References effective_inputsize(), PLearn::max(), output_learned_weights, and PLearn::PLearner::targetsize().

Referenced by computeOutput().

```cpp
{
    // If we output the learned parameters, the outputsize is the number of
    // parameters
    if (output_learned_weights)
        return max(effective_inputsize() * targetsize(), -1);

    int ts = targetsize();
    if (ts >= 0) {
        return ts;
    } else {
        // This learner's training set probably hasn't been set yet, so
        // we don't know the targetsize.
        return 0;
    }
}
```
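The branching in outputsize() is easy to misread, so here is a standalone sketch of the same rules. outputSizeFor() is a hypothetical function that takes explicitly the quantities the method reads from the learner; a negative targetsize stands for "not known yet", as in the comment above.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical mirror of outputsize(); targetsize < 0 means "no training set yet".
int outputSizeFor(int inputsize, int targetsize, bool include_bias,
                  bool output_learned_weights) {
    const int effective_inputsize = inputsize + (include_bias ? 1 : 0);
    if (output_learned_weights)  // one output per entry of the weights matrix
        return std::max(effective_inputsize * targetsize, -1);
    return targetsize >= 0 ? targetsize : 0;
}
```

Note the asymmetry when targetsize is still unknown (-1): with output_learned_weights the result is clamped to -1, whereas in regular mode the method returns 0.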

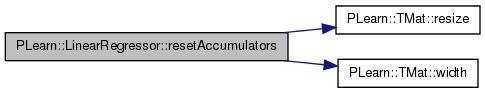

| void PLearn::LinearRegressor::resetAccumulators | ( | ) | [private] |

Definition at line 209 of file LinearRegressor.cc.

References PLearn::TMat< T >::resize(), sum_gammas, sum_squared_y, PLearn::TMat< T >::width(), XtX, and XtY.

Referenced by build_(), and forget().

```cpp
{
    XtX.resize(0,XtX.width());
    XtY.resize(0,XtY.width());
    sum_squared_y = 0;
    sum_gammas = 0;
}
```

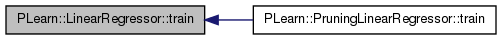

| void PLearn::LinearRegressor::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

See the forget() comment.

Implements PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 223 of file LinearRegressor.cc.

References AIC, BIC, cholesky, computeInformationCriteria(), computeResidualsVariance(), PLearn::dot(), effective_inputsize(), getTrainCostNames(), include_bias, PLearn::PLearner::inputsize(), PLearn::VMat::length(), PLearn::TMat< T >::length(), PLearn::linearRegression(), PLERROR, PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), PLearn::squared_error(), PLearn::VMat::subMatColumns(), PLearn::TMat< T >::subMatRows(), sum_gammas, sum_squared_y, PLearn::PLearner::targetsize(), train_costs, PLearn::PLearner::train_set, PLearn::PLearner::train_stats, PLearn::PLearner::verbosity, weight_decay, PLearn::weightedLinearRegression(), weights, weights_norm, XtX, and XtY.

Referenced by PLearn::PruningLinearRegressor::train().

```cpp
{
    if(targetsize()<=0)
        PLERROR("In LinearRegressor::train() - Targetsize (%d) must be "
                "positive", targetsize());

    // Preparatory buffer allocation
    bool recompute_XXXY = (XtX.length()==0);
    if (recompute_XXXY)
    {
        XtX.resize(effective_inputsize(), effective_inputsize());
        XtY.resize(effective_inputsize(), targetsize());
    }
    if(!train_stats)  // make a default stats collector, in case there's none
        train_stats = new VecStatsCollector();

    train_stats->setFieldNames(getTrainCostNames());
    train_stats->forget();

    // Compute training inputs and targets; take into account optional bias
    real squared_error=0;
    Vec outputwise_sum_squared_Y;
    VMat trainset_inputs  = train_set.subMatColumns(0, inputsize());
    VMat trainset_targets = train_set.subMatColumns(inputsize(), targetsize());
    if (include_bias)  // prepend a first column of ones
        trainset_inputs = new ExtendedVMatrix(trainset_inputs,0,0,1,0,1.0);

    // Choose proper function depending on whether the dataset is weighted
    weights.resize(effective_inputsize(), targetsize());
    if (train_set->weightsize()<=0)
    {
        squared_error =
            linearRegression(trainset_inputs, trainset_targets,
                             weight_decay, weights,
                             !recompute_XXXY, XtX, XtY,
                             sum_squared_y, outputwise_sum_squared_Y,
                             true, report_progress?verbosity:0,
                             cholesky, include_bias?1:0);
    }
    else if (train_set->weightsize()==1)
    {
        squared_error =
            weightedLinearRegression(trainset_inputs, trainset_targets,
                                     train_set.subMatColumns(inputsize()+targetsize(),1),
                                     weight_decay, weights,
                                     !recompute_XXXY, XtX, XtY, sum_squared_y, outputwise_sum_squared_Y,
                                     sum_gammas, true, report_progress?verbosity:0,
                                     cholesky, include_bias?1:0);
    }
    else
        PLERROR("LinearRegressor: expected dataset's weightsize to be either 1 or 0, got %d\n",
                train_set->weightsize());

    // Update the AIC and BIC criteria
    computeInformationCriteria(squared_error, train_set.length());

    // Update the sigmas for confidence intervals (the current formula does
    // not account for the weights in the case of weighted linear regression)
    computeResidualsVariance(outputwise_sum_squared_Y);

    // Update the training costs
    Mat weights_excluding_biases = weights.subMatRows(include_bias? 1 : 0, inputsize());
    weights_norm = dot(weights_excluding_biases,weights_excluding_biases);
    train_costs.resize(5);
    train_costs[0] = squared_error + weight_decay*weights_norm;
    train_costs[1] = squared_error;
    train_costs[2] = AIC;
    train_costs[3] = BIC;
    train_costs[4] = (AIC+BIC)/2;
    train_stats->update(train_costs);
    train_stats->finalize();
}
```
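The penalty term in train() uses weights.subMatRows(include_bias? 1 : 0, inputsize()), i.e. every row except the bias row, so intercepts never contribute to weights_norm. A minimal sketch of that computation with standard containers follows; weightsNormExcludingBias() and the Matrix alias are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Sum of squared weights over the non-bias rows: row 0 holds the biases
// when include_bias is true and is skipped in that case.
double weightsNormExcludingBias(const Matrix& weights, bool include_bias) {
    const std::size_t first_row = include_bias ? 1 : 0;
    double norm = 0.0;
    for (std::size_t r = first_row; r < weights.size(); ++r)
        for (double w : weights[r]) norm += w * w;
    return norm;
}
```

A large intercept therefore does not inflate the "mse+penalty" cost; only the slopes are charged weight_decay times their squared norm.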

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::PruningLinearRegressor.

Definition at line 145 of file LinearRegressor.h.

real PLearn::LinearRegressor::AIC [protected] |

The Akaike Information Criterion computed at training time; saved as a learned option to allow outputting AIC as a test cost.

Definition at line 78 of file LinearRegressor.h.

Referenced by computeCostsFromOutputs(), computeInformationCriteria(), declareOptions(), and train().

real PLearn::LinearRegressor::BIC [protected] |

The Bayesian Information Criterion computed at training time; saved as a learned option to allow outputting BIC as a test cost.

Definition at line 82 of file LinearRegressor.h.

Referenced by computeCostsFromOutputs(), computeInformationCriteria(), declareOptions(), and train().

bool PLearn::LinearRegressor::cholesky |

Whether to use Cholesky decomposition for computing the solution (true by default).

Definition at line 100 of file LinearRegressor.h.

Referenced by declareOptions(), and train().

Vec PLearn::LinearRegressor::extendedinput [mutable, private] |

Buffer of length effective_inputsize() (1+inputsize() when include_bias is true, in which case its first element is 1.0); used by computeOutput().

Definition at line 53 of file LinearRegressor.h.

Referenced by computeOutput(), and makeDeepCopyFromShallowCopy().

bool PLearn::LinearRegressor::include_bias |

Whether to include a bias term in the regression (true by default).

Definition at line 96 of file LinearRegressor.h.

Referenced by computeOutput(), declareOptions(), PLearn::PruningLinearRegressor::newDatasetIndices(), and train().

Vec PLearn::LinearRegressor::input [mutable, private] |

View on extendedinput.subVec(1, inputsize()) when include_bias is true, on extendedinput itself otherwise.

Definition at line 54 of file LinearRegressor.h.

Referenced by computeOutput(), and makeDeepCopyFromShallowCopy().

bool PLearn::LinearRegressor::output_learned_weights |

If true, the result of the computeOutput*() functions is not the result of the regression, but the learned regression parameters.

(i.e. the matrix 'weights'). The matrix is flattened by rows. NOTE by Nicolas Chapados: this option is a bit of a hack and might be removed in the future. Let me know if you come to rely on it.

Definition at line 110 of file LinearRegressor.h.

Referenced by computeConfidenceFromOutput(), computeCostsFromOutputs(), computeOutput(), declareOptions(), getTestCostNames(), and outputsize().
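"Flattened by rows" means row-major order: entry (r, c) of the effective_inputsize() x targetsize() weight matrix lands at position r * targetsize() + c of the output, so the output length equals the number of parameters. The sketch below illustrates the ordering with standard containers; flattenByRows() is hypothetical and stands in for what the text above describes for weights.toVec().

```cpp
#include <cassert>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Row-major flattening: all of row 0 (the biases, when include_bias is
// true), then row 1, and so on.
std::vector<double> flattenByRows(const Matrix& weights) {
    std::vector<double> flat;
    for (const auto& row : weights) flat.insert(flat.end(), row.begin(), row.end());
    return flat;
}
```

With include_bias true and two outputs, the first two values of the flattened output are therefore the two intercepts.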

Vec PLearn::LinearRegressor::resid_variance [protected] |

Estimate of the residual variance for each output variable.

Saved as a learned option to allow outputting confidence intervals when model is reloaded and used in test mode.

Definition at line 87 of file LinearRegressor.h.

Referenced by computeConfidenceFromOutput(), computeResidualsVariance(), PLearn::PruningLinearRegressor::computeTRatio(), declareOptions(), forget(), and makeDeepCopyFromShallowCopy().

real PLearn::LinearRegressor::sum_gammas [protected] |

Sum of the sample weights when the error is weighted; also kept for re-using the training set with different weight decays.

Definition at line 61 of file LinearRegressor.h.

Referenced by resetAccumulators(), and train().

real PLearn::LinearRegressor::sum_squared_y [protected] |

can be re-used if train is called several times on the same data set

Definition at line 60 of file LinearRegressor.h.

Referenced by resetAccumulators(), and train().

Vec PLearn::LinearRegressor::train_costs [private] |

Definition at line 55 of file LinearRegressor.h.

Referenced by makeDeepCopyFromShallowCopy(), and train().

real PLearn::LinearRegressor::weight_decay |

Factor on the squared-norm penalty of the parameters (zero by default).

Definition at line 103 of file LinearRegressor.h.

Referenced by computeCostsFromOutputs(), declareOptions(), and train().

Mat PLearn::LinearRegressor::weights [protected] |

The weight matrix computed by the regressor: effective_inputsize() x outputsize, i.e. (inputsize+1) x outputsize when include_bias is true.

Definition at line 74 of file LinearRegressor.h.

Referenced by computeInformationCriteria(), computeOutput(), computeResidualsVariance(), PLearn::PruningLinearRegressor::computeTRatio(), declareOptions(), makeDeepCopyFromShallowCopy(), PLearn::PruningLinearRegressor::newDatasetIndices(), and train().

real PLearn::LinearRegressor::weights_norm [protected] |

Sum of squares of the weights, excluding the bias row when include_bias is true.

Definition at line 64 of file LinearRegressor.h.

Referenced by computeCostsFromOutputs(), and train().

Mat PLearn::LinearRegressor::XtX [protected] |

can be re-used if train is called several times on the same data set

Definition at line 58 of file LinearRegressor.h.

Referenced by computeResidualsVariance(), PLearn::PruningLinearRegressor::computeTRatio(), makeDeepCopyFromShallowCopy(), resetAccumulators(), and train().

Mat PLearn::LinearRegressor::XtY [protected] |

can be re-used if train is called several times on the same data set

Definition at line 59 of file LinearRegressor.h.

Referenced by makeDeepCopyFromShallowCopy(), resetAccumulators(), and train().

1.7.4
