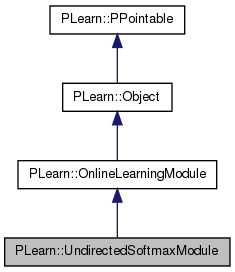

PLearn 0.1


This class.

#include <UndirectedSoftmaxModule.h>

Public Member Functions | |

| UndirectedSoftmaxModule () | |

| Default constructor. | |

| virtual void | fprop (const Vec &input, Vec &output) const |

| Given the input, compute the output (possibly resizing it appropriately). SOON TO BE DEPRECATED: use fprop(const TVec<Mat*>& ports_value). | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, const Vec &output_gradient) |

| SOON TO BE DEPRECATED: use bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient). Adapt based on the output gradient. This method should only be called just after a corresponding fprop, with the same first two arguments as that fprop call (and output should not have been modified since then). | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient) |

| virtual void | bbpropUpdate (const Vec &input, const Vec &output, const Vec &output_gradient, const Vec &output_diag_hessian) |

| Similar to bpropUpdate, but also adapts based on an estimate of the diagonal of the Hessian matrix, and propagates it back. | |

| virtual void | bbpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, Vec &input_diag_hessian, const Vec &output_diag_hessian) |

| virtual void | forget () |

| Reset the parameters to the state they would be in BEFORE starting training. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual UndirectedSoftmaxModule * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| real | start_learning_rate |

| Starting learning-rate, by which we multiply the gradient step. | |

| real | decrease_constant |

| learning_rate = start_learning_rate / (1 + decrease_constant*t), where t is the number of updates since the beginning | |

| Mat | init_weights |

| Vec | init_biases |

| real | init_weights_random_scale |

| real | L1_penalty_factor |

| real | L2_penalty_factor |

| Mat | weights |

| Vec | biases |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

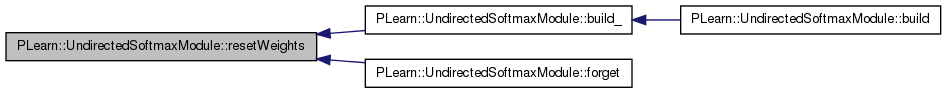

| virtual void | resetWeights () |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef OnlineLearningModule | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Private Attributes | |

| real | learning_rate |

| int | step_number |

This class.

Definition at line 59 of file UndirectedSoftmaxModule.h.

| typedef OnlineLearningModule PLearn::UndirectedSoftmaxModule::inherited [private] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 61 of file UndirectedSoftmaxModule.h.

| PLearn::UndirectedSoftmaxModule::UndirectedSoftmaxModule | ( | ) |

Default constructor.

Definition at line 84 of file UndirectedSoftmaxModule.cc.

    :
        start_learning_rate( .001 ),
        decrease_constant( 0 ),
        init_weights_random_scale( 1. ),
        L1_penalty_factor( 0. ),
        L2_penalty_factor( 0. ),
        step_number( 0 )
        /* ### Initialize all fields to their default value */
    {
    }

| string PLearn::UndirectedSoftmaxModule::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

| OptionList & PLearn::UndirectedSoftmaxModule::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

| RemoteMethodMap & PLearn::UndirectedSoftmaxModule::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

| bool PLearn::UndirectedSoftmaxModule::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

| Object * PLearn::UndirectedSoftmaxModule::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

| void PLearn::UndirectedSoftmaxModule::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

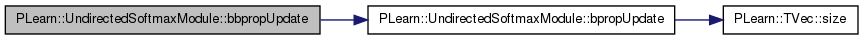

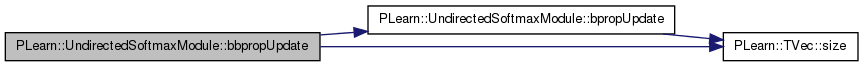

| void PLearn::UndirectedSoftmaxModule::bbpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| Vec & | input_diag_hessian, | ||

| const Vec & | output_diag_hessian | ||

| ) | [virtual] |

Definition at line 216 of file UndirectedSoftmaxModule.cc.

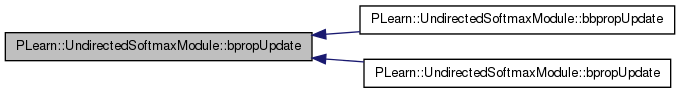

References bpropUpdate().

    {
        bpropUpdate( input, output, input_gradient, output_gradient );
    }

| void PLearn::UndirectedSoftmaxModule::bbpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | output_gradient, | ||

| const Vec & | output_diag_hessian | ||

| ) | [virtual] |

Similar to bpropUpdate, but also adapts based on an estimate of the diagonal of the Hessian matrix, and propagates it back.

If these methods are defined, you can use them INSTEAD of bpropUpdate(...). THE DEFAULT IMPLEMENTATION PROVIDED HERE JUST CALLS bbpropUpdate(input, output, input_gradient, output_gradient, in_hess, out_hess) AND IGNORES THE INPUT HESSIAN AND INPUT GRADIENT.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 195 of file UndirectedSoftmaxModule.cc.

References bpropUpdate(), PLearn::OnlineLearningModule::output_size, PLERROR, PLWARNING, and PLearn::TVec< T >::size().

    {
        PLWARNING("UndirectedSoftmaxModule::bbpropUpdate: You're providing\n"
                  "'output_diag_hessian', but it will not be used.\n");
        int odh_size = output_diag_hessian.size();
        if( odh_size != output_size )
        {
            PLERROR("UndirectedSoftmaxModule::bbpropUpdate:"
                    " 'output_diag_hessian.size()'\n"
                    " should be equal to 'output_size' (%i != %i)\n",
                    odh_size, output_size);
        }
        bpropUpdate( input, output, output_gradient );
    }

| void PLearn::UndirectedSoftmaxModule::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

SOON TO BE DEPRECATED: use bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient). Adapt based on the output gradient. This method should only be called just after a corresponding fprop, with the same first two arguments as that fprop call (and output should not have been modified since then).

Since sub-classes are supposed to learn ONLINE, the object is 'ready-to-be-used' just after any bpropUpdate. N.B. The DEFAULT IMPLEMENTATION JUST CALLS bpropUpdate(input, output, input_gradient, output_gradient) AND IGNORES INPUT GRADIENT.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 111 of file UndirectedSoftmaxModule.cc.

References decrease_constant, i, PLearn::OnlineLearningModule::input_size, j, L1_penalty_factor, L2_penalty_factor, learning_rate, PLearn::OnlineLearningModule::output_size, PLERROR, PLWARNING, PLearn::TVec< T >::size(), start_learning_rate, step_number, and weights.

Referenced by bbpropUpdate(), and bpropUpdate().

    {
        int in_size = input.size();
        int out_size = output.size();
        int og_size = output_gradient.size();
        // size check
        if( in_size != input_size )
        {
            PLERROR("UndirectedSoftmaxModule::bpropUpdate: 'input.size()' should be"
                    " equal\n"
                    " to 'input_size' (%i != %i)\n", in_size, input_size);
        }
        if( out_size != output_size )
        {
            PLERROR("UndirectedSoftmaxModule::bpropUpdate: 'output.size()' should be"
                    " equal\n"
                    " to 'output_size' (%i != %i)\n", out_size, output_size);
        }
        if( og_size != output_size )
        {
            PLERROR("UndirectedSoftmaxModule::bpropUpdate: 'output_gradient.size()'"
                    " should\n"
                    " be equal to 'output_size' (%i != %i)\n",
                    og_size, output_size);
        }
        learning_rate = start_learning_rate / ( 1+decrease_constant*step_number);
        if (L2_penalty_factor==0)
        {
        }
        else
        {
        }
        if (L1_penalty_factor!=0)
        {
            real delta = learning_rate * L1_penalty_factor;
            for (int i=0;i<output_size;i++)
            {
                real* Wi = weights[i]; // don't apply penalty on bias
                for (int j=0;j<input_size;j++)
                {
                    real Wij = Wi[j];
                    if (Wij>delta)
                        Wi[j] -=delta;
                    else if (Wij<-delta)
                        Wi[j] +=delta;
                    else
                        Wi[j]=0;
                }
            }
        }
        if (L2_penalty_factor!=0)
        {
            real delta = learning_rate*L2_penalty_factor;
            if (delta>1)
                PLWARNING("UndirectedSoftmaxModule::bpropUpdate: learning rate = %f is too large!",learning_rate);
            weights *= 1 - delta;
        }
        step_number++;
    }
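The two penalty branches in the listing above can be read as a soft-thresholding step (L1) followed by a multiplicative shrink (L2). The following is a minimal standalone sketch of that reading, not PLearn code: `apply_weight_penalties` is a hypothetical helper, and the weight matrix is assumed to be stored as a flat `std::vector<double>` rather than a PLearn `Mat`.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper (not part of PLearn): applies one L1 and one L2
// penalty step to every weight, mirroring the two branches of the listing.
void apply_weight_penalties(std::vector<double>& w, double learning_rate,
                            double l1_factor, double l2_factor)
{
    assert(learning_rate >= 0.0);
    if (l1_factor != 0.0) {
        // L1: move each weight toward zero by delta, clamping at zero.
        const double delta = learning_rate * l1_factor;
        for (double& wij : w) {
            if (wij > delta)
                wij -= delta;
            else if (wij < -delta)
                wij += delta;
            else
                wij = 0.0;
        }
    }
    if (l2_factor != 0.0) {
        // L2: scale every weight by (1 - delta).
        const double delta = learning_rate * l2_factor;
        for (double& wij : w)
            wij *= 1.0 - delta;
    }
}
```

With `learning_rate = 0.5` and `l1_factor = 1.0`, the weights `{1.0, -1.0, 0.25}` become `{0.5, -0.5, 0.0}`: the small weight is clamped to zero instead of changing sign.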

| void PLearn::UndirectedSoftmaxModule::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

Definition at line 180 of file UndirectedSoftmaxModule.cc.

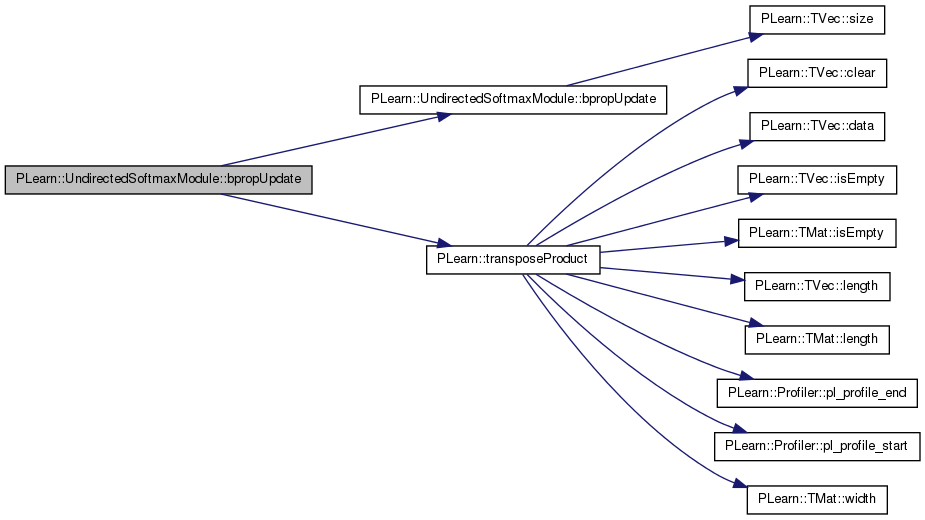

References bpropUpdate(), PLearn::OnlineLearningModule::input_size, PLearn::transposeProduct(), and weights.

    {
        // compute input_gradient from initial weights
        input_gradient = transposeProduct( weights, output_gradient
                                         ).subVec( 1, input_size );
        // do the update (and size check)
        bpropUpdate( input, output, output_gradient);
    }
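The input gradient in the listing above is obtained by multiplying the transposed weight matrix by the output gradient. A minimal sketch of that product follows, assuming a row-major flat `std::vector<double>` in place of PLearn's `Mat`; `transpose_product` is a hypothetical stand-in and not PLearn's `transposeProduct`. The listing additionally takes `subVec( 1, input_size )` of the result; the sketch omits that step and assumes the weight matrix has exactly `input_size` columns, as `resetWeights()` sizes it.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn): returns W^T * g, where W is a
// rows x cols matrix stored row by row and g has one entry per row.
std::vector<double> transpose_product(const std::vector<double>& w,
                                      std::size_t rows, std::size_t cols,
                                      const std::vector<double>& g)
{
    assert(w.size() == rows * cols);
    assert(g.size() == rows);
    std::vector<double> out(cols, 0.0);
    for (std::size_t i = 0; i < rows; ++i)
        for (std::size_t j = 0; j < cols; ++j)
            out[j] += w[i * cols + j] * g[i];  // accumulate column j
    return out;
}
```

Here `rows` plays the role of `output_size` and `cols` the role of `input_size`, so the result has one gradient entry per input.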

| void PLearn::UndirectedSoftmaxModule::build | ( | ) | [virtual] |

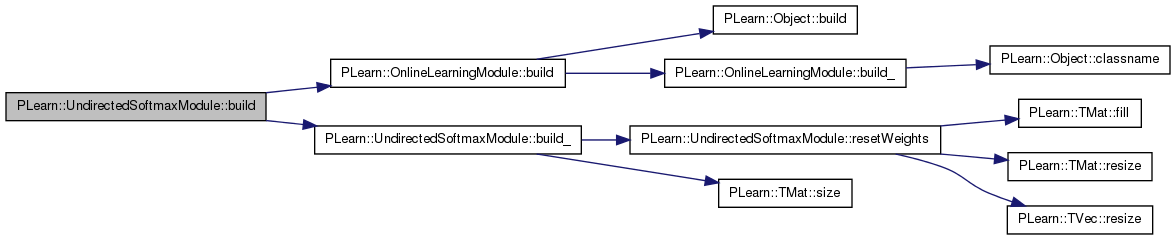

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 258 of file UndirectedSoftmaxModule.cc.

References PLearn::OnlineLearningModule::build(), and build_().

    {
        inherited::build();
        build_();
    }
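The build()/build_() convention described above can be illustrated outside PLearn. In the sketch below, `BaseModule` and `DerivedModule` are hypothetical stand-ins, and the `log` member exists only to make the call order visible; none of these names come from the library.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical base class: build() runs this level's private build_().
struct BaseModule {
    std::vector<std::string> log;  // records the order of build_ calls
    virtual ~BaseModule() {}
    virtual void build() { build_(); }
private:
    void build_() { log.push_back("BaseModule::build_"); }
};

// Hypothetical derived class: build() first builds the parent, then itself.
struct DerivedModule : BaseModule {
    typedef BaseModule inherited;
    int output_size = -1;
    void build() override
    {
        inherited::build();
        build_();
    }
private:
    void build_()
    {
        if (output_size < 0)
            output_size = 1;  // mirrors the "default to 1" fallback in build_()
        log.push_back("DerivedModule::build_");
    }
};
```

Because each build_() is private and non-virtual, every level of the hierarchy runs exactly once per build() call, parent first, and build() can be called again after options change.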

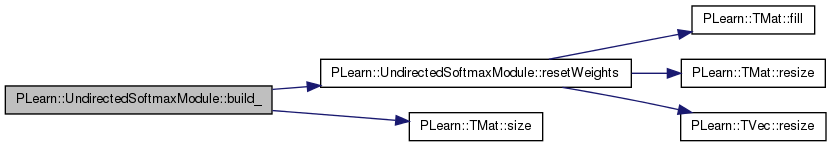

| void PLearn::UndirectedSoftmaxModule::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 326 of file UndirectedSoftmaxModule.cc.

References init_weights, init_weights_random_scale, PLearn::OnlineLearningModule::input_size, PLearn::OnlineLearningModule::output_size, PLERROR, PLWARNING, PLearn::OnlineLearningModule::random_gen, resetWeights(), PLearn::TMat< T >::size(), and weights.

Referenced by build().

    {
        if( input_size < 0 ) // has not been initialized
        {
            PLERROR("UndirectedSoftmaxModule::build_: 'input_size' < 0 (%i).\n"
                    "You should set it to a positive integer.\n", input_size);
        }
        else if( output_size < 0 ) // default to 1 neuron
        {
            PLWARNING("UndirectedSoftmaxModule::build_: 'output_size' is < 0 (%i),\n"
                      " you should set it to a positive integer (the number of"
                      " neurons).\n"
                      " Defaulting to 1.\n", output_size);
            output_size = 1;
        }
        if( weights.size() == 0 )
        {
            resetWeights();
        }
        if (init_weights.size()==0 && init_weights_random_scale!=0 && !random_gen)
            random_gen = new PRandom();
    }

| string PLearn::UndirectedSoftmaxModule::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

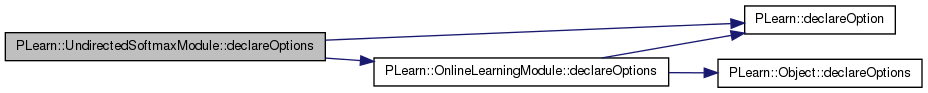

| void PLearn::UndirectedSoftmaxModule::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 274 of file UndirectedSoftmaxModule.cc.

References biases, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::OnlineLearningModule::declareOptions(), decrease_constant, init_biases, init_weights, init_weights_random_scale, L1_penalty_factor, L2_penalty_factor, PLearn::OptionBase::learntoption, start_learning_rate, and weights.

    {
        declareOption(ol, "start_learning_rate",
                      &UndirectedSoftmaxModule::start_learning_rate,
                      OptionBase::buildoption,
                      "Learning-rate of stochastic gradient optimization");
        declareOption(ol, "decrease_constant",
                      &UndirectedSoftmaxModule::decrease_constant,
                      OptionBase::buildoption,
                      "Decrease constant of stochastic gradient optimization");
        declareOption(ol, "init_weights", &UndirectedSoftmaxModule::init_weights,
                      OptionBase::buildoption,
                      "Optional initial weights of the neurons (one row per output).\n"
                      "If not provided then weights are initialized according\n"
                      "to a uniform distribution (see init_weights_random_scale)\n"
                      "and biases are initialized to 0.\n");
        declareOption(ol, "init_biases", &UndirectedSoftmaxModule::init_biases,
                      OptionBase::buildoption,
                      "Optional initial biases (one per output neuron). If not provided\n"
                      "then biases are initialized to 0.\n");
        declareOption(ol, "init_weights_random_scale", &UndirectedSoftmaxModule::init_weights_random_scale,
                      OptionBase::buildoption,
                      "If init_weights is not provided, the weights are initialized randomly by\n"
                      "from a uniform in [-r,r], with r = init_weights_random_scale/input_size.\n"
                      "To clear the weights initially, just set this option to 0.");
        declareOption(ol, "L1_penalty_factor", &UndirectedSoftmaxModule::L1_penalty_factor,
                      OptionBase::buildoption,
                      "Optional (default=0) factor of L1 regularization term, i.e.\n"
                      "minimize L1_penalty_factor * sum_{ij} |weights(i,j)| during training.\n");
        declareOption(ol, "L2_penalty_factor", &UndirectedSoftmaxModule::L2_penalty_factor,
                      OptionBase::buildoption,
                      "Optional (default=0) factor of L2 regularization term, i.e.\n"
                      "minimize 0.5 * L2_penalty_factor * sum_{ij} weights(i,j)^2 during training.");
        declareOption(ol, "weights", &UndirectedSoftmaxModule::weights,
                      OptionBase::learntoption,
                      "Input weights of the output neurons (one row per output neuron)." );
        declareOption(ol, "biases", &UndirectedSoftmaxModule::biases,
                      OptionBase::learntoption,
                      "Biases of the output neurons.");
        inherited::declareOptions(ol);
    }

| static const PPath& PLearn::UndirectedSoftmaxModule::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 110 of file UndirectedSoftmaxModule.h.

:

//##### Protected Options ###############################################

| UndirectedSoftmaxModule * PLearn::UndirectedSoftmaxModule::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

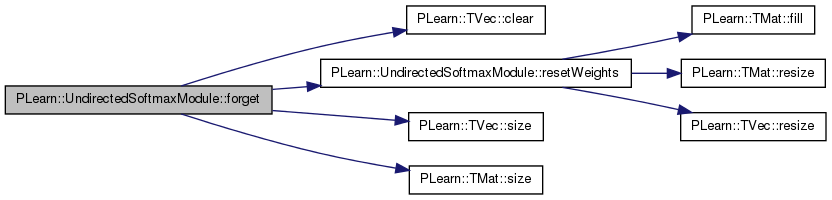

| void PLearn::UndirectedSoftmaxModule::forget | ( | ) | [virtual] |

Reset the parameters to the state they would be in BEFORE starting training.

Note that this method is necessarily called from build().

Implements PLearn::OnlineLearningModule.

Definition at line 227 of file UndirectedSoftmaxModule.cc.

References biases, PLearn::TVec< T >::clear(), init_biases, init_weights, init_weights_random_scale, PLearn::OnlineLearningModule::input_size, learning_rate, PLearn::OnlineLearningModule::random_gen, resetWeights(), PLearn::TVec< T >::size(), PLearn::TMat< T >::size(), start_learning_rate, step_number, and weights.

    {
        resetWeights();
        if( init_weights.size() !=0 )
            weights << init_weights;
        else if (init_weights_random_scale!=0)
        {
            real r = init_weights_random_scale / input_size;
            random_gen->fill_random_uniform(weights,-r,r);
        }
        if( init_biases.size() !=0 )
            biases << init_biases;
        else
            biases.clear();
        learning_rate = start_learning_rate;
        step_number = 0;
    }
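The random branch of forget() draws each weight uniformly with a bound that shrinks as the input grows, r = init_weights_random_scale / input_size. A rough standalone sketch of that rule follows, using the C++ standard library instead of PLearn's `PRandom`; `init_weights_uniform` is a hypothetical helper, and `std::uniform_real_distribution` samples the half-open interval [-r, r), which may differ from the endpoint handling of `fill_random_uniform`.

```cpp
#include <algorithm>
#include <cassert>
#include <random>
#include <vector>

// Hypothetical helper (not part of PLearn): fills w with zeros when scale
// is 0, otherwise with uniform draws in [-r, r), r = scale / input_size.
void init_weights_uniform(std::vector<double>& w, int input_size,
                          double scale, std::mt19937& rng)
{
    assert(input_size > 0);
    assert(scale >= 0.0);
    if (scale == 0.0) {
        std::fill(w.begin(), w.end(), 0.0);  // weights stay cleared
        return;
    }
    const double r = scale / input_size;
    std::uniform_real_distribution<double> dist(-r, r);
    for (double& wij : w)
        wij = dist(rng);
}
```

Dividing by `input_size` keeps the magnitude of each output's weighted sum roughly independent of how many inputs feed it.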

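| void PLearn::UndirectedSoftmaxModule::fprop | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Given the input, compute the output (possibly resizing it appropriately). SOON TO BE DEPRECATED: use fprop(const TVec<Mat*>& ports_value).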

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 96 of file UndirectedSoftmaxModule.cc.

References PLearn::OnlineLearningModule::input_size, PLERROR, and PLearn::TVec< T >::size().

    {
        int in_size = input.size();
        // size check
        if( in_size != input_size )
        {
            PLERROR("UndirectedSoftmaxModule::fprop: 'input.size()' should be equal\n"
                    " to 'input_size' (%i != %i)\n", in_size, input_size);
        }
    }

| OptionList & PLearn::UndirectedSoftmaxModule::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

| OptionMap & PLearn::UndirectedSoftmaxModule::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

| RemoteMethodMap & PLearn::UndirectedSoftmaxModule::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 82 of file UndirectedSoftmaxModule.cc.

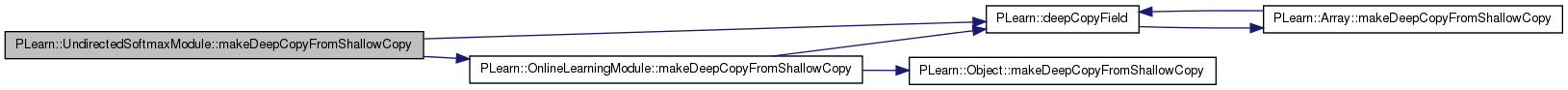

| void PLearn::UndirectedSoftmaxModule::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 264 of file UndirectedSoftmaxModule.cc.

References biases, PLearn::deepCopyField(), init_biases, init_weights, PLearn::OnlineLearningModule::makeDeepCopyFromShallowCopy(), and weights.

    {
        inherited::makeDeepCopyFromShallowCopy(copies);
        deepCopyField(init_weights, copies);
        deepCopyField(init_biases, copies);
        deepCopyField(weights, copies);
        deepCopyField(biases, copies);
    }

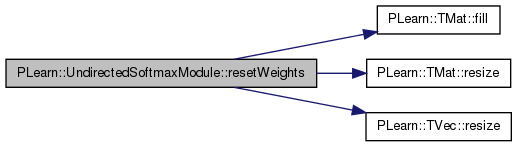

| void PLearn::UndirectedSoftmaxModule::resetWeights | ( | ) | [protected, virtual] |

Definition at line 249 of file UndirectedSoftmaxModule.cc.

References biases, PLearn::TMat< T >::fill(), PLearn::OnlineLearningModule::input_size, PLearn::OnlineLearningModule::output_size, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), and weights.

Referenced by build_(), and forget().

    {
        weights.resize( output_size, input_size );
        biases.resize(output_size);
        weights.fill( 0 );
    }

| StaticInitializer PLearn::UndirectedSoftmaxModule::_static_initializer_ [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 110 of file UndirectedSoftmaxModule.h.

| Vec PLearn::UndirectedSoftmaxModule::biases |

Definition at line 122 of file UndirectedSoftmaxModule.h.

Referenced by declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and resetWeights().

| real PLearn::UndirectedSoftmaxModule::decrease_constant |

learning_rate = start_learning_rate / (1 + decrease_constant*t), where t is the number of updates since the beginning.

Definition at line 70 of file UndirectedSoftmaxModule.h.

Referenced by bpropUpdate(), and declareOptions().
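As a small worked illustration of the schedule above, the function below evaluates the formula directly. `learning_rate_at` is a hypothetical name, and `t` is assumed to start at 0, so the first update uses `start_learning_rate` unchanged.

```cpp
#include <cassert>

// Hypothetical helper (not part of PLearn): learning rate used for the
// update number t, counting from t = 0.
double learning_rate_at(double start_learning_rate, double decrease_constant,
                        int t)
{
    assert(t >= 0);
    return start_learning_rate / (1.0 + decrease_constant * t);
}
```

With the default `decrease_constant` of 0 the rate stays at `start_learning_rate` for every update; with `decrease_constant = 1` it is divided by 4 at `t = 3`.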

| Vec PLearn::UndirectedSoftmaxModule::init_biases |

Definition at line 73 of file UndirectedSoftmaxModule.h.

Referenced by declareOptions(), forget(), and makeDeepCopyFromShallowCopy().

| Mat PLearn::UndirectedSoftmaxModule::init_weights |

Definition at line 72 of file UndirectedSoftmaxModule.h.

Referenced by build_(), declareOptions(), forget(), and makeDeepCopyFromShallowCopy().

| real PLearn::UndirectedSoftmaxModule::init_weights_random_scale |

Definition at line 75 of file UndirectedSoftmaxModule.h.

Referenced by build_(), declareOptions(), and forget().

| real PLearn::UndirectedSoftmaxModule::L1_penalty_factor |

Definition at line 77 of file UndirectedSoftmaxModule.h.

Referenced by bpropUpdate(), and declareOptions().

| real PLearn::UndirectedSoftmaxModule::L2_penalty_factor |

Definition at line 77 of file UndirectedSoftmaxModule.h.

Referenced by bpropUpdate(), and declareOptions().

| real PLearn::UndirectedSoftmaxModule::learning_rate [private] |

Definition at line 141 of file UndirectedSoftmaxModule.h.

Referenced by bpropUpdate(), and forget().

| real PLearn::UndirectedSoftmaxModule::start_learning_rate |

Starting learning-rate, by which we multiply the gradient step.

Definition at line 67 of file UndirectedSoftmaxModule.h.

Referenced by bpropUpdate(), declareOptions(), and forget().

| int PLearn::UndirectedSoftmaxModule::step_number [private] |

Definition at line 142 of file UndirectedSoftmaxModule.h.

Referenced by bpropUpdate(), and forget().

| Mat PLearn::UndirectedSoftmaxModule::weights |

Definition at line 121 of file UndirectedSoftmaxModule.h.

Referenced by bpropUpdate(), build_(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and resetWeights().

1.7.4