PLearn 0.1

#include <LocallyMagnifiedDistribution.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | LocallyMagnifiedDistribution () | Default constructor. |
| virtual void | build () | Simply calls inherited::build(), then build_(). |
| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) | Transforms a shallow copy into a deep copy. |
| virtual string | classname () const | |
| virtual OptionList & | getOptionList () const | |
| virtual OptionMap & | getOptionMap () const | |
| virtual RemoteMethodMap & | getRemoteMethodMap () const | |
| virtual LocallyMagnifiedDistribution * | deepCopy (CopiesMap &copies) const | |
| virtual real | log_density (const Vec &x) const | Returns the log of the probability density, log(p(y \| x)). |
| virtual void | train () | Brings the learner up to stage == nstages, updating the train_stats collector with training costs measured online in the process. |
| virtual void | forget () | (Re-)initializes the PLearner in its fresh state (which may depend on the 'seed' option) and sets 'stage' back to 0, the stage of a fresh learner. |

Static Public Member Functions

| Type | Member |
|---|---|
| static string | _classname_ () |
| static OptionList & | _getOptionList_ () |
| static RemoteMethodMap & | _getRemoteMethodMap_ () |
| static Object * | _new_instance_for_typemap_ () |
| static bool | _isa_ (const Object *o) |
| static void | _static_initialize_ () |
| static const PPath & | declaringFile () |

Public Attributes

| Type | Member | Description |
|---|---|---|
| int | mode | |
| real | computation_neighbors | |
| Ker | weighting_kernel | |
| char | kernel_adapt_width_mode | |
| PP< PDistribution > | localdistr | The distribution that will be trained with local weights. |
| bool | fix_localdistr_center | |
| real | width_neighbors | |
| real | width_factor | |
| string | width_optionname | |

Static Public Attributes

| Type | Member |
|---|---|
| static StaticInitializer | _static_initializer_ |

Protected Member Functions

| Type | Member |
|---|---|
| int | getActualNComputationNeighbors () const |
| int | getActualNWidthNeighbors () const |
| double | trainLocalDistrAndEvaluateLogDensity (VMat local_trainset, Vec y) const |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| static void | declareOptions (OptionList &ol) | Declares this class' options. |

Protected Attributes

| Type | Member |
|---|---|
| bool | display_adapted_width |
| Vec | emptyvec |
| Vec | NN_outputs |
| Vec | NN_costs |
| PP< GenericNearestNeighbors > | NN |

Private Types

| Type | Member |
|---|---|
| typedef PDistribution | inherited |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| void | build_ () | This does the actual building. |

Private Attributes

| Type | Member | Description |
|---|---|---|
| Vec | trainsample | Global storage to save memory allocations. |
| Vec | weights | |

Definition at line 53 of file LocallyMagnifiedDistribution.h.

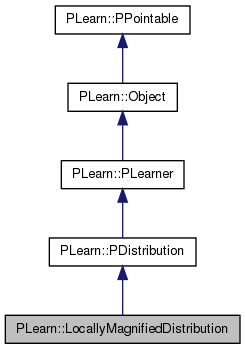

typedef PDistribution PLearn::LocallyMagnifiedDistribution::inherited [private]

Reimplemented from PLearn::PDistribution.

Definition at line 58 of file LocallyMagnifiedDistribution.h.

PLearn::LocallyMagnifiedDistribution::LocallyMagnifiedDistribution ( )

Default constructor.

Definition at line 57 of file LocallyMagnifiedDistribution.cc.

:display_adapted_width(true), mode(0), computation_neighbors(-1), kernel_adapt_width_mode(' '), fix_localdistr_center(true), width_neighbors(1.0), width_factor(1.0), width_optionname("sigma") { }

string PLearn::LocallyMagnifiedDistribution::_classname_ ( ) [static]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

OptionList & PLearn::LocallyMagnifiedDistribution::_getOptionList_ ( ) [static]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

RemoteMethodMap & PLearn::LocallyMagnifiedDistribution::_getRemoteMethodMap_ ( ) [static]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

bool PLearn::LocallyMagnifiedDistribution::_isa_ ( const Object * o ) [static]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

Object * PLearn::LocallyMagnifiedDistribution::_new_instance_for_typemap_ ( ) [static]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

void PLearn::LocallyMagnifiedDistribution::_static_initialize_ ( ) [static]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

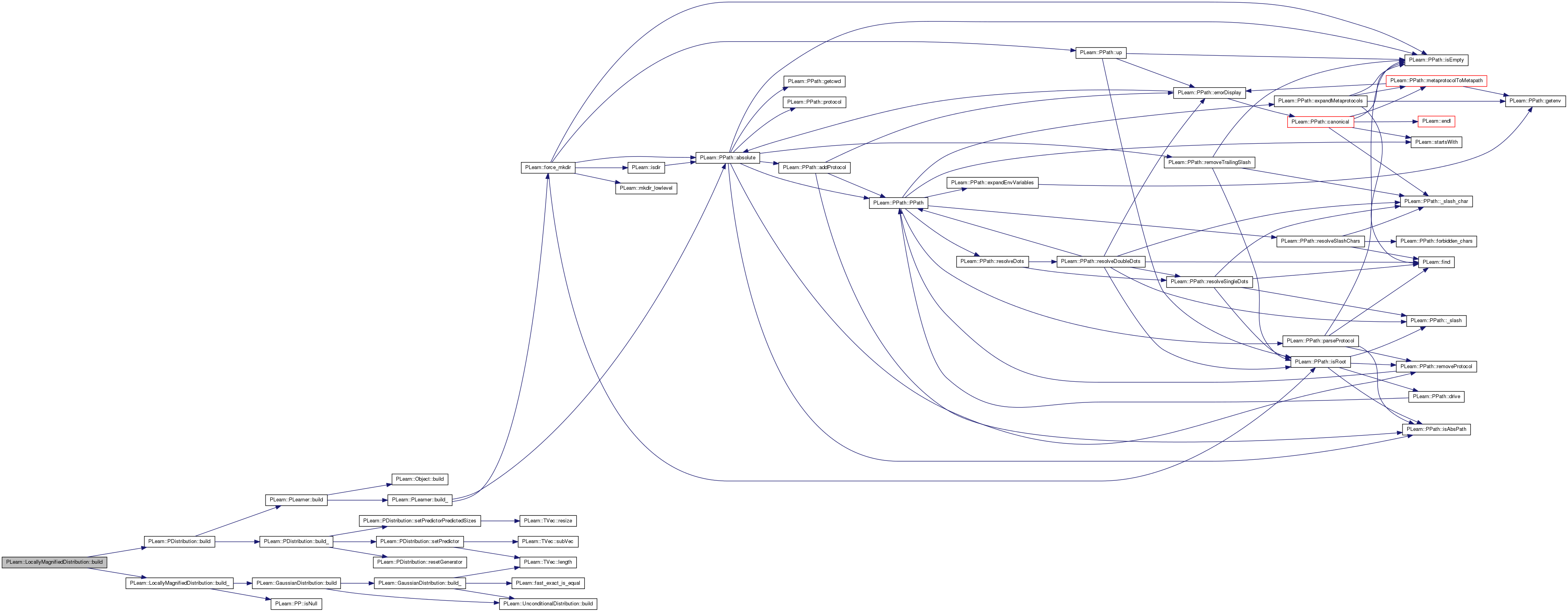

void PLearn::LocallyMagnifiedDistribution::build ( ) [virtual]

Simply calls inherited::build(), then build_().

Reimplemented from PLearn::PDistribution.

Definition at line 141 of file LocallyMagnifiedDistribution.cc.

References PLearn::PDistribution::build(), and build_().

{

// ### Nothing to add here, simply calls build_().

inherited::build();

build_();

}

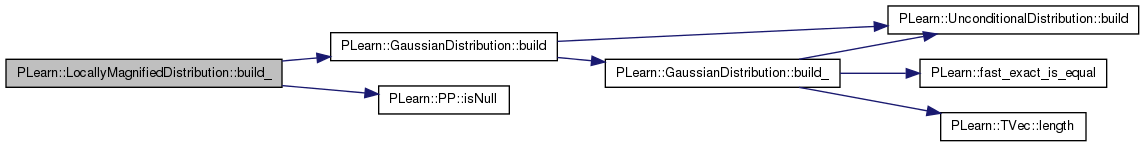

void PLearn::LocallyMagnifiedDistribution::build_ ( ) [private]

This does the actual building.

Reimplemented from PLearn::PDistribution.

Definition at line 151 of file LocallyMagnifiedDistribution.cc.

References PLearn::GaussianDistribution::build(), PLearn::GaussianDistribution::ignore_weights_below, PLearn::PP< T >::isNull(), and localdistr.

Referenced by build().

{

// ### This method should do the real building of the object,

// ### according to set 'options', in *any* situation.

// ### Typical situations include:

// ### - Initial building of an object from a few user-specified options

// ### - Building of a "reloaded" object: i.e. from the complete set of all serialised options.

// ### - Updating or "re-building" of an object after a few "tuning" options have been modified.

// ### You should assume that the parent class' build_() has already been called.

// ### If the distribution is conditional, you should finish build_() by:

// PDistribution::finishConditionalBuild();

if(localdistr.isNull())

{

GaussianDistribution* distr = new GaussianDistribution();

distr->ignore_weights_below = 1e-6;

distr->build();

localdistr = distr;

}

}

string PLearn::LocallyMagnifiedDistribution::classname ( ) const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

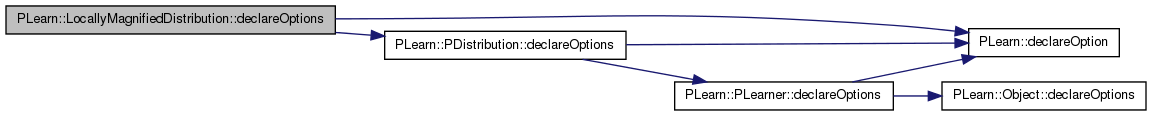

void PLearn::LocallyMagnifiedDistribution::declareOptions ( OptionList & ol ) [static, protected]

Declare this class' options.

Reimplemented from PLearn::PDistribution.

Definition at line 77 of file LocallyMagnifiedDistribution.cc.

References PLearn::OptionBase::buildoption, computation_neighbors, PLearn::declareOption(), PLearn::PDistribution::declareOptions(), fix_localdistr_center, kernel_adapt_width_mode, PLearn::OptionBase::learntoption, localdistr, mode, NN, PLearn::PLearner::train_set, weighting_kernel, width_factor, width_neighbors, and width_optionname.

{

// ### Declare all of this object's options here

// ### For the "flags" of each option, you should typically specify

// ### one of OptionBase::buildoption, OptionBase::learntoption or

// ### OptionBase::tuningoption. Another possible flag to be combined with

// ### is OptionBase::nosave

declareOption(ol, "mode", &LocallyMagnifiedDistribution::mode, OptionBase::buildoption,

"Output computation mode");

declareOption(ol, "computation_neighbors", &LocallyMagnifiedDistribution::computation_neighbors, OptionBase::buildoption,

"This indicates to how many neighbors we should restrict ourselves for the computations.\n"

"(it's equivalent to giving all other data points a weight of 0)\n"

"If <=0 we use all training points (with an appropriate weight).\n"

"If >1 we consider only that many neighbors of the test point;\n"

"If between 0 and 1, it's considered a coefficient by which to multiply\n"

"the square root of the number of training points, to yield the actual \n"

"number of computation neighbors used");

declareOption(ol, "weighting_kernel", &LocallyMagnifiedDistribution::weighting_kernel, OptionBase::buildoption,

"The magnifying kernel that will be used to locally weigh the samples.\n"

"If it is left null then all computation_neighbors will receive a weight of 1\n");

declareOption(ol, "kernel_adapt_width_mode", &LocallyMagnifiedDistribution::kernel_adapt_width_mode, OptionBase::buildoption,

"This controls how we adapt the width of the kernel to the local neighborhood of the test point.\n"

"' ' means leave width unchanged\n"

"'A' means set the width to width_factor times the average distance to the neighbors determined by width_neighbors.\n"

"'M' means set the width to width_factor times the maximum distance to the neighbors determined by width_neighbors.\n");

declareOption(ol, "width_neighbors", &LocallyMagnifiedDistribution::width_neighbors, OptionBase::buildoption,

"width_neighbors tells how many neighbors to consider to determine the kernel width.\n"

"(see kernel_adapt_width_mode) \n"

"If width_neighbors>1 we consider that many neighbors.\n"

"If width_neighbors>=0 and <=1 it's considered a coefficient by which to multiply\n"

"the square root of the number of training points, to yield the actual \n"

"number of neighbors used");

declareOption(ol, "width_factor", &LocallyMagnifiedDistribution::width_factor, OptionBase::buildoption,

"Only used if width_neighbors>0 (see width_neighbors)");

declareOption(ol, "width_optionname", &LocallyMagnifiedDistribution::width_optionname, OptionBase::buildoption,

"Only used if kernel_adapt_width_mode!=' '. The name of the option in the weighting kernel that should be used to set or modify its width");

declareOption(ol, "localdistr", &LocallyMagnifiedDistribution::localdistr, OptionBase::buildoption,

"The kind of distribution that will be trained with local weights obtained from the magnifying kernel.\n"

"If left unspecified (null), it will be set to GaussianDistribution by default.");

declareOption(ol, "fix_localdistr_center", &LocallyMagnifiedDistribution::fix_localdistr_center, OptionBase::buildoption,

"If true, and localdistr is GaussianDistribution, then the mu of the localdistr will be forced to be the given test point.");

declareOption(ol, "train_set", &LocallyMagnifiedDistribution::train_set, OptionBase::learntoption,

"We need to store the training set, as this learner is memory-based...");

declareOption(ol, "NN", &LocallyMagnifiedDistribution::NN, OptionBase::learntoption,

"The nearest neighbor algorithm used to find nearest neighbors");

// Now call the parent class' declareOptions().

inherited::declareOptions(ol);

}

static const PPath& PLearn::LocallyMagnifiedDistribution::declaringFile ( ) [inline, static]

Reimplemented from PLearn::PDistribution.

Definition at line 138 of file LocallyMagnifiedDistribution.h.

LocallyMagnifiedDistribution * PLearn::LocallyMagnifiedDistribution::deepCopy ( CopiesMap & copies ) const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

void PLearn::LocallyMagnifiedDistribution::forget ( ) [virtual]

(Re-)initializes the PLearner in its fresh state (which may depend on the 'seed' option) and sets 'stage' back to 0, the stage of a fresh learner.

Reimplemented from PLearn::PDistribution.

Definition at line 389 of file LocallyMagnifiedDistribution.cc.

References PLearn::PP< T >::isNotNull(), and NN.

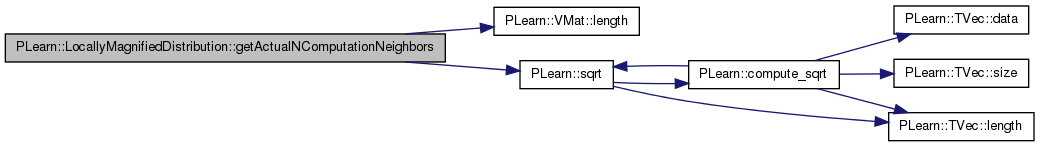

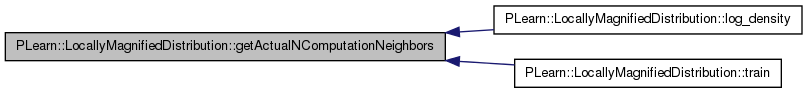

int PLearn::LocallyMagnifiedDistribution::getActualNComputationNeighbors ( ) const [protected]

Definition at line 336 of file LocallyMagnifiedDistribution.cc.

References computation_neighbors, PLearn::VMat::length(), PLearn::sqrt(), and PLearn::PLearner::train_set.

Referenced by log_density(), and train().

{

if(computation_neighbors<=0)

return 0;

else if(computation_neighbors>1)

return int(computation_neighbors);

else

return int(computation_neighbors*sqrt(train_set->length()));

}
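The branching in getActualNComputationNeighbors() can be exercised on its own. The sketch below restates it as a free function over plain numbers; the name actualComputationNeighbors and the n_train parameter (standing in for train_set->length()) are illustrative assumptions, not part of PLearn.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical free-function version of getActualNComputationNeighbors().
// n_train stands in for train_set->length().
//   computation_neighbors <= 0      -> 0, meaning "use all training points"
//   computation_neighbors > 1       -> that many neighbors (truncated to int)
//   0 < computation_neighbors <= 1  -> coefficient times sqrt(n_train)
int actualComputationNeighbors(double computation_neighbors, int n_train) {
    assert(n_train >= 0);
    if (computation_neighbors <= 0)
        return 0;
    if (computation_neighbors > 1)
        return static_cast<int>(computation_neighbors);
    return static_cast<int>(computation_neighbors *
                            std::sqrt(static_cast<double>(n_train)));
}
```

With 100 training points, for instance, computation_neighbors = 0.5 gives 5 neighbors, 25 gives 25, and the constructor default of -1 gives 0, which log_density() treats as "use every training point".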

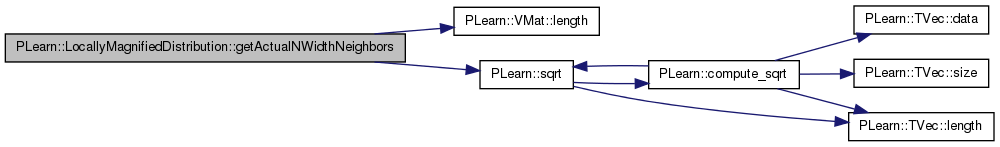

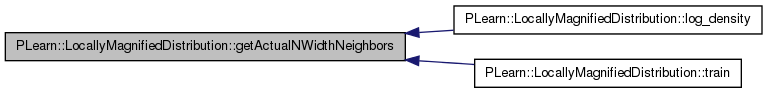

int PLearn::LocallyMagnifiedDistribution::getActualNWidthNeighbors ( ) const [protected]

Definition at line 346 of file LocallyMagnifiedDistribution.cc.

References PLearn::VMat::length(), PLearn::sqrt(), PLearn::PLearner::train_set, and width_neighbors.

Referenced by log_density(), and train().

{

if(width_neighbors<0)

return 0;

else if(width_neighbors>1)

return int(width_neighbors);

return int(width_neighbors*sqrt(train_set->length()));

}
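getActualNWidthNeighbors() applies the same thresholds to width_neighbors (a value of exactly 0 goes through the coefficient branch but still yields 0). Its result drives the kernel-width adaptation in log_density(), so the two are sketched together below. The function names are hypothetical, nn_dist is assumed to hold NN_costs sorted by increasing distance, and the 'Z' branch mirrors code in log_density() that the kernel_adapt_width_mode help text does not document.

```cpp
#include <cassert>
#include <cmath>
#include <stdexcept>
#include <vector>

// Hypothetical free-function version of getActualNWidthNeighbors().
//   width_neighbors < 0       -> 0
//   width_neighbors > 1       -> that many neighbors (truncated to int)
//   0 <= width_neighbors <= 1 -> coefficient times sqrt(n_train)
int actualWidthNeighbors(double width_neighbors, int n_train) {
    assert(n_train >= 0);
    if (width_neighbors < 0)
        return 0;
    if (width_neighbors > 1)
        return static_cast<int>(width_neighbors);
    return static_cast<int>(width_neighbors * std::sqrt(static_cast<double>(n_train)));
}

// Width adaptation performed in log_density() when kernel_adapt_width_mode != ' '.
// nn_dist plays the role of NN_costs (assumed sorted, closest first);
// input_dim plays the role of inputsize().
double adaptedWidth(char mode, const std::vector<double>& nn_dist, int width_n,
                    double width_factor, int input_dim) {
    // The original indexes NN_costs[width_n-1] without checking width_n > 0.
    if (width_n <= 0 || width_n > static_cast<int>(nn_dist.size()))
        throw std::invalid_argument("need 1 <= width_n <= number of neighbors");
    const double d = nn_dist[width_n - 1];  // distance to the width_n-th neighbor
    switch (mode) {
    case 'M':  // width_factor times the largest of the width_n distances
        return width_factor * d;
    case 'Z':  // same distance, scaled by 1/sqrt(input_dim)
        return width_factor * std::sqrt(d * d / input_dim);
    case 'A': {  // width_factor times the mean of the first width_n distances
        double sum = 0.0;
        for (int k = 0; k < width_n; ++k)
            sum += nn_dist[k];
        return sum * width_factor / width_n;
    }
    default:
        throw std::invalid_argument("invalid kernel_adapt_width_mode");
    }
}
```

With the defaults (width_neighbors = 1.0, width_factor = 1.0) and 400 training points, the sketch picks 20 width neighbors; in mode 'A' the kernel width then becomes the mean distance to those 20 neighbors.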

OptionList & PLearn::LocallyMagnifiedDistribution::getOptionList ( ) const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

OptionMap & PLearn::LocallyMagnifiedDistribution::getOptionMap ( ) const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

RemoteMethodMap & PLearn::LocallyMagnifiedDistribution::getRemoteMethodMap ( ) const [virtual]

Reimplemented from PLearn::PDistribution.

Definition at line 72 of file LocallyMagnifiedDistribution.cc.

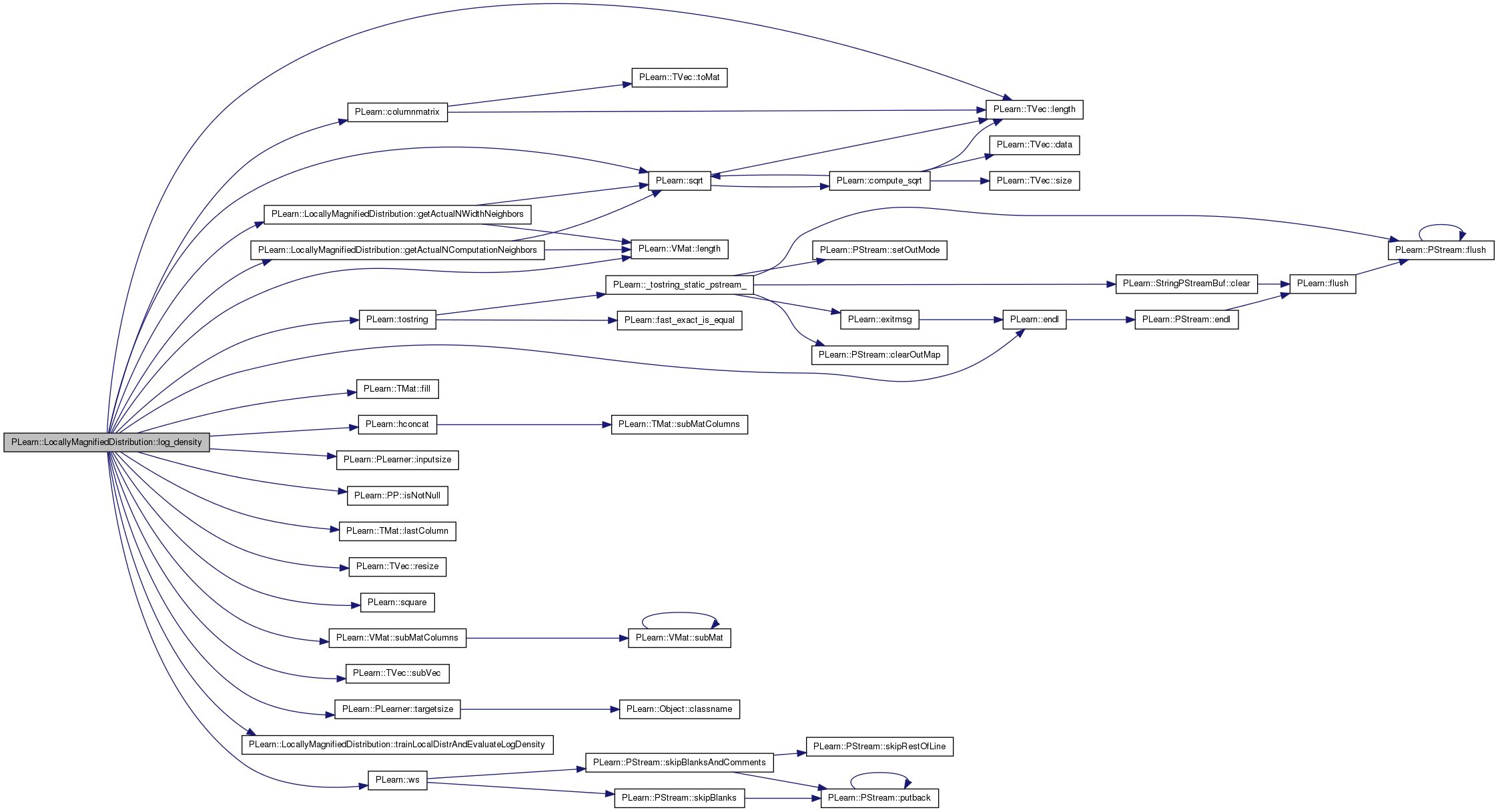

real PLearn::LocallyMagnifiedDistribution::log_density ( const Vec & y ) const [virtual]

Return log of probability density log(p(y | x)).

Reimplemented from PLearn::PDistribution.

Definition at line 176 of file LocallyMagnifiedDistribution.cc.

References PLearn::columnmatrix(), display_adapted_width, emptyvec, PLearn::endl(), PLearn::TMat< T >::fill(), getActualNComputationNeighbors(), getActualNWidthNeighbors(), PLearn::hconcat(), i, PLearn::PLearner::inputsize(), PLearn::PP< T >::isNotNull(), kernel_adapt_width_mode, PLearn::TMat< T >::lastColumn(), PLearn::TVec< T >::length(), PLearn::VMat::length(), mode, n, NN, NN_costs, NN_outputs, PLearn::perr, pl_log, PLASSERT, PLERROR, PLearn::TVec< T >::resize(), PLearn::sqrt(), PLearn::square(), PLearn::VMat::subMatColumns(), PLearn::TVec< T >::subVec(), PLearn::PLearner::targetsize(), PLearn::tostring(), PLearn::PLearner::train_set, trainLocalDistrAndEvaluateLogDensity(), trainsample, w, weighting_kernel, weights, width_factor, width_optionname, and PLearn::ws().

{

int l = train_set.length();

int w = inputsize();

int ws = train_set->weightsize();

trainsample.resize(w+ws);

Vec input = trainsample.subVec(0,w);

PLASSERT(targetsize()==0);

int comp_n = getActualNComputationNeighbors();

int width_n = getActualNWidthNeighbors();

if(comp_n>0 || width_n>0)

NN->computeOutputAndCosts(y, emptyvec, NN_outputs, NN_costs);

if(kernel_adapt_width_mode!=' ')

{

real new_width = 0;

if(kernel_adapt_width_mode=='M')

{

new_width = width_factor*NN_costs[width_n-1];

// if(display_adapted_width)

// perr << "new_width=" << width_factor << " * NN_costs["<<width_n-1<<"] = "<< new_width << endl;

}

else if(kernel_adapt_width_mode=='Z')

{

new_width = width_factor*sqrt(square(NN_costs[width_n-1])/w);

}

else if(kernel_adapt_width_mode=='A')

{

for(int k=0; k<width_n; k++)

new_width += NN_costs[k];

new_width *= width_factor/width_n;

}

else

PLERROR("Invalid kernel_adapt_width_mode: %c",kernel_adapt_width_mode);

// hack to display only first adapted width

if(display_adapted_width)

{

/*

perr << "NN_outputs = " << NN_outputs << endl;

perr << "NN_costs = " << NN_costs << endl;

perr << "inputsize = " << w << endl;

perr << "length = " << l << endl;

*/

perr << "Adapted kernel width = " << new_width << endl;

display_adapted_width = false;

}

weighting_kernel->setOption(width_optionname,tostring(new_width));

weighting_kernel->build(); // rebuild to adapt to width change

}

double weightsum = 0;

VMat local_trainset;

if(comp_n>0) // we'll use only the neighbors

{

int n = NN_outputs.length();

Mat neighbors(n, w+1);

neighbors.lastColumn().fill(1.0); // default weight 1.0

for(int k=0; k<n; k++)

{

Vec neighbors_k = neighbors(k);

Vec neighbors_row = neighbors_k.subVec(0,w+ws);

Vec neighbors_input = neighbors_row.subVec(0,w);

train_set->getRow(int(NN_outputs[k]),neighbors_row);

real weight = 1.;

if(weighting_kernel.isNotNull())

weight = weighting_kernel(y,neighbors_input);

weightsum += weight;

neighbors_k[w] *= weight;

}

local_trainset = new MemoryVMatrix(neighbors);

local_trainset->defineSizes(w,0,1);

}

else // we'll use all the points

{

// 'weights' will contain the "localization" weights for the current test point.

weights.resize(l);

for(int i=0; i<l; i++)

{

train_set->getRow(i,trainsample);

real weight = 1.;

if(weighting_kernel.isNotNull())

weight = weighting_kernel(y,input);

if(ws==1)

weight *= trainsample[w];

weightsum += weight;

weights[i] = weight;

}

VMat weight_column(columnmatrix(weights));

if(ws==0) // append weight column

local_trainset = hconcat(train_set, weight_column);

else // replace last column by weight column

local_trainset = hconcat(train_set.subMatColumns(0,w), weight_column);

local_trainset->defineSizes(w,0,1);

}

// perr << "local_trainset =" << endl << local_trainset->toMat() << endl;

double log_local_p = 0;

switch(mode)

{

case 0:

log_local_p = trainLocalDistrAndEvaluateLogDensity(local_trainset, y);

return log_local_p + pl_log((double)weightsum) - pl_log((double)l) - pl_log((double)weighting_kernel(input,input));

case 1:

log_local_p = trainLocalDistrAndEvaluateLogDensity(local_trainset, y);

return log_local_p;

case 2:

return pl_log((double)weightsum) - pl_log((double)l);

case 3:

return pl_log((double)weightsum);

case 4:

log_local_p = trainLocalDistrAndEvaluateLogDensity(local_trainset, y);

return log_local_p+pl_log((double)width_n)-pl_log((double)l);

default:

PLERROR("Invalid mode %d", mode);

return 0;

}

}
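Two parts of log_density() do not involve training localdistr and are easy to isolate: computing the localization weights (shown here for the branch that uses every training point) and combining the results according to mode. The sketch below is a simplified, hypothetical restatement: the kernel is passed as a std::function, an empty function plays the role of a null weighting_kernel, and std::log is assumed to agree with pl_log (natural logarithm).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <stdexcept>
#include <vector>

using Point = std::vector<double>;
using Kernel = std::function<double(const Point&, const Point&)>;

// Localization weights of the "use all the points" branch: K(y, x_i),
// times the sample weight when the training set has one (weightsize 1).
// An empty Kernel mimics a null weighting_kernel: every weight is then 1.
std::vector<double> localizationWeights(const Point& y,
                                        const std::vector<Point>& train,
                                        const std::vector<double>& sample_weights,
                                        const Kernel& kernel, double& weightsum) {
    assert(sample_weights.empty() || sample_weights.size() == train.size());
    std::vector<double> weights(train.size());
    weightsum = 0.0;
    for (std::size_t i = 0; i < train.size(); ++i) {
        double w = kernel ? kernel(y, train[i]) : 1.0;
        if (!sample_weights.empty())
            w *= sample_weights[i];
        weights[i] = w;
        weightsum += w;
    }
    return weights;
}

// Final combination for each value of 'mode'. log_local_p is the log density
// of y under the locally trained distribution, l the training set size,
// k_self the kernel value K(x, x), width_n the number of width neighbors.
double combineLogDensity(int mode, double log_local_p, double weightsum, int l,
                         double k_self, int width_n) {
    assert(l > 0);
    switch (mode) {
    case 0: return log_local_p + std::log(weightsum) - std::log(double(l)) - std::log(k_self);
    case 1: return log_local_p;
    case 2: return std::log(weightsum) - std::log(double(l));
    case 3: return std::log(weightsum);
    case 4: return log_local_p + std::log(double(width_n)) - std::log(double(l));
    default: throw std::invalid_argument("invalid mode");
    }
}
```

Algebraically, mode 0 returns log p_local(y) + log(weightsum / (l * K(x, x))), so the locally fitted density is rescaled by the normalized kernel mass around y; modes 2 and 3 skip the local training altogether.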

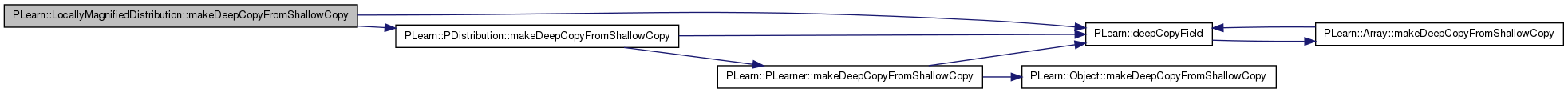

void PLearn::LocallyMagnifiedDistribution::makeDeepCopyFromShallowCopy ( CopiesMap & copies ) [virtual]

Transform a shallow copy into a deep copy.

Reimplemented from PLearn::PDistribution.

Definition at line 322 of file LocallyMagnifiedDistribution.cc.

References PLearn::deepCopyField(), localdistr, PLearn::PDistribution::makeDeepCopyFromShallowCopy(), NN, and weighting_kernel.

{

inherited::makeDeepCopyFromShallowCopy(copies);

// ### Call deepCopyField on all "pointer-like" fields

// ### that you wish to be deepCopied rather than

// ### shallow-copied.

// ### ex:

// deepCopyField(trainvec, copies);

deepCopyField(weighting_kernel, copies);

deepCopyField(localdistr, copies);

deepCopyField(NN, copies);

}
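The copies argument is what lets deepCopyField handle shared sub-objects: an object reachable through several fields should be copied once, and every field of the copy should then point to that single copy. The conceptual sketch below illustrates the idea with std::shared_ptr and a std::map; the types WidthKernel, Learner and Copies are hypothetical and do not reproduce PLearn's CopiesMap or deepCopyField.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Hypothetical stand-in for a pointer-like field such as weighting_kernel.
struct WidthKernel { double width = 1.0; };

// Maps each original object to its copy, so shared objects are copied once.
using Copies = std::map<const WidthKernel*, std::shared_ptr<WidthKernel>>;

std::shared_ptr<WidthKernel> deepCopyKernel(const std::shared_ptr<WidthKernel>& k,
                                            Copies& copies) {
    if (!k)
        return nullptr;
    auto it = copies.find(k.get());
    if (it != copies.end())
        return it->second;  // already copied through another field: reuse it
    auto copy = std::make_shared<WidthKernel>(*k);
    copies[k.get()] = copy;
    return copy;
}

struct Learner {
    std::shared_ptr<WidthKernel> weighting_kernel;
    std::shared_ptr<WidthKernel> other_kernel;  // hypothetical second field

    // Start from a shallow copy, then replace each pointer field by a deep
    // copy, in the spirit of makeDeepCopyFromShallowCopy().
    Learner deepCopy(Copies& copies) const {
        Learner c = *this;  // shallow copy: pointers still shared with *this
        c.weighting_kernel = deepCopyKernel(weighting_kernel, copies);
        c.other_kernel = deepCopyKernel(other_kernel, copies);
        return c;
    }
};
```

If both fields of the original point to one kernel, both fields of the copy point to one new kernel, and modifying it leaves the original untouched.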

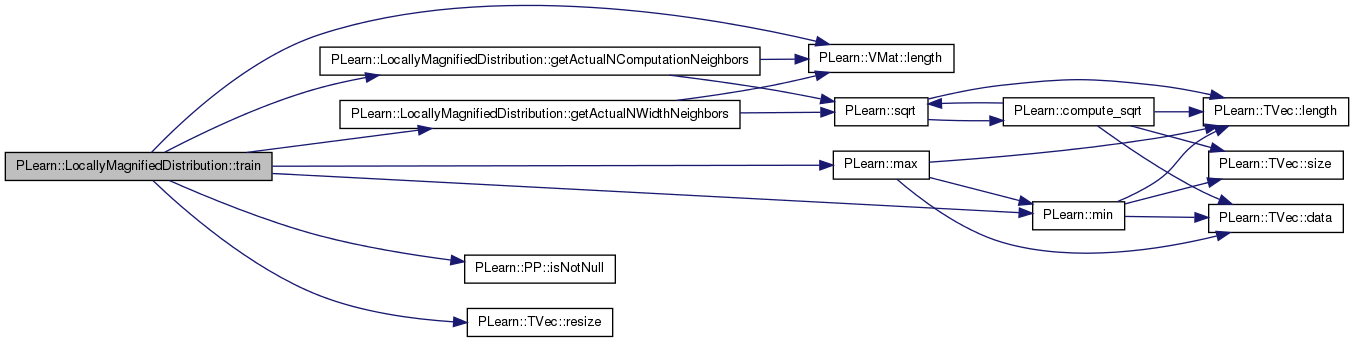

void PLearn::LocallyMagnifiedDistribution::train ( ) [virtual]

The role of the train method is to bring the learner up to stage == nstages, updating the train_stats collector with training costs measured on-line in the process.

Reimplemented from PLearn::PDistribution.

Definition at line 360 of file LocallyMagnifiedDistribution.cc.

References getActualNComputationNeighbors(), getActualNWidthNeighbors(), PLearn::PP< T >::isNotNull(), PLearn::VMat::length(), PLearn::max(), PLearn::min(), NN, NN_costs, NN_outputs, PLearn::TVec< T >::resize(), and PLearn::PLearner::train_set.

{

int comp_n = getActualNComputationNeighbors();

int width_n = getActualNWidthNeighbors();

int actual_nneighbors = max(comp_n, width_n);

if(train_set.isNotNull())

actual_nneighbors = min(actual_nneighbors, train_set.length());

if(actual_nneighbors>0)

{

NN = new ExhaustiveNearestNeighbors(); // for now use Exhaustive search and default Euclidean distance

NN->num_neighbors = actual_nneighbors;

NN->copy_input = false;

NN->copy_target = false;

NN->copy_weight = false;

NN->copy_index = true;

NN->build();

if(train_set.isNotNull())

{

NN->setTrainingSet(train_set);

NN->train();

}

NN_outputs.resize(actual_nneighbors);

NN_costs.resize(actual_nneighbors);

}

}
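train() only prepares an exhaustive nearest-neighbor searcher; the search itself happens in log_density() through NN->computeOutputAndCosts(). A brute-force search of that kind is sketched below. It assumes plain Euclidean distance (the comment in train() mentions the default Euclidean distance) and returns row indices and distances in the roles of NN_outputs and NN_costs; the function name and signature are hypothetical, and whether the real class reports plain or squared distances is not stated here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

using Point = std::vector<double>;

// Brute-force k-nearest-neighbor search. indices mimics NN_outputs (row
// indices into the training set), dists mimics NN_costs (distances).
void exhaustiveKNN(const std::vector<Point>& train, const Point& query, int k,
                   std::vector<int>& indices, std::vector<double>& dists) {
    assert(k >= 0);
    int n = static_cast<int>(train.size());
    k = std::min(k, n);  // like train(): never ask for more than the training set size
    std::vector<std::pair<double, int>> all;
    all.reserve(train.size());
    for (int i = 0; i < n; ++i) {
        double d2 = 0.0;
        for (std::size_t j = 0; j < query.size(); ++j)
            d2 += (train[i][j] - query[j]) * (train[i][j] - query[j]);
        all.emplace_back(std::sqrt(d2), i);
    }
    // Keep only the k closest points, sorted by increasing distance.
    std::partial_sort(all.begin(), all.begin() + k, all.end());
    indices.resize(k);
    dists.resize(k);
    for (int m = 0; m < k; ++m) {
        dists[m] = all[m].first;
        indices[m] = all[m].second;
    }
}
```

Exhaustive search costs one distance computation per training point for every test point, which is acceptable for a memory-based learner on moderate data sizes and keeps the neighbor set exact.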

double PLearn::LocallyMagnifiedDistribution::trainLocalDistrAndEvaluateLogDensity ( VMat local_trainset, Vec y ) const [protected]

Definition at line 303 of file LocallyMagnifiedDistribution.cc.

References fix_localdistr_center, PLearn::GaussianDistribution::given_mu, and localdistr.

Referenced by log_density().

{

if(fix_localdistr_center)

{

GaussianDistribution* distr = dynamic_cast<GaussianDistribution*>((PDistribution*)localdistr);

if(distr!=0)

distr->given_mu = y;

}

localdistr->forget();

localdistr->setTrainingSet(local_trainset);

localdistr->train();

double log_local_p = localdistr->log_density(y);

return log_local_p;

}
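With fix_localdistr_center set and a GaussianDistribution as localdistr, the mean is pinned to the test point and only the spread is learned from the weighted local training set. The sketch below shows the idea in one dimension under stated assumptions (a single variance estimated as the weighted mean squared deviation from y, no regularization, no ignore_weights_below threshold); the function name is hypothetical and this is not how GaussianDistribution actually fits its covariance.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// 1-D sketch: fit a Gaussian whose mean is fixed at y, using weights w,
// then return its log density evaluated at y itself.
double fixedCenterLogDensityAtCenter(double y, const std::vector<double>& x,
                                     const std::vector<double>& w) {
    assert(x.size() == w.size() && !x.empty());
    const double pi = 3.14159265358979323846;
    double wsum = 0.0, var = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        wsum += w[i];
        var += w[i] * (x[i] - y) * (x[i] - y);  // weighted squared deviation from y
    }
    assert(wsum > 0.0);
    var /= wsum;
    assert(var > 0.0);
    // log N(y; mu = y, var) = -0.5 * log(2 * pi * var), since y - mu = 0
    return -0.5 * std::log(2.0 * pi * var);
}
```

Because the density is evaluated at its own mean, only the fitted variance matters: a tighter weighted neighborhood gives a higher log density at y.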

StaticInitializer PLearn::LocallyMagnifiedDistribution::_static_initializer_ [static]

Reimplemented from PLearn::PDistribution.

Definition at line 138 of file LocallyMagnifiedDistribution.h.

real PLearn::LocallyMagnifiedDistribution::computation_neighbors

Definition at line 83 of file LocallyMagnifiedDistribution.h.

Referenced by declareOptions(), and getActualNComputationNeighbors().

bool PLearn::LocallyMagnifiedDistribution::display_adapted_width [mutable, protected]

Definition at line 65 of file LocallyMagnifiedDistribution.h.

Referenced by log_density().

Vec PLearn::LocallyMagnifiedDistribution::emptyvec [protected]

Definition at line 67 of file LocallyMagnifiedDistribution.h.

Referenced by log_density().

bool PLearn::LocallyMagnifiedDistribution::fix_localdistr_center

Definition at line 90 of file LocallyMagnifiedDistribution.h.

Referenced by declareOptions(), and trainLocalDistrAndEvaluateLogDensity().

char PLearn::LocallyMagnifiedDistribution::kernel_adapt_width_mode

Definition at line 86 of file LocallyMagnifiedDistribution.h.

Referenced by declareOptions(), and log_density().

PP< PDistribution > PLearn::LocallyMagnifiedDistribution::localdistr

The distribution that will be trained with local weights.

Definition at line 89 of file LocallyMagnifiedDistribution.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and trainLocalDistrAndEvaluateLogDensity().

int PLearn::LocallyMagnifiedDistribution::mode

Definition at line 82 of file LocallyMagnifiedDistribution.h.

Referenced by declareOptions(), and log_density().

PP< GenericNearestNeighbors > PLearn::LocallyMagnifiedDistribution::NN [protected]

Definition at line 75 of file LocallyMagnifiedDistribution.h.

Referenced by declareOptions(), forget(), log_density(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::LocallyMagnifiedDistribution::NN_costs [mutable, protected]

Definition at line 69 of file LocallyMagnifiedDistribution.h.

Referenced by log_density(), and train().

Vec PLearn::LocallyMagnifiedDistribution::NN_outputs [mutable, protected]

Definition at line 68 of file LocallyMagnifiedDistribution.h.

Referenced by log_density(), and train().

Vec PLearn::LocallyMagnifiedDistribution::trainsample [mutable, private]

Global storage to save memory allocations.

Definition at line 61 of file LocallyMagnifiedDistribution.h.

Referenced by log_density().

Ker PLearn::LocallyMagnifiedDistribution::weighting_kernel

Definition at line 85 of file LocallyMagnifiedDistribution.h.

Referenced by declareOptions(), log_density(), and makeDeepCopyFromShallowCopy().

Vec PLearn::LocallyMagnifiedDistribution::weights [mutable, private]

Definition at line 61 of file LocallyMagnifiedDistribution.h.

Referenced by log_density().

real PLearn::LocallyMagnifiedDistribution::width_factor

Definition at line 93 of file LocallyMagnifiedDistribution.h.

Referenced by declareOptions(), and log_density().

real PLearn::LocallyMagnifiedDistribution::width_neighbors

Definition at line 92 of file LocallyMagnifiedDistribution.h.

Referenced by declareOptions(), and getActualNWidthNeighbors().

string PLearn::LocallyMagnifiedDistribution::width_optionname

Definition at line 94 of file LocallyMagnifiedDistribution.h.

Referenced by declareOptions(), and log_density().

1.7.4
