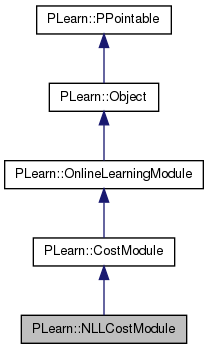

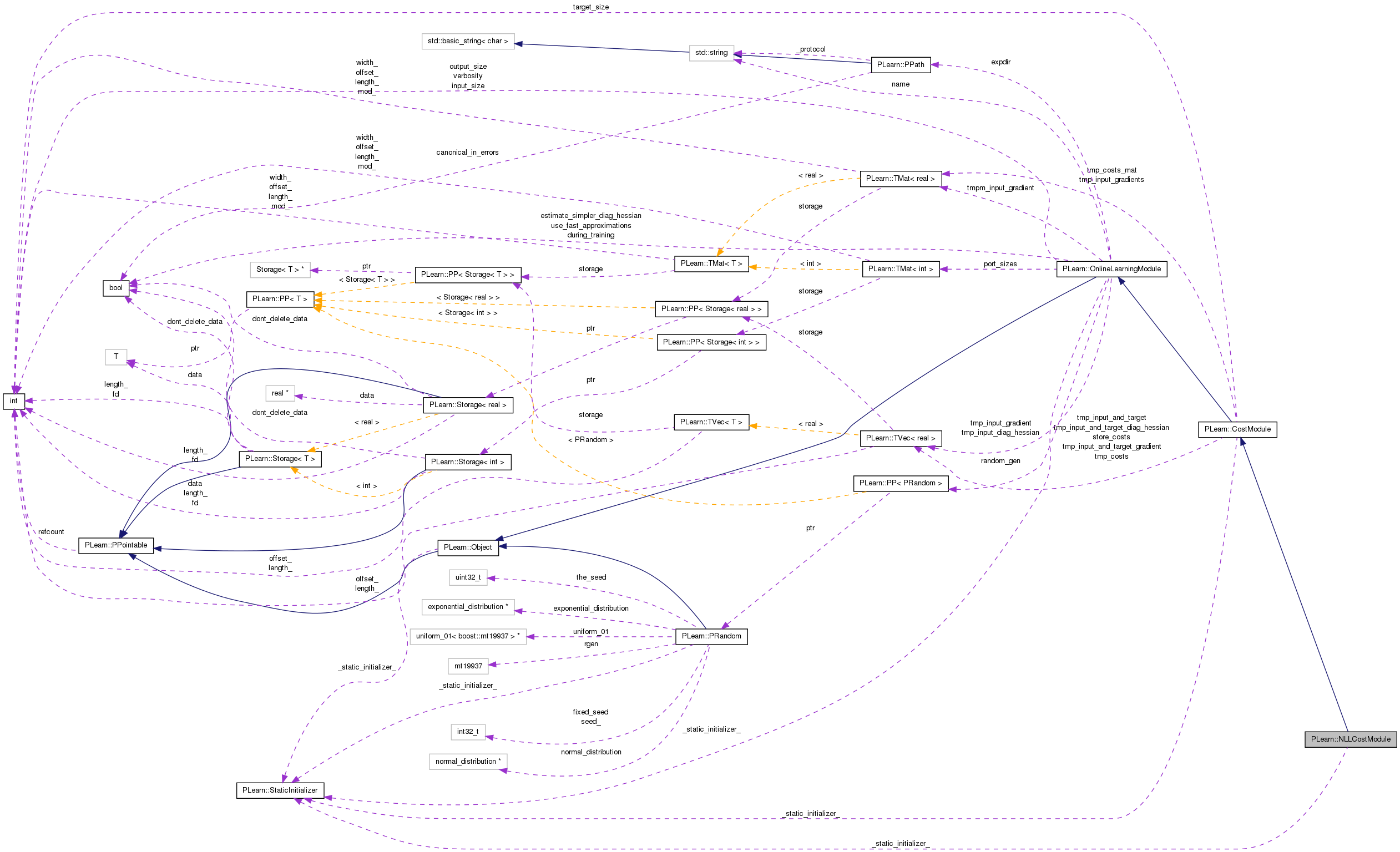

PLearn 0.1

Computes the NLL, given a probability vector and the true class.

#include <NLLCostModule.h>

Public Member Functions | |

| NLLCostModule () | |

| Default constructor. | |

| virtual void | fprop (const Vec &input, const Vec &target, Vec &cost) const |

| Given the input and target, computes the cost. | |

| virtual void | fprop (const Mat &inputs, const Mat &targets, Mat &costs) const |

| Batch version. | |

| virtual void | fprop (const TVec< Mat * > &ports_value) |

| New version (port-based). | |

| virtual void | bpropUpdate (const Vec &input, const Vec &target, real cost, Vec &input_gradient, bool accumulate=false) |

| Adapt based on the output gradient: this method should only be called just after a corresponding fprop. | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &targets, const Vec &costs, Mat &input_gradients, bool accumulate=false) |

| Overridden. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &target, real cost) |

| Does nothing. | |

| virtual void | bpropAccUpdate (const TVec< Mat * > &ports_value, const TVec< Mat * > &ports_gradient) |

| New version of backpropagation. | |

| virtual void | bbpropUpdate (const Vec &input, const Vec &target, real cost, Vec &input_gradient, Vec &input_diag_hessian, bool accumulate=false) |

| Similar to bpropUpdate, but also adapts based on an estimate of the diagonal of the Hessian matrix, and propagates it back. | |

| virtual void | bbpropUpdate (const Vec &input, const Vec &target, real cost) |

| Does nothing. | |

| virtual void | setLearningRate (real dynamic_learning_rate) |

| Overridden to do nothing (in particular, no warning). | |

| virtual TVec< string > | costNames () |

| Indicates the name of the computed costs. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual NLLCostModule * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef CostModule | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Computes the NLL, given a probability vector and the true class.

If input is the probability vector and target is the index of the true class, this module computes cost = -log( input[target] ), and back-propagates the gradient and the diagonal of the Hessian with respect to input.

Definition at line 53 of file NLLCostModule.h.
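The computation described above can be sketched in standalone C++. This is a minimal illustration and not PLearn code: the helper names `nll_cost`, `nll_gradient`, and `nll_diag_hessian` are hypothetical, and `std::vector<double>` stands in for PLearn's `Vec`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn): cost = -log(p[t]).
double nll_cost(const std::vector<double>& p, std::size_t t)
{
    assert(t < p.size());
    return -std::log(p[t]);
}

// Gradient of the cost w.r.t. p: zero everywhere except -1/p[t] at index t.
std::vector<double> nll_gradient(const std::vector<double>& p, std::size_t t)
{
    assert(t < p.size());
    std::vector<double> g(p.size(), 0.0);
    g[t] = -1.0 / p[t];
    return g;
}

// Diagonal of the Hessian w.r.t. p: zero everywhere except 1/p[t]^2 at index t.
std::vector<double> nll_diag_hessian(const std::vector<double>& p, std::size_t t)
{
    assert(t < p.size());
    std::vector<double> h(p.size(), 0.0);
    h[t] = 1.0 / (p[t] * p[t]);
    return h;
}
```

For example, with `p = {0.2, 0.5, 0.3}` and `t = 1`, the cost is `log(2)`, the only non-zero gradient entry is `-2`, and the only non-zero Hessian diagonal entry is `4`.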

typedef CostModule PLearn::NLLCostModule::inherited [private] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file NLLCostModule.h.

| PLearn::NLLCostModule::NLLCostModule | ( | ) |

Default constructor.

Definition at line 53 of file NLLCostModule.cc.

    {
        output_size = 1;
        target_size = 1;
    }

| string PLearn::NLLCostModule::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| OptionList & PLearn::NLLCostModule::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| RemoteMethodMap & PLearn::NLLCostModule::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| bool PLearn::NLLCostModule::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| Object * PLearn::NLLCostModule::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| void PLearn::NLLCostModule::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

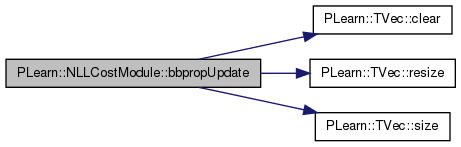

| virtual void PLearn::NLLCostModule::bbpropUpdate | ( | const Vec & | input, | const Vec & | target, | real | cost | ) | [inline, virtual] |

Does nothing.

Reimplemented from PLearn::CostModule.

Definition at line 103 of file NLLCostModule.h.

{}

| void PLearn::NLLCostModule::bbpropUpdate | ( | const Vec & | input, | const Vec & | target, | real | cost, | Vec & | input_gradient, | Vec & | input_diag_hessian, | bool | accumulate = false | ) | [virtual] |

Similar to bpropUpdate, but also adapts based on an estimate of the diagonal of the Hessian matrix, and propagates it back.

Reimplemented from PLearn::CostModule.

Definition at line 297 of file NLLCostModule.cc.

References PLearn::TVec< T >::clear(), PLASSERT_MSG, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::size().

    {
        if( accumulate )
        {
            PLASSERT_MSG( input_diag_hessian.size() == input_size,
                          "Cannot resize input_diag_hessian AND accumulate into it"
                        );
        }
        else
        {
            input_diag_hessian.resize( input_size );
            input_diag_hessian.clear();
        }

        // input_diag_hessian[ i ] = 0 if i!=t
        // input_diag_hessian[ t ] = 1/(x[t])^2
        int the_target = (int) round( target[0] );
        real input_t = input[ the_target ];
        input_diag_hessian[ the_target ] += 1. / (input_t * input_t);

        bpropUpdate( input, target, cost, input_gradient, accumulate );
    }
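The `accumulate` flag in the listing above follows a reset-or-add convention, which can be sketched in isolation. This is a hedged illustration rather than PLearn code: `add_diag_hessian` is a hypothetical name, and `std::vector<double>` replaces `Vec`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn).
// accumulate == false: the buffer is resized and zeroed before the update.
// accumulate == true:  the buffer must already have the right size, and the
//                      new term is added to whatever it contains.
void add_diag_hessian(const std::vector<double>& input, std::size_t t,
                      std::vector<double>& diag_hessian,
                      bool accumulate = false)
{
    assert(t < input.size());
    if (accumulate) {
        // Cannot resize and accumulate at the same time.
        assert(diag_hessian.size() == input.size());
    } else {
        diag_hessian.assign(input.size(), 0.0);
    }
    // Only the target entry is non-zero: 1 / x[t]^2.
    diag_hessian[t] += 1.0 / (input[t] * input[t]);
}
```

Calling the helper twice on the same buffer with `accumulate = true` on the second call sums both contributions, whereas the default call discards any previous content.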

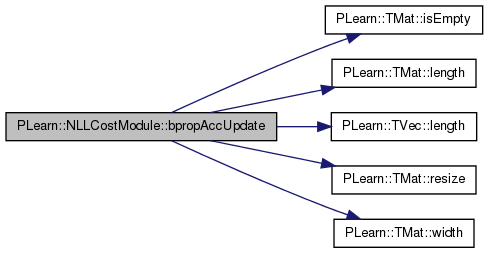

| void PLearn::NLLCostModule::bpropAccUpdate | ( | const TVec< Mat * > & | ports_value, | const TVec< Mat * > & | ports_gradient | ) | [virtual] |

New version of backpropagation.

Reimplemented from PLearn::CostModule.

Definition at line 236 of file NLLCostModule.cc.

References PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLASSERT, PLERROR, PLearn::TMat< T >::resize(), and PLearn::TMat< T >::width().

    {
        PLASSERT( ports_value.length() == nPorts() );
        PLASSERT( ports_gradient.length() == nPorts() );

        Mat* prediction = ports_value[0];
        Mat* target = ports_value[1];
    #ifndef NDEBUG
        Mat* cost = ports_value[2];
    #endif
        Mat* prediction_grad = ports_gradient[0];
        Mat* target_grad = ports_gradient[1];
        Mat* cost_grad = ports_gradient[2];

        // If we have cost_grad and we want prediction_grad
        if( prediction_grad && prediction_grad->isEmpty()
            && cost_grad && !cost_grad->isEmpty() )
        {
            PLASSERT( prediction );
            PLASSERT( target );
            PLASSERT( cost );
            PLASSERT( !target_grad );

            PLASSERT( prediction->width() == port_sizes(0,1) );
            PLASSERT( target->width() == port_sizes(1,1) );
            PLASSERT( cost->width() == port_sizes(2,1) );
            PLASSERT( prediction_grad->width() == port_sizes(0,1) );
            PLASSERT( cost_grad->width() == port_sizes(2,1) );
            PLASSERT( cost_grad->width() == 1 );

            int batch_size = prediction->length();
            PLASSERT( target->length() == batch_size );
            PLASSERT( cost->length() == batch_size );
            PLASSERT( cost_grad->length() == batch_size );

            prediction_grad->resize(batch_size, port_sizes(0,1));

            for( int k=0; k<batch_size; k++ )
            {
                // input_gradient[ i ] = 0 if i != t,
                // input_gradient[ t ] = -1/x[t]
                int target_k = (int) round((*target)(k, 0));
                (*prediction_grad)(k, target_k) -=
                    (*cost_grad)(k, 0) / (*prediction)(k, target_k);
            }
        }
        else if( !prediction_grad && !target_grad && !cost_grad )
            return;
        else if( !cost_grad && prediction_grad && prediction_grad->isEmpty() )
            PLERROR("In NLLCostModule::bpropAccUpdate - cost gradient is NULL,\n"
                    "cannot compute prediction gradient. Maybe you should set\n"
                    "\"propagate_gradient = 0\" on the incoming connection.\n");
        else
            PLERROR("In OnlineLearningModule::bpropAccUpdate - Port configuration "
                    "not implemented for class '%s'", classname().c_str());

        checkProp(ports_value);
        checkProp(ports_gradient);
    }
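The inner loop of the listing above applies the chain rule: each row's gradient `-1/x[t]` is scaled by the incoming gradient on that row's cost. A minimal standalone sketch follows, assuming a `std::vector`-based `Matrix` alias and a hypothetical function name `scaled_nll_gradients`, neither of which belongs to PLearn. It starts from a zeroed gradient matrix.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical row-major matrix type standing in for PLearn's Mat.
using Matrix = std::vector<std::vector<double>>;

// For each row k: grad[k][t_k] = -cost_grad[k] / prediction[k][t_k],
// and every other entry of the row stays at zero.
Matrix scaled_nll_gradients(const Matrix& prediction,
                            const std::vector<int>& targets,
                            const std::vector<double>& cost_grad)
{
    const std::size_t batch_size = prediction.size();
    assert(targets.size() == batch_size);
    assert(cost_grad.size() == batch_size);

    Matrix grad(batch_size);
    for (std::size_t k = 0; k < batch_size; ++k) {
        grad[k].assign(prediction[k].size(), 0.0);
        assert(targets[k] >= 0);
        const std::size_t t = static_cast<std::size_t>(targets[k]);
        assert(t < prediction[k].size());
        grad[k][t] -= cost_grad[k] / prediction[k][t];
    }
    return grad;
}
```

With a cost gradient of `1` for every row, this reduces to the plain per-sample gradient computed by the batch `bpropUpdate`.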

| void PLearn::NLLCostModule::bpropUpdate | ( | const Mat & | inputs, | const Mat & | targets, | const Vec & | costs, | Mat & | input_gradients, | bool | accumulate = false | ) | [virtual] |

Overridden.

Reimplemented from PLearn::CostModule.

Definition at line 210 of file NLLCostModule.cc.

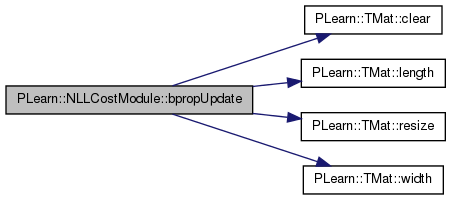

References PLearn::TMat< T >::clear(), i, PLearn::TMat< T >::length(), PLASSERT, PLASSERT_MSG, PLearn::TMat< T >::resize(), and PLearn::TMat< T >::width().

    {
        PLASSERT( inputs.width() == input_size );
        PLASSERT( targets.width() == target_size );

        if( accumulate )
        {
            PLASSERT_MSG( input_gradients.width() == input_size &&
                          input_gradients.length() == inputs.length(),
                          "Cannot resize input_gradients and accumulate into it" );
        }
        else
        {
            input_gradients.resize(inputs.length(), input_size );
            input_gradients.clear();
        }

        // input_gradient[ i ] = 0 if i != t,
        // input_gradient[ t ] = -1/x[t]
        for (int i = 0; i < inputs.length(); i++) {
            int the_target = (int) round( targets(i, 0) );
            input_gradients(i, the_target) -= 1. / inputs(i, the_target);
        }
    }

| void PLearn::NLLCostModule::bpropUpdate | ( | const Vec & | input, | const Vec & | target, | real | cost, | Vec & | input_gradient, | bool | accumulate = false | ) | [virtual] |

Adapt based on the output gradient: this method should only be called just after a corresponding fprop.

Reimplemented from PLearn::CostModule.

Definition at line 187 of file NLLCostModule.cc.

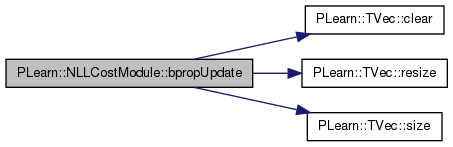

References PLearn::TVec< T >::clear(), PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::size().

    {
        PLASSERT( input.size() == input_size );
        PLASSERT( target.size() == target_size );

        if( accumulate )
        {
            PLASSERT_MSG( input_gradient.size() == input_size,
                          "Cannot resize input_gradient AND accumulate into it" );
        }
        else
        {
            input_gradient.resize( input_size );
            input_gradient.clear();
        }

        int the_target = (int) round( target[0] );
        // input_gradient[ i ] = 0 if i != t,
        // input_gradient[ t ] = -1/x[t]
        input_gradient[ the_target ] -= 1. / input[ the_target ];
    }
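One way to convince yourself that the gradient in the listing above is `-1/x[t]` at the target index and zero elsewhere is a finite-difference check. The sketch below is only an illustration under stated assumptions: `nll` and `central_difference` are hypothetical helpers, not PLearn functions, and the step size `eps` is an arbitrary choice.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of PLearn): cost = -log(x[t]).
double nll(const std::vector<double>& x, std::size_t t)
{
    assert(t < x.size());
    return -std::log(x[t]);
}

// Central-difference approximation of d nll / d x[i].
// x is taken by value so the caller's vector is left untouched.
double central_difference(std::vector<double> x, std::size_t t,
                          std::size_t i, double eps = 1e-6)
{
    assert(i < x.size());
    x[i] += eps;
    const double up = nll(x, t);
    x[i] -= 2.0 * eps;
    const double down = nll(x, t);
    return (up - down) / (2.0 * eps);
}
```

For `x = {0.2, 0.5, 0.3}` and `t = 1`, the approximation at `i = 1` should be close to `-1/0.5 = -2`, and the approximation at any other index should be zero because the cost does not depend on those entries.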

| virtual void PLearn::NLLCostModule::bpropUpdate | ( | const Vec & | input, | const Vec & | target, | real | cost | ) | [inline, virtual] |

Does nothing.

Reimplemented from PLearn::CostModule.

Definition at line 89 of file NLLCostModule.h.

{}

| void PLearn::NLLCostModule::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::CostModule.

Definition at line 74 of file NLLCostModule.cc.

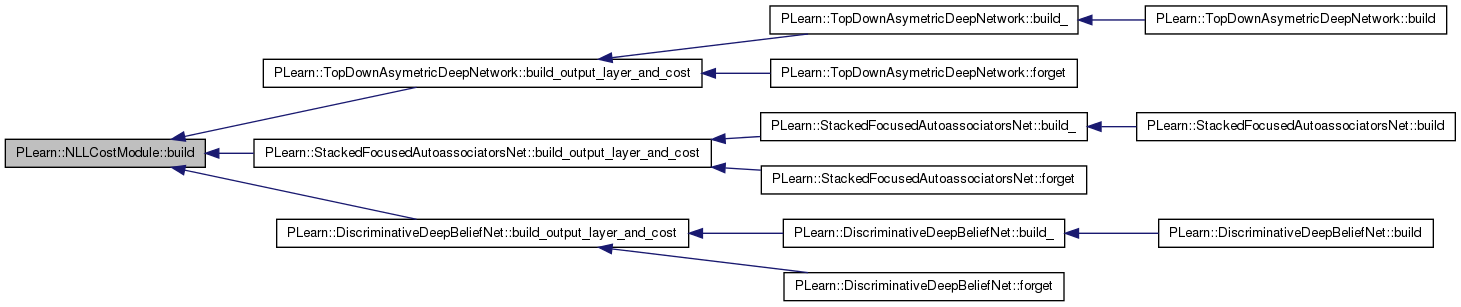

Referenced by PLearn::TopDownAsymetricDeepNetwork::build_output_layer_and_cost(), PLearn::StackedFocusedAutoassociatorsNet::build_output_layer_and_cost(), and PLearn::DiscriminativeDeepBeliefNet::build_output_layer_and_cost().

    {
        inherited::build();
        build_();
    }
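The build convention described above (call the parent's `build()`, then the class's own private `build_()`) can be sketched with two toy classes. `BaseModule` and `DerivedModule` are hypothetical stand-ins, not PLearn classes, and the `log` member exists only to make the call order observable.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical base class (not a PLearn class).
class BaseModule {
public:
    virtual ~BaseModule() {}
    virtual void build() { build_(); }
    std::vector<std::string> log;  // records the order of build_() calls
private:
    void build_() { log.push_back("BaseModule::build_"); }
};

// Hypothetical derived class following the same convention.
class DerivedModule : public BaseModule {
    typedef BaseModule inherited;
public:
    virtual void build()
    {
        inherited::build();  // parent is built first
        build_();            // then this class's own building step
    }
private:
    void build_() { log.push_back("DerivedModule::build_"); }
};
```

Because `build_()` is private and non-virtual in each class, every level of the hierarchy runs exactly its own building step, and `build()` can be called again after options change.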

| void PLearn::NLLCostModule::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::CostModule.

Definition at line 69 of file NLLCostModule.cc.

{

}

| string PLearn::NLLCostModule::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| TVec< string > PLearn::NLLCostModule::costNames | ( | ) | [virtual] |

Indicates the name of the computed costs.

Reimplemented from PLearn::CostModule.

Definition at line 323 of file NLLCostModule.cc.

| void PLearn::NLLCostModule::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::CostModule.

Definition at line 59 of file NLLCostModule.cc.

    {
        // declareOption(ol, "myoption", &NLLCostModule::myoption,
        //               OptionBase::buildoption,
        //               "Help text describing this option");

        // Now call the parent class' declareOptions
        inherited::declareOptions(ol);
    }

| static const PPath& PLearn::NLLCostModule::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::CostModule.

Definition at line 117 of file NLLCostModule.h.

| NLLCostModule * PLearn::NLLCostModule::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| void PLearn::NLLCostModule::fprop | ( | const Mat & | inputs, | const Mat & | targets, | Mat & | costs | ) | const [virtual] |

Batch version.

Reimplemented from PLearn::CostModule.

Definition at line 110 of file NLLCostModule.cc.

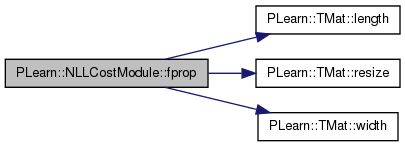

References PLearn::TMat< T >::length(), pl_log, PLASSERT, PLearn::TMat< T >::resize(), and PLearn::TMat< T >::width().

    {
        PLASSERT( inputs.width() == input_size );
        PLASSERT( targets.width() == target_size );

        int batch_size = inputs.length();
        PLASSERT( inputs.length() == batch_size );
        PLASSERT( targets.length() == batch_size );

        costs.resize(batch_size, output_size);

        for( int k=0; k<batch_size; k++ )
        {
            int target_k = (int) round( targets(k, 0) );
            costs(k, 0) = -pl_log( inputs(k, target_k) );
        }
    }
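The batch computation above stores each target as a real number and rounds it to get a class index. A minimal standalone sketch of that loop follows, assuming a hypothetical `Matrix` alias and function name `batch_nll` that are not part of PLearn, with `std::lround` used for the rounding step.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical row-major matrix type standing in for PLearn's Mat.
using Matrix = std::vector<std::vector<double>>;

// One cost per row: costs[k] = -log(inputs[k][round(targets[k])]).
std::vector<double> batch_nll(const Matrix& inputs,
                              const std::vector<double>& targets)
{
    const std::size_t batch_size = inputs.size();
    assert(targets.size() == batch_size);

    std::vector<double> costs(batch_size);
    for (std::size_t k = 0; k < batch_size; ++k) {
        // Targets are stored as reals; round to recover the class index.
        const long t = std::lround(targets[k]);
        assert(t >= 0 && static_cast<std::size_t>(t) < inputs[k].size());
        costs[k] = -std::log(inputs[k][static_cast<std::size_t>(t)]);
    }
    return costs;
}
```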

| void PLearn::NLLCostModule::fprop | ( | const Vec & | input, | const Vec & | target, | Vec & | cost | ) | const [virtual] |

Given the input and target, computes the cost.

Reimplemented from PLearn::CostModule.

Definition at line 90 of file NLLCostModule.cc.

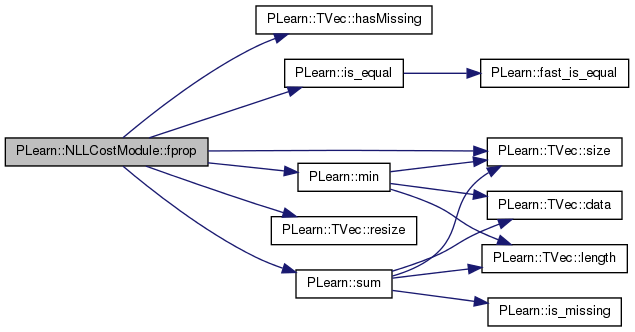

References PLearn::TVec< T >::hasMissing(), PLearn::is_equal(), PLearn::min(), MISSING_VALUE, pl_log, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), and PLearn::sum().

    {
        PLASSERT( input.size() == input_size );
        PLASSERT( target.size() == target_size );
        cost.resize( output_size );

        if( input.hasMissing() )
            cost[0] = MISSING_VALUE;
        else
        {
            PLASSERT_MSG( min(input) >= 0.,
                          "Elements of \"input\" should be positive" );
            PLASSERT_MSG( is_equal( sum(input), 1. ),
                          "Elements of \"input\" should sum to 1" );

            int the_target = (int) round( target[0] );
            cost[0] = -pl_log( input[ the_target ] );
        }
    }
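The two assertions in the listing above check that the input is a valid probability vector before taking the logarithm. The same precondition can be sketched outside PLearn as follows. The names `is_probability_vector` and `checked_nll` are hypothetical, and the tolerance of `1e-5` is an assumption chosen for illustration, not the tolerance PLearn's `is_equal` uses by default.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: true if all entries are non-negative and the
// entries sum to 1 within an absolute tolerance.
bool is_probability_vector(const std::vector<double>& p, double tol = 1e-5)
{
    if (p.empty())
        return false;
    double sum = 0.0;
    for (double v : p) {
        if (!(v >= 0.0))  // also rejects NaN
            return false;
        sum += v;
    }
    return std::fabs(sum - 1.0) <= tol;
}

// Hypothetical helper: NLL guarded by the precondition above.
double checked_nll(const std::vector<double>& p, std::size_t t)
{
    assert(is_probability_vector(p));
    assert(t < p.size());
    return -std::log(p[t]);
}
```

A small tolerance matters in practice because probabilities produced through exponentials rarely sum to exactly 1 in floating point.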

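| void PLearn::NLLCostModule::fprop | ( | const TVec< Mat * > & | ports_value | ) | [virtual] |

New version (port-based).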

Reimplemented from PLearn::CostModule.

Definition at line 129 of file NLLCostModule.cc.

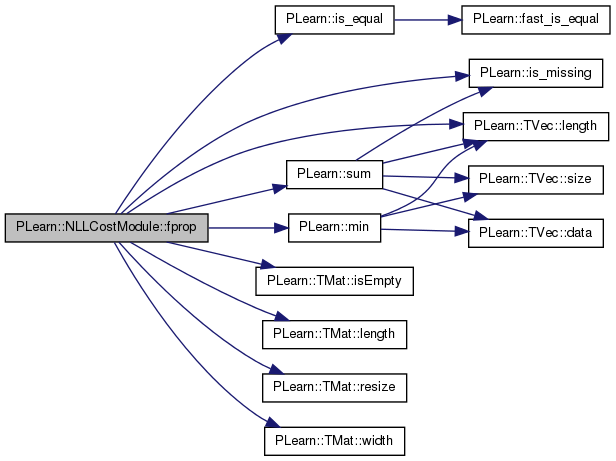

References i, PLearn::is_equal(), PLearn::is_missing(), PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::min(), MISSING_VALUE, pl_log, PLASSERT, PLASSERT_MSG, PLCHECK_MSG, PLERROR, PLearn::TMat< T >::resize(), PLearn::sum(), and PLearn::TMat< T >::width().

    {
        PLASSERT( ports_value.length() == nPorts() );

        Mat* prediction = ports_value[0];
        Mat* target = ports_value[1];
        Mat* cost = ports_value[2];

        // If we have prediction and target, and we want cost
        if( prediction && !prediction->isEmpty()
            && target && !target->isEmpty()
            && cost && cost->isEmpty() )
        {
            PLASSERT( prediction->width() == port_sizes(0, 1) );
            PLASSERT( target->width() == port_sizes(1, 1) );

            int batch_size = prediction->length();
            PLASSERT( target->length() == batch_size );

            cost->resize(batch_size, port_sizes(2, 1));

            for( int i=0; i<batch_size; i++ )
            {
                if( (*prediction)(i).hasMissing() || is_missing((*target)(i,0)) )
                    (*cost)(i,0) = MISSING_VALUE;
                else
                {
    #ifdef BOUNDCHECK
                    PLASSERT_MSG( min((*prediction)(i)) >= 0.,
                                  "Elements of \"prediction\" should be positive" );
                    // Ensure the distribution probabilities sum to 1. We relax a
                    // bit the default tolerance as probabilities using
                    // exponentials could suffer numerical imprecisions.
                    if (!is_equal( sum((*prediction)(i)), 1., 1., 1e-5, 1e-5 ))
                        PLERROR("In NLLCostModule::fprop - Elements of"
                                " \"prediction\" should sum to 1"
                                " (found a sum = %f at row %d)",
                                sum((*prediction)(i)), i);
    #endif
                    int target_i = (int) round( (*target)(i,0) );
                    PLASSERT( is_equal( (*target)(i, 0), target_i ) );
                    (*cost)(i,0) = -pl_log( (*prediction)(i, target_i) );
                }
            }
        }
        else if( !prediction && !target && !cost )
            return;
        else
            PLCHECK_MSG( false, "Unknown port configuration" );

        checkProp(ports_value);
    }
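The per-row logic in the listing above propagates missing values instead of failing: if any prediction entry or the target is missing, the cost for that row is marked missing too. The sketch below illustrates the idea with NaN as a stand-in for `MISSING_VALUE`, which is an assumption about the encoding made for this example. The name `nll_or_missing` is hypothetical and not part of PLearn.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical helper: returns NaN (standing in for a missing value) when
// the row or the target is missing, and -log(prediction[target]) otherwise.
double nll_or_missing(const std::vector<double>& prediction, double target)
{
    const double missing = std::numeric_limits<double>::quiet_NaN();
    if (std::isnan(target))
        return missing;
    for (double p : prediction)
        if (std::isnan(p))
            return missing;

    // The target is stored as a real but must hold an integer class index.
    const long t = std::lround(target);
    assert(std::fabs(target - static_cast<double>(t)) < 1e-9);
    assert(t >= 0 && static_cast<std::size_t>(t) < prediction.size());
    return -std::log(prediction[static_cast<std::size_t>(t)]);
}
```

Checking for missing entries before rounding the target avoids indexing with an undefined class index.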

| OptionList & PLearn::NLLCostModule::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| OptionMap & PLearn::NLLCostModule::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| RemoteMethodMap & PLearn::NLLCostModule::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file NLLCostModule.cc.

| void PLearn::NLLCostModule::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::CostModule.

Definition at line 81 of file NLLCostModule.cc.

    {
        inherited::makeDeepCopyFromShallowCopy(copies);
    }

| virtual void PLearn::NLLCostModule::setLearningRate | ( | real | dynamic_learning_rate | ) | [inline, virtual] |

Overridden to do nothing (in particular, no warning).

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 107 of file NLLCostModule.h.

{}

| StaticInitializer PLearn::NLLCostModule::_static_initializer_ | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 117 of file NLLCostModule.h.

1.7.4