
PLearn 0.1


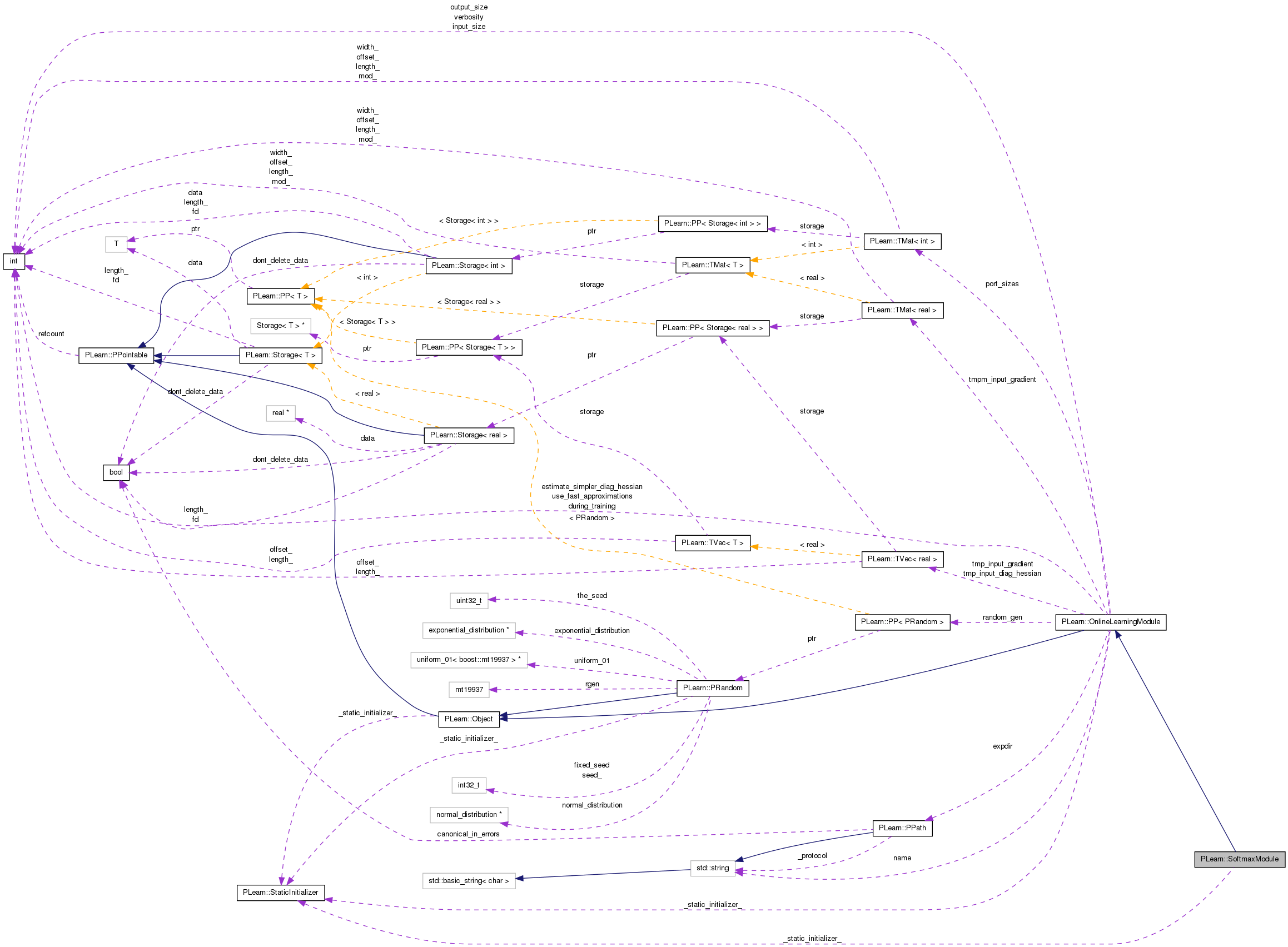

Computes the softmax function on a vector.

#include <SoftmaxModule.h>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | `SoftmaxModule ()` | Default constructor. |
| `virtual void` | `fprop (const Vec &input, Vec &output) const` | Given the input, computes the output (resizing it if necessary). |
| `virtual void` | `fprop (const Mat &inputs, Mat &outputs)` | Overridden: applies the softmax to each row of `inputs`. |
| `virtual void` | `bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false)` | This version also computes the input gradient. |
| `virtual void` | `bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false)` | SOON TO BE DEPRECATED: use `bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient)` instead. |
| `virtual void` | `bbpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, Vec &input_diag_hessian, const Vec &output_diag_hessian, bool accumulate=false)` | This version also computes the input gradient and the input diagonal Hessian. Not implemented yet: it raises an error. |
| `virtual void` | `forget ()` | Resets the parameters to the state they would be in BEFORE starting training. |
| `virtual void` | `setLearningRate (real dynamic_learning_rate)` | Overridden to do nothing (in particular, no warning message is issued). |
| `virtual string` | `classname () const` | |
| `virtual OptionList &` | `getOptionList () const` | |
| `virtual OptionMap &` | `getOptionMap () const` | |
| `virtual RemoteMethodMap &` | `getRemoteMethodMap () const` | |
| `virtual SoftmaxModule *` | `deepCopy (CopiesMap &copies) const` | |
| `virtual void` | `build ()` | Post-constructor. |
| `virtual void` | `makeDeepCopyFromShallowCopy (CopiesMap &copies)` | Transforms a shallow copy into a deep copy. |

Static Public Member Functions

| Return type | Member |
|---|---|
| `static string` | `_classname_ ()` |
| `static OptionList &` | `_getOptionList_ ()` |
| `static RemoteMethodMap &` | `_getRemoteMethodMap_ ()` |
| `static Object *` | `_new_instance_for_typemap_ ()` |
| `static bool` | `_isa_ (const Object *o)` |
| `static void` | `_static_initialize_ ()` |
| `static const PPath &` | `declaringFile ()` |

Static Public Attributes

| Type | Member |
|---|---|
| `static StaticInitializer` | `_static_initializer_` |

Static Protected Member Functions

| Return type | Member | Description |
|---|---|---|
| `static void` | `declareOptions (OptionList &ol)` | Declares the class options. |

Private Types | |

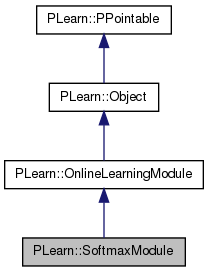

| typedef OnlineLearningModule | inherited |

Private Member Functions

| Return type | Member | Description |
|---|---|---|
| `void` | `build_ ()` | This does the actual building. |

Computes the softmax function on a vector.

Definition at line 50 of file SoftmaxModule.h.
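For reference, the function computed by this module can be written as follows. Here $x$ is the input vector of length $n$ (`input_size`) and $y$ is the output. Because `build_()` sets `output_size = input_size`, both vectors have the same length. The symbols are our notation, not the source's.

$$
y_i = \operatorname{softmax}(x)_i = \frac{e^{x_i}}{\sum_{k=1}^{n} e^{x_k}}, \qquad i = 1, \dots, n,
$$

so every $y_i > 0$ and $\sum_{i=1}^{n} y_i = 1$.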

| typedef OnlineLearningModule PLearn::SoftmaxModule::inherited | [private] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file SoftmaxModule.h.

| PLearn::SoftmaxModule::SoftmaxModule | ( | ) |

| string PLearn::SoftmaxModule::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 51 of file SoftmaxModule.cc.

| OptionList & PLearn::SoftmaxModule::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 51 of file SoftmaxModule.cc.

| RemoteMethodMap & PLearn::SoftmaxModule::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 51 of file SoftmaxModule.cc.

| bool PLearn::SoftmaxModule::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 51 of file SoftmaxModule.cc.

| Object * PLearn::SoftmaxModule::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftmaxModule.cc.

| void PLearn::SoftmaxModule::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 51 of file SoftmaxModule.cc.

| void PLearn::SoftmaxModule::bbpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| Vec & | input_diag_hessian, | ||

| const Vec & | output_diag_hessian, | ||

| bool | accumulate = false |


| ) | [virtual] |

This version also computes the input gradient and the input diagonal Hessian (`input_diag_hessian`). It is not implemented yet and raises an error.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 200 of file SoftmaxModule.cc.

References PLERROR.

    {
        PLERROR( "Not implemented yet, please come back later or complain to"
                 " lamblinp." );
    }

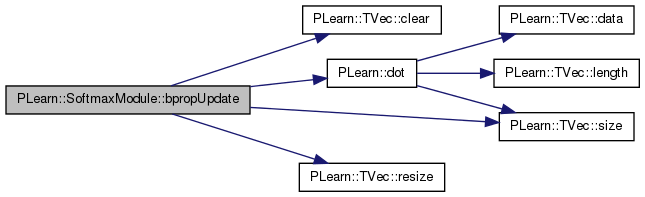

| void PLearn::SoftmaxModule::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| bool | accumulate = false |


| ) | [virtual] |

This version also computes the input gradient.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 122 of file SoftmaxModule.cc.

References PLearn::TVec< T >::clear(), PLearn::dot(), i, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::size().

    {
        PLASSERT( input.size() == input_size );
        PLASSERT( output.size() == output_size );
        PLASSERT( output_gradient.size() == output_size );

        if( accumulate )
        {
            PLASSERT_MSG( input_gradient.size() == input_size,
                          "Cannot resize input_gradient AND accumulate into it" );
        }
        else
        {
            input_gradient.resize( input_size );
            input_gradient.clear();
        }

        // input_gradient[i] = output_gradient[i] * output[i]
        //                   - (output_gradient . output ) output[i]
        real outg_dot_out = dot( output_gradient, output );
        for( int i=0 ; i<input_size ; i++ )
        {
            real in_grad_i = (output_gradient[i] - outg_dot_out) * output[i];
            input_gradient[i] += in_grad_i;
        }
    }
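The update in the loop above follows from the softmax Jacobian. Write $g$ for `output_gradient`, $y$ for `output` and $L$ for the loss (our notation). The derivation is:

$$
\frac{\partial y_j}{\partial x_i} = y_j\,(\delta_{ij} - y_i)
\quad\Longrightarrow\quad
\frac{\partial L}{\partial x_i} = \sum_{j} g_j\, y_j\,(\delta_{ij} - y_i) = y_i g_i - y_i \sum_{j} g_j y_j = (g_i - g \cdot y)\, y_i,
$$

This matches `(output_gradient[i] - outg_dot_out) * output[i]`. Only the outputs are needed, which is why `input` appears only in the size assertion.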

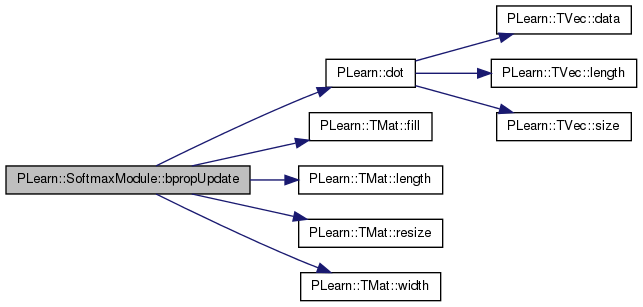

| void PLearn::SoftmaxModule::bpropUpdate | ( | const Mat & | inputs, |

| const Mat & | outputs, | ||

| Mat & | input_gradients, | ||

| const Mat & | output_gradients, | ||

| bool | accumulate = false |


| ) | [virtual] |

SOON TO BE DEPRECATED: use bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient) instead.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 152 of file SoftmaxModule.cc.

References PLearn::dot(), PLearn::TMat< T >::fill(), i, j, PLearn::TMat< T >::length(), PLASSERT, PLASSERT_MSG, PLearn::TMat< T >::resize(), and PLearn::TMat< T >::width().

    {
        PLASSERT( inputs.width() == input_size );
        PLASSERT( outputs.width() == output_size );
        PLASSERT( output_gradients.width() == output_size );

        if( accumulate )
        {
            PLASSERT_MSG( input_gradients.width() == input_size &&
                          input_gradients.length() == inputs.length(),
                          "Cannot resize input_gradients and accumulate into it" );
        }
        else
        {
            input_gradients.resize(inputs.length(), input_size);
            input_gradients.fill(0);
        }

        for (int j = 0; j < inputs.length(); j++) {
            // input_gradient[i] = output_gradient[i] * output[i]
            //                   - (output_gradient . output ) output[i]
            real outg_dot_out = dot(output_gradients(j), outputs(j));
            for( int i=0 ; i<input_size ; i++ )
                input_gradients(j, i) +=
                    (output_gradients(j, i) - outg_dot_out) * outputs(j, i);
        }
    }
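Below is a standalone sketch of the batched backward pass. It uses a row-major `std::vector<double>` in place of PLearn's `Mat`. The `Batch` struct and the `softmax_bprop_batch` function are hypothetical illustrations, not PLearn API. The sketch follows the `accumulate` contract of the code above. When accumulating, the gradient buffer must already have the right shape. Otherwise it is resized and zeroed first.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical row-major matrix standing in for PLearn's Mat.
struct Batch {
    std::size_t rows = 0;
    std::size_t cols = 0;
    std::vector<double> data;  // rows * cols entries, row-major

    double& at(std::size_t r, std::size_t c) { return data[r * cols + c]; }
    double at(std::size_t r, std::size_t c) const { return data[r * cols + c]; }
};

// Hypothetical helper: softmax backward pass, one row per example.
// For each row j: input_gradients(j, i) += (g(j, i) - g(j) . y(j)) * y(j, i),
// where y = outputs and g = output_gradients. The inputs are not needed.
void softmax_bprop_batch(const Batch& outputs, const Batch& output_gradients,
                         Batch& input_gradients, bool accumulate = false) {
    assert(outputs.rows == output_gradients.rows);
    assert(outputs.cols == output_gradients.cols);
    if (accumulate) {
        // Same contract as the PLASSERT_MSG above: no resizing while accumulating.
        assert(input_gradients.rows == outputs.rows &&
               input_gradients.cols == outputs.cols);
    } else {
        // Resize and zero, like resize() followed by fill(0).
        input_gradients.rows = outputs.rows;
        input_gradients.cols = outputs.cols;
        input_gradients.data.assign(outputs.rows * outputs.cols, 0.0);
    }
    for (std::size_t j = 0; j < outputs.rows; ++j) {
        double outg_dot_out = 0.0;
        for (std::size_t i = 0; i < outputs.cols; ++i)
            outg_dot_out += output_gradients.at(j, i) * outputs.at(j, i);
        for (std::size_t i = 0; i < outputs.cols; ++i)
            input_gradients.at(j, i) +=
                (output_gradients.at(j, i) - outg_dot_out) * outputs.at(j, i);
    }
}
```

There is a cheap sanity check for this kind of code. When each output row sums to one, the corresponding row of `input_gradients` sums to zero, since $\sum_i y_i (g_i - g \cdot y) = (g \cdot y)(1 - \sum_i y_i)$.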

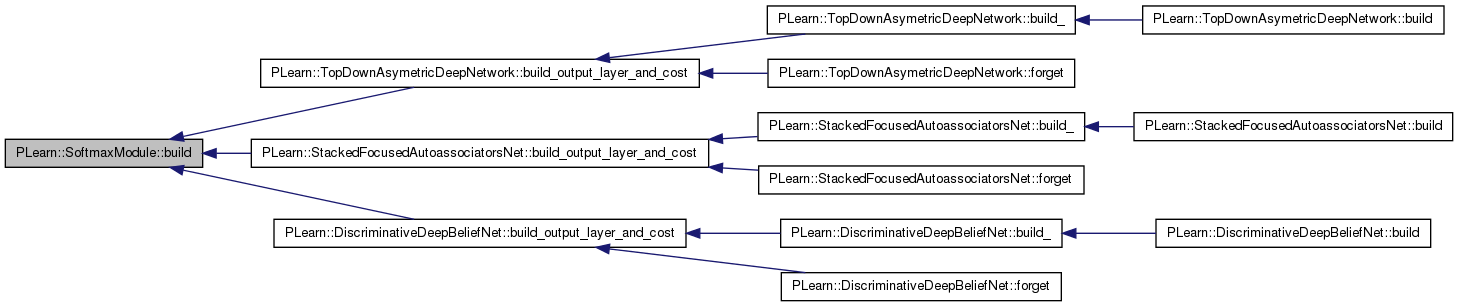

| void PLearn::SoftmaxModule::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should remain callable later, after some option fields have been modified to change the "architecture" of the object.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 85 of file SoftmaxModule.cc.

Referenced by PLearn::TopDownAsymetricDeepNetwork::build_output_layer_and_cost(), PLearn::StackedFocusedAutoassociatorsNet::build_output_layer_and_cost(), and PLearn::DiscriminativeDeepBeliefNet::build_output_layer_and_cost().

    {
        inherited::build();
        build_();
    }

| void PLearn::SoftmaxModule::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 77 of file SoftmaxModule.cc.

    {
        output_size = input_size;
    }
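The `build()` / `build_()` split described above can be shown with a minimal, self-contained sketch. `ModuleBase` and `SoftmaxLike` are hypothetical stand-ins, not PLearn classes. They show how `build()` can be called again after an option such as `input_size` changes, with each class refreshing only its own derived state.

```cpp
// Hypothetical base class playing the role of OnlineLearningModule.
class ModuleBase {
public:
    virtual ~ModuleBase() = default;

    int input_size = -1;
    int output_size = -1;

    // Public, virtual post-constructor: each class calls its parent's build(),
    // then its own private build_().
    virtual void build() { build_(); }

private:
    // Non-virtual: builds only what this class itself owns.
    void build_() { /* base-class consistency checks would go here */ }
};

// Hypothetical derived class playing the role of SoftmaxModule.
class SoftmaxLike : public ModuleBase {
    typedef ModuleBase inherited;

public:
    void build() override {
        inherited::build();
        build_();
    }

private:
    // Like SoftmaxModule::build_(): output_size is derived, never set directly.
    void build_() { output_size = input_size; }
};
```

Setting `input_size = 4` and calling `build()` gives `output_size == 4`. Changing `input_size` to 10 and calling `build()` again gives 10. This fits `SoftmaxModule` redeclaring `output_size` as a `nosave` option that is "Set at build time."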

| string PLearn::SoftmaxModule::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftmaxModule.cc.

| void PLearn::SoftmaxModule::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 62 of file SoftmaxModule.cc.

References PLearn::OptionBase::nosave, PLearn::OnlineLearningModule::output_size, and PLearn::redeclareOption().

    {
        // Now call the parent class' declareOptions
        inherited::declareOptions(ol);

        // Hide unused options.
        redeclareOption(ol, "output_size", &SoftmaxModule::output_size,
                        OptionBase::nosave,
                        "Set at build time.");
    }

| static const PPath& PLearn::SoftmaxModule::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 104 of file SoftmaxModule.h.


| SoftmaxModule * PLearn::SoftmaxModule::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 51 of file SoftmaxModule.cc.

| void PLearn::SoftmaxModule::forget | ( | ) | [virtual] |

Resets the parameters to the state they would be in BEFORE starting training.

Note that this method is necessarily called from build().

Implements PLearn::OnlineLearningModule.

Definition at line 186 of file SoftmaxModule.cc.

    {
    }

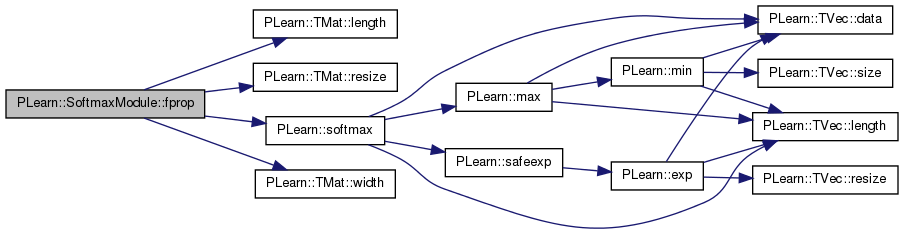

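| void PLearn::SoftmaxModule::fprop | ( | const Mat & | inputs, |

| Mat & | outputs |

| ) | [virtual] |

Overridden: applies the softmax to each row of `inputs`.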

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 110 of file SoftmaxModule.cc.

References i, PLearn::TMat< T >::length(), n, PLASSERT, PLearn::TMat< T >::resize(), PLearn::softmax(), and PLearn::TMat< T >::width().

    {
        PLASSERT( inputs.width() == input_size );
        int n = inputs.length();
        outputs.resize(n, output_size );
        for (int i = 0; i < n; i++)
            softmax(inputs(i), outputs(i));
    }

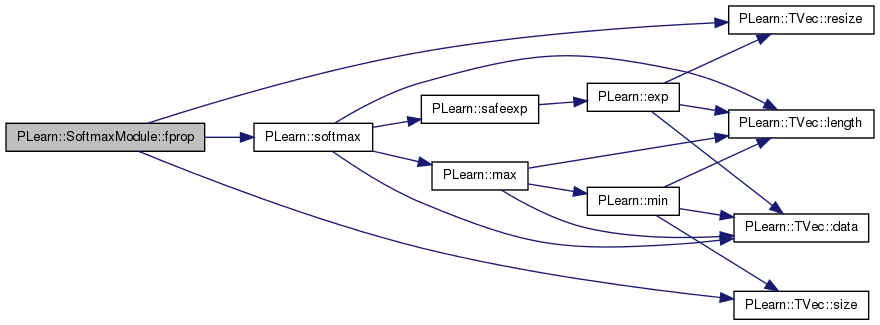

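| void PLearn::SoftmaxModule::fprop | ( | const Vec & | input, |

| Vec & | output |

| ) | const [virtual] |

Given the input, computes the output (resizing it if necessary).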

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 102 of file SoftmaxModule.cc.

References PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), and PLearn::softmax().

    {
        PLASSERT( input.size() == input_size );
        output.resize( output_size );
        softmax( input, output );
    }
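As a standalone illustration of what `fprop` computes, here is a sketch that uses `std::vector<double>` instead of PLearn's `Vec`. `softmax_vec` is a hypothetical name. Subtracting the maximum is a common stabilization; we do not claim `PLearn::softmax()` does the same internally.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Sketch of a vector softmax with the same shape contract as fprop(const Vec&, Vec&):
// the output is resized to the input length (output_size == input_size here).
void softmax_vec(const std::vector<double>& input, std::vector<double>& output) {
    output.resize(input.size());
    if (input.empty()) return;
    // softmax(x) == softmax(x - c) for any constant c; using c = max(x) keeps
    // every exponent <= 0, so std::exp cannot overflow for finite inputs.
    const double m = *std::max_element(input.begin(), input.end());
    double sum = 0.0;
    for (std::size_t i = 0; i < input.size(); ++i) {
        output[i] = std::exp(input[i] - m);
        sum += output[i];
    }
    // For finite inputs, sum >= 1 because the max entry contributes exp(0) = 1.
    for (double& y : output) y /= sum;
}
```

Calling `softmax_vec({1001.0, 1002.0, 1003.0}, y)` gives the same result as calling it on `{1.0, 2.0, 3.0}`. A naive `exp(x) / sum(exp(x))` would overflow to infinity on inputs this large.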

| OptionList & PLearn::SoftmaxModule::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftmaxModule.cc.

| OptionMap & PLearn::SoftmaxModule::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftmaxModule.cc.

| RemoteMethodMap & PLearn::SoftmaxModule::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftmaxModule.cc.

| void PLearn::SoftmaxModule::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 94 of file SoftmaxModule.cc.

    {
        inherited::makeDeepCopyFromShallowCopy(copies);
    }

| void PLearn::SoftmaxModule::setLearningRate | ( | real | dynamic_learning_rate | ) | [virtual] |

Overridden to do nothing (in particular, no warning message is issued).

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 193 of file SoftmaxModule.cc.

    {
    }

| StaticInitializer PLearn::SoftmaxModule::_static_initializer_ | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 104 of file SoftmaxModule.h.

Generated by Doxygen 1.7.4.