PLearn 0.1

#include <StackedLearner.h>

Public Member Functions | |

| StackedLearner () | |

| Default constructor. | |

| void | setTrainStatsCollector (PP< VecStatsCollector > statscol) |

| Forwarded to combiner. | |

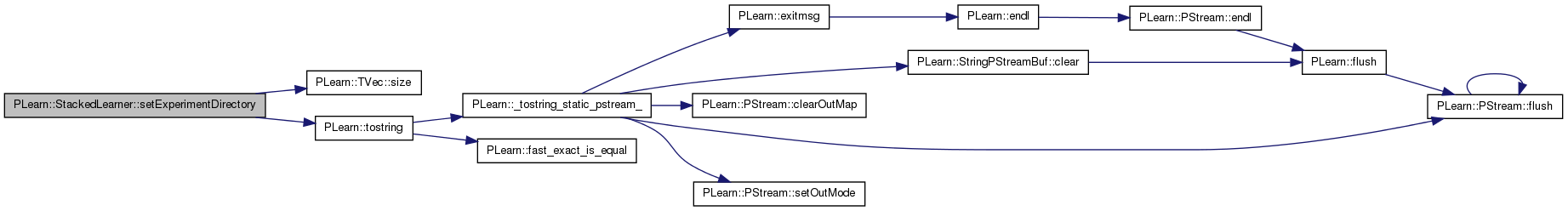

| virtual void | setExperimentDirectory (const PPath &the_expdir) |

| Forwarded to inner learners. | |

| virtual void | build () |

| simply calls inherited::build() then build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual StackedLearner * | deepCopy (CopiesMap &copies) const |

| virtual int | outputsize () const |

| returns the size of this learner's output, (which typically may depend on its inputsize(), targetsize() and set options) | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) And sets 'stage' back to 0 (this is the stage of a fresh learner!) | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual bool | computeConfidenceFromOutput (const Vec &input, const Vec &output, real probability, TVec< pair< real, real > > &intervals) const |

| Forwarded to combiner after passing input vector through baselearners. | |

| virtual TVec< string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutpus (and thus the test method) | |

| virtual TVec< string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

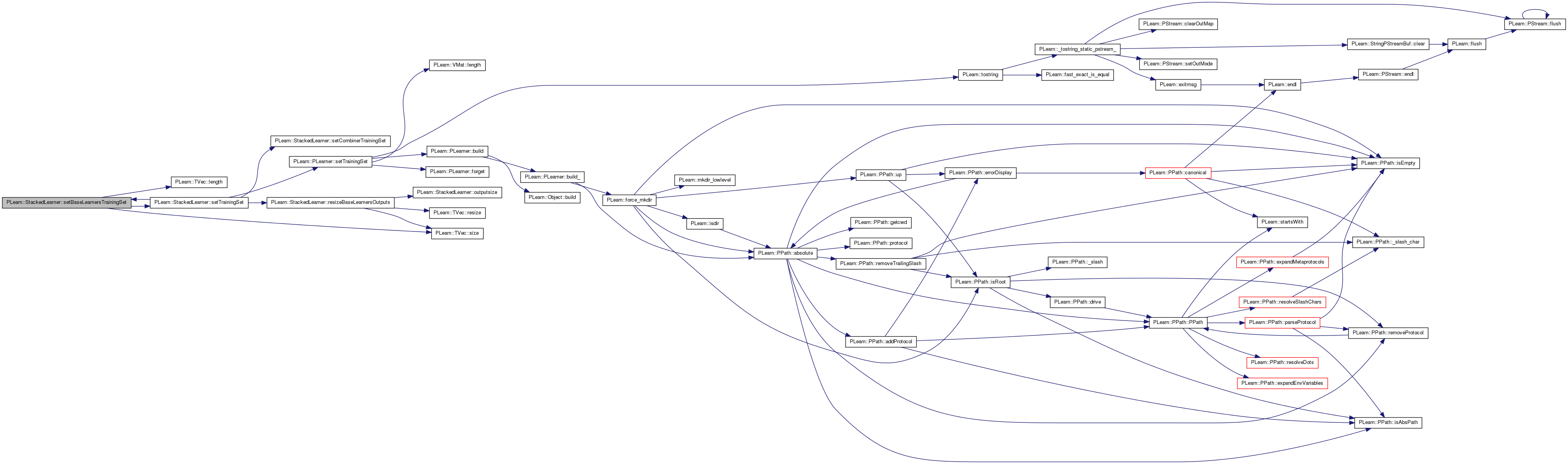

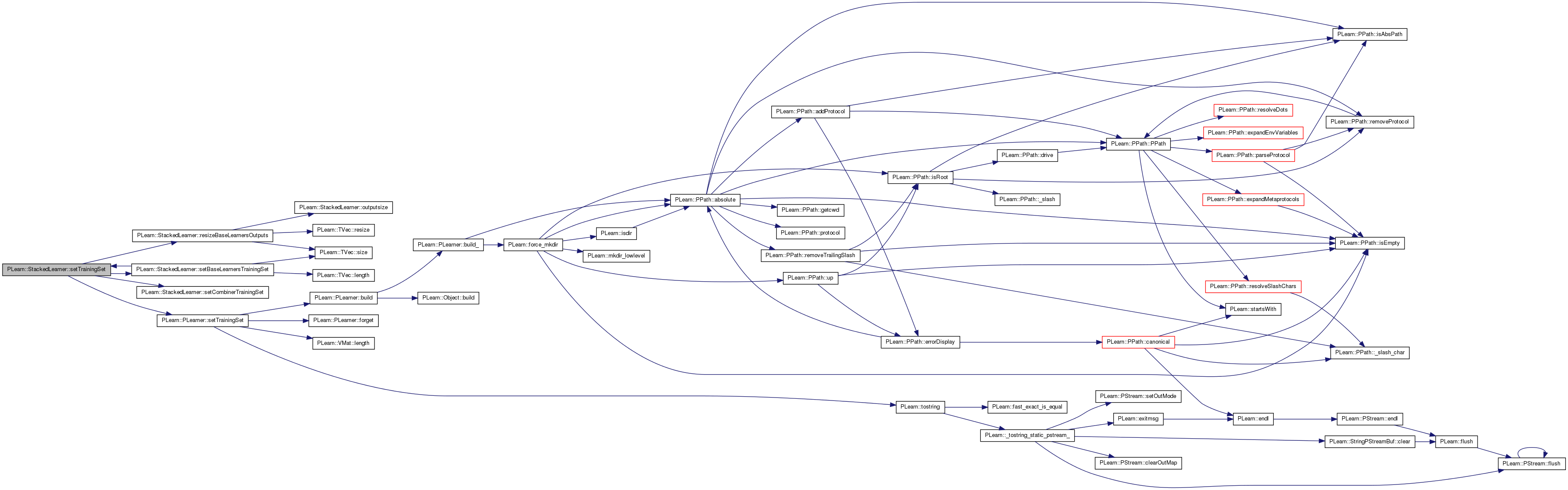

| virtual void | setTrainingSet (VMat training_set, bool call_forget=true) |

| Declares the train_set Then calls build() and forget() if necessary. | |

Static Public Member Functions

| Type | Member |
|---|---|
| `static string` | `_classname_ ()` |
| `static OptionList &` | `_getOptionList_ ()` |
| `static RemoteMethodMap &` | `_getRemoteMethodMap_ ()` |
| `static Object *` | `_new_instance_for_typemap_ ()` |
| `static bool` | `_isa_ (const Object *o)` |
| `static void` | `_static_initialize_ ()` |
| `static const PPath &` | `declaringFile ()` |

Public Attributes

| Type | Member | Description |
|---|---|---|
| `TVec< PP< PLearner > >` | `base_learners` | A set of 1st level base learners that are independently trained (here or elsewhere) and whose outputs will serve as inputs to the combiner. |
| `PP< PLearner >` | `combiner` | A learner that is trained (possibly on a data set different from the one used to train the base_learners) using the outputs of the base_learners as inputs. |
| `string` | `default_operation` | If no combiner is provided, simple operation to be performed on the outputs of the base_learners. |
| `PP< Splitter >` | `splitter` | A Splitter used to select which data subset(s) goes to training the base_learners and which data subset(s) goes to training the combiner. |
| `PP< Splitter >` | `base_train_splitter` | This can be used to split the training set into different training sets for each base learner. |
| `bool` | `train_base_learners` | Whether to train the base learners in the method train (otherwise they should be initialized at construction / setOption time). |
| `bool` | `normalize_base_learners_output` | If set to 1, the output of the base learners on the combiner training set will be normalized (zero mean, unit variance). |
| `bool` | `precompute_base_learners_output` | If set to 1, the output of the base learners on the combiner training set will be precomputed in memory before training the combiner. |
| `bool` | `put_raw_input` | Optionally put the raw input as additional input of the combiner. |
| `bool` | `share_learner` | If set to 1, the input is divided into nsep equal parts, and a common learner is trained as if each part constitutes a training example. |
| `int` | `nsep` | Number of input separations. |
| `bool` | `put_costs` | Optionally put the costs of all the learners as additional inputs of the combiner (NB: this option isn't actually implemented yet). |

Static Public Attributes

| Type | Member |
|---|---|
| `static StaticInitializer` | `_static_initializer_` |

Protected Member Functions | |

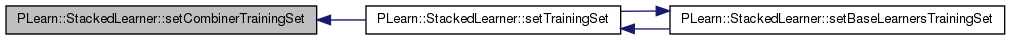

| void | setBaseLearnersTrainingSet (VMat base_trainset, bool call_forget) |

| Utility to set training set of base learners. | |

| void | setCombinerTrainingSet (VMat comb_trainset, bool call_forget) |

| Utility to set training set of combiner. | |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| `static void` | `declareOptions (OptionList &ol)` | Declares this class' options. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| `TVec< Vec >` | `base_learners_outputs` | Temporary buffers for the output of base learners. |
| `Vec` | `all_base_learners_outputs` | Buffer for concatenated output of base learners. |

Private Types

| Type | Member |
|---|---|
| `typedef PLearner` | `inherited` |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| `void` | `build_ ()` | This does the actual building. |
| `void` | `resizeBaseLearnersOutputs ()` | Resizes `base_learners_outputs` to fit the current base learners. |

Definition at line 52 of file StackedLearner.h.

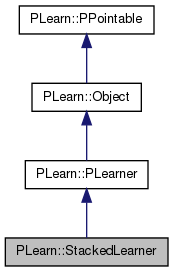

| typedef PLearner PLearn::StackedLearner::inherited | [private] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file StackedLearner.h.

| PLearn::StackedLearner::StackedLearner | ( | ) |

Default constructor.

Definition at line 53 of file StackedLearner.cc.

    : default_operation("mean"), base_train_splitter(0), train_base_learners(true),
      normalize_base_learners_output(false), precompute_base_learners_output(false),
      put_raw_input(false), share_learner(false), nsep(1)
    { }

| string PLearn::StackedLearner::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 84 of file StackedLearner.cc.

| OptionList & PLearn::StackedLearner::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 84 of file StackedLearner.cc.

| RemoteMethodMap & PLearn::StackedLearner::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 84 of file StackedLearner.cc.

| bool PLearn::StackedLearner::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 84 of file StackedLearner.cc.

| Object * PLearn::StackedLearner::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 84 of file StackedLearner.cc.

| void PLearn::StackedLearner::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 84 of file StackedLearner.cc.

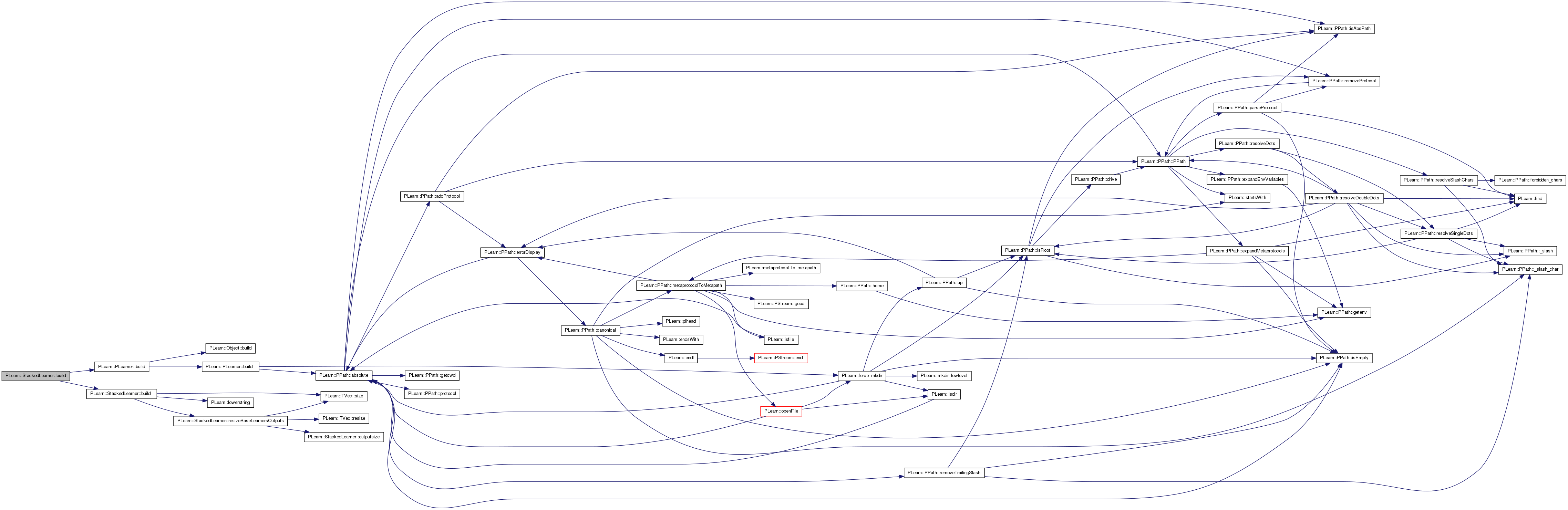

| void PLearn::StackedLearner::build | ( | ) | [virtual] |

Simply calls inherited::build(), then build_().

Reimplemented from PLearn::PLearner.

Definition at line 197 of file StackedLearner.cc.

References PLearn::PLearner::build(), and build_().

    {
        inherited::build();
        build_();
    }

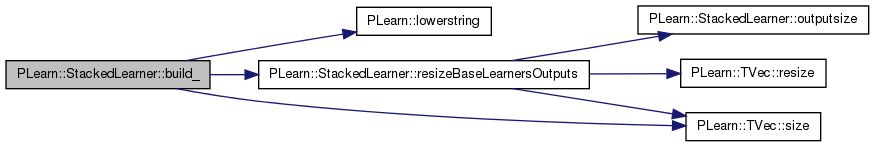

| void PLearn::StackedLearner::build_ | ( | ) | [private] |

This does the actual building.

NOTE BY NICOLAS: according to Pascal, it's not kosher to call build inside build for no reason, since the inner objects HAVE ALREADY been built. You should uncomment the following code only FOR GOOD REASON.

    for (int i=0;i<base_learners.length();i++) {
        if (!base_learners[i])
            PLERROR("StackedLearner::build: base learners have not been created!");
        base_learners[i]->build();
        if (i>0 && base_learners[i]->outputsize()!=base_learners[i-1]->outputsize())
            PLERROR("StackedLearner: expecting base learners to have the same number of outputs!");
    }
    if (combiner)
        combiner->build();
    if (splitter)
        splitter->build();

Reimplemented from PLearn::PLearner.

Definition at line 160 of file StackedLearner.cc.

References base_learners, base_train_splitter, default_operation, PLearn::lowerstring(), PLERROR, resizeBaseLearnersOutputs(), share_learner, PLearn::TVec< T >::size(), and splitter.

Referenced by build().

    {
        if (base_learners.size() == 0)
            PLERROR("StackedLearner::build_: no base learners specified! Use the "
                    "'base_learners' option");
        if (splitter && splitter->nSetsPerSplit()!=2)
            PLERROR("StackedLearner: the Splitter should produce only two sets per split, got %d",
                    splitter->nSetsPerSplit());
        if(share_learner && base_train_splitter)
            PLERROR("StackedLearner::build_: options 'base_train_splitter' and 'share_learner'\n"
                    "cannot both be true");
        resizeBaseLearnersOutputs();
        default_operation = lowerstring( default_operation );
    }
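The consistency checks performed by build_() can be mirrored in a standalone sketch. The struct `StackedOptions` and the function `validateStackedOptions` are hypothetical stand-ins (plain counts and flags instead of PLearn objects); the sketch returns the first error message instead of calling PLERROR, and lowercases default_operation the way build_() does with lowerstring().

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>

// Hypothetical, simplified view of the options that build_() inspects.
struct StackedOptions {
    int n_base_learners = 0;            // base_learners.size()
    bool has_splitter = false;          // splitter != 0
    int splitter_sets_per_split = 2;    // splitter->nSetsPerSplit()
    bool has_base_train_splitter = false;
    bool share_learner = false;
    std::string default_operation = "mean";
};

// Returns an empty string if the options are consistent, otherwise the first
// problem found (the real code raises PLERROR instead).
std::string validateStackedOptions(StackedOptions& opt) {
    if (opt.n_base_learners == 0)
        return "no base learners specified";
    if (opt.has_splitter && opt.splitter_sets_per_split != 2)
        return "the Splitter should produce exactly two sets per split";
    if (opt.share_learner && opt.has_base_train_splitter)
        return "'base_train_splitter' and 'share_learner' cannot both be true";
    // Normalize the operation name so that "MEAN" and "mean" are equivalent.
    std::transform(opt.default_operation.begin(), opt.default_operation.end(),
                   opt.default_operation.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return "";
}
```

Because default_operation is lowercased at build time, computeOutput() can compare it against lowercase literals only.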

| string PLearn::StackedLearner::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 84 of file StackedLearner.cc.

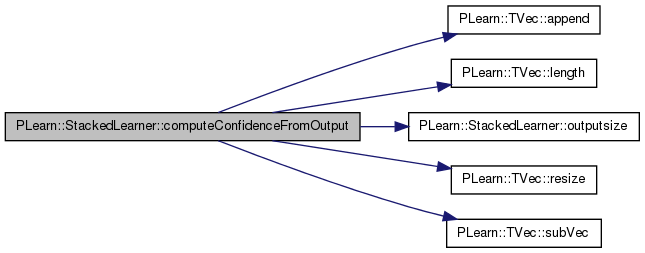

| bool PLearn::StackedLearner::computeConfidenceFromOutput | ( | const Vec & input, const Vec & output, real probability, TVec< pair< real, real > > & intervals | ) | const [virtual] |

Forwarded to combiner after passing input vector through baselearners.

Reimplemented from PLearn::PLearner.

Definition at line 417 of file StackedLearner.cc.

References all_base_learners_outputs, PLearn::TVec< T >::append(), base_learners, base_learners_outputs, combiner, i, PLearn::TVec< T >::length(), nsep, outputsize(), PLERROR, put_raw_input, PLearn::TVec< T >::resize(), share_learner, and PLearn::TVec< T >::subVec().

    {
        if (! combiner)
            PLERROR("StackedLearner::computeConfidenceFromOutput: a 'combiner' must be specified "
                    "in order to compute confidence intervals.");
        all_base_learners_outputs.resize(0);
        if(share_learner)
        {
            for (int i=0;i<nsep;i++)
            {
                if (!base_learners[0])
                    PLERROR("StackedLearner::computeOutput: base learners have not been created!");
                // Resize buffer i, the one written just below (as computeOutput() does)
                base_learners_outputs[i].resize(base_learners[0]->outputsize());
                base_learners[0]->computeOutput(input.subVec(i*input.length()/nsep,
                                                             input.length()/nsep),
                                                base_learners_outputs[i]);
                all_base_learners_outputs.append(base_learners_outputs[i]);
            }
        }
        else
        {
            for (int i=0;i<base_learners.length();i++)
            {
                if (!base_learners[i])
                    PLERROR("StackedLearner::computeOutput: base learners have not been created!");
                base_learners_outputs[i].resize(base_learners[i]->outputsize());
                base_learners[i]->computeOutput(input, base_learners_outputs[i]);
                all_base_learners_outputs.append(base_learners_outputs[i]);
            }
        }
        if (put_raw_input)
            all_base_learners_outputs.append(input);
        return combiner->computeConfidenceFromOutput(all_base_learners_outputs,
                                                     output, probability, intervals);
    }
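Both computeOutput() and computeConfidenceFromOutput() build the combiner's input the same way. The outputs of the base learners are concatenated and, when put_raw_input is set, the raw input is appended at the end. With share_learner, the input is instead cut into nsep equal slices and the single shared learner (base_learners[0]) is applied to each slice. The sketch below models a learner as a `std::function` over `std::vector<double>`; these types and the name `buildCombinerInput` are illustrative assumptions, not PLearn's Vec/PLearner API.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
using Learner = std::function<Vec(const Vec&)>;  // stand-in for PLearner::computeOutput

// Builds what StackedLearner stores in all_base_learners_outputs.
Vec buildCombinerInput(const std::vector<Learner>& base_learners, const Vec& input,
                       bool share_learner, int nsep, bool put_raw_input) {
    Vec combined;
    if (share_learner) {
        assert(!base_learners.empty() && nsep > 0 && input.size() % nsep == 0);
        const std::size_t part = input.size() / nsep;
        for (int i = 0; i < nsep; ++i) {
            // Slice i covers [i*part, (i+1)*part), like input.subVec(i*len/nsep, len/nsep).
            Vec slice(input.begin() + i * part, input.begin() + (i + 1) * part);
            Vec out = base_learners[0](slice);
            combined.insert(combined.end(), out.begin(), out.end());
        }
    } else {
        for (const Learner& learner : base_learners) {
            Vec out = learner(input);
            combined.insert(combined.end(), out.begin(), out.end());
        }
    }
    if (put_raw_input)  // the raw input goes AT THE END of the combiner input
        combined.insert(combined.end(), input.begin(), input.end());
    return combined;
}
```

The combiner therefore sees `nsep * outputsize` values from the shared learner, or the sum of all base learners' output sizes otherwise, plus `inputsize` more when put_raw_input is set.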

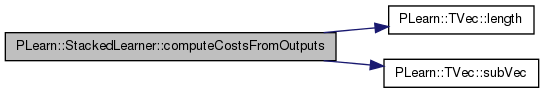

| void PLearn::StackedLearner::computeCostsFromOutputs | ( | const Vec & input, const Vec & output, const Vec & target, Vec & costs | ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 401 of file StackedLearner.cc.

References all_base_learners_outputs, base_learners, combiner, PLearn::TVec< T >::length(), nsep, share_learner, and PLearn::TVec< T >::subVec().

    {
        // With a combiner, reuse the combiner input cached in
        // all_base_learners_outputs by the last computeOutput() call
        if (combiner)
            combiner->computeCostsFromOutputs(all_base_learners_outputs,
                                              output,target,costs);
        else // cheat
        {
            if(share_learner)
                base_learners[0]->computeCostsFromOutputs(input.subVec(0,input.length() / nsep),
                                                          output, target, costs);
            else
                base_learners[0]->computeCostsFromOutputs(input,output,target,costs);
        }
    }
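When a combiner is set, computeCostsFromOutputs() ignores its `input` argument and hands the mutable buffer all_base_learners_outputs to the combiner. If the combiner's own cost computation reads its input, the costs are therefore only meaningful right after computeOutput() was called on the same example (the comment in computeOutput() about computeOutputAndCosts suggests that this is the intended calling order). The toy class `CachedStacker` below is hypothetical; it only reproduces that caching pattern so the dependency is visible.

```cpp
#include <cassert>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical toy stacker: the "combiner input" is cached in a mutable buffer
// by computeOutput() and re-read by computeCostsFromOutputs().
class CachedStacker {
public:
    void computeOutput(const Vec& input, Vec& output) const {
        buffer_.clear();
        for (double v : input) buffer_.push_back(2.0 * v);  // stand-in for base learner outputs
        double s = 0.0;
        for (double v : buffer_) s += v;
        output.assign(1, s);  // "combiner": sum of its inputs
    }

    // Like StackedLearner with a combiner: 'input' is ignored, the cached buffer is used.
    void computeCostsFromOutputs(const Vec& /*input*/, const Vec& /*output*/,
                                 const Vec& target, Vec& costs) const {
        double s = 0.0;
        for (double v : buffer_) s += v;  // combiner re-reads its cached input
        const double err = s - target[0];
        costs.assign(1, err * err);
    }

private:
    mutable Vec buffer_;  // plays the role of all_base_learners_outputs
};
```

Calling computeCostsFromOutputs() for one example after computeOutput() was run on another one silently evaluates the wrong example.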

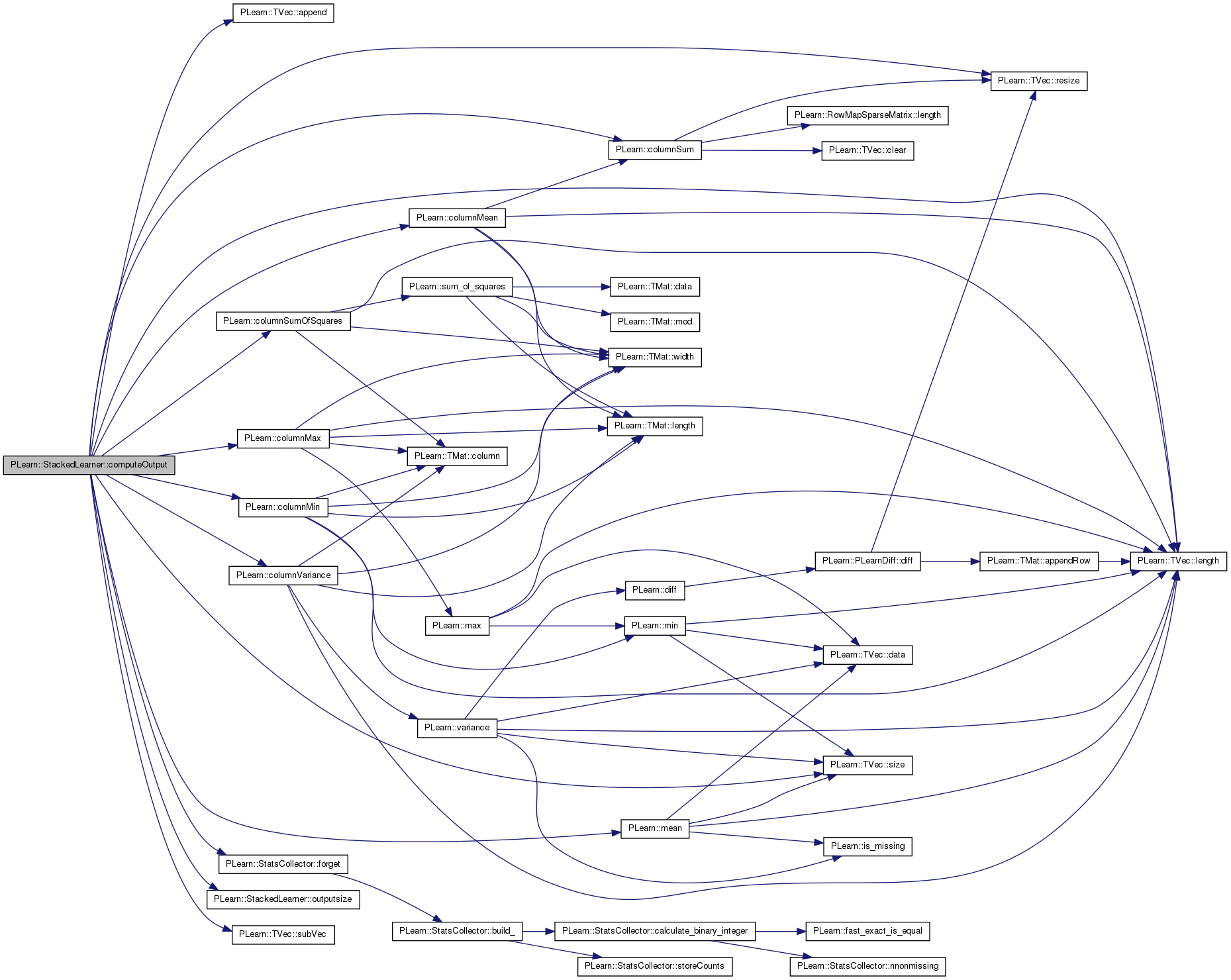

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 322 of file StackedLearner.cc.

References all_base_learners_outputs, PLearn::TVec< T >::append(), base_learners, base_learners_outputs, PLearn::columnMax(), PLearn::columnMean(), PLearn::columnMin(), PLearn::columnSum(), PLearn::columnSumOfSquares(), PLearn::columnVariance(), combiner, default_operation, PLearn::StatsCollector::forget(), i, j, PLearn::TVec< T >::length(), PLearn::mean(), n, nsep, outputsize(), PLASSERT, PLERROR, put_raw_input, PLearn::TVec< T >::resize(), share_learner, PLearn::TVec< T >::size(), and PLearn::TVec< T >::subVec().

    {
        all_base_learners_outputs.resize(0);
        if(share_learner) {
            for (int i=0;i<nsep;i++) {
                if (!base_learners[0])
                    PLERROR("StackedLearner::computeOutput: base learners have not been created!");
                base_learners_outputs[i].resize(base_learners[0]->outputsize());
                base_learners[0]->computeOutput(input.subVec(i*input.length() / nsep,
                                                             input.length() / nsep),
                                                base_learners_outputs[i]);
                // append() will be costly only the first time computeOutputAndCosts
                // is called; afterwards storage will NOT be reallocated
                all_base_learners_outputs.append(base_learners_outputs[i]);
            }
        }
        else {
            for (int i=0;i<base_learners.length();i++) {
                if (!base_learners[i])
                    PLERROR("StackedLearner::computeOutput: base learners have not been created!");
                base_learners_outputs[i].resize(base_learners[i]->outputsize());
                base_learners[i]->computeOutput(input, base_learners_outputs[i]);
                // append() will be costly only the first time computeOutputAndCosts
                // is called; afterwards storage will NOT be reallocated
                all_base_learners_outputs.append(base_learners_outputs[i]);
            }
        }
        if (put_raw_input)
            all_base_learners_outputs.append(input);
        if (combiner)
            combiner->computeOutput(all_base_learners_outputs, output);
        else // just performs default_operation on the outputs
        {
            // This is a bit inconvenient... Make it a temporary matrix
            // If it's often needed, I'll optimize it further --Nicolas
            PLASSERT( base_learners_outputs.size() > 0 );
            // One row per base learner output buffer, one column per output
            Mat base_outputs_mat(base_learners_outputs.size(),
                                 base_learners[0]->outputsize());
            for (int i=0, n=base_learners_outputs.size() ; i<n ; ++i)
                base_outputs_mat(i) << base_learners_outputs[i];
            if( default_operation == "mean" )
                columnMean(base_outputs_mat, output);
            else if( default_operation == "min" )
                columnMin(base_outputs_mat, output);
            else if( default_operation == "max" )
                columnMax(base_outputs_mat, output);
            else if( default_operation == "sum" )
                columnSum(base_outputs_mat, output);
            else if( default_operation == "sumofsquares" )
                columnSumOfSquares(base_outputs_mat, output);
            else if( default_operation == "variance" )
            {
                Vec mean;
                columnMean(base_outputs_mat, mean);
                columnVariance(base_outputs_mat, output, mean);
            }
            else if( default_operation == "dmode")
            {
                // NC: should base_learners.length() below be
                // base_learners_outputs.length() for sharing?
                StatsCollector sc(base_learners.length());
                for(int o=0; o<output.length(); o++)
                {
                    sc.forget();
                    // Vote over the rows (one per base learner) of column o
                    for(int j=0; j<base_outputs_mat.length(); j++)
                        sc.update(base_outputs_mat(j,o),1);
                    output[o] = sc.dmode();
                }
            }
            else
                PLERROR("StackedLearner::computeOutput: unsupported default_operation");
        }
    }
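Without a combiner, computeOutput() stacks the base learner outputs into a matrix with one row per base learner and reduces each output column with default_operation. The sketch below reproduces mean, min, max, sum, sumofsquares and dmode on plain `std::vector` rows (the name `combineOutputs` is hypothetical). It omits variance because the exact estimator used by PLearn's columnVariance is not stated here. The dmode tie-breaking rule (the smallest of the most frequent values wins) is an assumption of this sketch, not documented behavior of StatsCollector::dmode().

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <stdexcept>
#include <string>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // rows = base learners, columns = output dimensions

// Column-wise reduction of the base learners' outputs.
Vec combineOutputs(const Mat& outs, const std::string& op) {
    assert(!outs.empty());
    const std::size_t n = outs.size(), d = outs[0].size();
    Vec result(d);
    for (std::size_t o = 0; o < d; ++o) {
        Vec col(n);
        for (std::size_t j = 0; j < n; ++j) col[j] = outs[j][o];  // (learner j, output o)
        if (op == "mean" || op == "sum") {
            double s = 0.0;
            for (double v : col) s += v;
            result[o] = (op == "mean") ? s / n : s;
        } else if (op == "sumofsquares") {
            double s = 0.0;
            for (double v : col) s += v * v;
            result[o] = s;
        } else if (op == "min") {
            result[o] = *std::min_element(col.begin(), col.end());
        } else if (op == "max") {
            result[o] = *std::max_element(col.begin(), col.end());
        } else if (op == "dmode") {
            // Majority vote: most frequent value, ties go to the smallest value.
            std::map<double, int> counts;
            for (double v : col) ++counts[v];
            int best = 0;
            for (const auto& [value, count] : counts)
                if (count > best) { best = count; result[o] = value; }
        } else {
            throw std::invalid_argument("unsupported default_operation: " + op);
        }
    }
    return result;
}
```

Because each column mixes one entry per base learner, this reduction only makes sense when all base learners share the same outputsize, which resizeBaseLearnersOutputs() enforces when no combiner is used.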

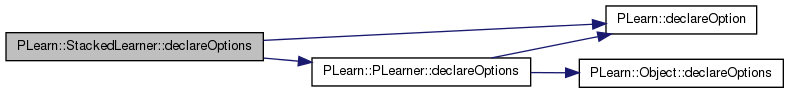

| void PLearn::StackedLearner::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 86 of file StackedLearner.cc.

References base_learners, base_train_splitter, PLearn::OptionBase::buildoption, combiner, PLearn::declareOption(), PLearn::PLearner::declareOptions(), default_operation, normalize_base_learners_output, nsep, precompute_base_learners_output, put_raw_input, share_learner, splitter, and train_base_learners.

    {
        // ### Declare all of this object's options here
        // ### For the "flags" of each option, you should typically specify
        // ### one of OptionBase::buildoption, OptionBase::learntoption or
        // ### OptionBase::tuningoption. Another possible flag to be combined with
        // ### is OptionBase::nosave

        declareOption(ol, "base_learners", &StackedLearner::base_learners, OptionBase::buildoption,
                      "A set of 1st level base learners that are independently trained (here or elsewhere)\n"
                      "and whose outputs will serve as inputs to the combiner (2nd level learner)");

        declareOption(ol, "combiner", &StackedLearner::combiner, OptionBase::buildoption,
                      "A learner that is trained (possibly on a data set different from the\n"
                      "one used to train the base_learners) using the outputs of the\n"
                      "base_learners as inputs. If it is not provided, then the StackedLearner\n"
                      "simply performs \"default_operation\" on the outputs of the base_learners\n");

        declareOption(ol, "default_operation", &StackedLearner::default_operation,
                      OptionBase::buildoption,
                      "If no combiner is provided, simple operation to be performed\n"
                      "on the outputs of the base_learners.\n"
                      "Supported: mean (default), min, max, variance, sum, sumofsquares, dmode (majority vote)\n");

        declareOption(ol, "splitter", &StackedLearner::splitter, OptionBase::buildoption,
                      "A Splitter used to select which data subset(s) goes to training the base_learners\n"
                      "and which data subset(s) goes to training the combiner. If not provided then the\n"
                      "same data is used to train and test both levels. If provided, in each split, there should be\n"
                      "two sets: the set on which to train the first level and the set on which to train the second one\n");

        declareOption(ol, "base_train_splitter", &StackedLearner::base_train_splitter, OptionBase::buildoption,
                      "This splitter can be used to split the training set into different training sets for each base learner\n"
                      "If it is not set, the same training set will be applied to the base learners.\n"
                      "If \"splitter\" is also used, it will be applied first to determine the training set used by base_train_splitter.\n"
                      "The splitter should give as many splits as base learners, and each split should contain one set.");

        declareOption(ol, "train_base_learners", &StackedLearner::train_base_learners, OptionBase::buildoption,
                      "whether to train the base learners in the method train (otherwise they should be\n"
                      "initialized properly at construction / setOption time)\n");

        declareOption(ol, "normalize_base_learners_output", &StackedLearner::normalize_base_learners_output, OptionBase::buildoption,
                      "If set to 1, the output of the base learners on the combiner training set\n"
                      "will be normalized (zero mean, unit variance) before training the combiner.");

        declareOption(ol, "precompute_base_learners_output", &StackedLearner::precompute_base_learners_output, OptionBase::buildoption,
                      "If set to 1, the output of the base learners on the combiner training set\n"
                      "will be precomputed in memory before training the combiner (this may speed\n"
                      "up significantly the combiner training process).");

        declareOption(ol, "put_raw_input", &StackedLearner::put_raw_input, OptionBase::buildoption,
                      "Whether to put the raw inputs in addition of the base learners\n"
                      "outputs, in input of the combiner. The raw_inputs are\n"
                      "appended AT THE END of the combiner input vector\n");

        declareOption(ol, "share_learner", &StackedLearner::share_learner, OptionBase::buildoption,
                      "If set to 1, the input is divided in nsep equal parts, and a common learner\n"
                      "is trained as if each part constitutes a training example.");

        declareOption(ol, "nsep", &StackedLearner::nsep, OptionBase::buildoption,
                      "Number of input separations. The input size needs to be a "
                      "multiple of that value\n");

        // Now call the parent class' declareOptions
        inherited::declareOptions(ol);
    }
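declareOptions() registers each option under a name together with a pointer to the corresponding data member (for example `&StackedLearner::nsep`), which lets the framework read and set fields generically by name. The following is a deliberately tiny, hypothetical imitation of that idea using C++ pointers to members; `MiniStacked`, `MiniOptionList`, `declareOption` and `setOption` here are illustrative names, not PLearn's declareOption/OptionList machinery, and option flags such as buildoption are left out.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>

// Hypothetical class with a few StackedLearner-like build options.
struct MiniStacked {
    int nsep = 1;
    bool share_learner = false;
    std::string default_operation = "mean";
};

// Maps an option name to a setter that parses a string into the member.
using MiniOptionList =
    std::map<std::string, std::function<void(MiniStacked&, const std::string&)>>;

// One overload per member type; the pointer to member says which field to write.
void declareOption(MiniOptionList& ol, const std::string& name, int MiniStacked::*field) {
    ol[name] = [field](MiniStacked& obj, const std::string& v) { obj.*field = std::stoi(v); };
}
void declareOption(MiniOptionList& ol, const std::string& name, bool MiniStacked::*field) {
    ol[name] = [field](MiniStacked& obj, const std::string& v) { obj.*field = (v == "1" || v == "true"); };
}
void declareOption(MiniOptionList& ol, const std::string& name, std::string MiniStacked::*field) {
    ol[name] = [field](MiniStacked& obj, const std::string& v) { obj.*field = v; };
}

MiniOptionList declareOptions() {
    MiniOptionList ol;
    declareOption(ol, "nsep", &MiniStacked::nsep);
    declareOption(ol, "share_learner", &MiniStacked::share_learner);
    declareOption(ol, "default_operation", &MiniStacked::default_operation);
    return ol;
}

// Generic setter by option name (throws std::out_of_range for unknown names).
void setOption(MiniStacked& obj, const MiniOptionList& ol,
               const std::string& name, const std::string& value) {
    ol.at(name)(obj, value);
}
```

The per-type overloads play the role that type-specific option objects play in a real option system: the name-to-field table is built once per class and reused for every instance.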

| static const PPath& PLearn::StackedLearner::declaringFile | ( | ) | [inline, static] |

| StackedLearner * PLearn::StackedLearner::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 84 of file StackedLearner.cc.

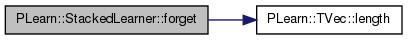

| void PLearn::StackedLearner::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (the stage of a fresh learner).

Reimplemented from PLearn::PLearner.

Definition at line 239 of file StackedLearner.cc.

References base_learners, combiner, i, PLearn::TVec< T >::length(), and train_base_learners.

    {
        if (train_base_learners)
            for (int i=0;i<base_learners.length();i++)
                base_learners[i]->forget();
        if (combiner)
            combiner->forget();
    }

| OptionList & PLearn::StackedLearner::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 84 of file StackedLearner.cc.

| OptionMap & PLearn::StackedLearner::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 84 of file StackedLearner.cc.

| RemoteMethodMap & PLearn::StackedLearner::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 84 of file StackedLearner.cc.

| TVec< string > PLearn::StackedLearner::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 460 of file StackedLearner.cc.

References base_learners, and combiner.

    {
        // Return the names of the costs computed by computeCostsFromOutputs
        // (these may or may not be exactly the same as what's returned by getTrainCostNames)
        if (combiner)
            return combiner->getTestCostNames();
        else
            return base_learners[0]->getTestCostNames();
    }

| TVec< string > PLearn::StackedLearner::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 470 of file StackedLearner.cc.

References base_learners, and combiner.

    {
        // Return the names of the objective costs that the train method computes and
        // for which it updates the VecStatsCollector train_stats
        if (combiner)
            return combiner->getTrainCostNames();
        else
            return base_learners[0]->getTrainCostNames();
    }

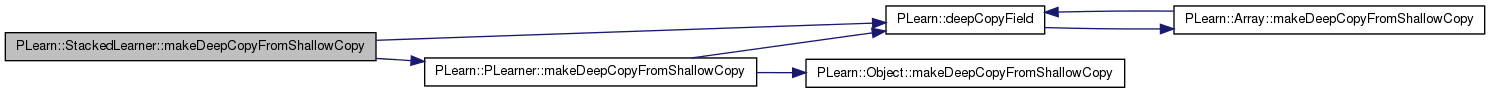

| void PLearn::StackedLearner::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 204 of file StackedLearner.cc.

References all_base_learners_outputs, base_learners, base_learners_outputs, base_train_splitter, combiner, PLearn::deepCopyField(), PLearn::PLearner::makeDeepCopyFromShallowCopy(), and splitter.

    {
        deepCopyField(base_learners_outputs, copies);
        deepCopyField(all_base_learners_outputs, copies);
        deepCopyField(base_learners, copies);
        deepCopyField(combiner, copies);
        deepCopyField(splitter, copies);
        deepCopyField(base_train_splitter, copies);
        inherited::makeDeepCopyFromShallowCopy(copies);
    }

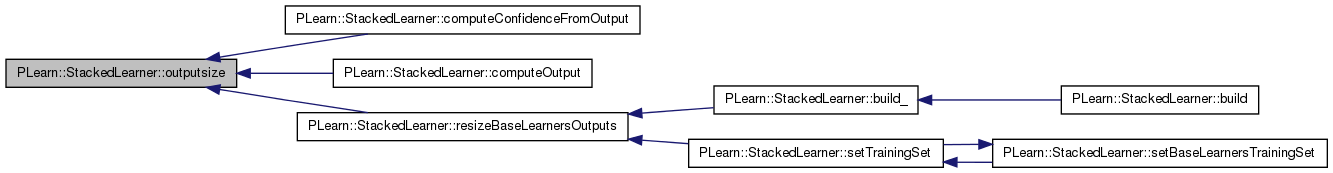

| int PLearn::StackedLearner::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 229 of file StackedLearner.cc.

References base_learners, and combiner.

Referenced by computeConfidenceFromOutput(), computeOutput(), and resizeBaseLearnersOutputs().

    {
        // compute and return the size of this learner's output, (which typically
        // may depend on its inputsize(), targetsize() and set options)
        if (combiner)
            return combiner->outputsize();
        else
            return base_learners[0]->outputsize();
    }
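outputsize(), getTestCostNames() and getTrainCostNames() all answer with whichever learner produces the final output: the combiner if one is set, otherwise base_learners[0]. A minimal sketch of that delegation follows; the `MiniLearner` record and `MiniStacker` class are hypothetical stand-ins for PLearner and StackedLearner.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical minimal learner description.
struct MiniLearner {
    int outsize;
    std::vector<std::string> test_costs;
};

struct MiniStacker {
    std::vector<std::shared_ptr<MiniLearner>> base_learners;
    std::shared_ptr<MiniLearner> combiner;  // may be null

    // The learner that produces the final output answers the queries.
    const MiniLearner& outputProducer() const {
        assert(combiner || !base_learners.empty());
        return combiner ? *combiner : *base_learners[0];
    }
    int outputsize() const { return outputProducer().outsize; }
    std::vector<std::string> getTestCostNames() const { return outputProducer().test_costs; }
};
```

Without a combiner, answering with base_learners[0] alone is only sound because all base learners are required to have the same outputsize in that case.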

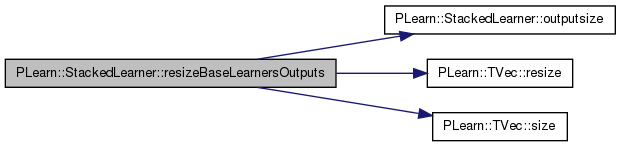

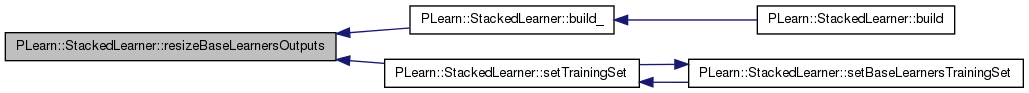

| void PLearn::StackedLearner::resizeBaseLearnersOutputs | ( | ) | [private] |

Resize 'base_learners_outputs' to fit with current base learners.

Definition at line 484 of file StackedLearner.cc.

References base_learners, base_learners_outputs, combiner, i, n, nsep, outputsize(), PLASSERT, PLERROR, PLearn::TVec< T >::resize(), share_learner, and PLearn::TVec< T >::size().

Referenced by build_(), and setTrainingSet().

    {
        // Ensure that all base learners have the same outputsize if we don't use
        // a combiner
        PLASSERT( base_learners.size() > 0 && base_learners[0] );
        if (! combiner && ! share_learner) {
            int outputsize = base_learners[0]->outputsize();
            if (outputsize > 0) {
                for (int i=1, n=base_learners.size() ; i<n ; ++i)
                    if (base_learners[i]->outputsize() != outputsize)
                        PLERROR("StackedLearner::build_: base learner #%d does not have the same "
                                "outputsize (=%d) as base learner #0 (=%d); all outputsizes for "
                                "base learners must be identical",
                                i, base_learners[i]->outputsize(), outputsize);
            }
        }
        if(share_learner)
            base_learners_outputs.resize(nsep);
        else
            base_learners_outputs.resize(base_learners.size());
    }
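resizeBaseLearnersOutputs() does two things: it checks that all base learners share one outputsize when their outputs will be reduced column-wise (no combiner, no sharing), and it sizes base_learners_outputs to nsep buffers with share_learner or to one buffer per base learner otherwise. The standalone sketch below, with the hypothetical name `numOutputBuffers`, reproduces that logic on plain integers and throws instead of calling PLERROR. Like the original, it skips the check when the first outputsize is not positive, presumably because it may not be known yet before a training set is set.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Returns the number of per-learner output buffers after validating sizes.
std::size_t numOutputBuffers(const std::vector<int>& base_outputsizes, bool has_combiner,
                             bool share_learner, int nsep) {
    assert(!base_outputsizes.empty());
    if (!has_combiner && !share_learner && base_outputsizes[0] > 0) {
        for (std::size_t i = 1; i < base_outputsizes.size(); ++i)
            if (base_outputsizes[i] != base_outputsizes[0])
                throw std::runtime_error("base learner #" + std::to_string(i) +
                                         " does not have the same outputsize as base learner #0");
    }
    // One buffer per input slice when sharing, else one per base learner.
    return share_learner ? static_cast<std::size_t>(nsep) : base_outputsizes.size();
}
```

With a combiner, differing output sizes are fine because the combiner simply receives their concatenation.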

| void PLearn::StackedLearner::setBaseLearnersTrainingSet | ( | VMat base_trainset, bool call_forget | ) | [protected] |

Utility to set training set of base learners.

Definition at line 507 of file StackedLearner.cc.

References base_learners, base_train_splitter, i, PLearn::TVec< T >::length(), nsep, PLASSERT, setTrainingSet(), share_learner, PLearn::TVec< T >::size(), and train_base_learners.

Referenced by setTrainingSet().

    {
        PLASSERT( base_learners.size() > 0 );
        // Handle parameter sharing
        if(share_learner) {
            base_learners[0]->setTrainingSet(
                new SeparateInputVMatrix(base_trainset, nsep),
                call_forget && train_base_learners);
        }
        else {
            if (base_train_splitter) {
                // Handle base splitter
                base_train_splitter->setDataSet(base_trainset);
                for (int i=0;i<base_learners.length();i++) {
                    base_learners[i]->setTrainingSet(base_train_splitter->getSplit(i)[0],
                                                     call_forget && train_base_learners);
                }
            }
            else {
                // Default situation: set the same training set into each base learner
                for (int i=0;i<base_learners.length();i++)
                    base_learners[i]->setTrainingSet(base_trainset,
                                                     call_forget && train_base_learners);
            }
        }
    }
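With share_learner, the shared base learner is trained on `new SeparateInputVMatrix(base_trainset, nsep)`, which, per the share_learner option, presents each of the nsep input slices as its own training example. The sketch below shows one plausible row layout. It assumes that each new row is one input slice followed by the original row's target columns copied unchanged; how SeparateInputVMatrix actually treats targets and weights is not described on this page, and `separateInput` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Row = std::vector<double>;

// Splits each row [input (inputsize values) | target ...] into nsep rows
// [input slice | target ...]. Copying the target into every new row is an assumption.
std::vector<Row> separateInput(const std::vector<Row>& data, std::size_t inputsize, std::size_t nsep) {
    assert(nsep > 0 && inputsize % nsep == 0);  // the input size must be a multiple of nsep
    const std::size_t part = inputsize / nsep;
    std::vector<Row> out;
    out.reserve(data.size() * nsep);
    for (const Row& row : data) {
        assert(row.size() >= inputsize);
        for (std::size_t i = 0; i < nsep; ++i) {
            Row r(row.begin() + i * part, row.begin() + (i + 1) * part);  // slice i
            r.insert(r.end(), row.begin() + inputsize, row.end());       // target columns
            out.push_back(r);
        }
    }
    return out;
}
```

Under this layout, a data set of n rows becomes n * nsep rows of width inputsize / nsep plus the target width, which is why the shared learner sees nsep times more (but narrower) examples.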

| void PLearn::StackedLearner::setCombinerTrainingSet | ( | VMat comb_trainset, bool call_forget | ) | [protected] |

Utility to set training set of combiner.

Definition at line 535 of file StackedLearner.cc.

References base_learners, combiner, nsep, put_raw_input, and share_learner.

Referenced by setTrainingSet().

    {
        // Handle combiner
        if (combiner) {
            VMat effective_trainset = comb_trainset;
            if (share_learner)
                effective_trainset = new SeparateInputVMatrix(comb_trainset, nsep);
            combiner->setTrainingSet(
                new PLearnerOutputVMatrix(effective_trainset, base_learners, put_raw_input),
                call_forget);
        }
    }
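setCombinerTrainingSet() wraps the combiner's share of the data in a PLearnerOutputVMatrix, so each combiner training row is derived from the base learners' outputs on the corresponding original row. Those rows appear to be computed on demand, which would explain why precompute_base_learners_output can speed up combiner training. The eager sketch below materializes such rows. The layout [outputs of each base learner | raw input, if put_raw_input | target] is an assumption, in particular appending the target, and `combinerTrainingRows` and `Example` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
using Learner = std::function<Vec(const Vec&)>;  // stand-in for a trained base learner

struct Example {
    Vec input;
    Vec target;
};

// Builds, eagerly, the rows a combiner would be trained on.
std::vector<Vec> combinerTrainingRows(const std::vector<Example>& data,
                                      const std::vector<Learner>& base_learners,
                                      bool put_raw_input) {
    std::vector<Vec> rows;
    rows.reserve(data.size());
    for (const Example& ex : data) {
        Vec row;
        for (const Learner& f : base_learners) {  // base learner outputs first
            Vec out = f(ex.input);
            row.insert(row.end(), out.begin(), out.end());
        }
        if (put_raw_input)  // then the raw input, if requested
            row.insert(row.end(), ex.input.begin(), ex.input.end());
        row.insert(row.end(), ex.target.begin(), ex.target.end());  // assumed: target last
        rows.push_back(row);
    }
    return rows;
}
```

Materializing the rows once trades memory for speed: each base learner is evaluated a single time per example instead of once per combiner pass over the data.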

| void PLearn::StackedLearner::setExperimentDirectory | ( | const PPath & | the_expdir | ) | [virtual] |

Forwarded to inner learners.

Reimplemented from PLearn::PLearner.

Definition at line 217 of file StackedLearner.cc.

References base_learners, combiner, i, n, PLearn::TVec< T >::size(), and PLearn::tostring().

    {
        if (the_expdir != "") {
            for (int i=0, n=base_learners.size() ; i<n ; ++i)
                base_learners[i]->setExperimentDirectory(the_expdir /
                                                         "Base"+tostring(i));
            if (combiner)
                combiner->setExperimentDirectory(the_expdir / "Combiner");
        }
    }
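setExperimentDirectory() gives each inner learner its own sub-directory: Base0, Base1, and so on for the base learners, and Combiner for the combiner, and forwards nothing when the directory is empty. The sketch below computes the same layout with `std::filesystem::path` standing in for PPath. The function name `innerExperimentDirs` is hypothetical.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Sub-directories handed to the inner learners: <expdir>/Base<i> for each base
// learner, then <expdir>/Combiner if a combiner exists. An empty expdir
// means nothing is forwarded.
std::vector<fs::path> innerExperimentDirs(const fs::path& expdir, int n_base_learners,
                                          bool has_combiner) {
    std::vector<fs::path> dirs;
    if (expdir.empty()) return dirs;
    for (int i = 0; i < n_base_learners; ++i)
        dirs.push_back(expdir / ("Base" + std::to_string(i)));
    if (has_combiner) dirs.push_back(expdir / "Combiner");
    return dirs;
}
```

Separate sub-directories keep the files saved by each inner learner from overwriting one another.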

| void PLearn::StackedLearner::setTrainingSet | ( | VMat training_set, bool call_forget = true | ) | [virtual] |

Declares the training set, then calls build() and forget() if necessary.

Reimplemented from PLearn::PLearner.

Definition at line 248 of file StackedLearner.cc.

References PLERROR, resizeBaseLearnersOutputs(), setBaseLearnersTrainingSet(), setCombinerTrainingSet(), PLearn::PLearner::setTrainingSet(), and splitter.

Referenced by setBaseLearnersTrainingSet().

    {
        inherited::setTrainingSet(training_set, call_forget);
        if (splitter) {
            splitter->setDataSet(training_set);
            if (splitter->nsplits() !=1 )
                PLERROR("In StackedLearner::setTrainingSet - "
                        "The splitter provided should only return one split");
            // Split[0] goes to the base learners; Split[1] goes to combiner
            TVec<VMat> sets = splitter->getSplit();
            setBaseLearnersTrainingSet(sets[0], call_forget);
            setCombinerTrainingSet    (sets[1], call_forget);
        }
        else {
            setBaseLearnersTrainingSet(training_set, call_forget);
            setCombinerTrainingSet    (training_set, call_forget);
        }
        // Changing the training set may change the outputsize of the base learners.
        resizeBaseLearnersOutputs();
    }
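When a splitter is given, setTrainingSet() expects exactly one split made of two sets: sets[0] trains the base learners and sets[1] is used, through the base learners' outputs, to train the combiner. Without a splitter, both levels see the whole training set. A hypothetical holdout split illustrates this routing; `holdoutSplit` and its `fraction` parameter are illustrative and do not correspond to a particular PLearn Splitter.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

using Dataset = std::vector<std::vector<double>>;

// Hypothetical two-set split: the first `fraction` of the rows trains the base
// learners (sets[0]); the remaining rows train the combiner (sets[1]).
std::pair<Dataset, Dataset> holdoutSplit(const Dataset& data, double fraction) {
    assert(fraction > 0.0 && fraction < 1.0);
    const std::size_t n_base = static_cast<std::size_t>(fraction * data.size());
    Dataset base(data.begin(), data.begin() + n_base);
    Dataset comb(data.begin() + n_base, data.end());
    return {base, comb};
}
```

Keeping the two sets disjoint means the combiner is fit on base learner outputs for examples those learners did not train on. Outputs on their own training examples tend to be optimistically accurate, which can mislead the combiner.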

| void PLearn::StackedLearner::setTrainStatsCollector | ( | PP< VecStatsCollector > | statscol | ) | [virtual] |

Forwarded to combiner.

Reimplemented from PLearn::PLearner.

Definition at line 153 of file StackedLearner.cc.

References combiner, and PLearn::PLearner::setTrainStatsCollector().

    {
        inherited::setTrainStatsCollector(statscol);
        if (combiner)
            combiner->setTrainStatsCollector(statscol);
    }

| void PLearn::StackedLearner::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 272 of file StackedLearner.cc.

References base_learners, combiner, i, PLearn::TVec< T >::length(), normalize_base_learners_output, PLearn::PLearner::nstages, PLERROR, PLearn::VMat::precompute(), precompute_base_learners_output, splitter, PLearn::PLearner::stage, train_base_learners, and PLearn::PLearner::train_stats.

    {
        if (!train_stats)
            PLERROR("StackedLearner::train: train_stats has not been set!");
        if (splitter && splitter->nsplits() != 1)
            PLERROR("StackedLearner: multi-splits case not implemented yet");
        // --- PART 1: TRAIN THE BASE LEARNERS ---
        if (train_base_learners) {
            if(stage == 0) {
                for (int i=0;i<base_learners.length();i++)
                {
                    PP<VecStatsCollector> stats = new VecStatsCollector();
                    base_learners[i]->setTrainStatsCollector(stats);
                    base_learners[i]->nstages = nstages;
                    base_learners[i]->train();
                    stats->finalize(); // WE COULD OPTIONALLY SAVE THEM AS WELL!
                }
                stage++;
            }
            else
                for (int i=0;i<base_learners.length();i++)
                {
                    base_learners[i]->nstages = nstages;
                    base_learners[i]->train();
                }
        }
        // --- PART 2: TRAIN THE COMBINER ---
        if (combiner)
        {
            if (normalize_base_learners_output) {
                // Normalize the combiner training set.
                VMat normalized_trainset =
                    new ShiftAndRescaleVMatrix(combiner->getTrainingSet(), -1);
                combiner->setTrainingSet(normalized_trainset);
            }
            if (precompute_base_learners_output) {
                // First precompute the train set of the combiner in memory.
                VMat precomputed_trainset = combiner->getTrainingSet();
                precomputed_trainset.precompute();
                combiner->setTrainingSet(precomputed_trainset, false);
            }
            combiner->setTrainStatsCollector(train_stats);
            combiner->train();
        }
    }
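train() runs in two phases: first the base learners (when train_base_learners is true), then the combiner on the base learners' outputs. The toy end-to-end sketch below follows the same two phases with hand-written learners. It uses two hypothetical 1-D base learners (a constant mean predictor and a least-squares line through the origin) and a linear combiner fit by least squares on their outputs. None of these classes are PLearn learners; they only illustrate the data flow.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Data { std::vector<double> x, y; };

// Base learner 1: predicts the mean of the training targets.
struct MeanLearner {
    double m = 0.0;
    void train(const Data& d) { m = 0.0; for (double v : d.y) m += v; m /= d.y.size(); }
    double predict(double) const { return m; }
};

// Base learner 2: least-squares slope through the origin, y ~ a*x.
struct SlopeLearner {
    double a = 0.0;
    void train(const Data& d) {
        double sxy = 0.0, sxx = 0.0;
        for (std::size_t i = 0; i < d.x.size(); ++i) { sxy += d.x[i] * d.y[i]; sxx += d.x[i] * d.x[i]; }
        a = sxy / sxx;
    }
    double predict(double x) const { return a * x; }
};

// Combiner: y ~ w0*f0 + w1*f1, fit by solving the 2x2 normal equations (Cramer's rule).
struct LinearCombiner {
    double w0 = 0.0, w1 = 0.0;
    void train(const std::vector<double>& f0, const std::vector<double>& f1,
               const std::vector<double>& y) {
        double a = 0, b = 0, c = 0, r0 = 0, r1 = 0;
        for (std::size_t i = 0; i < y.size(); ++i) {
            a += f0[i] * f0[i]; b += f0[i] * f1[i]; c += f1[i] * f1[i];
            r0 += f0[i] * y[i]; r1 += f1[i] * y[i];
        }
        const double det = a * c - b * b;  // assumed non-zero (independent features)
        w0 = (c * r0 - b * r1) / det;
        w1 = (a * r1 - b * r0) / det;
    }
    double predict(double f0, double f1) const { return w0 * f0 + w1 * f1; }
};

struct ToyStacker {
    MeanLearner base0;
    SlopeLearner base1;
    LinearCombiner combiner;

    // Phase 1: train base learners on base_set. Phase 2: train the combiner on
    // their outputs over comb_set (the two sets a splitter would provide).
    void train(const Data& base_set, const Data& comb_set) {
        base0.train(base_set);
        base1.train(base_set);
        std::vector<double> f0, f1;
        for (double x : comb_set.x) { f0.push_back(base0.predict(x)); f1.push_back(base1.predict(x)); }
        combiner.train(f0, f1, comb_set.y);
    }
    double predict(double x) const { return combiner.predict(base0.predict(x), base1.predict(x)); }
};
```

On data generated by y = 1 + 2x, neither base learner alone is exact, but a weighted combination of a constant and a multiple of x is, so the combiner recovers the underlying line.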

Reimplemented from PLearn::PLearner.

Definition at line 165 of file StackedLearner.h.

| Vec PLearn::StackedLearner::all_base_learners_outputs | [mutable, protected] |

Buffer for concatenated output of base learners.

Definition at line 63 of file StackedLearner.h.

Referenced by computeConfidenceFromOutput(), computeCostsFromOutputs(), computeOutput(), and makeDeepCopyFromShallowCopy().

| TVec< PP< PLearner > > PLearn::StackedLearner::base_learners |

A set of 1st level base learners that are independently trained (here or elsewhere) and whose outputs will serve as inputs to the combiner.

Definition at line 74 of file StackedLearner.h.

Referenced by build_(), computeConfidenceFromOutput(), computeCostsFromOutputs(), computeOutput(), declareOptions(), forget(), getTestCostNames(), getTrainCostNames(), makeDeepCopyFromShallowCopy(), outputsize(), resizeBaseLearnersOutputs(), setBaseLearnersTrainingSet(), setCombinerTrainingSet(), setExperimentDirectory(), and train().

| TVec< Vec > PLearn::StackedLearner::base_learners_outputs | [protected] |

Temporary buffers for the output of base learners.

This is a TVec of Vec since each learner's outputsize is now allowed to be different.

Definition at line 60 of file StackedLearner.h.

Referenced by computeConfidenceFromOutput(), computeOutput(), makeDeepCopyFromShallowCopy(), and resizeBaseLearnersOutputs().

| PP< Splitter > PLearn::StackedLearner::base_train_splitter |

This can be used to split the training set into different training sets for each base learner.

If it is not set, the same training set will be applied to the base learners.

Definition at line 93 of file StackedLearner.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and setBaseLearnersTrainingSet().

| PP< PLearner > PLearn::StackedLearner::combiner |

A learner that is trained (possibly on a data set different from the one used to train the base_learners) using the outputs of the base_learners as inputs.

Definition at line 79 of file StackedLearner.h.

Referenced by computeConfidenceFromOutput(), computeCostsFromOutputs(), computeOutput(), declareOptions(), forget(), getTestCostNames(), getTrainCostNames(), makeDeepCopyFromShallowCopy(), outputsize(), resizeBaseLearnersOutputs(), setCombinerTrainingSet(), setExperimentDirectory(), setTrainStatsCollector(), and train().

| string PLearn::StackedLearner::default_operation |

If no combiner is provided, simple operation to be performed on the outputs of the base_learners.

Supported: mean (default), min, max, variance, sum, sumofsquares, dmode (majority vote).

Definition at line 84 of file StackedLearner.h.

Referenced by build_(), computeOutput(), and declareOptions().

| bool PLearn::StackedLearner::normalize_base_learners_output |

If set to 1, the output of the base learners on the combiner training set will be normalized (zero mean, unit variance).

Definition at line 101 of file StackedLearner.h.

Referenced by declareOptions(), and train().
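With normalize_base_learners_output, train() wraps the combiner's training set in `ShiftAndRescaleVMatrix(combiner->getTrainingSet(), -1)` so that the base learners' outputs have zero mean and unit variance. The meaning of the `-1` argument and exactly which columns get rescaled are not documented on this page. A standalone column standardization is sketched below under stated assumptions: it uses the population standard deviation, leaves constant columns merely centered, and rescales every column it is given. Any of these choices may differ from ShiftAndRescaleVMatrix. The name `standardizeColumns` is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Mat = std::vector<std::vector<double>>;  // rows = examples

// Shifts and rescales each column in place to zero mean and unit
// (population) variance. Constant columns are only centered.
void standardizeColumns(Mat& m) {
    if (m.empty()) return;
    const std::size_t n = m.size(), d = m[0].size();
    for (std::size_t j = 0; j < d; ++j) {
        double mean = 0.0;
        for (const auto& row : m) mean += row[j];
        mean /= n;
        double var = 0.0;
        for (const auto& row : m) var += (row[j] - mean) * (row[j] - mean);
        var /= n;
        const double scale = var > 0.0 ? 1.0 / std::sqrt(var) : 1.0;
        for (auto& row : m) row[j] = (row[j] - mean) * scale;
    }
}
```

Standardizing puts base learners whose outputs live on very different scales on an equal footing before the combiner sees them.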

| int PLearn::StackedLearner::nsep |

Number of input separations. The input size needs to be a multiple of this value.

Definition at line 115 of file StackedLearner.h.

Referenced by computeConfidenceFromOutput(), computeCostsFromOutputs(), computeOutput(), declareOptions(), resizeBaseLearnersOutputs(), setBaseLearnersTrainingSet(), and setCombinerTrainingSet().

| bool PLearn::StackedLearner::precompute_base_learners_output |

If set to 1, the output of the base learners on the combiner training set will be precomputed in memory before training the combiner.

Definition at line 105 of file StackedLearner.h.

Referenced by declareOptions(), and train().

| bool PLearn::StackedLearner::put_costs |

Optionally put the costs of all the learners as additional inputs of the combiner (NB: this option isn't actually implemented yet).

Definition at line 119 of file StackedLearner.h.

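| bool PLearn::StackedLearner::put_raw_input |

Optionally put the raw input as additional input of the combiner. The raw inputs are appended at the end of the combiner input vector.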

Definition at line 108 of file StackedLearner.h.

Referenced by computeConfidenceFromOutput(), computeOutput(), declareOptions(), and setCombinerTrainingSet().

| bool PLearn::StackedLearner::share_learner |

If set to 1, the input is divided into nsep equal parts, and a common learner is trained as if each part constitutes a training example.

Definition at line 112 of file StackedLearner.h.

Referenced by build_(), computeConfidenceFromOutput(), computeCostsFromOutputs(), computeOutput(), declareOptions(), resizeBaseLearnersOutputs(), setBaseLearnersTrainingSet(), and setCombinerTrainingSet().

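| PP< Splitter > PLearn::StackedLearner::splitter |

A Splitter used to select which data subset(s) goes to training the base_learners and which data subset(s) goes to training the combiner.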

Definition at line 88 of file StackedLearner.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), setTrainingSet(), and train().

| bool PLearn::StackedLearner::train_base_learners |

Whether to train the base learners in the method train (otherwise they should be initialized at construction / setOption time).

Definition at line 97 of file StackedLearner.h.

Referenced by declareOptions(), forget(), setBaseLearnersTrainingSet(), and train().

1.7.4
