PLearn 0.1

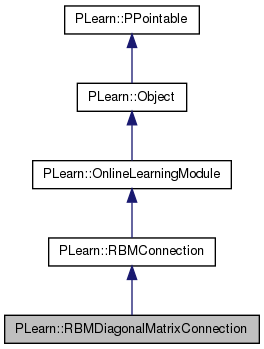

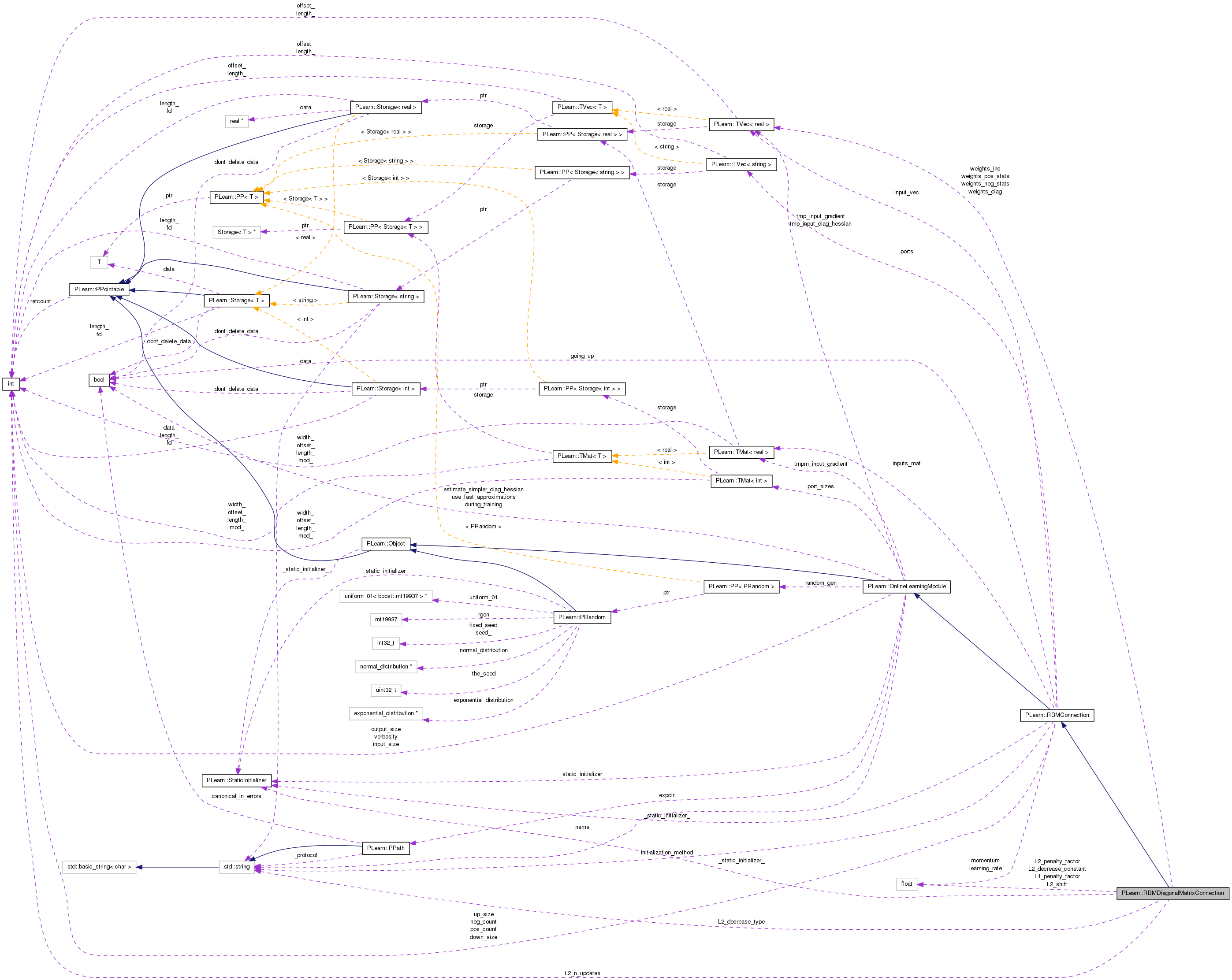

Stores and learns the parameters between two linear layers of an RBM.

#include <RBMDiagonalMatrixConnection.h>

Public Member Functions | |

| RBMDiagonalMatrixConnection (real the_learning_rate=0) | |

| Default constructor. | |

| virtual void | accumulatePosStats (const Vec &down_values, const Vec &up_values) |

| Accumulates positive phase statistics to *_pos_stats. | |

| virtual void | accumulatePosStats (const Mat &down_values, const Mat &up_values) |

| virtual void | accumulateNegStats (const Vec &down_values, const Vec &up_values) |

| Accumulates negative phase statistics to *_neg_stats. | |

| virtual void | accumulateNegStats (const Mat &down_values, const Mat &up_values) |

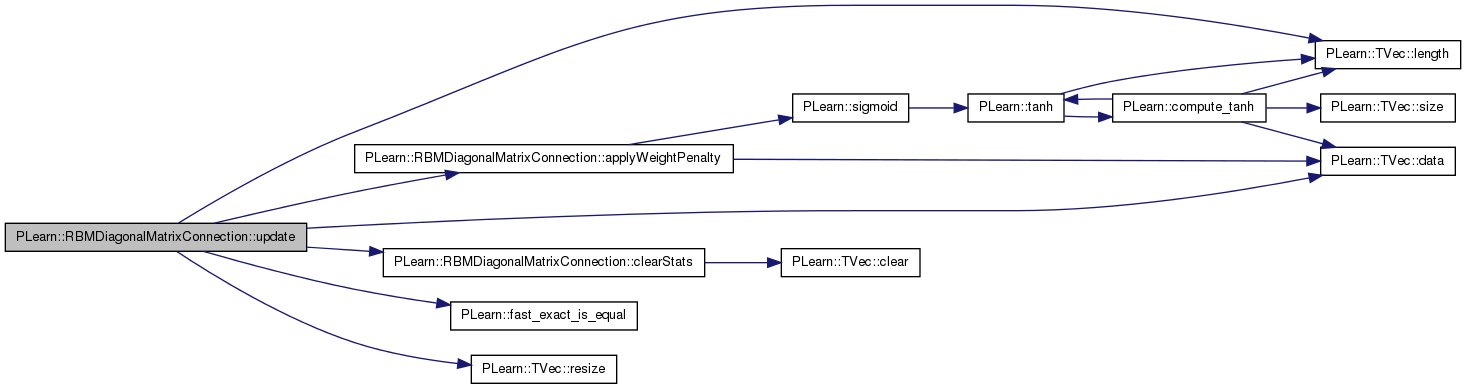

| virtual void | update () |

| Updates parameters according to contrastive divergence gradient. | |

| virtual void | update (const Vec &pos_down_values, const Vec &pos_up_values, const Vec &neg_down_values, const Vec &neg_up_values) |

| Updates parameters according to contrastive divergence gradient, not using the statistics but the explicit values passed. | |

| virtual void | update (const Mat &pos_down_values, const Mat &pos_up_values, const Mat &neg_down_values, const Mat &neg_up_values) |

| Mini-batch version of the explicit-value update; implemented only for momentum == 0 (raises a PLERROR otherwise). | |

| virtual void | clearStats () |

| Clear all information accumulated during stats. | |

| virtual void | computeProduct (int start, int length, const Vec &activations, bool accumulate=false) const |

| Computes the activations of "length" units, starting from "start", and stores them in (or adds them to) "activations". | |

| virtual void | computeProducts (int start, int length, Mat &activations, bool accumulate=false) const |

| Same as 'computeProduct' but for mini-batches. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false) |

| Adapt based on the output gradient: this method should only be called just after a corresponding fprop; it should be called with the same arguments as fprop for the first two arguments (and output should not have been modified since then). | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false) |

| SOON TO BE DEPRECATED, USE bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient) | |

| virtual void | applyWeightPenalty () |

| Applies penalty (decay) on weights. | |

| virtual void | addWeightPenalty (Vec weights_diag, Vec weights_diag_gradients) |

| Adds penalty (decay) gradient. | |

| virtual void | forget () |

| Resets the parameters to the state they would be in BEFORE starting training. | |

| virtual int | nParameters () const |

| Returns the number of parameters. | |

| virtual Vec | makeParametersPointHere (const Vec &global_parameters) |

| Make the parameters data be sub-vectors of the given global_parameters. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMDiagonalMatrixConnection * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Vec | weights_diag |

| Vector containing the diagonal of the weight matrix. | |

| real | L1_penalty_factor |

| Optional (default=0) factor of L1 regularization term. | |

| real | L2_penalty_factor |

| Optional (default=0) factor of L2 regularization term. | |

| real | L2_decrease_constant |

| real | L2_shift |

| string | L2_decrease_type |

| int | L2_n_updates |

| Vec | weights_pos_stats |

| Accumulates positive contribution to the weights' gradient. | |

| Vec | weights_neg_stats |

| Accumulates negative contribution to the weights' gradient. | |

| Vec | weights_inc |

| Used if momentum != 0. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef RBMConnection | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Stores and learns the parameters between two linear layers of an RBM.

Definition at line 53 of file RBMDiagonalMatrixConnection.h.

typedef RBMConnection PLearn::RBMDiagonalMatrixConnection::inherited [private] |

Reimplemented from PLearn::RBMConnection.

Definition at line 55 of file RBMDiagonalMatrixConnection.h.

| PLearn::RBMDiagonalMatrixConnection::RBMDiagonalMatrixConnection | ( | real | the_learning_rate = 0 | ) |

Default constructor.

Definition at line 52 of file RBMDiagonalMatrixConnection.cc.

:

inherited(the_learning_rate)

{

}

| string PLearn::RBMDiagonalMatrixConnection::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

| OptionList & PLearn::RBMDiagonalMatrixConnection::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

| RemoteMethodMap & PLearn::RBMDiagonalMatrixConnection::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

| bool PLearn::RBMDiagonalMatrixConnection::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

| Object * PLearn::RBMDiagonalMatrixConnection::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

| void PLearn::RBMDiagonalMatrixConnection::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

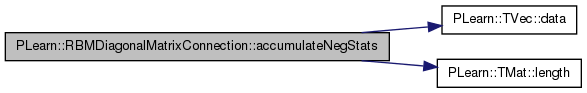

| void PLearn::RBMDiagonalMatrixConnection::accumulateNegStats | ( | const Mat & | down_values, |

| const Mat & | up_values | ||

| ) | [virtual] |

Implements PLearn::RBMConnection.

Definition at line 203 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::data(), i, PLearn::TMat< T >::length(), PLearn::RBMConnection::neg_count, PLASSERT, PLearn::RBMConnection::up_size, and weights_neg_stats.

{

int mbs=down_values.length();

PLASSERT(up_values.length()==mbs);

real* wns;

real* uv;

real* dv;

for( int t=0; t<mbs; t++ )

{

wns = weights_neg_stats.data();

uv = up_values[t];

dv = down_values[t];

for( int i=0; i<up_size; i++ )

wns[i] += uv[i]*dv[i];

}

neg_count+=mbs;

}

| void PLearn::RBMDiagonalMatrixConnection::accumulateNegStats | ( | const Vec & | down_values, |

| const Vec & | up_values | ||

| ) | [virtual] |

Accumulates negative phase statistics to *_neg_stats.

Implements PLearn::RBMConnection.

Definition at line 192 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::data(), i, PLearn::RBMConnection::neg_count, PLearn::RBMConnection::up_size, and weights_neg_stats.

{

real* wns = weights_neg_stats.data();

real* uv = up_values.data();

real* dv = down_values.data();

for( int i=0; i<up_size; i++ )

wns[i] += uv[i]*dv[i];

neg_count++;

}

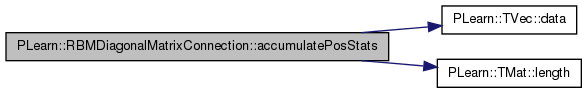

| void PLearn::RBMDiagonalMatrixConnection::accumulatePosStats | ( | const Mat & | down_values, |

| const Mat & | up_values | ||

| ) | [virtual] |

Implements PLearn::RBMConnection.

Definition at line 169 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::data(), i, PLearn::TMat< T >::length(), PLASSERT, PLearn::RBMConnection::pos_count, PLearn::RBMConnection::up_size, and weights_pos_stats.

{

int mbs=down_values.length();

PLASSERT(up_values.length()==mbs);

real* wps;

real* uv;

real* dv;

for( int t=0; t<mbs; t++ )

{

wps = weights_pos_stats.data();

uv = up_values[t];

dv = down_values[t];

for( int i=0; i<up_size; i++ )

wps[i] += uv[i]*dv[i];

}

pos_count+=mbs;

}

| void PLearn::RBMDiagonalMatrixConnection::accumulatePosStats | ( | const Vec & | down_values, |

| const Vec & | up_values | ||

| ) | [virtual] |

Accumulates positive phase statistics to *_pos_stats.

Implements PLearn::RBMConnection.

Definition at line 157 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::data(), i, PLearn::RBMConnection::pos_count, PLearn::RBMConnection::up_size, and weights_pos_stats.

{

real* wps = weights_pos_stats.data();

real* uv = up_values.data();

real* dv = down_values.data();

for( int i=0; i<up_size; i++ )

wps[i] += uv[i]*dv[i];

pos_count++;

}
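The accumulation step above can be sketched outside PLearn as follows. This is a minimal illustration, not the library's code: `std::vector<double>` stands in for `Vec`, and `DiagStats` is a hypothetical name used only here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the positive-phase accumulator.
struct DiagStats {
    std::vector<double> sum;  // plays the role of weights_pos_stats
    int count = 0;            // plays the role of pos_count

    explicit DiagStats(std::size_t n) : sum(n, 0.0) {}

    // Add the element-wise product up[i] * down[i] of one example.
    void accumulate(const std::vector<double>& down,
                    const std::vector<double>& up) {
        assert(down.size() == sum.size() && up.size() == sum.size());
        for (std::size_t i = 0; i < sum.size(); ++i)
            sum[i] += up[i] * down[i];
        ++count;
    }
};
```

Because the connection is diagonal, unit i of the up layer only interacts with unit i of the down layer, so one product per unit is accumulated instead of a full outer product.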

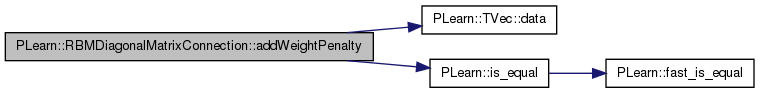

| void PLearn::RBMDiagonalMatrixConnection::addWeightPenalty | ( | Vec | weights_diag, |

| Vec | weights_diag_gradients | ||

| ) | [virtual] |

Adds penalty (decay) gradient.

Definition at line 555 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::data(), PLearn::RBMConnection::down_size, i, PLearn::is_equal(), L1_penalty_factor, L2_decrease_constant, L2_penalty_factor, L2_shift, and PLASSERT_MSG.

{

// Add penalty (decay) gradient.

real delta_L1 = L1_penalty_factor;

real delta_L2 = L2_penalty_factor;

PLASSERT_MSG( is_equal(L2_decrease_constant, 0) && is_equal(L2_shift, 100),

"L2 decrease not implemented in this method" );

real* w_ = weights_diag.data();

real* gw_ = weights_diag_gradients.data();

for( int i=0; i<down_size; i++ )

{

if( delta_L2 != 0. )

gw_[i] += delta_L2*w_[i];

if( delta_L1 != 0. )

{

if( w_[i] > 0 )

gw_[i] += delta_L1;

else if( w_[i] < 0 )

gw_[i] -= delta_L1;

}

}

}
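As a rough sketch of what the loop above computes, the penalty gradient can be written as a free function. It assumes the penalty has the form given in the option help (0.5 * L2 factor * sum of squares, plus L1 factor * sum of absolute values); `add_penalty_gradient` is a hypothetical name and `std::vector<double>` replaces `Vec`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Adds the gradient of
//   0.5 * l2 * sum_i w[i]^2 + l1 * sum_i |w[i]|
// to grad, using sign(0) = 0 for the L1 term.
void add_penalty_gradient(const std::vector<double>& w,
                          std::vector<double>& grad,
                          double l1, double l2) {
    assert(w.size() == grad.size());
    for (std::size_t i = 0; i < w.size(); ++i) {
        grad[i] += l2 * w[i];                 // L2 part
        if (w[i] > 0.0)      grad[i] += l1;   // L1 part, positive weight
        else if (w[i] < 0.0) grad[i] -= l1;   // L1 part, negative weight
    }
}
```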

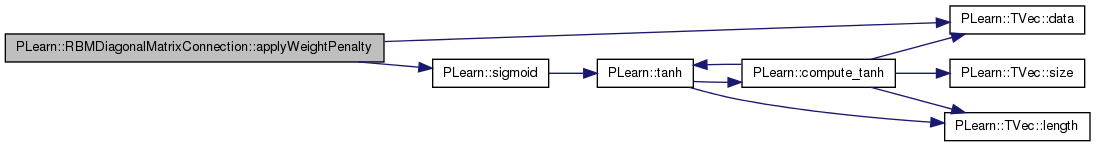

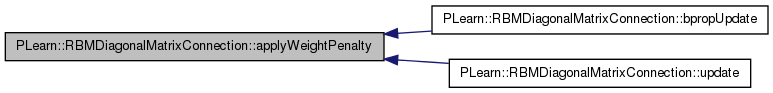

| void PLearn::RBMDiagonalMatrixConnection::applyWeightPenalty | ( | ) | [virtual] |

Applies penalty (decay) on weights.

Definition at line 519 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::data(), PLearn::RBMConnection::down_size, i, L1_penalty_factor, L2_decrease_constant, L2_decrease_type, L2_n_updates, L2_penalty_factor, L2_shift, PLearn::RBMConnection::learning_rate, PLERROR, PLearn::sigmoid(), and weights_diag.

Referenced by bpropUpdate(), and update().

{

// Apply penalty (decay) on weights.

real delta_L1 = learning_rate * L1_penalty_factor;

real delta_L2 = learning_rate * L2_penalty_factor;

if (L2_decrease_type == "one_over_t")

delta_L2 /= (1 + L2_decrease_constant * L2_n_updates);

else if (L2_decrease_type == "sigmoid_like")

delta_L2 *= sigmoid((L2_shift - L2_n_updates) * L2_decrease_constant);

else

PLERROR("In RBMDiagonalMatrixConnection::applyWeightPenalty - Invalid value "

"for L2_decrease_type: %s", L2_decrease_type.c_str());

real* w_ = weights_diag.data();

for( int i=0; i<down_size; i++ )

{

if( delta_L2 != 0. )

w_[i] *= (1 - delta_L2);

if( delta_L1 != 0. )

{

if( w_[i] > delta_L1 )

w_[i] -= delta_L1;

else if( w_[i] < -delta_L1 )

w_[i] += delta_L1;

else

w_[i] = 0.;

}

}

if (delta_L2 > 0)

L2_n_updates++;

}
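The per-weight decay in the loop above amounts to an L2 shrink followed by an L1 soft threshold. A minimal sketch, assuming `delta_l1` and `delta_l2` have already been scaled by the learning rate (and, for L2, by the decrease schedule); `decay_weights` is a hypothetical name.

```cpp
#include <cassert>
#include <vector>

// Mirrors the loop body of applyWeightPenalty() on a plain vector.
void decay_weights(std::vector<double>& w, double delta_l1, double delta_l2) {
    assert(delta_l1 >= 0.0);
    for (double& wi : w) {
        if (delta_l2 != 0.0)
            wi *= (1.0 - delta_l2);   // L2: multiplicative shrink toward zero
        if (delta_l1 != 0.0) {
            // L1: move toward zero by delta_l1, clamping at exactly zero.
            if (wi > delta_l1)       wi -= delta_l1;
            else if (wi < -delta_l1) wi += delta_l1;
            else                     wi = 0.0;
        }
    }
}
```

The clamp in the last branch is what lets the L1 penalty produce weights that are exactly zero rather than oscillating around it.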

| void PLearn::RBMDiagonalMatrixConnection::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

Adapt based on the output gradient: this method should only be called just after a corresponding fprop; it should be called with the same arguments as fprop for the first two arguments (and output should not have been modified since then).

Since sub-classes are supposed to learn ONLINE, the object is 'ready-to-be-used' just after any bpropUpdate. This version also computes the input gradient; when accumulate is true, it is added to input_gradient instead of overwriting it. N.B. The default implementation in the super-class just raises a PLERROR.

Reimplemented from PLearn::OnlineLearningModule.

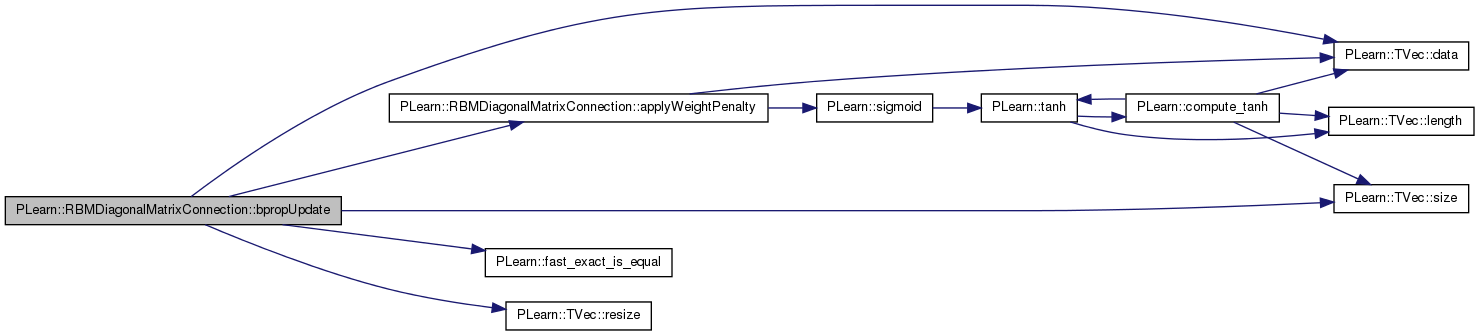

Definition at line 424 of file RBMDiagonalMatrixConnection.cc.

References applyWeightPenalty(), PLearn::TVec< T >::data(), PLearn::RBMConnection::down_size, PLearn::fast_exact_is_equal(), i, in, L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::RBMConnection::up_size, w, and weights_diag.

{

PLASSERT( input.size() == down_size );

PLASSERT( output.size() == up_size );

PLASSERT( output_gradient.size() == up_size );

real* w = weights_diag.data();

real* in = input.data();

real* ing = input_gradient.data();

real* outg = output_gradient.data();

if( accumulate )

{

PLASSERT_MSG( input_gradient.size() == down_size,

"Cannot resize input_gradient AND accumulate into it" );

for( int i=0; i<down_size; i++ )

{

ing[i] += outg[i]*w[i];

w[i] -= learning_rate * in[i] * outg[i];

}

}

else

{

input_gradient.resize( down_size );

ing = input_gradient.data(); // resize() may reallocate, so refresh the pointer

for( int i=0; i<down_size; i++ )

{

ing[i] = outg[i]*w[i];

w[i] -= learning_rate * in[i] * outg[i];

}

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}
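The gradient step above can be illustrated with a small stand-alone function. This is a sketch under assumptions, not PLearn code: `std::vector<double>` replaces `Vec`, `diag_bprop_update` is a hypothetical name, and the weight penalty is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Back-propagates through y[i] = w[i] * x[i] and takes one SGD step on w.
void diag_bprop_update(std::vector<double>& w,
                       const std::vector<double>& input,
                       const std::vector<double>& output_gradient,
                       std::vector<double>& input_gradient,
                       double learning_rate,
                       bool accumulate = false) {
    const std::size_t n = w.size();
    assert(input.size() == n && output_gradient.size() == n);
    if (accumulate)
        assert(input_gradient.size() == n);  // cannot resize and accumulate
    else
        input_gradient.assign(n, 0.0);
    for (std::size_t i = 0; i < n; ++i) {
        // The input gradient uses the weight value from before the update.
        input_gradient[i] += output_gradient[i] * w[i];
        w[i] -= learning_rate * input[i] * output_gradient[i];
    }
}
```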

| void PLearn::RBMDiagonalMatrixConnection::bpropUpdate | ( | const Mat & | inputs, |

| const Mat & | outputs, | ||

| Mat & | input_gradients, | ||

| const Mat & | output_gradients, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

SOON TO BE DEPRECATED, USE bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient)

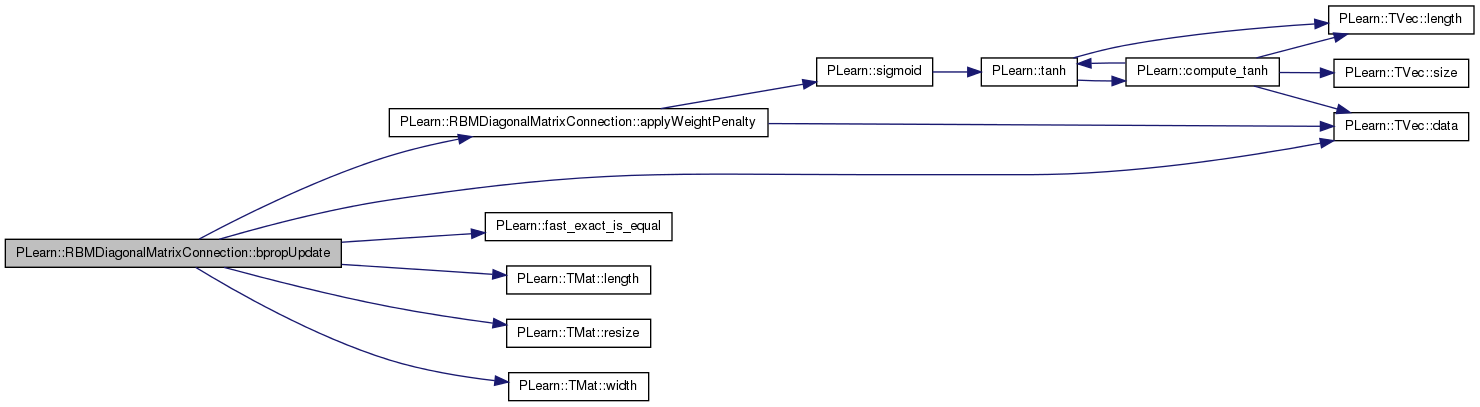

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 462 of file RBMDiagonalMatrixConnection.cc.

References applyWeightPenalty(), PLearn::TVec< T >::data(), PLearn::RBMConnection::down_size, PLearn::fast_exact_is_equal(), i, in, L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLASSERT, PLASSERT_MSG, PLearn::TMat< T >::resize(), PLearn::RBMConnection::up_size, w, weights_diag, and PLearn::TMat< T >::width().

{

PLASSERT( inputs.width() == down_size );

PLASSERT( outputs.width() == up_size );

PLASSERT( output_gradients.width() == up_size );

int mbatch = inputs.length();

real* w = weights_diag.data();

real* in;

real* ing;

real* outg;

if( accumulate )

{

PLASSERT_MSG( input_gradients.width() == down_size &&

input_gradients.length() == inputs.length(),

"Cannot resize input_gradients and accumulate into it" );

for( int t=0; t<mbatch; t++ )

{

ing = input_gradients[t];

outg = output_gradients[t];

for( int i=0; i<down_size; i++ )

ing[i] += outg[i]*w[i];

}

}

else

{

input_gradients.resize(inputs.length(), down_size);

for( int t=0; t<mbatch; t++ )

{

ing = input_gradients[t];

outg = output_gradients[t];

for( int i=0; i<down_size; i++ )

ing[i] = outg[i]*w[i];

}

}

real avg_lr = learning_rate / mbatch;

for( int t=0; t<mbatch; t++ )

{

in = inputs[t];

outg = output_gradients[t];

for( int i=0; i<down_size; i++ )

w[i] -= avg_lr * in[i] * outg[i];

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}

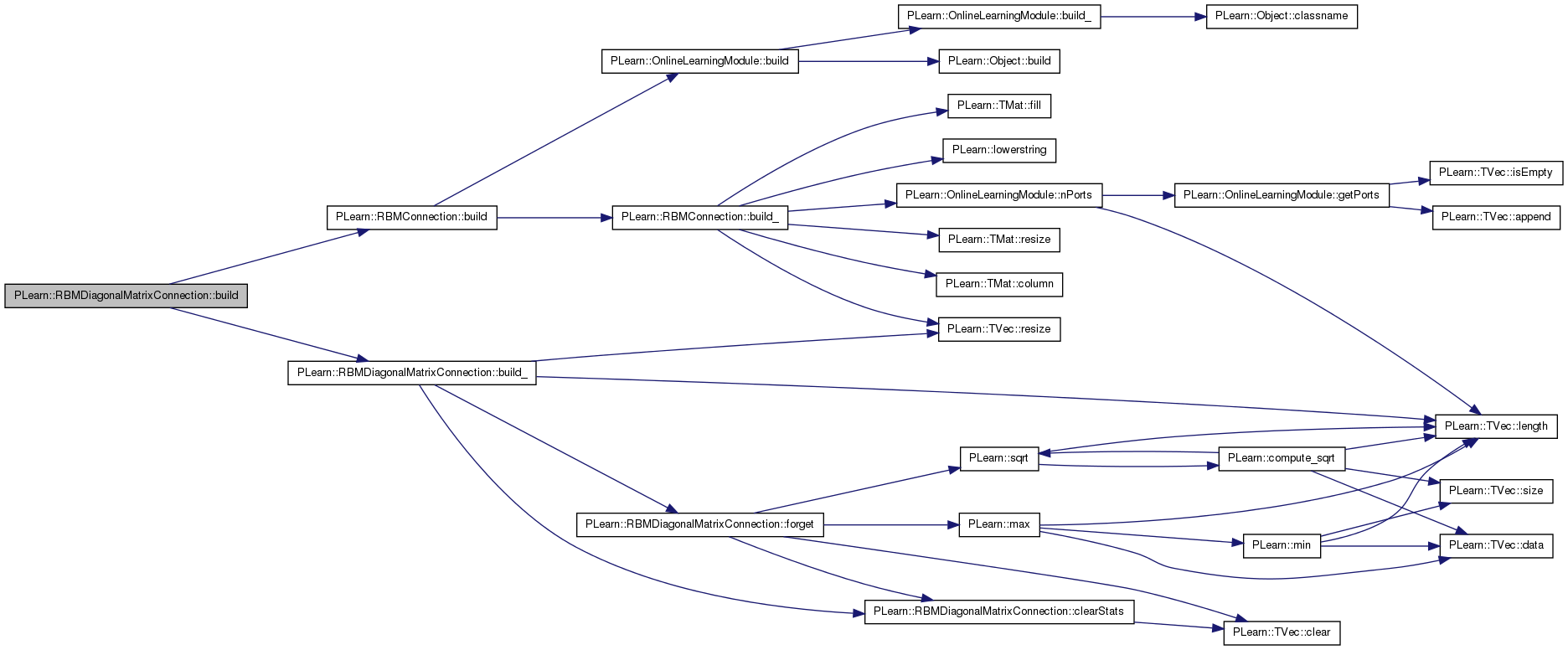

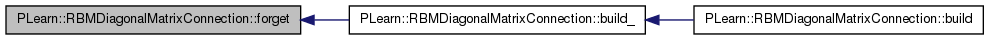

| void PLearn::RBMDiagonalMatrixConnection::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should call simply inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMConnection.

Definition at line 140 of file RBMDiagonalMatrixConnection.cc.

References PLearn::RBMConnection::build(), and build_().

{

inherited::build();

build_();

}

| void PLearn::RBMDiagonalMatrixConnection::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMConnection.

Definition at line 111 of file RBMDiagonalMatrixConnection.cc.

References clearStats(), PLearn::RBMConnection::down_size, forget(), PLearn::TVec< T >::length(), PLearn::RBMConnection::momentum, PLERROR, PLearn::TVec< T >::resize(), PLearn::RBMConnection::up_size, weights_diag, weights_inc, weights_neg_stats, and weights_pos_stats.

Referenced by build().

{

if( up_size <= 0 || down_size <= 0 )

return;

if( up_size != down_size )

PLERROR("In RBMDiagonalMatrixConnection::build_(): up_size should be "

"equal to down_size");

bool needs_forget = false; // do we need to reinitialize the parameters?

if( weights_diag.length() != up_size )

{

weights_diag.resize( up_size );

needs_forget = true;

}

weights_pos_stats.resize( up_size );

weights_neg_stats.resize( up_size );

if( momentum != 0. )

weights_inc.resize( up_size );

if( needs_forget )

forget();

clearStats();

}

| string PLearn::RBMDiagonalMatrixConnection::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

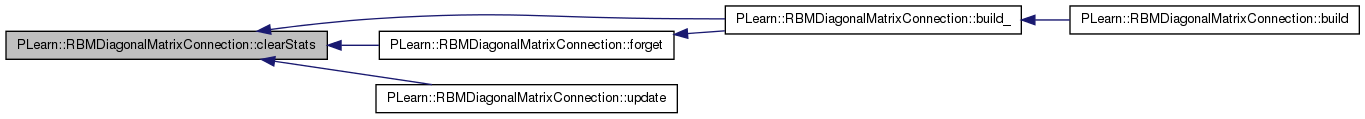

| void PLearn::RBMDiagonalMatrixConnection::clearStats | ( | ) | [virtual] |

Clear all information accumulated during stats.

Implements PLearn::RBMConnection.

Definition at line 362 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::clear(), PLearn::RBMConnection::neg_count, PLearn::RBMConnection::pos_count, weights_neg_stats, and weights_pos_stats.

Referenced by build_(), forget(), and update().

{

weights_pos_stats.clear();

weights_neg_stats.clear();

pos_count = 0;

neg_count = 0;

}

| void PLearn::RBMDiagonalMatrixConnection::computeProduct | ( | int | start, |

| int | length, | ||

| const Vec & | activations, | ||

| bool | accumulate = false |

||

| ) | const [virtual] |

Computes the activations of "length" units, starting from "start", and stores them in (or adds them to) "activations".

"start" indexes an up unit if "going_up", else a down unit.

Implements PLearn::RBMConnection.

Definition at line 374 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::data(), i, PLearn::RBMConnection::input_vec, PLearn::TVec< T >::length(), PLASSERT, PLearn::RBMConnection::up_size, w, and weights_diag.

{

PLASSERT( activations.length() == length );

PLASSERT( start+length <= up_size );

real* act = activations.data();

real* w = weights_diag.data();

real* iv = input_vec.data();

if( accumulate )

for( int i=0; i<length; i++ )

act[i] += w[i+start] * iv[i+start];

else

for( int i=0; i<length; i++ )

act[i] = w[i+start] * iv[i+start];

}
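A minimal sketch of the same slice-wise product on plain vectors. `diag_product` is a hypothetical name, `std::vector<double>` replaces `Vec`, and the input is passed explicitly rather than read from the `input_vec` member.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Computes activations[i] = w[start + i] * input[start + i] for
// i in [0, activations.size()), optionally adding to the existing values.
void diag_product(const std::vector<double>& w,
                  const std::vector<double>& input,
                  std::size_t start,
                  std::vector<double>& activations,
                  bool accumulate = false) {
    const std::size_t length = activations.size();
    assert(input.size() == w.size());
    assert(start + length <= w.size());
    for (std::size_t i = 0; i < length; ++i) {
        const double p = w[i + start] * input[i + start];
        if (accumulate) activations[i] += p;
        else            activations[i] = p;
    }
}
```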

| void PLearn::RBMDiagonalMatrixConnection::computeProducts | ( | int | start, |

| int | length, | ||

| Mat & | activations, | ||

| bool | accumulate = false |

||

| ) | const [virtual] |

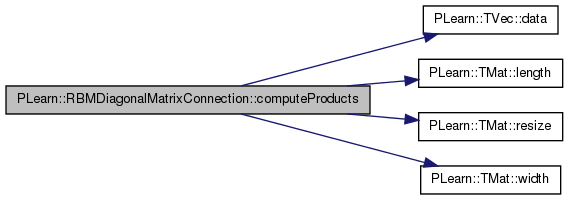

Same as 'computeProduct' but for mini-batches.

Implements PLearn::RBMConnection.

Definition at line 394 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::data(), i, PLearn::RBMConnection::inputs_mat, PLearn::TMat< T >::length(), PLASSERT, PLearn::TMat< T >::resize(), w, weights_diag, and PLearn::TMat< T >::width().

{

PLASSERT( activations.width() == length );

activations.resize(inputs_mat.length(), length);

real* act;

real* w = weights_diag.data();

real* iv;

if( accumulate )

for( int t=0; t<inputs_mat.length(); t++ )

{

act = activations[t];

iv = inputs_mat[t];

for( int i=0; i<length; i++ )

act[i] += w[i+start] * iv[i+start];

}

else

for( int t=0; t<inputs_mat.length(); t++ )

{

act = activations[t];

iv = inputs_mat[t];

for( int i=0; i<length; i++ )

act[i] = w[i+start] * iv[i+start];

}

}

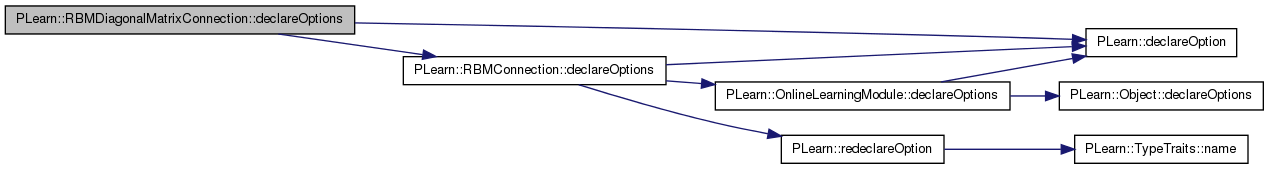

| void PLearn::RBMDiagonalMatrixConnection::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMConnection.

Definition at line 57 of file RBMDiagonalMatrixConnection.cc.

References PLearn::OptionBase::advanced_level, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::RBMConnection::declareOptions(), L1_penalty_factor, L2_decrease_constant, L2_decrease_type, L2_n_updates, L2_penalty_factor, L2_shift, PLearn::OptionBase::learntoption, and weights_diag.

{

declareOption(ol, "weights_diag", &RBMDiagonalMatrixConnection::weights_diag,

OptionBase::learntoption,

"Vector containing the diagonal of the weight matrix.\n");

declareOption(ol, "L1_penalty_factor",

&RBMDiagonalMatrixConnection::L1_penalty_factor,

OptionBase::buildoption,

"Optional (default=0) factor of L1 regularization term, i.e.\n"

"minimize L1_penalty_factor * sum_{ij} |weights(i,j)| "

"during training.\n");

declareOption(ol, "L2_penalty_factor",

&RBMDiagonalMatrixConnection::L2_penalty_factor,

OptionBase::buildoption,

"Optional (default=0) factor of L2 regularization term, i.e.\n"

"minimize 0.5 * L2_penalty_factor * sum_{ij} weights(i,j)^2 "

"during training.\n");

declareOption(ol, "L2_decrease_constant",

&RBMDiagonalMatrixConnection::L2_decrease_constant,

OptionBase::buildoption,

"Parameter of the L2 penalty decrease (see L2_decrease_type).",

OptionBase::advanced_level);

declareOption(ol, "L2_shift",

&RBMDiagonalMatrixConnection::L2_shift,

OptionBase::buildoption,

"Parameter of the L2 penalty decrease (see L2_decrease_type).",

OptionBase::advanced_level);

declareOption(ol, "L2_decrease_type",

&RBMDiagonalMatrixConnection::L2_decrease_type,

OptionBase::buildoption,

"The kind of L2 decrease that is being applied. The decrease\n"

"consists in scaling the L2 penalty by a factor that depends on the\n"

"number 't' of times this penalty has been used to modify the\n"

"weights of the connection. It can be one of:\n"

" - 'one_over_t': 1 / (1 + t * L2_decrease_constant)\n"

" - 'sigmoid_like': sigmoid((L2_shift - t) * L2_decrease_constant)",

OptionBase::advanced_level);

declareOption(ol, "L2_n_updates",

&RBMDiagonalMatrixConnection::L2_n_updates,

OptionBase::learntoption,

"Number of times that weights have been changed by the L2 penalty\n"

"update rule.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
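The two L2 decrease schedules described in the option help can be sketched as a single function. This assumes `sigmoid(x)` is the standard logistic function 1 / (1 + exp(-x)); `l2_decay_factor` is a hypothetical name, and the exception replaces the PLERROR raised by the class.

```cpp
#include <cassert>
#include <cmath>
#include <stdexcept>
#include <string>

// Returns the factor by which the L2 penalty is scaled after t penalty updates.
double l2_decay_factor(const std::string& type, double constant,
                       double shift, int t) {
    assert(t >= 0);
    if (type == "one_over_t")
        return 1.0 / (1.0 + constant * t);
    if (type == "sigmoid_like")
        return 1.0 / (1.0 + std::exp(-(shift - t) * constant));
    throw std::invalid_argument("invalid L2_decrease_type: " + type);
}
```

With `constant` equal to 0, the "one_over_t" schedule returns 1 for every t, so the penalty is never decreased.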

| static const PPath& PLearn::RBMDiagonalMatrixConnection::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::RBMConnection.

Definition at line 194 of file RBMDiagonalMatrixConnection.h.


| RBMDiagonalMatrixConnection * PLearn::RBMDiagonalMatrixConnection::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMConnection.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

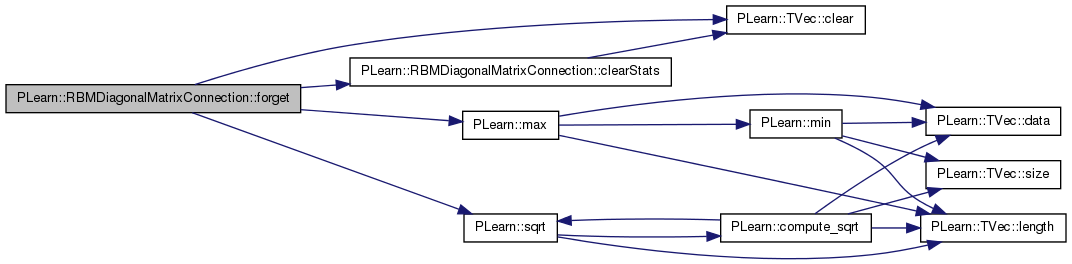

| void PLearn::RBMDiagonalMatrixConnection::forget | ( | ) | [virtual] |

Resets the parameters to the state they would be in BEFORE starting training.

Note that build_() calls this method whenever weights_diag has to be resized.

Implements PLearn::OnlineLearningModule.

Definition at line 584 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::clear(), clearStats(), d, PLearn::RBMConnection::down_size, PLearn::RBMConnection::initialization_method, L2_n_updates, PLearn::max(), PLWARNING, PLearn::OnlineLearningModule::random_gen, PLearn::sqrt(), PLearn::RBMConnection::up_size, and weights_diag.

Referenced by build_().

{

clearStats();

if( initialization_method == "zero" )

weights_diag.clear();

else

{

if( !random_gen )

{

PLWARNING( "RBMDiagonalMatrixConnection: cannot forget() without"

" random_gen" );

return;

}

//random_gen->manual_seed(1827);

real d = 1. / max( down_size, up_size );

if( initialization_method == "uniform_sqrt" )

d = sqrt( d );

random_gen->fill_random_uniform( weights_diag, -d, d );

}

L2_n_updates = 0;

}
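The initialization range used above can be sketched as follows. This is an illustration only: the standard `<random>` facilities replace PLearn's `random_gen`, and `init_half_width` and `init_weights` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

// Half-width d of the uniform range [-d, d]: 1/max(sizes), or its square
// root when the "uniform_sqrt" initialization method is selected.
double init_half_width(int down_size, int up_size, bool uniform_sqrt) {
    assert(down_size > 0 && up_size > 0);
    const double d = 1.0 / std::max(down_size, up_size);
    return uniform_sqrt ? std::sqrt(d) : d;
}

// Draws `size` weights uniformly from [-d, d) with a seeded generator.
std::vector<double> init_weights(int size, double d, unsigned seed) {
    std::mt19937 gen(seed);
    std::uniform_real_distribution<double> dist(-d, d);
    std::vector<double> w(size);
    for (double& wi : w) wi = dist(gen);
    return w;
}
```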

| OptionList & PLearn::RBMDiagonalMatrixConnection::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

| OptionMap & PLearn::RBMDiagonalMatrixConnection::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

| RemoteMethodMap & PLearn::RBMDiagonalMatrixConnection::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMDiagonalMatrixConnection.cc.

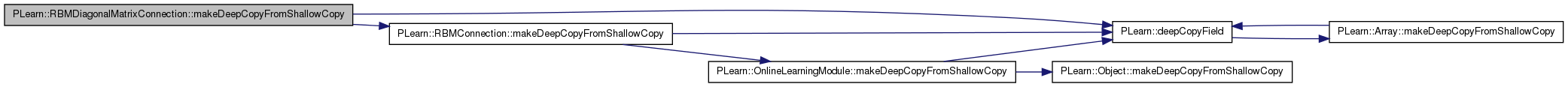

| void PLearn::RBMDiagonalMatrixConnection::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::RBMConnection.

Definition at line 147 of file RBMDiagonalMatrixConnection.cc.

References PLearn::deepCopyField(), PLearn::RBMConnection::makeDeepCopyFromShallowCopy(), weights_diag, weights_inc, weights_neg_stats, and weights_pos_stats.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(weights_diag, copies);

deepCopyField(weights_pos_stats, copies);

deepCopyField(weights_neg_stats, copies);

deepCopyField(weights_inc, copies);

}

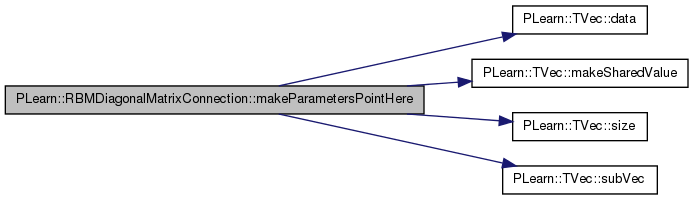

| Vec PLearn::RBMDiagonalMatrixConnection::makeParametersPointHere | ( | const Vec & | global_parameters | ) | [virtual] |

Make the parameters data be sub-vectors of the given global_parameters.

The argument should have size >= nParameters(). The result is a Vec that starts just after this object's parameters end, i.e. result = global_parameters.subVec(nParameters(), global_parameters.size()-nParameters()). This makes it easy to chain calls of this method on multiple RBMParameters.

Implements PLearn::RBMConnection.

Definition at line 631 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::data(), m, PLearn::TVec< T >::makeSharedValue(), n, PLERROR, PLearn::TVec< T >::size(), PLearn::TVec< T >::subVec(), and weights_diag.

{

int n=weights_diag.size();

int m = global_parameters.size();

if (m<n)

PLERROR("RBMDiagonalMatrixConnection::makeParametersPointHere: argument has length %d, should be longer than nParameters()=%d",m,n);

real* p = global_parameters.data();

weights_diag.makeSharedValue(p,n);

return global_parameters.subVec(n,m-n);

}
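The chaining pattern described above can be sketched with raw pointer views. `Slice` and `DiagParams` are hypothetical types introduced only for this illustration; PLearn's `Vec::makeSharedValue` and `subVec` are not reproduced here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A non-owning view on a contiguous block of doubles.
struct Slice {
    double* data;
    std::size_t size;
};

struct DiagParams {
    Slice weights{nullptr, 0};  // view on this object's parameters
    std::size_t n;              // number of parameters

    explicit DiagParams(std::size_t n_) : n(n_) {}

    // Point the weights at the front of `global`; return what is left.
    Slice point_here(Slice global) {
        assert(global.size >= n);
        weights = Slice{global.data, n};
        return Slice{global.data + n, global.size - n};
    }
};
```

Each call consumes the front of the global vector and hands the remainder to the next object, so several connections can share one contiguous parameter buffer.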

| int PLearn::RBMDiagonalMatrixConnection::nParameters | ( | ) | const [virtual] |

Returns the number of parameters.

Implements PLearn::RBMConnection.

Definition at line 621 of file RBMDiagonalMatrixConnection.cc.

References PLearn::TVec< T >::size(), and weights_diag.

{

return weights_diag.size();

}

| void PLearn::RBMDiagonalMatrixConnection::update | ( | const Mat & | pos_down_values, |

| const Mat & | pos_up_values, | ||

| const Mat & | neg_down_values, | ||

| const Mat & | neg_up_values | ||

| ) | [virtual] |

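Mini-batch version of the explicit-value update, using the average gradient over the mini-batch. Implemented only for momentum == 0; raises a PLERROR otherwise.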

Reimplemented from PLearn::RBMConnection.

Definition at line 313 of file RBMDiagonalMatrixConnection.cc.

References applyWeightPenalty(), PLearn::TVec< T >::data(), PLearn::fast_exact_is_equal(), i, L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::RBMConnection::momentum, PLASSERT, PLERROR, weights_diag, and PLearn::TMat< T >::width().

{

// Only the diagonal of the weight matrix is stored, so the update is:

// weights_diag[i] += learning_rate * (h_0[i] v_0[i] - h_1[i] v_1[i]);

int l = weights_diag.length();

PLASSERT( pos_up_values.width() == l );

PLASSERT( neg_up_values.width() == l );

PLASSERT( pos_down_values.width() == l );

PLASSERT( neg_down_values.width() == l );

real* w_i = weights_diag.data();

real* pdv;

real* puv;

real* ndv;

real* nuv;

if( momentum == 0. )

{

// We use the average gradient over a mini-batch.

real avg_lr = learning_rate / pos_down_values.length();

for( int t=0; t<pos_up_values.length(); t++ )

{

pdv = pos_down_values[t];

puv = pos_up_values[t];

ndv = neg_down_values[t];

nuv = neg_up_values[t];

for( int i=0 ; i<l ; i++)

w_i[i] += avg_lr * (puv[i] * pdv[i] - nuv[i] * ndv[i]);

}

}

else

{

PLERROR("RBMDiagonalMatrixConnection::update minibatch with momentum - Not implemented");

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}

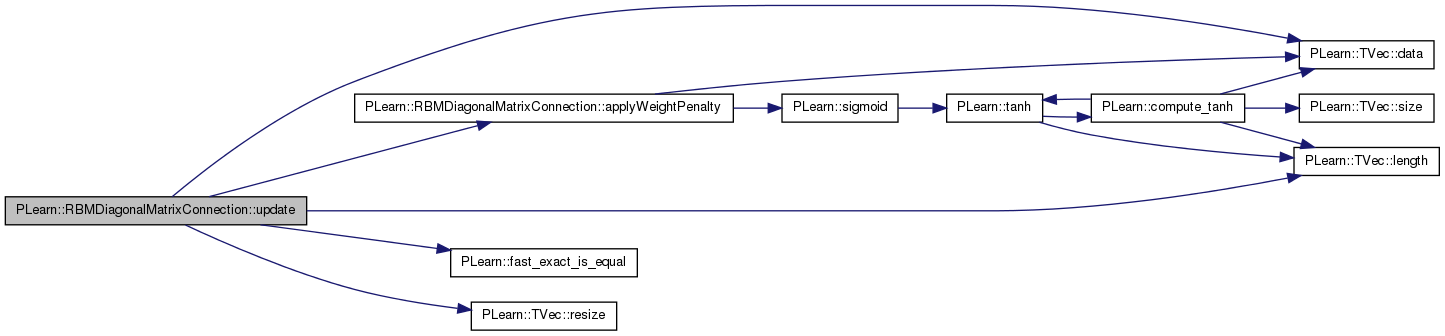

| void PLearn::RBMDiagonalMatrixConnection::update | ( | const Vec & | pos_down_values, |

| const Vec & | pos_up_values, | ||

| const Vec & | neg_down_values, | ||

| const Vec & | neg_up_values | ||

| ) | [virtual] |

Updates parameters according to contrastive divergence gradient, not using the statistics but the explicit values passed.

Reimplemented from PLearn::RBMConnection.

Definition at line 273 of file RBMDiagonalMatrixConnection.cc.

References applyWeightPenalty(), PLearn::TVec< T >::data(), PLearn::fast_exact_is_equal(), i, L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TVec< T >::length(), PLearn::RBMConnection::momentum, PLASSERT, PLearn::TVec< T >::resize(), weights_diag, and weights_inc.

{

int l = weights_diag.length();

PLASSERT( pos_up_values.length() == l );

PLASSERT( neg_up_values.length() == l );

PLASSERT( pos_down_values.length() == l );

PLASSERT( neg_down_values.length() == l );

real* w_i = weights_diag.data();

real* pdv = pos_down_values.data();

real* puv = pos_up_values.data();

real* ndv = neg_down_values.data();

real* nuv = neg_up_values.data();

if( momentum == 0. )

{

for( int i=0 ; i<l ; i++)

w_i[i] += learning_rate * (puv[i] * pdv[i] - nuv[i] * ndv[i]);

}

else

{

// ensure that weights_inc has the right size

weights_inc.resize( l );

real* winc_i = weights_inc.data();

for( int i=0 ; i<l ; i++ )

{

winc_i[i] = momentum * winc_i[i]

+ learning_rate * (puv[i] * pdv[i] - nuv[i] * ndv[i]);

w_i[i] += winc_i[i];

}

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}
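A compact sketch of the explicit-value update with momentum, under the usual assumptions: `std::vector<double>` replaces `Vec`, `cd_update` is a hypothetical name, and the weight penalty is omitted. With `momentum` equal to 0 it reduces to the plain update of the first branch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One contrastive-divergence step on the diagonal weights.
void cd_update(std::vector<double>& w, std::vector<double>& w_inc,
               const std::vector<double>& pos_down,
               const std::vector<double>& pos_up,
               const std::vector<double>& neg_down,
               const std::vector<double>& neg_up,
               double learning_rate, double momentum) {
    const std::size_t n = w.size();
    assert(pos_down.size() == n && pos_up.size() == n);
    assert(neg_down.size() == n && neg_up.size() == n);
    w_inc.resize(n, 0.0);  // new entries start at zero
    for (std::size_t i = 0; i < n; ++i) {
        const double grad = pos_up[i] * pos_down[i] - neg_up[i] * neg_down[i];
        w_inc[i] = momentum * w_inc[i] + learning_rate * grad;
        w[i] += w_inc[i];
    }
}
```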

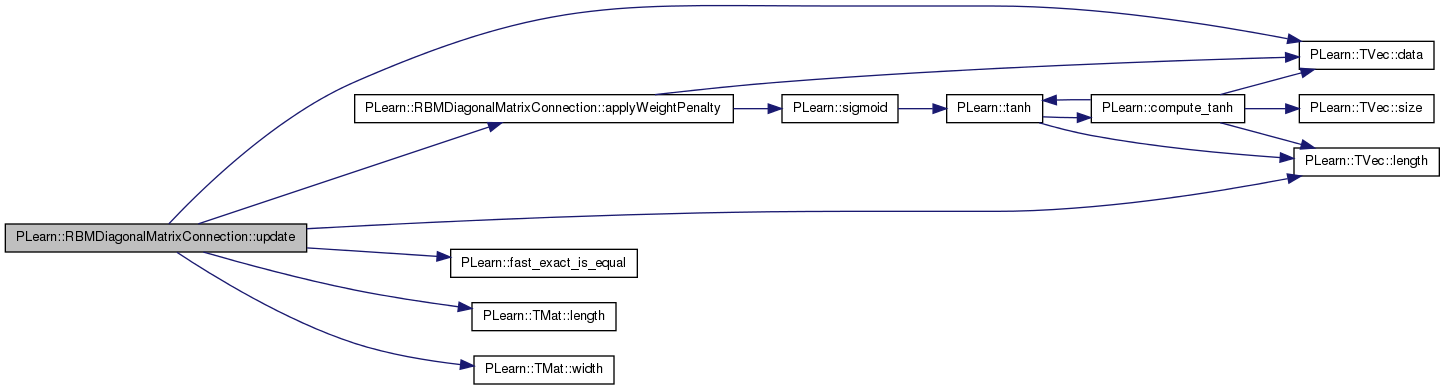

| void PLearn::RBMDiagonalMatrixConnection::update | ( | ) | [virtual] |

Updates parameters according to contrastive divergence gradient.

Implements PLearn::RBMConnection.

Definition at line 226 of file RBMDiagonalMatrixConnection.cc.

References applyWeightPenalty(), clearStats(), PLearn::TVec< T >::data(), PLearn::fast_exact_is_equal(), i, L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TVec< T >::length(), PLearn::RBMConnection::momentum, PLearn::RBMConnection::neg_count, PLearn::RBMConnection::pos_count, PLearn::TVec< T >::resize(), weights_diag, weights_inc, weights_neg_stats, and weights_pos_stats.

{

// updates parameters

//weights += learning_rate * (weights_pos_stats/pos_count

// - weights_neg_stats/neg_count)

real pos_factor = learning_rate / pos_count;

real neg_factor = -learning_rate / neg_count;

int l = weights_diag.length();

real* w_i = weights_diag.data();

real* wps_i = weights_pos_stats.data();

real* wns_i = weights_neg_stats.data();

if( momentum == 0. )

{

// no need to use weights_inc

for( int i=0 ; i<l ; i++ )

w_i[i] += pos_factor * wps_i[i] + neg_factor * wns_i[i];

}

else

{

// ensure that weights_inc has the right size

weights_inc.resize( l );

// The update rule becomes:

// weights_inc = momentum * weights_inc

// + learning_rate * (weights_pos_stats/pos_count

// - weights_neg_stats/neg_count);

// weights += weights_inc;

real* winc_i = weights_inc.data();

for( int i=0 ; i<l ; i++ )

{

winc_i[i] = momentum * winc_i[i]

+ pos_factor * wps_i[i] + neg_factor * wns_i[i];

w_i[i] += winc_i[i];

}

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

clearStats();

}
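The statistics-based update (momentum-free branch) can be sketched as below. `cd_update_from_stats` is a hypothetical name and `std::vector<double>` replaces `Vec`. The assertion on the counts is an addition of this sketch: the listing above divides by `pos_count` and `neg_count` without checking them.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// w += learning_rate * (pos_stats / pos_count - neg_stats / neg_count)
void cd_update_from_stats(std::vector<double>& w,
                          const std::vector<double>& pos_stats, int pos_count,
                          const std::vector<double>& neg_stats, int neg_count,
                          double learning_rate) {
    assert(pos_count > 0 && neg_count > 0);  // guard added in this sketch
    assert(pos_stats.size() == w.size() && neg_stats.size() == w.size());
    const double pos_factor = learning_rate / pos_count;
    const double neg_factor = -learning_rate / neg_count;
    for (std::size_t i = 0; i < w.size(); ++i)
        w[i] += pos_factor * pos_stats[i] + neg_factor * neg_stats[i];
}
```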

StaticInitializer PLearn::RBMDiagonalMatrixConnection::_static_initializer_ [static] |

Reimplemented from PLearn::RBMConnection.

Definition at line 194 of file RBMDiagonalMatrixConnection.h.

real PLearn::RBMDiagonalMatrixConnection::L1_penalty_factor |

Optional (default=0) factor of L1 regularization term.

Definition at line 64 of file RBMDiagonalMatrixConnection.h.

Referenced by addWeightPenalty(), applyWeightPenalty(), bpropUpdate(), declareOptions(), and update().

real PLearn::RBMDiagonalMatrixConnection::L2_decrease_constant |

Definition at line 69 of file RBMDiagonalMatrixConnection.h.

Referenced by addWeightPenalty(), applyWeightPenalty(), and declareOptions().

string PLearn::RBMDiagonalMatrixConnection::L2_decrease_type |

Definition at line 71 of file RBMDiagonalMatrixConnection.h.

Referenced by applyWeightPenalty(), and declareOptions().

int PLearn::RBMDiagonalMatrixConnection::L2_n_updates |

Definition at line 72 of file RBMDiagonalMatrixConnection.h.

Referenced by applyWeightPenalty(), declareOptions(), and forget().

real PLearn::RBMDiagonalMatrixConnection::L2_penalty_factor |

Optional (default=0) factor of L2 regularization term.

Definition at line 67 of file RBMDiagonalMatrixConnection.h.

Referenced by addWeightPenalty(), applyWeightPenalty(), bpropUpdate(), declareOptions(), and update().

real PLearn::RBMDiagonalMatrixConnection::L2_shift |

Definition at line 70 of file RBMDiagonalMatrixConnection.h.

Referenced by addWeightPenalty(), applyWeightPenalty(), and declareOptions().

Vec PLearn::RBMDiagonalMatrixConnection::weights_diag |

Vector containing the diagonal of the weight matrix.

Definition at line 61 of file RBMDiagonalMatrixConnection.h.

Referenced by applyWeightPenalty(), bpropUpdate(), build_(), computeProduct(), computeProducts(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), makeParametersPointHere(), nParameters(), and update().

Vec PLearn::RBMDiagonalMatrixConnection::weights_inc |

Used if momentum != 0.

Definition at line 84 of file RBMDiagonalMatrixConnection.h.

Referenced by build_(), makeDeepCopyFromShallowCopy(), and update().

Vec PLearn::RBMDiagonalMatrixConnection::weights_neg_stats |

Accumulates negative contribution to the weights' gradient.

Definition at line 81 of file RBMDiagonalMatrixConnection.h.

Referenced by accumulateNegStats(), build_(), clearStats(), makeDeepCopyFromShallowCopy(), and update().

Vec PLearn::RBMDiagonalMatrixConnection::weights_pos_stats |

Accumulates positive contribution to the weights' gradient.

Definition at line 78 of file RBMDiagonalMatrixConnection.h.

Referenced by accumulatePosStats(), build_(), clearStats(), makeDeepCopyFromShallowCopy(), and update().

1.7.4