|

PLearn 0.1


#include <LocalMedBoost.h>

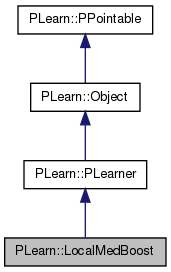

Definition at line 52 of file LocalMedBoost.h.

typedef PLearner PLearn::LocalMedBoost::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 54 of file LocalMedBoost.h.

| PLearn::LocalMedBoost::LocalMedBoost | ( | ) |

Definition at line 59 of file LocalMedBoost.cc.

: robustness(0.1), adapt_robustness_factor(0.0), loss_function_weight(1.0), objective_function("l2"), regression_tree(1), max_nstages(1) { }

| PLearn::LocalMedBoost::~LocalMedBoost | ( | ) | [virtual] |

Definition at line 69 of file LocalMedBoost.cc.

{

}

| string PLearn::LocalMedBoost::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file LocalMedBoost.cc.

| OptionList & PLearn::LocalMedBoost::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file LocalMedBoost.cc.

| RemoteMethodMap & PLearn::LocalMedBoost::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file LocalMedBoost.cc.

Reimplemented from PLearn::PLearner.

Definition at line 57 of file LocalMedBoost.cc.

| Object * PLearn::LocalMedBoost::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file LocalMedBoost.cc.

| StaticInitializer LocalMedBoost::_static_initializer_ & PLearn::LocalMedBoost::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file LocalMedBoost.cc.

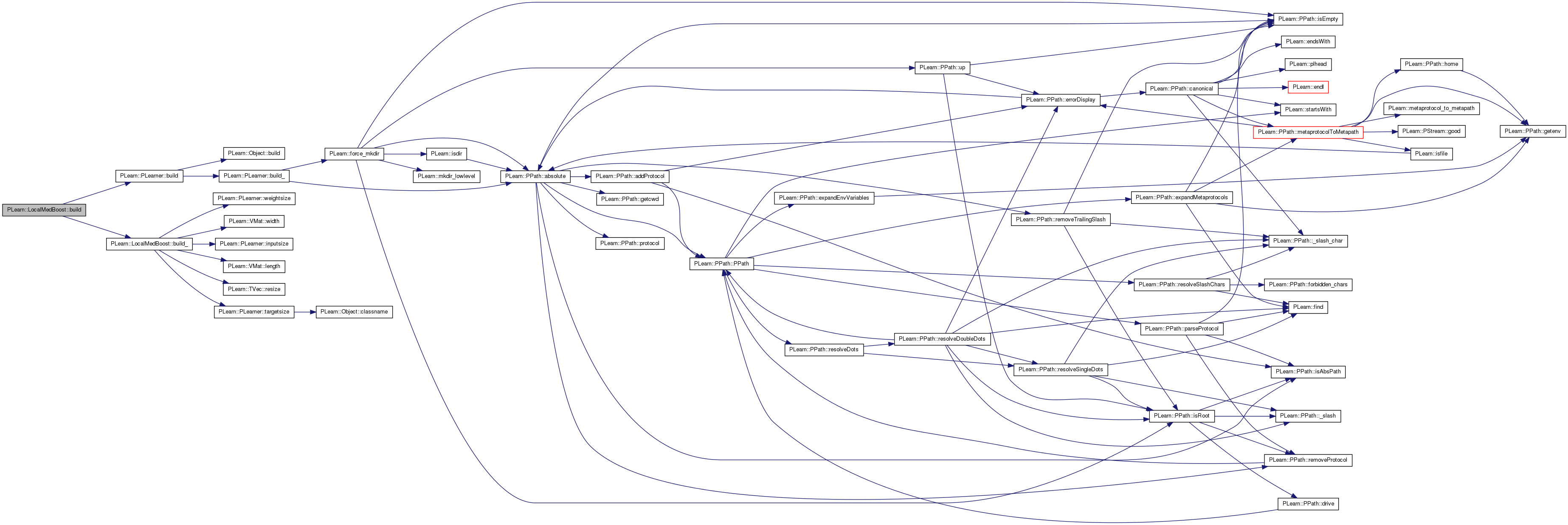

| void PLearn::LocalMedBoost::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 140 of file LocalMedBoost.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

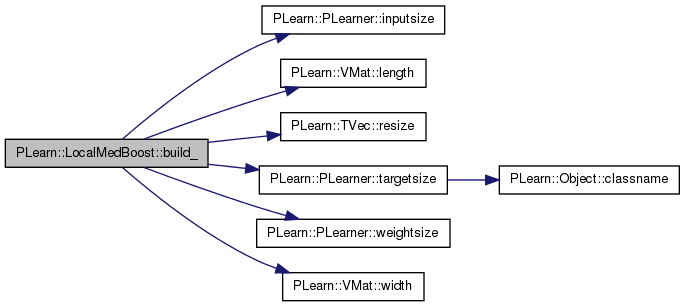

| void PLearn::LocalMedBoost::build_ | ( | ) | [private] |

**** SUBCLASS WRITING: ****

This method should finish building of the object, according to set 'options', in *any* situation.

Typical situations include:

You can assume that the parent class' build_() has already been called.

A typical build method will want to know the inputsize(), targetsize() and outputsize(), and may also want to check whether train_set->hasWeights(). All these methods require a train_set to be set, so the first thing you may want to do is check if(train_set) before doing any heavy building.

Note: build() is always called by setTrainingSet.

Reimplemented from PLearn::PLearner.

Definition at line 146 of file LocalMedBoost.cc.

References base_awards, base_confidences, base_rewards, exp_weighted_edges, PLearn::PLearner::inputsize(), PLearn::VMat::length(), length, max_nstages, PLearn::PLearner::nstages, PLERROR, PLearn::TVec< T >::resize(), sample_costs, sample_input, sample_output, sample_target, sample_weights, PLearn::PLearner::targetsize(), PLearn::PLearner::train_set, PLearn::PLearner::weightsize(), PLearn::VMat::width(), and width.

Referenced by build().

{

if (train_set)

{

length = train_set->length();

width = train_set->width();

if (length < 2) PLERROR("LocalMedBoost: the training set must contain at least two samples, got %d", length);

inputsize = train_set->inputsize();

targetsize = train_set->targetsize();

weightsize = train_set->weightsize();

if (inputsize < 1) PLERROR("LocalMedBoost: expected inputsize greater than 0, got %d", inputsize);

if (targetsize != 1) PLERROR("LocalMedBoost: expected targetsize to be 1, got %d", targetsize);

if (weightsize != 1) PLERROR("LocalMedBoost: expected weightsize to be 1, got %d", weightsize);

sample_input.resize(inputsize);

sample_target.resize(targetsize);

sample_output.resize(4);

sample_costs.resize(6);

sample_weights.resize(length);

base_rewards.resize(length);

base_confidences.resize(length);

base_awards.resize(length);

exp_weighted_edges.resize(length);

if (max_nstages < nstages) max_nstages = nstages;

}

}

| string PLearn::LocalMedBoost::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file LocalMedBoost.cc.

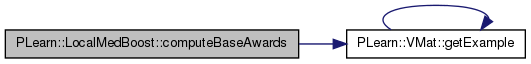

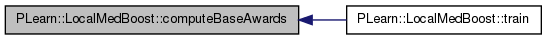

| void PLearn::LocalMedBoost::computeBaseAwards | ( | ) | [private] |

Definition at line 254 of file LocalMedBoost.cc.

References adapt_robustness_factor, base_awards, base_confidences, base_regressors, base_rewards, capacity_too_large, capacity_too_small, each_train_sample_index, edge, PLearn::VMat::getExample(), length, maxt_base_award, objective_function, robustness, sample_costs, sample_input, sample_output, sample_target, sample_weight, PLearn::PLearner::stage, and PLearn::PLearner::train_set.

Referenced by train().

{

edge = 0.0;

capacity_too_large = true;

capacity_too_small = true;

real mini_base_award = INT_MAX;

int sample_costs_index;

if (objective_function == "l1") sample_costs_index=3;

else sample_costs_index=2;

for (each_train_sample_index = 0; each_train_sample_index < length; each_train_sample_index++)

{

train_set->getExample(each_train_sample_index, sample_input, sample_target, sample_weight);

base_regressors[stage]->computeOutputAndCosts(sample_input, sample_target, sample_output, sample_costs);

base_rewards[each_train_sample_index] = sample_costs[sample_costs_index];

base_confidences[each_train_sample_index] = sample_costs[1];

base_awards[each_train_sample_index] = base_rewards[each_train_sample_index] * base_confidences[each_train_sample_index];

if (base_awards[each_train_sample_index] < mini_base_award) mini_base_award = base_awards[each_train_sample_index];

edge += sample_weight * base_awards[each_train_sample_index];

if (base_awards[each_train_sample_index] < robustness) capacity_too_large = false;

}

if (stage == 0) maxt_base_award = mini_base_award;

if (mini_base_award > maxt_base_award) maxt_base_award = mini_base_award;

if (adapt_robustness_factor > 0.0)

{

robustness = maxt_base_award + adapt_robustness_factor;

capacity_too_large = false;

}

if (edge >= robustness)

{

capacity_too_small = false;

}

}
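
The bookkeeping in computeBaseAwards() can be sketched outside of PLearn as follows. This is a minimal illustration, not the library code: the `AwardSummary` struct and the `summarize_awards` function are hypothetical names, plain `std::vector<double>` stands in for `TVec<real>`, and the `adapt_robustness_factor` branch is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical summary of one boosting stage; not a PLearn type.
struct AwardSummary {
    double edge;              // sum over samples of weight * award
    double min_award;         // smallest award over the training set
    bool capacity_too_large;  // stays true when no award falls below robustness
    bool capacity_too_small;  // stays true when the edge is below robustness
};

// rewards, confidences and weights are parallel arrays, one entry per sample.
AwardSummary summarize_awards(const std::vector<double>& rewards,
                              const std::vector<double>& confidences,
                              const std::vector<double>& weights,
                              double robustness)
{
    assert(rewards.size() == confidences.size());
    assert(rewards.size() == weights.size());
    AwardSummary s{0.0, std::numeric_limits<double>::max(), true, true};
    for (std::size_t i = 0; i < rewards.size(); ++i) {
        // The award of a sample is its reward scaled by the regressor's confidence.
        const double award = rewards[i] * confidences[i];
        if (award < s.min_award) s.min_award = award;
        s.edge += weights[i] * award;
        if (award < robustness) s.capacity_too_large = false;
    }
    if (s.edge >= robustness) s.capacity_too_small = false;
    return s;
}
```

With rewards {1, -1, 1}, confidences {1, 1, 0.5} and weights {0.5, 0.25, 0.25}, the awards are {1, -1, 0.5} and the edge is 0.375, so with a robustness of 0.1 neither capacity flag remains set.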

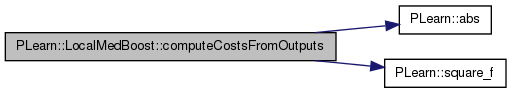

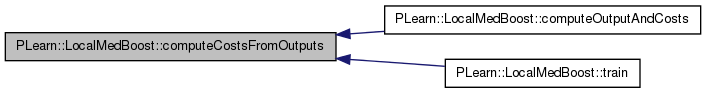

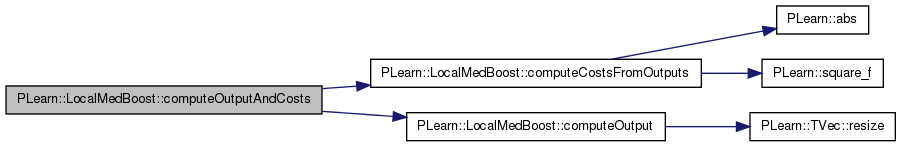

| void PLearn::LocalMedBoost::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the weighted costs from already computed output. The costs should correspond to the cost names returned by getTestCostNames().

NOTE: In exotic cases, the cost may also depend on some info in the input, which is why the method also gets to see it.

Implements PLearn::PLearner.

Definition at line 702 of file LocalMedBoost.cc.

References PLearn::abs(), loss_function_weight, and PLearn::square_f().

Referenced by computeOutputAndCosts(), and train().

{

costsv[0] = square_f(outputv[0] - targetv[0]);

costsv[1] = outputv[1];

if (abs(outputv[0] - targetv[0]) > loss_function_weight) costsv[2] = 1.0;

else costsv[2] = 0.0;

costsv[3] = outputv[3] - outputv[0];

costsv[4] = outputv[0] - outputv[2];

if (costsv[3] < costsv[4]) costsv[5] = costsv[3];

else costsv[5] = costsv[4];

}
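
As a reading aid, the six costs can be reproduced with a small free function. This is a sketch under assumptions: `costs_from_output` is a hypothetical name, standard containers replace `Vec`, and the cost labels in the comments are the ones returned by getTrainCostNames().

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

// output = {prediction, confidence, lower robust bound, upper robust bound}
std::array<double, 6> costs_from_output(const std::vector<double>& output,
                                        double target,
                                        double loss_function_weight)
{
    assert(output.size() == 4);
    std::array<double, 6> costs{};
    const double error = output[0] - target;
    costs[0] = error * error;            // "mse": squared error
    costs[1] = output[1];                // "base_confidence"
    // "l1": 1 when the absolute error exceeds loss_function_weight, else 0
    costs[2] = std::fabs(error) > loss_function_weight ? 1.0 : 0.0;
    costs[3] = output[3] - output[0];    // "rob_minus": upper bound minus prediction
    costs[4] = output[0] - output[2];    // "rob_plus": prediction minus lower bound
    costs[5] = std::min(costs[3], costs[4]);  // "min_rob": the smaller of the two
    return costs;
}
```

Note that the cost at index 2 is a 0/1 indicator rather than an absolute error, despite its "l1" label.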

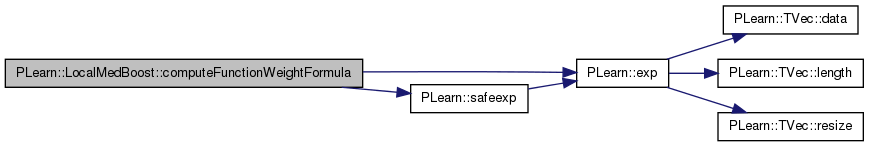

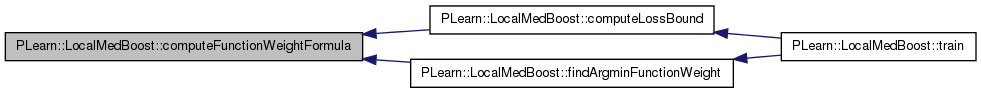

| real PLearn::LocalMedBoost::computeFunctionWeightFormula | ( | real | alpha | ) |

Definition at line 530 of file LocalMedBoost.cc.

References base_awards, each_train_sample_index, PLearn::exp(), length, robustness, PLearn::safeexp(), and sample_weights.

Referenced by computeLossBound(), and findArgminFunctionWeight().

{

real return_value = 0.0;

for (each_train_sample_index = 0; each_train_sample_index < length; each_train_sample_index++)

{

return_value += sample_weights[each_train_sample_index] *

exp(-1.0 * alpha * base_awards[each_train_sample_index]);

}

return_value *= safeexp(robustness * alpha);

return return_value;

}
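
The quantity computed above is the robustness-scaled exponential loss for a candidate weight alpha. A minimal standalone sketch follows, assuming plain vectors in place of the class members; `function_weight_objective` is a hypothetical name, and `std::exp` is used where the source calls `safeexp`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// exp(robustness * alpha) * sum_i weights[i] * exp(-alpha * awards[i])
double function_weight_objective(double alpha,
                                 const std::vector<double>& weights,
                                 const std::vector<double>& awards,
                                 double robustness)
{
    assert(weights.size() == awards.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < weights.size(); ++i)
        sum += weights[i] * std::exp(-alpha * awards[i]);
    return std::exp(robustness * alpha) * sum;
}
```

At alpha = 0 the function returns the sum of the sample weights, which is 1 when the weights are normalized.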

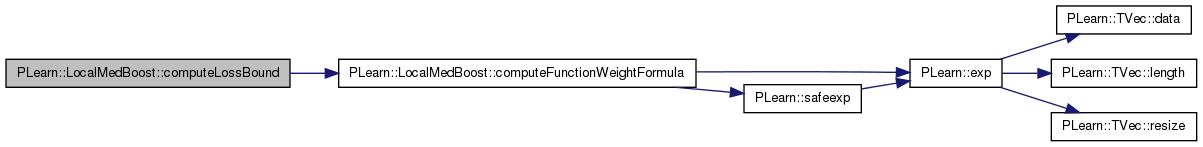

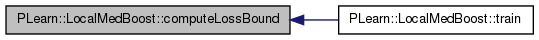

| void PLearn::LocalMedBoost::computeLossBound | ( | ) | [private] |

Definition at line 289 of file LocalMedBoost.cc.

References bound, computeFunctionWeightFormula(), function_weights, loss_function, and PLearn::PLearner::stage.

Referenced by train().

{

loss_function[stage] = computeFunctionWeightFormula(function_weights[stage]);

bound *= loss_function[stage];

}
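
Written out, and using symbols that are not in the source (rho for robustness, w for sample_weights, theta for base_awards, alpha_t for function_weights[t]), the per-stage loss and the cumulative bound read as follows. The product form follows from train() setting bound to 1.0 at stage 0 and this method multiplying it by the stage loss.

```latex
Z_t(\alpha) = e^{\rho \alpha} \sum_{i=1}^{n} w_i^{(t)} \, e^{-\alpha \, \theta_i^{(t)}},
\qquad
\mathrm{bound}_T = \prod_{t=0}^{T-1} Z_t(\alpha_t)
```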

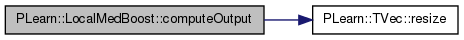

| void PLearn::LocalMedBoost::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 603 of file LocalMedBoost.cc.

References base_regressors, end_stage, function_weights, PLERROR, PLearn::TVec< T >::resize(), and robustness.

Referenced by computeOutputAndCosts(), and train().

{

if (end_stage < 1)

PLERROR("LocalMedBoost: No function has been built");

TVec<real> base_regressor_outputs; // vector of base regressor outputs for a sample

TVec<real> base_regressor_confidences; // vector of base regressor confidences for a sample

Vec base_regressor_outputv; // vector of a base regressor computed prediction

real sum_alpha;

real sum_function_weights; // sum of all regressor weighted confidences

real norm_sum_function_weights;

real sum_fplus_weights; // sum of the regressor weighted confidences for the f+ function

real sum_fminus_weights;

real zero_quantile;

real rob_quantile;

real output_rob_plus;

real output_rob_minus;

real output_rob_save;

int index_j; // index to go thru the base regressor's arrays

int index_t; // index to go thru the base regressor's arrays

base_regressor_outputs.resize(end_stage);

base_regressor_confidences.resize(end_stage);

base_regressor_outputv.resize(2);

sum_function_weights = 0.0;

sum_alpha = 0.0;

outputv[0] = -1E9;

outputv[1] = 0.0;

output_rob_plus = 1E9;

output_rob_minus = -1E9;

for (index_t = 0; index_t < end_stage; index_t++)

{

base_regressors[index_t]->computeOutput(inputv, base_regressor_outputv);

base_regressor_outputs[index_t] = base_regressor_outputv[0];

base_regressor_confidences[index_t] = base_regressor_outputv[1];

if (base_regressor_outputs[index_t] > outputv[0])

{

outputv[0] = base_regressor_outputs[index_t];

outputv[1] = base_regressor_confidences[index_t];

}

sum_alpha += function_weights[index_t];

sum_function_weights += function_weights[index_t] * base_regressor_confidences[index_t];

}

norm_sum_function_weights = sum_function_weights / sum_alpha;

if (norm_sum_function_weights > 0.0) rob_quantile = 0.5 * (1.0 - (robustness / norm_sum_function_weights) * sum_function_weights);

else rob_quantile = 0.0;

zero_quantile = 0.5 * sum_function_weights;

for (index_j = 0; index_j < end_stage; index_j++)

{

sum_fplus_weights = 0.0;

sum_fminus_weights = 0.0;

for (index_t = 0; index_t < end_stage; index_t++)

{

if (base_regressor_outputs[index_j] < base_regressor_outputs[index_t])

{

sum_fplus_weights += function_weights[index_t] * base_regressor_confidences[index_t];

}

if (base_regressor_outputs[index_j] > base_regressor_outputs[index_t])

{

sum_fminus_weights += function_weights[index_t] * base_regressor_confidences[index_t];

}

}

if (norm_sum_function_weights > 0.0 && sum_fplus_weights < zero_quantile)

{

if (base_regressor_outputs[index_j] < outputv[0])

{

outputv[0] = base_regressor_outputs[index_j];

outputv[1] = base_regressor_confidences[index_j];

}

}

if (norm_sum_function_weights > 0.0 && sum_fplus_weights < rob_quantile)

{

if (base_regressor_outputs[index_j] < output_rob_plus)

{

output_rob_plus = base_regressor_outputs[index_j];

}

}

if (norm_sum_function_weights > 0.0 && sum_fminus_weights < rob_quantile)

{

if (base_regressor_outputs[index_j] > output_rob_minus)

{

output_rob_minus = base_regressor_outputs[index_j];

}

}

}

if (output_rob_minus > output_rob_plus)

{

output_rob_save = output_rob_minus;

output_rob_minus = output_rob_plus;

output_rob_plus = output_rob_save;

}

outputv[2] = output_rob_minus;

outputv[3] = output_rob_plus;

}
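
The selection of outputv[0] above behaves like a weighted median over the base regressor predictions. The sketch below isolates that step only. It is an illustration under assumptions: `weighted_median` is a hypothetical name, each weight stands for function_weights[t] times the regressor's confidence, weights are taken to be non-negative, and the robust bounds outputv[2] and outputv[3] are not computed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the smallest prediction such that the total weight of the
// strictly larger predictions is less than half of the total weight.
double weighted_median(const std::vector<double>& outputs,
                       const std::vector<double>& weights)
{
    assert(!outputs.empty() && outputs.size() == weights.size());
    double total = 0.0;
    for (double w : weights) total += w;

    // Start from the largest prediction, as the source does.
    double best = *std::max_element(outputs.begin(), outputs.end());
    if (total <= 0.0) return best;

    for (std::size_t j = 0; j < outputs.size(); ++j) {
        double weight_above = 0.0;
        for (std::size_t t = 0; t < outputs.size(); ++t)
            if (outputs[j] < outputs[t]) weight_above += weights[t];
        if (weight_above < 0.5 * total && outputs[j] < best)
            best = outputs[j];
    }
    return best;
}
```

Like the source, the sketch uses a quadratic double loop; sorting the predictions first would give the same result in O(n log n).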

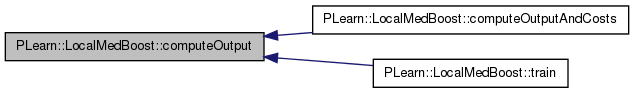

| void PLearn::LocalMedBoost::computeOutputAndCosts | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | output, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Default calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 696 of file LocalMedBoost.cc.

References computeCostsFromOutputs(), and computeOutput().

{

computeOutput(inputv, outputv);

computeCostsFromOutputs(inputv, outputv, targetv, costsv);

}

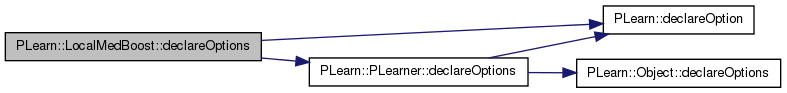

| void PLearn::LocalMedBoost::declareOptions | ( | OptionList & | ol | ) | [static] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 73 of file LocalMedBoost.cc.

References adapt_robustness_factor, base_regressor_template, base_regressors, bound, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), end_stage, function_weights, PLearn::OptionBase::learntoption, loss_function, loss_function_weight, max_nstages, maxt_base_award, objective_function, regression_tree, robustness, sample_weights, sorted_train_set, tree_regressor_template, and tree_wrapper_template.

{

declareOption(ol, "robustness", &LocalMedBoost::robustness, OptionBase::buildoption,

"The robustness parameter of the boosting algorithm.\n");

declareOption(ol, "adapt_robustness_factor", &LocalMedBoost::adapt_robustness_factor, OptionBase::buildoption,

"If not 0.0, robustness will be adapted at each stage with max(t)min(i) base_award + this constant.\n");

declareOption(ol, "loss_function_weight", &LocalMedBoost::loss_function_weight, OptionBase::buildoption,

"The hyper parameter to balance the error and the confidence factor\n");

declareOption(ol, "objective_function", &LocalMedBoost::objective_function, OptionBase::buildoption,

"Indicates which base reward to use. The default is l2; the other possibility is l1.\n"

"Normally it should be consistent with the objective function optimised by the base regressor.\n");

declareOption(ol, "regression_tree", &LocalMedBoost::regression_tree, OptionBase::buildoption,

"If set to 1, the tree_regressor_template is used instead of the base_regressor_template.\n"

"It permits to sort the train set only once for all boosting iterations.\n");

declareOption(ol, "max_nstages", &LocalMedBoost::max_nstages, OptionBase::buildoption,

"Maximum number of nstages in the hyper learner to size the vectors of base learners.\n"

"(If smaller than nstages, nstages is used)");

declareOption(ol, "base_regressor_template", &LocalMedBoost::base_regressor_template, OptionBase::buildoption,

"The template for the base regressor to be boosted (used if the regression_tree option is set to 0).\n");

declareOption(ol, "tree_regressor_template", &LocalMedBoost::tree_regressor_template, OptionBase::buildoption,

"The template for a RegressionTree base regressor when the regression_tree option is set to 1.\n");

declareOption(ol, "tree_wrapper_template", &LocalMedBoost::tree_wrapper_template, OptionBase::buildoption,

"The template for a RegressionTree base regressor to be boosted thru a wrapper. "

"This is useful when you want to use a different confidence function. "

"The regression_tree option needs to be set to 2.\n");

declareOption(ol, "end_stage", &LocalMedBoost::end_stage, OptionBase::learntoption,

"The last train stage after end of training\n");

declareOption(ol, "bound", &LocalMedBoost::bound, OptionBase::learntoption,

"Cumulative bound computed after each boosting stage\n");

declareOption(ol, "maxt_base_award", &LocalMedBoost::maxt_base_award, OptionBase::learntoption,

"max(t)min(i) base_award kept to adapt robustness at each stage.\n");

declareOption(ol, "sorted_train_set", &LocalMedBoost::sorted_train_set, OptionBase::learntoption,

"A sorted train set when using a tree as a base regressor\n");

declareOption(ol, "base_regressors", &LocalMedBoost::base_regressors, OptionBase::learntoption,

"The vector of base regressors built by the training at each boosting stage\n");

declareOption(ol, "function_weights", &LocalMedBoost::function_weights, OptionBase::learntoption,

"The array of function weights built by the boosting algorithm\n");

declareOption(ol, "loss_function", &LocalMedBoost::loss_function, OptionBase::learntoption,

"The array of loss_function values built by the boosting algorithm\n");

declareOption(ol, "sample_weights", &LocalMedBoost::sample_weights, OptionBase::learntoption,

"The array to represent different distributions on the samples of the training set.\n");

inherited::declareOptions(ol);

}

| static const PPath& PLearn::LocalMedBoost::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 136 of file LocalMedBoost.h.

:

void build_();

| LocalMedBoost * PLearn::LocalMedBoost::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file LocalMedBoost.cc.

| real PLearn::LocalMedBoost::findArgminFunctionWeight | ( | ) | [private] |

Definition at line 306 of file LocalMedBoost.cc.

References bracket_a_start, bracket_b_start, bracketing_learning_rate, bracketing_zero, computeFunctionWeightFormula(), f_a, f_b, f_c, f_u, f_v, f_w, f_x, interpolation_learning_rate, interpolation_precision, iter, max_learning_rate, p_lim, p_step, p_tin, p_to1, p_tol1, p_tol2, t_p, t_q, t_r, t_sav, x_a, x_b, x_c, x_d, x_e, x_lim, x_u, x_v, x_w, x_x, and x_xmed.

Referenced by train().

{

p_step = bracketing_learning_rate;

p_lim = max_learning_rate;

p_tin = bracketing_zero;

x_a = bracket_a_start;

x_b = bracket_b_start;

f_a = computeFunctionWeightFormula(x_a);

f_b = computeFunctionWeightFormula(x_b);

x_lim = 0.0;

if (f_b > f_a)

{

t_sav = x_a; x_a = x_b; x_b = t_sav;

t_sav = f_a; f_a = f_b; f_b = t_sav;

}

x_c = x_b + p_step * (x_b - x_a);

f_c = computeFunctionWeightFormula(x_c);

while (f_b > f_c)

{

t_r = (x_b - x_a) * (f_b - f_c);

t_q = (x_b - x_c) * (f_b - f_a);

t_sav = t_q - t_r;

if (t_sav < 0.0)

{

t_sav *= -1.0;

if (t_sav < p_tin)

{

t_sav = p_tin;

}

t_sav *= -1.0;

}

else

{

if (t_sav < p_tin)

{

t_sav = p_tin;

}

}

x_u = (x_b - ((x_b - x_c) * t_q) - ((x_b - x_a) * t_r)) / (2 * t_sav);

x_lim = x_b + p_lim * (x_c - x_b);

if(((x_b -x_u) * (x_u - x_c)) > 0.0)

{

f_u = computeFunctionWeightFormula(x_u);

if (f_u < f_c)

{

x_a = x_b;

x_b = x_u;

f_a = f_b;

f_b = f_u;

break;

}

else

{

if (f_u > f_b)

{

x_c = x_u;

f_c = f_u;

break;

}

}

x_u = x_c + p_step * (x_c - x_b);

f_u = computeFunctionWeightFormula(x_u);

}

else

{

if (((x_c -x_u) * (x_u - x_lim)) > 0.0)

{

f_u = computeFunctionWeightFormula(x_u);

if (f_u < f_c)

{

x_b = x_c; x_c = x_u;

x_u = x_c + p_step * (x_c - x_b);

f_b = f_c; f_c = f_u;

f_u = computeFunctionWeightFormula(x_u);

}

}

else

{

if (((x_u -x_lim) * (x_lim - x_c)) >= 0.0)

{

x_u = x_lim;

f_u = computeFunctionWeightFormula(x_u);

}

else

{

x_u = x_c + p_step * (x_c - x_b);

f_u = computeFunctionWeightFormula(x_u);

}

}

}

x_a = x_b; x_b = x_c; x_c = x_u;

f_a = f_b; f_b = f_c; f_c = f_u;

}

p_step = interpolation_learning_rate;

p_to1 = interpolation_precision;

x_d = x_e = 0.0;

x_v = x_w = x_x = x_b;

f_v = f_w = f_x = f_b;

if (x_a < x_c)

{

x_b = x_c;

}

else

{

x_b = x_a;

x_a = x_c;

}

for (iter = 1; iter <= 100; iter++)

{

x_xmed = 0.5 * (x_a + x_b);

p_tol1 = p_to1 * fabs(x_x) + p_tin;

p_tol2 = 2.0 * p_tol1;

if (fabs(x_x - x_xmed) <= (p_tol2 - 0.5 * (x_b - x_a)))

{

break;

}

if (fabs(x_e) > p_tol1)

{

t_r = (x_x - x_w) * (f_x - f_v);

t_q = (x_x - x_v) * (f_x - f_w);

t_p = (x_x - x_v) * t_q - (x_x - x_w) * t_r;

t_q = 2.0 * (t_q - t_r);

if (t_q > 0.0)

{

t_p = -t_p;

}

t_q = fabs(t_q);

t_sav= x_e;

x_e = x_d;

if (fabs(t_p) >= fabs(0.5 * t_q * t_sav) ||

t_p <= t_q * (x_a - x_x) ||

t_p >= t_q * (x_b - x_x))

{

if (x_x >= x_xmed)

{

x_d = p_step * (x_a - x_x);

}

else

{

x_d = p_step * (x_b - x_x);

}

}

else

{

x_d = t_p / t_q;

x_u = x_x + x_d;

if (x_u - x_a < p_tol2 || x_b - x_u < p_tol2)

{

x_d = p_tol1;

if (x_xmed - x_x < 0.0)

{

x_d = -x_d;

}

}

}

}

else

{

if (x_x >= x_xmed)

{

x_d = p_step * (x_a - x_x);

}

else

{

x_d = p_step * (x_b - x_x);

}

}

if (fabs(x_d) >= p_tol1)

{

x_u = x_x + x_d;

}

else

{

if (x_d < 0.0)

{

x_u = x_x - p_tol1;

}

else

{

x_u = x_x + p_tol1;

}

}

f_u = computeFunctionWeightFormula(x_u);

if (f_u <= f_x)

{

if (x_u >= x_x)

{

x_a = x_x;

}

else

{

x_b = x_x;

}

x_v = x_w; x_w = x_x; x_x = x_u;

f_v = f_w; f_w = f_x; f_x = f_u;

}

else

{

if (x_u < x_x)

{

x_a = x_u;

}

else

{

x_b = x_u;

}

if (f_u <= f_w || x_w == x_x)

{

x_v = x_w; x_w = x_u;

f_v = f_w; f_w = f_u;

}

else

{

if (f_u <= f_v || x_v == x_x || x_v == x_w)

{

x_v = x_u;

f_v = f_u;

}

}

}

}

return x_x;

}
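
The routine above first brackets a minimum and then refines it by combining parabolic interpolation with golden-section steps. As a simpler stand-in, and not the method used by the source, the sketch below performs a plain golden-section search on a bracket that is assumed to be known already. The name `golden_section_minimize` and its parameters are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// Minimizes a unimodal function f on [a, b] by golden-section search.
double golden_section_minimize(const std::function<double(double)>& f,
                               double a, double b,
                               double tolerance = 1e-8, int max_iter = 200)
{
    assert(a < b);
    // (3 - sqrt(5)) / 2, about 0.381966: the fraction of the interval cut
    // off at each step.
    const double ratio = (3.0 - std::sqrt(5.0)) / 2.0;
    double x1 = a + ratio * (b - a);
    double x2 = b - ratio * (b - a);
    double f1 = f(x1);
    double f2 = f(x2);
    for (int iter = 0; iter < max_iter && (b - a) > tolerance; ++iter) {
        if (f1 < f2) {
            // The minimum lies in [a, x2]; reuse x1 as the new upper probe.
            b = x2; x2 = x1; f2 = f1;
            x1 = a + ratio * (b - a);
            f1 = f(x1);
        } else {
            // The minimum lies in [x1, b]; reuse x2 as the new lower probe.
            a = x1; x1 = x2; f1 = f2;
            x2 = b - ratio * (b - a);
            f2 = f(x2);
        }
    }
    return 0.5 * (a + b);
}
```

Each iteration costs one function evaluation and shrinks the bracket by a factor of about 0.618. Interpolation steps, as used in the source, typically converge in fewer evaluations on smooth objectives.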

| void PLearn::LocalMedBoost::forget | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!)

A typical forget() method should do the following:

This method is typically called by the build_() method, after it has finished setting up the parameters, if it deems it useful to set or reset the learner to its fresh state. (Remember that build() may be called after modifying options that do not necessarily require the learner to restart from a fresh state.) forget() is also called by setTrainingSet() after it calls build(), so it will generally be called twice during setTrainingSet().

Reimplemented from PLearn::PLearner.

Definition at line 576 of file LocalMedBoost.cc.

References PLearn::PLearner::stage.

{

stage = 0;

}

| OptionList & PLearn::LocalMedBoost::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file LocalMedBoost.cc.

| OptionMap & PLearn::LocalMedBoost::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file LocalMedBoost.cc.

| RemoteMethodMap & PLearn::LocalMedBoost::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file LocalMedBoost.cc.

| TVec< string > PLearn::LocalMedBoost::getTestCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the costs computed by computeCostsFromOutputs.

Implements PLearn::PLearner.

Definition at line 598 of file LocalMedBoost.cc.

References getTrainCostNames().

{

return getTrainCostNames();

}

| TVec< string > PLearn::LocalMedBoost::getTrainCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 586 of file LocalMedBoost.cc.

Referenced by getTestCostNames().

{

TVec<string> return_msg(6);

return_msg[0] = "mse";

return_msg[1] = "base_confidence";

return_msg[2] = "l1";

return_msg[3] = "rob_minus";

return_msg[4] = "rob_plus";

return_msg[5] = "min_rob";

return return_msg;

}

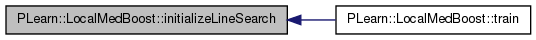

| void PLearn::LocalMedBoost::initializeLineSearch | ( | ) | [private] |

Definition at line 295 of file LocalMedBoost.cc.

References bracket_a_start, bracket_b_start, bracketing_learning_rate, bracketing_zero, interpolation_learning_rate, interpolation_precision, and max_learning_rate.

Referenced by train().

{

bracketing_learning_rate = 1.618034;

bracketing_zero = 1.0e-10;

interpolation_learning_rate = 0.381966;

interpolation_precision = 1.0e-5;

max_learning_rate = 100.0;

bracket_a_start = 0.0;

bracket_b_start = 1.0;

}
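
Two of these constants match the golden ratio and its complement, which are the usual step factors for bracket expansion and golden-section steps. The symbol below is introduced here for illustration only.

```latex
\varphi = \frac{1 + \sqrt{5}}{2} \approx 1.618034,
\qquad
2 - \varphi = \frac{3 - \sqrt{5}}{2} \approx 0.381966
```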

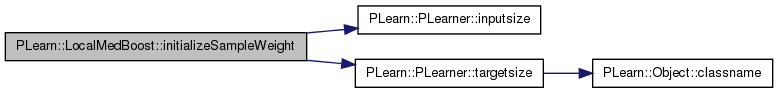

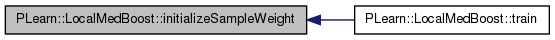

| void PLearn::LocalMedBoost::initializeSampleWeight | ( | ) | [private] |

Definition at line 542 of file LocalMedBoost.cc.

References each_train_sample_index, PLearn::PLearner::inputsize(), length, sample_weights, PLearn::PLearner::targetsize(), and PLearn::PLearner::train_set.

Referenced by train().

{

real init_weight = 1.0 / length;

for (each_train_sample_index = 0; each_train_sample_index < length; each_train_sample_index++)

{

sample_weights[each_train_sample_index] = init_weight;

train_set->put(each_train_sample_index, inputsize + targetsize, sample_weights[each_train_sample_index]);

}

}

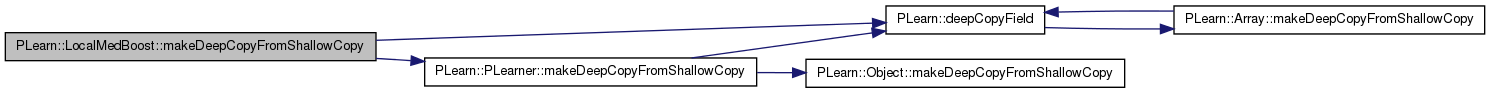

| void PLearn::LocalMedBoost::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 118 of file LocalMedBoost.cc.

References adapt_robustness_factor, base_regressor_template, base_regressors, bound, PLearn::deepCopyField(), end_stage, function_weights, loss_function, loss_function_weight, PLearn::PLearner::makeDeepCopyFromShallowCopy(), max_nstages, maxt_base_award, objective_function, regression_tree, robustness, sample_weights, sorted_train_set, tree_regressor_template, and tree_wrapper_template.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(robustness, copies);

deepCopyField(adapt_robustness_factor, copies);

deepCopyField(loss_function_weight, copies);

deepCopyField(objective_function, copies);

deepCopyField(regression_tree, copies);

deepCopyField(max_nstages, copies);

deepCopyField(base_regressor_template, copies);

deepCopyField(tree_regressor_template, copies);

deepCopyField(tree_wrapper_template, copies);

deepCopyField(end_stage, copies);

deepCopyField(bound, copies);

deepCopyField(maxt_base_award, copies);

deepCopyField(sorted_train_set, copies);

deepCopyField(base_regressors, copies);

deepCopyField(function_weights, copies);

deepCopyField(loss_function, copies);

deepCopyField(sample_weights, copies);

}

| int PLearn::LocalMedBoost::outputsize | ( | ) | const [virtual] |

SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options.

Implements PLearn::PLearner.

Definition at line 581 of file LocalMedBoost.cc.

{

return 4;

}

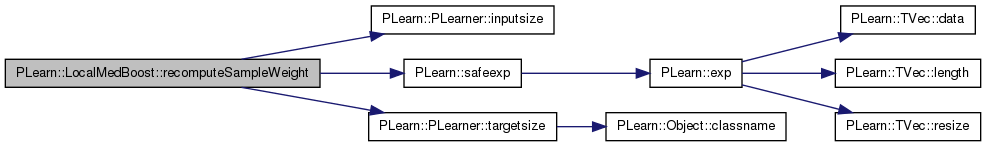

| void PLearn::LocalMedBoost::recomputeSampleWeight | ( | ) | [private] |

Definition at line 552 of file LocalMedBoost.cc.

References base_awards, each_train_sample_index, exp_weighted_edges, function_weights, PLearn::PLearner::inputsize(), length, PLearn::safeexp(), sample_weights, PLearn::PLearner::stage, sum_exp_weighted_edges, PLearn::PLearner::targetsize(), and PLearn::PLearner::train_set.

Referenced by train().

{

sum_exp_weighted_edges = 0.0;

for (each_train_sample_index = 0; each_train_sample_index < length; each_train_sample_index++)

{

exp_weighted_edges[each_train_sample_index] = sample_weights[each_train_sample_index] *

safeexp(-1.0 * function_weights[stage] * base_awards[each_train_sample_index]);

sum_exp_weighted_edges += exp_weighted_edges[each_train_sample_index];

}

for (each_train_sample_index = 0; each_train_sample_index < length; each_train_sample_index++)

{

sample_weights[each_train_sample_index] = exp_weighted_edges[each_train_sample_index] / sum_exp_weighted_edges;

train_set->put(each_train_sample_index, inputsize + targetsize,

sample_weights[each_train_sample_index]);

}

}
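
The update above multiplies each sample weight by the exponential of minus the stage weight times the sample's award, then renormalizes so that the weights sum to 1. A minimal sketch, assuming standard vectors instead of the class members and `std::exp` instead of `safeexp`; `reweight_samples` is a hypothetical name, and the write-back into the training set is omitted.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// In-place update: weights[i] <- weights[i] * exp(-alpha * awards[i]) / sum
void reweight_samples(std::vector<double>& weights,
                      const std::vector<double>& awards,
                      double alpha)
{
    assert(weights.size() == awards.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < weights.size(); ++i) {
        weights[i] *= std::exp(-alpha * awards[i]);
        sum += weights[i];
    }
    assert(sum > 0.0);
    for (double& w : weights) w /= sum;
}
```

With a positive alpha, samples with low or negative awards gain weight, so the next base regressor concentrates on them.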

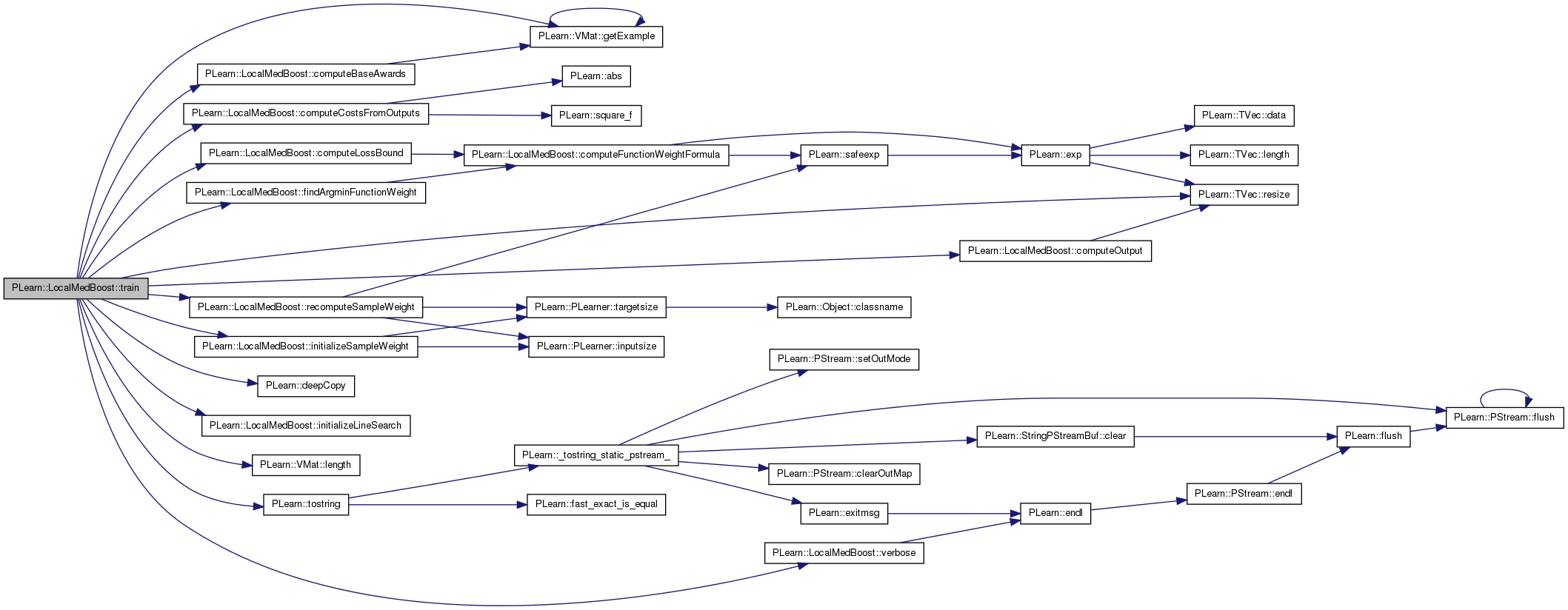

| void PLearn::LocalMedBoost::train | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

The role of the train method is to bring the learner up to stage==nstages, updating the stats with training costs measured on-line in the process.

TYPICAL CODE:

static Vec input; // static so we don't reallocate/deallocate memory each time...

static Vec target; // (but be careful that static means shared!)

input.resize(inputsize()); // the train_set's inputsize()

target.resize(targetsize()); // the train_set's targetsize()

real weight;

if(!train_stats) // make a default stats collector, in case there's none

train_stats = new VecStatsCollector();

if(nstages<stage) // asking to revert to a previous stage!

forget(); // reset the learner to stage=0

while(stage<nstages)

{

// clear statistics of previous epoch

train_stats->forget();

//... train for 1 stage, and update train_stats,

// using train_set->getSample(input, target, weight);

// and train_stats->update(train_costs)

++stage;

train_stats->finalize(); // finalize statistics for this epoch

}

Implements PLearn::PLearner.

Definition at line 172 of file LocalMedBoost.cc.

References base_regressor_template, base_regressors, bound, capacity_too_large, capacity_too_small, computeBaseAwards(), computeCostsFromOutputs(), computeLossBound(), computeOutput(), PLearn::deepCopy(), each_train_sample_index, edge, end_stage, findArgminFunctionWeight(), function_weights, PLearn::VMat::getExample(), initializeLineSearch(), initializeSampleWeight(), PLearn::VMat::length(), loss_function, loss_function_weight, max_nstages, min_margin, PLearn::PLearner::nstages, PLERROR, recomputeSampleWeight(), regression_tree, PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), robustness, sample_costs, sample_input, sample_output, sample_target, sample_weight, sorted_train_set, PLearn::PLearner::stage, PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, tree_regressor_template, tree_regressors, tree_wrapper_template, tree_wrappers, verbose(), and PLearn::PLearner::verbosity.

{

if (!train_set) PLERROR("LocalMedBoost: the learner has not been properly built");

if (stage == 0)

{

base_regressors.resize(max_nstages);

tree_regressors.resize(max_nstages);

tree_wrappers.resize(max_nstages);

function_weights.resize(max_nstages);

loss_function.resize(max_nstages);

initializeSampleWeight();

initializeLineSearch();

bound = 1.0;

if (regression_tree > 0)

sorted_train_set = new RegressionTreeRegisters(train_set,

report_progress,

verbosity);

}

PP<ProgressBar> pb;

if (report_progress) pb = new ProgressBar("LocalMedBoost: train stages: ", nstages);

for (; stage < nstages; stage++)

{

verbose("LocalMedBoost: The base regressor is being trained at stage: " + tostring(stage), 4);

if (regression_tree > 0)

{

if (regression_tree == 1)

{

tree_regressors[stage] = ::PLearn::deepCopy(tree_regressor_template);

tree_regressors[stage]->setTrainingSet(VMat(sorted_train_set));

base_regressors[stage] = tree_regressors[stage];

}

else

{

tree_wrappers[stage] = ::PLearn::deepCopy(tree_wrapper_template);

tree_wrappers[stage]->setSortedTrainSet(sorted_train_set);

base_regressors[stage] = tree_wrappers[stage];

}

}

else

{

base_regressors[stage] = ::PLearn::deepCopy(base_regressor_template);

}

base_regressors[stage]->setOption("loss_function_weight", tostring(loss_function_weight));

base_regressors[stage]->setTrainingSet(train_set, true);

base_regressors[stage]->setTrainStatsCollector(new VecStatsCollector);

base_regressors[stage]->train();

end_stage = stage + 1;

computeBaseAwards();

if (capacity_too_large)

{

verbose("LocalMedBoost: capacity too large, each base awards smaller than robustness: " + tostring(robustness), 2);

}

if (capacity_too_small)

{

verbose("LocalMedBoost: capacity too small, edge: " + tostring(edge), 2);

}

function_weights[stage] = findArgminFunctionWeight();

computeLossBound();

verbose("LocalMedBoost: stage: " + tostring(stage) + " alpha: " + tostring(function_weights[stage]) + " robustness: " + tostring(robustness), 3);

if (function_weights[stage] <= 0.0) break;

recomputeSampleWeight();

if (report_progress) pb->update(stage);

}

if (report_progress)

{

pb = new ProgressBar("LocalMedBoost : computing the statistics: ", train_set->length());

}

train_stats->forget();

min_margin = 1E15;

for (each_train_sample_index = 0; each_train_sample_index < train_set->length(); each_train_sample_index++)

{

train_set->getExample(each_train_sample_index, sample_input, sample_target, sample_weight);

computeOutput(sample_input, sample_output);

computeCostsFromOutputs(sample_input, sample_output, sample_target, sample_costs);

train_stats->update(sample_costs);

if (sample_costs[5] < min_margin) min_margin = sample_costs[5];

if (report_progress) pb->update(each_train_sample_index);

}

train_stats->finalize();

verbose("LocalMedBoost: we are done, thank you!", 3);

}
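The tail of train() listed above follows a familiar boosting pattern: train the base regressor for the current stage, record the stage as trained, compute its function weight, stop as soon as that weight is non-positive, and otherwise recompute the sample weights. The control flow can be sketched as follows. This is only an illustration under stated assumptions: `run_stages`, `train_stage`, `find_weight`, and `reweight` are hypothetical stand-ins rather than PLearn API, and the loop bound is assumed because the loop header lies outside this excerpt.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical stand-in for the stage loop in train(); none of these names are PLearn API.
// Returns the number of stages whose base regressor was trained (the analogue of end_stage).
int run_stages(int max_nstages,
               const std::function<void(int)>& train_stage,
               const std::function<double(int)>& find_weight,
               const std::function<void(int)>& reweight,
               std::vector<double>& function_weights)
{
    assert(max_nstages >= 0);
    function_weights.assign(max_nstages, 0.0);
    int end_stage = 0;
    for (int stage = 0; stage < max_nstages; ++stage)  // assumed loop bound
    {
        train_stage(stage);                            // train this stage's base regressor
        end_stage = stage + 1;                         // the stage now counts as trained
        function_weights[stage] = find_weight(stage);  // analogue of findArgminFunctionWeight()
        if (function_weights[stage] <= 0.0) break;     // non-positive weight: stop boosting
        reweight(stage);                               // analogue of recomputeSampleWeight()
    }
    return end_stage;
}
```

As in the listing, a stage whose weight turns out to be non-positive is still counted by the returned value, because the counter is advanced before the weight is computed.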

| void PLearn::LocalMedBoost::verbose | ( | string | the_msg, |

| int | the_level | ||

| ) | [private] |

Definition at line 569 of file LocalMedBoost.cc.

References PLearn::endl(), and PLearn::PLearner::verbosity.

Referenced by train().
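Since verbose() references only PLearn::endl() and PLearn::PLearner::verbosity, it presumably prints the message when the learner's verbosity reaches the requested level. The body is not shown in this excerpt, so the following is a minimal sketch under that assumption. `log_if_verbose` is a hypothetical name, and both the `>=` comparison and the explicit output stream are assumptions made so that the behaviour can be checked.

```cpp
#include <cassert>   // only needed for quick checks of the sketch
#include <ostream>
#include <sstream>   // only needed for quick checks of the sketch
#include <string>

// Hypothetical sketch of a verbosity-gated logger; not the PLearn implementation.
// Writes the message only when the configured verbosity reaches the message level,
// and reports whether anything was written.
bool log_if_verbose(std::ostream& out, int verbosity,
                    const std::string& the_msg, int the_level)
{
    if (verbosity < the_level) return false;  // message is too detailed for this setting
    out << the_msg << std::endl;
    return true;
}
```

With this gating, the level 2 capacity warnings in train() would appear at a lower verbosity setting than the level 4 per-stage training message.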

Reimplemented from PLearn::PLearner.

Definition at line 136 of file LocalMedBoost.h.

Definition at line 62 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), declareOptions(), and makeDeepCopyFromShallowCopy().

TVec<real> PLearn::LocalMedBoost::base_awards [private] |

Definition at line 107 of file LocalMedBoost.h.

Referenced by build_(), computeBaseAwards(), computeFunctionWeightFormula(), and recomputeSampleWeight().

TVec<real> PLearn::LocalMedBoost::base_confidences [private] |

Definition at line 106 of file LocalMedBoost.h.

Referenced by build_(), and computeBaseAwards().

Definition at line 67 of file LocalMedBoost.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

TVec< PP<PLearner> > PLearn::LocalMedBoost::base_regressors [private] |

Definition at line 79 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

TVec<real> PLearn::LocalMedBoost::base_rewards [private] |

Definition at line 105 of file LocalMedBoost.h.

Referenced by build_(), and computeBaseAwards().

real PLearn::LocalMedBoost::bound [private] |

Definition at line 76 of file LocalMedBoost.h.

Referenced by computeLossBound(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

real PLearn::LocalMedBoost::bracket_a_start [private] |

Definition at line 120 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight(), and initializeLineSearch().

real PLearn::LocalMedBoost::bracket_b_start [private] |

Definition at line 121 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight(), and initializeLineSearch().

Definition at line 115 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight(), and initializeLineSearch().

real PLearn::LocalMedBoost::bracketing_zero [private] |

Definition at line 116 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight(), and initializeLineSearch().

Definition at line 96 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), and train().

Definition at line 97 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), and train().

Definition at line 90 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), computeFunctionWeightFormula(), initializeSampleWeight(), recomputeSampleWeight(), and train().

real PLearn::LocalMedBoost::edge [private] |

Definition at line 98 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), and train().

int PLearn::LocalMedBoost::end_stage [private] |

Definition at line 75 of file LocalMedBoost.h.

Referenced by computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

TVec<real> PLearn::LocalMedBoost::exp_weighted_edges [private] |

Definition at line 108 of file LocalMedBoost.h.

Referenced by build_(), and recomputeSampleWeight().

real PLearn::LocalMedBoost::f_a [private] |

Definition at line 126 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::f_b [private] |

Definition at line 126 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::f_c [private] |

Definition at line 126 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::f_u [private] |

Definition at line 127 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::f_v [private] |

Definition at line 127 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::f_w [private] |

Definition at line 127 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::f_x [private] |

Definition at line 127 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

TVec<real> PLearn::LocalMedBoost::function_weights [private] |

Definition at line 82 of file LocalMedBoost.h.

Referenced by computeLossBound(), computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), recomputeSampleWeight(), and train().

int PLearn::LocalMedBoost::inputsize [private] |

Definition at line 93 of file LocalMedBoost.h.

Definition at line 117 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight(), and initializeLineSearch().

Definition at line 118 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight(), and initializeLineSearch().

int PLearn::LocalMedBoost::iter [private] |

Definition at line 122 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

int PLearn::LocalMedBoost::length [private] |

Definition at line 91 of file LocalMedBoost.h.

Referenced by build_(), computeBaseAwards(), computeFunctionWeightFormula(), initializeSampleWeight(), and recomputeSampleWeight().

TVec<real> PLearn::LocalMedBoost::loss_function [private] |

Definition at line 83 of file LocalMedBoost.h.

Referenced by computeLossBound(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 63 of file LocalMedBoost.h.

Referenced by computeCostsFromOutputs(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

real PLearn::LocalMedBoost::max_learning_rate [private] |

Definition at line 119 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight(), and initializeLineSearch().

int PLearn::LocalMedBoost::max_nstages [private] |

Definition at line 66 of file LocalMedBoost.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

real PLearn::LocalMedBoost::maxt_base_award [private] |

Definition at line 77 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), declareOptions(), and makeDeepCopyFromShallowCopy().

real PLearn::LocalMedBoost::min_margin [private] |

Definition at line 99 of file LocalMedBoost.h.

Referenced by train().

string PLearn::LocalMedBoost::objective_function [private] |

Definition at line 64 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), declareOptions(), and makeDeepCopyFromShallowCopy().

real PLearn::LocalMedBoost::p_lim [private] |

Definition at line 129 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::p_step [private] |

Definition at line 129 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::p_tin [private] |

Definition at line 129 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::p_to1 [private] |

Definition at line 130 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::p_tol1 [private] |

Definition at line 130 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::p_tol2 [private] |

Definition at line 130 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

int PLearn::LocalMedBoost::regression_tree [private] |

Definition at line 65 of file LocalMedBoost.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

real PLearn::LocalMedBoost::robustness [private] |

Definition at line 61 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), computeFunctionWeightFormula(), computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::LocalMedBoost::sample_costs [private] |

Definition at line 104 of file LocalMedBoost.h.

Referenced by build_(), computeBaseAwards(), and train().

Vec PLearn::LocalMedBoost::sample_input [private] |

Definition at line 100 of file LocalMedBoost.h.

Referenced by build_(), computeBaseAwards(), and train().

Vec PLearn::LocalMedBoost::sample_output [private] |

Definition at line 103 of file LocalMedBoost.h.

Referenced by build_(), computeBaseAwards(), and train().

Vec PLearn::LocalMedBoost::sample_target [private] |

Definition at line 101 of file LocalMedBoost.h.

Referenced by build_(), computeBaseAwards(), and train().

real PLearn::LocalMedBoost::sample_weight [private] |

Definition at line 102 of file LocalMedBoost.h.

Referenced by computeBaseAwards(), and train().

TVec<real> PLearn::LocalMedBoost::sample_weights [private] |

Definition at line 84 of file LocalMedBoost.h.

Referenced by build_(), computeFunctionWeightFormula(), declareOptions(), initializeSampleWeight(), makeDeepCopyFromShallowCopy(), and recomputeSampleWeight().

Definition at line 78 of file LocalMedBoost.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 109 of file LocalMedBoost.h.

Referenced by recomputeSampleWeight().

real PLearn::LocalMedBoost::t_p [private] |

Definition at line 128 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::t_q [private] |

Definition at line 128 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::t_r [private] |

Definition at line 128 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::t_sav [private] |

Definition at line 128 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

int PLearn::LocalMedBoost::targetsize [private] |

Definition at line 94 of file LocalMedBoost.h.

Definition at line 68 of file LocalMedBoost.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

TVec< PP<RegressionTree> > PLearn::LocalMedBoost::tree_regressors [private] |

Definition at line 80 of file LocalMedBoost.h.

Referenced by train().

Definition at line 69 of file LocalMedBoost.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

TVec< PP<BaseRegressorWrapper> > PLearn::LocalMedBoost::tree_wrappers [private] |

Definition at line 81 of file LocalMedBoost.h.

Referenced by train().

int PLearn::LocalMedBoost::weightsize [private] |

Definition at line 95 of file LocalMedBoost.h.

int PLearn::LocalMedBoost::width [private] |

Definition at line 92 of file LocalMedBoost.h.

Referenced by build_().

real PLearn::LocalMedBoost::x_a [private] |

Definition at line 123 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_b [private] |

Definition at line 123 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_c [private] |

Definition at line 123 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_d [private] |

Definition at line 123 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_e [private] |

Definition at line 123 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_lim [private] |

Definition at line 125 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_u [private] |

Definition at line 124 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_v [private] |

Definition at line 124 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_w [private] |

Definition at line 124 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_x [private] |

Definition at line 124 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().

real PLearn::LocalMedBoost::x_xmed [private] |

Definition at line 125 of file LocalMedBoost.h.

Referenced by findArgminFunctionWeight().
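Many of the members listed above are referenced only by findArgminFunctionWeight() and initializeLineSearch(), which suggests that they hold the state of a one-dimensional line search over the function weight. The excerpt does not show that search, so the following illustrates only the general idea of bracketing a minimum. It uses golden-section search, a simpler technique substituted here for brevity, and `golden_section_argmin` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// Golden-section search for the minimizer of a unimodal function on [a, b].
// A simpler stand-in for illustration; not the routine used by findArgminFunctionWeight().
double golden_section_argmin(const std::function<double(double)>& f,
                             double a, double b, double tol = 1e-8)
{
    assert(a < b && tol > 0.0);
    const double inv_phi = (std::sqrt(5.0) - 1.0) / 2.0;  // 1/phi, about 0.618
    double c = b - inv_phi * (b - a);   // lower interior point
    double d = a + inv_phi * (b - a);   // upper interior point
    double fc = f(c), fd = f(d);
    while (b - a > tol)
    {
        if (fc < fd)
        {
            // Minimum lies in [a, d]: the old c becomes the new upper interior point.
            b = d; d = c; fd = fc;
            c = b - inv_phi * (b - a);
            fc = f(c);
        }
        else
        {
            // Minimum lies in [c, b]: the old d becomes the new lower interior point.
            a = c; c = d; fc = fd;
            d = a + inv_phi * (b - a);
            fd = f(d);
        }
    }
    return 0.5 * (a + b);
}
```

Each iteration reuses one of the two interior evaluations, so the bracket shrinks by a constant factor at the cost of a single new function evaluation.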

1.7.4
