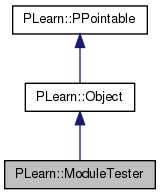

PLearn 0.1

Tests an OnlineLearningModule by checking that its gradients are properly accumulated and agree with finite-difference estimates.

#include <ModuleTester.h>

Public Member Functions | |

| ModuleTester () | |

| Default constructor. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual ModuleTester * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| PP< OnlineLearningModule > | module |

| TVec< map< string, TVec < string > > > | configurations |

| map< string, PP< VMatrix > > | sampling_data |

| TVec< int32_t > | seeds |

| int | default_length |

| int | default_width |

| real | max_in |

| real | max_out_grad |

| real | min_in |

| real | min_out_grad |

| real | step |

| real | absolute_tolerance_threshold |

| real | absolute_tolerance |

| real | relative_tolerance |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef Object | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building (and performs testing of the module). | |

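Tests an OnlineLearningModule by checking its gradient computations.

For each seed in 'seeds' and each port configuration in 'configurations', the tester fills the input ports with data (uniformly sampled between 'min_in' and 'max_in', or taken from 'sampling_data'), runs fprop(), and then performs two checks. First, it verifies that bpropAccUpdate() properly accumulates into the input gradients by comparing the accumulated result with the output of bpropUpdate(). Second, it perturbs each input element by 'step' and compares the resulting finite-difference gradient estimate with the computed gradient, using the configured tolerances. The tests run when the object is built (see build_()).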

Definition at line 57 of file ModuleTester.h.

| typedef Object PLearn::ModuleTester::inherited [private] |

Reimplemented from PLearn::Object.

Definition at line 59 of file ModuleTester.h.

| PLearn::ModuleTester::ModuleTester | ( | ) |

Default constructor.

Definition at line 55 of file ModuleTester.cc.

:

seeds(TVec<int32_t>(1, int32_t(1827))),

default_length(10),

default_width(5),

max_in(1),

max_out_grad(MISSING_VALUE),

min_in(0),

min_out_grad(MISSING_VALUE),

step(1e-6),

absolute_tolerance_threshold(1),

absolute_tolerance(1e-5),

relative_tolerance(1e-5)

{}

| string PLearn::ModuleTester::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

| OptionList & PLearn::ModuleTester::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

| RemoteMethodMap & PLearn::ModuleTester::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

| bool PLearn::ModuleTester::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

| Object * PLearn::ModuleTester::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

| void PLearn::ModuleTester::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

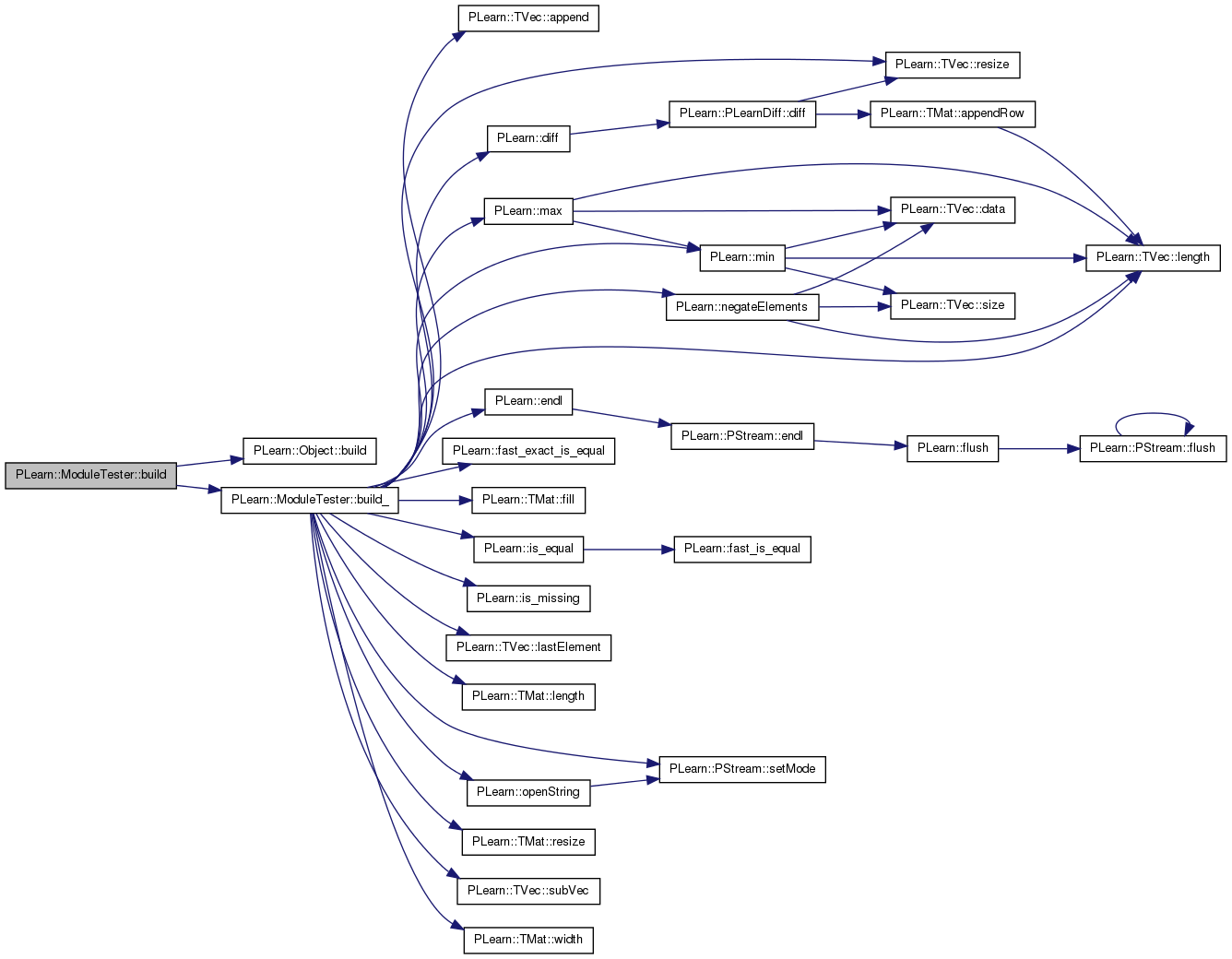

| void PLearn::ModuleTester::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::Object.

Definition at line 69 of file ModuleTester.cc.

References PLearn::Object::build(), and build_().

{

inherited::build();

build_();

}
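The two-stage convention described above (call inherited::build() first, then the class's own build_()) can be illustrated with a small self-contained sketch. The classes `Base` and `Derived`, the `log` member, and the `size` and `buffer` fields are hypothetical stand-ins introduced for illustration; they are not PLearn types.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for a base class such as PLearn::Object.
class Base {
public:
    virtual ~Base() {}
    virtual void build() { build_(); }
    std::vector<std::string> log;  // records the order of build_() calls
private:
    void build_() { log.push_back("Base::build_"); }
};

// Hypothetical subclass following the same convention as ModuleTester.
class Derived : public Base {
    typedef Base inherited;
public:
    int size = 0;                // an "option" that may change between builds
    std::vector<double> buffer;  // state derived from the option
    virtual void build() {
        inherited::build();  // parent classes finish building first
        build_();            // then this class does its own work
    }
private:
    void build_() {
        log.push_back("Derived::build_");
        buffer.assign(size, 0.0);  // safe to redo after 'size' changes
    }
};
```

Because build_() recomputes all derived state from the option fields, calling build() again after changing `size` leaves the object consistent, which is the property the description above asks for.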

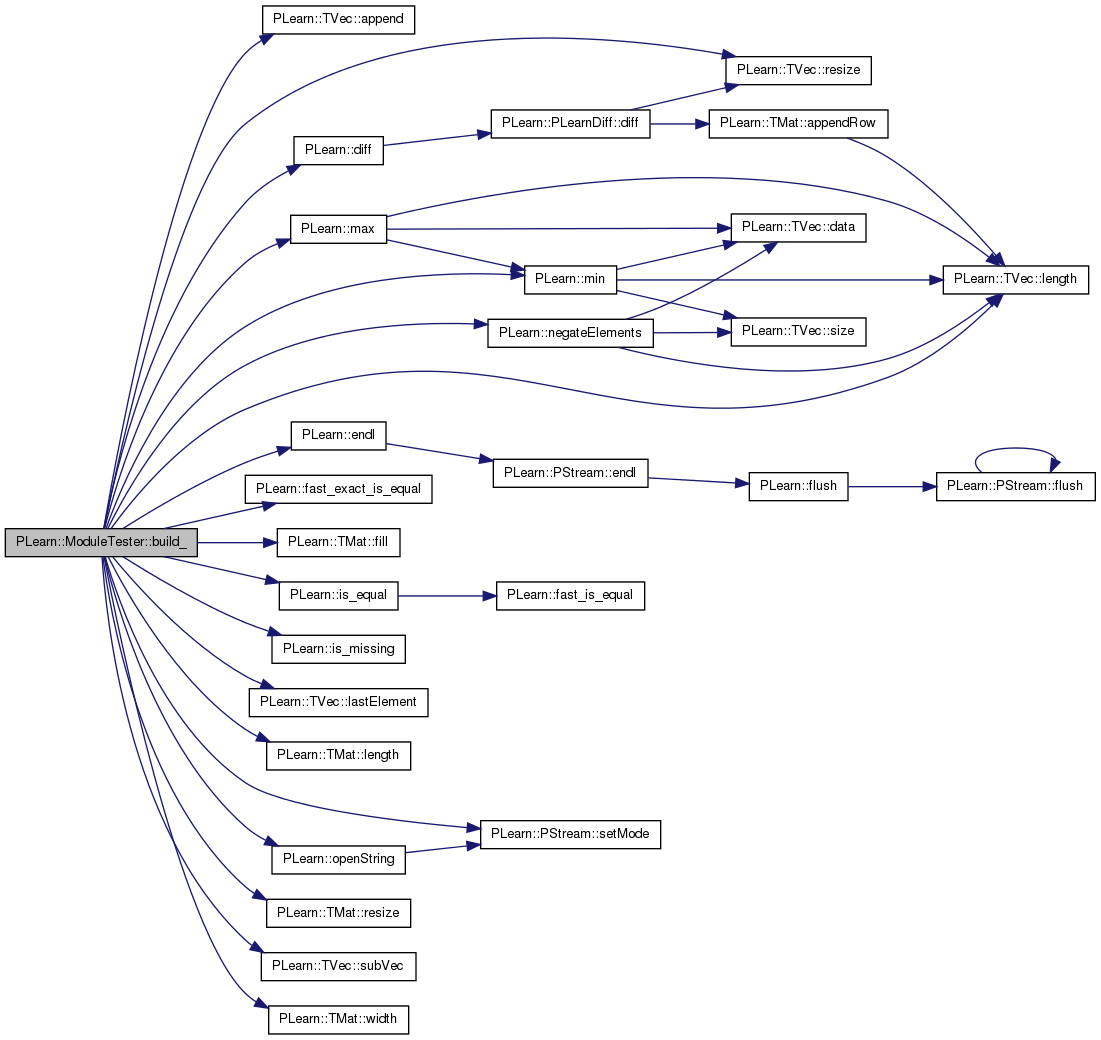

| void PLearn::ModuleTester::build_ | ( | ) | [private] |

This does the actual building (and performs testing of the module).

Reimplemented from PLearn::Object.

Definition at line 174 of file ModuleTester.cc.

References absolute_tolerance, absolute_tolerance_threshold, PLearn::TVec< T >::append(), configurations, default_length, default_width, PLearn::diff(), PLearn::endl(), PLearn::fast_exact_is_equal(), PLearn::TMat< T >::fill(), grad, i, PLearn::is_equal(), PLearn::is_missing(), j, PLearn::TVec< T >::lastElement(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), m, PLearn::max(), max_in, max_out_grad, PLearn::min(), min_in, min_out_grad, module, PLearn::negateElements(), PLearn::openString(), PLASSERT, PLCHECK, PLCHECK_MSG, PLearn::PStream::plearn_ascii, PLearn::pout, PLearn::PStream::raw_ascii, relative_tolerance, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), sampling_data, seeds, PLearn::PStream::setMode(), step, PLearn::TVec< T >::subVec(), and PLearn::TMat< T >::width().

Referenced by build().
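The first check performed by build_() is the accumulation test: the input gradient buffer is pre-filled with random values, an accumulating backward pass is run, and the difference between the result and the initial values is compared with the output of a non-accumulating backward pass. A minimal sketch of that idea follows. It assumes a toy module y = 3x; the functions `bprop`, `bprop_acc` and `accumulates_properly` are hypothetical and only play the roles of bpropUpdate() and bpropAccUpdate().

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

typedef std::vector<double> Vec;

// Hypothetical toy module y = 3 * x, so dL/dx = 3 * dL/dy.
// Overwriting backward pass (plays the role of bpropUpdate).
void bprop(const Vec& out_grad, Vec& in_grad) {
    in_grad.assign(out_grad.size(), 0.0);
    for (std::size_t i = 0; i < out_grad.size(); ++i)
        in_grad[i] = 3.0 * out_grad[i];
}

// Accumulating backward pass (plays the role of bpropAccUpdate).
void bprop_acc(const Vec& out_grad, Vec& in_grad) {
    for (std::size_t i = 0; i < out_grad.size(); ++i)
        in_grad[i] += 3.0 * out_grad[i];
}

// Returns true if (accumulated - initial) matches a fresh, non-accumulated
// gradient. 'initial' must have the same size as 'out_grad'.
bool accumulates_properly(const Vec& out_grad, const Vec& initial) {
    Vec acc = initial;         // pre-filled gradient buffer
    bprop_acc(out_grad, acc);  // acc = initial + gradient
    Vec fresh;
    bprop(out_grad, fresh);    // fresh = gradient only
    for (std::size_t i = 0; i < acc.size(); ++i)
        if (std::fabs((acc[i] - initial[i]) - fresh[i]) > 1e-12)
            return false;
    return true;
}
```

A module whose accumulating pass overwrote the buffer instead of adding to it would fail this comparison, because subtracting the initial values would no longer recover the plain gradient.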

{

if (!module)

return;

PP<PRandom> random_gen = new PRandom();

TVec<Mat*> fprop_data(module->nPorts()); // Input to 'fprop'.

TVec<Mat*> bprop_data(module->nPorts()); // Input to 'bpropAccUpdate'.

// We also use additional matrices to store gradients in order to ensure

// the module is properly accumulating.

TVec<Mat*> bprop_check(module->nPorts());

// Store previous fprop result in order to be able to estimate gradient.

TVec<Mat*> fprop_check(module->nPorts());

// Initialize workspace for matrices. Note that we need to allocate enough

// memory from start, as otherwise an append may make previous Mat*

// pointers invalid.

int max_mats_size = 1000;

TVec<Mat> mats(max_mats_size);

PP<PRandom> sub_rng = NULL;

int32_t default_seed = 1827;

if (!module->random_gen) {

// The module needs to be provided a random generator.

sub_rng = new PRandom();

module->random_gen = sub_rng;

module->build();

sub_rng->manual_seed(default_seed);

module->forget();

}

bool ok = true;

for (int j = 0; ok && j < seeds.length(); j++) {

random_gen->manual_seed(seeds[j]);

for (int i = 0; ok && i < configurations.length(); i++) {

map<string, TVec<string> >& conf = configurations[i];

const TVec<string>& in_grad = conf["in_grad"];

const TVec<string>& in_nograd = conf["in_nograd"];

const TVec<string>& out_grad = conf["out_grad"];

const TVec<string>& out_nograd = conf["out_nograd"];

TVec<string> all_in(in_grad.length() + in_nograd.length());

all_in.subVec(0, in_grad.length()) << in_grad;

all_in.subVec(in_grad.length(), in_nograd.length()) << in_nograd;

TVec<string> all_out(out_grad.length() + out_nograd.length());

all_out.subVec(0, out_grad.length()) << out_grad;

all_out.subVec(out_grad.length(), out_nograd.length()) << out_nograd;

mats.resize(0);

// Prepare fprop data.

fprop_data.fill(NULL);

fprop_check.fill(NULL);

for (int k = 0; k < all_in.length(); k++) {

const string& port = all_in[k];

int length = module->getPortLength(port);

int width = module->getPortWidth(port);

if (length < 0)

length = default_length;

if (width < 0)

width = default_width;

mats.append(Mat());

PLCHECK( mats.length() <= max_mats_size );

Mat* in_k = & mats.lastElement();

fprop_data[module->getPortIndex(port)] = in_k;

// Fill 'in_k' randomly.

map<string, PP<VMatrix> >::iterator it =

sampling_data.find(port);

if (it == sampling_data.end()) {

in_k->resize(length, width);

if (fast_exact_is_equal(min_in, max_in))

in_k->fill(min_in);

else

random_gen->fill_random_uniform(*in_k, min_in, max_in);

} else {

PP<VMatrix> vmat = it->second;

in_k->resize(vmat->length(), vmat->width());

*in_k << vmat->toMat();

}

}

for (int k = 0; k < all_out.length(); k++) {

const string& port = all_out[k];

mats.append(Mat());

PLCHECK( mats.length() <= max_mats_size );

Mat* out_k = & mats.lastElement();

int idx = module->getPortIndex(port);

fprop_data[idx] = out_k;

mats.append(Mat());

PLCHECK( mats.length() <= max_mats_size );

fprop_check[idx] = & mats.lastElement();

}

// Perform fprop.

if (sub_rng)

sub_rng->manual_seed(default_seed);

module->forget();

module->fprop(fprop_data);

// Debug output.

string output;

PStream out_s = openString(output, PStream::plearn_ascii, "w");

for (int k = 0; k < fprop_data.length(); k++) {

out_s.setMode(PStream::raw_ascii);

out_s << "FPROP(" + module->getPortName(k) + "):\n";

Mat* m = fprop_data[k];

if (!m) {

out_s << "null";

} else {

out_s.setMode(PStream::plearn_ascii);

out_s << *m;

}

}

out_s << endl;

out_s = NULL;

DBG_MODULE_LOG << output;

// Prepare bprop data.

bprop_data.fill(NULL);

bprop_check.fill(NULL);

for (int k = 0; k < in_grad.length(); k++) {

const string& port = in_grad[k];

mats.append(Mat());

PLCHECK( mats.length() <= max_mats_size );

Mat* in_grad_k = & mats.lastElement();

int idx = module->getPortIndex(port);

Mat* in_k = fprop_data[idx];

// We fill 'in_grad_k' with random elements to check proper

// accumulation.

in_grad_k->resize(in_k->length(), in_k->width());

random_gen->fill_random_uniform(*in_grad_k, -1, 1);

mats.append(Mat());

PLCHECK( mats.length() <= max_mats_size );

// Do a copy of initial gradient to allow comparison later.

Mat* in_check_k = & mats.lastElement();

in_check_k->resize(in_grad_k->length(), in_grad_k->width());

*in_check_k << *in_grad_k;

in_grad_k->resize(0, in_grad_k->width());

bprop_data[idx] = in_grad_k;

bprop_check[idx] = in_check_k;

}

for (int k = 0; k < out_grad.length(); k++) {

const string& port = out_grad[k];

mats.append(Mat());

PLCHECK( mats.length() <= max_mats_size );

Mat* out_grad_k = & mats.lastElement();

int idx = module->getPortIndex(port);

Mat* out_k = fprop_data[idx];

out_grad_k->resize(out_k->length(), out_k->width());

real min = is_missing(min_out_grad) ? min_in : min_out_grad;

real max = is_missing(max_out_grad) ? max_in : max_out_grad;

PLCHECK_MSG( sampling_data.find(port) == sampling_data.end(),

"Specific sampling data not yet implemented for output"

" gradients" );

if (fast_exact_is_equal(min, max))

// Special case to handle in particular the case when we

// want the gradient to be exactly 1 (for instance for a

// cost).

out_grad_k->fill(min);

else

random_gen->fill_random_uniform(*out_grad_k, min, max);

bprop_data[idx] = out_grad_k;

}

// Perform bprop.

if (sub_rng)

sub_rng->manual_seed(default_seed);

module->forget();

module->bpropAccUpdate(fprop_data, bprop_data);

// Debug output.

out_s = openString(output, PStream::plearn_ascii, "w");

for (int k = 0; k < bprop_data.length(); k++) {

out_s.setMode(PStream::raw_ascii);

out_s << "BPROP(" + module->getPortName(k) + "):\n";

Mat* m = bprop_data[k];

if (!m) {

out_s << " *** NULL ***\n";

} else {

out_s.setMode(PStream::plearn_ascii);

out_s << *m;

}

}

out_s << endl;

out_s = NULL;

DBG_MODULE_LOG << output;

// Check the gradient was properly accumulated.

// First compute the difference between computed gradient and the

// initial value stored in the gradient matrix.

for (int k = 0; k < in_grad.length(); k++) {

int idx = module->getPortIndex(in_grad[k]);

Mat* grad = bprop_data[idx];

if (grad) {

Mat* grad_check = bprop_check[idx];

PLASSERT( grad_check );

*grad_check -= *grad;

negateElements(*grad_check);

}

}

// Then perform a new bprop pass, without accumulating.

for (int k = 0; k < in_grad.length(); k++) {

int idx = module->getPortIndex(in_grad[k]);

bprop_data[idx]->resize(0, bprop_data[idx]->width());

}

if (sub_rng)

sub_rng->manual_seed(default_seed);

module->forget(); // Ensure we are using same parameters.

module->bpropUpdate(fprop_data, bprop_data);

// Then compare 'bprop_data' and 'bprop_check'.

for (int k = 0; k < in_grad.length(); k++) {

int idx = module->getPortIndex(in_grad[k]);

Mat* grad = bprop_data[idx];

PLASSERT( grad );

Mat* check = bprop_check[idx];

PLASSERT( check );

// TODO Using the PLearn diff mechanism would be better.

for (int p = 0; p < grad->length(); p++)

for (int q = 0; q < grad->width(); q++)

if (!is_equal((*grad)(p,q), (*check)(p,q))) {

pout << "Gradient for port '" <<

module->getPortName(idx) << "' was not " <<

"properly accumulated: " << (*grad)(p,q) <<

" != " << (*check)(p,q) << endl;

ok = false;

}

}

// Continue only if accumulation test passed.

if (!ok)

return;

DBG_MODULE_LOG << "Accumulation test successful" << endl;

// Verify gradient is coherent with the input, through a subtle

// perturbation of said input.

// Save result of fprop.

for (int k = 0; k < all_out.length(); k++) {

int idx = module->getPortIndex(all_out[k]);

Mat* val = fprop_data[idx];

Mat* check = fprop_check[idx];

PLASSERT( val && check );

check->resize(val->length(), val->width());

*check << *val;

DBG_MODULE_LOG << "Reference fprop data (" << all_out[k] << ")"

<< ":" << endl << *check << endl;

}

for (int k = 0; ok && k < in_grad.length(); k++) {

int idx = module->getPortIndex(in_grad[k]);

Mat* grad = bprop_data[idx];

Mat* val = fprop_data[idx];

Mat* b_check = bprop_check[idx];

PLASSERT( grad && val && b_check );

grad->fill(0); // Will be used to store estimated gradient.

for (int p = 0; p < grad->length(); p++)

for (int q = 0; q < grad->width(); q++) {

real backup = (*val)(p, q);

(*val)(p, q) += step;

for (int r = 0; r < all_out.length(); r++) {

int to_clear = module->getPortIndex(all_out[r]);

PLASSERT( to_clear != idx );

fprop_data[to_clear]->resize(0, 0);

}

if (sub_rng)

sub_rng->manual_seed(default_seed);

module->forget();

module->fprop(fprop_data);

(*val)(p, q) = backup;

// Estimate gradient w.r.t. each output.

for (int r = 0; r < out_grad.length(); r++) {

int out_idx = module->getPortIndex(out_grad[r]);

Mat* out_val = fprop_data[out_idx];

Mat* out_prev = fprop_check[out_idx];

Mat* out_grad_ = bprop_data[out_idx];

PLASSERT( out_val && out_prev && out_grad_ );

for (int oi = 0; oi < out_val->length(); oi++)

for (int oj = 0; oj < out_val->width(); oj++) {

real diff = (*out_val)(oi, oj) -

(*out_prev)(oi, oj);

(*grad)(p, q) +=

diff * (*out_grad_)(oi, oj) / step;

DBG_MODULE_LOG << " diff = " << diff <<

endl << " step = " << step << endl <<

" out_grad = " << (*out_grad_)(oi, oj)

<< endl << " grad = " << (*grad)(p, q)

<< endl;

}

}

}

// Compare estimated and computed gradients.

for (int p = 0; p < grad->length(); p++)

for (int q = 0; q < grad->width(); q++)

if (!is_equal((*grad)(p,q), (*b_check)(p,q),

absolute_tolerance_threshold,

absolute_tolerance, relative_tolerance)) {

pout << "Gradient for port '" <<

module->getPortName(idx) << "' was not " <<

"properly computed: finite difference (" <<

(*grad)(p,q) << ") != computed (" <<

(*b_check)(p,q) << ")" << endl;

ok = false;

} else {

DBG_MODULE_LOG << "Gradient for port '" <<

module->getPortName(idx) << "' was " <<

"properly computed: finite difference (" <<

(*grad)(p,q) << ") == computed (" <<

(*b_check)(p,q) << ")" << endl;

}

}

}

}

if (ok)

pout << "All tests passed successfully on module " <<

module->classname() << endl;

else

pout << "*** ERROR ***" << endl;

}

| string PLearn::ModuleTester::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

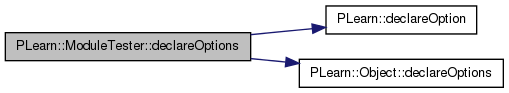

| void PLearn::ModuleTester::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::Object.

Definition at line 89 of file ModuleTester.cc.

References absolute_tolerance, absolute_tolerance_threshold, PLearn::OptionBase::buildoption, configurations, PLearn::declareOption(), PLearn::Object::declareOptions(), default_length, default_width, max_in, max_out_grad, min_in, min_out_grad, module, relative_tolerance, sampling_data, seeds, and step.

{

declareOption(ol, "module", &ModuleTester::module,

OptionBase::buildoption,

"The module to be tested.");

declareOption(ol, "configurations", &ModuleTester::configurations,

OptionBase::buildoption,

"List of port configurations to test. Each element is a map from a\n"

"string to a list of corresponding ports. This string can be one of:\n"

" - 'in_grad': input ports for which a gradient must be computed\n"

" - 'in_nograd': input ports for which no gradient is computed\n"

" - 'out_grad': output ports for which a gradient must be provided\n"

" - 'out_nograd': output ports for which no gradient is provided");

declareOption(ol, "min_in", &ModuleTester::min_in,

OptionBase::buildoption,

"Minimum value used when uniformly sampling input data.");

declareOption(ol, "max_in", &ModuleTester::max_in,

OptionBase::buildoption,

"Maximum value used when uniformly sampling input data.");

declareOption(ol, "min_out_grad", &ModuleTester::min_out_grad,

OptionBase::buildoption,

"Minimum value used when uniformly sampling output gradient data.\n"

"If missing, then 'min_in' is used.");

declareOption(ol, "max_out_grad", &ModuleTester::max_out_grad,

OptionBase::buildoption,

"Maximum value used when uniformly sampling output gradient data.\n"

"If missing, then 'max_in' is used.");

declareOption(ol, "sampling_data", &ModuleTester::sampling_data,

OptionBase::buildoption,

"A map from port names to specific data to use when sampling (either\n"

"input data or output gradient data, depending on whether the port\n"

"is an input or output) for this port. This means the port data is\n"

"actually not sampled, but filled with the provided VMatrix (which\n"

"might be a VMatrixFromDistribution if sampling is needed).");

declareOption(ol, "seeds", &ModuleTester::seeds,

OptionBase::buildoption,

"Seeds used for random number generation. You can try different seeds "

"if you want to test more situations.");

declareOption(ol, "default_length", &ModuleTester::default_length,

OptionBase::buildoption,

"Default length of a port used when the module returns an undefined "

"port length (-1 in getPortLength())");

declareOption(ol, "default_width", &ModuleTester::default_width,

OptionBase::buildoption,

"Default width of a port used when the module returns an undefined "

"port width (-1 in getPortWidth())");

declareOption(ol, "step", &ModuleTester::step,

OptionBase::buildoption,

"Small offset used to modify inputs in order to estimate the\n"

"gradient by finite difference.");

declareOption(ol, "absolute_tolerance_threshold",

&ModuleTester::absolute_tolerance_threshold,

OptionBase::buildoption,

"Value below which we use absolute tolerance instead of relative in\n"

"order to compare gradients.");

declareOption(ol, "absolute_tolerance",

&ModuleTester::absolute_tolerance,

OptionBase::buildoption,

"Absolute tolerance when comparing gradients.");

declareOption(ol, "relative_tolerance",

&ModuleTester::relative_tolerance,

OptionBase::buildoption,

"Relative tolerance when comparing gradients.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
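The three tolerance options interact as the help strings describe: below 'absolute_tolerance_threshold' an absolute tolerance is used, and otherwise a relative one. The exact rule applied by PLearn's is_equal() is not shown on this page, so the following is only one plausible reading, written as a hypothetical function `approx_equal`. It assumes the threshold is tested against the larger magnitude of the two values; the default arguments mirror the constructor defaults listed above.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical comparison rule; not PLearn's is_equal().
bool approx_equal(double a, double b,
                  double absolute_tolerance_threshold = 1.0,
                  double absolute_tolerance = 1e-5,
                  double relative_tolerance = 1e-5) {
    const double diff = std::fabs(a - b);
    const double magnitude = std::max(std::fabs(a), std::fabs(b));
    if (magnitude < absolute_tolerance_threshold)
        return diff <= absolute_tolerance;       // small values: absolute test
    return diff <= relative_tolerance * magnitude;  // large values: relative test
}
```

Under this reading, gradients near zero are not held to an impossibly tight relative tolerance, while large gradients are allowed an error proportional to their size.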

| static const PPath& PLearn::ModuleTester::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::Object.

Definition at line 98 of file ModuleTester.h.

| ModuleTester * PLearn::ModuleTester::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

| OptionList & PLearn::ModuleTester::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

| OptionMap & PLearn::ModuleTester::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

| RemoteMethodMap & PLearn::ModuleTester::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file ModuleTester.cc.

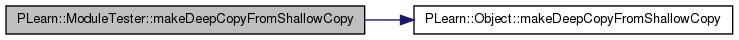

| void PLearn::ModuleTester::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::Object.

Definition at line 75 of file ModuleTester.cc.

References PLearn::Object::makeDeepCopyFromShallowCopy(), and PLERROR.

{

inherited::makeDeepCopyFromShallowCopy(copies);

// ### Call deepCopyField on all "pointer-like" fields

// ### that you wish to be deepCopied rather than

// ### shallow-copied.

// ### ex:

// deepCopyField(trainvec, copies);

// ### Remove this line when you have fully implemented this method.

PLERROR("ModuleTester::makeDeepCopyFromShallowCopy not fully (correctly) implemented yet!");

}

| StaticInitializer PLearn::ModuleTester::_static_initializer_ [static] |

Reimplemented from PLearn::Object.

Definition at line 98 of file ModuleTester.h.

| real PLearn::ModuleTester::absolute_tolerance |

Definition at line 81 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| real PLearn::ModuleTester::absolute_tolerance_threshold |

Definition at line 80 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| TVec< map<string, TVec<string> > > PLearn::ModuleTester::configurations |

Definition at line 66 of file ModuleTester.h.

Referenced by build_(), and declareOptions().
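Each element of 'configurations' maps the keys 'in_grad', 'in_nograd', 'out_grad' and 'out_nograd' to lists of port names, and build_() concatenates the two input lists and the two output lists before preparing data. The sketch below shows that structure with standard containers in place of TVec. The port names "input", "target" and "cost" and the helpers `concat` and `example_configuration` are hypothetical, chosen only for illustration.

```cpp
#include <map>
#include <string>
#include <vector>

typedef std::vector<std::string> Ports;
typedef std::map<std::string, Ports> Configuration;

// Concatenates the ports listed under two keys.
// A missing key is treated as an empty list.
Ports concat(const Configuration& conf,
             const std::string& first, const std::string& second) {
    Ports all;
    const std::string keys[2] = { first, second };
    for (int k = 0; k < 2; ++k) {
        Configuration::const_iterator it = conf.find(keys[k]);
        if (it != conf.end())
            all.insert(all.end(), it->second.begin(), it->second.end());
    }
    return all;
}

// Hypothetical configuration: gradient is checked for "input",
// "target" is an input without gradient, "cost" receives an output gradient.
Configuration example_configuration() {
    Configuration conf;
    conf["in_grad"].push_back("input");
    conf["in_nograd"].push_back("target");
    conf["out_grad"].push_back("cost");
    return conf;
}
```

For this example, `concat(conf, "in_grad", "in_nograd")` yields the ports "input" and "target" in that order, and `concat(conf, "out_grad", "out_nograd")` yields only "cost".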

| int PLearn::ModuleTester::default_length |

Definition at line 71 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| int PLearn::ModuleTester::default_width |

Definition at line 72 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| real PLearn::ModuleTester::max_in |

Definition at line 74 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| real PLearn::ModuleTester::max_out_grad |

Definition at line 75 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| real PLearn::ModuleTester::min_in |

Definition at line 76 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| real PLearn::ModuleTester::min_out_grad |

Definition at line 77 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| PP<OnlineLearningModule> PLearn::ModuleTester::module |

Definition at line 64 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| real PLearn::ModuleTester::relative_tolerance |

Definition at line 82 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| map< string, PP<VMatrix> > PLearn::ModuleTester::sampling_data |

Definition at line 67 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

| TVec<int32_t> PLearn::ModuleTester::seeds |

Definition at line 69 of file ModuleTester.h.

Referenced by build_(), and declareOptions().
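The tester reseeds its random generators before each pass so that every fprop and bprop call sees the same module parameters and the same sampled data for a given seed. The general property it relies on, that reseeding a generator with the same value replays the same sequence, can be sketched with the standard library. Here `std::mt19937` stands in for PRandom, and the helper `draw` is hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical helper: returns the first 'n' raw draws of a generator
// seeded with 'seed'. std::mt19937 is used here in place of PRandom.
std::vector<std::uint32_t> draw(std::int32_t seed, std::size_t n) {
    std::mt19937 gen(static_cast<std::uint32_t>(seed));
    std::vector<std::uint32_t> values(n);
    for (std::size_t i = 0; i < n; ++i)
        values[i] = static_cast<std::uint32_t>(gen());
    return values;
}
```

Calling `draw(1827, 5)` twice returns identical vectors, while a different seed gives a different sequence. That is why adding more entries to 'seeds' exercises the module on different data while keeping each individual run reproducible.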

| real PLearn::ModuleTester::step |

Definition at line 79 of file ModuleTester.h.

Referenced by build_(), and declareOptions().

1.7.4