|

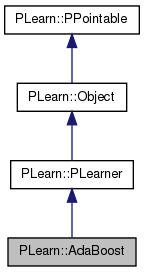

PLearn 0.1


#include <AdaBoost.h>

Public Member Functions | |

| AdaBoost () | |

| virtual void | build () |

| simply calls inherited::build() then build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual AdaBoost * | deepCopy (CopiesMap &copies) const |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and options). This implementation of AdaBoost always performs two-class classification, hence returns 1, or 2 when reuse_test_results is true (the second element then holds the unnormalized weighted vote). | |

| virtual void | finalize () |

| *** SUBCLASS WRITING: *** | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs, VMat testcosts) const |

| Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method) | |

| virtual TVec< string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

| virtual void | setTrainingSet (VMat training_set, bool call_forget=true) |

| Declares the training set. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| PP< PLearner > | weak_learner_template |

| Weak learner to use as a template for each boosting round. | |

| real | target_error |

| bool | provide_learner_expdir |

| real | output_threshold |

| bool | compute_training_error |

| bool | pseudo_loss_adaboost |

| bool | conf_rated_adaboost |

| bool | weight_by_resampling |

| bool | early_stopping |

| bool | save_often |

| bool | forward_sub_learner_test_costs |

| bool | modif_train_set_weights |

| bool | reuse_test_results |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes | |

| Vec | learners_error |

| Vec | example_weights |

| Vec | voting_weights |

| real | sum_voting_weights |

| real | initial_sum_weights |

| TVec< PP< PLearner > > | weak_learners |

| Vector of weak learners learned from boosting. | |

| bool | found_zero_error_weak_learner |

| Indication that a weak learner with 0 training error has been found. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

| void | computeTrainingError (Vec input, Vec target) |

| void | computeOutput_ (const Vec &input, Vec &output, const int start=0, const real sum=0.) const |

Private Attributes | |

| Vec | weighted_costs |

| Global storage to save memory allocations. | |

| Vec | sum_weighted_costs |

| Vec | weak_learner_output |

| TVec< VMat > | saved_testset |

| Used with reuse_test_results. | |

| TVec< VMat > | saved_testoutputs |

| TVec< int > | saved_last_test_stages |

Definition at line 51 of file AdaBoost.h.

typedef PLearner PLearn::AdaBoost::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file AdaBoost.h.

| PLearn::AdaBoost::AdaBoost | ( | ) |

Definition at line 58 of file AdaBoost.cc.

: sum_voting_weights(0.0), initial_sum_weights(0.0), found_zero_error_weak_learner(0), target_error(0.5), provide_learner_expdir(false), output_threshold(0.5), compute_training_error(1), pseudo_loss_adaboost(1), conf_rated_adaboost(0), weight_by_resampling(1), early_stopping(1), save_often(0), forward_sub_learner_test_costs(false), modif_train_set_weights(false), reuse_test_results(false) { }

| string PLearn::AdaBoost::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 106 of file AdaBoost.cc.

| OptionList & PLearn::AdaBoost::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 106 of file AdaBoost.cc.

| RemoteMethodMap & PLearn::AdaBoost::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 106 of file AdaBoost.cc.

| bool PLearn::AdaBoost::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 106 of file AdaBoost.cc.

| Object * PLearn::AdaBoost::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 106 of file AdaBoost.cc.

| void PLearn::AdaBoost::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 106 of file AdaBoost.cc.

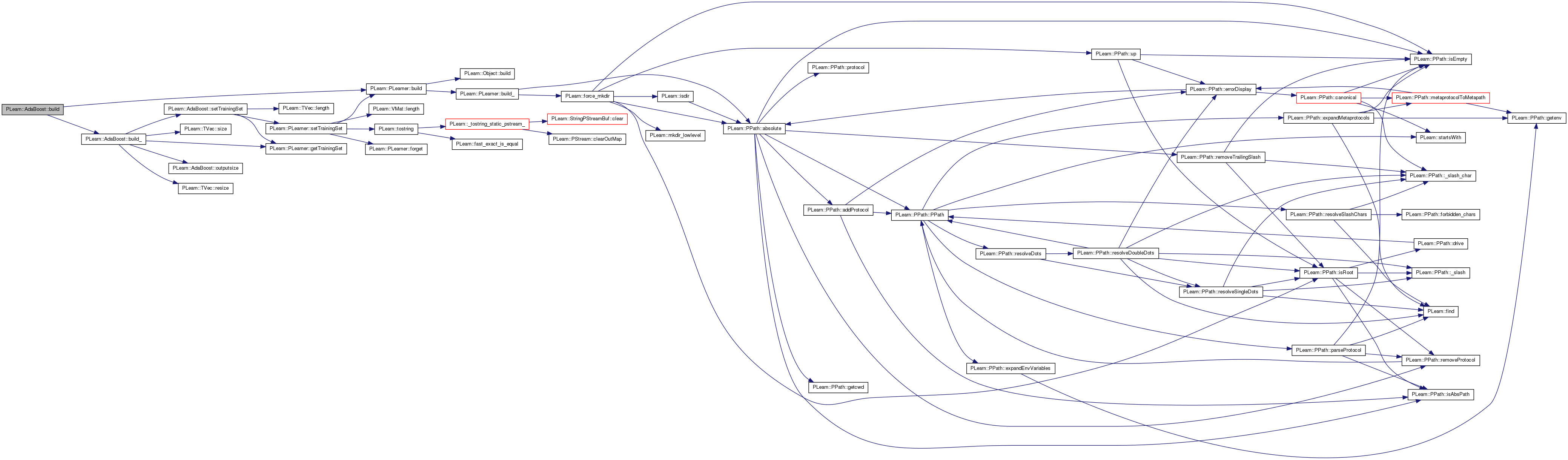

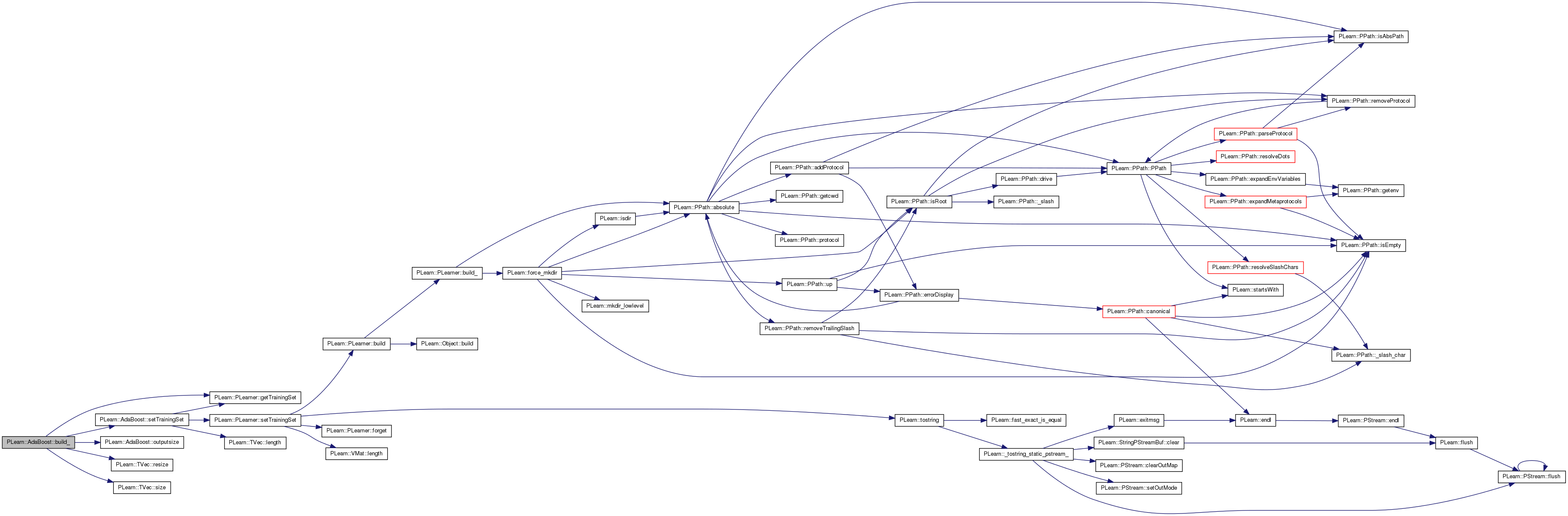

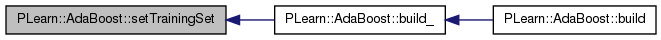

| void PLearn::AdaBoost::build | ( | ) | [virtual] |

simply calls inherited::build() then build_()

Reimplemented from PLearn::PLearner.

Definition at line 276 of file AdaBoost.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

| void PLearn::AdaBoost::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 254 of file AdaBoost.cc.

References conf_rated_adaboost, PLearn::PLearner::getTrainingSet(), n, outputsize(), PLERROR, pseudo_loss_adaboost, PLearn::TVec< T >::resize(), setTrainingSet(), PLearn::TVec< T >::size(), weak_learner_output, weak_learner_template, and weak_learners.

Referenced by build().

{

if(conf_rated_adaboost && pseudo_loss_adaboost)

PLERROR("In Adaboost:build_(): conf_rated_adaboost and pseudo_loss_adaboost cannot both be true, a choice must be made");

int n = 0;

// Why don't we always use weak_learner_template?

if(weak_learners.size()>0)

n=weak_learners[0]->outputsize();

else if(weak_learner_template)

n=weak_learner_template->outputsize();

weak_learner_output.resize(n);

//for RegressionTreeNode

if(getTrainingSet())

setTrainingSet(getTrainingSet(),false);

}

| string PLearn::AdaBoost::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 106 of file AdaBoost.cc.

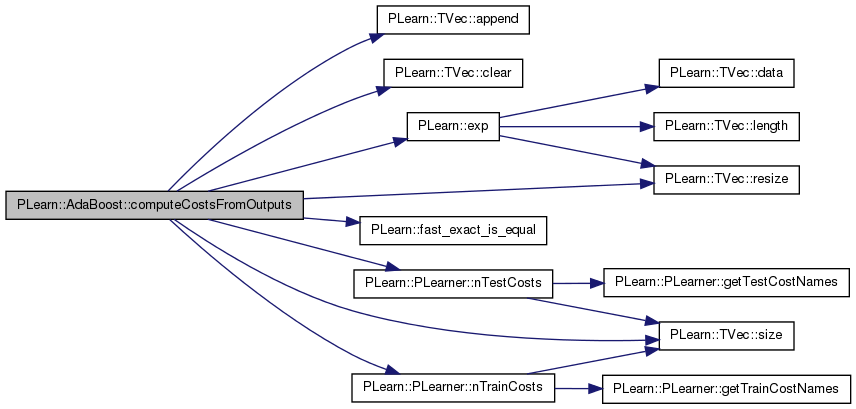

| void PLearn::AdaBoost::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 856 of file AdaBoost.cc.

References PLearn::TVec< T >::append(), PLearn::TVec< T >::clear(), PLearn::exp(), PLearn::fast_exact_is_equal(), forward_sub_learner_test_costs, i, MISSING_VALUE, PLearn::PLearner::nTestCosts(), PLearn::PLearner::nTrainCosts(), output_threshold, PLASSERT, PLERROR, PLWARNING, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sum_voting_weights, sum_weighted_costs, PLearn::PLearner::train_stats, voting_weights, weak_learner_template, weak_learners, and weighted_costs.

Referenced by test().

{

//when computing train stats, costs==nTrainCosts()

// and forward_sub_learner_test_costs==false

if(forward_sub_learner_test_costs)

PLASSERT(costs.size()==nTestCosts());

else

PLASSERT(costs.size()==nTrainCosts()||costs.size()==nTestCosts());

costs.resize(5);

// First cost is the 0/1 classification error: output[0], the normalized

// weighted vote for class 1, is compared to output_threshold

#ifdef BOUNDCHECK

if (target.size() > 1)

PLERROR("AdaBoost::computeCostsFromOutputs: target must contain "

"one element only: the 0/1 class");

#endif

if (fast_exact_is_equal(target[0], 0)) {

costs[0] = output[0] >= output_threshold;

}

else if (fast_exact_is_equal(target[0], 1)) {

costs[0] = output[0] < output_threshold;

}

else PLERROR("AdaBoost::computeCostsFromOutputs: target must be "

"either 0 or 1; current target=%f", target[0]);

costs[1] = exp(-1.0*sum_voting_weights*(2*output[0]-1)*(2*target[0]-1));

costs[2] = costs[0];

if(train_stats){

costs[3] = train_stats->getStat("E[avg_weight_class_0]");

costs[4] = train_stats->getStat("E[avg_weight_class_1]");

}

else

costs[3]=costs[4]=MISSING_VALUE;

if(forward_sub_learner_test_costs){

// Slow, as we have already computed the output;

// we should have called computeOutputAndCosts.

PLWARNING("AdaBoost::computeCostsFromOutputs called with forward_sub_learner_test_costs true. This should be optimized!");

weighted_costs.resize(weak_learner_template->nTestCosts());

sum_weighted_costs.resize(weak_learner_template->nTestCosts());

sum_weighted_costs.clear();

for(int i=0;i<weak_learners.size();i++){

weak_learners[i]->computeCostsOnly(input, target, weighted_costs);

weighted_costs*=voting_weights[i];

sum_weighted_costs+=weighted_costs;

}

costs.append(sum_weighted_costs);

}

PLASSERT(costs.size()==nTrainCosts()||costs.size()==nTestCosts());

}
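The first three costs reduce to simple arithmetic on output[0], target[0] and sum_voting_weights. The sketch below restates that arithmetic as a standalone C++ function, for illustration only: the name `adaboostCosts`, the use of `std::vector<double>` in place of PLearn's `Vec`, and NaN as a stand-in for `MISSING_VALUE` are assumptions, and costs[3] and costs[4] (which come from train_stats) are not modeled.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <stdexcept>
#include <vector>

// Hypothetical standalone mirror of the cost arithmetic in
// AdaBoost::computeCostsFromOutputs. costs[3] and costs[4] come from
// train_stats in PLearn; here they are left as NaN (standing in for MISSING_VALUE).
std::vector<double> adaboostCosts(double output0, double target0,
                                  double sum_voting_weights,
                                  double output_threshold = 0.5)
{
    std::vector<double> costs(5, std::numeric_limits<double>::quiet_NaN());
    if (target0 == 0.0)
        costs[0] = output0 >= output_threshold;   // error if class 1 is predicted
    else if (target0 == 1.0)
        costs[0] = output0 < output_threshold;    // error if class 0 is predicted
    else
        throw std::invalid_argument("target must be either 0 or 1");
    // Map output0 and target0 from [0,1] to [-1,1]; the exponent is the
    // negated margin scaled by the total voting weight.
    costs[1] = std::exp(-sum_voting_weights * (2 * output0 - 1) * (2 * target0 - 1));
    costs[2] = costs[0];                          // class_error repeats binary_class_error
    return costs;
}
```

For example, with output[0] = 0.8, a target of 1 and sum_voting_weights = 2, binary_class_error is 0 and exp_neg_margin is exp(-1.2), about 0.30.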

| virtual void PLearn::AdaBoost::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [inline, virtual] |

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 204 of file AdaBoost.h.

{

computeOutput_(input,output,0,0);}

| void PLearn::AdaBoost::computeOutput_ | ( | const Vec & | input, |

| Vec & | output, | ||

| const int | start = 0, |

||

| const real | sum = 0. |

||

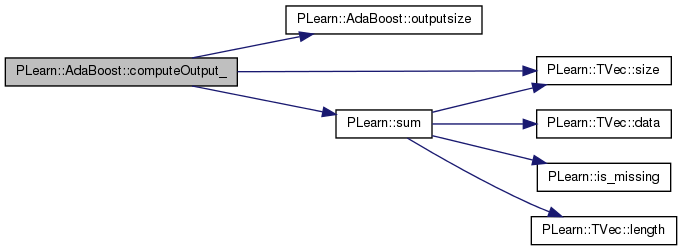

| ) | const [private] |

Definition at line 832 of file AdaBoost.cc.

References conf_rated_adaboost, i, output_threshold, outputsize(), PLASSERT, pseudo_loss_adaboost, reuse_test_results, PLearn::TVec< T >::size(), PLearn::sum(), sum_voting_weights, voting_weights, weak_learner_output, weak_learner_template, and weak_learners.

Referenced by test().

{

PLASSERT(weak_learners.size()>0);

PLASSERT(weak_learner_output.size()==weak_learner_template->outputsize());

PLASSERT(output.size()==outputsize());

real sum_out=sum;

if(!pseudo_loss_adaboost && !conf_rated_adaboost)

for (int i=start;i<weak_learners.size();i++){

weak_learners[i]->computeOutput(input,weak_learner_output);

sum_out += (weak_learner_output[0] < output_threshold ? 0 : 1)

*voting_weights[i];

}

else

for (int i=start;i<weak_learners.size();i++){

weak_learners[i]->computeOutput(input,weak_learner_output);

sum_out += weak_learner_output[0]*voting_weights[i];

}

output[0] = sum_out/sum_voting_weights;

if(reuse_test_results)

output[1] = sum_out;

}
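computeOutput_ computes a weighted vote over the weak learners, either thresholding each weak output into a 0/1 vote (discrete AdaBoost) or using it as-is (pseudo-loss and confidence-rated variants). Its start and sum parameters let a caller resume a partial vote; test() appears to rely on this, together with the raw sum stored in output[1], when reuse_test_results is true. A minimal sketch, assuming the weak outputs have already been computed and are passed in as a vector (the `Vote` struct and the `combineVotes` name are hypothetical):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical standalone mirror of AdaBoost::computeOutput_.
// weak_outputs[i] stands for output[0] of weak_learners[i]->computeOutput(input).
// Returns the normalized vote (output[0]) and the raw weighted sum, which
// AdaBoost stores in output[1] when reuse_test_results is true.
struct Vote { double normalized; double raw_sum; };

Vote combineVotes(const std::vector<double>& weak_outputs,
                  const std::vector<double>& voting_weights,
                  double sum_voting_weights,
                  bool soft,                    // pseudo_loss_adaboost || conf_rated_adaboost
                  double output_threshold = 0.5,
                  std::size_t start = 0,        // first weak learner not yet included
                  double sum = 0.0)             // raw sum already accumulated for [0, start)
{
    assert(weak_outputs.size() == voting_weights.size());
    assert(sum_voting_weights != 0.0);
    double sum_out = sum;
    for (std::size_t i = start; i < weak_outputs.size(); ++i) {
        // Discrete AdaBoost thresholds each weak output into a 0/1 vote;
        // the soft variants weight the raw weak output directly.
        double vote = soft ? weak_outputs[i]
                           : (weak_outputs[i] < output_threshold ? 0.0 : 1.0);
        sum_out += vote * voting_weights[i];
    }
    return {sum_out / sum_voting_weights, sum_out};
}
```

Resuming from the raw sum of the first k learners with start = k gives the same result as recomputing the full vote, which is what makes previously saved test outputs reusable once new weak learners have been added.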

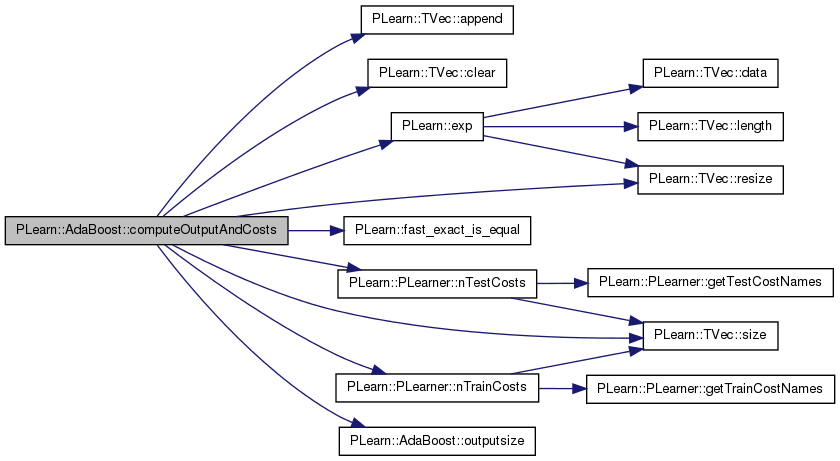

| void PLearn::AdaBoost::computeOutputAndCosts | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | output, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Default calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 909 of file AdaBoost.cc.

References PLearn::TVec< T >::append(), PLearn::TVec< T >::clear(), conf_rated_adaboost, PLearn::exp(), PLearn::fast_exact_is_equal(), forward_sub_learner_test_costs, i, MISSING_VALUE, PLearn::PLearner::nTestCosts(), PLearn::PLearner::nTrainCosts(), output_threshold, outputsize(), PLASSERT, PLERROR, pseudo_loss_adaboost, PLearn::TVec< T >::resize(), reuse_test_results, PLearn::TVec< T >::size(), sum_voting_weights, sum_weighted_costs, PLearn::PLearner::train_stats, voting_weights, weak_learner_output, weak_learner_template, weak_learners, and weighted_costs.

Referenced by test().

{

PLASSERT(weak_learners.size()>0);

PLASSERT(weak_learner_output.size()==weak_learner_template->outputsize());

PLASSERT(output.size()==outputsize());

real sum_out=0;

if(forward_sub_learner_test_costs){

weighted_costs.resize(weak_learner_template->nTestCosts());

sum_weighted_costs.resize(weak_learner_template->nTestCosts());

sum_weighted_costs.clear();

if(!pseudo_loss_adaboost && !conf_rated_adaboost){

for (int i=0;i<weak_learners.size();i++){

weak_learners[i]->computeOutputAndCosts(input,target,

weak_learner_output,

weighted_costs);

sum_out += (weak_learner_output[0] < output_threshold ? 0 : 1)

*voting_weights[i];

weighted_costs*=voting_weights[i];

sum_weighted_costs+=weighted_costs;

}

}else{

for (int i=0;i<weak_learners.size();i++){

weak_learners[i]->computeOutputAndCosts(input,target,

weak_learner_output,

weighted_costs);

sum_out += weak_learner_output[0]*voting_weights[i];

weighted_costs*=voting_weights[i];

sum_weighted_costs+=weighted_costs;

}

}

}else{

if(!pseudo_loss_adaboost && !conf_rated_adaboost)

for (int i=0;i<weak_learners.size();i++){

weak_learners[i]->computeOutput(input,weak_learner_output);

sum_out += (weak_learner_output[0] < output_threshold ? 0 : 1)

*voting_weights[i];

}

else

for (int i=0;i<weak_learners.size();i++){

weak_learners[i]->computeOutput(input,weak_learner_output);

sum_out += weak_learner_output[0]*voting_weights[i];

}

}

output[0] = sum_out/sum_voting_weights;

if(reuse_test_results)

output[1] = sum_out;

//when computing train stats, costs==nTrainCosts()

// and forward_sub_learner_test_costs==false

if(forward_sub_learner_test_costs)

PLASSERT(costs.size()==nTestCosts());

else

PLASSERT(costs.size()==nTrainCosts()||costs.size()==nTestCosts());

costs.resize(5);

costs.clear();

// First cost is the 0/1 classification error: output[0], the normalized

// weighted vote for class 1, is compared to output_threshold

if (target.size() > 1)

PLERROR("AdaBoost::computeOutputAndCosts: target must contain "

"one element only: the 0/1 class");

if (fast_exact_is_equal(target[0], 0)) {

costs[0] = output[0] >= output_threshold;

}

else if (fast_exact_is_equal(target[0], 1)) {

costs[0] = output[0] < output_threshold;

}

else PLERROR("AdaBoost::computeOutputAndCosts: target must be "

"either 0 or 1; current target=%f", target[0]);

costs[1] = exp(-1.0*sum_voting_weights*(2*output[0]-1)*(2*target[0]-1));

costs[2] = costs[0];

if(train_stats){

costs[3] = train_stats->getStat("E[avg_weight_class_0]");

costs[4] = train_stats->getStat("E[avg_weight_class_1]");

}

else

costs[3]=costs[4]=MISSING_VALUE;

if(forward_sub_learner_test_costs){

costs.append(sum_weighted_costs);

}

PLASSERT(costs.size()==nTrainCosts()||costs.size()==nTestCosts());

}
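When forward_sub_learner_test_costs is true, each weak learner's test costs are scaled by its voting weight, summed element-wise into sum_weighted_costs, and appended after AdaBoost's own five costs. A sketch of that aggregation with plain vectors, assuming the per-learner costs are already computed (the function name `appendWeightedSubCosts` is hypothetical):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical mirror of the forward_sub_learner_test_costs branch:
// sum_weighted[j] = sum over i of voting_weights[i] * sub_costs[i][j],
// appended after AdaBoost's own costs.
std::vector<double> appendWeightedSubCosts(
    std::vector<double> own_costs,                      // AdaBoost's 5 costs
    const std::vector<std::vector<double>>& sub_costs,  // one row per weak learner
    const std::vector<double>& voting_weights)
{
    assert(sub_costs.size() == voting_weights.size());
    std::vector<double> sum_weighted(sub_costs.empty() ? 0 : sub_costs[0].size(), 0.0);
    for (std::size_t i = 0; i < sub_costs.size(); ++i) {
        assert(sub_costs[i].size() == sum_weighted.size());
        for (std::size_t j = 0; j < sum_weighted.size(); ++j)
            sum_weighted[j] += voting_weights[i] * sub_costs[i][j];
    }
    own_costs.insert(own_costs.end(), sum_weighted.begin(), sum_weighted.end());
    return own_costs;
}
```

This also explains the size check at the end of both cost functions: with forwarding enabled, the cost vector grows from 5 to 5 plus the weak learner's number of test costs, which must match nTestCosts().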

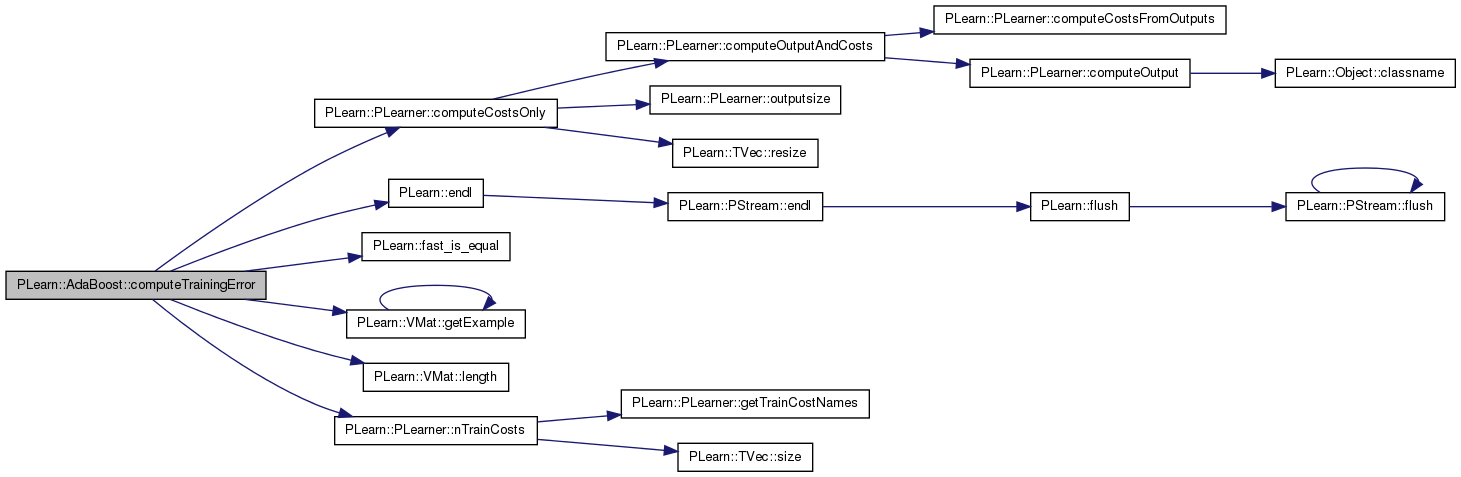

Definition at line 1031 of file AdaBoost.cc.

References compute_training_error, PLearn::PLearner::computeCostsOnly(), PLearn::endl(), example_weights, PLearn::fast_is_equal(), forward_sub_learner_test_costs, PLearn::VMat::getExample(), i, PLearn::VMat::length(), n, NORMAL_LOG, PLearn::PLearner::nTrainCosts(), PLASSERT, PLearn::PLearner::report_progress, PLearn::PLearner::stage, PLearn::PLearner::train_set, PLearn::PLearner::train_stats, and PLearn::PLearner::verbosity.

Referenced by train().

{

if (compute_training_error)

{

PLASSERT(train_set);

int n=train_set->length();

PP<ProgressBar> pb;

if(report_progress) pb = new ProgressBar("computing weighted training error of whole model",n);

train_stats->forget();

Vec err(nTrainCosts());

int nb_class_0=0;

int nb_class_1=0;

real cum_weights_0=0;

real cum_weights_1=0;

bool save_forward_sub_learner_test_costs =

forward_sub_learner_test_costs;

forward_sub_learner_test_costs=false;

real weight;

for (int i=0;i<n;i++)

{

if(report_progress) pb->update(i);

train_set->getExample(i, input, target, weight);

computeCostsOnly(input,target,err);

if(fast_is_equal(target[0],0.)){

cum_weights_0 += example_weights[i];

nb_class_0++;

}else{

cum_weights_1 += example_weights[i];

nb_class_1++;

}

err[3]=cum_weights_0/nb_class_0;

err[4]=cum_weights_1/nb_class_1;

train_stats->update(err);

}

train_stats->finalize();

forward_sub_learner_test_costs =

save_forward_sub_learner_test_costs;

if (verbosity>2)

NORMAL_LOG << "At stage " << stage <<

" boosted (weighted) classification error on training set = "

<< train_stats->getMean() << endl;

}

}
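err[3] and err[4] hold the running mean of example_weights over the class-0 and class-1 examples seen so far, and train_stats is updated with these values after every example. In the listing above, the division by nb_class_0 or nb_class_1 is performed even when no example of that class has been seen yet. The sketch below reproduces the running means and, as a deliberate deviation, reports NaN for a class with no examples so far; the function name and the integer 0/1 targets are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

// Hypothetical sketch of the per-class bookkeeping in computeTrainingError:
// after each example, record the running mean of example_weights for
// class 0 (first) and class 1 (second). Any target other than 0 counts as
// class 1, as in the original loop. Unlike the original, a class with no
// examples yet yields NaN explicitly instead of dividing by zero.
std::vector<std::pair<double, double>> runningClassWeightMeans(
    const std::vector<int>& targets,
    const std::vector<double>& example_weights)
{
    assert(targets.size() == example_weights.size());
    const double nan = std::numeric_limits<double>::quiet_NaN();
    double cum0 = 0, cum1 = 0;
    int n0 = 0, n1 = 0;
    std::vector<std::pair<double, double>> means;
    for (std::size_t i = 0; i < targets.size(); ++i) {
        if (targets[i] == 0) { cum0 += example_weights[i]; ++n0; }
        else                 { cum1 += example_weights[i]; ++n1; }
        means.emplace_back(n0 ? cum0 / n0 : nan, n1 ? cum1 / n1 : nan);
    }
    return means;
}
```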

| void PLearn::AdaBoost::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 108 of file AdaBoost.cc.

References PLearn::OptionBase::buildoption, compute_training_error, conf_rated_adaboost, PLearn::declareOption(), PLearn::PLearner::declareOptions(), early_stopping, example_weights, forward_sub_learner_test_costs, found_zero_error_weak_learner, initial_sum_weights, learners_error, PLearn::OptionBase::learntoption, modif_train_set_weights, PLearn::OptionBase::nosave, output_threshold, provide_learner_expdir, pseudo_loss_adaboost, reuse_test_results, save_often, saved_last_test_stages, saved_testoutputs, saved_testset, sum_voting_weights, target_error, PLearn::PLearner::train_set, voting_weights, weak_learner_output, weak_learner_template, weak_learners, and weight_by_resampling.

{

declareOption(ol, "weak_learners", &AdaBoost::weak_learners,

OptionBase::learntoption,

"The vector of learned weak learners");

declareOption(ol, "voting_weights", &AdaBoost::voting_weights,

OptionBase::learntoption,

"Weights given to the weak learners (their output is\n"

"linearly combined with these weights\n"

"to form the output of the AdaBoost learner).\n");

declareOption(ol, "sum_voting_weights", &AdaBoost::sum_voting_weights,

OptionBase::learntoption,

"Sum of the weak learners voting weights.\n");

declareOption(ol, "initial_sum_weights", &AdaBoost::initial_sum_weights,

OptionBase::learntoption,

"Initial sum of weights on the examples. Do not tamper with.\n");

declareOption(ol, "example_weights", &AdaBoost::example_weights,

OptionBase::learntoption,

"The current weights of the examples.\n");

declareOption(ol, "learners_error", &AdaBoost::learners_error,

OptionBase::learntoption,

"The error of each learner.\n");

declareOption(ol, "weak_learner_template", &AdaBoost::weak_learner_template,

OptionBase::buildoption,

"Template for the regression weak learner to be "

"boosted into a classifier");

declareOption(ol, "target_error", &AdaBoost::target_error,

OptionBase::buildoption,

"This is the target average weighted error that "

"each weak learner\n"

"must reach after its training (ordinary AdaBoost: "

"target_error=0.5).");

declareOption(ol, "pseudo_loss_adaboost", &AdaBoost::pseudo_loss_adaboost,

OptionBase::buildoption,

"Whether to use Pseudo-loss Adaboost (see \"Experiments with\n"

"a New Boosting Algorithm\" by Freund and Schapire), which\n"

"takes into account the precise value outputted by\n"

"the soft classifier.");

declareOption(ol, "conf_rated_adaboost", &AdaBoost::conf_rated_adaboost,

OptionBase::buildoption,

"Whether to use Confidence-rated AdaBoost (see \"Improved\n"

"Boosting Algorithms Using Confidence-rated Predictions\" by\n"

"Schapire and Singer) which takes into account the precise\n"

"value outputted by the soft classifier. It also searches for\n"

"the weight of a weak learner using a line search according\n"

"to a criterion which is more appropriate for soft classifiers.\n"

"This option can also be used to obtain MarginBoost with the\n"

"exponential loss, provided that an appropriate choice of\n"

"weak learner is made by the user (see \"Functional Gradient\n"

"Techniques for Combining Hypotheses\" by Mason et al.).\n");

declareOption(ol, "weight_by_resampling", &AdaBoost::weight_by_resampling,

OptionBase::buildoption,

"Whether to train the weak learner using resampling"

" to represent the weighting\n"

"given to examples. If false then give these weights "

"explicitly in the training set\n"

"of the weak learner (note that some learners can accommodate "

"weights well, others not).\n");

declareOption(ol, "output_threshold", &AdaBoost::output_threshold,

OptionBase::buildoption,

"To interpret the output of the learner as a class, it is "

"compared to this\n"

"threshold: class 1 if greater than output_threshold, class "

"0 otherwise.\n");

declareOption(ol, "provide_learner_expdir", &AdaBoost::provide_learner_expdir,

OptionBase::buildoption,

"If true, each weak learner to be trained will have its\n"

"experiment directory set to WeakLearner#kExpdir/");

declareOption(ol, "early_stopping", &AdaBoost::early_stopping,

OptionBase::buildoption,

"If true, then boosting stops when the next weak learner\n"

"is too weak (avg error > target_error - .01)\n");

declareOption(ol, "save_often", &AdaBoost::save_often,

OptionBase::buildoption,

"If true, then save the model after training each weak\n"

"learner, under <expdir>/model.psave\n");

declareOption(ol, "compute_training_error",

&AdaBoost::compute_training_error, OptionBase::buildoption,

"Whether to compute training error at each stage.\n");

declareOption(ol, "forward_sub_learner_test_costs",

&AdaBoost::forward_sub_learner_test_costs, OptionBase::buildoption,

"Whether to append the weighted test costs of the weak learners to our costs.\n");

declareOption(ol, "modif_train_set_weights",

&AdaBoost::modif_train_set_weights, OptionBase::buildoption,

"Whether to modify the weights of the train_set directly.\n");

declareOption(ol, "found_zero_error_weak_learner",

&AdaBoost::found_zero_error_weak_learner,

OptionBase::learntoption,

"Indication that a weak learner with 0 training error "

"has been found.\n");

declareOption(ol, "weak_learner_output",

&AdaBoost::weak_learner_output,

OptionBase::nosave,

"A temporary vector that contains the weak learner output\n");

declareOption(ol, "reuse_test_results",

&AdaBoost::reuse_test_results,

OptionBase::buildoption,

"If true, we save and reuse the results of previous calls to test(). This is"

" useful to have a test time that is independent of the"

" number of AdaBoost iterations.\n");

declareOption(ol, "saved_testset",

&AdaBoost::saved_testset,

OptionBase::nosave,

"Used with reuse_test_results\n");

declareOption(ol, "saved_testoutputs",

&AdaBoost::saved_testoutputs,

OptionBase::nosave,

"Used with reuse_test_results\n");

declareOption(ol, "saved_last_test_stages",

&AdaBoost::saved_last_test_stages,

OptionBase::nosave,

"Used with reuse_test_results\n");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

declareOption(ol, "train_set",

&AdaBoost::train_set,

OptionBase::learntoption|OptionBase::nosave,

"The training set, so we can reload it.\n");

}
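The target_error and early_stopping options describe a simple rule: a weak learner whose average weighted error exceeds target_error - 0.01 is considered too weak, and boosting stops. The sketch below illustrates that rule together with one common definition of average weighted error (misclassified weight divided by total weight, as in standard AdaBoost). Whether train() normalizes the error exactly this way is not shown in this listing, so treat that as an assumption; the function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical: average weighted error = weight of misclassified examples
// divided by the total example weight (standard AdaBoost definition).
double weightedError(const std::vector<double>& example_weights,
                     const std::vector<bool>& misclassified)
{
    assert(example_weights.size() == misclassified.size());
    double err = 0, total = 0;
    for (std::size_t i = 0; i < example_weights.size(); ++i) {
        total += example_weights[i];
        if (misclassified[i]) err += example_weights[i];
    }
    assert(total > 0);
    return err / total;
}

// Early-stopping test as described by the early_stopping option:
// "avg error > target_error - .01".
bool tooWeak(double avg_error, double target_error = 0.5)
{
    return avg_error > target_error - 0.01;
}
```

With the default target_error of 0.5, a weak learner must therefore do at least slightly better than chance (weighted error at most 0.49) for boosting to continue when early_stopping is set.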

| static const PPath& PLearn::AdaBoost::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 106 of file AdaBoost.cc.

Referenced by test().

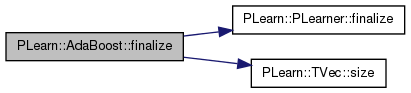

| void PLearn::AdaBoost::finalize | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

When this method is called, the learner knows that it will never be trained again, so it can free resources that are needed only during training. The functions test()/computeOutputs()/... should continue to work.

Reimplemented from PLearn::PLearner.

Definition at line 316 of file AdaBoost.cc.

References PLearn::PLearner::finalize(), i, PLearn::TVec< T >::size(), PLearn::PLearner::train_set, and weak_learners.

{

inherited::finalize();

for(int i=0;i<weak_learners.size();i++){

weak_learners[i]->finalize();

}

if(train_set && train_set->classname()=="RegressionTreeRegisters")

((PP<RegressionTreeRegisters>)train_set)->finalize();

}

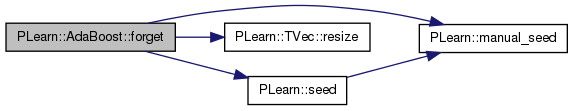

| void PLearn::AdaBoost::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 326 of file AdaBoost.cc.

References found_zero_error_weak_learner, learners_error, PLearn::manual_seed(), PLearn::PLearner::nstages, PLearn::TVec< T >::resize(), PLearn::seed(), PLearn::PLearner::seed_, PLearn::PLearner::stage, sum_voting_weights, voting_weights, and weak_learners.

{

stage = 0;

learners_error.resize(0, nstages);

weak_learners.resize(0, nstages);

voting_weights.resize(0, nstages);

sum_voting_weights = 0;

found_zero_error_weak_learner=false;

if (seed_ >= 0)

manual_seed(seed_);

else

PLearn::seed();

}

| OptionList & PLearn::AdaBoost::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 106 of file AdaBoost.cc.

| OptionMap & PLearn::AdaBoost::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 106 of file AdaBoost.cc.

| RemoteMethodMap & PLearn::AdaBoost::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 106 of file AdaBoost.cc.

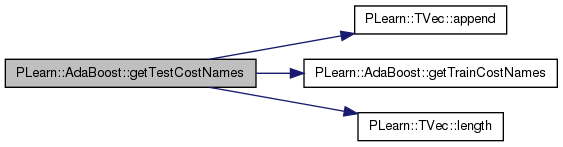

| TVec< string > PLearn::AdaBoost::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method)

Implements PLearn::PLearner.

Definition at line 998 of file AdaBoost.cc.

References PLearn::TVec< T >::append(), forward_sub_learner_test_costs, getTrainCostNames(), i, PLearn::TVec< T >::length(), weak_learner_template, and weak_learners.

{

TVec<string> costs=getTrainCostNames();

if(forward_sub_learner_test_costs){

TVec<string> subcosts;

// We try to find a weak_learner with a training set,

// as a RegressionTree needs it to generate the test cost names

if(weak_learner_template->getTrainingSet())

subcosts=weak_learner_template->getTestCostNames();

else if(weak_learners.length()>0)

subcosts=weak_learners[0]->getTestCostNames();

else

subcosts=weak_learner_template->getTestCostNames();

for(int i=0;i<subcosts.length();i++){

subcosts[i]="weighted_weak_learner."+subcosts[i];

}

costs.append(subcosts);

}

return costs;

}

| TVec< string > PLearn::AdaBoost::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 1020 of file AdaBoost.cc.

Referenced by getTestCostNames().

{

TVec<string> costs(5);

costs[0] = "binary_class_error";

costs[1] = "exp_neg_margin";

costs[2] = "class_error";

costs[3] = "avg_weight_class_0";

costs[4] = "avg_weight_class_1";

return costs;

}
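Together, getTrainCostNames() and getTestCostNames() define the cost layout: five fixed names, followed by the weak learner's test cost names prefixed with "weighted_weak_learner." when forward_sub_learner_test_costs is true. A sketch of that naming scheme (the function name `testCostNames` and the sub-cost name "mse" used in the check are hypothetical):

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical mirror of getTestCostNames(): the five train cost names,
// followed (when forward_sub_learner_test_costs is true) by the weak
// learner's test cost names prefixed with "weighted_weak_learner.".
std::vector<std::string> testCostNames(bool forward_sub_learner_test_costs,
                                       const std::vector<std::string>& sub_costs)
{
    std::vector<std::string> costs = {"binary_class_error", "exp_neg_margin",
                                      "class_error", "avg_weight_class_0",
                                      "avg_weight_class_1"};
    if (forward_sub_learner_test_costs)
        for (const std::string& name : sub_costs)
            costs.push_back("weighted_weak_learner." + name);
    return costs;
}
```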

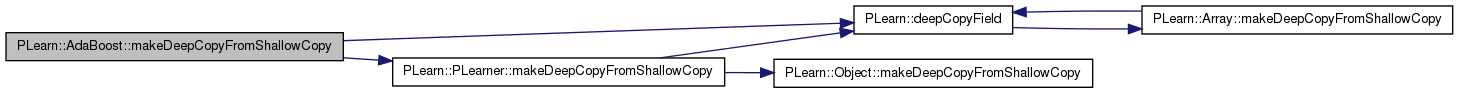

| void PLearn::AdaBoost::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 285 of file AdaBoost.cc.

References PLearn::deepCopyField(), example_weights, learners_error, PLearn::PLearner::makeDeepCopyFromShallowCopy(), saved_last_test_stages, saved_testoutputs, saved_testset, sum_weighted_costs, voting_weights, weak_learner_output, weak_learner_template, weak_learners, and weighted_costs.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(weighted_costs, copies);

deepCopyField(sum_weighted_costs, copies);

deepCopyField(saved_testset, copies);

deepCopyField(saved_testoutputs, copies);

deepCopyField(saved_last_test_stages, copies);

deepCopyField(learners_error, copies);

deepCopyField(example_weights, copies);

deepCopyField(weak_learner_output, copies);

deepCopyField(voting_weights, copies);

deepCopyField(weak_learners, copies);

deepCopyField(weak_learner_template, copies);

}

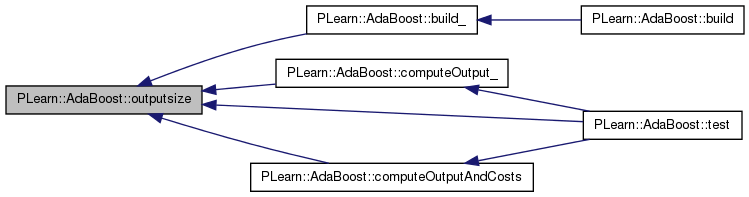

| int PLearn::AdaBoost::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and options). This implementation of AdaBoost always performs two-class classification, hence returns 1, or 2 when reuse_test_results is true (the second element then holds the unnormalized weighted vote).

Implements PLearn::PLearner.

Definition at line 306 of file AdaBoost.cc.

References reuse_test_results.

Referenced by build_(), computeOutput_(), computeOutputAndCosts(), and test().

{

// Output size is 1, since this is a 0-1 classifier; when reuse_test_results

// is true, we append the weighted sum to allow the reuse of previous test results

if(reuse_test_results)

return 2;

else

return 1;

}

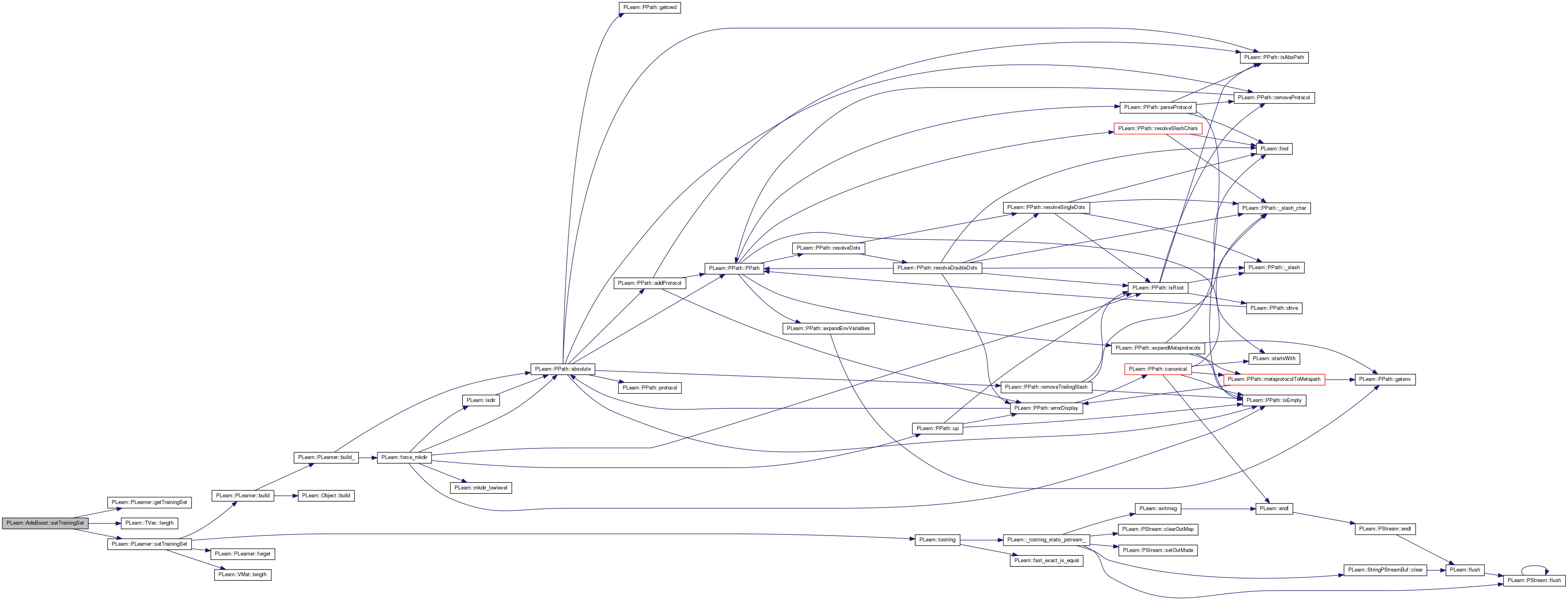

Declares the training set.

Then calls build() and forget() if necessary. Also sets this learner's inputsize_, targetsize_ and weightsize_ from those of the training_set. Note: you shouldn't have to override this in subclasses, except maybe to forward the call to an underlying learner.

Reimplemented from PLearn::PLearner.

Definition at line 1078 of file AdaBoost.cc.

References PLearn::PLearner::finalized, PLearn::PLearner::getTrainingSet(), i, PLearn::TVec< T >::length(), modif_train_set_weights, NORMAL_LOG, PLCHECK, PLearn::PLearner::report_progress, PLearn::PLearner::setTrainingSet(), PLearn::PLearner::verbosity, weak_learner_template, and weak_learners.

Referenced by build_().

{

PLCHECK(weak_learner_template);

if(weak_learner_template->classname()=="RegressionTree"){

// We do this for optimization. Otherwise we would create a RegressionTreeRegisters

// for each weak_learner. This is time-consuming, as it sorts the dataset.

if(training_set->classname()!="RegressionTreeRegisters")

training_set = new RegressionTreeRegisters(training_set,

report_progress,

verbosity,

!finalized, !finalized);

// We need to change the weights of the training set to reuse the RegressionTreeRegisters

if(!modif_train_set_weights){

if(training_set->weightsize()==1)

modif_train_set_weights=1;

else

NORMAL_LOG<<"In AdaBoost::setTrainingSet() -"

<<" We have RegressionTree as weak_learner, but the"

<<" training_set doesn't have a weight. This will cause"

<<" the creation of a RegressionTreeRegisters at"

<<" each stage of AdaBoost!";

}

// We do this as RegressionTreeNode needs a train_set for getTestCostNames

if(!weak_learner_template->getTrainingSet())

weak_learner_template->setTrainingSet(training_set,call_forget);

for(int i=0;i<weak_learners.length();i++)

if(!weak_learners[i]->getTrainingSet())

weak_learners[i]->setTrainingSet(training_set,call_forget);

}

inherited::setTrainingSet(training_set, call_forget);

}

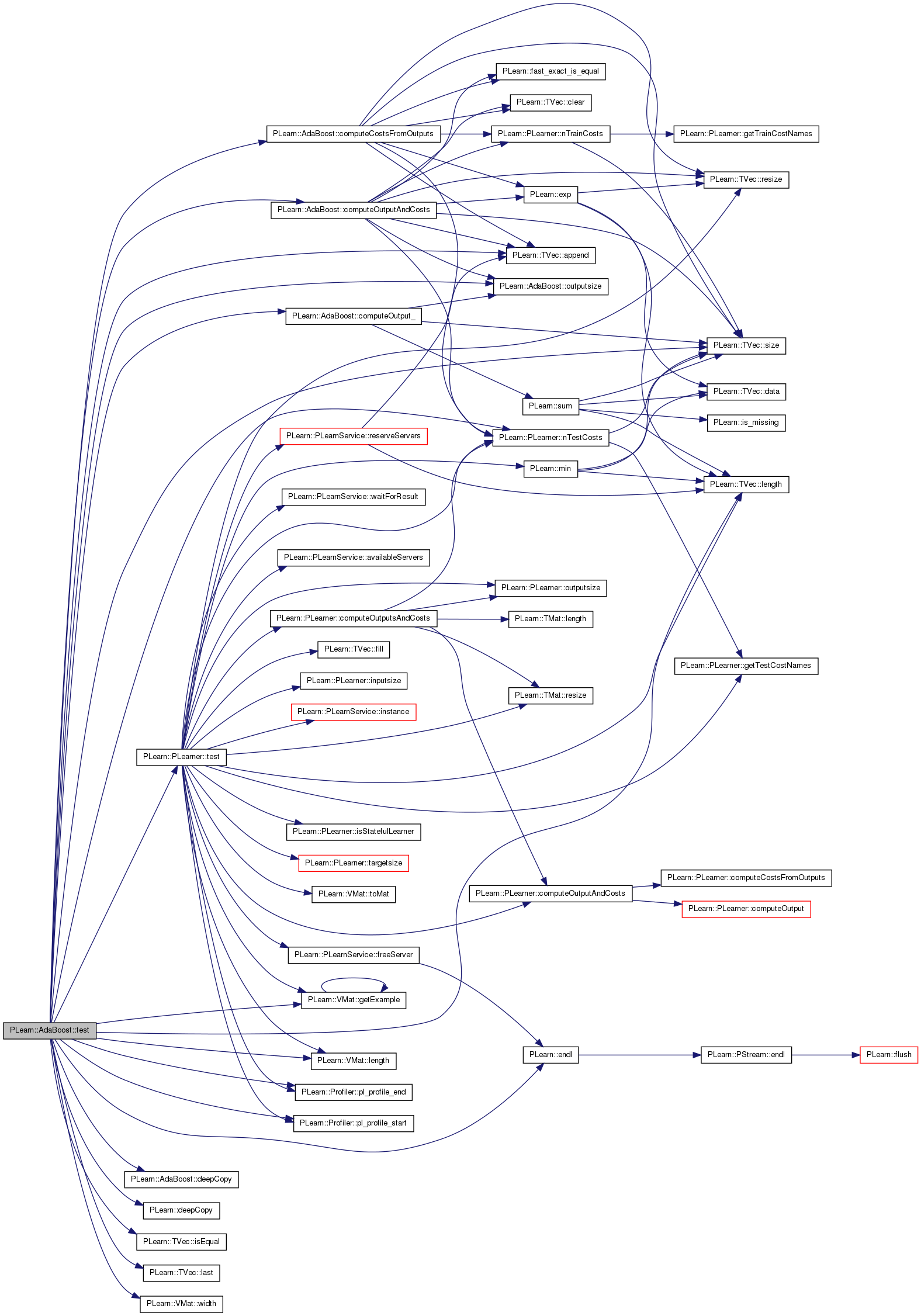

| void PLearn::AdaBoost::test | ( | VMat | testset, |

| PP< VecStatsCollector > | test_stats, | ||

| VMat | testoutputs, | ||

| VMat | testcosts | ||

| ) | const [virtual] |

Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts.

The default version repeatedly calls computeOutputAndCosts or computeCostsOnly. Note that neither test_stats->forget() nor test_stats->finalize() is called, so that you should call them yourself (respectively before and after calling this method) if you don't plan to accumulate statistics.

Reimplemented from PLearn::PLearner.

Definition at line 741 of file AdaBoost.cc.

References PLearn::TVec< T >::append(), computeCostsFromOutputs(), computeOutput_(), computeOutputAndCosts(), deepCopy(), PLearn::deepCopy(), PLearn::endl(), found_zero_error_weak_learner, PLearn::VMat::getExample(), i, PLearn::TVec< T >::isEqual(), PLearn::TVec< T >::last(), PLearn::VMat::length(), PLearn::TVec< T >::length(), PLearn::PLearner::nTestCosts(), outputsize(), PLearn::Profiler::pl_profile_end(), PLearn::Profiler::pl_profile_start(), PLCHECK, reuse_test_results, saved_last_test_stages, saved_testoutputs, saved_testset, PLearn::TVec< T >::size(), PLearn::PLearner::stage, PLearn::PLearner::test(), weak_learner_output, weak_learner_template, weak_learners, and PLearn::VMat::width().

{

if(!reuse_test_results){

inherited::test(testset, test_stats, testoutputs, testcosts);

return;

}

Profiler::pl_profile_start("AdaBoost::test()");

int index=-1;

for(int i=0;i<saved_testset.size();i++){

if(saved_testset[i]==testset){

index=i;

break;

}

}

if(index<0){

//first time the testset is seen

Profiler::pl_profile_start("AdaBoost::test() first" );

inherited::test(testset, test_stats, testoutputs, testcosts);

saved_testset.append(testset);

saved_testoutputs.append(PLearn::deepCopy(testoutputs));

PLCHECK(weak_learners.length()==stage || found_zero_error_weak_learner);

cout << weak_learners.length()<<" "<<stage<<endl;

saved_last_test_stages.append(stage);

Profiler::pl_profile_end("AdaBoost::test() first" );

}else if(found_zero_error_weak_learner && saved_last_test_stages[index]==stage){

Vec input;

Vec output(outputsize());

Vec target;

Vec costs(nTestCosts());

real weight;

VMat old_outputs=saved_testoutputs[index];

PLCHECK(old_outputs->width()==testoutputs->width());

PLCHECK(old_outputs->length()==testset->length());

for(int row=0;row<testset.length();row++){

output=old_outputs(row);

testset.getExample(row, input, target, weight);

computeCostsFromOutputs(input,output,target,costs);

if(testoutputs)testoutputs->putOrAppendRow(row,output);

if(testcosts)testcosts->putOrAppendRow(row,costs);

if(test_stats)test_stats->update(costs,weight);

}

}else{

Profiler::pl_profile_start("AdaBoost::test() seconds" );

PLCHECK(weak_learners.size()>1);

PLCHECK(stage>1);

PLCHECK(weak_learner_output.size()==weak_learner_template->outputsize());

PLCHECK(saved_testset.length()>index);

PLCHECK(saved_testoutputs.length()>index);

PLCHECK(saved_last_test_stages.length()>index);

int stages_done = saved_last_test_stages[index];

PLCHECK(weak_learners.size()>=stages_done);

Vec input;

Vec output(outputsize());

Vec target;

Vec costs(nTestCosts());

real weight;

VMat old_outputs=saved_testoutputs[index];

PLCHECK(old_outputs->width()==testoutputs->width());

PLCHECK(old_outputs->length()==testset->length());

#ifndef NDEBUG

Vec output2(outputsize());

Vec costs2(nTestCosts());

#endif

for(int row=0;row<testset.length();row++){

output=old_outputs(row);

//compute the new testoutputs

Profiler::pl_profile_start("AdaBoost::test() getExample" );

testset.getExample(row, input, target, weight);

Profiler::pl_profile_end("AdaBoost::test() getExample" );

computeOutput_(input, output, stages_done, output[1]);

computeCostsFromOutputs(input,output,target,costs);

#ifndef NDEBUG

computeOutputAndCosts(input,target, output2, costs2);

PLCHECK(output==output2);

PLCHECK(costs.isEqual(costs2,true));

#endif

if(testoutputs)testoutputs->putOrAppendRow(row,output);

if(testcosts)testcosts->putOrAppendRow(row,costs);

if(test_stats)test_stats->update(costs,weight);

}

saved_testoutputs[index]=PLearn::deepCopy(testoutputs);

saved_last_test_stages[index]=stage;

Profiler::pl_profile_end("AdaBoost::test() seconds" );

}

Profiler::pl_profile_end("AdaBoost::test()");

}
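With reuse_test_results enabled, test() keeps, for every test set it has seen, the outputs and the stage at which they were computed; on a later call it passes the previously stored output[1] together with stages_done to computeOutput_(), so that only the newly added weak learners need to be evaluated. The sketch below illustrates that caching idea in isolation. It is not PLearn code: CachedEnsembleScore and WeakLearner are hypothetical names, and the assumption that the cached value is a plain sum of alpha-weighted weak-learner scores is ours, since the body of computeOutput_() is not shown on this page.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical weak learner: maps one scalar input to a real-valued score.
using WeakLearner = std::function<double(double)>;

// Keeps, for one fixed test set, the running weighted vote of every example
// and the number of boosting stages already folded into it.
class CachedEnsembleScore {
public:
    explicit CachedEnsembleScore(std::vector<double> inputs)
        : inputs_(std::move(inputs)), partial_(inputs_.size(), 0.0) {}

    // Brings the cached votes up to date with the first `stage` learners,
    // evaluating only the learners added since the previous call.
    const std::vector<double>& update(const std::vector<WeakLearner>& learners,
                                      const std::vector<double>& alphas,
                                      std::size_t stage) {
        assert(stage <= learners.size() && stage <= alphas.size());
        if (stage < stages_done_) {
            // The ensemble shrank (e.g. after a revert): start over.
            std::fill(partial_.begin(), partial_.end(), 0.0);
            stages_done_ = 0;
        }
        for (std::size_t t = stages_done_; t < stage; ++t) {
            for (std::size_t r = 0; r < inputs_.size(); ++r) {
                partial_[r] += alphas[t] * learners[t](inputs_[r]);
                ++evaluations_;
            }
        }
        stages_done_ = stage;
        return partial_;
    }

    std::size_t stagesDone() const { return stages_done_; }
    std::size_t evaluations() const { return evaluations_; }

private:
    std::vector<double> inputs_;
    std::vector<double> partial_;
    std::size_t stages_done_ = 0;
    std::size_t evaluations_ = 0;
};
```

The evaluation counter makes the saving visible: moving from stage 2 to stage 3 costs one weak-learner evaluation per test row instead of three.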

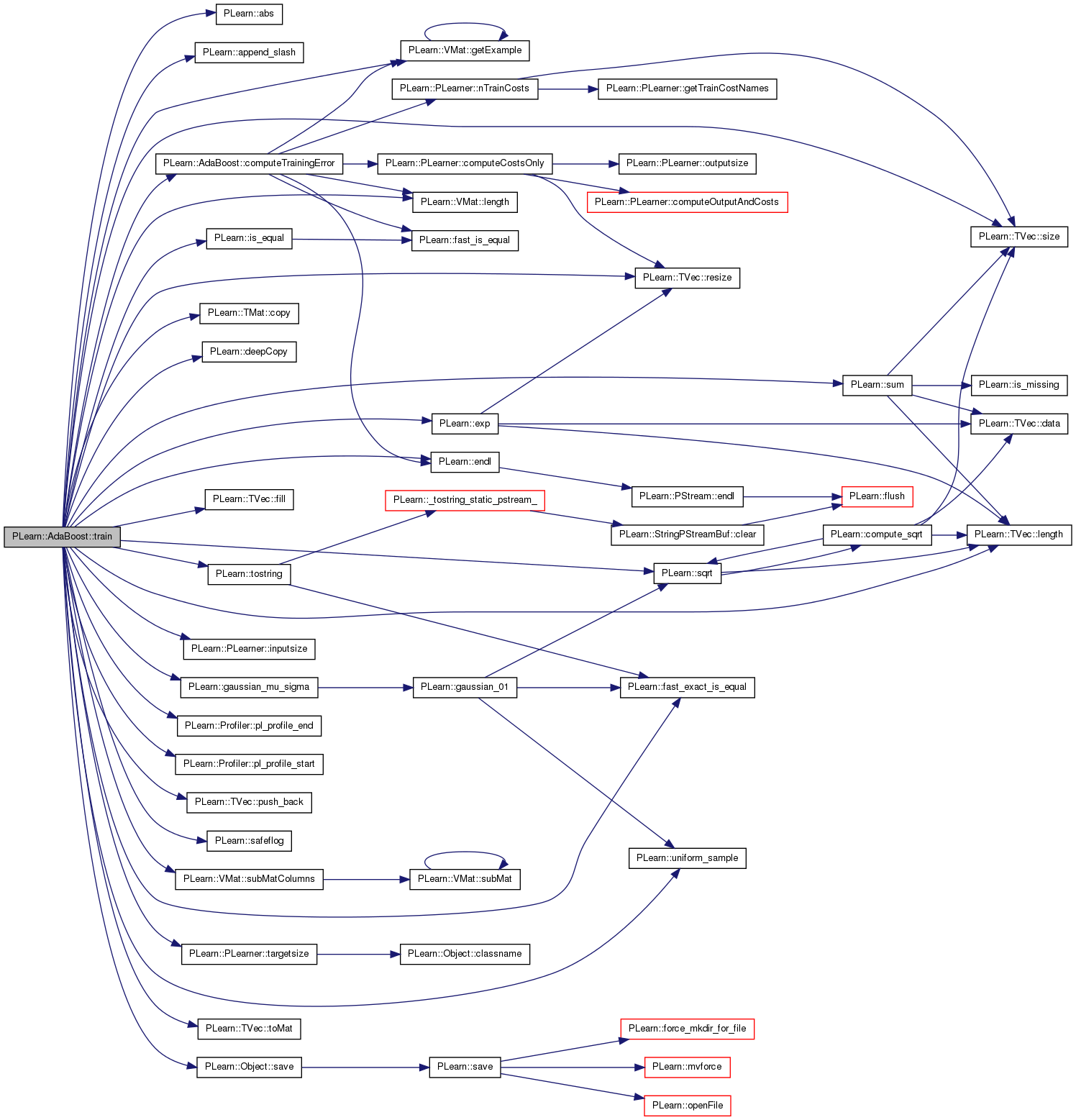

void PLearn::AdaBoost::train() [virtual]

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.
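When conf_rated_adaboost and pseudo_loss_adaboost are both false, each round of train() boils down to three steps: the weighted error of the new weak learner (an output >= output_threshold predicts class 1), the voting weight 0.5*log(((1-err)*target_error)/(err*(1-target_error))), and an exponential reweighting followed by renormalization. The stand-alone sketch below reproduces only that arithmetic on plain std::vector data; boostingRound and RoundResult are hypothetical names, std::log is used in place of PLearn's safeflog(), and training the weak learner itself is left out.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct RoundResult {
    double weighted_error;  // plays the role of learners_error[stage]
    double alpha;           // plays the role of voting_weights[stage]
};

// One discrete AdaBoost round on 0/1 labels. `weights` must sum to 1 on
// entry and is renormalized on exit.
RoundResult boostingRound(const std::vector<double>& outputs,
                          const std::vector<int>& labels,
                          std::vector<double>& weights,
                          double threshold, double target_error) {
    const std::size_t n = weights.size();
    std::vector<double> example_error(n);  // 0 if correct, 2 if wrong
    double err = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        const bool predicts_one = outputs[i] >= threshold;
        const bool wrong = predicts_one != (labels[i] == 1);
        example_error[i] = wrong ? 2.0 : 0.0;
        if (wrong) err += weights[i];
    }
    // train() handles err == 0 separately (found_zero_error_weak_learner),
    // so this sketch only accepts 0 < err < 1.
    assert(err > 0.0 && err < 1.0);
    const double alpha = 0.5 * std::log(((1.0 - err) * target_error) /
                                        (err * (1.0 - target_error)));
    double sum_w = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        // correct examples are scaled by exp(-alpha), wrong ones by exp(+alpha)
        weights[i] *= std::exp(-alpha * (1.0 - example_error[i]));
        sum_w += weights[i];
    }
    for (double& w : weights) w /= sum_w;
    return {err, alpha};
}
```

With target_error = 0.5 the voting weight reduces to the classical 0.5*log((1-err)/err), and after reweighting the misclassified examples carry exactly half of the total weight, so the next weak learner cannot reuse the previous one unchanged.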

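Setting nstages below the current stage reverts the learner: the weak learners, their errors and their voting weights beyond nstages are discarded. A reverted learner cannot be trained further (its example_weights are cleared), and reverting to stage 0 is refused; use forget() instead.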

Implements PLearn::PLearner.

Definition at line 340 of file AdaBoost.cc.

References PLearn::abs(), PLearn::append_slash(), compute_training_error, computeTrainingError(), conf_rated_adaboost, PLearn::TMat< T >::copy(), PLearn::deepCopy(), early_stopping, PLearn::endl(), example_weights, PLearn::exp(), PLearn::PLearner::expdir, PLearn::fast_exact_is_equal(), PLearn::TVec< T >::fill(), found_zero_error_weak_learner, PLearn::gaussian_mu_sigma(), PLearn::VMat::getExample(), i, initial_sum_weights, PLearn::PLearner::inputsize(), PLearn::is_equal(), j, learners_error, PLearn::TVec< T >::length(), PLearn::VMat::length(), modif_train_set_weights, n, NORMAL_LOG, PLearn::PLearner::nstages, output_threshold, PLearn::Profiler::pl_profile_end(), PLearn::Profiler::pl_profile_start(), PLASSERT_MSG, PLCHECK, PLCHECK_MSG, PLERROR, provide_learner_expdir, pseudo_loss_adaboost, PLearn::TVec< T >::push_back(), PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), PLearn::safeflog(), PLearn::Object::save(), save_often, PLearn::TVec< T >::size(), PLearn::sqrt(), PLearn::PLearner::stage, PLearn::VMat::subMatColumns(), PLearn::sum(), sum_voting_weights, target_error, PLearn::PLearner::targetsize(), PLearn::TVec< T >::toMat(), PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, PLearn::uniform_sample(), PLearn::PLearner::verbosity, voting_weights, weak_learner_template, weak_learners, and weight_by_resampling.

{

if(nstages==stage)

return;

else if (nstages < stage){

PLCHECK(nstages>0); // should use forget

NORMAL_LOG<<"In AdaBoost::train() - reverting from stage "<<stage

<<" to stage "<<nstages<<endl;

stage = nstages;

PLCHECK(learners_error.size()>=stage);

PLCHECK(weak_learners.size()>=stage);

PLCHECK(voting_weights.size()>=stage);

PLCHECK(nstages>0);

learners_error.resize(stage);

weak_learners.resize(stage);

voting_weights.resize(stage);

sum_voting_weights = sum(voting_weights);

found_zero_error_weak_learner=false;

example_weights.resize(0);

return;

//need examples_weights

//computeTrainingError();

}else if(nstages>0 && stage>0 && example_weights.size()==0){

PLERROR("In AdaBoost::train() - we can't retrain a reverted learner...");

}

if(found_zero_error_weak_learner) // Training is over...

return;

Profiler::pl_profile_start("AdaBoost::train");

if(!train_set)

PLERROR("In AdaBoost::train, you did not setTrainingSet");

if(!train_stats && compute_training_error)

PLERROR("In AdaBoost::train, you did not setTrainStatsCollector");

if (train_set->targetsize()!=1)

PLERROR("In AdaBoost::train, targetsize should be 1, found %d",

train_set->targetsize());

if(modif_train_set_weights && train_set->weightsize()!=1)

PLERROR("In AdaBoost::train, when modif_train_set_weights is true"

" the weightsize of the trainset must be one.");

PLCHECK_MSG(train_set->inputsize()>0, "In AdaBoost::train, the inputsize"

" of the train_set must be known.");

Vec input;

Vec output;

Vec target;

real weight;

Vec examples_error;

const int n = train_set.length();

TVec<int> train_indices;

Vec pseudo_loss;

input.resize(inputsize());

output.resize(weak_learner_template->outputsize());// We use only the first one as the output from the weak learner

target.resize(targetsize());

examples_error.resize(n);

if (stage==0)

{

example_weights.resize(n);

if (train_set->weightsize()>0)

{

PP<ProgressBar> pb;

initial_sum_weights=0;

int weight_col = train_set->inputsize()+train_set->targetsize();

for (int i=0; i<n; ++i) {

weight=train_set->get(i,weight_col);

example_weights[i]=weight;

initial_sum_weights += weight;

}

example_weights *= real(1.0)/initial_sum_weights;

}

else

{

example_weights.fill(1.0/n);

initial_sum_weights = 1;

}

sum_voting_weights = 0;

voting_weights.resize(0,nstages);

} else

PLCHECK_MSG(example_weights.length()==n,"In AdaBoost::train - the train"

" set should not change between each train without a forget!");

VMat unweighted_data = train_set.subMatColumns(0, inputsize()+1);

learners_error.resize(nstages);

for ( ; stage < nstages ; ++stage)

{

VMat weak_learner_training_set;

{

// We shall now construct a training set for the new weak learner:

if (weight_by_resampling)

{

PP<ProgressBar> pb;

if(report_progress) pb = new ProgressBar(

"AdaBoost round " + tostring(stage) +

": making training set for weak learner", n);

// use a "smart" resampling that approximates sampling

// with replacement with the probabilities given by

// example_weights.

map<real,int> indices;

for (int i=0; i<n; ++i) {

if(report_progress) pb->update(i);

real p_i = example_weights[i];

// randomly choose how many repeats of example i

int n_samples_of_row_i =

int(rint(gaussian_mu_sigma(n*p_i,sqrt(n*p_i*(1-p_i)))));

for (int j=0;j<n_samples_of_row_i;j++)

{

if (j==0)

indices[i]=i;

else

{

// put the others in random places

real k=n*uniform_sample();

// while avoiding collisions

indices[k]=i;

}

}

}

train_indices.resize(0,n);

map<real,int>::iterator it = indices.begin();

map<real,int>::iterator last = indices.end();

for (;it!=last;++it)

train_indices.push_back(it->second);

weak_learner_training_set =

new SelectRowsVMatrix(unweighted_data, train_indices);

weak_learner_training_set->defineSizes(inputsize(), 1, 0);

}

else if(modif_train_set_weights)

{

//No need for a deep copy of the sorted_train_set: after the train it is not used anymore

// and the data are not modified, but we need to change the weights

weak_learner_training_set = train_set;

int weight_col=train_set->inputsize()+train_set->targetsize();

for(int i=0;i<train_set->length();i++)

train_set->put(i,weight_col,example_weights[i]);

}

else

{

Mat data_weights_column = example_weights.toMat(n,1).copy();

// to bring the weights to the same average level as

// the original ones

data_weights_column *= initial_sum_weights;

VMat data_weights = VMat(data_weights_column);

weak_learner_training_set =

new ConcatColumnsVMatrix(unweighted_data,data_weights);

weak_learner_training_set->defineSizes(inputsize(), 1, 1);

}

}

// Create new weak-learner and train it

PP<PLearner> new_weak_learner = ::PLearn::deepCopy(weak_learner_template);

new_weak_learner->setTrainingSet(weak_learner_training_set);

new_weak_learner->setTrainStatsCollector(new VecStatsCollector);

if(expdir!="" && provide_learner_expdir)

new_weak_learner->setExperimentDirectory( expdir / ("WeakLearner"+tostring(stage)+"Expdir") );

new_weak_learner->train();

new_weak_learner->finalize();

// calculate its weighted training error

{

PP<ProgressBar> pb;

if(report_progress && verbosity >1) pb = new ProgressBar("computing weighted training error of weak learner",n);

learners_error[stage] = 0;

for (int i=0; i<n; ++i) {

if(pb) pb->update(i);

train_set->getExample(i, input, target, weight);

#ifdef BOUNDCHECK

if(!(is_equal(target[0],0)||is_equal(target[0],1)))

PLERROR("In AdaBoost::train() - target is %f in the training set. It should be 0 or 1 as we implement only two class boosting.",target[0]);

#endif

new_weak_learner->computeOutput(input,output);

real y_i=target[0];

real f_i=output[0];

if(conf_rated_adaboost)

{

PLASSERT_MSG(f_i>=0,"In AdaBoost.cc::train() - output[0] should be >= 0 ");

// examples_error[i] lies in [0,2]; examples_error[i]/2 is the unweighted error in [0,1]

examples_error[i] = 2*(f_i+y_i-2*f_i*y_i);

learners_error[stage] += example_weights[i]*

examples_error[i]/2;

}

else

{

// examples_error[i] lies in [0,2]; examples_error[i]/2 is the unweighted error in [0,1]

if (pseudo_loss_adaboost)

{

PLASSERT_MSG(f_i>=0,"In AdaBoost.cc::train() - output[0] should be >= 0 ");

examples_error[i] = 2*(f_i+y_i-2*f_i*y_i);

learners_error[stage] += example_weights[i]*

examples_error[i]/2;

}

else

{

if (fast_exact_is_equal(y_i, 1))

{

if (f_i<output_threshold)

{

learners_error[stage] += example_weights[i];

examples_error[i]=2;

}

else examples_error[i] = 0;

}

else

{

if (f_i>=output_threshold) {

learners_error[stage] += example_weights[i];

examples_error[i]=2;

}

else examples_error[i]=0;

}

}

}

}

}

if (verbosity>1)

NORMAL_LOG << "weak learner at stage " << stage

<< " has average loss = " << learners_error[stage] << endl;

weak_learners.push_back(new_weak_learner);

if (save_often && expdir!="")

PLearn::save(append_slash(expdir)+"model.psave", *this);

// compute the new learner's weight

if(conf_rated_adaboost)

{

// Find optimal weight with line search

real ax = -10;

real bx = 1;

real cx = 100;

real xmin;

real tolerance = 0.001;

int itmax = 100000;

int iter;

real xtmp;

real fa, fb, fc, ftmp;

// compute function for fa, fb and fc

fa = 0;

fb = 0;

fc = 0;

for (int i=0; i<n; ++i) {

train_set->getExample(i, input, target, weight);

new_weak_learner->computeOutput(input,output);

real y_i=(2*target[0]-1);

real f_i=(2*output[0]-1);

fa += example_weights[i]*exp(-1*ax*f_i*y_i);

fb += example_weights[i]*exp(-1*bx*f_i*y_i);

fc += example_weights[i]*exp(-1*cx*f_i*y_i);

}

for(iter=1;iter<=itmax;iter++)

{

if(verbosity>4)

NORMAL_LOG << "iteration " << iter << ": fx = " << fb << endl;

if (abs(cx-ax) <= tolerance)

{

xmin=bx;

if(verbosity>3)

{

NORMAL_LOG << "nIters for minimum: " << iter << endl;

NORMAL_LOG << "xmin = " << xmin << endl;

NORMAL_LOG << "fx = " << fb << endl;

}

break;

}

if (abs(bx-ax) > abs(bx-cx))

{

xtmp = (bx + ax) * 0.5;

ftmp = 0;

for (int i=0; i<n; ++i) {

train_set->getExample(i, input, target, weight);

new_weak_learner->computeOutput(input,output);

real y_i=(2*target[0]-1);

real f_i=(2*output[0]-1);

ftmp += example_weights[i]*exp(-1*xtmp*f_i*y_i);

}

if (ftmp > fb)

{

ax = xtmp;

fa = ftmp;

}

else

{

cx = bx;

fc = fb;

bx = xtmp;

fb = ftmp;

}

}

else

{

xtmp = (bx + cx) * 0.5;

ftmp = 0;

for (int i=0; i<n; ++i) {

train_set->getExample(i, input, target, weight);

new_weak_learner->computeOutput(input,output);

real y_i=(2*target[0]-1);

real f_i=(2*output[0]-1);

ftmp += example_weights[i]*exp(-1*xtmp*f_i*y_i);

}

if (ftmp > fb)

{

cx = xtmp;

fc = ftmp;

}

else

{

ax = bx;

fa = fb;

bx = xtmp;

fb = ftmp;

}

}

}

if(iter>itmax && verbosity>3)

{

NORMAL_LOG << "Too many iterations in Brent" << endl;

}

xmin=bx;

voting_weights.push_back(xmin);

sum_voting_weights += abs(voting_weights[stage]);

}

else

{

voting_weights.push_back(

0.5*safeflog(((1-learners_error[stage])*target_error)

/(learners_error[stage]*(1-target_error))));

sum_voting_weights += abs(voting_weights[stage]);

}

real sum_w=0;

for (int i=0;i<n;i++)

{

example_weights[i] *= exp(-voting_weights[stage]*

(1-examples_error[i]));

sum_w += example_weights[i];

}

example_weights *= real(1.0)/sum_w;

computeTrainingError(input, target);

if(fast_exact_is_equal(learners_error[stage], 0))

{

NORMAL_LOG << "AdaBoost::train found weak learner with 0 training "

<< "error at stage "

<< stage << " is " << learners_error[stage] << endl;

// Simulate infinite weight on new_weak_learner

weak_learners.resize(0);

weak_learners.push_back(new_weak_learner);

voting_weights.resize(0);

voting_weights.push_back(1);

sum_voting_weights = 1;

found_zero_error_weak_learner = true;

stage++;

break;

}

// stopping criterion (in addition to n_stages)

if (early_stopping && learners_error[stage] >= target_error)

{

nstages = stage;

NORMAL_LOG <<

"AdaBoost::train early stopping because learner's loss at stage "

<< stage << " is " << learners_error[stage] << endl;

break;

}

}

PLCHECK(stage==weak_learners.length() || found_zero_error_weak_learner);

Profiler::pl_profile_end("AdaBoost::train");

}
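When weight_by_resampling is true, train() does not pass weights to the weak learner. For each example i it draws a Gaussian approximation of a Binomial(n, p_i) repeat count, keeps the first copy at key i and scatters the other copies at random real-valued keys of a std::map, so that iterating the map interleaves the copies. The sketch below reproduces that scheme with the standard <random> header; resampleIndices and the choice of std::mt19937 are assumptions, std::lround replaces rint, and, as in the original, a key collision silently overwrites an earlier copy and the sample size is only approximately n.

```cpp
#include <cassert>
#include <cmath>
#include <map>
#include <random>
#include <vector>

// Approximate weighted resampling with replacement, modeled on the
// weight_by_resampling branch of AdaBoost::train(). probs[i] plays the role
// of example_weights[i] (non-negative, summing to 1).
std::vector<int> resampleIndices(const std::vector<double>& probs,
                                 std::mt19937& rng) {
    const int n = static_cast<int>(probs.size());
    std::uniform_real_distribution<double> unif(0.0, 1.0);
    std::map<double, int> keyed;  // sort key -> example index
    for (int i = 0; i < n; ++i) {
        const double p = probs[i];
        assert(p >= 0.0 && p <= 1.0);
        const double mean = n * p;
        const double stddev = std::sqrt(n * p * (1.0 - p));
        // Gaussian approximation of a Binomial(n, p) repeat count; a
        // negative draw simply yields no copy.
        long copies = std::lround(mean);
        if (stddev > 0.0) {
            std::normal_distribution<double> gauss(mean, stddev);
            copies = std::lround(gauss(rng));
        }
        for (long j = 0; j < copies; ++j) {
            if (j == 0)
                keyed[static_cast<double>(i)] = i;  // first copy keeps slot i
            else
                keyed[n * unif(rng)] = i;  // extra copies land at random keys
        }
    }
    std::vector<int> indices;
    indices.reserve(keyed.size());
    for (const auto& entry : keyed) indices.push_back(entry.second);
    return indices;
}
```

The normal approximation avoids drawing n categorical samples one by one; the price is that the number of rows given to the weak learner fluctuates around n rather than being exactly n.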

Reimplemented from PLearn::PLearner.

Definition at line 178 of file AdaBoost.h.

Definition at line 109 of file AdaBoost.h.

Referenced by computeTrainingError(), declareOptions(), and train().

Definition at line 115 of file AdaBoost.h.

Referenced by build_(), computeOutput_(), computeOutputAndCosts(), declareOptions(), and train().

Definition at line 121 of file AdaBoost.h.

Referenced by declareOptions(), and train().

Vec PLearn::AdaBoost::example_weights [protected]

Definition at line 69 of file AdaBoost.h.

Referenced by computeTrainingError(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().
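At stage 0, train() fills example_weights from the training set's weight column, normalized to sum to 1, and remembers the original total in initial_sum_weights; an unweighted set gets 1/n everywhere and a total of 1. When the weak learner is trained on a ConcatColumnsVMatrix, the weights are multiplied back by initial_sum_weights so they stay on the original scale. A minimal sketch with plain std::vector in place of Vec; initExampleWeights, rescaleForWeakLearner and InitialWeights are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Result of the stage-0 weight initialization.
struct InitialWeights {
    std::vector<double> example_weights;  // normalized to sum to 1
    double initial_sum_weights;           // total of the original weights
};

// Modeled on the stage==0 branch of AdaBoost::train(). An empty
// `weight_column` stands for a training set with weightsize()==0.
InitialWeights initExampleWeights(const std::vector<double>& weight_column,
                                  std::size_t n) {
    assert(n > 0);
    InitialWeights out{std::vector<double>(n, 1.0 / n), 1.0};
    if (weight_column.empty()) return out;  // uniform 1/n, total 1
    assert(weight_column.size() == n);
    double total = 0.0;
    for (double w : weight_column) total += w;
    assert(total > 0.0);
    for (std::size_t i = 0; i < n; ++i)
        out.example_weights[i] = weight_column[i] / total;
    out.initial_sum_weights = total;
    return out;
}

// Weights appended as an extra column (ConcatColumnsVMatrix branch),
// rescaled so that they sum to the original total again.
std::vector<double> rescaleForWeakLearner(const std::vector<double>& example_weights,
                                          double initial_sum_weights) {
    std::vector<double> column(example_weights);
    for (double& w : column) w *= initial_sum_weights;
    return column;
}
```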

Definition at line 127 of file AdaBoost.h.

Referenced by computeCostsFromOutputs(), computeOutputAndCosts(), computeTrainingError(), declareOptions(), and getTestCostNames().

bool PLearn::AdaBoost::found_zero_error_weak_learner [protected]

Indication that a weak learner with 0 training error has been found.

Definition at line 85 of file AdaBoost.h.

Referenced by declareOptions(), forget(), test(), and train().

real PLearn::AdaBoost::initial_sum_weights [protected]

Definition at line 79 of file AdaBoost.h.

Referenced by declareOptions(), and train().

Vec PLearn::AdaBoost::learners_error [protected]

Definition at line 67 of file AdaBoost.h.

Referenced by declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 130 of file AdaBoost.h.

Referenced by declareOptions(), setTrainingSet(), and train().

Definition at line 106 of file AdaBoost.h.

Referenced by computeCostsFromOutputs(), computeOutput_(), computeOutputAndCosts(), declareOptions(), and train().

Definition at line 103 of file AdaBoost.h.

Referenced by declareOptions(), and train().

Definition at line 112 of file AdaBoost.h.

Referenced by build_(), computeOutput_(), computeOutputAndCosts(), declareOptions(), and train().

Definition at line 135 of file AdaBoost.h.

Referenced by computeOutput_(), computeOutputAndCosts(), declareOptions(), outputsize(), and test().

Definition at line 124 of file AdaBoost.h.

Referenced by declareOptions(), and train().

TVec<int> PLearn::AdaBoost::saved_last_test_stages [mutable, private]

Definition at line 63 of file AdaBoost.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and test().

TVec<VMat> PLearn::AdaBoost::saved_testoutputs [mutable, private]

Definition at line 62 of file AdaBoost.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and test().

TVec<VMat> PLearn::AdaBoost::saved_testset [mutable, private]

Used with reuse_test_results.

Definition at line 61 of file AdaBoost.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and test().

real PLearn::AdaBoost::sum_voting_weights [protected]

Definition at line 78 of file AdaBoost.h.

Referenced by computeCostsFromOutputs(), computeOutput_(), computeOutputAndCosts(), declareOptions(), forget(), and train().

Vec PLearn::AdaBoost::sum_weighted_costs [mutable, private]

Definition at line 57 of file AdaBoost.h.

Referenced by computeCostsFromOutputs(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

Definition at line 100 of file AdaBoost.h.

Referenced by declareOptions(), and train().

Vec PLearn::AdaBoost::voting_weights [protected]

Definition at line 77 of file AdaBoost.h.

Referenced by computeCostsFromOutputs(), computeOutput_(), computeOutputAndCosts(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().
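With conf_rated_adaboost, train() chooses each entry of voting_weights by minimizing Z(alpha) = sum_i w_i * exp(-alpha * f_i * y_i), where f_i = 2*output[0]-1 and y_i = 2*target[0]-1 are mapped to [-1,1]. It keeps a bracket ax < bx < cx starting at (-10, 1, 100), bisects the longer side, and stops once the bracket is narrower than 0.001; despite the "Brent" log message there is no parabolic step. The sketch below reproduces that search on plain vectors. expLoss and bracketMinimize are hypothetical names, the loss is passed as a callable so the search can be checked on its own, and the fa/fc values the original also maintains are dropped because no decision depends on them.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Weighted exponential loss Z(alpha) = sum_i w_i * exp(-alpha * f_i * y_i),
// with f_i and y_i already mapped to [-1, 1].
double expLoss(const std::vector<double>& w, const std::vector<double>& f,
               const std::vector<double>& y, double alpha) {
    double z = 0.0;
    for (std::size_t i = 0; i < w.size(); ++i)
        z += w[i] * std::exp(-alpha * f[i] * y[i]);
    return z;
}

// Bracket-shrinking search: keep loss(bx) <= loss(ax), loss(cx), bisect the
// longer side, and move the bracket toward the lower of the two values.
double bracketMinimize(const std::function<double(double)>& loss,
                       double ax = -10.0, double bx = 1.0, double cx = 100.0,
                       double tolerance = 0.001, int itmax = 100000) {
    assert(ax < bx && bx < cx);
    double fb = loss(bx);
    for (int iter = 1; iter <= itmax; ++iter) {
        if (std::fabs(cx - ax) <= tolerance) break;
        if (std::fabs(bx - ax) > std::fabs(bx - cx)) {
            const double x = 0.5 * (bx + ax);
            const double fx = loss(x);
            if (fx > fb) { ax = x; }
            else { cx = bx; bx = x; fb = fx; }
        } else {
            const double x = 0.5 * (bx + cx);
            const double fx = loss(x);
            if (fx > fb) { cx = x; }
            else { ax = bx; bx = x; fb = fx; }
        }
    }
    return bx;
}
```

For a learner whose signed margins f_i*y_i are all +1 or -1, with weighted error err, the minimizer has the closed form 0.5*log((1-err)/err), which gives an easy check on the search. Because the search only needs loss values, it also handles the real-valued outputs that confidence-rated weak learners produce.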

Vec PLearn::AdaBoost::weak_learner_output [mutable, private]

Definition at line 58 of file AdaBoost.h.

Referenced by build_(), computeOutput_(), computeOutputAndCosts(), declareOptions(), makeDeepCopyFromShallowCopy(), and test().

Weak learner to use as a template for each boosting round.

AdaBoost requires classification weak learners that provide an essential non-linearity (e.g. linear regression does not work). NOTE: this weak learner must support deepCopy().

Definition at line 97 of file AdaBoost.h.

Referenced by build_(), computeCostsFromOutputs(), computeOutput_(), computeOutputAndCosts(), declareOptions(), getTestCostNames(), makeDeepCopyFromShallowCopy(), setTrainingSet(), test(), and train().

TVec< PP<PLearner> > PLearn::AdaBoost::weak_learners [protected]

Vector of weak learners learned from boosting.

Definition at line 82 of file AdaBoost.h.

Referenced by build_(), computeCostsFromOutputs(), computeOutput_(), computeOutputAndCosts(), declareOptions(), finalize(), forget(), getTestCostNames(), makeDeepCopyFromShallowCopy(), setTrainingSet(), test(), and train().

Definition at line 118 of file AdaBoost.h.

Referenced by declareOptions(), and train().

Vec PLearn::AdaBoost::weighted_costs [mutable, private]

Global storage to save memory allocations.

Definition at line 56 of file AdaBoost.h.

Referenced by computeCostsFromOutputs(), computeOutputAndCosts(), and makeDeepCopyFromShallowCopy().

1.7.4
