PLearn 0.1

Computes the cross-entropy, given two activation vectors.

#include <CrossEntropyCostModule.h>

Public Member Functions | |

| CrossEntropyCostModule () | |

| Default constructor. | |

| virtual void | fprop (const Vec &input, const Vec &target, real &cost) const |

| Given the input and target, computes the cost. | |

| virtual void | fprop (const Vec &input, const Vec &target, Vec &cost) const |

| This version allows for several costs. | |

| virtual void | fprop (const Mat &input, const Mat &target, Mat &cost) const |

| Mini-batch version with several costs. | |

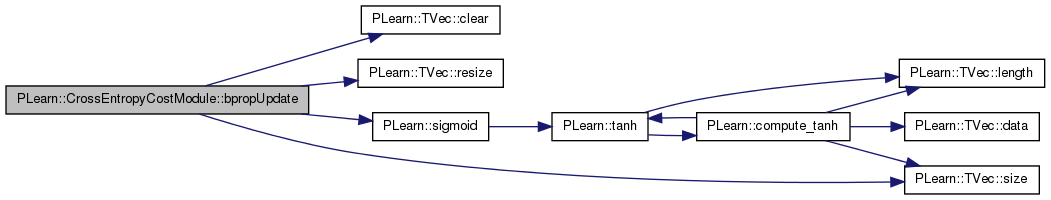

| virtual void | bpropUpdate (const Vec &input, const Vec &target, real cost, Vec &input_gradient, bool accumulate=false) |

| Standard backpropagation. | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &targets, const Vec &costs, Mat &input_gradients, bool accumulate=false) |

| Minibatch backpropagation. | |

| virtual void | bpropAccUpdate (const TVec< Mat * > &ports_value, const TVec< Mat * > &ports_gradient) |

| New version of backpropagation. | |

| virtual void | setLearningRate (real dynamic_learning_rate) |

| Overridden to do nothing (in particular, no warning). | |

| virtual TVec< string > | costNames () |

| Indicates the name of the computed costs. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual CrossEntropyCostModule * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef CostModule | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

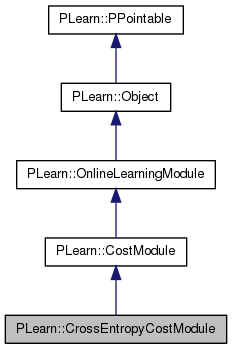

Computes the cross-entropy, given two activation vectors.

Backpropagates it.

Definition at line 50 of file CrossEntropyCostModule.h.
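As a reading aid, the quantity this class computes and backpropagates can be written out explicitly. The symbols below are assumptions introduced for illustration ($a_i$ for the input activation, $t_i$ for the target, $\sigma$ for the logistic sigmoid); the identities themselves follow the comments in `fprop` and the gradient used in `bpropUpdate`.

$$
C = \sum_i \Big[ -t_i \log \sigma(a_i) - (1 - t_i) \log\big(1 - \sigma(a_i)\big) \Big]
$$

Using $-\log \sigma(a) = \mathrm{softplus}(-a)$ and $-\log(1 - \sigma(a)) = \mathrm{softplus}(a) = a + \mathrm{softplus}(-a)$:

$$
C = \sum_i \Big[ \mathrm{softplus}(a_i) - t_i\, a_i \Big],
\qquad
\frac{\partial C}{\partial a_i} = \sigma(a_i) - t_i
$$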

typedef CostModule PLearn::CrossEntropyCostModule::inherited [private] |

Reimplemented from PLearn::CostModule.

Definition at line 52 of file CrossEntropyCostModule.h.

| PLearn::CrossEntropyCostModule::CrossEntropyCostModule | ( | ) |

| string PLearn::CrossEntropyCostModule::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

| OptionList & PLearn::CrossEntropyCostModule::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

| RemoteMethodMap & PLearn::CrossEntropyCostModule::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

| bool PLearn::CrossEntropyCostModule::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

| Object * PLearn::CrossEntropyCostModule::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

| void PLearn::CrossEntropyCostModule::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

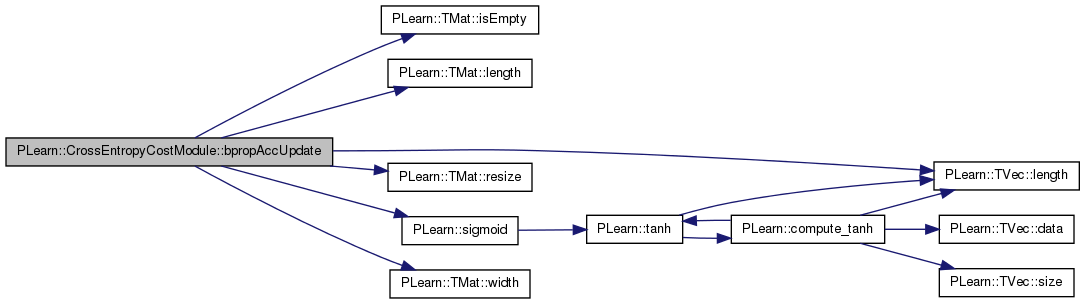

| void PLearn::CrossEntropyCostModule::bpropAccUpdate | ( | const TVec< Mat * > & ports_value, const TVec< Mat * > & ports_gradient | ) | [virtual] |

New version of backpropagation.

Reimplemented from PLearn::CostModule.

Definition at line 177 of file CrossEntropyCostModule.cc.

References i, PLearn::TMat< T >::isEmpty(), j, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLASSERT, PLERROR, PLearn::TMat< T >::resize(), PLearn::sigmoid(), and PLearn::TMat< T >::width().

    {
        PLASSERT( ports_value.length() == nPorts() );
        PLASSERT( ports_gradient.length() == nPorts() );
        Mat* prediction = ports_value[0];
        Mat* target = ports_value[1];
    #ifndef NDEBUG
        Mat* cost = ports_value[2];
    #endif
        Mat* prediction_grad = ports_gradient[0];
        Mat* target_grad = ports_gradient[1];
        Mat* cost_grad = ports_gradient[2];
        // If we have cost_grad and we want prediction_grad
        if( prediction_grad && prediction_grad->isEmpty()
            && cost_grad && !cost_grad->isEmpty() )
        {
            PLASSERT( prediction );
            PLASSERT( target );
            PLASSERT( cost );
            PLASSERT( !target_grad );
            PLASSERT( prediction->width() == getPortSizes()(0,1) );
            PLASSERT( target->width() == getPortSizes()(1,1) );
            PLASSERT( cost->width() == getPortSizes()(2,1) );
            PLASSERT( prediction_grad->width() == getPortSizes()(0,1) );
            PLASSERT( cost_grad->width() == getPortSizes()(2,1) );
            int batch_size = prediction->length();
            PLASSERT( target->length() == batch_size );
            PLASSERT( cost->length() == batch_size );
            PLASSERT( cost_grad->length() == batch_size );
            prediction_grad->resize(batch_size, getPortSizes()(0,1));
            for( int i=0; i < batch_size; i++ )
                for ( int j=0; j < target->width(); j++ )
                    (*prediction_grad)(i, j) +=
                        (*cost_grad)(i,0)*(sigmoid((*prediction)(i,j)) - (*target)(i,j));
        }
        else if( !prediction_grad && !target_grad &&
                 (!cost_grad || !cost_grad->isEmpty()) )
            // We do not care about the gradient w.r.t prediction and target, and
            // either we do not care about the gradient w.r.t. cost or there is a
            // gradient provided (that we will not use).
            // In such situations, there is nothing to do.
            return;
        else if( !cost_grad && prediction_grad && prediction_grad->isEmpty() )
            PLERROR("In CrossEntropyCostModule::bpropAccUpdate - cost gradient is NULL,\n"
                    "cannot compute prediction gradient. Maybe you should set\n"
                    "\"propagate_gradient = 0\" on the incoming connection.\n");
        else
            PLERROR("In OnlineLearningModule::bpropAccUpdate - Port configuration "
                    "not implemented for class '%s'", classname().c_str());
    }
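The first branch above applies the chain rule: each row's gradient with respect to the prediction is the sigmoid-minus-target term scaled by that row's incoming cost gradient. The following is a minimal standalone sketch of that update, not the PLearn implementation. `Matrix`, `sigmoid`, and `accumulatePredictionGradient` are hypothetical names, nested `std::vector`s stand in for `Mat`, and the zero-fill of a freshly sized gradient is an assumption of the sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Plain nested vectors stand in for PLearn's Mat (an assumption of this sketch).
using Matrix = std::vector<std::vector<double>>;

// Logistic sigmoid, a stand-in for PLearn::sigmoid.
double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Chain rule for one mini-batch:
//   prediction_grad(i, j) += cost_grad[i] * (sigmoid(prediction(i, j)) - target(i, j))
// cost_grad[i] is the gradient of the downstream objective w.r.t. the cost of row i.
void accumulatePredictionGradient(const Matrix& prediction,
                                  const Matrix& target,
                                  const std::vector<double>& cost_grad,
                                  Matrix& prediction_grad)
{
    const std::size_t batch_size = prediction.size();
    assert(target.size() == batch_size);
    assert(cost_grad.size() == batch_size);

    // Assumption: an empty gradient matrix is sized and zero-filled first.
    if (prediction_grad.empty())
        prediction_grad.resize(batch_size);
    assert(prediction_grad.size() == batch_size);

    for (std::size_t i = 0; i < batch_size; ++i)
    {
        assert(target[i].size() == prediction[i].size());
        // New elements are zero; existing values are kept for accumulation.
        prediction_grad[i].resize(prediction[i].size(), 0.0);
        for (std::size_t j = 0; j < prediction[i].size(); ++j)
            prediction_grad[i][j] +=
                cost_grad[i] * (sigmoid(prediction[i][j]) - target[i][j]);
    }
}
```

With a single row of zero activations, targets `{1, 0}`, and a cost gradient of 2, the sketch yields gradients of -1 and 1, since `sigmoid(0)` is 0.5.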

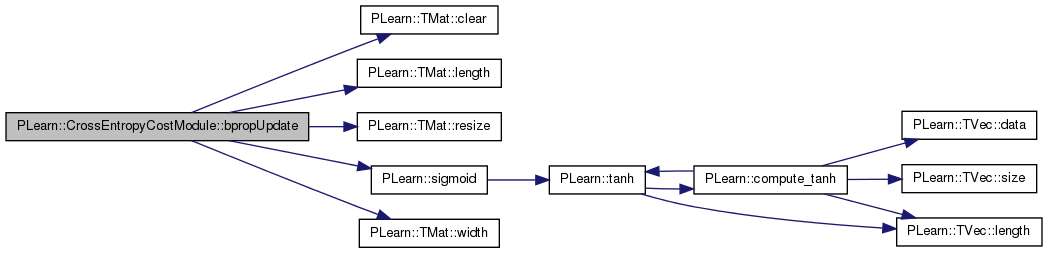

| void PLearn::CrossEntropyCostModule::bpropUpdate | ( | const Mat & inputs, const Mat & targets, const Vec & costs, Mat & input_gradients, bool accumulate = false | ) | [virtual] |

Minibatch backpropagation.

Reimplemented from PLearn::CostModule.

Definition at line 151 of file CrossEntropyCostModule.cc.

References PLearn::TMat< T >::clear(), i, j, PLearn::TMat< T >::length(), PLASSERT, PLASSERT_MSG, PLearn::TMat< T >::resize(), PLearn::sigmoid(), and PLearn::TMat< T >::width().

    {
        PLASSERT( inputs.width() == input_size );
        PLASSERT( targets.width() == target_size );
        int batch_size = inputs.length();
        if (accumulate)
        {
            PLASSERT_MSG( input_gradients.width() == input_size &&
                          input_gradients.length() == batch_size,
                          "Cannot resize input_gradients AND accumulate into it" );
        }
        else
        {
            input_gradients.resize(batch_size, input_size);
            input_gradients.clear();
        }
        for (int i=0; i < batch_size; i++)
            for (int j=0; j < target_size; j++)
                input_gradients(i, j) += sigmoid(inputs(i, j)) - targets(i, j);
    }

| void PLearn::CrossEntropyCostModule::bpropUpdate | ( | const Vec & input, const Vec & target, real cost, Vec & input_gradient, bool accumulate = false | ) | [virtual] |

Standard backpropagation.

Reimplemented from PLearn::CostModule.

Definition at line 129 of file CrossEntropyCostModule.cc.

References PLearn::TVec< T >::clear(), i, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::sigmoid(), and PLearn::TVec< T >::size().

    {
        PLASSERT( input.size() == input_size );
        PLASSERT( target.size() == target_size );
        if (accumulate)
        {
            PLASSERT_MSG( input_gradient.size() == input_size,
                          "Cannot resize input_gradient AND accumulate into it" );
        }
        else
        {
            input_gradient.resize(input_size);
            input_gradient.clear();
        }
        for (int i=0; i < target_size; i++)
            input_gradient[i] += sigmoid(input[i]) - target[i];
    }
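The gradient `sigmoid(input[i]) - target[i]` can be checked against a central finite difference of the cost `softplus(a) - t*a`. The sketch below is standalone and does not use PLearn. `inputGradient`, `crossEntropy`, and `numericGradient` are hypothetical helper names, and `std::vector<double>` stands in for `Vec`. The `accumulate` flag mirrors the semantics above: either add into an already sized gradient, or reset it first.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Stable log(1 + exp(x)); a stand-in for PLearn::softplus.
double softplus(double x)
{
    return x > 0.0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// Cost summed over units: softplus(a_i) - t_i * a_i.
double crossEntropy(const std::vector<double>& a, const std::vector<double>& t)
{
    assert(a.size() == t.size());
    double cost = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i)
        cost += softplus(a[i]) - t[i] * a[i];
    return cost;
}

// Analytic gradient: grad[i] += sigmoid(a_i) - t_i.
void inputGradient(const std::vector<double>& a, const std::vector<double>& t,
                   std::vector<double>& grad, bool accumulate = false)
{
    assert(a.size() == t.size());
    if (accumulate)
        assert(grad.size() == a.size()); // cannot resize AND accumulate
    else
        grad.assign(a.size(), 0.0);      // resize and clear
    for (std::size_t i = 0; i < a.size(); ++i)
        grad[i] += sigmoid(a[i]) - t[i];
}

// Central finite difference of the cost w.r.t. a[i] (a is copied on purpose).
double numericGradient(std::vector<double> a, const std::vector<double>& t,
                       std::size_t i, double eps = 1e-6)
{
    a[i] += eps;
    const double up = crossEntropy(a, t);
    a[i] -= 2.0 * eps;
    const double down = crossEntropy(a, t);
    return (up - down) / (2.0 * eps);
}
```

Calling `inputGradient` a second time with `accumulate = true` on the same inputs doubles every entry, which is the behaviour the assertion message above guards.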

| void PLearn::CrossEntropyCostModule::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should call simply inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::CostModule.

Definition at line 72 of file CrossEntropyCostModule.cc.

    {
        inherited::build();
        build_();
    }
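The build()/build_() convention described above can be illustrated without PLearn. In this minimal sketch, `CostBase` and `TiedSizeCost` are hypothetical classes invented for the example: the public virtual `build()` first calls the parent's `build()`, then the class's own private `build_()`, so the object can be rebuilt after an option changes.

```cpp
#include <cassert>

// Hypothetical stand-in for a PLearn-style base class.
class CostBase
{
public:
    int input_size = 0;
    int target_size = 0;

    virtual ~CostBase() = default;

    // Public entry point; safe to call again after options change.
    virtual void build() { build_(); }

private:
    // Base-level consistency check only.
    void build_() { assert(input_size >= 0); }
};

// Hypothetical derived class that ties target_size to input_size,
// as CrossEntropyCostModule::build_() does.
class TiedSizeCost : public CostBase
{
    typedef CostBase inherited;

public:
    void build() override
    {
        inherited::build(); // parent state first
        build_();           // then this class's own building
    }

private:
    void build_() { target_size = input_size; }
};
```

Setting `input_size` to 3 and calling `build()` gives `target_size == 3`; changing it to 5 and calling `build()` again updates `target_size` to 5.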

| void PLearn::CrossEntropyCostModule::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::CostModule.

Definition at line 66 of file CrossEntropyCostModule.cc.

    {
        target_size = input_size;
    }

| string PLearn::CrossEntropyCostModule::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

| TVec< string > PLearn::CrossEntropyCostModule::costNames | ( | ) | [virtual] |

Indicates the name of the computed costs.

Reimplemented from PLearn::CostModule.

Definition at line 236 of file CrossEntropyCostModule.cc.

| void PLearn::CrossEntropyCostModule::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::CostModule.

Definition at line 55 of file CrossEntropyCostModule.cc.

References PLearn::OptionBase::nosave, PLearn::redeclareOption(), and PLearn::CostModule::target_size.

    {
        // Now call the parent class' declareOptions
        inherited::declareOptions(ol);
        redeclareOption(ol, "target_size", &CrossEntropyCostModule::target_size,
                        OptionBase::nosave,
                        "equals to input_size");
    }

| static const PPath& PLearn::CrossEntropyCostModule::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::CostModule.

Definition at line 95 of file CrossEntropyCostModule.h.


| CrossEntropyCostModule * PLearn::CrossEntropyCostModule::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

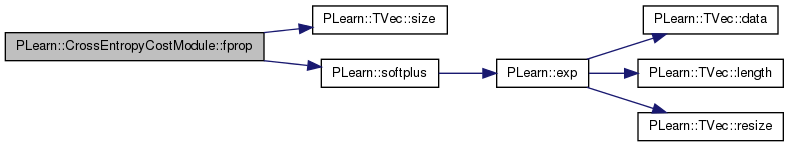

| void PLearn::CrossEntropyCostModule::fprop | ( | const Vec & input, const Vec & target, real & cost | ) | const [virtual] |

Given the input and target, computes the cost.

Reimplemented from PLearn::CostModule.

Definition at line 88 of file CrossEntropyCostModule.cc.

References i, PLASSERT, PLearn::TVec< T >::size(), and PLearn::softplus().

    {
        PLASSERT( input.size() == input_size );
        PLASSERT( target.size() == target_size );
        cost = 0;
        real target_i, activation_i;
        for( int i=0 ; i < target_size ; i++ )
        {
            // nll = - target*log(sigmoid(act)) -(1-target)*log(1-sigmoid(act))
            // but it is numerically unstable, so use instead the following:
            //     = target*softplus(-act) +(1-target)*(act+softplus(-act))
            //     = act + softplus(-act) - target*act
            //     = softplus(act) - target*act
            target_i = target[i];
            activation_i = input[i];
            cost += softplus(activation_i) - target_i * activation_i;
        }
    }
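The comments in the listing above explain why the softplus form is preferred over the direct log-sigmoid form. The standalone sketch below compares the two; it does not use PLearn. `softplus` here is a stand-in written with `std::log1p` (how PLearn::softplus is implemented is not shown on this page), and `crossEntropy` and `naiveCrossEntropy` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Stable log(1 + exp(x)): for large positive x, exp(x) would overflow,
// so the equivalent form x + log1p(exp(-x)) is used instead.
double softplus(double x)
{
    return x > 0.0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// Stable form: sum over units of softplus(a_i) - t_i * a_i.
double crossEntropy(const std::vector<double>& activation,
                    const std::vector<double>& target)
{
    assert(activation.size() == target.size());
    double cost = 0.0;
    for (std::size_t i = 0; i < activation.size(); ++i)
        cost += softplus(activation[i]) - target[i] * activation[i];
    return cost;
}

// Direct form, for comparison only: -t*log(p) - (1-t)*log(1-p) with
// p = sigmoid(a). It returns infinity once p rounds to exactly 0 or 1.
double naiveCrossEntropy(const std::vector<double>& activation,
                         const std::vector<double>& target)
{
    assert(activation.size() == target.size());
    double cost = 0.0;
    for (std::size_t i = 0; i < activation.size(); ++i)
    {
        const double p = 1.0 / (1.0 + std::exp(-activation[i]));
        cost += -target[i] * std::log(p) - (1.0 - target[i]) * std::log(1.0 - p);
    }
    return cost;
}
```

For moderate activations the two functions agree to rounding error. For an activation of 800 with target 0, the stable form returns 800 while the direct form evaluates `log(0)` and returns infinity.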

| void PLearn::CrossEntropyCostModule::fprop | ( | const Vec & input, const Vec & target, Vec & cost | ) | const [virtual] |

This version allows for several costs.

Reimplemented from PLearn::CostModule.

Definition at line 109 of file CrossEntropyCostModule.cc.

References PLearn::TVec< T >::resize().

    {
        cost.resize( output_size );
        fprop( input, target, cost[0] );
    }

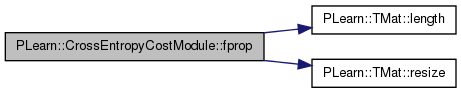

| void PLearn::CrossEntropyCostModule::fprop | ( | const Mat & inputs, const Mat & targets, Mat & costs | ) | const [virtual] |

Mini-batch version with several costs.

Reimplemented from PLearn::CostModule.

Definition at line 115 of file CrossEntropyCostModule.cc.

References i, PLearn::TMat< T >::length(), and PLearn::TMat< T >::resize().

    {
        costs.resize( inputs.length(), output_size );
        for (int i = 0; i < inputs.length(); i++)
            fprop(inputs(i), targets(i), costs(i,0));
    }

| OptionList & PLearn::CrossEntropyCostModule::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

| OptionMap & PLearn::CrossEntropyCostModule::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

| RemoteMethodMap & PLearn::CrossEntropyCostModule::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 48 of file CrossEntropyCostModule.cc.

| void PLearn::CrossEntropyCostModule::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::CostModule.

Definition at line 79 of file CrossEntropyCostModule.cc.

    {
        inherited::makeDeepCopyFromShallowCopy(copies);
    }

| virtual void PLearn::CrossEntropyCostModule::setLearningRate | ( | real | dynamic_learning_rate | ) | [inline, virtual] |

Overridden to do nothing (in particular, no warning).

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 85 of file CrossEntropyCostModule.h.

{}

| StaticInitializer PLearn::CrossEntropyCostModule::_static_initializer_ | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 95 of file CrossEntropyCostModule.h.
