PLearn 0.1

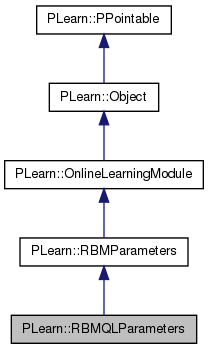

Stores and learns the parameters between one quadratic layer and one linear layer of an RBM.

#include <RBMQLParameters.h>

Public Member Functions | |

| RBMQLParameters (real the_learning_rate=0) | |

| Default constructor. | |

| RBMQLParameters (string down_types, string up_types, real the_learning_rate=0) | |

| Constructor from two string prototypes. | |

| virtual void | accumulatePosStats (const Vec &down_values, const Vec &up_values) |

| Accumulates positive phase statistics to *_pos_stats. | |

| virtual void | accumulateNegStats (const Vec &down_values, const Vec &up_values) |

| Accumulates negative phase statistics to *_neg_stats. | |

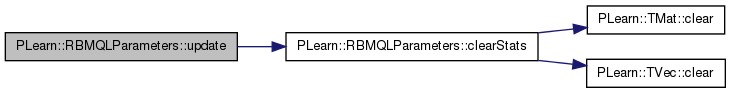

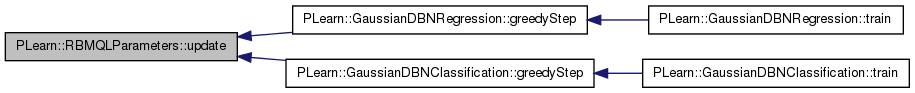

| virtual void | update () |

| Updates parameters according to contrastive divergence gradient. | |

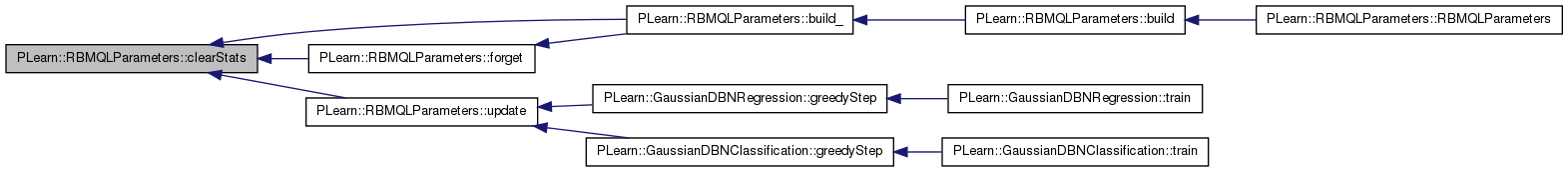

| virtual void | clearStats () |

| Clear all information accumulated during stats. | |

| virtual void | computeUnitActivations (int start, int length, const Vec &activations) const |

| Computes the vectors of activation of "length" units, starting from "start", and concatenates them into "activations". | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient) |

| Adapt based on the output gradient: this method should only be called just after a corresponding fprop; it should be called with the same arguments as fprop for the first two arguments (and output should not have been modified since then). | |

| virtual void | forget () |

| Resets the parameters to the state they would be in BEFORE starting training. | |

| virtual int | nParameters (bool share_up_params, bool share_down_params) const |

| Returns the number of parameters. | |

| virtual Vec | makeParametersPointHere (const Vec &global_parameters, bool share_up_params, bool share_down_params) |

| Make the parameters data be sub-vectors of the given global_parameters. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMQLParameters * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Mat | weights |

| Matrix containing unit-to-unit weights (output_size × input_size) | |

| Vec | up_units_bias |

| Element i contains the bias of linear up unit i. | |

| TVec< Vec > | down_units_params |

| Element (0,i) contains the bias of down unit i; element (1,i) contains the quadratic term of down unit i. | |

| Mat | weights_pos_stats |

| Accumulates positive contribution to the weights' gradient. | |

| Mat | weights_neg_stats |

| Accumulates negative contribution to the weights' gradient. | |

| Vec | up_units_bias_pos_stats |

| Accumulates positive contribution to the gradient of up_units_bias. | |

| Vec | up_units_bias_neg_stats |

| Accumulates negative contribution to the gradient of up_units_bias. | |

| TVec< Vec > | down_units_params_pos_stats |

| Accumulates positive contribution to the gradient of down_units_params. | |

| TVec< Vec > | down_units_params_neg_stats |

| Accumulates negative contribution to the gradient of down_units_params. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef RBMParameters | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Stores and learns the parameters between one quadratic layer and one linear layer of an RBM.

The lower layer is quadratic and the upper one is linear. See RBMLQParameters.* for the case where the layers are switched.

Definition at line 56 of file RBMQLParameters.h.

typedef RBMParameters PLearn::RBMQLParameters::inherited [private] |

Reimplemented from PLearn::RBMParameters.

Definition at line 58 of file RBMQLParameters.h.

| PLearn::RBMQLParameters::RBMQLParameters | ( | real | the_learning_rate = 0 | ) |

Default constructor.

Definition at line 52 of file RBMQLParameters.cc.

:

inherited(the_learning_rate)

{

}

| PLearn::RBMQLParameters::RBMQLParameters | ( | string | down_types, |

| string | up_types, | ||

| real | the_learning_rate = 0 |

||

| ) |

Constructor from two string prototypes.

Definition at line 57 of file RBMQLParameters.cc.

References build().

:

inherited( down_types, up_types, the_learning_rate )

{

// We're not sure inherited::build() has been called

build();

}

| string PLearn::RBMQLParameters::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMParameters.

Definition at line 50 of file RBMQLParameters.cc.

| OptionList & PLearn::RBMQLParameters::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMParameters.

Definition at line 50 of file RBMQLParameters.cc.

| RemoteMethodMap & PLearn::RBMQLParameters::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMParameters.

Definition at line 50 of file RBMQLParameters.cc.

| bool PLearn::RBMQLParameters::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMParameters.

Definition at line 50 of file RBMQLParameters.cc.

| Object * PLearn::RBMQLParameters::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMQLParameters.cc.

| void PLearn::RBMQLParameters::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMParameters.

Definition at line 50 of file RBMQLParameters.cc.

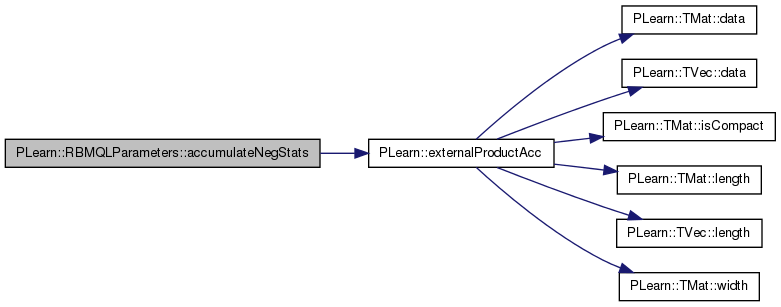

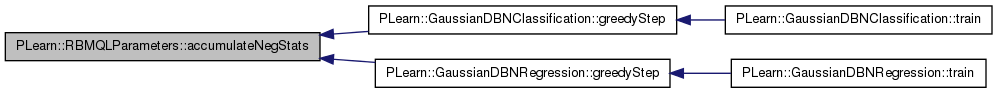

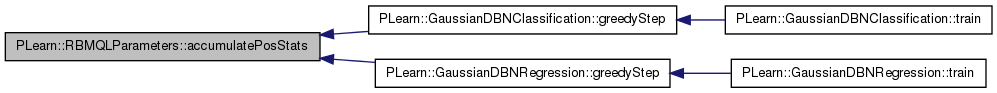

| void PLearn::RBMQLParameters::accumulateNegStats | ( | const Vec & | down_values, |

| const Vec & | up_values | ||

| ) | [virtual] |

Accumulates negative phase statistics to *_neg_stats.

Implements PLearn::RBMParameters.

Definition at line 193 of file RBMQLParameters.cc.

References PLearn::RBMParameters::down_layer_size, down_units_params, down_units_params_neg_stats, PLearn::externalProductAcc(), i, PLearn::RBMParameters::neg_count, up_units_bias_neg_stats, and weights_neg_stats.

Referenced by PLearn::GaussianDBNClassification::greedyStep(), and PLearn::GaussianDBNRegression::greedyStep().

{

// weights_neg_stats += up_values * down_values'

externalProductAcc( weights_neg_stats, up_values, down_values );

down_units_params_neg_stats[0] += down_values;

up_units_bias_neg_stats += up_values;

for(int i=0 ; i<down_layer_size ; ++i) {

down_units_params_neg_stats[1][i] += 2 * down_units_params[1][i] *

down_values[i] *down_values[i];

}

neg_count++;

}

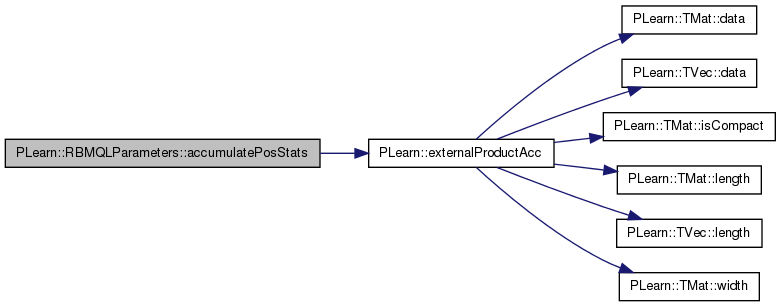

| void PLearn::RBMQLParameters::accumulatePosStats | ( | const Vec & | down_values, |

| const Vec & | up_values | ||

| ) | [virtual] |

Accumulates positive phase statistics to *_pos_stats.

Implements PLearn::RBMParameters.

Definition at line 176 of file RBMQLParameters.cc.

References PLearn::RBMParameters::down_layer_size, down_units_params, down_units_params_pos_stats, PLearn::externalProductAcc(), i, PLearn::RBMParameters::pos_count, up_units_bias_pos_stats, and weights_pos_stats.

Referenced by PLearn::GaussianDBNClassification::greedyStep(), and PLearn::GaussianDBNRegression::greedyStep().

{

// weights_pos_stats += up_values * down_values'

externalProductAcc( weights_pos_stats, up_values, down_values );

down_units_params_pos_stats[0] += down_values;

up_units_bias_pos_stats += up_values;

for(int i=0 ; i<down_layer_size ; ++i) {

down_units_params_pos_stats[1][i] += 2 * down_units_params[1][i] *

down_values[i] *down_values[i];

}

pos_count++;

}
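The two accumulators differ only in which set of statistics they write to. The following is a minimal standalone sketch of that shared pattern, assuming `std::vector` in place of PLearn's `Vec` and `Mat`; `PhaseStats` and `accumulateStats` are hypothetical names used for illustration and are not part of PLearn.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: weights[up][down]

// Hypothetical stand-in for one phase's accumulators (positive or negative).
struct PhaseStats {
    Mat weights;    // up_size x down_size
    Vec up_bias;    // up_size
    Vec down_bias;  // down_size, plays the role of row 0 of the down stats
    Vec down_quad;  // down_size, plays the role of row 1 of the down stats
    int count = 0;

    PhaseStats(std::size_t up_size, std::size_t down_size)
        : weights(up_size, Vec(down_size, 0.0)),
          up_bias(up_size, 0.0),
          down_bias(down_size, 0.0),
          down_quad(down_size, 0.0) {}
};

// Adds one (down, up) sample to the accumulators, following the listings above.
// quad_params plays the role of down_units_params[1].
void accumulateStats(PhaseStats& s, const Vec& down, const Vec& up,
                     const Vec& quad_params) {
    assert(up.size() == s.up_bias.size());
    assert(down.size() == s.down_bias.size());
    assert(quad_params.size() == down.size());
    for (std::size_t i = 0; i < up.size(); ++i) {
        for (std::size_t j = 0; j < down.size(); ++j)
            s.weights[i][j] += up[i] * down[j];  // outer product up * down'
        s.up_bias[i] += up[i];
    }
    for (std::size_t j = 0; j < down.size(); ++j) {
        s.down_bias[j] += down[j];
        s.down_quad[j] += 2.0 * quad_params[j] * down[j] * down[j];
    }
    ++s.count;
}
```

In this sketch one `PhaseStats` instance would be kept per phase, and the counts are what the update step later divides by.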

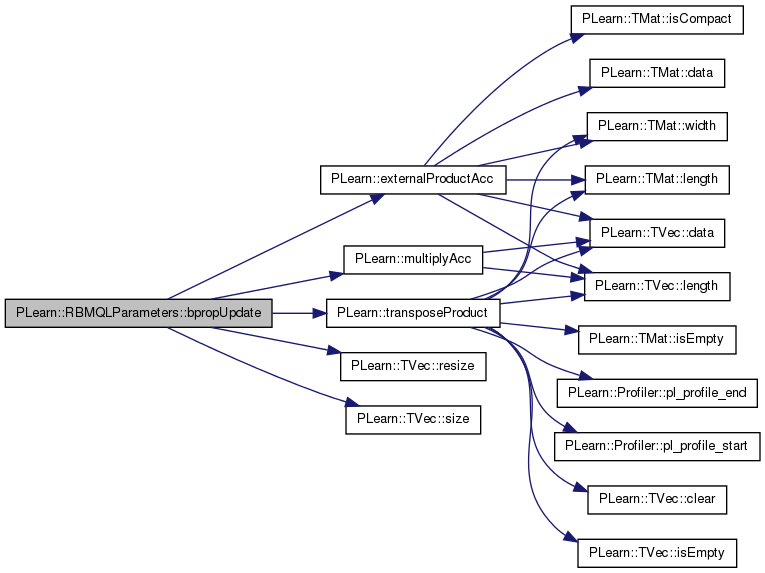

| void PLearn::RBMQLParameters::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

Adapt based on the output gradient: this method should only be called just after a corresponding fprop; it should be called with the same arguments as fprop for the first two arguments (and output should not have been modified since then).

This version also computes the input gradient.

Since sub-classes are supposed to learn ONLINE, the object is 'ready-to-be-used' just after any bpropUpdate. N.B. The super-class provides a default implementation of the variant without input_gradient, which just calls bpropUpdate(input, output, input_gradient, output_gradient) and ignores the input gradient. The default super-class implementation of this variant just raises a PLERROR.

Definition at line 305 of file RBMQLParameters.cc.

References PLearn::RBMParameters::down_layer_size, PLearn::externalProductAcc(), PLearn::RBMParameters::learning_rate, PLearn::multiplyAcc(), PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::transposeProduct(), PLearn::RBMParameters::up_layer_size, up_units_bias, and weights.

{

PLASSERT( input.size() == down_layer_size );

PLASSERT( output.size() == up_layer_size );

PLASSERT( output_gradient.size() == up_layer_size );

input_gradient.resize( down_layer_size );

// input_gradient = weights' * output_gradient

transposeProduct( input_gradient, weights, output_gradient );

// weights -= learning_rate * output_gradient * input'

externalProductAcc( weights, (-learning_rate)*output_gradient, input );

// (up) bias -= learning_rate * output_gradient

multiplyAcc( up_units_bias, output_gradient, -learning_rate );

}
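The order of operations in the listing matters: the input gradient is computed from the weights before they are modified. A standalone sketch of the same step, assuming `std::vector` containers instead of PLearn's `Vec` and `Mat` (the function name `bpropStep` is hypothetical):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: weights[up][down]

// Returns input_gradient = weights' * output_gradient, then applies one
// gradient step to the weights and to the up-layer bias.
Vec bpropStep(Mat& weights, Vec& up_bias, const Vec& input,
              const Vec& output_gradient, double learning_rate) {
    const std::size_t up_size = weights.size();
    const std::size_t down_size = input.size();
    assert(output_gradient.size() == up_size);
    assert(up_bias.size() == up_size);

    // Input gradient uses the weights as they were during the forward pass.
    Vec input_gradient(down_size, 0.0);
    for (std::size_t i = 0; i < up_size; ++i) {
        assert(weights[i].size() == down_size);
        for (std::size_t j = 0; j < down_size; ++j)
            input_gradient[j] += weights[i][j] * output_gradient[i];
    }

    // weights -= learning_rate * output_gradient * input'
    // bias    -= learning_rate * output_gradient
    for (std::size_t i = 0; i < up_size; ++i) {
        for (std::size_t j = 0; j < down_size; ++j)
            weights[i][j] -= learning_rate * output_gradient[i] * input[j];
        up_bias[i] -= learning_rate * output_gradient[i];
    }
    return input_gradient;
}
```

As in the listing, only the weights and the up-layer bias are adapted here; the down-layer parameters are left untouched by this step.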

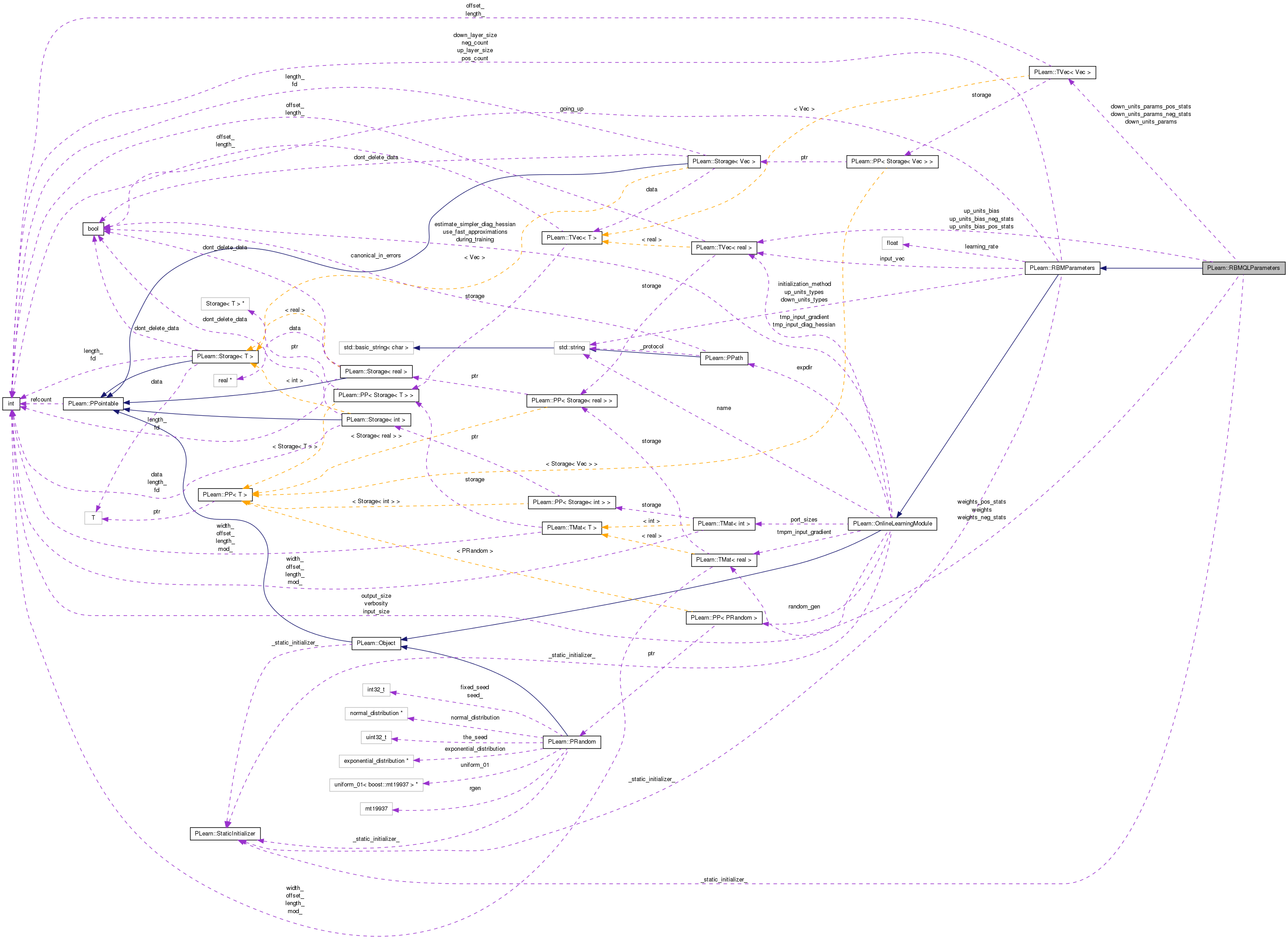

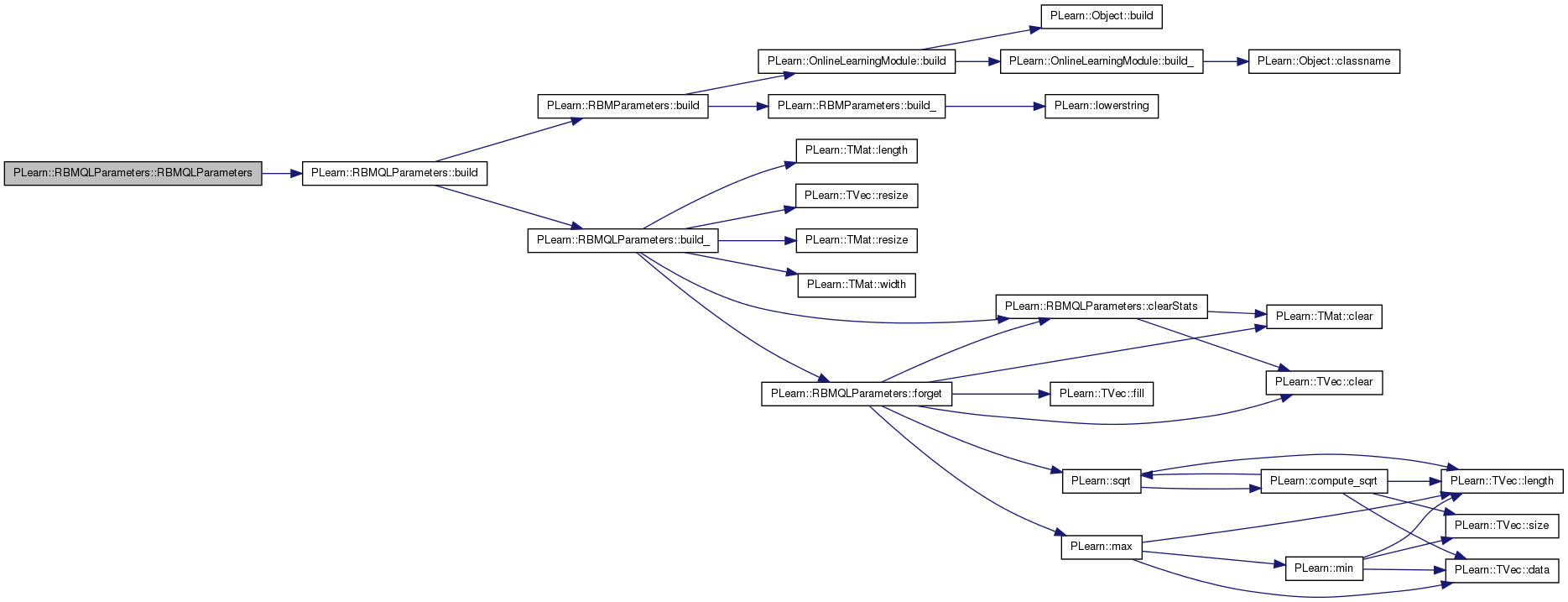

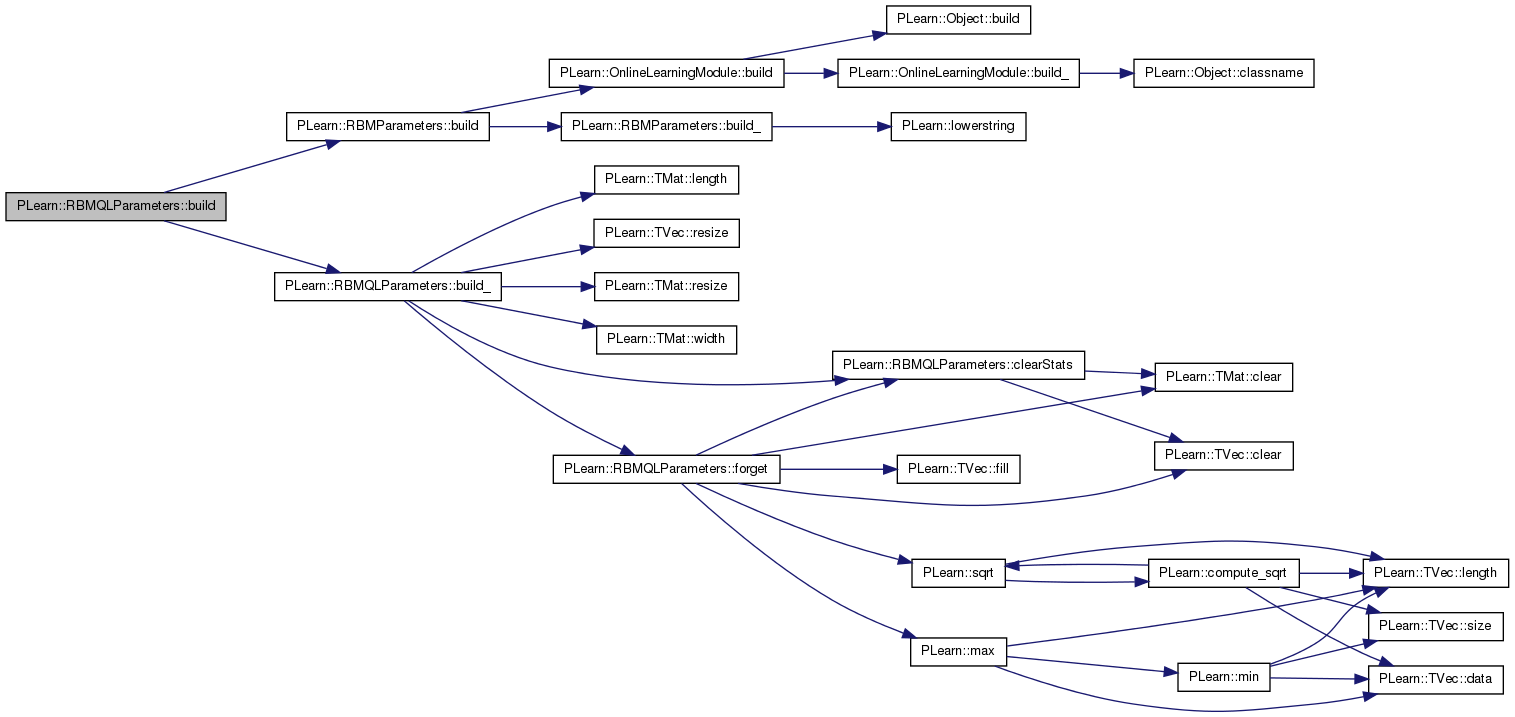

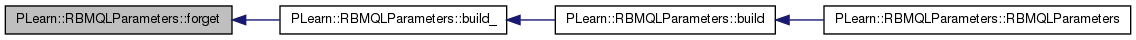

| void PLearn::RBMQLParameters::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMParameters.

Definition at line 154 of file RBMQLParameters.cc.

References PLearn::RBMParameters::build(), and build_().

Referenced by RBMQLParameters().

{

inherited::build();

build_();

}

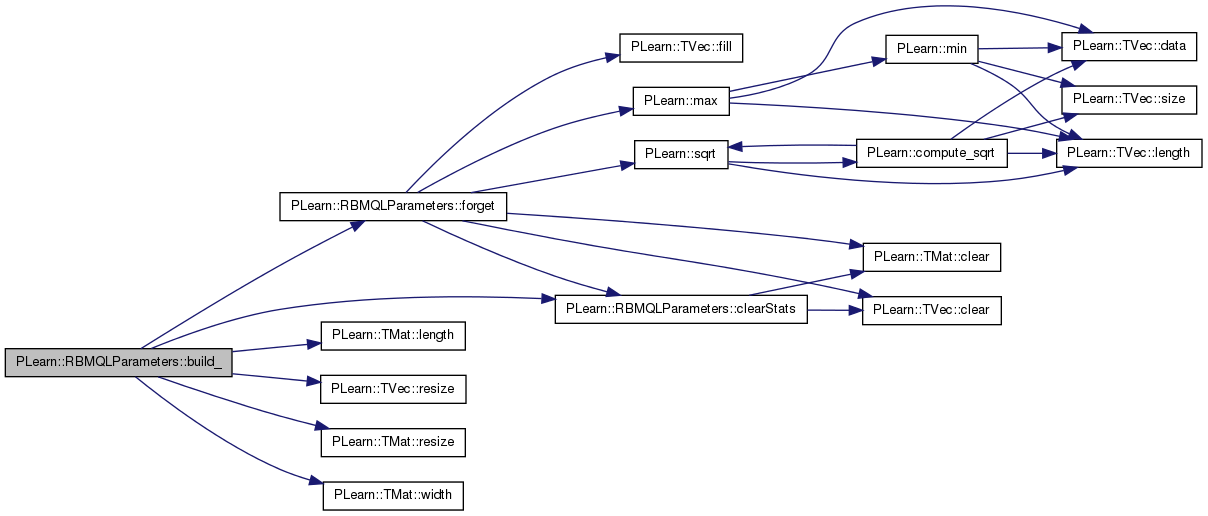

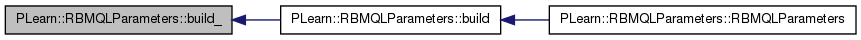

| void PLearn::RBMQLParameters::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMParameters.

Definition at line 95 of file RBMQLParameters.cc.

References clearStats(), PLearn::RBMParameters::down_layer_size, down_units_params, down_units_params_neg_stats, down_units_params_pos_stats, PLearn::RBMParameters::down_units_types, forget(), i, PLearn::TMat< T >::length(), PLearn::OnlineLearningModule::output_size, PLERROR, PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), PLearn::RBMParameters::up_layer_size, up_units_bias, up_units_bias_neg_stats, up_units_bias_pos_stats, PLearn::RBMParameters::up_units_types, weights, weights_neg_stats, weights_pos_stats, and PLearn::TMat< T >::width().

Referenced by build().

{

if( up_layer_size == 0 || down_layer_size == 0 )

return;

output_size = 0;

bool needs_forget = false; // do we need to reinitialize the parameters?

if( weights.length() != up_layer_size ||

weights.width() != down_layer_size )

{

weights.resize( up_layer_size, down_layer_size );

needs_forget = true;

}

weights_pos_stats.resize( up_layer_size, down_layer_size );

weights_neg_stats.resize( up_layer_size, down_layer_size );

down_units_params.resize( 2 );

down_units_params[0].resize( down_layer_size ) ;

down_units_params[1].resize( down_layer_size ) ;

down_units_params_pos_stats.resize( 2 );

down_units_params_pos_stats[0].resize( down_layer_size );

down_units_params_pos_stats[1].resize( down_layer_size );

down_units_params_neg_stats.resize( 2 );

down_units_params_neg_stats[0].resize( down_layer_size );

down_units_params_neg_stats[1].resize( down_layer_size );

for( int i=0 ; i<down_layer_size ; i++ )

{

char dut_i = down_units_types[i];

if( dut_i != 'q' ) // not quadratic activation unit

PLERROR( "RBMQLParameters::build_() - value '%c' for"

" down_units_types[%d]\n"

"should be 'q'.\n",

dut_i, i );

}

up_units_bias.resize( up_layer_size );

up_units_bias_pos_stats.resize( up_layer_size );

up_units_bias_neg_stats.resize( up_layer_size );

for( int i=0 ; i<up_layer_size ; i++ )

{

char uut_i = up_units_types[i];

if( uut_i != 'l' ) // not linear activation unit

PLERROR( "RBMQLParameters::build_() - value '%c' for"

" up_units_types[%d]\n"

"should be 'l'.\n",

uut_i, i );

}

if( needs_forget )

forget();

clearStats();

}
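The two validation loops in build_() perform the same scan with a different expected type character. A standalone sketch of that check, assuming the unit types are held in a `std::string`; `firstMismatchedUnit` is a hypothetical helper, not a PLearn function:

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper: returns the index of the first unit whose type
// character differs from `expected`, or -1 if every unit matches.
// build_() does the equivalent scan with 'q' for the down layer and 'l'
// for the up layer, and raises a PLERROR instead of returning an index.
int firstMismatchedUnit(const std::string& unit_types, char expected) {
    for (std::size_t i = 0; i < unit_types.size(); ++i) {
        if (unit_types[i] != expected)
            return static_cast<int>(i);
    }
    return -1;
}
```

Returning an index rather than aborting is a choice made for this sketch only, so that the check can be exercised without the PLearn error machinery.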

| string PLearn::RBMQLParameters::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMQLParameters.cc.

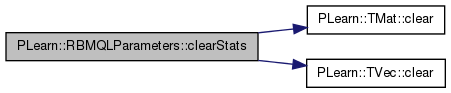

| void PLearn::RBMQLParameters::clearStats | ( | ) | [virtual] |

Clear all information accumulated during stats.

Implements PLearn::RBMParameters.

Definition at line 249 of file RBMQLParameters.cc.

References PLearn::TMat< T >::clear(), PLearn::TVec< T >::clear(), down_units_params_neg_stats, down_units_params_pos_stats, PLearn::RBMParameters::neg_count, PLearn::RBMParameters::pos_count, up_units_bias_neg_stats, up_units_bias_pos_stats, weights_neg_stats, and weights_pos_stats.

Referenced by build_(), forget(), and update().

{

weights_pos_stats.clear();

weights_neg_stats.clear();

down_units_params_pos_stats[0].clear();

down_units_params_pos_stats[1].clear();

down_units_params_neg_stats[0].clear();

down_units_params_neg_stats[1].clear();

up_units_bias_pos_stats.clear();

up_units_bias_neg_stats.clear();

pos_count = 0;

neg_count = 0;

}

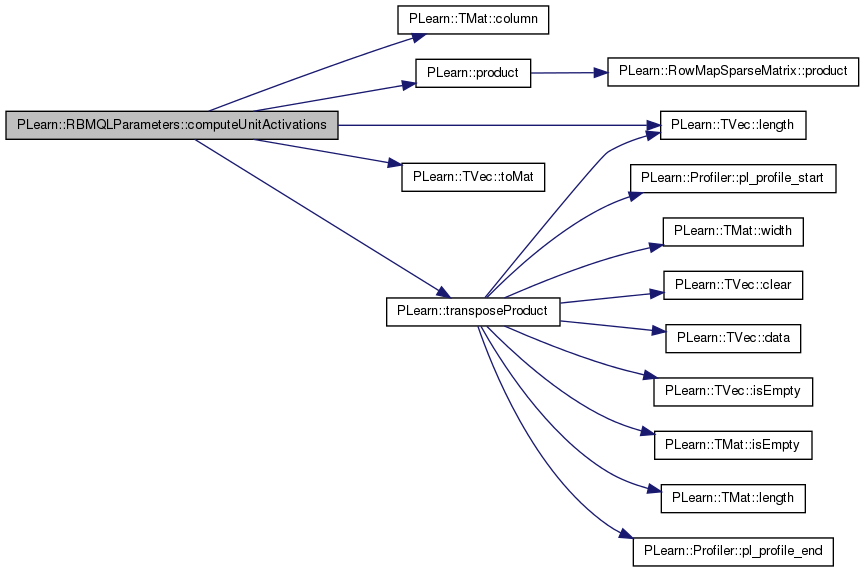

| void PLearn::RBMQLParameters::computeUnitActivations | ( | int | start, |

| int | length, | ||

| const Vec & | activations | ||

| ) | const [virtual] |

Computes the vectors of activation of "length" units, starting from "start", and concatenates them into "activations".

"start" indexes an up unit if "going_up", else a down unit.

Implements PLearn::RBMParameters.

Definition at line 268 of file RBMQLParameters.cc.

References PLearn::TMat< T >::column(), i, PLearn::TVec< T >::length(), PLASSERT, PLearn::product(), PLearn::TVec< T >::toMat(), and PLearn::transposeProduct().

{

//activations[2 * i] = mu of unit (i - start)

//activations[2 * i + 1] = sigma of unit (i - start)

if( going_up )

{

PLASSERT( activations.length() == length );

PLASSERT( start+length <= up_layer_size );

// product( weights, input_vec , activations) ;

product( activations , weights, input_vec ) ;

activations += up_units_bias ;

}

else

{

// mu = activations[i] = -(sum_j weights(j,i) input_vec[j] + b[i])

// / (2 * down_units_params[1][i]^2)

// TODO: change it to work with start and length

PLASSERT( start+length <= down_layer_size );

Mat activations_mat = activations.toMat( activations.length()/2 , 2);

Mat mu = activations_mat.column(0) ;

Mat sigma = activations_mat.column(1) ;

transposeProduct( mu , weights , input_vec.toMat(input_vec.length() , 1) );

// activations[i-start] = sum_j weights(j,i) input_vec[j] + b[i]

for(int i=0 ; i<length ; ++i) {

real a_i = down_units_params[1][i] ;

mu[i][0] = - (mu[i][0] + down_units_params[0][i]) / (2 * a_i * a_i) ;

sigma[i][0] = 1 / (2. * a_i * a_i) ;

}

}

}
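The two branches of the listing can be written out as plain loops. The sketch below is an approximation under stated assumptions: it uses `std::vector` containers, always processes the whole layer (the listing's `start` and `length` handling is still marked TODO), and returns results instead of writing into an `activations` argument. The names `upActivations`, `downActivations` and `DownActivation` are hypothetical; `sigma` is simply the name used in the listing for the second value.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: weights[up][down]

struct DownActivation {
    double mu;
    double sigma;
};

// Going up: activation[i] = sum_j weights[i][j] * down_values[j] + up_bias[i].
Vec upActivations(const Mat& weights, const Vec& down_values, const Vec& up_bias) {
    assert(weights.size() == up_bias.size());
    Vec act(up_bias);
    for (std::size_t i = 0; i < weights.size(); ++i) {
        assert(weights[i].size() == down_values.size());
        for (std::size_t j = 0; j < down_values.size(); ++j)
            act[i] += weights[i][j] * down_values[j];
    }
    return act;
}

// Going down: for each down unit i with bias b_i and quadratic term a_i,
//   mu_i    = -(sum_j weights[j][i] * up_values[j] + b_i) / (2 * a_i^2)
//   sigma_i = 1 / (2 * a_i^2)
std::vector<DownActivation> downActivations(const Mat& weights, const Vec& up_values,
                                            const Vec& down_bias, const Vec& down_quad) {
    assert(weights.size() == up_values.size());
    assert(down_bias.size() == down_quad.size());
    std::vector<DownActivation> out(down_bias.size());
    for (std::size_t i = 0; i < down_bias.size(); ++i) {
        double s = down_bias[i];
        for (std::size_t j = 0; j < up_values.size(); ++j)
            s += weights[j][i] * up_values[j];
        const double denom = 2.0 * down_quad[i] * down_quad[i];
        out[i] = DownActivation{-s / denom, 1.0 / denom};
    }
    return out;
}
```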

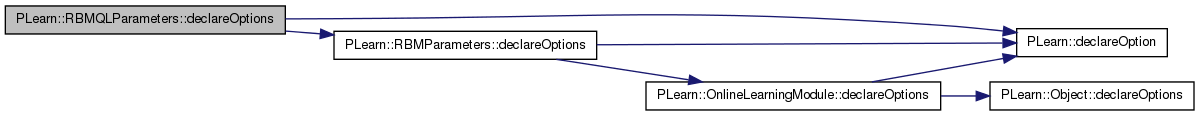

| void PLearn::RBMQLParameters::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMParameters.

Definition at line 65 of file RBMQLParameters.cc.

References PLearn::declareOption(), PLearn::RBMParameters::declareOptions(), down_units_params, PLearn::OptionBase::learntoption, up_units_bias, and weights.

{

// ### Declare all of this object's options here.

// ### For the "flags" of each option, you should typically specify

// ### one of OptionBase::buildoption, OptionBase::learntoption or

// ### OptionBase::tuningoption. If you don't provide one of these three,

// ### this option will be ignored when loading values from a script.

// ### You can also combine flags, for example with OptionBase::nosave:

// ### (OptionBase::buildoption | OptionBase::nosave)

declareOption(ol, "weights", &RBMQLParameters::weights,

OptionBase::learntoption,

"Matrix containing unit-to-unit weights (output_size ×"

" input_size)");

declareOption(ol, "up_units_bias",

&RBMQLParameters::up_units_bias,

OptionBase::learntoption,

"Element i contains the bias of up unit i");

declareOption(ol, "down_units_params",

&RBMQLParameters::down_units_params,

OptionBase::learntoption,

"Element 0,i contains the bias of down unit i. Element 1,i "

"contains the quadratic term of down unit i ");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::RBMQLParameters::declaringFile | ( | ) | [inline, static] |

| RBMQLParameters * PLearn::RBMQLParameters::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMParameters.

Definition at line 50 of file RBMQLParameters.cc.

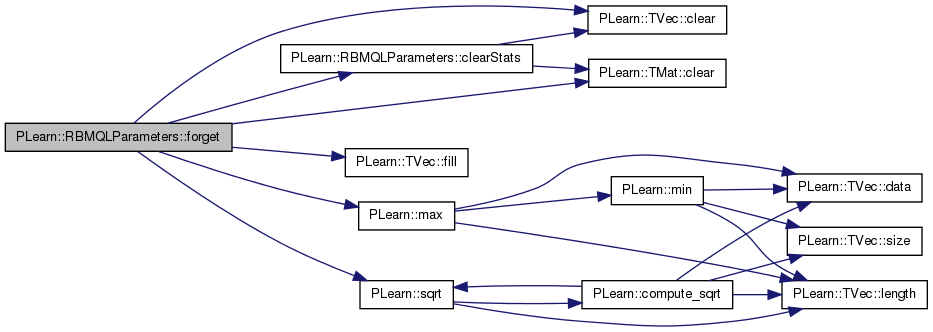

| void PLearn::RBMQLParameters::forget | ( | ) | [virtual] |

Resets the parameters to the state they would be in BEFORE starting training.

Note that this method is necessarily called from build().

Implements PLearn::OnlineLearningModule.

Definition at line 327 of file RBMQLParameters.cc.

References PLearn::TVec< T >::clear(), PLearn::TMat< T >::clear(), clearStats(), d, PLearn::RBMParameters::down_layer_size, down_units_params, PLearn::TVec< T >::fill(), PLearn::RBMParameters::initialization_method, PLearn::max(), PLearn::OnlineLearningModule::random_gen, PLearn::sqrt(), PLearn::RBMParameters::up_layer_size, up_units_bias, and weights.

Referenced by build_().

{

if( initialization_method == "zero" )

weights.clear();

else

{

if( !random_gen )

random_gen = new PRandom();

real d = 1. / max( down_layer_size, up_layer_size );

if( initialization_method == "uniform_sqrt" )

d = sqrt( d );

random_gen->fill_random_uniform( weights, -d, d );

}

down_units_params[0].clear();

down_units_params[1].fill(1.);

up_units_bias.clear();

clearStats();

}
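The weight initialization above reduces to choosing a half-width d and sampling uniformly around zero. A standalone sketch, assuming `std::mt19937` and `std::uniform_real_distribution` in place of PLearn's `PRandom` (so the sampled interval is half-open, `[-d, d)`); `initRange` and `initWeights` are hypothetical names:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <string>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: weights[up][down]

// Half-width d of the sampling interval; 0 for the "zero" method.
// Any method other than "zero" and "uniform_sqrt" gives d = 1 / max(sizes),
// which matches the else-branch of the listing.
double initRange(std::size_t down_size, std::size_t up_size,
                 const std::string& method) {
    if (method == "zero") return 0.0;
    const std::size_t largest = std::max(down_size, up_size);
    assert(largest > 0);
    double d = 1.0 / static_cast<double>(largest);
    if (method == "uniform_sqrt") d = std::sqrt(d);
    return d;
}

// Fills the weight matrix according to the chosen method.
void initWeights(Mat& weights, const std::string& method, std::mt19937& rng) {
    if (weights.empty() || weights[0].empty()) return;
    const double d = initRange(weights[0].size(), weights.size(), method);
    if (d == 0.0) {
        for (Vec& row : weights) std::fill(row.begin(), row.end(), 0.0);
        return;
    }
    std::uniform_real_distribution<double> dist(-d, d);
    for (Vec& row : weights)
        for (double& w : row) w = dist(rng);
}
```

The remaining steps of forget() are deterministic: the down-layer biases and the up-layer biases are cleared, and the quadratic terms are set to 1.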

| OptionList & PLearn::RBMQLParameters::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMQLParameters.cc.

| OptionMap & PLearn::RBMQLParameters::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMQLParameters.cc.

| RemoteMethodMap & PLearn::RBMQLParameters::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 50 of file RBMQLParameters.cc.

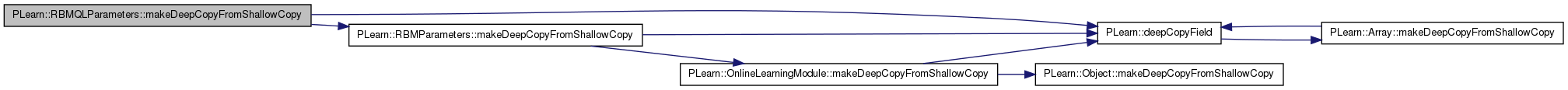

| void PLearn::RBMQLParameters::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::RBMParameters.

Definition at line 161 of file RBMQLParameters.cc.

References PLearn::deepCopyField(), down_units_params, down_units_params_neg_stats, down_units_params_pos_stats, PLearn::RBMParameters::makeDeepCopyFromShallowCopy(), up_units_bias, up_units_bias_neg_stats, up_units_bias_pos_stats, weights, weights_neg_stats, and weights_pos_stats.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(weights, copies);

deepCopyField(up_units_bias, copies);

deepCopyField(down_units_params, copies);

deepCopyField(weights_pos_stats, copies);

deepCopyField(weights_neg_stats, copies);

deepCopyField(up_units_bias_pos_stats, copies);

deepCopyField(up_units_bias_neg_stats, copies);

deepCopyField(down_units_params_pos_stats, copies);

deepCopyField(down_units_params_neg_stats, copies);

}

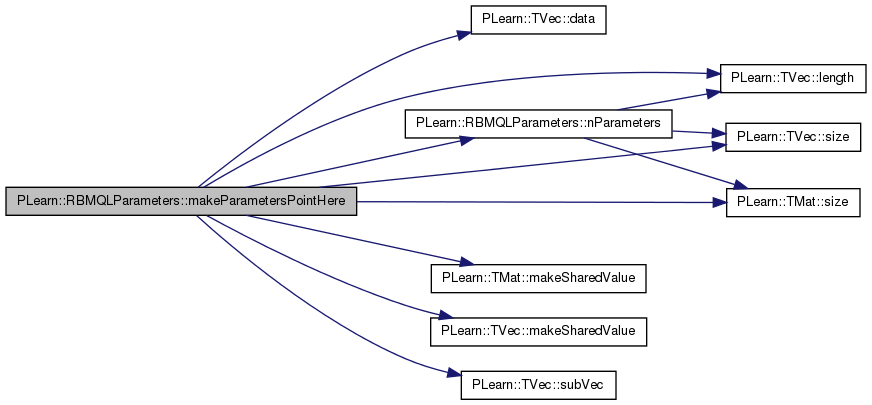

| Vec PLearn::RBMQLParameters::makeParametersPointHere | ( | const Vec & | global_parameters, |

| bool | share_up_params, | ||

| bool | share_down_params | ||

| ) | [virtual] |

Make the parameters data be sub-vectors of the given global_parameters.

The argument should have size >= nParameters. The result is a Vec that starts just after this object's parameters end, i.e. result = global_parameters.subVec(nParameters(), global_parameters.size()-nParameters()); This makes it easy to chain calls of this method on multiple RBMParameters.

Implements PLearn::RBMParameters.

Definition at line 377 of file RBMQLParameters.cc.

References PLearn::TVec< T >::data(), down_units_params, i, PLearn::TVec< T >::length(), m, PLearn::TMat< T >::makeSharedValue(), PLearn::TVec< T >::makeSharedValue(), n, nParameters(), PLERROR, PLearn::TVec< T >::size(), PLearn::TMat< T >::size(), PLearn::TVec< T >::subVec(), up_units_bias, and weights.

{

int n = nParameters(share_up_params,share_down_params);

int m = global_parameters.size();

if (m<n)

PLERROR("RBMQLParameters::makeParametersPointHere: argument has length %d, should be at least nParameters()=%d",m,n);

real* p = global_parameters.data();

weights.makeSharedValue(p,weights.size());

p+=weights.size();

if (share_up_params)

{

up_units_bias.makeSharedValue(p,up_units_bias.size());

p+=up_units_bias.size();

}

if (share_down_params)

for (int i=0;i<down_units_params.length();i++)

{

down_units_params[i].makeSharedValue(p,down_units_params[i].size());

p+=down_units_params[i].size();

}

return global_parameters.subVec(n,m-n);

}
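The chaining described above amounts to carving consecutive regions out of one flat buffer. The sketch below illustrates the layout with raw pointers, assuming a plain `double` array stands in for the global `Vec`; `ParameterViews`, `countParameters` and `layoutParameters` are hypothetical names, and unlike `makeSharedValue` the sketch only records where each region starts.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical view into a flat parameter buffer; null pointers mark
// parameter groups that are not shared.
struct ParameterViews {
    double* weights = nullptr;      // up_size * down_size values
    double* up_bias = nullptr;      // up_size values, if shared
    double* down_params = nullptr;  // 2 * down_size values, if shared
    std::size_t used = 0;           // number of values consumed
};

// Same counting rule as nParameters(): the weights always count, the other
// groups only when the corresponding flag is set.
std::size_t countParameters(std::size_t up_size, std::size_t down_size,
                            bool share_up, bool share_down) {
    std::size_t n = up_size * down_size;
    if (share_up) n += up_size;
    if (share_down) n += 2 * down_size;  // bias row plus quadratic row
    return n;
}

// Assigns consecutive regions of `global` in the order used by the listing:
// weights, then up bias, then down parameters.
ParameterViews layoutParameters(double* global, std::size_t global_size,
                                std::size_t up_size, std::size_t down_size,
                                bool share_up, bool share_down) {
    const std::size_t n = countParameters(up_size, down_size, share_up, share_down);
    assert(global_size >= n);
    ParameterViews v;
    double* p = global;
    v.weights = p;
    p += up_size * down_size;
    if (share_up) { v.up_bias = p; p += up_size; }
    if (share_down) { v.down_params = p; p += 2 * down_size; }
    v.used = n;
    return v;
}
```

To chain a second parameter object in this sketch, the next call would start at `global + v.used` with `global_size - v.used` values remaining, which corresponds to the sub-vector returned by the method.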

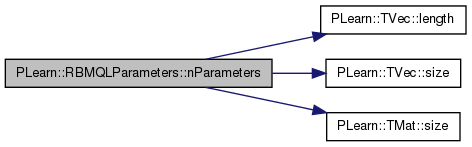

| int PLearn::RBMQLParameters::nParameters | ( | bool | share_up_params, |

| bool | share_down_params | ||

| ) | const [virtual] |

Returns the number of parameters.

The weights are always counted; up_units_bias is counted only if share_up_params is true, and down_units_params only if share_down_params is true.

Implements PLearn::RBMParameters.

Definition at line 363 of file RBMQLParameters.cc.

References down_units_params, i, PLearn::TVec< T >::length(), m, PLearn::TVec< T >::size(), PLearn::TMat< T >::size(), up_units_bias, and weights.

Referenced by makeParametersPointHere().

{

int m = weights.size() + (share_up_params?up_units_bias.size():0);

if (share_down_params)

for (int i=0;i<down_units_params.length();i++)

m += down_units_params[i].size();

return m;

}

| void PLearn::RBMQLParameters::update | ( | ) | [virtual] |

Updates parameters according to contrastive divergence gradient.

Implements PLearn::RBMParameters.

Definition at line 212 of file RBMQLParameters.cc.

References clearStats(), PLearn::RBMParameters::down_layer_size, down_units_params, down_units_params_neg_stats, down_units_params_pos_stats, i, PLearn::RBMParameters::learning_rate, PLearn::RBMParameters::neg_count, PLearn::RBMParameters::pos_count, PLearn::RBMParameters::up_layer_size, up_units_bias, up_units_bias_neg_stats, up_units_bias_pos_stats, weights, weights_neg_stats, and weights_pos_stats.

Referenced by PLearn::GaussianDBNRegression::greedyStep(), and PLearn::GaussianDBNClassification::greedyStep().

{

// updates parameters

//weights -= learning_rate * (weights_pos_stats/pos_count

// - weights_neg_stats/neg_count)

weights_pos_stats /= pos_count;

weights_neg_stats /= neg_count;

weights_pos_stats -= weights_neg_stats;

weights_pos_stats *= learning_rate;

weights -= weights_pos_stats;

for( int i=0 ; i<up_layer_size ; i++ )

{

up_units_bias[i] -=

learning_rate * (up_units_bias_pos_stats[i]/pos_count

- up_units_bias_neg_stats[i]/neg_count);

}

for( int i=0 ; i<down_layer_size ; i++ )

{

// update the bias of the down units

down_units_params[0][i] -=

learning_rate * (down_units_params_pos_stats[0][i]/pos_count

- down_units_params_neg_stats[0][i]/neg_count);

// update the quadratic term of the down units

down_units_params[1][i] -=

learning_rate * (down_units_params_pos_stats[1][i]/pos_count

- down_units_params_neg_stats[1][i]/neg_count);

// cout << "bias[i] " << down_units_params[0][i] << endl ;

// cout << "a[i] " << down_units_params[1][i] << endl ;

}

clearStats();

}
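Every parameter group in the listing is updated by the same rule: subtract the learning rate times the difference between the averaged positive and negative statistics. A standalone sketch of that rule for one flat group of parameters, assuming `std::vector` storage; `cdUpdate` is a hypothetical name, and the assertions on the counts are an addition of this sketch (the listing divides without checking).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// params[i] -= learning_rate * (pos_stats[i]/pos_count - neg_stats[i]/neg_count)
void cdUpdate(Vec& params, const Vec& pos_stats, int pos_count,
              const Vec& neg_stats, int neg_count, double learning_rate) {
    assert(pos_count > 0 && neg_count > 0);  // added guard, not in the listing
    assert(pos_stats.size() == params.size());
    assert(neg_stats.size() == params.size());
    for (std::size_t i = 0; i < params.size(); ++i) {
        const double pos_mean = pos_stats[i] / pos_count;
        const double neg_mean = neg_stats[i] / neg_count;
        params[i] -= learning_rate * (pos_mean - neg_mean);
    }
}
```

In the listing the same rule is applied in turn to the weights, the up-layer bias, and both rows of the down-layer parameters, after which the statistics are cleared.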

StaticInitializer PLearn::RBMQLParameters::_static_initializer_ [static] |

Reimplemented from PLearn::RBMParameters.

Definition at line 167 of file RBMQLParameters.h.

TVec< Vec > PLearn::RBMQLParameters::down_units_params |

Element (0,i) contains the bias of down unit i; element (1,i) contains the quadratic term of down unit i.

Definition at line 73 of file RBMQLParameters.h.

Referenced by accumulateNegStats(), accumulatePosStats(), build_(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), makeParametersPointHere(), nParameters(), and update().

TVec< Vec > PLearn::RBMQLParameters::down_units_params_neg_stats |

Accumulates negative contribution to the gradient of down_units_params.

Definition at line 90 of file RBMQLParameters.h.

Referenced by accumulateNegStats(), build_(), clearStats(), makeDeepCopyFromShallowCopy(), and update().

TVec< Vec > PLearn::RBMQLParameters::down_units_params_pos_stats |

Accumulates positive contribution to the gradient of down_units_params.

Definition at line 88 of file RBMQLParameters.h.

Referenced by accumulatePosStats(), build_(), clearStats(), makeDeepCopyFromShallowCopy(), and update().

Vec PLearn::RBMQLParameters::up_units_bias |

Element i contains the bias of linear up unit i.

Definition at line 69 of file RBMQLParameters.h.

Referenced by bpropUpdate(), build_(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), makeParametersPointHere(), nParameters(), and update().

Vec PLearn::RBMQLParameters::up_units_bias_neg_stats |

Accumulates negative contribution to the gradient of up_units_bias.

Definition at line 86 of file RBMQLParameters.h.

Referenced by accumulateNegStats(), build_(), clearStats(), makeDeepCopyFromShallowCopy(), and update().

Vec PLearn::RBMQLParameters::up_units_bias_pos_stats |

Accumulates positive contribution to the gradient of up_units_bias.

Definition at line 84 of file RBMQLParameters.h.

Referenced by accumulatePosStats(), build_(), clearStats(), makeDeepCopyFromShallowCopy(), and update().

Mat PLearn::RBMQLParameters::weights |

Matrix containing unit-to-unit weights (output_size × input_size)

Definition at line 66 of file RBMQLParameters.h.

Referenced by bpropUpdate(), build_(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), makeParametersPointHere(), nParameters(), and update().

Mat PLearn::RBMQLParameters::weights_neg_stats |

Accumulates negative contribution to the weights' gradient.

Definition at line 81 of file RBMQLParameters.h.

Referenced by accumulateNegStats(), build_(), clearStats(), makeDeepCopyFromShallowCopy(), and update().

Mat PLearn::RBMQLParameters::weights_pos_stats |

Accumulates positive contribution to the weights' gradient.

Definition at line 78 of file RBMQLParameters.h.

Referenced by accumulatePosStats(), build_(), clearStats(), makeDeepCopyFromShallowCopy(), and update().

1.7.4
