PLearn 0.1

#include <RegressionTreeNode.h>

Public Member Functions | |

| RegressionTreeNode () | |

| RegressionTreeNode (int missing_is_valid) | |

| virtual | ~RegressionTreeNode () |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RegressionTreeNode * | deepCopy (CopiesMap &copies) const |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be. | |

| virtual void | build () |

| Post-constructor. | |

| void | finalize () |

| void | initNode (PP< RegressionTree > tree, PP< RegressionTreeLeave > leave) |

| void | lookForBestSplit () |

| void | compareSplit (int col, real left_leave_last_feature, real right_leave_first_feature, Vec left_error, Vec right_error, Vec missing_error) |

| int | expandNode () |

| int | getSplitBalance () const |

| real | getErrorImprovment () const |

| int | getSplitCol () const |

| real | getSplitValue () const |

| TVec< PP< RegressionTreeNode > > | getNodes () |

| void | computeOutputAndNodes (const Vec &inputv, Vec &outputv, TVec< PP< RegressionTreeNode > > *nodes=0) |

| void | computeOutput (const Vec &inputv, Vec &outputv) |

| bool | haveChildrenNode () |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

| static void | declareOptions (OptionList &ol) |

| Declare options (data fields) for the class. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Private Types | |

| typedef Object | inherited |

Private Member Functions | |

| void | build_ () |

| Object-specific post-constructor. | |

| void | verbose (string msg, int level) |

Static Private Member Functions | |

| static tuple< real, real, int > | bestSplitInRow (int col, TVec< RTR_type > &candidates, Vec left_error, Vec right_error, const Vec missing_error, PP< RegressionTreeLeave > right_leave, PP< RegressionTreeLeave > left_leave, PP< RegressionTreeRegisters > train_set, Vec values, TVec< pair< RTR_target_t, RTR_weight_t > > t_w) |

Private Attributes | |

| int | missing_is_valid |

| PP< RegressionTree > | tree |

| PP< RegressionTreeLeave > | leave |

| Vec | leave_output |

| Vec | leave_error |

| int | split_col |

| int | split_balance |

| real | split_feature_value |

| real | after_split_error |

| PP< RegressionTreeNode > | missing_node |

| PP< RegressionTreeLeave > | missing_leave |

| PP< RegressionTreeNode > | left_node |

| PP< RegressionTreeLeave > | left_leave |

| PP< RegressionTreeNode > | right_node |

| PP< RegressionTreeLeave > | right_leave |

Static Private Attributes | |

| static int | dummy_int = 0 |

| static Vec | tmp_vec |

| static PP< RegressionTreeLeave > | dummy_leave_template |

| static PP < RegressionTreeRegisters > | dummy_train_set |

Friends | |

| class | RegressionTree |

Definition at line 58 of file RegressionTreeNode.h.

typedef Object PLearn::RegressionTreeNode::inherited [private] |

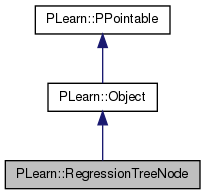

Reimplemented from PLearn::Object.

Definition at line 61 of file RegressionTreeNode.h.

| PLearn::RegressionTreeNode::RegressionTreeNode | ( | ) |

Definition at line 64 of file RegressionTreeNode.cc.

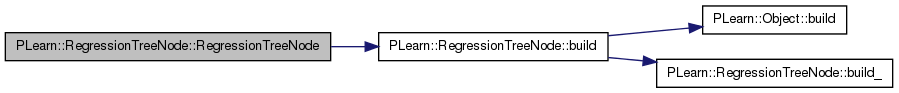

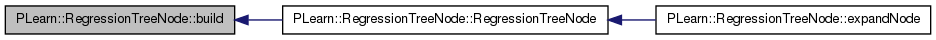

References build().

Referenced by expandNode().

: missing_is_valid(0),
  split_col(-1),
  split_balance(INT_MAX),
  split_feature_value(REAL_MAX),
  after_split_error(REAL_MAX)
{
    build();
}

| PLearn::RegressionTreeNode::RegressionTreeNode | ( | int | missing_is_valid | ) |

Definition at line 73 of file RegressionTreeNode.cc.

References build().

: missing_is_valid(missing_is_valid_),
  split_col(-1),
  split_balance(INT_MAX),
  split_feature_value(REAL_MAX),
  after_split_error(REAL_MAX)
{
    build();
}

| PLearn::RegressionTreeNode::~RegressionTreeNode | ( | ) | [virtual] |

Definition at line 83 of file RegressionTreeNode.cc.

{
}

| string PLearn::RegressionTreeNode::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

| OptionList & PLearn::RegressionTreeNode::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

| RemoteMethodMap & PLearn::RegressionTreeNode::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

| bool PLearn::RegressionTreeNode::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

| Object * PLearn::RegressionTreeNode::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

| void PLearn::RegressionTreeNode::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

| static tuple<real,real,int> PLearn::RegressionTreeNode::bestSplitInRow | ( | int | col, |

| TVec< RTR_type > & | candidates, | ||

| Vec | left_error, | ||

| Vec | right_error, | ||

| const Vec | missing_error, | ||

| PP< RegressionTreeLeave > | right_leave, | ||

| PP< RegressionTreeLeave > | left_leave, | ||

| PP< RegressionTreeRegisters > | train_set, | ||

| Vec | values, | ||

| TVec< pair< RTR_target_t, RTR_weight_t > > | t_w | ||

| ) | [static, private] |

| void PLearn::RegressionTreeNode::build | ( | ) | [virtual] |

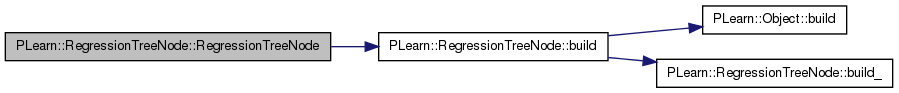

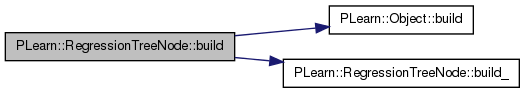

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::Object.

Definition at line 210 of file RegressionTreeNode.cc.

References PLearn::Object::build(), and build_().

Referenced by RegressionTreeNode().

{
    inherited::build();
    build_();
}

| void PLearn::RegressionTreeNode::build_ | ( | ) | [private] |

Object-specific post-constructor.

This method should be redefined in subclasses and do the actual building of the object according to previously set option fields. Constructors can just set option fields, and then call build_. This method is NOT virtual, and will typically be called only from three places: a constructor, the public virtual build() method, and possibly the public virtual read method (which calls its parent's read). build_() can assume that its parent's build_() has already been called.

Reimplemented from PLearn::Object.

Definition at line 216 of file RegressionTreeNode.cc.

Referenced by build().

{
}
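The build()/build_() convention described above can be illustrated outside PLearn with a minimal sketch. It assumes a hypothetical `Base` class standing in for PLearn::Object and a hypothetical `Derived` class; the `trace` vector exists only to make the call order visible. (RegressionTreeNode's own constructors call build() directly, and its build_() is empty.)

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for PLearn::Object.
class Base {
public:
    std::vector<std::string> trace;   // records which build_() ran, in order
    Base() { build_(); }              // a constructor runs only its own build_()
    virtual ~Base() = default;
    virtual void build() { build_(); }  // public, re-callable post-constructor
private:
    void build_() { trace.push_back("Base::build_"); }  // non-virtual
};

class Derived : public Base {
    typedef Base inherited;
public:
    Derived() { build_(); }           // Base::build_() has already run in Base()
    void build() override {
        inherited::build();           // parent's building first...
        build_();                     // ...then this class's own building
    }
private:
    void build_() { trace.push_back("Derived::build_"); }
};
```

Because build_() is non-virtual and private, each constructor runs only its own class's build_(), while the public build() re-runs the whole chain from the base class down, which is what makes it safe to call again after changing options.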

| string PLearn::RegressionTreeNode::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

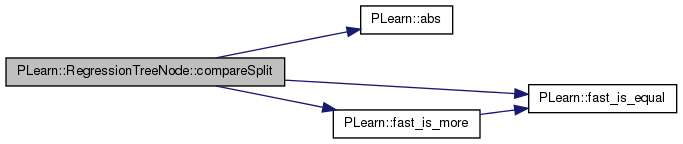

| void PLearn::RegressionTreeNode::compareSplit | ( | int | col, |

| real | left_leave_last_feature, | ||

| real | right_leave_first_feature, | ||

| Vec | left_error, | ||

| Vec | right_error, | ||

| Vec | missing_error | ||

| ) | [inline] |

Definition at line 511 of file RegressionTreeNode.cc.

References PLearn::abs(), after_split_error, PLearn::fast_is_equal(), PLearn::fast_is_more(), left_leave, PLASSERT, right_leave, split_balance, split_col, and split_feature_value.

{
    PLASSERT(left_leave_last_feature <= right_leave_first_feature);
    if (left_leave_last_feature >= right_leave_first_feature) return;
    real work_error = missing_error[0] + missing_error[1]
                    + left_error[0] + left_error[1]
                    + right_error[0] + right_error[1];
    int work_balance = abs(left_leave->length() - right_leave->length());
    if (fast_is_more(work_error, after_split_error)) return;
    else if (fast_is_equal(work_error, after_split_error) &&
             fast_is_more(work_balance, split_balance)) return;
    split_col = col;
    split_feature_value = 0.5 * (right_leave_first_feature + left_leave_last_feature);
    after_split_error = work_error;
    split_balance = work_balance;
}
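The selection rule in compareSplit() can be restated as a standalone function: a candidate split replaces the current best only if its total error (the first two components of the missing, left and right error vectors) is lower, or equal with a left/right size imbalance that is not worse; the threshold is the midpoint between the last left feature value and the first right one. This is a sketch under simplifying assumptions: plain `double` errors, and a simple relative tolerance in place of PLearn's fast_is_equal()/fast_is_more(). `BestSplit`, `approxEqual`, `approxMore` and `considerSplit` are hypothetical names.

```cpp
#include <cassert>
#include <cfloat>
#include <climits>
#include <cmath>
#include <cstdlib>

// Hypothetical mirror of the split_* members, with the same initial values.
struct BestSplit {
    int col = -1;            // split_col: -1 means "no split found yet"
    int balance = INT_MAX;   // split_balance
    double value = DBL_MAX;  // split_feature_value
    double error = DBL_MAX;  // after_split_error
};

// Simple stand-ins for fast_is_equal() / fast_is_more().
inline bool approxEqual(double a, double b, double eps = 1e-8) {
    return std::fabs(a - b) <= eps * std::fmax(1.0, std::fmax(std::fabs(a), std::fabs(b)));
}
inline bool approxMore(double a, double b) { return a > b && !approxEqual(a, b); }

void considerSplit(BestSplit& best, int col,
                   double left_last, double right_first,
                   const double left_err[2], const double right_err[2],
                   const double missing_err[2],
                   int left_len, int right_len) {
    assert(left_last <= right_first);
    if (left_last >= right_first) return;   // equal values cannot be separated
    double err = missing_err[0] + missing_err[1] + left_err[0] + left_err[1]
               + right_err[0] + right_err[1];
    int bal = std::abs(left_len - right_len);
    if (approxMore(err, best.error)) return;                        // worse error
    if (approxEqual(err, best.error) && bal > best.balance) return;  // tie, less balanced
    best.col = col;
    best.value = 0.5 * (left_last + right_first);                   // midpoint threshold
    best.error = err;
    best.balance = bal;
}
```

For example, a first candidate on column 3 with left/right errors 1.0 and 2.0 and sizes 4 and 6 is accepted with error 3.0, threshold midway between the two boundary values, and balance 2; a later candidate with the same error but sizes 1 and 9 is rejected.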

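| void PLearn::RegressionTreeNode::computeOutput | ( | const Vec & | inputv, |

| Vec & | outputv | ||

| ) | [inline] |

Definition at line 129 of file RegressionTreeNode.h.

{ computeOutputAndNodes(inputv, outputv); }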

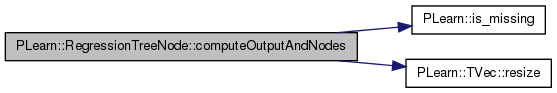

| void PLearn::RegressionTreeNode::computeOutputAndNodes | ( | const Vec & | inputv, |

| Vec & | outputv, | ||

| TVec< PP< RegressionTreeNode > > * | nodes = 0 | ||

| ) |

Definition at line 587 of file RegressionTreeNode.cc.

References PLearn::is_missing(), leave_output, left_node, missing_is_valid, missing_leave, missing_node, PLearn::TVec< T >::resize(), right_node, RTR_HAVE_MISSING, split_col, split_feature_value, and tmp_vec.

{
    if (nodes)
        nodes->append(this);
    if (!left_node)
    {
        outputv << leave_output;
        return;
    }
    if (RTR_HAVE_MISSING && is_missing(inputv[split_col]))
    {
        if (missing_is_valid > 0)
        {
            missing_node->computeOutputAndNodes(inputv, outputv, nodes);
        }
        else
        {
            tmp_vec.resize(3);
            missing_leave->getOutputAndError(outputv, tmp_vec);
        }
        return;
    }
    if (inputv[split_col] > split_feature_value)
    {
        right_node->computeOutputAndNodes(inputv, outputv, nodes);
        return;
    }
    else
    {
        left_node->computeOutputAndNodes(inputv, outputv, nodes);
        return;
    }
}
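The routing performed by computeOutputAndNodes() can be sketched with a minimal tree type. Assumptions: NaN encodes a missing value, each node has a single-value output, and when missing values are not treated as valid the node returns a stored `missing_output` instead of querying a RegressionTreeLeave. `Node`, `missing_output` and `predict` are hypothetical names, not PLearn API.

```cpp
#include <cassert>
#include <cmath>
#include <memory>
#include <vector>

struct Node {
    double leave_output = 0.0;     // prediction when this node has no children
    int split_col = -1;
    double split_value = 0.0;
    bool missing_is_valid = false;
    double missing_output = 0.0;   // stand-in for missing_leave's output
    std::unique_ptr<Node> left, right, missing;

    // Returns the prediction; if `path` is non-null, appends every visited node.
    double predict(const std::vector<double>& x,
                   std::vector<const Node*>* path = nullptr) const {
        if (path) path->push_back(this);
        if (!left) return leave_output;          // no split: this node is a leave
        double v = x[split_col];
        if (std::isnan(v)) {                     // missing value in the split column
            if (missing_is_valid) return missing->predict(x, path);
            return missing_output;
        }
        if (v > split_value) return right->predict(x, path);
        return left->predict(x, path);           // values equal to the threshold go left
    }
};
```

As in the original, the list of visited nodes is optional, so the same traversal serves both computeOutput() and computeOutputAndNodes().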

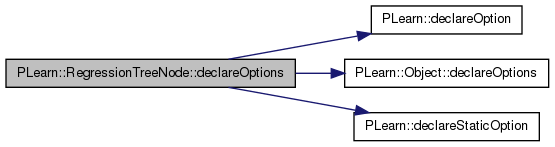

| void PLearn::RegressionTreeNode::declareOptions | ( | OptionList & | ol | ) | [static] |

Declare options (data fields) for the class.

Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(ol). Please call the inherited method AT THE END to get the options listed in a consistent order (from most recently defined to least recently defined).

void MyDerivedClass::declareOptions(OptionList& ol)
{
    declareOption(ol, "inputsize", &MyDerivedClass::inputsize_,
                  OptionBase::buildoption,
                  "The size of the input; it must be provided");
    declareOption(ol, "weights", &MyDerivedClass::weights,
                  OptionBase::learntoption,
                  "The learned model weights");
    inherited::declareOptions(ol);
}

| ol | List of options that is progressively being constructed for the current class. |

Reimplemented from PLearn::Object.

Definition at line 101 of file RegressionTreeNode.cc.

References after_split_error, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::Object::declareOptions(), PLearn::declareStaticOption(), dummy_int, dummy_leave_template, dummy_train_set, PLearn::OptionBase::learntoption, leave, leave_error, leave_output, left_leave, left_node, missing_is_valid, missing_leave, missing_node, PLearn::OptionBase::nosave, right_leave, right_node, split_balance, split_col, split_feature_value, and tmp_vec.

{
    declareOption(ol, "missing_is_valid", &RegressionTreeNode::missing_is_valid, OptionBase::buildoption,
                  "If set to 1, missing values will be treated as valid, and missing nodes will be potential candidates for splits.\n");
    declareOption(ol, "leave", &RegressionTreeNode::leave, OptionBase::buildoption,
                  "The leave of all the belonging rows when this node is a leave\n");
    declareOption(ol, "leave_output", &RegressionTreeNode::leave_output, OptionBase::learntoption,
                  "The leave output vector\n");
    declareOption(ol, "leave_error", &RegressionTreeNode::leave_error, OptionBase::learntoption,
                  "The leave error vector\n");
    declareOption(ol, "split_col", &RegressionTreeNode::split_col, OptionBase::learntoption,
                  "The dimension of the best split of leave\n");
    declareOption(ol, "split_balance", &RegressionTreeNode::split_balance, OptionBase::learntoption,
                  "The balance between the left and the right leave\n");
    declareOption(ol, "split_feature_value", &RegressionTreeNode::split_feature_value, OptionBase::learntoption,
                  "The feature value of the split\n");
    declareOption(ol, "after_split_error", &RegressionTreeNode::after_split_error, OptionBase::learntoption,
                  "The error after split\n");
    declareOption(ol, "missing_node", &RegressionTreeNode::missing_node, OptionBase::learntoption,
                  "The node for the missing values when missing_is_valid is set to 1\n");
    declareOption(ol, "missing_leave", &RegressionTreeNode::missing_leave, OptionBase::learntoption,
                  "The leave containing rows with missing values after split\n");
    declareOption(ol, "left_node", &RegressionTreeNode::left_node, OptionBase::learntoption,
                  "The node on the left of the split decision\n");
    declareOption(ol, "left_leave", &RegressionTreeNode::left_leave, OptionBase::learntoption,
                  "The leave with the rows lower than the split feature value after split\n");
    declareOption(ol, "right_node", &RegressionTreeNode::right_node, OptionBase::learntoption,
                  "The node on the right of the split decision\n");
    declareOption(ol, "right_leave", &RegressionTreeNode::right_leave, OptionBase::learntoption,
                  "The leave with the rows greater than the split feature value after split\n");

    // Deprecated options, bound to static dummies so that old saved learners can still be reloaded.
    declareStaticOption(ol, "left_error", &RegressionTreeNode::tmp_vec,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The left leave error vector\n");
    declareStaticOption(ol, "right_error", &RegressionTreeNode::tmp_vec,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The right leave error vector\n");
    declareStaticOption(ol, "missing_error", &RegressionTreeNode::tmp_vec,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The missing leave error vector\n");
    declareStaticOption(ol, "left_output", &RegressionTreeNode::tmp_vec,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The left leave output vector\n");
    declareStaticOption(ol, "right_output", &RegressionTreeNode::tmp_vec,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The right leave output vector\n");
    declareStaticOption(ol, "missing_output", &RegressionTreeNode::tmp_vec,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The missing leave output vector\n");
    declareStaticOption(ol, "right_leave_id", &RegressionTreeNode::dummy_int,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The id of the right leave\n");
    declareStaticOption(ol, "left_leave_id", &RegressionTreeNode::dummy_int,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The id of the left leave\n");
    declareStaticOption(ol, "missing_leave_id", &RegressionTreeNode::dummy_int,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The id of the missing leave\n");
    declareStaticOption(ol, "leave_id", &RegressionTreeNode::dummy_int,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The id of the leave\n");
    declareStaticOption(ol, "length", &RegressionTreeNode::dummy_int,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The length of the train set\n");
    declareStaticOption(ol, "inputsize", &RegressionTreeNode::dummy_int,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED The inputsize of the train set\n");
    declareStaticOption(ol, "loss_function_weight",
                        &RegressionTreeNode::dummy_int,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED Only to reload old saved learner\n");
    declareStaticOption(ol, "verbosity",
                        &RegressionTreeNode::dummy_int,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED Only to reload old saved learner\n");
    declareStaticOption(ol, "leave_template",
                        &RegressionTreeNode::dummy_leave_template,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED Only to reload old saved learner\n");
    declareStaticOption(ol, "train_set",
                        &RegressionTreeNode::dummy_train_set,
                        OptionBase::learntoption | OptionBase::nosave,
                        "DEPRECATED Only to reload old saved learner\n");
    inherited::declareOptions(ol);
}
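declareOptions() binds each option name to a data member, and binds deprecated names to shared static dummies (tmp_vec, dummy_int, ...) so that fields found in old saved files are absorbed rather than rejected. The sketch below illustrates that idea with a much simplified option table: values are parsed from strings with std::istringstream and there are no flags. `OptionTable`, `addMemberOption`, `addStaticOption`, `setOption` and `TreeNodeOptions` are hypothetical names and do not reproduce the PLearn signatures.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <sstream>
#include <string>

// Hypothetical option table for one class T.
template <class T>
class OptionTable {
public:
    // Bind `name` to a data member of T (the role of declareOption).
    template <class V>
    void addMemberOption(const std::string& name, V T::*member) {
        setters_[name] = [member](T& obj, const std::string& text) {
            std::istringstream in(text);
            in >> (obj.*member);
        };
    }
    // Bind `name` to a variable shared by all objects (the role of declareStaticOption).
    template <class V>
    void addStaticOption(const std::string& name, V* target) {
        setters_[name] = [target](T&, const std::string& text) {
            std::istringstream in(text);
            in >> *target;
        };
    }
    // Returns false when the option name is unknown.
    bool setOption(T& obj, const std::string& name, const std::string& text) const {
        auto it = setters_.find(name);
        if (it == setters_.end()) return false;
        it->second(obj, text);
        return true;
    }
private:
    std::map<std::string, std::function<void(T&, const std::string&)>> setters_;
};

// Hypothetical subset of RegressionTreeNode's fields.
struct TreeNodeOptions {
    int missing_is_valid = 0;
    int split_col = -1;
    static int dummy_int;   // sink for deprecated integer options
};
int TreeNodeOptions::dummy_int = 0;
```

With this design a deprecated field such as `leave_id` can be read without error, but it only overwrites the shared dummy and never changes the object being loaded.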

| static const PPath& PLearn::RegressionTreeNode::declaringFile | ( | ) | [inline, static] |

| RegressionTreeNode * PLearn::RegressionTreeNode::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

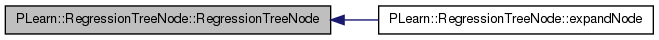

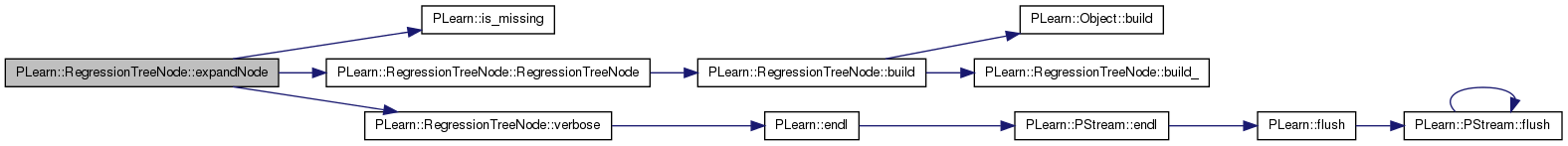

| int PLearn::RegressionTreeNode::expandNode | ( | ) |

Definition at line 528 of file RegressionTreeNode.cc.

References PLearn::is_missing(), leave, left_leave, left_node, missing_is_valid, missing_leave, missing_node, PLASSERT, RegressionTreeNode(), right_leave, right_node, RTR_HAVE_MISSING, split_col, split_feature_value, tree, and verbose().

{
    if (split_col < 0)
    {
        verbose("RegressionTreeNode: there is no more split candidate", 3);
        return -1;
    }
    missing_leave->initStats();
    left_leave->initStats();
    right_leave->initStats();
    TVec<RTR_type> registered_row(leave->length());
    PP<RegressionTreeRegisters> train_set = tree->getSortedTrainingSet();
    train_set->getAllRegisteredRow(leave->getId(), split_col, registered_row);
    for (int row_index = 0; row_index < registered_row.size(); row_index++)
    {
        int row = registered_row[row_index];
        if (RTR_HAVE_MISSING && is_missing(train_set->get(row, split_col)))
        {
            missing_leave->addRow(row);
            missing_leave->registerRow(row);
        }
        else
        {
            if (train_set->get(row, split_col) < split_feature_value)
            {
                left_leave->addRow(row);
                left_leave->registerRow(row);
            }
            else
            {
                right_leave->addRow(row);
                right_leave->registerRow(row);
            }
        }
    }
    PLASSERT(left_leave->length() > 0);
    PLASSERT(right_leave->length() > 0);
    PLASSERT(left_leave->length() + right_leave->length() +
             missing_leave->length() == registered_row.size());
    // leave->printStats();
    // left_leave->printStats();
    // right_leave->printStats();
    if (RTR_HAVE_MISSING && missing_is_valid > 0)
    {
        missing_node = new RegressionTreeNode(missing_is_valid);
        missing_node->initNode(tree, missing_leave);
        missing_node->lookForBestSplit();
    }
    left_node = new RegressionTreeNode(missing_is_valid);
    left_node->initNode(tree, left_leave);
    left_node->lookForBestSplit();
    right_node = new RegressionTreeNode(missing_is_valid);
    right_node->initNode(tree, right_leave);
    right_node->lookForBestSplit();
    return split_col;
}
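The partition step of expandNode() sends each registered row to the missing, left or right leave according to its value in split_col. A minimal sketch, assuming rows are given as parallel vectors of row indices and split-column values, with NaN marking a missing value; `Partition` and `partitionRows` are hypothetical names. Note that this step uses `<` (a value equal to the threshold goes right), whereas computeOutputAndNodes() tests `>` (an equal value goes left); since the threshold is a midpoint between two distinct training values, training rows should not normally fall exactly on it.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Row indices assigned to each child leave.
struct Partition {
    std::vector<int> missing, left, right;
};

// values[i] is the split-column value of row rows[i].
Partition partitionRows(const std::vector<int>& rows,
                        const std::vector<double>& values,
                        double split_value) {
    assert(rows.size() == values.size());
    Partition p;
    for (std::size_t i = 0; i < rows.size(); ++i) {
        if (std::isnan(values[i]))        p.missing.push_back(rows[i]);
        else if (values[i] < split_value) p.left.push_back(rows[i]);
        else                              p.right.push_back(rows[i]);
    }
    return p;
}
```

Every row lands in exactly one child, which is the invariant expandNode() checks with its final PLASSERT on the three lengths.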

| void PLearn::RegressionTreeNode::finalize | ( | ) |

Definition at line 87 of file RegressionTreeNode.cc.

References leave, left_leave, left_node, missing_node, right_leave, and right_node.

{
    // These variables are not needed after training.
    right_leave = 0;
    left_leave = 0;
    leave = 0;
    // missing_leave is kept: it is used in computeOutputAndNodes().
    if (right_node)
        right_node->finalize();
    if (left_node)
        left_node->finalize();
    if (missing_node)
        missing_node->finalize();
}
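finalize() releases the training-only state of every node in the subtree while keeping what prediction still needs, including missing_leave. A minimal sketch using std::shared_ptr in place of PLearn's PP<> smart pointer; `Leave` and `TreeNode` are hypothetical simplified types.

```cpp
#include <cassert>
#include <memory>

struct Leave { /* training statistics would live here */ };

struct TreeNode {
    std::shared_ptr<Leave> leave, left_leave, right_leave, missing_leave;
    std::shared_ptr<TreeNode> left_node, right_node, missing_node;

    void finalize() {
        // Training-only data: drop this node's references.
        right_leave.reset();
        left_leave.reset();
        leave.reset();
        // missing_leave is kept because prediction may still read it.
        if (right_node) right_node->finalize();
        if (left_node) left_node->finalize();
        if (missing_node) missing_node->finalize();
    }
};
```

Resetting a reference-counted pointer frees the leave only when no other owner remains, so the memory savings depend on the tree holding the last reference.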

| real PLearn::RegressionTreeNode::getErrorImprovment | ( | ) | const [inline] |

Definition at line 118 of file RegressionTreeNode.h.

References PLearn::is_equal(), and PLASSERT.

{
    if (split_col < 0) return -1.0;
    real err = leave_error[0] + leave_error[1] - after_split_error;
    PLASSERT(is_equal(err, 0) || err > 0);
    return err;
}
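getErrorImprovment() is the reduction in error obtained by splitting: the sum of the first two components of leave_error minus after_split_error, or -1 when no split column was found. A free-function restatement, assuming plain doubles; `errorImprovement` is a hypothetical name, and the small negative tolerance stands in for the is_equal() check in the original assertion.

```cpp
#include <cassert>

// leave_error0 and leave_error1 are the first two components of leave_error.
double errorImprovement(int split_col, double leave_error0, double leave_error1,
                        double after_split_error) {
    if (split_col < 0) return -1.0;   // no split was found for this node
    double err = leave_error0 + leave_error1 - after_split_error;
    assert(err > -1e-9);              // a split should never increase the error
    return err;
}
```

For instance, a node whose leave_error starts with 5.0 and 1.0 and whose best split leaves an error of 2.0 has an improvement of 5.0 + 1.0 - 2.0 = 4.0.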

| TVec< PP<RegressionTreeNode> > PLearn::RegressionTreeNode::getNodes | ( | ) |

| OptionList & PLearn::RegressionTreeNode::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

| OptionMap & PLearn::RegressionTreeNode::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

| RemoteMethodMap & PLearn::RegressionTreeNode::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file RegressionTreeNode.cc.

| int PLearn::RegressionTreeNode::getSplitBalance | ( | ) | const [inline] |

Definition at line 115 of file RegressionTreeNode.h.

{
    if (split_col < 0) return tree->getSortedTrainingSet()->length();
    return split_balance;
}

| int PLearn::RegressionTreeNode::getSplitCol | ( | ) | const [inline] |

Definition at line 124 of file RegressionTreeNode.h.

{return split_col;}

| real PLearn::RegressionTreeNode::getSplitValue | ( | ) | const [inline] |

Definition at line 125 of file RegressionTreeNode.h.

{return split_feature_value;}

| bool PLearn::RegressionTreeNode::haveChildrenNode | ( | ) | [inline] |

Definition at line 131 of file RegressionTreeNode.h.

{return left_node;}

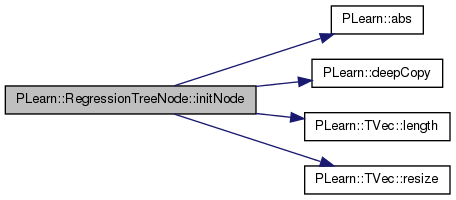

| void PLearn::RegressionTreeNode::initNode | ( | PP< RegressionTree > | tree, |

| PP< RegressionTreeLeave > | leave | ||

| ) |

Definition at line 220 of file RegressionTreeNode.cc.

References PLearn::abs(), PLearn::deepCopy(), leave, leave_error, leave_output, left_leave, PLearn::TVec< T >::length(), missing_is_valid, missing_leave, PLearn::TVec< T >::resize(), right_leave, and tree.

{
    tree = the_tree;
    leave = the_leave;
    PP<RegressionTreeRegisters> the_train_set = tree->getSortedTrainingSet();
    PP<RegressionTreeLeave> leave_template = tree->leave_template;
    int missing_leave_id = the_train_set->getNextId();
    int left_leave_id = the_train_set->getNextId();
    int right_leave_id = the_train_set->getNextId();
    missing_leave = ::PLearn::deepCopy(leave_template);
    missing_leave->initLeave(the_train_set, missing_leave_id, missing_is_valid);
    left_leave = ::PLearn::deepCopy(leave_template);
    left_leave->initLeave(the_train_set, left_leave_id);
    right_leave = ::PLearn::deepCopy(leave_template);
    right_leave->initLeave(the_train_set, right_leave_id);
    leave_output.resize(leave_template->outputsize());
    leave_error.resize(3);
    leave->getOutputAndError(leave_output, leave_error);
    // We do it here as an optimization.
    // This doesn't change leave_error.
    // If you want leave_error to include this rounding,
    // use RegressionTreeMulticlassLeave.
    Vec multiclass_outputs = tree->multiclass_outputs;
    if (multiclass_outputs.length() <= 0) return;
    real closest_value = multiclass_outputs[0];
    real margin_to_closest_value = abs(leave_output[0] - multiclass_outputs[0]);
    for (int value_ind = 1; value_ind < multiclass_outputs.length(); value_ind++)
    {
        real v = abs(leave_output[0] - multiclass_outputs[value_ind]);
        if (v < margin_to_closest_value)
        {
            closest_value = multiclass_outputs[value_ind];
            margin_to_closest_value = v;
        }
    }
    leave_output[0] = closest_value;
}
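The final loop of initNode() rounds the first output component to the closest allowed value in multiclass_outputs. The same nearest-value search in isolation, assuming a std::vector<double> in place of PLearn's Vec; `snapToClosest` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Returns the element of `classes` closest to `output` (the first one wins ties),
// or `output` unchanged when `classes` is empty, as initNode() returns early.
double snapToClosest(double output, const std::vector<double>& classes) {
    if (classes.empty()) return output;
    double closest = classes[0];
    double margin = std::fabs(output - classes[0]);
    for (std::size_t i = 1; i < classes.size(); ++i) {
        double d = std::fabs(output - classes[i]);
        if (d < margin) {       // strict comparison keeps the earlier value on ties
            closest = classes[i];
            margin = d;
        }
    }
    return closest;
}
```

With allowed values {0, 1, 2}, an output of 0.7 is snapped to 1, and an output of 1.5, equidistant from 1 and 2, also becomes 1 because the earlier candidate is kept.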

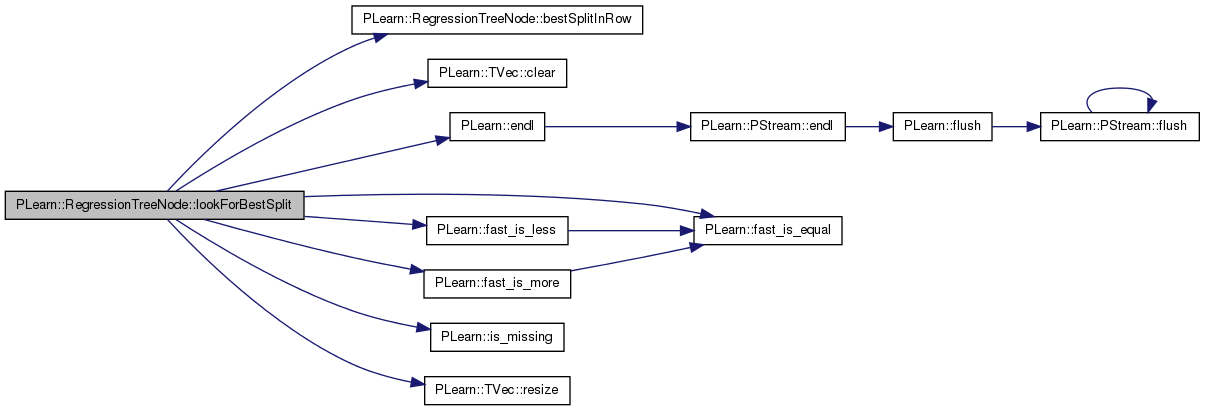

| void PLearn::RegressionTreeNode::lookForBestSplit | ( | ) |

Definition at line 266 of file RegressionTreeNode.cc.

References after_split_error, bestSplitInRow(), PLearn::TVec< T >::clear(), PLearn::endl(), PLearn::fast_is_equal(), PLearn::fast_is_less(), PLearn::fast_is_more(), PLearn::is_missing(), leave, left_leave, missing_leave, PLASSERT, PLCHECK, PLearn::TVec< T >::resize(), right_leave, RTR_HAVE_MISSING, split_balance, split_col, split_feature_value, tmp_vec, and tree.

{
    if (leave->length() <= 1)
        return;
    TVec<RTR_type> candidate(0, leave->length()); // list of candidate rows to split
    TVec<RTR_type> registered_row(leave->length());
    TVec<pair<RTR_target_t,RTR_weight_t> > registered_target_weight(leave->length());
    registered_target_weight.resize(leave->length());
    registered_target_weight.resize(0);
    Vec registered_value(0, leave->length());
    tmp_vec.resize(leave->outputsize());
    Vec left_error(3);
    Vec right_error(3);
    Vec missing_error(3);
    missing_error.clear();
    PP<RegressionTreeRegisters> train_set = tree->getSortedTrainingSet();
    bool one_pass_on_data = !train_set->haveMissing();
    int inputsize = train_set->inputsize();
#ifdef RCMP
    Vec row_split_err(inputsize);
    Vec row_split_value(inputsize);
    Vec row_split_balance(inputsize);
    row_split_err.clear();
    row_split_value.clear();
    row_split_balance.clear();
#endif
    int leave_id = leave->getId();
    int l_length = 0;
    real l_weights_sum = 0;
    real l_targets_sum = 0;
    real l_weighted_targets_sum = 0;
    real l_weighted_squared_targets_sum = 0;
    for (int col = 0; col < inputsize; col++)
    {
        missing_leave->initStats();
        left_leave->initStats();
        right_leave->initStats();
        PLASSERT(registered_row.size() == leave->length());
        PLASSERT(candidate.size() == 0);
        tuple<real,real,int> ret;
#ifdef NPREFETCH
        // The ifdef is in case we don't want to use the optimized version with
        // memory prefetching. The optimization may be harmful on some computers.
        train_set->getAllRegisteredRow(leave_id, col, registered_row,
                                       registered_target_weight,
                                       registered_value);
        PLASSERT(registered_row.size() == leave->length());
        PLASSERT(candidate.size() == 0);
        // We do this optimization in case there are many rows with the same
        // value at the end, as with a binary variable.
        int row_idx_end = registered_row.size() - 1;
        int prev_row = registered_row[row_idx_end];
        real prev_val = registered_value[row_idx_end];
        for ( ; row_idx_end > 0; row_idx_end--)
        {
            int row = prev_row;
            real val = prev_val;
            prev_row = registered_row[row_idx_end - 1];
            prev_val = registered_value[row_idx_end - 1];
            if (RTR_HAVE_MISSING && is_missing(val))
                missing_leave->addRow(row, registered_target_weight[row_idx_end].first,
                                      registered_target_weight[row_idx_end].second);
            else if (val == prev_val)
                right_leave->addRow(row, registered_target_weight[row_idx_end].first,
                                    registered_target_weight[row_idx_end].second);
            else
                break;
        }
        for (int row_idx = 0; row_idx <= row_idx_end; row_idx++)
        {
            int row = registered_row[row_idx];
            if (RTR_HAVE_MISSING && is_missing(registered_value[row_idx]))
                missing_leave->addRow(row, registered_target_weight[row_idx].first,
                                      registered_target_weight[row_idx].second);
            else {
                left_leave->addRow(row, registered_target_weight[row_idx].first,
                                   registered_target_weight[row_idx].second);
                candidate.append(row);
            }
        }
        missing_leave->getOutputAndError(tmp_vec, missing_error);
        ret = bestSplitInRow(col, candidate, left_error,
                             right_error, missing_error,
                             right_leave, left_leave,
                             train_set, registered_value,
                             registered_target_weight);
#else
        if (!one_pass_on_data) {
            train_set->getAllRegisteredRowLeave(leave_id, col, registered_row,
                                                registered_target_weight,
                                                registered_value,
                                                missing_leave,
                                                left_leave,
                                                right_leave, candidate);
            PLASSERT(registered_target_weight.size() == candidate.size());
            PLASSERT(registered_value.size() == candidate.size());
            PLASSERT(left_leave->length() + right_leave->length()
                     + missing_leave->length() == leave->length());
            PLASSERT(candidate.size() > 0 || (left_leave->length() + right_leave->length() == 0));
            missing_leave->getOutputAndError(tmp_vec, missing_error);
            ret = bestSplitInRow(col, candidate, left_error,
                                 right_error, missing_error,
                                 right_leave, left_leave,
                                 train_set, registered_value,
                                 registered_target_weight);
        } else {
            ret = train_set->bestSplitInRow(leave_id, col, registered_row,
                                            left_leave,
                                            right_leave, left_error,
                                            right_error);
        }
        PLASSERT(registered_row.size() == leave->length());
#endif
        if (col == 0) {
            l_length = left_leave->length() + right_leave->length() + missing_leave->length();
            l_weights_sum = left_leave->weights_sum + right_leave->weights_sum + missing_leave->weights_sum;
            l_targets_sum = left_leave->targets_sum + right_leave->targets_sum + missing_leave->targets_sum;
            l_weighted_targets_sum = left_leave->weighted_targets_sum
                + right_leave->weighted_targets_sum + missing_leave->weighted_targets_sum;
            l_weighted_squared_targets_sum = left_leave->weighted_squared_targets_sum
                + right_leave->weighted_squared_targets_sum + missing_leave->weighted_squared_targets_sum;
        } else if (!one_pass_on_data) {
            PLCHECK(l_length == left_leave->length() + right_leave->length()
                    + missing_leave->length());
            PLCHECK(fast_is_equal(l_weights_sum,
                                  left_leave->weights_sum + right_leave->weights_sum
                                  + missing_leave->weights_sum));
            PLCHECK(fast_is_equal(l_targets_sum,
                                  left_leave->targets_sum + right_leave->targets_sum
                                  + missing_leave->targets_sum));
            PLCHECK(fast_is_equal(l_weighted_targets_sum,
                                  left_leave->weighted_targets_sum
                                  + right_leave->weighted_targets_sum
                                  + missing_leave->weighted_targets_sum));
            PLCHECK(fast_is_equal(l_weighted_squared_targets_sum,
                                  left_leave->weighted_squared_targets_sum
                                  + right_leave->weighted_squared_targets_sum
                                  + missing_leave->weighted_squared_targets_sum));
        }
#ifdef RCMP
        row_split_err[col] = get<0>(ret);
        row_split_value[col] = get<1>(ret);
        row_split_balance[col] = get<2>(ret);
#endif
        if (fast_is_more(get<0>(ret), after_split_error)) continue;
        else if (fast_is_equal(get<0>(ret), after_split_error) &&
                 fast_is_more(get<2>(ret), split_balance)) continue;
        else if (fast_is_equal(get<0>(ret), REAL_MAX)) continue;
        split_col = col;
        after_split_error = get<0>(ret);
        split_feature_value = get<1>(ret);
        split_balance = get<2>(ret);
        PLASSERT(fast_is_less(after_split_error, REAL_MAX) || split_col == -1);
    }
    PLASSERT(fast_is_less(after_split_error, REAL_MAX) || split_col == -1);
    EXTREME_MODULE_LOG << "error after split: " << after_split_error << endl;
    EXTREME_MODULE_LOG << "split value: " << split_feature_value << endl;
    EXTREME_MODULE_LOG << "split_col: " << split_col;
    if (split_col >= 0)
        EXTREME_MODULE_LOG << " " << train_set->fieldName(split_col);
    EXTREME_MODULE_LOG << endl;
}
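For each column, lookForBestSplit() scans the rows of the leave in sorted order and asks bestSplitInRow() for the best threshold. The per-column scan can be sketched with running weighted sums, which is consistent with the statistics the leaves keep (weights_sum, weighted_targets_sum, weighted_squared_targets_sum), but the error criterion here (weighted sum of squared deviations from the weighted mean) is an assumption, not necessarily PLearn's exact error vector. The sketch also ignores missing values and uses exact floating-point comparisons; `Stats`, `Row` and `bestSplitInColumn` are hypothetical names.

```cpp
#include <cassert>
#include <cfloat>
#include <climits>
#include <cstddef>
#include <cstdlib>
#include <tuple>
#include <vector>

// Running weighted sums for one leave.
struct Stats {
    double w = 0, wy = 0, wyy = 0;
    int n = 0;
    void add(double y, double wt)    { w += wt; wy += wt * y; wyy += wt * y * y; ++n; }
    void remove(double y, double wt) { w -= wt; wy -= wt * y; wyy -= wt * y * y; --n; }
    // Weighted sum of squared deviations from the weighted mean.
    double sse() const { return w > 0 ? wyy - wy * wy / w : 0.0; }
};

struct Row { double value, target, weight; };

// `rows` must be sorted by value and contain no missing values.
// Returns (error, threshold, balance); error stays DBL_MAX when no split exists.
std::tuple<double, double, int> bestSplitInColumn(const std::vector<Row>& rows) {
    Stats left, right;
    for (const Row& r : rows) right.add(r.target, r.weight);
    double best_err = DBL_MAX, best_val = DBL_MAX;
    int best_bal = INT_MAX;
    for (std::size_t i = 0; i + 1 < rows.size(); ++i) {
        left.add(rows[i].target, rows[i].weight);      // move row i to the left leave
        right.remove(rows[i].target, rows[i].weight);
        if (rows[i].value == rows[i + 1].value) continue;  // equal values stay together
        double err = left.sse() + right.sse();
        int bal = std::abs(left.n - right.n);
        // Lower error wins; on equal error, keep the split unless it is less balanced.
        if (err < best_err || (err == best_err && bal <= best_bal)) {
            best_err = err;
            best_val = 0.5 * (rows[i].value + rows[i + 1].value);
            best_bal = bal;
        }
    }
    return std::make_tuple(best_err, best_val, best_bal);
}
```

Moving one row at a time from the right statistics to the left keeps each column's scan linear in the number of rows once the rows are sorted, which is why the training set is kept pre-sorted per column (getSortedTrainingSet()).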

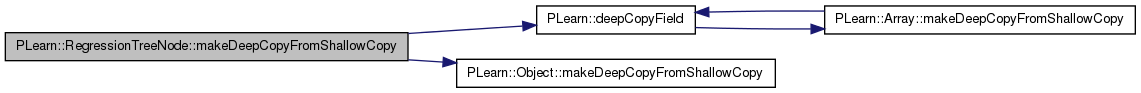

| void PLearn::RegressionTreeNode::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be.

This needs to be overridden by every class that adds "complex" data members to the class, such as Vec, Mat, PP<Something>, etc. Typical implementation:

void CLASS_OF_THIS::makeDeepCopyFromShallowCopy(CopiesMap& copies)
{
    inherited::makeDeepCopyFromShallowCopy(copies);
    deepCopyField(complex_data_member1, copies);
    deepCopyField(complex_data_member2, copies);
    ...
}

| copies | A map used by the deep-copy mechanism to keep track of already-copied objects. |

Reimplemented from PLearn::Object.

Definition at line 193 of file RegressionTreeNode.cc.

References PLearn::deepCopyField(), leave, leave_error, leave_output, left_leave, left_node, PLearn::Object::makeDeepCopyFromShallowCopy(), missing_leave, missing_node, right_leave, and right_node.

{
    inherited::makeDeepCopyFromShallowCopy(copies);
    // not done, as the template doesn't change
    deepCopyField(leave, copies);
    deepCopyField(leave_output, copies);
    deepCopyField(leave_error, copies);
    deepCopyField(missing_node, copies);
    deepCopyField(missing_leave, copies);
    deepCopyField(left_node, copies);
    deepCopyField(left_leave, copies);
    deepCopyField(right_node, copies);
    deepCopyField(right_leave, copies);
}
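The role of the copies map can be illustrated with std::shared_ptr standing in for PP<>: each original object is copied at most once per deep-copy operation, so two fields that pointed to the same leave still point to one shared copy afterwards. `CopiesMap`, `deepCopyField`, `Leave` and `Node` below are simplified hypothetical stand-ins that borrow PLearn's names; they are not the PLearn implementations.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Hypothetical CopiesMap: address of an original object -> its copy.
using CopiesMap = std::map<const void*, std::shared_ptr<void>>;

// Replace `field` by a deep copy of its target, reusing an existing copy
// if that object was already copied during this deep-copy operation.
template <class T>
void deepCopyField(std::shared_ptr<T>& field, CopiesMap& copies) {
    if (!field) return;
    auto it = copies.find(field.get());
    if (it != copies.end()) {
        field = std::static_pointer_cast<T>(it->second);
        return;
    }
    const void* key = field.get();
    std::shared_ptr<T> copy = std::make_shared<T>(*field);  // shallow copy first
    copies[key] = copy;                          // register before recursing (handles cycles)
    copy->makeDeepCopyFromShallowCopy(copies);   // then deepen the copy's own members
    field = copy;
}

struct Leave {
    int id = 0;
    void makeDeepCopyFromShallowCopy(CopiesMap&) {}   // no complex members
};

struct Node {
    std::shared_ptr<Leave> leave, left_leave;
    std::shared_ptr<Node> left_node;
    void makeDeepCopyFromShallowCopy(CopiesMap& copies) {
        deepCopyField(leave, copies);
        deepCopyField(left_leave, copies);
        deepCopyField(left_node, copies);
    }
};
```

Registering the copy in the map before deepening it is what keeps shared sub-objects shared and prevents infinite recursion on cyclic references.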

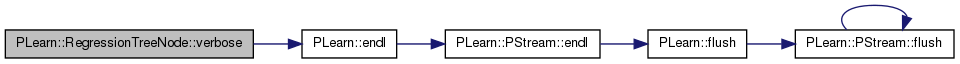

| void PLearn::RegressionTreeNode::verbose | ( | string | msg, |

| int | level | ||

| ) | [private] |

Definition at line 622 of file RegressionTreeNode.cc.

References PLearn::endl(), and tree.

Referenced by expandNode().

friend class RegressionTree [friend] |

Definition at line 60 of file RegressionTreeNode.h.

StaticInitializer PLearn::RegressionTreeNode::_static_initializer_ [static] |

Reimplemented from PLearn::Object.

Definition at line 101 of file RegressionTreeNode.h.

real PLearn::RegressionTreeNode::after_split_error [private] |

Definition at line 83 of file RegressionTreeNode.h.

Referenced by compareSplit(), declareOptions(), and lookForBestSplit().

int PLearn::RegressionTreeNode::dummy_int = 0 [static, private] |

Definition at line 92 of file RegressionTreeNode.h.

Referenced by declareOptions().

PP< RegressionTreeLeave > PLearn::RegressionTreeNode::dummy_leave_template [static, private] |

Definition at line 94 of file RegressionTreeNode.h.

Referenced by declareOptions().

PP< RegressionTreeRegisters > PLearn::RegressionTreeNode::dummy_train_set [static, private] |

Definition at line 95 of file RegressionTreeNode.h.

Referenced by declareOptions().

PP< RegressionTreeLeave > PLearn::RegressionTreeNode::leave [private] |

Definition at line 72 of file RegressionTreeNode.h.

Referenced by declareOptions(), expandNode(), finalize(), initNode(), lookForBestSplit(), and makeDeepCopyFromShallowCopy().

Vec PLearn::RegressionTreeNode::leave_error [private] |

Definition at line 79 of file RegressionTreeNode.h.

Referenced by declareOptions(), initNode(), and makeDeepCopyFromShallowCopy().

Vec PLearn::RegressionTreeNode::leave_output [private] |

Definition at line 78 of file RegressionTreeNode.h.

Referenced by computeOutputAndNodes(), declareOptions(), initNode(), and makeDeepCopyFromShallowCopy().

PP< RegressionTreeLeave > PLearn::RegressionTreeNode::left_leave [private] |

Definition at line 87 of file RegressionTreeNode.h.

Referenced by compareSplit(), declareOptions(), expandNode(), finalize(), initNode(), lookForBestSplit(), and makeDeepCopyFromShallowCopy().

PP< RegressionTreeNode > PLearn::RegressionTreeNode::left_node [private] |

Definition at line 86 of file RegressionTreeNode.h.

Referenced by computeOutputAndNodes(), declareOptions(), expandNode(), finalize(), and makeDeepCopyFromShallowCopy().

int PLearn::RegressionTreeNode::missing_is_valid [private] |

Definition at line 69 of file RegressionTreeNode.h.

Referenced by computeOutputAndNodes(), declareOptions(), expandNode(), and initNode().

PP< RegressionTreeLeave > PLearn::RegressionTreeNode::missing_leave [private] |

Definition at line 85 of file RegressionTreeNode.h.

Referenced by computeOutputAndNodes(), declareOptions(), expandNode(), initNode(), lookForBestSplit(), and makeDeepCopyFromShallowCopy().

PP< RegressionTreeNode > PLearn::RegressionTreeNode::missing_node [private] |

Definition at line 84 of file RegressionTreeNode.h.

Referenced by computeOutputAndNodes(), declareOptions(), expandNode(), finalize(), and makeDeepCopyFromShallowCopy().

PP< RegressionTreeLeave > PLearn::RegressionTreeNode::right_leave [private] |

Definition at line 89 of file RegressionTreeNode.h.

Referenced by compareSplit(), declareOptions(), expandNode(), finalize(), initNode(), lookForBestSplit(), and makeDeepCopyFromShallowCopy().

PP< RegressionTreeNode > PLearn::RegressionTreeNode::right_node [private] |

Definition at line 88 of file RegressionTreeNode.h.

Referenced by computeOutputAndNodes(), declareOptions(), expandNode(), finalize(), and makeDeepCopyFromShallowCopy().

int PLearn::RegressionTreeNode::split_balance [private] |

Definition at line 81 of file RegressionTreeNode.h.

Referenced by compareSplit(), declareOptions(), and lookForBestSplit().

int PLearn::RegressionTreeNode::split_col [private] |

Definition at line 80 of file RegressionTreeNode.h.

Referenced by compareSplit(), computeOutputAndNodes(), declareOptions(), expandNode(), and lookForBestSplit().

real PLearn::RegressionTreeNode::split_feature_value [private] |

Definition at line 82 of file RegressionTreeNode.h.

Referenced by compareSplit(), computeOutputAndNodes(), declareOptions(), expandNode(), and lookForBestSplit().

Vec PLearn::RegressionTreeNode::tmp_vec [static, private] |

Definition at line 93 of file RegressionTreeNode.h.

Referenced by computeOutputAndNodes(), declareOptions(), and lookForBestSplit().

PP<RegressionTree> PLearn::RegressionTreeNode::tree [private] |

Definition at line 71 of file RegressionTreeNode.h.

Referenced by expandNode(), initNode(), lookForBestSplit(), and verbose().

1.7.4
