|

PLearn 0.1

|

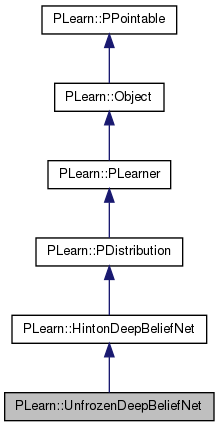

Does the same thing as Hinton's deep belief nets without freezing weights of earlier layers.

#include <UnfrozenDeepBeliefNet.h>

Public Member Functions | |

| UnfrozenDeepBeliefNet () | |

| Default constructor. | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage == nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual UnfrozenDeepBeliefNet * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Simply calls inherited::build() then build_(). | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| REDEFINE test FOR PARALLELIZATION OF THE TEST. | |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Vec | learning_rates |

| The learning rates. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef HintonDeepBeliefNet | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Does the same thing as Hinton's deep belief nets without freezing weights of earlier layers.

Definition at line 63 of file UnfrozenDeepBeliefNet.h.

typedef HintonDeepBeliefNet PLearn::UnfrozenDeepBeliefNet::inherited [private] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 65 of file UnfrozenDeepBeliefNet.h.

| PLearn::UnfrozenDeepBeliefNet::UnfrozenDeepBeliefNet | ( | ) |

| string PLearn::UnfrozenDeepBeliefNet::_classname_ | ( | ) | [static] |

REDEFINE test FOR PARALLELIZATION OF THE TEST.

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

| OptionList & PLearn::UnfrozenDeepBeliefNet::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

| RemoteMethodMap & PLearn::UnfrozenDeepBeliefNet::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

| bool PLearn::UnfrozenDeepBeliefNet::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

| Object * PLearn::UnfrozenDeepBeliefNet::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

| void PLearn::UnfrozenDeepBeliefNet::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

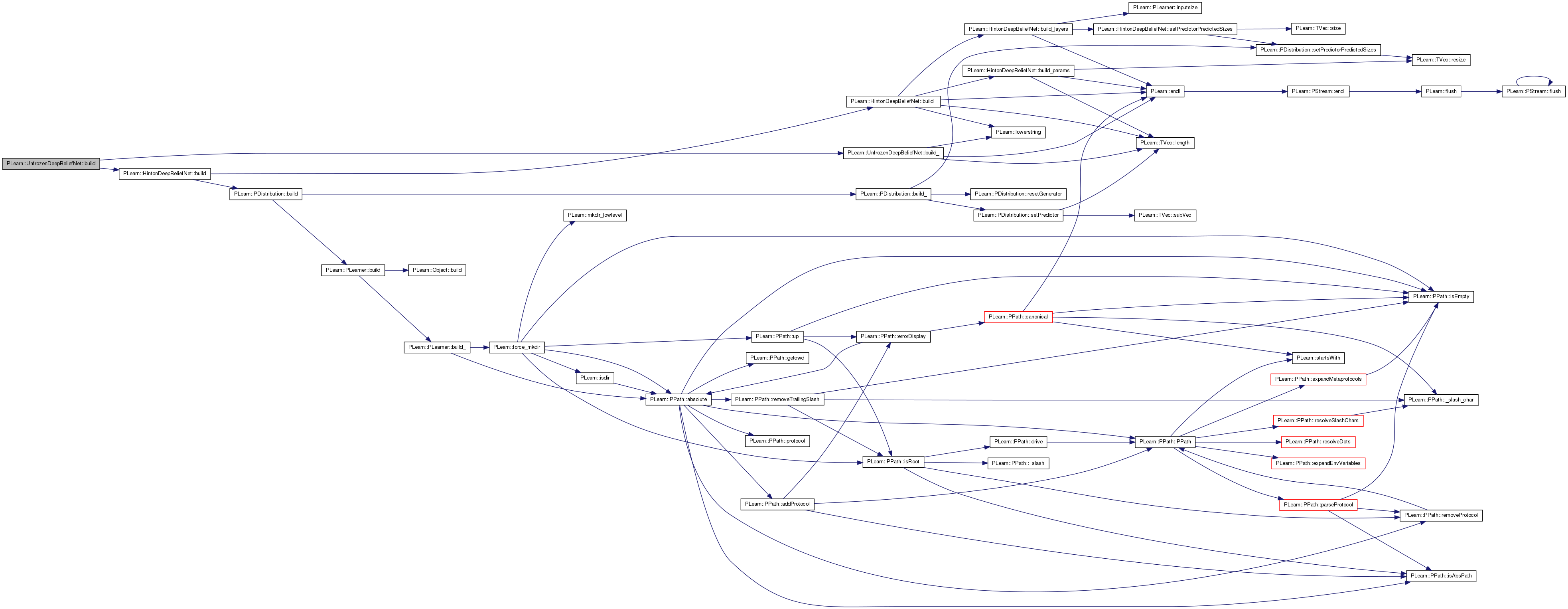

| void PLearn::UnfrozenDeepBeliefNet::build | ( | ) | [virtual] |

Simply calls inherited::build() then build_().

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 98 of file UnfrozenDeepBeliefNet.cc.

References PLearn::HintonDeepBeliefNet::build(), and build_().

{

// ### Nothing to add here, simply calls build_().

inherited::build();

build_();

}

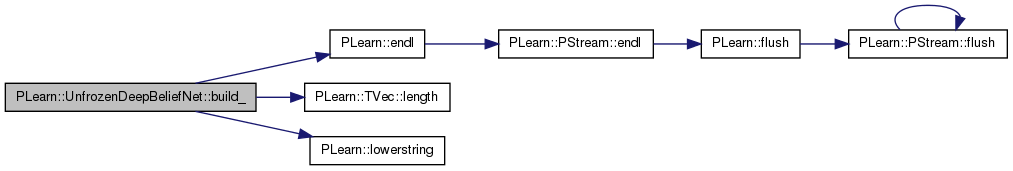

| void PLearn::UnfrozenDeepBeliefNet::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 108 of file UnfrozenDeepBeliefNet.cc.

References PLearn::endl(), i, PLearn::HintonDeepBeliefNet::joint_params, PLearn::HintonDeepBeliefNet::learning_rate, learning_rates, PLearn::TVec< T >::length(), PLearn::lowerstring(), PLearn::HintonDeepBeliefNet::n_layers, PLearn::HintonDeepBeliefNet::params, PLERROR, and PLearn::PLearner::stage.

Referenced by build().

{

MODULE_LOG << "build_() called" << endl;

MODULE_LOG << "stage = " << stage << endl;

// check value of fine_tuning_method

string ftm = lowerstring( fine_tuning_method );

if( ftm == "" || ftm == "none" )

fine_tuning_method = "";

else

PLERROR( "UnfrozenDeepBeliefNet::build_ - fine_tuning_method \"%s\"\n"

"is unknown.\n", fine_tuning_method.c_str() );

MODULE_LOG << " fine_tuning_method = \"" << fine_tuning_method << "\""

<< endl;

if( learning_rates.length() != n_layers-1 )

learning_rates = Vec( n_layers-1, learning_rate );

for( int i=0 ; i<n_layers-2 ; i++ )

params[i]->learning_rate = learning_rates[i];

joint_params->learning_rate = learning_rates[n_layers-2];

MODULE_LOG << "end of build_()" << endl;

}
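The learning-rate fallback in build_() can be sketched on its own in standard C++. This is a minimal illustration rather than PLearn code: `resolveLearningRates` is a hypothetical name, and `std::vector<double>` stands in for PLearn's `Vec`. It assumes, as the listing does, that there is one rate per parameter set, so `n_layers - 1` rates in total.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the fallback in build_(): if the caller did not
// supply exactly n_layers - 1 rates, every parameter set gets the global rate.
std::vector<double> resolveLearningRates(const std::vector<double>& learning_rates,
                                         int n_layers,
                                         double learning_rate)
{
    assert(n_layers >= 2);
    const std::size_t expected = static_cast<std::size_t>(n_layers - 1);
    if (learning_rates.size() != expected)
        return std::vector<double>(expected, learning_rate);
    return learning_rates;
}
```

In the listing, the first `n_layers - 2` entries of the resulting vector go to `params[i]` and the last entry goes to `joint_params`.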

| string PLearn::UnfrozenDeepBeliefNet::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

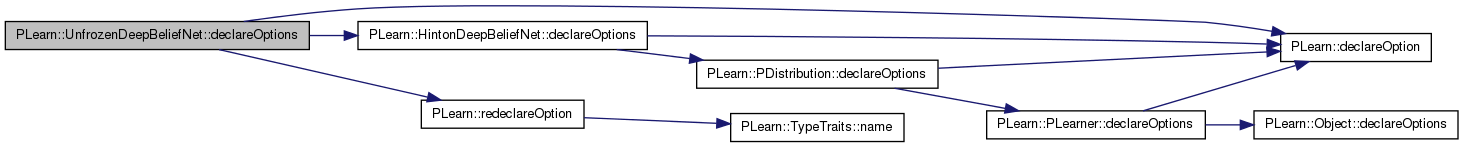

| void PLearn::UnfrozenDeepBeliefNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 70 of file UnfrozenDeepBeliefNet.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::HintonDeepBeliefNet::declareOptions(), PLearn::HintonDeepBeliefNet::learning_rate, PLearn::OptionBase::nosave, PLearn::redeclareOption(), and PLearn::HintonDeepBeliefNet::training_schedule.

{

declareOption(ol, "learning_rates", &UnfrozenDeepBeliefNet::learning_rates,

OptionBase::buildoption,

"Learning rate of each layer");

// Now call the parent class' declareOptions().

inherited::declareOptions(ol);

redeclareOption(ol, "learning_rate", &UnfrozenDeepBeliefNet::learning_rate,

OptionBase::buildoption,

"Global learning rate, will not be used if learning_rates"

" is provided.");

redeclareOption(ol, "training_schedule",

&UnfrozenDeepBeliefNet::training_schedule,

OptionBase::buildoption,

"No training_schedule, all layers are always learned.");

redeclareOption(ol, "fine_tuning_method",

&UnfrozenDeepBeliefNet::fine_tuning_method,

OptionBase::nosave,

"No fine-tuning");

}

| static const PPath& PLearn::UnfrozenDeepBeliefNet::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 96 of file UnfrozenDeepBeliefNet.h.

| UnfrozenDeepBeliefNet * PLearn::UnfrozenDeepBeliefNet::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

| OptionList & PLearn::UnfrozenDeepBeliefNet::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

| OptionMap & PLearn::UnfrozenDeepBeliefNet::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

| RemoteMethodMap & PLearn::UnfrozenDeepBeliefNet::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 57 of file UnfrozenDeepBeliefNet.cc.

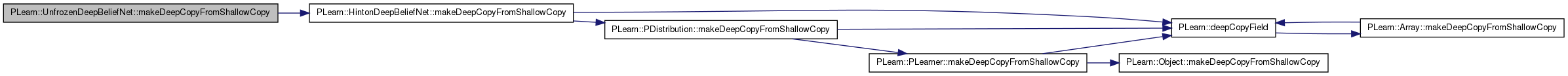

| void PLearn::UnfrozenDeepBeliefNet::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 136 of file UnfrozenDeepBeliefNet.cc.

References PLearn::HintonDeepBeliefNet::makeDeepCopyFromShallowCopy().

{

inherited::makeDeepCopyFromShallowCopy(copies);

}

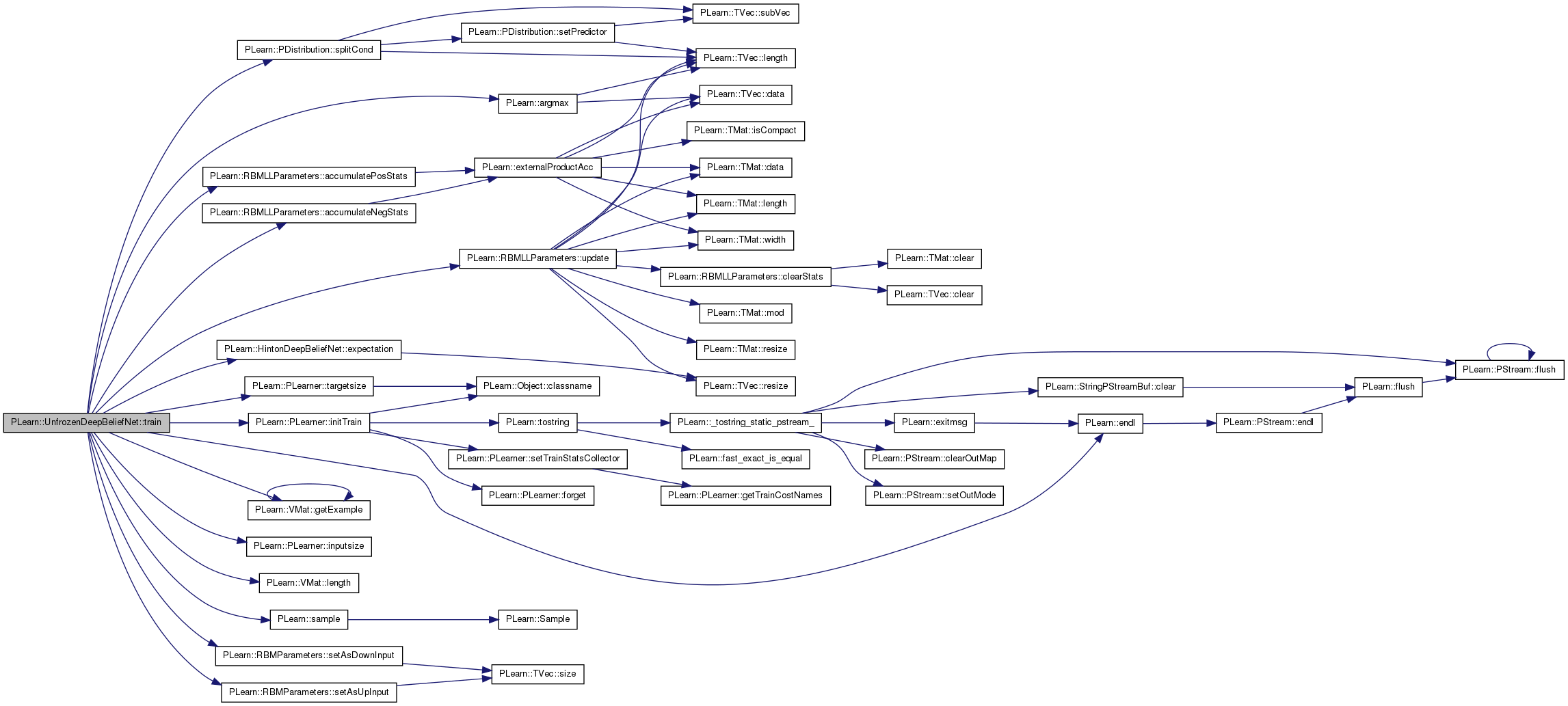

| void PLearn::UnfrozenDeepBeliefNet::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage == nstages, updating the train_stats collector with training costs measured on-line in the process.
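In this class each stage processes a single training example, and the statistics are cleared whenever the example index wraps back to zero. The sketch below traces that indexing in plain C++. `StageTrace` and `traceStages` are hypothetical names introduced for illustration, and the counter only stands in for the call to `train_stats->forget()`.

```cpp
#include <cassert>
#include <vector>

// Hypothetical record of what the stage loop would do.
struct StageTrace {
    std::vector<int> samples;  // training-set index visited at each stage
    int resets = 0;            // times the statistics would be cleared
};

// Walks stages [stage, nstages), mirroring `sample = stage % nsamples`.
StageTrace traceStages(int stage, int nstages, int nsamples)
{
    assert(nsamples > 0);
    StageTrace trace;
    for (; stage < nstages; ++stage) {
        const int sample = stage % nsamples;
        if (sample == 0)
            ++trace.resets;    // corresponds to train_stats->forget()
        trace.samples.push_back(sample);
    }
    return trace;
}
```

Resuming from a stage that is not a multiple of `nsamples` therefore continues accumulating into the current statistics instead of clearing them.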

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 145 of file UnfrozenDeepBeliefNet.cc.

References PLearn::RBMLLParameters::accumulateNegStats(), PLearn::RBMLLParameters::accumulatePosStats(), PLearn::argmax(), PLearn::endl(), PLearn::HintonDeepBeliefNet::expectation(), PLearn::VMat::getExample(), i, PLearn::PLearner::initTrain(), PLearn::PLearner::inputsize(), PLearn::HintonDeepBeliefNet::joint_layer, PLearn::HintonDeepBeliefNet::joint_params, PLearn::HintonDeepBeliefNet::last_layer, PLearn::HintonDeepBeliefNet::layers, PLearn::VMat::length(), PLearn::HintonDeepBeliefNet::n_layers, PLearn::PLearner::nstages, PLearn::HintonDeepBeliefNet::params, pl_log, PLearn::PDistribution::predicted_part, PLearn::PDistribution::predictor_part, PLearn::sample(), PLearn::RBMParameters::setAsDownInput(), PLearn::RBMParameters::setAsUpInput(), PLearn::PDistribution::splitCond(), PLearn::PLearner::stage, PLearn::HintonDeepBeliefNet::target_layer, PLearn::PLearner::targetsize(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, and PLearn::RBMLLParameters::update().

{

MODULE_LOG << "train() called" << endl;

// The role of the train method is to bring the learner up to

// stage==nstages, updating train_stats with training costs measured

// on-line in the process.

/* TYPICAL CODE:

static Vec input; // static so we don't reallocate memory each time...

static Vec target; // (but be careful that static means shared!)

input.resize(inputsize()); // the train_set's inputsize()

target.resize(targetsize()); // the train_set's targetsize()

real weight;

// This generic PLearner method does a number of standard things useful for

// (almost) any learner, and returns 'false' if no training should take

// place. See PLearner.h for more details.

if (!initTrain())

return;

while(stage<nstages)

{

// clear statistics of previous epoch

train_stats->forget();

//... train for 1 stage, and update train_stats,

// using train_set->getExample(input, target, weight)

// and train_stats->update(train_costs)

++stage;

train_stats->finalize(); // finalize statistics for this epoch

}

*/

Vec input( inputsize() );

Vec target( targetsize() ); // unused

real weight; // unused

Vec train_costs( 2 );

if( !initTrain() )

{

MODULE_LOG << "train() aborted" << endl;

return;

}

int nsamples = train_set->length();

MODULE_LOG << "nsamples = " << nsamples << endl;

MODULE_LOG << "initial stage = " << stage << endl;

MODULE_LOG << "objective: nstages = " << nstages << endl;

for( ; stage < nstages ; stage++ )

{

// sample is the index in the training set

int sample = stage % nsamples;

if( sample == 0 )

{

MODULE_LOG << "train_stats->forget() called" << endl;

train_stats->forget();

}

/*

MODULE_LOG << "stage = " << stage << endl;

MODULE_LOG << "sample = " << sample << endl;

// */

if( (100*stage) % nsamples == 0 )

MODULE_LOG << "stage = " << stage << endl;

train_set->getExample(sample, input, target, weight);

splitCond( input );

// deterministic forward propagation

layers[0]->expectation << predictor_part;

for( int i=0 ; i<n_layers-2 ; i++ )

{

params[i]->setAsDownInput( layers[i]->expectation );

layers[i+1]->getAllActivations( (RBMLLParameters*) params[i] );

layers[i+1]->computeExpectation();

layers[i+1]->generateSample();

params[i]->accumulatePosStats( layers[i]->expectation,

layers[i+1]->expectation );

}

// compute output and cost at this point, even though it is not the

// criterion that will be directly optimized

joint_params->setAsCondInput( layers[n_layers-2]->expectation );

target_layer->getAllActivations( (RBMLLParameters*) joint_params );

target_layer->computeExpectation();

// get costs

int actual_index = argmax(predicted_part);

train_costs[0] = -pl_log( target_layer->expectation[actual_index] );

if( argmax( target_layer->expectation ) == actual_index )

train_costs[1] = 0;

else

train_costs[1] = 1;

// end of the forward propagation

target_layer->expectation << predicted_part;

joint_params->setAsDownInput( joint_layer->expectation );

last_layer->getAllActivations( (RBMLLParameters*) joint_params );

last_layer->computeExpectation();

last_layer->generateSample();

joint_params->accumulatePosStats( joint_layer->expectation,

last_layer->expectation );

// for each params, one step of CD

for( int i=0 ; i<n_layers-2 ; i++ )

{

// down propagation

params[i]->setAsUpInput( layers[i+1]->sample );

layers[i]->getAllActivations( (RBMLLParameters*) params[i] );

// negative phase

layers[i]->generateSample();

params[i]->setAsDownInput( layers[i]->sample );

layers[i+1]->getAllActivations( (RBMLLParameters*) params[i] );

layers[i+1]->computeExpectation();

params[i]->accumulateNegStats( layers[i]->sample,

layers[i+1]->expectation );

params[i]->update();

}

// down propagation

joint_params->setAsUpInput( last_layer->sample );

joint_layer->getAllActivations( (RBMLLParameters*) joint_params );

// negative phase

joint_layer->generateSample();

joint_params->setAsDownInput( joint_layer->sample );

last_layer->getAllActivations( (RBMLLParameters*) joint_params );

last_layer->computeExpectation();

joint_params->accumulateNegStats( joint_layer->sample,

last_layer->expectation );

//update

joint_params->update();

train_stats->update( train_costs );

}

train_stats->finalize();

MODULE_LOG << endl;

}
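The two entries of `train_costs` computed in the listing are a negative log-likelihood and a 0/1 classification error. A minimal standalone sketch follows, assuming `predicted_part` is a one-hot encoding of the target class (which the `argmax` call suggests) and that the target layer's expectation is a probability vector. `TrainCosts`, `argmaxIndex` and `computeCosts` are hypothetical names, not part of PLearn.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical container for the two costs stored in train_costs.
struct TrainCosts {
    double nll;          // train_costs[0]: -log p(true class)
    double class_error;  // train_costs[1]: 0 if the prediction is right, else 1
};

// Index of the largest element (first one on ties).
std::size_t argmaxIndex(const std::vector<double>& v)
{
    assert(!v.empty());
    return static_cast<std::size_t>(
        std::distance(v.begin(), std::max_element(v.begin(), v.end())));
}

TrainCosts computeCosts(const std::vector<double>& expectation,
                        const std::vector<double>& one_hot_target)
{
    assert(expectation.size() == one_hot_target.size());
    const std::size_t actual = argmaxIndex(one_hot_target);
    TrainCosts costs;
    costs.nll = -std::log(expectation[actual]);
    costs.class_error = (argmaxIndex(expectation) == actual) ? 0.0 : 1.0;
    return costs;
}
```

As the comment in the listing notes, these costs are only monitored. The contrastive divergence updates do not optimize them directly.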

| StaticInitializer PLearn::UnfrozenDeepBeliefNet::_static_initializer_ | [static] |

Reimplemented from PLearn::HintonDeepBeliefNet.

Definition at line 96 of file UnfrozenDeepBeliefNet.h.

1.7.4
