PLearn 0.1

#include <WPLS.h>

Public Types | |

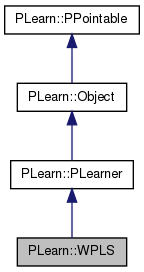

| typedef PLearner | inherited |

Public Member Functions | |

| WPLS () | |

| virtual void | build () |

| Simply calls inherited::build() then build_(). | |

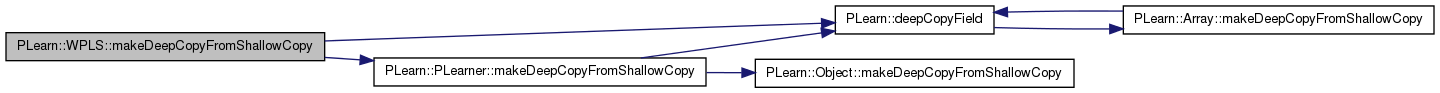

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual WPLS * | deepCopy (CopiesMap &copies) const |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner to its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (the stage of a fresh learner). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

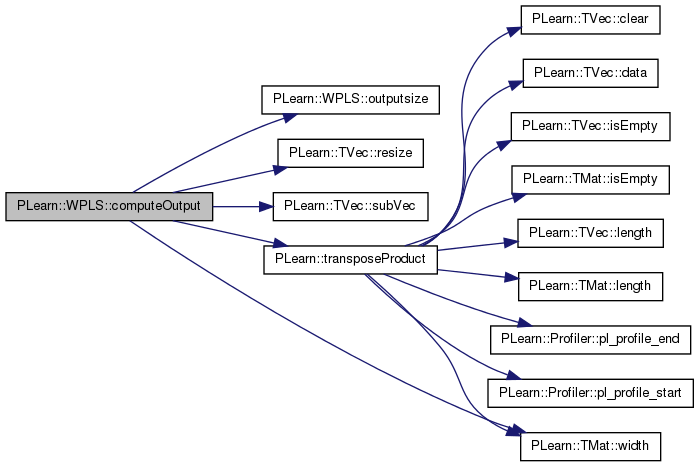

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

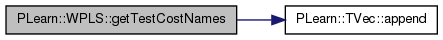

| virtual TVec< string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| virtual TVec< string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

| static void | NIPALSEigenvector (const Mat &m, Vec &v, real precision) |

| Computes the dominant eigenvector of m with the NIPALS algorithm: (1) initialize v and normalize it (the implementation starts from the first column of m rather than a random vector); (2) v = m.v, normalize v; (3) if any v[i] has changed by more than 'precision', go to (2), otherwise return v. | |

Public Attributes | |

| string | method |

| real | precision |

| bool | output_the_score |

| bool | output_the_target |

| string | parent_filename |

| int | parent_sub |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

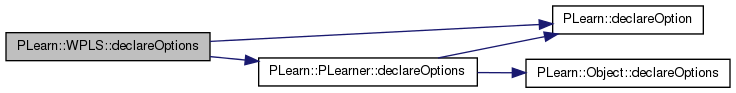

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes | |

| Mat | B |

| int | m |

| Vec | mean_input |

| Vec | mean_target |

| int | p |

| Vec | stddev_input |

| Vec | stddev_target |

| int | w |

| Mat | W |

| Mat | P |

| Mat | Q |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

| typedef PLearner PLearn::WPLS::inherited |

Reimplemented from PLearn::PLearner.

| PLearn::WPLS::WPLS | ( | ) |

Definition at line 57 of file WPLS.cc.

: m(-1), p(-1), w(0), method("kernel"), precision(1e-6), output_the_score(0), output_the_target(1), parent_filename("tmp"), parent_sub(0) {}

| string PLearn::WPLS::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

| OptionList & PLearn::WPLS::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

| RemoteMethodMap & PLearn::WPLS::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

| bool PLearn::WPLS::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

| Object * PLearn::WPLS::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

| void PLearn::WPLS::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

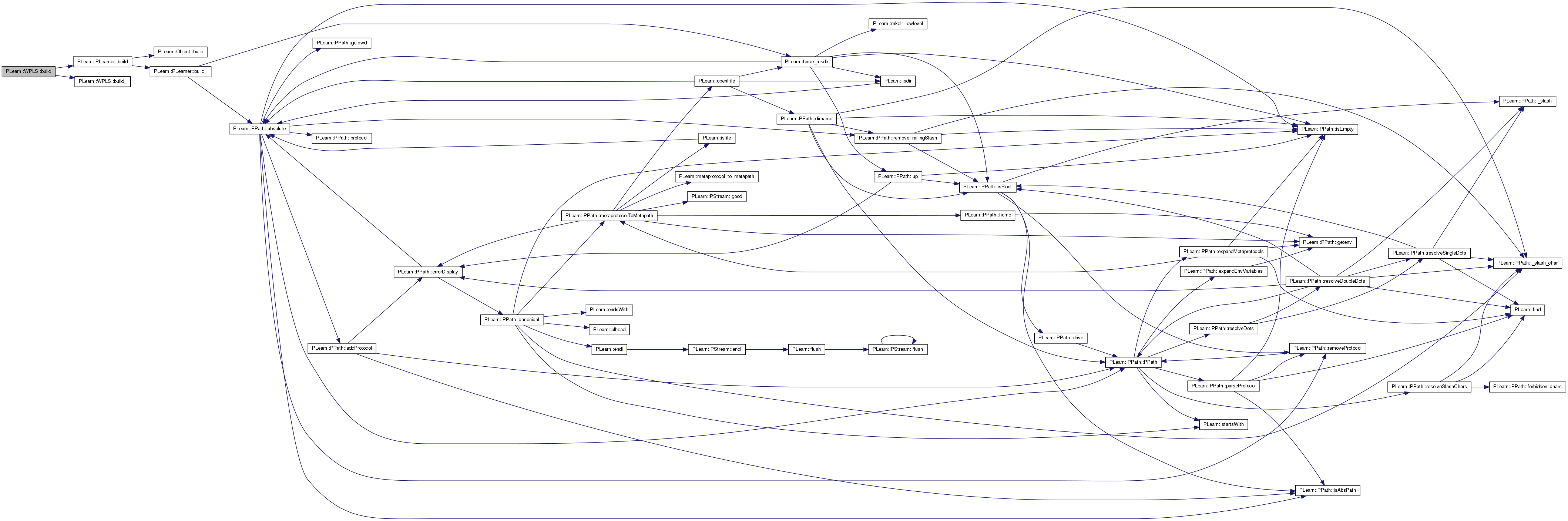

| void PLearn::WPLS::build | ( | ) | [virtual] |

Simply calls inherited::build() then build_().

Reimplemented from PLearn::PLearner.

Definition at line 202 of file WPLS.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

| void PLearn::WPLS::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 211 of file WPLS.cc.

References m, method, output_the_score, output_the_target, p, PLASSERT, PLERROR, PLWARNING, precision, PLearn::PLearner::train_set, and w.

Referenced by build().

{

PLASSERT(precision > 0);

if (train_set) {

this->m = train_set->targetsize();

this->p = train_set->inputsize();

this->w = train_set->weightsize();

// Check method consistency.

if (method == "wpls1") {

// Make sure the target is 1-dimensional.

if (m != 1) {

PLERROR("In WPLS::build_ - With the 'wpls1' method, target should be 1-dimensional");

}

} else if (method == "kernel") {

PLERROR("In WPLS::build_ - option 'method=kernel' not implemented yet");

} else {

PLERROR("In WPLS::build_ - Unknown value for option 'method'");

}

}

if (!output_the_score && !output_the_target) {

// Weird, we don't want any output ??

PLWARNING("In WPLS::build_ - There will be no output");

}

}

| string PLearn::WPLS::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

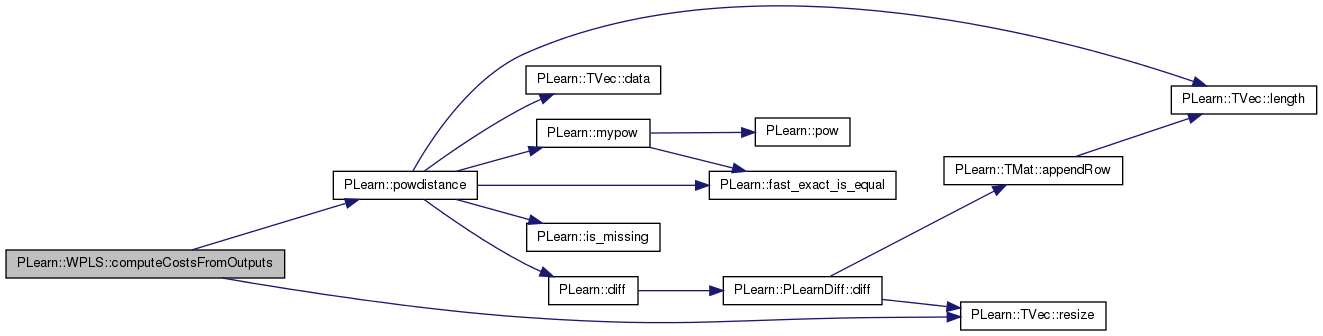

| void PLearn::WPLS::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 240 of file WPLS.cc.

References PLearn::powdistance(), and PLearn::TVec< T >::resize().

{

costs.resize(1);

costs[0] = powdistance(output,target,2.0);

// The only cost is powdistance(output, target, 2.0), reported as "mse".

}
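The cost above can be illustrated outside PLearn. The following is a minimal sketch, assuming that `powdistance` with exponent 2 returns the sum of squared element-wise differences (no square root and no averaging); `squaredDistance` is a hypothetical stand-in name, not a PLearn function, and `std::vector<double>` replaces `Vec`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for powdistance(output, target, 2.0):
// assumed to be the sum of squared element-wise differences.
double squaredDistance(const std::vector<double>& output,
                       const std::vector<double>& target)
{
    assert(output.size() == target.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < output.size(); ++i) {
        const double diff = output[i] - target[i];
        sum += diff * diff;
    }
    return sum;
}
```

With the 'wpls1' method the target is 1-dimensional, so under this assumption the cost reduces to the squared error of the single predicted value.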

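| void PLearn::WPLS::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.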

Reimplemented from PLearn::PLearner.

Definition at line 251 of file WPLS.cc.

References B, m, mean_input, mean_target, PLearn::PLearner::nstages, output_the_score, output_the_target, outputsize(), p, PLERROR, PLWARNING, PLearn::TVec< T >::resize(), stddev_input, stddev_target, PLearn::TVec< T >::subVec(), PLearn::transposeProduct(), W, and PLearn::TMat< T >::width().

{

static Vec input_copy;

if (W.width()==0)

PLERROR("WPLS::computeOutput but model was not trained!");

// Compute the output from the input

int nout = outputsize();

output.resize(nout);

// First normalize the input.

input_copy.resize(this->p);

input_copy << input;

input_copy -= mean_input;

input_copy /= stddev_input;

int target_start = 0;

if (output_the_score) {

transposeProduct(output.subVec(0, this->nstages), W, input_copy);

target_start = this->nstages;

}

if (output_the_target) {

if (this->m > 0) {

Vec target = output.subVec(target_start, this->m);

transposeProduct(target, B, input_copy);

target *= stddev_target;

target += mean_target;

} else {

// This is just a safety check, since it should never happen.

PLWARNING("In WPLS::computeOutput - You ask to output the target but the target size is <= 0");

}

}

}
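The target branch of the listing above standardizes the input, applies the regression matrix, and maps the result back to the original target scale. A minimal standalone sketch of that path follows; `predictTarget` and the `Vec`/`Mat` aliases are hypothetical names built on `std::vector`, not the PLearn types, and the score branch (`output_the_score`) is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: B[i][j], with p rows and m columns

// Hypothetical helper mirroring the target branch of computeOutput():
// z = (input - mean_input) / stddev_input
// target = (B^T z) * stddev_target + mean_target
Vec predictTarget(const Vec& input, const Mat& B,
                  const Vec& meanInput, const Vec& stddevInput,
                  const Vec& meanTarget, const Vec& stddevTarget)
{
    const std::size_t p = input.size();
    const std::size_t m = meanTarget.size();
    assert(B.size() == p && meanInput.size() == p && stddevInput.size() == p);
    assert(stddevTarget.size() == m);

    // Normalize the input with the statistics stored at training time.
    Vec z(p);
    for (std::size_t i = 0; i < p; ++i)
        z[i] = (input[i] - meanInput[i]) / stddevInput[i];

    // Apply B transposed, then undo the target normalization.
    Vec target(m, 0.0);
    for (std::size_t j = 0; j < m; ++j) {
        for (std::size_t i = 0; i < p; ++i) {
            assert(B[i].size() == m);
            target[j] += B[i][j] * z[i];
        }
        target[j] = target[j] * stddevTarget[j] + meanTarget[j];
    }
    return target;
}
```

Unlike the original, this sketch allocates a fresh vector per call instead of reusing a static buffer, which keeps it free of shared state.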

| void PLearn::WPLS::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 132 of file WPLS.cc.

References B, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), PLearn::OptionBase::learntoption, m, mean_input, mean_target, method, output_the_score, output_the_target, P, p, parent_filename, parent_sub, precision, Q, stddev_input, stddev_target, and W.

{

// Build options.

declareOption(ol, "method", &WPLS::method, OptionBase::buildoption,

"The WPLS algorithm used ('wpls1' or 'kernel', see help for more details).\n");

declareOption(ol, "output_the_score", &WPLS::output_the_score, OptionBase::buildoption,

"If set to 1, then the score (the low-dimensional representation of the input)\n"

"will be included in the output (before the target).");

declareOption(ol, "output_the_target", &WPLS::output_the_target, OptionBase::buildoption,

"If set to 1, then (the prediction of) the target will be included in the\n"

"output (after the score).");

declareOption(ol, "parent_filename", &WPLS::parent_filename, OptionBase::buildoption,

"For hyper-parameter selection purposes: use incremental learning to speed-up process");

declareOption(ol, "parent_sub", &WPLS::parent_sub, OptionBase::buildoption,

"Tells which of the sublearners (of the combined learner) should be used.");

declareOption(ol, "precision", &WPLS::precision, OptionBase::buildoption,

"The precision to which we compute the eigenvectors.");

// Learnt options.

declareOption(ol, "B", &WPLS::B, OptionBase::learntoption,

"The regression matrix in Y = X.B + E.");

declareOption(ol, "m", &WPLS::m, OptionBase::learntoption,

"Used to store the target size.");

declareOption(ol, "mean_input", &WPLS::mean_input, OptionBase::learntoption,

"The mean of the input data X.");

declareOption(ol, "mean_target", &WPLS::mean_target, OptionBase::learntoption,

"The mean of the target data Y.");

declareOption(ol, "p", &WPLS::p, OptionBase::learntoption,

"Used to store the input size.");

declareOption(ol, "P", &WPLS::P, OptionBase::learntoption,

"Matrix that maps features to observed inputs: X = T.P' + E.");

declareOption(ol, "Q", &WPLS::Q, OptionBase::learntoption,

"Matrix that maps features to observed outputs: Y = T.Q' + F.");

declareOption(ol, "stddev_input", &WPLS::stddev_input, OptionBase::learntoption,

"The standard deviation of the input data X.");

declareOption(ol, "stddev_target", &WPLS::stddev_target, OptionBase::learntoption,

"The standard deviation of the target data Y.");

declareOption(ol, "w", &WPLS::w, OptionBase::learntoption,

"Used to store the weight size (0 or 1).");

declareOption(ol, "W", &WPLS::W, OptionBase::learntoption,

"The regression matrix in T = X.W.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::WPLS::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

| WPLS * PLearn::WPLS::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

| void PLearn::WPLS::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner to its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (the stage of a fresh learner).

Reimplemented from PLearn::PLearner.

Definition at line 285 of file WPLS.cc.

References B, P, Q, PLearn::PLearner::stage, and W.

| OptionList & PLearn::WPLS::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| OptionMap & PLearn::WPLS::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| RemoteMethodMap & PLearn::WPLS::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| TVec< string > PLearn::WPLS::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 298 of file WPLS.cc.

References PLearn::TVec< T >::append().

{

// A single test cost is reported: "mse".

TVec<string> t;

t.append("mse");

return t;

}

| TVec< string > PLearn::WPLS::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 309 of file WPLS.cc.

{

// No cost computed.

TVec<string> t;

return t;

}

| void PLearn::WPLS::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 319 of file WPLS.cc.

References B, PLearn::deepCopyField(), PLearn::PLearner::makeDeepCopyFromShallowCopy(), mean_input, mean_target, P, Q, stddev_input, stddev_target, and W.

{

inherited::makeDeepCopyFromShallowCopy(copies);

// Deep-copy every pointer-like field (the learnt matrices and vectors)
// so that the copy does not share storage with the original.

deepCopyField(B, copies);

deepCopyField(mean_input, copies);

deepCopyField(mean_target, copies);

deepCopyField(stddev_input, copies);

deepCopyField(stddev_target, copies);

deepCopyField(W, copies);

deepCopyField(P, copies);

deepCopyField(Q, copies);

}

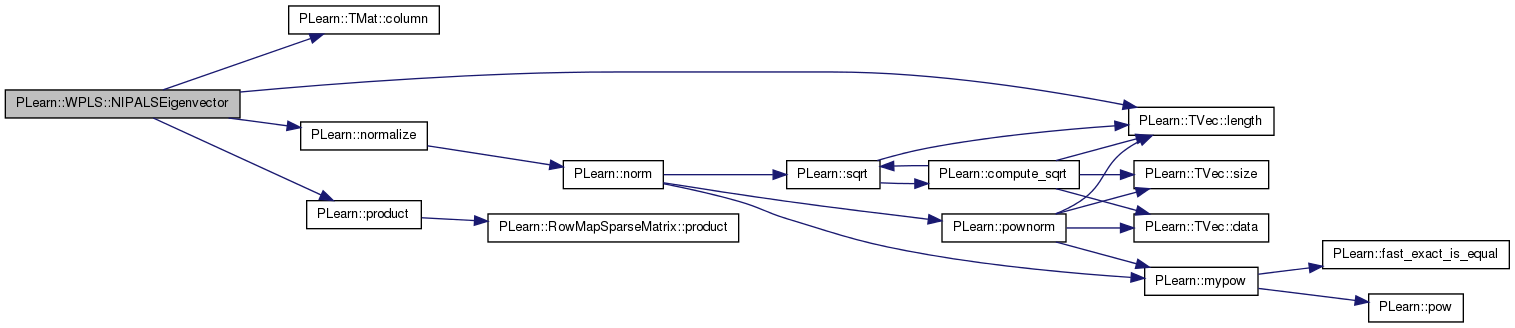

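| void PLearn::WPLS::NIPALSEigenvector | ( | const Mat & | m, |

| Vec & | v, | ||

| real | precision | ||

| ) | [static] |

Computes the dominant eigenvector of m with the NIPALS algorithm: (1) initialize v and normalize it (the code below starts from the first column of m rather than a random vector); (2) v = m.v, normalize v; (3) if any v[i] has changed by more than 'precision', go to (2), otherwise return v.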

Definition at line 340 of file WPLS.cc.

References PLearn::TMat< T >::column(), i, PLearn::TVec< T >::length(), n, PLearn::normalize(), and PLearn::product().

{

int n = v.length();

Vec wtmp(n);

v << m.column(0);

normalize(v, 2.0);

bool ok = false;

while (!ok) {

wtmp << v;

product(v, m, wtmp);

normalize(v, 2.0);

ok = true;

for (int i = 0; i < n && ok; i++) {

if (fabs(v[i] - wtmp[i]) > precision) {

ok = false;

}

}

}

}
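The iteration above is a power iteration with a component-wise stopping test. The following standalone sketch reproduces it with `std::vector` in place of the PLearn types; `dominantEigenvector`, `normalizeL2` and the `maxIter` cap are assumptions introduced for illustration (the original loop has no iteration limit).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major square matrix

// Scale v to unit Euclidean norm.
void normalizeL2(Vec& v)
{
    double sumSq = 0.0;
    for (double x : v) sumSq += x * x;
    const double norm = std::sqrt(sumSq);
    assert(norm > 0.0);
    for (double& x : v) x /= norm;
}

// Power iteration: start from the first column of m, repeat v <- m.v
// (normalized) until no component moves by more than 'precision'.
// maxIter is a safety cap added in this sketch only.
Vec dominantEigenvector(const Mat& m, double precision, int maxIter = 10000)
{
    const std::size_t n = m.size();
    assert(n > 0);
    Vec v(n);
    for (std::size_t i = 0; i < n; ++i) {
        assert(m[i].size() == n);
        v[i] = m[i][0];
    }
    normalizeL2(v);

    for (int iter = 0; iter < maxIter; ++iter) {
        const Vec previous = v;
        for (std::size_t i = 0; i < n; ++i) {
            v[i] = 0.0;
            for (std::size_t j = 0; j < n; ++j)
                v[i] += m[i][j] * previous[j];
        }
        normalizeL2(v);

        bool converged = true;
        for (std::size_t i = 0; i < n && converged; ++i)
            if (std::fabs(v[i] - previous[i]) > precision)
                converged = false;
        if (converged) break;
    }
    return v;
}
```

Starting from a column of m is deterministic, but it can fail if that column happens to be orthogonal to the dominant eigenvector; a random start avoids that case with probability one.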

| int PLearn::WPLS::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 362 of file WPLS.cc.

References m, PLearn::PLearner::nstages, output_the_score, and output_the_target.

Referenced by computeOutput().

{

int os = 0;

if (output_the_score) {

os += this->nstages;

}

if (output_the_target && m >= 0) {

// If m < 0, this means we don't know yet the target size, thus we

// shouldn't report it here.

os += this->m;

}

return os;

}

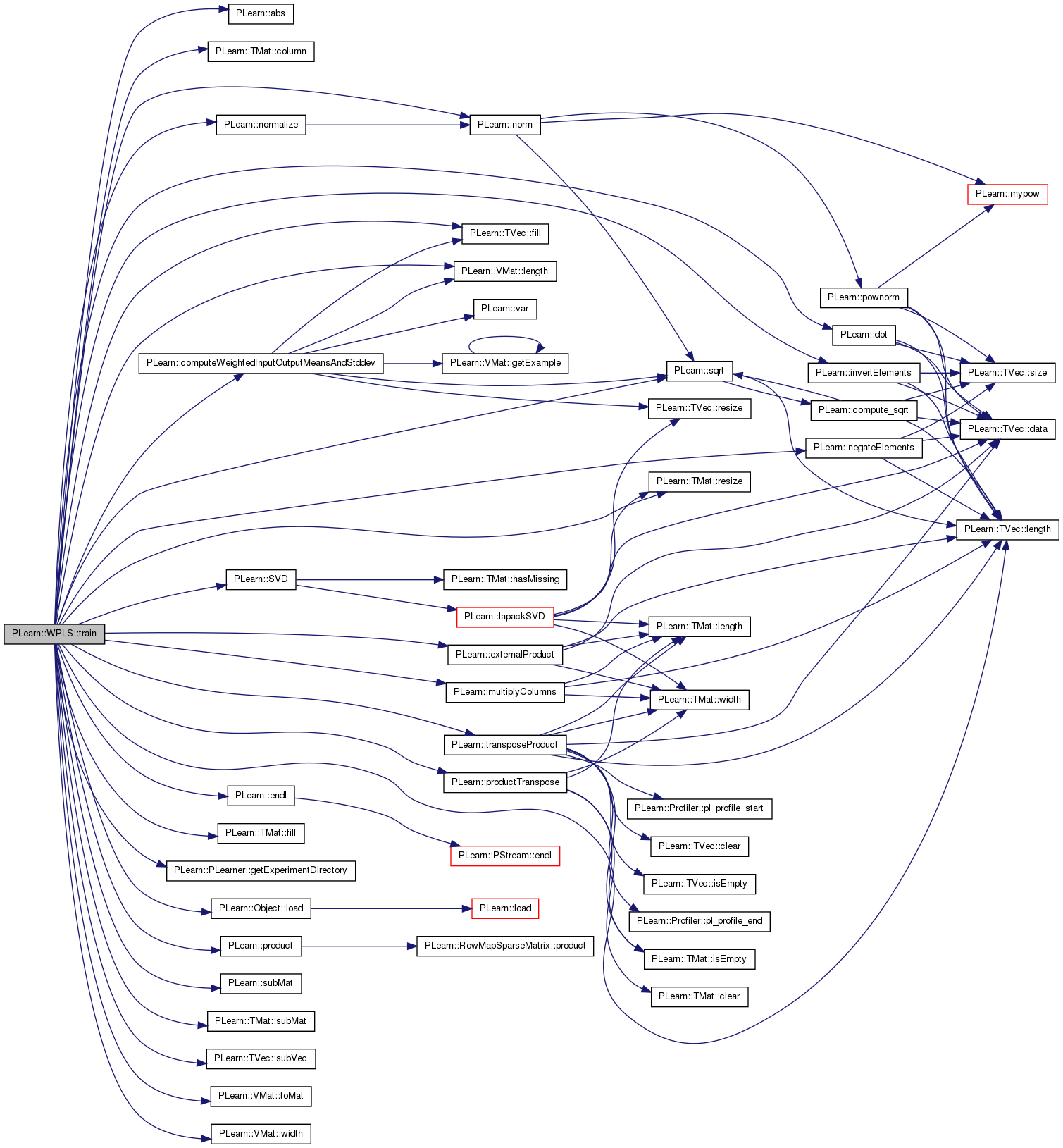

| void PLearn::WPLS::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 445 of file WPLS.cc.

References PLearn::abs(), B, PLearn::TMat< T >::column(), PLearn::computeWeightedInputOutputMeansAndStddev(), d, PLearn::dot(), PLearn::endl(), PLearn::PLearner::expdir, PLearn::externalProduct(), PLearn::TMat< T >::fill(), PLearn::TVec< T >::fill(), PLearn::PLearner::getExperimentDirectory(), i, PLearn::invertElements(), PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::Object::load(), m, mean_input, mean_target, method, PLearn::multiplyColumns(), n, PLearn::negateElements(), PLearn::norm(), PLearn::normalize(), PLearn::PLearner::nstages, P, p, parent_filename, parent_sub, PLERROR, PLearn::pout, precision, PLearn::product(), PLearn::productTranspose(), Q, PLearn::PLearner::report_progress, PLearn::TMat< T >::resize(), PLearn::sqrt(), PLearn::PLearner::stage, stddev_input, stddev_target, PLearn::subMat(), PLearn::TMat< T >::subMat(), PLearn::TVec< T >::subVec(), PLearn::SVD(), PLearn::VMat::toMat(), PLearn::PLearner::train_set, PLearn::transposeProduct(), PLearn::PLearner::verbosity, W, and PLearn::VMat::width().

{

if (stage == nstages) {

// Already trained.

if (verbosity >= 1)

pout << "Skipping WPLS training" << endl;

return;

}

if (verbosity >= 1)

pout << "WPLS training started" << endl;

int n = train_set->length();

int wlen = train_set->weightsize();

VMat d = new SubVMatrix(train_set,0,0,train_set->length(), train_set->width());

d->defineSizes(train_set->inputsize(), train_set->targetsize(), train_set->weightsize(), 0);

Vec means, stddev;

computeWeightedInputOutputMeansAndStddev(d, means, stddev);

if (verbosity >= 2) {

pout << "means = " << means << endl;

pout << "stddev = " << stddev << endl;

}

normalize(d, means, stddev);

mean_input = means.subVec(0, p);

mean_target = means.subVec(p, m);

stddev_input = stddev.subVec(0, p);

stddev_target = stddev.subVec(p, m);

Vec shift_input(p), scale_input(p), shift_target(m), scale_target(m);

shift_input << mean_input;

scale_input << stddev_input;

shift_target << mean_target;

scale_target << stddev_target;

negateElements(shift_input);

invertElements(scale_input);

negateElements(shift_target);

invertElements(scale_target);

VMat input_part = new SubVMatrix(train_set,

0, 0,

train_set->length(),

train_set->inputsize());

PP<ShiftAndRescaleVMatrix> X_vmat =

new ShiftAndRescaleVMatrix(input_part, shift_input, scale_input, true);

X_vmat->verbosity = this->verbosity;

VMat X_vmatrix = static_cast<ShiftAndRescaleVMatrix*>(X_vmat);

Mat X = X_vmatrix->toMat();

VMat target_part = new SubVMatrix( train_set,

0, train_set->inputsize(),

train_set->length(),

train_set->targetsize());

PP<ShiftAndRescaleVMatrix> Y_vmat =

new ShiftAndRescaleVMatrix(target_part, shift_target, scale_target, true);

Y_vmat->verbosity = this->verbosity;

VMat Y_vmatrix = static_cast<ShiftAndRescaleVMatrix*>(Y_vmat);

Vec Y(n);

Y << Y_vmatrix->toMat();

VMat weight_part = new SubVMatrix( train_set,

0, train_set->inputsize() + train_set->targetsize(),

train_set->length(),

train_set->weightsize());

PP<ShiftAndRescaleVMatrix> WE_vmat;

VMat WE_vmatrix;

Vec WE(n);

Vec sqrtWE(n);

if (wlen > 0) {

WE_vmat = new ShiftAndRescaleVMatrix(weight_part);

WE_vmat->verbosity = this->verbosity;

WE_vmatrix = static_cast<ShiftAndRescaleVMatrix*>(WE_vmat);

WE << WE_vmatrix->toMat();

sqrtWE << sqrt(WE);

multiplyColumns(X,sqrtWE);

Y *= sqrtWE;

} else

WE.fill(1.0);

// Some common initialization.

W.resize(p, nstages);

P.resize(p, nstages);

Q.resize(m, nstages);

if (method == "kernel") {

PLERROR("You shouldn't be here... !?");

} else if (method == "wpls1") {

Vec s(n);

Vec old_s(n);

Vec lx(p);

Vec ly(1);

Mat T(n,nstages);

Mat tmp_np(n,p), tmp_pp(p,p);

PP<ProgressBar> pb;

if(report_progress) {

pb = new ProgressBar("Computing the components", nstages);

}

bool finished;

real dold;

if (parent_filename != "") {

string expdir = getExperimentDirectory();

int pos = expdir.find("Split");

string the_split = expdir.substr(pos,6);

int pos2 = parent_filename.find("Split");

int nremain = parent_filename.length() - 6 - pos2;

parent_filename = parent_filename.substr(0,pos2) + the_split + parent_filename.substr(pos2+6,nremain);

PP<VPLCombinedLearner> combined_parent;

PLearn::load(parent_filename, combined_parent);

//if (VPLPreprocessedLearner2* vplpl = dynamic_cast<VPLPreprocessedLearner2*>(

// combined_parent->sublearners_[parent_sub]))

//{

// parent = vplpl->learner_;

//}

//else

// PLERROR("Unsupported type for sublearners of the combined

// learner");

PP<VPLPreprocessedLearner2> vplpl = (PP<VPLPreprocessedLearner2>)(combined_parent->sublearners_[parent_sub]);

PP<WPLS> parent = (PP<WPLS>)(vplpl->learner_);

//VPLPreprocessedLearner2* vplpl = (VPLPreprocessedLearner2*)(combined_parent->sublearners_[parent_sub]);

//WPLS* parent (WPLS*)(vplpl->learner_);

int k = parent->nstages;

Mat tmp_nk(n,k);

if (parent->stage < nstages) {

Mat tmp_n1(n,1);

product(tmp_n1, X, parent->B);

for (int i=0; i<n; i++)

Y[i] -= tmp_n1(i,0);

product(tmp_nk,X,parent->W);

productTranspose(tmp_np,tmp_nk,parent->P);

X -= tmp_np;

stage = k;

} else {

product(tmp_nk,X,parent->W);

T = tmp_nk.subMat(0,0,n,nstages);

P = (parent->P).subMat(0,0,p,nstages);

Q = (parent->Q).subMat(0,0,m,nstages);

stage = nstages;

}

}

while (stage < nstages) {

if (verbosity >= 1)

pout << "stage=" << stage << endl;

s << Y;

normalize(s, 2.0);

finished = false;

int count = 0;

while (!finished) {

count++;

old_s << s;

transposeProduct(lx, X, s);

product(s, X, lx);

normalize(s, 2.0);

dold = norm(old_s -s);

if(isnan(dold))

PLERROR("dold is nan");

if (dold < precision)

finished = true;

else {

if (verbosity >= 2)

pout << "dold = " << dold << endl;

if (count%100==0 && verbosity>=1)

pout << "loop counts = " << count << endl;

}

}

transposeProduct(lx, X, s);

ly[0] = dot(s, Y);

T.column(stage) << s;

P.column(stage) << lx;

Q.column(stage) << ly;

externalProduct(tmp_np,s,lx);

X -= tmp_np;

Y -= ly[0] * s;

if (report_progress)

pb->update(stage);

stage++;

}

productTranspose(tmp_np, T, P);

if (verbosity >= 2) {

pout << "T = " << endl << T << endl;

pout << "P = " << endl << P << endl;

pout << "Q = " << endl << Q << endl;

pout << "tmp_np = " << endl << tmp_np << endl;

pout << endl;

}

Mat U, Vt;

Vec D;

real safeguard = 1.1;

SVD(tmp_np, U, D, Vt, 'S', safeguard);

if (verbosity >= 2) {

pout << "U = " << endl << U << endl;

pout << "D = " << endl << D << endl;

pout << "Vt = " << endl << Vt << endl;

pout << endl;

}

Mat invDmat(p,p);

invDmat.fill(0.0);

for (int i = 0; i < D.length(); i++) {

if (abs(D[i]) < precision)

invDmat(i,i) = 0.0;

else

invDmat(i,i) = 1.0 / D[i];

}

product(tmp_pp,invDmat,Vt);

product(tmp_np,U,tmp_pp);

transposeProduct(W, tmp_np, T);

B.resize(p,1);

productTranspose(B, W, Q);

if (verbosity >= 2) {

pout << "W = " << W << endl;

pout << "B = " << B << endl;

}

if (verbosity >= 1)

pout << "WPLS training ended" << endl;

}

}
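The core of the 'wpls1' branch is the per-stage loop: find a unit score vector s by iterating s <- X.X'.s, compute the loadings lx = X'.s and ly = s.Y, then deflate X and Y. The sketch below isolates one such stage with `std::vector`; `extractComponent`, `Component`, the helper functions and the `maxIter` cap are hypothetical names added for illustration, and the sample weighting, parent-learner reuse and final SVD step of the listing are omitted.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: X[i] is sample i (n rows, p columns)

struct Component {
    Vec score;        // s: unit-norm vector of length n (a column of T)
    Vec xLoading;     // lx = X' s, length p (a column of P)
    double yLoading;  // ly = s . Y (an entry of Q)
};

static double dot(const Vec& a, const Vec& b)
{
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) sum += a[i] * b[i];
    return sum;
}

static void normalizeL2(Vec& v)
{
    const double norm = std::sqrt(dot(v, v));
    assert(norm > 0.0);
    for (double& x : v) x /= norm;
}

static Vec transposeTimes(const Mat& X, const Vec& s)  // X' s
{
    Vec out(X[0].size(), 0.0);
    for (std::size_t i = 0; i < X.size(); ++i)
        for (std::size_t j = 0; j < out.size(); ++j)
            out[j] += X[i][j] * s[i];
    return out;
}

static Vec times(const Mat& X, const Vec& l)  // X l
{
    Vec out(X.size());
    for (std::size_t i = 0; i < X.size(); ++i) out[i] = dot(X[i], l);
    return out;
}

// One stage of the loop: extracts a component and deflates X and Y in place.
Component extractComponent(Mat& X, Vec& Y, double precision, int maxIter = 10000)
{
    const std::size_t n = X.size();
    assert(n > 0 && Y.size() == n);

    Vec s = Y;
    normalizeL2(s);
    for (int iter = 0; iter < maxIter; ++iter) {
        const Vec old = s;
        s = times(X, transposeTimes(X, s));
        normalizeL2(s);
        double change = 0.0;
        for (std::size_t i = 0; i < n; ++i)
            change += (old[i] - s[i]) * (old[i] - s[i]);
        if (std::sqrt(change) < precision) break;
    }

    Component c{s, transposeTimes(X, s), dot(s, Y)};

    // Deflation: X -= s lx', Y -= ly s.
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j < c.xLoading.size(); ++j)
            X[i][j] -= c.score[i] * c.xLoading[j];
        Y[i] -= c.yLoading * c.score[i];
    }
    return c;
}
```

Because s has unit norm, the deflated X and Y are both orthogonal to s, so each later stage extracts a score orthogonal to the earlier ones.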

StaticInitializer PLearn::WPLS::_static_initializer_ [static] |

Reimplemented from PLearn::PLearner.

Mat PLearn::WPLS::B [protected] |

Definition at line 65 of file WPLS.h.

Referenced by computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

int PLearn::WPLS::m [protected] |

Definition at line 66 of file WPLS.h.

Referenced by build_(), computeOutput(), declareOptions(), outputsize(), and train().

Vec PLearn::WPLS::mean_input [protected] |

Definition at line 67 of file WPLS.h.

Referenced by computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::WPLS::mean_target [protected] |

Definition at line 68 of file WPLS.h.

Referenced by computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

| string PLearn::WPLS::method |

Definition at line 84 of file WPLS.h.

Referenced by build_(), declareOptions(), and train().

| bool PLearn::WPLS::output_the_score |

Definition at line 86 of file WPLS.h.

Referenced by build_(), computeOutput(), declareOptions(), and outputsize().

| bool PLearn::WPLS::output_the_target |

Definition at line 87 of file WPLS.h.

Referenced by build_(), computeOutput(), declareOptions(), and outputsize().

int PLearn::WPLS::p [protected] |

Definition at line 69 of file WPLS.h.

Referenced by build_(), computeOutput(), declareOptions(), and train().

Mat PLearn::WPLS::P [protected] |

Definition at line 74 of file WPLS.h.

Referenced by declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

| string PLearn::WPLS::parent_filename |

Definition at line 88 of file WPLS.h.

Referenced by declareOptions(), and train().

| int PLearn::WPLS::parent_sub |

Definition at line 89 of file WPLS.h.

Referenced by declareOptions(), and train().

| real PLearn::WPLS::precision |

Definition at line 85 of file WPLS.h.

Referenced by build_(), declareOptions(), and train().

Mat PLearn::WPLS::Q [protected] |

Definition at line 75 of file WPLS.h.

Referenced by declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::WPLS::stddev_input [protected] |

Definition at line 70 of file WPLS.h.

Referenced by computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::WPLS::stddev_target [protected] |

Definition at line 71 of file WPLS.h.

Referenced by computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

int PLearn::WPLS::w [protected] |

Mat PLearn::WPLS::W [protected] |

Definition at line 73 of file WPLS.h.

Referenced by computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().
