
PLearn 0.1


Multi-layer neural network trained with an efficient Natural Gradient optimization.

`#include <NatGradSMPNNet.h>`

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | `NatGradSMPNNet ()` | |
| `virtual` | `~NatGradSMPNNet ()` | Destructor (to free shared memory). |
| `virtual int` | `outputsize () const` | Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). |
| `virtual void` | `forget ()` | (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). |
| `virtual void` | `train ()` | The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. |
| `virtual void` | `computeOutput (const Vec &input, Vec &output) const` | Computes the output from the input. |
| `virtual void` | `computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const` | Computes the costs from already computed output. |
| `virtual TVec< std::string >` | `getTestCostNames () const` | Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). |
| `virtual TVec< std::string >` | `getTrainCostNames () const` | Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. |
| `virtual string` | `classname () const` | |
| `virtual OptionList &` | `getOptionList () const` | |
| `virtual OptionMap &` | `getOptionMap () const` | |
| `virtual RemoteMethodMap &` | `getRemoteMethodMap () const` | |
| `virtual NatGradSMPNNet *` | `deepCopy (CopiesMap &copies) const` | |
| `virtual void` | `build ()` | Finish building the object; just call inherited::build followed by build_(). |
| `virtual void` | `makeDeepCopyFromShallowCopy (CopiesMap &copies)` | Transforms a shallow copy into a deep copy. |

Static Public Member Functions

| Type | Member | Description |
|---|---|---|
| `static string` | `_classname_ ()` | |
| `static OptionList &` | `_getOptionList_ ()` | |
| `static RemoteMethodMap &` | `_getRemoteMethodMap_ ()` | |
| `static Object *` | `_new_instance_for_typemap_ ()` | |
| `static bool` | `_isa_ (const Object *o)` | |
| `static void` | `_static_initialize_ ()` | |
| `static const PPath &` | `declaringFile ()` | |

Public Attributes

| Type | Member | Description |
|---|---|---|
| `bool` | `delayed_update` | |
| `bool` | `wait_for_final_update` | |
| `bool` | `synchronize_update` | |
| `int` | `noutputs` | |
| `TVec< int >` | `hidden_layer_sizes` | Sizes of the hidden layers, provided by the user. |
| `TVec< Mat >` | `layer_params` | layer_params[i] is a matrix of dimension layer_sizes[i+1] x (layer_sizes[i]+1) containing the neuron biases in its first column. |
| `TVec< Mat >` | `layer_mparams` | Mean layer_params, averaged over past updates (moving average). |
| `real` | `params_averaging_coeff` | `mparams <-- (1-params_averaging_coeff)*mparams + params_averaging_coeff*params` |
| `int` | `params_averaging_freq` | How often (in terms of minibatches, i.e. weight updates) the moving average above is performed. |
| `real` | `init_lrate` | Initial learning rate. |
| `real` | `lrate_decay` | Learning rate decay factor. |
| `real` | `output_layer_L1_penalty_factor` | L1 penalty applied to the output layer's parameters. |
| `real` | `output_layer_lrate_scale` | Scaling factor of the learning rate for the output layer. |
| `int` | `minibatch_size` | Number of examples between two parameter updates. |
| `PP< GradientCorrector >` | `neurons_natgrad_template` | Natural gradient estimator for neurons (if 0, the gradient on neurons is not corrected). |
| `TVec< PP< GradientCorrector > >` | `neurons_natgrad_per_layer` | |
| `PP< GradientCorrector >` | `params_natgrad_template` | Natural gradient estimator for the parameters within each neuron (if 0, the gradient on each neuron's weights is not corrected). |
| `PP< GradientCorrector >` | `params_natgrad_per_input_template` | Natural gradient estimator solely for the parameters of the first layer. |
| `TVec< PP< GradientCorrector > >` | `params_natgrad_per_group` | The two templates above are used to specify all the elements of this vector. |
| `PP< GradientCorrector >` | `full_natgrad` | Optionally, if neurons_natgrad_template==0 and params_natgrad_template==0, one can have regular stochastic gradient descent, or full-covariance natural gradient using this estimator. |
| `string` | `output_type` | Type of output cost: "cross_entropy" for binary classification, "NLL" for classification problems, "MSE" for regression. |
| `real` | `input_size_lrate_normalization_power` | 0 does not scale the learning rate; 1 scales it by 1 / (number of inputs of the neuron); 2 scales it by 1 / sqrt(number of inputs of the neuron); etc. |
| `real` | `lrate_scale_factor` | Scale the learning rate of each neuron by a random factor: choose an integer n uniformly between lrate_scale_factor_min_power and lrate_scale_factor_max_power inclusively, then scale the learning rate by lrate_scale_factor^n. |
| `int` | `lrate_scale_factor_max_power` | |
| `int` | `lrate_scale_factor_min_power` | |
| `bool` | `self_adjusted_scaling_and_bias` | Let each neuron self-adjust its bias and the scaling factor of its activations so that the mean and standard deviation of the activations reach target_mean_activation and target_stdev_activation. |
| `real` | `target_mean_activation` | |
| `real` | `target_stdev_activation` | |
| `real` | `activation_statistics_moving_average_coefficient` | |
| `int` | `verbosity` | Level of verbosity. |
| `bool` | `use_pvgrad` | Use Pascal Vincent's gradient technique (see pvGradUpdate()); currently requires minibatch_size=1. |
| `real` | `pv_initial_stepsize` | Initial size of steps in parameter space. |
| `real` | `pv_acceleration` | Coefficient by which to multiply/divide the step sizes. |
| `int` | `pv_min_samples` | Minimum number of samples used by PV's gradient technique to estimate confidence. |
| `real` | `pv_required_confidence` | Minimum required confidence (probability of being positive or negative) for taking a step. |
| `bool` | `pv_random_sample_step` | If this is set to true, then we will randomly choose the step sign for. |
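The learning-rate and averaging attributes described above reduce to a few lines of arithmetic. The following is a minimal C++ sketch of that arithmetic, not PLearn's own code. The helper names `inputSizeScale`, `randomLrateScale` and `averageParams` are hypothetical. Reading powers other than 0, 1 and 2 as n^(-1/p) is an assumption extrapolated from the documented cases.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper: learning-rate multiplier implied by
// input_size_lrate_normalization_power for a neuron with n_inputs inputs.
// Documented cases: 0 -> 1, 1 -> 1/n, 2 -> 1/sqrt(n).
// ASSUMPTION: other positive powers p are read as n^(-1/p). The -1 case
// (1 / ||x||^2 of the 1-extended input) depends on the input and is omitted.
double inputSizeScale(double power, int n_inputs) {
    assert(n_inputs > 0 && power >= 0.0);
    if (power == 0.0) return 1.0;
    return std::pow(static_cast<double>(n_inputs), -1.0 / power);
}

// Hypothetical helper: random per-neuron factor lrate_scale_factor^n,
// with n drawn uniformly in [min_power, max_power] (both inclusive).
double randomLrateScale(double lrate_scale_factor, int min_power,
                        int max_power, std::mt19937& rng) {
    assert(min_power <= max_power);
    std::uniform_int_distribution<int> pick(min_power, max_power);
    return std::pow(lrate_scale_factor, pick(rng));
}

// Hypothetical helper: one moving-average step
// mparams <-- (1 - coeff) * mparams + coeff * params.
void averageParams(std::vector<double>& mparams,
                   const std::vector<double>& params, double coeff) {
    assert(mparams.size() == params.size());
    for (std::size_t i = 0; i < params.size(); ++i)
        mparams[i] = (1.0 - coeff) * mparams[i] + coeff * params[i];
}
```

In the learner, the averaging step would run once every params_averaging_freq minibatches. With the constructor's default params_averaging_coeff of 1.0, that step reduces to copying params into mparams.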

Static Public Attributes

| Type | Member | Description |
|---|---|---|
| `static StaticInitializer` | `_static_initializer_` | |

Protected Member Functions | |

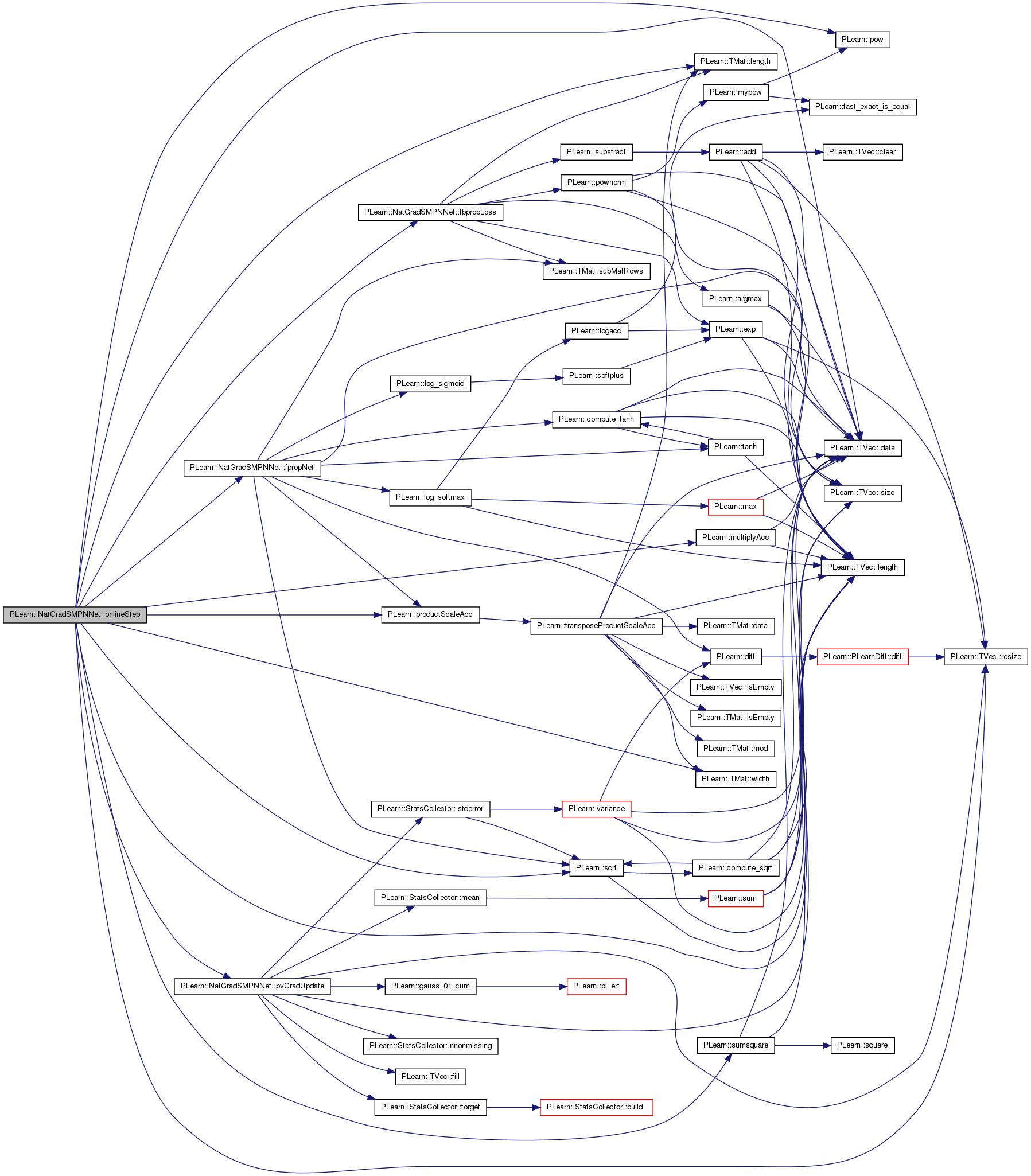

| void | onlineStep (int t, const Mat &targets, Mat &train_costs, Vec example_weights) |

| one minibatch training step | |

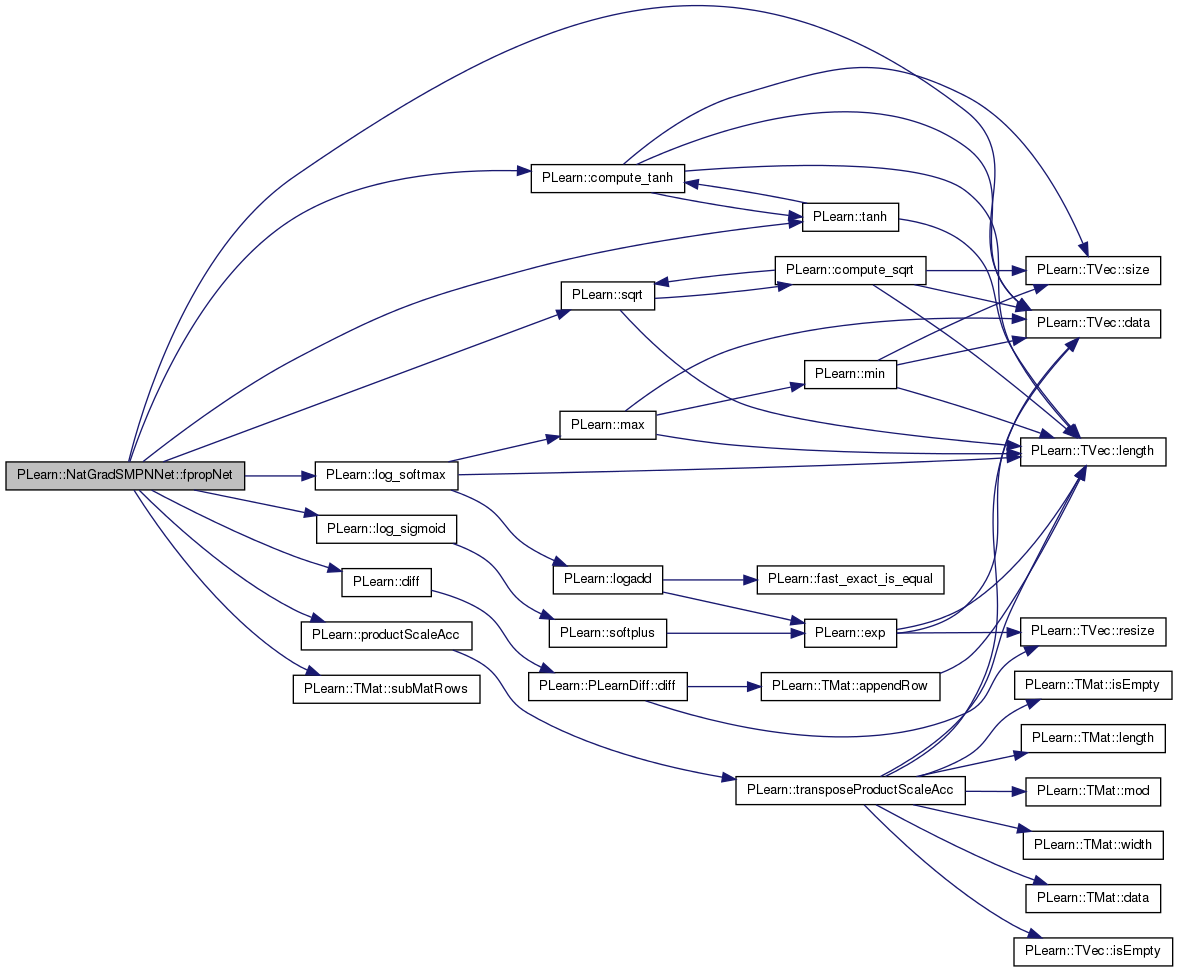

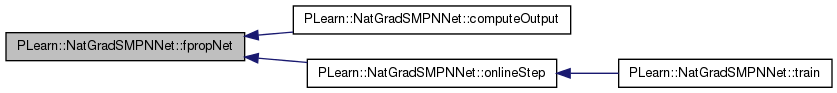

| void | fpropNet (int n_examples, bool during_training) const |

| compute a minibatch of size n_examples network top-layer output given layer 0 output (= network input) (note that log-probabilities are computed for classification tasks, output_type=NLL) | |

| void | fbpropLoss (const Mat &output, const Mat &target, const Vec &example_weights, Mat &train_costs) const |

| compute train costs given the network top-layer output and write into neuron_gradients_per_layer[n_layers-2], gradient on pre-non-linearity activation | |

| void | pvGradUpdate () |

| gradient computation and weight update in Pascal Vincent's gradient technique | |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| `static void` | `declareOptions (OptionList &ol)` | Declares the class options. |
| `static void` | `declareMethods (RemoteMethodMap &rmm)` | Declares the class methods. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| `PP< VecStatsCollector >` | `pv_gradstats` | Accumulated statistics of gradients on each parameter. |
| `Vec` | `pv_stepsizes` | The step size (absolute value) to be taken for each parameter. |
| `TVec< bool >` | `pv_stepsigns` | Indicates whether the previous step was positive (true) or negative (false). |
| `int` | `n_layers` | Number of layers of units, including the input and output layers (3 for a neural net with one hidden layer); there are n_layers-1 layers of weights. |
| `TVec< int >` | `layer_sizes` | Layer sizes (derived from hidden_layer_sizes, inputsize_ and noutputs). |
| `TVec< Mat >` | `biases` | Sub-matrix views into layer_params (the bias column of each layer). |
| `TVec< Mat >` | `weights` | |
| `TVec< Mat >` | `mweights` | |
| `TVec< Vec >` | `activations_scaling` | |
| `TVec< Vec >` | `mean_activations` | |
| `TVec< Vec >` | `var_activations` | |
| `real` | `cumulative_training_time` | |
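The pv_* options and the pv_gradstats, pv_stepsizes and pv_stepsigns attributes describe a per-parameter, sign-based update. The sketch below is a hypothetical reading of those descriptions, not the actual pvGradUpdate(). It rests on three assumptions. First, the confidence that the mean gradient is positive comes from a normal approximation. Second, the step size is multiplied by pv_acceleration when a step keeps the previous sign and divided when the sign flips. Third, the gradient statistics restart after each step. `PvState`, `probPositive` and `pvStep` are invented names.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical per-parameter state, loosely mirroring pv_gradstats
// (sample statistics), pv_stepsizes and pv_stepsigns.
struct PvState {
    int n = 0;                   // gradient samples since the last step
    double sum = 0.0;            // sum of those samples
    double sumsq = 0.0;          // sum of their squares
    double stepsize;             // |step|, starts at pv_initial_stepsize
    bool last_positive = false;  // sign of the previous step
    bool has_stepped = false;    // no previous sign before the first step
    explicit PvState(double initial_stepsize) : stepsize(initial_stepsize) {}
};

// ASSUMPTION: normal approximation of P(mean gradient > 0); needs n >= 2.
double probPositive(const PvState& s) {
    double mean = s.sum / s.n;
    double var = (s.sumsq - s.n * mean * mean) / (s.n - 1);  // sample variance
    if (var <= 0.0) return mean > 0.0 ? 1.0 : (mean < 0.0 ? 0.0 : 0.5);
    double z = mean / std::sqrt(var / s.n);
    return 0.5 * std::erfc(-z / std::sqrt(2.0));  // standard normal CDF at z
}

// Records one gradient sample and moves `param` by +/- stepsize only once the
// sign of the mean gradient is known with at least `required_confidence`.
// Returns true if a step was taken.
bool pvStep(double& param, double grad, PvState& s, double acceleration,
            int min_samples, double required_confidence) {
    assert(min_samples >= 2 && acceleration > 0.0);
    s.n += 1;
    s.sum += grad;
    s.sumsq += grad * grad;
    if (s.n < min_samples) return false;

    double p_pos = probPositive(s);
    bool step_positive;
    if (p_pos >= required_confidence) step_positive = false;            // gradient > 0: decrease
    else if (1.0 - p_pos >= required_confidence) step_positive = true;  // gradient < 0: increase
    else return false;  // sign still uncertain: keep collecting samples

    // ASSUMPTION: accelerate when the sign repeats, decelerate when it flips.
    if (s.has_stepped) {
        if (step_positive == s.last_positive) s.stepsize *= acceleration;
        else s.stepsize /= acceleration;
    }
    param += step_positive ? s.stepsize : -s.stepsize;
    s.last_positive = step_positive;
    s.has_stepped = true;
    s.n = 0;  // ASSUMPTION: statistics restart after each step
    s.sum = 0.0;
    s.sumsq = 0.0;
    return true;
}
```

The test below uses the constructor defaults pv_initial_stepsize=1e-6, pv_acceleration=2, pv_min_samples=2 and pv_required_confidence=0.80.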

Private Types

| Type | Member | Description |
|---|---|---|
| `typedef PLearner` | `inherited` | |

Private Member Functions | |

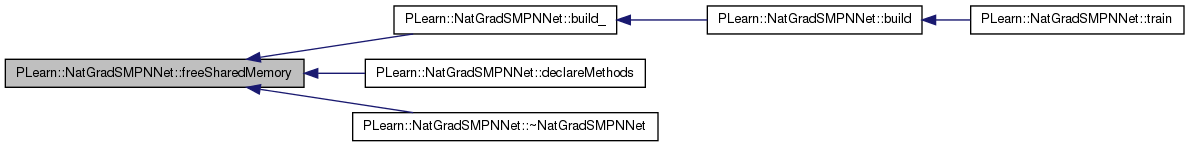

| void | build_ () |

| This does the actual building. | |

| void | freeSharedMemory () |

| Free shared memory that may still be allocated. | |

Private Attributes

| Type | Member | Description |
|---|---|---|
| `Vec` | `all_params` | |
| `Vec` | `all_params_delta` | |
| `Vec` | `all_params_gradient` | |
| `Vec` | `all_mparams` | |
| `TVec< Mat >` | `layer_params_gradient` | |
| `TVec< Vec >` | `layer_params_delta` | |
| `TVec< Vec >` | `group_params` | |
| `TVec< Vec >` | `group_params_delta` | |
| `TVec< Vec >` | `group_params_gradient` | |
| `Mat` | `neuron_gradients` | |
| `TVec< Mat >` | `neuron_gradients_per_layer` | |
| `TVec< Mat >` | `neuron_outputs_per_layer` | |
| `TVec< Mat >` | `neuron_extended_outputs_per_layer` | |
| `Mat` | `targets` | |
| `Vec` | `example_weights` | |
| `Mat` | `train_costs` | |
| `real *` | `params_ptr` | |
| `int` | `params_id` | |
| `int *` | `params_int_ptr` | |
| `int` | `params_int_id` | |
| `int` | `nsteps` | Number of samples seen since the last global parameter update. |
| `int` | `semaphore_id` | Semaphore used to control which CPU must perform an update. |
| `Vec` | `params_update` | Used to store the cumulative updates to the parameters, when the 'delayed_update' option is set. |
| `TVec< Mat >` | `layer_params_update` | Used to store updates to the parameters of each layer (points into the 'params_update' vector). |
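In the 'delayed_update' mode, the private buffers above (params_update, nsteps, semaphore_id) let each CPU accumulate its own updates and write them to the shared parameters only when it is its turn. The sketch below is a rough thread-based analogue: it accumulates updates locally and flushes them under a mutex. The class itself uses separate processes, System V shared memory and a semaphore. `SharedParams`, `worker` and `runWorkers` are hypothetical names, and fixed per-step deltas stand in for real gradient steps so that the result is deterministic.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <mutex>
#include <thread>
#include <vector>

// Parameters shared by all workers, plus the lock that decides whose turn it is.
struct SharedParams {
    std::vector<double> values;
    std::mutex lock;
};

// One worker: accumulate each minibatch's update in a private buffer (the role
// params_update plays) and add it to the shared parameters every
// `flush_every` minibatches and at the end, while holding the lock.
void worker(SharedParams& shared, const std::vector<double>& per_step_delta,
            int n_minibatches, int flush_every) {
    assert(flush_every > 0 && per_step_delta.size() == shared.values.size());
    std::vector<double> pending(per_step_delta.size(), 0.0);
    for (int t = 1; t <= n_minibatches; ++t) {
        for (std::size_t i = 0; i < pending.size(); ++i)
            pending[i] += per_step_delta[i];  // stand-in for a gradient step
        if (t % flush_every == 0 || t == n_minibatches) {
            std::lock_guard<std::mutex> guard(shared.lock);
            for (std::size_t i = 0; i < pending.size(); ++i)
                shared.values[i] += pending[i];
            std::fill(pending.begin(), pending.end(), 0.0);
        }
    }
}

// Launches n_workers workers on the same shared parameters and waits for them.
void runWorkers(SharedParams& shared, const std::vector<double>& delta,
                int n_workers, int n_minibatches, int flush_every) {
    std::vector<std::thread> threads;
    for (int w = 0; w < n_workers; ++w)
        threads.emplace_back(worker, std::ref(shared), std::cref(delta),
                             n_minibatches, flush_every);
    for (std::thread& t : threads) t.join();
}
```

Because every flush happens under the lock, no two workers modify the shared values at the same time. This is the guarantee the 'delayed_update' option documentation describes.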

Multi-layer neural network trained with an efficient Natural Gradient optimization.

Definition at line 53 of file NatGradSMPNNet.h.

`typedef PLearner PLearn::NatGradSMPNNet::inherited` [private]

Reimplemented from PLearn::PLearner.

Definition at line 55 of file NatGradSMPNNet.h.

`PLearn::NatGradSMPNNet::NatGradSMPNNet ()`

Definition at line 74 of file NatGradSMPNNet.cc.

References PLearn::PLearner::random_gen.

```cpp
:
    delayed_update(true),
    wait_for_final_update(true),
    synchronize_update(false),
    noutputs(-1),
    params_averaging_coeff(1.0),
    params_averaging_freq(5),
    init_lrate(0.01),
    lrate_decay(0),
    output_layer_L1_penalty_factor(0.0),
    output_layer_lrate_scale(1),
    minibatch_size(1),
    output_type("NLL"),
    input_size_lrate_normalization_power(0),
    lrate_scale_factor(3),
    lrate_scale_factor_max_power(0),
    lrate_scale_factor_min_power(0),
    self_adjusted_scaling_and_bias(false),
    target_mean_activation(-4), //
    target_stdev_activation(3), // 2.5% of the time we are above 1
    verbosity(0),
    //corr_profiling_start(0),
    //corr_profiling_end(0),
    use_pvgrad(false),
    pv_initial_stepsize(1e-6),
    pv_acceleration(2),
    pv_min_samples(2),
    pv_required_confidence(0.80),
    pv_random_sample_step(false),
    pv_gradstats(new VecStatsCollector()),
    n_layers(-1),
    cumulative_training_time(0),
    params_ptr(NULL),
    params_id(-1),
    params_int_ptr(NULL),
    params_int_id(-1),
    nsteps(0),
    semaphore_id(-1)
{
    random_gen = new PRandom();
}
```

`PLearn::NatGradSMPNNet::~NatGradSMPNNet ()` [virtual]

Destructor (to free shared memory).

Definition at line 1625 of file NatGradSMPNNet.cc.

References freeSharedMemory(), PLERROR, and semaphore_id.

```cpp
{
    freeSharedMemory();
    if (semaphore_id >= 0) {
        // Remove the semaphore set created for update synchronization.
        int success = semctl(semaphore_id, 0, IPC_RMID);
        if (success < 0)
            PLERROR("In NatGradSMPNNet::~NatGradSMPNNet - Could not remove "
                    "semaphore (errno = %d)", errno);
        semaphore_id = -1;
    }
}
```
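The destructor and build_() manage System V shared memory through shmget(), shmat() and semctl(). The sketch below shows the same allocate/attach/release cycle as a small RAII class. It illustrates the pattern and is not freeSharedMemory() itself. The class name `SharedRealArray` is hypothetical, and `double` stands in for PLearn's `real`. On failure, shmat() returns `(void *) -1` rather than a null pointer, so the sketch checks for that value. `shmctl(..., IPC_RMID, ...)` only marks a segment for removal; the kernel destroys it once every process has detached.

```cpp
#include <cassert>
#include <cstddef>
#include <sys/ipc.h>
#include <sys/shm.h>

// RAII owner of a private System V shared-memory array of doubles.
class SharedRealArray {
public:
    explicit SharedRealArray(std::size_t n) : n_(n) {
        assert(n > 0);
        id_ = shmget(IPC_PRIVATE, n * sizeof(double), 0666 | IPC_CREAT);
        if (id_ == -1) return;                   // allocation failed; ok() is false
        void* p = shmat(id_, nullptr, 0);
        if (p == reinterpret_cast<void*>(-1)) {  // shmat's error value, not NULL
            release();
            return;
        }
        ptr_ = static_cast<double*>(p);
    }
    ~SharedRealArray() { release(); }
    SharedRealArray(const SharedRealArray&) = delete;
    SharedRealArray& operator=(const SharedRealArray&) = delete;

    bool ok() const { return ptr_ != nullptr; }
    double* data() { return ptr_; }
    std::size_t size() const { return n_; }

private:
    // Detach, then mark the segment for removal; the kernel destroys it once
    // no process is attached any more.
    void release() {
        if (ptr_ != nullptr) { shmdt(ptr_); ptr_ = nullptr; }
        if (id_ != -1) { shmctl(id_, IPC_RMID, nullptr); id_ = -1; }
    }
    std::size_t n_;
    int id_ = -1;
    double* ptr_ = nullptr;
};
```

Tying release to object lifetime means the segment cannot outlive its owner even when an error is thrown mid-build. Leaked System V segments otherwise persist until the system reboots or someone removes them explicitly.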

`string PLearn::NatGradSMPNNet::_classname_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 72 of file NatGradSMPNNet.cc.

`OptionList & PLearn::NatGradSMPNNet::_getOptionList_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 72 of file NatGradSMPNNet.cc.

`RemoteMethodMap & PLearn::NatGradSMPNNet::_getRemoteMethodMap_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 72 of file NatGradSMPNNet.cc.


`Object * PLearn::NatGradSMPNNet::_new_instance_for_typemap_ ()` [static]

Reimplemented from PLearn::Object.

Definition at line 72 of file NatGradSMPNNet.cc.

`void PLearn::NatGradSMPNNet::_static_initialize_ ()` [static]

Reimplemented from PLearn::PLearner.

Definition at line 72 of file NatGradSMPNNet.cc.

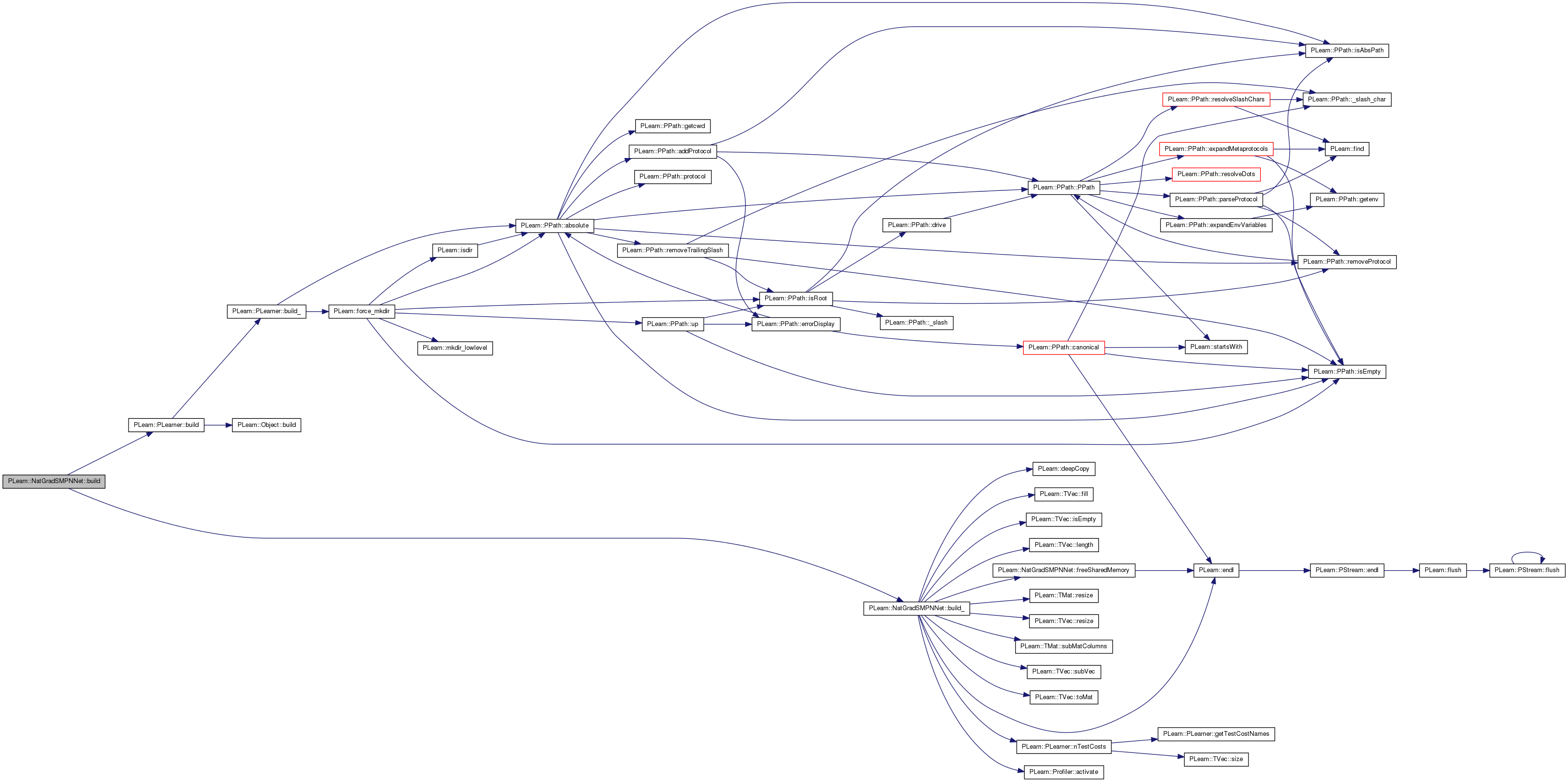

`void PLearn::NatGradSMPNNet::build ()` [virtual]

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 600 of file NatGradSMPNNet.cc.

References PLearn::PLearner::build(), and build_().

Referenced by train().

```cpp
{
    inherited::build();
    build_();
}
```

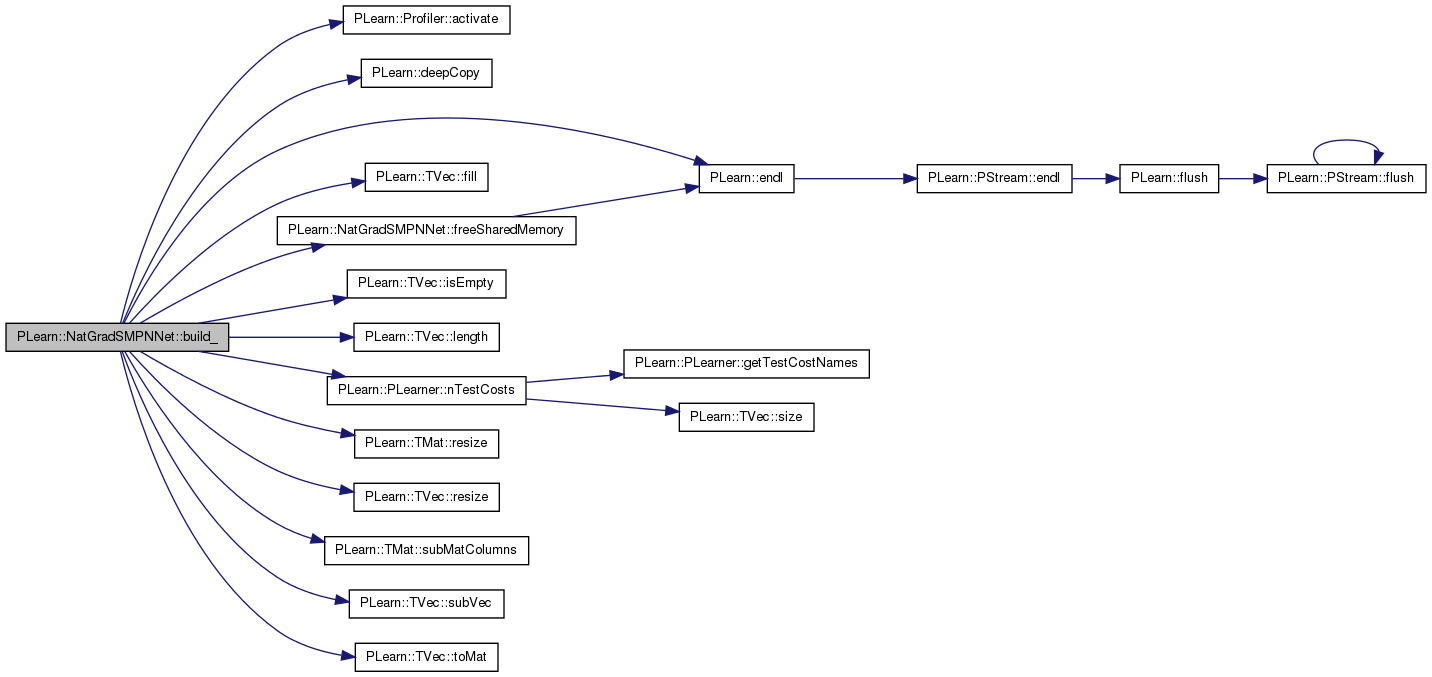

`void PLearn::NatGradSMPNNet::build_ ()` [private]

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 385 of file NatGradSMPNNet.cc.

References PLearn::Profiler::activate(), activations_scaling, all_mparams, all_params, all_params_delta, all_params_gradient, biases, PLearn::deepCopy(), delayed_update, PLearn::endl(), example_weights, PLearn::TVec< T >::fill(), freeSharedMemory(), group_params, group_params_delta, group_params_gradient, hidden_layer_sizes, i, PLearn::PLearner::inputsize_, PLearn::TVec< T >::isEmpty(), j, layer_mparams, layer_params, layer_params_delta, layer_params_gradient, layer_params_update, layer_sizes, PLearn::TVec< T >::length(), mean_activations, minibatch_size, mweights, n_layers, neuron_extended_outputs_per_layer, neuron_gradients, neuron_gradients_per_layer, neuron_outputs_per_layer, neurons_natgrad_per_layer, neurons_natgrad_template, noutputs, PLearn::PLearner::nTestCosts(), output_layer_L1_penalty_factor, output_type, params_id, params_int_id, params_int_ptr, params_natgrad_per_group, params_natgrad_per_input_template, params_natgrad_template, params_ptr, params_update, PLASSERT_MSG, PLCHECK, PLCHECK_MSG, PLERROR, PLWARNING, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::TMat< T >::subMatColumns(), PLearn::TVec< T >::subVec(), synchronize_update, PLearn::PLearner::targetsize_, PLearn::TVec< T >::toMat(), train_costs, PLearn::PLearner::train_set, use_pvgrad, var_activations, and weights.

Referenced by build().

```cpp
{
    if (!train_set)
        return;

    inputsize_ = train_set->inputsize();

    if (output_type=="MSE")
    {
        if (noutputs<0) noutputs = targetsize_;
        else PLASSERT_MSG(noutputs==targetsize_,"NatGradSMPNNet: noutputs should be -1 or match data's targetsize");
    }
    else if (output_type=="NLL")
    {
        if (noutputs<0)
            PLERROR("NatGradSMPNNet: if output_type=NLL (classification), one \n"
                    "should provide noutputs = number of classes, or possibly\n"
                    "1 when 2 classes\n");
    }
    else if (output_type=="cross_entropy")
    {
        if(noutputs!=1)
            PLERROR("NatGradSMPNNet: if output_type=cross_entropy, then \n"
                    "noutputs should be 1.\n");
    }
    else PLERROR("NatGradSMPNNet: output_type should be cross_entropy, NLL or MSE\n");

    if( output_layer_L1_penalty_factor < 0. )
        PLWARNING("NatGradSMPNNet::build_ - output_layer_L1_penalty_factor is negative!\n");

    if(use_pvgrad && minibatch_size!=1)
        PLERROR("PV's gradient technique (triggered by use_pvgrad): support for minibatch not yet implemented (must have minibatch_size=1)");

    while (hidden_layer_sizes.length()>0 && hidden_layer_sizes[hidden_layer_sizes.length()-1]==0)
        hidden_layer_sizes.resize(hidden_layer_sizes.length()-1);
    n_layers = hidden_layer_sizes.length()+2;
    layer_sizes.resize(n_layers);
    layer_sizes.subVec(1,n_layers-2) << hidden_layer_sizes;
    layer_sizes[0]=inputsize_;
    layer_sizes[n_layers-1]=noutputs;

    if (!layer_params.isEmpty())
        PLERROR("In NatGradSMPNNet::build_ - Currently, one can only build "
                "a network from scratch");

    layer_params.resize(n_layers-1);
    layer_mparams.resize(n_layers-1);
    layer_params_delta.resize(n_layers-1);
    layer_params_gradient.resize(n_layers-1);
    layer_params_update.resize(n_layers - 1);
    biases.resize(n_layers-1);
    activations_scaling.resize(n_layers-1);
    weights.resize(n_layers-1);
    mweights.resize(n_layers-1);
    mean_activations.resize(n_layers-1);
    var_activations.resize(n_layers-1);

    int n_neurons=0;
    int n_params=0;
    for (int i=0;i<n_layers-1;i++)
    {
        n_neurons+=layer_sizes[i+1];
        n_params+=layer_sizes[i+1]*(1+layer_sizes[i]);
    }

    // Allocate shared memory for parameters.
    freeSharedMemory(); // First deallocate memory if needed.
    long total_memory_needed = long(n_params) * sizeof(real);
    params_id = shmget(IPC_PRIVATE, total_memory_needed, 0666 | IPC_CREAT);
    DBG_MODULE_LOG << "params_id = " << params_id << endl;
    if (params_id == -1) {
        PLERROR("In NatGradSMPNNet::build_ - Error while allocating shared "
                "memory (errno = %d)", errno);
    }
    params_ptr = (real*) shmat(params_id, 0, 0);
    // shmat() returns (void*) -1, not NULL, on failure.
    PLCHECK( (void*) params_ptr != (void*) -1 );
    long total_int_memory_needed = 1 * sizeof(int);
    params_int_id = shmget(IPC_PRIVATE, total_int_memory_needed, 0666 | IPC_CREAT);
    DBG_MODULE_LOG << "params_int_id = " << params_int_id << endl;
    PLCHECK( params_int_id != -1 );
    params_int_ptr = (int*) shmat(params_int_id, 0, 0);
    PLCHECK( (void*) params_int_ptr != (void*) -1 );
    // We should have copied data from 'all_params' first if there were some!
    PLCHECK_MSG( all_params.isEmpty(), "Multiple builds not implemented yet" );
    all_params = Vec(n_params, params_ptr);

    all_params.resize(n_params);
    all_mparams.resize(n_params);
    all_params_gradient.resize(n_params);
    all_params_delta.resize(n_params);
    params_update.resize(n_params);
    params_update.fill(0);

    // depending on how parameters are grouped on the first layer
    int n_groups = params_natgrad_per_input_template ? (n_neurons-layer_sizes[1]+layer_sizes[0]+1) : n_neurons;
    group_params.resize(n_groups);
    group_params_delta.resize(n_groups);
    group_params_gradient.resize(n_groups);

    for (int i=0,k=0,p=0;i<n_layers-1;i++)
    {
        int np=layer_sizes[i+1]*(1+layer_sizes[i]);
        // First layer has natural gradient applied on groups of parameters
        // linked to the same input -> parameters must be stored TRANSPOSED!
        if( i==0 && params_natgrad_per_input_template ) {
            PLERROR("This should not be executed");
            layer_params[i]=all_params.subVec(p,np).toMat(layer_sizes[i]+1,layer_sizes[i+1]);
            layer_params_update[i] = params_update.subVec(p,np).toMat(
                layer_sizes[i] + 1, layer_sizes[i+1]);
            layer_mparams[i]=all_mparams.subVec(p,np).toMat(layer_sizes[i]+1,layer_sizes[i+1]);
            biases[i]=layer_params[i].subMatRows(0,1);
            weights[i]=layer_params[i].subMatRows(1,layer_sizes[i]); //weights[0] from layer 0 to layer 1
            mweights[i]=layer_mparams[i].subMatRows(1,layer_sizes[i]); //weights[0] from layer 0 to layer 1
            layer_params_gradient[i]=all_params_gradient.subVec(p,np).toMat(layer_sizes[i]+1,layer_sizes[i+1]);
            layer_params_delta[i]=all_params_delta.subVec(p,np);
            for (int j=0;j<layer_sizes[i]+1;j++,k++) // include a bias input
            {
                group_params[k]=all_params.subVec(p,layer_sizes[i+1]);
                group_params_delta[k]=all_params_delta.subVec(p,layer_sizes[i+1]);
                group_params_gradient[k]=all_params_gradient.subVec(p,layer_sizes[i+1]);
                p+=layer_sizes[i+1];
            }
        // Usual parameter storage
        } else {
            layer_params[i]=all_params.subVec(p,np).toMat(layer_sizes[i+1],layer_sizes[i]+1);
            layer_params_update[i] = params_update.subVec(p, np).toMat(
                layer_sizes[i+1], layer_sizes[i] + 1);
            layer_mparams[i]=all_mparams.subVec(p,np).toMat(layer_sizes[i+1],layer_sizes[i]+1);
            biases[i]=layer_params[i].subMatColumns(0,1);
            weights[i]=layer_params[i].subMatColumns(1,layer_sizes[i]); // weights[0] from layer 0 to layer 1
            mweights[i]=layer_mparams[i].subMatColumns(1,layer_sizes[i]); // weights[0] from layer 0 to layer 1
            layer_params_gradient[i]=all_params_gradient.subVec(p,np).toMat(layer_sizes[i+1],layer_sizes[i]+1);
            layer_params_delta[i]=all_params_delta.subVec(p,np);
            for (int j=0;j<layer_sizes[i+1];j++,k++)
            {
                group_params[k]=all_params.subVec(p,1+layer_sizes[i]);
                group_params_delta[k]=all_params_delta.subVec(p,1+layer_sizes[i]);
                group_params_gradient[k]=all_params_gradient.subVec(p,1+layer_sizes[i]);
                p+=1+layer_sizes[i];
            }
        }
        activations_scaling[i].resize(layer_sizes[i+1]);
        mean_activations[i].resize(layer_sizes[i+1]);
        var_activations[i].resize(layer_sizes[i+1]);
    }

    if (params_natgrad_template || params_natgrad_per_input_template)
    {
        int n_input_groups=0;
        int n_neuron_groups=0;
        if(params_natgrad_template)
            n_neuron_groups = n_neurons;
        if( params_natgrad_per_input_template ) {
            n_input_groups = layer_sizes[0]+1;
            if(params_natgrad_template) // override first layer groups if present
                n_neuron_groups -= layer_sizes[1];
        }
        params_natgrad_per_group.resize(n_input_groups+n_neuron_groups);
        for (int i=0;i<n_input_groups;i++)
            params_natgrad_per_group[i] = PLearn::deepCopy(params_natgrad_per_input_template);
        for (int i=n_input_groups; i<n_input_groups+n_neuron_groups;i++)
            params_natgrad_per_group[i] = PLearn::deepCopy(params_natgrad_template);
    }
    if (neurons_natgrad_template && neurons_natgrad_per_layer.length()==0)
    {
        neurons_natgrad_per_layer.resize(n_layers); // 0 not used
        for (int i=1;i<n_layers;i++) // no need for correcting input layer
            neurons_natgrad_per_layer[i] = PLearn::deepCopy(neurons_natgrad_template);
    }

    neuron_gradients.resize(minibatch_size,n_neurons);
    neuron_outputs_per_layer.resize(n_layers); // layer 0 = input, layer n_layers-1 = output
    neuron_extended_outputs_per_layer.resize(n_layers); // layer 0 = input, layer n_layers-1 = output
    neuron_gradients_per_layer.resize(n_layers); // layer 0 not used
    neuron_extended_outputs_per_layer[0].resize(minibatch_size,1+layer_sizes[0]);
    neuron_outputs_per_layer[0]=neuron_extended_outputs_per_layer[0].subMatColumns(1,layer_sizes[0]);
    neuron_extended_outputs_per_layer[0].column(0).fill(1.0); // for biases
    for (int i=1,k=0;i<n_layers;k+=layer_sizes[i],i++)
    {
        neuron_extended_outputs_per_layer[i].resize(minibatch_size,1+layer_sizes[i]);
        neuron_outputs_per_layer[i]=neuron_extended_outputs_per_layer[i].subMatColumns(1,layer_sizes[i]);
        neuron_extended_outputs_per_layer[i].column(0).fill(1.0); // for biases
        neuron_gradients_per_layer[i] =
            neuron_gradients.subMatColumns(k,layer_sizes[i]);
    }
    example_weights.resize(minibatch_size);
    train_costs.resize(minibatch_size, nTestCosts());

    Profiler::activate();

    // Gradient correlation profiling
    //if( corr_profiling_start != corr_profiling_end ) {
    //    PLASSERT( (0<=corr_profiling_start) && (corr_profiling_start<corr_profiling_end) );
    //    cout << "n_params " << n_params << endl;
    //    // Build the names.
    //    stringstream ss_suffix;
    //    for (int i=0;i<n_layers;i++) {
    //        ss_suffix << "_" << layer_sizes[i];
    //    }
    //    ss_suffix << "_stages_" << corr_profiling_start << "_" << corr_profiling_end;
    //    string str_gc_name = "gCcorr" + ss_suffix.str();
    //    string str_ngc_name;
    //    if( full_natgrad ) {
    //        str_ngc_name = "ngCcorr_full" + ss_suffix.str();
    //    } else if (params_natgrad_template) {
    //        str_ngc_name = "ngCcorr_params" + ss_suffix.str();
    //    }
    //    // Build the profilers.
    //    g_corrprof = new CorrelationProfiler( n_params, str_gc_name);
    //    g_corrprof->build();
    //    ng_corrprof = new CorrelationProfiler( n_params, str_ngc_name);
    //    ng_corrprof->build();
    //}

    if (synchronize_update && !delayed_update)
        PLERROR("NatGradSMPNNet::build_ - 'synchronize_update' cannot be used "
                "when 'delayed_update' is false");
}
```
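build_() packs every layer's parameters into the single vector all_params. Layer i occupies a contiguous block of layer_sizes[i+1] * (layer_sizes[i]+1) values, with one row per neuron and the bias first. The usual storage branch slices group_params the same way, one group of 1+layer_sizes[i] values per neuron. The sketch below reproduces that bookkeeping in plain C++ so the offsets can be checked by hand. `Layout`, `computeLayout` and `paramIndex` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

struct Layout {
    std::vector<int> layer_sizes;   // input, hidden..., output
    std::vector<int> layer_offset;  // start of layer i's block in all_params
    int n_neurons = 0;
    int n_params = 0;
};

// Mirrors the size bookkeeping of build_(): trailing zero-sized hidden layers
// are dropped, then each weight layer i contributes
// layer_sizes[i+1] * (1 + layer_sizes[i]) parameters.
Layout computeLayout(int inputsize, std::vector<int> hidden, int noutputs) {
    while (!hidden.empty() && hidden.back() == 0)
        hidden.pop_back();
    Layout L;
    L.layer_sizes.push_back(inputsize);
    L.layer_sizes.insert(L.layer_sizes.end(), hidden.begin(), hidden.end());
    L.layer_sizes.push_back(noutputs);
    int n_layers = static_cast<int>(L.layer_sizes.size());  // hidden + 2
    for (int i = 0; i < n_layers - 1; ++i) {
        L.layer_offset.push_back(L.n_params);
        L.n_neurons += L.layer_sizes[i + 1];
        L.n_params += L.layer_sizes[i + 1] * (1 + L.layer_sizes[i]);
    }
    return L;
}

// Position in all_params of the parameter linking input j of layer i to
// neuron r of layer i+1; j == -1 selects the neuron's bias (column 0).
// Rows are contiguous, matching the per-neuron group_params slices.
int paramIndex(const Layout& L, int i, int r, int j) {
    assert(i >= 0 && i + 1 < static_cast<int>(L.layer_sizes.size()));
    assert(r >= 0 && r < L.layer_sizes[i + 1]);
    assert(j >= -1 && j < L.layer_sizes[i]);
    return L.layer_offset[i] + r * (L.layer_sizes[i] + 1) + (j + 1);
}
```

For example, 10 inputs, hidden_layer_sizes = [5, 0] and 3 outputs give layer_sizes = [10, 5, 3] and 5*11 + 3*6 = 73 parameters.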

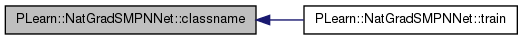

`string PLearn::NatGradSMPNNet::classname () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 72 of file NatGradSMPNNet.cc.

Referenced by train().

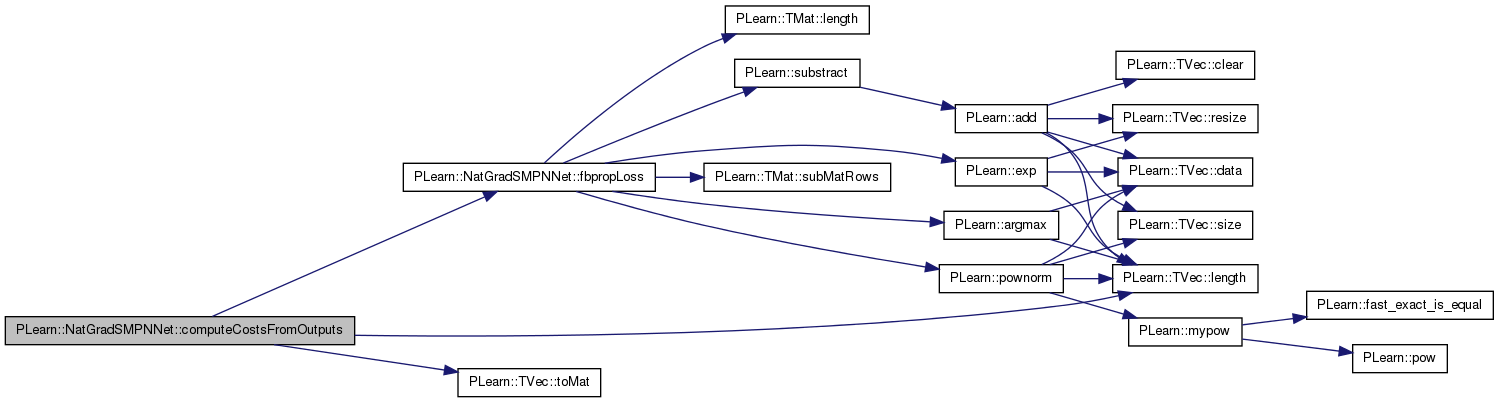

`void PLearn::NatGradSMPNNet::computeCostsFromOutputs (const Vec & input, const Vec & output, const Vec & target, Vec & costs) const` [virtual]

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 1582 of file NatGradSMPNNet.cc.

References fbpropLoss(), PLearn::TVec< T >::length(), PLearn::TVec< T >::toMat(), and w.

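```cpp
{
    Vec w(1);
    w[0]=1;
    Mat outputM = output.toMat(1,output.length());
    // View the target with its own length (a single class index for NLL),
    // not with output.length().
    Mat targetM = target.toMat(1,target.length());
    Mat costsM = costs.toMat(1,costs.length());
    fbpropLoss(outputM,targetM,w,costsM);
}
```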
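computeCostsFromOutputs() delegates to fbpropLoss(), whose code is not shown here. The sketch below computes the two simplest costs, each under a stated assumption. For output_type=NLL, it treats the output as log-probabilities, as fpropNet() is documented to produce. It also assumes a softmax output layer when it computes the gradient on the pre-non-linearity activations, which is softmax minus the one-hot target. For MSE, it assumes a plain sum of squared errors. `nllCost` and `mseCost` are hypothetical names, and example weights are ignored.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// NLL cost for one example whose output `logp` holds log-probabilities.
// ASSUMPTION: softmax output layer, so the gradient of the cost with respect
// to the pre-softmax activations is exp(logp) - one_hot(target).
double nllCost(const std::vector<double>& logp, int target,
               std::vector<double>& grad_pre_activation) {
    assert(target >= 0 && static_cast<std::size_t>(target) < logp.size());
    grad_pre_activation.resize(logp.size());
    for (std::size_t k = 0; k < logp.size(); ++k)
        grad_pre_activation[k] =
            std::exp(logp[k]) - (static_cast<int>(k) == target ? 1.0 : 0.0);
    return -logp[target];
}

// Squared-error cost for output_type=MSE.
// ASSUMPTION: sum (not mean) of squared differences, without a 1/2 factor.
double mseCost(const std::vector<double>& out, const std::vector<double>& target) {
    assert(out.size() == target.size());
    double c = 0.0;
    for (std::size_t k = 0; k < out.size(); ++k) {
        double d = out[k] - target[k];
        c += d * d;
    }
    return c;
}
```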

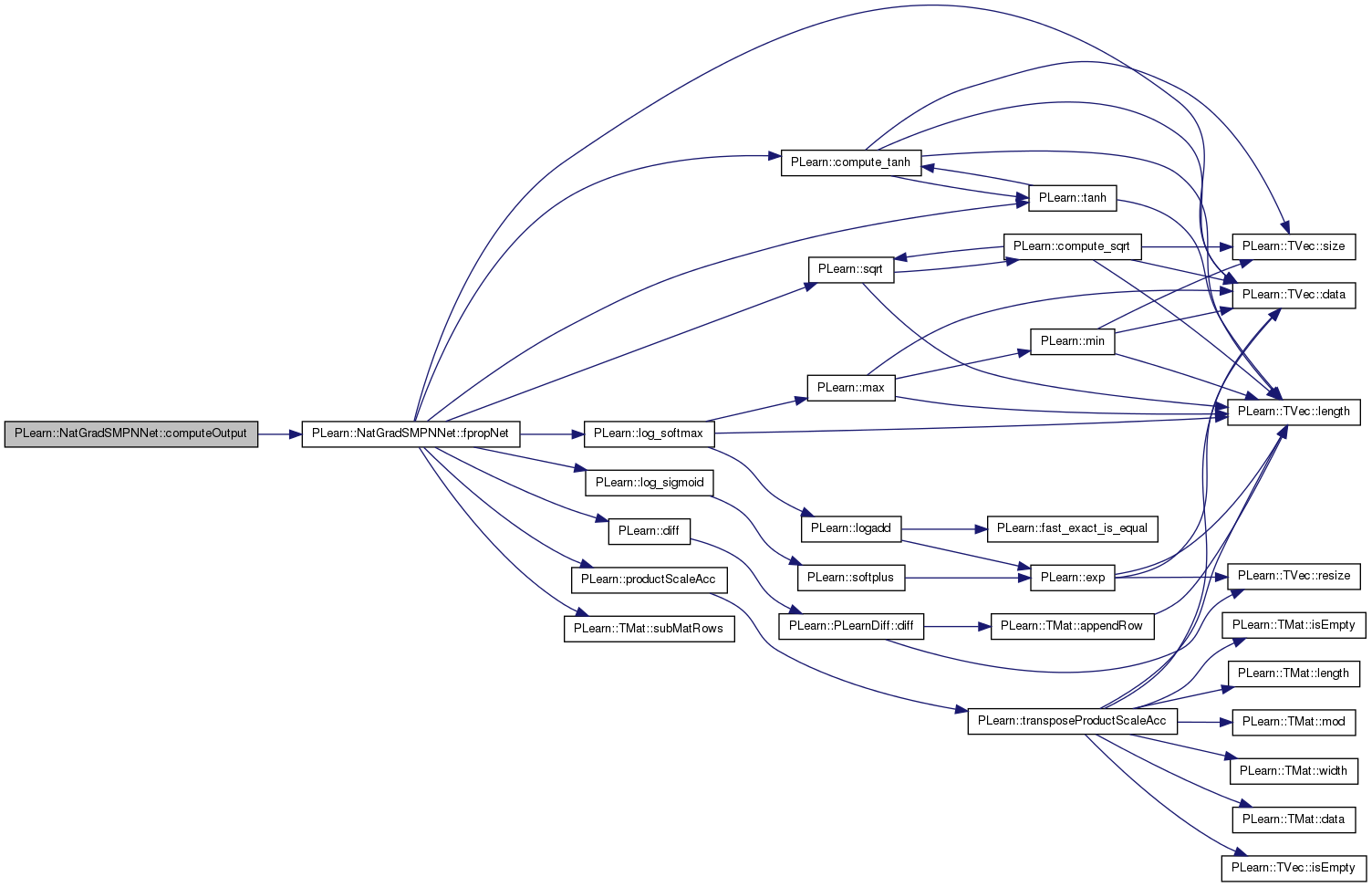

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 1411 of file NatGradSMPNNet.cc.

References fpropNet(), n_layers, and neuron_outputs_per_layer.

```cpp
{
    /*
    static int out_idx = -1;
    out_idx = (out_idx + 1) % 50;
    PStream out_log_file = openFile("/u/delallea/tmp/out_log_" +
                                    tostring(out_idx), PStream::raw_ascii, "w");
    out_log_file << "Starting to compute output on " << input << endl;
    out_log_file.flush();
    */
    //Profiler::pl_profile_start("computeOutput");
    neuron_outputs_per_layer[0](0) << input;
    fpropNet(1,false);
    output << neuron_outputs_per_layer[n_layers-1](0);
    //Profiler::pl_profile_end("computeOutput");
    /*
    out_log_file << "Output computed" << endl;
    out_log_file.flush();
    */
}
```
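computeOutput() copies the input into layer 0, runs fpropNet() on a minibatch of one example and reads the top layer. A minimal single-example forward pass in that spirit is sketched below. It uses the documented parameter layout, with the bias in column 0 of each layer_params matrix and weights in the remaining columns. Hidden units apply the tanh non-linearity named in the activations_scaling option description. The sketch assumes no self-adjusted scaling and a log-softmax output for output_type=NLL. `affine`, `logSoftmax` and `forward` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // one row per neuron

// Pre-activations of one layer: column 0 of `params` is the bias, columns
// 1..n_in are the weights, i.e. params times the 1-extended input.
std::vector<double> affine(const Matrix& params, const std::vector<double>& in) {
    std::vector<double> out(params.size());
    for (std::size_t r = 0; r < params.size(); ++r) {
        assert(params[r].size() == in.size() + 1);
        double a = params[r][0];
        for (std::size_t j = 0; j < in.size(); ++j)
            a += params[r][j + 1] * in[j];
        out[r] = a;
    }
    return out;
}

// Numerically stable log-softmax: a_k - log(sum_j exp(a_j)).
std::vector<double> logSoftmax(std::vector<double> a) {
    assert(!a.empty());
    double m = *std::max_element(a.begin(), a.end());
    double s = 0.0;
    for (double v : a) s += std::exp(v - m);
    double log_z = m + std::log(s);
    for (double& v : a) v -= log_z;
    return a;
}

// tanh hidden layers, log-softmax output (ASSUMPTION for output_type=NLL).
std::vector<double> forward(const std::vector<Matrix>& layer_params,
                            std::vector<double> x) {
    for (std::size_t i = 0; i < layer_params.size(); ++i) {
        x = affine(layer_params[i], x);
        if (i + 1 < layer_params.size())
            for (double& v : x) v = std::tanh(v);
    }
    return logSoftmax(x);
}
```

Storing the bias as column 0 lets each neuron compute its pre-activation as one dot product with the 1-extended input. build_() sets up the same extension by filling column 0 of neuron_extended_outputs_per_layer with 1.0.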

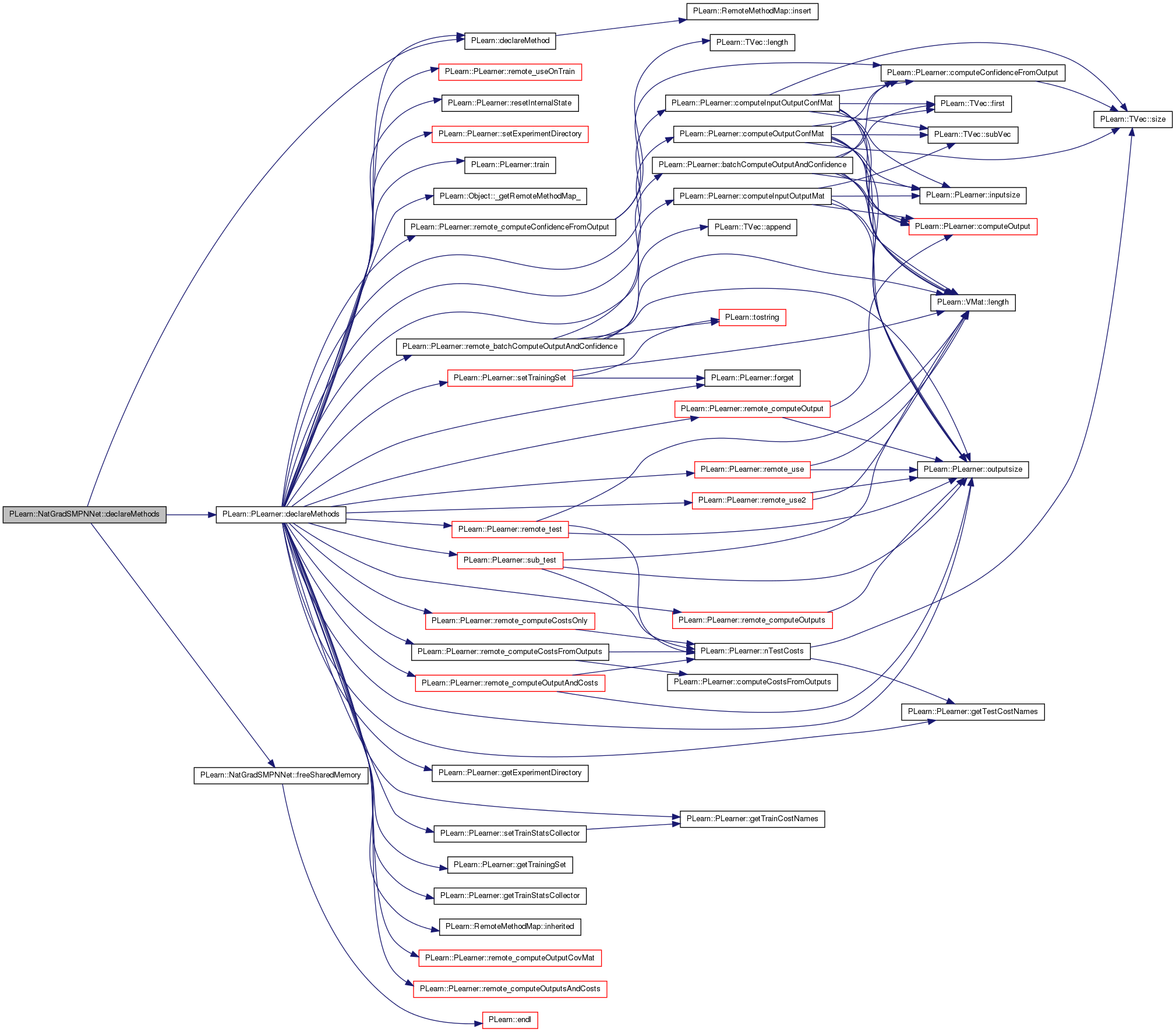

`void PLearn::NatGradSMPNNet::declareMethods (RemoteMethodMap & rmm)` [static, protected]

Declares the class methods.

Reimplemented from PLearn::PLearner.

Definition at line 374 of file NatGradSMPNNet.cc.

References PLearn::declareMethod(), PLearn::PLearner::declareMethods(), and freeSharedMemory().

```cpp
{
    declareMethod(rmm, "freeSharedMemory", &NatGradSMPNNet::freeSharedMemory,
                  (BodyDoc("Free shared memory resources.")));
    inherited::declareMethods(rmm);
}
```

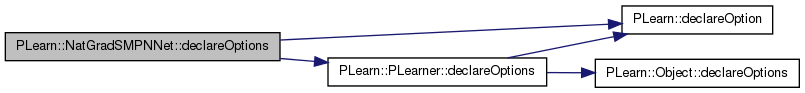

`void PLearn::NatGradSMPNNet::declareOptions (OptionList & ol)` [static, protected]

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 119 of file NatGradSMPNNet.cc.

References activation_statistics_moving_average_coefficient, activations_scaling, PLearn::OptionBase::buildoption, cumulative_training_time, PLearn::declareOption(), PLearn::PLearner::declareOptions(), delayed_update, full_natgrad, hidden_layer_sizes, init_lrate, input_size_lrate_normalization_power, layer_mparams, layer_params, layer_sizes, PLearn::OptionBase::learntoption, lrate_decay, lrate_scale_factor, lrate_scale_factor_max_power, lrate_scale_factor_min_power, minibatch_size, n_layers, neurons_natgrad_per_layer, neurons_natgrad_template, noutputs, output_layer_L1_penalty_factor, output_layer_lrate_scale, output_type, params_averaging_coeff, params_averaging_freq, params_natgrad_per_group, params_natgrad_per_input_template, params_natgrad_template, pv_acceleration, pv_initial_stepsize, pv_min_samples, pv_random_sample_step, pv_required_confidence, self_adjusted_scaling_and_bias, synchronize_update, target_mean_activation, target_stdev_activation, use_pvgrad, and wait_for_final_update.

{

declareOption(ol, "delayed_update", &NatGradSMPNNet::delayed_update,

OptionBase::buildoption,

"If true, then each CPU's update will be delayed until it is its own\n"

"turn to update. This ensures no two CPUs are modifying parameters\n"

"at the same time.");

declareOption(ol, "wait_for_final_update",

&NatGradSMPNNet::wait_for_final_update,

OptionBase::buildoption,

"If true, each CPU will wait its turn before performing its final\n"

"update. It should impact performance only when 'delayed_update' is\n"

"also true.");

declareOption(ol, "synchronize_update", &NatGradSMPNNet::synchronize_update,

OptionBase::buildoption,

"If true, then processors will in turn update the shared parameters\n"

"after each mini-batch and will wait until all processors did their\n"

"update before processing the next mini-batch. Otherwise, no\n"

"synchronization is performed and a processor may process multiple\n"

"mini-batches before doing a parameter update.");

declareOption(ol, "noutputs", &NatGradSMPNNet::noutputs,

OptionBase::buildoption,

"Number of outputs of the neural network, which can be derived from output_type and targetsize_\n");

declareOption(ol, "n_layers", &NatGradSMPNNet::n_layers,

OptionBase::learntoption,

"Number of layers of units, including the input and output layers\n"

"(ie. 3 for a neural net with one hidden layer).\n"

"Need not be specified explicitly (derived from hidden_layer_sizes).\n");

declareOption(ol, "hidden_layer_sizes", &NatGradSMPNNet::hidden_layer_sizes,

OptionBase::buildoption,

"Defines the architecture of the multi-layer neural network by\n"

"specifying the number of hidden units in each hidden layer.\n");

declareOption(ol, "layer_sizes", &NatGradSMPNNet::layer_sizes,

OptionBase::learntoption,

"Derived from hidden_layer_sizes, inputsize_ and noutputs\n");

declareOption(ol, "cumulative_training_time", &NatGradSMPNNet::cumulative_training_time,

OptionBase::learntoption,

"Cumulative training time since age=0, in seconds.\n");

declareOption(ol, "layer_params", &NatGradSMPNNet::layer_params,

OptionBase::learntoption,

"Parameters used while training, for each layer, organized as follows: layer_params[i] \n"

"is a matrix of dimension layer_sizes[i+1] x (layer_sizes[i]+1)\n"

"containing the neuron biases in its first column.\n");

declareOption(ol, "activations_scaling", &NatGradSMPNNet::activations_scaling,

OptionBase::learntoption,

"Scaling coefficients for each neuron of each layer, if self_adjusted_scaling_and_bias:\n"

" output = tanh(activations_scaling[layer][neuron] * (biases[layer][neuron] + weights[layer]*input[layer-1]))\n");

declareOption(ol, "layer_mparams", &NatGradSMPNNet::layer_mparams,

OptionBase::learntoption,

"Test parameters for each layer, organized like layer_params.\n"

"This is a moving average of layer_params, computed with\n"

"coefficient params_averaging_coeff. Thus the mparams are\n"

"a smoothed version of the params, and they are used only\n"

"during testing.\n");

declareOption(ol, "params_averaging_coeff", &NatGradSMPNNet::params_averaging_coeff,

OptionBase::buildoption,

"Coefficient used to control how fast we forget old parameters\n"

"in the moving average performed as follows:\n"

"mparams <-- (1-params_averaging_coeff)mparams + params_averaging_coeff*params\n");

declareOption(ol, "params_averaging_freq", &NatGradSMPNNet::params_averaging_freq,

OptionBase::buildoption,

"How often (in terms of number of minibatches, i.e. weight updates)\n"

"do we perform the moving average update calculation\n"

"mparams <-- (1-params_averaging_coeff)mparams + params_averaging_coeff*params\n");

declareOption(ol, "init_lrate", &NatGradSMPNNet::init_lrate,

OptionBase::buildoption,

"Initial learning rate\n");

declareOption(ol, "lrate_decay", &NatGradSMPNNet::lrate_decay,

OptionBase::buildoption,

"Learning rate decay factor\n");

declareOption(ol, "output_layer_L1_penalty_factor",

&NatGradSMPNNet::output_layer_L1_penalty_factor,

OptionBase::buildoption,

"Optional (default=0) factor of L1 regularization term, i.e.\n"

"minimize L1_penalty_factor * sum_{ij} |weights(i,j)| during training.\n"

"Gets multiplied by the learning rate. Only on output layer!!");

declareOption(ol, "output_layer_lrate_scale", &NatGradSMPNNet::output_layer_lrate_scale,

OptionBase::buildoption,

"Scaling factor of the learning rate for the output layer. Values less than 1\n"

"mean that the output layer parameters have a smaller learning rate than the others.\n");

declareOption(ol, "minibatch_size", &NatGradSMPNNet::minibatch_size,

OptionBase::buildoption,

"Update the parameters only so often (number of examples).\n");

declareOption(ol, "neurons_natgrad_template", &NatGradSMPNNet::neurons_natgrad_template,

OptionBase::buildoption,

"Optional template GradientCorrector for the neurons gradient.\n"

"If not provided, then the natural gradient correction\n"

"on the neurons gradient is not performed.\n");

declareOption(ol, "neurons_natgrad_per_layer",

&NatGradSMPNNet::neurons_natgrad_per_layer,

OptionBase::learntoption,

"Vector of GradientCorrector objects for the gradient on the neurons of each layer.\n"

"They are copies of the neurons_natgrad_template provided by the user.\n");

declareOption(ol, "params_natgrad_template",

&NatGradSMPNNet::params_natgrad_template,

OptionBase::buildoption,

"Optional template GradientCorrector object for the gradient of the parameters inside each neuron.\n"

"It is replicated in the params_natgrad_per_group vector, once for each neuron.\n"

"If not provided, then the neuron-specific natural gradient estimator is not used.\n");

declareOption(ol, "params_natgrad_per_input_template",

&NatGradSMPNNet::params_natgrad_per_input_template,

OptionBase::buildoption,

"Optional template GradientCorrector object for the gradient of the parameters of the first layer\n"

"grouped based upon their input. It is replicated in the params_natgrad_per_group vector, for each group.\n"

"If provided, overrides the params_natgrad_template for the parameters of the first layer.\n");

declareOption(ol, "params_natgrad_per_group",

&NatGradSMPNNet::params_natgrad_per_group,

OptionBase::learntoption,

"Vector of GradientCorrector objects for the gradient inside groups of parameters.\n"

"They are copies of the params_natgrad_template and params_natgrad_per_input_template\n"

"templates provided by the user.\n");

declareOption(ol, "full_natgrad", &NatGradSMPNNet::full_natgrad,

OptionBase::buildoption,

"GradientCorrector for all the parameter gradients simultaneously.\n"

"This should not be set if neurons_natgrad_template or params_natgrad_template\n"

"is provided. If none of the GradientCorrectors is provided, then\n"

"regular stochastic gradient is performed.\n");

declareOption(ol, "output_type",

&NatGradSMPNNet::output_type,

OptionBase::buildoption,

"Type of output cost: 'cross_entropy' for binary classification,\n"

"'NLL' for multi-class classification, or 'MSE' for regression.\n");

declareOption(ol, "input_size_lrate_normalization_power",

&NatGradSMPNNet::input_size_lrate_normalization_power,

OptionBase::buildoption,

"Scale the learning rate neuron-wise (actually layer-wise, here):\n"

"-1 scales it by 1 / ||x||^2, where x is the 1-extended input vector of the neuron\n"

"-2 scales it by 1 / ||x||\n"

"0 does not scale the learning rate\n"

"1 scales it by 1 / the nb of inputs of the neuron\n"

"2 scales it by 1 / sqrt(the nb of inputs of the neuron), and in general\n"

"p > 0 scales it by 1 / (the nb of inputs of the neuron)^(1/p).\n");

declareOption(ol, "lrate_scale_factor",

&NatGradSMPNNet::lrate_scale_factor,

OptionBase::buildoption,

"Scale the learning rate in different neurons by a factor\n"

"taken randomly as follows: choose an integer n uniformly between\n"

"lrate_scale_factor_min_power and lrate_scale_factor_max_power\n"

"inclusive, and then scale the learning rate by lrate_scale_factor^n.\n");

declareOption(ol, "lrate_scale_factor_max_power",

&NatGradSMPNNet::lrate_scale_factor_max_power,

OptionBase::buildoption,

"See help on lrate_scale_factor\n");

declareOption(ol, "lrate_scale_factor_min_power",

&NatGradSMPNNet::lrate_scale_factor_min_power,

OptionBase::buildoption,

"See help on lrate_scale_factor\n");

declareOption(ol, "self_adjusted_scaling_and_bias",

&NatGradSMPNNet::self_adjusted_scaling_and_bias,

OptionBase::buildoption,

"If true, let each neuron self-adjust its bias and scaling factor\n"

"of its activations so that the mean and standard deviation of the\n"

"activations reach the target_mean_activation and target_stdev_activation.\n"

"The activations' mean and variance are estimated by a moving average with\n"

"coefficient given by activation_statistics_moving_average_coefficient.\n");

declareOption(ol, "target_mean_activation",

&NatGradSMPNNet::target_mean_activation,

OptionBase::buildoption,

"See help on self_adjusted_scaling_and_bias\n");

declareOption(ol, "target_stdev_activation",

&NatGradSMPNNet::target_stdev_activation,

OptionBase::buildoption,

"See help on self_adjusted_scaling_and_bias\n");

declareOption(ol, "activation_statistics_moving_average_coefficient",

&NatGradSMPNNet::activation_statistics_moving_average_coefficient,

OptionBase::buildoption,

"The activations' mean and variance used for self_adjusted_scaling_and_bias\n"

"are estimated by a moving average with this coefficient:\n"

" xbar <-- (1-coefficient) * xbar + coefficient * x\n"

"where x is either the activation or its squared deviation from the mean.\n");

//declareOption(ol, "corr_profiling_start",

// &NatGradSMPNNet::corr_profiling_start,

// OptionBase::buildoption,

// "Stage to start the profiling of the gradients' and the\n"

// "natural gradients' correlation.\n");

//declareOption(ol, "corr_profiling_end",

// &NatGradSMPNNet::corr_profiling_end,

// OptionBase::buildoption,

// "Stage to end the profiling of the gradients' and the\n"

// "natural gradients' correlations.\n");

declareOption(ol, "use_pvgrad",

&NatGradSMPNNet::use_pvgrad,

OptionBase::buildoption,

"Use Pascal Vincent's gradient technique.\n"

"All options specific to this technique start with pv_...\n"

"This is currently very experimental. The current code is\n"

"NOT YET optimised for speed (and does not support minibatches).");

declareOption(ol, "pv_initial_stepsize",

&NatGradSMPNNet::pv_initial_stepsize,

OptionBase::buildoption,

"Initial size of steps in parameter space");

declareOption(ol, "pv_acceleration",

&NatGradSMPNNet::pv_acceleration,

OptionBase::buildoption,

"Coefficient by which to multiply/divide the step sizes");

declareOption(ol, "pv_min_samples",

&NatGradSMPNNet::pv_min_samples,

OptionBase::buildoption,

"PV's minimum number of samples to estimate the gradient sign.\n"

"This should be at least 2.");

declareOption(ol, "pv_required_confidence",

&NatGradSMPNNet::pv_required_confidence,

OptionBase::buildoption,

"Minimum required confidence (probability of being positive or negative) for taking a step.");

declareOption(ol, "pv_random_sample_step",

&NatGradSMPNNet::pv_random_sample_step,

OptionBase::buildoption,

"If this is set to true, then we will randomly choose the step sign\n"

"for each parameter based on the estimated probability of it being\n"

"positive or negative.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
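Several of the options above combine into the learning rate actually applied to a layer. A minimal sketch, assuming the formulas used in onlineStep() (hyperbolic decay with init_lrate and lrate_decay, output_layer_lrate_scale for the output layer, and fan-in normalization for a positive input_size_lrate_normalization_power); the helper name layerLearningRate is hypothetical, and the norm-based modes -1 and -2 as well as the lrate_scale_factor options are left out:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: learning rate applied to one layer's parameters,
// following onlineStep() for input_size_lrate_normalization_power >= 0.
double layerLearningRate(double init_lrate, double lrate_decay, int stage,
                         bool is_output_layer, double output_layer_lrate_scale,
                         int fan_in, int normalization_power)
{
    assert(fan_in > 0 && normalization_power >= 0);
    // Hyperbolic decay of the global learning rate.
    const double lrate = init_lrate / (1.0 + stage * lrate_decay);
    double factor = is_output_layer ? output_layer_lrate_scale : 1.0;
    if (normalization_power == 1)
        factor /= fan_in;  // 1 / fan_in
    else if (normalization_power > 1)  // 1 / fan_in^(1/p), e.g. 1/sqrt(fan_in) for p = 2
        factor /= std::pow(static_cast<double>(fan_in), 1.0 / normalization_power);
    return factor * lrate;
}
```

For instance, layerLearningRate(0.1, 0.0, 0, false, 1.0, 4, 2) divides 0.1 by sqrt(4), giving 0.05.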

| static const PPath& PLearn::NatGradSMPNNet::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 255 of file NatGradSMPNNet.h.


| NatGradSMPNNet * PLearn::NatGradSMPNNet::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 72 of file NatGradSMPNNet.cc.

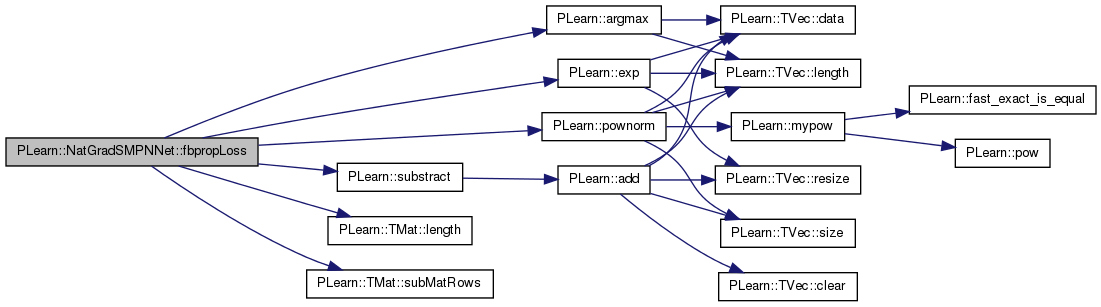

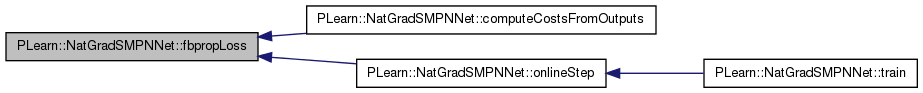

| void PLearn::NatGradSMPNNet::fbpropLoss | ( | const Mat & | output, |

| const Mat & | target, | ||

| const Vec & | example_weights, | ||

| Mat & | train_costs | ||

| ) | const [protected] |

Computes the training costs given the network's top-layer output (log-probabilities for classification tasks), and writes the gradient with respect to the output layer's pre-non-linearity activations into neuron_gradients_per_layer[n_layers-1].

Definition at line 1524 of file NatGradSMPNNet.cc.

References PLearn::argmax(), PLearn::exp(), grad, i, PLearn::TMat< T >::length(), minibatch_size, PLearn::PLearner::n_examples, n_layers, neuron_gradients_per_layer, output_type, pl_log, PLearn::pownorm(), PLearn::TMat< T >::subMatRows(), and PLearn::substract().

Referenced by computeCostsFromOutputs(), and onlineStep().

{

int n_examples = output.length();

Mat out_grad = neuron_gradients_per_layer[n_layers-1];

if (n_examples!=minibatch_size)

out_grad = out_grad.subMatRows(0,n_examples);

if (output_type=="NLL")

{

for (int i=0;i<n_examples;i++)

{

int target_class = int(round(target(i,0)));

Vec outp = output(i);

Vec grad = out_grad(i);

exp(outp,grad); // map log-prob to prob

costs(i,0) = -outp[target_class];

costs(i,1) = (target_class == argmax(outp))?0:1;

grad[target_class]-=1;

if (example_weight[i]!=1.0)

{

costs(i,0) *= example_weight[i];

grad *= example_weight[i]; // weight the gradient too, as in the MSE branch

}

}

}

else if(output_type=="cross_entropy") {

for (int i=0;i<n_examples;i++)

{

int target_class = int(round(target(i,0)));

Vec outp = output(i);

Vec grad = out_grad(i);

exp(outp,grad); // map log-prob to prob

if( target_class == 1 ) {

costs(i,0) = - outp[0];

costs(i,1) = (grad[0]>0.5)?0:1;

} else {

costs(i,0) = - pl_log( 1.0 - grad[0] );

costs(i,1) = (grad[0]>0.5)?1:0;

}

grad[0] -= (real)target_class;

if (example_weight[i]!=1.0)

{

costs(i,0) *= example_weight[i];

grad *= example_weight[i]; // weight the gradient too, as in the MSE branch

}

}

//cout << "costs\t" << costs(0) << endl;

//cout << "gradient\t" << out_grad(0) << endl;

}

else // if (output_type=="MSE")

{

substract(output,target,out_grad);

for (int i=0;i<n_examples;i++)

{

costs(i,0) = pownorm(out_grad(i));

if (example_weight[i]!=1.0)

{

out_grad(i) *= example_weight[i];

costs(i,0) *= example_weight[i];

}

}

}

}
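The gradients written by fbpropLoss() follow from the output non-linearity applied in fpropNet(): with log-softmax outputs, the gradient of -log p[target] with respect to the pre-softmax activations is p - onehot(target), and with a log-sigmoid output it is sigmoid(a) - target. A standalone sketch using std::vector instead of PLearn's Vec (the function names are hypothetical, and example weights are omitted):

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// NLL on log-softmax outputs: returns -log p[target] and writes the gradient
// with respect to the pre-softmax activations, p - onehot(target), into grad.
double nllCostAndGradient(const std::vector<double>& log_probs, int target,
                          std::vector<double>& grad)
{
    assert(target >= 0 && static_cast<std::size_t>(target) < log_probs.size());
    grad.resize(log_probs.size());
    for (std::size_t j = 0; j < log_probs.size(); ++j)
        grad[j] = std::exp(log_probs[j]);  // map log-prob to prob
    grad[static_cast<std::size_t>(target)] -= 1.0;
    return -log_probs[static_cast<std::size_t>(target)];
}

// Cross-entropy on a log-sigmoid output log_p = log sigmoid(a), target in {0, 1}:
// returns the cost and writes d(cost)/da = sigmoid(a) - target into grad.
double crossEntropyCostAndGradient(double log_p, int target, double& grad)
{
    assert(target == 0 || target == 1);
    const double p = std::exp(log_p);
    grad = p - target;
    return target == 1 ? -log_p : -std::log1p(-p);
}
```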

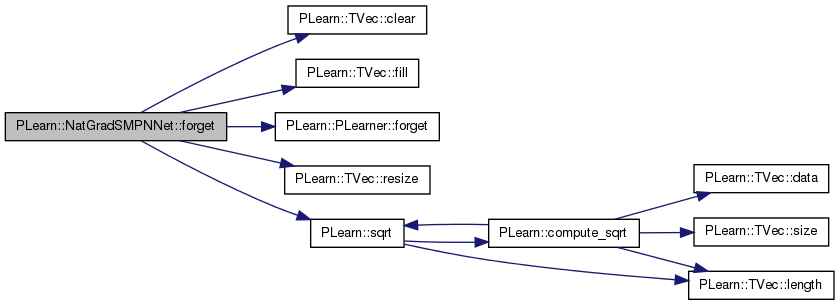

| void PLearn::NatGradSMPNNet::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).


Reimplemented from PLearn::PLearner.

Definition at line 689 of file NatGradSMPNNet.cc.

References activations_scaling, all_mparams, all_params, biases, PLearn::TVec< T >::clear(), cumulative_training_time, PLearn::TVec< T >::fill(), PLearn::PLearner::forget(), i, layer_sizes, mean_activations, n, n_layers, nsteps, params_averaging_coeff, params_update, pv_gradstats, pv_initial_stepsize, pv_stepsigns, pv_stepsizes, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), PLearn::sqrt(), PLearn::PLearner::stage, use_pvgrad, var_activations, and weights.

{

inherited::forget();

for (int i=0;i<n_layers-1;i++)

{

real delta = 1/sqrt(real(layer_sizes[i]));

random_gen->fill_random_uniform(weights[i],-delta,delta);

biases[i].clear();

activations_scaling[i].fill(1.0);

mean_activations[i].clear();

var_activations[i].fill(1.0);

}

stage = 0;

cumulative_training_time=0;

if (params_averaging_coeff!=1.0)

all_mparams << all_params;

if(use_pvgrad)

{

pv_gradstats->forget();

int n = all_params.length();

pv_stepsizes.resize(n);

pv_stepsizes.fill(pv_initial_stepsize);

pv_stepsigns.resize(n);

pv_stepsigns.fill(true);

}

nsteps = 0;

params_update.fill(0);

}
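forget() draws each weight matrix uniformly in [-delta, delta] with delta = 1/sqrt(layer_sizes[i]); since such a weight has variance delta^2/3 = 1/(3 * fan_in), the variance of the initial weighted sums stays roughly independent of the fan-in. A minimal sketch with <random> standing in for PLearn's random_gen (the name initWeights and the row-major layout are assumptions):

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Fill an n_out x n_in weight matrix (row-major) uniformly in
// [-1/sqrt(n_in), 1/sqrt(n_in)]; biases are assumed to be zeroed separately.
std::vector<double> initWeights(int n_out, int n_in, std::mt19937& rng)
{
    assert(n_out > 0 && n_in > 0);
    const double delta = 1.0 / std::sqrt(static_cast<double>(n_in));
    std::uniform_real_distribution<double> unif(-delta, delta);
    std::vector<double> w(static_cast<std::size_t>(n_out) * static_cast<std::size_t>(n_in));
    for (double& x : w)
        x = unif(rng);
    return w;
}
```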

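| void PLearn::NatGradSMPNNet::fpropNet | ( | int | n_examples, |

| bool | during_training | ||

| ) | const |

Computes the network's top-layer output for a minibatch of n_examples examples, given the layer 0 output (i.e. the network input). For classification tasks the output consists of log-probabilities (log-softmax for output_type NLL, log-sigmoid for cross_entropy). When during_training is true and self_adjusted_scaling_and_bias is set, the running activation statistics, biases and scaling factors are also updated; outside training, the averaged parameters are used when params_averaging_coeff != 1.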

Definition at line 1433 of file NatGradSMPNNet.cc.

References a, activation_statistics_moving_average_coefficient, activations_scaling, b, biases, PLearn::compute_tanh(), PLearn::TVec< T >::data(), PLearn::diff(), i, j, layer_mparams, layer_params, layer_sizes, PLearn::log_sigmoid(), PLearn::log_softmax(), m, mean_activations, minibatch_size, mweights, PLearn::PLearner::n_examples, n_layers, neuron_extended_outputs_per_layer, neuron_outputs_per_layer, output_type, params_averaging_coeff, params_natgrad_per_input_template, PLASSERT_MSG, PLearn::productScaleAcc(), self_adjusted_scaling_and_bias, PLearn::sqrt(), PLearn::TMat< T >::subMatRows(), PLearn::tanh(), target_mean_activation, target_stdev_activation, var_activations, w, and weights.

Referenced by computeOutput(), and onlineStep().

{

PLASSERT_MSG(n_examples<=minibatch_size,"NatGradSMPNNet::fpropNet: nb input vectors treated should be <= minibatch_size\n");

for (int i=0;i<n_layers-1;i++)

{

Mat prev_layer = (self_adjusted_scaling_and_bias && i+1<n_layers-1)?

neuron_outputs_per_layer[i]:neuron_extended_outputs_per_layer[i];

Mat next_layer = neuron_outputs_per_layer[i+1];

if (n_examples!=minibatch_size)

{

prev_layer = prev_layer.subMatRows(0,n_examples);

next_layer = next_layer.subMatRows(0,n_examples);

}

//alternate

// Are the input weights transposed? (because of ...)

bool tw = true;

if( params_natgrad_per_input_template && i==0 )

tw = false;

// try to use BLAS for the expensive operation

if (self_adjusted_scaling_and_bias && i+1<n_layers-1){

productScaleAcc(next_layer, prev_layer, false,

(during_training || params_averaging_coeff==1.0)?

weights[i]:mweights[i],

tw, 1, 0);

}else{

productScaleAcc(next_layer, prev_layer, false,

(during_training || params_averaging_coeff==1.0)?

layer_params[i]:layer_mparams[i],

tw, 1, 0);

}

// compute layer's output non-linearity

if (i+1<n_layers-1)

for (int k=0;k<n_examples;k++)

{

Vec L=next_layer(k);

if (self_adjusted_scaling_and_bias)

{

real* m=mean_activations[i].data();

real* v=var_activations[i].data();

real* a=L.data();

real* s=activations_scaling[i].data();

real* b=biases[i].data(); // biases[i] is a 1-column matrix

int bmod = biases[i].mod();

for (int j=0;j<layer_sizes[i+1];j++,b+=bmod,m++,v++,a++,s++)

{

if (during_training)

{

real diff = *a - *m;

*v = (1-activation_statistics_moving_average_coefficient) * *v

+ activation_statistics_moving_average_coefficient * diff*diff;

*m = (1-activation_statistics_moving_average_coefficient) * *m

+ activation_statistics_moving_average_coefficient * *a;

*b = target_mean_activation - *m;

if (*v<100*target_stdev_activation*target_stdev_activation)

*s = target_stdev_activation/sqrt(*v);

else // rescale the weights and the statistics for that neuron

{

real rescale_factor = target_stdev_activation/sqrt(*v);

Vec w = weights[i](j);

w *= rescale_factor;

*b *= rescale_factor;

*s = 1;

*m *= rescale_factor;

*v *= rescale_factor*rescale_factor;

}

}

*a = tanh((*a + *b) * *s);

}

}

else{

compute_tanh(L,L);

}

}

else if (output_type=="NLL")

for (int k=0;k<n_examples;k++)

{

Vec L=next_layer(k);

log_softmax(L,L);

}

else if (output_type=="cross_entropy") {

for (int k=0;k<n_examples;k++)

{

Vec L=next_layer(k);

log_sigmoid(L,L);

}

}

}

}
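With self_adjusted_scaling_and_bias, each hidden neuron in fpropNet() keeps moving averages of its activation mean and variance, sets its bias to b = target_mean_activation - mean and its scaling to s = target_stdev_activation / sqrt(var), and then outputs tanh((a + b) * s). A single-neuron sketch of the training-time update, assuming scalar activations; the struct and function names are hypothetical, and the weight-rescaling branch taken when the variance exceeds 100 * target_stdev_activation^2 is omitted:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical per-neuron state mirroring mean_activations, var_activations,
// biases and activations_scaling for a single unit.
struct NeuronStats {
    double mean = 0.0, var = 1.0, bias = 0.0, scale = 1.0;
};

// Training-time forward step for weighted sum a, in the order used by
// fpropNet(): variance (with the old mean), mean, then bias and scaling.
double selfAdjustedTanh(NeuronStats& st, double a, double coef,
                        double target_mean, double target_stdev)
{
    // coef < 1 keeps var strictly positive, so the division below is safe.
    assert(coef > 0.0 && coef < 1.0 && target_stdev > 0.0);
    const double diff = a - st.mean;
    st.var  = (1 - coef) * st.var  + coef * diff * diff;
    st.mean = (1 - coef) * st.mean + coef * a;
    st.bias = target_mean - st.mean;
    st.scale = target_stdev / std::sqrt(st.var);
    return std::tanh((a + st.bias) * st.scale);
}
```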

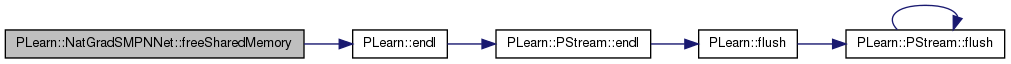

| void PLearn::NatGradSMPNNet::freeSharedMemory | ( | ) | [private] |

Free shared memory that may still be allocated.

Definition at line 609 of file NatGradSMPNNet.cc.

References PLearn::endl(), params_id, params_int_id, params_int_ptr, and params_ptr.

Referenced by build_(), declareMethods(), and ~NatGradSMPNNet().

{

DBG_MODULE_LOG << "Freeing shared memory" << endl;

if (params_ptr) {

shmctl(params_id, IPC_RMID, 0);

params_ptr = NULL;

params_id = -1;

}

if (params_int_ptr) {

shmctl(params_int_id, IPC_RMID, 0);

params_int_ptr = NULL;

params_int_id = -1;

}

}
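freeSharedMemory() marks System V shared memory segments for removal with shmctl(..., IPC_RMID, ...). The allocation side is not shown in this section, so the following is only a sketch of the usual lifecycle with the POSIX shmget/shmat/shmdt/shmctl calls; sharedMemoryRoundTrip is a hypothetical name:

```cpp
#include <cassert>
#include <cstddef>
#include <sys/ipc.h>
#include <sys/shm.h>

// Create a private segment of n doubles, attach it, write and read it back,
// then detach and remove it. Returns true if every step succeeded.
bool sharedMemoryRoundTrip(std::size_t n)
{
    assert(n > 0);
    // IPC_PRIVATE always creates a new segment (owner read/write here).
    int id = shmget(IPC_PRIVATE, n * sizeof(double), 0600 | IPC_CREAT);
    if (id == -1)
        return false;
    void* addr = shmat(id, nullptr, 0);
    if (addr == reinterpret_cast<void*>(-1)) {
        shmctl(id, IPC_RMID, nullptr);  // do not leak the segment
        return false;
    }
    double* params = static_cast<double*>(addr);
    for (std::size_t i = 0; i < n; ++i)
        params[i] = static_cast<double>(i);  // visible to any attached process
    bool ok = (params[n - 1] == static_cast<double>(n - 1));
    ok = (shmdt(addr) == 0) && ok;
    // Same call as freeSharedMemory(): the segment is destroyed once the
    // last attached process has detached.
    ok = (shmctl(id, IPC_RMID, nullptr) == 0) && ok;
    return ok;
}
```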

| OptionList & PLearn::NatGradSMPNNet::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 72 of file NatGradSMPNNet.cc.

| OptionMap & PLearn::NatGradSMPNNet::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 72 of file NatGradSMPNNet.cc.

| RemoteMethodMap & PLearn::NatGradSMPNNet::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 72 of file NatGradSMPNNet.cc.

| TVec< string > PLearn::NatGradSMPNNet::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 1593 of file NatGradSMPNNet.cc.

References output_type, and PLearn::TVec< T >::resize().

Referenced by getTrainCostNames().

{

TVec<string> costs;

if (output_type=="NLL")

{

costs.resize(2);

costs[0]="NLL";

costs[1]="class_error";

}

else if (output_type=="cross_entropy") {

costs.resize(2);

costs[0]="cross_entropy";

costs[1]="class_error";

}

else if (output_type=="MSE")

{

costs.resize(1);

costs[0]="MSE";

}

return costs;

}

| TVec< string > PLearn::NatGradSMPNNet::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 1615 of file NatGradSMPNNet.cc.

References PLearn::TVec< T >::append(), and getTestCostNames().

{

TVec<string> costs = getTestCostNames();

costs.append("train_seconds");

costs.append("cum_train_seconds");

costs.append("big_loop_seconds_1");

costs.append("big_loop_seconds_2");

return costs;

}

| void PLearn::NatGradSMPNNet::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 628 of file NatGradSMPNNet.cc.

References activations_scaling, all_mparams, all_params, all_params_delta, all_params_gradient, biases, PLearn::deepCopyField(), example_weights, full_natgrad, group_params, group_params_delta, group_params_gradient, hidden_layer_sizes, layer_mparams, layer_params, layer_params_delta, layer_params_gradient, layer_sizes, PLearn::PLearner::makeDeepCopyFromShallowCopy(), mweights, neuron_extended_outputs_per_layer, neuron_gradients, neuron_gradients_per_layer, neuron_outputs_per_layer, neurons_natgrad_per_layer, neurons_natgrad_template, params_int_ptr, params_natgrad_per_group, params_natgrad_per_input_template, params_natgrad_template, params_ptr, PLCHECK_MSG, PLERROR, pv_gradstats, pv_stepsigns, pv_stepsizes, targets, train_costs, and weights.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(hidden_layer_sizes, copies);

deepCopyField(layer_params, copies);

deepCopyField(layer_mparams, copies);

deepCopyField(biases, copies);

deepCopyField(weights, copies);

deepCopyField(mweights, copies);

deepCopyField(activations_scaling, copies);

deepCopyField(neurons_natgrad_template, copies);

deepCopyField(neurons_natgrad_per_layer, copies);

deepCopyField(params_natgrad_template, copies);

deepCopyField(params_natgrad_per_input_template, copies);

deepCopyField(params_natgrad_per_group, copies);

deepCopyField(full_natgrad, copies);

deepCopyField(layer_sizes, copies);

deepCopyField(targets, copies);

deepCopyField(example_weights, copies);

deepCopyField(train_costs, copies);

deepCopyField(neuron_outputs_per_layer, copies);

deepCopyField(neuron_extended_outputs_per_layer, copies);

deepCopyField(all_params, copies);

deepCopyField(all_mparams, copies);

deepCopyField(all_params_gradient, copies);

deepCopyField(layer_params_gradient, copies);

deepCopyField(neuron_gradients, copies);

deepCopyField(neuron_gradients_per_layer, copies);

deepCopyField(all_params_delta, copies);

deepCopyField(group_params, copies);

deepCopyField(group_params_gradient, copies);

deepCopyField(group_params_delta, copies);

deepCopyField(layer_params_delta, copies);

deepCopyField(pv_gradstats, copies);

deepCopyField(pv_stepsizes, copies);

deepCopyField(pv_stepsigns, copies);

PLCHECK_MSG(false, "Not fully implemented");

if (params_ptr)

PLERROR("In NatGradSMPNNet::makeDeepCopyFromShallowCopy - Deep copy of"

" 'params_ptr' not implemented");

if (params_int_ptr)

PLERROR("In NatGradSMPNNet::makeDeepCopyFromShallowCopy - Deep copy of"

" 'params_int_ptr' not implemented");

/*

deepCopyField(, copies);

*/

}

| void PLearn::NatGradSMPNNet::onlineStep | ( | int | t, |

| const Mat & | targets, | ||

| Mat & | train_costs, | ||

| Vec | example_weights | ||

| ) | [protected] |

Performs one minibatch training step: forward propagation, loss gradient, backpropagation and parameter update.

Definition at line 1191 of file NatGradSMPNNet.cc.

References activations_scaling, all_params, all_params_delta, PLearn::TVec< T >::data(), delayed_update, fbpropLoss(), fpropNet(), full_natgrad, g, grad, i, init_lrate, input_size_lrate_normalization_power, j, layer_params, layer_params_delta, layer_params_gradient, layer_params_update, layer_sizes, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), lrate_decay, minibatch_size, PLearn::multiplyAcc(), n_layers, neuron_extended_outputs_per_layer, neuron_gradients_per_layer, neuron_outputs_per_layer, neurons_natgrad_per_layer, neurons_natgrad_template, output_layer_L1_penalty_factor, output_layer_lrate_scale, params_natgrad_per_group, params_natgrad_per_input_template, params_natgrad_template, PLASSERT, PLERROR, PLearn::pow(), PLearn::productScaleAcc(), pvGradUpdate(), PLearn::TVec< T >::resize(), self_adjusted_scaling_and_bias, PLearn::sqrt(), PLearn::sumsquare(), use_pvgrad, weights, and PLearn::TMat< T >::width().

Referenced by train().
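On the plain stochastic-gradient path (no GradientCorrector, no pv_ options), onlineStep() walks the layers from the top down: it back-propagates the activation gradient through the layer's weights and the previous layer's tanh derivative (1 - h^2), then updates the layer's parameters with the outer product of the activation gradient and the 1-extended inputs, summed over the minibatch. A standalone sketch of one such layer step with std::vector matrices; the bias-in-column-0 layout and the name backpropTanhLayer are assumptions, self_adjusted_scaling_and_bias and delayed_update are ignored, and unlike onlineStep() it always computes the previous-layer gradient (onlineStep skips it for the input layer):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // one row per example

// One layer of the stochastic-gradient backward pass (sketch):
//   W      : n_out x (n_in + 1), column 0 holding the biases (assumed layout)
//   x_ext  : batch x (n_in + 1), 1-extended inputs of the layer (x_ext[k][0] == 1)
//   h      : batch x n_in, tanh outputs of the previous layer
//   g_next : batch x n_out, gradient on this layer's weighted sums
// Returns the gradient on the previous layer's weighted sums, then updates W
// in place with W -= lrate * g_next^T * x_ext (summed over the minibatch).
Matrix backpropTanhLayer(Matrix& W, const Matrix& x_ext, const Matrix& h,
                         const Matrix& g_next, double lrate)
{
    const std::size_t batch = g_next.size();
    const std::size_t n_out = W.size();
    const std::size_t n_in = h.empty() ? 0 : h[0].size();
    assert(x_ext.size() == batch && h.size() == batch);

    // Propagate through the weights, then through tanh'(z) = 1 - tanh(z)^2.
    Matrix g_prev(batch, std::vector<double>(n_in, 0.0));
    for (std::size_t k = 0; k < batch; ++k)
        for (std::size_t j = 0; j < n_in; ++j) {
            double s = 0.0;
            for (std::size_t o = 0; o < n_out; ++o)
                s += g_next[k][o] * W[o][j + 1];  // skip the bias column
            g_prev[k][j] = s * (1.0 - h[k][j] * h[k][j]);
        }

    // Outer-product update; as in onlineStep(), g_prev used the old weights.
    for (std::size_t o = 0; o < n_out; ++o)
        for (std::size_t c = 0; c < W[o].size(); ++c) {
            double grad = 0.0;
            for (std::size_t k = 0; k < batch; ++k)
                grad += g_next[k][o] * x_ext[k][c];
            W[o][c] -= lrate * grad;
        }
    return g_prev;
}
```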

{

// mean gradient over minibatch_size examples has less variance, can afford larger learning rate

// TODO Note that this scaling formula is disabled to avoid confusion about

// what learning rates are being used in experiments.

real lrate = /*sqrt(real(minibatch_size))* */ init_lrate/(1 + cur_stage * lrate_decay);

PLASSERT(targets.length()==minibatch_size && train_costs.length()==minibatch_size && example_weights.length()==minibatch_size);

fpropNet(minibatch_size, true);

fbpropLoss(neuron_outputs_per_layer[n_layers-1],targets,example_weights,train_costs);

for (int i=n_layers-1;i>0;i--)

{

// here neuron_gradients_per_layer[i] contains the gradient on activations (weighted sums)

// (minibatch_size x layer_size[i])

Mat previous_neurons_gradient = neuron_gradients_per_layer[i-1];

Mat next_neurons_gradient = neuron_gradients_per_layer[i];

Mat previous_neurons_output = neuron_outputs_per_layer[i-1];

real layer_lrate_factor = (i==n_layers-1)?output_layer_lrate_scale:1;

if (self_adjusted_scaling_and_bias && i+1<n_layers-1)

for (int k=0;k<minibatch_size;k++)

{

Vec g=next_neurons_gradient(k);

g*=activations_scaling[i-1]; // pass gradient through scaling

}

if (input_size_lrate_normalization_power==-1)

layer_lrate_factor /= sumsquare(neuron_extended_outputs_per_layer[i-1]);

else if (input_size_lrate_normalization_power==-2)

layer_lrate_factor /= sqrt(sumsquare(neuron_extended_outputs_per_layer[i-1]));

else if (input_size_lrate_normalization_power!=0)

{

int fan_in = neuron_extended_outputs_per_layer[i-1].width(); // nb of inputs (incl. bias), not the minibatch size

if (input_size_lrate_normalization_power==1)

layer_lrate_factor/=fan_in;

else if (input_size_lrate_normalization_power==2)

layer_lrate_factor/=sqrt(real(fan_in));

else layer_lrate_factor/=pow(fan_in,1.0/input_size_lrate_normalization_power);

}

// optionally correct the gradient on neurons using their covariance

if (neurons_natgrad_template && neurons_natgrad_per_layer[i])

{

static Vec tmp;

tmp.resize(layer_sizes[i]);

for (int k=0;k<minibatch_size;k++)

{

Vec g_k = next_neurons_gradient(k);

PLERROR("Not implemented (t not available)");

//(*neurons_natgrad_per_layer[i])(t-minibatch_size+1+k,g_k,tmp);

g_k << tmp;

}

}

if (i>1) // compute gradient on previous layer

{

// propagate gradients

//Profiler::pl_profile_start("ProducScaleAccOnlineStep");

productScaleAcc(previous_neurons_gradient,next_neurons_gradient,false,

weights[i-1],false,1,0);

//Profiler::pl_profile_end("ProducScaleAccOnlineStep");

// propagate through tanh non-linearity

for (int j=0;j<previous_neurons_gradient.length();j++)

{

real* grad = previous_neurons_gradient[j];

real* out = previous_neurons_output[j];

for (int k=0;k<previous_neurons_gradient.width();k++,out++)

grad[k] *= (1 - *out * *out); // gradient through tanh derivative

}

}

// compute gradient on parameters, possibly update them

if (use_pvgrad)

{

PLERROR("What is this?");

productScaleAcc(layer_params_gradient[i-1],next_neurons_gradient,true,

neuron_extended_outputs_per_layer[i-1],false,1,0);

}

else if (full_natgrad || params_natgrad_template || params_natgrad_per_input_template)

{

//alternate

PLERROR("No, I just want stochastic gradient!");

if( params_natgrad_per_input_template && i==1 ){ // parameters are transposed

productScaleAcc(layer_params_gradient[i-1],

neuron_extended_outputs_per_layer[i-1], true,

next_neurons_gradient, false,

1, 0);

}else{

productScaleAcc(layer_params_gradient[i-1],next_neurons_gradient,true,

neuron_extended_outputs_per_layer[i-1],false,1,0);

}

layer_params_gradient[i-1] *= 1.0/minibatch_size; // use the MEAN gradient

} else {// just regular stochastic gradient

// compute gradient on weights and update them in one go (more efficient)

// mean gradient has less variance, can afford larger learning rate

//Profiler::pl_profile_start("ProducScaleAccOnlineStep");

if (delayed_update) {

// Store updates in 'layer_params_update'.

//layer_params_update[i - 1].fill(0);

productScaleAcc(layer_params_update[i - 1],

next_neurons_gradient, true,

neuron_extended_outputs_per_layer[i-1], false,

-layer_lrate_factor*lrate, 1);

} else {

// Directly update the parameters.

productScaleAcc(layer_params[i-1],next_neurons_gradient,true,

neuron_extended_outputs_per_layer[i-1],false,

-layer_lrate_factor*lrate /* /minibatch_size */, 1);

}

//Profiler::pl_profile_end("ProducScaleAccOnlineStep");

}

}

if (use_pvgrad)

{

PLERROR("What is this?");

pvGradUpdate();

}

else if (full_natgrad)

{

PLERROR("Not implemented (t not available)");

//(*full_natgrad)(t/minibatch_size,all_params_gradient,all_params_delta); // compute update direction by natural gradient

if (output_layer_lrate_scale!=1.0)

layer_params_delta[n_layers-2] *= output_layer_lrate_scale; // scale output layer's learning rate

multiplyAcc(all_params,all_params_delta,-lrate); // update

// Hack to apply batch gradient even in this case (used for profiling

// the gradient correlations)

//if (output_layer_lrate_scale!=1.0)

// layer_params_gradient[n_layers-2] *= output_layer_lrate_scale; // scale output layer's learning rate

// multiplyAcc(all_params,all_params_gradient,-lrate); // update

} else if (params_natgrad_template || params_natgrad_per_input_template)

{

PLERROR("Not implemented (t not available)");

for (int i=0;i<params_natgrad_per_group.length();i++)

{

//GradientCorrector& neuron_natgrad = *(params_natgrad_per_group[i]);

//neuron_natgrad(t/minibatch_size,group_params_gradient[i],group_params_delta[i]); // compute update direction by natural gradient

}

//alternate

if (output_layer_lrate_scale!=1.0)

layer_params_delta[n_layers-2] *= output_layer_lrate_scale; // scale output layer's learning rate

multiplyAcc(all_params,all_params_delta,-lrate); // update

}

// profiling gradient correlation

//if( (t>=corr_profiling_start) && (t<=corr_profiling_end) && g_corrprof ) {

// (*g_corrprof)(all_params_gradient);

// (*ng_corrprof)(all_params_delta);

//}

// Output layer L1 regularization

if( output_layer_L1_penalty_factor != 0. ) {

PLERROR("Not implemented");

real L1_delta = lrate * output_layer_L1_penalty_factor;

real* m_i = layer_params[n_layers-2].data();

for(int i=0; i<layer_params[n_layers-2].length(); i++,m_i+=layer_params[n_layers-2].mod()) {

for(int j=0; j<layer_params[n_layers-2].width(); j++) {

if( m_i[j] > L1_delta )

m_i[j] -= L1_delta;

else if( m_i[j] < -L1_delta )

m_i[j] += L1_delta;

else

m_i[j] = 0.;

}

}

}

}
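The output-layer L1 step above (currently disabled by its PLERROR) is a soft-thresholding update: every weight moves toward zero by L1_delta = lrate * output_layer_L1_penalty_factor and is set to exactly zero when it would otherwise cross zero. A standalone sketch on a flat array (l1SoftThreshold is a hypothetical name):

```cpp
#include <cassert>
#include <vector>

// Shrink each weight toward zero by l1_delta, clamping to zero when
// |w| <= l1_delta (the same three cases as the loop in onlineStep()).
void l1SoftThreshold(std::vector<double>& weights, double l1_delta)
{
    assert(l1_delta >= 0.0);
    for (double& w : weights) {
        if (w > l1_delta)
            w -= l1_delta;
        else if (w < -l1_delta)
            w += l1_delta;
        else
            w = 0.0;
    }
}
```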

| int PLearn::NatGradSMPNNet::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output, (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 684 of file NatGradSMPNNet.cc.

References noutputs.

{

return noutputs;

}

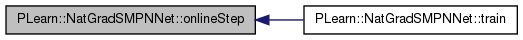

| void PLearn::NatGradSMPNNet::pvGradUpdate | ( | ) | [protected] |

Gradient statistics collection and weight update for Pascal Vincent's gradient technique (use_pvgrad).

Definition at line 1357 of file NatGradSMPNNet.cc.

References all_params, all_params_gradient, PLearn::TVec< T >::fill(), PLearn::StatsCollector::forget(), PLearn::gauss_01_cum(), PLearn::TVec< T >::length(), m, PLearn::StatsCollector::mean(), n, PLearn::StatsCollector::nnonmissing(), pv_acceleration, pv_gradstats, pv_initial_stepsize, pv_min_samples, pv_random_sample_step, pv_required_confidence, pv_stepsigns, pv_stepsizes, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), and PLearn::StatsCollector::stderror().

Referenced by onlineStep().

{

int n = all_params_gradient.length();

if(pv_stepsizes.length()==0)

{

pv_stepsizes.resize(n);

pv_stepsizes.fill(pv_initial_stepsize);

pv_stepsigns.resize(n);

pv_stepsigns.fill(true);

}

pv_gradstats->update(all_params_gradient);

real pv_deceleration = 1.0/pv_acceleration;

for(int k=0; k<n; k++)

{

StatsCollector& st = pv_gradstats->getStats(k);

int n = (int)st.nnonmissing();

if(n>pv_min_samples)

{

real m = st.mean();

real e = st.stderror();

real prob_pos = gauss_01_cum(m/e);

real prob_neg = 1.-prob_pos;

if(!pv_random_sample_step)

{

if(prob_pos>=pv_required_confidence)

{

all_params[k] += pv_stepsizes[k];

pv_stepsizes[k] *= (pv_stepsigns[k]?pv_acceleration:pv_deceleration);

pv_stepsigns[k] = true;

st.forget();

}

else if(prob_neg>=pv_required_confidence)

{

all_params[k] -= pv_stepsizes[k];

pv_stepsizes[k] *= ((!pv_stepsigns[k])?pv_acceleration:pv_deceleration);

pv_stepsigns[k] = false;

st.forget();

}

}

else // random sample update direction (sign)

{

bool ispos = (random_gen->binomial_sample(prob_pos)>0);

if(ispos) // picked positive

all_params[k] += pv_stepsizes[k];

else // picked negative

all_params[k] -= pv_stepsizes[k];

pv_stepsizes[k] *= (pv_stepsigns[k]==ispos) ?pv_acceleration :pv_deceleration;

pv_stepsigns[k] = ispos;

st.forget();

}

}

}

}
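For each parameter, pvGradUpdate() uses the mean and standard error of the gradient samples collected since its last step to estimate P(gradient > 0) = Phi(mean / stderror), and only steps once one sign reaches pv_required_confidence; the step size is multiplied by pv_acceleration when the sign repeats and divided by it when it flips. A standalone sketch of the deterministic branch (pv_random_sample_step false), with std::erfc standing in for gauss_01_cum; the struct and function names are hypothetical, and the pv_min_samples check and the statistics reset are left to the caller:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical per-parameter state mirroring pv_stepsizes[k] and pv_stepsigns[k].
struct PvParamState {
    double stepsize = 0.0;
    bool last_step_positive = true;
};

// Standard normal CDF, playing the role of gauss_01_cum().
double normalCdf(double x) { return 0.5 * std::erfc(-x / std::sqrt(2.0)); }

// Returns the signed change to apply to the parameter (0 if no sign is
// confident yet). Sign convention of pvGradUpdate(): a confidently positive
// mean gives a positive step.
double pvStep(PvParamState& st, double mean, double stderror,
              double required_confidence, double acceleration)
{
    assert(stderror > 0.0 && acceleration > 0.0);
    const double prob_pos = normalCdf(mean / stderror);
    const double prob_neg = 1.0 - prob_pos;
    bool positive;
    if (prob_pos >= required_confidence)
        positive = true;
    else if (prob_neg >= required_confidence)
        positive = false;
    else
        return 0.0;  // keep accumulating samples
    const double step = positive ? st.stepsize : -st.stepsize;
    // Grow the step size when the sign repeats, shrink it when it flips.
    st.stepsize *= (st.last_step_positive == positive) ? acceleration
                                                       : 1.0 / acceleration;
    st.last_step_positive = positive;
    return step;
}
```

Because the sign convention follows pvGradUpdate(), a caller minimizing a cost with this helper would pass statistics of the negated gradient.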

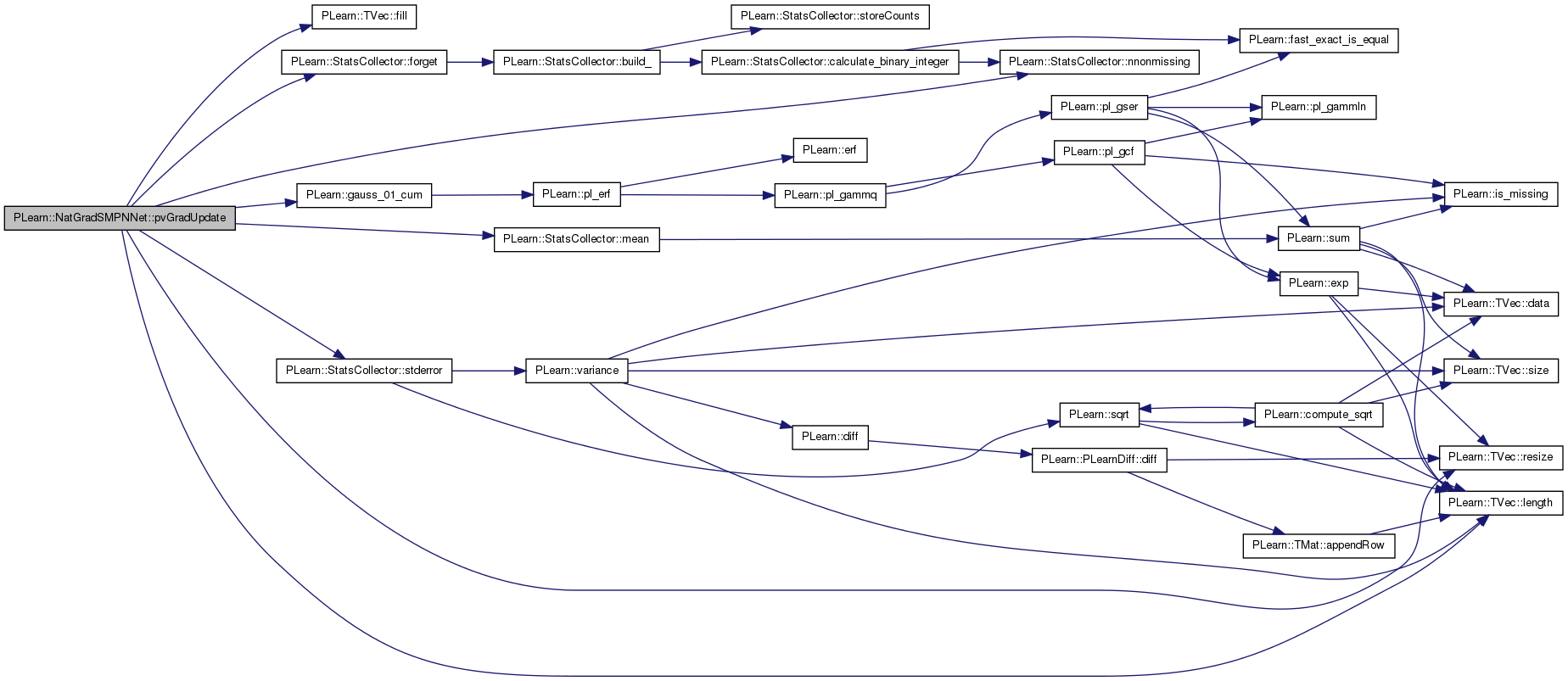

| void PLearn::NatGradSMPNNet::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 726 of file NatGradSMPNNet.cc.

References all_params, b, build(), classname(), PLearn::TVec< T >::clear(), cumulative_training_time, delayed_update, PLearn::Profiler::end(), PLearn::endl(), example_weights, PLearn::TVec< T >::fill(), PLearn::TMat< T >::fill(), PLearn::VMat::getExample(), PLearn::Profiler::getStats(), i, PLearn::PLearner::inputsize_, PLearn::VMat::length(), PLearn::StatsCollector::mean(), minibatch_size, MISSING_VALUE, neuron_outputs_per_layer, PLearn::PLearner::nstages, nsteps, PLearn::PLearner::nTrainCosts(), onlineStep(), params_int_ptr, params_update, PLASSERT, PLASSERT_MSG, PLCHECK, PLERROR, PLWARNING, PLearn::pout, PLearn::StatsCollector::pseudo_quantile(), PLearn::PLearner::report_progress, PLearn::Profiler::reset(), PLearn::TMat< T >::resize(), PLearn::sample(), semaphore_id, PLearn::PLearner::setTrainStatsCollector(), PLearn::PLearner::stage, PLearn::Profiler::start(), PLearn::StatsCollector::stderror(), PLearn::TVec< T >::subVec(), synchronize_update, PLearn::Profiler::Stats::system_duration, targets, PLearn::PLearner::targetsize(), PLearn::Profiler::ticksPerSecond(), train_costs, PLearn::PLearner::train_set, PLearn::PLearner::train_stats, PLearn::StatsCollector::update(), PLearn::Profiler::Stats::user_duration, wait_for_final_update, and PLearn::TMat< T >::width().
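train() forks ncpus - 1 child processes (ncpus being read from the NCPUS environment variable) and lets each process compute gradients on its own contiguous subset of the training set, the first nsamples % ncpus processes receiving one extra sample. The start offsets are computed in code not shown in this section, so the sketch assumes consecutive, non-overlapping ranges in CPU order; cpuSampleRange and SampleRange are hypothetical names:

```cpp
#include <cassert>

// Contiguous block [start, start + count) of training samples.
struct SampleRange { int start; int count; };

// Assumed split of nsamples over ncpus: CPUs 0 .. n_left-1 get one extra
// sample, and ranges follow each other in CPU order.
SampleRange cpuSampleRange(int nsamples, int ncpus, int iam)
{
    assert(ncpus > 0 && iam >= 0 && iam < ncpus && nsamples >= 0);
    const int n_left = nsamples % ncpus;
    const int n_per_cpu = nsamples / ncpus;
    if (iam < n_left)
        return {iam * (n_per_cpu + 1), n_per_cpu + 1};
    return {n_left * (n_per_cpu + 1) + (iam - n_left) * n_per_cpu, n_per_cpu};
}
```

With 10 samples on 4 CPUs, this gives the ranges [0, 3), [3, 6), [6, 8) and [8, 10).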

{

static int log_idx = -1;

log_idx = (log_idx + 1) % 50;

/*

PStream tmp_log = openFile("/u/delallea/tmp/tmp_log" + tostring(log_idx),

PStream::raw_ascii, "w");

tmp_log << "Starting train " << endl;

tmp_log.flush();

*/

if (inputsize_<0) {

/*

tmp_log << "Calling build" << endl;

tmp_log.flush();

*/

build();

}

targets.resize(minibatch_size,targetsize()); // the train_set's targetsize()

if(!train_set)

PLERROR("In NatGradSMPNNet::train, you did not setTrainingSet");

if(!train_stats)

setTrainStatsCollector(new VecStatsCollector());

train_costs.fill(MISSING_VALUE);

train_stats->forget();

PP<ProgressBar> pb;

//tmp_log << "Beginning stuff done" << endl;

//tmp_log.flush();

Profiler::reset("training");

Profiler::start("training");

//Profiler::pl_profile_start("Totaltraining");

if( report_progress && stage < nstages )

pb = new ProgressBar( "Training "+classname(),

nstages - stage );

Vec costs_plus_time(nTrainCosts(), MISSING_VALUE);

Vec costs = costs_plus_time.subVec(0, train_costs.width());

int nsamples = train_set->length();

// Obtain the number of CPUs we want to use.

char* ncpus_ptr = getenv("NCPUS");

if (!ncpus_ptr)

PLERROR("In NatGradSMPNNet::train - The environment variable 'NCPUS' "

"must be set (to the number of CPUs being used)");

int ncpus = atoi(ncpus_ptr);

// Semaphore to know which cpu should be updating weights next.

if (semaphore_id >= 0) {

// First get rid of existing semaphore.

int success = semctl(semaphore_id, 0, IPC_RMID);

if (success < 0)

PLERROR("In NatGradSMPNNet::train - Could not remove previous "

"semaphore (errno = %d)", errno);

semaphore_id = -1;

}

// The semaphore has 'ncpus' + 2 values.

// The first one is the index of the CPU that will be next to update

// weights.

// The next 'ncpus' values are 0/1 flags that are initialized to 0, and are

// set to 1 once the corresponding CPU has finished all updates for this

// training period.

// Finally, the last value is 0 when 'synchronize_update' is false, and

// otherwise is:

// - in a first step, the number of CPUs that have finished performing

// their mini-batch computation,

// - in a second step, the number of CPUs that have finished updating the

// shared parameters.

semaphore_id = semget(IPC_PRIVATE, ncpus + 2, 0666 | IPC_CREAT);

if (semaphore_id == -1)

PLERROR("In NatGradSMPNNet::train - Could not create semaphore "

"(errno = %d)", errno);

// Initialize all values in the semaphore to zero.

semun semun_v;

semun_v.val = 0;

for (int i = 0; i < ncpus + 2; i++) {

int success = semctl(semaphore_id, i, SETVAL, semun_v);

if (success != 0)

PLERROR("In NatGradSMPNNet::train - Could not initialize semaphore"

" value (errno = %d)", errno);

}
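
// Illustration (editorial note, arbitrary example value): with NCPUS=4 the

// semaphore set created above holds 4 + 2 = 6 values:

// [0] index of the CPU holding the update token (0, 1, 2 or 3),

// [1]..[4] the per-CPU 0/1 "finished" flags,

// [5] the 'synchronize_update' counter (index ncpus + 1).

// The loop above sets all six values to 0, so CPU 0 holds the token first.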

// Initialize current stage, stored in integer shared memory.

int stage_idx = 0;

params_int_ptr[stage_idx] = stage;

//tmp_log << "Ready to fork" << endl;

//tmp_log.flush();

// Ignore SIGCLD so that terminated child processes are reaped automatically:

// this avoids leaving defunct (zombie) processes without calling wait().

signal(SIGCLD, SIG_IGN);

// Fork one process per CPU; the parent process itself acts as CPU 0.

int iam = 0;

for (int cpu = 1; cpu < ncpus ; cpu++)

if (fork() == 0) {

iam = cpu;

break;

}
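
// Illustration (editorial note, arbitrary example value): with NCPUS=3 the

// parent forks twice. The child created when cpu == 1 sets iam = 1 and

// breaks out of the loop, so it never forks itself; the child created when

// cpu == 2 does the same with iam = 2; the parent finishes the loop with

// iam = 0. This yields exactly 3 processes, with iam = 0, 1 and 2.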

if (!iam) {

//tmp_log << "Forked" << endl;

//tmp_log.flush();

}

// Each process computes gradients over its own subset of samples (indices

// from 'start' included to 'start + my_n_samples' excluded in the training set).

int n_left = nsamples % ncpus;

int n_per_cpu = nsamples / ncpus;

int start, my_n_samples;

if (iam < n_left) {

// This CPU is given one extra training sample to compensate for the

// fact that the number of samples is not an exact multiple of the

// number of CPUs.

start = (n_per_cpu + 1) * iam;

my_n_samples = n_per_cpu + 1;

} else {

start = (n_per_cpu + 1) * n_left + n_per_cpu * (iam - n_left);

my_n_samples = n_per_cpu;

}
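
// Worked example (editorial illustration, arbitrary values): with

// nsamples = 10 and ncpus = 3, n_per_cpu = 3 and n_left = 1, so:

// CPU 0 (iam < n_left): start = 4 * 0 = 0, my_n_samples = 4 -> samples 0..3,

// CPU 1: start = 4 * 1 + 3 * 0 = 4, my_n_samples = 3 -> samples 4..6,

// CPU 2: start = 4 * 1 + 3 * 1 = 7, my_n_samples = 3 -> samples 7..9.

// The ranges are contiguous, disjoint, and cover all 10 samples.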

if (iam == 0)

PLASSERT_MSG( start == 0, "First CPU must start at first sample" );

if (iam == ncpus - 1)

PLASSERT_MSG( start + my_n_samples == nsamples,

"Last CPU must end at last sample" );

// The total number of examples that must be seen is given by 'stage_incr',

// computed as 'nstages - stage'. Each CPU is responsible for going through

// a fraction of 'stage_incr', denoted by 'my_stage_incr'.

int stage_incr = nstages - stage;

int stage_incr_per_cpu = stage_incr / ncpus;

int stage_incr_left = stage_incr % ncpus;

int my_stage_incr = iam >= stage_incr_left ? stage_incr_per_cpu

: stage_incr_per_cpu + 1;
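
// Worked example (editorial illustration, arbitrary values): with

// stage = 0, nstages = 10 and ncpus = 3, stage_incr = 10,

// stage_incr_per_cpu = 3 and stage_incr_left = 1, so CPU 0 gets

// my_stage_incr = 4 while CPUs 1 and 2 get 3 each, for a total of 10.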

PP<PTimer> ptimer;

// Number of mini-batches processed since the last update.

int n_minibatches_per_update = 0;

StatsCollector nmbpu_stats; // Use -1 in constructor if you want the median.

if (iam == 0) {

//tmp_log << "Starting loop" << endl;

//tmp_log.flush();

ptimer = new PTimer();

Profiler::reset("big_loop");

Profiler::start("big_loop");

ptimer->startTimer("big_loop");

}

// TODO Maybe...

// - see if it has anything to do with accessing shared memory

// - try to mix in data with a lower or higher measure_every, just to see

// if the difference in behaviors in speedup_whilefalse is due to having

// fewer examples to process.

//pout << "CPU " << iam << ": my_stage_incr = " << my_stage_incr << endl;

for(int i = 0; i < my_stage_incr; i++)

{

int sample = start + i % my_n_samples;

int b = i % minibatch_size;

Vec input = neuron_outputs_per_layer[0](b);

Vec target = targets(b);

//Profiler::pl_profile_start("getting_data");

train_set->getExample(sample, input, target, example_weights[b]);
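
// Worked example (editorial illustration, arbitrary values): with

// start = 7, my_n_samples = 3, minibatch_size = 4 and my_stage_incr = 6,

// iterations i = 0..5 read samples 7, 8, 9, 7, 8, 9 into rows

// b = 0, 1, 2, 3, 0, 1. The block below then runs at i = 3 (full

// mini-batch, nsteps += 4) and at i = 5 (last, partial mini-batch,

// nsteps += 2).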

//Profiler::pl_profile_end("getting_data");

if (b == minibatch_size - 1 || i == my_stage_incr - 1 )

{

// Read the current stage value (will be used to compute the

// current learning rate).

int cur_stage = params_int_ptr[stage_idx];

PLASSERT( cur_stage >= 0 );

// Note that we should actually call onlineStep only on the subset

// of samples that are new (compared to the previous mini-batch).

// This is left as a TODO since it is not a priority.

/*

string samples_str = tostring(samples);

printf("CPU %d computing (cur_stage = %d) on samples: %s\n",

iam, cur_stage, samples_str.c_str());

*/

onlineStep(cur_stage, targets, train_costs, example_weights );

n_minibatches_per_update++;

/*

pout << "CPU " << iam << ": n_minibatches_per_update = "

<< n_minibatches_per_update << endl;

*/

/*

sleep(iam);

string update = tostring(params_update);

printf("\nCPU %d's current update: %s\n", iam, update.c_str());

*/

nsteps += b + 1;

/*

for (int i=0;i<minibatch_size;i++)

{

costs << train_costs(b);

train_stats->update( costs_plus_time );

}

*/

// Update weights if it is this CPU's turn.

bool performed_update = false; // True when this CPU has updated.

while (true) {

int sem_value = semctl(semaphore_id, 0, GETVAL);

if (sem_value == iam) {

int n_ready = 0;

if (synchronize_update && !performed_update) {

// We first indicate that this CPU is ready to perform its

// update.

n_ready = semctl(semaphore_id, ncpus + 1, GETVAL);

n_ready++;

semun_v.val = n_ready;

int success = semctl(semaphore_id, ncpus + 1, SETVAL,

semun_v);

PLCHECK( success == 0 );

}
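
// Illustration (editorial note, assuming 'synchronize_update' and

// 'delayed_update' are both true, with ncpus = 2): on the first pass of the

// token, CPU 0 raises the counter to 1 and CPU 1 to 2, and neither updates.

// On the second pass CPU 0 raises it to 3 and CPU 1 to 4; since both values

// exceed ncpus, each CPU adds its 'params_update' to 'all_params'. CPU 1

// then sees n_ready == 2 * ncpus, resets the counter to 0 and exits. CPU 0

// exits on its next turn, because it has already updated and so keeps a

// local n_ready of 0.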

if (delayed_update && (!synchronize_update ||

(!performed_update && n_ready > ncpus)))

{

// Once all CPUs are ready we can actually perform the

// updates.

//printf("CPU %d updating (nsteps = %d)\n", iam, nsteps);

all_params += params_update;

//params_update += all_params;

params_update.clear();

nmbpu_stats.update(real(n_minibatches_per_update));

n_minibatches_per_update = 0;

performed_update = true;

}

if (nsteps > 0) {

// Update the current stage.

cur_stage = params_int_ptr[stage_idx];

PLASSERT( cur_stage >= 0 );

int new_stage = cur_stage + nsteps;

params_int_ptr[stage_idx] = new_stage;

nsteps = 0;

}
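
// Illustration (editorial note, arbitrary values): if the shared stage is

// 100 and this CPU has accumulated nsteps = 4 examples since its last turn,

// the shared stage becomes 104 and nsteps is reset to 0. Within this loop,

// the read-modify-write is only executed by the CPU holding the token.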

if (n_ready == 2 * ncpus) {

// The last CPU has updated the parameters. All CPUs can

// now break out of this loop.

n_ready = semun_v.val = 0;

int success = semctl(semaphore_id, ncpus + 1, SETVAL,

semun_v);

PLCHECK( success == 0 );

}

// Give update token to next CPU.

sem_value = (sem_value + 1) % ncpus;

semun_v.val = sem_value;

int success = semctl(semaphore_id, 0, SETVAL, semun_v);

if (success != 0)

PLERROR("In NatGradSMPNNet::train - Could not update "

"semaphore with next CPU (errno = %d, returned "

"value = %d, set value = %d)", errno, success,

semun_v.val);
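
// Illustration (editorial note, arbitrary example value): with ncpus = 3 the

// token value cycles 0 -> 1 -> 2 -> 0, so each CPU gets one turn per round.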

if (!delayed_update || n_ready == 0)

// If 'synchronize_update' is false this is always true.

// If 'synchronize_update' is true this means all CPUs have

// updated the parameters.

break;

} else {

if (!synchronize_update)

// We do not wait our turn: instead we move on to the next

// minibatch.

break;

if (performed_update) {

// TODO We could break here by checking the 'n_ready'

// semaphore: once it is reset to zero everyone can exit at

// once without necessarily doing it in turn.

}

}

}

}

/*

if (params_averaging_coeff!=1.0 &&

b==minibatch_size-1 &&

(stage+1)%(minibatch_size*params_averaging_freq)==0)

{

PLERROR("Not implemented for SMP");

multiplyScaledAdd(all_params, 1-params_averaging_coeff,

params_averaging_coeff, all_mparams);

}

if( pb ) {

PLERROR("Progress bar not implemented for SMP");

pb->update( stage + 1 );

}

*/

}

if (iam == 0) {

//tmp_log << "Loop ended" << endl;

//tmp_log.flush();

Profiler::end("big_loop");

ptimer->stopTimer("big_loop");

}

if (!wait_for_final_update) {

if (nsteps > 0) {

//printf("CPU %d final updating (nsteps =%d)\n", iam, nsteps);

if (delayed_update) {

all_params += params_update;

params_update.clear();

}

// Note that the line below is not safe: if two CPUs are running it