|

PLearn 0.1

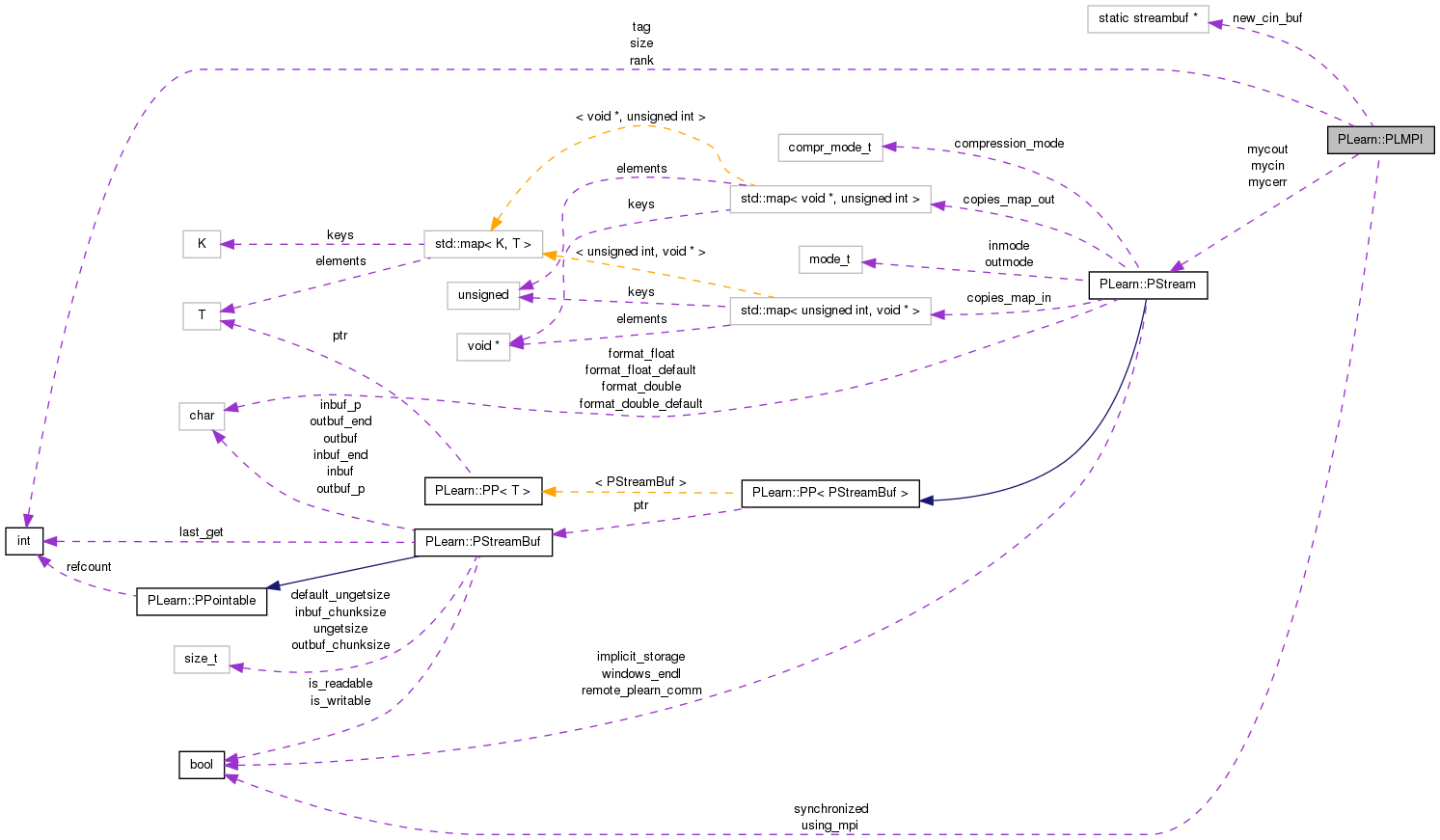

**PLMPI is just a "namespace holder" (because we're not actually using namespaces) for a few MPI-related variables. All members are static.**

#include <PLMPI.h>

Static Public Member Functions

| Return type | Member function |
| --- | --- |
| static void | init (int *argc, char ***argv) |
| static void | finalize () |
| static void | exchangeBlocks (double *data, int n, int blocksize, double *buffer=0) |
| static void | exchangeColumnBlocks (Mat sourceBlock, Mat destBlocks) |
| static void | exchangeBlocks (float *data, int n, int blocksize, float *buffer=0) |

Static Public Attributes

| Type | Attribute | Description |
| --- | --- | --- |
| static bool | using_mpi = false | true when USING_MPI is defined, false otherwise |
| static int | size = 0 | total number of nodes (or processes) running in this MPI_COMM_WORLD (0 if not using mpi) |
| static int | rank = 0 | rank of this node (if not using mpi it's always 0) |
| static bool | synchronized = true | Do ALL the nodes have a synchronized state and are carrying the same sequential instructions? |
| static PStream | mycout | |
| static PStream | mycerr | |
| static PStream | mycin | |
| static int | tag = 2909 | The default tag to be used by all send/receive (we typically use this single tag through all of PLearn) |

Static Protected Attributes

| Type | Attribute |
| --- | --- |
| static streambuf * | new_cin_buf = 0 |


Example of code using the PLMPI facility:

In your main function, make sure to call

int main(int argc, char** argv)

{

PLMPI::init(&argc,&argv);

... [ your code here ]

PLMPI::finalize();

}

Inside the #if USING_MPI section, you can use any MPI calls you see fit.

Note the useful global static variables PLMPI::rank, which gives the rank of your process, and PLMPI::size, which gives the number of parallel processes running in MPI_COMM_WORLD. These are initialised in the init code by calling MPI_Comm_size and MPI_Comm_rank. They are provided so you don't have to call those functions each time.

File input/output
-----------------

NFS shared files can typically be opened for *reading* from all the nodes simultaneously without a problem.

For performance reasons, it may sometimes be useful to have local copies (in a /tmp-like directory) of heavily accessed files so that each node can open its own local copy for reading, resulting in no NFS network traffic and no file-server overload.

In general, a file cannot be opened for writing (or read and write) simultaneously by several processes/nodes. Special handling will typically be necessary. One strategy is to make rank #0 solely responsible for the file, with all other nodes communicating with rank #0 to access it.

Direct non-read operations on file descriptors or file-like objects through system or C calls are likely to result in undesired behaviour when done simultaneously on all nodes! This includes calls to the C library's printf and scanf functions. Such code will generally require specific handling or rewriting for parallel execution.

cin, cout and cerr
------------------

Currently, the init function redirects the cout and cin of all processes of rank other than #0 to/from /dev/null but cerr is left as is. Thus sections not specifically written for parallel execution will have the following (somewhat reasonable) default behaviour:

If for some strange reason you *do* want a non-rank #0 node to write to your terminal's cout, you can use PLMPI::mycout (which is copied from the initial cout), but the result will probably be ugly and intermixed with the other processes' cout.
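The redirection described in this section can be illustrated without MPI or PLearn. The following is only a minimal sketch: `DiscardBuf`, `muteUnlessRoot` and `outputSeenFromRank` are hypothetical names that are not part of PLearn, the rank is passed in as a plain integer instead of being read from PLMPI::rank, and a discarding stream buffer stands in for /dev/null.

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <streambuf>
#include <string>

// Stream buffer that discards everything written to it (stand-in for /dev/null).
class DiscardBuf : public std::streambuf {
protected:
    int_type overflow(int_type ch) override { return traits_type::not_eof(ch); }
};

// Hypothetical helper: on ranks other than 0, point `stream` at `sink`.
// Returns the buffer that was installed before the call so it can be restored.
std::streambuf* muteUnlessRoot(std::ostream& stream, int rank, std::streambuf& sink)
{
    if (rank == 0)
        return stream.rdbuf();      // rank 0 keeps its original buffer
    return stream.rdbuf(&sink);     // other ranks write into the sink
}

// Demo: returns what a given rank would actually emit through the stream.
std::string outputSeenFromRank(int rank)
{
    std::ostringstream captured;
    std::ostream& out = captured;   // use the base-class rdbuf(streambuf*) overload
    DiscardBuf sink;
    std::streambuf* original = muteUnlessRoot(out, rank, sink);
    assert(original != nullptr);
    out << "hello";
    out.rdbuf(original);            // restore before `sink` goes out of scope
    return captured.str();
}
```

With this sketch, `outputSeenFromRank(0)` yields the text that was written, while any other rank yields an empty string, which mirrors the default behaviour of cout after PLMPI::init.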

pofstream
---------

NOTE: While using pofstream may be useful for quickly adapting old code, I would rather recommend using the regular ofstream in conjunction with if(PLMPI::rank==0) blocks to control what to do.
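The recommendation in the note above (a regular output stream guarded by a rank test) might look like the following. This is a hedged sketch rather than PLearn code: `writeLineOnRoot` and `demoRootOnlyWrite` are hypothetical helpers, and the `rank` argument stands in for PLMPI::rank so that the example compiles without PLearn or MPI.

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <string>

// Hypothetical helper (not part of PLearn): writes `line` only on rank 0.
// Returns true when the line was actually written.
bool writeLineOnRoot(int rank, std::ostream& out, const std::string& line)
{
    if (rank != 0)
        return false;               // every other rank stays silent
    out << line << '\n';
    return true;
}

// Demo: simulate three ranks executing the same code against one log.
// Only the iteration standing in for rank 0 records its line.
std::string demoRootOnlyWrite()
{
    std::ostringstream log;
    int writers = 0;
    for (int rank = 0; rank < 3; ++rank)
        if (writeLineOnRoot(rank, log, "epoch 1 done"))
            ++writers;
    assert(writers == 1);
    return log.str();
}
```

In real code the stream would typically be a std::ofstream opened only inside the rank test, so that non-root processes never touch the file at all.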

The PLMPI::synchronized flag
----------------------------

This flag is important for a particular form of parallelism:

In this paradigm, the data on all nodes (running an identical program) are required to be in the same synchronized state before they enter a parallel computation. The nodes will typically all execute the same "sequential" section of the code, on identical data, at roughly the same time. This state of things will be denoted by "synchronized=true". Then at some point they may temporarily enter a section where each node will carry out a different computation (or a similar computation but on different parts of the data). This state will be denoted by "synchronized=false". They typically resynchronize their states before leaving the section, and resume their synchronized sequential behaviour until they encounter the next parallel section.

It is very important that sections using this type of parallelism check that synchronized==true prior to entering their parallel implementation, and fall back to a strictly sequential implementation otherwise. They should also set the flag to false at entry (so that functions they call will stay sequential) and then set it back to true after resynchronization, prior to resuming the synchronized "sequential" computations.

The real meaning of synchronized=true prior to entering a parallel code is actually "all the data *this section uses* is the same on all nodes, when they reach this point" rather than "all the data in the whole program is the same". This is a subtle difference, but can allow you to set or unset the flag on a fine grain basis prior to calling potentially parallel functions.

Here is what typical code should look like under this paradigm:

int do_something( ... parameters ...)

{

... sequential part

if(USING_MPI && PLMPI::synchronized && size_of_problem_is_worth_a_parallel_computation)

{ // Parallel implementation

#if USING_MPI

PLMPI::synchronized = false;

... each node starts doing something different, e.g. switch(PLMPI::rank) ...

Here we may call other functions, which should run entirely in sequential mode (unless you know what you are doing!)

... we resynchronize the state of each node (collect their collective answer, etc.), e.g. MPI_Allgather(...)

PLMPI::synchronized = true;

#endif

}

else // default sequential implementation

{

}

}
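The flag discipline described in this section (check the flag, clear it on entry, restore it after resynchronization) can also be expressed with a small scope guard. The sketch below is an illustration under stated assumptions, not PLearn code: `sketch::Flags::synchronized` is a stand-in for PLMPI::synchronized, `UnsynchronizedScope` and `doSomething` are hypothetical names, and no actual MPI communication takes place.

```cpp
namespace sketch {

// Stand-in for PLMPI::synchronized so the example compiles without PLearn.
struct Flags { static bool synchronized; };
bool Flags::synchronized = true;

// Hypothetical guard: clears the flag on entry and sets it back on exit.
// Caveat: the destructor also runs on early return or exception, so real code
// must make sure the nodes have truly resynchronized before the scope ends.
class UnsynchronizedScope {
public:
    UnsynchronizedScope() { Flags::synchronized = false; }
    ~UnsynchronizedScope() { Flags::synchronized = true; }
    UnsynchronizedScope(const UnsynchronizedScope&) = delete;
    UnsynchronizedScope& operator=(const UnsynchronizedScope&) = delete;
};

// Returns true when the "parallel" branch was taken, false for the sequential one.
// If `innerTookParallel` is given, a nested call is made from inside the parallel
// branch and its result is stored there.
bool doSomething(bool worthParallelizing, bool* innerTookParallel = nullptr)
{
    if (Flags::synchronized && worthParallelizing) {
        UnsynchronizedScope scope;
        // Nested calls see synchronized == false and fall back to sequential code.
        if (innerTookParallel)
            *innerTookParallel = doSomething(true);
        // ... per-rank work and resynchronization would go here ...
        return true;
    }
    // Default sequential implementation.
    return false;
}

} // namespace sketch
```

Calling `sketch::doSomething(true, &inner)` from a synchronized state takes the parallel branch, leaves `inner` set to false because the nested call stayed sequential, and restores the flag to true on return.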

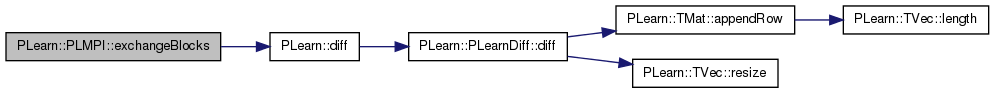

void PLearn::PLMPI::exchangeBlocks ( double * data, int n, int blocksize, double * buffer = 0 ) [static]

Definition at line 100 of file PLMPI.cc.

References PLearn::diff(), i, PLERROR, rank, and size.

{

//#if USING_MPI

int blockstart = PLMPI::rank*blocksize;

//fprintf(stderr,"blockstart = %d\n",blockstart);

int theblocksize = blocksize;

int diff=n-blockstart;

if (blocksize>diff) theblocksize=diff;

if (theblocksize<=0)

PLERROR("PLMPI::exchangeBlocks with block of size %d (b=%d,n=%d,r=%d,N=%d)",

theblocksize,blocksize,n,PLMPI::rank,PLMPI::size);

//fprintf(stderr,"theblocksize = %d\n",theblocksize);

if (blocksize*PLMPI::size==n && buffer)

{

memcpy(buffer,&data[blockstart],theblocksize*sizeof(double));

#if USING_MPI

MPI_Allgather(buffer,blocksize,MPI_DOUBLE,

data,blocksize,MPI_DOUBLE,MPI_COMM_WORLD);

#endif

}

else

{

for (int i=0;i<PLMPI::size;i++)

{

int bstart = i*blocksize;

diff = n-bstart;

int bsize = blocksize;

if (bsize>diff) bsize=diff;

//if (i==PLMPI::rank)

//{

//fprintf(stderr,"start broadcast of %d, bstart=%d, size=%d\n",i,bstart,bsize);

//for (int j=0;j<bsize;j++)

//cerr << data[bstart+j] << endl;

//}

#if USING_MPI

MPI_Bcast(&data[bstart],bsize,MPI_DOUBLE,i,MPI_COMM_WORLD);

#endif

//printf("done broadcast of %d",i);

}

}

//#endif

}
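The index arithmetic in the listing above can be checked in isolation. The following sketch reproduces only the block-extent computation and the test that selects the MPI_Allgather path; `BlockExtent`, `blockForRank` and `takesAllgatherPath` are hypothetical names introduced for illustration, and the rank and process count are plain parameters instead of PLMPI::rank and PLMPI::size.

```cpp
#include <algorithm>
#include <cassert>

// Start offset and length of the block owned by one rank.
struct BlockExtent {
    int start;
    int length;   // <= 0 corresponds to the case where the listing calls PLERROR
};

// Mirrors the listing: blockstart = rank*blocksize, clipped to the n elements.
BlockExtent blockForRank(int rank, int n, int blocksize)
{
    assert(rank >= 0 && blocksize > 0);
    BlockExtent extent;
    extent.start = rank * blocksize;
    extent.length = std::min(blocksize, n - extent.start);
    return extent;
}

// Mirrors the condition guarding the MPI_Allgather branch: all blocks have
// equal size and the caller supplied a scratch buffer.
bool takesAllgatherPath(int n, int blocksize, int nprocs, bool hasBuffer)
{
    return blocksize * nprocs == n && hasBuffer;
}
```

For example, with n = 10 and blocksize = 4, rank 2 owns the two elements starting at offset 8, and the exchange falls back to one MPI_Bcast per rank because 4 * 3 differs from 10.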

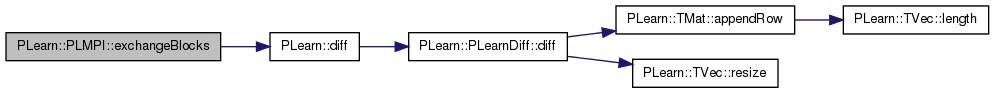

void PLearn::PLMPI::exchangeBlocks ( float * data, int n, int blocksize, float * buffer = 0 ) [static]

Definition at line 143 of file PLMPI.cc.

References PLearn::diff(), i, PLERROR, rank, and size.

{

//#if USING_MPI

int blockstart = PLMPI::rank*blocksize;

//fprintf(stderr,"blockstart = %d\n",blockstart);

int theblocksize = blocksize;

int diff=n-blockstart;

if (blocksize>diff) theblocksize=diff;

if (theblocksize<=0)

PLERROR("PLMPI::exchangeBlocks with block of size %d (b=%d,n=%d,r=%d,N=%d)",

theblocksize,blocksize,n,PLMPI::rank,PLMPI::size);

//fprintf(stderr,"theblocksize = %d\n",theblocksize);

if (blocksize*PLMPI::size==n && buffer)

{

memcpy(buffer,&data[blockstart],theblocksize*sizeof(float));

#if USING_MPI

MPI_Allgather(buffer,blocksize,MPI_FLOAT,

data,blocksize,MPI_FLOAT,MPI_COMM_WORLD);

#endif

}

else

{

for (int i=0;i<PLMPI::size;i++)

{

int bstart = i*blocksize;

diff = n-bstart;

int bsize = blocksize;

if (bsize>diff) bsize=diff;

//if (i==PLMPI::rank)

//{

//fprintf(stderr,"start broadcast of %d, bstart=%d, size=%d\n",i,bstart,bsize);

//for (int j=0;j<bsize;j++)

//cerr << data[bstart+j] << endl;

//}

#if USING_MPI

MPI_Bcast(&data[bstart],bsize,MPI_FLOAT,i,MPI_COMM_WORLD);

#endif

//printf("done broadcast of %d",i);

}

}

//#endif

}

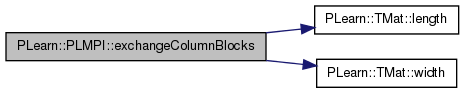

void PLearn::PLMPI::exchangeColumnBlocks ( Mat sourceBlock, Mat destBlocks ) [static]

Definition at line 186 of file PLMPI.cc.

References i, PLearn::TMat< T >::length(), PLERROR, PLWARNING, rank, size, and PLearn::TMat< T >::width().

{

#if USING_MPI

int width = sourceBlock.width();

int length = sourceBlock.length();

int total_width = destBlocks.width();

if (total_width%PLMPI::size == 0) // dest width is a multiple of size

{

for (int i=0; i<length; i++)

{

MPI_Allgather(sourceBlock[i],width,PLMPI_REAL,

destBlocks[i],width,PLMPI_REAL,MPI_COMM_WORLD);

}

}

else // last block has different width

{

int size_minus_one = PLMPI::size-1;

int* counts = (int*)malloc(sizeof(int)*PLMPI::size);

int* displs = (int*)malloc(sizeof(int)*PLMPI::size);

int* ptr_counts = counts;

int* ptr_displs = displs;

int norm_width = total_width / PLMPI::size + 1; // width of all blocks except last

int last_width = total_width - norm_width*size_minus_one; // width of last block

if (last_width<=0)

PLERROR("In PLMPI::exchangeColumnsBlocks: unproper choice of processes (%d) for matrix width (%d) leads "

"to width = %d for first mats and width = %d for last mat.",PLMPI::size,total_width,norm_width,last_width);

// sanity check

if (PLMPI::rank==size_minus_one)

{

if (width!=last_width)

PLERROR("In PLMPI::exchangeColumnsBlocks: width of last block is %d, should be %d.",width,last_width);

}

else

if (width!=norm_width)

PLERROR("In PLMPI::exchangeColumnsBlocks: width of block %d is %d, should be %d.",PLMPI::rank,width,norm_width);

for (int i=0;i<size_minus_one;i++)

{

*ptr_counts++ = norm_width;

*ptr_displs++ = norm_width*i;

}

// last block

*ptr_counts = last_width;

*ptr_displs = norm_width*size_minus_one;

for (int i=0; i<length; i++)

{

MPI_Allgatherv(sourceBlock[i],width,PLMPI_REAL,

destBlocks[i],counts,displs,PLMPI_REAL,MPI_COMM_WORLD);

}

free(counts);

free(displs);

}

#else

PLWARNING("PLMPI::exchangeColumnsBlocks: in order to use this function, you should recompile with the flag USING_MPI set to 1.");

#endif

}
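The counts and displacements that the listing above passes to MPI_Allgatherv can be computed and inspected without MPI. This is a minimal sketch under stated assumptions: `ColumnLayout` and `columnLayout` are hypothetical names, std::vector replaces the malloc'ed arrays, and the evenly divisible case (which the listing handles with MPI_Allgather) is folded into the same function for convenience.

```cpp
#include <cassert>
#include <vector>

// Per-rank column counts and starting offsets within one destination row.
struct ColumnLayout {
    std::vector<int> counts;
    std::vector<int> displs;
    bool valid;   // false when the last block would be empty or negative
};

ColumnLayout columnLayout(int total_width, int nprocs)
{
    assert(nprocs > 0);
    ColumnLayout layout;
    const bool even = (total_width % nprocs == 0);
    // Width of every block except the last, as in the listing.
    const int norm_width = even ? total_width / nprocs
                                : total_width / nprocs + 1;
    // The last block takes whatever columns remain.
    const int last_width = total_width - norm_width * (nprocs - 1);
    layout.valid = last_width > 0;
    for (int i = 0; i < nprocs; ++i) {
        layout.counts.push_back(i == nprocs - 1 ? last_width : norm_width);
        layout.displs.push_back(norm_width * i);
    }
    return layout;
}
```

A width of 10 over 3 processes gives counts 4, 4, 2 at offsets 0, 4, 8. A width of 9 over 4 processes gives a normal width of 3 and leaves nothing for the last block, which is the situation the listing reports through PLERROR.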

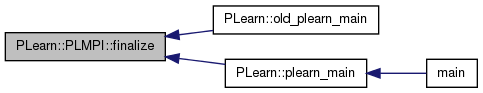

void PLearn::PLMPI::finalize ( ) [static]

Definition at line 92 of file PLMPI.cc.

Referenced by PLearn::old_plearn_main(), and PLearn::plearn_main().

{

#if USING_MPI

MPI_Finalize();

#endif

}

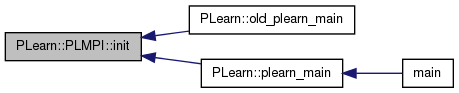

void PLearn::PLMPI::init ( int * argc, char *** argv ) [static]

Definition at line 71 of file PLMPI.cc.

References PLearn::nullin, and PLearn::nullout.

Referenced by PLearn::old_plearn_main(), and PLearn::plearn_main().

{

#ifndef WIN32

mycin = new FdPStreamBuf(0, -1);

mycout = new FdPStreamBuf(-1, 1);

mycerr = new FdPStreamBuf(-1, 2, false, false);

#if USING_MPI

MPI_Init( argc, argv );

MPI_Comm_size( MPI_COMM_WORLD, &size) ;

MPI_Comm_rank( MPI_COMM_WORLD, &rank );

if(rank!=0)

{

cout.rdbuf(nullout.rdbuf());

cin.rdbuf(nullin.rdbuf());

}

#endif

#endif // WIN32

}

PStream PLearn::PLMPI::mycerr [static]

PStream PLearn::PLMPI::mycin [static]

PStream PLearn::PLMPI::mycout [static]

streambuf * PLearn::PLMPI::new_cin_buf = 0 [static, protected]

int PLearn::PLMPI::rank = 0 [static]

rank of this node (if not using mpi it's always 0)

Definition at line 234 of file PLMPI.h.

Referenced by PLearn::TextProgressBarPlugin::addProgressBar(), PLearn::NegLogProbCostFunction::evaluate(), exchangeBlocks(), exchangeColumnBlocks(), PLearn::SumOfVariable::fbprop(), PLearn::SumOfVariable::fprop(), PLearn::Learner::measure(), PLearn::Experiment::run(), PLearn::Learner::save(), PLearn::Learner::setExperimentDirectory(), PLearn::Learner::stop_if_wanted(), PLearn::Learner::test(), PLearn::SupervisedDBN::train(), PLearn::PartSupervisedDBN::train(), PLearn::HintonDeepBeliefNet::train(), PLearn::GaussPartSupervisedDBN::train(), PLearn::TextProgressBarPlugin::update(), and PLearn::verrormsg().

int PLearn::PLMPI::size = 0 [static]

total number of nodes (or processes) running in this MPI_COMM_WORLD (0 if not using mpi)

Definition at line 233 of file PLMPI.h.

Referenced by PLearn::NegLogProbCostFunction::evaluate(), exchangeBlocks(), exchangeColumnBlocks(), PLearn::SumOfVariable::fbprop(), PLearn::SumOfVariable::fprop(), PLearn::Learner::test(), PLearn::SupervisedDBN::train(), PLearn::PartSupervisedDBN::train(), PLearn::HintonDeepBeliefNet::train(), and PLearn::GaussPartSupervisedDBN::train().

bool PLearn::PLMPI::synchronized = true [static]

Do ALL the nodes have a synchronized state and are carrying the same sequential instructions?

The synchronized flag is used for a particular kind of parallelism and is described in more detail above, including a sample of how it should typically be used. When synchronized is true at a given point in the instruction stream, it roughly means: "all the data *used by the following section* is the same on all nodes when they are at this point". It is set to true initially (but will be set to false if you launch the PLMPIServ server, which uses a different parallelisation paradigm).

Definition at line 245 of file PLMPI.h.

Referenced by PLearn::Learner::measure(), and PLearn::Learner::test().

int PLearn::PLMPI::tag = 2909 [static]

The default tag to be used by all send/receive (we typically use this single tag through all of PLearn)

Defaults to 2909

Definition at line 260 of file PLMPI.h.

Referenced by PLearn::MPIPStreamBuf::fill_mpibuf(), PLearn::MPIPStreamBuf::read_(), and PLearn::MPIPStreamBuf::write_().

bool PLearn::PLMPI::using_mpi = false [static]
