PLearn 0.1

#include <RegressionTreeRegisters.h>

Public Member Functions | |

| RegressionTreeRegisters () | |

| RegressionTreeRegisters (VMat source_, bool report_progress_=false, bool vebosity_=false, bool do_sort_rows=true, bool mem_tsource_=true) | |

| RegressionTreeRegisters (VMat source_, TMat< RTR_type > tsorted_row_, VMat tsource_, bool report_progress_=false, bool vebosity_=false, bool do_sort_rows=true, bool mem_tsource_=true) | |

| virtual | ~RegressionTreeRegisters () |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RegressionTreeRegisters * | deepCopy (CopiesMap &copies) const |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be. | |

| virtual void | build () |

| Simply calls inherited::build() then build_(). | |

| void | reinitRegisters () |

| void | registerLeave (RTR_type_id leave_id, int row) |

| virtual real | get (int i, int j) const |

| This method must be implemented in all subclasses. | |

| real | getTarget (int row) const |

| real | getWeight (int row) const |

| void | setWeight (int row, real val) |

| bool | haveMissing () const |

| RTR_type_id | getNextId () |

| void | getAllRegisteredRow (RTR_type_id leave_id, TVec< RTR_type > ®) const |

| reg.size() == the number of row that we will put in it. | |

| void | getAllRegisteredRow (RTR_type_id leave_id, int col, TVec< RTR_type > ®) const |

| reg.size() == the number of row that we will put in it. | |

| void | getAllRegisteredRow (RTR_type_id leave_id, int col, TVec< RTR_type > ®, TVec< pair< RTR_target_t, RTR_weight_t > > &t_w, Vec &value) const |

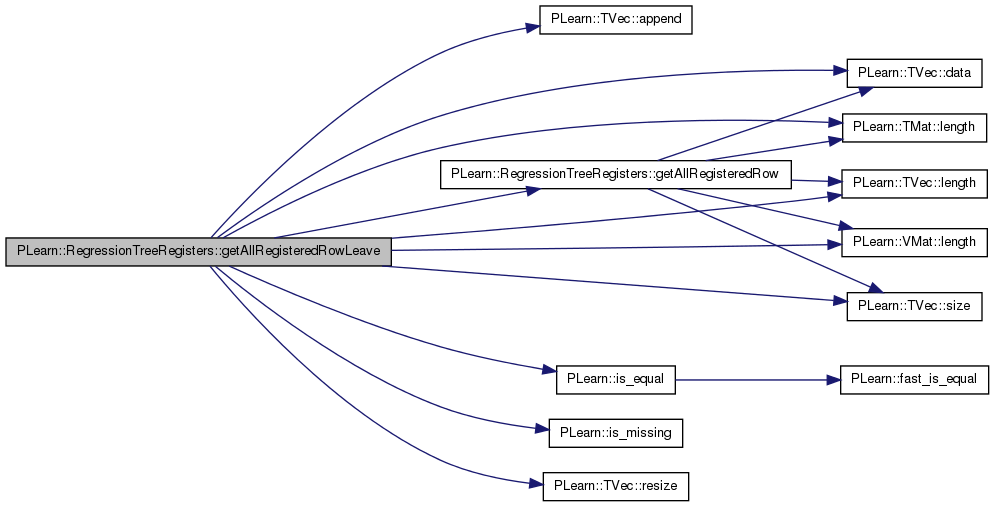

| void | getAllRegisteredRowLeave (RTR_type_id leave_id, int col, TVec< RTR_type > ®, TVec< pair< RTR_target_t, RTR_weight_t > > &t_w, Vec &value, PP< RegressionTreeLeave > missing_leave, PP< RegressionTreeLeave > left_leave, PP< RegressionTreeLeave > right_leave, TVec< RTR_type > &candidate) const |

| tuple< real, real, int > | bestSplitInRow (RTR_type_id leave_id, int col, TVec< RTR_type > ®, PP< RegressionTreeLeave > left_leave, PP< RegressionTreeLeave > right_leave, Vec left_error, Vec right_error) const |

| void | printRegisters () |

| void | getExample (int i, Vec &input, Vec &target, real &weight) |

| The default version calls getSubRow based on inputsize_, targetsize_ and weightsize_, but exotic subclasses may construct input, target and weight however they please. | |

| virtual void | put (int i, int j, real value) |

| This method must be implemented in all subclasses of writable matrices. | |

| TMat< RTR_type > | getTSortedRow () |

| Useful in MultiClassAdaBoost to save memory. | |

| VMat | getTSource () |

| virtual void | finalize () |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

| static void | declareOptions (OptionList &ol) |

| Declare this class' options. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Private Types | |

| typedef VMatrix | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

| void | sortRows () |

| void | sortEachDim (int dim) |

| void | verbose (string msg, int level) |

| void | checkMissing () |

| Check whether there are missing values in the input. | |

Private Attributes | |

| int | report_progress |

| int | verbosity |

| int | next_id |

| TMat< RTR_type > | tsorted_row |

| TVec< RTR_type_id > | leave_register |

| VMat | tsource |

| Mat | tsource_mat |

| TVec< pair< RTR_target_t, RTR_weight_t > > | target_weight |

| VMat | source |

| bool | do_sort_rows |

| bool | mem_tsource |

| bool | have_missing |

| vector< bool > | compact_reg |

| int | compact_reg_leave |

| PP< RegressionTreeLeave > | tmp_leave |

| used in bestSplitInRow to save data | |

| Vec | tmp_vec |

| used in bestSplitInRow to don't allocate a new vector each time. | |

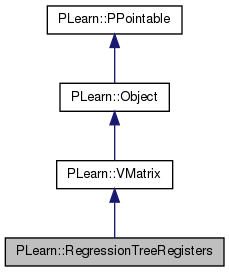

Definition at line 78 of file RegressionTreeRegisters.h.

| typedef VMatrix PLearn::RegressionTreeRegisters::inherited | [private] |

Reimplemented from PLearn::VMatrix.

Definition at line 80 of file RegressionTreeRegisters.h.

| PLearn::RegressionTreeRegisters::RegressionTreeRegisters | ( | ) |

Definition at line 65 of file RegressionTreeRegisters.cc.

References build().

:

report_progress(0),

verbosity(0),

next_id(0),

do_sort_rows(true),

mem_tsource(true),

have_missing(true),

compact_reg_leave(-1)

{

build();

}

| PLearn::RegressionTreeRegisters::RegressionTreeRegisters | ( | VMat | source_, |

| bool | report_progress_ = false, |

||

| bool | vebosity_ = false, |

||

| bool | do_sort_rows = true, |

||

| bool | mem_tsource_ = true |

||

| ) |

Definition at line 101 of file RegressionTreeRegisters.cc.

References build(), and source.

:

report_progress(report_progress_),

verbosity(verbosity_),

next_id(0),

do_sort_rows(do_sort_rows_),

mem_tsource(mem_tsource_),

have_missing(true),

compact_reg_leave(-1)

{

source = source_;

build();

}

| PLearn::RegressionTreeRegisters::RegressionTreeRegisters | ( | VMat | source_, |

| TMat< RTR_type > | tsorted_row_, | ||

| VMat | tsource_, | ||

| bool | report_progress_ = false, |

||

| bool | vebosity_ = false, |

||

| bool | do_sort_rows = true, |

||

| bool | mem_tsource_ = true |

||

| ) |

Definition at line 77 of file RegressionTreeRegisters.cc.

References build(), checkMissing(), source, PLearn::VMat::toMat(), tsorted_row, tsource, and tsource_mat.

:

report_progress(report_progress_),

verbosity(verbosity_),

next_id(0),

do_sort_rows(do_sort_rows_),

mem_tsource(mem_tsource_),

have_missing(true),

compact_reg_leave(-1)

{

source = source_;

tsource = tsource_;

if(tsource->classname()=="MemoryVMatrixNoSave")

tsource_mat = tsource.toMat();

tsorted_row = tsorted_row_;

checkMissing();

build();

}

| PLearn::RegressionTreeRegisters::~RegressionTreeRegisters | ( | ) | [virtual] |

Definition at line 118 of file RegressionTreeRegisters.cc.

{

}

| string PLearn::RegressionTreeRegisters::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::VMatrix.

Definition at line 63 of file RegressionTreeRegisters.cc.

| OptionList & PLearn::RegressionTreeRegisters::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::VMatrix.

Definition at line 63 of file RegressionTreeRegisters.cc.

| RemoteMethodMap & PLearn::RegressionTreeRegisters::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::VMatrix.

Definition at line 63 of file RegressionTreeRegisters.cc.

| bool PLearn::RegressionTreeRegisters::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::VMatrix.

Definition at line 63 of file RegressionTreeRegisters.cc.

| Object * PLearn::RegressionTreeRegisters::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 63 of file RegressionTreeRegisters.cc.

| void PLearn::RegressionTreeRegisters::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::VMatrix.

Definition at line 63 of file RegressionTreeRegisters.cc.

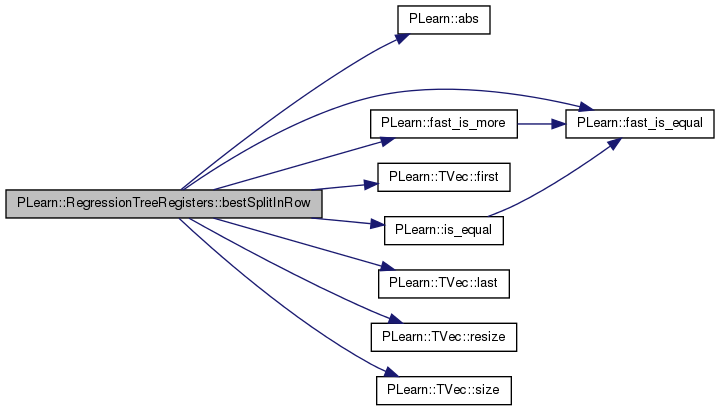

| tuple< real, real, int > PLearn::RegressionTreeRegisters::bestSplitInRow | ( | RTR_type_id | leave_id, |

| int | col, | ||

| TVec< RTR_type > & | reg, | ||

| PP< RegressionTreeLeave > | left_leave, | ||

| PP< RegressionTreeLeave > | right_leave, | ||

| Vec | left_error, | ||

| Vec | right_error | ||

| ) | const |

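Definition at line 442 of file RegressionTreeNode.cc. The body listed below is the RegressionTreeNode version: it refers to candidates, values, t_w, missing_error and train_set, which are not parameters of the signature above.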

References PLearn::abs(), PLearn::fast_is_equal(), PLearn::fast_is_more(), PLearn::TVec< T >::first(), i, PLearn::is_equal(), PLearn::TVec< T >::last(), PLASSERT, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::size().

{

int best_balance=INT_MAX;

real best_feature_value = REAL_MAX;

real best_split_error = REAL_MAX;

// in case there are only missing values

if(candidates.size()==0)

return make_tuple(best_split_error, best_feature_value, best_balance);

int row = candidates.last();

Vec tmp(3);

real missing_errors = missing_error[0] + missing_error[1];

real first_value=values.first();

real next_feature=values.last();

// next_feature!=first_value checks whether split points remain:

// for a binary variable, or a variable with few distinct values,

// this gives a great speedup.

for(int i=candidates.size()-2;i>=0&&next_feature!=first_value;i--)

{

int next_row = candidates[i];

real row_feature=next_feature;

PLASSERT(is_equal(row_feature,values[i+1]));

// ||(is_missing(row_feature)&&is_missing(values[i+1])));

next_feature=values[i];

real target=t_w[i+1].first;

real weight=t_w[i+1].second;

PLASSERT(train_set->get(next_row, col)==values[i]);

PLASSERT(train_set->get(row, col)==values[i+1]);

PLASSERT(next_feature<=row_feature);

left_leave->removeRow(row, target, weight);

right_leave->addRow(row, target, weight);

row = next_row;

if (next_feature < row_feature){

left_leave->getOutputAndError(tmp, left_error);

right_leave->getOutputAndError(tmp, right_error);

}else

continue;

real work_error = missing_errors + left_error[0]

+ left_error[1] + right_error[0] + right_error[1];

int work_balance = abs(left_leave->length() -

right_leave->length());

if (fast_is_more(work_error,best_split_error)) continue;

else if (fast_is_equal(work_error,best_split_error) &&

fast_is_more(work_balance,best_balance)) continue;

best_feature_value = 0.5 * (row_feature + next_feature);

best_split_error = work_error;

best_balance = work_balance;

}

candidates.resize(0);

return make_tuple(best_split_error, best_feature_value, best_balance);

}
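The loop above starts with every candidate in left_leave and moves rows one at a time to right_leave while walking down the sorted values; it only scores a split where two consecutive values differ, and on equal error it prefers the more balanced split. A minimal standalone sketch of the same scan, assuming a weighted squared-error criterion (the real error comes from RegressionTreeLeave::getOutputAndError, which is not shown here). LeafStats and bestSplit are hypothetical names, and the result is ordered (error, threshold, balance) like the final return above:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <limits>
#include <tuple>
#include <vector>

// Running weighted sums for one leaf (hypothetical stand-in for RegressionTreeLeave).
struct LeafStats {
    double sw = 0.0, swy = 0.0, swy2 = 0.0;  // sum of w, w*y and w*y*y
    int n = 0;                               // number of rows in the leaf
    void add(double y, double w)    { sw += w; swy += w * y; swy2 += w * y * y; ++n; }
    void remove(double y, double w) { sw -= w; swy -= w * y; swy2 -= w * y * y; --n; }
    // Weighted sum of squared deviations from the leaf's weighted mean.
    double error() const { return sw > 0.0 ? swy2 - swy * swy / sw : 0.0; }
};

// values must be sorted in ascending order; returns (error, threshold, balance).
std::tuple<double, double, int> bestSplit(const std::vector<double>& values,
                                          const std::vector<double>& targets,
                                          const std::vector<double>& weights) {
    double best_error = std::numeric_limits<double>::max();
    double best_threshold = std::numeric_limits<double>::max();
    int best_balance = std::numeric_limits<int>::max();
    const std::size_t n = values.size();
    if (n == 0) return std::make_tuple(best_error, best_threshold, best_balance);

    LeafStats left, right;  // every row starts in the left leaf
    for (std::size_t i = 0; i < n; ++i) left.add(targets[i], weights[i]);

    // Move the largest remaining row from the left leaf to the right leaf.
    for (std::size_t i = n - 1; i > 0; --i) {
        left.remove(targets[i], weights[i]);
        right.add(targets[i], weights[i]);
        if (values[i - 1] == values[i]) continue;  // equal values: not a split point
        const double err = left.error() + right.error();
        const int balance = std::abs(left.n - right.n);
        if (err > best_error) continue;
        if (err == best_error && balance > best_balance) continue;  // prefer balanced splits
        best_error = err;
        best_threshold = 0.5 * (values[i - 1] + values[i]);  // midpoint between the two values
        best_balance = balance;
    }
    return std::make_tuple(best_error, best_threshold, best_balance);
}
```

Unlike the original, the sketch does not stop once the remaining values all equal the smallest one, and it compares errors exactly instead of through fast_is_more / fast_is_equal.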

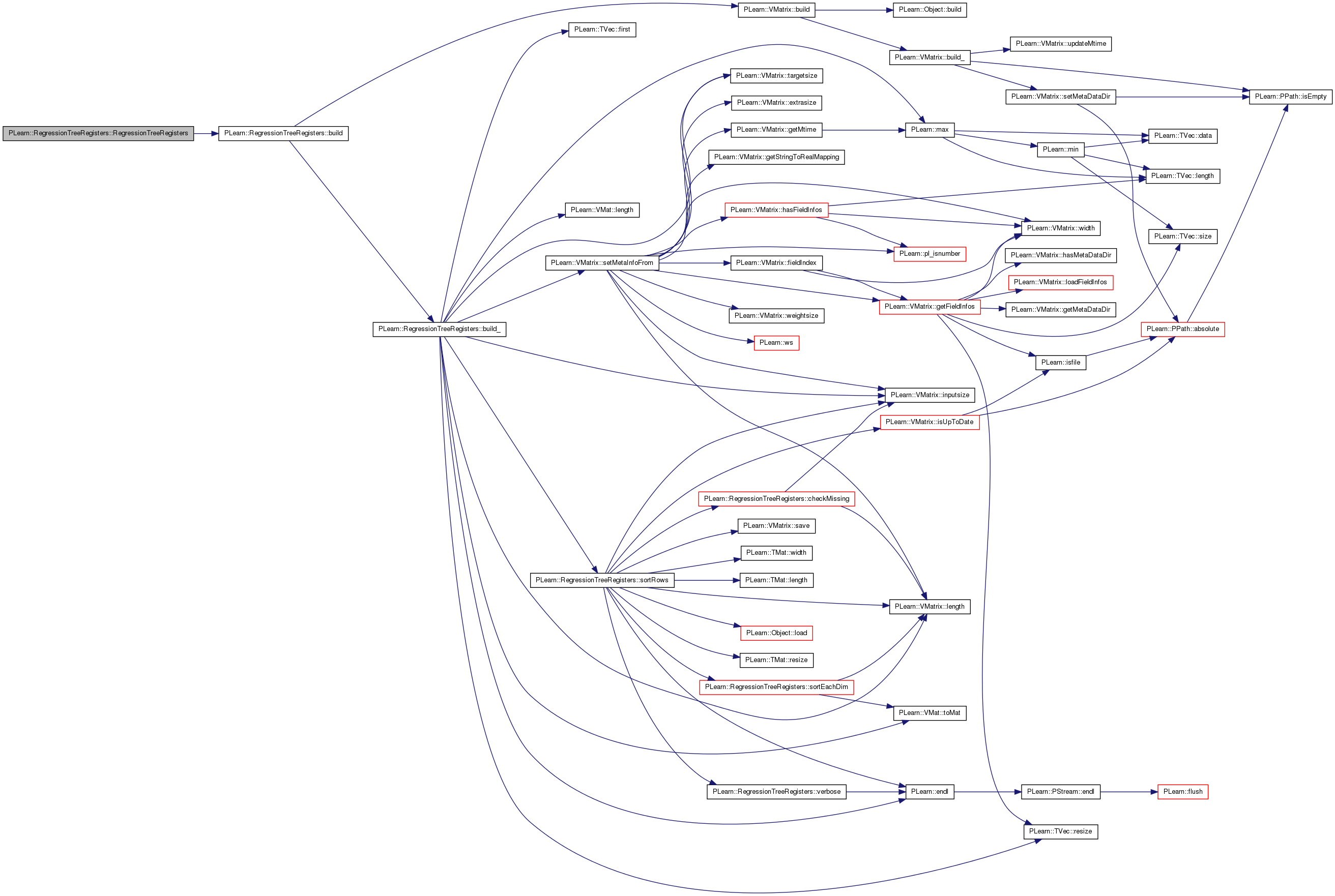

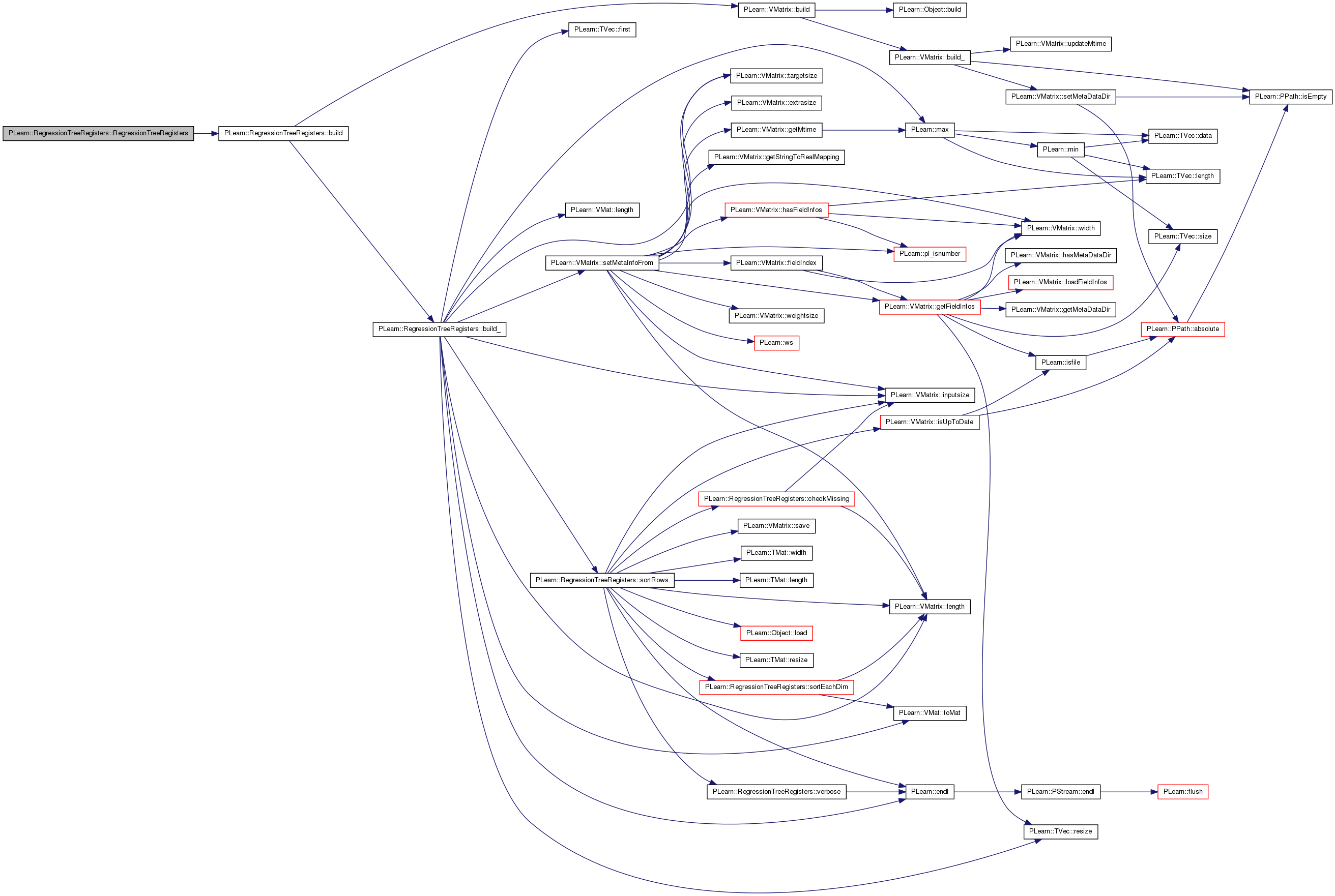

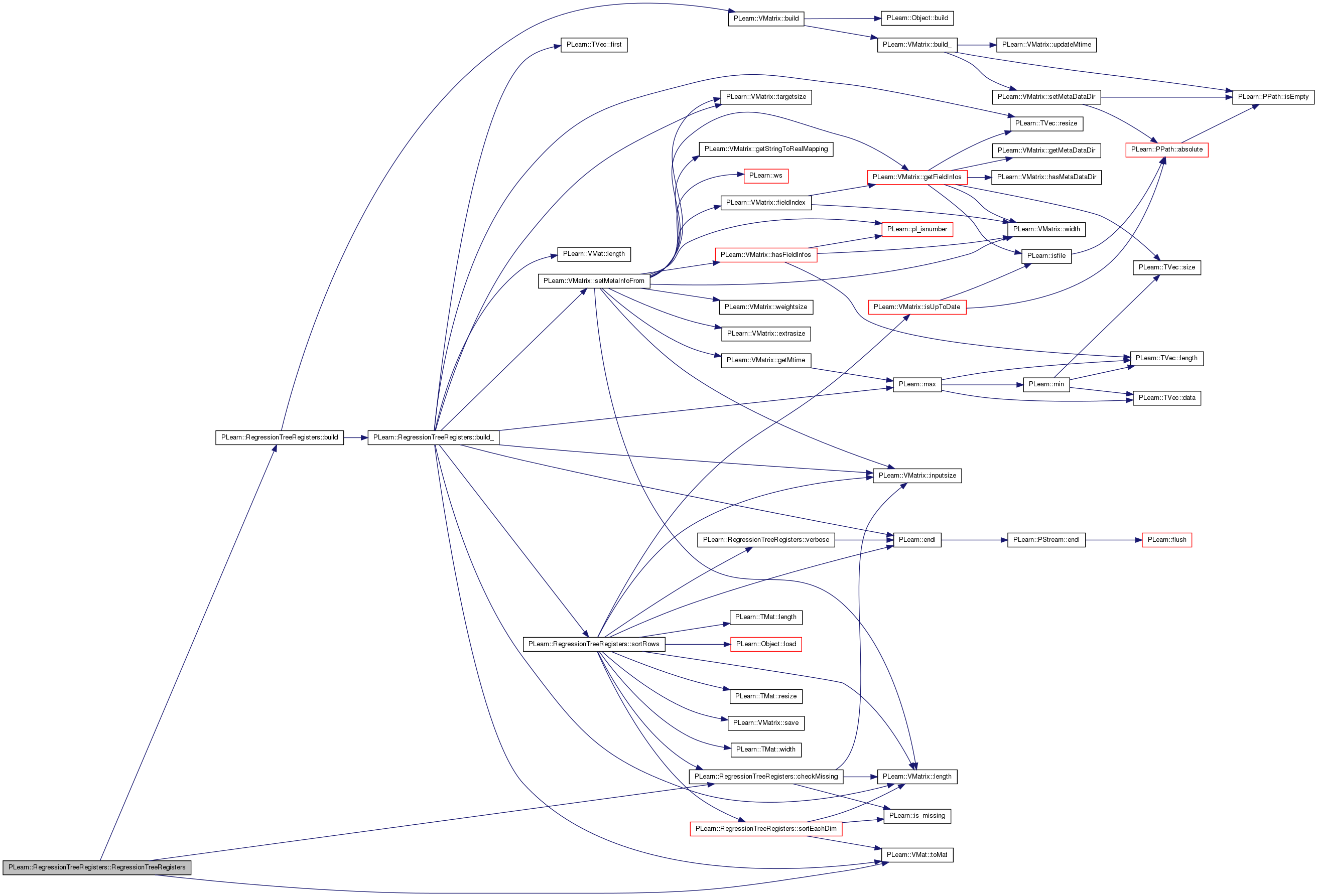

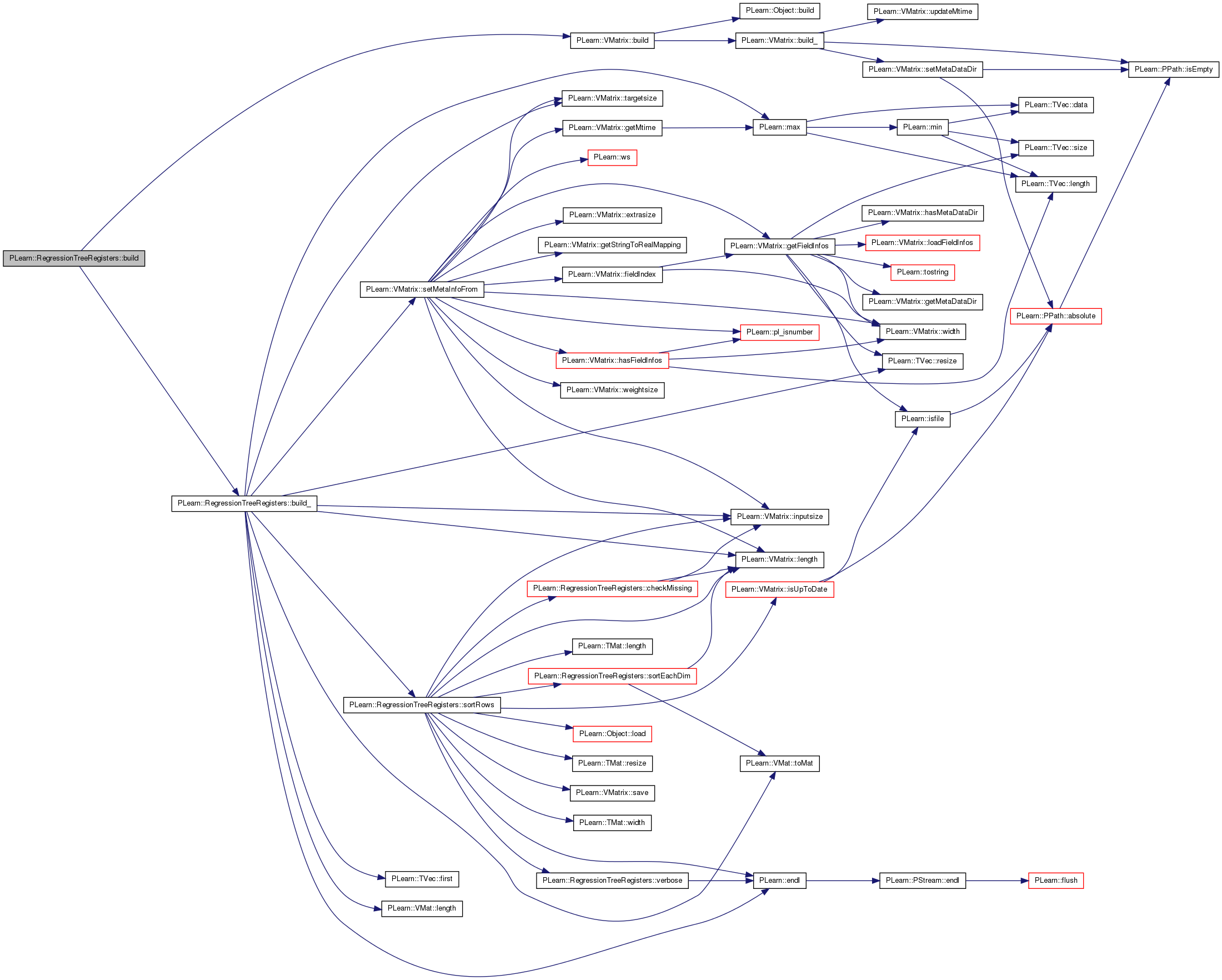

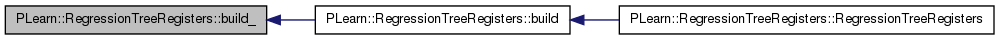

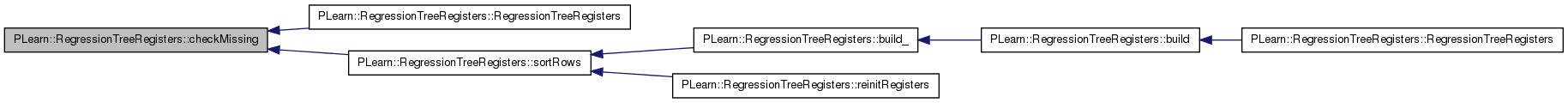

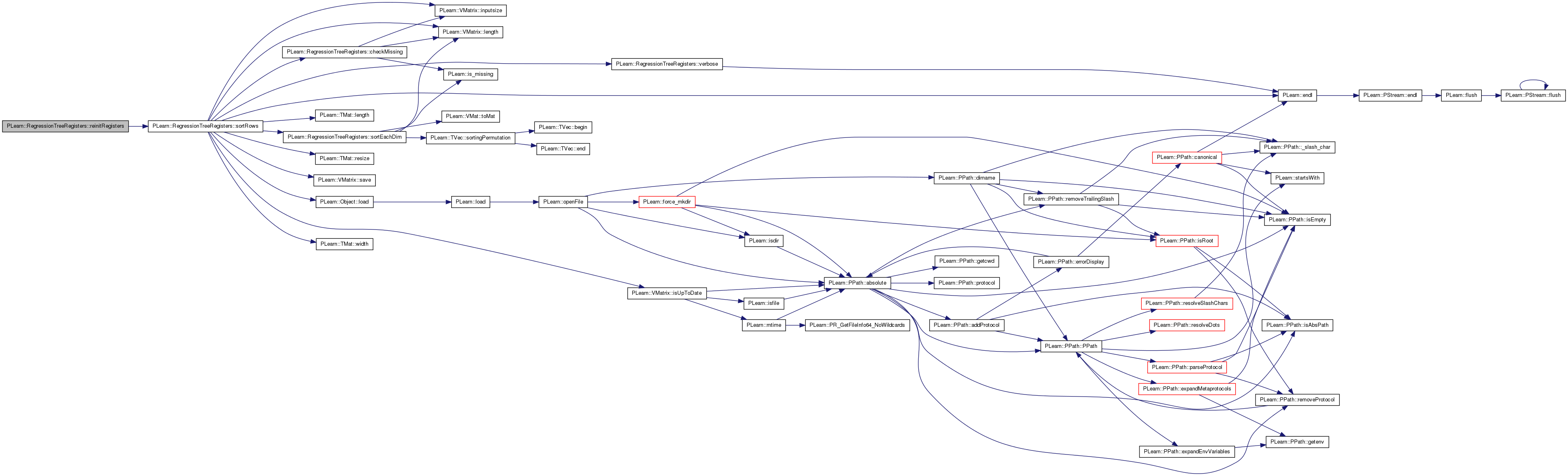

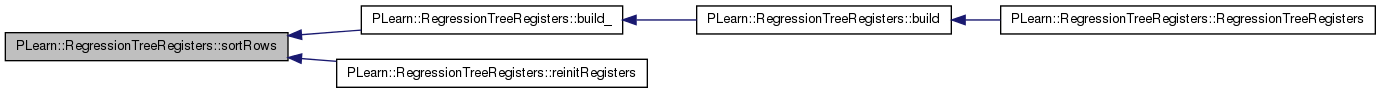

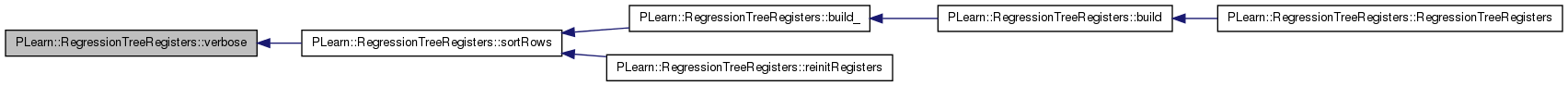

| void PLearn::RegressionTreeRegisters::build | ( | ) | [virtual] |

Simply calls inherited::build() then build_().

Reimplemented from PLearn::VMatrix.

Definition at line 170 of file RegressionTreeRegisters.cc.

References PLearn::VMatrix::build(), and build_().

Referenced by RegressionTreeRegisters().

{

inherited::build();

build_();

}

| void PLearn::RegressionTreeRegisters::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::VMatrix.

Definition at line 176 of file RegressionTreeRegisters.cc.

References PLearn::endl(), PLearn::TVec< T >::first(), i, PLearn::VMatrix::inputsize(), leave_register, PLearn::VMatrix::length(), PLearn::VMat::length(), PLearn::max(), mem_tsource, PLCHECK, PLearn::pout, PLearn::TVec< T >::resize(), PLearn::VMatrix::setMetaInfoFrom(), sortRows(), source, target_weight, PLearn::VMatrix::targetsize(), PLearn::VMatrix::targetsize_, PLearn::VMat::toMat(), tsource, tsource_mat, PLearn::VMatrix::VMat, PLearn::VMatrix::weightsize_, and PLearn::VMatrix::width_.

Referenced by build().

{

if(!source)

return;

// check that all the examples of the train_set fit within

// the capacity of RTR_type, which limits the number of rows

PLCHECK(source.length()>0

&& (unsigned)source.length()

<= std::numeric_limits<RTR_type>::max());

PLCHECK(source->targetsize()==1);

PLCHECK(source->weightsize()<=1);

PLCHECK(source->inputsize()>0);

if(!tsource){

tsource = VMat(new TransposeVMatrix(new SubVMatrix(

source, 0,0,source->length(),

source->inputsize())));

if(mem_tsource){

PP<MemoryVMatrixNoSave> tmp = new MemoryVMatrixNoSave(tsource);

tsource = VMat(tmp);

}

if(tsource->classname()=="MemoryVMatrixNoSave")

tsource_mat = tsource.toMat();

}

setMetaInfoFrom(source);

weightsize_=1;

targetsize_=1;

target_weight.resize(source->length());

if(source->weightsize()<=0){

width_++;

for(int i=0;i<source->length();i++){

target_weight[i].first=source->get(i,inputsize());

target_weight[i].second=1.0 / length();

}

}else

for(int i=0;i<source->length();i++){

target_weight[i].first=source->get(i,inputsize());

target_weight[i].second=source->get(i,inputsize()+targetsize());

}

#if 0

// useful to rescale the dataset weights so that their sum is 1 or length()

real weights_sum=0;

for(int i=0;i<source->length();i++){

weights_sum+=target_weight[i].second;

}

pout<<weights_sum<<endl;

// real t=length()/weights_sum;

real t=1/weights_sum;

for(int i=0;i<source->length();i++){

target_weight[i].second*=t;

}

weights_sum=0;

for(int i=0;i<source->length();i++){

weights_sum+=target_weight[i].second;

}

pout<<weights_sum<<endl;

#endif

leave_register.resize(length());

sortRows();

// compact_reg.resize(length());

}
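build_ stores one (target, weight) pair per row in target_weight; when the source has no weight column, every row gets the uniform weight 1/length(). A minimal sketch of that extraction, assuming plain row-major std::vector rows in place of a VMat and a single target column (build_ enforces targetsize()==1 with PLCHECK); extractTargetWeight is a hypothetical name:

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Each row holds inputsize inputs, then one target, then an optional weight.
using Row = std::vector<double>;

std::vector<std::pair<double, double>> extractTargetWeight(const std::vector<Row>& rows,
                                                           std::size_t inputsize,
                                                           bool has_weight) {
    std::vector<std::pair<double, double>> target_weight(rows.size());
    // Without a weight column, every row gets the same weight 1/length.
    const double uniform = rows.empty() ? 0.0 : 1.0 / static_cast<double>(rows.size());
    for (std::size_t i = 0; i < rows.size(); ++i) {
        target_weight[i].first = rows[i][inputsize];  // the single target column
        target_weight[i].second = has_weight ? rows[i][inputsize + 1] : uniform;
    }
    return target_weight;
}
```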

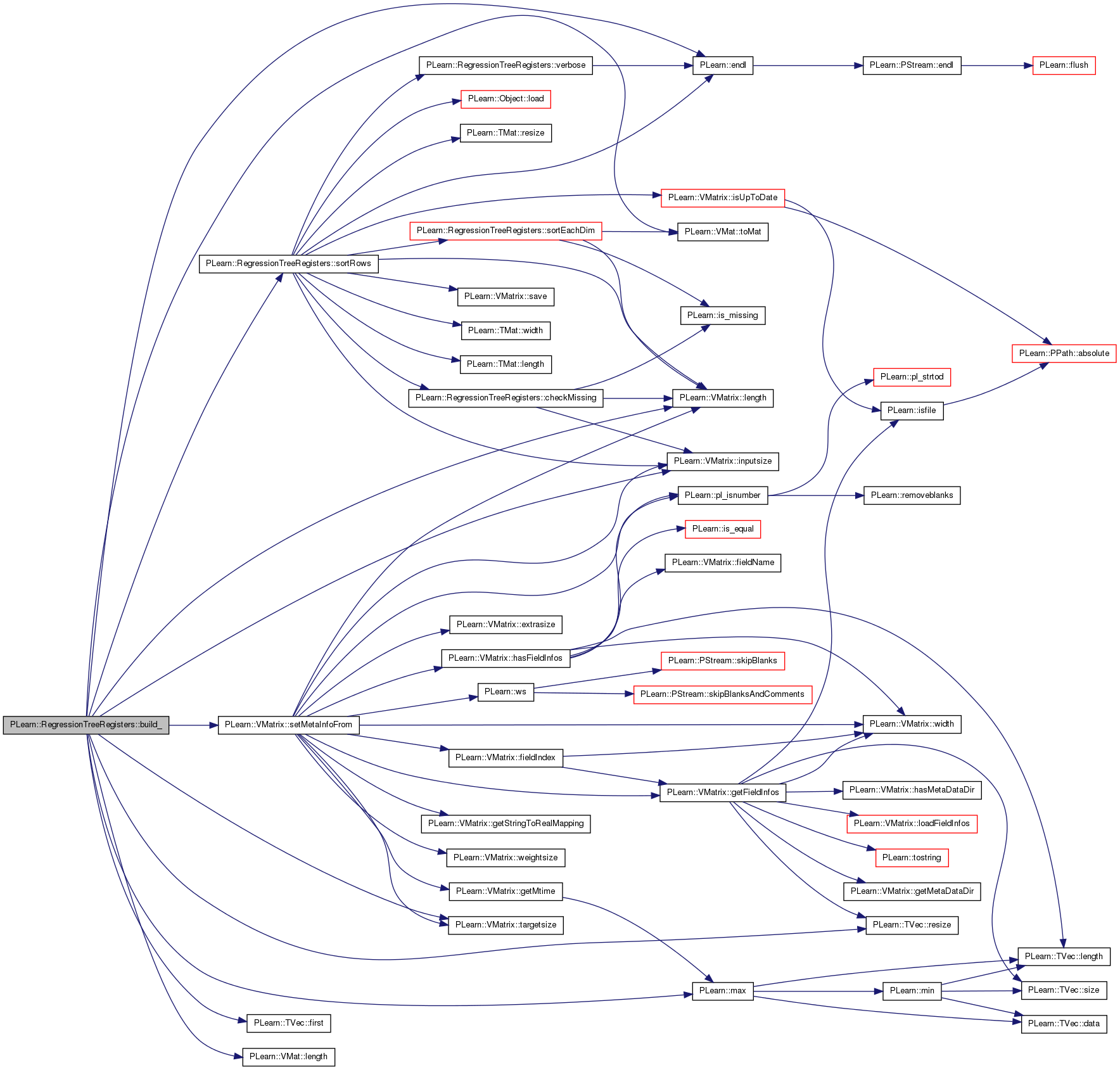

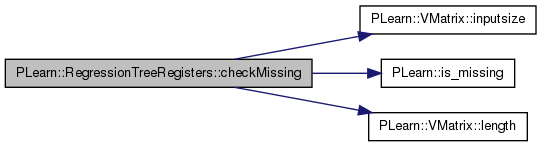

| void PLearn::RegressionTreeRegisters::checkMissing | ( | ) | [private] |

Check whether there are missing values in the input.

Definition at line 657 of file RegressionTreeRegisters.cc.

References have_missing, i, PLearn::VMatrix::inputsize(), PLearn::is_missing(), j, PLearn::VMatrix::length(), and tsource.

Referenced by RegressionTreeRegisters(), and sortRows().

{

if(have_missing==false)

return;

bool found_missing=false;

for(int j=0;j<inputsize()&&!found_missing;j++)

for(int i=0;i<length()&&!found_missing;i++)

if(is_missing(tsource(j,i)))

found_missing=true;

if(!found_missing)

have_missing=false;

}
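checkMissing scans the transposed input and stops at the first missing value; once have_missing is false it returns immediately on later calls. A sketch of the scan, assuming (as a labelled assumption) that a missing value is encoded as NaN, with a hypothetical hasMissing over nested std::vector storage:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// tsource is transposed: tsource[j][i] is input j of example i.
// Assumption: missing values are represented as NaN.
bool hasMissing(const std::vector<std::vector<double>>& tsource) {
    for (const std::vector<double>& column : tsource)
        for (double v : column)
            if (std::isnan(v)) return true;  // stop at the first missing value
    return false;
}
```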

| string PLearn::RegressionTreeRegisters::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 63 of file RegressionTreeRegisters.cc.

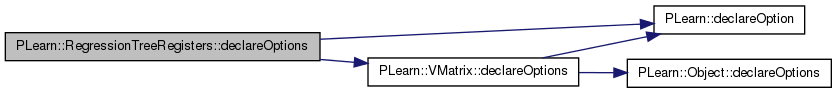

| void PLearn::RegressionTreeRegisters::declareOptions | ( | OptionList & | ol | ) | [static] |

Declare this class' options.

Reimplemented from PLearn::VMatrix.

Definition at line 122 of file RegressionTreeRegisters.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::VMatrix::declareOptions(), do_sort_rows, PLearn::OptionBase::learntoption, leave_register, mem_tsource, next_id, PLearn::OptionBase::nosave, report_progress, source, tsorted_row, tsource, and verbosity.

{

declareOption(ol, "report_progress", &RegressionTreeRegisters::report_progress, OptionBase::buildoption,

"The indicator to report progress through a progress bar\n");

declareOption(ol, "verbosity", &RegressionTreeRegisters::verbosity, OptionBase::buildoption,

"The desired level of verbosity\n");

declareOption(ol, "tsource", &RegressionTreeRegisters::tsource,

OptionBase::learntoption | OptionBase::nosave,

"The source VMatrix transposed");

declareOption(ol, "source", &RegressionTreeRegisters::source,

OptionBase::buildoption,

"The source VMatrix");

declareOption(ol, "next_id", &RegressionTreeRegisters::next_id, OptionBase::learntoption,

"The next id to use when creating a new leaf\n");

declareOption(ol, "leave_register", &RegressionTreeRegisters::leave_register, OptionBase::learntoption,

"The vector identifying the leaf to which each row belongs\n");

declareOption(ol, "do_sort_rows", &RegressionTreeRegisters::do_sort_rows,

OptionBase::buildoption,

"Whether to generate the sorted rows. Not useful if used only for testing.\n");

declareOption(ol, "mem_tsource", &RegressionTreeRegisters::mem_tsource,

OptionBase::buildoption,

"Whether to keep tsource in memory. Defaults to true, as this"

" gives a great speedup when training a RegressionTree.\n");

//too big to save

declareOption(ol, "tsorted_row", &RegressionTreeRegisters::tsorted_row, OptionBase::nosave,

"The matrix holding the sequence of samples in ascending value order for each dimension\n");

inherited::declareOptions(ol);

}

| static const PPath& PLearn::RegressionTreeRegisters::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::VMatrix.

Definition at line 130 of file RegressionTreeRegisters.h.


| RegressionTreeRegisters * PLearn::RegressionTreeRegisters::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::VMatrix.

Definition at line 63 of file RegressionTreeRegisters.cc.

| virtual void PLearn::RegressionTreeRegisters::finalize | ( | ) | [inline, virtual] |

Definition at line 185 of file RegressionTreeRegisters.h.

{tsorted_row = TMat<RTR_type>();}

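| virtual real PLearn::RegressionTreeRegisters::get | ( | int | i, |

| int | j | ||

| ) | const [inline, virtual] |

This method must be implemented in all subclasses.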

Returns element (i,j).

Implements PLearn::VMatrix.

Definition at line 138 of file RegressionTreeRegisters.h.

{

if(j<inputsize())return tsource->get(j,i);

if(j==inputsize())return target_weight[i].first;

else return target_weight[i].second;

}
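get(i, j) serves a virtual matrix whose first inputsize() columns are read from the transposed source (note the swapped indices in tsource->get(j,i)), followed by the target column and then the weight column. A minimal model of that layout, with the hypothetical name RegistersView and nested std::vector storage standing in for tsource and target_weight:

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical minimal model of the column layout served by get(i, j).
struct RegistersView {
    std::vector<std::vector<double>> tsource;              // tsource[j][i]: input j of row i
    std::vector<std::pair<double, double>> target_weight;  // (target, weight) for each row

    int inputsize() const { return static_cast<int>(tsource.size()); }

    double get(int i, int j) const {
        if (j < inputsize()) return tsource[j][i];            // input columns, read transposed
        if (j == inputsize()) return target_weight[i].first;  // target column
        return target_weight[i].second;                       // weight column
    }
};
```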

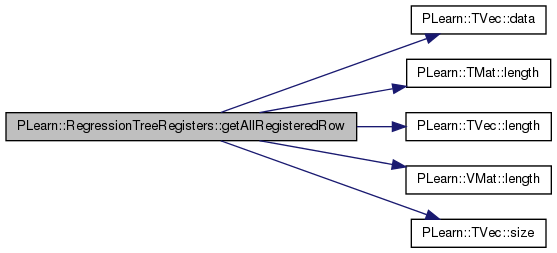

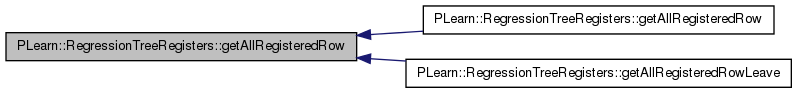

| void PLearn::RegressionTreeRegisters::getAllRegisteredRow | ( | RTR_type_id | leave_id, |

| TVec< RTR_type > & | reg | ||

| ) | const |

reg.size() must equal the number of rows that will be put in it.

The rows are not sorted by value; they are returned in increasing row-index order.

Definition at line 384 of file RegressionTreeRegisters.cc.

References PLearn::TVec< T >::data(), i, leave_register, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::VMat::length(), n, PLASSERT, RTR_type, RTR_type_id, PLearn::TVec< T >::size(), tsource, and tsource_mat.

Referenced by getAllRegisteredRow(), and getAllRegisteredRowLeave().

{

PLASSERT(tsource_mat.length()==tsource.length());

int idx=0;

int n=reg.length();

RTR_type* preg = reg.data();

RTR_type_id* pleave_register = leave_register.data();

for(int i=0;i<length() && n> idx;i++){

if (pleave_register[i] == leave_id){

preg[idx++]=i;

PLASSERT(reg[idx-1]==i);

}

}

PLASSERT(idx==reg.size());

}
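This overload scans leave_register once and copies every row index owned by leave_id, so the result comes out in increasing row order. A standalone sketch, assuming int row indices and leaf ids in place of RTR_type and RTR_type_id; collectLeafRows is a hypothetical name:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// leave_register[row] holds the id of the leaf that currently owns that row.
// reg must be pre-sized by the caller to the leaf's row count; it is filled
// in increasing row order.
void collectLeafRows(const std::vector<int>& leave_register, int leave_id,
                     std::vector<int>& reg) {
    std::size_t idx = 0;
    for (std::size_t row = 0; row < leave_register.size() && idx < reg.size(); ++row)
        if (leave_register[row] == leave_id) reg[idx++] = static_cast<int>(row);
    assert(idx == reg.size());  // the caller's size must match the leaf's row count
}
```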

| void PLearn::RegressionTreeRegisters::getAllRegisteredRow | ( | RTR_type_id | leave_id, |

| int | col, | ||

| TVec< RTR_type > & | reg | ||

| ) | const |

reg.size() must equal the number of rows that will be put in it.

The rows are sorted by their value in column col.

Definition at line 404 of file RegressionTreeRegisters.cc.

References compact_reg, compact_reg_leave, PLearn::TVec< T >::data(), i, leave_register, PLearn::TMat< T >::length(), PLearn::VMatrix::length(), PLearn::TVec< T >::length(), PLearn::VMat::length(), n, PLASSERT, RTR_type, RTR_type_id, PLearn::TVec< T >::size(), tsorted_row, tsource, and tsource_mat.

{

PLASSERT(tsource_mat.length()==tsource.length());

int idx=0;

int n=reg.length();

RTR_type* preg = reg.data();

RTR_type* ptsorted_row = tsorted_row[col];

RTR_type_id* pleave_register = leave_register.data();

if(reg.size()==length()){

//get the full row

reg<<tsorted_row(col);

idx=length();

}else if(compact_reg.size()==0){

for(int i=0;i<length() && n> idx;i++){

PLASSERT(ptsorted_row[i]==tsorted_row(col, i));

RTR_type srow = ptsorted_row[i];

if ( pleave_register[srow] == leave_id){

PLASSERT(leave_register[srow] == leave_id);

PLASSERT(preg[idx]==reg[idx]);

preg[idx++]=srow;

}

}

}else if(compact_reg_leave==leave_id){

// compact_reg is used as an optimization:

// as it is more compact in memory than leave_register,

// it is more memory friendly.

for(int i=0;i<length() && n> idx;i++){

PLASSERT(ptsorted_row[i]==tsorted_row(col, i));

RTR_type srow = ptsorted_row[i];

if ( compact_reg[srow] ){

PLASSERT(leave_register[srow] == leave_id);

PLASSERT(preg[idx]==reg[idx]);

preg[idx++]=srow;

}

}

}else{

compact_reg.resize(0);

compact_reg.resize(length(),false);

// for(uint i=0;i<compact_reg.size();i++)

// compact_reg[i]=false;

for(int i=0;i<length() && n> idx;i++){

PLASSERT(ptsorted_row[i]==tsorted_row(col, i));

RTR_type srow = ptsorted_row[i];

if ( pleave_register[srow] == leave_id){

PLASSERT(leave_register[srow] == leave_id);

PLASSERT(preg[idx]==reg[idx]);

preg[idx++]=srow;

compact_reg[srow]=true;

}

}

compact_reg_leave = leave_id;

}

PLASSERT(idx==reg.size());

}
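Because tsorted_row already holds, for each column, the row indices sorted by value, filtering that permutation by leaf membership yields the leaf's rows sorted by col without a new sort. The compact_reg bitmap caches the membership of the last leaf seen (compact_reg_leave), so repeated calls for the same leaf over different columns scan a compact vector<bool> instead of leave_register. A sketch of that caching idea with hypothetical names; like the original, it assumes leave_register does not change for the cached leaf between calls, and it omits the shortcut that copies the whole row when the leaf owns every row:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper mirroring the compact_reg / compact_reg_leave cache.
struct SortedLeafRows {
    std::vector<bool> compact;  // compact[row] is true if row belongs to cached_leaf
    int cached_leaf = -1;       // leaf described by compact, -1 if none yet

    // sorted_col is one row of tsorted_row: row indices sorted by that column's value.
    std::vector<int> get(const std::vector<int>& sorted_col,
                         const std::vector<int>& leave_register, int leave_id) {
        if (cached_leaf != leave_id) {  // rebuild the bitmap only when the leaf changes
            compact.assign(leave_register.size(), false);
            for (std::size_t row = 0; row < leave_register.size(); ++row)
                compact[row] = (leave_register[row] == leave_id);
            cached_leaf = leave_id;
        }
        std::vector<int> reg;  // filtering keeps the column order, so no re-sort is needed
        for (int row : sorted_col)
            if (compact[row]) reg.push_back(row);
        return reg;
    }
};
```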

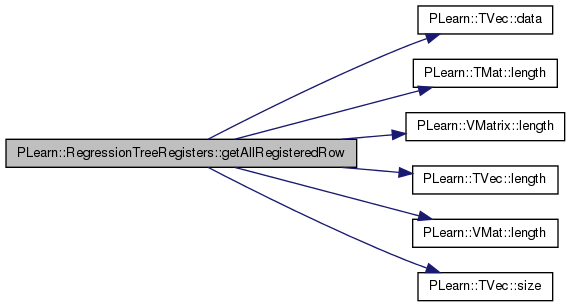

| void PLearn::RegressionTreeRegisters::getAllRegisteredRow | ( | RTR_type_id | leave_id, |

| int | col, | ||

| TVec< RTR_type > & | reg, | ||

| TVec< pair< RTR_target_t, RTR_weight_t > > & | t_w, | ||

| Vec & | value | ||

| ) | const |

Definition at line 341 of file RegressionTreeRegisters.cc.

References PLearn::TVec< T >::data(), getAllRegisteredRow(), i, PLearn::TMat< T >::length(), PLearn::VMatrix::length(), PLearn::TVec< T >::length(), PLearn::VMat::length(), PLASSERT, PLearn::TVec< T >::resize(), RTR_type, RTR_weight_t, target_weight, tsource, tsource_mat, w, and PLearn::VMatrix::weightsize().

{

PLASSERT(tsource_mat.length()==tsource.length());

getAllRegisteredRow(leave_id,col,reg);

t_w.resize(reg.length());

value.resize(reg.length());

real * p = tsource_mat[col];

pair<RTR_target_t,RTR_weight_t> * ptw = target_weight.data();

pair<RTR_target_t,RTR_weight_t>* ptwd = t_w.data();

real * pv = value.data();

RTR_type * preg = reg.data();

if(weightsize() <= 0){

RTR_weight_t w = 1.0 / length();

for(int i=0;i<reg.length();i++){

PLASSERT(tsource->get(col, reg[i])==p[reg[i]]);

int idx = int(preg[i]);

ptwd[i].first = ptw[idx].first;

ptwd[i].second = w;

pv[i] = p[idx];

}

} else {

// Multiple passes are better for memory access.

for(int i=0;i<reg.length();i++){

int idx = int(preg[i]);

ptwd[i].first = ptw[idx].first;

ptwd[i].second = ptw[idx].second;

}

for(int i=0;i<reg.length();i++){

PLASSERT(tsource->get(col, reg[i])==p[reg[i]]);

int idx = int(preg[i]);

pv[i] = p[idx];

}

}

}

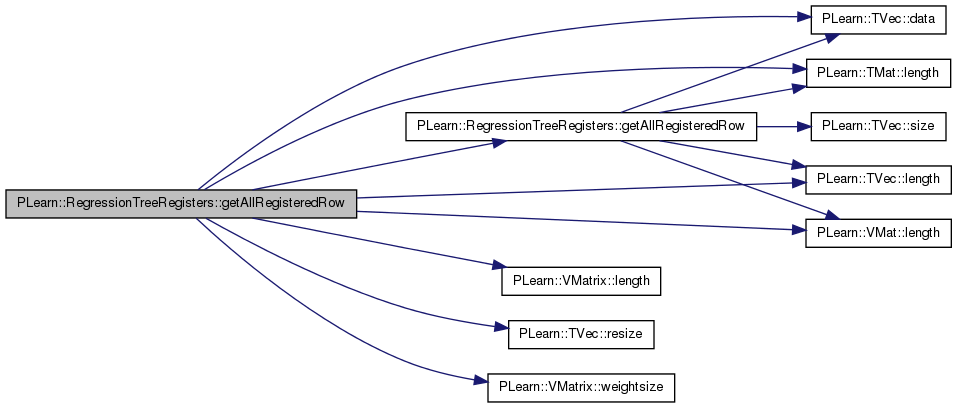

| void PLearn::RegressionTreeRegisters::getAllRegisteredRowLeave | ( | RTR_type_id | leave_id, |

| int | col, | ||

| TVec< RTR_type > & | reg, | ||

| TVec< pair< RTR_target_t, RTR_weight_t > > & | t_w, | ||

| Vec & | value, | ||

| PP< RegressionTreeLeave > | missing_leave, | ||

| PP< RegressionTreeLeave > | left_leave, | ||

| PP< RegressionTreeLeave > | right_leave, | ||

| TVec< RTR_type > & | candidate | ||

| ) | const |

Definition at line 247 of file RegressionTreeRegisters.cc.

References PLearn::TVec< T >::append(), PLearn::TVec< T >::data(), getAllRegisteredRow(), PLearn::is_equal(), PLearn::is_missing(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::VMat::length(), PLASSERT, PLearn::TVec< T >::resize(), RTR_HAVE_MISSING, RTR_target_t, RTR_type, RTR_weight_t, PLearn::TVec< T >::size(), target_weight, tsource, and tsource_mat.

{

PLASSERT(tsource_mat.length()==tsource.length());

getAllRegisteredRow(leave_id,col,reg);

t_w.resize(reg.length());

value.resize(reg.length());

real * p = tsource_mat[col];

pair<RTR_target_t,RTR_weight_t> * ptw = target_weight.data();

pair<RTR_target_t,RTR_weight_t>* ptwd = t_w.data();

real * pv = value.data();

RTR_type * preg = reg.data();

// Multiple passes are better for memory access.

// This optimization helps when many rows at the end share the same value,

// as with binary variables.

// It is done here to overlap computation and memory access.

int row_idx_end = reg.size() - 1;

int prev_row=preg[row_idx_end];

real prev_val=p[prev_row];

PLASSERT(reg.size()>row_idx_end && row_idx_end>=0);

PLASSERT(is_equal(p[prev_row],tsource(col,prev_row)));

for( ;row_idx_end>0;row_idx_end--)

{

// clamp the prefetch index so it never reads before the start of reg

int futur_row = preg[std::max(row_idx_end-8, 0)];

__builtin_prefetch(&ptw[futur_row],1,2);

__builtin_prefetch(&p[futur_row],1,2);

int row=prev_row;

real val=prev_val;

prev_row = preg[row_idx_end-1];

prev_val = p[prev_row];

PLASSERT(reg.size()>row_idx_end && row_idx_end>0);

PLASSERT(target_weight.size()>row && row>=0);

PLASSERT(is_equal(p[row],tsource(col,row)));

RTR_target_t target = ptw[row].first;

RTR_weight_t weight = ptw[row].second;

if (RTR_HAVE_MISSING && is_missing(val))

missing_leave->addRow(row, target, weight);

else if(val==prev_val)

right_leave->addRow(row, target, weight);

else

break;

}

// The last value is needed for an optimization in RegressionTreeNode (RTN)

{

int idx=reg.size()-1;

PLASSERT(reg.size()>idx && idx>=0);

int row=int(preg[idx]);

PLASSERT(target_weight.size()>row && row>=0);

PLASSERT(is_equal(p[row],tsource(col,row)));

pv[idx]=p[row];

}

for(int row_idx = 0;row_idx<=row_idx_end;row_idx++)

{

// clamp the prefetch index so it never reads past the end of reg

int futur_row = preg[std::min(row_idx+8, reg.size()-1)];

__builtin_prefetch(&ptw[futur_row],1,2);

__builtin_prefetch(&p[futur_row],1,2);

PLASSERT(reg.size()>row_idx && row_idx>=0);

int row=int(preg[row_idx]);

real val=p[row];

PLASSERT(target_weight.size()>row && row>=0);

PLASSERT(is_equal(p[row],tsource(col,row)));

RTR_target_t target = ptw[row].first;

RTR_weight_t weight = ptw[row].second;

if (RTR_HAVE_MISSING && is_missing(val)){

missing_leave->addRow(row, target, weight);

}else {

left_leave->addRow(row, target, weight);

candidate.append(row);

ptwd[row_idx].first=ptw[row].first;

ptwd[row_idx].second=ptw[row].second;

pv[row_idx]=val;

}

}

t_w.resize(candidate.size());

value.resize(candidate.size());

}
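getAllRegisteredRowLeave prepares the initial state for bestSplitInRow: walking back from the end of the sorted register, rows with a missing value go to missing_leave and rows equal to their predecessor go straight to right_leave (no threshold can separate them); every position up to the first change of value starts in left_leave and is appended to candidate. A sketch of that partition on positions in the sorted register, assuming values are sorted ascending with missing values encoded as NaN and placed last; InitialSplit and initialSplit are hypothetical, and the prefetching is left out:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Positions (indices into the sorted register) assigned to each leaf.
struct InitialSplit {
    std::vector<int> left, right, missing;
};

// values: the column's values for the leaf's rows, sorted ascending with NaN last.
InitialSplit initialSplit(const std::vector<double>& values) {
    InitialSplit s;
    if (values.empty()) return s;
    int end = static_cast<int>(values.size()) - 1;
    // Peel the tail: missing values, then rows equal to their predecessor.
    for (; end > 0; --end) {
        const double v = values[end];
        if (std::isnan(v)) s.missing.push_back(end);
        else if (v == values[end - 1]) s.right.push_back(end);
        else break;  // first change of value: stop peeling
    }
    // Positions 0..end start in the left leaf and become split candidates.
    for (int i = 0; i <= end; ++i) {
        if (std::isnan(values[i])) s.missing.push_back(i);
        else s.left.push_back(i);
    }
    return s;
}
```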

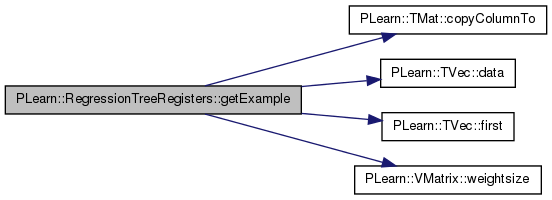

| void PLearn::RegressionTreeRegisters::getExample | ( | int | i, |

| Vec & | input, | ||

| Vec & | target, | ||

| real & | weight | ||

| ) | [virtual] |

The default version calls getSubRow based on inputsize_, targetsize_ and weightsize_, but exotic subclasses may construct input, target and weight however they please.

If this is not a weighted matrix, weight should be set to the default value 1.

Reimplemented from PLearn::VMatrix.

Definition at line 712 of file RegressionTreeRegisters.cc.

References PLearn::TMat< T >::copyColumnTo(), PLearn::TVec< T >::data(), PLearn::TVec< T >::first(), i, PLearn::VMatrix::inputsize_, PLERROR, target_weight, PLearn::VMatrix::targetsize_, tsource_mat, and PLearn::VMatrix::weightsize().

{

#ifdef BOUNDCHECK

if(inputsize_<0)

PLERROR("In RegressionTreeRegisters::getExample, inputsize_ not defined for this vmat");

if(targetsize_<0)

PLERROR("In RegressionTreeRegisters::getExample, targetsize_ not defined for this vmat");

if(weightsize()<0)

PLERROR("In RegressionTreeRegisters::getExample, weightsize_ not defined for this vmat");

#endif

// Going through tsource is not thread-safe, as PP is not thread-safe,

// so we use tsource_mat.copyColumnTo, which is thread-safe.

tsource_mat.copyColumnTo(i,input.data());

target[0]=target_weight[i].first;

weight = target_weight[i].second;

}
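getExample copies column i of tsource_mat, whose rows are input dimensions and whose columns are examples, into input; following the comment in the code, this avoids going through the PP-managed tsource. A sketch of the strided copy, assuming a contiguous row-major buffer (a simplification: PLearn's Mat may have a row stride different from its width); this free copyColumnTo is hypothetical and is not PLearn's TMat::copyColumnTo:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// tsource_mat is assumed contiguous and row-major with shape (inputsize x length),
// so column i holds the inputs of example i.
void copyColumnTo(const std::vector<double>& tsource_mat, std::size_t length,
                  std::size_t i, double* input) {
    const std::size_t inputsize = tsource_mat.size() / length;
    for (std::size_t j = 0; j < inputsize; ++j)
        input[j] = tsource_mat[j * length + i];  // strided read: one element per input row
}
```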

| RTR_type_id PLearn::RegressionTreeRegisters::getNextId | ( | ) | [inline] |

Definition at line 152 of file RegressionTreeRegisters.h.

{

PLCHECK(next_id<std::numeric_limits<RTR_type_id>::max());

next_id += 1;return next_id;}
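getNextId refuses to overflow RTR_type_id before handing out the next id; the id is pre-incremented, so the first call after next_id is reset to 0 returns 1. A sketch of the checked increment, assuming (hypothetically) a 16-bit unsigned id type and using an exception where the original uses PLCHECK:

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <stdexcept>

// Hypothetical: RTR_type_id is modelled as a 16-bit unsigned integer.
using LeafId = std::uint16_t;

// Checked pre-increment: refuse to wrap around instead of reusing an id.
LeafId nextId(LeafId& next_id) {
    if (next_id >= std::numeric_limits<LeafId>::max())
        throw std::overflow_error("no leaf ids left");  // plays the role of PLCHECK
    next_id += 1;
    return next_id;
}
```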

| OptionList & PLearn::RegressionTreeRegisters::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 63 of file RegressionTreeRegisters.cc.

| OptionMap & PLearn::RegressionTreeRegisters::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 63 of file RegressionTreeRegisters.cc.

| RemoteMethodMap & PLearn::RegressionTreeRegisters::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 63 of file RegressionTreeRegisters.cc.

| real PLearn::RegressionTreeRegisters::getTarget | ( | int | row | ) | const [inline] |

Definition at line 143 of file RegressionTreeRegisters.h.

{return target_weight[row].first;}

| TMat<RTR_type> PLearn::RegressionTreeRegisters::getTSortedRow | ( | ) | [inline] |

usefull in MultiClassAdaBoost to save memory

Definition at line 183 of file RegressionTreeRegisters.h.

{return tsorted_row;}

| VMat PLearn::RegressionTreeRegisters::getTSource | ( | ) | [inline] |

Definition at line 184 of file RegressionTreeRegisters.h.

{return tsource;}

| real PLearn::RegressionTreeRegisters::getWeight | ( | int | row | ) | const [inline] |

Definition at line 145 of file RegressionTreeRegisters.h.

{

return target_weight[row].second;

}

| bool PLearn::RegressionTreeRegisters::haveMissing | ( | ) | const [inline] |

Definition at line 151 of file RegressionTreeRegisters.h.

{return have_missing;}

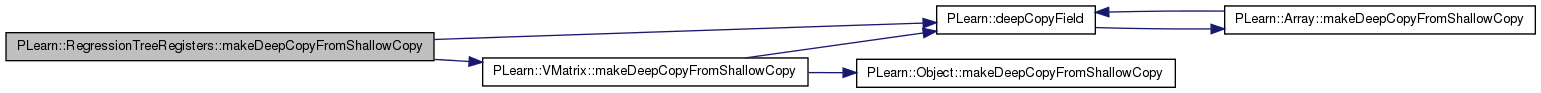

| void PLearn::RegressionTreeRegisters::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Does the necessary operations to transform a shallow copy (this) into a deep copy by deep-copying all the members that need to be.

This needs to be overridden by every class that adds "complex" data members to the class, such as Vec, Mat, PP<Something>, etc. Typical implementation:

void CLASS_OF_THIS::makeDeepCopyFromShallowCopy(CopiesMap& copies)

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(complex_data_member1, copies);

deepCopyField(complex_data_member2, copies);

...

}

| copies | A map used by the deep-copy mechanism to keep track of already-copied objects. |

Reimplemented from PLearn::VMatrix.

Definition at line 157 of file RegressionTreeRegisters.cc.

References PLearn::deepCopyField(), leave_register, and PLearn::VMatrix::makeDeepCopyFromShallowCopy().

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(leave_register, copies);

//tsource and tsorted_row should be deep copied, but currently when it is deep copied

// the copy is modified. To save memory we don't do it.

// It is deep copied heavily by HyperLearner and HyperOptimizer

// deepCopyField(tsorted_row, copies);

// deepCopyField(tsource,copies);

//no need to deep copy source as we don't reuse it after initialization

// deepCopyField(source,copies);

}

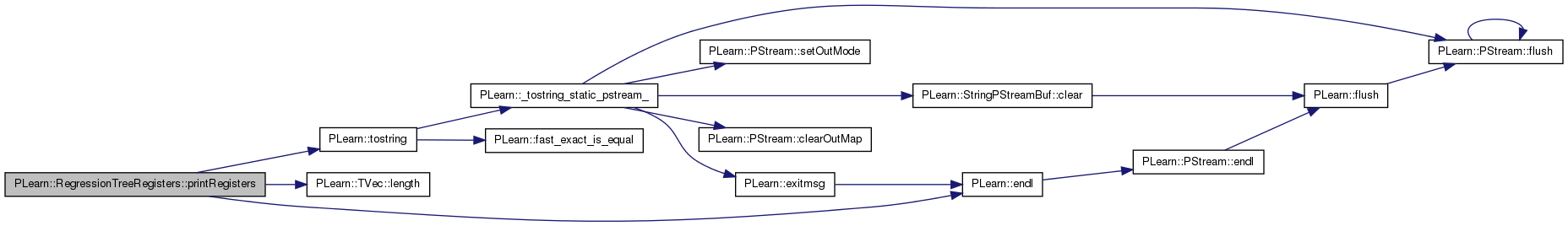

| void PLearn::RegressionTreeRegisters::printRegisters | ( | ) |

Definition at line 698 of file RegressionTreeRegisters.cc.

References PLearn::endl(), leave_register, PLearn::TVec< T >::length(), and PLearn::tostring().

{

cout << " register: ";

for (int ii = 0; ii < leave_register.length(); ii++)

cout << " " << tostring(leave_register[ii]);

cout << endl;

}

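| virtual void PLearn::RegressionTreeRegisters::put | ( | int | i, |

| int | j, | ||

| real | value | ||

| ) | [inline, virtual] |

This method must be implemented in all subclasses of writable matrices.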

Sets element (i,j) to value.

Reimplemented from PLearn::VMatrix.

Definition at line 173 of file RegressionTreeRegisters.h.

References PLASSERT, and PLERROR.

{

PLASSERT(inputsize()>0&&targetsize()>0);

if(j!=inputsize()+targetsize())

PLERROR("In RegressionTreeRegisters::put - only putting a value in the "

"weight column is implemented");

setWeight(i,value);

}

| void PLearn::RegressionTreeRegisters::registerLeave | ( | RTR_type_id | leave_id, |

| int | row | ||

| ) | [inline] |

Definition at line 136 of file RegressionTreeRegisters.h.

{ leave_register[row] = leave_id; }

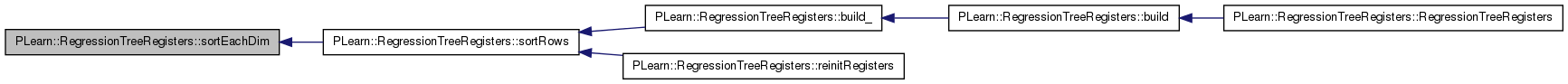

| void PLearn::RegressionTreeRegisters::reinitRegisters | ( | ) |

Definition at line 239 of file RegressionTreeRegisters.cc.

References next_id, and sortRows().

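| void PLearn::RegressionTreeRegisters::setWeight | ( | int | row, |

| real | val | ||

| ) | [inline] |

Definition at line 148 of file RegressionTreeRegisters.h.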

{

target_weight[row].second = val;

}

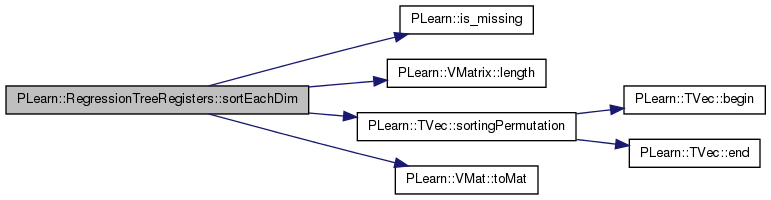

| void PLearn::RegressionTreeRegisters::sortEachDim | ( | int | dim | ) | [private] |

Definition at line 670 of file RegressionTreeRegisters.cc.

References i, PLearn::is_missing(), PLearn::VMatrix::length(), m, PLASSERT, PLCHECK_MSG, PLWARNING, PLearn::TVec< T >::sortingPermutation(), PLearn::VMat::toMat(), tsorted_row, and tsource.

Referenced by sortRows().

{

PLCHECK_MSG(tsource->classname()=="MemoryVMatrixNoSave",tsource->classname().c_str());

Mat m = tsource.toMat();

Vec v = m(dim);

TVec<int> order = v.sortingPermutation(true, true);

tsorted_row(dim)<<order;

#ifndef NDEBUG

for(int i=0;i<length()-1;i++){

int reg1 = tsorted_row(dim,i);

int reg2 = tsorted_row(dim,i+1);

real v1 = tsource(dim,reg1);

real v2 = tsource(dim,reg2);

//check that the sort is valid.

PLASSERT(v1<=v2 || is_missing(v2));

//check that the sort is stable

if(v1==v2 && reg1>reg2)

PLWARNING("In RegressionTreeRegisters::sortEachDim(%d) - "

"sort is not stable. Make it stable for better performance:"

" reg1=%d, reg2=%d, v1=%f, v2=%f",

dim, reg1, reg2, v1, v2);

}

#endif

return;

}
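sortEachDim stores, for dimension dim, the permutation that sorts that input column; the debug checks expect the order to be ascending with missing values last, and stable for equal values. A sketch of such a permutation with std::stable_sort, assuming missing values are NaN; that this matches what sortingPermutation(true, true) does is an assumption, since its flags are not documented here, and stableSortingPermutation is a hypothetical name:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <numeric>
#include <vector>

// Returns row indices ordered by value; ties keep their original order and NaN goes last.
std::vector<int> stableSortingPermutation(const std::vector<double>& v) {
    std::vector<int> order(v.size());
    std::iota(order.begin(), order.end(), 0);  // 0, 1, 2, ...
    std::stable_sort(order.begin(), order.end(), [&v](int a, int b) {
        if (std::isnan(v[a])) return false;  // NaN is never less than anything
        if (std::isnan(v[b])) return true;   // numbers come before NaN
        return v[a] < v[b];
    });
    return order;
}
```

The comparator treats every NaN as greater than any number and equivalent to any other NaN, which keeps it a strict weak ordering as std::stable_sort requires.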

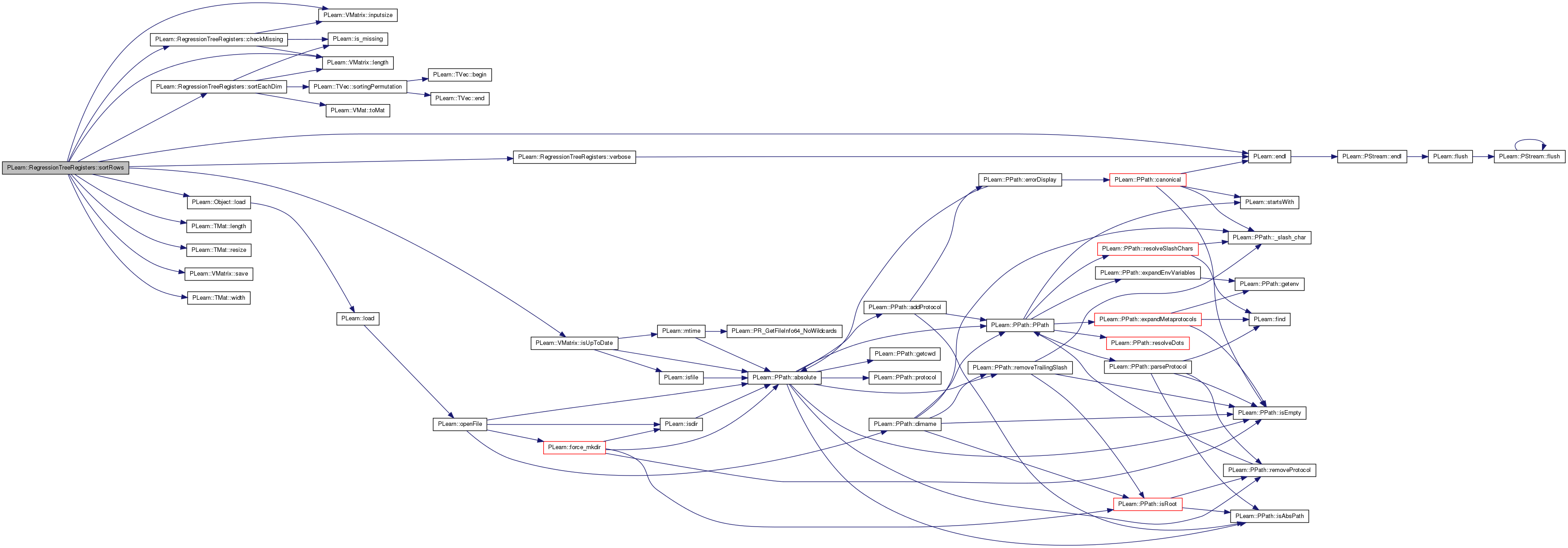

| void PLearn::RegressionTreeRegisters::sortRows | ( | ) | [private] |

Definition at line 605 of file RegressionTreeRegisters.cc.

References checkMissing(), DBG_LOG, do_sort_rows, PLearn::endl(), PLearn::VMatrix::inputsize(), PLearn::VMatrix::isUpToDate(), PLearn::VMatrix::length(), PLearn::TMat< T >::length(), PLearn::Object::load(), next_id, report_progress, PLearn::TMat< T >::resize(), PLearn::VMatrix::save(), sortEachDim(), source, tsorted_row, verbose(), and PLearn::TMat< T >::width().

Referenced by build_(), and reinitRegisters().

```cpp
{
    next_id = 0;
    if(!do_sort_rows)
        return;
    if (tsorted_row.length() == inputsize() && tsorted_row.width() == length())
    {
        verbose("RegressionTreeRegisters: Sorted train set indices are present, no sort required", 3);
        return;
    }
    string f=source->getMetaDataDir()+"RTR_tsorted_row.psave";
    if(isUpToDate(f)){
        DBG_LOG<<"RegressionTreeRegisters:: Reloading the sorted source VMatrix: "<<f<<endl;
        PLearn::load(f,tsorted_row);
        checkMissing();
        return;
    }
    verbose("RegressionTreeRegisters: The train set is being sorted", 3);
    tsorted_row.resize(inputsize(), length());
    PP<ProgressBar> pb;
    if (report_progress)
    {
        pb = new ProgressBar("RegressionTreeRegisters : sorting the train set on input dimensions: ", inputsize());
    }
    for(int row=0;row<tsorted_row.length();row++)
        for(int col=0;col<tsorted_row.width(); col++)
            tsorted_row(row,col)=col;
//    for (int each_train_sample_index = 0; each_train_sample_index < length(); each_train_sample_index++)
//    {
//        sorted_row(each_train_sample_index).fill(each_train_sample_index);
//    }
#ifdef _OPENMP
#pragma omp parallel for default(none) shared(pb)
#endif
    for (int sample_dim = 0; sample_dim < inputsize(); sample_dim++)
    {
        sortEachDim(sample_dim);
        if (report_progress) pb->update(sample_dim+1);
    }
    checkMissing();
    if (report_progress) pb->close();//in case of parallel sort.
    if(source->hasMetaDataDir()){
        DBG_LOG<<"RegressionTreeRegisters:: Saving the sorted source VMatrix: "<<f<<endl;
        PLearn::save(f,tsorted_row);
    }else{
    }
}
```
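sortRows() skips work when `tsorted_row` already has shape `inputsize() x length()` or when an up-to-date cached copy exists. Otherwise, it sorts every input dimension independently, optionally in parallel with OpenMP, and saves the result to the source's metadata directory. The sketch below reproduces only the shape check and the per-dimension parallel loop. It is a standalone approximation under stated assumptions: nested `std::vector` in place of `TMat`, no missing values, no on-disk caching, and the names `SortedIndex` and `sortAllDims` are hypothetical.

```cpp
#include <algorithm>
#include <numeric>
#include <vector>

// Hypothetical stand-in for tsorted_row: one vector of row indices per input dimension.
using SortedIndex = std::vector<std::vector<int>>;

// data_t[d][i] is the value of sample i on input dimension d, i.e. the
// transposed layout that tsource uses. Assumes no missing (NaN) values.
// Returns true if a sort was performed, false if the index was already present.
bool sortAllDims(const std::vector<std::vector<double>>& data_t, SortedIndex& sorted)
{
    const int n_dims = static_cast<int>(data_t.size());
    const int n_rows = n_dims > 0 ? static_cast<int>(data_t[0].size()) : 0;

    // Early exit, analogous to the shape check at the top of sortRows().
    bool already_sorted = static_cast<int>(sorted.size()) == n_dims;
    for (int d = 0; already_sorted && d < n_dims; ++d)
        already_sorted = static_cast<int>(sorted[d].size()) == n_rows;
    if (already_sorted)
        return false;

    sorted.assign(n_dims, std::vector<int>(n_rows));
    // Each iteration writes only sorted[d], so iterations are independent and
    // can run in parallel. Without OpenMP the loop simply runs sequentially.
#ifdef _OPENMP
#pragma omp parallel for
#endif
    for (int d = 0; d < n_dims; ++d) {
        std::vector<int>& order = sorted[d];
        std::iota(order.begin(), order.end(), 0);  // identity permutation
        const std::vector<double>& col = data_t[d];
        std::stable_sort(order.begin(), order.end(),
                         [&col](int a, int b) { return col[a] < col[b]; });
    }
    return true;
}
```

Because each dimension's sort touches only its own row of the index, a parallel loop needs no locking for the result. The shared progress bar in the original is the only state that all iterations touch.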

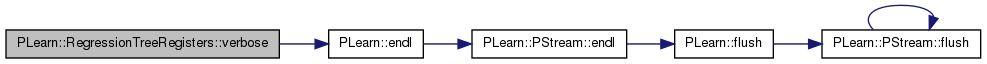

void PLearn::RegressionTreeRegisters::verbose(string msg, int level) [private]

Definition at line 706 of file RegressionTreeRegisters.cc.

References PLearn::endl(), and verbosity.

Referenced by sortRows().

Reimplemented from PLearn::VMatrix.

Definition at line 130 of file RegressionTreeRegisters.h.

vector<bool> PLearn::RegressionTreeRegisters::compact_reg [mutable, private]

Definition at line 110 of file RegressionTreeRegisters.h.

Referenced by getAllRegisteredRow().

int PLearn::RegressionTreeRegisters::compact_reg_leave [mutable, private]

Definition at line 111 of file RegressionTreeRegisters.h.

Referenced by getAllRegisteredRow().

Definition at line 106 of file RegressionTreeRegisters.h.

Referenced by declareOptions(), and sortRows().

Definition at line 108 of file RegressionTreeRegisters.h.

Referenced by checkMissing().

TVec<RTR_type_id> PLearn::RegressionTreeRegisters::leave_register [private]

Definition at line 98 of file RegressionTreeRegisters.h.

Referenced by build_(), declareOptions(), getAllRegisteredRow(), makeDeepCopyFromShallowCopy(), and printRegisters().

Definition at line 107 of file RegressionTreeRegisters.h.

Referenced by build_(), and declareOptions().

int PLearn::RegressionTreeRegisters::next_id [private]

Definition at line 95 of file RegressionTreeRegisters.h.

Referenced by declareOptions(), reinitRegisters(), and sortRows().

Definition at line 88 of file RegressionTreeRegisters.h.

Referenced by declareOptions(), and sortRows().

VMat PLearn::RegressionTreeRegisters::source [private]

Definition at line 104 of file RegressionTreeRegisters.h.

Referenced by build_(), declareOptions(), RegressionTreeRegisters(), and sortRows().

TVec<pair<RTR_target_t,RTR_weight_t> > PLearn::RegressionTreeRegisters::target_weight [private]

Definition at line 103 of file RegressionTreeRegisters.h.

Referenced by build_(), getAllRegisteredRow(), getAllRegisteredRowLeave(), and getExample().

PP<RegressionTreeLeave> PLearn::RegressionTreeRegisters::tmp_leave [mutable, private]

Used in bestSplitInRow() to hold temporary data.

Definition at line 114 of file RegressionTreeRegisters.h.

Vec PLearn::RegressionTreeRegisters::tmp_vec [mutable, private]

Used in bestSplitInRow() to avoid allocating a new vector on each call.

Definition at line 116 of file RegressionTreeRegisters.h.

TMat<RTR_type> PLearn::RegressionTreeRegisters::tsorted_row [private]

Definition at line 97 of file RegressionTreeRegisters.h.

Referenced by declareOptions(), getAllRegisteredRow(), RegressionTreeRegisters(), sortEachDim(), and sortRows().
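`tsorted_row` holds, for each input dimension, the row indices in sorted order (as filled by sortEachDim()), while `leave_register` maps each row to the id of the leaf it is registered to (as set by registerLeave()). The body of getAllRegisteredRow() is not shown on this page. One plausible way to combine the two structures, offered here as an assumption rather than a description of the PLearn code, is to scan one dimension's presorted indices and keep the rows whose register entry matches the requested leaf. This yields that leaf's rows already sorted on that dimension in O(length) time, with no re-sort. A minimal sketch with hypothetical names:

```cpp
#include <vector>

// Hypothetical sketch: rows registered to `leave_id`, listed in the presorted
// order of one input dimension. `sorted_col` plays the role of one row of
// tsorted_row; `leave_register[r]` is the leaf id of row r.
std::vector<int> rowsOfLeafInSortedOrder(const std::vector<int>& sorted_col,
                                         const std::vector<int>& leave_register,
                                         int leave_id)
{
    std::vector<int> rows;
    for (int r : sorted_col)
        if (leave_register[r] == leave_id)
            rows.push_back(r);  // filtering preserves the presorted order
    return rows;
}
```

With `sorted_col = {3, 0, 2, 1}` and `leave_register = {1, 0, 1, 1}`, leaf 1 yields `{3, 0, 2}` and leaf 0 yields `{1}`. Sorting once up front and filtering per leaf is what makes it cheap to evaluate candidate splits on every dimension at every node.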

VMat PLearn::RegressionTreeRegisters::tsource [private]

Definition at line 99 of file RegressionTreeRegisters.h.

Referenced by build_(), checkMissing(), declareOptions(), getAllRegisteredRow(), getAllRegisteredRowLeave(), RegressionTreeRegisters(), and sortEachDim().

Definition at line 100 of file RegressionTreeRegisters.h.

Referenced by build_(), getAllRegisteredRow(), getAllRegisteredRowLeave(), getExample(), and RegressionTreeRegisters().

Definition at line 89 of file RegressionTreeRegisters.h.

Referenced by declareOptions(), and verbose().

1.7.4
