PLearn 0.1

#include <ModuleLearner.h>

Public Member Functions | |

| ModuleLearner () | |

| Default constructor. | |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. | |

| virtual void | computeOutputsAndCosts (const Mat &input, const Mat &target, Mat &output, Mat &costs) const |

| Minibatch version of computeOutputAndCosts. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual ModuleLearner * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finishes building the object: calls inherited::build() followed by build_(). | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| PP< OnlineLearningModule > | module |

| int | batch_size |

| TVec< string > | cost_ports |

| TVec< string > | input_ports |

| TVec< string > | target_ports |

| TVec< string > | weight_ports |

| string | output_port |

| bool | operate_on_bags |

| int | reset_seed_upon_train |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

| void | trainingStep (const Mat &inputs, const Mat &targets, const Vec &weights) |

| Perform one training step for the given batch inputs, targets and weights. | |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| PP< MatrixModule > | store_inputs |

| Simple module used to initialize the network's inputs. (YB note: maybe store_* do not need to be MatrixModules, just Mat?) | |

| PP< MatrixModule > | store_targets |

| Simple module used to initialize the network's targets. | |

| PP< MatrixModule > | store_weights |

| Simple module used to initialize the network's weights. | |

| PP< MatrixModule > | store_outputs |

| Simple module that will contain the network's outputs at the end of a fprop step. | |

| TVec< PP< MatrixModule > > | store_costs |

| Simple modules that will contain the network's costs at the end of a fprop step. | |

| PP< NetworkModule > | network |

| The network consisting of the optimized module and the additional modules described above. | |

| TVec< Mat * > | null_pointers |

| The list of (null) pointers to matrices being given as argument to the network's fprop and bpropAccUpdate methods. | |

| int | mbatch_size |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Private Attributes | |

| Mat | all_ones |

| Matrix that contains only ones (used to fill weights at test time). | |

| Mat | tmp_costs |

| Matrix that stores a copy of the costs (used to update the cost statistics). | |

Definition at line 51 of file ModuleLearner.h.

typedef PLearner PLearn::ModuleLearner::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 53 of file ModuleLearner.h.

| PLearn::ModuleLearner::ModuleLearner | ( | ) |

Default constructor.

Definition at line 76 of file ModuleLearner.cc.

References PLearn::PLearner::random_gen, and PLearn::PLearner::test_minibatch_size.

:

batch_size(1),

cost_ports(TVec<string>(1, "cost")),

input_ports(TVec<string>(1, "input")),

target_ports(TVec<string>(1, "target")),

output_port("output"),

// Note: many learners do not use weights, thus the default behavior is not

// to have a 'weight' port in 'weight_ports'.

operate_on_bags(false),

reset_seed_upon_train(0),

mbatch_size(-1)

{

random_gen = new PRandom();

test_minibatch_size = 1000;

}

| string PLearn::ModuleLearner::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file ModuleLearner.cc.

| OptionList & PLearn::ModuleLearner::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file ModuleLearner.cc.

| RemoteMethodMap & PLearn::ModuleLearner::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file ModuleLearner.cc.

| bool PLearn::ModuleLearner::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file ModuleLearner.cc.

| Object * PLearn::ModuleLearner::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 71 of file ModuleLearner.cc.

| void PLearn::ModuleLearner::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file ModuleLearner.cc.

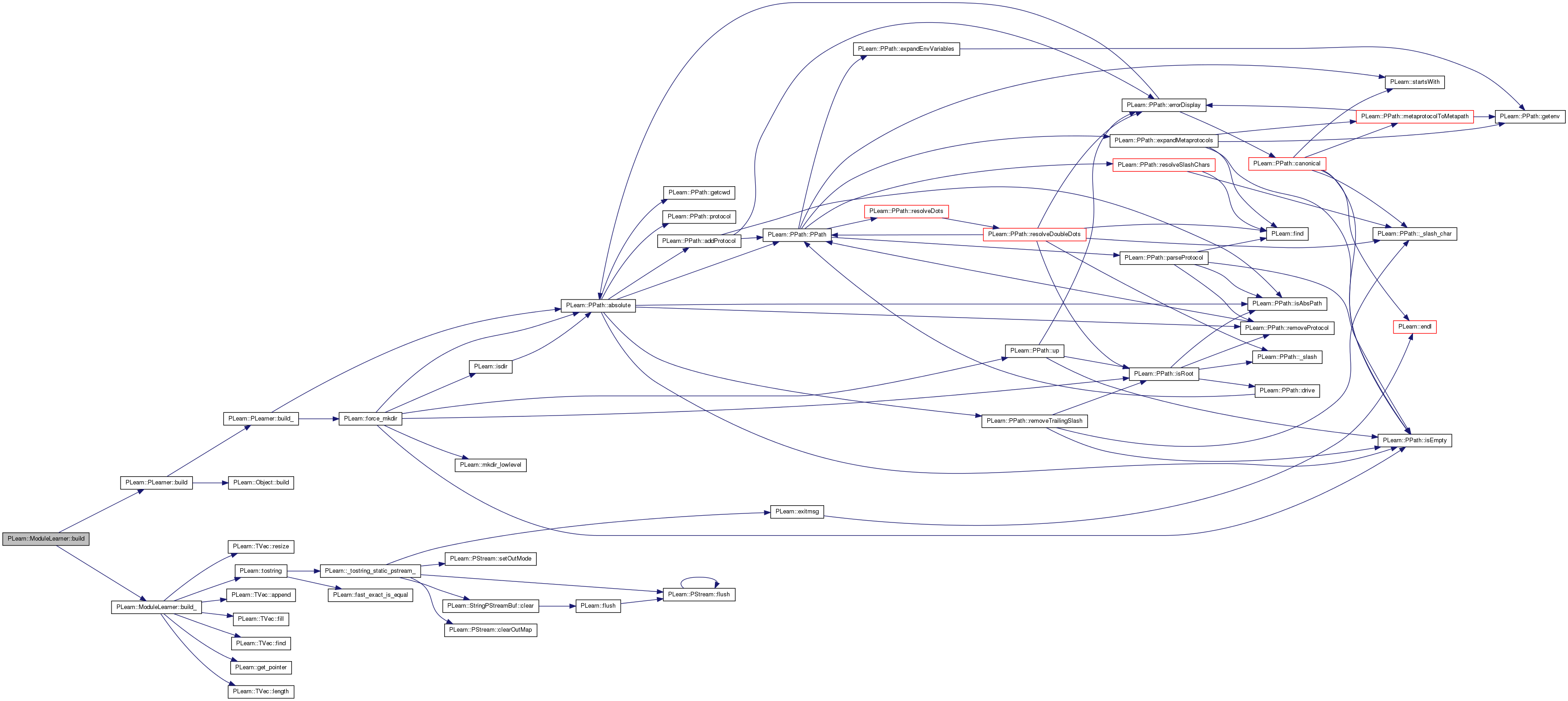

| void PLearn::ModuleLearner::build | ( | ) | [virtual] |

Finishes building the object: calls inherited::build() followed by build_().

Reimplemented from PLearn::PLearner.

Definition at line 240 of file ModuleLearner.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

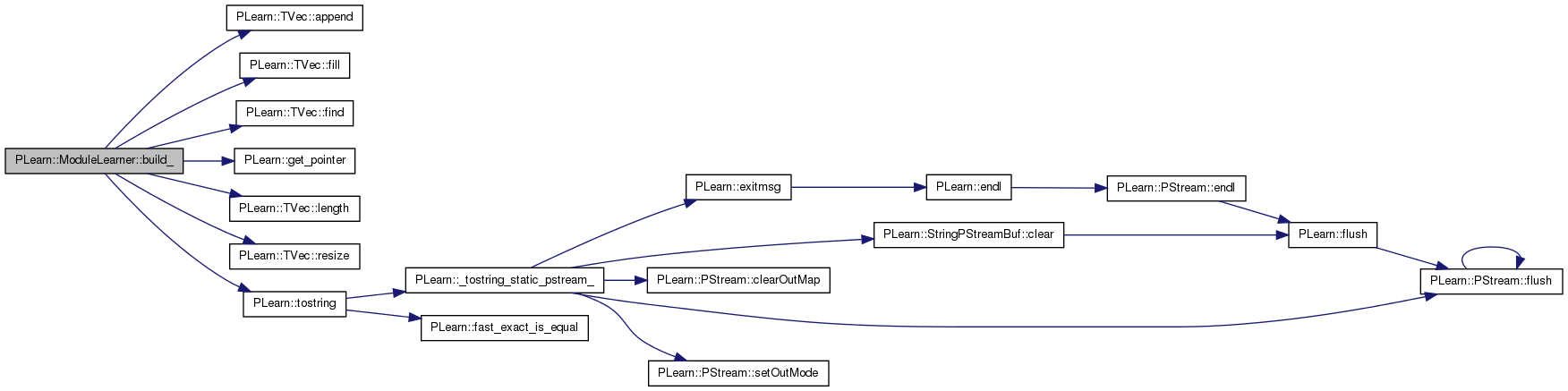

| void PLearn::ModuleLearner::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 151 of file ModuleLearner.cc.

References PLearn::TVec< T >::append(), cost_ports, PLearn::TVec< T >::fill(), PLearn::TVec< T >::find(), PLearn::get_pointer(), i, input_ports, PLearn::TVec< T >::length(), module, network, null_pointers, output_port, PLCHECK, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), store_costs, store_inputs, store_outputs, store_targets, store_weights, target_ports, PLearn::tostring(), and weight_ports.

Referenced by build().

{

if (!module)

// Cannot do anything without an underlying module.

return;

// Forward random number generator to underlying module.

if (!module->random_gen) {

module->random_gen = random_gen;

module->build();

module->forget();

}

// Create a new NetworkModule that connects the ports of the underlying

// module to simple MatrixModules that will provide/store data.

const TVec<string>& ports = module->getPorts();

TVec< PP<OnlineLearningModule> > all_modules;

all_modules.append(module);

TVec< PP<NetworkConnection> > all_connections;

store_inputs = store_targets = store_weights = NULL;

for (int i = 0; i < input_ports.length(); i++) {

if (!store_inputs) {

store_inputs = new MatrixModule("store_inputs", true);

all_modules.append(get_pointer(store_inputs));

}

all_connections.append(new NetworkConnection(

get_pointer(store_inputs), "data",

module, input_ports[i], false));

}

for (int i = 0; i < target_ports.length(); i++) {

if (!store_targets) {

store_targets = new MatrixModule("store_targets", true);

all_modules.append(get_pointer(store_targets));

}

all_connections.append(new NetworkConnection(

get_pointer(store_targets), "data",

module, target_ports[i], false));

}

for (int i = 0; i < weight_ports.length(); i++) {

if (!store_weights) {

store_weights = new MatrixModule("store_weights", true);

all_modules.append(get_pointer(store_weights));

}

all_connections.append(new NetworkConnection(

get_pointer(store_weights), "data",

module, weight_ports[i], false));

}

if (ports.find(output_port) >= 0) {

store_outputs = new MatrixModule("store_outputs", true);

all_modules.append(get_pointer(store_outputs));

all_connections.append(new NetworkConnection(

module, output_port,

get_pointer(store_outputs), "data", false));

} else

store_outputs = NULL;

store_costs.resize(0);

for (int i = 0; i < cost_ports.length(); i++) {

const string& cost_port = cost_ports[i];

PLCHECK( ports.find(cost_port) >= 0 );

PP<MatrixModule> store = new MatrixModule("store_costs_" + tostring(i),

true);

all_modules.append(get_pointer(store));

// Note that only the first connection propagates the gradient (we

// only optimize the first cost).

all_connections.append(new NetworkConnection(

module, cost_port,

get_pointer(store), "data", i == 0));

store_costs.append(store);

}

network = new NetworkModule();

network->modules = all_modules;

network->connections = all_connections;

network->build();

// Initialize the list of null pointers used for forward and backward

// propagation.

null_pointers.resize(module->nPorts());

null_pointers.fill(NULL);

}
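The wiring performed by build_() can be summarized with a standalone sketch. It does not use PLearn: the `Connection` struct and the `wire` function are hypothetical names, the string labels only stand in for NetworkConnection objects, and an exception replaces PLCHECK. It only records which ports are connected, in which order, and which connection propagates the gradient.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-in for a NetworkConnection: source -> destination,
// plus a flag telling whether the gradient flows back through it.
struct Connection {
    std::string src;
    std::string dst;
    bool propagate_gradient;
};

// Follows the order used in build_(): inputs, targets, weights, output, costs.
// 'ports' lists the ports exposed by the underlying module.
std::vector<Connection> wire(const std::vector<std::string>& ports,
                             const std::vector<std::string>& input_ports,
                             const std::vector<std::string>& target_ports,
                             const std::vector<std::string>& weight_ports,
                             const std::string& output_port,
                             const std::vector<std::string>& cost_ports)
{
    auto has_port = [&ports](const std::string& p) {
        return std::find(ports.begin(), ports.end(), p) != ports.end();
    };
    std::vector<Connection> c;
    for (const std::string& p : input_ports)
        c.push_back({"store_inputs.data", "module." + p, false});
    for (const std::string& p : target_ports)
        c.push_back({"store_targets.data", "module." + p, false});
    for (const std::string& p : weight_ports)
        c.push_back({"store_weights.data", "module." + p, false});
    // The output store is only created when the module has the output port.
    if (has_port(output_port))
        c.push_back({"module." + output_port, "store_outputs.data", false});
    for (std::size_t i = 0; i < cost_ports.size(); ++i) {
        // Every cost port must exist (PLCHECK in the original code).
        if (!has_port(cost_ports[i]))
            throw std::invalid_argument("unknown cost port: " + cost_ports[i]);
        // Only the first cost receives a gradient: it is the optimized one.
        c.push_back({"module." + cost_ports[i],
                     "store_costs_" + std::to_string(i) + ".data", i == 0});
    }
    return c;
}
```

With two cost ports, only the connection for the first one has `propagate_gradient` set, which matches the note that the learner optimizes the first cost only.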

| string PLearn::ModuleLearner::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 71 of file ModuleLearner.cc.

Referenced by train().

| void PLearn::ModuleLearner::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 547 of file ModuleLearner.cc.

References computeOutputAndCosts(), PLearn::fast_exact_is_equal(), i, PLearn::TVec< T >::length(), and PLASSERT.

{

// Inefficient implementation (recomputes the output too).

Vec the_output;

computeOutputAndCosts(input, target, the_output, costs);

#ifdef BOUNDCHECK

// Ensure the computed output is the same as the one provided in this

// method.

PLASSERT( output.length() == the_output.length() );

for (int i = 0; i < output.length(); i++) {

PLASSERT( fast_exact_is_equal(output[i], the_output[i]) );

}

#endif

}

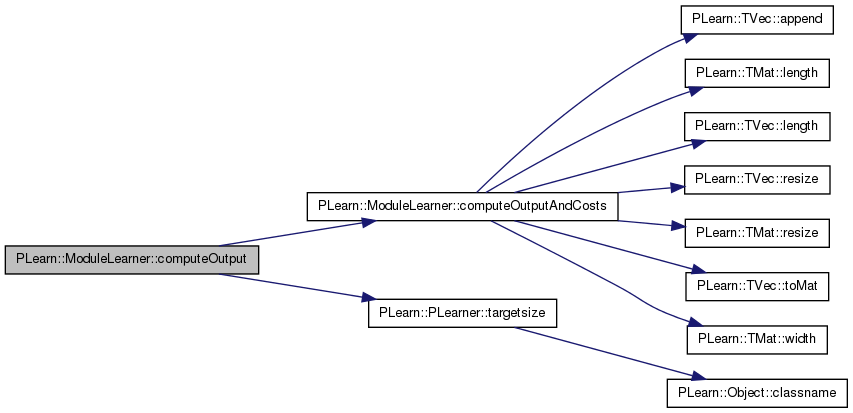

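| void PLearn::ModuleLearner::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.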

Reimplemented from PLearn::PLearner.

Definition at line 536 of file ModuleLearner.cc.

References computeOutputAndCosts(), MISSING_VALUE, and PLearn::PLearner::targetsize().

{

// Inefficient implementation.

Vec target(targetsize(), MISSING_VALUE);

Vec costs;

computeOutputAndCosts(input, target, output, costs);

}

| void PLearn::ModuleLearner::computeOutputAndCosts | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | output, | ||

| Vec & | costs | ||

| ) | const [virtual] |

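Computes the output and the costs in a single forward pass through the network, using a weight of one when the network has a weight input.

This overrides the PLearner default, which calls computeOutput and computeCostsFromOutputs; in this class those two methods are instead implemented on top of computeOutputAndCosts.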

Reimplemented from PLearn::PLearner.

Definition at line 440 of file ModuleLearner.cc.

References all_ones, PLearn::TVec< T >::append(), i, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), network, null_pointers, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), store_costs, store_inputs, store_outputs, store_targets, store_weights, PLearn::TVec< T >::toMat(), and PLearn::TMat< T >::width().

Referenced by computeCostsFromOutputs(), and computeOutput().

{

if (store_inputs)

store_inputs->setData(input.toMat(1, input.length()));

if (store_targets)

store_targets->setData(target.toMat(1, target.length()));

if (store_weights) {

all_ones.resize(1, 1);

all_ones(0, 0) = 1;

store_weights->setData(all_ones);

}

// Forward propagation.

network->fprop(null_pointers);

// Store output.

if (store_outputs) {

const Mat& net_out = store_outputs->getData();

PLASSERT( net_out.length() == 1 );

output.resize(net_out.width());

output << net_out;

} else

output.resize(0);

// Store costs.

costs.resize(0);

for (int i = 0; i < store_costs.length(); i++) {

const Mat& cost_i = store_costs[i]->getData();

PLASSERT( cost_i.length() == 1 );

costs.append(cost_i(0));

}

}

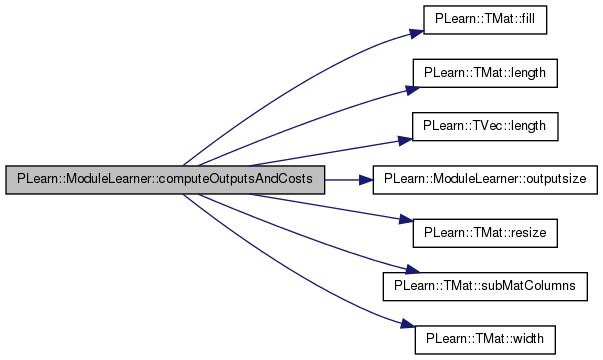

| void PLearn::ModuleLearner::computeOutputsAndCosts | ( | const Mat & | input, |

| const Mat & | target, | ||

| Mat & | output, | ||

| Mat & | costs | ||

| ) | const [virtual] |

Minibatch version of computeOutputAndCosts.

Reimplemented from PLearn::PLearner.

Definition at line 477 of file ModuleLearner.cc.

References all_ones, PLearn::TMat< T >::fill(), i, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), network, null_pointers, outputsize(), PLASSERT, PLearn::TMat< T >::resize(), store_costs, store_inputs, store_outputs, store_targets, store_weights, PLearn::TMat< T >::subMatColumns(), and PLearn::TMat< T >::width().

{

static Mat one;

if (store_inputs)

store_inputs->setData(input);

if (store_targets)

store_targets->setData(target);

if (store_weights) {

if (all_ones.width() != 1 || all_ones.length() != input.length()) {

all_ones.resize(input.length(), 1);

all_ones.fill(1.0);

}

store_weights->setData(all_ones);

}

// Make the store_output temporarily point to output

Mat old_net_out;

Mat* net_out = store_outputs ? &store_outputs->getData()

: NULL;

output.resize(input.length(),outputsize() >= 0 ? outputsize() : 0);

if (net_out) {

old_net_out = *net_out;

*net_out = output;

}

// Forward propagation.

network->fprop(null_pointers);

// Restore store_outputs.

if (net_out)

*net_out = old_net_out;

if (!store_costs) {

// Do not bother with costs.

costs.resize(input.length(), 0);

return;

}

// Copy costs.

// Note that a more efficient implementation may be done when only one cost

// is computed (see code in previous version).

// First compute total size.

int cost_size = 0;

for (int i = 0; i < store_costs.length(); i++)

cost_size += store_costs[i]->getData().width();

// Then resize the 'costs' matrix and fill it.

costs.resize(input.length(), cost_size);

int cost_idx = 0;

for (int i = 0; i < store_costs.length(); i++) {

const Mat& cost_i = store_costs[i]->getData();

PLASSERT( cost_i.length() == costs.length() );

costs.subMatColumns(cost_idx, cost_i.width()) << cost_i;

cost_idx += cost_i.width();

}

}
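The "Copy costs" step above concatenates the per-port cost matrices column by column. A minimal standalone sketch of that step, assuming plain nested `std::vector` matrices instead of PLearn's `Mat` (the `Matrix` alias and `concat_columns` are hypothetical names):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major matrix stored as a vector of rows (stand-in for PLearn's Mat).
using Matrix = std::vector<std::vector<double>>;

// Concatenates the matrices in 'parts' column-wise. Every matrix must have
// exactly 'n_rows' rows, one per sample of the minibatch.
Matrix concat_columns(const std::vector<Matrix>& parts, std::size_t n_rows)
{
    // First compute the total width.
    std::size_t total_width = 0;
    for (const Matrix& m : parts) {
        assert(m.size() == n_rows);
        total_width += m.empty() ? 0 : m[0].size();
    }
    // Then allocate the result and copy each block at its column offset.
    Matrix out(n_rows, std::vector<double>(total_width, 0.0));
    std::size_t col = 0;
    for (const Matrix& m : parts) {
        const std::size_t width = m.empty() ? 0 : m[0].size();
        for (std::size_t r = 0; r < n_rows; ++r)
            for (std::size_t c = 0; c < width; ++c)
                out[r][col + c] = m[r][c];
        col += width;
    }
    return out;
}
```

A cost port of width 1 followed by one of width 2 therefore yields a three-column costs matrix, with one row per sample.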

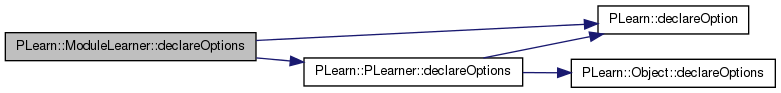

| void PLearn::ModuleLearner::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 95 of file ModuleLearner.cc.

References batch_size, PLearn::OptionBase::buildoption, cost_ports, PLearn::declareOption(), PLearn::PLearner::declareOptions(), input_ports, PLearn::OptionBase::learntoption, mbatch_size, module, operate_on_bags, output_port, reset_seed_upon_train, target_ports, and weight_ports.

{

declareOption(ol, "module", &ModuleLearner::module,

OptionBase::buildoption,

"The module being optimized.");

declareOption(ol, "batch_size", &ModuleLearner::batch_size,

OptionBase::buildoption,

"User-specified number of samples fed to the network at each iteration of learning.\n"

"Use '0' for full batch learning.");

declareOption(ol, "reset_seed_upon_train", &ModuleLearner::reset_seed_upon_train,

OptionBase::buildoption,

"Whether to reset the random generator seed upon starting the train\n"

"method. If positive this is the seed. If -1 use the value of the\n"

"option 'use_a_separate_random_generator_for_testing'.\n");

declareOption(ol, "cost_ports", &ModuleLearner::cost_ports,

OptionBase::buildoption,

"List of ports that contain costs being computed (the first cost is\n"

"also the only one being optimized by this learner).");

declareOption(ol, "input_ports", &ModuleLearner::input_ports,

OptionBase::buildoption,

"List of ports that take the input part of a sample as input.");

declareOption(ol, "target_ports", &ModuleLearner::target_ports,

OptionBase::buildoption,

"List of ports that take the target part of a sample as input.");

declareOption(ol, "weight_ports", &ModuleLearner::weight_ports,

OptionBase::buildoption,

"List of ports that take the weight part of a sample as input.");

declareOption(ol, "output_port", &ModuleLearner::output_port,

OptionBase::buildoption,

"The port that will contain the output of the learner.");

declareOption(ol, "operate_on_bags", &ModuleLearner::operate_on_bags,

OptionBase::buildoption,

"If true, then each training step will be done on batch_size *bags*\n"

"of samples (instead of batch_size samples).");

declareOption(ol, "mbatch_size", &ModuleLearner::mbatch_size,

OptionBase::learntoption,

"Effective 'batch_size': it takes the same value as 'batch_size'\n"

"except when 'batch_size' is set to 0, and this\n"

"option takes the value of the size of the training set.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
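The meaning of 'reset_seed_upon_train' is easier to see as a small decision function. The sketch below is standalone C++17 and only mirrors the branches found in train(); `seed_for_training` is a hypothetical helper, and an exception stands in for PLERROR.

```cpp
#include <cassert>
#include <optional>
#include <stdexcept>

// Returns the seed that would be passed to manual_seed() at the start of
// training, or std::nullopt when the generator is left untouched.
std::optional<long> seed_for_training(
    int reset_seed_upon_train,
    long use_a_separate_random_generator_for_testing)
{
    if (reset_seed_upon_train == 0)
        return std::nullopt;  // default: do not reseed
    if (reset_seed_upon_train > 0)
        return static_cast<long>(reset_seed_upon_train);  // value is the seed
    if (reset_seed_upon_train == -1)
        return use_a_separate_random_generator_for_testing;  // reuse that option
    throw std::invalid_argument("reset_seed_upon_train should be >= -1");
}
```

Any value below -1 is rejected, matching the error raised in train().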

| static const PPath& PLearn::ModuleLearner::declaringFile | ( | ) | [inline, static] |

| ModuleLearner * PLearn::ModuleLearner::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file ModuleLearner.cc.

| void PLearn::ModuleLearner::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 282 of file ModuleLearner.cc.

References PLearn::PLearner::forget(), mbatch_size, and module.

{

inherited::forget();

if (module)

module->forget();

mbatch_size = -1;

}

| OptionList & PLearn::ModuleLearner::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 71 of file ModuleLearner.cc.

| OptionMap & PLearn::ModuleLearner::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 71 of file ModuleLearner.cc.

| RemoteMethodMap & PLearn::ModuleLearner::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 71 of file ModuleLearner.cc.

| TVec< string > PLearn::ModuleLearner::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 566 of file ModuleLearner.cc.

References cost_ports.

{

return cost_ports;

}

| TVec< string > PLearn::ModuleLearner::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 574 of file ModuleLearner.cc.

References cost_ports.

{

return cost_ports;

}

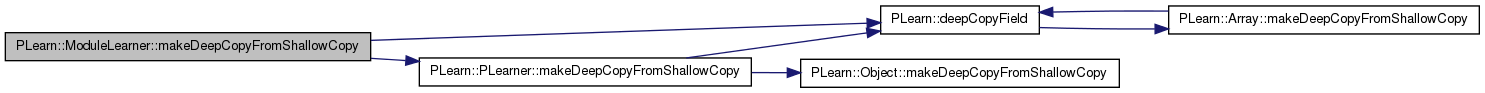

| void PLearn::ModuleLearner::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 249 of file ModuleLearner.cc.

References all_ones, cost_ports, PLearn::deepCopyField(), input_ports, PLearn::PLearner::makeDeepCopyFromShallowCopy(), module, network, null_pointers, store_costs, store_inputs, store_outputs, store_targets, store_weights, target_ports, tmp_costs, and weight_ports.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(module, copies);

deepCopyField(cost_ports, copies);

deepCopyField(input_ports, copies);

deepCopyField(target_ports, copies);

deepCopyField(weight_ports, copies);

deepCopyField(store_inputs, copies);

deepCopyField(store_targets, copies);

deepCopyField(store_weights, copies);

deepCopyField(store_outputs, copies);

deepCopyField(store_costs, copies);

deepCopyField(network, copies);

deepCopyField(null_pointers, copies);

deepCopyField(all_ones, copies);

deepCopyField(tmp_costs, copies);

}

| int PLearn::ModuleLearner::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 271 of file ModuleLearner.cc.

References module, output_port, and store_outputs.

Referenced by computeOutputsAndCosts().

{

if ( module && store_outputs )

return module->getPortWidth(output_port);

else

return -1; // Undefined.

}

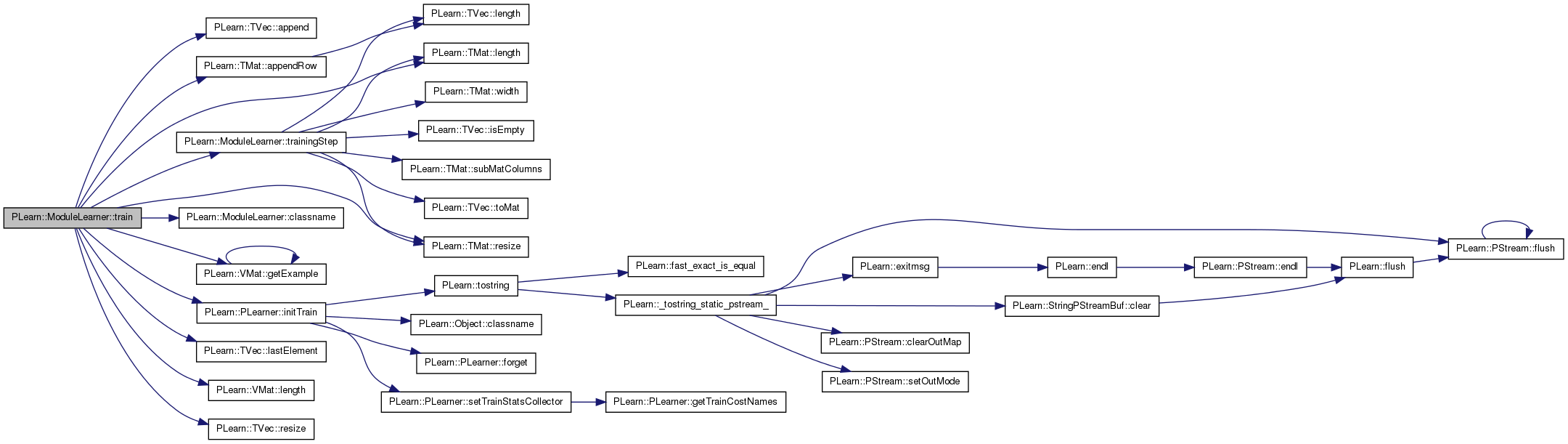

| void PLearn::ModuleLearner::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 295 of file ModuleLearner.cc.

References PLearn::TVec< T >::append(), PLearn::TMat< T >::appendRow(), batch_size, classname(), PLearn::OnlineLearningModule::during_training, PLearn::VMat::getExample(), PLearn::PLearner::initTrain(), PLearn::TVec< T >::lastElement(), PLearn::TMat< T >::length(), PLearn::VMat::length(), mbatch_size, PLearn::PLearner::nstages, operate_on_bags, PLASSERT, PLERROR, PLWARNING, PLearn::PLearner::random_gen, PLearn::PLearner::report_progress, reset_seed_upon_train, PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), PLearn::PLearner::stage, store_weights, PLearn::SumOverBagsVariable::TARGET_COLUMN_LAST, PLearn::PLearner::train_set, PLearn::PLearner::train_stats, trainingStep(), and PLearn::PLearner::use_a_separate_random_generator_for_testing.

{

if (!initTrain())

return;

if (reset_seed_upon_train)

{

if (reset_seed_upon_train>0)

random_gen->manual_seed(reset_seed_upon_train);

else if (reset_seed_upon_train==-1)

random_gen->manual_seed(use_a_separate_random_generator_for_testing);

else PLERROR("ModuleLearner::reset_seed_upon_train should be >=-1");

}

OnlineLearningModule::during_training=true;

// Perform training set-dependent initialization here.

if (batch_size == 0)

mbatch_size = train_set->length();

else

mbatch_size = batch_size;

if (train_set->weightsize() >= 1 && !store_weights)

PLWARNING("In ModuleLearner::train - The training set contains "

"weights, but the network is not using them");

Mat inputs, targets;

Vec weights;

PP<ProgressBar> pb = NULL;

// clear statistics of previous calls

train_stats->forget();

int stage_init = stage;

if (report_progress)

pb = new ProgressBar( "Training " + classname(), nstages - stage);

if( operate_on_bags && batch_size>0 )

while ( stage < nstages ) {

// Obtain training samples.

int sample_start = stage % train_set->length();

int isample = sample_start;

inputs.resize(0,0);

targets.resize(0,0);

weights.resize(0);

for( int nbags = 0; nbags < mbatch_size; nbags++ ) {

int bag_info = 0;

while( !(bag_info & SumOverBagsVariable::TARGET_COLUMN_LAST) ) {

PLASSERT( isample < train_set->length() );

Vec input, target; real weight;

train_set->getExample(isample, input, target, weight);

inputs.appendRow(input);

targets.appendRow(target);

weights.append( weight );

bag_info = int(round(target.lastElement()));

isample ++;

}

isample = isample % train_set->length();

}

if( stage + inputs.length() > nstages )

break;

// Perform a training step.

trainingStep(inputs, targets, weights);

// Handle training progress.

stage += inputs.length();

if (report_progress)

pb->update(stage - stage_init);

}

else

while (stage + mbatch_size <= nstages) {

// Obtain training samples.

int sample_start = stage % train_set->length();

train_set->getExamples(sample_start, mbatch_size, inputs, targets,

weights, NULL, true);

// Perform a training step.

trainingStep(inputs, targets, weights);

// Handle training progress.

stage += mbatch_size;

if (report_progress)

pb->update(stage - stage_init);

}

if (stage != nstages)

{

if( operate_on_bags && batch_size>0 )

PLWARNING("In ModuleLearner::train - The network was trained for "

"only %d stages (instead of nstages = %d, which could not "

"be fulfilled with batch_size of %d bags)", stage, nstages, batch_size);

else

PLWARNING("In ModuleLearner::train - The network was trained for "

"only %d stages (instead of nstages = %d, which is not a "

"multiple of batch_size = %d)", stage, nstages, batch_size);

}

OnlineLearningModule::during_training=false;

// finalize statistics for this call

train_stats->finalize();

}
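When 'operate_on_bags' is set, each training step gathers whole bags rather than a fixed number of samples. The standalone sketch below reproduces only the index bookkeeping of that branch. It assumes that the bag flag stored in the last target column uses bit value 2 for "last sample of the bag" (`kTargetColumnLast` is an assumed constant standing in for SumOverBagsVariable::TARGET_COLUMN_LAST), and `collect_bags` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed value of the "last sample of a bag" bit flag.
constexpr int kTargetColumnLast = 2;

// Returns the sample indices gathered for one training step: 'n_bags'
// complete bags starting at sample 'start'. 'bag_info[i]' is the flag read
// from the last target column of sample i.
std::vector<std::size_t> collect_bags(const std::vector<int>& bag_info,
                                      std::size_t start, int n_bags)
{
    std::vector<std::size_t> rows;
    std::size_t i = start;
    for (int b = 0; b < n_bags; ++b) {
        int info = 0;
        // Keep appending samples until the one flagged as last in its bag.
        while (!(info & kTargetColumnLast)) {
            assert(i < bag_info.size());  // a bag must end inside the set
            rows.push_back(i);
            info = bag_info[i];
            ++i;
        }
        // Wrap around only between bags, as the original loop does.
        i %= bag_info.size();
    }
    return rows;
}
```

Because 'stage' is advanced by the number of samples gathered, not by the number of bags, the number of stages consumed per step varies with the bag sizes.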

| void PLearn::ModuleLearner::trainingStep | ( | const Mat & | inputs, |

| const Mat & | targets, | ||

| const Vec & | weights | ||

| ) | [protected] |

Perform one training step for the given batch inputs, targets and weights.

Definition at line 394 of file ModuleLearner.cc.

References i, PLearn::TVec< T >::isEmpty(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), network, null_pointers, PLASSERT, PLearn::TMat< T >::resize(), store_costs, store_inputs, store_targets, store_weights, PLearn::TMat< T >::subMatColumns(), tmp_costs, PLearn::TVec< T >::toMat(), PLearn::PLearner::train_stats, and PLearn::TMat< T >::width().

Referenced by train().

{

// Fill in the provided batch values (only if they are actually used by the

// network).

if (store_inputs)

store_inputs->setData(inputs);

if (store_targets)

store_targets->setData(targets);

if (store_weights)

store_weights->setData(weights.toMat(weights.length(), 1));

// Forward propagation.

network->fprop(null_pointers);

// Copy the costs into a single matrix.

// First compute total size.

int cost_size = 0;

for (int i = 0; i < store_costs.length(); i++)

cost_size += store_costs[i]->getData().width();

// Then resize the 'tmp_costs' matrix and fill it.

tmp_costs.resize(inputs.length(), cost_size);

int cost_idx = 0;

for (int i = 0; i < store_costs.length(); i++) {

const Mat& cost_i = store_costs[i]->getData();

PLASSERT( cost_i.length() == tmp_costs.length() );

tmp_costs.subMatColumns(cost_idx, cost_i.width()) << cost_i;

cost_idx += cost_i.width();

}

// Then update the training statistics.

train_stats->update(tmp_costs);

// Initialize cost gradients to 1.

// Note that we may not need to re-do it at every iteration, but this is so

// cheap it should not impact performance.

if (!store_costs.isEmpty())

store_costs[0]->setGradientTo(1);

// Backpropagation.

network->bpropAccUpdate(null_pointers, null_pointers);

}

StaticInitializer PLearn::ModuleLearner::_static_initializer_ [static] |

Reimplemented from PLearn::PLearner.

Definition at line 136 of file ModuleLearner.h.

Mat PLearn::ModuleLearner::all_ones [mutable, private] |

Matrix that contains only ones (used to fill weights at test time).

Definition at line 192 of file ModuleLearner.h.

Referenced by computeOutputAndCosts(), computeOutputsAndCosts(), and makeDeepCopyFromShallowCopy().

int PLearn::ModuleLearner::batch_size |

Definition at line 60 of file ModuleLearner.h.

Referenced by declareOptions(), and train().

TVec<string> PLearn::ModuleLearner::cost_ports |

Definition at line 61 of file ModuleLearner.h.

Referenced by build_(), declareOptions(), getTestCostNames(), getTrainCostNames(), and makeDeepCopyFromShallowCopy().

TVec<string> PLearn::ModuleLearner::input_ports |

Definition at line 62 of file ModuleLearner.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

int PLearn::ModuleLearner::mbatch_size [protected] |

Definition at line 176 of file ModuleLearner.h.

Referenced by declareOptions(), forget(), and train().
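The relation between 'batch_size', 'mbatch_size' and the number of stages actually trained can be checked with a small simulation of the non-bag loop in train(). This is a standalone sketch: `TrainResult` and `simulate_train` are hypothetical names, and no real training takes place.

```cpp
#include <cassert>

// Outcome of the simulated loop.
struct TrainResult {
    int mbatch_size;  // effective batch size
    int final_stage;  // value of 'stage' when the loop stops
    int steps;        // number of training steps performed
};

// Mirrors the bookkeeping of the non-bag branch of train(): a step is only
// taken when a full minibatch still fits before 'nstages'.
TrainResult simulate_train(int stage, int nstages, int batch_size,
                           int train_set_length)
{
    // batch_size == 0 means full batch learning.
    const int mbatch = (batch_size == 0) ? train_set_length : batch_size;
    assert(mbatch > 0);  // guards the sketch against an endless loop
    int steps = 0;
    while (stage + mbatch <= nstages) {
        stage += mbatch;
        ++steps;
    }
    return {mbatch, stage, steps};
}
```

For instance, with nstages = 100 and batch_size = 32, three steps are taken and training stops at stage 96, which is the situation the warning at the end of train() reports.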

PP<OnlineLearningModule> PLearn::ModuleLearner::module |

Definition at line 58 of file ModuleLearner.h.

Referenced by build_(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and outputsize().

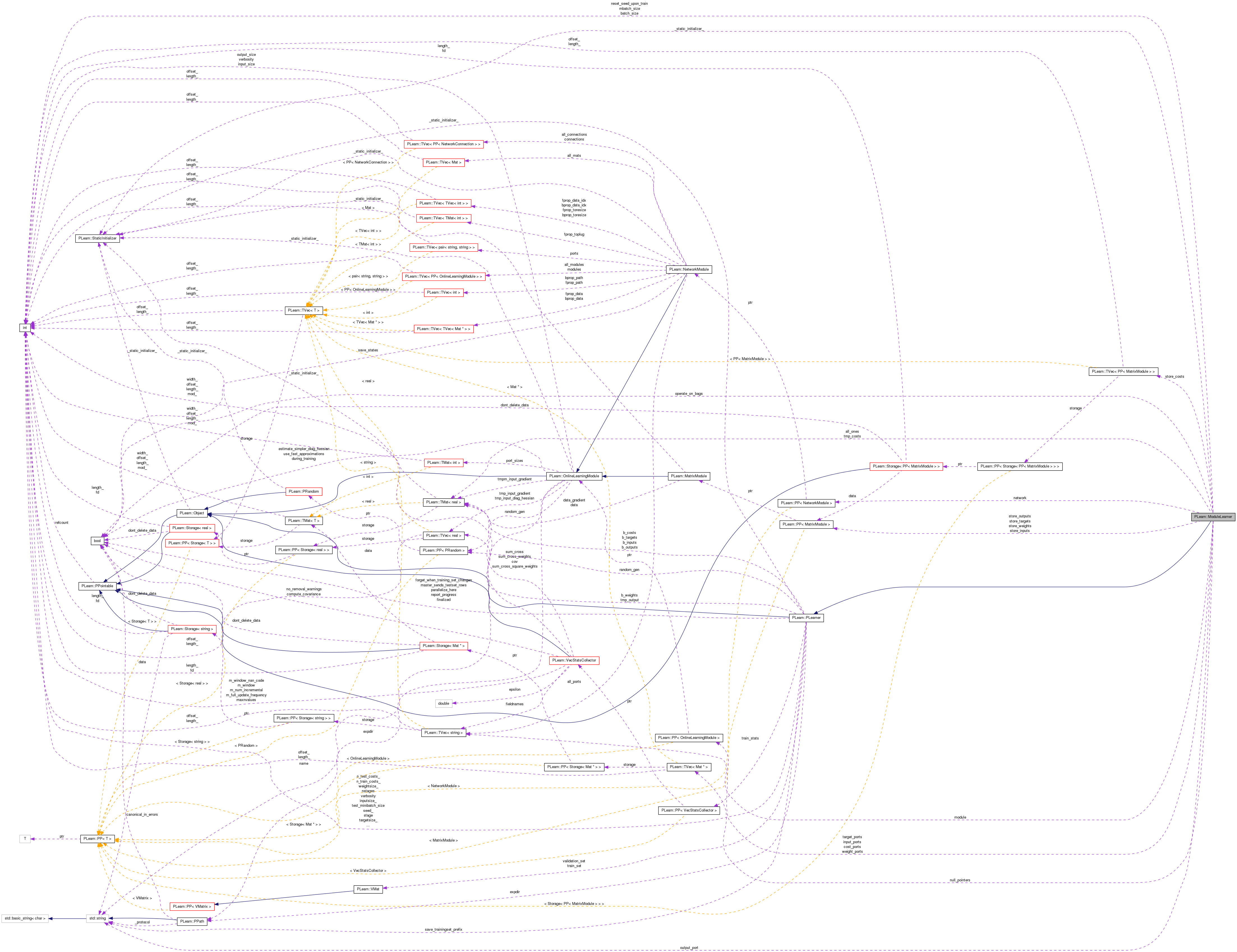

PP<NetworkModule> PLearn::ModuleLearner::network [protected] |

The network consisting of the optimized module and the additional modules described above.

Definition at line 168 of file ModuleLearner.h.

Referenced by build_(), computeOutputAndCosts(), computeOutputsAndCosts(), makeDeepCopyFromShallowCopy(), and trainingStep().

TVec<Mat*> PLearn::ModuleLearner::null_pointers [protected] |

The list of (null) pointers to matrices being given as argument to the network's fprop and bpropAccUpdate methods.

Definition at line 172 of file ModuleLearner.h.

Referenced by build_(), computeOutputAndCosts(), computeOutputsAndCosts(), makeDeepCopyFromShallowCopy(), and trainingStep().

bool PLearn::ModuleLearner::operate_on_bags |

Definition at line 67 of file ModuleLearner.h.

Referenced by declareOptions(), and train().

string PLearn::ModuleLearner::output_port |

Definition at line 65 of file ModuleLearner.h.

Referenced by build_(), declareOptions(), and outputsize().

int PLearn::ModuleLearner::reset_seed_upon_train |

Definition at line 69 of file ModuleLearner.h.

Referenced by declareOptions(), and train().

TVec< PP<MatrixModule> > PLearn::ModuleLearner::store_costs [protected] |

Simple modules that will contain the network's costs at the end of a fprop step.

Definition at line 164 of file ModuleLearner.h.

Referenced by build_(), computeOutputAndCosts(), computeOutputsAndCosts(), makeDeepCopyFromShallowCopy(), and trainingStep().

PP<MatrixModule> PLearn::ModuleLearner::store_inputs [protected] |

YB NOTE: MAYBE WE DO NOT NEED store_* to be MatrixModules, just Mat?

Simple module used to initialize the network's inputs.

Definition at line 150 of file ModuleLearner.h.

Referenced by build_(), computeOutputAndCosts(), computeOutputsAndCosts(), makeDeepCopyFromShallowCopy(), and trainingStep().

PP<MatrixModule> PLearn::ModuleLearner::store_outputs [protected] |

Simple module that will contain the network's outputs at the end of a fprop step.

Definition at line 160 of file ModuleLearner.h.

Referenced by build_(), computeOutputAndCosts(), computeOutputsAndCosts(), makeDeepCopyFromShallowCopy(), and outputsize().

PP<MatrixModule> PLearn::ModuleLearner::store_targets [protected] |

Simple module used to initialize the network's targets.

Definition at line 153 of file ModuleLearner.h.

Referenced by build_(), computeOutputAndCosts(), computeOutputsAndCosts(), makeDeepCopyFromShallowCopy(), and trainingStep().

PP<MatrixModule> PLearn::ModuleLearner::store_weights [protected] |

Simple module used to initialize the network's weights.

Definition at line 156 of file ModuleLearner.h.

Referenced by build_(), computeOutputAndCosts(), computeOutputsAndCosts(), makeDeepCopyFromShallowCopy(), train(), and trainingStep().

TVec<string> PLearn::ModuleLearner::target_ports |

Definition at line 63 of file ModuleLearner.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

Mat PLearn::ModuleLearner::tmp_costs [mutable, private] |

Matrix that stores a copy of the costs (used to update the cost statistics).

Definition at line 196 of file ModuleLearner.h.

Referenced by makeDeepCopyFromShallowCopy(), and trainingStep().

TVec<string> PLearn::ModuleLearner::weight_ports |

Definition at line 64 of file ModuleLearner.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

1.7.4
