PLearn 0.1

#include <WordNetOntology.h>

Definition at line 162 of file WordNetOntology.h.

PLearn::WordNetOntology::WordNetOntology()

Definition at line 51 of file WordNetOntology.cc.

{

init();

createBaseSynsets();

}

PLearn::WordNetOntology::WordNetOntology(string voc_file, bool differentiate_unknown_words, bool pre_compute_ancestors, bool pre_compute_descendants, int wn_pos_type = ALL_WN_TYPE, int word_coverage_threshold = -1)

Definition at line 57 of file WordNetOntology.cc.

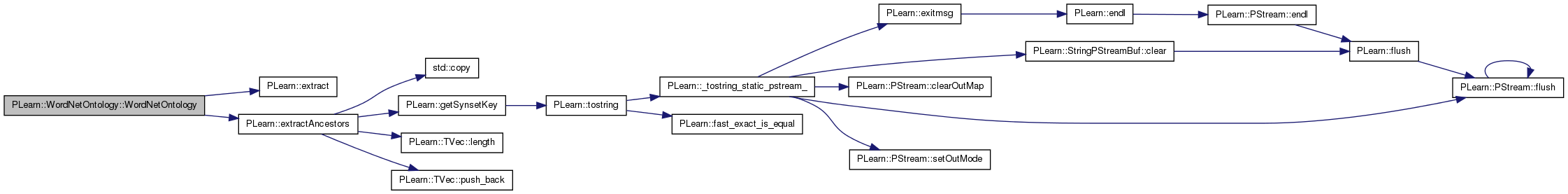

References PLearn::extract() and PLearn::extractAncestors().

{

init(differentiate_unknown_words);

createBaseSynsets();

extract(voc_file, wn_pos_type);

if (pre_compute_descendants)

extractDescendants();

if (pre_compute_ancestors)

extractAncestors(word_coverage_threshold, true, true);

}

PLearn::WordNetOntology::WordNetOntology(string voc_file, string synset_file, string ontology_file, bool pre_compute_ancestors, bool pre_compute_descendants, int word_coverage_threshold = -1)

Definition at line 73 of file WordNetOntology.cc.

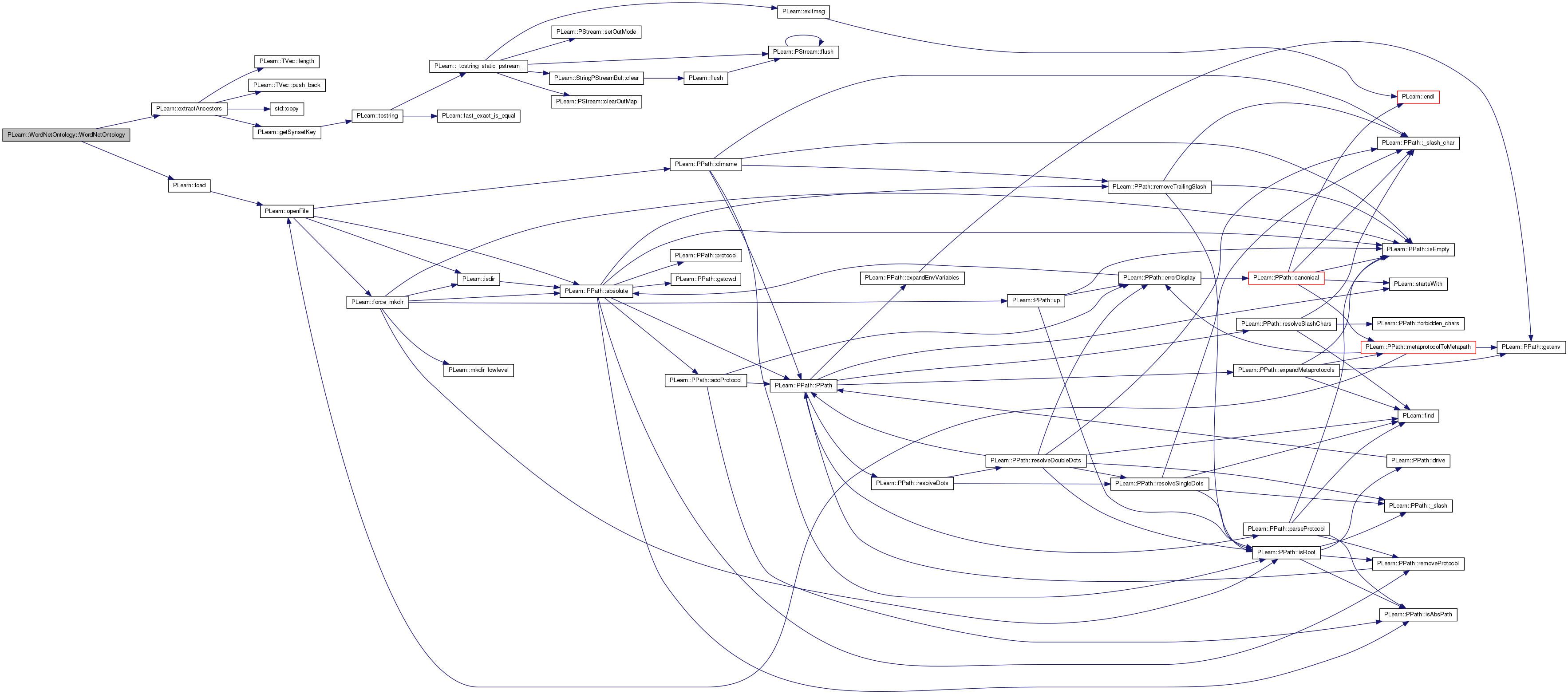

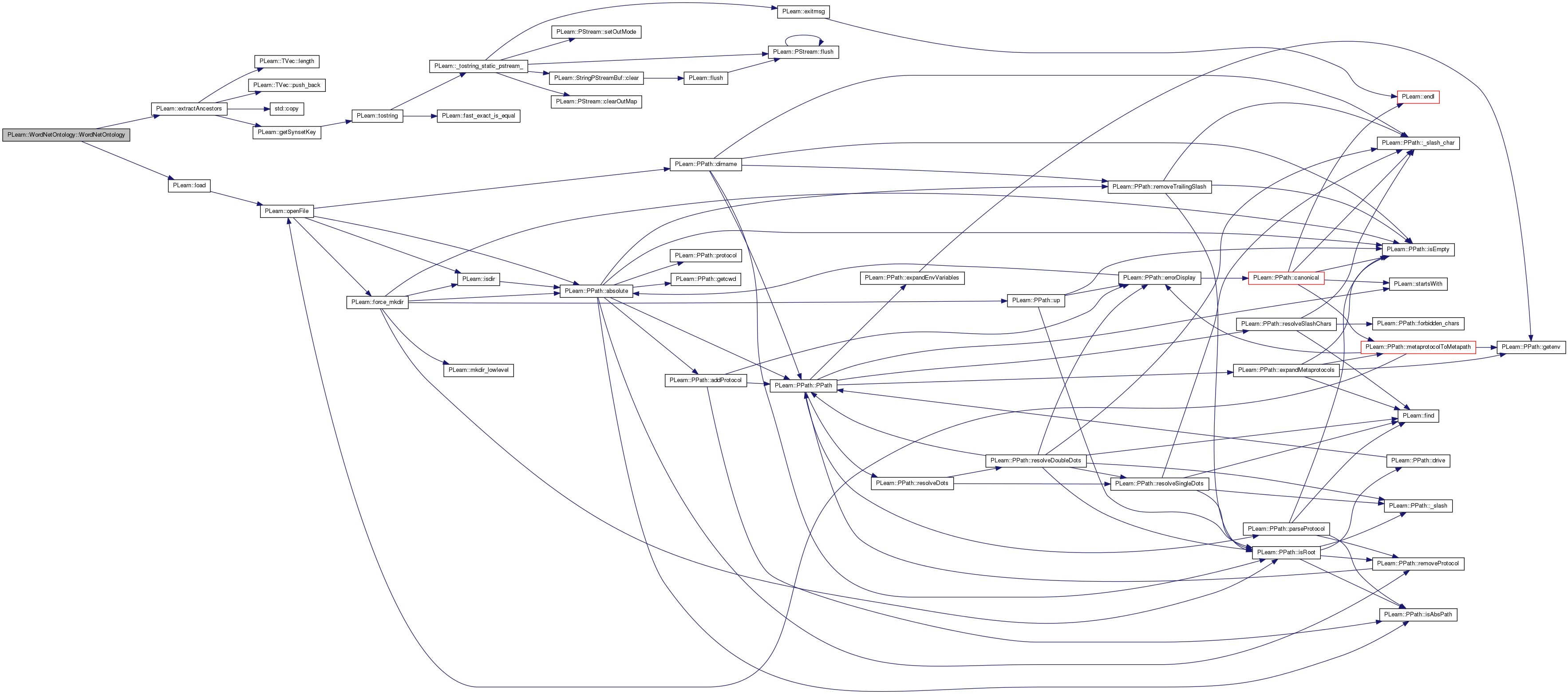

References PLearn::extractAncestors() and PLearn::load().

{

init();

//createBaseSynsets();

load(voc_file, synset_file, ontology_file);

if (pre_compute_descendants)

extractDescendants();

if (pre_compute_ancestors)

extractAncestors(word_coverage_threshold, true, true);

}

PLearn::WordNetOntology::WordNetOntology(string voc_file, string synset_file, string ontology_file, string sense_key_file, bool pre_compute_ancestors, bool pre_compute_descendants, int word_coverage_threshold = -1)

Definition at line 89 of file WordNetOntology.cc.

References PLearn::extractAncestors() and PLearn::load().

{

init();

//createBaseSynsets();

load(voc_file, synset_file, ontology_file, sense_key_file);

if (pre_compute_descendants)

extractDescendants();

if (pre_compute_ancestors)

extractAncestors(word_coverage_threshold, true, true);

}

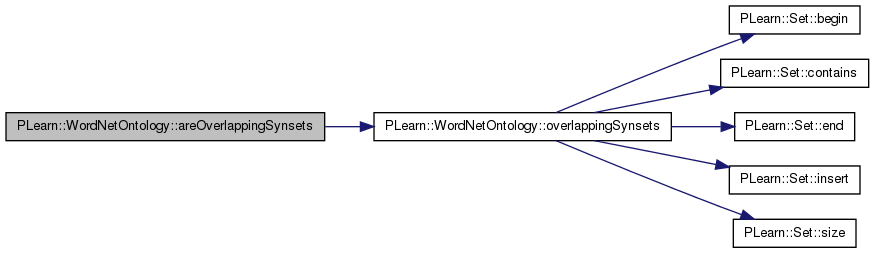

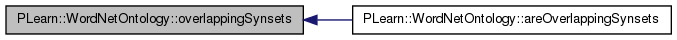

Definition at line 334 of file WordNetOntology.h.

References overlappingSynsets().

{ return (overlappingSynsets(ss_id1, ss_id2) > 1); }

bool PLearn::WordNetOntology::catchSpecialTags(string word)

Definition at line 763 of file WordNetOntology.cc.

References BOS_SS_ID, BOS_TAG, EOS_SS_ID, EOS_TAG, NUMERIC_SS_ID, NUMERIC_TAG, OOV_SS_ID, OOV_TAG, PROPER_NOUN_SS_ID, PROPER_NOUN_TAG, PUNCTUATION_SS_ID, PUNCTUATION_TAG, STOP_SS_ID, and STOP_TAG.

{

int word_id = words_id[word];

if (word == OOV_TAG)

{

word_to_senses[word_id].insert(OOV_SS_ID);

sense_to_words[OOV_SS_ID].insert(word_id);

return true;

} else if (word == PROPER_NOUN_TAG)

{

word_to_senses[word_id].insert(PROPER_NOUN_SS_ID);

sense_to_words[PROPER_NOUN_SS_ID].insert(word_id);

return true;

} else if (word == NUMERIC_TAG)

{

word_to_senses[word_id].insert(NUMERIC_SS_ID);

sense_to_words[NUMERIC_SS_ID].insert(word_id);

return true;

} else if (word == PUNCTUATION_TAG)

{

word_to_senses[word_id].insert(PUNCTUATION_SS_ID);

sense_to_words[PUNCTUATION_SS_ID].insert(word_id);

return true;

} else if (word == STOP_TAG)

{

word_to_senses[word_id].insert(STOP_SS_ID);

sense_to_words[STOP_SS_ID].insert(word_id);

return true;

} else if (word == BOS_TAG)

{

word_to_senses[word_id].insert(BOS_SS_ID);

sense_to_words[BOS_SS_ID].insert(word_id);

return true;

} else if (word == EOS_TAG)

{

word_to_senses[word_id].insert(EOS_SS_ID);

sense_to_words[EOS_SS_ID].insert(word_id);

return true;

}

return false;

}
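The chain of comparisons above maps each special tag to one reserved synset and records the link in both directions. The same idea can be sketched with a lookup table. This is only an illustration: `SpecialTagIndex` is a hypothetical stand-in for the class members, the word id is passed in rather than looked up in `words_id`, and the tag strings and numeric ids are placeholder values, not the constants PLearn defines.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>

// Hypothetical stand-in for the word/sense maps held by WordNetOntology.
struct SpecialTagIndex {
    std::map<std::string, int> tag_to_ss_id;       // special tag -> reserved synset id
    std::map<int, std::set<int> > word_to_senses;  // word id -> synset ids
    std::map<int, std::set<int> > sense_to_words;  // synset id -> word ids

    SpecialTagIndex() {
        // Placeholder tags and ids, chosen only for this example.
        tag_to_ss_id["<oov>"] = 2;
        tag_to_ss_id["<proper_noun>"] = 3;
        tag_to_ss_id["<numeric>"] = 4;
    }

    // Returns true and links word_id to the reserved synset when `word`
    // is a special tag; returns false and changes nothing otherwise.
    bool catchSpecialTag(const std::string& word, int word_id) {
        assert(word_id >= 0);
        std::map<std::string, int>::const_iterator it = tag_to_ss_id.find(word);
        if (it == tag_to_ss_id.end())
            return false;
        word_to_senses[word_id].insert(it->second);
        sense_to_words[it->second].insert(word_id);
        return true;
    }
};
```

With a table, supporting a new tag means adding one entry instead of one more `else if` branch.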

Node * PLearn::WordNetOntology::checkForAlreadyExtractedSynset(SynsetPtr ssp)

Definition at line 1038 of file WordNetOntology.cc.

References PLearn::Node::fnum, PLearn::Node::gloss, PLearn::Node::hereiam, and PLearn::Node::syns.

{

vector<string> syns = getSynsetWords(ssp);

string gloss = ssp->defn;

long offset = ssp->hereiam;

int fnum = ssp->fnum;

for (map<int, Node*>::iterator it = synsets.begin(); it != synsets.end(); ++it)

{

Node* node = it->second;

if (node->syns == syns && node->gloss == gloss && node->hereiam == offset && node->fnum == fnum)

{

return node;

}

}

return NULL;

}
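The lookup above walks every entry of `synsets` on each call, so its cost grows with the number of synsets already extracted. If one is willing to assume that the pair (file number, offset) identifies a synset on its own (the listing also compares the words and the gloss, so this is an assumption, not a documented guarantee), a secondary index could answer the same question without a full scan. `MiniNode` and `SynsetIndex` below are hypothetical names used for the sketch.

```cpp
#include <cassert>
#include <map>
#include <utility>

// Hypothetical, reduced node: only the two fields used as a key here.
struct MiniNode {
    int ss_id;
    int fnum;      // WordNet file number
    long hereiam;  // offset of the synset in its data file
};

class SynsetIndex {
public:
    // Registers a node under its (fnum, offset) key.
    void add(MiniNode* node) {
        assert(node != nullptr);
        by_location_[std::make_pair(node->fnum, node->hereiam)] = node;
    }

    // Returns the node stored at that location, or nullptr if there is none.
    MiniNode* find(int fnum, long offset) const {
        std::map<std::pair<int, long>, MiniNode*>::const_iterator it =
            by_location_.find(std::make_pair(fnum, offset));
        return it == by_location_.end() ? nullptr : it->second;
    }

private:
    std::map<std::pair<int, long>, MiniNode*> by_location_;
};
```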

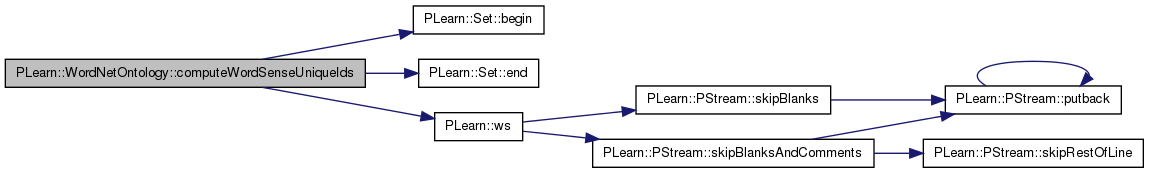

void PLearn::WordNetOntology::computeWordSenseUniqueIds()

Definition at line 2788 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::end(), PLERROR, w, and PLearn::ws().

{

int unique_id = 0;

for (map<int, Set>::iterator wit = word_to_senses.begin(); wit != word_to_senses.end(); ++wit)

{

int w = wit->first;

Set senses = wit->second;

for (SetIterator sit = senses.begin(); sit != senses.end(); ++sit)

{

int s = *sit;

pair<int, int> ws(w, s);

if (word_sense_to_unique_id.find(ws) != word_sense_to_unique_id.end())

PLERROR("in computeWordSenseUniqueIds(): dupe word/sense keys (w = %d, s = %d)", w, s);

word_sense_to_unique_id[ws] = unique_id++;

}

}

are_word_sense_unique_ids_computed = true;

}
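The numbering scheme can be tried in isolation with standard containers. The sketch below assumes `std::map` and `std::set` in place of PLearn's `Set`, and uses `assert` where the listing calls `PLERROR`; `assignUniqueIds` is a hypothetical free function, not part of the class.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <utility>

typedef std::map<int, std::set<int> > WordToSenses;
typedef std::map<std::pair<int, int>, int> WordSenseToId;

// Numbers every (word id, sense id) pair consecutively from 0, in map order.
WordSenseToId assignUniqueIds(const WordToSenses& word_to_senses) {
    WordSenseToId ids;
    int next_id = 0;
    for (WordToSenses::const_iterator w = word_to_senses.begin();
         w != word_to_senses.end(); ++w) {
        for (std::set<int>::const_iterator s = w->second.begin();
             s != w->second.end(); ++s) {
            std::pair<int, int> key(w->first, *s);
            // assert stands in for PLERROR: a key must never be seen twice.
            assert(ids.find(key) == ids.end());
            ids[key] = next_id++;
        }
    }
    return ids;
}
```

Because both containers iterate in ascending key order, the ids depend only on the contents of the map, not on insertion order.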

bool PLearn::WordNetOntology::containsWord(string word) [inline]

Definition at line 315 of file WordNetOntology.h.

References words.

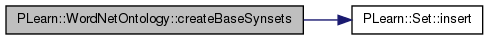

void PLearn::WordNetOntology::createBaseSynsets()

Definition at line 140 of file WordNetOntology.cc.

References ADJ_OFFSET, ADJ_SS_ID, ADV_OFFSET, ADV_SS_ID, BOS_OFFSET, BOS_SS_ID, PLearn::Node::children, EOS_OFFSET, EOS_SS_ID, PLearn::Node::gloss, PLearn::Node::hereiam, PLearn::Set::insert(), NOUN_OFFSET, NOUN_SS_ID, NUMERIC_OFFSET, NUMERIC_SS_ID, OOV_OFFSET, OOV_SS_ID, PLearn::Node::parents, PROPER_NOUN_OFFSET, PROPER_NOUN_SS_ID, PUNCTUATION_OFFSET, PUNCTUATION_SS_ID, ROOT_OFFSET, ROOT_SS_ID, STOP_OFFSET, STOP_SS_ID, SUPER_UNKNOWN_OFFSET, SUPER_UNKNOWN_SS_ID, PLearn::Node::syns, PLearn::Node::types, UNDEFINED_TYPE, VERB_OFFSET, and VERB_SS_ID.

{

// create ROOT synset

Node* root_node = new Node(ROOT_SS_ID);

root_node->syns.push_back("ROOT");

root_node->types.insert(UNDEFINED_TYPE);

root_node->gloss = "(root concept)";

root_node->hereiam = ROOT_OFFSET;

synsets[ROOT_SS_ID] = root_node;

//root_node->visited = true;

// create SUPER-UNKNOWN synset

Node* unk_node = new Node(SUPER_UNKNOWN_SS_ID);

unk_node->syns.push_back("SUPER_UNKNOWN");

unk_node->types.insert(UNDEFINED_TYPE);

unk_node->gloss = "(super-unknown concept)";

unk_node->hereiam = SUPER_UNKNOWN_OFFSET;

synsets[SUPER_UNKNOWN_SS_ID] = unk_node;

//unk_node->visited = true;

// link it <-> ROOT

unk_node->parents.insert(ROOT_SS_ID);

root_node->children.insert(SUPER_UNKNOWN_SS_ID);

// create OOV (out-of-vocabulary) synset

Node* oov_node = new Node(OOV_SS_ID);

oov_node->syns.push_back("OOV");

oov_node->types.insert(UNDEFINED_TYPE);

oov_node->gloss = "(out-of-vocabulary)";

oov_node->hereiam = OOV_OFFSET;

synsets[OOV_SS_ID] = oov_node;

//oov_node->visited = true;

// link it <-> SUPER-UNKNOWN

oov_node->parents.insert(SUPER_UNKNOWN_SS_ID);

unk_node->children.insert(OOV_SS_ID);

// create PROPER_NOUN, NUMERIC, PUNCTUATION, BOS, EOS and STOP synsets

Node* proper_node = new Node(PROPER_NOUN_SS_ID);

proper_node->syns.push_back("PROPER NOUN");

proper_node->types.insert(UNDEFINED_TYPE);

proper_node->gloss = "(proper noun)";

proper_node->hereiam = PROPER_NOUN_OFFSET;

synsets[PROPER_NOUN_SS_ID] = proper_node;

//proper_node->visited = true;

Node* num_node = new Node(NUMERIC_SS_ID);

num_node->syns.push_back("NUMERIC");

num_node->types.insert(UNDEFINED_TYPE);

num_node->gloss = "(numeric)";

num_node->hereiam = NUMERIC_OFFSET;

synsets[NUMERIC_SS_ID] = num_node;

//num_node->visited = true;

Node* punct_node = new Node(PUNCTUATION_SS_ID);

punct_node->syns.push_back("PUNCTUATION");

punct_node->types.insert(UNDEFINED_TYPE);

punct_node->gloss = "(punctuation)";

punct_node->hereiam = PUNCTUATION_OFFSET;

synsets[PUNCTUATION_SS_ID] = punct_node;

//punct_node->visited = true;

Node* stop_node = new Node(STOP_SS_ID);

stop_node->syns.push_back("STOP");

stop_node->types.insert(UNDEFINED_TYPE);

stop_node->gloss = "(stop)";

stop_node->hereiam = STOP_OFFSET;

synsets[STOP_SS_ID] = stop_node;

Node* bos_node = new Node(BOS_SS_ID);

bos_node->syns.push_back("BOS");

bos_node->types.insert(UNDEFINED_TYPE);

bos_node->gloss = "(BOS)";

bos_node->hereiam = BOS_OFFSET;

synsets[BOS_SS_ID] = bos_node;

Node* eos_node = new Node(EOS_SS_ID);

eos_node->syns.push_back("EOS");

eos_node->types.insert(UNDEFINED_TYPE);

eos_node->gloss = "(EOS)";

eos_node->hereiam = EOS_OFFSET;

synsets[EOS_SS_ID] = eos_node;

// link them <-> SUPER-UNKNOWN

proper_node->parents.insert(SUPER_UNKNOWN_SS_ID);

unk_node->children.insert(PROPER_NOUN_SS_ID);

num_node->parents.insert(SUPER_UNKNOWN_SS_ID);

unk_node->children.insert(NUMERIC_SS_ID);

punct_node->parents.insert(SUPER_UNKNOWN_SS_ID);

unk_node->children.insert(PUNCTUATION_SS_ID);

stop_node->parents.insert(SUPER_UNKNOWN_SS_ID);

unk_node->children.insert(STOP_SS_ID);

bos_node->parents.insert(SUPER_UNKNOWN_SS_ID);

unk_node->children.insert(BOS_SS_ID);

eos_node->parents.insert(SUPER_UNKNOWN_SS_ID);

unk_node->children.insert(EOS_SS_ID);

// create NOUN, VERB, ADJECTIVE and ADVERB synsets

Node* noun_node = new Node(NOUN_SS_ID);

noun_node->syns.push_back("NOUN");

noun_node->types.insert(UNDEFINED_TYPE);

noun_node->gloss = "(noun concept)";

noun_node->hereiam = NOUN_OFFSET;

synsets[NOUN_SS_ID] = noun_node;

//noun_node->visited = true;

Node* verb_node = new Node(VERB_SS_ID);

verb_node->syns.push_back("VERB");

verb_node->types.insert(UNDEFINED_TYPE);

verb_node->gloss = "(verb concept)";

verb_node->hereiam = VERB_OFFSET;

synsets[VERB_SS_ID] = verb_node;

//verb_node->visited = true;

Node* adj_node = new Node(ADJ_SS_ID);

adj_node->syns.push_back("ADJECTIVE");

adj_node->types.insert(UNDEFINED_TYPE);

adj_node->gloss = "(adjective concept)";

adj_node->hereiam = ADJ_OFFSET;

synsets[ADJ_SS_ID] = adj_node;

//adj_node->visited = true;

Node* adv_node = new Node(ADV_SS_ID);

adv_node->syns.push_back("ADVERB");

adv_node->types.insert(UNDEFINED_TYPE);

adv_node->gloss = "(adverb concept)";

adv_node->hereiam = ADV_OFFSET;

synsets[ADV_SS_ID] = adv_node;

//adv_node->visited = true;

// link them <-> ROOT

noun_node->parents.insert(ROOT_SS_ID);

root_node->children.insert(NOUN_SS_ID);

verb_node->parents.insert(ROOT_SS_ID);

root_node->children.insert(VERB_SS_ID);

adj_node->parents.insert(ROOT_SS_ID);

root_node->children.insert(ADJ_SS_ID);

adv_node->parents.insert(ROOT_SS_ID);

root_node->children.insert(ADV_SS_ID);

}
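The function above builds a fixed two-level skeleton: ROOT has five children (SUPER_UNKNOWN, NOUN, VERB, ADJECTIVE, ADVERB), and SUPER_UNKNOWN has seven (OOV, PROPER NOUN, NUMERIC, PUNCTUATION, STOP, BOS, EOS). The sketch below reproduces that shape with a small helper instead of repeated blocks. The `BaseId` values, `BaseNode`, and both functions are hypothetical; the real `*_SS_ID` constants are defined elsewhere in PLearn and may differ.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>

// Placeholder ids for this example only.
enum BaseId {
    ID_ROOT, ID_SUPER_UNKNOWN, ID_NOUN, ID_VERB, ID_ADJ, ID_ADV,
    ID_OOV, ID_PROPER_NOUN, ID_NUMERIC, ID_PUNCTUATION, ID_STOP, ID_BOS, ID_EOS
};

struct BaseNode {
    std::string name;
    std::set<int> parents;
    std::set<int> children;
};

typedef std::map<int, BaseNode> BaseGraph;

// Creates node `id` and links it to `parent_id` in both directions.
// Pass -1 as parent_id for the root.
void addBaseNode(BaseGraph& g, int id, const std::string& name, int parent_id) {
    g[id].name = name;
    if (parent_id < 0) return;
    assert(g.count(parent_id) == 1);  // the parent must already exist
    g[id].parents.insert(parent_id);
    g[parent_id].children.insert(id);
}

BaseGraph makeBaseGraph() {
    BaseGraph g;
    addBaseNode(g, ID_ROOT, "ROOT", -1);
    addBaseNode(g, ID_SUPER_UNKNOWN, "SUPER_UNKNOWN", ID_ROOT);
    addBaseNode(g, ID_NOUN, "NOUN", ID_ROOT);
    addBaseNode(g, ID_VERB, "VERB", ID_ROOT);
    addBaseNode(g, ID_ADJ, "ADJECTIVE", ID_ROOT);
    addBaseNode(g, ID_ADV, "ADVERB", ID_ROOT);
    addBaseNode(g, ID_OOV, "OOV", ID_SUPER_UNKNOWN);
    addBaseNode(g, ID_PROPER_NOUN, "PROPER NOUN", ID_SUPER_UNKNOWN);
    addBaseNode(g, ID_NUMERIC, "NUMERIC", ID_SUPER_UNKNOWN);
    addBaseNode(g, ID_PUNCTUATION, "PUNCTUATION", ID_SUPER_UNKNOWN);
    addBaseNode(g, ID_STOP, "STOP", ID_SUPER_UNKNOWN);
    addBaseNode(g, ID_BOS, "BOS", ID_SUPER_UNKNOWN);
    addBaseNode(g, ID_EOS, "EOS", ID_SUPER_UNKNOWN);
    return g;
}
```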

void PLearn::WordNetOntology::detectWordsWithoutOntology()

Definition at line 2489 of file WordNetOntology.cc.

References PLearn::Set::isEmpty() and PLWARNING.

Referenced by main().

{

for (map<int, Set>::iterator it = word_to_senses.begin(); it != word_to_senses.end(); ++it)

{

int word_id = it->first;

Set senses = it->second;

if (senses.isEmpty())

PLWARNING("word %d (%s) has no attached ontology", word_id, words[word_id].c_str());

}

}

void PLearn::WordNetOntology::extract(string voc_file, int wn_pos_type)

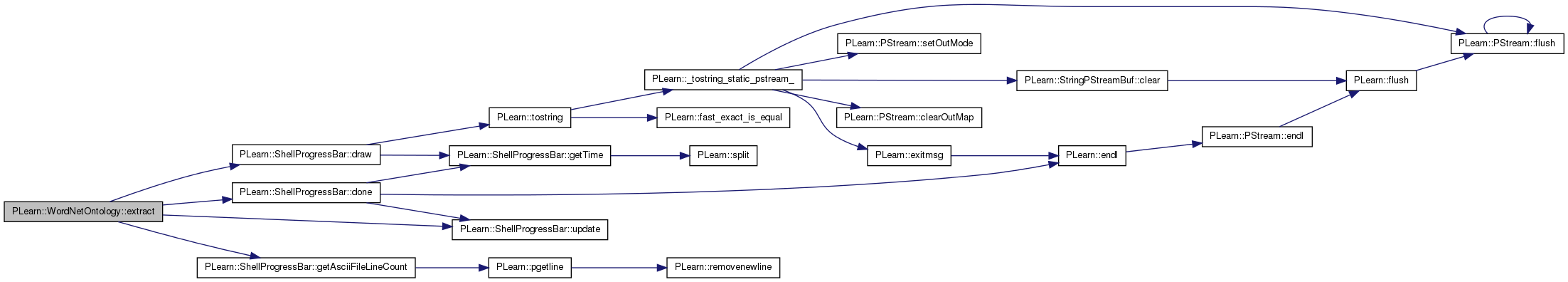

Definition at line 282 of file WordNetOntology.cc.

References PLearn::ShellProgressBar::done(), PLearn::ShellProgressBar::draw(), PLearn::ShellProgressBar::getAsciiFileLineCount(), and PLearn::ShellProgressBar::update().

{

int n_lines = ShellProgressBar::getAsciiFileLineCount(voc_file);

ShellProgressBar progress(0, n_lines - 1, "extracting ontology", 50);

progress.draw();

ifstream input_if(voc_file.c_str());

string word;

// Read one entry per line; the loop stops at end-of-file or on any read error

// (testing eof() alone would loop forever if the file could not be opened)

while (getline(input_if, word, '\n'))

{

if (word == "") continue;

if (word[0] == '#' && word[1] == '#') continue;

extractWord(word, wn_pos_type, true, true, false);

progress.update(word_index);

}

input_if.close();

progress.done();

finalize();

}
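The filtering rule used when reading the vocabulary (skip empty lines and lines beginning with `##`) can be exercised on its own. The sketch below is a minimal, self-contained version that reads from any `std::istream`; `readVocabulary` is a hypothetical helper, and it only collects the entries instead of calling `extractWord`.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Returns the vocabulary entries found in `in`, one per line, skipping
// blank lines and lines that start with "##".
std::vector<std::string> readVocabulary(std::istream& in) {
    std::vector<std::string> entries;
    std::string line;
    // getline's return value is false at end-of-file and on read errors.
    while (std::getline(in, line)) {
        if (line.empty()) continue;
        if (line.compare(0, 2, "##") == 0) continue;
        entries.push_back(line);
    }
    return entries;
}
```

A line with a single `#` is kept, since only the two-character prefix marks a comment.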

void PLearn::WordNetOntology::extractAncestors(Node * node, Set ancestors, int level, int level_threshold)

Definition at line 1517 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::end(), PLearn::extractAncestors(), PLearn::Set::insert(), and PLearn::Node::parents.

{

for (SetIterator it = node->parents.begin(); it != node->parents.end(); ++it)

{

ancestors.insert(*it);

if (level_threshold == -1 || level < level_threshold)

extractAncestors(synsets[*it], ancestors, level + 1, level_threshold);

}

}
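The effect of `level_threshold` is easiest to see on a small chain. The sketch below mirrors the recursion with standard containers; `ParentMap` and `collectAncestors` are hypothetical names, and the result set is passed by reference here because the listing does not show how PLearn's `Set` shares its contents when passed by value.

```cpp
#include <cassert>
#include <map>
#include <set>

typedef std::map<int, std::set<int> > ParentMap;  // synset id -> parent ids

// Collects the ancestors of `id`. `level` is the depth of the parents being
// added (1 for direct parents); recursion continues while level is below
// level_threshold, or without limit when level_threshold is -1.
void collectAncestors(const ParentMap& parents, int id, int level,
                      int level_threshold, std::set<int>& out) {
    assert(level >= 1);
    ParentMap::const_iterator node = parents.find(id);
    if (node == parents.end()) return;  // no parents recorded
    for (std::set<int>::const_iterator p = node->second.begin();
         p != node->second.end(); ++p) {
        out.insert(*p);
        if (level_threshold == -1 || level < level_threshold)
            collectAncestors(parents, *p, level + 1, level_threshold, out);
    }
}
```

On the chain 4 → 3 → 2 → 1, starting at node 4 with `level = 1`, a threshold of 1 yields only the direct parent, a threshold of 2 adds the grandparent, and -1 climbs to the top.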

void PLearn::WordNetOntology::extractAncestors(Node * node, Set ancestors, int word_coverage_threshold)

Definition at line 1491 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::end(), PLearn::extractAncestors(), PLearn::Set::insert(), and PLearn::Node::parents.

{

/*

int ss_id = node->ss_id;

if (word_coverage_threshold == -1 || synset_to_word_descendants[ss_id].size() < word_coverage_threshold)

{

ancestors.insert(ss_id);

for (SetIterator it = node->parents.begin(); it != node->parents.end(); ++it)

{

extractAncestors(synsets[*it], ancestors, word_coverage_threshold);

}

}

*/

for (SetIterator it = node->parents.begin(); it != node->parents.end(); ++it)

{

int ss_id = *it;

if (word_coverage_threshold == -1 || synset_to_word_descendants[ss_id].size() < word_coverage_threshold)

{

ancestors.insert(ss_id);

extractAncestors(synsets[ss_id], ancestors, word_coverage_threshold);

}

}

}
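In this variant the climb stops at the first parent whose word coverage reaches the threshold, and that parent is not added. A minimal sketch of the rule, assuming the coverage of each synset is available as a plain count (in the listing it is the size of `synset_to_word_descendants[ss_id]`); `CoverageMap` and `collectAncestorsByCoverage` are hypothetical names.

```cpp
#include <cassert>
#include <map>
#include <set>

typedef std::map<int, std::set<int> > ParentMap;  // synset id -> parent ids
typedef std::map<int, int> CoverageMap;           // synset id -> number of word descendants

// Adds a parent, and keeps climbing through it, only while the parent covers
// fewer than `threshold` words. A threshold of -1 disables the cut.
void collectAncestorsByCoverage(const ParentMap& parents, const CoverageMap& coverage,
                                int id, int threshold, std::set<int>& out) {
    assert(threshold >= -1);
    ParentMap::const_iterator node = parents.find(id);
    if (node == parents.end()) return;  // no parents recorded
    for (std::set<int>::const_iterator p = node->second.begin();
         p != node->second.end(); ++p) {
        CoverageMap::const_iterator c = coverage.find(*p);
        int n_words = (c == coverage.end()) ? 0 : c->second;  // unknown synset: no words
        if (threshold == -1 || n_words < threshold) {
            out.insert(*p);
            collectAncestorsByCoverage(parents, coverage, *p, threshold, out);
        }
    }
}
```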

void PLearn::WordNetOntology::extractAncestors(int threshold, bool cut_with_word_coverage, bool exclude_itself)

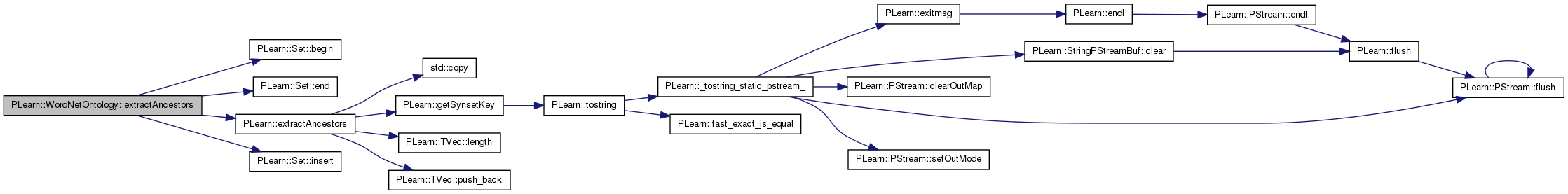

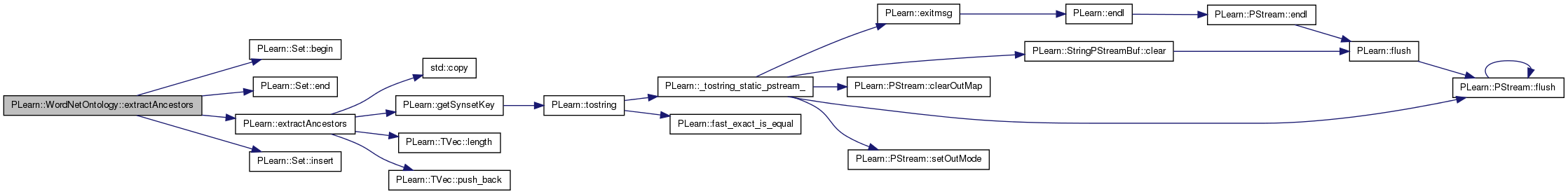

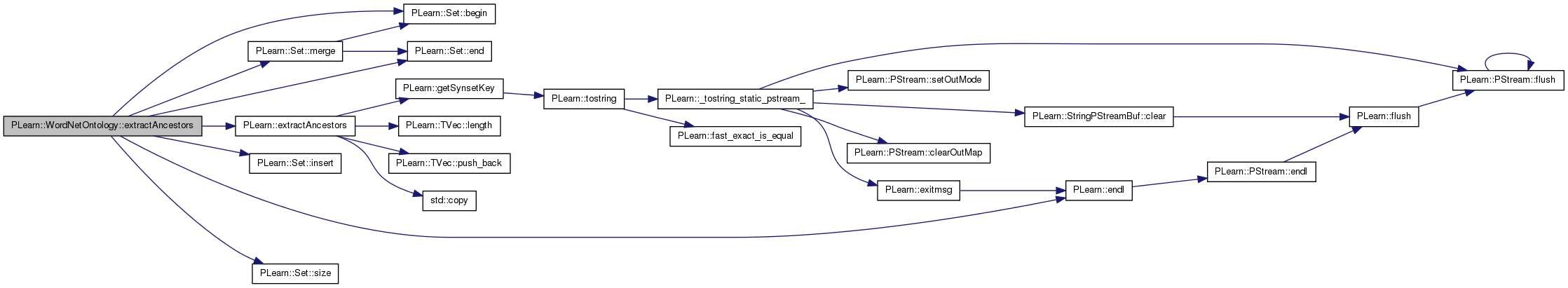

Definition at line 1435 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::end(), PLearn::endl(), PLearn::extractAncestors(), PLearn::Set::insert(), PLearn::Set::merge(), and PLearn::Set::size().

Referenced by PLearn::GraphicalBiText::compute_nodemap().

{

#ifdef VERBOSE

cout << "extracting ancestors... ";

#endif

if (cut_with_word_coverage && !are_descendants_extracted)

{

cout << "*** I need to extract descendants before I can extract ancestors with a word coverage threshold ***" << endl;

extractDescendants();

}

// synsets -> ancestors

int n_sense_ancestors = 0;

for (map<int, Node*>::iterator it = synsets.begin(); it != synsets.end(); ++it)

{

int ss = it->first;

Node* node = it->second;

Set ancestors;

if (cut_with_word_coverage)

extractAncestors(node, ancestors, threshold);

else

extractAncestors(node, ancestors, 1, threshold);

if (!exclude_itself)

ancestors.insert(ss);

synset_to_ancestors[ss] = ancestors;

n_sense_ancestors += ancestors.size();

}

are_ancestors_extracted = true;

// words -> ancestors

int n_word_ancestors = 0;

for (map<int, Set>::iterator it = word_to_senses.begin(); it != word_to_senses.end(); ++it)

{

int word_id = it->first;

Set senses = it->second;

Set word_ancestors;

for (SetIterator sit = senses.begin(); sit != senses.end(); ++sit)

{

int sense_id = *sit;

Set ancestors = getSynsetAncestors(sense_id);

word_ancestors.merge(ancestors);

word_ancestors.insert(sense_id);

}

word_to_ancestors[word_id] = word_ancestors;

n_word_ancestors += word_ancestors.size();

}

#ifdef VERBOSE

cout << "(" << n_sense_ancestors << " sense ancestors, " << n_word_ancestors << " word ancestors)" << endl;

#endif

}
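The second loop above defines a word's ancestors as the union of the ancestors of each of its senses, plus the senses themselves. A small sketch of that union with standard sets follows; `IdSetMap` and `wordAncestors` are hypothetical names, and `std::set` stands in for PLearn's `Set`.

```cpp
#include <cassert>
#include <map>
#include <set>

typedef std::map<int, std::set<int> > IdSetMap;

// Union of the ancestors of every sense of a word, plus the senses themselves.
std::set<int> wordAncestors(const std::set<int>& senses,
                            const IdSetMap& synset_to_ancestors) {
    std::set<int> result;
    for (std::set<int>::const_iterator s = senses.begin(); s != senses.end(); ++s) {
        IdSetMap::const_iterator a = synset_to_ancestors.find(*s);
        if (a != synset_to_ancestors.end())
            result.insert(a->second.begin(), a->second.end());  // merge
        result.insert(*s);                                      // the sense itself
    }
    return result;
}
```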

void PLearn::WordNetOntology::extractDescendants(Node * node, Set sense_descendants, Set word_descendants)

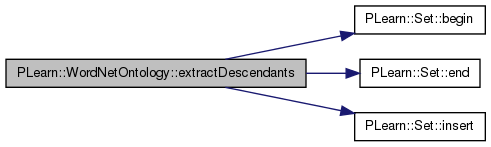

Definition at line 1859 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Node::children, PLearn::Set::end(), PLearn::Set::insert(), and PLearn::Node::ss_id.

{

int ss_id = node->ss_id;

if (isSense(ss_id)) // is a sense

{

sense_descendants.insert(ss_id);

for (SetIterator it = sense_to_words[ss_id].begin(); it != sense_to_words[ss_id].end(); ++it)

{

int word_id = *it;

word_descendants.insert(word_id);

}

}

for (SetIterator it = node->children.begin(); it != node->children.end(); ++it)

{

extractDescendants(synsets[*it], sense_descendants, word_descendants);

}

}
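The traversal above collects two things at once: every sense reachable through `children` (including the starting node when it is itself a sense) and every word attached to those senses. The sketch below shows the same walk on standard containers. It assumes a synset counts as a sense exactly when it has words attached, which may not match what `isSense()` checks; `MiniOntology` and the two functions are hypothetical.

```cpp
#include <cassert>
#include <map>
#include <set>

typedef std::map<int, std::set<int> > IdSetMap;

struct MiniOntology {
    IdSetMap children;        // synset id -> child synset ids
    IdSetMap sense_to_words;  // synset id -> ids of the words attached to it
};

// Returns the set stored under `id`, or an empty set when there is none.
const std::set<int>& lookup(const IdSetMap& m, int id) {
    static const std::set<int> empty;
    IdSetMap::const_iterator it = m.find(id);
    return it == m.end() ? empty : it->second;
}

// Depth-first walk that gathers senses and their words below (and at) `id`.
void collectDescendants(const MiniOntology& o, int id,
                        std::set<int>& senses, std::set<int>& words) {
    const std::set<int>& attached = lookup(o.sense_to_words, id);
    if (!attached.empty()) {  // assumption: "is a sense" means "has words"
        senses.insert(id);
        words.insert(attached.begin(), attached.end());
    }
    const std::set<int>& kids = lookup(o.children, id);
    for (std::set<int>::const_iterator k = kids.begin(); k != kids.end(); ++k)
        collectDescendants(o, *k, senses, words);
}
```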

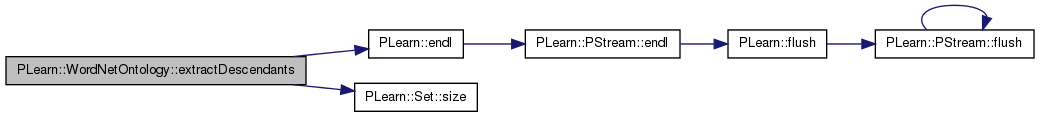

void PLearn::WordNetOntology::extractDescendants()

Definition at line 1833 of file WordNetOntology.cc.

References PLearn::endl() and PLearn::Set::size().

{

#ifdef VERBOSE

cout << "extracting descendants... ";

#endif

int n_sense_descendants = 0;

int n_word_descendants = 0;

for (map<int, Node*>::iterator it = synsets.begin(); it != synsets.end(); ++it)

{

Set sense_descendants;

Set word_descendants;

extractDescendants(it->second, sense_descendants, word_descendants);

synset_to_sense_descendants[it->first] = sense_descendants;

synset_to_word_descendants[it->first] = word_descendants;

n_sense_descendants += sense_descendants.size();

n_word_descendants += word_descendants.size();

}

are_descendants_extracted = true;

#ifdef VERBOSE

cout << "(" << n_sense_descendants << " senses, " << n_word_descendants << " words)" << endl;

#endif

}

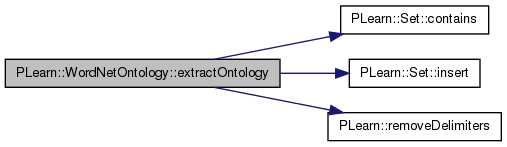

Node * PLearn::WordNetOntology::extractOntology(SynsetPtr ssp)

Definition at line 729 of file WordNetOntology.cc.

References PLearn::Node::children, PLearn::Set::contains(), PLearn::Node::fnum, PLearn::Node::gloss, PLearn::Node::hereiam, PLearn::Set::insert(), PLearn::Node::is_unknown, PLearn::Node::parents, PLearn::removeDelimiters(), PLearn::Node::ss_id, and PLearn::Node::syns.

{

Node* node = new Node(synset_index++); // increment synset counter

node->syns = getSynsetWords(ssp);

string defn = ssp->defn;

removeDelimiters(defn, "*", "%");

removeDelimiters(defn, "|", "/");

node->gloss = defn;

node->hereiam = ssp->hereiam;

node->fnum = ssp->fnum;

node->is_unknown = false;

synsets[node->ss_id] = node;

ssp = ssp->ptrlist;

while (ssp != NULL)

{

Node* parent_node = checkForAlreadyExtractedSynset(ssp);

if (parent_node == NULL) // create new synset Node

{

parent_node = extractOntology(ssp);

}

if (parent_node->ss_id != node->ss_id && !(node->children.contains(parent_node->ss_id))) // avoid cycles (that are in fact due to errors in the WordNet database)

{

node->parents.insert(parent_node->ss_id);

parent_node->children.insert(node->ss_id);

}

ssp = ssp->nextss;

}

return node;

}
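The guard before linking a parent rejects two cases: a node listed as its own parent, and a parent that is already recorded as a child of the node. A minimal sketch of that check, using hypothetical names (`Hierarchy`, `linkParent`) and standard containers:

```cpp
#include <cassert>
#include <map>
#include <set>

typedef std::map<int, std::set<int> > IdSetMap;

struct Hierarchy {
    IdSetMap parents;   // synset id -> parent ids
    IdSetMap children;  // synset id -> child ids
};

// Links `parent` above `node` in both directions unless that would create a
// self-loop or a direct two-node cycle. Returns true when the link was added.
bool linkParent(Hierarchy& h, int node, int parent) {
    if (parent == node) return false;  // self-loop
    IdSetMap::const_iterator kids = h.children.find(node);
    if (kids != h.children.end() && kids->second.count(parent) != 0)
        return false;                  // parent is already a child of node
    h.parents[node].insert(parent);
    h.children[parent].insert(node);
    return true;
}
```

As written, the condition only inspects the node's direct children, so a cycle that passes through three or more synsets would not be caught by this check alone.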

void PLearn::WordNetOntology::extractPredominentSyntacticClasses()

Definition at line 2579 of file WordNetOntology.cc.

{

for (map<int, Set>::iterator it = word_to_senses.begin(); it != word_to_senses.end(); ++it)

{

int word_id = it->first;

word_to_predominent_pos[word_id] = getPredominentSyntacticClassForWord(word_id);

}

are_predominent_pos_extracted = true;

}

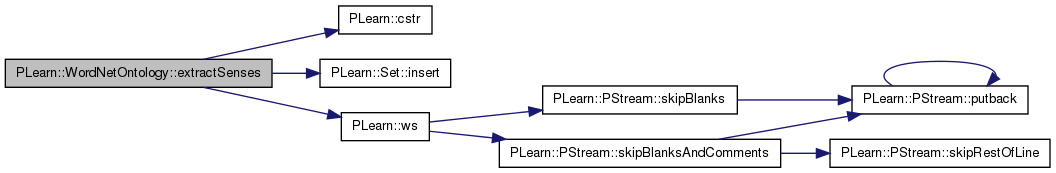

bool PLearn::WordNetOntology::extractSenses(string original_word, string processed_word, int wn_pos_type)

Definition at line 604 of file WordNetOntology.cc.

References ADJ_TYPE, ADV_TYPE, PLearn::cstr(), PLearn::Set::insert(), NOUN_TYPE, PLearn::Node::ss_id, PLearn::Node::types, VERB_TYPE, and PLearn::ws().

{

//char* cword = const_cast<char*>(processed_word.c_str());

char* cword = cstr(processed_word);

SynsetPtr ssp = NULL;

IndexPtr idx = getindex(cword, wn_pos_type);

switch (wn_pos_type)

{

case NOUN_TYPE:

ssp = findtheinfo_ds(cword, NOUN, -HYPERPTR, ALLSENSES);

break;

case VERB_TYPE:

ssp = findtheinfo_ds(cword, VERB, -HYPERPTR, ALLSENSES);

break;

case ADJ_TYPE:

ssp = findtheinfo_ds(cword, ADJ, -HYPERPTR, ALLSENSES);

break;

case ADV_TYPE:

ssp = findtheinfo_ds(cword, ADV, -HYPERPTR, ALLSENSES);

break;

}

if (ssp == NULL)

{

return false;

} else

{

switch (wn_pos_type)

{

case NOUN_TYPE:

noun_count++;

break;

case VERB_TYPE:

verb_count++;

break;

case ADJ_TYPE:

adj_count++;

break;

case ADV_TYPE:

adv_count++;

break;

}

int wnsn = 0;

// extract all senses for a given word

while (ssp != NULL)

{

wnsn++;

Node* node = checkForAlreadyExtractedSynset(ssp);

if (node == NULL) // not found

{

switch (wn_pos_type)

{

case NOUN_TYPE:

noun_sense_count++;

break;

case VERB_TYPE:

verb_sense_count++;

break;

case ADJ_TYPE:

adj_sense_count++;

break;

case ADV_TYPE:

adv_sense_count++;

break;

}

// create a new sense (first-level synset Node)

node = extractOntology(ssp);

}

int word_id = words_id[original_word];

node->types.insert(wn_pos_type);

word_to_senses[word_id].insert(node->ss_id);

sense_to_words[node->ss_id].insert(word_id);

char *charsk = WNSnsToStr(idx, wnsn);

string sense_key(charsk);

pair<int, string> ss(word_id,sense_key);

if (sense_key_to_ss_id.find(ss) == sense_key_to_ss_id.end())

sense_key_to_ss_id[ss] = node->ss_id;

pair<int, int> ws(word_id, node->ss_id);

//cout << sense_key << "word_id: " << word_id << "synset " << node->ss_id << endl;

// e.g. green%1:13:00:: and greens%1:13:00::

// correspond to the same synset

if (ws_id_to_sense_key.find(ws) == ws_id_to_sense_key.end())

ws_id_to_sense_key[ws] = sense_key;

// warning: a sense could be pushed twice into the *_wnsn vectors below; this is not checked

// (it should not happen if the vocabulary contains only unique values)

switch(wn_pos_type)

{

case NOUN_TYPE:

word_to_noun_wnsn[word_id].push_back(node->ss_id);

word_to_noun_senses[word_id].insert(node->ss_id);

break;

case VERB_TYPE:

word_to_verb_wnsn[word_id].push_back(node->ss_id);

word_to_verb_senses[word_id].insert(node->ss_id);

break;

case ADJ_TYPE:

word_to_adj_wnsn[word_id].push_back(node->ss_id);

word_to_adj_senses[word_id].insert(node->ss_id);

break;

case ADV_TYPE:

word_to_adv_wnsn[word_id].push_back(node->ss_id);

word_to_adv_senses[word_id].insert(node->ss_id);

break;

}

ssp = ssp->nextss;

}

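// NOTE: ssp is NULL at this point (the loop above ran to the end of the list),

// so this call releases nothing; freeing the list would require keeping its head.

free_syns(ssp);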

return true;

}

}

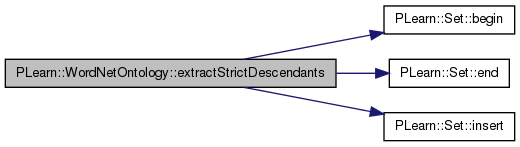

void PLearn::WordNetOntology::extractStrictDescendants(Node * node, Set sense_descendants, Set word_descendants)

Definition at line 1878 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Node::children, PLearn::Set::end(), PLearn::Set::insert(), and PLearn::Node::ss_id.

{

int ss_id = node->ss_id;

if (isSense(ss_id)){ // is a sense

for (SetIterator it = sense_to_words[ss_id].begin(); it != sense_to_words[ss_id].end(); ++it){

int word_id = *it;

word_descendants.insert(word_id);

}

}

for (SetIterator it = node->children.begin(); it != node->children.end(); ++it){

extractDescendants(synsets[*it], sense_descendants, word_descendants);

}

}

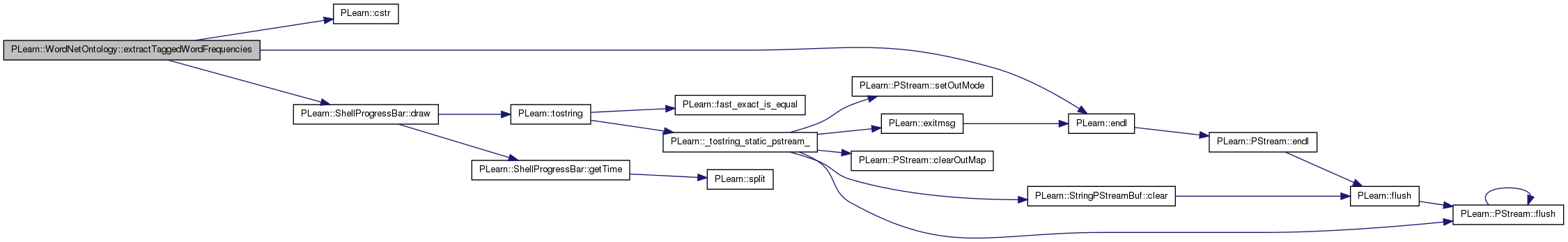

void PLearn::WordNetOntology::extractTaggedWordFrequencies(map< int, map< int, int > > & word_senses_to_tagged_frequencies)

Definition at line 489 of file WordNetOntology.cc.

References PLearn::cstr(), PLearn::ShellProgressBar::draw(), PLearn::endl(), i, and PLearn::Node::ss_id.

{

// NOTE: The 'word_senses_to_tagged_frequencies' is a map where the key to the

// map is a 'word_id' and the value associated with the key is another

// map. This other map takes a 'synset_id' as its key and associates

// a frequency value. Thus the data structure associates a frequency

// to a (word_id, synset_id) couple.

cout << "in WordNetOntology::extractTaggedWordFrequencies()" << endl;

vector<int> dbases;

dbases.reserve(4);

dbases.push_back(NOUN);

dbases.push_back(VERB);

dbases.push_back(ADJ);

dbases.push_back(ADV);

int dbases_size = dbases.size();

word_senses_to_tagged_frequencies.clear();

vector<string> syns;

string gloss;

long offset;

int fnum;

int total_senses_found = 0;

ShellProgressBar progress(0, words.size() * dbases_size, "[Extracting word-sense tagged frequencies]", 50);

progress.draw();

int ws2tf_i = 0;

// Go through all databases

for (int i = 0; i < dbases_size; ++i) {

// Go through all words in the ontology

for (map<int, string>::iterator w_it = words.begin(); w_it != words.end(); ++w_it) {

progress.update(++ws2tf_i);

char *cword = cstr(w_it->second);

wnresults.numforms = wnresults.printcnt = 0; // Useful??

SynsetPtr ssp = findtheinfo_ds(cword, dbases[i], -HYPERPTR, ALLSENSES);

if (ssp != NULL) {

IndexPtr idx;

SynsetPtr cursyn;

while ((idx = getindex(cword, dbases[i])) != NULL) {

cword = NULL;

if (idx->tagged_cnt) {

map<int, map<int, int> >::iterator ws2tf_it = word_senses_to_tagged_frequencies.find(w_it->first);

if (ws2tf_it == word_senses_to_tagged_frequencies.end()) {

word_senses_to_tagged_frequencies[w_it->first] = map<int, int>();

ws2tf_it = word_senses_to_tagged_frequencies.find(w_it->first);

}

//for (int l = 0; l < idx->tagged_cnt; ++l) {

for (int l = 0; l < idx->sense_cnt; ++l) {

if ((cursyn = read_synset(dbases[i], idx->offset[l], idx->wd)) != NULL) {

//int freq = GetTagcnt(idx, l + 1);

int freq = -1;

wnresults.OutSenseCount[wnresults.numforms]++;

// Find if synset is in ontology

//if (freq) {

// NOTE: We extract zero frequencies even though

// this is not useful...

syns = getSynsetWords(cursyn);

gloss = string(cursyn->defn);

offset = cursyn->hereiam;

fnum = cursyn->fnum;

Node *node = findSynsetFromSynsAndGloss(syns, gloss, offset, fnum);

if (node != NULL) {

(ws2tf_it->second)[node->ss_id] = freq;

++total_senses_found;

}

//}

free_synset(cursyn);

}

}

}

wnresults.numforms++;

free_index(idx);

} // while()

free_syns(ssp);

} // ssp != NULL

}

}

progress.done();

cout << "FOUND A GRAND TOTAL OF " << total_senses_found << " senses" << endl;

}

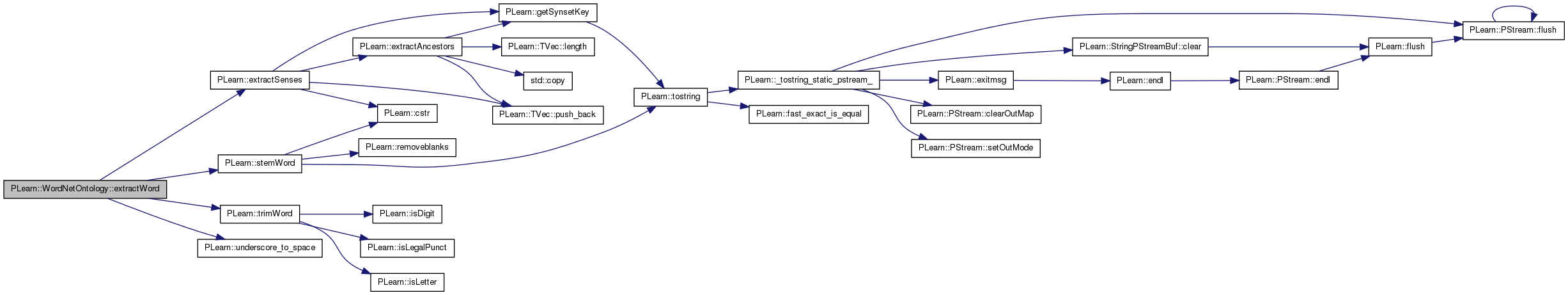

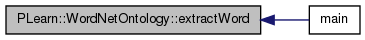

void PLearn::WordNetOntology::extractWord(string original_word, int wn_pos_type, bool trim_word, bool stem_word, bool remove_underscores)

Definition at line 383 of file WordNetOntology.cc.

References ADJ_TYPE, ADV_TYPE, ALL_WN_TYPE, PLearn::extractSenses(), NOUN_TYPE, NULL_TAG, PLWARNING, PLearn::stemWord(), PLearn::trimWord(), PLearn::underscore_to_space(), and VERB_TYPE.

Referenced by main().

{

bool found_noun = false;

bool found_verb = false;

bool found_adj = false;

bool found_adv = false;

bool found_stemmed_noun = false;

bool found_stemmed_verb = false;

bool found_stemmed_adj = false;

bool found_stemmed_adv = false;

bool found = false;

string processed_word = original_word;

string stemmed_word;

words[word_index] = original_word;

words_id[original_word] = word_index;

if (!catchSpecialTags(original_word))

{

if (trim_word)

processed_word = trimWord(original_word);

if (remove_underscores)

processed_word = underscore_to_space(processed_word);

if (processed_word == NULL_TAG)

{

out_of_wn_word_count++;

processUnknownWord(word_index);

word_is_in_wn[word_index] = false;

} else

{

if (wn_pos_type == NOUN_TYPE || wn_pos_type == ALL_WN_TYPE)

found_noun = extractSenses(original_word, processed_word, NOUN_TYPE);

if (wn_pos_type == VERB_TYPE || wn_pos_type == ALL_WN_TYPE)

found_verb = extractSenses(original_word, processed_word, VERB_TYPE);

if (wn_pos_type == ADJ_TYPE || wn_pos_type == ALL_WN_TYPE)

found_adj = extractSenses(original_word, processed_word, ADJ_TYPE);

if (wn_pos_type == ADV_TYPE || wn_pos_type == ALL_WN_TYPE)

found_adv = extractSenses(original_word, processed_word, ADV_TYPE);

if (stem_word)

{

if (wn_pos_type == NOUN_TYPE || wn_pos_type == ALL_WN_TYPE)

{

stemmed_word = stemWord(processed_word, NOUN);

if (stemmed_word != processed_word)

found_stemmed_noun = extractSenses(original_word, stemmed_word, NOUN_TYPE);

}

if (wn_pos_type == VERB_TYPE || wn_pos_type == ALL_WN_TYPE)

{

stemmed_word = stemWord(processed_word, VERB);

if (stemmed_word != processed_word)

found_stemmed_verb = extractSenses(original_word, stemmed_word, VERB_TYPE);

}

if (wn_pos_type == ADJ_TYPE || wn_pos_type == ALL_WN_TYPE)

{

stemmed_word = stemWord(processed_word, ADJ);

if (stemmed_word != processed_word)

found_stemmed_adj = extractSenses(original_word, stemmed_word, ADJ_TYPE);

}

if (wn_pos_type == ADV_TYPE || wn_pos_type == ALL_WN_TYPE)

{

stemmed_word = stemWord(processed_word, ADV);

if (stemmed_word != processed_word)

found_stemmed_adv = extractSenses(original_word, stemmed_word, ADV_TYPE);

}

}

found = (found_noun || found_verb || found_adj || found_adv ||

found_stemmed_noun || found_stemmed_verb || found_stemmed_adj || found_stemmed_adv);

if (found)

{

in_wn_word_count++;

word_is_in_wn[word_index] = true;

} else

{

out_of_wn_word_count++;

processUnknownWord(word_index);

word_is_in_wn[word_index] = false;

}

}

} else // word is a "special tag" (<OOV>, etc...)

{

out_of_wn_word_count++;

word_is_in_wn[word_index] = false;

}

if (word_to_senses[word_index].isEmpty())

PLWARNING("word %d (%s) was not processed correctly (found = %d)", word_index, words[word_index].c_str(), found);

word_index++;

}

void PLearn::WordNetOntology::extractWordHighLevelSenses(int noun_depth, int verb_depth, int adj_depth, int adv_depth, int unk_depth)

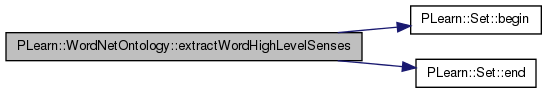

Definition at line 2652 of file WordNetOntology.cc.

References ADJ_SS_ID, ADV_SS_ID, PLearn::Set::begin(), PLearn::Set::end(), NOUN_SS_ID, SUPER_UNKNOWN_SS_ID, and VERB_SS_ID.

{

Set noun_categories;

getDescendantCategoriesAtLevel(NOUN_SS_ID, 0, noun_depth, noun_categories);

for (SetIterator sit = noun_categories.begin(); sit != noun_categories.end(); ++sit)

{

int ss_id = *sit;

Set word_descendants = getSynsetWordDescendants(ss_id);

for (SetIterator wit = word_descendants.begin(); wit != word_descendants.end(); ++wit)

{

int word_id = *wit;

word_to_high_level_senses[word_id].insert(ss_id);

}

}

Set verb_categories;

getDescendantCategoriesAtLevel(VERB_SS_ID, 0, verb_depth, verb_categories);

for (SetIterator sit = verb_categories.begin(); sit != verb_categories.end(); ++sit)

{

int ss_id = *sit;

Set word_descendants = getSynsetWordDescendants(ss_id);

for (SetIterator wit = word_descendants.begin(); wit != word_descendants.end(); ++wit)

{

int word_id = *wit;

word_to_high_level_senses[word_id].insert(ss_id);

}

}

Set adj_categories;

getDescendantCategoriesAtLevel(ADJ_SS_ID, 0, adj_depth, adj_categories);

for (SetIterator sit = adj_categories.begin(); sit != adj_categories.end(); ++sit)

{

int ss_id = *sit;

Set word_descendants = getSynsetWordDescendants(ss_id);

for (SetIterator wit = word_descendants.begin(); wit != word_descendants.end(); ++wit)

{

int word_id = *wit;

word_to_high_level_senses[word_id].insert(ss_id);

}

}

Set adv_categories;

getDescendantCategoriesAtLevel(ADV_SS_ID, 0, adv_depth, adv_categories);

for (SetIterator sit = adv_categories.begin(); sit != adv_categories.end(); ++sit)

{

int ss_id = *sit;

Set word_descendants = getSynsetWordDescendants(ss_id);

for (SetIterator wit = word_descendants.begin(); wit != word_descendants.end(); ++wit)

{

int word_id = *wit;

word_to_high_level_senses[word_id].insert(ss_id);

}

}

Set unk_categories;

getDescendantCategoriesAtLevel(SUPER_UNKNOWN_SS_ID, 0, unk_depth, unk_categories);

for (SetIterator sit = unk_categories.begin(); sit != unk_categories.end(); ++sit)

{

int ss_id = *sit;

Set word_descendants = getSynsetWordDescendants(ss_id);

for (SetIterator wit = word_descendants.begin(); wit != word_descendants.end(); ++wit)

{

int word_id = *wit;

word_to_high_level_senses[word_id].insert(ss_id);

}

}

// Formerly an integrity check: every word was expected to have at least one high-level sense.

// Now a fallback: words that received no high-level sense are given their ordinary senses.

for (map<int, string>::iterator it = words.begin(); it != words.end(); ++it)

{

int word_id = it->first;

if (word_to_high_level_senses[word_id].size() == 0)

word_to_high_level_senses[word_id] = word_to_senses[word_id];

// This is deprecated: PLWARNING("word '%s' (%d) has no high-level sense", words[word_id].c_str(), word_id);

}

are_word_high_level_senses_extracted = true;

}
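The five blocks above differ only in the starting synset and the depth; each one tags every word under a category with that category, and the final loop gives ordinary senses to words that received none. The sketch below factors those two steps into helpers. It does not reproduce `getDescendantCategoriesAtLevel`, whose behavior is not shown here, so the category set is taken as an input; the function names are hypothetical, and the fallback iterates over the keys of `word_to_senses` rather than over `words`.

```cpp
#include <cassert>
#include <map>
#include <set>

typedef std::map<int, std::set<int> > IdSetMap;

// Tags every word found under a category with that category's synset id.
void assignCategories(const std::set<int>& categories,
                      const IdSetMap& synset_to_word_descendants,
                      IdSetMap& word_to_high_level_senses) {
    for (std::set<int>::const_iterator c = categories.begin(); c != categories.end(); ++c) {
        IdSetMap::const_iterator d = synset_to_word_descendants.find(*c);
        if (d == synset_to_word_descendants.end()) continue;  // category covers no word
        for (std::set<int>::const_iterator w = d->second.begin(); w != d->second.end(); ++w)
            word_to_high_level_senses[*w].insert(*c);
    }
}

// Words left without any high-level sense fall back to their ordinary senses.
void fallBackToPlainSenses(const IdSetMap& word_to_senses,
                           IdSetMap& word_to_high_level_senses) {
    for (IdSetMap::const_iterator w = word_to_senses.begin(); w != word_to_senses.end(); ++w) {
        std::set<int>& high = word_to_high_level_senses[w->first];
        if (high.empty())
            high = w->second;
    }
}
```

With such helpers, the body of the function would reduce to one `assignCategories` call per part of speech followed by a single fallback pass.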

void PLearn::WordNetOntology::extractWordNounAndVerbHighLevelSenses(int noun_depth, int verb_depth)

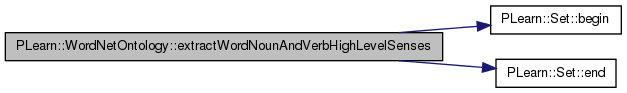

Definition at line 2728 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::end(), NOUN_SS_ID, and VERB_SS_ID.

{

for (map<int, string>::iterator it = words.begin(); it != words.end(); ++it)

{

int word_id = it->first;

word_to_high_level_senses[word_id] = word_to_adv_senses[word_id];

word_to_high_level_senses[word_id].merge(word_to_adj_senses[word_id]);

}

Set noun_categories;

getDescendantCategoriesAtLevel(NOUN_SS_ID, 0, noun_depth, noun_categories);

for (SetIterator sit = noun_categories.begin(); sit != noun_categories.end(); ++sit)

{

int ss_id = *sit;

Set word_descendants = getSynsetWordDescendants(ss_id);

for (SetIterator wit = word_descendants.begin(); wit != word_descendants.end(); ++wit)

{

int word_id = *wit;

word_to_high_level_senses[word_id].insert(ss_id);

}

}

Set verb_categories;

getDescendantCategoriesAtLevel(VERB_SS_ID, 0, verb_depth, verb_categories);

for (SetIterator sit = verb_categories.begin(); sit != verb_categories.end(); ++sit)

{

int ss_id = *sit;

Set word_descendants = getSynsetWordDescendants(ss_id);

for (SetIterator wit = word_descendants.begin(); wit != word_descendants.end(); ++wit)

{

int word_id = *wit;

word_to_high_level_senses[word_id].insert(ss_id);

}

}

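// BIG HACK!!! Merge in the senses that getDescendantCategoriesAtLevel() recorded, as a side effect, at levels shallower than the target.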

for (map<int, Set>::iterator it = word_to_under_target_level_high_level_senses.begin(); it != word_to_under_target_level_high_level_senses.end(); ++it)

{

word_to_high_level_senses[it->first].merge(it->second);

}

for (map<int, string>::iterator it = words.begin(); it != words.end(); ++it)

{

int word_id = it->first;

if (word_to_high_level_senses[word_id].size() == 0)

word_to_high_level_senses[word_id] = word_to_senses[word_id];

}

are_word_high_level_senses_extracted = true;

}
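Both high-level-sense extraction routines above end with the same fallback: any word left without a high-level sense receives its ordinary senses instead. The following is a minimal standalone sketch of that step, assuming `std::set` and `std::map` in place of the PLearn `Set` and the class members; `SenseSet`, `WordSenseMap` and `fillMissingHighLevelSenses` are hypothetical names used only for illustration.

```cpp
#include <map>
#include <set>

// Hypothetical stand-ins for the PLearn Set type and the word -> senses members.
using SenseSet = std::set<int>;
using WordSenseMap = std::map<int, SenseSet>;

// For each known word id, copy the ordinary senses into the high-level map
// when no high-level sense has been assigned to that word.
void fillMissingHighLevelSenses(const std::set<int>& word_ids,
                                const WordSenseMap& word_to_senses,
                                WordSenseMap& word_to_high_level_senses)
{
    for (int word_id : word_ids)
    {
        // operator[] creates an empty entry for unseen words, as in the listing.
        SenseSet& high_level = word_to_high_level_senses[word_id];
        if (!high_level.empty())
            continue;
        auto it = word_to_senses.find(word_id);
        if (it != word_to_senses.end())
            high_level = it->second;
    }
}
```

A word that has no ordinary senses either simply keeps an empty entry, which matches what the assignment in the listing would produce.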

| void PLearn::WordNetOntology::fillTempWordToHighLevelSensesTVecMap | ( | ) | [inline] |

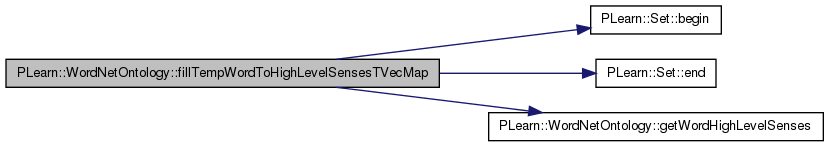

Definition at line 441 of file WordNetOntology.h.

References PLearn::Set::begin(), PLearn::Set::end(), getWordHighLevelSenses(), temp_word_to_high_level_senses, w, and words.

{

for (map<int, string>::iterator it = words.begin(); it != words.end(); ++it)

{

int w = it->first;

Set hl_senses = getWordHighLevelSenses(w);

for (SetIterator sit = hl_senses.begin(); sit != hl_senses.end(); ++sit)

temp_word_to_high_level_senses[w].push_back(*sit);

}

}

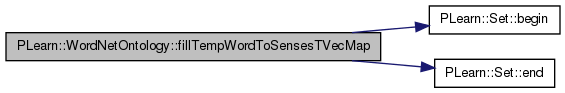

| void PLearn::WordNetOntology::fillTempWordToSensesTVecMap | ( | ) | [inline] |

Definition at line 396 of file WordNetOntology.h.

References PLearn::Set::begin(), PLearn::Set::end(), temp_word_to_adj_senses, temp_word_to_adv_senses, temp_word_to_noun_senses, temp_word_to_senses, temp_word_to_verb_senses, w, word_to_adj_senses, word_to_adv_senses, word_to_noun_senses, word_to_senses, and word_to_verb_senses.

Referenced by PLearn::GraphicalBiText::build_().

{

for (map<int, Set>::iterator it = word_to_senses.begin(); it != word_to_senses.end(); ++it)

{

int w = it->first;

Set senses = it->second;

for (SetIterator sit = senses.begin(); sit != senses.end(); ++sit)

temp_word_to_senses[w].push_back(*sit);

}

for (map<int, Set>::iterator it = word_to_noun_senses.begin(); it != word_to_noun_senses.end(); ++it)

{

int w = it->first;

Set senses = it->second;

for (SetIterator sit = senses.begin(); sit != senses.end(); ++sit)

temp_word_to_noun_senses[w].push_back(*sit);

}

for (map<int, Set>::iterator it = word_to_verb_senses.begin(); it != word_to_verb_senses.end(); ++it)

{

int w = it->first;

Set senses = it->second;

for (SetIterator sit = senses.begin(); sit != senses.end(); ++sit)

temp_word_to_verb_senses[w].push_back(*sit);

}

for (map<int, Set>::iterator it = word_to_adj_senses.begin(); it != word_to_adj_senses.end(); ++it)

{

int w = it->first;

Set senses = it->second;

for (SetIterator sit = senses.begin(); sit != senses.end(); ++sit)

temp_word_to_adj_senses[w].push_back(*sit);

}

for (map<int, Set>::iterator it = word_to_adv_senses.begin(); it != word_to_adv_senses.end(); ++it)

{

int w = it->first;

Set senses = it->second;

for (SetIterator sit = senses.begin(); sit != senses.end(); ++sit)

temp_word_to_adv_senses[w].push_back(*sit);

}

}

| void PLearn::WordNetOntology::finalize | ( | ) |

Definition at line 819 of file WordNetOntology.cc.

| Node * PLearn::WordNetOntology::findSynsetFromSynsAndGloss | ( | const vector< string > & | syns, |

| const string & | gloss, | ||

| const long | offset, | ||

| const int | fnum | ||

| ) |

Definition at line 478 of file WordNetOntology.cc.

References PLearn::Node::fnum, PLearn::Node::gloss, PLearn::Node::hereiam, and PLearn::Node::syns.

| Set PLearn::WordNetOntology::getAllCategories | ( | ) |

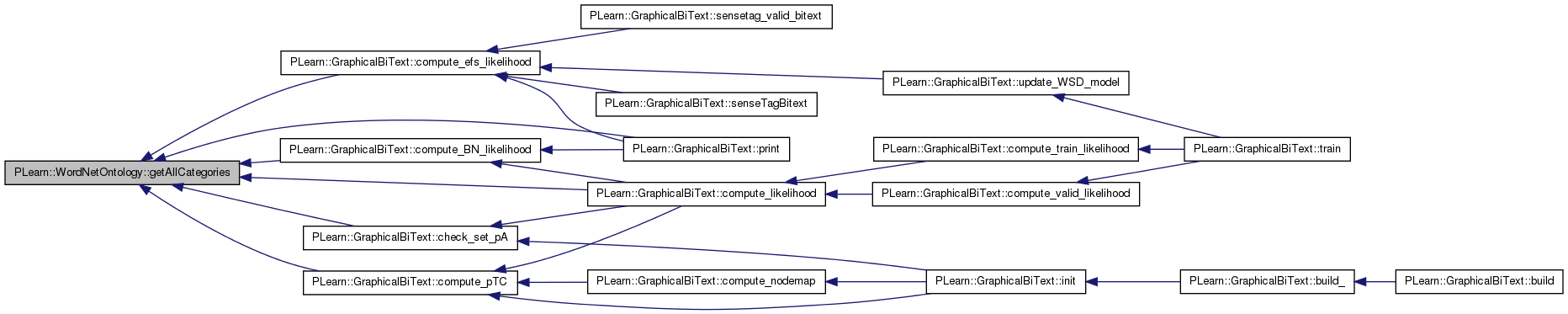

Definition at line 2035 of file WordNetOntology.cc.

References PLearn::Set::insert().

Referenced by PLearn::GraphicalBiText::check_set_pA(), PLearn::GraphicalBiText::compute_BN_likelihood(), PLearn::GraphicalBiText::compute_efs_likelihood(), PLearn::GraphicalBiText::compute_likelihood(), PLearn::GraphicalBiText::compute_pTC(), and PLearn::GraphicalBiText::print().

{

Set categories;

for (map<int, Node*>::iterator it = synsets.begin(); it != synsets.end(); ++it)

{

categories.insert(it->first);

}

return categories;

}

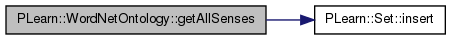

| Set PLearn::WordNetOntology::getAllSenses | ( | ) |

Definition at line 2025 of file WordNetOntology.cc.

References PLearn::Set::insert().

{

Set senses;

for (map<int, Set>::iterator it = sense_to_words.begin(); it != sense_to_words.end(); ++it)

{

senses.insert(it->first);

}

return senses;

}

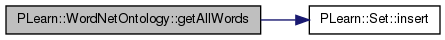

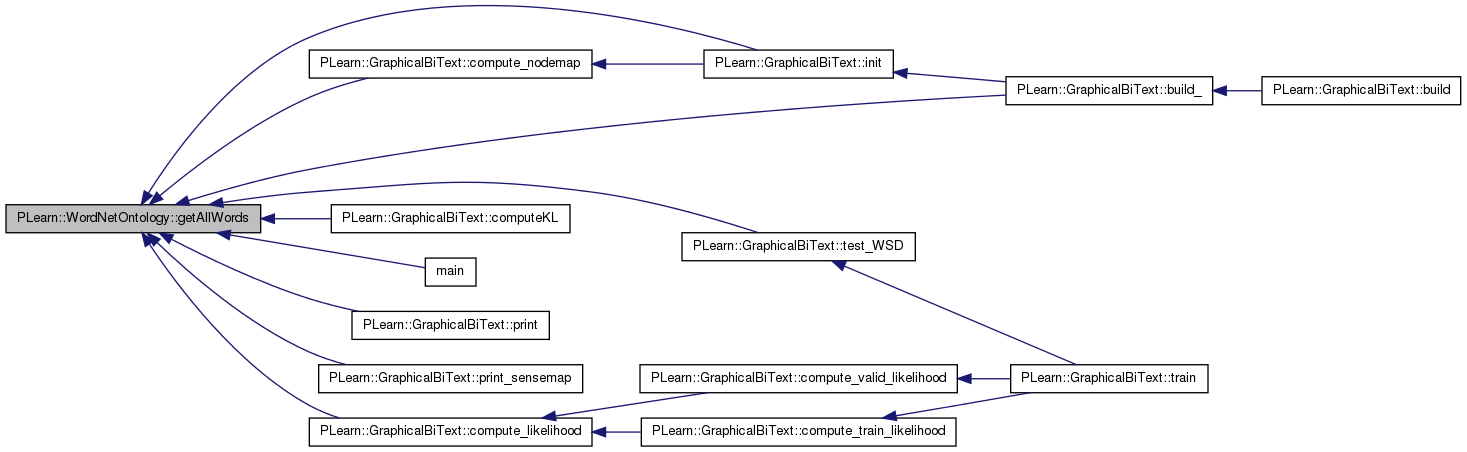

| Set PLearn::WordNetOntology::getAllWords | ( | ) | const |

Definition at line 2016 of file WordNetOntology.cc.

References PLearn::Set::insert().

Referenced by PLearn::GraphicalBiText::build_(), PLearn::GraphicalBiText::compute_likelihood(), PLearn::GraphicalBiText::compute_nodemap(), PLearn::GraphicalBiText::computeKL(), PLearn::GraphicalBiText::init(), main(), PLearn::GraphicalBiText::print(), PLearn::GraphicalBiText::print_sensemap(), and PLearn::GraphicalBiText::test_WSD().

{

Set all_words;

for (map<int, string>::const_iterator it = words.begin(); it != words.end(); ++it){

all_words.insert(it->first);

}

return all_words;

}

| void PLearn::WordNetOntology::getCategoriesAtLevel | ( | int | ss_id, |

| int | cur_level, | ||

| int | target_level, | ||

| set< int > & | categories | ||

| ) |

Definition at line 2193 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::end(), and PLearn::Node::parents.

{

Node* node = synsets[ss_id];

if (cur_level == target_level && !isTopLevelCategory(ss_id))

{

categories.insert(ss_id);

} else

{

for (SetIterator it = node->parents.begin(); it != node->parents.end(); ++it)

{

getCategoriesAtLevel(*it, cur_level + 1, target_level, categories);

}

}

}

| void PLearn::WordNetOntology::getCategoriesUnderLevel | ( | int | ss_id, |

| int | cur_level, | ||

| int | target_level, | ||

| Set | categories | ||

| ) |

Definition at line 2208 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::end(), PLearn::Set::insert(), and PLearn::Node::parents.

{

Node* node = synsets[ss_id];

if (!isTopLevelCategory(ss_id))

categories.insert(ss_id);

if (cur_level != target_level)

{

for (SetIterator it = node->parents.begin(); it != node->parents.end(); ++it)

getCategoriesUnderLevel(*it, cur_level + 1, target_level, categories);

}

}

| void PLearn::WordNetOntology::getDescendantCategoriesAtLevel | ( | int | ss_id, |

| int | cur_level, | ||

| int | target_level, | ||

| Set | categories | ||

| ) |

Definition at line 2627 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Node::children, PLearn::Set::end(), and PLearn::Set::insert().

{

if (isSynset(ss_id))

{

Node* node = synsets[ss_id];

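// WARNING: HERE IS A HUGE HACK!!! Senses reached before the target level (cur_level < target_level) are recorded, as a side effect, in word_to_under_target_level_high_level_senses.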

if (cur_level < target_level && isSense(ss_id))

{

Set words = sense_to_words[ss_id];

for (SetIterator wit = words.begin(); wit != words.end(); ++wit)

word_to_under_target_level_high_level_senses[*wit].insert(ss_id);

}

if (cur_level == target_level)

categories.insert(ss_id);

else

{

for (SetIterator it = node->children.begin(); it != node->children.end(); ++it)

getDescendantCategoriesAtLevel(*it, cur_level + 1, target_level, categories);

}

}

}
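The recursion above walks down the `children` links and keeps the ids found exactly `target_level` steps below the starting synset. Here is a small sketch of that traversal on a hypothetical miniature ontology. It deliberately omits the side effect flagged as a hack in the listing, and it passes the result set by reference because `std::set` has value semantics (the listing passes `Set` by value, which presumably works only if `Set` shares its storage). `ChildMap` and `descendantsAtLevel` are illustrative names.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <vector>

// Hypothetical miniature ontology: node id -> child ids.
// Every node, including leaves, must appear as a key.
using ChildMap = std::map<int, std::vector<int>>;

// Collect the ids located exactly (target_level - cur_level) steps below ss_id.
// Ids that are not in the map are ignored, mirroring the isSynset() guard.
void descendantsAtLevel(const ChildMap& children, int ss_id, int cur_level,
                        int target_level, std::set<int>& categories)
{
    assert(cur_level <= target_level);
    auto it = children.find(ss_id);
    if (it == children.end())
        return;
    if (cur_level == target_level)
    {
        categories.insert(ss_id);
        return;
    }
    for (int child : it->second)
        descendantsAtLevel(children, child, cur_level + 1, target_level, categories);
}
```

Branches that end before the target depth contribute nothing, so the result can be smaller than the number of leaves.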

| void PLearn::WordNetOntology::getDownToUpParentCategoriesAtLevel | ( | int | ss_id, |

| int | target_level, | ||

| Set | categories, | ||

| int | cur_level = 0 |

||

| ) |

Definition at line 2180 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::end(), PLearn::Set::insert(), and PLearn::Node::parents.

{

Node* node = synsets[ss_id];

if (cur_level == target_level && !isTopLevelCategory(ss_id))

{

categories.insert(ss_id);

} else

{

for (SetIterator it = node->parents.begin(); it != node->parents.end(); ++it)

getDownToUpParentCategoriesAtLevel(*it, target_level, categories, cur_level + 1);

}

}

Definition at line 451 of file WordNetOntology.h.

References temp_word_to_high_level_senses, and w.

{ return temp_word_to_high_level_senses[w]; }

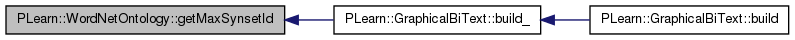

| int PLearn::WordNetOntology::getMaxSynsetId | ( | ) |

Definition at line 2500 of file WordNetOntology.cc.

Referenced by PLearn::GraphicalBiText::build_().

{

return synsets.rbegin()->first;

}

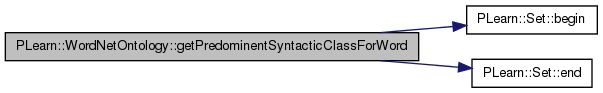

Definition at line 2535 of file WordNetOntology.cc.

References ADJ_TYPE, ADV_TYPE, PLearn::Set::begin(), PLearn::Set::end(), NOUN_TYPE, PLWARNING, UNDEFINED_TYPE, and VERB_TYPE.

{

#ifndef NOWARNING

if (!isWord(word_id))

PLWARNING("asking for a non-word id (%d)", word_id);

#endif

if (are_predominent_pos_extracted)

return word_to_predominent_pos[word_id];

int n_noun = 0;

int n_verb = 0;

int n_adj = 0;

int n_adv = 0;

Set senses = word_to_senses[word_id];

for (SetIterator it = senses.begin(); it != senses.end(); ++it)

{

int sense_id = *it;

int type = getSyntacticClassForSense(sense_id);

switch (type)

{

case NOUN_TYPE:

n_noun++;

break;

case VERB_TYPE:

n_verb++;

break;

case ADJ_TYPE:

n_adj++;

break;

case ADV_TYPE:

n_adv++;

}

}

if (n_noun == 0 && n_verb == 0 && n_adj == 0 && n_adv == 0)

return UNDEFINED_TYPE;

else if (n_noun >= n_verb && n_noun >= n_adj && n_noun >= n_adv)

return NOUN_TYPE;

else if (n_verb >= n_noun && n_verb >= n_adj && n_verb >= n_adv)

return VERB_TYPE;

else if (n_adj >= n_noun && n_adj >= n_verb && n_adj >= n_adv)

return ADJ_TYPE;

else

return ADV_TYPE;

}
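The chain of comparisons above resolves ties in a fixed order: noun first, then verb, then adjective, then adverb. The sketch below isolates that decision rule so the tie-breaking can be checked on its own. The enum is a hypothetical stand-in, since the values of `NOUN_TYPE` and the other constants are not shown in this listing.

```cpp
#include <cassert>

// Hypothetical stand-ins for UNDEFINED_TYPE, NOUN_TYPE, VERB_TYPE, ADJ_TYPE, ADV_TYPE.
enum PosType { UNDEFINED_POS, NOUN_POS, VERB_POS, ADJ_POS, ADV_POS };

// Return the part of speech with the highest sense count.
// Ties go to the earliest of noun, verb, adjective, adverb.
PosType predominantPos(int n_noun, int n_verb, int n_adj, int n_adv)
{
    assert(n_noun >= 0 && n_verb >= 0 && n_adj >= 0 && n_adv >= 0);
    if (n_noun == 0 && n_verb == 0 && n_adj == 0 && n_adv == 0)
        return UNDEFINED_POS;
    else if (n_noun >= n_verb && n_noun >= n_adj && n_noun >= n_adv)
        return NOUN_POS;
    else if (n_verb >= n_noun && n_verb >= n_adj && n_verb >= n_adv)
        return VERB_POS;
    else if (n_adj >= n_noun && n_adj >= n_verb && n_adj >= n_adv)
        return ADJ_POS;
    else
        return ADV_POS;
}
```

For example, a word with two noun senses and two verb senses is classified as a noun under this rule.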

| Node* PLearn::WordNetOntology::getRootSynset | ( | ) | [inline] |

Definition at line 285 of file WordNetOntology.h.

References ROOT_SS_ID, and synsets.

{ return synsets[ROOT_SS_ID]; }

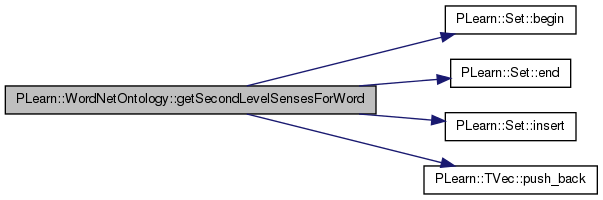

Definition at line 453 of file WordNetOntology.h.

References PLearn::Set::begin(), PLearn::Set::end(), PLearn::Set::insert(), PLearn::Node::parents, PLearn::TVec< T >::push_back(), synsets, w, and word_to_senses.

{

Set sl_senses;

Set senses = word_to_senses[w];

for (SetIterator sit = senses.begin(); sit != senses.end(); ++sit)

{

int s = *sit;

Node* node = synsets[s];

for (SetIterator ssit = node->parents.begin(); ssit != node->parents.end(); ++ssit)

{

sl_senses.insert(*ssit);

}

}

TVec<int> sl_senses_vec;

for (SetIterator slit = sl_senses.begin(); slit != sl_senses.end(); ++slit)

sl_senses_vec.push_back(*slit);

return sl_senses_vec;

}

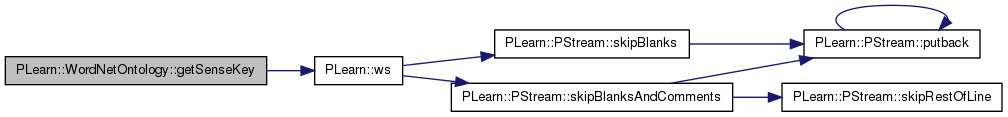

Definition at line 1630 of file WordNetOntology.cc.

References PLWARNING, and PLearn::ws().

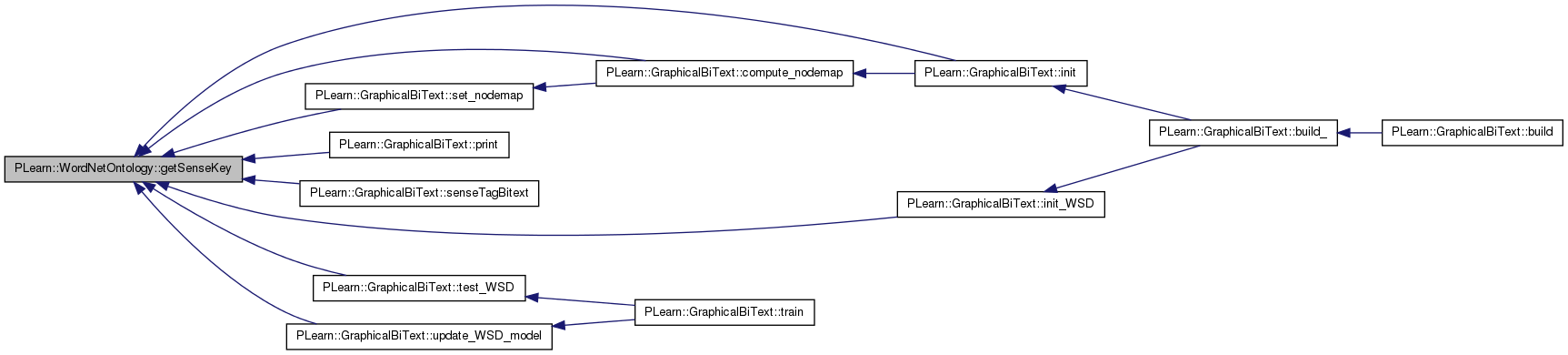

Referenced by PLearn::GraphicalBiText::compute_nodemap(), PLearn::GraphicalBiText::init(), PLearn::GraphicalBiText::init_WSD(), PLearn::GraphicalBiText::print(), PLearn::GraphicalBiText::senseTagBitext(), PLearn::GraphicalBiText::set_nodemap(), PLearn::GraphicalBiText::test_WSD(), and PLearn::GraphicalBiText::update_WSD_model().

{

pair<int, int> ws(word_id, ss_id);

if (ws_id_to_sense_key.find(ws) == ws_id_to_sense_key.end()){

PLWARNING("getSenseKey: can't find sense key word %d ss_id %d",word_id,ss_id);

return "";

}

return ws_id_to_sense_key.find(ws)->second;

}

Definition at line 439 of file WordNetOntology.h.

References temp_word_to_senses.

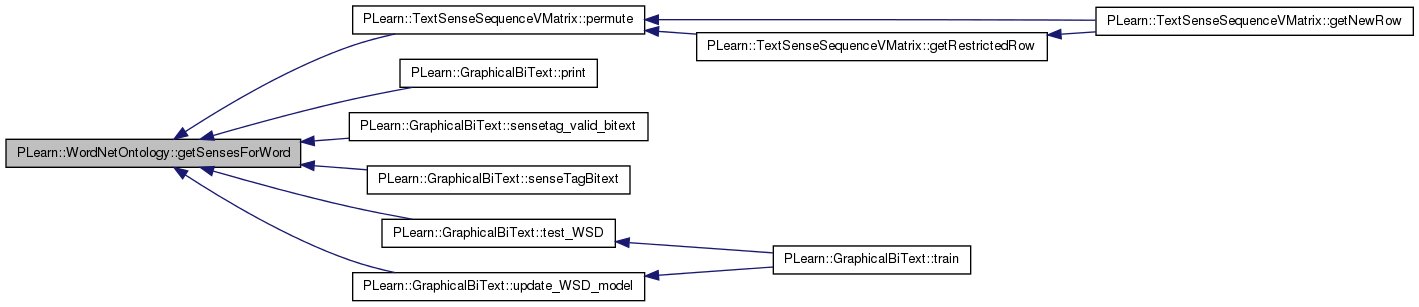

Referenced by PLearn::TextSenseSequenceVMatrix::permute(), PLearn::GraphicalBiText::print(), PLearn::GraphicalBiText::sensetag_valid_bitext(), PLearn::GraphicalBiText::senseTagBitext(), PLearn::GraphicalBiText::test_WSD(), and PLearn::GraphicalBiText::update_WSD_model().

{ return temp_word_to_senses.find(w)->second; }

| int PLearn::WordNetOntology::getSenseSize | ( | ) | [inline] |

Definition at line 290 of file WordNetOntology.h.

References sense_to_words.

Referenced by PLearn::GraphicalBiText::build_(), and PLearn::TextSenseSequenceVMatrix::build_().

{ return sense_to_words.size(); }

Definition at line 1781 of file WordNetOntology.cc.

References PLWARNING.

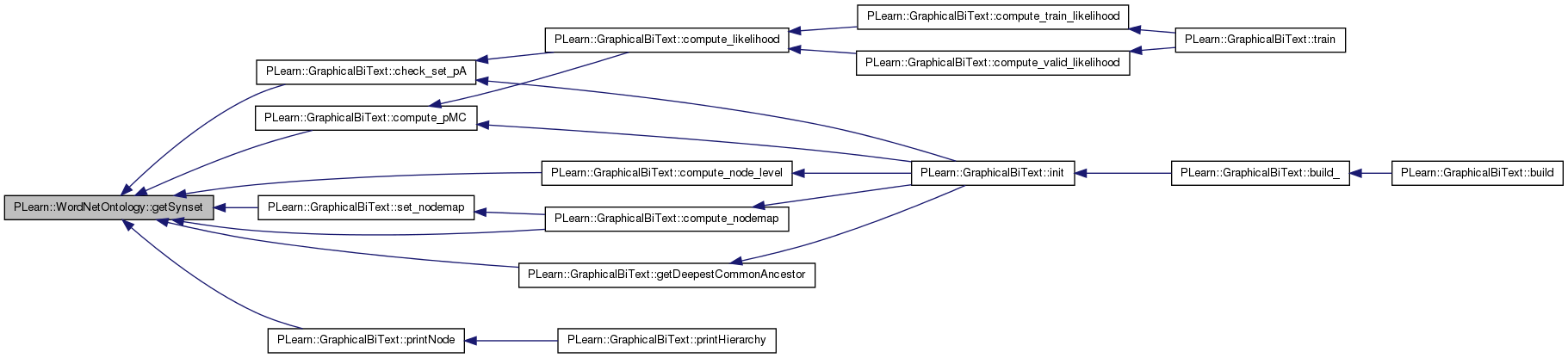

Referenced by PLearn::GraphicalBiText::check_set_pA(), PLearn::GraphicalBiText::compute_node_level(), PLearn::GraphicalBiText::compute_nodemap(), PLearn::GraphicalBiText::compute_pMC(), PLearn::GraphicalBiText::getDeepestCommonAncestor(), PLearn::GraphicalBiText::printNode(), and PLearn::GraphicalBiText::set_nodemap().

{

#ifndef NOWARNING

if (!isSynset(id))

{

PLWARNING("asking for a non-synset id (%d)", id);

return NULL;

}

#endif

#ifndef NOWARNING

if (synsets.find(id) == synsets.end()) {

PLWARNING("Asking for a non-existent synset id (%d)", id);

return NULL;

}

#endif

return synsets[id];

}

Definition at line 1558 of file WordNetOntology.cc.

References PLERROR, and PLWARNING.

{

if (are_ancestors_extracted)

{

if (!isSynset(id))

{

#ifndef NOWARNING

PLWARNING("asking for a non-synset id (%d)", id);

#endif

}

return synset_to_ancestors.find(id)->second;

} else

{

PLERROR("You must extract ancestors before calling getSynsetAncestors const ");

}

}

Definition at line 1527 of file WordNetOntology.cc.

References PLearn::extractAncestors(), and PLWARNING.

Referenced by PLearn::GraphicalBiText::compute_BN_likelihood(), PLearn::GraphicalBiText::compute_efs_likelihood(), and PLearn::GraphicalBiText::getDeepestCommonAncestor().

{

if (are_ancestors_extracted)

{

if (!isSynset(id))

{

#ifndef NOWARNING

PLWARNING("asking for a non-synset id (%d)", id);

#endif

}

return synset_to_ancestors[id];

} else

{

Set ancestors;

if (isSynset(id))

{

#ifndef NOWARNING

PLWARNING("using non-pre-computed version");

#endif

extractAncestors(synsets[id], ancestors, 1, max_level);

} else

{

#ifndef NOWARNING

PLWARNING("asking for a non-synset id (%d)", id);

#endif

}

return ancestors;

}

}

Definition at line 1641 of file WordNetOntology.cc.

Referenced by PLearn::GraphicalBiText::init(), PLearn::GraphicalBiText::init_WSD(), and PLearn::GraphicalBiText::test_WSD().

{

pair<int, string> ss(word_id,sense_key);

map< pair<int, string>, int>::const_iterator it = sense_key_to_ss_id.find(ss);

if(it == sense_key_to_ss_id.end())

return -1;

else

return it->second;

}

Definition at line 1576 of file WordNetOntology.cc.

Referenced by PLearn::GraphicalBiText::distribute_pS_on_ancestors().

{

return synsets[id]->parents;

}

Definition at line 1893 of file WordNetOntology.cc.

References PLWARNING.

{

if (are_descendants_extracted)

{

if (!isSynset(id))

{

#ifndef NOWARNING

PLWARNING("asking for a non-synset id (%d)", id);

#endif

}

return synset_to_sense_descendants[id];

}

Set sense_descendants;

if (isSynset(id))

{

#ifndef NOWARNING

PLWARNING("using non-pre-computed version");

#endif

extractDescendants(synsets[id], sense_descendants, Set());

} else

{

#ifndef NOWARNING

PLWARNING("asking for non-synset id (%d)", id);

#endif

}

return sense_descendants;

}

| int PLearn::WordNetOntology::getSynsetSize | ( | ) | [inline] |

Definition at line 1922 of file WordNetOntology.cc.

References PLWARNING.

Referenced by PLearn::GraphicalBiText::printNode().

{

if (are_descendants_extracted)

{

if (!isSynset(id))

{

#ifndef NOWARNING

PLWARNING("asking for a non-synset id (%d)", id);

#endif

}

return synset_to_word_descendants[id];

}

Set word_descendants;

if (isSynset(id))

{

#ifndef NOWARNING

PLWARNING("using non-pre-computed version");

#endif

extractDescendants(synsets[id], Set(), word_descendants);

} else

{

#ifndef NOWARNING

PLWARNING("asking for non-synset id (%d)", id);

#endif

}

return word_descendants;

}

| vector< string > PLearn::WordNetOntology::getSynsetWords | ( | SynsetPtr | ssp | ) |

Definition at line 1056 of file WordNetOntology.cc.

References i, and PLearn::removeDelimiters().

{

vector<string> syns;

for (int i = 0; i < ssp->wcount; i++)

{

strsubst(ssp->words[i], '_', ' ');

string word_i = ssp->words[i];

removeDelimiters(word_i, "*", "%");

removeDelimiters(word_i, "|", "/");

syns.push_back(word_i);

}

return syns;

}

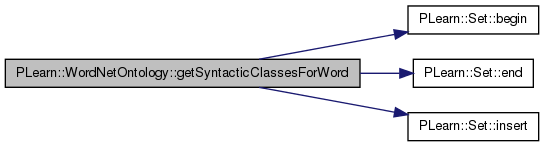

Definition at line 2505 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::end(), PLearn::Set::insert(), PLWARNING, and PLearn::Node::types.

{

#ifndef NOWARNING

if (!isWord(word_id))

PLWARNING("asking for a non-word id (%d)", word_id);

#endif

Set syntactic_classes;

Set senses = word_to_senses[word_id];

for (SetIterator it = senses.begin(); it != senses.end(); ++it)

{

Node* node = synsets[*it];

for (SetIterator tit = node->types.begin(); tit != node->types.end(); ++tit)

syntactic_classes.insert(*tit);

}

return syntactic_classes;

}

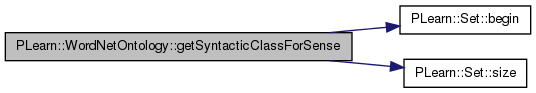

Definition at line 2522 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLWARNING, PLearn::Set::size(), and PLearn::Node::types.

{

#ifndef NOWARNING

if (!isSense(sense_id))

PLWARNING("asking for a non-sense id (%d)", sense_id);

#endif

Node* sense = synsets[sense_id];

if (sense->types.size() > 1)

PLWARNING("a sense has more than 1 POS type");

int type = *(sense->types.begin());

return type;

}

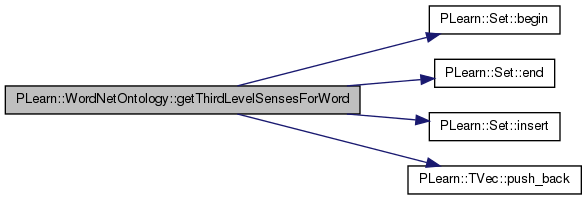

Definition at line 472 of file WordNetOntology.h.

References PLearn::Set::begin(), PLearn::Set::end(), PLearn::Set::insert(), PLearn::Node::parents, PLearn::TVec< T >::push_back(), synsets, w, and word_to_senses.

{

Set tl_senses;

Set senses = word_to_senses[w];

for (SetIterator sit = senses.begin(); sit != senses.end(); ++sit)

{

int s = *sit;

Node* node = synsets[s];

for (SetIterator slit = node->parents.begin(); slit != node->parents.end(); ++slit)

{

int sl_sense = *slit;

Node* node = synsets[sl_sense];

for (SetIterator tlit = node->parents.begin(); tlit != node->parents.end(); ++tlit)

{

tl_senses.insert(*tlit);

}

}

}

TVec<int> tl_senses_vec;

for (SetIterator tlit = tl_senses.begin(); tlit != tl_senses.end(); ++tlit)

tl_senses_vec.push_back(*tlit);

return tl_senses_vec;

}
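The listing above gathers, for every sense of a word, the parents of its parents, and returns them without duplicates. A minimal sketch of that two-step climb follows, assuming a plain `std::map` from node id to parent ids in place of `synsets` and `Node::parents`; `ParentMap` and `grandparentsOf` are hypothetical names.

```cpp
#include <map>
#include <set>
#include <vector>

// Hypothetical stand-in for the synset table: node id -> parent ids.
using ParentMap = std::map<int, std::set<int>>;

// Return the distinct grandparents of the given senses, in ascending order.
std::vector<int> grandparentsOf(const ParentMap& parents, const std::set<int>& senses)
{
    std::set<int> found;  // a set removes duplicates reached through several paths
    for (int sense : senses)
    {
        auto p = parents.find(sense);
        if (p == parents.end())
            continue;
        for (int parent : p->second)
        {
            auto gp = parents.find(parent);
            if (gp == parents.end())
                continue;
            found.insert(gp->second.begin(), gp->second.end());
        }
    }
    return std::vector<int>(found.begin(), found.end());
}
```

Unlike the listing, which indexes `synsets` directly, this sketch skips ids that are missing from the table rather than dereferencing them.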

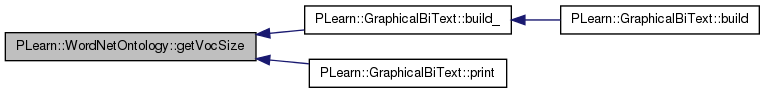

| int PLearn::WordNetOntology::getVocSize | ( | ) | [inline] |

Definition at line 289 of file WordNetOntology.h.

References words.

Referenced by PLearn::GraphicalBiText::build_(), and PLearn::GraphicalBiText::print().

{ return words.size(); }

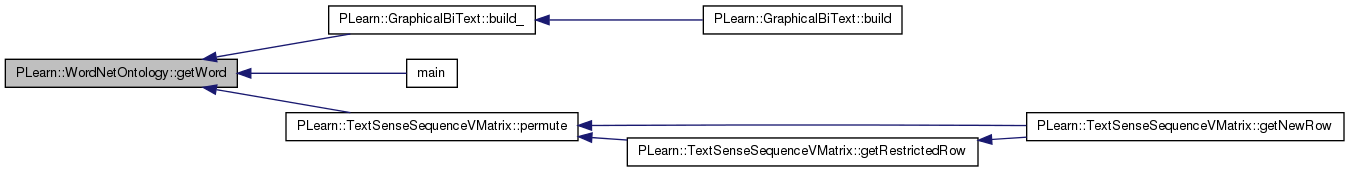

| string PLearn::WordNetOntology::getWord | ( | int | id | ) | const |

Definition at line 1676 of file WordNetOntology.cc.

References NULL_TAG, and PLWARNING.

Referenced by PLearn::GraphicalBiText::build_(), main(), and PLearn::TextSenseSequenceVMatrix::permute().

{

#ifndef NOWARNING

if (!isWord(id))

{

PLWARNING("asking for a non-word id (%d)", id);

return NULL_TAG;

}

#endif

return words.find(id)->second;

}

Definition at line 1745 of file WordNetOntology.cc.

References PLWARNING.

{

#ifndef NOWARNING

if (!isWord(id))

{

PLWARNING("asking for a non-word id (%d)", id);

return Set();

}

#endif

return word_to_adj_senses[id];

}

Definition at line 1757 of file WordNetOntology.cc.

References PLWARNING.

{

#ifndef NOWARNING

if (!isWord(id))

{

PLWARNING("asking for a non-word id (%d)", id);

return Set();

}

#endif

return word_to_adv_senses[id];

}

Definition at line 1581 of file WordNetOntology.cc.

References PLearn::Set::insert(), PLearn::Set::merge(), and PLWARNING.

{

if (are_ancestors_extracted)

{

if (!isWord(id))

{

#ifndef NOWARNING

PLWARNING("asking for a non-word id (%d)", id);

#endif

}

return word_to_ancestors[id];

} else

{

Set word_ancestors;

if (isWord(id))

{

#ifndef NOWARNING

PLWARNING("using non-pre-computed version");

#endif

for (SetIterator it = word_to_senses[id].begin(); it != word_to_senses[id].end(); ++it)

{

int sense_id = *it;

word_ancestors.insert(sense_id);

Set synset_ancestors = getSynsetAncestors(sense_id, max_level);

word_ancestors.merge(synset_ancestors);

}

} else

{

#ifndef NOWARNING

PLWARNING("asking for a non-word id");

#endif

}

return word_ancestors;

}

}

Definition at line 1705 of file WordNetOntology.cc.

References PLERROR, and PLWARNING.

Referenced by fillTempWordToHighLevelSensesTVecMap().

{

#ifndef NOWARNING

if (!isWord(id))

{

PLWARNING("asking for a non-word id (%d)", id);

return Set();

}

#endif

if (!are_word_high_level_senses_extracted)

PLERROR("word high-level senses have not been extracted");

return word_to_high_level_senses[id];

}

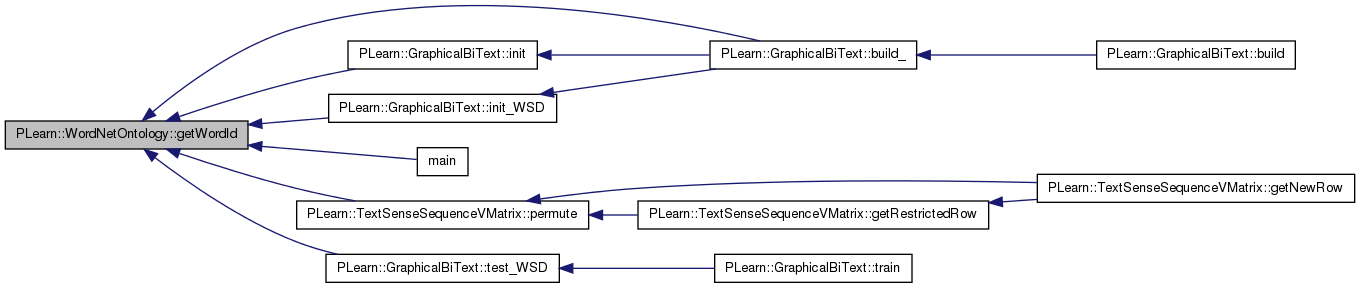

| int PLearn::WordNetOntology::getWordId | ( | string | word | ) | const |

Definition at line 1651 of file WordNetOntology.cc.

References OOV_TAG.

Referenced by PLearn::GraphicalBiText::build_(), PLearn::GraphicalBiText::init(), PLearn::GraphicalBiText::init_WSD(), main(), PLearn::TextSenseSequenceVMatrix::permute(), and PLearn::GraphicalBiText::test_WSD().

{

map<string, int>::const_iterator it = words_id.find(word);

if (it == words_id.end())

{

map<string, int>::const_iterator iit = words_id.find(OOV_TAG);

if (iit == words_id.end())

return -1;

else

return iit->second;

} else

{

return it->second;

}

// #ifndef NOWARNING

// if (words_id.find(word) == words_id.end())

// {

// PLWARNING("asking for a non-word (%s)", word.c_str());

// return -1;

// }

// #endif

// return words_id[word];

}
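`getWordId()` falls back to the id of the out-of-vocabulary tag when the word is unknown, and returns -1 only when that tag is missing as well. The sketch below reproduces this lookup on a plain `std::map`. The tag string `"<oov>"` is a made-up placeholder, since the actual value of `OOV_TAG` is not shown here, and `lookupWordId` is a hypothetical name.

```cpp
#include <map>
#include <string>

// Hypothetical placeholder; the real value of OOV_TAG is not shown in this listing.
const std::string kOovTag = "<oov>";

// Return the id of word, else the id of the OOV tag, else -1.
int lookupWordId(const std::map<std::string, int>& words_id, const std::string& word)
{
    auto it = words_id.find(word);
    if (it != words_id.end())
        return it->second;
    auto oov = words_id.find(kOovTag);
    return oov == words_id.end() ? -1 : oov->second;
}
```

Using `find()` instead of `operator[]` keeps the lookup const and avoids inserting unknown words into the vocabulary.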

Definition at line 1721 of file WordNetOntology.cc.

References PLWARNING.

Referenced by PLearn::GraphicalBiText::init().

{

#ifndef NOWARNING

if (!isWord(id))

{

PLWARNING("asking for a non-word id (%d)", id);

return Set();

}

#endif

return word_to_noun_senses[id];

}

| map<int,string> PLearn::WordNetOntology::getWords | ( | ) | [inline] |

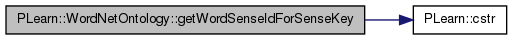

| int PLearn::WordNetOntology::getWordSenseIdForSenseKey | ( | string | lemma, |

| string | lexsn, | ||

| string | word | ||

| ) |

Definition at line 887 of file WordNetOntology.cc.

References PLearn::cstr(), PLearn::Node::fnum, PLearn::Node::gloss, PLearn::Node::hereiam, PLearn::Node::ss_id, PLearn::Node::syns, and WNO_ERROR.

Referenced by main().

{

string sense_key = lemma + "%" + lexsn;

char* csense_key = cstr(sense_key);

SynsetPtr ssp = GetSynsetForSense(csense_key);

if (ssp != NULL)

{

vector<string> synset_words = getSynsetWords(ssp);

string gloss = ssp->defn;

int word_id = words_id[word];

long offset = ssp->hereiam;

int fnum = ssp->fnum;

for (SetIterator it = word_to_senses[word_id].begin(); it != word_to_senses[word_id].end(); ++it)

{

Node* node = synsets[*it];

if (node->syns == synset_words && node->gloss == gloss && node->hereiam == offset && node->fnum == fnum)

return node->ss_id;

}

}

return WNO_ERROR;

}

Definition at line 826 of file WordNetOntology.cc.

References ADJ_TYPE, ADV_TYPE, NOUN_TYPE, PLWARNING, VERB_TYPE, and WNO_ERROR.

{

if (!isWord(word))

{

#ifndef NOWARNING

PLWARNING("asking for a non-word (%s)", word.c_str());

#endif

return WNO_ERROR;

}

int word_id = words_id[word];

switch (wn_pos_type)

{

case NOUN_TYPE:

if (wnsn > (int)word_to_noun_wnsn[word_id].size())

{

#ifndef NOWARNING

PLWARNING("invalid noun wnsn (%d)", wnsn);

#endif

return WNO_ERROR;

} else

return word_to_noun_wnsn[word_id][wnsn - 1];

break;

case VERB_TYPE:

if (wnsn > (int)word_to_verb_wnsn[word_id].size())

{

#ifndef NOWARNING

PLWARNING("invalid verb wnsn (%d)", wnsn);

#endif

return WNO_ERROR;

} else

return word_to_verb_wnsn[word_id][wnsn - 1];

break;

case ADJ_TYPE:

if (wnsn > (int)word_to_adj_wnsn[word_id].size())

{

#ifndef NOWARNING

PLWARNING("invalid adj wnsn (%d)", wnsn);

#endif

return WNO_ERROR;

} else

return word_to_adj_wnsn[word_id][wnsn - 1];

break;

case ADV_TYPE:

if (wnsn > (int)word_to_adv_wnsn[word_id].size())

{

#ifndef NOWARNING

PLWARNING("invalid adv wnsn (%d)", wnsn);

#endif

return WNO_ERROR;

} else

return word_to_adv_wnsn[word_id][wnsn - 1];

break;

default:

#ifndef NOWARNING

PLWARNING("undefined type");

#endif

return WNO_ERROR;

}

}
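Each branch of the switch above treats `wnsn` as a 1-based sense number and rejects values beyond the size of the per-word table. The listing checks only the upper bound, so a defensive variant might also reject values below 1. The following sketch shows that variant, assuming a `std::vector<int>` per word and using -1 as a hypothetical stand-in for `WNO_ERROR`.

```cpp
#include <vector>

// Hypothetical stand-in for WNO_ERROR, whose value is not shown in this listing.
const int kError = -1;

// Map a 1-based WordNet sense number to a sense id, or kError if out of range.
int senseForWnsn(const std::vector<int>& wnsn_to_sense, int wnsn)
{
    if (wnsn < 1 || wnsn > static_cast<int>(wnsn_to_sense.size()))
        return kError;
    return wnsn_to_sense[wnsn - 1];
}
```

With this helper, the four cases of the switch would differ only in which table they pass in.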

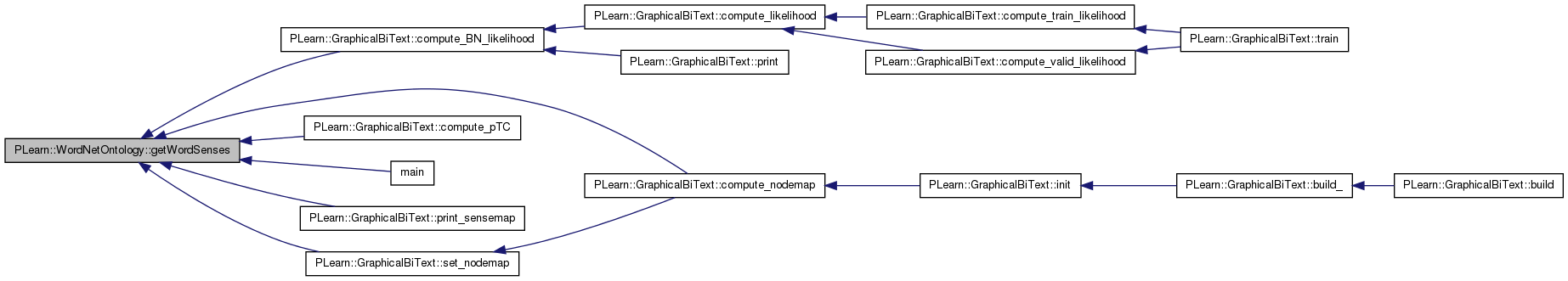

Definition at line 1688 of file WordNetOntology.cc.

References PLWARNING.

Referenced by PLearn::GraphicalBiText::compute_BN_likelihood(), PLearn::GraphicalBiText::compute_nodemap(), PLearn::GraphicalBiText::compute_pTC(), main(), PLearn::GraphicalBiText::print_sensemap(), and PLearn::GraphicalBiText::set_nodemap().

{

#ifndef NOWARNING

if (!isWord(id))

{

PLWARNING("asking for a non-word id (%d)", id);

return Set();

}

#endif

map<int, Set>::const_iterator it = word_to_senses.find(id);

if(it==word_to_senses.end()){

return Set();

}else{

return it->second;

}

}

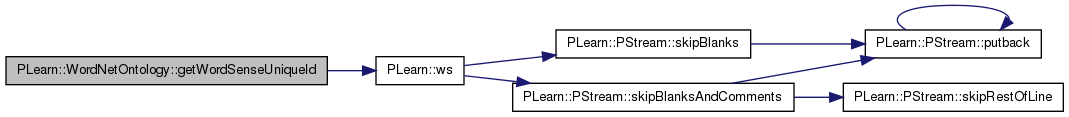

Definition at line 2778 of file WordNetOntology.cc.

References PLearn::ws().

{

if (!are_word_sense_unique_ids_computed)

computeWordSenseUniqueIds();

pair<int, int> ws(word, sense);

if (word_sense_to_unique_id.find(ws) == word_sense_to_unique_id.end())

return -1;

return word_sense_to_unique_id[ws];

}

| int PLearn::WordNetOntology::getWordSenseUniqueIdSize | ( | ) |

Definition at line 2807 of file WordNetOntology.cc.

{

if (!are_word_sense_unique_ids_computed)

computeWordSenseUniqueIds();

return (int)word_sense_to_unique_id.size();

}

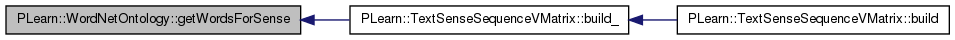

Definition at line 1769 of file WordNetOntology.cc.

References PLWARNING.

Referenced by PLearn::TextSenseSequenceVMatrix::build_().

{

#ifndef NOWARNING

if (!isSense(id))

{

PLWARNING("asking for a non-sense id (%d)", id);

return Set();

}

#endif

return sense_to_words[id];

}

| map<string,int> PLearn::WordNetOntology::getWordsId | ( | ) | [inline] |

Definition at line 1733 of file WordNetOntology.cc.

References PLWARNING.

{

#ifndef NOWARNING

if (!isWord(id))

{

PLWARNING("asking for a non-word id (%d)", id);

return Set();

}

#endif

return word_to_verb_senses[id];

}

Definition at line 352 of file WordNetOntology.cc.

References ADJ_TYPE, ADV_TYPE, PLearn::cstr(), NOUN_TYPE, and VERB_TYPE.

{

//char* cword = const_cast<char*>(word.c_str());

char* cword = cstr(word);

SynsetPtr ssp = NULL;

switch (wn_pos_type)

{

case NOUN_TYPE:

ssp = findtheinfo_ds(cword, NOUN, -HYPERPTR, ALLSENSES);

break;

case VERB_TYPE:

ssp = findtheinfo_ds(cword, VERB, -HYPERPTR, ALLSENSES);

break;

case ADJ_TYPE:

ssp = findtheinfo_ds(cword, ADJ, -HYPERPTR, ALLSENSES);

break;

case ADV_TYPE:

ssp = findtheinfo_ds(cword, ADV, -HYPERPTR, ALLSENSES);

break;

}

bool ssp_is_null = (ssp == NULL);

delete(cword);

free_syns(ssp);

return !ssp_is_null;

}

| void PLearn::WordNetOntology::init | ( | bool | differentiate_unknown_words = true | ) |

Definition at line 106 of file WordNetOntology.cc.

References EOS_SS_ID.

{

if (wninit() != 0) {

// PLERROR("WordNet init error"); (disabled: a failed wninit() is silently ignored)

}

noun_count = 0;

verb_count = 0;

adj_count = 0;

adv_count = 0;

synset_index = EOS_SS_ID + 1; // first synset id

word_index = 0;

unknown_sense_index = 0;

noun_sense_count = 0;

verb_sense_count = 0;

adj_sense_count = 0;

adv_sense_count = 0;

in_wn_word_count = 0;

out_of_wn_word_count = 0;

are_ancestors_extracted = false;

are_descendants_extracted = false;

are_predominent_pos_extracted = false;

are_word_high_level_senses_extracted = false;

are_word_sense_unique_ids_computed = false;

n_word_high_level_senses = 0;

differentiate_unknown_words = the_differentiate_unknown_words;

}

Definition at line 2078 of file WordNetOntology.cc.

References PLearn::Set::begin(), PLearn::Set::clear(), PLearn::Set::contains(), PLearn::Set::end(), and PLearn::Set::insert().

{

// for every "word -> ancestors" mapping, intersect "ancestors"

// with "categories"

for (map<int, Set>::iterator it = word_to_ancestors.begin(); it != word_to_ancestors.end(); ++it)

{

it->second.intersection(categories);

}

// for every "synset -> ancestors" mapping (where "synset" = "sense" U "category"),

// remove the whole mapping if "synset" (the key) is in neither "categories" nor "senses"

Set keys_to_be_removed;

for (map<int, Set>::iterator it = synset_to_ancestors.begin(); it != synset_to_ancestors.end(); ++it)

{

if (!categories.contains(it->first) && !senses.contains(it->first))

keys_to_be_removed.insert(it->first);

}

// purge synset_to_ancestors

for (SetIterator it = keys_to_be_removed.begin(); it != keys_to_be_removed.end(); ++it)

{

synset_to_ancestors.erase(*it);

synsets.erase(*it);

}

// for every remaining "synset -> ancestors" mapping (where "synset" = "sense" U "category"),

// intersect "ancestors" with "categories"

for (map<int, Set>::iterator it = synset_to_ancestors.begin(); it != synset_to_ancestors.end(); ++it)

{

it->second.intersection(categories);

}

// for every "word -> senses" mapping, intersect the mapped senses

// with the "senses" argument

for (map<int, Set>::iterator it = word_to_senses.begin(); it != word_to_senses.end(); ++it)

{

it->second.intersection(senses);

}

keys_to_be_removed->clear();

for (map<int, Set>::iterator it = sense_to_words.begin(); it != sense_to_words.end(); ++it)

{

if (!senses.contains(it->first))

keys_to_be_removed.insert(it->first);

}

for (SetIterator it = keys_to_be_removed.begin(); it != keys_to_be_removed.end(); ++it)

{

sense_to_words.erase(*it);

}

}
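The pruning routine above relies on two operations: intersecting a stored set with an allowed set, and removing map entries whose key is not allowed, with the keys collected first and erased afterwards so the map is not modified during iteration. A minimal sketch of both operations with standard containers follows. `IdSet`, `intersectInPlace` and `eraseKeysNotIn` are hypothetical names, and `std::set` stands in for the PLearn `Set`.

```cpp
#include <algorithm>
#include <iterator>
#include <map>
#include <set>
#include <vector>

using IdSet = std::set<int>;  // hypothetical stand-in for PLearn::Set

// Keep only the elements of target that also occur in keep.
void intersectInPlace(IdSet& target, const IdSet& keep)
{
    IdSet result;
    std::set_intersection(target.begin(), target.end(), keep.begin(), keep.end(),
                          std::inserter(result, result.begin()));
    target.swap(result);
}

// Remove every map entry whose key is not in keep. Keys are collected in a
// first pass and erased in a second pass, as in the listing.
void eraseKeysNotIn(std::map<int, IdSet>& table, const IdSet& keep)
{
    std::vector<int> doomed;
    for (const auto& entry : table)
        if (keep.count(entry.first) == 0)
            doomed.push_back(entry.first);
    for (int key : doomed)
        table.erase(key);
}
```

Applied to the listing, `intersectInPlace` corresponds to the `intersection()` calls on the mapped sets, and `eraseKeysNotIn` to the two `keys_to_be_removed` passes.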

Definition at line 1988 of file WordNetOntology.cc.

{

return isSynset(id);

}

| bool PLearn::WordNetOntology::isInWordNet | ( | string | word, |

| bool | trim_word = true, |

||

| bool | stem_word = true, |

||

| bool | remove_undescores = false |

||

| ) |

Definition at line 303 of file WordNetOntology.cc.

References ADJ_TYPE, ADV_TYPE, NOUN_TYPE, NULL_TAG, PLearn::stemWord(), PLearn::trimWord(), PLearn::underscore_to_space(), and VERB_TYPE.

Referenced by main().

{

if (trim_word)

word = trimWord(word);

if (remove_undescores)

word = underscore_to_space(word);

if (word == NULL_TAG)

{

return false;

} else

{

bool found_noun = hasSenseInWordNet(word, NOUN_TYPE);

bool found_verb = hasSenseInWordNet(word, VERB_TYPE);

bool found_adj = hasSenseInWordNet(word, ADJ_TYPE);

bool found_adv = hasSenseInWordNet(word, ADV_TYPE);

bool found_stemmed_noun = false;

bool found_stemmed_verb = false;

bool found_stemmed_adj = false;

bool found_stemmed_adv = false;

if (stem_word)

{

string stemmed_word = stemWord(word, NOUN);

if (stemmed_word != word)

found_stemmed_noun = hasSenseInWordNet(stemmed_word, NOUN_TYPE);

stemmed_word = stemWord(word, VERB);

if (stemmed_word != word)

found_stemmed_verb = hasSenseInWordNet(stemmed_word, VERB_TYPE);

stemmed_word = stemWord(word, ADJ);

if (stemmed_word != word)

found_stemmed_adj = hasSenseInWordNet(stemmed_word, ADJ_TYPE);

stemmed_word = stemWord(word, ADV);

if (stemmed_word != word)

found_stemmed_adv = hasSenseInWordNet(stemmed_word, ADV_TYPE);

}

if (found_noun || found_verb || found_adj || found_adv ||

found_stemmed_noun || found_stemmed_verb || found_stemmed_adj || found_stemmed_adv)

{

return true;

} else

{

return false;

}

}

}

Definition at line 1618 of file WordNetOntology.cc.

References PLWARNING.

{

#ifndef NOWARNING

if (!isWord(word_id))

{

PLWARNING("asking for a non-word id (%d)", word_id);

return false;

}

#endif

return word_is_in_wn.find(word_id)->second;

}

Definition at line 1993 of file WordNetOntology.cc.

Referenced by PLearn::GraphicalBiText::compute_pTC().

{

return (isCategory(id) && !isSense(id));

}

Definition at line 1983 of file WordNetOntology.cc.

Referenced by PLearn::GraphicalBiText::compute_pTC().

Definition at line 1978 of file WordNetOntology.cc.

Referenced by PLearn::GraphicalBiText::init(), and PLearn::GraphicalBiText::init_WSD().

{

return (sense_to_words.find(id) != sense_to_words.end());

}

Definition at line 1998 of file WordNetOntology.cc.

Referenced by PLearn::GraphicalBiText::compute_node_level(), PLearn::GraphicalBiText::compute_nodemap(), PLearn::GraphicalBiText::compute_pMC(), and PLearn::GraphicalBiText::set_nodemap().

Definition at line 2145 of file WordNetOntology.cc.

{

return synsets[id]->is_unknown;

}

Definition at line 2616 of file WordNetOntology.cc.

References ADJ_SS_ID, ADV_SS_ID, BOS_SS_ID, EOS_SS_ID, NOUN_SS_ID, NUMERIC_SS_ID, OOV_SS_ID, PROPER_NOUN_SS_ID, PUNCTUATION_SS_ID, ROOT_SS_ID, STOP_SS_ID, SUPER_UNKNOWN_SS_ID, UNDEFINED_SS_ID, and VERB_SS_ID.

{

return (ss_id == ROOT_SS_ID || ss_id == SUPER_UNKNOWN_SS_ID ||

ss_id == NOUN_SS_ID || ss_id == VERB_SS_ID ||

ss_id == ADJ_SS_ID || ss_id == ADV_SS_ID ||

ss_id == OOV_SS_ID || ss_id == PROPER_NOUN_SS_ID ||

ss_id == NUMERIC_SS_ID || ss_id == PUNCTUATION_SS_ID ||

ss_id == STOP_SS_ID || ss_id == UNDEFINED_SS_ID ||

ss_id == BOS_SS_ID || ss_id == EOS_SS_ID);

}

| bool PLearn::WordNetOntology::isWord | ( | string | word | ) |

Definition at line 1973 of file WordNetOntology.cc.

Definition at line 1968 of file WordNetOntology.cc.

Referenced by PLearn::GraphicalBiText::compute_likelihood(), PLearn::GraphicalBiText::init(), PLearn::GraphicalBiText::init_WSD(), PLearn::GraphicalBiText::sensetag_valid_bitext(), PLearn::GraphicalBiText::senseTagBitext(), PLearn::GraphicalBiText::test_WSD(), and PLearn::GraphicalBiText::update_WSD_model().

| bool PLearn::WordNetOntology::isWordUnknown | ( | string | word | ) |

Definition at line 2129 of file WordNetOntology.cc.

{

return isWordUnknown(words_id[word]);

}

| bool PLearn::WordNetOntology::isWordUnknown | ( | int | id | ) |

Definition at line 2134 of file WordNetOntology.cc.

{

bool is_unknown = true;

for (SetIterator it = word_to_senses[id].begin(); it != word_to_senses[id].end(); ++it)

{

if (!synsets[*it]->is_unknown)

is_unknown = false;

}

return is_unknown;

}
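In the listing above a word counts as unknown only when every one of its senses is flagged unknown; a word with no senses at all is therefore also unknown. The same rule can be written with an early exit and without `operator[]`, which would insert an empty entry for an unseen id. This is a sketch under assumptions: `ToySenseMap`, `ToyUnknownMap` and `toyIsWordUnknown` are hypothetical stand-ins for `word_to_senses` and the per-synset `is_unknown` flag, and senses missing from the flag map are treated as unknown.

```cpp
#include <cassert>
#include <map>
#include <set>

// Hypothetical stand-ins for the class members used in the listing.
typedef std::map<int, std::set<int> > ToySenseMap;  // word id  -> sense ids
typedef std::map<int, bool> ToyUnknownMap;          // sense id -> is_unknown

bool toyIsWordUnknown(const ToySenseMap& word_to_senses,
                      const ToyUnknownMap& sense_is_unknown,
                      int word_id)
{
    ToySenseMap::const_iterator w = word_to_senses.find(word_id);
    if (w == word_to_senses.end())
        return true; // no recorded senses: nothing is known about the word

    for (std::set<int>::const_iterator s = w->second.begin();
         s != w->second.end(); ++s)
    {
        ToyUnknownMap::const_iterator flag = sense_is_unknown.find(*s);
        if (flag != sense_is_unknown.end() && !flag->second)
            return false; // one known sense is enough to stop scanning
    }
    return true;
}
```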

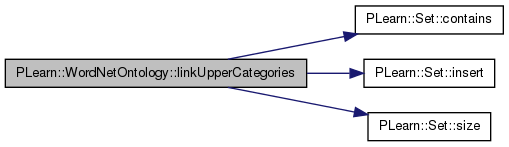

| void PLearn::WordNetOntology::linkUpperCategories | ( | ) |

Definition at line 974 of file WordNetOntology.cc.

References ADJ_SS_ID, ADJ_TYPE, ADV_SS_ID, ADV_TYPE, PLearn::Set::contains(), PLearn::Set::insert(), NOUN_SS_ID, NOUN_TYPE, PLearn::Node::parents, ROOT_SS_ID, PLearn::Set::size(), PLearn::Node::types, VERB_SS_ID, and VERB_TYPE.

{

for (map<int, Node*>::iterator it = synsets.begin(); it != synsets.end(); ++it)

{

int ss_id = it->first;

Node* node = it->second;

if (node->parents.size() == 0 && ss_id != ROOT_SS_ID)

{

bool link_directly_to_root = true;

if (node->types.contains(NOUN_TYPE))

{

node->parents.insert(NOUN_SS_ID);

synsets[NOUN_SS_ID]->children.insert(ss_id);

link_directly_to_root = false;

}

if (node->types.contains(VERB_TYPE))

{

node->parents.insert(VERB_SS_ID);

synsets[VERB_SS_ID]->children.insert(ss_id);

link_directly_to_root = false;

}

if (node->types.contains(ADJ_TYPE))

{

node->parents.insert(ADJ_SS_ID);

synsets[ADJ_SS_ID]->children.insert(ss_id);

link_directly_to_root = false;

}

if (node->types.contains(ADV_TYPE))

{

node->parents.insert(ADV_SS_ID);

synsets[ADV_SS_ID]->children.insert(ss_id);

link_directly_to_root = false;

}

if (link_directly_to_root)

{

node->parents.insert(ROOT_SS_ID);

synsets[ROOT_SS_ID]->children.insert(ss_id);

}

}

}

}
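The function above attaches every parentless synset to the category node matching each of its types, and falls back to the root only when no type matches. A node with several types therefore ends up with several parents. The sketch below reproduces that rule on a toy graph with two categories. The ids, type tags and the names `ToyNode`, `ToyGraph`, `toyLink` and `toyLinkUpperCategories` are hypothetical; the real constants live in WordNetOntology.h.

```cpp
#include <cassert>
#include <map>
#include <set>

// Hypothetical ids and type tags, chosen for this sketch only.
const int TOY_ROOT_ID = 0;
const int TOY_NOUN_ID = 1;
const int TOY_VERB_ID = 2;
const int TOY_NOUN_TYPE = 100;
const int TOY_VERB_TYPE = 101;

struct ToyNode
{
    std::set<int> types;
    std::set<int> parents;
    std::set<int> children;
};

typedef std::map<int, ToyNode> ToyGraph;

// Adds a parent/child edge in both directions.
void toyLink(ToyGraph& g, int parent, int child)
{
    g[child].parents.insert(parent);
    g[parent].children.insert(child);
}

// Attach every parentless node (other than the root) to the category
// node(s) matching its types, or to the root if no type matches.
void toyLinkUpperCategories(ToyGraph& g)
{
    // The category nodes must already exist, as createBaseSynsets()
    // is assumed to guarantee in the real class.
    assert(g.count(TOY_ROOT_ID) && g.count(TOY_NOUN_ID) && g.count(TOY_VERB_ID));

    for (ToyGraph::iterator it = g.begin(); it != g.end(); ++it)
    {
        const int id = it->first;
        ToyNode& node = it->second;
        if (!node.parents.empty() || id == TOY_ROOT_ID)
            continue;

        bool linked = false;
        if (node.types.count(TOY_NOUN_TYPE)) { toyLink(g, TOY_NOUN_ID, id); linked = true; }
        if (node.types.count(TOY_VERB_TYPE)) { toyLink(g, TOY_VERB_ID, id); linked = true; }
        if (!linked)
            toyLink(g, TOY_ROOT_ID, id);
    }
}
```

Nodes that already have a parent are left untouched, so the pass can safely be run after the hypernym links have been extracted.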

| void PLearn::WordNetOntology::load | ( | string | voc_file, |

| string | synset_file, | ||

| string | ontology_file | ||

| ) |

Definition at line 1267 of file WordNetOntology.cc.

References ADJ_TYPE, ADV_TYPE, PLearn::ShellProgressBar::done(), PLearn::ShellProgressBar::draw(), PLearn::Node::fnum, PLearn::ShellProgressBar::getAsciiFileLineCount(), PLearn::Node::gloss, PLearn::Node::hereiam, i, PLearn::Set::insert(), NOUN_TYPE, PLERROR, PLWARNING, PLearn::split(), PLearn::Node::ss_id, PLearn::startsWith(), PLearn::Node::syns, PLearn::tobool(), PLearn::toint(), PLearn::tolong(), PLearn::Node::types, PLearn::ShellProgressBar::update(), and VERB_TYPE.

{

ifstream if_voc(voc_file.c_str());

if (!if_voc) PLERROR("can't open %s", voc_file.c_str());

ifstream if_synsets(synset_file.c_str());

if (!if_synsets) PLERROR("can't open %s", synset_file.c_str());

ifstream if_ontology(ontology_file.c_str());

if (!if_ontology) PLERROR("can't open %s", ontology_file.c_str());

string line;

int word_count = 0;

while (!if_voc.eof()) // voc

{

getline(if_voc, line, '\n');

if (line == "") continue;

if (line[0] == '#' && line[1] == '#') continue;

words_id[line] = word_count;

word_to_senses[word_count] = Set();

words[word_count++] = line;

}

if_voc.close();

word_index = word_count;

int line_no = 0;

int ss_id = -1;

while (!if_synsets.eof()) // synsets

{

++line_no;

getline(if_synsets, line, '\n');

if (line == "") continue;

if (line[0] == '#') continue;

vector<string> tokens = split(line, "*");

if (tokens.size() != 3 && tokens.size() != 4)

PLERROR("the synset file has not the expected format, line %d = '%s'", line_no, line.c_str());

if(tokens.size() == 3 && line_no == 1)

PLWARNING("The synset file doesn't contain enough information for correct representation of the synsets!");

ss_id = toint(tokens[0]);

vector<string> type_tokens = split(tokens[1], "|");

vector<string> ss_tokens = split(tokens[2], "|");

vector<string> offset_tokens;

if(tokens.size() == 4) offset_tokens = split(tokens[3],"|");

Node* node = new Node(ss_id);

for (unsigned int i = 0; i < type_tokens.size(); i++)

node->types.insert(toint(type_tokens[i]));

node->gloss = ss_tokens[0];

//node->syns.reserve(ss_tokens.size() - 1);

for (unsigned int i = 1; i < ss_tokens.size(); i++)

{

if (i == 1) // extract unknown_sense_index

if (startsWith(ss_tokens[i], "UNKNOWN_SENSE_"))

unknown_sense_index = toint(ss_tokens[i].substr(14, ss_tokens[i].size())) + 1;

node->syns.push_back(ss_tokens[i]);

}

if(tokens.size() == 4)

{

node->fnum = toint(offset_tokens[0]);