PLearn 0.1

Provide some memory-management utilities for kernels.

#include <MemoryCachedKernel.h>

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | MemoryCachedKernel () | Default constructor. |
| virtual void | setDataForKernelMatrix (VMat the_data) | Optionally cache the data to a real Mat if its number of elements lies within the threshold. |
| virtual void | addDataForKernelMatrix (const Vec &newRow) | Update the cache if a new row is added to the data. |
| bool | dataCached () const | Return true if the cache is active after setting some data. |
| virtual MemoryCachedKernel * | deepCopy (CopiesMap &copies) const | |
| virtual void | build () | Post-constructor. |
| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) | Transforms a shallow copy into a deep copy. |

Static Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| static string | _classname_ () | |
| static OptionList & | _getOptionList_ () | |
| static RemoteMethodMap & | _getRemoteMethodMap_ () | |
| static bool | _isa_ (const Object *o) | |
| static void | _static_initialize_ () | |
| static const PPath & | declaringFile () | |

Public Attributes

| Type | Name | Description |
| --- | --- | --- |
| int | m_cache_threshold | Threshold on the number of elements to cache the data VMatrix into a real matrix. |

Static Public Attributes

| Type | Name | Description |
| --- | --- | --- |
| static StaticInitializer | _static_initializer_ | |

Protected Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| void | dataRow (int i, Vec &row) const | Interface for derived classes: access row i of the data matrix. |
| Vec * | dataRow (int i) const | Interface for derived classes: access row i of the data matrix and return it as a POINTER to a Vec. |
| template<class DerivedClass > void | computeGramMatrixNV (Mat K, const DerivedClass *This) const | Interface to ease derived-class implementation of computeGramMatrix that avoids virtual function calls in kernel evaluation. |
| template<class DerivedClass , real(DerivedClass::*)(int, int, int, real) const derivativeFunc> void | computeGramMatrixDerivNV (Mat &KD, const DerivedClass *This, int arg, bool derivative_func_requires_K=true) const | Interface to ease derived-class implementation of computeGramMatrixDerivative, that avoids virtual function calls as much as possible. |
| template<class DerivedClass > void | evaluateAllIXNV (const Vec &x, const Vec &k_xi_x, int istart) const | Interface to ease derived-class implementation of evaluate_all_i_x that avoids virtual function calls in kernel evaluation. |

Static Protected Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| static void | declareOptions (OptionList &ol) | Declares the class options. |

Protected Attributes

| Type | Name | Description |
| --- | --- | --- |
| Mat | m_data_cache | In-memory cache of the data matrix. |
| TVec< Vec > | m_row_cache | Cache of vectors for each row of the data matrix; this avoids reconstructing a Vec each time we want to access a row. |

Private Types

| Type | Name | Description |
| --- | --- | --- |
| typedef Kernel | inherited | |

Private Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| void | build_ () | This does the actual building. |

Provide some memory-management utilities for kernels.

This class is intended as a base class that provides memory-management utilities for the data matrix set with the setDataForKernelMatrix function. In particular, it provides a single (inline, non-virtual) function to access a given input vector of the data matrix. If the data VMatrix passed to setDataForKernelMatrix is within a certain size threshold, the VMatrix is converted to a Mat and cached in memory (without requiring additional space if the VMatrix is actually a MemoryVMatrix), and all further element accesses are done without virtual function calls.

IMPORTANT NOTE: the 'cache_gram_matrix' option is enabled by default for this class. In many cases this makes the computation of the Gram matrix derivatives (with respect to kernel hyperparameters) considerably faster. If you do not want this caching to occur, explicitly set the option to false.

This class also provides utility functions to derived classes to compute the Gram matrix and its derivative (with respect to kernel hyperparameters) without requiring virtual function calls in data access or evaluation function.

Definition at line 70 of file MemoryCachedKernel.h.
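The threshold-based caching described above can be illustrated with a minimal, standalone sketch. It does not use PLearn: VirtualMatrix, CountingMatrix and RowCache are hypothetical stand-ins for VMatrix, a concrete data source, and the caching logic, and the sketch copies each row on access, whereas the real class is documented to avoid such copies.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a VMatrix: element access goes through a virtual call.
struct VirtualMatrix {
    virtual ~VirtualMatrix() {}
    virtual int length() const = 0;              // number of rows
    virtual int width() const = 0;               // number of columns
    virtual double get(int i, int j) const = 0;
};

// Illustrative data source whose element (i, j) equals i * cols + j.
struct CountingMatrix : VirtualMatrix {
    int rows, cols;
    CountingMatrix(int r, int c) : rows(r), cols(c) {}
    int length() const { return rows; }
    int width() const { return cols; }
    double get(int i, int j) const { return i * cols + j; }
};

// Copies the source into contiguous memory only if it is small enough.
class RowCache {
public:
    explicit RowCache(long threshold)
        : threshold_(threshold), source_(0), cols_(0) {}

    void setData(const VirtualMatrix* source) {
        assert(source != 0);
        source_ = source;
        cols_ = source->width();
        cache_.clear();
        const long n_elements =
            static_cast<long>(source->length()) * source->width();
        if (n_elements <= threshold_) {
            cache_.reserve(static_cast<std::size_t>(n_elements));
            for (int i = 0; i < source->length(); ++i)
                for (int j = 0; j < cols_; ++j)
                    cache_.push_back(source->get(i, j));
        }
    }

    bool dataCached() const { return !cache_.empty(); }

    // Non-virtual entry point: reads the cache when it is active,
    // otherwise falls back to virtual element access on the source.
    void dataRow(int i, std::vector<double>& row) const {
        row.resize(static_cast<std::size_t>(cols_));
        for (int j = 0; j < cols_; ++j)
            row[j] = dataCached()
                ? cache_[static_cast<std::size_t>(i) * cols_ + j]
                : source_->get(i, j);
    }

private:
    long threshold_;
    const VirtualMatrix* source_;
    int cols_;
    std::vector<double> cache_;
};
```

With a 3 x 2 source, a threshold of 10 activates the cache and a threshold of 5 leaves the source as-is; dataRow returns the same values in both cases.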

typedef Kernel PLearn::MemoryCachedKernel::inherited [private] |

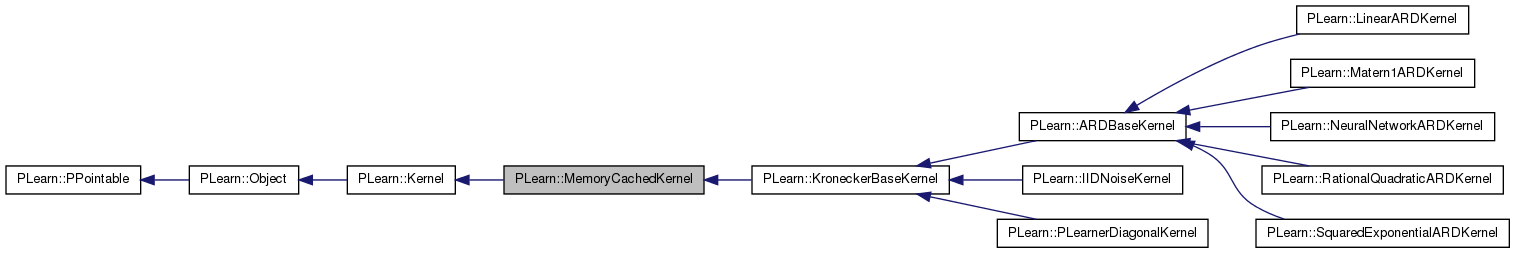

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 72 of file MemoryCachedKernel.h.

| PLearn::MemoryCachedKernel::MemoryCachedKernel | ( | ) |

Default constructor.

Definition at line 72 of file MemoryCachedKernel.cc.

References PLearn::Kernel::cache_gram_matrix.

: m_cache_threshold(1000000) { cache_gram_matrix = true; }

| string PLearn::MemoryCachedKernel::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 67 of file MemoryCachedKernel.cc.

| OptionList & PLearn::MemoryCachedKernel::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 67 of file MemoryCachedKernel.cc.

| RemoteMethodMap & PLearn::MemoryCachedKernel::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 67 of file MemoryCachedKernel.cc.

| bool PLearn::MemoryCachedKernel::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 67 of file MemoryCachedKernel.cc.

| void PLearn::MemoryCachedKernel::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 67 of file MemoryCachedKernel.cc.

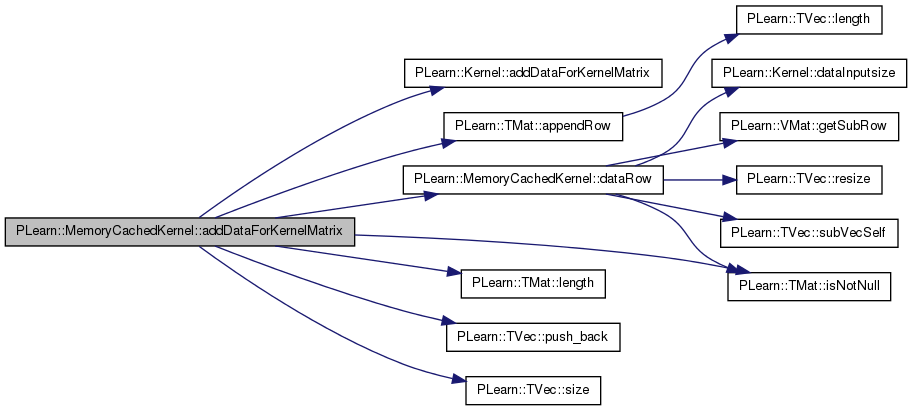

| void PLearn::MemoryCachedKernel::addDataForKernelMatrix | ( | const Vec & | newRow | ) | [virtual] |

Update the cache if a new row is added to the data.

Reimplemented from PLearn::Kernel.

Definition at line 147 of file MemoryCachedKernel.cc.

References PLearn::Kernel::addDataForKernelMatrix(), PLearn::TMat< T >::appendRow(), dataRow(), PLearn::TMat< T >::isNotNull(), PLearn::TMat< T >::length(), m_data_cache, m_row_cache, PLASSERT, PLearn::TVec< T >::push_back(), and PLearn::TVec< T >::size().

{

inherited::addDataForKernelMatrix(newRow);

if (m_data_cache.isNotNull()) {

const int OLD_N = m_data_cache.length();

PLASSERT( m_data_cache.length() == m_row_cache.size() );

m_data_cache.appendRow(newRow);

// Update row cache

m_row_cache.push_back(Vec());

dataRow(OLD_N, m_row_cache[OLD_N]);

}

}

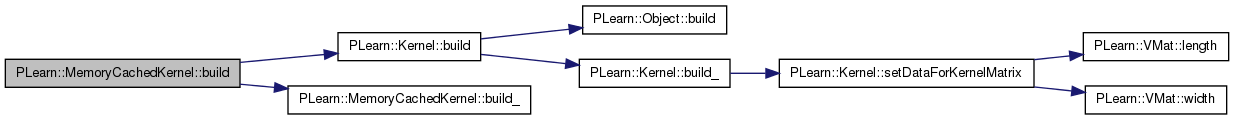

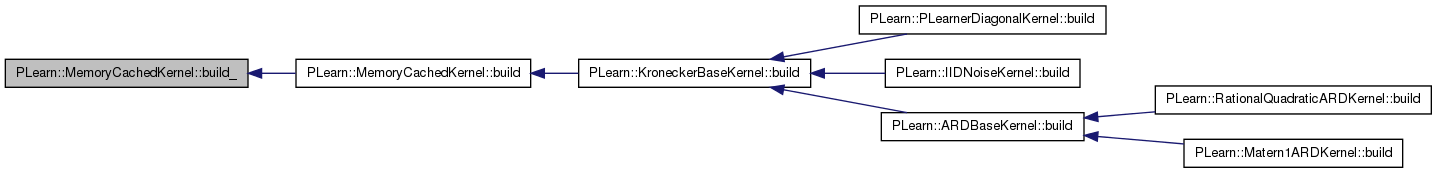

| void PLearn::MemoryCachedKernel::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should call simply inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

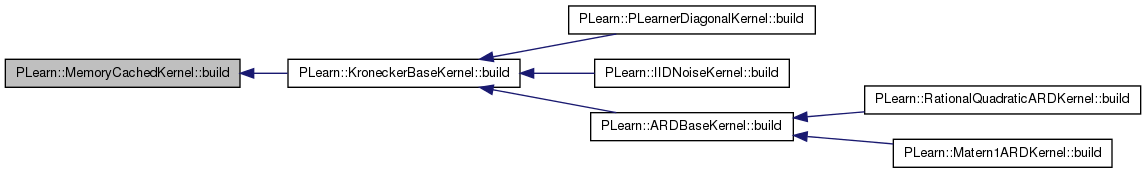

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 97 of file MemoryCachedKernel.cc.

References PLearn::Kernel::build(), and build_().

Referenced by PLearn::KroneckerBaseKernel::build().

{

// ### Nothing to add here, simply calls build_

inherited::build();

build_();

}
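The build() convention described above (call inherited::build(), then this class's build_()) can be sketched in isolation as follows. BaseObject, DerivedObject, the size option and the log string are hypothetical names used only for illustration; they are not part of PLearn.

```cpp
#include <cassert>
#include <string>

struct BaseObject {
    std::string log;                    // records the order of build_ calls
    virtual ~BaseObject() {}
    virtual void build() { build_(); }
private:
    void build_() { log += "Base;"; }   // non-virtual, class-specific building
};

struct DerivedObject : BaseObject {
    typedef BaseObject inherited;
    int size;        // hypothetical option field set by the user
    int capacity;    // state recomputed from the option at build time

    DerivedObject() : size(0), capacity(0) {}

    virtual void build() {
        inherited::build();   // build the parent part first
        build_();             // then this class's own part
    }
private:
    void build_() {
        capacity = 2 * size;
        log += "Derived;";
    }
};
```

Because build_() only recomputes state from the option fields, build() can be called again after an option changes.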

| void PLearn::MemoryCachedKernel::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 107 of file MemoryCachedKernel.cc.

Referenced by build().

{ }

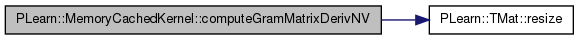

| void PLearn::MemoryCachedKernel::computeGramMatrixDerivNV | ( | Mat & | KD, |

| const DerivedClass * | This, | ||

| int | arg, | ||

| bool | derivative_func_requires_K = true |

||

| ) | const [protected] |

Interface to ease derived-class implementation of computeGramMatrixDerivative, that avoids virtual function calls as much as possible.

This template is instantiated with a member function pointer in the derived class to compute the actual element-wise derivative (with respect to some kernel hyperparameter, depending on which a different member pointer is passed). Both GCC 3.3.6 and 4.0.3 (which I tested on) generate very efficient code to call a member function passed as a template argument within a loop [although the generated code looks very different in both cases].

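The member function is called with the following arguments, in order: the row index i, the column index j, the value of 'arg' passed to computeGramMatrixDerivNV, and the kernel value K for the pair (i, j).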

The last argument to computeGramMatrixDerivNV, 'derivative_func_requires_K', specifies whether the derivativeFunc requires the value of K in order to compute the derivative. Passing the value 'false' can avoid unnecessary kernel computations in cases where the Gram matrix is not cached. In this case, the derivativeFunc is called with a MISSING_VALUE for its argument K.

Definition at line 283 of file MemoryCachedKernel.h.

References i, j, MISSING_VALUE, PLERROR, and PLearn::TMat< T >::resize().

Referenced by PLearn::RationalQuadraticARDKernel::computeGramMatrixDerivative().

{

if (!data)

PLERROR("Kernel::computeGramMatrixDerivative: "

"setDataForKernelMatrix not yet called");

if (!is_symmetric)

PLERROR("Kernel::computeGramMatrixDerivative: "

"not supported for non-symmetric kernels");

int W = nExamples();

KD.resize(W,W);

real KDij;

real* KDi;

real K = MISSING_VALUE;

real* Ki = 0; // Current row of kernel matrix, if cached

for (int i=0 ; i<W ; ++i) {

KDi = KD[i];

if (gram_matrix_is_cached)

Ki = gram_matrix[i];

for (int j=0 ; j <= i ; ++j) {

// Access the current kernel value depending on whether it's cached

if (Ki)

K = *Ki++;

else if (derivative_func_requires_K) {

Vec& row_i = *dataRow(i);

Vec& row_j = *dataRow(j);

K = This->DerivedClass::evaluate(row_i, row_j);

}

// Compute and store the derivative

KDij = (This->*derivativeFunc)(i, j, arg, K);

*KDi++ = KDij;

}

}

}
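The technique of passing a member function as a template argument can be sketched outside PLearn as follows. ToyKernel, derivScale and fillLowerTriangle are hypothetical names; the sketch uses double instead of real, nested std::vector instead of Mat, and always passes NaN for K (as if the derivative never needed the kernel value).

```cpp
#include <cassert>
#include <limits>
#include <vector>

// Hypothetical kernel exposing one element-wise derivative function.
struct ToyKernel {
    double scale;
    explicit ToyKernel(double s) : scale(s) {}

    // Same shape as the documented signature: (int, int, int, real) const.
    double derivScale(int i, int j, int arg, double K) const {
        (void)K;                        // this derivative does not need K
        return scale * (i + j) + arg;
    }
};

// The member function is a template argument, so the call target is known
// at compile time and the compiler is free to inline it inside the loop.
template <class Derived,
          double (Derived::*derivativeFunc)(int, int, int, double) const>
void fillLowerTriangle(std::vector<std::vector<double> >& KD,
                       const Derived* self, int arg, int n)
{
    assert(self != 0 && n >= 0);
    const double missing = std::numeric_limits<double>::quiet_NaN();
    KD.assign(n, std::vector<double>(n, 0.0));
    for (int i = 0; i < n; ++i)
        for (int j = 0; j <= i; ++j)
            KD[i][j] = (self->*derivativeFunc)(i, j, arg, missing);
}
```

A call then names the derivative explicitly, for example `fillLowerTriangle<ToyKernel, &ToyKernel::derivScale>(KD, &kernel, 1, 3)`. As in the listing above, only the lower triangle (j <= i) is written.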

| void PLearn::MemoryCachedKernel::computeGramMatrixNV | ( | Mat | K, |

| const DerivedClass * | This | ||

| ) | const [protected] |

Interface to ease derived-class implementation of computeGramMatrix that avoids virtual function calls in kernel evaluation.

The computeGramMatrixNV function should be called directly by the implementation of computeGramMatrix in a derived class, passing the name of the derived class as a template argument.

Definition at line 227 of file MemoryCachedKernel.h.

References PLearn::Kernel::cache_gram_matrix, PLearn::Object::classname(), PLearn::Kernel::data, dataRow(), PLearn::Kernel::gram_matrix, PLearn::Kernel::gram_matrix_is_cached, i, PLearn::Kernel::is_symmetric, j, PLearn::TMat< T >::length(), PLearn::VMat::length(), m, PLearn::TMat< T >::mod(), PLASSERT, PLERROR, PLearn::Kernel::report_progress, PLearn::TMat< T >::resize(), and PLearn::TMat< T >::width().

{

if (!data)

PLERROR("Kernel::computeGramMatrix: setDataForKernelMatrix not yet called");

if (!is_symmetric)

PLERROR("Kernel::computeGramMatrix: not supported for non-symmetric kernels");

if (K.length() != data.length() || K.width() != data.length())

PLERROR("Kernel::computeGramMatrix: the argument matrix K should be\n"

"of size %d x %d (currently of size %d x %d)",

data.length(), data.length(), K.length(), K.width());

if (cache_gram_matrix && gram_matrix_is_cached) {

K << gram_matrix;

return;

}

int l=data->length();

int m=K.mod();

PP<ProgressBar> pb;

int count = 0;

if (report_progress)

pb = new ProgressBar("Computing Gram matrix for " + classname(),

(l * (l + 1)) / 2);

Vec row_i, row_j;

real Kij;

real* Ki;

real* Kji;

for (int i=0 ; i<l ; ++i) {

Ki = K[i];

Kji = &K[0][i];

dataRow(i, row_i);

for (int j=0; j<=i; ++j, Kji += m) {

dataRow(j, row_j);

Kij = This->DerivedClass::evaluate(row_i, row_j);

*Ki++ = Kij;

if (j<i)

*Kji = Kij;

}

if (report_progress) {

count += i + 1;

PLASSERT( pb );

pb->update(count);

}

}

if (cache_gram_matrix) {

gram_matrix.resize(l,l);

gram_matrix << K;

gram_matrix_is_cached = true;

}

}
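The qualified call `This->DerivedClass::evaluate(...)` in the listing above is what suppresses virtual dispatch. A minimal standalone sketch of the same idea follows; KernelBase, DotKernel and gramMatrix are hypothetical names, and std::vector replaces Vec and Mat.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

typedef std::vector<double> Row;

struct KernelBase {
    virtual ~KernelBase() {}
    virtual double evaluate(const Row& a, const Row& b) const = 0;
};

// Hypothetical derived kernel: plain dot product.
struct DotKernel : KernelBase {
    double evaluate(const Row& a, const Row& b) const {
        assert(a.size() == b.size());
        double s = 0.0;
        for (std::size_t k = 0; k < a.size(); ++k)
            s += a[k] * b[k];
        return s;
    }
};

// Fills a symmetric Gram matrix by evaluating only the lower triangle.
template <class Derived>
void gramMatrix(const std::vector<Row>& data, const Derived* self,
                std::vector<Row>& K)
{
    assert(self != 0);
    const std::size_t n = data.size();
    K.assign(n, Row(n, 0.0));
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j <= i; ++j) {
            // Qualified name: statically bound to Derived::evaluate,
            // so no virtual dispatch happens inside the loop.
            const double Kij = self->Derived::evaluate(data[i], data[j]);
            K[i][j] = Kij;
            K[j][i] = Kij;   // mirror into the upper triangle
        }
    }
}
```

The template argument is deduced from the pointer type, so a derived class only needs to call `gramMatrix(data, this, K)` from its own implementation.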

| bool PLearn::MemoryCachedKernel::dataCached | ( | ) | const [inline] |

Return true if the cache is active after setting some data.

Definition at line 101 of file MemoryCachedKernel.h.

References m_data_cache, and PLearn::TMat< T >::size().

{ return m_data_cache.size() > 0; }

| Vec* PLearn::MemoryCachedKernel::dataRow | ( | int | i | ) | const [inline, protected] |

Interface for derived classes: access row i of the data matrix and return it as a POINTER to a Vec.

NOTE: this version ASSUMES that the cache exists. You can verify this with the dataCached() function.

Definition at line 216 of file MemoryCachedKernel.h.

References i, and m_row_cache.

{

// Note: ASSUME that the cache exists; will boundcheck in dbg/safeopt if

// not.

return &m_row_cache[i];

}

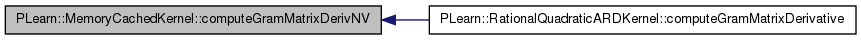

| void PLearn::MemoryCachedKernel::dataRow | ( | int | i, |

| Vec & | row | ||

| ) | const [inline, protected] |

Interface for derived classes: access row i of the data matrix.

Note: the contents of the Vec SHOULD ABSOLUTELY NOT BE MODIFIED after calling this function. For performance, the Vec may not contain a copy of the input vector, but may point to the original data.

Definition at line 204 of file MemoryCachedKernel.h.

References PLearn::Kernel::data, PLearn::Kernel::dataInputsize(), PLearn::VMat::getSubRow(), PLearn::TMat< T >::isNotNull(), m_data_cache, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::subVecSelf().

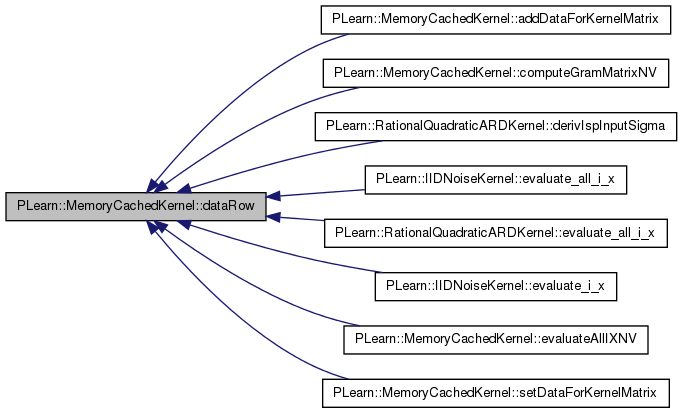

Referenced by addDataForKernelMatrix(), computeGramMatrixNV(), PLearn::RationalQuadraticARDKernel::derivIspInputSigma(), PLearn::IIDNoiseKernel::evaluate_all_i_x(), PLearn::RationalQuadraticARDKernel::evaluate_all_i_x(), PLearn::IIDNoiseKernel::evaluate_i_x(), evaluateAllIXNV(), and setDataForKernelMatrix().

{

if (m_data_cache.isNotNull()) {

row = m_data_cache(i);

row.subVecSelf(0, dataInputsize());

}

else {

row.resize(dataInputsize());

data->getSubRow(i, 0, row);

}

}
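The warning about not modifying the returned Vec follows from the cached branch above, where the row aliases the cache storage instead of copying it. The sketch below illustrates that aliasing with a hypothetical read-only RowView type over a row-major buffer; it is not the PLearn Vec, and making the view const is one possible way to enforce the rule at compile time.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical read-only view of one row in a contiguous row-major buffer.
struct RowView {
    const double* data;
    std::size_t size;
    double operator[](std::size_t k) const {
        assert(k < size);
        return data[k];
    }
};

// Returns a view of the first input_size elements of row i.
// No element is copied: the view points into the storage.
inline RowView rowView(const std::vector<double>& storage, std::size_t width,
                       std::size_t i, std::size_t input_size)
{
    assert(width > 0 && input_size <= width);
    assert((i + 1) * width <= storage.size());
    RowView v = { &storage[i * width], input_size };
    return v;
}
```

Because the view shares memory with the buffer, any later change to the buffer is visible through the view, which is why writes through such a row would corrupt the cached data.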

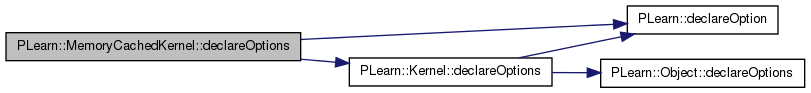

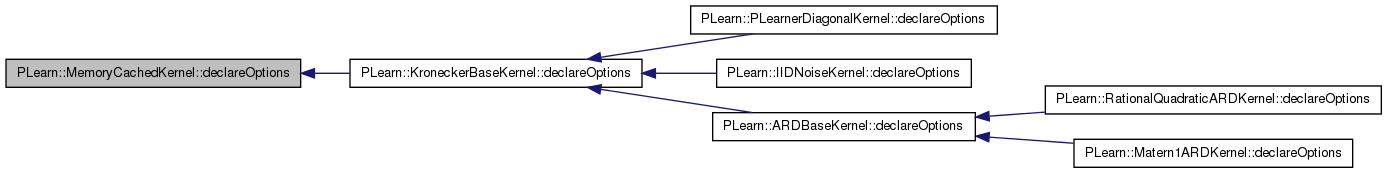

| void PLearn::MemoryCachedKernel::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 81 of file MemoryCachedKernel.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::Kernel::declareOptions(), and m_cache_threshold.

Referenced by PLearn::KroneckerBaseKernel::declareOptions().

{

declareOption(

ol, "cache_threshold", &MemoryCachedKernel::m_cache_threshold,

OptionBase::buildoption,

"Threshold on the number of elements to cache the data VMatrix into a\n"

"real matrix. Above this threshold, the VMatrix is left as-is, and\n"

"element access remains virtual. (Default value = 1000000)\n");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::MemoryCachedKernel::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 107 of file MemoryCachedKernel.h.


| MemoryCachedKernel * PLearn::MemoryCachedKernel::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 67 of file MemoryCachedKernel.cc.

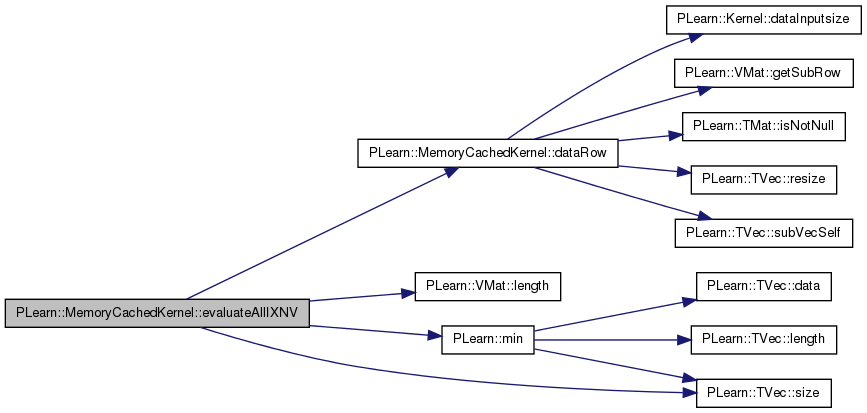

| void PLearn::MemoryCachedKernel::evaluateAllIXNV | ( | const Vec & | x, |

| const Vec & | k_xi_x, | ||

| int | istart | ||

| ) | const [protected] |

Interface to ease derived-class implementation of evaluate_all_i_x that avoids virtual function calls in kernel evaluation.

Definition at line 327 of file MemoryCachedKernel.h.

References PLearn::Kernel::data, dataRow(), i, PLearn::VMat::length(), PLearn::min(), PLERROR, and PLearn::TVec< T >::size().

{

if (!data)

PLERROR("Kernel::evaluate_all_i_x: setDataForKernelMatrix not yet called");

const DerivedClass* This = static_cast<const DerivedClass*>(this);

int l = min(data->length(), k_xi_x.size());

Vec row_i;

real* k_xi = &k_xi_x[0];

for (int i=istart ; i<l ; ++i) {

dataRow(i, row_i);

*k_xi++ = This->DerivedClass::evaluate(row_i, x);

}

}

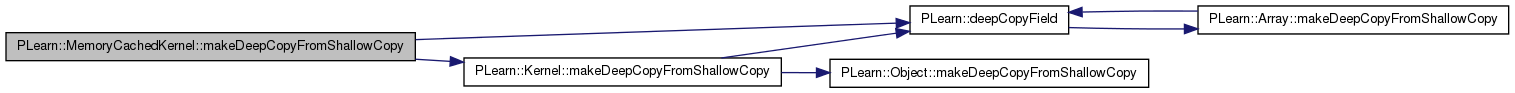

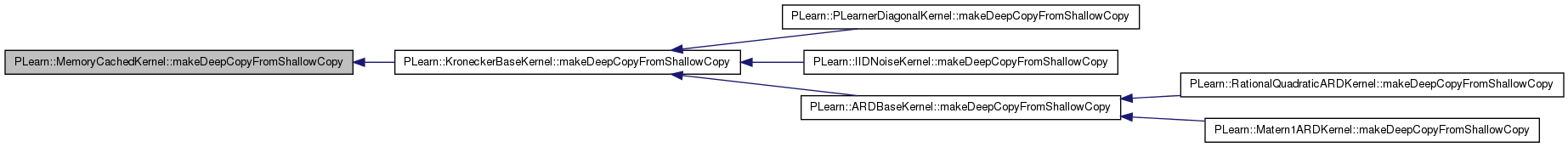

| void PLearn::MemoryCachedKernel::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 113 of file MemoryCachedKernel.cc.

References PLearn::deepCopyField(), m_data_cache, and PLearn::Kernel::makeDeepCopyFromShallowCopy().

Referenced by PLearn::KroneckerBaseKernel::makeDeepCopyFromShallowCopy().

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(m_data_cache, copies);

}

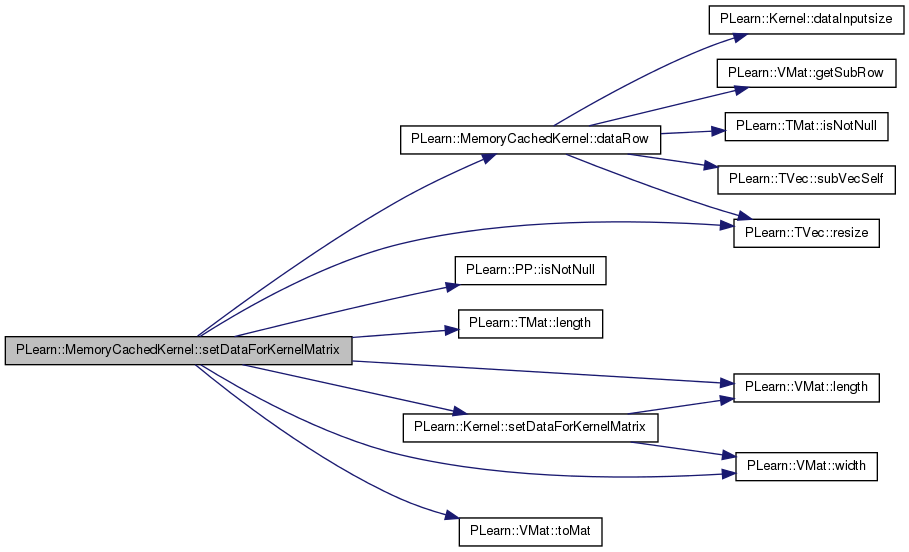

| void PLearn::MemoryCachedKernel::setDataForKernelMatrix | ( | VMat | the_data | ) | [virtual] |

Optionally cache the data to a real Mat if its number of elements lies within the threshold.

Reimplemented from PLearn::Kernel.

Definition at line 123 of file MemoryCachedKernel.cc.

References dataRow(), i, PLearn::PP< T >::isNotNull(), PLearn::TMat< T >::length(), PLearn::VMat::length(), m_cache_threshold, m_data_cache, m_row_cache, N, PLearn::TVec< T >::resize(), PLearn::Kernel::setDataForKernelMatrix(), PLearn::VMat::toMat(), and PLearn::VMat::width().

{

inherited::setDataForKernelMatrix(the_data);

if (the_data.width() * the_data.length() <= m_cache_threshold &&

the_data.isNotNull())

{

m_data_cache = the_data.toMat();

// Update row cache

const int N = m_data_cache.length();

m_row_cache.resize(N);

for (int i=0 ; i<N ; ++i)

dataRow(i, m_row_cache[i]);

}

else {

m_data_cache = Mat();

m_row_cache.resize(0);

}

}

StaticInitializer PLearn::MemoryCachedKernel::_static_initializer_ [static] |

Reimplemented from PLearn::Kernel.

Reimplemented in PLearn::ARDBaseKernel, PLearn::IIDNoiseKernel, PLearn::KroneckerBaseKernel, PLearn::LinearARDKernel, PLearn::Matern1ARDKernel, PLearn::NeuralNetworkARDKernel, PLearn::PLearnerDiagonalKernel, PLearn::RationalQuadraticARDKernel, and PLearn::SquaredExponentialARDKernel.

Definition at line 107 of file MemoryCachedKernel.h.

int PLearn::MemoryCachedKernel::m_cache_threshold |

Threshold on the number of elements to cache the data VMatrix into a real matrix.

Above this threshold, the VMatrix is left as-is, and element access remains virtual. (Default value = 1000000)

Definition at line 82 of file MemoryCachedKernel.h.

Referenced by declareOptions(), and setDataForKernelMatrix().

Mat PLearn::MemoryCachedKernel::m_data_cache [protected] |

In-memory cache of the data matrix.

Definition at line 191 of file MemoryCachedKernel.h.

Referenced by addDataForKernelMatrix(), PLearn::KroneckerBaseKernel::computeGramMatrix(), PLearn::Matern1ARDKernel::computeGramMatrix(), PLearn::PLearnerDiagonalKernel::computeGramMatrix(), PLearn::RationalQuadraticARDKernel::computeGramMatrix(), PLearn::Matern1ARDKernel::computeGramMatrixDerivIspInputSigma(), PLearn::RationalQuadraticARDKernel::computeGramMatrixDerivIspInputSigma(), dataCached(), dataRow(), makeDeepCopyFromShallowCopy(), and setDataForKernelMatrix().

TVec<Vec> PLearn::MemoryCachedKernel::m_row_cache [protected] |

Cache of vectors for each row of the data matrix; this avoids reconstructing a Vec each time we want to access a row.

Definition at line 195 of file MemoryCachedKernel.h.

Referenced by addDataForKernelMatrix(), dataRow(), and setDataForKernelMatrix().
