
PLearn 0.1

|

|

PLearn 0.1

|

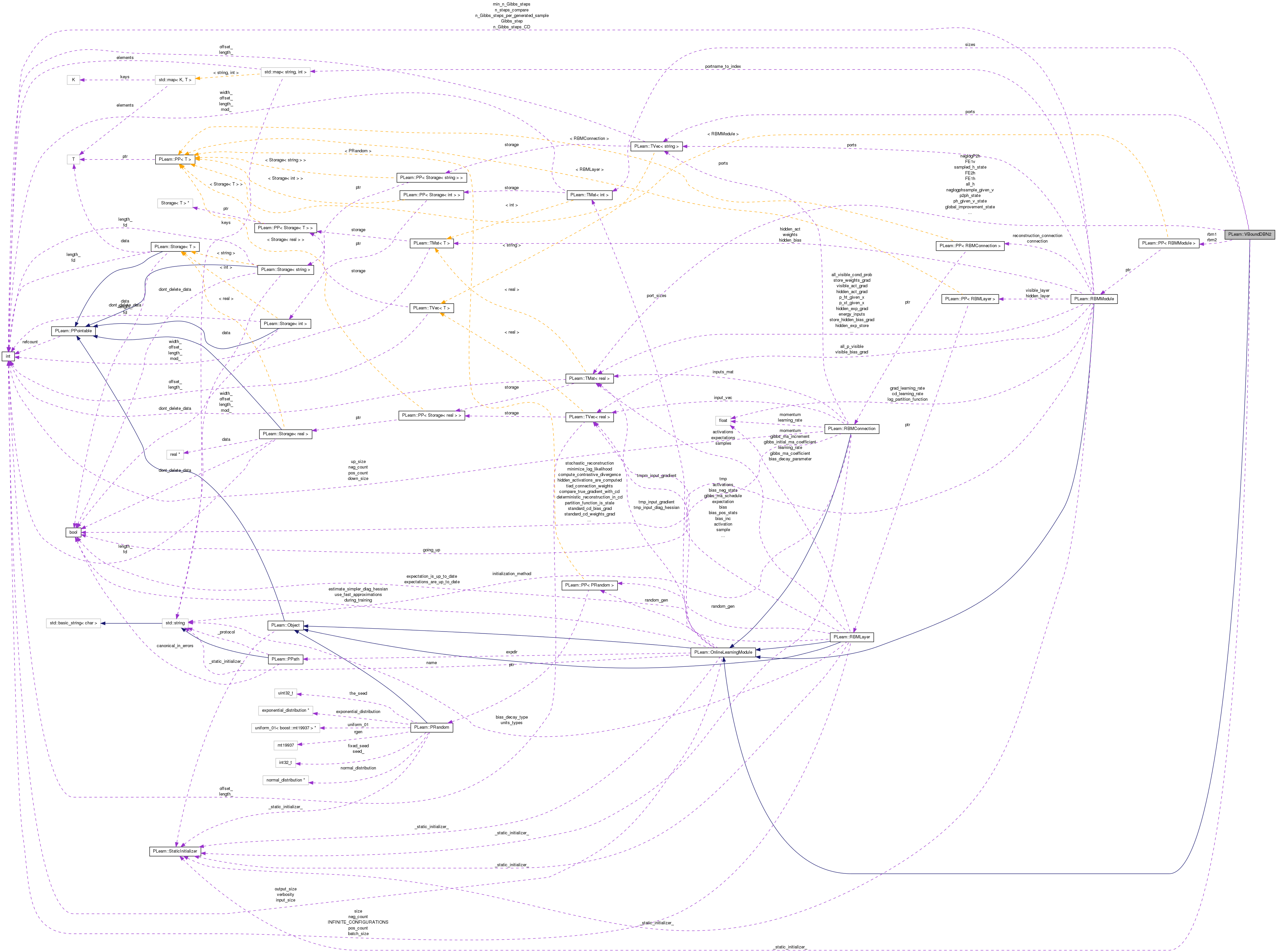

2-RBM DBN trained using Hinton's new variational bound of global likelihood.

#include <VBoundDBN2.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | `VBoundDBN2()` | Default constructor. |
| `void` | `fprop(const TVec< Mat * > &ports_value)` | Perform a fprop step. |
| `virtual void` | `bpropAccUpdate(const TVec< Mat * > &ports_value, const TVec< Mat * > &ports_gradient)` | Perform a back propagation step (also updating parameters according to the provided gradient). |
| `virtual void` | `forget()` | Reset the parameters to the state they would be BEFORE starting training. |
| `virtual const TVec< string > &` | `getPorts()` | Return the list of ports in the module. |
| `virtual const TMat< int > &` | `getPortSizes()` | Return the size of all ports, in the form of a two-column matrix, where each row represents a port, and the two numbers on a row are respectively its length and its width (with -1 representing an undefined or variable value). |
| `virtual string` | `classname() const` | |
| `virtual OptionList &` | `getOptionList() const` | |
| `virtual OptionMap &` | `getOptionMap() const` | |
| `virtual RemoteMethodMap &` | `getRemoteMethodMap() const` | |
| `virtual VBoundDBN2 *` | `deepCopy(CopiesMap &copies) const` | |
| `virtual void` | `build()` | Post-constructor. |
| `virtual void` | `makeDeepCopyFromShallowCopy(CopiesMap &copies)` | Transforms a shallow copy into a deep copy. |

Static Public Member Functions

| Type | Member |
|---|---|
| `static string` | `_classname_()` |
| `static OptionList &` | `_getOptionList_()` |
| `static RemoteMethodMap &` | `_getRemoteMethodMap_()` |
| `static Object *` | `_new_instance_for_typemap_()` |
| `static bool` | `_isa_(const Object *o)` |
| `static void` | `_static_initialize_()` |
| `static const PPath &` | `declaringFile()` |

Public Attributes

| Type | Member | Description |
|---|---|---|
| `PP< RBMModule >` | `rbm1` | First RBM, taking the DBN's input in its visible layer. |
| `PP< RBMModule >` | `rbm2` | Second RBM, producing the DBN's output and generating internal representations. |

Static Public Attributes

| Type | Member |
|---|---|
| `static StaticInitializer` | `_static_initializer_` |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| `static void` | `declareOptions(OptionList &ol)` | Declares the class options. |

Private Types | |

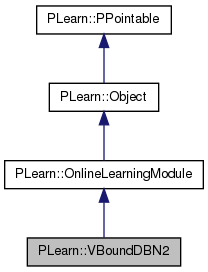

| typedef OnlineLearningModule | inherited |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| `void` | `build_()` | This does the actual building. |

Private Attributes

| Type | Member |
|---|---|
| `Mat` | `FE1v` |
| `Mat` | `FE1h` |
| `Mat` | `FE2h` |
| `Mat` | `sampled_h_state` |
| `Mat` | `global_improvement_state` |
| `Mat` | `ph_given_v_state` |
| `Mat` | `p2ph_state` |
| `Mat` | `neglogphsample_given_v` |
| `Mat` | `all_h` |
| `Mat` | `neglogP2h` |
| `TVec< string >` | `ports` |
| `TMat< int >` | `sizes` |

2-RBM DBN trained using Hinton's new variational bound of global likelihood:

log P(x) >= -FE1(x) + E_{P1(h|x)}[ FE1(h) - FE2(h) ] - log Z2

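where P1 and P2 are the two RBMs, with Pi(x) = exp(-FEi(x))/Zi for i = 1, 2 (FEi is the free energy and Zi the partition function of RBM i). In the expectation, FE1(h) is the free energy of the hidden vector h under RBM 1, and FE2(h) is the free energy of RBM 2 with h placed in its visible layer.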
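The inequality can be obtained from Jensen's inequality applied to the DBN likelihood P(x) = sum_h P2(h) P1(x|h) (the quantity the nll port computes exactly), using P1(h|x) as the variational distribution and writing the marginals of RBM 1 as P1(x) = exp(-FE1(x))/Z1 and P1(h) = exp(-FE1(h))/Z1. A sketch of the derivation under those assumptions:

$$
\begin{aligned}
\log P(x) &= \log \sum_h P_2(h)\,P_1(x \mid h) \\
&\ge \sum_h P_1(h \mid x)\,\log \frac{P_2(h)\,P_1(x \mid h)}{P_1(h \mid x)} && \text{(Jensen)} \\
&= \sum_h P_1(h \mid x)\,\log \frac{P_2(h)\,P_1(x)}{P_1(h)} && \text{(Bayes within RBM 1)} \\
&= \sum_h P_1(h \mid x)\,\big[-FE_2(h) - \log Z_2 - FE_1(x) - \log Z_1 + FE_1(h) + \log Z_1\big] \\
&= -FE_1(x) + E_{P_1(h \mid x)}\big[FE_1(h) - FE_2(h)\big] - \log Z_2
\end{aligned}
$$

Note that Z1 cancels, so only Z2 must be computed. fprop replaces the expectation with a single sample h drawn from P1(h|x) and stores the negated value, FE1(x) - global_improvement + log Z2 with global_improvement = FE1(h) - FE2(h), in the bound port.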

Definition at line 56 of file VBoundDBN2.h.

typedef OnlineLearningModule PLearn::VBoundDBN2::inherited [private]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 58 of file VBoundDBN2.h.

PLearn::VBoundDBN2::VBoundDBN2()

string PLearn::VBoundDBN2::_classname_() [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file VBoundDBN2.cc.

OptionList & PLearn::VBoundDBN2::_getOptionList_() [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file VBoundDBN2.cc.

RemoteMethodMap & PLearn::VBoundDBN2::_getRemoteMethodMap_() [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file VBoundDBN2.cc.

bool PLearn::VBoundDBN2::_isa_(const Object *o) [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file VBoundDBN2.cc.

Object * PLearn::VBoundDBN2::_new_instance_for_typemap_() [static]

Reimplemented from PLearn::Object.

Definition at line 52 of file VBoundDBN2.cc.

void PLearn::VBoundDBN2::_static_initialize_() [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file VBoundDBN2.cc.

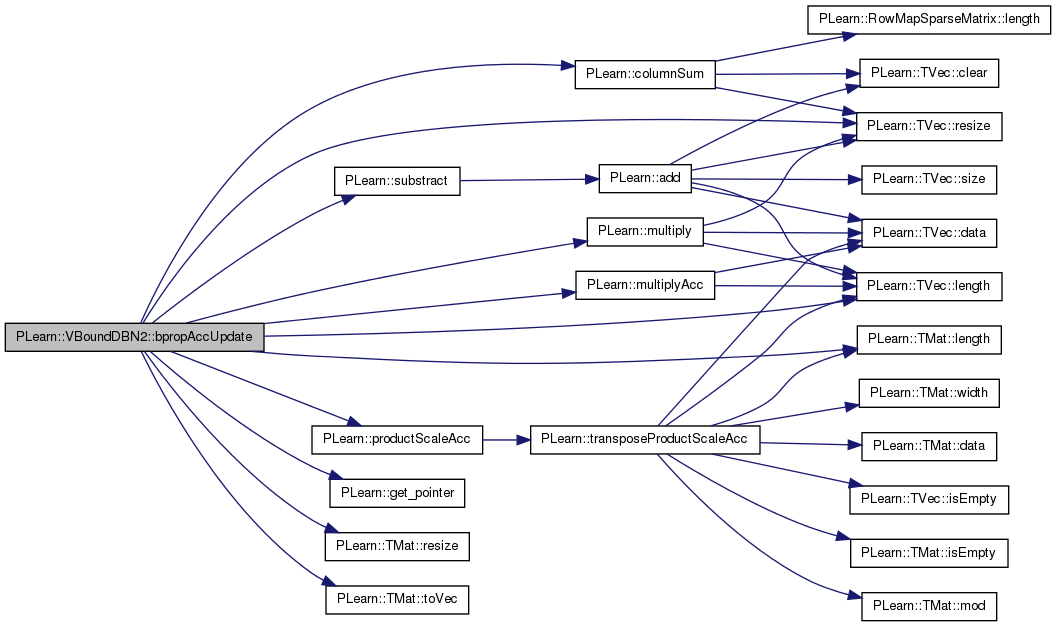

void PLearn::VBoundDBN2::bpropAccUpdate(const TVec< Mat * > & ports_value, const TVec< Mat * > & ports_gradient) [virtual]

Perform a back propagation step (also updating parameters according to the provided gradient).

The matrices in 'ports_value' must be the same as the ones given in a previous call to 'fprop' (and thus they should in particular contain the result of the fprop computation). However, they are not necessarily the same as the ones given in the LAST call to 'fprop': if there is a need to store an internal module state, this should be done using a specific port to store this state. Each Mat* pointer in the 'ports_gradient' vector can be one of:

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 121 of file VBoundDBN2.cc.

References PLearn::columnSum(), PLearn::get_pointer(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::multiply(), PLearn::multiplyAcc(), PLASSERT, PLearn::productScaleAcc(), PLearn::TVec< T >::resize(), PLearn::TMat< T >::resize(), PLearn::substract(), and PLearn::TMat< T >::toVec().

```cpp
{
    PLASSERT( ports_value.length() == nPorts() && ports_gradient.length() == nPorts());
    PLASSERT( rbm1 && rbm2);

    Mat* input = ports_value[0];
    Mat* sampled_h_ = ports_value[3]; // a state if input is given
    Mat* global_improvement_ = ports_value[4]; // a state if input is given
    Mat* ph_given_v_ = ports_value[5]; // a state if input is given
    Mat* p2ph_ = ports_value[6]; // same story
    PLASSERT( input && sampled_h_ && global_improvement_
              && ph_given_v_ && p2ph_);
    int mbs = input->length(); // read only once input is known to be non-null

    // do CD on rbm2
    rbm2->setAllLearningRates(rbm2->cd_learning_rate);
    rbm2->hidden_layer->setExpectations(*p2ph_);
    rbm2->hidden_layer->generateSamples();
    rbm2->sampleVisibleGivenHidden(rbm2->hidden_layer->samples);
    rbm2->computeHiddenActivations(rbm2->visible_layer->samples);
    rbm2->hidden_layer->computeExpectations();
    rbm2->visible_layer->update(*sampled_h_,rbm2->visible_layer->samples);
    rbm2->connection->update(*sampled_h_,*p2ph_,
                             rbm2->visible_layer->samples,
                             rbm2->hidden_layer->getExpectations());
    rbm2->hidden_layer->update(*p2ph_,rbm2->hidden_layer->getExpectations());

    // for now do the ugly hack, for binomial + MatrixConnection case
    PLASSERT(rbm1->visible_layer->classname()=="RBMBinomialLayer");
    PLASSERT(rbm1->hidden_layer->classname()=="RBMBinomialLayer");
    PLASSERT(rbm1->connection->classname() == "RBMMatrixConnection");
    Mat& weights = ((RBMMatrixConnection*)
                    get_pointer(rbm1->connection))->weights;
    // static work buffers, reused across calls (not thread-safe)
    static Mat delta_W;
    static Vec delta_hb;
    static Vec delta_vb1;
    static Vec delta_vb2;
    static Mat delta_h;
    delta_W.resize(rbm1->hidden_layer->size,rbm1->visible_layer->size);
    delta_hb.resize(rbm1->hidden_layer->size);
    delta_vb1.resize(rbm1->visible_layer->size);
    delta_vb2.resize(rbm1->visible_layer->size);
    delta_h.resize(mbs,rbm1->hidden_layer->size);

    // reconstruct the input
    rbm1->computeVisibleActivations(*sampled_h_);
    rbm1->visible_layer->computeExpectations();
    Mat reconstructed_v = rbm1->visible_layer->getExpectations();

    // compute RBM1 weight negative gradient
    // dlogbound/dWij sampling approx = (ph_given_v[i] + (h[i]-ph_given_v[i])*global_improvement)*v[j] - h[i]*reconstructed_v[j]
    substract(*sampled_h_, *ph_given_v_, delta_h);
    multiply(delta_h, delta_h, global_improvement_->toVec());
    delta_h += *ph_given_v_;
    productScaleAcc(delta_W, delta_h, true, *input, false, 1., 0.);
    productScaleAcc(delta_W, *sampled_h_, true, reconstructed_v, false, -1., 1.);
    // update the weights
    multiplyAcc(weights, delta_W, rbm1->cd_learning_rate);

    // do the biases now
    // dlogbound/dbi sampling approx = (ph_given_v[i] + (h[i]-ph_given_v[i])*global_improvement) - h[i]
    substract(delta_h, *sampled_h_, delta_h);
    columnSum(delta_h,delta_hb);
    multiplyAcc(rbm1->hidden_layer->bias,delta_hb,rbm1->cd_learning_rate);
    // dlogbound/dcj (visible bias j) sampling approx = v[j] - reconstructed_v[j]
    columnSum(reconstructed_v,delta_vb1);
    columnSum(*input,delta_vb2);
    substract(delta_vb2,delta_vb1,delta_vb1);
    multiplyAcc(rbm1->visible_layer->bias,delta_vb1,rbm1->cd_learning_rate);

    // Ensure all required gradients have been computed.
    checkProp(ports_gradient);
}
```
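The RBM 1 update above reads as a single-sample estimate of the gradient of the log bound: differentiating -FE1(x) gives p_i v_j, the score-function term of the expectation gives (h_i - p_i) g v_j, and differentiating FE1(h) gives -h_i r_j, where p is ph_given_v, g is global_improvement and r is the reconstruction. The sketch below reproduces that arithmetic with std::vector, independent of PLearn's Mat/Vec types; the struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // one row per example in the minibatch

// Hypothetical holder for the three RBM1 parameter updates.
struct Rbm1Grad {
    Mat dW;   // hidden x visible, like delta_W
    Vec dhb;  // hidden biases, like delta_hb
    Vec dvb;  // visible biases, like delta_vb1
};

// v: input (mbs x nv), h: sampled_h (mbs x nh), p: ph_given_v (mbs x nh),
// g: global_improvement = FE1(h) - FE2(h) (mbs), r: reconstructed_v (mbs x nv).
// Returns the minibatch sum of single-sample estimates of d(log bound)/d(theta).
Rbm1Grad boundGradient(const Mat& v, const Mat& h, const Mat& p, const Vec& g, const Mat& r) {
    assert(!v.empty() && h.size() == v.size() && p.size() == v.size()
           && g.size() == v.size() && r.size() == v.size());
    const std::size_t nv = v[0].size(), nh = h[0].size();
    Rbm1Grad out{Mat(nh, Vec(nv, 0.0)), Vec(nh, 0.0), Vec(nv, 0.0)};
    for (std::size_t n = 0; n < v.size(); ++n) {
        for (std::size_t i = 0; i < nh; ++i) {
            // positive coefficient p + (h - p) * g, i.e. delta_h in the code above
            const double d = p[n][i] + (h[n][i] - p[n][i]) * g[n];
            for (std::size_t j = 0; j < nv; ++j)
                out.dW[i][j] += d * v[n][j] - h[n][i] * r[n][j];
            out.dhb[i] += d - h[n][i];
        }
        for (std::size_t j = 0; j < nv; ++j)
            out.dvb[j] += v[n][j] - r[n][j];
    }
    return out;
}

// Gradient ascent on the bound: theta += lr * grad (what multiplyAcc does above).
void ascend(Mat& W, Vec& hb, Vec& vb, const Rbm1Grad& gr, double lr) {
    for (std::size_t i = 0; i < W.size(); ++i) {
        for (std::size_t j = 0; j < W[i].size(); ++j) W[i][j] += lr * gr.dW[i][j];
        hb[i] += lr * gr.dhb[i];
    }
    for (std::size_t j = 0; j < vb.size(); ++j) vb[j] += lr * gr.dvb[j];
}
```

With one example, v = (1, 0), h = (1), p = (0.5), g = 2 and r = (0.25, 0.75), the coefficient is 1.5, so dW = (1.25, -0.75), dhb = (0.5) and dvb = (0.75, -0.75).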

void PLearn::VBoundDBN2::build() [virtual]

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 112 of file VBoundDBN2.cc.

```cpp
{
    inherited::build();
    build_();
}
```
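A minimal standalone sketch of the build()/build_() convention described above, using hypothetical BaseModule and DerivedModule classes rather than PLearn's: each class keeps a private, non-virtual build_(), so the parent's build() always runs the parent's step and the derived build() adds its own step exactly once, however many times build() is called.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical base class; records which build steps ran.
struct BaseModule {
    std::vector<std::string> log;
    virtual ~BaseModule() = default;
    virtual void build() { build_(); }
private:
    void build_() { log.push_back("BaseModule::build_"); }  // non-virtual on purpose
};

// Hypothetical derived class following the same convention.
struct DerivedModule : BaseModule {
    typedef BaseModule inherited;
    void build() override {
        inherited::build();  // parent's build() runs the parent's build_()
        build_();            // then this class's own building step
    }
private:
    void build_() { log.push_back("DerivedModule::build_"); }
};
```

If build_() were virtual instead, the base build() would dispatch to the derived build_(), so the base step would be skipped and the derived step would run twice.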

void PLearn::VBoundDBN2::build_() [private]

This does the actual building.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 80 of file VBoundDBN2.cc.

```cpp
{
    if (random_gen)
    {
        if (rbm1 && !rbm1->random_gen)
        {
            rbm1->random_gen = random_gen;
            rbm1->build();
            rbm1->forget();
        }
        if (rbm2 && !rbm2->random_gen)
        {
            rbm2->random_gen = random_gen;
            rbm2->build();
            rbm2->forget();
        }
    }
    if (ports.length()==0)
    {
        ports.append("input");              // 0
        ports.append("bound");              // 1
        ports.append("nll");                // 2
        ports.append("sampled_h");          // 3
        ports.append("global_improvement"); // 4
        ports.append("ph_given_v");         // 5
        ports.append("p2ph");               // 6
    }
}
```
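build_() fixes the port order, and the other methods then address ports by position (ports_value[3] for sampled_h, sizes(6,1) for p2ph, and so on). A small standalone sketch, with hypothetical names that do not exist in PLearn, that gives those positions names and records the widths assigned in getPortSizes():

```cpp
#include <array>
#include <cassert>
#include <string>

// Hypothetical named indices, in the order build_() appends the ports.
enum VBoundPort {
    PORT_INPUT = 0, PORT_BOUND, PORT_NLL, PORT_SAMPLED_H,
    PORT_GLOBAL_IMPROVEMENT, PORT_PH_GIVEN_V, PORT_P2PH, N_PORTS
};

const std::array<std::string, N_PORTS> kPortNames = {
    "input", "bound", "nll", "sampled_h", "global_improvement", "ph_given_v", "p2ph"};

// Name of port k.
const std::string& portName(int k) {
    assert(0 <= k && k < N_PORTS);
    return kPortNames[k];
}

// Index of a named port, or -1 if the module has no such port.
int portIndex(const std::string& name) {
    for (int k = 0; k < N_PORTS; ++k)
        if (kPortNames[k] == name) return k;
    return -1;
}

// Port width as set in getPortSizes(), from the layer sizes of rbm1 and rbm2.
int portWidth(int k, int n_visible, int n_hidden1, int n_hidden2) {
    assert(0 <= k && k < N_PORTS);
    switch (k) {
        case PORT_INPUT: return n_visible;
        case PORT_SAMPLED_H:
        case PORT_PH_GIVEN_V: return n_hidden1;
        case PORT_P2PH: return n_hidden2;
        default: return 1;  // bound, nll, global_improvement: one value per example
    }
}
```

The length of every port stays -1 in getPortSizes(), since it depends on the minibatch size.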

string PLearn::VBoundDBN2::classname() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 52 of file VBoundDBN2.cc.

void PLearn::VBoundDBN2::declareOptions(OptionList & ol) [static, protected]

Declares the class options.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 64 of file VBoundDBN2.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), rbm1, and rbm2.

```cpp
{
    declareOption(ol, "rbm1", &VBoundDBN2::rbm1,
                  OptionBase::buildoption,
                  "First RBM, taking the DBN's input in its visible layer");
    declareOption(ol, "rbm2", &VBoundDBN2::rbm2,
                  OptionBase::buildoption,
                  "Second RBM, producing the DBN's output and generating internal representations.");
    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
```

static const PPath& PLearn::VBoundDBN2::declaringFile() [inline, static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 258 of file VBoundDBN2.h.


VBoundDBN2 * PLearn::VBoundDBN2::deepCopy(CopiesMap & copies) const [virtual]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file VBoundDBN2.cc.

void PLearn::VBoundDBN2::forget() [virtual]

Reset the parameters to the state they would be BEFORE starting training.

Note that this method is necessarily called from build().

Implements PLearn::OnlineLearningModule.

Definition at line 219 of file VBoundDBN2.cc.

void PLearn::VBoundDBN2::fprop(const TVec< Mat * > & ports_value)

Perform a fprop step.

Each Mat* pointer in the 'ports_value' vector can be one of:

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 231 of file VBoundDBN2.cc.

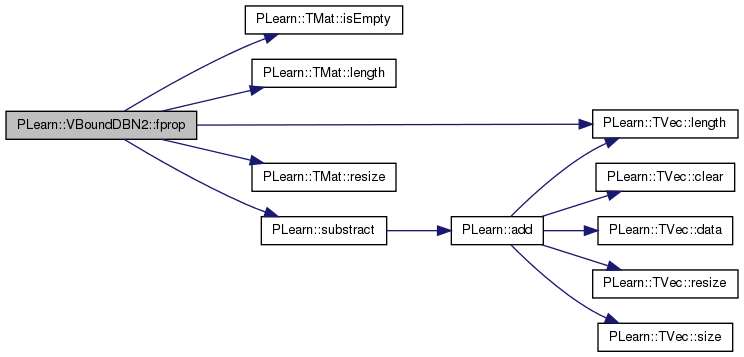

References c, i, PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), N, PLASSERT, PLearn::TMat< T >::resize(), and PLearn::substract().

```cpp
{
    PLASSERT( ports_value.length() == nPorts() );
    PLASSERT( rbm1 && rbm2);

    Mat* input = ports_value[0];
    Mat* bound = ports_value[1];
    Mat* nll = ports_value[2];
    Mat* sampled_h_ = ports_value[3]; // a state if input is given
    Mat* global_improvement_ = ports_value[4]; // a state if input is given
    Mat* ph_given_v_ = ports_value[5]; // a state if input is given
    Mat* p2ph_ = ports_value[6]; // same story

    // fprop has two modes:
    // 1) input is given (presumably for learning, or measuring bound or nll)
    // 2) input is not given and we want to generate one
    //    (only mode 1 is handled below; there is no generation branch)
    //
    // for learning or testing
    if (input && !input->isEmpty())
    {
        int mbs=input->length();
        FE1v.resize(mbs,1);
        FE1h.resize(mbs,1);
        FE2h.resize(mbs,1);
        // use the caller's state ports when provided, else the internal buffers
        Mat* sampled_h = sampled_h_?sampled_h_:&sampled_h_state;
        Mat* global_improvement = global_improvement_?global_improvement_:&global_improvement_state;
        Mat* ph_given_v = ph_given_v_?ph_given_v_:&ph_given_v_state;
        Mat* p2ph = p2ph_?p2ph_:&p2ph_state;
        sampled_h->resize(mbs,rbm1->hidden_layer->size);
        global_improvement->resize(mbs,1);
        ph_given_v->resize(mbs,rbm1->hidden_layer->size);

        // compute things needed for everything else
        rbm1->sampleHiddenGivenVisible(*input);
        *ph_given_v << rbm1->hidden_layer->getExpectations();
        *sampled_h << rbm1->hidden_layer->samples;
        rbm1->computeFreeEnergyOfVisible(*input,FE1v,false);
        rbm1->computeFreeEnergyOfHidden(*sampled_h,FE1h);
        rbm2->computeFreeEnergyOfVisible(*sampled_h,FE2h,false);
        p2ph->resize(mbs,rbm2->hidden_layer->size);
        *p2ph << rbm2->hidden_layer->getExpectations();
        substract(FE1h,FE2h,*global_improvement); // global_improvement = FE1(h) - FE2(h)

        if (bound) // actually minus the bound, to be in same units as nll, only computed exactly during test
        {
            PLASSERT(bound->isEmpty());
            bound->resize(mbs,1);
            if (rbm2->partition_function_is_stale && !during_training)
                rbm2->computePartitionFunction();
            *bound << FE1v;
            *bound -= *global_improvement;
            *bound += rbm2->log_partition_function;
        }
        if (nll) // exact -log P(input) = - log sum_h P2(h) P1(input|h)
        {
            PLASSERT( nll->isEmpty() );
            // enumerates all 2^n hidden configurations: only tractable for small hidden layers
            int n_h_configurations = 1 << rbm1->hidden_layer->size;
            if (all_h.length()!=n_h_configurations || all_h.width()!=rbm1->hidden_layer->size)
            {
                all_h.resize(n_h_configurations,rbm1->hidden_layer->size);
                for (int c=0;c<n_h_configurations;c++)
                {
                    int N=c;
                    for (int i=0;i<rbm1->hidden_layer->size;i++)
                    {
                        all_h(c,i) = N&1; // bit i of c
                        N >>= 1;
                    }
                }
            }
            // compute -log P2(h) for each possible h configuration
            if (rbm2->partition_function_is_stale && !during_training)
                rbm2->computePartitionFunction();
            neglogP2h.resize(n_h_configurations, 1);
            rbm2->computeFreeEnergyOfVisible(all_h, neglogP2h, false);
            neglogP2h += rbm2->log_partition_function;
            /*
            if (!during_training) {
                // Debug code to ensure probabilities sum to 1.
                real check = 0;
                real check2 = 0;
                for (int c = 0; c < n_h_configurations; c++) {
                    check2 += exp(- neglogP2h(c, 0));
                    if (c == 0)
                        check = - neglogP2h(c, 0);
                    else
                        check = logadd(check, - neglogP2h(c, 0));
                }
                pout << check << endl;
                pout << check2 << endl;
            }
            */
            rbm1->computeNegLogPVisibleGivenPHidden(*input,all_h,&neglogP2h,*nll);
        }
    }

    // Ensure all required ports have been computed.
    checkProp(ports_value);
}
```
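Because the nll port enumerates all 2^n hidden configurations, both the nll and bound ports can be cross-checked by brute force on toy models. The sketch below is a standalone illustration, not PLearn code: it assumes binomial units and a plain weight matrix (the case bpropAccUpdate asserts), uses a hypothetical ToyRBM struct with the standard binary-RBM free energies, and takes the expectation over P1(h|x) exactly rather than from the single sample fprop uses. Under those assumptions the negated bound should never fall below the exact -log P(x).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;

// Hypothetical toy binomial RBM (not PLearn's RBMModule): W[i][j] links
// hidden unit i to visible unit j, b = hidden biases, c = visible biases.
struct ToyRBM { Mat W; Vec b; Vec c; };

double softplus(double a) { return a > 0 ? a + std::log1p(std::exp(-a)) : std::log1p(std::exp(a)); }
double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// Numerically stable log(sum_k exp(a_k)); a must be non-empty.
double logSumExp(const Vec& a) {
    const double m = *std::max_element(a.begin(), a.end());
    double s = 0.0;
    for (double x : a) s += std::exp(x - m);
    return m + std::log(s);
}

// Every binary vector of length n; column i of row c is bit i of c (as all_h in fprop).
Mat allConfigs(int n) {
    assert(n >= 0 && n < 31);  // 1 << n must fit in an int
    Mat out(std::size_t(1) << n, Vec(n));
    for (int c = 0; c < (1 << n); ++c)
        for (int i = 0; i < n; ++i) out[c][i] = (c >> i) & 1;
    return out;
}

double hiddenAct(const ToyRBM& r, std::size_t i, const Vec& v) {  // b_i + sum_j W_ij v_j
    double a = r.b[i];
    for (std::size_t j = 0; j < v.size(); ++j) a += r.W[i][j] * v[j];
    return a;
}
double visibleAct(const ToyRBM& r, std::size_t j, const Vec& h) {  // c_j + sum_i W_ij h_i
    double a = r.c[j];
    for (std::size_t i = 0; i < h.size(); ++i) a += r.W[i][j] * h[i];
    return a;
}
// FE(v) = -c.v - sum_i softplus(b_i + W_i.v)
double freeEnergyOfVisible(const ToyRBM& r, const Vec& v) {
    double fe = 0.0;
    for (std::size_t j = 0; j < v.size(); ++j) fe -= r.c[j] * v[j];
    for (std::size_t i = 0; i < r.b.size(); ++i) fe -= softplus(hiddenAct(r, i, v));
    return fe;
}
// FE(h) = -b.h - sum_j softplus(c_j + sum_i W_ij h_i)
double freeEnergyOfHidden(const ToyRBM& r, const Vec& h) {
    double fe = 0.0;
    for (std::size_t i = 0; i < h.size(); ++i) fe -= r.b[i] * h[i];
    for (std::size_t j = 0; j < r.c.size(); ++j) fe -= softplus(visibleAct(r, j, h));
    return fe;
}
// log Z = log sum_v exp(-FE(v)), by brute force
double logPartition(const ToyRBM& r) {
    Vec t;
    for (const Vec& v : allConfigs(int(r.c.size()))) t.push_back(-freeEnergyOfVisible(r, v));
    return logSumExp(t);
}

// nll = -log sum_h P2(h) P1(x|h); negBound = FE1(x) - E_{P1(h|x)}[FE1(h) - FE2(h)] + log Z2,
// with the expectation computed exactly over all h instead of from one sample.
void nllAndNegBound(const ToyRBM& r1, const ToyRBM& r2, const Vec& x, double& nll, double& negBound) {
    const double logZ2 = logPartition(r2);
    Vec terms;
    double expectation = 0.0;
    for (const Vec& h : allConfigs(int(r1.b.size()))) {
        double logPxGivenH = 0.0, logPhGivenX = 0.0;
        for (std::size_t j = 0; j < x.size(); ++j) {
            const double s = sigmoid(visibleAct(r1, j, h));
            logPxGivenH += x[j] > 0.5 ? std::log(s) : std::log1p(-s);
        }
        for (std::size_t i = 0; i < h.size(); ++i) {
            const double p = sigmoid(hiddenAct(r1, i, x));
            logPhGivenX += h[i] > 0.5 ? std::log(p) : std::log1p(-p);
        }
        const double FE2h = freeEnergyOfVisible(r2, h);  // h sits in rbm2's visible layer
        terms.push_back(-FE2h - logZ2 + logPxGivenH);     // log P2(h) + log P1(x|h)
        expectation += std::exp(logPhGivenX) * (freeEnergyOfHidden(r1, h) - FE2h);
    }
    nll = -logSumExp(terms);
    negBound = freeEnergyOfVisible(r1, x) - expectation + logZ2;
}
```

Summing exp(-nll) over every input from allConfigs(n_visible) should give 1 up to rounding, a check in the same spirit as the commented-out debug block in fprop.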

OptionList & PLearn::VBoundDBN2::getOptionList() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 52 of file VBoundDBN2.cc.

OptionMap & PLearn::VBoundDBN2::getOptionMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 52 of file VBoundDBN2.cc.

const TVec< string > & PLearn::VBoundDBN2::getPorts() [virtual]

Return the list of ports in the module.

The default implementation (in OnlineLearningModule) returns a pair ("input", "output") to handle the most common case; this class instead returns the seven ports set up in build_().

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 344 of file VBoundDBN2.cc.

```cpp
{
    return ports;
}
```

const TMat< int > & PLearn::VBoundDBN2::getPortSizes() [virtual]

Return the size of all ports, in the form of a two-column matrix, where each row represents a port, and the two numbers on a row are respectively its length and its width (with -1 representing an undefined or variable value).

The default value fills this matrix with:

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 351 of file VBoundDBN2.cc.

References PLASSERT.

```cpp
{
    PLASSERT(rbm1 && rbm2);
    if (sizes.width()!=2)
    {
        sizes.resize(nPorts(),2);
        sizes.fill(-1);
        sizes(0,1)=rbm1->visible_layer->size; // input
        sizes(1,1)=1;                         // bound
        sizes(2,1)=1;                         // nll
        sizes(3,1)=rbm1->hidden_layer->size;  // sampled_h
        sizes(4,1)=1;                         // global_improvement
        sizes(5,1)=rbm1->hidden_layer->size;  // ph_given_v
        sizes(6,1)=rbm2->hidden_layer->size;  // p2ph
    }
    return sizes;
}
```

RemoteMethodMap & PLearn::VBoundDBN2::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 52 of file VBoundDBN2.cc.

void PLearn::VBoundDBN2::makeDeepCopyFromShallowCopy(CopiesMap & copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 371 of file VBoundDBN2.cc.

References PLearn::deepCopyField().

```cpp
{
    inherited::makeDeepCopyFromShallowCopy(copies);
    deepCopyField(rbm1, copies);
    deepCopyField(rbm2, copies);
    deepCopyField(FE1v, copies);
    deepCopyField(FE1h, copies);
    deepCopyField(FE2h, copies);
    deepCopyField(sampled_h_state, copies);
    deepCopyField(global_improvement_state, copies);
    deepCopyField(ph_given_v_state, copies);
    deepCopyField(p2ph_state, copies);
    deepCopyField(all_h, copies);
    deepCopyField(neglogP2h, copies);
    deepCopyField(ports, copies);
}
```
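Each deepCopyField() call receives the same copies map, presumably so that a sub-object reachable through several fields is copied once and stays shared in the copy. A minimal sketch of that idea with std::shared_ptr, using a hypothetical Layer type and a hypothetical copyOnce() helper; it is not PLearn's CopiesMap implementation.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Hypothetical stand-in for a sub-object such as an RBM layer.
struct Layer { double bias = 0.0; };

// Maps each original object to its copy, for the duration of one deep copy.
using LayerCopies = std::map<const Layer*, std::shared_ptr<Layer>>;

// Deep-copies p, reusing the earlier copy if p was already copied.
std::shared_ptr<Layer> copyOnce(const std::shared_ptr<Layer>& p, LayerCopies& copies) {
    if (!p) return nullptr;
    auto it = copies.find(p.get());
    if (it != copies.end()) return it->second;
    auto copy = std::make_shared<Layer>(*p);
    copies[p.get()] = copy;
    return copy;
}

struct Model {
    std::shared_ptr<Layer> a, b;  // may point to the same Layer
    Model deepCopy() const {
        LayerCopies copies;  // one map for the whole copy operation
        Model m;
        m.a = copyOnce(a, copies);
        m.b = copyOnce(b, copies);
        return m;
    }
};
```

If both fields of the original share one Layer, the copy's fields share one new Layer, and modifying it leaves the original untouched.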

StaticInitializer PLearn::VBoundDBN2::_static_initializer_ [static]

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 258 of file VBoundDBN2.h.

Mat PLearn::VBoundDBN2::all_h [private]

Definition at line 290 of file VBoundDBN2.h.

Mat PLearn::VBoundDBN2::FE1h [private]

Definition at line 284 of file VBoundDBN2.h.

Mat PLearn::VBoundDBN2::FE1v [private]

Definition at line 284 of file VBoundDBN2.h.

Mat PLearn::VBoundDBN2::FE2h [private]

Definition at line 284 of file VBoundDBN2.h.

Mat PLearn::VBoundDBN2::global_improvement_state [private]

Definition at line 286 of file VBoundDBN2.h.

Mat PLearn::VBoundDBN2::neglogP2h [private]

Definition at line 291 of file VBoundDBN2.h.

Mat PLearn::VBoundDBN2::neglogphsample_given_v [private]

Definition at line 289 of file VBoundDBN2.h.

Mat PLearn::VBoundDBN2::p2ph_state [private]

Definition at line 288 of file VBoundDBN2.h.

Mat PLearn::VBoundDBN2::ph_given_v_state [private]

Definition at line 287 of file VBoundDBN2.h.

TVec<string> PLearn::VBoundDBN2::ports [private]

Definition at line 292 of file VBoundDBN2.h.

PP<RBMModule> PLearn::VBoundDBN2::rbm1

First RBM, taking the DBN's input in its visible layer.

Definition at line 66 of file VBoundDBN2.h.

Referenced by declareOptions().

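PP<RBMModule> PLearn::VBoundDBN2::rbm2

Second RBM, producing the DBN's output and generating internal representations.

Definition at line 67 of file VBoundDBN2.h.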

Referenced by declareOptions().

Mat PLearn::VBoundDBN2::sampled_h_state [private]

Definition at line 285 of file VBoundDBN2.h.

TMat<int> PLearn::VBoundDBN2::sizes [private]

Definition at line 293 of file VBoundDBN2.h.

Generated by Doxygen 1.7.4.