PLearn 0.1

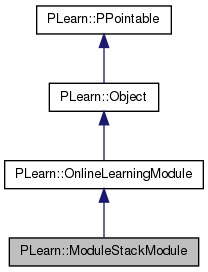

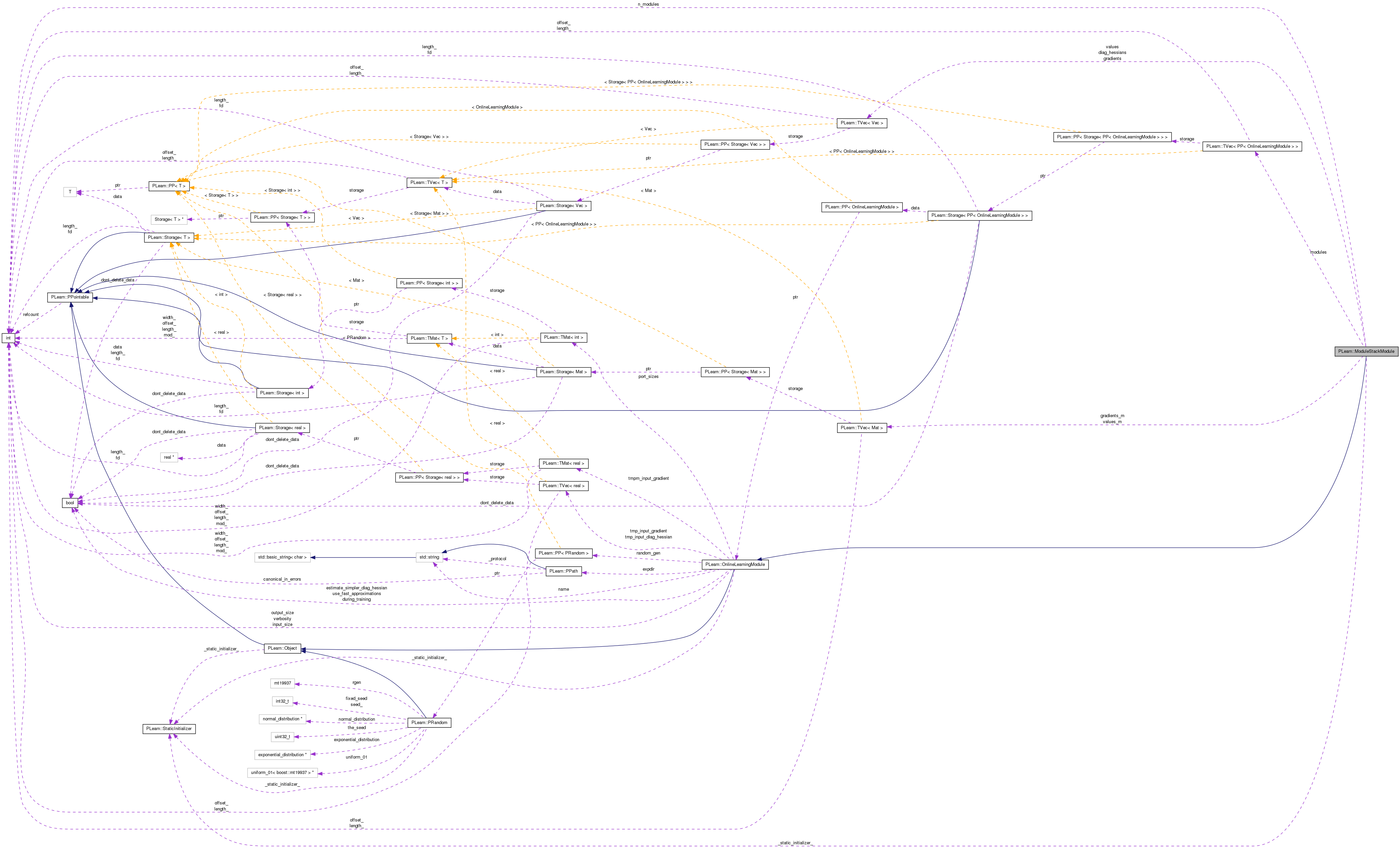

Wraps a stack of layered OnlineLearningModule objects into a single module.

#include <ModuleStackModule.h>

Public Member Functions | |

| ModuleStackModule () | |

| Default constructor. | |

| virtual void | fprop (const Vec &input, Vec &output) const |

| Given the input, compute the output (possibly resizing it appropriately). | |

| virtual void | fprop (const Mat &inputs, Mat &outputs) |

| Overridden. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false) |

| Adapt based on the output gradient, and obtain the input gradient. | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false) |

| SOON TO BE DEPRECATED, USE bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient) | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, const Vec &output_gradient) |

| This version does not obtain the input gradient. | |

| virtual void | bbpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, Vec &input_diag_hessian, const Vec &output_diag_hessian, bool accumulate=false) |

| Similar to bpropUpdate, but also adapts based on an estimate of the diagonal of the Hessian matrix, and propagates that estimate back. | |

| virtual void | bbpropUpdate (const Vec &input, const Vec &output, const Vec &output_gradient, const Vec &output_diag_hessian) |

| This version does not obtain the input gradient and diag_hessian. | |

| virtual void | forget () |

| Reset the parameters to the state they would be BEFORE starting training. | |

| virtual void | finalize () |

| Perform some processing after training, or after a series of fprop/bpropUpdate calls to prepare the model for truly out-of-sample operation. | |

| virtual bool | bpropDoesNothing () |

| In case bpropUpdate does not do anything, make it known. | |

| virtual void | setLearningRate (real dynamic_learning_rate) |

| If this class has a learning rate (or something close to it), set it. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual ModuleStackModule * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

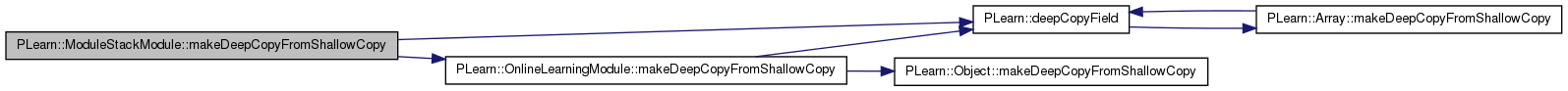

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| TVec< PP< OnlineLearningModule > > | modules |

| The underlying modules. | |

| int | n_modules |

| The number of modules. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef OnlineLearningModule | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Private Attributes | |

| TVec< Vec > | values |

| values[i] represents the value of the output of module i and the input of module i+1. | |

| TVec< Vec > | gradients |

| TVec< Vec > | diag_hessians |

| TVec< Mat > | values_m |

| Mini-batch versions. | |

| TVec< Mat > | gradients_m |

Wraps a stack of layered OnlineLearningModule objects into a single module.

The OnlineLearningModule objects are arranged as stacked layers: the outputs of module i are the inputs of module i+1, and the last layer is the output layer.

Definition at line 55 of file ModuleStackModule.h.
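As a rough illustration of the layering described above, the following self-contained sketch chains toy modules so that each module's output feeds the next one. The names `ToyModule`, `Scale`, `Shift`, and `ToyStack` are hypothetical stand-ins built on the standard library only; they are not PLearn classes, and `Vec` here is just an alias for `std::vector<double>`.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

using Vec = std::vector<double>;  // stand-in for PLearn's Vec (assumption)

// Hypothetical minimal module interface: maps an input vector to an output.
struct ToyModule {
    virtual ~ToyModule() = default;
    virtual void fprop(const Vec& input, Vec& output) const = 0;
};

// Multiplies every component by a constant factor.
struct Scale : ToyModule {
    double factor;
    explicit Scale(double f) : factor(f) {}
    void fprop(const Vec& input, Vec& output) const override {
        output.resize(input.size());
        for (std::size_t k = 0; k < input.size(); ++k)
            output[k] = factor * input[k];
    }
};

// Adds a constant offset to every component.
struct Shift : ToyModule {
    double offset;
    explicit Shift(double o) : offset(o) {}
    void fprop(const Vec& input, Vec& output) const override {
        output.resize(input.size());
        for (std::size_t k = 0; k < input.size(); ++k)
            output[k] = input[k] + offset;
    }
};

// Chains modules: the output of module i is the input of module i+1,
// and the output of the last module is the output of the whole stack.
struct ToyStack {
    std::vector<std::unique_ptr<ToyModule>> modules;

    Vec fprop(const Vec& input) const {
        assert(!modules.empty());
        Vec current = input;
        Vec next;
        for (const auto& m : modules) {
            m->fprop(current, next);
            current.swap(next);
        }
        return current;
    }
};
```

With a `Scale(2.0)` followed by a `Shift(1.0)`, calling `fprop` on `{1.0, 2.0}` yields `{3.0, 5.0}`. Unlike this sketch, the real class keeps the intermediate results in member buffers so that the backward pass can reuse them.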

typedef OnlineLearningModule PLearn::ModuleStackModule::inherited [private] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 57 of file ModuleStackModule.h.

| PLearn::ModuleStackModule::ModuleStackModule | ( | ) |

| string PLearn::ModuleStackModule::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file ModuleStackModule.cc.

| OptionList & PLearn::ModuleStackModule::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file ModuleStackModule.cc.

| RemoteMethodMap & PLearn::ModuleStackModule::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file ModuleStackModule.cc.

| bool PLearn::ModuleStackModule::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file ModuleStackModule.cc.

| Object * PLearn::ModuleStackModule::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 52 of file ModuleStackModule.cc.

| void PLearn::ModuleStackModule::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file ModuleStackModule.cc.

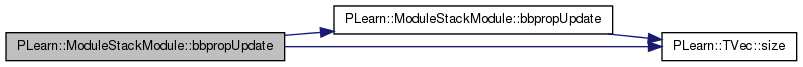

| void PLearn::ModuleStackModule::bbpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | output_gradient, | ||

| const Vec & | output_diag_hessian | ||

| ) | [virtual] |

This version does not obtain the input gradient and diag_hessian.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 299 of file ModuleStackModule.cc.

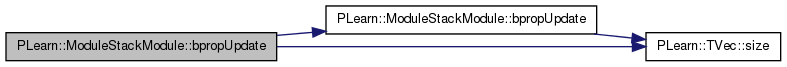

References bbpropUpdate(), diag_hessians, gradients, i, PLearn::OnlineLearningModule::input_size, modules, n_modules, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TVec< T >::size(), and values.

{

PLASSERT( n_modules > 0 );

PLASSERT( input.size() == input_size );

PLASSERT( output.size() == output_size );

PLASSERT( output_gradient.size() == output_size );

PLASSERT( output_diag_hessian.size() == output_size );

// bbpropUpdate should be called just after the corresponding fprop,

// so values should be up-to-date.

modules[n_modules-1]->bbpropUpdate( values[n_modules-2], output,

gradients[n_modules-2], output_gradient,

diag_hessians[n_modules-2],

output_diag_hessian );

for( int i=n_modules-2 ; i>0 ; i-- )

modules[i]->bbpropUpdate( values[i-1], values[i],

gradients[i-1], gradients[i],

diag_hessians[i-1], diag_hessians[i] );

modules[0]->bbpropUpdate( input, values[0],

gradients[0], diag_hessians[0] );

}

| void PLearn::ModuleStackModule::bbpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| Vec & | input_diag_hessian, | ||

| const Vec & | output_diag_hessian, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

Similar to bpropUpdate, but also adapts based on an estimate of the diagonal of the Hessian matrix, and propagates that estimate back.

If these methods are defined, you can use them INSTEAD of bpropUpdate(...)

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 260 of file ModuleStackModule.cc.

References diag_hessians, gradients, i, PLearn::OnlineLearningModule::input_size, modules, n_modules, PLearn::OnlineLearningModule::output_size, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::size(), and values.

Referenced by bbpropUpdate().

{

PLASSERT( n_modules > 0 );

PLASSERT( input.size() == input_size );

PLASSERT( output.size() == output_size );

PLASSERT( output_gradient.size() == output_size );

PLASSERT( output_diag_hessian.size() == output_size );

if( accumulate )

{

PLASSERT_MSG( input_gradient.size() == input_size,

"Cannot resize input_gradient AND accumulate into it" );

PLASSERT_MSG( input_diag_hessian.size() == input_size,

"Cannot resize input_diag_hessian AND accumulate into it"

);

}

// bbpropUpdate should be called just after the corresponding fprop,

// so values should be up-to-date.

modules[n_modules-1]->bbpropUpdate( values[n_modules-2], output,

gradients[n_modules-2], output_gradient,

diag_hessians[n_modules-2],

output_diag_hessian );

for( int i=n_modules-2 ; i>0 ; i-- )

modules[i]->bbpropUpdate( values[i-1], values[i],

gradients[i-1], gradients[i],

diag_hessians[i-1], diag_hessians[i] );

modules[0]->bbpropUpdate( input, values[0], input_gradient, gradients[0],

input_diag_hessian, diag_hessians[0],

accumulate );

}
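To make the idea of propagating a diagonal Hessian estimate more concrete, here is a minimal sketch for a single linear layer y = W x. It uses a common diagonal approximation in which off-diagonal second-derivative terms are dropped, so the input estimate is the sum over outputs of W[i][j] squared times the output estimate. This is an illustrative assumption, not necessarily what any particular PLearn module computes; `linearBbprop` and `Matrix` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;   // stand-in for PLearn's Vec (assumption)
using Matrix = std::vector<Vec>;   // row-major: W[i][j], output i, input j

// Back-propagates the gradient and an approximate diagonal Hessian
// through y = W x. Off-diagonal Hessian terms are ignored.
void linearBbprop(const Matrix& W,
                  const Vec& output_gradient,
                  const Vec& output_diag_hessian,
                  Vec& input_gradient,
                  Vec& input_diag_hessian)
{
    assert(!W.empty());
    assert(output_gradient.size() == W.size());
    assert(output_diag_hessian.size() == W.size());

    const std::size_t n_in = W[0].size();
    input_gradient.assign(n_in, 0.0);
    input_diag_hessian.assign(n_in, 0.0);

    for (std::size_t i = 0; i < W.size(); ++i) {
        assert(W[i].size() == n_in);
        for (std::size_t j = 0; j < n_in; ++j) {
            // First order: dC/dx_j = sum_i W_ij * dC/dy_i
            input_gradient[j] += W[i][j] * output_gradient[i];
            // Second order (diagonal only): sum_i W_ij^2 * d2C/dy_i^2
            input_diag_hessian[j] +=
                W[i][j] * W[i][j] * output_diag_hessian[i];
        }
    }
}
```

In a stack, the pair (`input_gradient`, `input_diag_hessian`) produced by one layer would play the role of (`gradients[i-1]`, `diag_hessians[i-1]`) for the layer below it.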

| bool PLearn::ModuleStackModule::bpropDoesNothing | ( | ) | [virtual] |

In case bpropUpdate does not do anything, make it known.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 360 of file ModuleStackModule.cc.

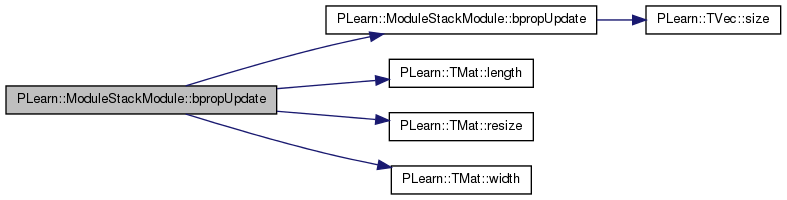

| void PLearn::ModuleStackModule::bpropUpdate | ( | const Mat & | inputs, |

| const Mat & | outputs, | ||

| Mat & | input_gradients, | ||

| const Mat & | output_gradients, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

SOON TO BE DEPRECATED, USE bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient)

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 204 of file ModuleStackModule.cc.

References bpropUpdate(), gradients_m, i, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), modules, n_modules, PLearn::OnlineLearningModule::output_size, PLASSERT, PLASSERT_MSG, PLearn::TMat< T >::resize(), values_m, and PLearn::TMat< T >::width().

{

PLASSERT( n_modules >= 2 );

PLASSERT( inputs.width() == input_size );

PLASSERT( outputs.width() == output_size );

PLASSERT( output_gradients.width() == output_size );

if( accumulate )

{

PLASSERT_MSG( input_gradients.width() == input_size &&

input_gradients.length() == inputs.length(),

"Cannot resize input_gradients and accumulate into it" );

} else

input_gradients.resize(inputs.length(), input_size);

// bpropUpdate should be called just after the corresponding fprop,

// so 'values_m' should be up-to-date.

modules[n_modules-1]->bpropUpdate( values_m[n_modules-2], outputs,

gradients_m[n_modules-2],

output_gradients );

for( int i=n_modules-2 ; i>0 ; i-- )

modules[i]->bpropUpdate( values_m[i-1], values_m[i],

gradients_m[i-1], gradients_m[i] );

modules[0]->bpropUpdate( inputs, values_m[0], input_gradients, gradients_m[0],

accumulate );

}
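The `accumulate` branch in the listing above either checks that `input_gradients` already has the right shape or resizes it. A minimal sketch of that contract, using a hypothetical helper `storeInputGradients` and plain nested vectors in place of `Mat`, might look like this:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Row = std::vector<double>;
using Batch = std::vector<Row>;  // one row per example (assumption)

// Writes `delta` into `input_gradients`, either overwriting or accumulating.
void storeInputGradients(const Batch& delta, Batch& input_gradients,
                         bool accumulate)
{
    if (!accumulate) {
        // Overwrite mode: the destination takes the shape of the new values.
        input_gradients = delta;
        return;
    }
    // Accumulate mode: the destination must already have the right shape,
    // because resizing it would discard or invent the values being added to.
    assert(input_gradients.size() == delta.size());
    for (std::size_t r = 0; r < delta.size(); ++r) {
        assert(input_gradients[r].size() == delta[r].size());
        for (std::size_t c = 0; c < delta[r].size(); ++c)
            input_gradients[r][c] += delta[r][c];
    }
}
```

This mirrors why the assertion message says the method cannot both resize and accumulate: accumulation only makes sense into a buffer whose dimensions already match the mini-batch.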

| void PLearn::ModuleStackModule::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

This version does not obtain the input gradient.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 236 of file ModuleStackModule.cc.

References bpropUpdate(), gradients, i, PLearn::OnlineLearningModule::input_size, modules, n_modules, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TVec< T >::size(), and values.

{

PLASSERT( n_modules > 0 );

PLASSERT( input.size() == input_size );

PLASSERT( output.size() == output_size );

PLASSERT( output_gradient.size() == output_size );

// bpropUpdate should be called just after the corresponding fprop,

// so values should be up-to-date.

modules[n_modules-1]->bpropUpdate( values[n_modules-2], output,

gradients[n_modules-2],

output_gradient );

for( int i=n_modules-2 ; i>0 ; i-- )

modules[i]->bpropUpdate( values[i-1], values[i],

gradients[i-1], gradients[i] );

modules[0]->bpropUpdate( input, values[0], gradients[0] );

}

| void PLearn::ModuleStackModule::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

Adapt based on the output gradient, and obtain the input gradient.

This method should only be called just after a corresponding fprop; it should be called with the same arguments as fprop for the first two arguments (and output should not have been modified since then). Since sub-classes are supposed to learn ONLINE, the object is 'ready-to-be-used' just after any bpropUpdate.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 174 of file ModuleStackModule.cc.

References gradients, i, PLearn::OnlineLearningModule::input_size, modules, n_modules, PLearn::OnlineLearningModule::output_size, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::size(), and values.

Referenced by bpropUpdate().

{

PLASSERT( n_modules >= 2 );

PLASSERT( input.size() == input_size );

PLASSERT( output.size() == output_size );

PLASSERT( output_gradient.size() == output_size );

if( accumulate )

{

PLASSERT_MSG( input_gradient.size() == input_size,

"Cannot resize input_gradient AND accumulate into it" );

}

// bpropUpdate should be called just after the corresponding fprop,

// so values should be up-to-date.

modules[n_modules-1]->bpropUpdate( values[n_modules-2], output,

gradients[n_modules-2],

output_gradient );

for( int i=n_modules-2 ; i>0 ; i-- )

modules[i]->bpropUpdate( values[i-1], values[i],

gradients[i-1], gradients[i] );

modules[0]->bpropUpdate( input, values[0], input_gradient, gradients[0],

accumulate );

}
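The backward walk in the listing above can be sketched in a self-contained form as follows. `ScaleLayer` and `ScaleStack` are hypothetical toy types (each layer multiplies its input by one scalar weight); only the buffer indexing is meant to resemble the listing, where `values[i]` holds the output of layer i and `gradients[i]` holds the gradient with respect to that output.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;  // stand-in for PLearn's Vec (assumption)

// Hypothetical layer computing y[k] = w * x[k], with w adapted online.
struct ScaleLayer {
    double w;
    double learning_rate;

    void fprop(const Vec& x, Vec& y) const {
        y.resize(x.size());
        for (std::size_t k = 0; k < x.size(); ++k) y[k] = w * x[k];
    }

    // Computes dC/dx from dC/dy, then takes one gradient step on w.
    void bpropUpdate(const Vec& x, const Vec& dy, Vec& dx) {
        assert(x.size() == dy.size());
        dx.resize(x.size());
        double dw = 0.0;
        for (std::size_t k = 0; k < x.size(); ++k) {
            dx[k] = w * dy[k];  // uses w as it was during fprop
            dw += x[k] * dy[k];
        }
        w -= learning_rate * dw;
    }
};

// Hypothetical stack that caches intermediate values during fprop.
struct ScaleStack {
    std::vector<ScaleLayer> layers;
    std::vector<Vec> values;     // values[i]: output of layer i, i < n-1
    std::vector<Vec> gradients;  // gradients[i]: dC/d(values[i])

    void fprop(const Vec& input, Vec& output) {
        const std::size_t n = layers.size();
        assert(n >= 2);
        values.resize(n - 1);
        layers[0].fprop(input, values[0]);
        for (std::size_t i = 1; i + 1 < n; ++i)
            layers[i].fprop(values[i - 1], values[i]);
        layers[n - 1].fprop(values[n - 2], output);
    }

    // Must follow the fprop call made with the same input.
    void bpropUpdate(const Vec& input, const Vec& output_gradient,
                     Vec& input_gradient) {
        const std::size_t n = layers.size();
        assert(n >= 2 && values.size() == n - 1);
        gradients.resize(n - 1);
        layers[n - 1].bpropUpdate(values[n - 2], output_gradient,
                                  gradients[n - 2]);
        for (std::size_t i = n - 2; i > 0; --i)
            layers[i].bpropUpdate(values[i - 1], gradients[i],
                                  gradients[i - 1]);
        layers[0].bpropUpdate(input, gradients[0], input_gradient);
    }
};
```

With two layers of weights 2 and 3 and a zero learning rate, an output gradient of `{1, 1}` comes back as an input gradient of `{6, 6}`. The sketch also shows why `bpropUpdate` has to follow the matching `fprop`: it reads the cached `values` rather than recomputing them.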

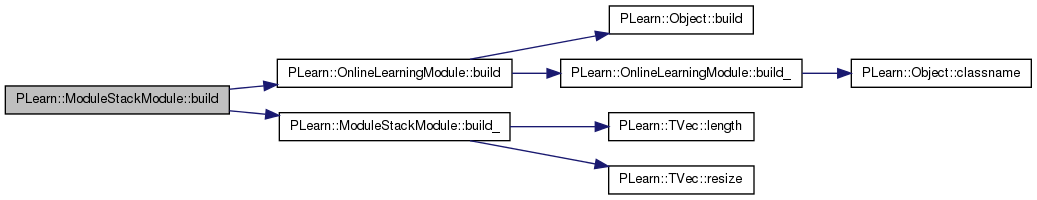

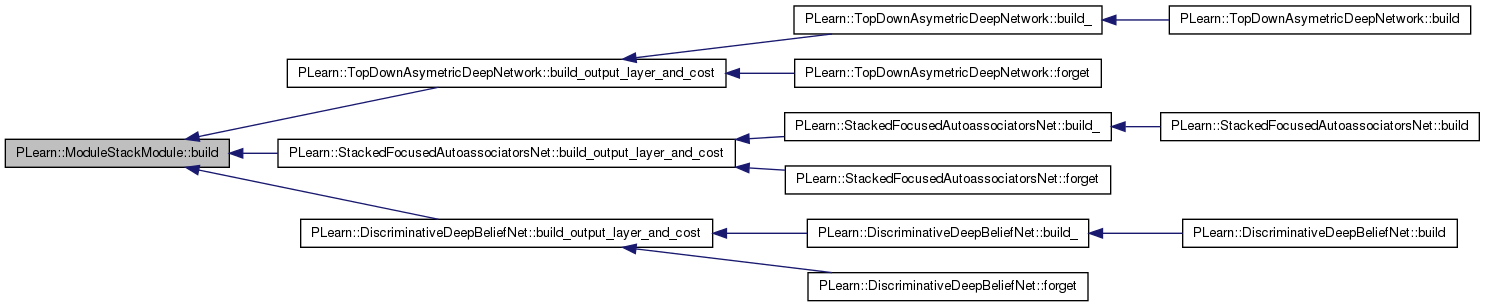

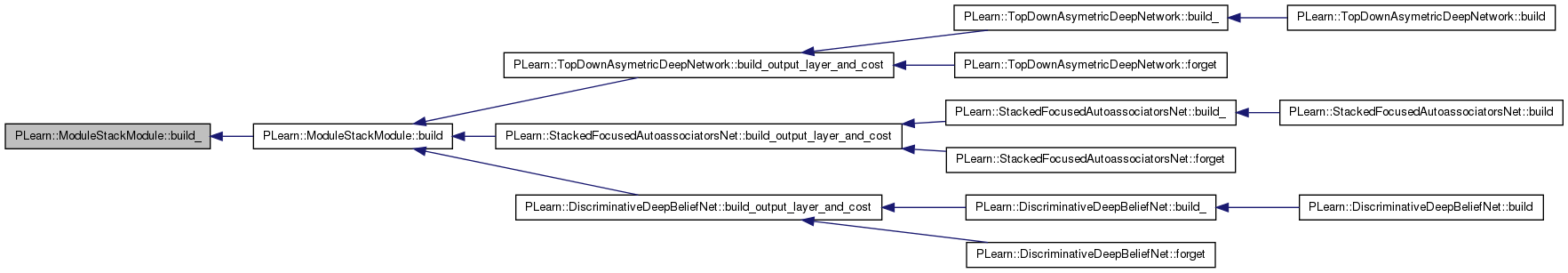

| void PLearn::ModuleStackModule::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 124 of file ModuleStackModule.cc.

References PLearn::OnlineLearningModule::build(), and build_().

Referenced by PLearn::TopDownAsymetricDeepNetwork::build_output_layer_and_cost(), PLearn::StackedFocusedAutoassociatorsNet::build_output_layer_and_cost(), and PLearn::DiscriminativeDeepBeliefNet::build_output_layer_and_cost().

{

inherited::build();

build_();

}

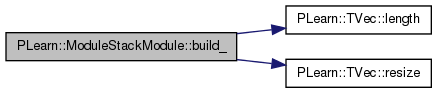

| void PLearn::ModuleStackModule::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 90 of file ModuleStackModule.cc.

References diag_hessians, gradients, gradients_m, i, PLearn::OnlineLearningModule::input_size, PLearn::TVec< T >::length(), modules, n_modules, PLearn::OnlineLearningModule::output_size, PLearn::OnlineLearningModule::random_gen, PLearn::TVec< T >::resize(), values, and values_m.

Referenced by build().

{

n_modules = modules.length();

if( n_modules > 0 )

{

values.resize( n_modules-1 );

gradients.resize( n_modules-1 );

diag_hessians.resize( n_modules-1 );

values_m.resize(n_modules - 1);

gradients_m.resize(n_modules - 1);

input_size = modules[0]->input_size;

output_size = modules[n_modules-1]->output_size;

}

else

{

input_size = -1;

output_size = -1;

}

// If we have a random_gen and some modules do not, share it with them

if( random_gen )

for( int i=0; i<n_modules; i++ )

if( !(modules[i]->random_gen) )

{

modules[i]->random_gen = random_gen;

modules[i]->forget();

}

}
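The sizing logic in the listing above can be summarized with a small stand-alone sketch. `LayerShape`, `StackShape`, `describeStack`, and `sizesChain` are hypothetical names introduced here for illustration. The consistency check in `sizesChain` is an extra that does not appear in the listing; it is included only to show what "output of module i is the input of module i+1" implies for sizes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical description of one layer's dimensions.
struct LayerShape {
    int input_size;
    int output_size;
};

// What the stack exposes after building.
struct StackShape {
    int input_size;         // -1 when the stack is empty
    int output_size;        // -1 when the stack is empty
    std::size_t n_buffers;  // number of intermediate value buffers
};

// Derives the stack's sizes from its first and last layers. A stack of
// n layers needs n-1 intermediate buffers, because the last layer writes
// straight into the caller's output.
StackShape describeStack(const std::vector<LayerShape>& layers)
{
    if (layers.empty())
        return StackShape{-1, -1, 0};
    return StackShape{layers.front().input_size,
                      layers.back().output_size,
                      layers.size() - 1};
}

// Extra check (not in the listing above): consecutive layers must agree.
bool sizesChain(const std::vector<LayerShape>& layers)
{
    for (std::size_t i = 0; i + 1 < layers.size(); ++i)
        if (layers[i].output_size != layers[i + 1].input_size)
            return false;
    return true;
}
```

For layers shaped 4→3 and 3→2, `describeStack` reports an input size of 4, an output size of 2, and one intermediate buffer.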

| string PLearn::ModuleStackModule::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 52 of file ModuleStackModule.cc.

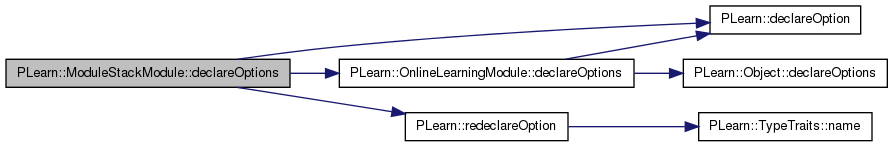

| void PLearn::ModuleStackModule::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 62 of file ModuleStackModule.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::OnlineLearningModule::declareOptions(), PLearn::OnlineLearningModule::input_size, PLearn::OptionBase::learntoption, modules, n_modules, PLearn::OptionBase::nosave, PLearn::OnlineLearningModule::output_size, and PLearn::redeclareOption().

{

declareOption(ol, "modules", &ModuleStackModule::modules,

OptionBase::buildoption,

"The underlying modules");

declareOption(ol, "n_modules", &ModuleStackModule::n_modules,

OptionBase::learntoption,

"The number of modules");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

// Hide unused options.

redeclareOption(ol, "input_size", &ModuleStackModule::input_size,

OptionBase::nosave,

"Set at build time.");

redeclareOption(ol, "output_size", &ModuleStackModule::output_size,

OptionBase::nosave,

"Set at build time.");

}

| static const PPath& PLearn::ModuleStackModule::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 142 of file ModuleStackModule.h.


| ModuleStackModule * PLearn::ModuleStackModule::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 52 of file ModuleStackModule.cc.

| void PLearn::ModuleStackModule::finalize | ( | ) | [virtual] |

Perform some processing after training, or after a series of fprop/bpropUpdate calls to prepare the model for truly out-of-sample operation.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 351 of file ModuleStackModule.cc.

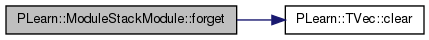

| void PLearn::ModuleStackModule::forget | ( | ) | [virtual] |

Reset the parameters to the state they would be BEFORE starting training.

Note that this method is necessarily called from build().

Implements PLearn::OnlineLearningModule.

Definition at line 328 of file ModuleStackModule.cc.

References PLearn::TVec< T >::clear(), diag_hessians, gradients, i, modules, n_modules, PLWARNING, PLearn::OnlineLearningModule::random_gen, and values.

{

values.clear();

gradients.clear();

diag_hessians.clear();

if( !random_gen )

{

PLWARNING("ModuleStackModule: cannot forget() without random_gen");

return;

}

for( int i=0 ; i<n_modules ; i++ )

{

// Ensure modules[i] can forget

if( !(modules[i]->random_gen) )

modules[i]->random_gen = random_gen;

modules[i]->forget();

}

}
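The generator-sharing pattern used by forget() (and by build_()) can be sketched with standard-library types. Here `Rng` is a `std::shared_ptr<std::mt19937>` standing in for `random_gen`, and `ToyLayer` and `ToyContainer` are hypothetical types; the early return plays the role of the PLWARNING branch in the listing above.

```cpp
#include <cassert>
#include <memory>
#include <random>
#include <vector>

using Rng = std::shared_ptr<std::mt19937>;  // stand-in for random_gen

// Hypothetical layer whose single weight is re-drawn on forget().
struct ToyLayer {
    Rng random_gen;  // may be null until the container shares one
    double weight = 0.0;

    void forget() {
        assert(random_gen);
        std::uniform_real_distribution<double> dist(-1.0, 1.0);
        weight = dist(*random_gen);
    }
};

// Hypothetical container that lends its generator to layers lacking one.
struct ToyContainer {
    Rng random_gen;
    std::vector<ToyLayer> layers;

    // Returns false, leaving the layers untouched, when the container
    // has no generator of its own.
    bool forget() {
        if (!random_gen)
            return false;
        for (auto& layer : layers) {
            if (!layer.random_gen)
                layer.random_gen = random_gen;  // shared, not copied
            layer.forget();
        }
        return true;
    }
};
```

Because the pointer is shared rather than copied, every layer draws from the same random stream, so a single seed on the container determines the whole re-initialization.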

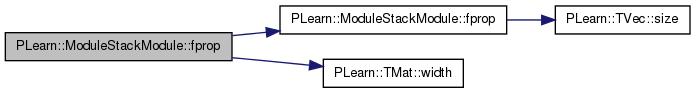

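| void PLearn::ModuleStackModule::fprop | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Given the input, compute the output (possibly resizing it appropriately).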

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 149 of file ModuleStackModule.cc.

References i, PLearn::OnlineLearningModule::input_size, modules, n_modules, PLASSERT, PLearn::TVec< T >::size(), and values.

Referenced by fprop().

{

PLASSERT( n_modules >= 2 );

PLASSERT( input.size() == input_size );

modules[0]->fprop( input, values[0] );

for( int i=1 ; i<n_modules-1 ; i++ )

modules[i]->fprop( values[i-1], values[i] );

modules[n_modules-1]->fprop( values[n_modules-2], output );

}

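| void PLearn::ModuleStackModule::fprop | ( | const Mat & | inputs, |

| Mat & | outputs | ||

| ) | [virtual] |

Overridden.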

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 160 of file ModuleStackModule.cc.

References fprop(), i, PLearn::OnlineLearningModule::input_size, modules, n_modules, PLASSERT, values_m, and PLearn::TMat< T >::width().

{

PLASSERT( n_modules >= 2 );

PLASSERT( inputs.width() == input_size );

modules[0]->fprop( inputs, values_m[0] );

for( int i=1 ; i<n_modules-1 ; i++ )

modules[i]->fprop( values_m[i-1], values_m[i] );

modules[n_modules-1]->fprop( values_m[n_modules-2], outputs );

}

| OptionList & PLearn::ModuleStackModule::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 52 of file ModuleStackModule.cc.

| OptionMap & PLearn::ModuleStackModule::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 52 of file ModuleStackModule.cc.

| RemoteMethodMap & PLearn::ModuleStackModule::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 52 of file ModuleStackModule.cc.

| void PLearn::ModuleStackModule::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 134 of file ModuleStackModule.cc.

References PLearn::deepCopyField(), diag_hessians, gradients, gradients_m, PLearn::OnlineLearningModule::makeDeepCopyFromShallowCopy(), modules, values, and values_m.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(modules, copies);

deepCopyField(values, copies);

deepCopyField(gradients, copies);

deepCopyField(diag_hessians, copies);

deepCopyField(values_m, copies);

deepCopyField(gradients_m, copies);

}

| void PLearn::ModuleStackModule::setLearningRate | ( | real | dynamic_learning_rate | ) | [virtual] |

If this class has a learning rate (or something close to it), set it.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 371 of file ModuleStackModule.cc.

StaticInitializer PLearn::ModuleStackModule::_static_initializer_ [static] |

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 142 of file ModuleStackModule.h.

TVec<Vec> PLearn::ModuleStackModule::diag_hessians [mutable, private] |

Definition at line 174 of file ModuleStackModule.h.

Referenced by bbpropUpdate(), build_(), forget(), and makeDeepCopyFromShallowCopy().

TVec<Vec> PLearn::ModuleStackModule::gradients [mutable, private] |

Definition at line 173 of file ModuleStackModule.h.

Referenced by bbpropUpdate(), bpropUpdate(), build_(), forget(), and makeDeepCopyFromShallowCopy().

TVec<Mat> PLearn::ModuleStackModule::gradients_m [private] |

Definition at line 178 of file ModuleStackModule.h.

Referenced by bpropUpdate(), build_(), and makeDeepCopyFromShallowCopy().

TVec< PP<OnlineLearningModule> > PLearn::ModuleStackModule::modules |

The underlying modules.

Definition at line 63 of file ModuleStackModule.h.

Referenced by bbpropUpdate(), bpropDoesNothing(), bpropUpdate(), build_(), PLearn::TopDownAsymetricDeepNetwork::build_output_layer_and_cost(), PLearn::StackedFocusedAutoassociatorsNet::build_output_layer_and_cost(), PLearn::DiscriminativeDeepBeliefNet::build_output_layer_and_cost(), declareOptions(), finalize(), forget(), fprop(), makeDeepCopyFromShallowCopy(), and setLearningRate().

int PLearn::ModuleStackModule::n_modules |

The number of modules.

Definition at line 66 of file ModuleStackModule.h.

Referenced by bbpropUpdate(), bpropDoesNothing(), bpropUpdate(), build_(), declareOptions(), finalize(), forget(), fprop(), and setLearningRate().

TVec<Vec> PLearn::ModuleStackModule::values [mutable, private] |

values[i] represents the value of the output of module i and the input of module i+1.

There is no need for values[n_modules-1] because it is the output. gradients[i] and diag_hessians[i] work the same way, and there is no need for gradients[-1] because it is input_gradient.

Definition at line 172 of file ModuleStackModule.h.

Referenced by bbpropUpdate(), bpropUpdate(), build_(), forget(), fprop(), and makeDeepCopyFromShallowCopy().

TVec<Mat> PLearn::ModuleStackModule::values_m [private] |

Mini-batch versions.

Definition at line 177 of file ModuleStackModule.h.

Referenced by bpropUpdate(), build_(), fprop(), and makeDeepCopyFromShallowCopy().

1.7.4
