
PLearn 0.1


The first sentence should be a BRIEF DESCRIPTION of what the class does.

#include <BasisSelectionRegressor.h>

Classes | |

| struct | thread_wawr |

Public Member Functions | |

| BasisSelectionRegressor () | |

| Default constructor. | |

| void | printModelFunction (PStream &out) const |

| virtual int | outputsize () const |

| Returns the size of this learner's output, (which typically may depend on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| virtual void | setTrainStatsCollector (PP< VecStatsCollector > statscol) |

| Simply forwards the stats collector to the sub-learner. | |

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

| virtual void | setTrainingSet (VMat training_set, bool call_forget=true) |

| Forwards the call to sub-learner. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual BasisSelectionRegressor * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual void | setExperimentDirectory (const PPath &the_expdir) |

| The experiment directory is the directory in which files related to this model are to be saved. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| bool | consider_constant_function |

| TVec< RealFunc > | explicit_functions |

| TVec< RealFunc > | explicit_interaction_functions |

| TVec< string > | explicit_interaction_variables |

| TVec< RealFunc > | mandatory_functions |

| bool | consider_raw_inputs |

| bool | consider_normalized_inputs |

| bool | consider_input_range_indicators |

| bool | fixed_min_range |

| real | indicator_desired_prob |

| real | indicator_min_prob |

| TVec< Ker > | kernels |

| Mat | kernel_centers |

| int | n_kernel_centers_to_pick |

| bool | consider_interaction_terms |

| int | max_interaction_terms |

| int | consider_n_best_for_interaction |

| int | interaction_max_order |

| bool | consider_sorted_encodings |

| int | max_n_vals_for_sorted_encodings |

| bool | normalize_features |

| PP< PLearner > | learner |

| bool | precompute_features |

| int | n_threads |

| int | thread_subtrain_length |

| bool | use_all_basis |

| TVec< RealFunc > | selected_functions |

| Vec | alphas |

| Mat | scores |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| PP< PLearner > | template_learner |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

| void | appendCandidateFunctionsOfSingleField (int fieldnum, TVec< RealFunc > &functions) const |

| void | appendKernelFunctions (TVec< RealFunc > &functions) const |

| void | appendConstantFunction (TVec< RealFunc > &functions) const |

| void | buildSimpleCandidateFunctions () |

| Fills the simple_candidate_functions. | |

| void | buildAllCandidateFunctions () |

| Builds candidate_functions. | |

| TVec< RealFunc > | buildTopCandidateFunctions () |

| Builds the list of the best-scoring candidate functions (taken from simple_candidate_functions only). | |

| void | findBestCandidateFunction (int &best_candidate_index, real &best_score) const |

| Returns the index of the best candidate function (most colinear with the residue) | |

| void | addInteractionFunction (RealFunc &f1, RealFunc &f2, TVec< RealFunc > &all_functions) |

| Adds an interaction term to all_functions as RealFunctionProduct(f1, f2) | |

| void | computeOrder (RealFunc &func, int &order) |

| Computes the order of the given function (the number of single-input functions embedded in it). | |

| void | computeWeightedAveragesWithResidue (const TVec< RealFunc > &functions, real &wsum, Vec &E_x, Vec &E_xx, real &E_y, real &E_yy, Vec &E_xy) const |

| void | appendFunctionToSelection (int candidate_index) |

| void | retrainLearner () |

| void | initTargetsResidueWeight () |

| void | recomputeFeatures () |

| void | recomputeResidue () |

| void | computeOutputFromFeaturevec (const Vec &featurevec, Vec &output) const |

Private Attributes | |

| TVec< RealFunc > | simple_candidate_functions |

| TVec< RealFunc > | candidate_functions |

| Mat | features |

| Vec | residue |

| Vec | targets |

| Vec | weights |

| double | residue_sum |

| double | residue_sum_sq |

| Vec | input |

| Vec | targ |

| Vec | featurevec |

The first sentence should be a BRIEF DESCRIPTION of what the class does.

Place the rest of the class programmer documentation here. Doxygen supports Javadoc-style comments. See http://www.doxygen.org/manual.html

Definition at line 60 of file BasisSelectionRegressor.h.

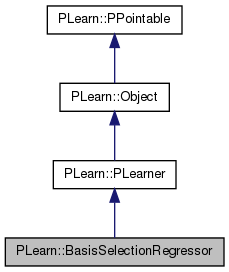

typedef PLearner PLearn::BasisSelectionRegressor::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 62 of file BasisSelectionRegressor.h.

| PLearn::BasisSelectionRegressor::BasisSelectionRegressor | ( | ) |

Default constructor.

Definition at line 67 of file BasisSelectionRegressor.cc.

```cpp
: consider_constant_function(false),
  consider_raw_inputs(true),
  consider_normalized_inputs(false),
  consider_input_range_indicators(false),
  fixed_min_range(false),
  indicator_desired_prob(0.05),
  indicator_min_prob(0.01),
  n_kernel_centers_to_pick(-1),
  consider_interaction_terms(false),
  max_interaction_terms(-1),
  consider_n_best_for_interaction(-1),
  interaction_max_order(-1),
  consider_sorted_encodings(false),
  max_n_vals_for_sorted_encodings(-1),
  normalize_features(false),
  precompute_features(true),
  n_threads(0),
  thread_subtrain_length(0),
  use_all_basis(false),
  residue_sum(0),
  residue_sum_sq(0)
{}
```

| string PLearn::BasisSelectionRegressor::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 65 of file BasisSelectionRegressor.cc.

| OptionList & PLearn::BasisSelectionRegressor::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 65 of file BasisSelectionRegressor.cc.

| RemoteMethodMap & PLearn::BasisSelectionRegressor::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 65 of file BasisSelectionRegressor.cc.

| bool PLearn::BasisSelectionRegressor::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 65 of file BasisSelectionRegressor.cc.

| Object * PLearn::BasisSelectionRegressor::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 65 of file BasisSelectionRegressor.cc.

| void PLearn::BasisSelectionRegressor::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 65 of file BasisSelectionRegressor.cc.

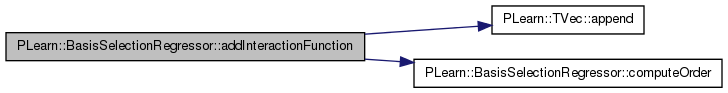

| void PLearn::BasisSelectionRegressor::addInteractionFunction | ( | RealFunc & | f1, |

| RealFunc & | f2, | ||

| TVec< RealFunc > & | all_functions | ||

| ) | [private] |

Adds an interaction term to all_functions as RealFunctionProduct(f1, f2)

Definition at line 618 of file BasisSelectionRegressor.cc.

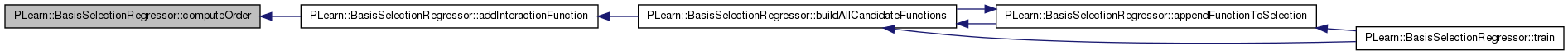

References PLearn::TVec< T >::append(), computeOrder(), and interaction_max_order.

Referenced by buildAllCandidateFunctions().

```cpp
{
    // Check that the combined order of f1 and f2 does not exceed "interaction_max_order"
    // Note that f2 should be a new function to be added (and thus an instance of RealFunctionOfInputFeature)
    if (interaction_max_order > 0)
    {
        int order = 0;
        computeOrder(f1, order);
        computeOrder(f2, order);
        if (order > interaction_max_order)
            return;
    }
    RealFunc f = new RealFunctionProduct(f1, f2);
    f->setInfo("(" + f1->getInfo() + "*" + f2->getInfo() + ")");
    all_functions.append(f);
}
```
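The interaction logic above can be illustrated outside PLearn. The following is a minimal sketch, assuming basis functions are modeled as `std::function` callables; `BasisFunc`, `inputFeature` and `addInteraction` are hypothetical names, not PLearn API. Unlike the real code, which recomputes the order by walking the product tree with computeOrder(), each function here caches its order, so the cap check is a simple sum.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical stand-in for a RealFunc: input vector -> real value.
struct BasisFunc {
    std::function<double(const std::vector<double>&)> eval;
    std::string info;  // label, like RealFunction::getInfo()
    int order;         // number of single-input factors in the product
};

// Single-input function returning input[field] (role of RealFunctionOfInputFeature).
BasisFunc inputFeature(int field, const std::string& name) {
    assert(field >= 0);
    return {[field](const std::vector<double>& x) { return x[field]; }, name, 1};
}

// Appends f1*f2 to all_functions unless max_order > 0 and the product's
// order would exceed it, mirroring the early return in addInteractionFunction().
void addInteraction(const BasisFunc& f1, const BasisFunc& f2, int max_order,
                    std::vector<BasisFunc>& all_functions) {
    const int order = f1.order + f2.order;
    if (max_order > 0 && order > max_order)
        return;
    BasisFunc prod;
    prod.eval = [e1 = f1.eval, e2 = f2.eval](const std::vector<double>& x) {
        return e1(x) * e2(x);
    };
    prod.info = "(" + f1.info + "*" + f2.info + ")";
    prod.order = order;
    all_functions.push_back(prod);
}
```

With `max_order` set to 2, `(a*b)` is accepted but `((a*b)*a)` is rejected; with 0 (no cap), both are kept.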

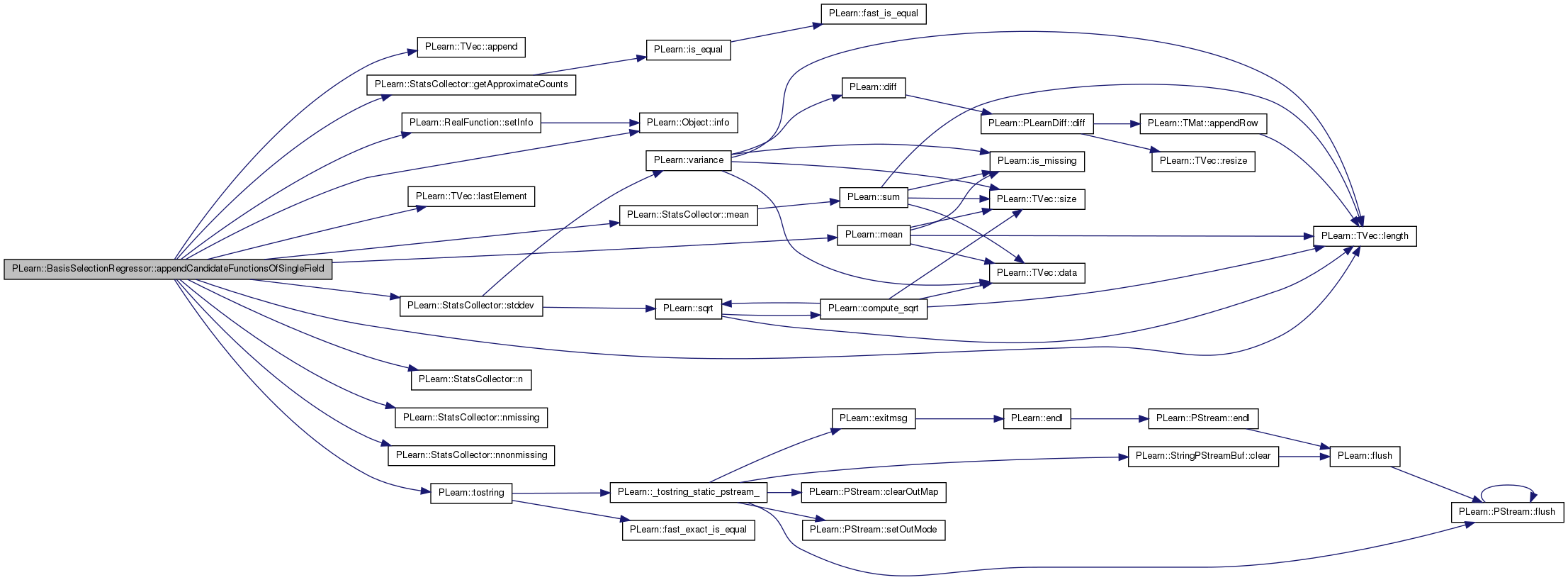

| void PLearn::BasisSelectionRegressor::appendCandidateFunctionsOfSingleField | ( | int | fieldnum, |

| TVec< RealFunc > & | functions | ||

| ) | const [private] |

Definition at line 335 of file BasisSelectionRegressor.cc.

References PLearn::TVec< T >::append(), consider_input_range_indicators, consider_normalized_inputs, consider_raw_inputs, PLearn::VMField::DiscrGeneral, PLearn::VMField::DiscrMonotonic, PLearn::VMField::fieldtype, fixed_min_range, PLearn::StatsCollector::getApproximateCounts(), PLearn::RealRange::high, indicator_desired_prob, indicator_min_prob, PLearn::Object::info(), PLearn::TVec< T >::lastElement(), PLearn::TVec< T >::length(), PLearn::RealRange::low, PLearn::mean(), PLearn::StatsCollector::mean(), MISSING_VALUE, PLearn::StatsCollector::n(), n, PLearn::VMField::name, PLearn::StatsCollector::nmissing(), PLearn::StatsCollector::nnonmissing(), PLearn::RealRangeIndicatorFunction::range, PLearn::RealFunction::setInfo(), PLearn::StatsCollector::stddev(), PLearn::tostring(), and PLearn::PLearner::train_set.

Referenced by buildAllCandidateFunctions(), and buildSimpleCandidateFunctions().

```cpp
{
    VMField field_info = train_set->getFieldInfos(fieldnum);
    string fieldname = field_info.name;
    VMField::FieldType fieldtype = field_info.fieldtype;
    StatsCollector& stats_collector = train_set->getStats(fieldnum);

    real n = stats_collector.n();
    real nmissing = stats_collector.nmissing();
    real nnonmissing = stats_collector.nnonmissing();
    real min_count = indicator_min_prob * n;
    real desired_count = indicator_desired_prob * n;

    // Raw inputs for non-discrete variables
    if (consider_raw_inputs && (fieldtype != VMField::DiscrGeneral))
    {
        RealFunc f = new RealFunctionOfInputFeature(fieldnum);
        f->setInfo(fieldname);
        functions.append(f);
    }

    // Normalized inputs for non-discrete variables
    if (consider_normalized_inputs && (fieldtype != VMField::DiscrGeneral))
    {
        if (nnonmissing > 0)
        {
            real mean = stats_collector.mean();
            real stddev = stats_collector.stddev();
            if (stddev > 1e-9)
            {
                RealFunc f = new ShiftAndRescaleFeatureRealFunction(fieldnum, -mean, 1./stddev, 0.);
                string info = fieldname + "-" + tostring(mean) + "/" + tostring(stddev);
                f->setInfo(info);
                functions.append(f);
            }
        }
    }

    if (consider_input_range_indicators)
    {
        const map<real,string>& smap = train_set->getRealToStringMapping(fieldnum);
        map<real,string>::const_iterator smap_it = smap.begin();
        map<real,string>::const_iterator smap_end = smap.end();
        map<real, StatsCollectorCounts>* counts = stats_collector.getApproximateCounts();
        map<real,StatsCollectorCounts>::const_iterator count_it = counts->begin();
        map<real,StatsCollectorCounts>::const_iterator count_end = counts->end();

        // Indicator function for mapped variables
        while (smap_it != smap_end)
        {
            RealFunc f = new RealValueIndicatorFunction(fieldnum, smap_it->first);
            string info = fieldname + "=" + smap_it->second;
            f->setInfo(info);
            functions.append(f);
            ++smap_it;
        }

        // Indicator function for discrete variables not using mapping
        if (fieldtype == VMField::DiscrGeneral || fieldtype == VMField::DiscrMonotonic)
        {
            while (count_it != count_end)
            {
                real val = count_it->first;
                // Make sure the variable doesn't use a mapping for this particular value
                bool mapped_value = false;
                smap_it = smap.begin();
                while (smap_it != smap_end)
                {
                    if (smap_it->first == val)
                    {
                        mapped_value = true;
                        break;
                    }
                    ++smap_it;
                }
                if (!mapped_value)
                {
                    RealFunc f = new RealValueIndicatorFunction(fieldnum, val);
                    string info = fieldname + "=" + tostring(val);
                    f->setInfo(info);
                    functions.append(f);
                }
                ++count_it;
            }
        }

        // If there are enough missing values, add an indicator function for them
        if (nmissing >= min_count && nnonmissing >= min_count)
        {
            RealFunc f = new RealValueIndicatorFunction(fieldnum, MISSING_VALUE);
            string info = fieldname + "=MISSING";
            f->setInfo(info);
            functions.append(f);
        }

        // For fieldtype DiscrGeneral, it stops here:
        // a RealRangeIndicatorFunction makes no sense for DiscrGeneral.
        if (fieldtype == VMField::DiscrGeneral) return;

        real cum_count = 0;
        real low = -FLT_MAX;
        real val = FLT_MAX;
        count_it = counts->begin();
        while (count_it != count_end)
        {
            val = count_it->first;
            cum_count += count_it->second.nbelow;
            bool in_smap = (smap.find(val) != smap_end);
            if ((cum_count >= desired_count || (in_smap && cum_count >= min_count))
                && (n - cum_count >= desired_count || (in_smap && n - cum_count >= min_count)))
            {
                RealRange range(']', low, val, '[');
                if (fixed_min_range) range.low = -FLT_MAX;
                RealFunc f = new RealRangeIndicatorFunction(fieldnum, range);
                string info = fieldname + "__" + tostring(range);
                f->setInfo(info);
                functions.append(f);
                cum_count = 0;
                low = val;
            }
            cum_count += count_it->second.n;
            if (in_smap)
            {
                cum_count = 0;
                low = val;
            }
            else if (cum_count >= desired_count && n - cum_count >= desired_count)
            {
                RealRange range(']', low, val, ']');
                if (fixed_min_range) range.low = -FLT_MAX;
                RealFunc f = new RealRangeIndicatorFunction(fieldnum, range);
                string info = fieldname + "__" + tostring(range);
                f->setInfo(info);
                functions.append(f);
                cum_count = 0;
                low = val;
            }
            ++count_it;
        }

        // last chunk
        if (cum_count > 0)
        {
            if (cum_count >= min_count && n - cum_count >= min_count)
            {
                RealRange range(']', low, val, ']');
                if (fixed_min_range) range.low = -FLT_MAX;
                RealFunc f = new RealRangeIndicatorFunction(fieldnum, range);
                string info = fieldname + "__" + tostring(range);
                f->setInfo(info);
                functions.append(f);
            }
            else if (functions.length() > 0) // possibly lump it together with last range
            {
                // The last appended function is not necessarily a range indicator
                // (it may be, e.g., the MISSING indicator), so check its type first.
                RealRangeIndicatorFunction* f =
                    dynamic_cast<RealRangeIndicatorFunction*>((RealFunction*)functions.lastElement());
                // Only extend a range whose upper bound is not a symbolic value
                if (f && smap.find(f->range.high) == smap_end)
                {
                    RealRange& range = f->range;
                    range.high = val; // OK, change the last range to include val
                    string info = fieldname + "__" + tostring(range);
                    f->setInfo(info);
                }
            }
        }
    }
}
```
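The range-indicator part of appendCandidateFunctionsOfSingleField is a greedy equal-frequency binning over the sorted distinct values of a field. The sketch below is a simplified illustration under stated assumptions: `buildRanges` and `Range` are hypothetical, `counts` stands in for the StatsCollector's approximate counts, and the per-value `nbelow` counts, symbolic (mapped) values and the ranges closed just before a value (`]low, val[`) are all ignored. It keeps the same cut test (`cum_count >= desired_count && n - cum_count >= desired_count`) and the same handling of a small last chunk.

```cpp
#include <cassert>
#include <cfloat>
#include <map>
#include <vector>

// A range ]low, high] of one input variable.
struct Range {
    double low, high;
};

// counts: distinct value -> number of examples with that value (sorted by key).
std::vector<Range> buildRanges(const std::map<double, double>& counts, double n,
                               double desired_prob, double min_prob) {
    assert(min_prob <= desired_prob);  // sanity check assumed by this sketch
    const double desired_count = desired_prob * n;
    const double min_count = min_prob * n;
    std::vector<Range> ranges;
    double cum_count = 0.0;
    double low = -FLT_MAX;
    double val = FLT_MAX;
    for (const auto& kv : counts) {
        val = kv.first;
        cum_count += kv.second;
        // Close ]low, val] once it is large enough (same test as in the code above).
        if (cum_count >= desired_count && n - cum_count >= desired_count) {
            ranges.push_back({low, val});
            cum_count = 0.0;
            low = val;
        }
    }
    // Last chunk: its own range if large enough, else lumped into the previous range.
    if (cum_count > 0.0) {
        if (cum_count >= min_count && n - cum_count >= min_count)
            ranges.push_back({low, val});
        else if (!ranges.empty())
            ranges.back().high = val;
    }
    return ranges;
}
```

For ten distinct values seen once each, `desired_prob = 0.2` yields five ranges of two values each; a leftover chunk below `min_prob * n` is merged into the preceding range instead of forming its own.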

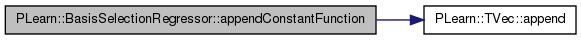

| void PLearn::BasisSelectionRegressor::appendConstantFunction | ( | TVec< RealFunc > & | functions | ) | const [private] |

Definition at line 531 of file BasisSelectionRegressor.cc.

References PLearn::TVec< T >::append().

Referenced by buildSimpleCandidateFunctions().

```cpp
{
    functions.append(new ConstantRealFunction());
}
```

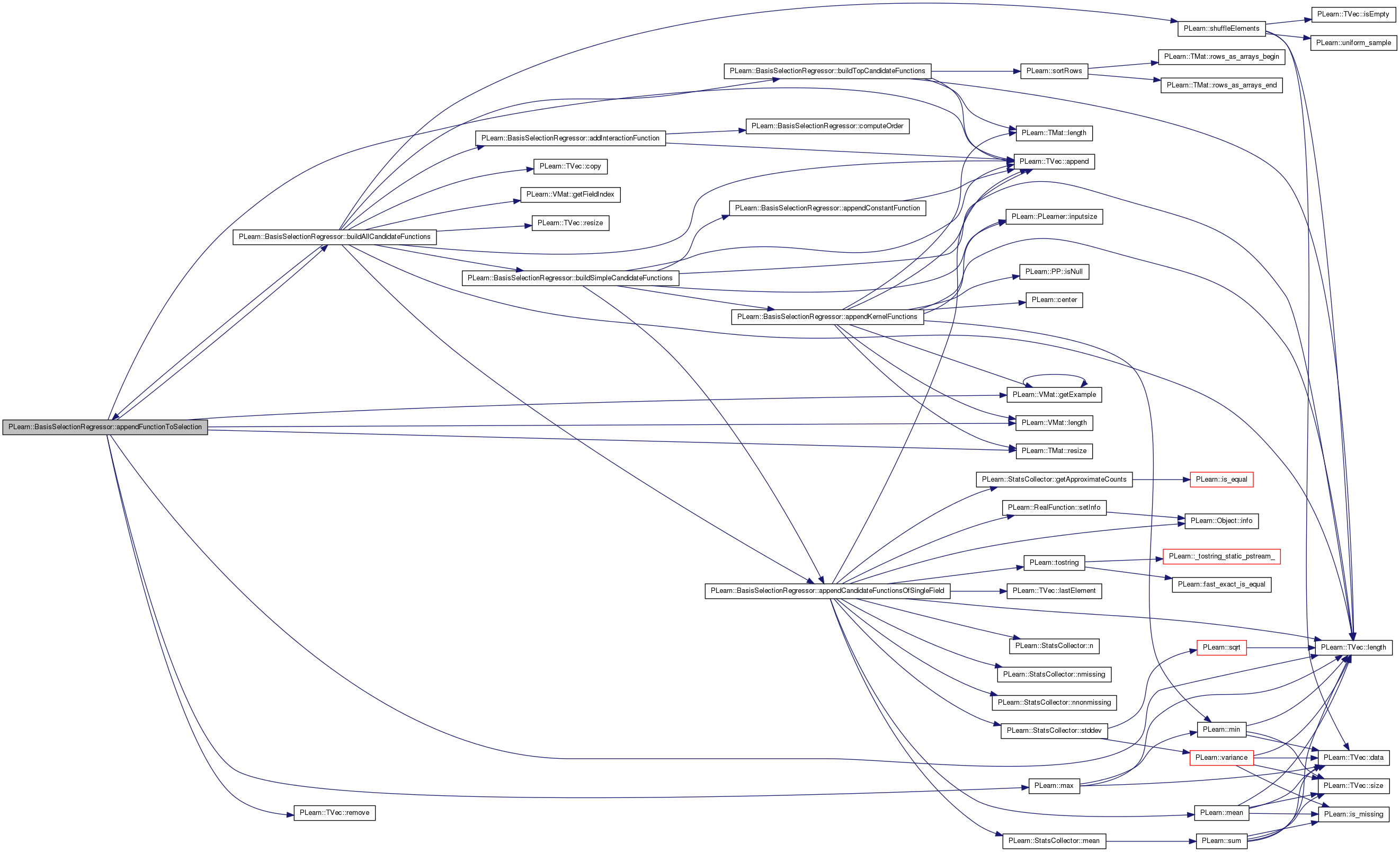

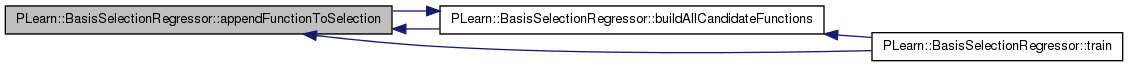

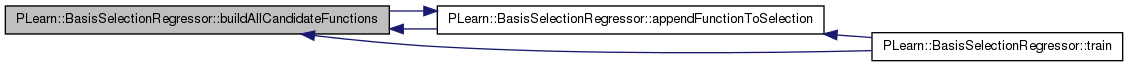

| void PLearn::BasisSelectionRegressor::appendFunctionToSelection | ( | int | candidate_index | ) | [private] |

Definition at line 1062 of file BasisSelectionRegressor.cc.

References PLearn::TVec< T >::append(), buildAllCandidateFunctions(), candidate_functions, consider_interaction_terms, features, PLearn::VMat::getExample(), i, input, PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::max(), precompute_features, PLearn::TVec< T >::remove(), PLearn::TMat< T >::resize(), selected_functions, targ, and PLearn::PLearner::train_set.

Referenced by buildAllCandidateFunctions(), and train().

```cpp
{
    RealFunc f = candidate_functions[candidate_index];
    if(precompute_features)
    {
        int l = train_set->length();
        int nf = selected_functions.length();
        features.resize(l,nf+1, max(1,static_cast<int>(0.25*l*nf)),true); // enlarge width while preserving content
        real weight;
        for(int i=0; i<l; i++)
        {
            train_set->getExample(i,input,targ,weight);
            features(i,nf) = f->evaluate(input);
        }
    }
    selected_functions.append(f);
    if(!consider_interaction_terms)
        candidate_functions.remove(candidate_index);
    else
        buildAllCandidateFunctions();
}
```
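When precompute_features is true, selecting a function amounts to adding one column to the cached feature matrix. A minimal sketch of that step with a plain row-major `std::vector<double>`; `FeatureMatrix` and `appendFeatureColumn` are hypothetical names. One design difference: the `TMat::resize` call above reserves extra capacity (`0.25*l*nf`) so repeated appends are amortized, whereas this sketch reallocates and copies on every call for simplicity.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Dense row-major matrix of precomputed feature values (rows x cols).
struct FeatureMatrix {
    std::size_t rows = 0, cols = 0;
    std::vector<double> data;
    double at(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// Appends a column holding f(x_i) for every training input x_i, keeping the
// existing columns (what resize(l, nf+1, ..., true) followed by
// features(i,nf) = f->evaluate(input) achieves above).
void appendFeatureColumn(FeatureMatrix& m, const std::vector<std::vector<double>>& inputs,
                         const std::function<double(const std::vector<double>&)>& f) {
    assert(m.cols == 0 || m.rows == inputs.size());
    const std::size_t l = inputs.size();
    const std::size_t nf = m.cols;
    std::vector<double> grown(l * (nf + 1));
    for (std::size_t i = 0; i < l; ++i) {
        for (std::size_t j = 0; j < nf; ++j)
            grown[i * (nf + 1) + j] = m.data[i * nf + j];  // copy the old row
        grown[i * (nf + 1) + nf] = f(inputs[i]);            // evaluate the new feature
    }
    m.data.swap(grown);
    m.rows = l;
    m.cols = nf + 1;
}
```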

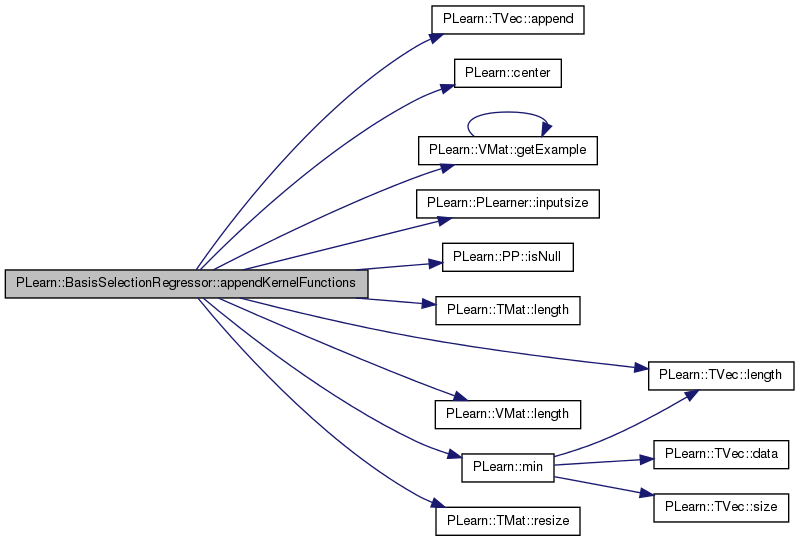

| void PLearn::BasisSelectionRegressor::appendKernelFunctions | ( | TVec< RealFunc > & | functions | ) | const [private] |

Definition at line 504 of file BasisSelectionRegressor.cc.

References PLearn::TVec< T >::append(), PLearn::center(), PLearn::VMat::getExample(), i, input, PLearn::PLearner::inputsize(), PLearn::PP< T >::isNull(), kernel_centers, kernels, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::min(), n_kernel_centers_to_pick, PLearn::PLearner::random_gen, PLearn::TMat< T >::resize(), PLearn::PLearner::seed_, targ, and PLearn::PLearner::train_set.

Referenced by buildSimpleCandidateFunctions().

```cpp
{
    if(kernel_centers.length()<=0 && n_kernel_centers_to_pick>=0)
    {
        int nc = n_kernel_centers_to_pick;
        kernel_centers.resize(nc, inputsize());
        real weight;
        int l = train_set->length();
        if(random_gen.isNull())
            random_gen = new PRandom();
        random_gen->manual_seed(seed_);
        for(int i=0; i<nc; i++)
        {
            // `input` refers to row i of kernel_centers, which getExample() fills in place
            // (this local deliberately shadows the member `input`)
            Vec input = kernel_centers(i);
            int rowpos = min(int(l*random_gen->uniform_sample()),l-1);
            train_set->getExample(rowpos, input, targ, weight);
        }
    }
    for(int i=0; i<kernel_centers.length(); i++)
    {
        Vec center = kernel_centers(i);
        for(int k=0; k<kernels.length(); k++)
            functions.append(new RealFunctionFromKernel(kernels[k],center));
    }
}
```
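A sketch of the two steps in appendKernelFunctions: drawing `n_kernel_centers_to_pick` training rows uniformly at random (with replacement) as centers, then creating one basis function per (kernel, center) pair. Assumptions: `std::mt19937` with a fixed seed stands in for PLearn's `PRandom` seeded with `seed_`, a single Gaussian kernel with bandwidth `sigma` stands in for the `kernels` option, and `pickKernelCenters` and `gaussianBasis` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <random>
#include <vector>

using Vec = std::vector<double>;

// Picks nc training rows uniformly at random (with replacement) as centers.
std::vector<Vec> pickKernelCenters(const std::vector<Vec>& train_inputs, int nc, unsigned seed) {
    assert(!train_inputs.empty());
    std::mt19937 gen(seed);  // reseeded on every call, like manual_seed(seed_)
    std::uniform_real_distribution<double> uniform(0.0, 1.0);
    const int l = static_cast<int>(train_inputs.size());
    std::vector<Vec> centers;
    for (int i = 0; i < nc; ++i) {
        // Same clamp as above, in case l * u rounds up to l.
        int rowpos = std::min(static_cast<int>(l * uniform(gen)), l - 1);
        centers.push_back(train_inputs[rowpos]);
    }
    return centers;
}

// One Gaussian basis function exp(-||x - c||^2 / (2 sigma^2)) per center.
std::vector<std::function<double(const Vec&)>> gaussianBasis(const std::vector<Vec>& centers,
                                                             double sigma) {
    std::vector<std::function<double(const Vec&)>> functions;
    for (const Vec& c : centers) {
        functions.push_back([c, sigma](const Vec& x) {
            double d2 = 0.0;
            for (std::size_t j = 0; j < x.size(); ++j)
                d2 += (x[j] - c[j]) * (x[j] - c[j]);
            return std::exp(-d2 / (2.0 * sigma * sigma));
        });
    }
    return functions;
}
```

Because the generator is reseeded on every call, the same seed always picks the same centers.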

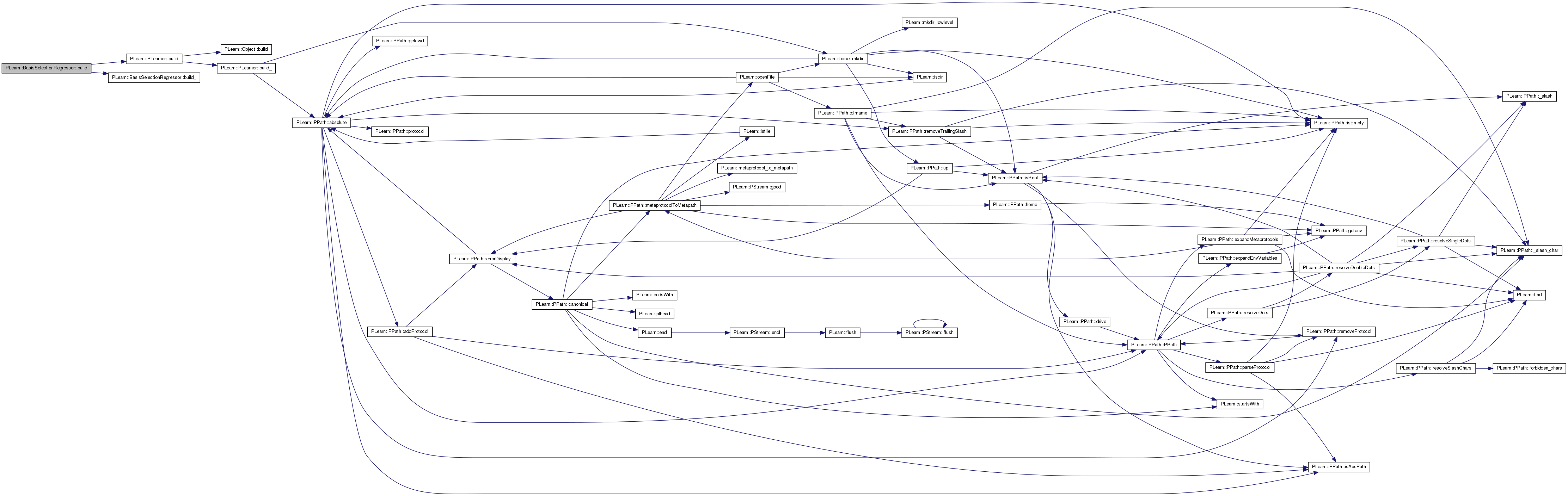

| void PLearn::BasisSelectionRegressor::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 276 of file BasisSelectionRegressor.cc.

References PLearn::PLearner::build(), and build_().

```cpp
{
    inherited::build();
    build_();
}
```

| void PLearn::BasisSelectionRegressor::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 258 of file BasisSelectionRegressor.cc.

References consider_interaction_terms, PLearn::PLearner::nstages, PLASSERT_MSG, and use_all_basis.

Referenced by build().

```cpp
{
    if (use_all_basis)
    {
        PLASSERT_MSG(nstages == 1, "\"nstages\" must be 1 when \"use_all_basis\" is true");
        PLASSERT_MSG(!consider_interaction_terms, "\"consider_interaction_terms\" must be false when \"use_all_basis\" is true");
    }
}
```

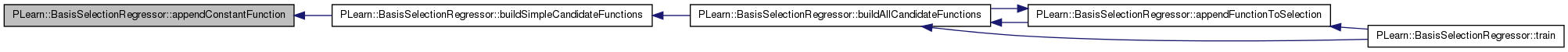

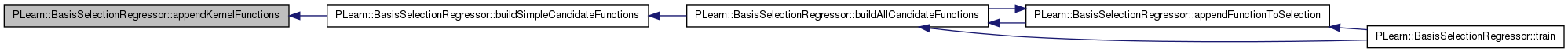

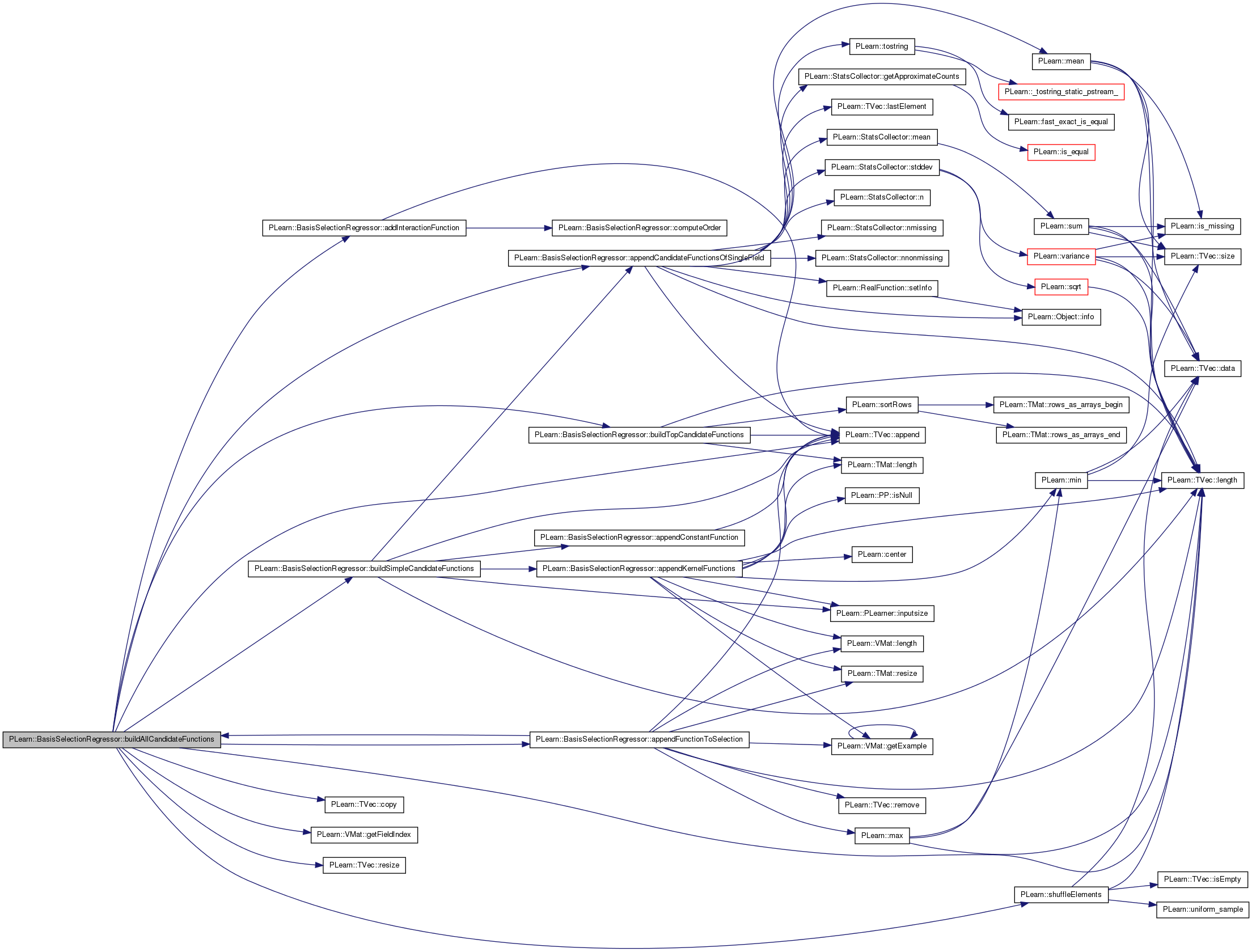

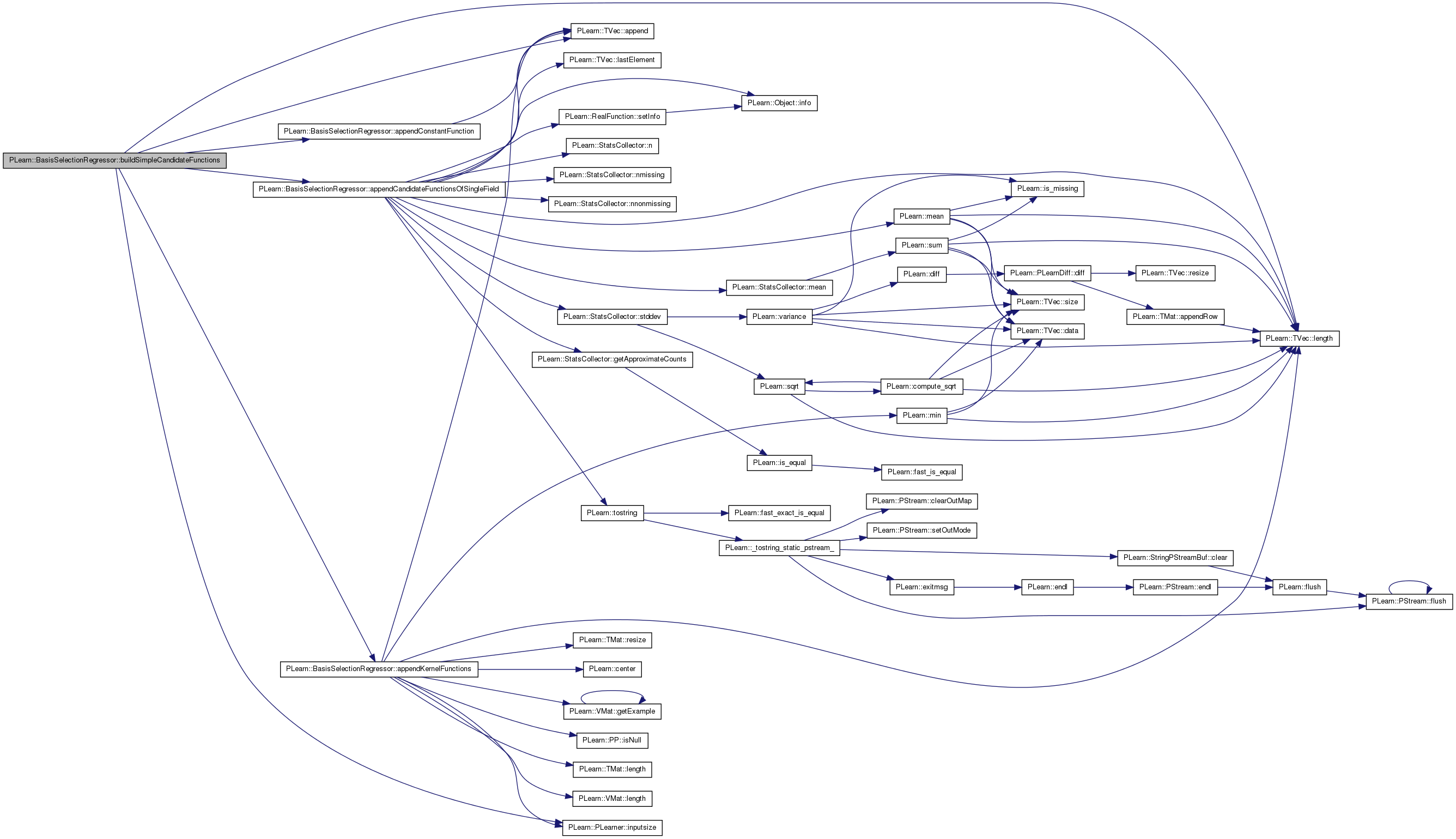

| void PLearn::BasisSelectionRegressor::buildAllCandidateFunctions | ( | ) | [private] |

Builds candidate_functions.

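If consider_interaction_terms is false, candidate_functions is the same as simple_candidate_functions. If consider_interaction_terms is true, candidate_functions additionally includes products between simple_candidate_functions (or only the consider_n_best_for_interaction best of them) and selected_functions. In both cases, products between simple_candidate_functions and the functions built from explicit_interaction_variables are added, and when max_interaction_terms is positive, at most that many interaction terms are kept, chosen at random.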

Definition at line 551 of file BasisSelectionRegressor.cc.

References addInteractionFunction(), PLearn::TVec< T >::append(), appendCandidateFunctionsOfSingleField(), appendFunctionToSelection(), buildSimpleCandidateFunctions(), buildTopCandidateFunctions(), candidate_functions, consider_constant_function, consider_interaction_terms, consider_n_best_for_interaction, PLearn::TVec< T >::copy(), explicit_interaction_functions, explicit_interaction_variables, PLearn::VMat::getFieldIndex(), j, PLearn::TVec< T >::length(), mandatory_functions, max_interaction_terms, PLearn::TVec< T >::resize(), selected_functions, PLearn::shuffleElements(), simple_candidate_functions, PLearn::PLearner::train_set, and use_all_basis.

Referenced by appendFunctionToSelection(), and train().

```cpp
{
    if(selected_functions.length()==0)
    {
        candidate_functions= mandatory_functions.copy();
        while(candidate_functions.length() > 0)
            appendFunctionToSelection(0);
    }

    if(simple_candidate_functions.length()==0)
        buildSimpleCandidateFunctions();

    candidate_functions = simple_candidate_functions.copy();
    TVec<RealFunc> interaction_candidate_functions;
    int candidate_start = consider_constant_function ? 1 : 0; // skip bias
    int ncandidates = candidate_functions.length();
    int nselected = selected_functions.length();

    if (nselected > 0 && consider_interaction_terms)
    {
        TVec<RealFunc> top_candidate_functions = simple_candidate_functions.copy();
        int start = candidate_start;
        if (consider_n_best_for_interaction > 0 && ncandidates > consider_n_best_for_interaction)
        {
            top_candidate_functions = buildTopCandidateFunctions();
            start = 0;
        }
        for (int k=0; k<nselected; k++)
        {
            for (int j=start; j<top_candidate_functions.length(); j++)
            {
                addInteractionFunction(selected_functions[k], top_candidate_functions[j], interaction_candidate_functions);
            }
        }
    }

    // Build explicit_interaction_functions from explicit_interaction_variables
    explicit_interaction_functions.resize(0);
    for(int k=0; k<explicit_interaction_variables.length(); ++k)
        appendCandidateFunctionsOfSingleField(train_set->getFieldIndex(explicit_interaction_variables[k]), explicit_interaction_functions);

    // Add interaction_candidate_functions from explicit_interaction_functions
    for(int k= 0; k < explicit_interaction_functions.length(); ++k)
    {
        for(int j=candidate_start; j<ncandidates; ++j)
        {
            addInteractionFunction(explicit_interaction_functions[k], simple_candidate_functions[j], interaction_candidate_functions);
        }
    }

    // If too many interaction_candidate_functions, we choose them at random
    if(max_interaction_terms > 0 && interaction_candidate_functions.length() > max_interaction_terms)
    {
        shuffleElements(interaction_candidate_functions);
        interaction_candidate_functions.resize(max_interaction_terms);
    }

    candidate_functions.append(interaction_candidate_functions);

    // If use_all_basis, append all candidate_functions to selected_functions
    if (use_all_basis)
    {
        while (candidate_functions.length() > 0)
            appendFunctionToSelection(0);
    }
}
```
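When there are more than max_interaction_terms interaction candidates, buildAllCandidateFunctions keeps a random subset by shuffling and truncating. A minimal sketch of that step, assuming `std::shuffle` with a seeded `std::mt19937` as a substitute for PLearn's `shuffleElements()`; `capInteractionCandidates` is a hypothetical name, and candidates are represented by their info strings only.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <string>
#include <vector>

// Keeps at most max_terms interaction candidates, chosen uniformly at random
// by shuffling and truncating. max_terms <= 0 means "no cap", as with
// max_interaction_terms.
void capInteractionCandidates(std::vector<std::string>& candidates, int max_terms, unsigned seed) {
    if (max_terms <= 0 || candidates.size() <= static_cast<std::size_t>(max_terms))
        return;
    std::mt19937 gen(seed);
    std::shuffle(candidates.begin(), candidates.end(), gen);  // stands in for shuffleElements()
    candidates.resize(static_cast<std::size_t>(max_terms));
    assert(candidates.size() == static_cast<std::size_t>(max_terms));
}
```

Because only the first `max_terms` positions matter, a partial Fisher-Yates shuffle would suffice; the full shuffle simply mirrors the code above.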

| void PLearn::BasisSelectionRegressor::buildSimpleCandidateFunctions | ( | ) | [private] |

Fills the simple_candidate_functions.

Definition at line 536 of file BasisSelectionRegressor.cc.

References PLearn::TVec< T >::append(), appendCandidateFunctionsOfSingleField(), appendConstantFunction(), appendKernelFunctions(), consider_constant_function, explicit_functions, PLearn::PLearner::inputsize(), kernels, PLearn::TVec< T >::length(), and simple_candidate_functions.

Referenced by buildAllCandidateFunctions().

```cpp
{
    if(consider_constant_function)
        appendConstantFunction(simple_candidate_functions);
    if(explicit_functions.length()>0)
        simple_candidate_functions.append(explicit_functions);
    for(int fieldnum=0; fieldnum<inputsize(); fieldnum++)
        appendCandidateFunctionsOfSingleField(fieldnum, simple_candidate_functions);
    if(kernels.length()>0)
        appendKernelFunctions(simple_candidate_functions);
}
```

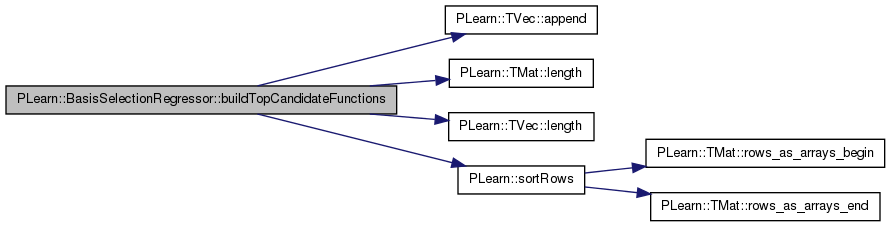

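| TVec< RealFunc > PLearn::BasisSelectionRegressor::buildTopCandidateFunctions | ( | ) | [private] |

Builds the list of the consider_n_best_for_interaction best-scoring candidate functions (taken from simple_candidate_functions only).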

Definition at line 651 of file BasisSelectionRegressor.cc.

References PLearn::TVec< T >::append(), candidate_functions, consider_n_best_for_interaction, i, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLASSERT, scores, simple_candidate_functions, and PLearn::sortRows().

Referenced by buildAllCandidateFunctions().

```cpp
{
    // The scores matrix should have one row per candidate function
    PLASSERT(scores.length() == candidate_functions.length());
    sortRows(scores, 1, false);
    TVec<RealFunc> top_best_functions;
    for (int i=0; i<consider_n_best_for_interaction; i++)
        top_best_functions.append(simple_candidate_functions[(int)scores(i,0)]);
    return top_best_functions;
}
```
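buildTopCandidateFunctions reads the `scores` matrix, whose column 0 holds a candidate index and column 1 its score (as the use of `scores(i,0)` and `sortRows(scores, 1, false)` suggests). A sketch of the same selection with standard algorithms, assuming the third argument of `sortRows` requests decreasing order; `topCandidateIndices` is a hypothetical name. `std::partial_sort` only orders the first `n` rows, and the sketch clamps `n` to the number of rows, whereas the original relies on its caller to guarantee enough candidates.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// One row of `scores`: (candidate index, score).
using ScoredCandidate = std::pair<int, double>;

// Returns the indices of the n highest-scoring candidates, best first.
std::vector<int> topCandidateIndices(std::vector<ScoredCandidate> scores, int n) {
    assert(n >= 0);
    n = std::min(n, static_cast<int>(scores.size()));  // clamp, unlike the original
    // Only the first n rows need to end up sorted by decreasing score.
    std::partial_sort(scores.begin(), scores.begin() + n, scores.end(),
                      [](const ScoredCandidate& a, const ScoredCandidate& b) {
                          return a.second > b.second;
                      });
    std::vector<int> indices;
    indices.reserve(n);
    for (int i = 0; i < n; ++i)
        indices.push_back(scores[i].first);
    return indices;
}
```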

| string PLearn::BasisSelectionRegressor::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 65 of file BasisSelectionRegressor.cc.

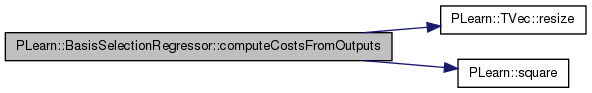

| void PLearn::BasisSelectionRegressor::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 1300 of file BasisSelectionRegressor.cc.

References PLearn::TVec< T >::resize(), and PLearn::square().

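| void PLearn::BasisSelectionRegressor::computeOrder | ( | RealFunc & | func, |

| int & | order | ||

| ) | [private] |

Computes the order of the given function, i.e. the number of single-input functions embedded in it, and adds it to order.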

Definition at line 636 of file BasisSelectionRegressor.cc.

References PLERROR.

Referenced by addInteractionFunction().

```cpp
{
    if (dynamic_cast<RealFunctionOfInputFeature*>((RealFunction*) func))
    {
        ++order;
    }
    else if (RealFunctionProduct* f = dynamic_cast<RealFunctionProduct*>((RealFunction*) func))
    {
        computeOrder(f->f1, order);
        computeOrder(f->f2, order);
    }
    else
        PLERROR("In BasisSelectionRegressor::computeOrder: bad function type.");
}
```
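computeOrder() is a recursive walk over a product tree: each RealFunctionOfInputFeature leaf adds 1, each RealFunctionProduct recurses into its two factors, and any other type is an error. One consequence is that when interaction_max_order > 0, addInteractionFunction() only accepts functions built from those two types; any function that is neither a RealFunctionOfInputFeature (or subclass) nor a RealFunctionProduct triggers PLERROR. The sketch below reproduces the recursion on a hypothetical class hierarchy (`Func`, `InputFeature`, `Product`, `Constant`), with an exception in place of PLERROR.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>
#include <utility>

// Hypothetical hierarchy mirroring the two types computeOrder() accepts.
struct Func {
    virtual ~Func() = default;
};

// A single input feature (plays the role of RealFunctionOfInputFeature).
struct InputFeature : Func {
    int field;
    explicit InputFeature(int f) : field(f) {}
};

// Product of two functions (plays the role of RealFunctionProduct).
struct Product : Func {
    std::shared_ptr<Func> f1, f2;
    Product(std::shared_ptr<Func> a, std::shared_ptr<Func> b)
        : f1(std::move(a)), f2(std::move(b)) {
        assert(f1 && f2);
    }
};

// Any other function type, e.g. a constant; computeOrder() rejects it.
struct Constant : Func {};

// Adds to `order` the number of InputFeature leaves in the product tree.
void computeOrder(const Func& func, int& order) {
    if (dynamic_cast<const InputFeature*>(&func)) {
        ++order;
    } else if (const auto* p = dynamic_cast<const Product*>(&func)) {
        computeOrder(*p->f1, order);
        computeOrder(*p->f2, order);
    } else {
        throw std::invalid_argument("computeOrder: bad function type");
    }
}
```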

| void PLearn::BasisSelectionRegressor::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

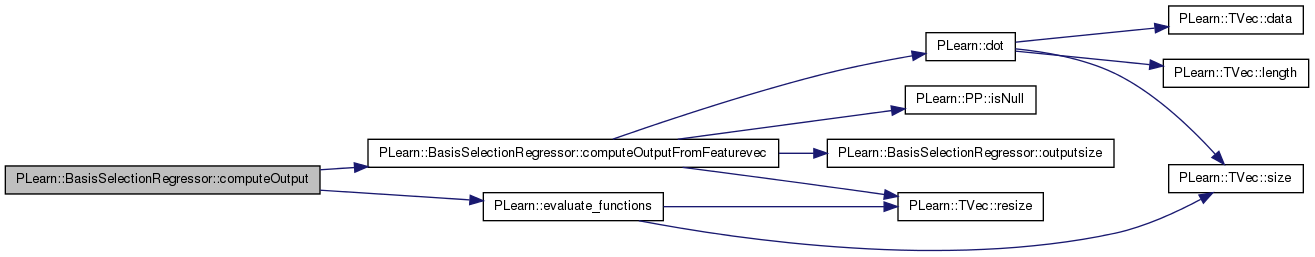

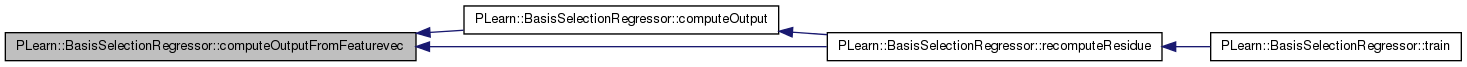

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 1285 of file BasisSelectionRegressor.cc.

References computeOutputFromFeaturevec(), PLearn::evaluate_functions(), featurevec, and selected_functions.

Referenced by recomputeResidue().

```cpp
{
    evaluate_functions(selected_functions, input, featurevec);
    computeOutputFromFeaturevec(featurevec, output);
}
```

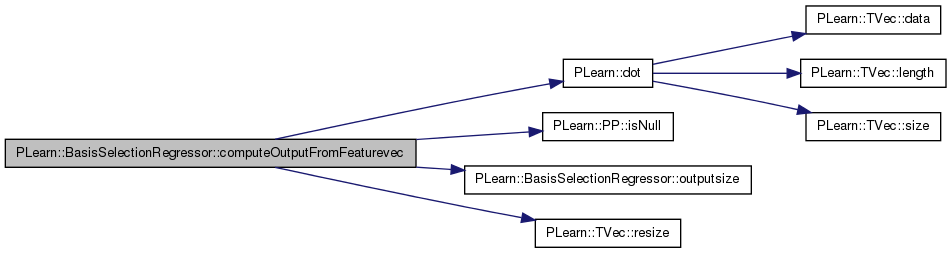

| void PLearn::BasisSelectionRegressor::computeOutputFromFeaturevec | ( | const Vec & | featurevec, |

| Vec & | output | ||

| ) | const [private] |

Definition at line 1272 of file BasisSelectionRegressor.cc.

References alphas, PLearn::dot(), PLearn::PP< T >::isNull(), learner, outputsize(), PLERROR, PLearn::TVec< T >::resize(), and use_all_basis.

Referenced by computeOutput(), and recomputeResidue().

```cpp
{
    int nout = outputsize();
    if(nout!=1 && !use_all_basis)
        PLERROR("outputsize should always be 1 for this learner (=%d)", nout);
    output.resize(nout);
    if(learner.isNull())
        output[0] = dot(alphas, featurevec);
    else
        learner->computeOutput(featurevec, output);
}
```
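Without a sub-learner, the prediction is a linear combination of the selected basis functions: `output[0] = dot(alphas, featurevec)`, where `featurevec` holds each selected function evaluated on the input. A minimal sketch of computeOutput() followed by computeOutputFromFeaturevec() for that case; `BasisFn` is a hypothetical stand-in for PLearn's RealFunc and `linearOutput` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <numeric>
#include <vector>

using Vec = std::vector<double>;
using BasisFn = std::function<double(const Vec&)>;  // hypothetical stand-in for RealFunc

// Evaluates each selected basis function on `input` (the role of
// evaluate_functions), then returns alphas . featurevec.
double linearOutput(const std::vector<BasisFn>& selected, const Vec& alphas, const Vec& input) {
    assert(selected.size() == alphas.size());
    Vec featurevec(selected.size());
    for (std::size_t j = 0; j < selected.size(); ++j)
        featurevec[j] = selected[j](input);
    return std::inner_product(alphas.begin(), alphas.end(), featurevec.begin(), 0.0);
}
```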

| void PLearn::BasisSelectionRegressor::computeWeightedAveragesWithResidue | ( | const TVec< RealFunc > & | functions, |

| real & | wsum, | ||

| Vec & | E_x, | ||

| Vec & | E_xx, | ||

| real & | E_y, | ||

| real & | E_yy, | ||

| Vec & | E_xy | ||

| ) | const [private] |

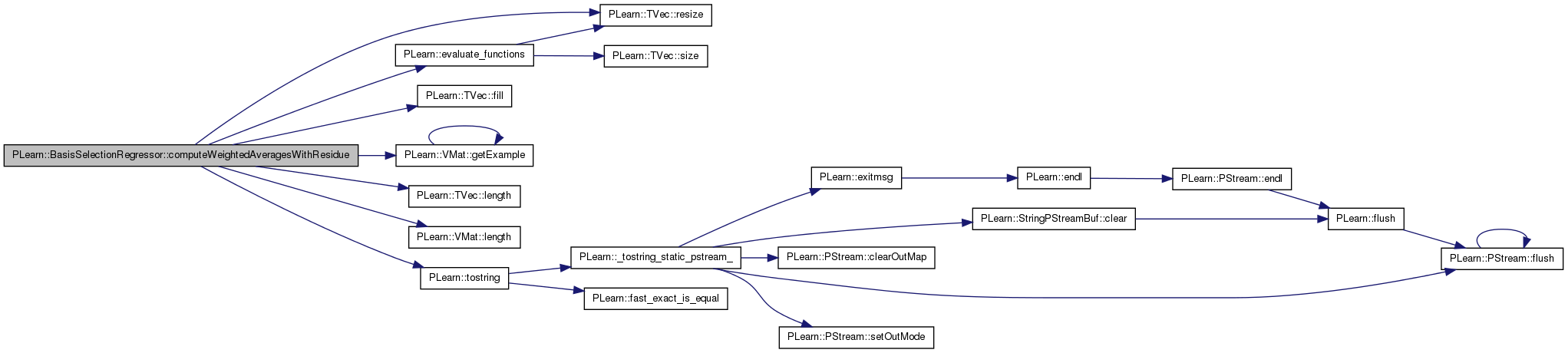

Definition at line 843 of file BasisSelectionRegressor.cc.

References PLearn::evaluate_functions(), PLearn::TVec< T >::fill(), PLearn::VMat::getExample(), i, input, j, PLearn::TVec< T >::length(), PLearn::VMat::length(), n_threads, PLearn::PLearner::report_progress, residue, PLearn::TVec< T >::resize(), targ, thread_subtrain_length, PLearn::tostring(), PLearn::PLearner::train_set, w, and x.

Referenced by findBestCandidateFunction().

```cpp
{
    const Vec& Y = residue;
    int n_candidates = functions.length();
    E_x.resize(n_candidates);
    E_x.fill(0.);
    E_xx.resize(n_candidates);
    E_xx.fill(0.);
    E_y = 0.;
    E_yy = 0.;
    E_xy.resize(n_candidates);
    E_xy.fill(0.);
    wsum = 0;

    Vec candidate_features;
    real w;
    int l = train_set->length();

    PP<ProgressBar> pb;
    if(report_progress)
        pb = new ProgressBar("Computing residue scores for " + tostring(n_candidates) + " candidate functions", l);

    if(n_threads > 0)
    {
        Vec wsums(n_threads);
        TVec<Vec> E_xs(n_threads);
        TVec<Vec> E_xxs(n_threads);
        Vec E_ys(n_threads);
        Vec E_yys(n_threads);
        TVec<Vec> E_xys(n_threads);
        boost::mutex ts_mx, pb_mx;
        TVec<boost::thread* > threads(n_threads);
        TVec<thread_wawr* > tws(n_threads);
        for(int i= 0; i < n_threads; ++i)
        {
            tws[i]= new thread_wawr(i, n_threads, functions,
                                    wsums[i],
                                    E_xs[i], E_xxs[i],
                                    E_ys[i], E_yys[i],
                                    E_xys[i], Y, ts_mx, train_set,
                                    pb_mx, pb, thread_subtrain_length);
            threads[i]= new boost::thread(*tws[i]);
        }
        for(int i= 0; i < n_threads; ++i)
        {
            threads[i]->join();
            wsum+= wsums[i];
            E_y+= E_ys[i];
            E_yy+= E_yys[i];
            for(int j= 0; j < n_candidates; ++j)
            {
                E_x[j]+= E_xs[i][j];
                E_xx[j]+= E_xxs[i][j];
                E_xy[j]+= E_xys[i][j];
            }
            delete threads[i];
            delete tws[i];
        }
    }
    else // single-thread version
    {
        for(int i=0; i<l; i++)
        {
            real y = Y[i];
            train_set->getExample(i, input, targ, w);
            evaluate_functions(functions, input, candidate_features);
            wsum += w;
            real wy = w*y;
            E_y += wy;
            E_yy += wy*y;
            for(int j=0; j<n_candidates; j++)
            {
                real x = candidate_features[j];
                real wx = w*x;
                E_x[j] += wx;
                E_xx[j] += wx*x;
                E_xy[j] += wx*y;
            }
            if(pb)
                pb->update(i);
        }
    }

    // Finalize computation
    real inv_wsum = 1.0/wsum;
    E_x *= inv_wsum;
    E_xx *= inv_wsum;
    E_y *= inv_wsum;
    E_yy *= inv_wsum;
    E_xy *= inv_wsum;
}
```
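computeWeightedAveragesWithResidue accumulates weighted sums per thread and merges them after join(). The sketch below shows that map-reduce pattern with `std::thread`, under these assumptions: features are precomputed in a dense matrix `X` (the real code evaluates the candidate functions on the fly), each thread gets a contiguous block of rows (how `thread_wawr` actually splits the work is not shown in this section), and `Moments`, `accumulateRows` and `weightedMoments` are hypothetical names. Since each thread writes only to its own `Moments`, no mutex is needed for the sums.

```cpp
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Weighted averages of each candidate feature x_j and of the residue y.
struct Moments {
    double wsum = 0.0, E_y = 0.0, E_yy = 0.0;
    std::vector<double> E_x, E_xx, E_xy;
    explicit Moments(std::size_t n_candidates)
        : E_x(n_candidates, 0.0), E_xx(n_candidates, 0.0), E_xy(n_candidates, 0.0) {}
};

// Adds raw (unnormalized) weighted sums over rows [begin, end) into m.
// X[i][j] is candidate j evaluated on example i; y is the residue, w the weights.
void accumulateRows(const std::vector<std::vector<double>>& X, const std::vector<double>& y,
                    const std::vector<double>& w, std::size_t begin, std::size_t end, Moments& m) {
    for (std::size_t i = begin; i < end; ++i) {
        const double wy = w[i] * y[i];
        m.wsum += w[i];
        m.E_y += wy;
        m.E_yy += wy * y[i];
        for (std::size_t j = 0; j < m.E_x.size(); ++j) {
            const double wx = w[i] * X[i][j];
            m.E_x[j] += wx;
            m.E_xx[j] += wx * X[i][j];
            m.E_xy[j] += wx * y[i];
        }
    }
}

Moments weightedMoments(const std::vector<std::vector<double>>& X, const std::vector<double>& y,
                        const std::vector<double>& w, std::size_t n_candidates, unsigned n_threads) {
    assert(X.size() == y.size() && y.size() == w.size() && n_threads > 0);
    const std::size_t l = y.size();
    std::vector<Moments> partial(n_threads, Moments(n_candidates));
    std::vector<std::thread> threads;
    for (unsigned t = 0; t < n_threads; ++t) {
        const std::size_t begin = l * t / n_threads;
        const std::size_t end = l * (t + 1) / n_threads;
        threads.emplace_back([&X, &y, &w, &partial, begin, end, t] {
            accumulateRows(X, y, w, begin, end, partial[t]);
        });
    }
    Moments total(n_candidates);
    for (unsigned t = 0; t < n_threads; ++t) {
        threads[t].join();  // partial[t] is complete once its thread has joined
        const Moments& p = partial[t];
        total.wsum += p.wsum;
        total.E_y += p.E_y;
        total.E_yy += p.E_yy;
        for (std::size_t j = 0; j < n_candidates; ++j) {
            total.E_x[j] += p.E_x[j];
            total.E_xx[j] += p.E_xx[j];
            total.E_xy[j] += p.E_xy[j];
        }
    }
    // Finalize: turn sums into weighted averages (assumes a positive total weight).
    assert(total.wsum > 0.0);
    const double inv_wsum = 1.0 / total.wsum;
    total.E_y *= inv_wsum;
    total.E_yy *= inv_wsum;
    for (std::size_t j = 0; j < n_candidates; ++j) {
        total.E_x[j] *= inv_wsum;
        total.E_xx[j] *= inv_wsum;
        total.E_xy[j] *= inv_wsum;
    }
    return total;
}
```

From these moments, the weighted covariance between candidate j and the residue is `E_xy[j] - E_x[j]*E_y`; how findBestCandidateFunction() turns them into a score is not shown in this section.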

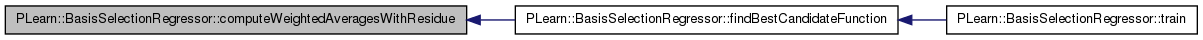

| void PLearn::BasisSelectionRegressor::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 91 of file BasisSelectionRegressor.cc.

References alphas, PLearn::OptionBase::buildoption, candidate_functions, consider_constant_function, consider_input_range_indicators, consider_interaction_terms, consider_n_best_for_interaction, consider_normalized_inputs, consider_raw_inputs, consider_sorted_encodings, PLearn::declareOption(), PLearn::PLearner::declareOptions(), explicit_functions, explicit_interaction_functions, explicit_interaction_variables, fixed_min_range, indicator_desired_prob, indicator_min_prob, interaction_max_order, kernel_centers, kernels, learner, PLearn::OptionBase::learntoption, mandatory_functions, max_interaction_terms, max_n_vals_for_sorted_encodings, n_kernel_centers_to_pick, n_threads, normalize_features, precompute_features, scores, selected_functions, template_learner, thread_subtrain_length, and use_all_basis.

{

//##### Public Build Options ############################################

declareOption(ol, "consider_constant_function", &BasisSelectionRegressor::consider_constant_function,

OptionBase::buildoption,

"If true, the constant function is included in the dictionary");

declareOption(ol, "explicit_functions", &BasisSelectionRegressor::explicit_functions,

OptionBase::buildoption,

"This (optional) list of explicitly given RealFunctions\n"

"will get included in the dictionary");

declareOption(ol, "explicit_interaction_variables", &BasisSelectionRegressor::explicit_interaction_variables,

OptionBase::buildoption,

"This (optional) list of explicitly given variables (fieldnames)\n"

"will get included in the dictionary for interaction terms ONLY\n"

"(i.e. these interact with the other functions.)");

declareOption(ol, "mandatory_functions", &BasisSelectionRegressor::mandatory_functions,

OptionBase::buildoption,

"This (optional) list of explicitly given RealFunctions\n"

"will be automatically selected.");

declareOption(ol, "consider_raw_inputs", &BasisSelectionRegressor::consider_raw_inputs,

OptionBase::buildoption,

"If true, then functions which select one of the raw inputs\n"

"will be included in the dictionary.\n"

"Beware that missing values (NaN) will be left as such.");

declareOption(ol, "consider_normalized_inputs", &BasisSelectionRegressor::consider_normalized_inputs,

OptionBase::buildoption,

"If true, then functions which select and normalize inputs\n"

"will be included in the dictionary. \n"

"Missing values will be replaced by 0 (i.e. the mean of normalized input)\n"

"Inputs which have nearly zero variance will be ignored.\n");

declareOption(ol, "consider_input_range_indicators", &BasisSelectionRegressor::consider_input_range_indicators,

OptionBase::buildoption,

"If true, then we'll include in the dictionary indicator functions\n"

"triggered by input ranges and input special values.\n"

"Special values will include all symbolic values\n"

"(detected by the existence of a corresponding string mapping),\n"

"as well as MISSING_VALUE (NaN), if it occurs in more than an\n"

"indicator_min_prob fraction of the training set.\n"

"Real ranges will be formed in accordance with the indicator_desired_prob\n"

"and indicator_min_prob options. The necessary statistics are obtained\n"

"from the counts in the StatsCollector of the train_set VMatrix.\n");

declareOption(ol, "fixed_min_range", &BasisSelectionRegressor::fixed_min_range,

OptionBase::buildoption,

"If true, the min value of all range functions will be set to -FLT_MAX.\n"

"This corresponds to a 'thermometer' type of mapping.");

declareOption(ol, "indicator_desired_prob", &BasisSelectionRegressor::indicator_desired_prob,

OptionBase::buildoption,

"The algorithm will try to build input ranges that have at least this probability of occurrence in the training set.");

declareOption(ol, "indicator_min_prob", &BasisSelectionRegressor::indicator_min_prob,

OptionBase::buildoption,

"Used instead of indicator_desired_prob for missing values\n"

"and for ranges immediately followed by a symbolic value.");

declareOption(ol, "kernels", &BasisSelectionRegressor::kernels,

OptionBase::buildoption,

"If given then each of these kernels, centered on each of the kernel_centers \n"

"will be included in the dictionary");

declareOption(ol, "kernel_centers", &BasisSelectionRegressor::kernel_centers,

OptionBase::buildoption,

"If you specified a non-empty list of kernels, you can give a matrix of explicit \n"

"centers here. Alternatively you can specify n_kernel_centers_to_pick.\n");

declareOption(ol, "n_kernel_centers_to_pick", &BasisSelectionRegressor::n_kernel_centers_to_pick,

OptionBase::buildoption,

"If >0 then kernel_centers will be generated by randomly picking \n"

"n_kernel_centers_to_pick data points from the training set \n"

"(don't forget to set the seed option)");

declareOption(ol, "consider_interaction_terms", &BasisSelectionRegressor::consider_interaction_terms,

OptionBase::buildoption,

"If true, the dictionary will be enriched, at each stage, by the product of\n"

"each of the already chosen basis functions with each of the dictionary functions\n");

declareOption(ol, "max_interaction_terms", &BasisSelectionRegressor::max_interaction_terms,

OptionBase::buildoption,

"Maximum number of interaction terms to consider. -1 means no max.\n"

"If more terms are possible, some are chosen randomly at each stage.\n");

declareOption(ol, "consider_n_best_for_interaction", &BasisSelectionRegressor::consider_n_best_for_interaction,

OptionBase::buildoption,

"Only the n best functions of single variables (n being the value of this option) are considered when building interaction terms. -1 means no max.\n");

declareOption(ol, "interaction_max_order", &BasisSelectionRegressor::interaction_max_order,

OptionBase::buildoption,

"Maximum order of a feature in an interaction function. -1 means no max.\n");

declareOption(ol, "consider_sorted_encodings", &BasisSelectionRegressor::consider_sorted_encodings,

OptionBase::buildoption,

"If true, the dictionary will be enriched with encodings sorted in target order.\n"

"This will be done for all fields with fewer than max_n_vals_for_sorted_encodings distinct values.\n");

declareOption(ol, "max_n_vals_for_sorted_encodings", &BasisSelectionRegressor::max_n_vals_for_sorted_encodings,

OptionBase::buildoption,

"Maximum number of different values for a field to be considered for a sorted encoding.\n");

declareOption(ol, "normalize_features", &BasisSelectionRegressor::normalize_features,

OptionBase::buildoption,

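"EXPERIMENTAL OPTION (under development).\n"

"If true, each candidate function is scored by its covariance with the residue divided by its standard deviation,\n"

"instead of by the raw weighted average of its product with the residue.");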

declareOption(ol, "learner", &BasisSelectionRegressor::template_learner,

OptionBase::buildoption,

"The underlying template learner.");

declareOption(ol, "precompute_features", &BasisSelectionRegressor::precompute_features,

OptionBase::buildoption,

"True if the features matrix should be kept in memory; false if each row should be recomputed every time it is needed.");

declareOption(ol, "n_threads", &BasisSelectionRegressor::n_threads,

OptionBase::buildoption,

"The number of threads to use when computing residue scores.\n"

"NOTE: MOST OF PLEARN IS NOT THREAD-SAFE; THIS CODE ASSUMES THAT SOME PARTS ARE, BUT THESE MAY CHANGE.");

declareOption(ol, "thread_subtrain_length", &BasisSelectionRegressor::thread_subtrain_length,

OptionBase::buildoption,

"Preload thread_subtrain_length data when using multi-threading.");

declareOption(ol, "use_all_basis", &BasisSelectionRegressor::use_all_basis,

OptionBase::buildoption,

"If true, the underlying learner is trained on all basis functions generated by the BSR.\n"

"In this mode, all interaction terms are disabled and only one stage of training is necessary.");

//##### Public Learnt Options ############################################

declareOption(ol, "selected_functions", &BasisSelectionRegressor::selected_functions,

OptionBase::learntoption,

"The list of real functions selected by the incremental algorithm.");

declareOption(ol, "alphas", &BasisSelectionRegressor::alphas,

OptionBase::learntoption,

"CURRENTLY UNUSED");

declareOption(ol, "scores", &BasisSelectionRegressor::scores,

OptionBase::learntoption,

"Matrix of the scores of each candidate function.\n"

"Used only when 'consider_n_best_for_interaction' > 0.");

declareOption(ol, "candidate_functions", &BasisSelectionRegressor::candidate_functions,

OptionBase::learntoption,

"The list of current candidate functions.");

declareOption(ol, "explicit_interaction_functions", &BasisSelectionRegressor::explicit_interaction_functions,

OptionBase::learntoption,

"This (optional) list of explicitly given RealFunctions\n"

"will get included in the dictionary for interaction terms ONLY\n"

"(i.e. these interact with the other functions.)");

declareOption(ol, "true_learner", &BasisSelectionRegressor::learner,

OptionBase::learntoption,

"The underlying learner to be trained with the extracted features.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
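The help strings for consider_input_range_indicators, indicator_desired_prob and fixed_min_range describe how range indicator functions are formed from training-set counts. The C++ sketch below shows one plausible way to do this, assuming the distinct values of a field and their counts are already available sorted by value; `Range`, `buildRanges` and `indicator` are hypothetical names, indicator_min_prob and symbolic values are ignored, and this is not the code BasisSelectionRegressor actually runs. Consecutive values are merged until a range reaches the desired probability mass; with fixed_min_range every lower bound becomes -FLT_MAX, which gives the "thermometer" encoding.

```cpp
#include <cassert>
#include <cfloat>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical closed range [low, high] on a single input field.
struct Range {
    double low;
    double high;
};

// Groups sorted (value, count) pairs into consecutive ranges whose empirical
// probability is at least desired_prob. A trailing remainder that is too small
// is merged into the previous range. If fixed_min_range is true, every range
// starts at -FLT_MAX ("thermometer" encoding).
std::vector<Range> buildRanges(const std::vector<std::pair<double, int>>& sorted_counts,
                               double desired_prob, bool fixed_min_range) {
    assert(desired_prob > 0.0 && desired_prob <= 1.0);
    std::vector<Range> ranges;
    long total = 0;
    for (const auto& vc : sorted_counts) total += vc.second;
    if (total == 0) return ranges;

    std::size_t start = 0;
    long acc = 0;
    for (std::size_t i = 0; i < sorted_counts.size(); ++i) {
        acc += sorted_counts[i].second;
        if (static_cast<double>(acc) / total >= desired_prob) {
            ranges.push_back({sorted_counts[start].first, sorted_counts[i].first});
            start = i + 1;
            acc = 0;
        }
    }
    if (acc > 0) {  // leftover values that never reached desired_prob
        if (ranges.empty())
            ranges.push_back({sorted_counts[start].first, sorted_counts.back().first});
        else
            ranges.back().high = sorted_counts.back().first;
    }
    if (fixed_min_range)
        for (auto& r : ranges) r.low = -FLT_MAX;
    return ranges;
}

// Indicator function of a range: 1 inside [low, high], 0 outside.
double indicator(const Range& r, double x) {
    return (x >= r.low && x <= r.high) ? 1.0 : 0.0;
}
```

With fixed_min_range, the indicator of the k-th range is 1 for every value up to that range's upper bound, so the indicators of successive ranges are nested rather than disjoint.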

static const PPath& PLearn::BasisSelectionRegressor::declaringFile() [inline, static]

Reimplemented from PLearn::PLearner.

Definition at line 155 of file BasisSelectionRegressor.h.


BasisSelectionRegressor * PLearn::BasisSelectionRegressor::deepCopy(CopiesMap & copies) const [virtual]

Reimplemented from PLearn::PLearner.

Definition at line 65 of file BasisSelectionRegressor.cc.

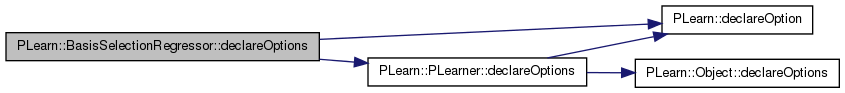

void PLearn::BasisSelectionRegressor::findBestCandidateFunction(int & best_candidate_index, real & best_score) const [private]

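Finds the best candidate function, i.e. the one most collinear with the residue, and returns its index and score through best_candidate_index and best_score (the index is -1 if no candidate has a positive score).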

Definition at line 667 of file BasisSelectionRegressor.cc.

References candidate_functions, computeWeightedAveragesWithResidue(), PLearn::endl(), j, PLearn::TVec< T >::length(), normalize_features, PLearn::perr, PLearn::TMat< T >::resize(), scores, simple_candidate_functions, PLearn::TVec< T >::size(), PLearn::sqrt(), PLearn::square(), and PLearn::PLearner::verbosity.

Referenced by train().

{

int n_candidates = candidate_functions.size();

Vec E_x;

Vec E_xx;

Vec E_xy;

real wsum = 0;

real E_y = 0;

real E_yy = 0;

computeWeightedAveragesWithResidue(candidate_functions, wsum, E_x, E_xx, E_y, E_yy, E_xy);

scores.resize(simple_candidate_functions.length(), 2);

if(verbosity>=5)

perr << "n_candidates = " << n_candidates << endl;

if(verbosity>=10)

perr << "E_xy = " << E_xy << endl;

best_candidate_index = -1;

best_score = 0;

for(int j=0; j<n_candidates; j++)

{

real score = 0;

if(normalize_features)

score = fabs((E_xy[j]-E_y*E_x[j])/(1e-6+sqrt(E_xx[j]-square(E_x[j]))));

else

score = fabs(E_xy[j]);

if(verbosity>=10)

perr << score << ' ';

if(score>best_score)

{

best_candidate_index = j;

best_score = score;

}

// we keep the score only for the simple_candidate_functions

if (j < simple_candidate_functions.length())

{

scores(j, 0) = j;

scores(j, 1) = score;

}

}

if(verbosity>=10)

perr << endl;

}
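The selection rule above scores candidate j by |E_xy[j]| or, when normalize_features is true, by |E_xy[j] - E_y E_x[j]| / (1e-6 + sqrt(E_xx[j] - E_x[j]^2)), i.e. the weighted covariance between the candidate and the residue divided by the candidate's standard deviation. The standalone C++ sketch below reproduces only that rule on plain std::vector inputs; the weighted averages are assumed to be precomputed (in the class, computeWeightedAveragesWithResidue() does this), and `Best` and `bestCandidate` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Best {
    int index;     // -1 if no candidate has a positive score
    double score;
};

// Mirrors the selection rule of findBestCandidateFunction():
//   normalize == false : score_j = |E[x_j r]|
//   normalize == true  : score_j = |E[x_j r] - E[r] E[x_j]| / (1e-6 + sqrt(E[x_j^2] - E[x_j]^2))
// where r is the current residue and all expectations are weighted averages.
Best bestCandidate(const std::vector<double>& E_x, const std::vector<double>& E_xx,
                   const std::vector<double>& E_xy, double E_y, bool normalize) {
    assert(E_x.size() == E_xx.size() && E_x.size() == E_xy.size());
    Best best{-1, 0.0};
    for (std::size_t j = 0; j < E_xy.size(); ++j) {
        double score = normalize
            ? std::fabs((E_xy[j] - E_y * E_x[j]) /
                        (1e-6 + std::sqrt(E_xx[j] - E_x[j] * E_x[j])))
            : std::fabs(E_xy[j]);
        // Strict '>' keeps the first of tied candidates; a NaN score never wins.
        if (score > best.score) {
            best.index = static_cast<int>(j);
            best.score = score;
        }
    }
    return best;
}
```

Normalization can change the winner: a candidate with a small raw product but a small variance may score higher than one with a large raw product.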

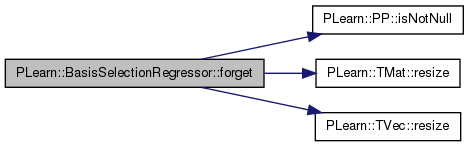

void PLearn::BasisSelectionRegressor::forget() [virtual]

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 318 of file BasisSelectionRegressor.cc.

References candidate_functions, features, PLearn::PP< T >::isNotNull(), kernel_centers, learner, n_kernel_centers_to_pick, residue, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), selected_functions, PLearn::PLearner::stage, targets, and weights.

{

selected_functions.resize(0);

targets.resize(0);

residue.resize(0);

weights.resize(0);

features.resize(0,0);

if(n_kernel_centers_to_pick>=0)

kernel_centers.resize(0,0);

if(learner.isNotNull())

learner->forget();

candidate_functions.resize(0);

stage = 0;

}

OptionList & PLearn::BasisSelectionRegressor::getOptionList() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 65 of file BasisSelectionRegressor.cc.

OptionMap & PLearn::BasisSelectionRegressor::getOptionMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 65 of file BasisSelectionRegressor.cc.

RemoteMethodMap & PLearn::BasisSelectionRegressor::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 65 of file BasisSelectionRegressor.cc.

TVec< string > PLearn::BasisSelectionRegressor::getTestCostNames() const [virtual]

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 1307 of file BasisSelectionRegressor.cc.

{

return TVec<string>(1,string("mse"));

}

TVec< string > PLearn::BasisSelectionRegressor::getTrainCostNames() const [virtual]

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 1319 of file BasisSelectionRegressor.cc.

References template_learner.

{

return template_learner->getTrainCostNames();

}

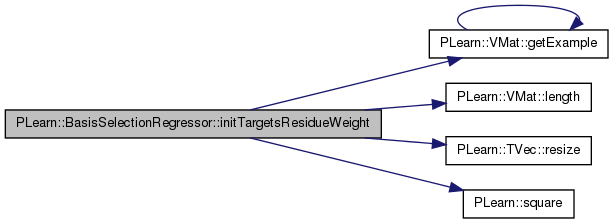

void PLearn::BasisSelectionRegressor::initTargetsResidueWeight() [private]

Definition at line 1202 of file BasisSelectionRegressor.cc.

References PLearn::VMat::getExample(), i, input, PLearn::VMat::length(), residue, residue_sum, residue_sum_sq, PLearn::TVec< T >::resize(), PLearn::square(), targ, targets, PLearn::PLearner::train_set, w, and weights.

Referenced by train().

{

int l = train_set.length();

residue.resize(l);

targets.resize(l);

residue_sum = 0.;

residue_sum_sq = 0.;

weights.resize(l);

real w;

for(int i=0; i<l; i++)

{

train_set->getExample(i, input, targ, w);

real t = targ[0];

targets[i] = t;

residue[i] = t;

weights[i] = w;

residue_sum += w*t;

residue_sum_sq += w*square(t);

}

}

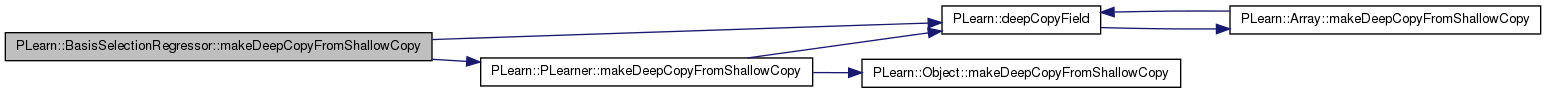

void PLearn::BasisSelectionRegressor::makeDeepCopyFromShallowCopy(CopiesMap & copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 283 of file BasisSelectionRegressor.cc.

References alphas, candidate_functions, PLearn::deepCopyField(), explicit_functions, explicit_interaction_functions, explicit_interaction_variables, features, featurevec, input, kernel_centers, kernels, learner, PLearn::PLearner::makeDeepCopyFromShallowCopy(), mandatory_functions, residue, scores, selected_functions, simple_candidate_functions, targ, targets, template_learner, and weights.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(explicit_functions, copies);

deepCopyField(explicit_interaction_functions, copies);

deepCopyField(explicit_interaction_variables, copies);

deepCopyField(mandatory_functions, copies);

deepCopyField(kernels, copies);

deepCopyField(kernel_centers, copies);

deepCopyField(learner, copies);

deepCopyField(template_learner, copies);

deepCopyField(selected_functions, copies);

deepCopyField(alphas, copies);

deepCopyField(scores, copies);

deepCopyField(simple_candidate_functions, copies);

deepCopyField(candidate_functions, copies);

deepCopyField(features, copies);

deepCopyField(residue, copies);

deepCopyField(targets, copies);

deepCopyField(weights, copies);

deepCopyField(input, copies);

deepCopyField(targ, copies);

deepCopyField(featurevec, copies);

}
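makeDeepCopyFromShallowCopy() lets the parent class deep-copy its own fields, then calls deepCopyField() on each pointer-like member of this class, always with the same CopiesMap, so that an object reachable through several fields is copied only once and sharing is preserved in the copy. The C++ sketch below illustrates that memoization idea with std::shared_ptr and an unordered_map keyed by the original's address; `Node`, `CopiesMap` and `deepCopyField` here are hypothetical stand-ins, not PLearn's own types or functions.

```cpp
#include <cassert>
#include <memory>
#include <unordered_map>

struct Node {
    int value = 0;
    std::shared_ptr<Node> next;  // may be shared with other fields
};

// Maps each original object to the copy already made of it.
// Assumes the original objects stay alive while 'copies' is in use.
using CopiesMap = std::unordered_map<const Node*, std::shared_ptr<Node>>;

// Replaces 'field' by a deep copy of what it points to, reusing an existing
// copy if this object was already copied through another field.
void deepCopyField(std::shared_ptr<Node>& field, CopiesMap& copies) {
    if (!field) return;
    auto it = copies.find(field.get());
    if (it != copies.end()) {
        field = it->second;
        return;
    }
    auto copy = std::make_shared<Node>(*field);  // shallow copy first
    copies[field.get()] = copy;                  // register before recursing (handles cycles)
    deepCopyField(copy->next, copies);           // then deep-copy its own fields
    field = copy;
}
```

Registering the copy before recursing is what makes cyclic structures terminate: when the recursion reaches the same original again, it finds the registered copy instead of copying forever.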

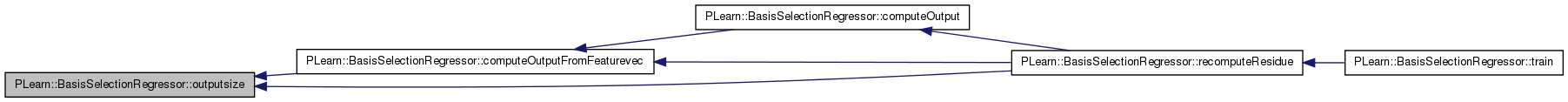

int PLearn::BasisSelectionRegressor::outputsize() const [virtual]

Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 312 of file BasisSelectionRegressor.cc.

References template_learner.

Referenced by computeOutputFromFeaturevec(), and recomputeResidue().

{

//return 1;

return template_learner->outputsize();

}

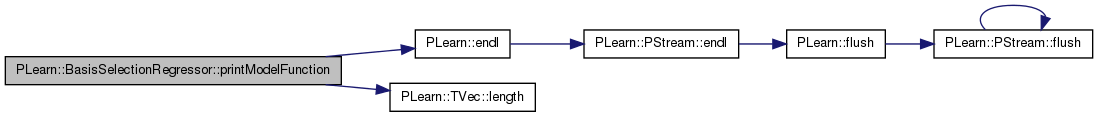

void PLearn::BasisSelectionRegressor::printModelFunction(PStream & out) const

Definition at line 1291 of file BasisSelectionRegressor.cc.

References alphas, PLearn::endl(), PLearn::TVec< T >::length(), and selected_functions.

{

for(int k=0; k<selected_functions.length(); k++)

{

out << "+ " << alphas[k] << "* " << selected_functions[k];

out << endl;

}

}

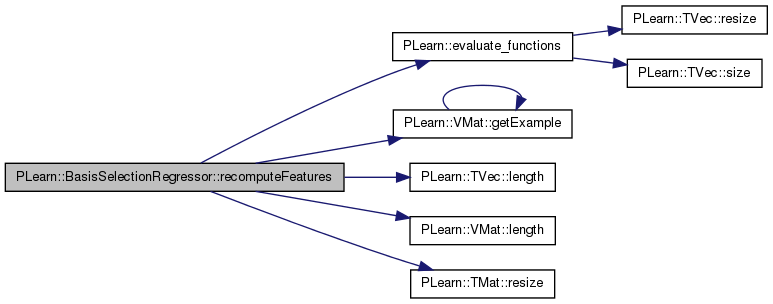

void PLearn::BasisSelectionRegressor::recomputeFeatures() [private]

Definition at line 1224 of file BasisSelectionRegressor.cc.

References PLearn::evaluate_functions(), features, PLearn::VMat::getExample(), i, input, PLearn::TVec< T >::length(), PLearn::VMat::length(), precompute_features, PLearn::TMat< T >::resize(), selected_functions, targ, and PLearn::PLearner::train_set.

Referenced by train().

{

if(!precompute_features)

return;

int l = train_set.length();

int nf = selected_functions.length();

features.resize(l,nf);

real weight = 0;

for(int i=0; i<l; i++)

{

train_set->getExample(i, input, targ, weight);

Vec v = features(i);

evaluate_functions(selected_functions, input, v);

}

}
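When precompute_features is true, recomputeFeatures() fills row i of the features matrix with the values of every selected function evaluated on training input i (through evaluate_functions()); otherwise it returns immediately. A minimal standalone C++ sketch of the same layout, with std::function standing in for RealFunction; `RealFn`, `Matrix` and `computeFeatures` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using RealFn = std::function<double(const std::vector<double>&)>;
using Matrix = std::vector<std::vector<double>>;

// features[i][k] = selected[k](inputs[i]): one row per training example,
// one column per selected basis function.
Matrix computeFeatures(const Matrix& inputs, const std::vector<RealFn>& selected) {
    for (const auto& f : selected) assert(f);  // every selected function must be callable
    Matrix features(inputs.size(), std::vector<double>(selected.size()));
    for (std::size_t i = 0; i < inputs.size(); ++i)
        for (std::size_t k = 0; k < selected.size(); ++k)
            features[i][k] = selected[k](inputs[i]);
    return features;
}
```

Precomputing trades memory (l rows by nf columns) for speed, since every later retraining and residue computation can read the matrix instead of re-evaluating the functions.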

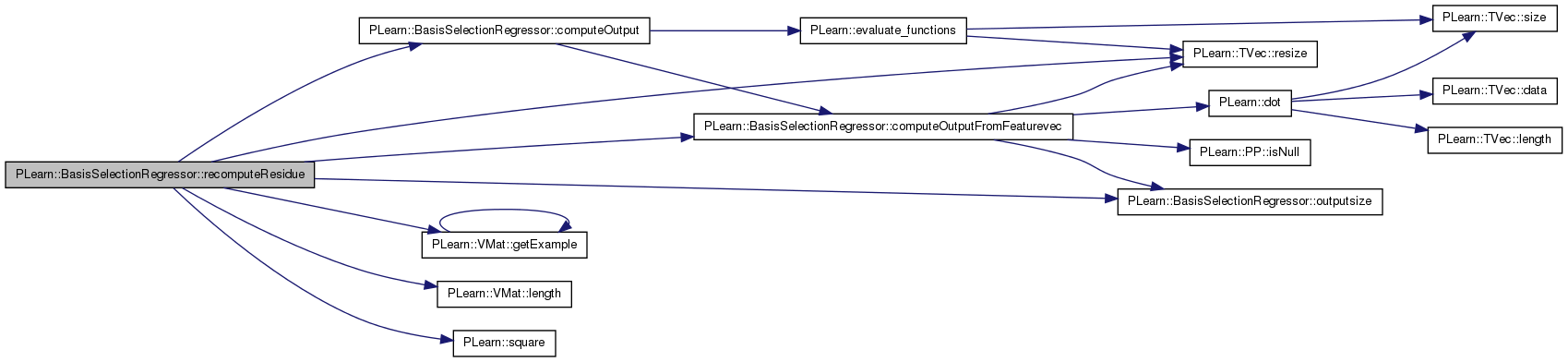

void PLearn::BasisSelectionRegressor::recomputeResidue() [private]

Definition at line 1240 of file BasisSelectionRegressor.cc.

References computeOutput(), computeOutputFromFeaturevec(), features, PLearn::VMat::getExample(), i, input, PLearn::VMat::length(), outputsize(), precompute_features, residue, residue_sum, residue_sum_sq, PLearn::TVec< T >::resize(), PLearn::square(), targ, targets, PLearn::PLearner::train_set, w, and weights.

Referenced by train().

{

int l = train_set.length();

residue.resize(l);

residue_sum = 0;

residue_sum_sq = 0;

Vec output(outputsize());

// perr << "recomp_residue: { ";

for(int i=0; i<l; i++)

{

real t = targets[i];

real w = weights[i];

if(precompute_features)

computeOutputFromFeaturevec(features(i),output);

else

{

real wt;

train_set->getExample(i,input,targ,wt);

computeOutput(input,output);

}

real resid = t-output[0];

residue[i] = resid;

// perr << "feature " << i << ": " << features(i) << " t:" << t << " out: " << output[0] << " resid: " << residue[i] << endl;

residue_sum += w*resid; // weighted, consistent with residue_sum_sq and initTargetsResidueWeight()

residue_sum_sq += w*square(resid);

}

// perr << "}" << endl;

// perr << "targets: \n" << targets << endl;

// perr << "residue: \n" << residue << endl;

}
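recomputeResidue() stores r_i = t_i - f(x_i) for every training example, where f(x_i) is the first output of the retrained learner. initTargetsResidueWeight() sets up the same quantities with f = 0, so that the residue starts out equal to the targets, and weights its sums by w_i. The C++ sketch below computes the residue together with the weighted sums sum_i w_i r_i and sum_i w_i r_i^2; `ResidueStats` and `computeResidue` are hypothetical names, and the predictions are assumed to be given.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct ResidueStats {
    std::vector<double> residue;  // r_i = t_i - prediction_i
    double sum = 0.0;             // sum_i w_i * r_i
    double sum_sq = 0.0;          // sum_i w_i * r_i^2
};

// With all predictions equal to 0 this gives the initial state set up by
// initTargetsResidueWeight(): residue == targets.
ResidueStats computeResidue(const std::vector<double>& targets,
                            const std::vector<double>& predictions,
                            const std::vector<double>& weights) {
    assert(targets.size() == predictions.size() && targets.size() == weights.size());
    ResidueStats s;
    s.residue.resize(targets.size());
    for (std::size_t i = 0; i < targets.size(); ++i) {
        double r = targets[i] - predictions[i];
        s.residue[i] = r;
        s.sum += weights[i] * r;
        s.sum_sq += weights[i] * r * r;
    }
    return s;
}
```

The weighted sum of squared residues is the quantity that train() prints before and after each retraining when verbosity >= 2.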

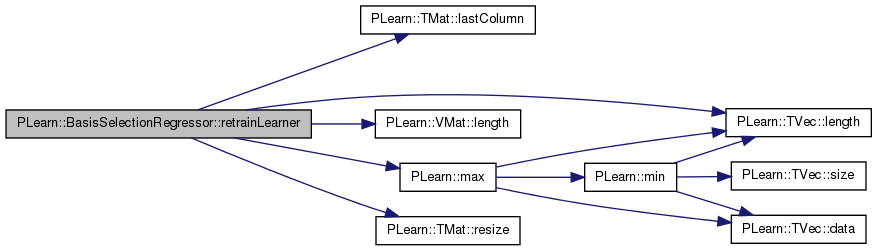

void PLearn::BasisSelectionRegressor::retrainLearner() [private]

Definition at line 1085 of file BasisSelectionRegressor.cc.

References PLearn::PLearner::expdir, features, i, PLearn::TMat< T >::lastColumn(), learner, PLearn::TVec< T >::length(), PLearn::VMat::length(), PLearn::max(), precompute_features, PLearn::TMat< T >::resize(), selected_functions, targets, template_learner, PLearn::PLearner::train_set, and weights.

Referenced by train().

{

int l = train_set->length();

int nf = selected_functions.length();

bool weighted = train_set->hasWeights();

// set dummy training set, so that underlying learner frees reference to previous training set

/*

VMat newtrainset = new MemoryVMatrix(1,nf+(weighted?2:1));

newtrainset->defineSizes(nf,1,weighted?1:0);

learner->setTrainingSet(newtrainset);

learner->forget();

*/

// Deep-copy the underlying learner

CopiesMap copies;

learner = template_learner->deepCopy(copies);

PP<VecStatsCollector> statscol = template_learner->getTrainStatsCollector();

learner->setTrainStatsCollector(statscol);

PPath expdir = template_learner->getExperimentDirectory();

learner->setExperimentDirectory(expdir);

VMat newtrainset;

if(precompute_features)

{

features.resize(l,nf+(weighted?2:1), max(1,int(0.25*l*nf)), true); // enlarge width while preserving content

if(weighted)

{

for(int i=0; i<l; i++) // append target and weight columns to features matrix

{

features(i,nf) = targets[i];

features(i,nf+1) = weights[i];

}

}

else // no weights

features.lastColumn() << targets; // append target column to features matrix

newtrainset = new MemoryVMatrix(features);

}

else

newtrainset= new RealFunctionsProcessedVMatrix(train_set, selected_functions, false, true, true);

newtrainset->defineSizes(nf,1,weighted?1:0);

learner->setTrainingSet(newtrainset);

template_learner->setTrainingSet(newtrainset);

learner->forget();

learner->train();

// resize features matrix so it contains only the features

if(precompute_features)

features.resize(l,nf);

}
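When precompute_features is true, retrainLearner() widens the l x nf features matrix in place to nf+1 columns (nf+2 for a weighted training set), writes the targets (and weights) into the extra columns, wraps the result in a MemoryVMatrix whose sizes are set with defineSizes(nf, 1, weighted?1:0), trains a fresh deep copy of template_learner on it, and finally shrinks the matrix back to nf columns. The C++ sketch below shows only the matrix layout step. `buildTrainingMatrix` is a hypothetical name, and a std::vector of rows stands in for PLearn's Mat. Unlike the in-place resize above, the sketch returns a copy for clarity.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Returns 'features' (l x nf) with the target column appended and, if 'weights'
// is non-empty, the weight column after it: l x (nf+1) or l x (nf+2).
// Column order is inputs, target, weight, as expected by defineSizes(nf, 1, 0 or 1).
Matrix buildTrainingMatrix(const Matrix& features, const std::vector<double>& targets,
                           const std::vector<double>& weights) {
    assert(features.size() == targets.size());
    assert(weights.empty() || weights.size() == targets.size());
    Matrix m = features;
    for (std::size_t i = 0; i < m.size(); ++i) {
        m[i].push_back(targets[i]);
        if (!weights.empty()) m[i].push_back(weights[i]);
    }
    return m;
}
```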

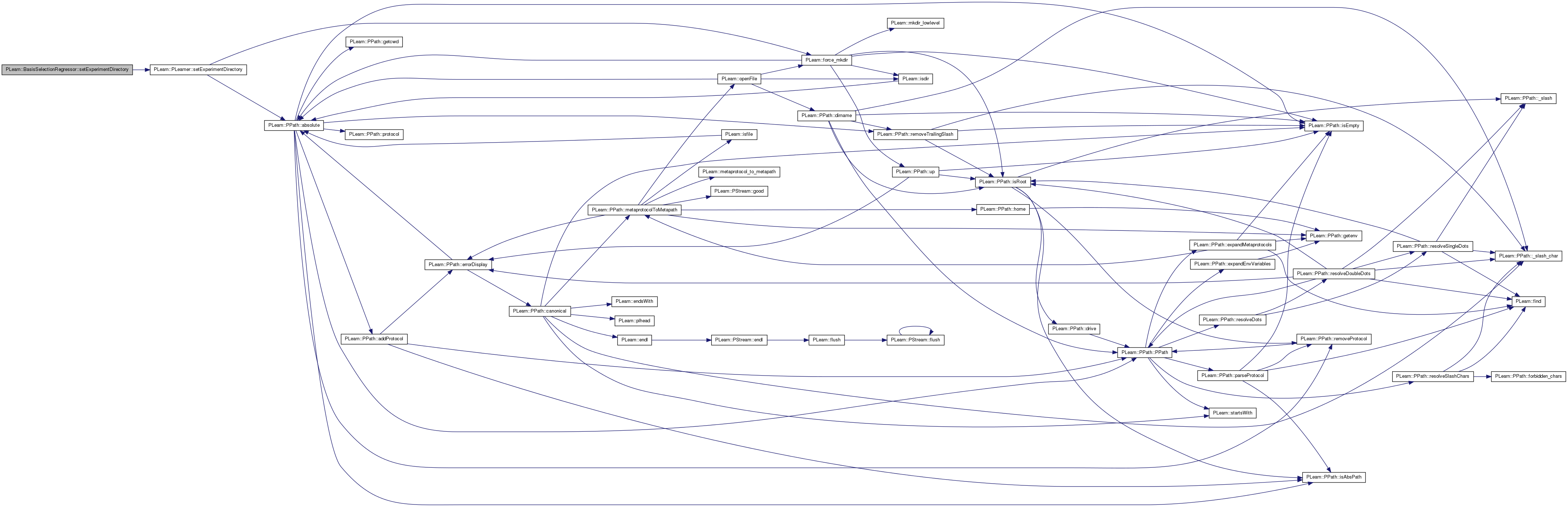

void PLearn::BasisSelectionRegressor::setExperimentDirectory(const PPath & the_expdir) [virtual]

The experiment directory is the directory in which files related to this model are to be saved.

If it is an empty string, it is understood to mean that the user doesn't want any file created by this learner.

Reimplemented from PLearn::PLearner.

Definition at line 268 of file BasisSelectionRegressor.cc.

References PLearn::PLearner::setExperimentDirectory(), and template_learner.

{

inherited::setExperimentDirectory(the_expdir);

template_learner->setExperimentDirectory(the_expdir / "SubLearner");

}

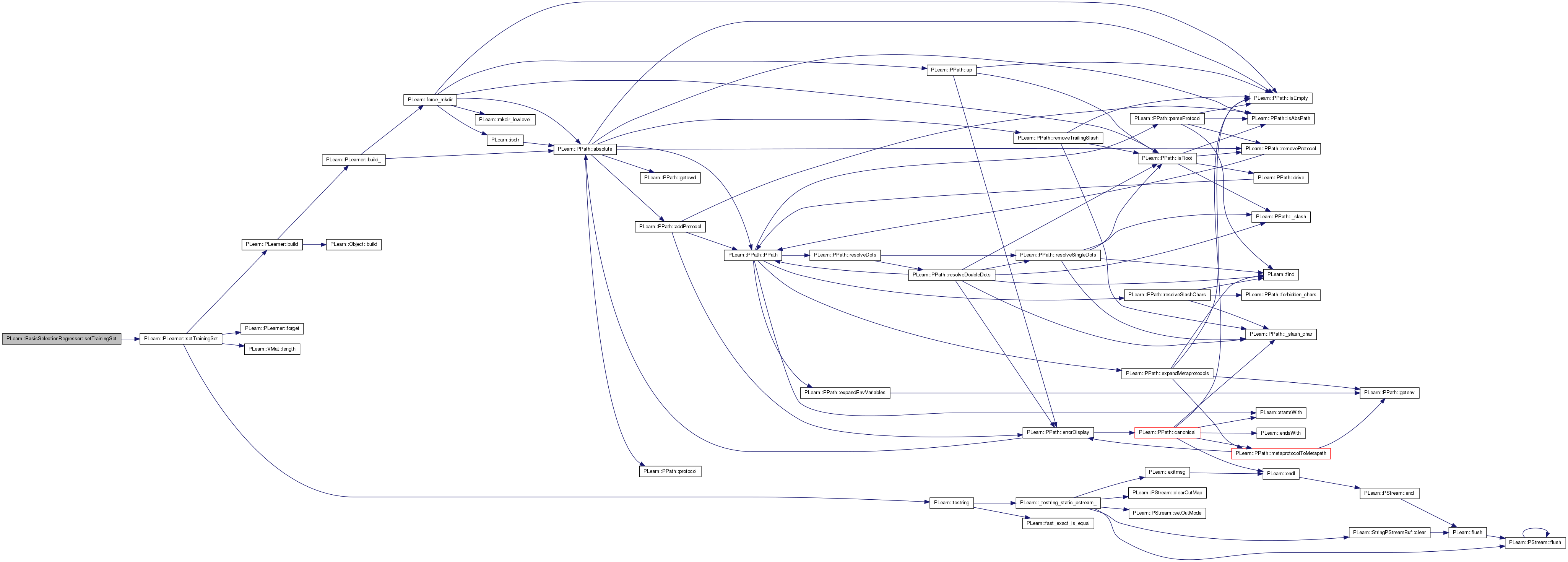

void PLearn::BasisSelectionRegressor::setTrainingSet(VMat training_set, bool call_forget = true) [virtual]

Sets the training set of this learner and forwards the call to the sub-learner (template_learner).

Reimplemented from PLearn::PLearner.

Definition at line 1324 of file BasisSelectionRegressor.cc.

References PLearn::PLearner::setTrainingSet(), and template_learner.

{

inherited::setTrainingSet(training_set, call_forget);

template_learner->setTrainingSet(training_set, call_forget);

}

void PLearn::BasisSelectionRegressor::setTrainStatsCollector(PP< VecStatsCollector > statscol) [virtual]

Sets the training stats collector of this learner and forwards it to the sub-learner (template_learner).

Reimplemented from PLearn::PLearner.

Definition at line 1312 of file BasisSelectionRegressor.cc.

References PLearn::PLearner::setTrainStatsCollector(), and template_learner.

{

inherited::setTrainStatsCollector(statscol);

template_learner->setTrainStatsCollector(statscol);

}

void PLearn::BasisSelectionRegressor::train() [virtual]

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 1137 of file BasisSelectionRegressor.cc.

References appendFunctionToSelection(), buildAllCandidateFunctions(), candidate_functions, PLearn::endl(), findBestCandidateFunction(), initTargetsResidueWeight(), PLearn::PLearner::initTrain(), PLearn::TVec< T >::length(), PLearn::PLearner::nstages, PLearn::perr, recomputeFeatures(), recomputeResidue(), residue_sum_sq, PLearn::TVec< T >::resize(), retrainLearner(), selected_functions, simple_candidate_functions, PLearn::PLearner::stage, targets, PLearn::PLearner::train_stats, and PLearn::PLearner::verbosity.

{

if(nstages > 0)

{

if (!initTrain())

return;

} // work around so that nstages can be zero...

else if (!train_stats)

train_stats = new VecStatsCollector();

if(stage==0)

{

simple_candidate_functions.resize(0);

buildAllCandidateFunctions();

}

while(stage<nstages)

{

if(targets.length()==0)

{

initTargetsResidueWeight();

if(selected_functions.length()>0)

{

recomputeFeatures();

if(stage==0) // only mandatory funcs.

retrainLearner();

if (candidate_functions.length()>0)

recomputeResidue();

}

}

if(candidate_functions.length()>0)

{

int best_candidate_index = -1;

real best_score = 0;

findBestCandidateFunction(best_candidate_index, best_score);

if(verbosity>=2)

perr << "\n\n*** Stage " << stage << " *****" << endl

<< "Best candidate: index=" << best_candidate_index << endl

<< " score=" << best_score << endl;

if(best_candidate_index>=0)

{

if(verbosity>=2)

perr << " function info = " << candidate_functions[best_candidate_index]->getInfo() << endl;

if(verbosity>=3)

perr << " function= " << candidate_functions[best_candidate_index] << endl;

appendFunctionToSelection(best_candidate_index);

if(verbosity>=2)

perr << "residue_sum_sq before retrain: " << residue_sum_sq << endl;

retrainLearner();

recomputeResidue();

if(verbosity>=2)

perr << "residue_sum_sq after retrain: " << residue_sum_sq << endl;

}

}

else

{

if(verbosity>=2)

perr << "\n\n*** Stage " << stage << " : no more candidate functions. *****" << endl;

}

++stage;

}

}
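Stripped of the PLearn plumbing, train() performs greedy forward selection: at each stage it picks the candidate most collinear with the current residue (findBestCandidateFunction()), appends it to selected_functions, retrains the underlying learner on all selected features (retrainLearner()) and recomputes the residue (recomputeResidue()). The self-contained C++ sketch below follows that loop under stated simplifications: the underlying learner is replaced by unweighted ordinary least squares without intercept, solved from the normal equations; candidates are precomputed feature columns scored with the unnormalized rule |E[x r]|; and already-selected columns are skipped explicitly. `solve` and `greedySelect` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // row-major

// Solves A x = b by Gaussian elimination with partial pivoting (A is n x n).
std::vector<double> solve(Matrix A, std::vector<double> b) {
    const std::size_t n = b.size();
    for (std::size_t c = 0; c < n; ++c) {
        std::size_t p = c;
        for (std::size_t r = c + 1; r < n; ++r)
            if (std::fabs(A[r][c]) > std::fabs(A[p][c])) p = r;
        std::swap(A[c], A[p]);
        std::swap(b[c], b[p]);
        assert(std::fabs(A[c][c]) > 1e-12 && "selected features are collinear");
        for (std::size_t r = c + 1; r < n; ++r) {
            double f = A[r][c] / A[c][c];
            for (std::size_t k = c; k < n; ++k) A[r][k] -= f * A[c][k];
            b[r] -= f * b[c];
        }
    }
    std::vector<double> x(n);
    for (std::size_t c = n; c-- > 0;) {
        double s = b[c];
        for (std::size_t k = c + 1; k < n; ++k) s -= A[c][k] * x[k];
        x[c] = s / A[c][c];
    }
    return x;
}

// cols[j][i] = value of candidate j on example i; y = targets.
// Returns the indices of the selected candidates, in selection order.
std::vector<int> greedySelect(const Matrix& cols, const std::vector<double>& y, int nstages) {
    const std::size_t l = y.size();
    std::vector<double> residue = y;  // initial residue = targets
    std::vector<int> selected;
    for (int stage = 0; stage < nstages; ++stage) {
        // Pick the unselected candidate maximizing |mean(x_j * residue)|.
        int best = -1;
        double best_score = 0.0;
        for (std::size_t j = 0; j < cols.size(); ++j) {
            bool taken = false;
            for (int s : selected) taken = taken || (s == static_cast<int>(j));
            if (taken) continue;
            double e_xy = 0.0;
            for (std::size_t i = 0; i < l; ++i) e_xy += cols[j][i] * residue[i];
            double score = std::fabs(e_xy / l);
            if (score > best_score) { best = static_cast<int>(j); best_score = score; }
        }
        if (best < 0) break;  // no remaining candidate correlates with the residue
        selected.push_back(best);

        // Refit: least squares on all selected features via the normal equations.
        const std::size_t nf = selected.size();
        Matrix XtX(nf, std::vector<double>(nf, 0.0));
        std::vector<double> Xty(nf, 0.0);
        for (std::size_t a = 0; a < nf; ++a) {
            for (std::size_t i = 0; i < l; ++i) Xty[a] += cols[selected[a]][i] * y[i];
            for (std::size_t b = 0; b < nf; ++b)
                for (std::size_t i = 0; i < l; ++i)
                    XtX[a][b] += cols[selected[a]][i] * cols[selected[b]][i];
        }
        std::vector<double> coef = solve(XtX, Xty);

        // Recompute the residue: target minus prediction.
        for (std::size_t i = 0; i < l; ++i) {
            double pred = 0.0;
            for (std::size_t a = 0; a < nf; ++a) pred += coef[a] * cols[selected[a]][i];
            residue[i] = y[i] - pred;
        }
    }
    return selected;
}
```

Because each refit uses all selected features at once, the residue after a stage is orthogonal to every selected column, which is why the next stage looks for the candidate that best explains what is left.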

Reimplemented from PLearn::PLearner.

Definition at line 155 of file BasisSelectionRegressor.h.

PLearn::BasisSelectionRegressor::alphas

Definition at line 95 of file BasisSelectionRegressor.h.

Referenced by computeOutputFromFeaturevec(), declareOptions(), makeDeepCopyFromShallowCopy(), and printModelFunction().

PLearn::BasisSelectionRegressor::candidate_functions

Definition at line 234 of file BasisSelectionRegressor.h.

Referenced by appendFunctionToSelection(), buildAllCandidateFunctions(), buildTopCandidateFunctions(), declareOptions(), findBestCandidateFunction(), forget(), makeDeepCopyFromShallowCopy(), and train().

PLearn::BasisSelectionRegressor::consider_constant_function

Definition at line 66 of file BasisSelectionRegressor.h.

Referenced by buildAllCandidateFunctions(), buildSimpleCandidateFunctions(), and declareOptions().

PLearn::BasisSelectionRegressor::consider_input_range_indicators

Definition at line 73 of file BasisSelectionRegressor.h.

Referenced by appendCandidateFunctionsOfSingleField(), and declareOptions().

PLearn::BasisSelectionRegressor::consider_interaction_terms

Definition at line 80 of file BasisSelectionRegressor.h.

Referenced by appendFunctionToSelection(), build_(), buildAllCandidateFunctions(), and declareOptions().

PLearn::BasisSelectionRegressor::consider_n_best_for_interaction

Definition at line 82 of file BasisSelectionRegressor.h.

Referenced by buildAllCandidateFunctions(), buildTopCandidateFunctions(), and declareOptions().

PLearn::BasisSelectionRegressor::consider_normalized_inputs

Definition at line 72 of file BasisSelectionRegressor.h.

Referenced by appendCandidateFunctionsOfSingleField(), and declareOptions().

PLearn::BasisSelectionRegressor::consider_raw_inputs

Definition at line 71 of file BasisSelectionRegressor.h.

Referenced by appendCandidateFunctionsOfSingleField(), and declareOptions().

PLearn::BasisSelectionRegressor::consider_sorted_encodings

Definition at line 84 of file BasisSelectionRegressor.h.

Referenced by declareOptions().

PLearn::BasisSelectionRegressor::explicit_functions

Definition at line 67 of file BasisSelectionRegressor.h.

Referenced by buildSimpleCandidateFunctions(), declareOptions(), and makeDeepCopyFromShallowCopy().

PLearn::BasisSelectionRegressor::explicit_interaction_functions

Definition at line 68 of file BasisSelectionRegressor.h.

Referenced by buildAllCandidateFunctions(), declareOptions(), and makeDeepCopyFromShallowCopy().

PLearn::BasisSelectionRegressor::explicit_interaction_variables

Definition at line 69 of file BasisSelectionRegressor.h.

Referenced by buildAllCandidateFunctions(), declareOptions(), and makeDeepCopyFromShallowCopy().

Mat PLearn::BasisSelectionRegressor::features [private]

Definition at line 235 of file BasisSelectionRegressor.h.

Referenced by appendFunctionToSelection(), forget(), makeDeepCopyFromShallowCopy(), recomputeFeatures(), recomputeResidue(), and retrainLearner().

Vec PLearn::BasisSelectionRegressor::featurevec [mutable, private]

Definition at line 244 of file BasisSelectionRegressor.h.

Referenced by computeOutput(), and makeDeepCopyFromShallowCopy().

PLearn::BasisSelectionRegressor::fixed_min_range

Definition at line 74 of file BasisSelectionRegressor.h.

Referenced by appendCandidateFunctionsOfSingleField(), and declareOptions().

PLearn::BasisSelectionRegressor::indicator_desired_prob

Definition at line 75 of file BasisSelectionRegressor.h.

Referenced by appendCandidateFunctionsOfSingleField(), and declareOptions().

PLearn::BasisSelectionRegressor::indicator_min_prob

Definition at line 76 of file BasisSelectionRegressor.h.

Referenced by appendCandidateFunctionsOfSingleField(), and declareOptions().

Vec PLearn::BasisSelectionRegressor::input [mutable, private]

Definition at line 242 of file BasisSelectionRegressor.h.

Referenced by appendFunctionToSelection(), appendKernelFunctions(), computeWeightedAveragesWithResidue(), initTargetsResidueWeight(), makeDeepCopyFromShallowCopy(), PLearn::BasisSelectionRegressor::thread_wawr::operator()(), recomputeFeatures(), and recomputeResidue().

PLearn::BasisSelectionRegressor::interaction_max_order

Definition at line 83 of file BasisSelectionRegressor.h.

Referenced by addInteractionFunction(), and declareOptions().

PLearn::BasisSelectionRegressor::kernel_centers

Definition at line 78 of file BasisSelectionRegressor.h.

Referenced by appendKernelFunctions(), declareOptions(), forget(), and makeDeepCopyFromShallowCopy().

PLearn::BasisSelectionRegressor::kernels

Definition at line 77 of file BasisSelectionRegressor.h.

Referenced by appendKernelFunctions(), buildSimpleCandidateFunctions(), declareOptions(), and makeDeepCopyFromShallowCopy().

PLearn::BasisSelectionRegressor::learner

Definition at line 87 of file BasisSelectionRegressor.h.

Referenced by computeOutputFromFeaturevec(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and retrainLearner().

PLearn::BasisSelectionRegressor::mandatory_functions

Definition at line 70 of file BasisSelectionRegressor.h.

Referenced by buildAllCandidateFunctions(), declareOptions(), and makeDeepCopyFromShallowCopy().

PLearn::BasisSelectionRegressor::max_interaction_terms

Definition at line 81 of file BasisSelectionRegressor.h.

Referenced by buildAllCandidateFunctions(), and declareOptions().

PLearn::BasisSelectionRegressor::max_n_vals_for_sorted_encodings

Definition at line 85 of file BasisSelectionRegressor.h.

Referenced by declareOptions().

PLearn::BasisSelectionRegressor::n_kernel_centers_to_pick

Definition at line 79 of file BasisSelectionRegressor.h.

Referenced by appendKernelFunctions(), declareOptions(), and forget().

PLearn::BasisSelectionRegressor::n_threads

Definition at line 89 of file BasisSelectionRegressor.h.

Referenced by computeWeightedAveragesWithResidue(), and declareOptions().

PLearn::BasisSelectionRegressor::normalize_features

Definition at line 86 of file BasisSelectionRegressor.h.

Referenced by declareOptions(), and findBestCandidateFunction().

PLearn::BasisSelectionRegressor::precompute_features

Definition at line 88 of file BasisSelectionRegressor.h.

Referenced by appendFunctionToSelection(), declareOptions(), recomputeFeatures(), recomputeResidue(), and retrainLearner().

Vec PLearn::BasisSelectionRegressor::residue [private]

Definition at line 236 of file BasisSelectionRegressor.h.

Referenced by computeWeightedAveragesWithResidue(), forget(), initTargetsResidueWeight(), makeDeepCopyFromShallowCopy(), and recomputeResidue().

double PLearn::BasisSelectionRegressor::residue_sum [private]

Definition at line 239 of file BasisSelectionRegressor.h.

Referenced by initTargetsResidueWeight(), and recomputeResidue().

double PLearn::BasisSelectionRegressor::residue_sum_sq [private]

Definition at line 240 of file BasisSelectionRegressor.h.

Referenced by initTargetsResidueWeight(), recomputeResidue(), and train().

Mat PLearn::BasisSelectionRegressor::scores [mutable]

Definition at line 96 of file BasisSelectionRegressor.h.

Referenced by buildTopCandidateFunctions(), declareOptions(), findBestCandidateFunction(), and makeDeepCopyFromShallowCopy().

PLearn::BasisSelectionRegressor::selected_functions

Definition at line 94 of file BasisSelectionRegressor.h.

Referenced by appendFunctionToSelection(), buildAllCandidateFunctions(), computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), printModelFunction(), recomputeFeatures(), retrainLearner(), and train().

PLearn::BasisSelectionRegressor::simple_candidate_functions

Definition at line 233 of file BasisSelectionRegressor.h.

Referenced by buildAllCandidateFunctions(), buildSimpleCandidateFunctions(), buildTopCandidateFunctions(), findBestCandidateFunction(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::BasisSelectionRegressor::targ [mutable, private]

Definition at line 243 of file BasisSelectionRegressor.h.

Referenced by appendFunctionToSelection(), appendKernelFunctions(), computeWeightedAveragesWithResidue(), initTargetsResidueWeight(), makeDeepCopyFromShallowCopy(), PLearn::BasisSelectionRegressor::thread_wawr::operator()(), recomputeFeatures(), and recomputeResidue().

Vec PLearn::BasisSelectionRegressor::targets [private]

Definition at line 237 of file BasisSelectionRegressor.h.

Referenced by forget(), initTargetsResidueWeight(), makeDeepCopyFromShallowCopy(), recomputeResidue(), retrainLearner(), and train().

PP<PLearner> PLearn::BasisSelectionRegressor::template_learner [protected]

Definition at line 228 of file BasisSelectionRegressor.h.

Referenced by declareOptions(), getTrainCostNames(), makeDeepCopyFromShallowCopy(), outputsize(), retrainLearner(), setExperimentDirectory(), setTrainingSet(), and setTrainStatsCollector().

PLearn::BasisSelectionRegressor::thread_subtrain_length

Definition at line 90 of file BasisSelectionRegressor.h.

Referenced by computeWeightedAveragesWithResidue(), and declareOptions().

PLearn::BasisSelectionRegressor::use_all_basis

Definition at line 91 of file BasisSelectionRegressor.h.

Referenced by build_(), buildAllCandidateFunctions(), computeOutputFromFeaturevec(), and declareOptions().

Vec PLearn::BasisSelectionRegressor::weights [private]

Definition at line 238 of file BasisSelectionRegressor.h.

Referenced by forget(), initTargetsResidueWeight(), makeDeepCopyFromShallowCopy(), recomputeResidue(), and retrainLearner().

1.7.4
