PLearn 0.1

Computes the NLL, given a probability vector and the true class.

#include <SoftmaxNLLCostModule.h>

Public Member Functions | |

| SoftmaxNLLCostModule () | |

| Default constructor. | |

| virtual void | fprop (const Vec &input, const Vec &target, Vec &cost) const |

| given the input and target, compute the cost | |

| virtual void | fprop (const Mat &inputs, const Mat &targets, Mat &costs) const |

| batch version | |

| virtual void | fprop (const TVec< Mat * > &ports_value) |

| new version | |

| virtual void | bpropUpdate (const Vec &input, const Vec &target, real cost, Vec &input_gradient, bool accumulate=false) |

| Adapt based on the output gradient: this method should only be called just after a corresponding fprop. | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &targets, const Vec &costs, Mat &input_gradients, bool accumulate=false) |

| Overridden. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &target, real cost) |

| Does nothing. | |

| virtual void | bpropAccUpdate (const TVec< Mat * > &ports_value, const TVec< Mat * > &ports_gradient) |

| New version of backpropagation. | |

| virtual void | bbpropUpdate (const Vec &input, const Vec &target, real cost, Vec &input_gradient, Vec &input_diag_hessian, bool accumulate=false) |

| Similar to bpropUpdate, but also adapts based on an estimate of the diagonal of the Hessian matrix, and propagates it back. | |

| virtual void | bbpropUpdate (const Vec &input, const Vec &target, real cost) |

| Does nothing. | |

| virtual void | setLearningRate (real dynamic_learning_rate) |

| Overridden to do nothing (in particular, no warning). | |

| virtual TVec< string > | costNames () |

| Indicates the name of the computed costs. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual SoftmaxNLLCostModule * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef CostModule | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Private Attributes | |

| Vec | tmp_vec |

| Mat | tmp_mat |

Computes the NLL, given a probability vector and the true class.

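Although the brief description speaks of a probability vector, the fprop code on this page applies log_softmax to the input, so input is treated as a vector of unnormalized activations. With target the index of the true class, this module computes cost = -log( softmax(input)[target] ) and back-propagates the gradient, which is softmax(input) with 1 subtracted at the target index. The bbpropUpdate overload that takes the diagonal of the Hessian is declared, but its body is PLCHECK(false).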
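The fprop bodies on this page call log_softmax on the input and then negate the entry at the target index. A minimal standalone sketch of that computation follows. It uses std::vector instead of PLearn's Vec, and the names log_softmax_sketch and softmax_nll_sketch are hypothetical helpers, not PLearn functions. Subtracting the maximum before exponentiating is a choice made for this sketch; this page does not show how PLearn's own log_softmax is implemented.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not PLearn API): log-softmax of a vector of
// activations, using max-subtraction to avoid overflow in exp().
std::vector<double> log_softmax_sketch(const std::vector<double>& x)
{
    assert(!x.empty());
    const double x_max = *std::max_element(x.begin(), x.end());
    double sum_exp = 0.0;
    for (double v : x)
        sum_exp += std::exp(v - x_max);
    const double log_norm = x_max + std::log(sum_exp);

    std::vector<double> out(x.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        out[i] = x[i] - log_norm;
    return out;
}

// Hypothetical helper: cost = -log( softmax(x)[target] ).
double softmax_nll_sketch(const std::vector<double>& x, std::size_t target)
{
    assert(target < x.size());
    return -log_softmax_sketch(x)[target];
}
```

For example, with two equal activations the cost for either class is log(2), since softmax assigns each class probability 1/2.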

Definition at line 53 of file SoftmaxNLLCostModule.h.

typedef CostModule PLearn::SoftmaxNLLCostModule::inherited [private] |

Reimplemented from PLearn::CostModule.

Definition at line 55 of file SoftmaxNLLCostModule.h.

| PLearn::SoftmaxNLLCostModule::SoftmaxNLLCostModule | ( | ) |

Default constructor.

Definition at line 53 of file SoftmaxNLLCostModule.cc.

    {
        output_size = 1;
        target_size = 1;
    }

| string PLearn::SoftmaxNLLCostModule::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| OptionList & PLearn::SoftmaxNLLCostModule::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| RemoteMethodMap & PLearn::SoftmaxNLLCostModule::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| bool PLearn::SoftmaxNLLCostModule::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| Object * PLearn::SoftmaxNLLCostModule::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| void PLearn::SoftmaxNLLCostModule::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| virtual void PLearn::SoftmaxNLLCostModule::bbpropUpdate | ( | const Vec & input, const Vec & target, real cost | ) | [inline, virtual] |

Does nothing.

Reimplemented from PLearn::CostModule.

Definition at line 103 of file SoftmaxNLLCostModule.h.

{}

| void PLearn::SoftmaxNLLCostModule::bbpropUpdate | ( | const Vec & input, const Vec & target, real cost, Vec & input_gradient, Vec & input_diag_hessian, bool accumulate = false | ) | [virtual] |

Similar to bpropUpdate, but also adapts based on an estimate of the diagonal of the Hessian matrix, and propagates it back.

Reimplemented from PLearn::CostModule.

Definition at line 296 of file SoftmaxNLLCostModule.cc.

References PLCHECK.

    {
        PLCHECK(false);
    }
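The body above only fails a check, so this overload does not compute a Hessian diagonal. For reference, and assuming the cost is -log( softmax(x)[t] ) as in the fprop code on this page, the quantities such a method would propagate can be written as follows. The symbols p, C, x and t are notation introduced here, not names from the source.

```latex
\[
p_i = \frac{e^{x_i}}{\sum_j e^{x_j}}, \qquad
C = -\log p_t, \qquad
\frac{\partial C}{\partial x_i} = p_i - \delta_{it}, \qquad
\frac{\partial^2 C}{\partial x_i^2} = p_i \,(1 - p_i)
\]
```

The first derivative matches the comment in the bpropUpdate bodies (softmax(x)[i], minus 1 at the target index). The second derivative follows by differentiating p_i with respect to x_i.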

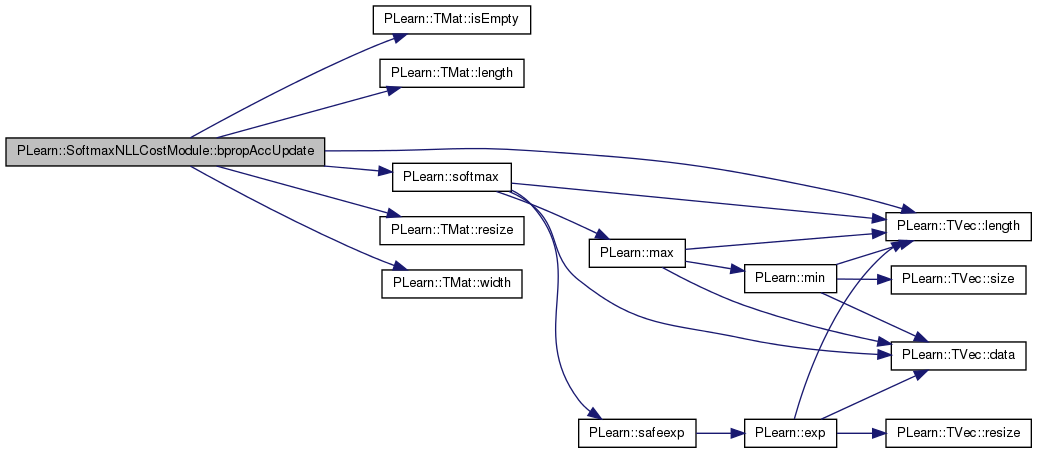

| void PLearn::SoftmaxNLLCostModule::bpropAccUpdate | ( | const TVec< Mat * > & ports_value, const TVec< Mat * > & ports_gradient | ) | [virtual] |

New version of backpropagation.

Reimplemented from PLearn::CostModule.

Definition at line 235 of file SoftmaxNLLCostModule.cc.

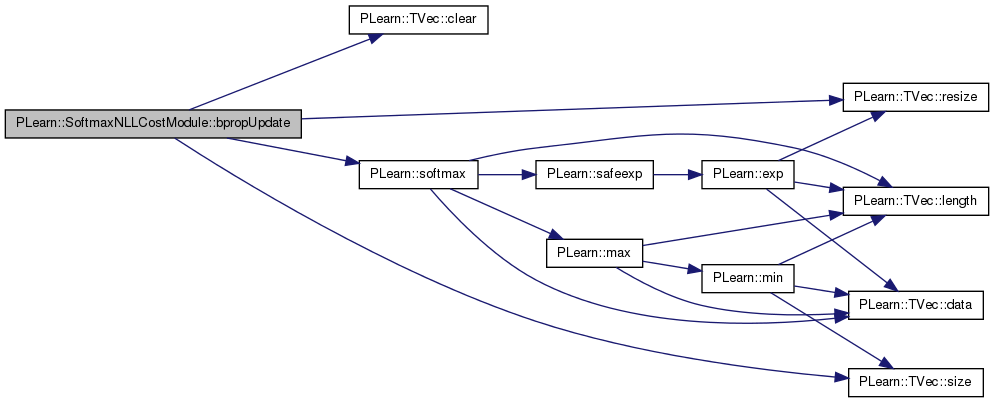

References PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLASSERT, PLERROR, PLearn::TMat< T >::resize(), PLearn::softmax(), and PLearn::TMat< T >::width().

    {
        PLASSERT( ports_value.length() == nPorts() );
        PLASSERT( ports_gradient.length() == nPorts() );

        Mat* prediction = ports_value[0];
        Mat* target = ports_value[1];
    #ifdef BOUNDCHECK
        Mat* cost = ports_value[2];
    #endif
        Mat* prediction_grad = ports_gradient[0];
        Mat* target_grad = ports_gradient[1];
        Mat* cost_grad = ports_gradient[2];

        // If we have cost_grad and we want prediction_grad
        if( prediction_grad && prediction_grad->isEmpty()
            && cost_grad && !cost_grad->isEmpty() )
        {
            PLASSERT( prediction );
            PLASSERT( target );
            PLASSERT( cost );
            PLASSERT( !target_grad );

            PLASSERT( prediction->width() == port_sizes(0,1) );
            PLASSERT( target->width() == port_sizes(1,1) );
            PLASSERT( cost->width() == port_sizes(2,1) );
            PLASSERT( prediction_grad->width() == port_sizes(0,1) );
            PLASSERT( cost_grad->width() == port_sizes(2,1) );
            PLASSERT( cost_grad->width() == 1 );

            int batch_size = prediction->length();
            PLASSERT( target->length() == batch_size );
            PLASSERT( cost->length() == batch_size );
            PLASSERT( cost_grad->length() == batch_size );

            prediction_grad->resize(batch_size, port_sizes(0,1));

            for( int k=0; k<batch_size; k++ )
            {
                // input_gradient[ i ] = softmax(x)[i] if i != t,
                // input_gradient[ t ] = softmax(x)[t] - 1.
                int target_k = (int) round((*target)(k, 0));
                softmax((*prediction)(k), (*prediction_grad)(k));
                (*prediction_grad)(k, target_k) -= 1.;
            }
        }
        else if( !prediction_grad && !target_grad && !cost_grad )
            return;
        else if( !cost_grad && prediction_grad && prediction_grad->isEmpty() )
            PLERROR("In SoftmaxNLLCostModule::bpropAccUpdate - cost gradient is NULL,\n"
                    "cannot compute prediction gradient. Maybe you should set\n"
                    "\"propagate_gradient = 0\" on the incoming connection.\n");
        else
            PLERROR("In OnlineLearningModule::bpropAccUpdate - Port configuration "
                    "not implemented for class '%s'", classname().c_str());

        checkProp(ports_value);
        checkProp(ports_gradient);
    }

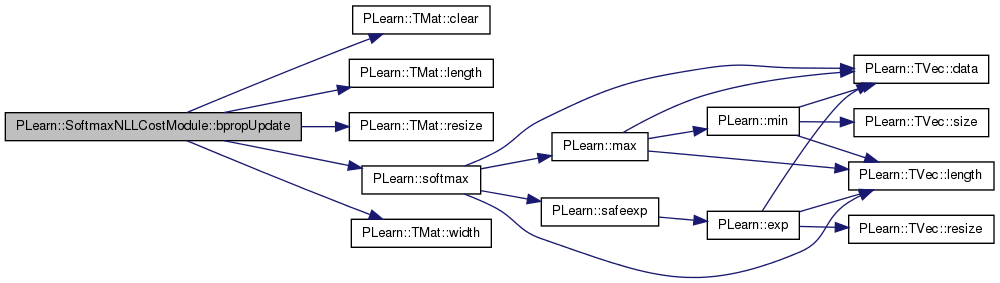

| void PLearn::SoftmaxNLLCostModule::bpropUpdate | ( | const Mat & inputs, const Mat & targets, const Vec & costs, Mat & input_gradients, bool accumulate = false | ) | [virtual] |

Overridden.

Reimplemented from PLearn::CostModule.

Definition at line 207 of file SoftmaxNLLCostModule.cc.

References PLearn::TMat< T >::clear(), i, PLearn::TMat< T >::length(), PLASSERT, PLASSERT_MSG, PLearn::TMat< T >::resize(), PLearn::softmax(), and PLearn::TMat< T >::width().

    {
        PLASSERT( inputs.width() == input_size );
        PLASSERT( targets.width() == target_size );

        if( accumulate )
        {
            PLASSERT_MSG( input_gradients.width() == input_size &&
                          input_gradients.length() == inputs.length(),
                          "Cannot resize input_gradients and accumulate into it" );
        }
        else
        {
            input_gradients.resize(inputs.length(), input_size );
            input_gradients.clear();
        }

        // input_gradient[ i ] = softmax(x)[i] if i != t,
        // input_gradient[ t ] = softmax(x)[t] - 1.
        for (int i = 0; i < inputs.length(); i++) {
            int the_target = (int) round( targets(i, 0) );
            softmax(inputs(i), input_gradients(i));
            input_gradients(i, the_target) -= 1.;
        }
    }

| void PLearn::SoftmaxNLLCostModule::bpropUpdate | ( | const Vec & input, const Vec & target, real cost, Vec & input_gradient, bool accumulate = false | ) | [virtual] |

Adapt based on the output gradient: this method should only be called just after a corresponding fprop.

Reimplemented from PLearn::CostModule.

Definition at line 181 of file SoftmaxNLLCostModule.cc.

References PLearn::TVec< T >::clear(), PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), and PLearn::softmax().

    {
        PLASSERT( input.size() == input_size );
        PLASSERT( target.size() == target_size );

        if( accumulate )
        {
            PLASSERT_MSG( input_gradient.size() == input_size,
                          "Cannot resize input_gradient AND accumulate into it" );
        }
        else
        {
            input_gradient.resize( input_size );
            input_gradient.clear();
        }

        int the_target = (int) round( target[0] );

        // input_gradient[ i ] = softmax(x)[i] if i != t,
        // input_gradient[ t ] = softmax(x)[t] - 1.
        softmax(input, input_gradient);
        input_gradient[ the_target ] -= 1.;
    }
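The comment in the body above gives the gradient rule: softmax(x)[i] for every index, minus 1 at the target index. The following standalone sketch reproduces that rule with std::vector so it can be checked against a finite-difference estimate of the cost. All three function names are hypothetical and are not part of PLearn; the max-subtraction in softmax_sketch is a choice of this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not PLearn API): softmax with max-subtraction.
std::vector<double> softmax_sketch(const std::vector<double>& x)
{
    assert(!x.empty());
    const double x_max = *std::max_element(x.begin(), x.end());
    std::vector<double> p(x.size());
    double sum_exp = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        p[i] = std::exp(x[i] - x_max);
        sum_exp += p[i];
    }
    for (double& v : p)
        v /= sum_exp;
    return p;
}

// Gradient of -log(softmax(x)[target]) with respect to x:
// softmax(x)[i] for i != target, softmax(x)[target] - 1 at the target.
std::vector<double> nll_input_gradient_sketch(const std::vector<double>& x,
                                              std::size_t target)
{
    assert(target < x.size());
    std::vector<double> grad = softmax_sketch(x);
    grad[target] -= 1.0;
    return grad;
}

// The cost itself, used only to compare against the analytic gradient.
double nll_cost_sketch(const std::vector<double>& x, std::size_t target)
{
    assert(target < x.size());
    return -std::log(softmax_sketch(x)[target]);
}
```

Because the softmax entries sum to 1, the gradient entries always sum to 0, which makes a convenient sanity check alongside the finite-difference comparison.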

| virtual void PLearn::SoftmaxNLLCostModule::bpropUpdate | ( | const Vec & input, const Vec & target, real cost | ) | [inline, virtual] |

Does nothing.

Reimplemented from PLearn::CostModule.

Definition at line 89 of file SoftmaxNLLCostModule.h.

{}

| void PLearn::SoftmaxNLLCostModule::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again later, after some option fields have been modified to change the "architecture" of the object.

Reimplemented from PLearn::CostModule.

Definition at line 74 of file SoftmaxNLLCostModule.cc.

    {
        inherited::build();
        build_();
    }

| void PLearn::SoftmaxNLLCostModule::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::CostModule.

Definition at line 69 of file SoftmaxNLLCostModule.cc.

    {
    }

| string PLearn::SoftmaxNLLCostModule::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| TVec< string > PLearn::SoftmaxNLLCostModule::costNames | ( | ) | [virtual] |

Indicates the name of the computed costs.

Reimplemented from PLearn::CostModule.

Definition at line 304 of file SoftmaxNLLCostModule.cc.

| void PLearn::SoftmaxNLLCostModule::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::CostModule.

Definition at line 59 of file SoftmaxNLLCostModule.cc.

    {
        // declareOption(ol, "myoption", &SoftmaxNLLCostModule::myoption,
        //               OptionBase::buildoption,
        //               "Help text describing this option");

        // Now call the parent class' declareOptions
        inherited::declareOptions(ol);
    }

| static const PPath& PLearn::SoftmaxNLLCostModule::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::CostModule.

Definition at line 117 of file SoftmaxNLLCostModule.h.


| SoftmaxNLLCostModule * PLearn::SoftmaxNLLCostModule::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| void PLearn::SoftmaxNLLCostModule::fprop | ( | const Mat & inputs, const Mat & targets, Mat & costs | ) | const [virtual] |

batch version

Reimplemented from PLearn::CostModule.

Definition at line 108 of file SoftmaxNLLCostModule.cc.

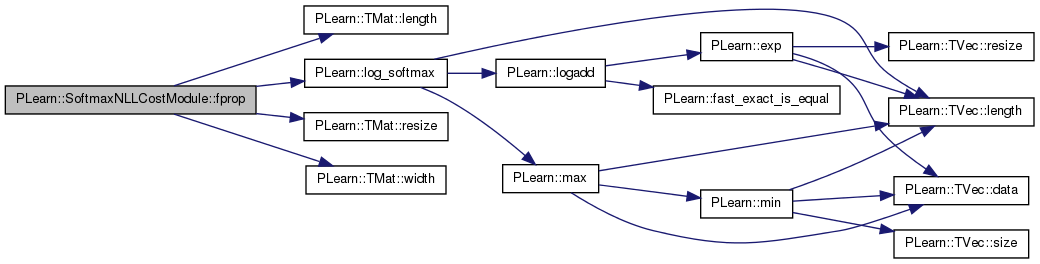

References PLearn::TMat< T >::length(), PLearn::log_softmax(), MISSING_VALUE, PLASSERT, PLearn::TMat< T >::resize(), and PLearn::TMat< T >::width().

    {
        PLASSERT( inputs.width() == input_size );
        PLASSERT( targets.width() == target_size );

        int batch_size = inputs.length();
        PLASSERT( inputs.length() == batch_size );
        PLASSERT( targets.length() == batch_size );

        tmp_vec.resize(input_size);
        costs.resize(batch_size, output_size);

        for( int k=0; k<batch_size; k++ )
        {
            if (inputs(k).hasMissing())
                costs(k, 0) = MISSING_VALUE;
            else
            {
                int target_k = (int) round( targets(k, 0) );
                log_softmax(inputs(k), tmp_vec);
                costs(k, 0) = - tmp_vec[target_k];
            }
        }
    }
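The batch loop above handles each row independently: a row containing a missing value gets a missing cost, and every other row gets the negated log-softmax entry at its rounded target. A standalone sketch of the same row-wise logic is shown below. It assumes NaN as a stand-in for MISSING_VALUE and uses nested std::vector in place of Mat; every name ending in `_sketch` or `Sketch` is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Stand-in for PLearn's MISSING_VALUE; using NaN is an assumption of this sketch.
const double kMissingSketch = std::numeric_limits<double>::quiet_NaN();

// Hypothetical helper: true if any entry of the row is NaN.
bool has_missing_sketch(const std::vector<double>& row)
{
    return std::any_of(row.begin(), row.end(),
                       [](double v) { return std::isnan(v); });
}

// Hypothetical helper: -log(softmax(row)[target]), with max-subtraction.
double row_nll_sketch(const std::vector<double>& row, std::size_t target)
{
    assert(!row.empty() && target < row.size());
    const double row_max = *std::max_element(row.begin(), row.end());
    double sum_exp = 0.0;
    for (double v : row)
        sum_exp += std::exp(v - row_max);
    return row_max + std::log(sum_exp) - row[target];
}

// One cost per row; targets are stored as reals and rounded to an index,
// mirroring the (int) round(...) cast in the code above.
std::vector<double> batch_nll_sketch(const std::vector<std::vector<double>>& inputs,
                                     const std::vector<double>& targets)
{
    assert(inputs.size() == targets.size());
    std::vector<double> costs(inputs.size());
    for (std::size_t k = 0; k < inputs.size(); ++k) {
        if (has_missing_sketch(inputs[k])) {
            costs[k] = kMissingSketch;
        } else {
            const std::size_t target_k =
                static_cast<std::size_t>(std::lround(targets[k]));
            costs[k] = row_nll_sketch(inputs[k], target_k);
        }
    }
    return costs;
}
```

Propagating a missing cost instead of failing lets a caller skip or average over incomplete rows without special-casing them before the call.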

| void PLearn::SoftmaxNLLCostModule::fprop | ( | const Vec & input, const Vec & target, Vec & cost | ) | const [virtual] |

given the input and target, compute the cost

Reimplemented from PLearn::CostModule.

Definition at line 90 of file SoftmaxNLLCostModule.cc.

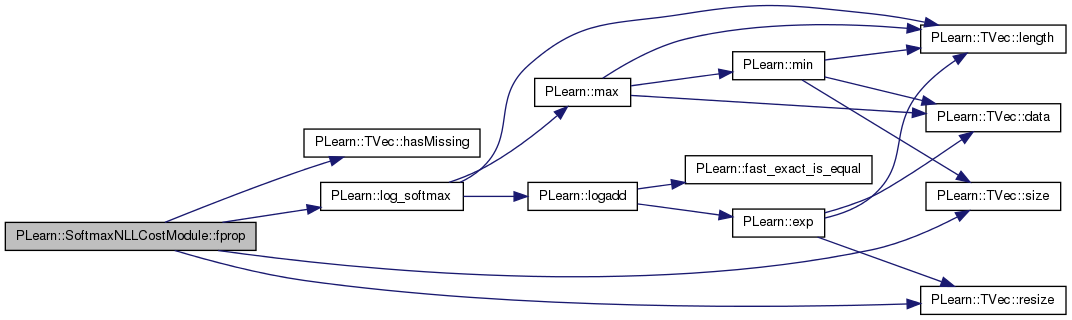

References PLearn::TVec< T >::hasMissing(), PLearn::log_softmax(), MISSING_VALUE, PLASSERT, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::size().

    {
        PLASSERT( input.size() == input_size );
        PLASSERT( target.size() == target_size );

        tmp_vec.resize(input_size);
        cost.resize(output_size);

        if (input.hasMissing())
            cost[0] = MISSING_VALUE;
        else
        {
            int the_target = (int) round( target[0] );
            log_softmax(input, tmp_vec);
            cost[0] = - tmp_vec[the_target];
        }
    }

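| void PLearn::SoftmaxNLLCostModule::fprop | ( | const TVec< Mat * > & | ports_value | ) | [virtual] |

new version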

Reimplemented from PLearn::CostModule.

Definition at line 134 of file SoftmaxNLLCostModule.cc.

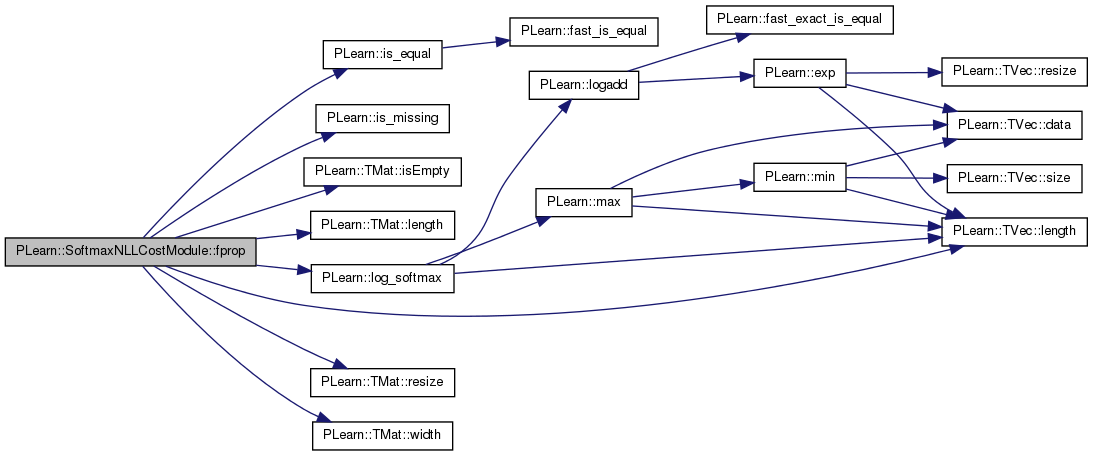

References i, PLearn::is_equal(), PLearn::is_missing(), PLearn::TMat< T >::isEmpty(), PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::log_softmax(), MISSING_VALUE, PLASSERT, PLCHECK_MSG, PLearn::TMat< T >::resize(), and PLearn::TMat< T >::width().

    {
        PLASSERT( ports_value.length() == nPorts() );

        Mat* prediction = ports_value[0];
        Mat* target = ports_value[1];
        Mat* cost = ports_value[2];

        // If we have prediction and target, and we want cost
        if( prediction && !prediction->isEmpty()
            && target && !target->isEmpty()
            && cost && cost->isEmpty() )
        {
            PLASSERT( prediction->width() == port_sizes(0, 1) );
            PLASSERT( target->width() == port_sizes(1, 1) );

            int batch_size = prediction->length();
            PLASSERT( target->length() == batch_size );

            cost->resize(batch_size, port_sizes(2, 1));

            for( int i=0; i<batch_size; i++ )
            {
                if( (*prediction)(i).hasMissing() || is_missing((*target)(i,0)) )
                    (*cost)(i,0) = MISSING_VALUE;
                else
                {
                    int target_i = (int) round( (*target)(i,0) );
                    PLASSERT( is_equal( (*target)(i, 0), target_i ) );
                    log_softmax( (*prediction)(i), tmp_vec );
                    (*cost)(i,0) = - tmp_vec[target_i];
                }
            }
        }
        else if( !prediction && !target && !cost )
            return;
        else
            PLCHECK_MSG( false, "Unknown port configuration" );

        checkProp(ports_value);
    }
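The branching above depends on the state of each port pointer. Reading the conditions, a non-null, non-empty matrix is treated as a provided value, a non-null empty matrix as a value to be computed, and three null pointers as nothing to do. The sketch below isolates that dispatch, using a nested std::vector in place of Mat. The enum and function names are hypothetical, and the labels Absent, Requested and Provided are this sketch's interpretation rather than PLearn terminology.

```cpp
#include <vector>

// Stand-in for PLearn's Mat; only emptiness matters in this sketch.
using MatrixSketch = std::vector<std::vector<double>>;

enum class PortStateSketch { Absent, Requested, Provided };

// Classify one port pointer the way the conditions above read it.
PortStateSketch port_state_sketch(const MatrixSketch* m)
{
    if (m == nullptr)
        return PortStateSketch::Absent;
    return m->empty() ? PortStateSketch::Requested : PortStateSketch::Provided;
}

enum class FpropActionSketch { ComputeCost, Nothing, Unsupported };

// Decide what a ports-based fprop would do for a (prediction, target, cost) triple.
FpropActionSketch fprop_action_sketch(const MatrixSketch* prediction,
                                      const MatrixSketch* target,
                                      const MatrixSketch* cost)
{
    const PortStateSketch p = port_state_sketch(prediction);
    const PortStateSketch t = port_state_sketch(target);
    const PortStateSketch c = port_state_sketch(cost);

    // Prediction and target are given, cost is wanted.
    if (p == PortStateSketch::Provided && t == PortStateSketch::Provided
        && c == PortStateSketch::Requested)
        return FpropActionSketch::ComputeCost;

    // No port is connected: nothing to compute.
    if (p == PortStateSketch::Absent && t == PortStateSketch::Absent
        && c == PortStateSketch::Absent)
        return FpropActionSketch::Nothing;

    // Any other combination corresponds to the "Unknown port configuration" check.
    return FpropActionSketch::Unsupported;
}
```

Separating the classification from the computation keeps the supported configurations visible in one place, which is roughly what the if / else if / else chain in the original does inline.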

| OptionList & PLearn::SoftmaxNLLCostModule::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| OptionMap & PLearn::SoftmaxNLLCostModule::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| RemoteMethodMap & PLearn::SoftmaxNLLCostModule::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::CostModule.

Definition at line 51 of file SoftmaxNLLCostModule.cc.

| void PLearn::SoftmaxNLLCostModule::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::CostModule.

Definition at line 81 of file SoftmaxNLLCostModule.cc.

    {
        inherited::makeDeepCopyFromShallowCopy(copies);
    }

| virtual void PLearn::SoftmaxNLLCostModule::setLearningRate | ( | real | dynamic_learning_rate | ) | [inline, virtual] |

Overridden to do nothing (in particular, no warning).

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 107 of file SoftmaxNLLCostModule.h.

{}

StaticInitializer PLearn::SoftmaxNLLCostModule::_static_initializer_ [static] |

Reimplemented from PLearn::CostModule.

Definition at line 117 of file SoftmaxNLLCostModule.h.

Mat PLearn::SoftmaxNLLCostModule::tmp_mat [mutable, private] |

Definition at line 143 of file SoftmaxNLLCostModule.h.

Vec PLearn::SoftmaxNLLCostModule::tmp_vec [mutable, private] |

Definition at line 142 of file SoftmaxNLLCostModule.h.

1.7.4
