Learning relational dictionaries

Modelling two images with one set of hidden variables makes it possible to learn spatio-temporal features that can represent the relationship between the images.

The key ingredient that makes this work is to let the three groups of variables (the two images and the hidden variables) interact multiplicatively, which leads to a gated sparse coding model.
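To make the multiplicative interaction concrete, here is a minimal NumPy sketch of how the hidden units could be inferred in a factored three-way model. It assumes binary (logistic) hidden units and a factored parameterization in which every factor has one filter per group of variables. The names `hidden_probs`, `wx`, `wy`, `wh` and `bh` are hypothetical and are not taken from the downloadable implementation.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def hidden_probs(x, y, wx, wy, wh, bh):
    """p(h_k = 1 | x, y) in a factored three-way model (sketch).

    x: (nx,) input image, y: (ny,) output image, both flattened.
    wx: (nx, F), wy: (ny, F) and wh: (nh, F) hold one filter per factor for
    each group of variables; bh: (nh,) are the hidden biases.
    """
    fx = x @ wx   # response of each factor's input filter, shape (F,)
    fy = y @ wy   # response of each factor's output filter, shape (F,)
    # Multiplicative interaction: a factor contributes only through fx * fy
    return sigmoid(wh @ (fx * fy) + bh)

# Usage with small random (untrained) weights
rng = np.random.default_rng(0)
nx, ny, F, nh = 16, 16, 8, 4
wx = 0.1 * rng.normal(size=(nx, F))
wy = 0.1 * rng.normal(size=(ny, F))
wh = 0.1 * rng.normal(size=(nh, F))
bh = np.zeros(nh)
x, y = rng.random(nx), rng.random(ny)
p = hidden_probs(x, y, wx, wy, wh, bh)                        # shape (4,)
p_no_output = hidden_probs(x, np.zeros(ny), wx, wy, wh, bh)   # sigmoid(bh), i.e. 0.5 here
```

Because the filter responses are multiplied rather than added, a hidden unit receives no input from a factor unless both images drive that factor's filters: with an all-zero output image only the biases remain.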

Factored gated Boltzmann machines ([Memisevic, Hinton, 2010]) implement this idea as a probabilistic model with a simple Hebbian learning rule. The approach can, however, be applied to other sparse coding techniques as well.
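The Hebbian character of the learning rule can be illustrated with contrastive divergence (CD-1): each weight changes in proportion to the correlation of the variables it connects, measured once on the data and once on a one-step reconstruction. This is a minimal sketch, assuming a conditional model p(y, h | x) with binary hidden units and unit-variance Gaussian output units, using the mean of the output distribution (not a sample) as the reconstruction, and leaving out details such as momentum, weight decay and filter normalization. The function and parameter names are hypothetical; it is not the code of the downloadable implementation.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def cd1_update(x, y, wx, wy, wh, bh, by, lr=0.01, rng=None):
    """One CD-1 step for a factored conditional model p(y, h | x) (sketch).

    Assumes binary hidden units and unit-variance Gaussian output units.
    x: (N, nx) input images, y: (N, ny) output images, one flattened image per row.
    wx: (nx, F), wy: (ny, F), wh: (nh, F), bh: (nh,), by: (ny,) are updated in place.
    Returns the mean squared reconstruction error of y.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = x.shape[0]
    fx = x @ wx  # input-filter responses; x is always clamped (conditioned on)

    def hidden_stats(y_):
        fy = y_ @ wy                          # output-filter responses, (N, F)
        ph = sigmoid((fx * fy) @ wh.T + bh)   # p(h = 1 | x, y), (N, nh)
        return fy, ph, ph @ wh                # last term: hidden-filter responses, (N, F)

    # Positive phase: statistics on the observed image pairs
    fy0, ph0, fh0 = hidden_stats(y)
    # Negative phase: sample hiddens, reconstruct y as the Gaussian mean, recompute hiddens
    h0 = (rng.random(ph0.shape) < ph0).astype(float)
    y1 = (fx * (h0 @ wh)) @ wy.T + by
    fy1, ph1, fh1 = hidden_stats(y1)

    # Hebbian-style gradients: three-way correlations on data minus on reconstructions
    gwx = x.T @ (fy0 * fh0 - fy1 * fh1) / n
    gwy = (y.T @ (fx * fh0) - y1.T @ (fx * fh1)) / n
    gwh = (ph0.T @ (fx * fy0) - ph1.T @ (fx * fy1)) / n
    wx += lr * gwx
    wy += lr * gwy
    wh += lr * gwh
    bh += lr * (ph0 - ph1).mean(axis=0)
    by += lr * (y - y1).mean(axis=0)
    return float(np.mean((y - y1) ** 2))
```

A training loop would call `cd1_update` repeatedly on mini-batches of image pairs; all gradients are computed before any parameter is changed, so the order of the updates does not matter.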

Code

A Python implementation of a factored gated Boltzmann machine is available here (requires NumPy).

An example Python script that instantiates the model and trains it on pairs of shifted random images is available here (it uses matplotlib for plotting). The training images are in inputimages.txt and outputimages.txt.

(Note: The image files are approximately 40 MB each. You can get both image sets as one small compressed tar (tgz) file here.)
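If you want to experiment without downloading the data, pairs of shifted random images can also be generated directly. This is a hypothetical sketch: the patch size, the range of shifts, the uniform random pixels and the wrap-around (circular) shifts produced by `np.roll` are all assumptions, and the output is not claimed to match the format or content of `inputimages.txt` and `outputimages.txt`.

```python
import numpy as np

def shifted_pairs(n, size=13, max_shift=3, rng=None):
    """Return n pairs (x, y) of random images where y is x shifted by a random offset.

    Hypothetical generator: pixels are uniform in [0, 1) and shifts wrap around
    the image border (np.roll). Images are flattened to rows of length size * size.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = rng.random((n, size, size))
    shifts = rng.integers(-max_shift, max_shift + 1, size=(n, 2))  # (dy, dx) per pair
    y = np.stack([np.roll(img, shift=tuple(s), axis=(0, 1)) for img, s in zip(x, shifts)])
    return x.reshape(n, -1), y.reshape(n, -1), shifts

x, y, shifts = shifted_pairs(1000, rng=np.random.default_rng(0))
# x and y both have shape (1000, 169): one flattened 13x13 image per row
```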

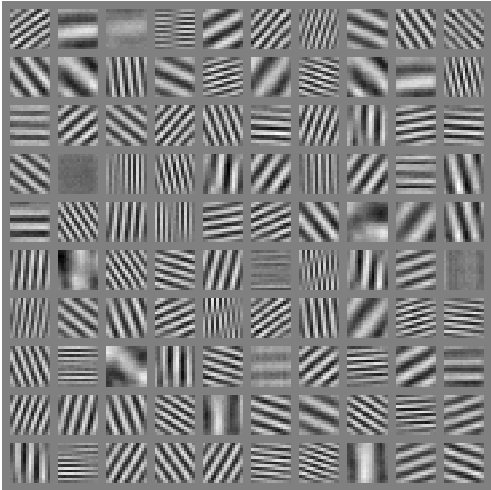

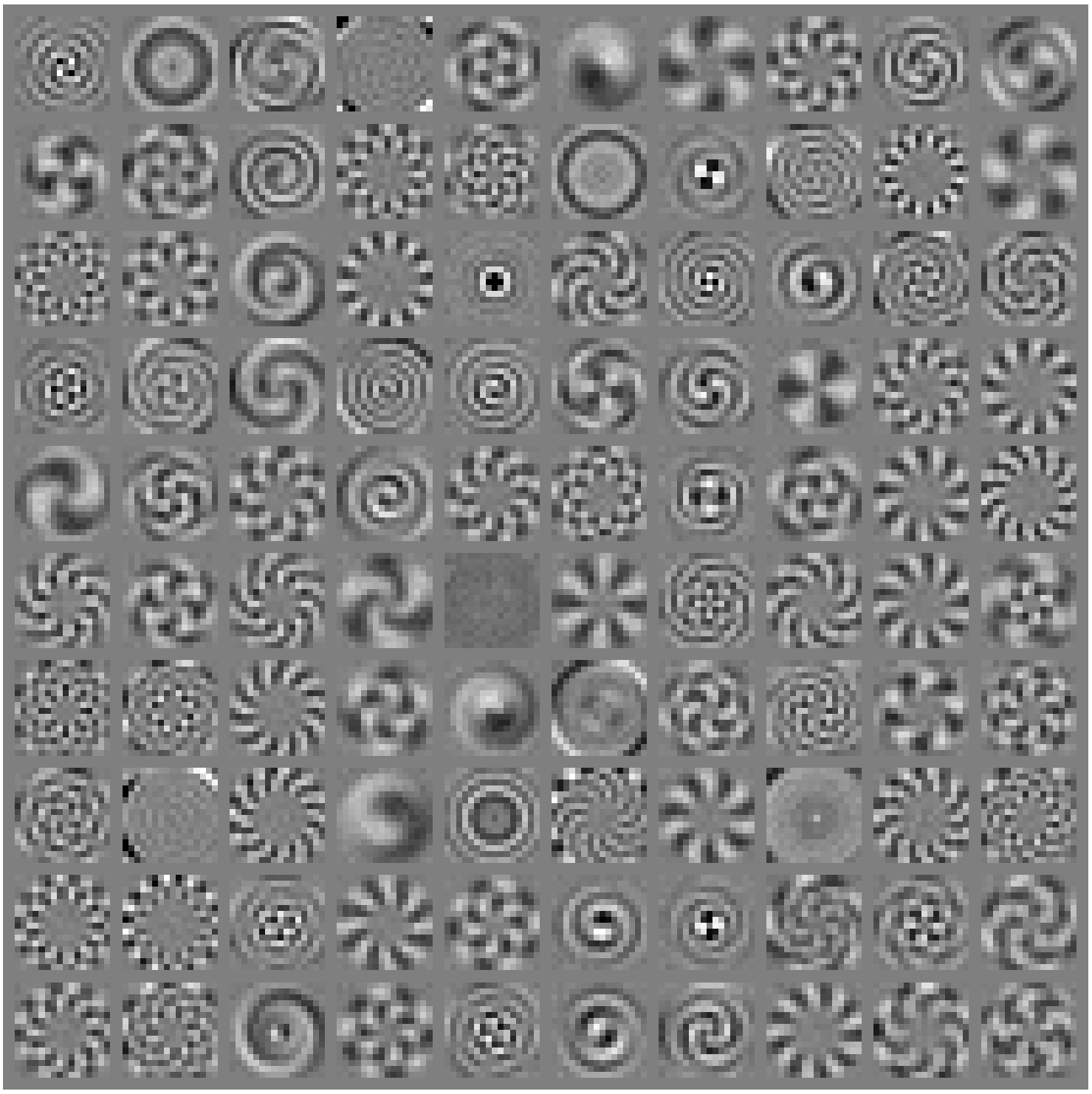

After about 200 epochs of training on the shifted random images (this can easily take an hour or so, so don't get discouraged), the learned filters should look something like this:

The model has learned a 2D Fourier transform as the optimal set of filters for representing translations. (Filters for the input image are shown on the left, filters for the output image on the right.)

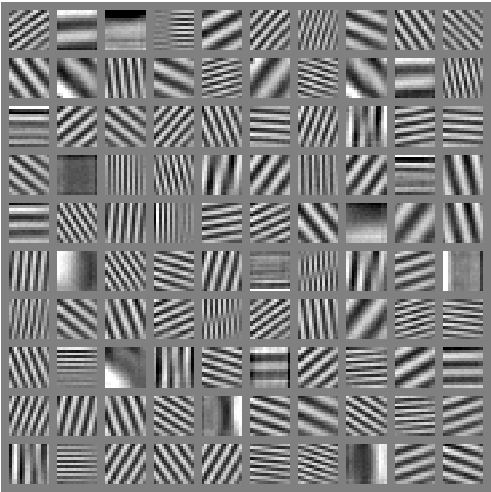

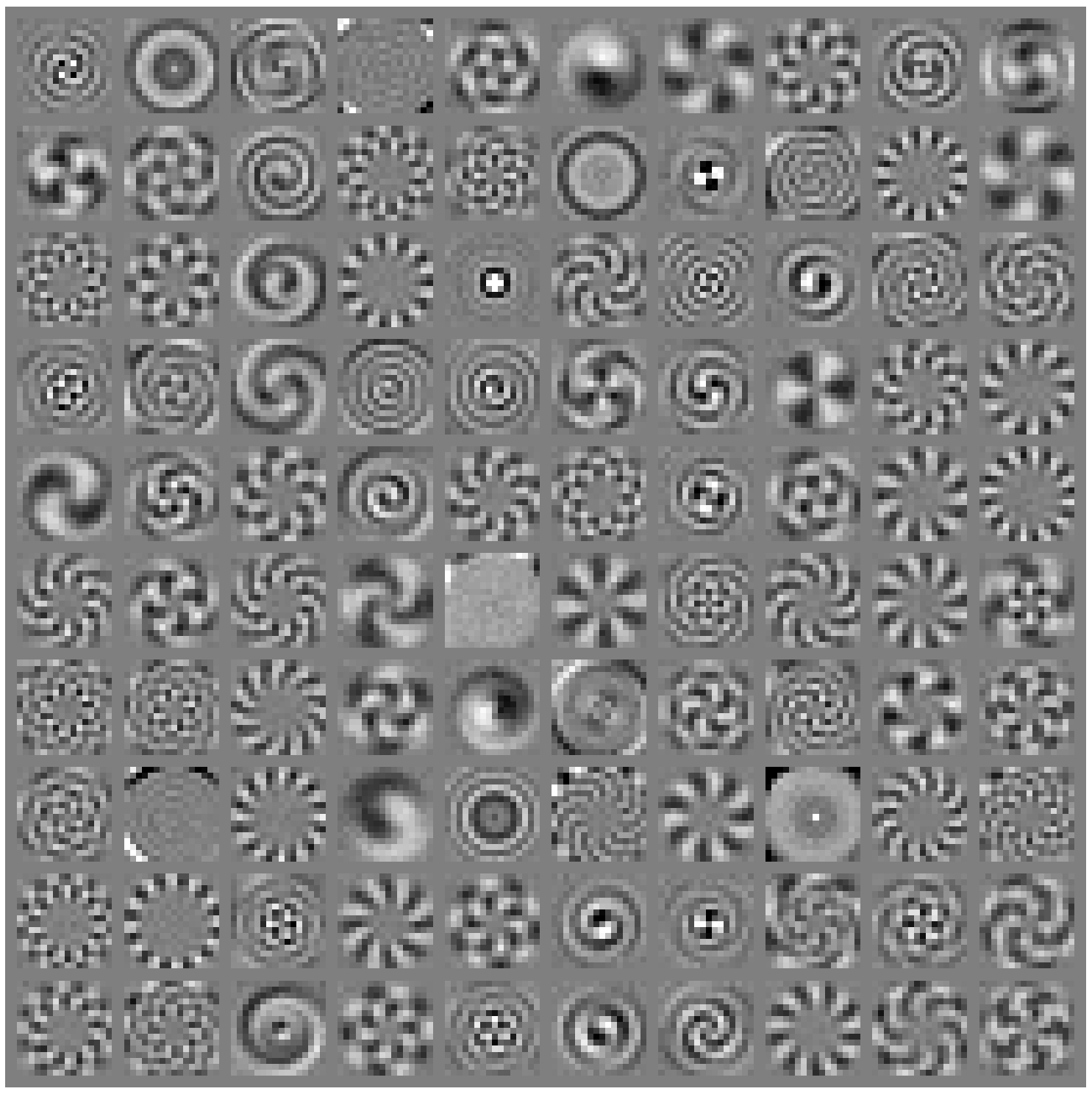
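One way to see why Fourier components are well suited to representing translations is the shift theorem: a circular shift of an image leaves the magnitude of every 2D DFT coefficient unchanged and only multiplies it by a phase factor that depends on the shift. A translation therefore acts on each frequency separately, as a rotation within the plane spanned by a cosine and a sine filter of that frequency. The NumPy check below illustrates this for circular shifts, which is an idealization (real translated images also have boundary effects).

```python
import numpy as np

rng = np.random.default_rng(0)
size = 8
dy, dx = 2, -3                                   # vertical and horizontal shift in pixels

x = rng.random((size, size))                     # random "input" image
y = np.roll(x, shift=(dy, dx), axis=(0, 1))      # circularly shifted "output" image

X = np.fft.fft2(x)
Y = np.fft.fft2(y)

# Shift theorem: Y[u, v] = X[u, v] * exp(-2*pi*i*(u*dy + v*dx) / size)
u = np.arange(size)[:, None]
v = np.arange(size)[None, :]
phase = np.exp(-2j * np.pi * (u * dy + v * dx) / size)

print(np.allclose(Y, X * phase))                 # True: each coefficient is only phase-shifted
print(np.allclose(np.abs(Y), np.abs(X)))         # True: magnitudes do not depend on the shift
```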

Example 2: Learning a dictionary to represent rotation

When training on rotations, the model learns a polar ("circular") generalization of the Fourier transform.

Training on scalings and other types of transformation leads to other, transformation-specific basis functions.
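For rotations, a similar argument suggests where the polar generalization comes from. If an image is sampled on a polar grid (rings of radius r, angles θ), rotating it by a multiple of the angular step is just a circular shift along the angle axis, so the Fourier components in θ (angular harmonics) change only by a phase. The sketch below checks this on a synthetic image made of Gaussian blobs; the blob image, the grid and the function names are hypothetical, and the model itself is trained on pixel images rather than on polar samples.

```python
import numpy as np

def blob_image(px, py, centers, width=0.3):
    """Synthetic smooth image: a sum of Gaussian blobs evaluated at points (px, py)."""
    out = np.zeros_like(px)
    for cx, cy in centers:
        out += np.exp(-((px - cx) ** 2 + (py - cy) ** 2) / (2 * width ** 2))
    return out

def polar_samples(centers, angle=0.0, n_r=5, n_theta=16):
    """Sample the image rotated counter-clockwise by `angle` on a polar grid (rows: radii, cols: angles)."""
    r = np.linspace(0.2, 1.0, n_r)[:, None]
    theta = 2 * np.pi * np.arange(n_theta)[None, :] / n_theta
    # The rotated image at angle theta equals the original image at angle theta - angle
    return blob_image(r * np.cos(theta - angle), r * np.sin(theta - angle), centers)

rng = np.random.default_rng(0)
centers = rng.uniform(-1, 1, size=(3, 2))
n_theta, k = 16, 3                               # rotate by k angular steps
p0 = polar_samples(centers, n_theta=n_theta)
p1 = polar_samples(centers, angle=2 * np.pi * k / n_theta, n_theta=n_theta)

# The rotation is a circular shift along the angle axis ...
print(np.allclose(p1, np.roll(p0, k, axis=1)))   # True
# ... so every angular Fourier coefficient only picks up a phase factor
m = np.arange(n_theta)
phase = np.exp(-2j * np.pi * m * k / n_theta)
print(np.allclose(np.fft.fft(p1, axis=1), np.fft.fft(p0, axis=1) * phase))  # True
```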

After learning about transformations, the model can be used to make analogies, to understand the meaning of "the same", and to perform transformation-invariant classification [Memisevic, Hinton, 2010].
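Making an analogy amounts to inferring the hidden variables from one image pair and then applying them to a new input image ("x is to y as x_new is to ?"). The sketch below shows only the mechanics. It assumes a factored three-way model with logistic hidden units and unit-variance Gaussian output units, uses mean-field hidden probabilities instead of samples, and uses hypothetical function names; the random weights stand in for a trained model, so the output here is not a meaningful analogy.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def infer_transformation(x, y, wx, wy, wh, bh):
    """Mean-field hidden probabilities encoding how x is turned into y."""
    return sigmoid(wh @ ((x @ wx) * (y @ wy)) + bh)

def apply_transformation(x_new, h, wx, wy, wh, by):
    """Expected output image (Gaussian-output assumption) for transformation h applied to x_new."""
    return wy @ ((x_new @ wx) * (h @ wh)) + by

# Hypothetical random weights; in practice they would come from a trained model
rng = np.random.default_rng(0)
nx = ny = 64
F, nh = 32, 16
wx = 0.1 * rng.normal(size=(nx, F))
wy = 0.1 * rng.normal(size=(ny, F))
wh = 0.1 * rng.normal(size=(nh, F))
bh, by = np.zeros(nh), np.zeros(ny)

x, y, x_new = rng.random(nx), rng.random(ny), rng.random(nx)
h = infer_transformation(x, y, wx, wy, wh, bh)           # "how was x turned into y?"
y_new = apply_transformation(x_new, h, wx, wy, wh, by)   # "do the same to x_new"
```

The same inferred `h` can be reused for any new input, which is what makes the hidden representation a description of the transformation rather than of the image content.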

References

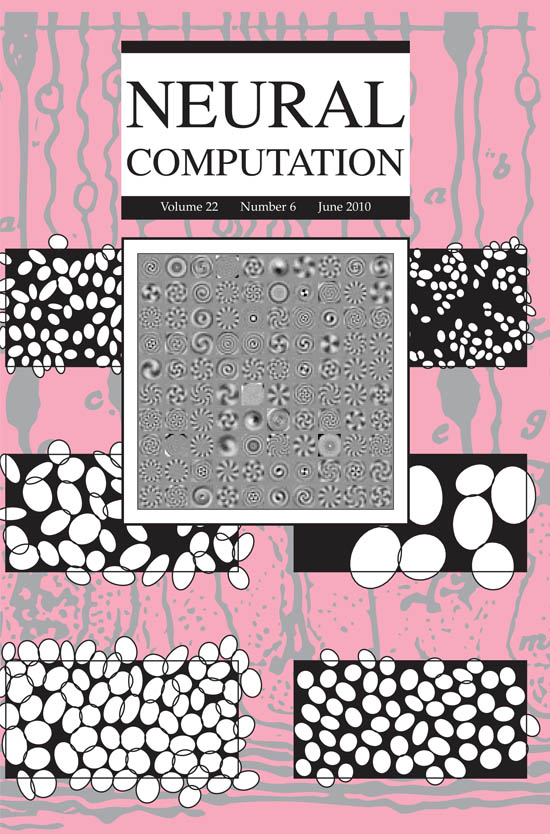

2010 Memisevic, R. and Hinton, G. E.

Learning to Represent Spatial Transformations with Factored Higher-Order Boltzmann Machines.

Neural Computation, Vol. 22, No. 6, pp. 1473-1492, June 2010.

[pdf], [tech-report (pdf)], [errata]

The paper introduces the model and shows some simple applications.

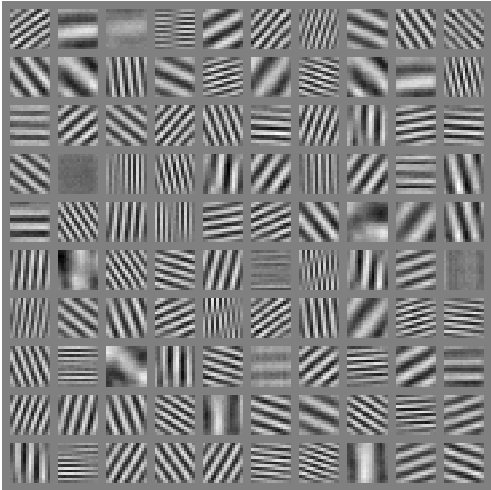

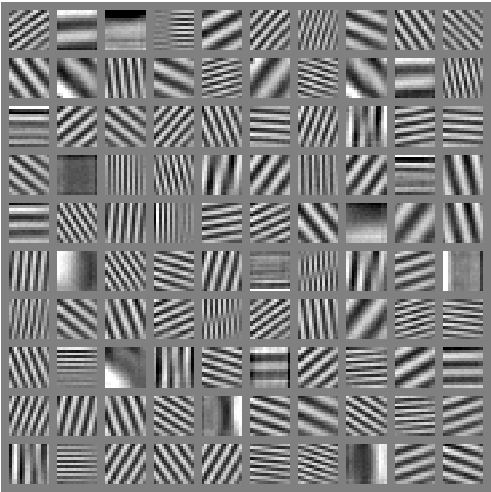

Basis functions that the model learned from rotations were featured on the June 2010 issue of Neural Computation.

2007 Memisevic, R. and Hinton, G. E.

Unsupervised learning of image transformations.

Computer Vision and Pattern Recognition (CVPR 2007).

[pdf], [tech-report (pdf)]

Describes a simpler, non-factored version of the model and a convolutional version.

2008 Memisevic, R.

Non-linear latent factor models for revealing structure in high-dimensional data.

Doctoral dissertation, University of Toronto. [pdf]

Describes some extensions and a non-probabilistic version of the model.

2011 Memisevic, R.

Gradient-based learning of higher-order image features.

International Conference on Computer Vision (ICCV 2011).

[pdf]

Introduces the gated autoencoder, which provides an efficient way to learn relational features.

2013 Memisevic, R.

Learning to relate images.

IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI)

[preprint pdf]

A detailed introduction to, and analysis of, gated feature learning and bilinear models.

Tutorials:

Website from the multiview feature learning tutorial at CVPR 2012.

Slides from the IPAM summer school on deep learning at UCLA, 2012:

Slides part 1 and Slides part 2.

(The full schedule, with links to videos.)

Slides and code from the CIFAR summer school in Toronto, 2013.