PLearn 0.1

#include <EntropyContrast.h>

Public Member Functions | |

| EntropyContrast () | |

| virtual void | build () |

| simply calls inherited::build() then build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual EntropyContrast * | deepCopy (CopiesMap &copies) const |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options). | |

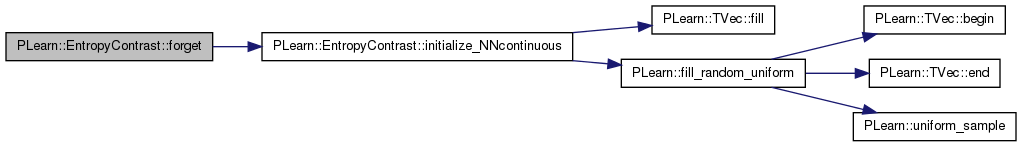

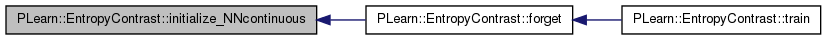

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

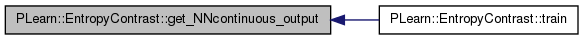

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| void | reconstruct (const Vec &output, Vec &input) const |

| Reconstructs an input from a (possibly partial) output (i.e. the first few principal components kept). | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< string > | getTestCostNames () const |

| Returns [ "squared_reconstruction_error" ]. | |

| virtual TVec< string > | getTrainCostNames () const |

| No train costs are computed for this learner. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| string | cost_real |

| string | cost_gen |

| string | cost_extra |

| string | gen_method |

| string | evaluation_method |

| int | nconstraints |

| The number of constraints. | |

| int | inputsize |

| real | learning_rate |

| The learning rate. | |

| real | decay_factor |

| The decay factor of the learning rate. | |

| real | weight_real |

| real | weight_gen |

| real | weight_extra |

| real | weight_decay_output |

| real | weight_decay_hidden |

| int | n_seen_examples |

| real | starting_learning_rate |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

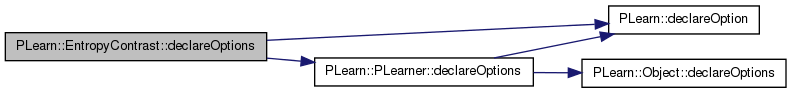

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

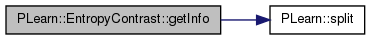

| string | getInfo () |

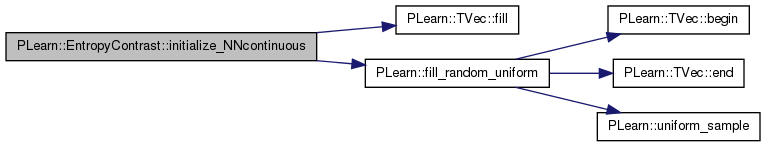

| void | initialize_NNcontinuous () |

| Initialize all the data structures for the NNet. | |

| void | update_NNcontinuous () |

| update the parameters of the NNet from the regular cost | |

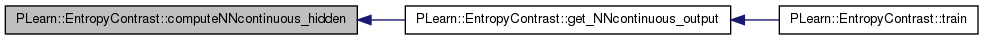

| void | computeNNcontinuous_hidden (const Vec &input_units, Vec &hidden_units) |

| compute the hidden units of the NNet given the input | |

| void | computeNNcontinuous_constraints (Vec &hidden_units, Vec &output_units) |

| Compute the output units given the hidden units. | |

| void | get_NNcontinuous_output (const Vec &x, Vec &f_x, Vec &z_x) |

| Compute the output units and also the hidden units given the input. | |

| void | update_mu_sigma_f (const Vec &f_x, Vec &mu, Vec &sigma) |

| Given the output of the NNet it updates the running averages(mu, variance) | |

| void | update_alpha (int stage, int current_input_index) |

| Update the weight of a sample(alpha). | |

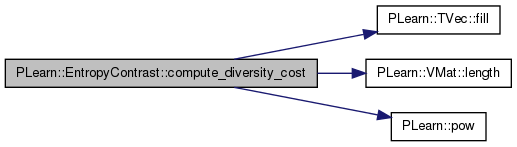

| void | compute_diversity_cost (const Vec &f_x, const Vec &cost, Vec &grad_C_extra_cost_wrt_f_x) |

| Compute the cost and the gradient for the diversity cost given by C_i = sum_j<i {cov((f_i)^2,(f_j)^2)}. | |

| void | get_grad_log_variance_wrt_f (Vec &grad, const Vec &f_x, const Vec &mu, const Vec &sigma) |

| Compute d log(Var(f_x)) / df_x. | |

| void | set_NNcontinuous_gradient (Vec &grad_C_real_wrt_f_x, Mat &grad_H_f_x_wrt_w, Mat &grad_H_f_x_wrt_v, Vec &z_x, Vec &x, Vec &grad_H_f_x_wrt_bias_output, Vec &grad_H_f_x_wrt_bias_hidden) |

| Compute all the gradients with respect to the parameters of the neural network. | |

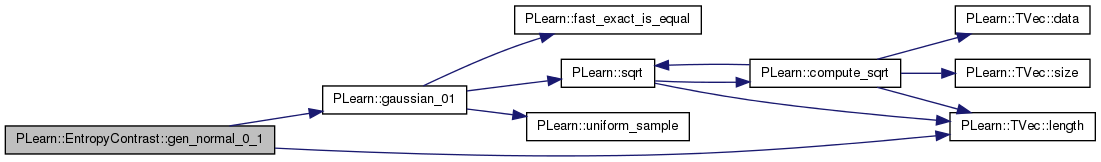

| void | gen_normal_0_1 (Vec &output) |

| Fill a vector with numbers from a Gaussian having mu=0 and sigma=1. This can be used for the generation of the data. | |

| void | set_NNcontinuous_gradient_from_extra_cost (Mat &grad_C_wrt_df_dx, const Vec &input) |

| Do the bprop step for the NNet, computing all the gradients. | |

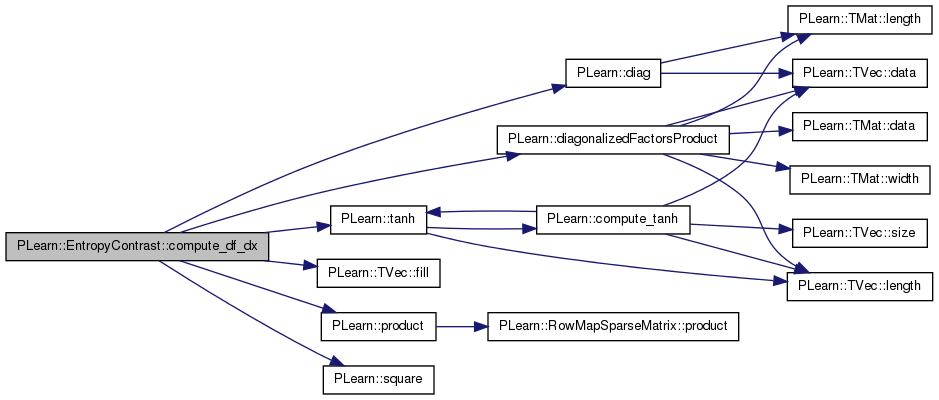

| void | compute_df_dx (Mat &df_dx, const Vec &input) |

| Compute df/dx, given x(input) | |

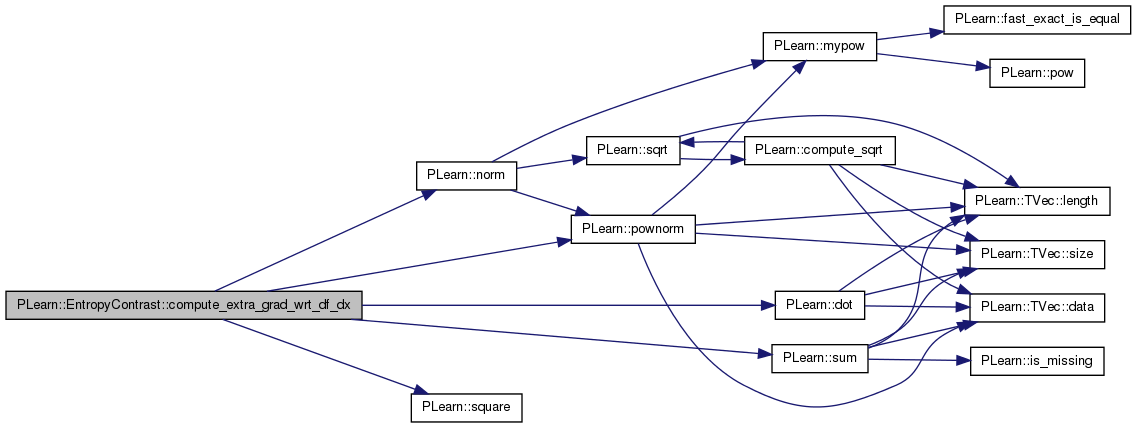

| void | compute_extra_grad_wrt_df_dx (Mat &grad_C_wrt_df_dx) |

| compute the grad extra_cost wrt df_dx | |

| void | update_NNcontinuous_from_extra_cost () |

| update the parameters of the NNet from the extra cost given by the derivative(angle) cost | |

Private Attributes | |

| int | n |

| int | evaluate_every_n_epochs |

| bool | evaluate_first_epoch |

| Mat | w |

| Mat | v |

| Vec | x |

| Vec | f_x |

| Vec | grad_C_real_wrt_f_x |

| Vec | grad_C_extra_cost_wrt_f_x |

| Vec | x_hat |

| Vec | f_x_hat |

| Vec | grad_C_generated_wrt_f_x_hat |

| VMat | test_set |

| VMat | validation_set |

| Validation set used in some contexts. | |

| real | alpha |

| int | nhidden |

| The number of hidden units, in the one existing hidden layer. | |

| Vec | mu_f |

| Vec | sigma_f |

| Vec | mu_f_hat |

| Vec | sigma_f_hat |

| Vec | mu_f_square |

| Vec | sigma_f_square |

| Mat | grad_H_f_x_wrt_w |

| Mat | grad_H_f_x_hat_wrt_w |

| Mat | grad_H_f_x_wrt_v |

| Mat | grad_H_f_x_hat_wrt_v |

| Vec | grad_H_f_x_wrt_bias_output |

| Vec | grad_H_f_x_wrt_bias_hidden |

| Vec | grad_H_f_x_hat_wrt_bias_output |

| Vec | grad_H_f_x_hat_wrt_bias_hidden |

| Mat | grad_H_g_wrt_w |

| Vec | sigma_g |

| Vec | mu_g |

| Vec | g_x |

| Vec | bias_hidden |

| Vec | z_x |

| Vec | z_x_hat |

| Vec | bias_output |

| Vec | full_sum |

| real | full |

| Mat | df_dx |

| Mat | grad_C_wrt_df_dx |

| Mat | grad_extra_wrt_w |

| Mat | grad_extra_wrt_v |

| Vec | grad_extra_wrt_bias_hidden |

| Vec | grad_extra_wrt_bias_output |

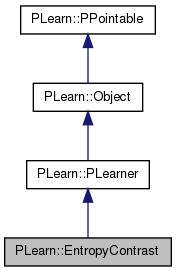

Definition at line 60 of file EntropyContrast.h.

typedef PLearner PLearn::EntropyContrast::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 65 of file EntropyContrast.h.

| PLearn::EntropyContrast::EntropyContrast | ( | ) |

Definition at line 49 of file EntropyContrast.cc.

References alpha, decay_factor, evaluate_every_n_epochs, evaluate_first_epoch, evaluation_method, learning_rate, n, nconstraints, nhidden, weight_extra, weight_gen, and weight_real.

:nconstraints(4) //TODO: change to input_size

{

learning_rate = 0.001;

decay_factor = 0;

weight_real = weight_gen = weight_extra = 1;

nconstraints = 0 ;

n = 0 ;

evaluate_every_n_epochs = 1;

evaluate_first_epoch = true;

evaluation_method = "no_evaluation";

nhidden = 0 ;

alpha = 0.0 ;

}

| string PLearn::EntropyContrast::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 66 of file EntropyContrast.cc.

| OptionList & PLearn::EntropyContrast::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 66 of file EntropyContrast.cc.

| RemoteMethodMap & PLearn::EntropyContrast::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 66 of file EntropyContrast.cc.

| bool PLearn::EntropyContrast::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 66 of file EntropyContrast.cc.

| Object * PLearn::EntropyContrast::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 66 of file EntropyContrast.cc.

| void PLearn::EntropyContrast::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 66 of file EntropyContrast.cc.

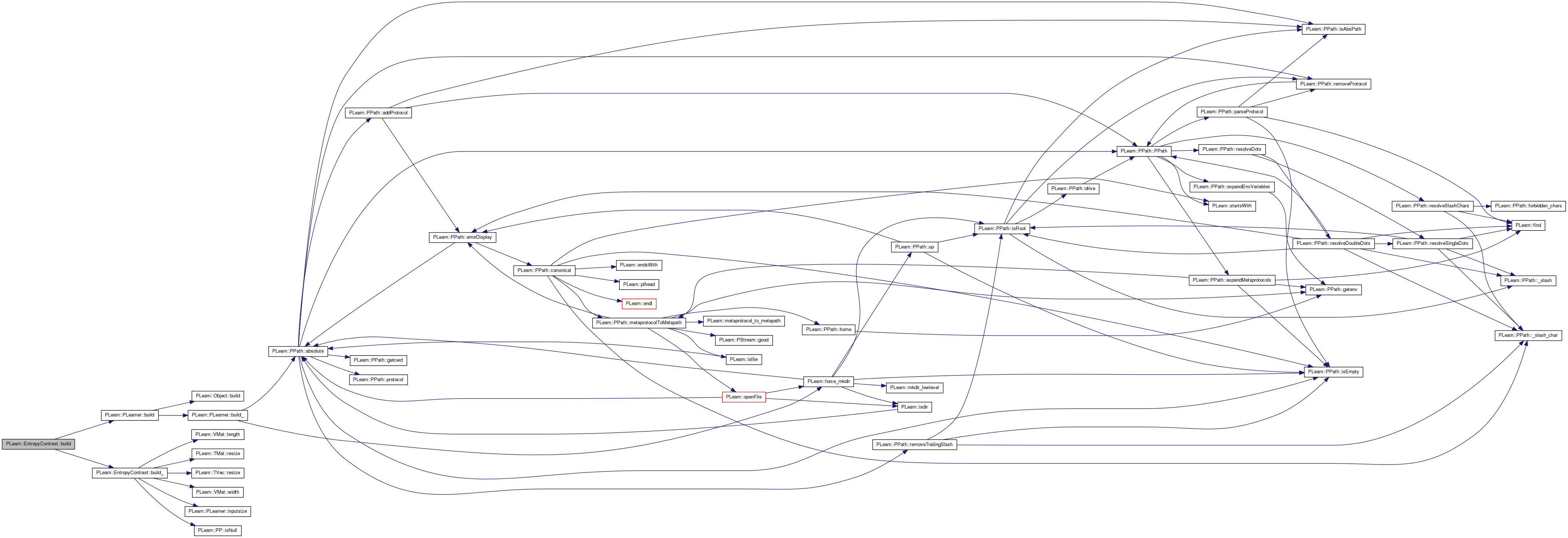

| void PLearn::EntropyContrast::build | ( | ) | [virtual] |

simply calls inherited::build() then build_()

Reimplemented from PLearn::PLearner.

Definition at line 539 of file EntropyContrast.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

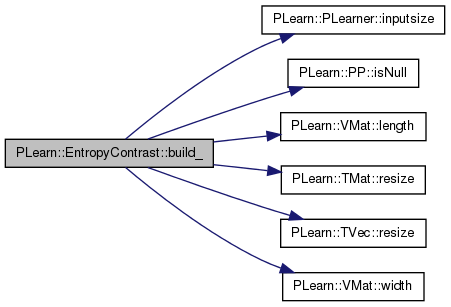

| void PLearn::EntropyContrast::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 460 of file EntropyContrast.cc.

References bias_hidden, bias_output, df_dx, f_x, f_x_hat, full_sum, g_x, grad_C_extra_cost_wrt_f_x, grad_C_generated_wrt_f_x_hat, grad_C_real_wrt_f_x, grad_C_wrt_df_dx, grad_extra_wrt_v, grad_extra_wrt_w, grad_H_f_x_hat_wrt_bias_hidden, grad_H_f_x_hat_wrt_bias_output, grad_H_f_x_hat_wrt_v, grad_H_f_x_hat_wrt_w, grad_H_f_x_wrt_bias_hidden, grad_H_f_x_wrt_bias_output, grad_H_f_x_wrt_v, grad_H_f_x_wrt_w, grad_H_g_wrt_w, PLearn::PLearner::inputsize(), PLearn::PP< T >::isNull(), learning_rate, PLearn::VMat::length(), mu_f, mu_f_hat, mu_f_square, mu_g, n, n_seen_examples, nconstraints, nhidden, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), sigma_f, sigma_f_hat, sigma_f_square, sigma_g, starting_learning_rate, PLearn::PLearner::train_set, v, w, PLearn::VMat::width(), x, x_hat, z_x, and z_x_hat.

Referenced by build().

{

if (!train_set.isNull())

{

n = train_set->width() ; // setting the input dimension

inputsize = train_set->length() ; // set the number of training inputs

x.resize(n) ; // the current input sample, presented

f_x.resize(nconstraints) ; // the constraints on the real sample

grad_C_real_wrt_f_x.resize(nconstraints); // the gradient of the real cost wrt to the constraints

x_hat.resize(n) ; // the current generated sample

f_x_hat.resize(nconstraints) ; // the constraints on the generated sample

grad_C_generated_wrt_f_x_hat.resize(nconstraints); // the gradient of the generated cost wrt to the constraints

grad_C_extra_cost_wrt_f_x.resize(nconstraints);

starting_learning_rate = learning_rate;

n_seen_examples = 0;

w.resize(nconstraints,nhidden) ; // setting the size of the weights between the hidden layer and the output(the constraints)

z_x.resize(nhidden) ; // set the size of the hidden units

z_x_hat.resize(nhidden) ; // set the size of the hidden units

v.resize(nhidden,n) ; // set the size of the weights between the input and the hidden units

mu_f.resize(nconstraints) ; // the average of the constraints over time, used in the computation of certain gradients

mu_f_hat.resize(nconstraints) ; // the average of the constraints over time, used in the computation of certain gradients

sigma_f.resize(nconstraints) ; // the variance of the constraints over time, used in the computation of certain gradients

sigma_f_hat.resize(nconstraints) ; // the variance of the constraints over time, used in the computation of certain gradients

mu_f_square.resize(nconstraints) ;

sigma_f_square.resize(nconstraints) ;

bias_hidden.resize(nhidden) ;

bias_output.resize(nconstraints);

grad_H_f_x_wrt_bias_output.resize(nconstraints) ;

grad_H_f_x_wrt_bias_hidden.resize(nhidden) ;

grad_H_f_x_hat_wrt_bias_output.resize(nconstraints) ;

grad_H_f_x_hat_wrt_bias_hidden.resize(nhidden) ;

grad_H_f_x_hat_wrt_w.resize(nconstraints,nhidden);

grad_H_f_x_wrt_w.resize(nconstraints,nhidden) ;

grad_H_g_wrt_w.resize(nconstraints,nhidden) ;

grad_H_f_x_wrt_v.resize(nhidden,n) ;

grad_H_f_x_hat_wrt_v.resize(nhidden,n) ;

// used for the computation of the extra diversity constraints

sigma_g.resize(nconstraints) ;

mu_g.resize(nconstraints) ;

g_x.resize(nconstraints) ;

grad_C_wrt_df_dx.resize(nconstraints,n) ;

df_dx.resize(nconstraints,n) ;

grad_extra_wrt_w.resize(nconstraints, nhidden) ;

grad_extra_wrt_v.resize(nhidden, n) ;

full_sum.resize(nconstraints) ;

}

}

| string PLearn::EntropyContrast::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 66 of file EntropyContrast.cc.

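| void PLearn::EntropyContrast::compute_df_dx | ( | Mat & | df_dx, |

| const Vec & | input | ||

| ) | [private] |

Compute df/dx, given x (the input).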

Definition at line 250 of file EntropyContrast.cc.

References bias_hidden, PLearn::diag(), PLearn::diagonalizedFactorsProduct(), PLearn::TVec< T >::fill(), nhidden, PLearn::product(), PLearn::square(), PLearn::tanh(), v, and w.

Referenced by train().

{

Vec ones(nhidden);

ones.fill(1);

Vec hidden(nhidden);

hidden = product(v,input);

hidden = hidden + bias_hidden;

Vec diag(nhidden) ;

diag = ones - square(tanh(hidden)) ;

diagonalizedFactorsProduct(df_dx,w,diag,v);

}
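To make the computation above concrete: for a one-hidden-layer network f(x) = w · tanh(v · x + bias_hidden) + bias_output, the Jacobian is df/dx = w · diag(1 − tanh²(v · x + bias_hidden)) · v, which is what the listing appears to build through `diagonalizedFactorsProduct`. The sketch below is a standalone illustration using `std::vector` rather than PLearn's `Vec` and `Mat`; the `Matrix` alias and the name `jacobian_wrt_input` are assumptions made for this example and are not part of PLearn.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // row-major, illustrative only

// df_dx[i][k] = sum_j w[i][j] * (1 - tanh^2(h_j)) * v[j][k],
// where h = v * x + bias_hidden.
Matrix jacobian_wrt_input(const Matrix& w,                        // nconstraints x nhidden
                          const Matrix& v,                        // nhidden x n
                          const std::vector<double>& bias_hidden, // nhidden
                          const std::vector<double>& x)           // n
{
    const std::size_t nhidden = v.size();
    const std::size_t n = x.size();
    assert(bias_hidden.size() == nhidden);

    // Derivative of tanh at each hidden pre-activation.
    std::vector<double> diag(nhidden);
    for (std::size_t j = 0; j < nhidden; ++j) {
        assert(v[j].size() == n);
        double h = bias_hidden[j];
        for (std::size_t k = 0; k < n; ++k)
            h += v[j][k] * x[k];
        const double t = std::tanh(h);
        diag[j] = 1.0 - t * t;
    }

    // Accumulate w * diag * v.
    Matrix df_dx(w.size(), std::vector<double>(n, 0.0));
    for (std::size_t i = 0; i < w.size(); ++i) {
        assert(w[i].size() == nhidden);
        for (std::size_t j = 0; j < nhidden; ++j)
            for (std::size_t k = 0; k < n; ++k)
                df_dx[i][k] += w[i][j] * diag[j] * v[j][k];
    }
    return df_dx;
}
```

Row i of the result is the gradient of constraint f_i with respect to the input, which is the quantity the "derivative" extra cost compares across constraints.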

| void PLearn::EntropyContrast::compute_diversity_cost | ( | const Vec & | f_x, |

| const Vec & | cost, | ||

| Vec & | grad_C_extra_cost_wrt_f_x | ||

| ) | [private] |

Compute the cost and the gradient for the diversity cost given by C_i = sum_j<i {cov((f_i)^2,(f_j)^2)}.

Definition at line 225 of file EntropyContrast.cc.

References PLearn::TVec< T >::fill(), full_sum, i, j, PLearn::VMat::length(), mu_f, nconstraints, PLearn::pow(), sigma_f, and PLearn::PLearner::train_set.

Referenced by train().

{

cost.fill (0.0);

for (int i = 0; i < nconstraints; ++i)

{

for (int j = 0; j <= i; ++j)

cost[i] += pow (f_x[j], 2);

cost[i] /= i + 1;

}

Vec full_sum(nconstraints) ;

full_sum[0] = (pow(f_x[0],2) - (sigma_f[0] + pow(mu_f[0],2) ) ) ;

for (int i = 1 ; i<nconstraints ; ++i)

{

full_sum[i] = full_sum[i-1] + (pow(f_x[i],2) - (sigma_f[i] + pow(mu_f[i],2) ) ) ;

grad_C_extra_cost_wrt_f_x[i] = full_sum[i-1] * f_x[i] / train_set.length() ;

}

}
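The second loop in the listing relies on the identity Var(f) = E[f²] − mu², so `sigma_f[i] + pow(mu_f[i],2)` is the running estimate of E[f_i²], and `full_sum[i-1]` accumulates the centered squares of all earlier constraints. The following standalone sketch reproduces only that gradient step; the function name `diversity_gradient` and the use of `std::vector` are assumptions for illustration, and unlike the listing it explicitly sets the first gradient entry to zero.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Gradient of the diversity cost with respect to each constraint output f[i].
// mean[i] and variance[i] are running estimates for f[i], so
// variance[i] + mean[i]^2 estimates E[f_i^2].
std::vector<double> diversity_gradient(const std::vector<double>& f,
                                       const std::vector<double>& mean,
                                       const std::vector<double>& variance,
                                       std::size_t n_train)
{
    assert(f.size() == mean.size() && f.size() == variance.size());
    assert(n_train > 0);

    std::vector<double> grad(f.size(), 0.0);  // grad[0] stays 0 in this sketch
    double running = 0.0;                     // sum over j < i of (f_j^2 - E[f_j^2])
    for (std::size_t i = 0; i < f.size(); ++i) {
        if (i > 0)
            grad[i] = running * f[i] / static_cast<double>(n_train);
        running += f[i] * f[i] - (variance[i] + mean[i] * mean[i]);
    }
    return grad;
}
```

Keeping a single running sum avoids the temporary `full_sum` vector while producing the same values for every index greater than zero.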

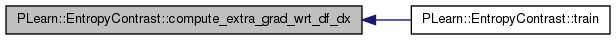

| void PLearn::EntropyContrast::compute_extra_grad_wrt_df_dx | ( | Mat & | grad_C_wrt_df_dx | ) | [private] |

compute the grad extra_cost wrt df_dx

Definition at line 359 of file EntropyContrast.cc.

References d, df_dx, PLearn::dot(), grad_C_wrt_df_dx, i, j, n, nconstraints, PLearn::norm(), PLearn::pownorm(), PLearn::square(), and PLearn::sum().

Referenced by train().

{

for(int i=0 ; i<n ; i++){

grad_C_wrt_df_dx[0][i] = 0.0 ;

}

// compute dot product g_i , g_j

Mat dot_g(nconstraints,nconstraints);

for (int i=0; i<nconstraints ;++i) {

for (int j=0; j<i ; ++j) {

dot_g(i,j) = dot(df_dx(i),df_dx(j));

}

}

Vec cost(nconstraints);

Vec d(nconstraints);

for(int i=1 ; i<nconstraints ; ++i) {

cost[i] = 0;

d[i] = 0;

real sum = 0;

for(int j=0 ; j<i ; ++j) {

d[i] += pownorm(df_dx(i))*pownorm(df_dx(j));

sum += square(dot_g(i,j));

}

cost[i] += sum / d[i];

}

for (int j = 1; j<nconstraints; ++j ) {

for (int k = 0; k<n ; ++k) {

grad_C_wrt_df_dx(j,k) = 0;

for (int i = 0; i < j ; ++i) {

grad_C_wrt_df_dx(j,k) += 2 * dot_g(j,i) * df_dx(i,k);

}

grad_C_wrt_df_dx(j,k) /= d[j];

grad_C_wrt_df_dx(j,k) -= (2*cost[j]*df_dx(j,k)/norm(df_dx(j)));

}

}

}
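Reading the listing, the per-constraint cost is the ratio of summed squared dot products to summed products of squared norms, taken over all earlier constraints: cost_i = Σ_{j<i} (g_i · g_j)² / Σ_{j<i} ‖g_i‖² ‖g_j‖², where g_i is row i of df_dx. The sketch below computes just that cost with `std::vector`; it assumes that `pownorm` with its default arguments is the squared Euclidean norm, and the names `angle_cost` and `dot_product` are hypothetical helpers for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // row-major, illustrative only

double dot_product(const std::vector<double>& a, const std::vector<double>& b)
{
    assert(a.size() == b.size());
    double s = 0.0;
    for (std::size_t k = 0; k < a.size(); ++k)
        s += a[k] * b[k];
    return s;
}

// cost[i] measures how far gradient row i is from being orthogonal to the
// rows before it: 0 when orthogonal to all of them, 1 when parallel to all.
std::vector<double> angle_cost(const Matrix& g)
{
    std::vector<double> cost(g.size(), 0.0);
    for (std::size_t i = 1; i < g.size(); ++i) {
        double num = 0.0;
        double den = 0.0;
        for (std::size_t j = 0; j < i; ++j) {
            const double gij = dot_product(g[i], g[j]);
            num += gij * gij;
            den += dot_product(g[i], g[i]) * dot_product(g[j], g[j]);
        }
        if (den > 0.0)          // guard added in this sketch; the listing divides directly
            cost[i] = num / den;
    }
    return cost;
}
```

For example, rows (1, 0) and (0, 1) give a cost of 0 for the second constraint, while rows (1, 0) and (2, 0) give a cost of 1.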

| void PLearn::EntropyContrast::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

The only computed cost is the squared_reconstruction_error

Implements PLearn::PLearner.

Definition at line 787 of file EntropyContrast.cc.

{

}

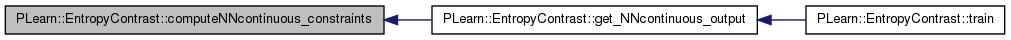

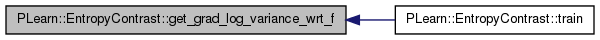

| void PLearn::EntropyContrast::computeNNcontinuous_constraints | ( | Vec & | hidden_units, |

| Vec & | output_units | ||

| ) | [private] |

Compute the output units given the hidden units.

Definition at line 162 of file EntropyContrast.cc.

References bias_output, i, j, nconstraints, nhidden, and w.

Referenced by get_NNcontinuous_output().

{

for (int i = 0 ; i < nconstraints ; ++i )

{

output_units[i] = bias_output[i] ;

for (int j = 0 ; j < nhidden ; ++j)

output_units[i] += w(i,j) * hidden_units[j] ;

}

}

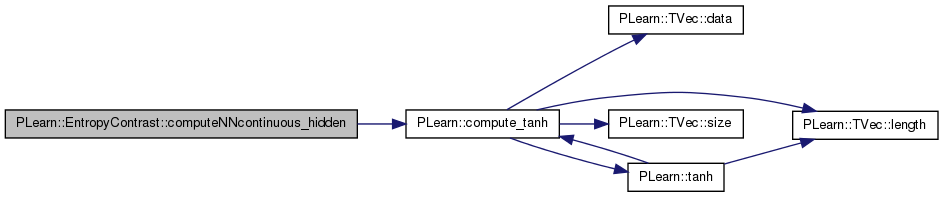

| void PLearn::EntropyContrast::computeNNcontinuous_hidden | ( | const Vec & | input_units, |

| Vec & | hidden_units | ||

| ) | [private] |

compute the hidden units of the NNet given the input

Definition at line 145 of file EntropyContrast.cc.

References bias_hidden, PLearn::compute_tanh(), i, j, n, nhidden, and v.

Referenced by get_NNcontinuous_output().

{

for (int i = 0 ; i < nhidden ; ++i )

{

hidden_units[i] = bias_hidden[i] ;

for (int j = 0 ; j < n ; ++j)

hidden_units[i] += v(i,j) * input_units[j] ;

}

compute_tanh(hidden_units,hidden_units) ;

}

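| void PLearn::EntropyContrast::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.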

Reimplemented from PLearn::PLearner.

Definition at line 778 of file EntropyContrast.cc.

{

}

| void PLearn::EntropyContrast::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 68 of file EntropyContrast.cc.

References PLearn::OptionBase::buildoption, cost_extra, cost_gen, cost_real, decay_factor, PLearn::declareOption(), PLearn::PLearner::declareOptions(), evaluate_every_n_epochs, evaluation_method, gen_method, learning_rate, nconstraints, nhidden, test_set, weight_decay_hidden, weight_decay_output, weight_extra, weight_gen, and weight_real.

{

declareOption(ol, "nconstraints", &EntropyContrast::nconstraints, OptionBase::buildoption,

"The number of constraints to create (that's also the outputsize)");

declareOption(ol, "learning_rate", &EntropyContrast::learning_rate, OptionBase::buildoption,

"The learning rate of the algorithm");

declareOption(ol, "decay_factor", &EntropyContrast::decay_factor, OptionBase::buildoption,

"The decay factor of the learning rate");

declareOption(ol, "weight_decay_hidden", &EntropyContrast::weight_decay_hidden, OptionBase::buildoption,

"The decay factor for the hidden units");

declareOption(ol, "weight_decay_output", &EntropyContrast::weight_decay_output, OptionBase::buildoption,

"The decay factor for the output units");

declareOption(ol, "cost_real", &EntropyContrast::cost_real, OptionBase::buildoption,

"The method to compute the real cost");

declareOption(ol, "cost_gen", &EntropyContrast::cost_gen, OptionBase::buildoption,

"The method to compute the cost for the generated cost");

declareOption(ol, "cost_extra", &EntropyContrast::cost_extra, OptionBase::buildoption,

"The method to compute the extra cost");

declareOption(ol, "gen_method", &EntropyContrast::gen_method, OptionBase::buildoption,

"Method used to generate new points");

declareOption(ol, "weight_real", &EntropyContrast::weight_real, OptionBase::buildoption,

"the relative weight of the cost of the real data, by default it is 1");

declareOption(ol, "weight_gen", &EntropyContrast::weight_gen, OptionBase::buildoption,

"the relative weight of the cost of the generated data, by default it is 1");

declareOption(ol, "weight_extra", &EntropyContrast::weight_extra, OptionBase::buildoption,

"the relative weight of the extra cost, by default it is 1");

declareOption(ol, "evaluation_method", &EntropyContrast::evaluation_method, OptionBase::buildoption,

"Method for evaluation of constraint learning");

declareOption(ol, "evaluate_every_n_epochs", &EntropyContrast::evaluate_every_n_epochs, OptionBase::buildoption,

"Number of epochs after which the constraints evaluation is done");

declareOption(ol, "test_set", &EntropyContrast::test_set, OptionBase::buildoption,

"VMat test set");

declareOption(ol, "nhidden", &EntropyContrast::nhidden, OptionBase::buildoption,

"the number of hidden units");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
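The `learning_rate` and `decay_factor` options interact through the schedule that appears in the train() listing, `learning_rate = starting_learning_rate / (1 + decay_factor*n_seen_examples)`. The small function below is a sketch of that formula on its own; the name `decayed_learning_rate` is hypothetical, and in the listing the update appears to be applied once per stage rather than once per example.

```cpp
#include <cassert>

// Inverse-time decay: the rate shrinks as more examples have been seen.
// A decay_factor of 0 (the constructor default) keeps the rate constant.
double decayed_learning_rate(double starting_learning_rate,
                             double decay_factor,
                             long n_seen_examples)
{
    assert(n_seen_examples >= 0);
    assert(decay_factor >= 0.0);
    return starting_learning_rate /
           (1.0 + decay_factor * static_cast<double>(n_seen_examples));
}
```

With the default `decay_factor` of 0 the learning rate stays at its starting value for the whole run.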

| static const PPath& PLearn::EntropyContrast::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 232 of file EntropyContrast.h.

| EntropyContrast * PLearn::EntropyContrast::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 66 of file EntropyContrast.cc.

| void PLearn::EntropyContrast::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 559 of file EntropyContrast.cc.

References initialize_NNcontinuous().

Referenced by train().

{

// Initialization

initialize_NNcontinuous() ;

}

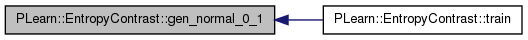

| void PLearn::EntropyContrast::gen_normal_0_1 | ( | Vec & | output | ) | [private] |

Fill a vector with numbers from a Gaussian having mu=0 and sigma=1. This can be used for the generation of the data.

Definition at line 189 of file EntropyContrast.cc.

References PLearn::gaussian_01(), i, and PLearn::TVec< T >::length().

Referenced by train().

{

for (int i = 0 ; i < output.length() ; ++ i) {

output[i] = gaussian_01();

}

}
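For readers without PLearn at hand, the same idea can be sketched with the C++ standard library. This version swaps PLearn's `gaussian_01()` for `std::normal_distribution` driven by an explicitly passed `std::mt19937`, so it is not the generator the class actually uses; the name `fill_standard_normal` is an assumption for this example.

```cpp
#include <random>
#include <vector>

// Overwrite every element of `output` with an independent N(0, 1) sample.
// The engine is passed in so that callers control seeding and reproducibility.
void fill_standard_normal(std::vector<double>& output, std::mt19937& rng)
{
    std::normal_distribution<double> gauss(0.0, 1.0);
    for (double& value : output)
        value = gauss(rng);
}
```

Passing the engine by reference means two calls with identically seeded engines produce identical vectors, which is convenient when comparing training runs.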

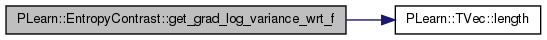

| void PLearn::EntropyContrast::get_grad_log_variance_wrt_f | ( | Vec & | grad, |

| const Vec & | f_x, | ||

| const Vec & | mu, | ||

| const Vec & | sigma | ||

| ) | [private] |

Compute d log(Var(f_x)) / df_x.

Definition at line 265 of file EntropyContrast.cc.

References i, and PLearn::TVec< T >::length().

Referenced by train().

| void PLearn::EntropyContrast::get_NNcontinuous_output | ( | const Vec & | x, |

| Vec & | f_x, | ||

| Vec & | z_x | ||

| ) | [private] |

Compute the output units and also the hidden units given the input.

Definition at line 176 of file EntropyContrast.cc.

References computeNNcontinuous_constraints(), and computeNNcontinuous_hidden().

Referenced by train().

{

computeNNcontinuous_hidden(input_units,hidden_units) ; // compute the hidden units

computeNNcontinuous_constraints(hidden_units,output_units) ; // compute the output units (the constraints)

}
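Taken together, the two helpers called here implement the forward pass of a network with one tanh hidden layer and a linear output layer: z = tanh(v · x + bias_hidden) and f = w · z + bias_output. The sketch below combines both steps in one standalone function; `ForwardResult`, `forward` and the `Matrix` alias are hypothetical names chosen for this example, not PLearn types.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // row-major, illustrative only

struct ForwardResult {
    std::vector<double> hidden;  // z_x: tanh hidden activations
    std::vector<double> output;  // f_x: linear constraint outputs
};

ForwardResult forward(const Matrix& v, const std::vector<double>& bias_hidden,
                      const Matrix& w, const std::vector<double>& bias_output,
                      const std::vector<double>& x)
{
    assert(v.size() == bias_hidden.size());
    assert(w.size() == bias_output.size());

    // Start from the biases, then add the weighted sums.
    ForwardResult r{bias_hidden, bias_output};
    for (std::size_t i = 0; i < v.size(); ++i) {
        assert(v[i].size() == x.size());
        for (std::size_t j = 0; j < x.size(); ++j)
            r.hidden[i] += v[i][j] * x[j];
        r.hidden[i] = std::tanh(r.hidden[i]);
    }
    for (std::size_t i = 0; i < w.size(); ++i) {
        assert(w[i].size() == r.hidden.size());
        for (std::size_t j = 0; j < r.hidden.size(); ++j)
            r.output[i] += w[i][j] * r.hidden[j];
    }
    return r;
}
```

Returning the hidden activations alongside the outputs mirrors the listing, where `z_x` is kept because the gradient computation needs it later.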

| string PLearn::EntropyContrast::getInfo | ( | ) | [inline, private] |

Definition at line 181 of file EntropyContrast.h.

References info, and PLearn::split().

Referenced by train().

{

time_t tt;

time(&tt);

string time_str(ctime(&tt));

vector<string> tokens = split(time_str);

string info = tokens[3];

info += "> ";

return info;

}
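The index 3 works because `ctime` produces text of the form `Www Mmm dd hh:mm:ss yyyy` followed by a newline, so the fourth whitespace-separated token is the clock time. The sketch below isolates that parsing step using `std::istringstream` in place of PLearn's `split`; the function name `time_prefix` is hypothetical, and the fallback for malformed input is an addition that the listing does not have.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Extract "hh:mm:ss> " from a ctime-style string such as
// "Wed Jun 30 21:49:08 1993\n".
std::string time_prefix(const std::string& ctime_text)
{
    std::istringstream in(ctime_text);
    std::vector<std::string> tokens;
    for (std::string token; in >> token; )
        tokens.push_back(token);

    if (tokens.size() < 4)   // malformed input: return the bare prompt marker
        return "> ";
    return tokens[3] + "> ";
}
```

Because stream extraction skips runs of whitespace, the space-padded day that `ctime` emits for single-digit dates does not shift the token positions.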

| OptionList & PLearn::EntropyContrast::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 66 of file EntropyContrast.cc.

| OptionMap & PLearn::EntropyContrast::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 66 of file EntropyContrast.cc.

| RemoteMethodMap & PLearn::EntropyContrast::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 66 of file EntropyContrast.cc.

| TVec< string > PLearn::EntropyContrast::getTestCostNames | ( | ) | const [virtual] |

Returns [ "squared_reconstruction_error" ].

Implements PLearn::PLearner.

Definition at line 792 of file EntropyContrast.cc.

{

return TVec<string>(1,"squared_reconstruction_error");

}

| TVec< string > PLearn::EntropyContrast::getTrainCostNames | ( | ) | const [virtual] |

No train costs are computed for this learner.

Implements PLearn::PLearner.

Definition at line 797 of file EntropyContrast.cc.

{

return TVec<string>();

}

| void PLearn::EntropyContrast::initialize_NNcontinuous | ( | ) | [private] |

Initialize all the data structures for the NNet.

Definition at line 115 of file EntropyContrast.cc.

References bias_hidden, bias_output, PLearn::TVec< T >::fill(), PLearn::fill_random_uniform(), full, mu_f, mu_f_hat, mu_f_square, mu_g, sigma_f, sigma_f_hat, sigma_f_square, sigma_g, v, and w.

Referenced by forget().

{

fill_random_uniform(w,-10.0,10.0) ;

fill_random_uniform(v,-10.0,10.0) ;

fill_random_uniform(bias_hidden,-10.0,10.0) ;

fill_random_uniform(bias_output,-10.0,10.0) ;

mu_f.fill(0.0) ;

sigma_f.fill(1.0) ;

mu_f_hat.fill(0.0) ;

sigma_f_hat.fill(1.0) ;

// the extra_diversity constraint

mu_g = 0.0 ;

sigma_g = 1.0 ;

sigma_g.fill(1.0) ;

mu_g.fill(0.0) ;

mu_f_square.fill(0.0) ;

sigma_f_square.fill(1.0) ;

full = 1.0 ;

}

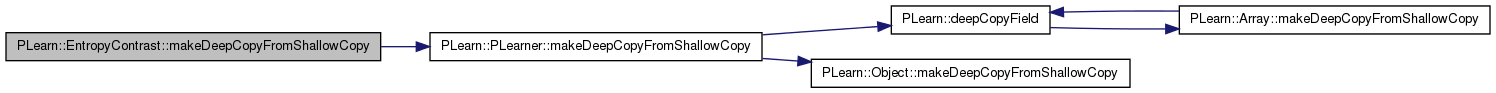

| void PLearn::EntropyContrast::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 546 of file EntropyContrast.cc.

References PLearn::PLearner::makeDeepCopyFromShallowCopy().

{

inherited::makeDeepCopyFromShallowCopy(copies);

// deepCopyField(eigenvecs, copies);

}

| int PLearn::EntropyContrast::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 554 of file EntropyContrast.cc.

References nconstraints.

{

return nconstraints;

}

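| void PLearn::EntropyContrast::reconstruct | ( | const Vec & | output, |

| Vec & | input | ||

| ) | const |

Reconstructs an input from a (possibly partial) output (i.e. the first few principal components kept).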

Definition at line 783 of file EntropyContrast.cc.

{

}

| void PLearn::EntropyContrast::set_NNcontinuous_gradient | ( | Vec & | grad_C_real_wrt_f_x, |

| Mat & | grad_H_f_x_wrt_w, | ||

| Mat & | grad_H_f_x_wrt_v, | ||

| Vec & | z_x, | ||

| Vec & | x, | ||

| Vec & | grad_H_f_x_wrt_bias_output, | ||

| Vec & | grad_H_f_x_wrt_bias_hidden | ||

| ) | [private] |

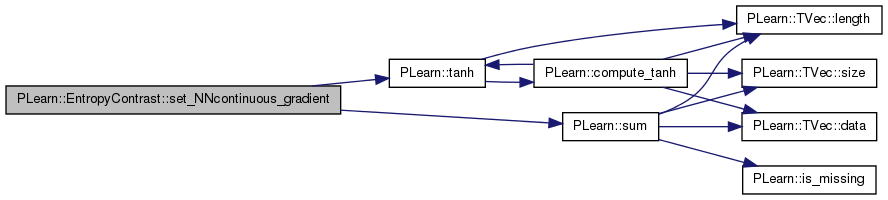

Compute all the gradients with respect to the parameters of the neural network.

Definition at line 275 of file EntropyContrast.cc.

References bias_hidden, grad_H_f_x_wrt_v, grad_H_f_x_wrt_w, i, j, n, nconstraints, nhidden, PLearn::sum(), PLearn::tanh(), v, and w.

Referenced by train().

{

// set the gradient grad_H_f_x_wrt_w

for (int i = 0 ; i < nconstraints ; ++ i)

for (int j = 0 ; j < nhidden ; ++j)

{

grad_H_f_x_wrt_w(i,j) = grad_C_real_wrt_f_x[i] * hidden_units[j] ;

}

// set the gradient grad_H_f_x_wrt_bias_output

for (int i = 0 ; i < nconstraints ; ++i)

grad_H_f_x_wrt_bias_output[i] = grad_C_real_wrt_f_x[i] ;

// set the gradient grad_H_f_x_wrt_v

real sum; // keep sum v_i_k * x_k

real grad_tmp ; // keep sum grad_C_wrt_f * grad_f_k_wrt_z

for (int i = 0 ; i < nhidden ; ++ i)

{

sum = 0 ;

for (int k = 0 ; k < n ; ++ k)

sum+=v(i,k) * input_units[k] ;

grad_tmp = 0;

for (int l = 0 ; l < nconstraints ; ++l)

grad_tmp += grad_C_real_wrt_f_x[l] * w(l,i) ;

for(int j=0 ; j<n ; ++j)

grad_H_f_x_wrt_v(i,j) = grad_tmp * (1 - tanh(bias_hidden[i] + sum) * tanh(bias_hidden[i] + sum)) * input_units[j];

grad_H_f_x_wrt_bias_hidden[i] = grad_tmp * (1 - tanh(bias_hidden[i] + sum) * tanh(bias_hidden[i] + sum));

}

}
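The loops above are ordinary backpropagation through one tanh hidden layer. Since the hidden activation is z_i = tanh(bias_hidden_i + Σ_k v_ik x_k), the factor 1 − tanh²(...) equals 1 − z_i², so the pre-activation does not need to be recomputed when z is already available. The sketch below is written under that observation with `std::vector`; the `Gradients` struct and the `backprop` function are hypothetical names for this example and not part of PLearn.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // row-major, illustrative only

struct Gradients {
    Matrix w;                         // nconstraints x nhidden
    Matrix v;                         // nhidden x n
    std::vector<double> bias_output;  // nconstraints
    std::vector<double> bias_hidden;  // nhidden
};

Gradients backprop(const std::vector<double>& grad_f,  // dC/df, size nconstraints
                   const Matrix& w,                     // nconstraints x nhidden
                   const std::vector<double>& z,        // hidden activations tanh(v*x + b)
                   const std::vector<double>& x)        // input, size n
{
    const std::size_t nc = grad_f.size();
    const std::size_t nh = z.size();
    const std::size_t n = x.size();
    assert(w.size() == nc);

    // The output-bias gradient is dC/df itself because the output layer is linear.
    Gradients g{Matrix(nc, std::vector<double>(nh)),
                Matrix(nh, std::vector<double>(n)),
                grad_f,
                std::vector<double>(nh)};

    for (std::size_t i = 0; i < nc; ++i) {
        assert(w[i].size() == nh);
        for (std::size_t j = 0; j < nh; ++j)
            g.w[i][j] = grad_f[i] * z[j];
    }
    for (std::size_t i = 0; i < nh; ++i) {
        double back = 0.0;                 // sum_l dC/df_l * w(l,i)
        for (std::size_t l = 0; l < nc; ++l)
            back += grad_f[l] * w[l][i];
        const double delta = back * (1.0 - z[i] * z[i]);  // tanh derivative via z
        g.bias_hidden[i] = delta;
        for (std::size_t j = 0; j < n; ++j)
            g.v[i][j] = delta * x[j];
    }
    return g;
}
```

Reusing z in this way computes one multiplication per hidden unit where the listing evaluates `tanh` four times per hidden unit and input dimension.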

| void PLearn::EntropyContrast::set_NNcontinuous_gradient_from_extra_cost | ( | Mat & | grad_C_wrt_df_dx, |

| const Vec & | input | ||

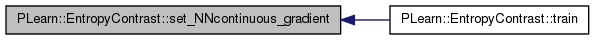

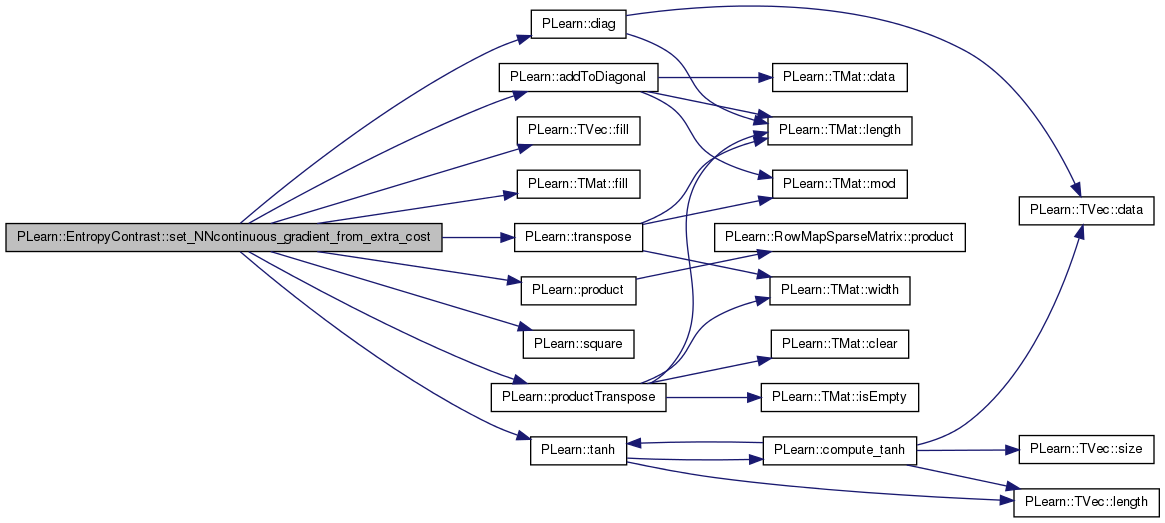

| ) | [private] |

Do the bprop step for the NNet, computing all the gradients.

Definition at line 403 of file EntropyContrast.cc.

References a, PLearn::addToDiagonal(), b, bias_hidden, PLearn::diag(), PLearn::TVec< T >::fill(), PLearn::TMat< T >::fill(), grad_extra_wrt_bias_hidden, grad_extra_wrt_v, grad_extra_wrt_w, i, j, n, nconstraints, nhidden, PLearn::product(), PLearn::productTranspose(), PLearn::square(), PLearn::tanh(), PLearn::transpose(), v, and w.

Referenced by train().

{

//compute a = 1 - tanh^2(v * x)

// b = 1 - tanh( v * x ) ;

Vec ones(nhidden) ;

Vec b(nhidden) ;

ones.fill(1) ;

Vec hidden(nhidden);

hidden = product(v,input);

hidden = hidden + bias_hidden;

Vec diag(nhidden) ;

diag = ones - square(tanh(hidden)) ;

b = ones - tanh(hidden) ;

Mat a(nhidden,nhidden) ;

a.fill(0.0) ;

addToDiagonal(a,diag) ;

// compute dC / dw = dC/dg * v' * a

Mat temp(nconstraints,nhidden);

productTranspose(temp,grad_C_wrt_df_dx,v) ;

product(grad_extra_wrt_w,temp,a) ;

// compute dC/dv = a * w' * dC/dg -2 * (dC/da * b * a) x' ;

{

Mat tmp(nhidden,nconstraints) ;

product(tmp,a,transpose(w)) ;

product(grad_extra_wrt_v,tmp,grad_C_wrt_df_dx) ;

}

// compute dC/da

{

Vec grad_C_wrt_a ;

Mat tmp(nhidden,n) ;

product(tmp,transpose(w),grad_C_wrt_df_dx) ;

Mat tmp_a(nhidden,nhidden) ;

product(tmp_a,tmp,transpose(v)) ;

// grad_extra_wrt_v += (-2 * diag * b * diag(tmp_a) ) * transpose(input) ;

Vec temp(nhidden) ;

for (int i= 0 ; i < nhidden ; ++i)

{

temp[i] = (-2) * tmp_a(i,i) * b[i] * a(i,i);

for (int j = 0 ; j < n ; ++j)

{

grad_extra_wrt_v(i,j) += temp[i] * input[j];

}

}

grad_extra_wrt_bias_hidden = temp;

}

}

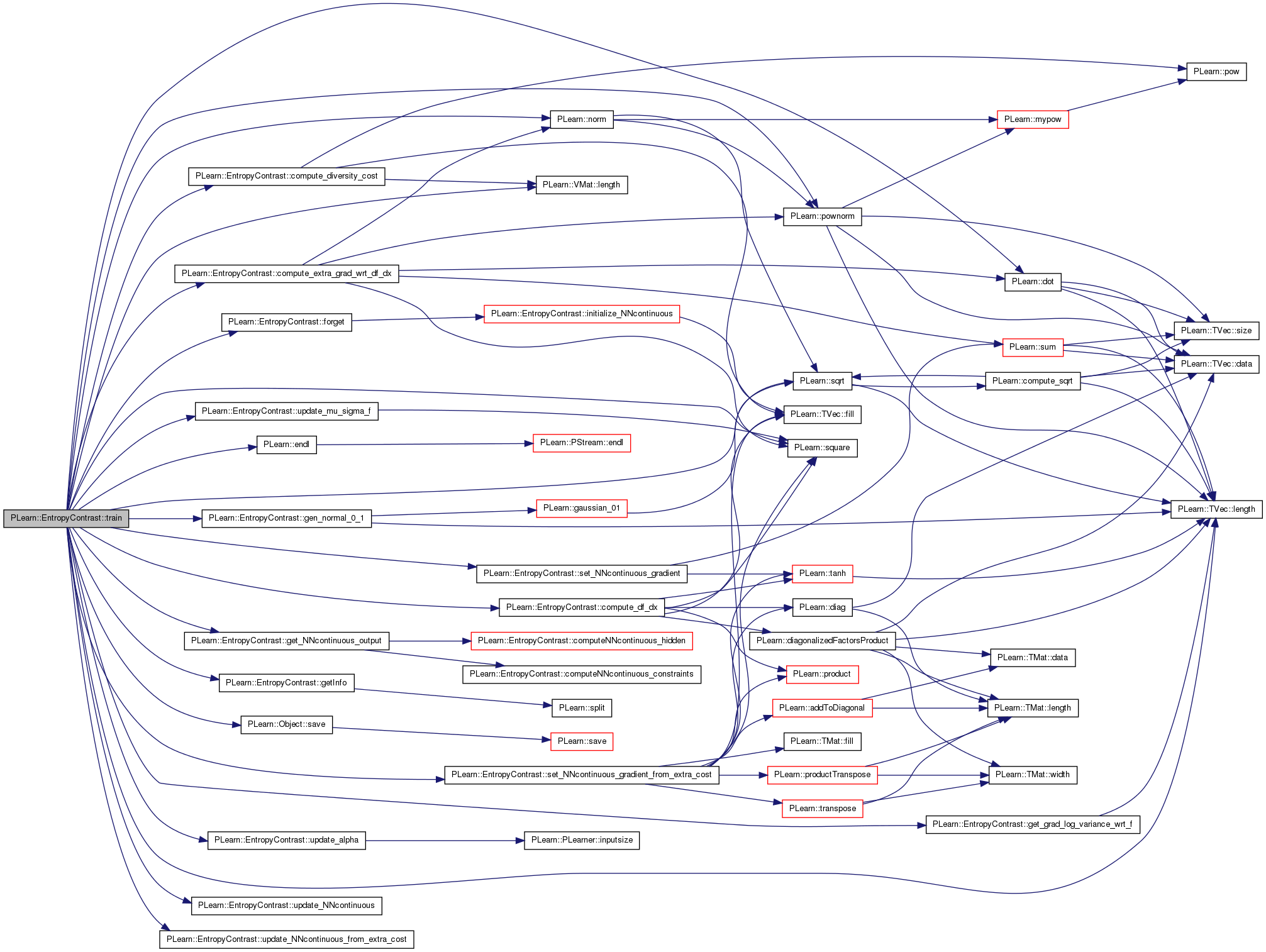

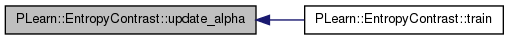

| void PLearn::EntropyContrast::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 566 of file EntropyContrast.cc.

References alpha, compute_df_dx(), compute_diversity_cost(), compute_extra_grad_wrt_df_dx(), cost_extra, cost_gen, cost_real, decay_factor, df_dx, PLearn::dot(), PLearn::endl(), f_x, f_x_hat, forget(), full, g_x, gen_method, gen_normal_0_1(), get_grad_log_variance_wrt_f(), get_NNcontinuous_output(), getInfo(), grad_C_extra_cost_wrt_f_x, grad_C_generated_wrt_f_x_hat, grad_C_real_wrt_f_x, grad_C_wrt_df_dx, grad_H_f_x_hat_wrt_bias_hidden, grad_H_f_x_hat_wrt_bias_output, grad_H_f_x_hat_wrt_v, grad_H_f_x_hat_wrt_w, grad_H_f_x_wrt_bias_hidden, grad_H_f_x_wrt_bias_output, grad_H_f_x_wrt_v, grad_H_f_x_wrt_w, i, j, learning_rate, PLearn::TVec< T >::length(), PLearn::VMat::length(), mu_f, mu_f_hat, mu_f_square, n, n_seen_examples, nconstraints, PLearn::norm(), PLearn::PLearner::nstages, PLearn::pownorm(), PLearn::Object::save(), set_NNcontinuous_gradient(), set_NNcontinuous_gradient_from_extra_cost(), sigma_f, sigma_f_hat, sigma_f_square, PLearn::sqrt(), PLearn::square(), PLearn::PLearner::stage, starting_learning_rate, PLearn::PLearner::train_set, update_alpha(), update_mu_sigma_f(), update_NNcontinuous(), update_NNcontinuous_from_extra_cost(), weight_extra, weight_gen, weight_real, x, x_hat, z_x, and z_x_hat.

{

int t ;

// manual_seed(12345678);

forget();

real cost;

Vec save(n);

for (;stage < nstages;stage++)

{

cost = 0;

cout << getInfo() << endl;

cout << "Stage = " << stage << endl;

cout << "Learning rate = " << learning_rate << endl;

for (t = 0 ; t < train_set.length(); ++ t)

{

update_alpha(stage,t) ; // used in the update of the running averages

train_set->getRow(t,x);

// Real data section

// Get constraint output for real data (fill the f_x field)

get_NNcontinuous_output(x,f_x,z_x) ; // this also computes the value of the hidden units, which will be needed when we compute all the gradients

update_mu_sigma_f(f_x,mu_f,sigma_f) ;

if (cost_real == "constraint_variance") {

update_mu_sigma_f(square(f_x),mu_f_square,sigma_f_square);

}

// Get gradient for cost function for real data (fill grad_C_real_wrt_f_x)

if(cost_real == "constraint_variance") {

// compute the gradient of the cost wrt f_x

get_grad_log_variance_wrt_f(grad_C_real_wrt_f_x, f_x, mu_f, sigma_f);

}

// Adjust weight of the gradient

grad_C_real_wrt_f_x *= weight_real;

// Extra cost function

if(cost_extra == "variance_sum_square_constraints") {

compute_diversity_cost(f_x,g_x,grad_C_extra_cost_wrt_f_x) ; // this also computes the gradient of the extra cost wrt the constraints f_i(x), stored in grad_C_extra_cost_wrt_f_x

grad_C_extra_cost_wrt_f_x *= weight_extra;

}

if(cost_extra == "derivative") {

compute_df_dx(df_dx,x);

compute_extra_grad_wrt_df_dx(grad_C_wrt_df_dx);

grad_C_wrt_df_dx *= weight_extra;

}

// Set gradient for the constraint using real data

// set the gradient of the cost wrt the weights w, v and the biases

set_NNcontinuous_gradient(grad_C_real_wrt_f_x,grad_H_f_x_wrt_w,grad_H_f_x_wrt_v,z_x,x,

grad_H_f_x_wrt_bias_hidden,grad_H_f_x_wrt_bias_output);

if (cost_extra == "derivative"){

set_NNcontinuous_gradient_from_extra_cost(grad_C_wrt_df_dx,x) ;

}

if (cost_extra == "variance_sum_square_constraints") {

// combine the grad_real & grad_extra

for(int it=0; it<grad_C_real_wrt_f_x.length(); it++) {

grad_C_real_wrt_f_x[it] += grad_C_extra_cost_wrt_f_x[it];

}

}

// Generated data section

// Generate a new point (fill x_hat)

if(gen_method == "N(0,1)") {

gen_normal_0_1(x_hat) ;

}

// Get constraint output from generated data (fill the f_x_hat field)

get_NNcontinuous_output(x_hat,f_x_hat,z_x_hat);

update_mu_sigma_f(f_x_hat,mu_f_hat,sigma_f_hat);

// Get gradient for cost function for generated data (fill grad_C_generated_wrt_f_x_hat)

if(cost_gen == "constraint_variance") {

get_grad_log_variance_wrt_f(grad_C_generated_wrt_f_x_hat,f_x_hat,mu_f_hat,sigma_f_hat);

}

// Adjust weight of the gradient

grad_C_generated_wrt_f_x_hat *= weight_gen;

// Set gradient for the constraint using generated data

set_NNcontinuous_gradient(grad_C_generated_wrt_f_x_hat,grad_H_f_x_hat_wrt_w,grad_H_f_x_hat_wrt_v,z_x_hat,x_hat,

grad_H_f_x_hat_wrt_bias_hidden,grad_H_f_x_hat_wrt_bias_output);

// Update

update_NNcontinuous();

if (cost_extra=="derivative") {

update_NNcontinuous_from_extra_cost();

}

n_seen_examples++;

full = alpha * full + (1-alpha) * (f_x[0] * f_x[0] - (sigma_f[0] + mu_f[0]*mu_f[0])) * (f_x[1] * f_x[1] - (sigma_f[1] + mu_f[1]*mu_f[1]) ) ;

real den = 0;

real nom = 0;

for(int i=0 ; i<nconstraints ; ++i) {

for(int j=0 ; j<i ; ++j) {

den += pownorm(df_dx(i))*pownorm(df_dx(j));

nom += square(dot(df_dx(i),df_dx(j)));

}

}

cost += nom / den;

}

learning_rate = starting_learning_rate / (1 + decay_factor*n_seen_examples);

// Train evaluation

cout << "cov = " << full/train_set.length() << endl ;

cout << "var f_square: " << sigma_f_square[0] << " "<< sigma_f_square[1] << endl;

cout << "corr: " << full / sqrt(sigma_f_square[0] / sqrt(sigma_f_square[1])) << endl;

cout << "f : " << f_x << endl;

cout << "cost: " << cost << endl;

train_set->getRow(0,x);

compute_df_dx(df_dx,x);

cout << "angle: " << (dot(df_dx(0),df_dx(1))/(norm(df_dx(1))*norm(df_dx(0)))) << endl;

// cout << "df/dx: " << df_dx(0) << endl;

save << df_dx(0);

cout << "--------------------------------" << endl;

/*

ostringstream sss;

sss << t;

string sstage = sss.str();

ofstream file1((string("gen1_")+sstage+".dat").c_str());

ofstream file2(("gen2_"+sstage+".dat").c_str());

ofstream file3(("gen3_"+sstage+".dat").c_str());

for(int t=0 ; t<train_set.length() ; ++t) {

train_set->getRow(t,x);

compute_df_dx(df_dx,x);

file1 << x << " " << df_dx(0) << endl;

file2 << x << " " << df_dx(1) << endl;

file3 << x << " " << dot(df_dx(0),df_dx(1))/(pownorm(df_dx(0))*pownorm(df_dx(1))) << endl;

}

file1.close();

file2.close();

file3.close();

*/

}

/*

FILE * f1 = fopen("gen1.dat","wt") ;

FILE * f2 = fopen("gen2.dat","wt") ;

FILE * f3 = fopen("gen3.dat","wt") ;

for (int i = -10 ; i <= 10 ; i+=2) {

for (int j = -1 ; j <= 9 ; j+=2 ) {

for (int k = -1 ; k <= 9 ; k+=3 ) {

Mat res(2,3) ;

Vec input(3) ;

Vec ones(nhidden) ;

ones.fill(1) ;

input[0] = (real)i / 10 ;

input[1] = (real)j / 10 ;

input[2] = (real)k / 100 ;

Vec hidden(nhidden);

hidden = product(v,input) ;

Vec diag(nhidden) ;

diag = ones - square(tanh(hidden)) ;

diagonalizedFactorsProduct(res,w,diag,v);

fprintf(f1,"%f %f %f %f %f %f\n",(real)i/10,(real)j/10,(real)k/100,res(0,0),res(0,1),res(0,2));

fprintf(f2,"%f %f %f %f %f %f\n",(real)i/10,(real)j/10,(real)k/100,res(1,0),res(1,1),res(1,2));

real norm0 = sqrt(res(0,0)*res(0,0)+res(0,1)*res(0,1)+res(0,2)*res(0,2)) ;

real norm1 = sqrt(res(1,0)*res(1,0)+res(1,1)*res(1,1)+res(1,2)*res(1,2)) ;

real angle = res(0,0) / norm0 * res(1,0) / norm1 + res(0,1) / norm0 * res(1,1) / norm1 + res(0,2) / norm0 * res(1,2) / norm1 ;

fprintf(f3,"%f %f %f %f\n",(real)i/10,(real)j/10,(real)k/100,angle) ;

// fprintf(f2,"%f %f %f %f\n",(real)i/10,(real)j/10,res(1,0),res(1,1)) ;

}

}

}

fclose(f1) ;

fclose(f2) ;

fclose(f3) ;

*/

}
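
The quantity that train() adds to `cost` for each example is a ratio over all pairs of rows of `df_dx`: squared dot products in the numerator, products of squared norms in the denominator. The following is a minimal standalone sketch of that ratio, not the PLearn code. It uses `std::vector` in place of `Mat`, the names `Row`, `dot_product` and `pairwise_alignment` are hypothetical, and it assumes `pownorm()` with its default exponent returns the squared Euclidean norm. Unlike the listing, the sketch returns 0 when the denominator is zero instead of dividing unconditionally.

```cpp
#include <cstddef>
#include <vector>

using Row = std::vector<double>;  // one gradient row, standing in for df_dx(i)

// Dot product of two rows of equal length.
double dot_product(const Row& a, const Row& b) {
    double s = 0.0;
    for (std::size_t k = 0; k < a.size(); ++k)
        s += a[k] * b[k];
    return s;
}

// Sum over pairs j < i of (g_i . g_j)^2, divided by the sum over the
// same pairs of |g_i|^2 * |g_j|^2. The result is 0 when all rows are
// mutually orthogonal and 1 when all rows are parallel.
double pairwise_alignment(const std::vector<Row>& grads) {
    double nom = 0.0;
    double den = 0.0;
    for (std::size_t i = 0; i < grads.size(); ++i) {
        for (std::size_t j = 0; j < i; ++j) {
            const double d = dot_product(grads[i], grads[j]);
            nom += d * d;
            den += dot_product(grads[i], grads[i]) * dot_product(grads[j], grads[j]);
        }
    }
    // Assumption of this sketch: guard against an all-zero denominator.
    return den > 0.0 ? nom / den : 0.0;
}
```

With two constraints the ratio reduces to the squared cosine of the angle between the two gradient rows, which is the same angle train() prints (unsquared) at the end of each stage.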

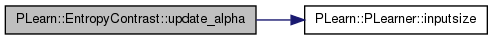

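Updates alpha, the decay coefficient of the running averages; a new sample enters the averages with weight 1 - alpha.

During stage 0 that weight is 1/(current_input_index + 2), so it is 1/2 when the first sample is presented and shrinks as more samples are seen. For stage > 0 it is fixed at 1/inputsize.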

Definition at line 213 of file EntropyContrast.cc.

References alpha, and PLearn::PLearner::inputsize().

Referenced by train().

{
    if (stage==0)
        alpha = 1.0 - 1.0 / ( current_input_index + 2 ) ;
    else
        alpha = 1.0 - 1.0/inputsize;
}

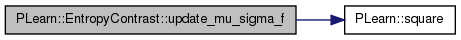

| void PLearn::EntropyContrast::update_mu_sigma_f | ( | const Vec & | f_x, |

| Vec & | mu, | ||

| Vec & | sigma | ||

| ) | [private] |

Given the output of the NNet, updates the running averages (mean mu and variance sigma).

Definition at line 200 of file EntropyContrast.cc.

References alpha, and PLearn::square().

Referenced by train().

{
    // :update mu_f_hat
    mu = mu * alpha + f_x * (1-alpha) ;
    // :update sigma_f_hat
    sigma = alpha * (sigma) + (1-alpha) * square(f_x - mu) ;
}
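
The two updates above are elementwise exponentially weighted averages. As in the listing, the variance update uses the mean that has already been updated in the same call. A minimal standalone sketch, with `std::vector<double>` standing in for `Vec` and a hypothetical function name `update_running_stats`:

```cpp
#include <cstddef>
#include <vector>

// Elementwise running mean and variance with decay coefficient alpha.
// mu and sigma are updated in place and must have the same length as f.
void update_running_stats(const std::vector<double>& f, double alpha,
                          std::vector<double>& mu,
                          std::vector<double>& sigma) {
    for (std::size_t k = 0; k < f.size(); ++k) {
        // Mean first ...
        mu[k] = alpha * mu[k] + (1.0 - alpha) * f[k];
        // ... then the variance, measured against the updated mean.
        const double d = f[k] - mu[k];
        sigma[k] = alpha * sigma[k] + (1.0 - alpha) * d * d;
    }
}
```

Starting from mu = 0 and sigma = 0 with alpha = 0.5, a single sample f = 2 gives mu = 1 and sigma = 0.5.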

| void PLearn::EntropyContrast::update_NNcontinuous | ( | ) | [private] |

Updates the parameters of the NNet from the regular cost.

Definition at line 339 of file EntropyContrast.cc.

References bias_hidden, bias_output, grad_H_f_x_hat_wrt_bias_hidden, grad_H_f_x_hat_wrt_bias_output, grad_H_f_x_hat_wrt_v, grad_H_f_x_hat_wrt_w, grad_H_f_x_wrt_bias_hidden, grad_H_f_x_wrt_bias_output, grad_H_f_x_wrt_v, grad_H_f_x_wrt_w, i, j, learning_rate, n, nconstraints, nhidden, v, w, weight_decay_hidden, and weight_decay_output.

Referenced by train().

{
    for (int i = 0 ; i < nhidden ; ++i)
        for(int j = 0 ; j < n ; ++j)
            v(i,j)-= learning_rate * (grad_H_f_x_wrt_v(i,j) - grad_H_f_x_hat_wrt_v(i,j)) + weight_decay_hidden * v(i,j) ;
    for (int i = 0 ; i < nconstraints ; ++i)
        for(int j = 0 ; j < nhidden ; ++j)
            w(i,j)-= learning_rate * (grad_H_f_x_wrt_w(i,j) - grad_H_f_x_hat_wrt_w(i,j)) + weight_decay_output * w(i,j) ;
    for(int j = 0 ; j < nhidden ; ++j)
        bias_hidden[j] -= learning_rate * (grad_H_f_x_wrt_bias_hidden[j] - grad_H_f_x_hat_wrt_bias_hidden[j] );
    for(int j = 0 ; j < nconstraints ; ++j)
        bias_output[j] -= learning_rate * (grad_H_f_x_wrt_bias_output[j] - grad_H_f_x_hat_wrt_bias_output[j] );
}
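
Each parameter is moved along the difference between the gradient on real data and the gradient on generated data. As the expression is written, the weight-decay term is added outside the product with `learning_rate`, so it is not scaled by the learning rate. A minimal sketch of one such step on a flat parameter vector follows; the name `contrastive_step` is hypothetical, and `std::vector<double>` stands in for `Mat` and `Vec`.

```cpp
#include <cstddef>
#include <vector>

// One update step: param -= lr * (grad_real - grad_gen) + wd * param.
// Pass weight_decay = 0 to reproduce the bias updates, which have no decay.
void contrastive_step(std::vector<double>& param,
                      const std::vector<double>& grad_real,
                      const std::vector<double>& grad_gen,
                      double learning_rate, double weight_decay) {
    for (std::size_t k = 0; k < param.size(); ++k) {
        param[k] -= learning_rate * (grad_real[k] - grad_gen[k])
                    + weight_decay * param[k];
    }
}
```

For example, with a parameter of 1.0, gradients 0.5 (real) and 0.25 (generated), a learning rate of 0.5 and a weight decay of 0.25, the step subtracts 0.125 + 0.25 and leaves 0.625.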

| void PLearn::EntropyContrast::update_NNcontinuous_from_extra_cost | ( | ) | [private] |

Updates the parameters of the NNet from the extra cost given by the derivative (angle) cost.

Definition at line 315 of file EntropyContrast.cc.

References bias_hidden, grad_extra_wrt_bias_hidden, grad_extra_wrt_v, grad_extra_wrt_w, i, j, learning_rate, n, nconstraints, nhidden, v, and w.

Referenced by train().

{
    //TODO: maybe change the learning_rate used for the extra_cost
    for (int i = 0 ; i < nhidden ; ++i) {
        for(int j = 0 ; j < n ; ++j) {
            v(i,j) -= learning_rate * grad_extra_wrt_v(i,j);
        }
    }
    for (int i = 0 ; i < nconstraints ; ++i) {
        for(int j = 0 ; j < nhidden ; ++j) {
            w(i,j) -= learning_rate * grad_extra_wrt_w(i,j);
        }
    }
    for(int j = 0 ; j < nhidden ; ++j) {
        bias_hidden[j] -= learning_rate * grad_extra_wrt_bias_hidden[j];
    }
}

Reimplemented from PLearn::PLearner.

Definition at line 232 of file EntropyContrast.h.

real PLearn::EntropyContrast::alpha [private] |

Definition at line 89 of file EntropyContrast.h.

Referenced by EntropyContrast(), train(), update_alpha(), and update_mu_sigma_f().

Vec PLearn::EntropyContrast::bias_hidden [private] |

Definition at line 119 of file EntropyContrast.h.

Referenced by build_(), compute_df_dx(), computeNNcontinuous_hidden(), initialize_NNcontinuous(), set_NNcontinuous_gradient(), set_NNcontinuous_gradient_from_extra_cost(), update_NNcontinuous(), and update_NNcontinuous_from_extra_cost().

Vec PLearn::EntropyContrast::bias_output [private] |

Definition at line 125 of file EntropyContrast.h.

Referenced by build_(), computeNNcontinuous_constraints(), initialize_NNcontinuous(), and update_NNcontinuous().

Definition at line 149 of file EntropyContrast.h.

Referenced by declareOptions(), and train().

Definition at line 148 of file EntropyContrast.h.

Referenced by declareOptions(), and train().

Definition at line 147 of file EntropyContrast.h.

Referenced by declareOptions(), and train().

the learning rate

Definition at line 158 of file EntropyContrast.h.

Referenced by declareOptions(), EntropyContrast(), and train().

Mat PLearn::EntropyContrast::df_dx [private] |

Definition at line 132 of file EntropyContrast.h.

Referenced by build_(), compute_extra_grad_wrt_df_dx(), and train().

Definition at line 67 of file EntropyContrast.h.

Referenced by declareOptions(), and EntropyContrast().

Definition at line 68 of file EntropyContrast.h.

Referenced by EntropyContrast().

Definition at line 151 of file EntropyContrast.h.

Referenced by declareOptions(), and EntropyContrast().

Vec PLearn::EntropyContrast::f_x [private] |

Definition at line 75 of file EntropyContrast.h.

Vec PLearn::EntropyContrast::f_x_hat [private] |

Definition at line 81 of file EntropyContrast.h.

real PLearn::EntropyContrast::full [private] |

Definition at line 129 of file EntropyContrast.h.

Referenced by initialize_NNcontinuous(), and train().

Vec PLearn::EntropyContrast::full_sum [private] |

Definition at line 127 of file EntropyContrast.h.

Referenced by build_(), and compute_diversity_cost().

Vec PLearn::EntropyContrast::g_x [private] |

Definition at line 117 of file EntropyContrast.h.

Definition at line 150 of file EntropyContrast.h.

Referenced by declareOptions(), and train().

Definition at line 78 of file EntropyContrast.h.

Definition at line 82 of file EntropyContrast.h.

Definition at line 76 of file EntropyContrast.h.

Mat PLearn::EntropyContrast::grad_C_wrt_df_dx [private] |

Definition at line 133 of file EntropyContrast.h.

Referenced by build_(), compute_extra_grad_wrt_df_dx(), and train().

Definition at line 135 of file EntropyContrast.h.

Referenced by set_NNcontinuous_gradient_from_extra_cost(), and update_NNcontinuous_from_extra_cost().

Definition at line 135 of file EntropyContrast.h.

Mat PLearn::EntropyContrast::grad_extra_wrt_v [private] |

Definition at line 134 of file EntropyContrast.h.

Referenced by build_(), set_NNcontinuous_gradient_from_extra_cost(), and update_NNcontinuous_from_extra_cost().

Mat PLearn::EntropyContrast::grad_extra_wrt_w [private] |

Definition at line 134 of file EntropyContrast.h.

Referenced by build_(), set_NNcontinuous_gradient_from_extra_cost(), and update_NNcontinuous_from_extra_cost().

Definition at line 111 of file EntropyContrast.h.

Referenced by build_(), train(), and update_NNcontinuous().

Definition at line 110 of file EntropyContrast.h.

Referenced by build_(), train(), and update_NNcontinuous().

Definition at line 105 of file EntropyContrast.h.

Referenced by build_(), train(), and update_NNcontinuous().

Definition at line 103 of file EntropyContrast.h.

Referenced by build_(), train(), and update_NNcontinuous().

Definition at line 108 of file EntropyContrast.h.

Referenced by build_(), train(), and update_NNcontinuous().

Definition at line 107 of file EntropyContrast.h.

Referenced by build_(), train(), and update_NNcontinuous().

Mat PLearn::EntropyContrast::grad_H_f_x_wrt_v [private] |

Definition at line 104 of file EntropyContrast.h.

Referenced by build_(), set_NNcontinuous_gradient(), train(), and update_NNcontinuous().

Mat PLearn::EntropyContrast::grad_H_f_x_wrt_w [private] |

Definition at line 102 of file EntropyContrast.h.

Referenced by build_(), set_NNcontinuous_gradient(), train(), and update_NNcontinuous().

Mat PLearn::EntropyContrast::grad_H_g_wrt_w [private] |

Definition at line 113 of file EntropyContrast.h.

Referenced by build_().

The number of constraints.

Definition at line 155 of file EntropyContrast.h.

Definition at line 157 of file EntropyContrast.h.

Referenced by build_(), declareOptions(), EntropyContrast(), train(), update_NNcontinuous(), and update_NNcontinuous_from_extra_cost().

Vec PLearn::EntropyContrast::mu_f [private] |

Running mean of the constraint outputs f_x on the real data, maintained by update_mu_sigma_f().

Definition at line 94 of file EntropyContrast.h.

Referenced by build_(), compute_diversity_cost(), initialize_NNcontinuous(), and train().

Vec PLearn::EntropyContrast::mu_f_hat [private] |

Definition at line 97 of file EntropyContrast.h.

Referenced by build_(), initialize_NNcontinuous(), and train().

Vec PLearn::EntropyContrast::mu_f_square [private] |

Definition at line 100 of file EntropyContrast.h.

Referenced by build_(), initialize_NNcontinuous(), and train().

Vec PLearn::EntropyContrast::mu_g [private] |

Definition at line 116 of file EntropyContrast.h.

Referenced by build_(), and initialize_NNcontinuous().

int PLearn::EntropyContrast::n [private] |

Definition at line 66 of file EntropyContrast.h.

Referenced by build_(), compute_extra_grad_wrt_df_dx(), computeNNcontinuous_hidden(), EntropyContrast(), set_NNcontinuous_gradient(), set_NNcontinuous_gradient_from_extra_cost(), train(), update_NNcontinuous(), and update_NNcontinuous_from_extra_cost().

Definition at line 162 of file EntropyContrast.h.

Definition at line 153 of file EntropyContrast.h.

Referenced by build_(), compute_diversity_cost(), compute_extra_grad_wrt_df_dx(), computeNNcontinuous_constraints(), declareOptions(), EntropyContrast(), outputsize(), set_NNcontinuous_gradient(), set_NNcontinuous_gradient_from_extra_cost(), train(), update_NNcontinuous(), and update_NNcontinuous_from_extra_cost().

int PLearn::EntropyContrast::nhidden [private] |

the number of hidden units, in the one existing hidden layer

Definition at line 92 of file EntropyContrast.h.

Referenced by build_(), compute_df_dx(), computeNNcontinuous_constraints(), computeNNcontinuous_hidden(), declareOptions(), EntropyContrast(), set_NNcontinuous_gradient(), set_NNcontinuous_gradient_from_extra_cost(), update_NNcontinuous(), and update_NNcontinuous_from_extra_cost().

Vec PLearn::EntropyContrast::sigma_f [private] |

Definition at line 95 of file EntropyContrast.h.

Referenced by build_(), compute_diversity_cost(), initialize_NNcontinuous(), and train().

Vec PLearn::EntropyContrast::sigma_f_hat [private] |

Definition at line 98 of file EntropyContrast.h.

Referenced by build_(), initialize_NNcontinuous(), and train().

Vec PLearn::EntropyContrast::sigma_f_square [private] |

Definition at line 101 of file EntropyContrast.h.

Referenced by build_(), initialize_NNcontinuous(), and train().

Vec PLearn::EntropyContrast::sigma_g [private] |

Definition at line 115 of file EntropyContrast.h.

Referenced by build_(), and initialize_NNcontinuous().

Definition at line 163 of file EntropyContrast.h.

VMat PLearn::EntropyContrast::test_set [private] |

Definition at line 84 of file EntropyContrast.h.

Referenced by declareOptions().

Mat PLearn::EntropyContrast::v [private] |

Definition at line 71 of file EntropyContrast.h.

Referenced by build_(), compute_df_dx(), computeNNcontinuous_hidden(), initialize_NNcontinuous(), set_NNcontinuous_gradient(), set_NNcontinuous_gradient_from_extra_cost(), update_NNcontinuous(), and update_NNcontinuous_from_extra_cost().

VMat PLearn::EntropyContrast::validation_set [private] |

Validation set used in some contexts.

Reimplemented from PLearn::PLearner.

Definition at line 85 of file EntropyContrast.h.

Mat PLearn::EntropyContrast::w [private] |

Definition at line 70 of file EntropyContrast.h.

Referenced by build_(), compute_df_dx(), computeNNcontinuous_constraints(), initialize_NNcontinuous(), set_NNcontinuous_gradient(), set_NNcontinuous_gradient_from_extra_cost(), update_NNcontinuous(), and update_NNcontinuous_from_extra_cost().

Definition at line 161 of file EntropyContrast.h.

Referenced by declareOptions(), and update_NNcontinuous().

Definition at line 160 of file EntropyContrast.h.

Referenced by declareOptions(), and update_NNcontinuous().

Definition at line 159 of file EntropyContrast.h.

Referenced by declareOptions(), EntropyContrast(), and train().

Definition at line 159 of file EntropyContrast.h.

Referenced by declareOptions(), EntropyContrast(), and train().

the decay factor of the learning rate

Definition at line 159 of file EntropyContrast.h.

Referenced by declareOptions(), EntropyContrast(), and train().

Vec PLearn::EntropyContrast::x [private] |

Definition at line 74 of file EntropyContrast.h.

Vec PLearn::EntropyContrast::x_hat [private] |

Definition at line 80 of file EntropyContrast.h.

Vec PLearn::EntropyContrast::z_x [private] |

Definition at line 120 of file EntropyContrast.h.

Vec PLearn::EntropyContrast::z_x_hat [private] |

Definition at line 122 of file EntropyContrast.h.
