|

PLearn 0.1


#include <NNet.h>

Public Member Functions | |

| NNet () | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual NNet * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | forget () |

| *** SUBCLASS WRITING: *** | |

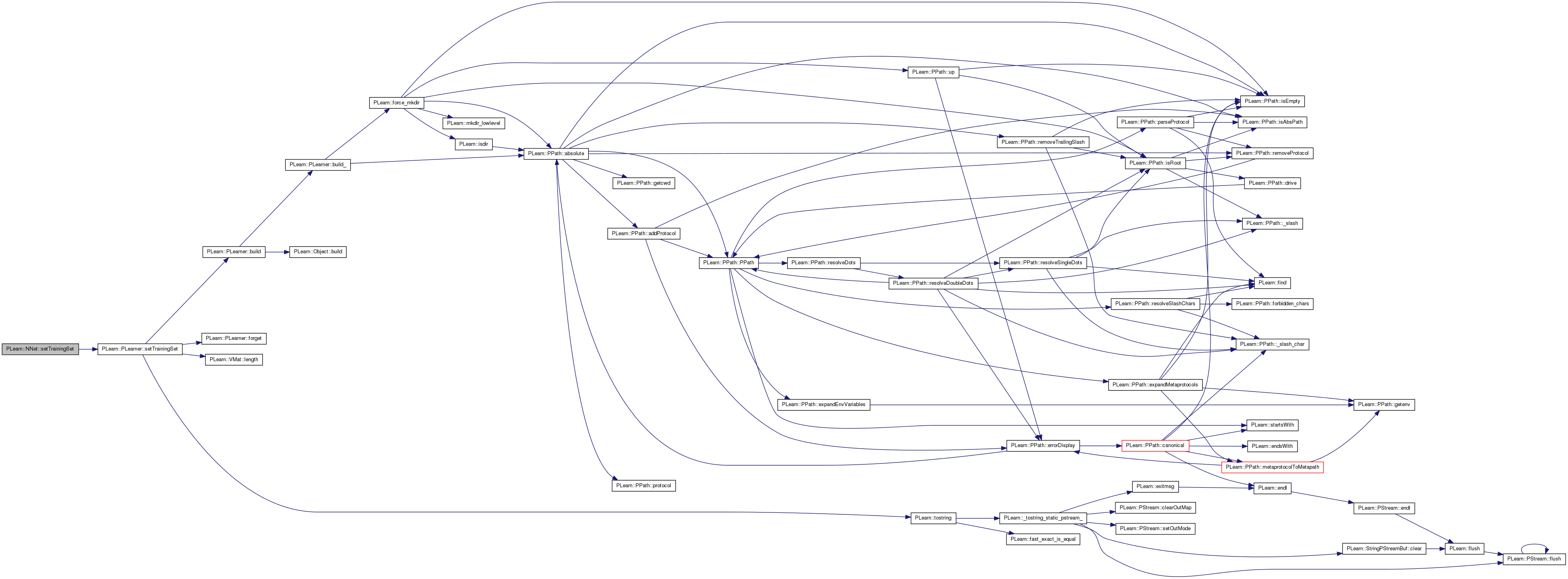

| virtual void | setTrainingSet (VMat training_set, bool call_forget=true) |

| Overridden to support the case where noutputs==-1, in which case noutputs is set automatically from the targetsize of the training set. This is correct for regression; it should be extended to cover classification scenarios in the future. | |

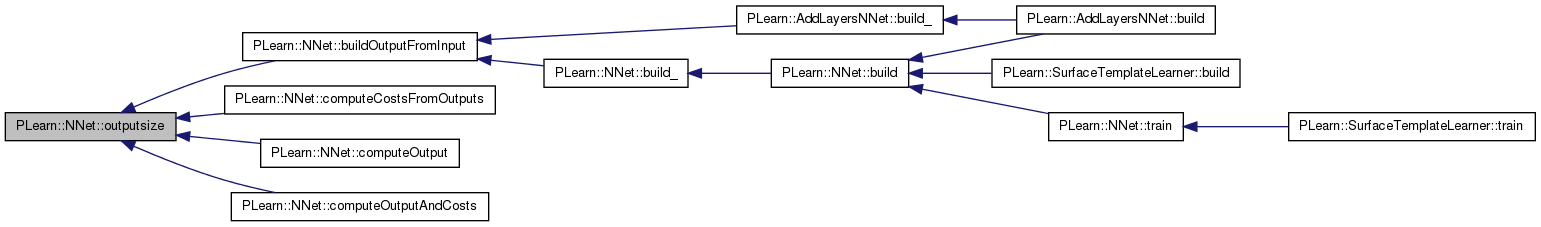

| virtual int | outputsize () const |

| SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options. | |

| virtual TVec< string > | getTrainCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual TVec< string > | getTestCostNames () const |

| *** SUBCLASS WRITING: *** | |

| virtual void | train () |

| *** SUBCLASS WRITING: *** | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| *** SUBCLASS WRITING: *** | |

| virtual void | computeOutputAndCosts (const Vec &input, const Vec &target, Vec &output, Vec &costs) const |

| Default calls computeOutput and computeCostsFromOutputs. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| *** SUBCLASS WRITING: *** | |

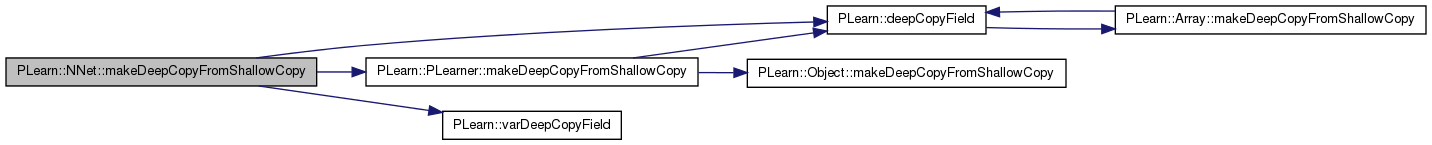

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| virtual Mat | getW1 () |

| Methods to get the network's (learned) parameters. | |

| virtual Mat | getW2 () |

| virtual Mat | getWdirect () |

| virtual Mat | getWout () |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Vec | paramsvalues |

| Var | input |

| Var | target |

| Var | sampleweight |

| Var | w1 |

| Var | w2 |

| Var | wout |

| Var | outbias |

| Var | wdirect |

| Var | wrec |

| VarArray | v1 |

| Matrices used for the quadratic term in the 'ratio' transfer function. | |

| VarArray | v2 |

| Var | hidden_layer |

| Func | input_to_output |

| Func | test_costf |

| Func | output_and_target_to_cost |

| int | nhidden |

| Number of hidden units in first hidden layer (default: 0) | |

| int | nhidden2 |

| Number of hidden units in second hidden layer (default: 0) | |

| int | noutputs |

| Number of output units. | |

| bool | operate_on_bags |

| int | max_bag_size |

| real | weight_decay |

| real | bias_decay |

| real | layer1_weight_decay |

| real | layer1_bias_decay |

| real | layer2_weight_decay |

| real | layer2_bias_decay |

| real | output_layer_weight_decay |

| real | output_layer_bias_decay |

| real | direct_in_to_out_weight_decay |

| real | classification_regularizer |

| real | margin |

| bool | fixed_output_weights |

| int | rbf_layer_size |

| bool | first_class_is_junk |

| string | penalty_type |

| bool | L1_penalty |

| real | input_reconstruction_penalty |

| bool | direct_in_to_out |

| string | output_transfer_func |

| string | hidden_transfer_func |

| real | interval_minval |

| real | interval_maxval |

| bool | do_not_change_params |

| Var | first_hidden_layer |

| bool | first_hidden_layer_is_output |

| bool | transpose_first_hidden_layer |

| int | n_non_params_in_first_hidden_layer |

| TVec< string > | cost_funcs |

| Cost functions. | |

| PP< Optimizer > | optimizer |

| int | batch_size |

| string | initialization_method |

| int | ratio_rank |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Protected Member Functions | |

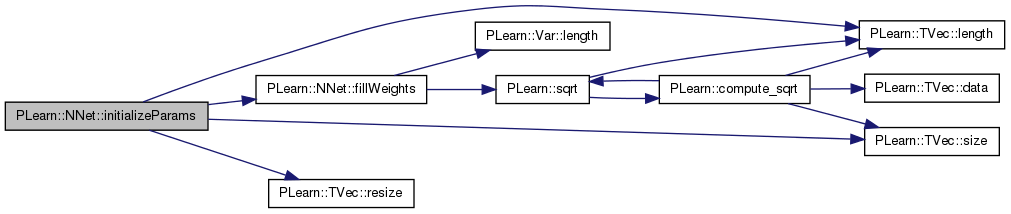

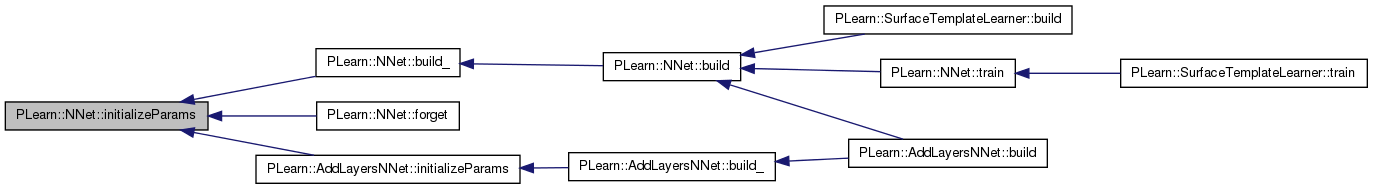

| virtual void | initializeParams (bool set_seed=true) |

| Initialize the parameters. | |

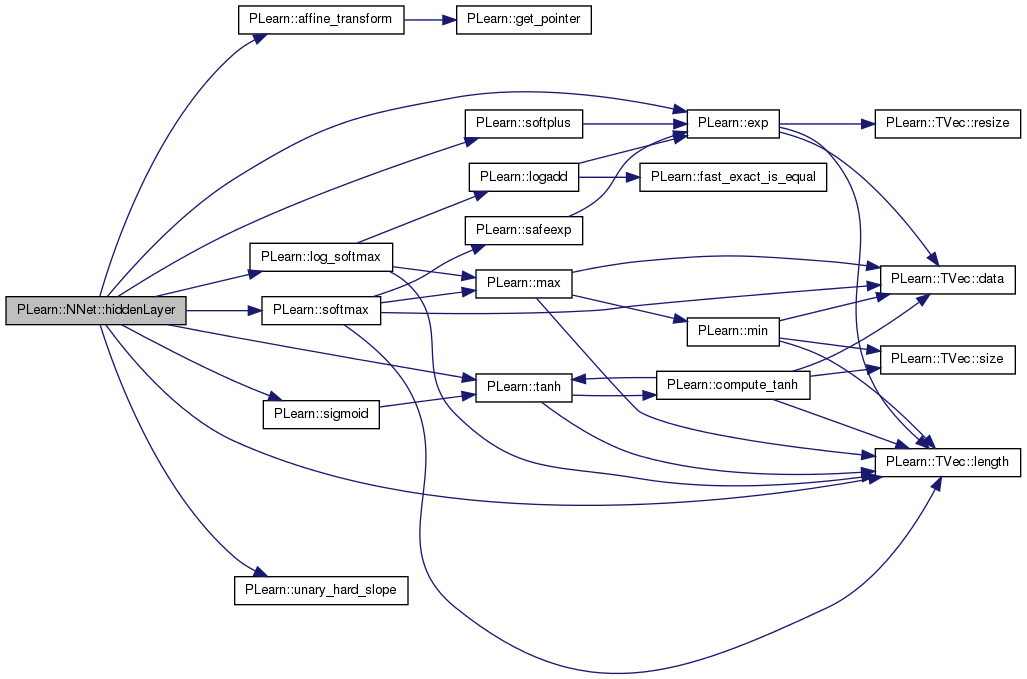

| Var | hiddenLayer (const Var &input, const Var &weights, string transfer_func="default", VarArray *ratio_quad_weights=NULL) |

| Return a variable that is the hidden layer corresponding to given input and weights. | |

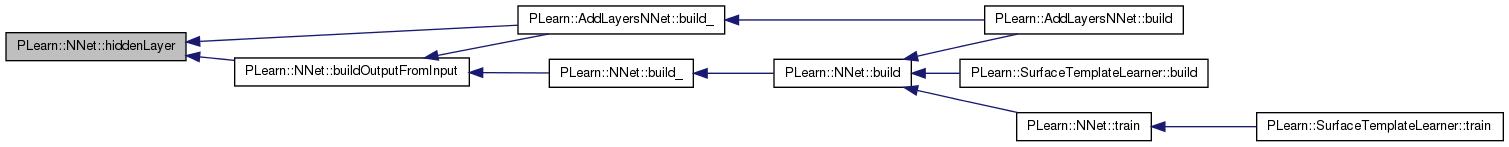

| void | buildOutputFromInput (const Var &the_input, Var &hidden_layer, Var &before_transfer_func) |

| Build the output of the neural network, from the given input. | |

| void | buildBagOutputFromBagInputs (const Var &input, Var &before_transfer_func, const Var &bag_inputs, const Var &bag_size, Var &bag_output) |

| Build the output for a whole bag, from the network defined by the 'input' to 'before_transfer_func' variables. | |

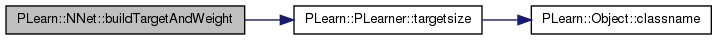

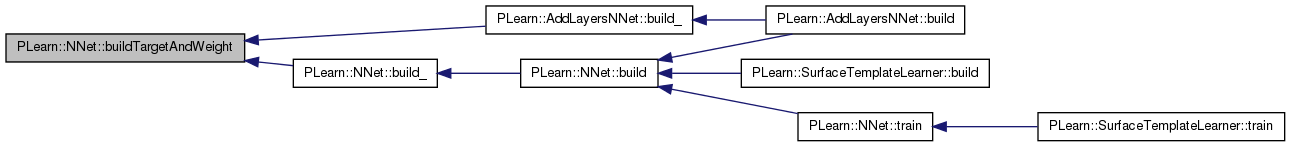

| void | buildTargetAndWeight () |

| Builds the target and sampleweight variables. | |

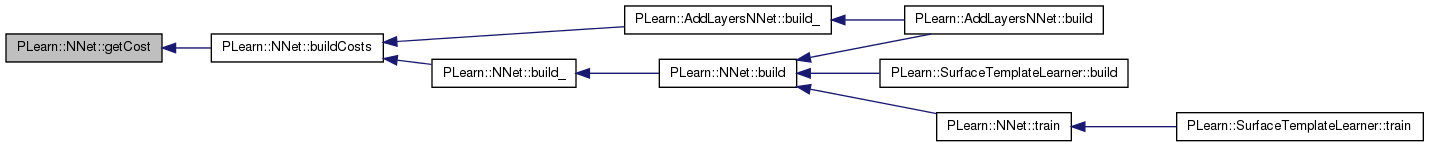

| void | buildCosts (const Var &output, const Var &target, const Var &hidden_layer, const Var &before_transfer_func) |

| Build the costs variable from other variables. | |

| virtual Var | getCost (const string &costname, const Var &output, const Var &target, const Var &before_transfer_func) |

| Return the cost corresponding to the given cost name. | |

| void | buildFuncs (const Var &the_input, const Var &the_output, const Var &the_target, const Var &the_sampleweight, const Var &the_bag_size) |

| Build the various functions used in the network. | |

| void | applyTransferFunc (const Var &before_transfer_func, Var &output) |

| Compute the final output from the activations of the output units. | |

| void | fillWeights (const Var &weights, bool clear_first_row) |

| Fill a matrix of weights according to the 'initialization_method' specified. | |

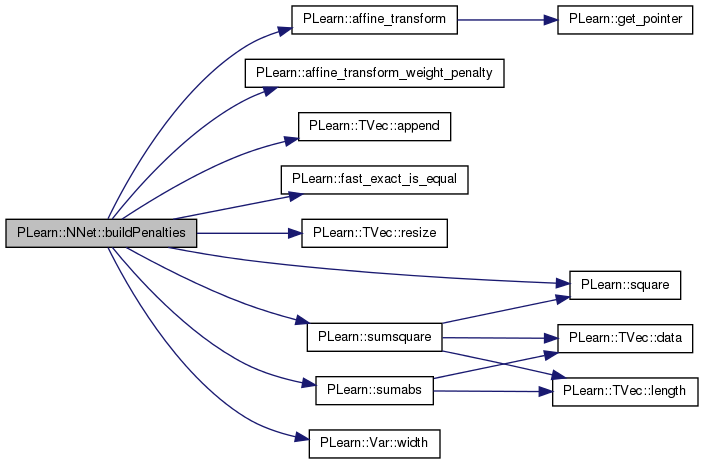

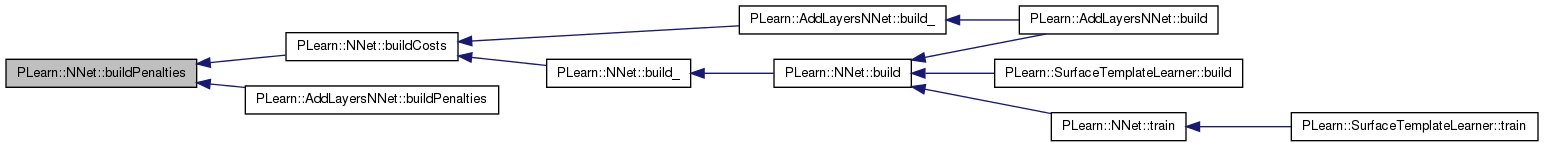

| virtual void | buildPenalties (const Var &hidden_layer) |

| Fill the cost penalties. | |

Static Protected Member Functions | |

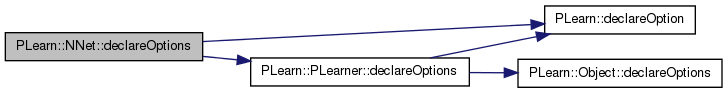

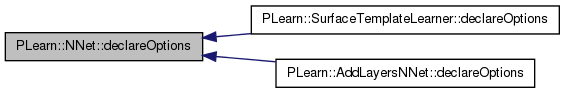

| static void | declareOptions (OptionList &ol) |

| Declares this class' options. | |

Protected Attributes | |

| Var | rbf_centers |

| Var | rbf_sigmas |

| Var | junk_prob |

| Var | alpha_adaboost |

| Var | output |

| Var | predicted_input |

| VarArray | costs |

| VarArray | penalties |

| Var | training_cost |

| Var | test_costs |

| VarArray | invars |

| VarArray | params |

| Var | bag_inputs |

| Used to store the inputs in a bag when 'operate_on_bags' is true. | |

| Mat | store_bag_inputs |

| Used to store test inputs in a bag when 'operate_on_bags' is true. | |

| Var | bag_size |

| Used to store the size of a bag when 'operate_on_bags' is true. | |

| Vec | store_bag_size |

| Used to remember the size of a test bag. | |

| int | n_training_bags |

| Number of bags in the training set. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| **** SUBCLASS WRITING: **** | |

typedef PLearner PLearn::NNet::inherited [private] |

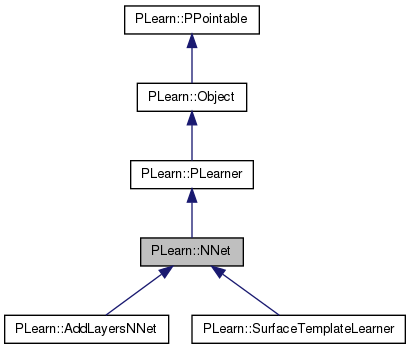

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

| PLearn::NNet::NNet | ( | ) |

Definition at line 101 of file NNet.cc.

References PLearn::PLearner::random_gen.

: n_training_bags(-1),

nhidden(0), nhidden2(0), noutputs(0),

operate_on_bags(false), max_bag_size(20),

weight_decay(0), bias_decay(0),

layer1_weight_decay(0), layer1_bias_decay(0),

layer2_weight_decay(0), layer2_bias_decay(0),

output_layer_weight_decay(0), output_layer_bias_decay(0),

direct_in_to_out_weight_decay(0),

classification_regularizer(0), margin(1),

fixed_output_weights(0), rbf_layer_size(0), first_class_is_junk(1),

penalty_type("L2_square"), L1_penalty(false),

input_reconstruction_penalty(0), direct_in_to_out(false),

output_transfer_func(""), hidden_transfer_func("tanh"),

interval_minval(0), interval_maxval(1),

do_not_change_params(false),

first_hidden_layer_is_output(false), transpose_first_hidden_layer(false),

n_non_params_in_first_hidden_layer(0),

batch_size(1), initialization_method("uniform_linear"), ratio_rank(0)

{

// Use the generic PLearner random number generator.

random_gen = new PRandom();

}

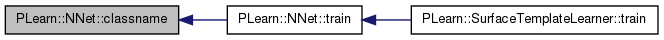

| string PLearn::NNet::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

| OptionList & PLearn::NNet::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

| RemoteMethodMap & PLearn::NNet::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

| Object * PLearn::NNet::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

| void PLearn::NNet::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

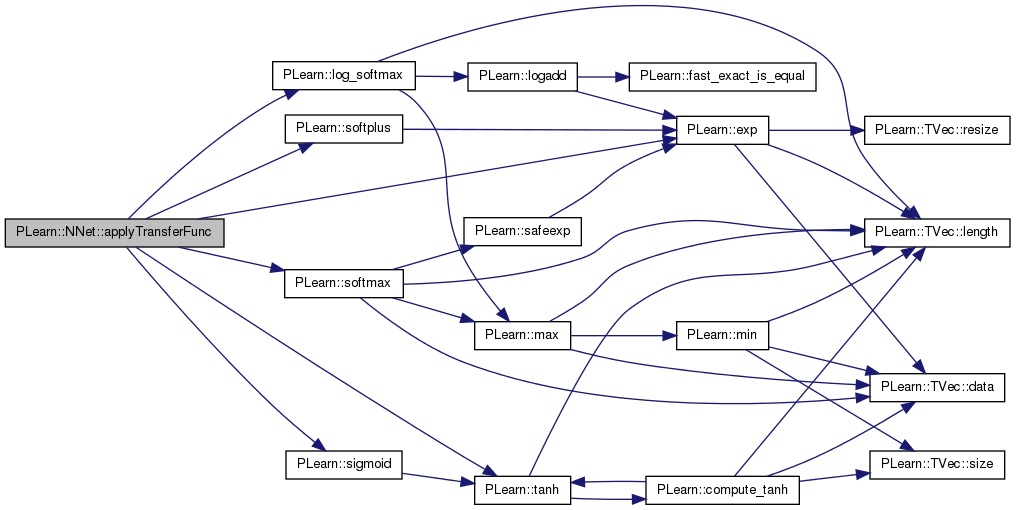

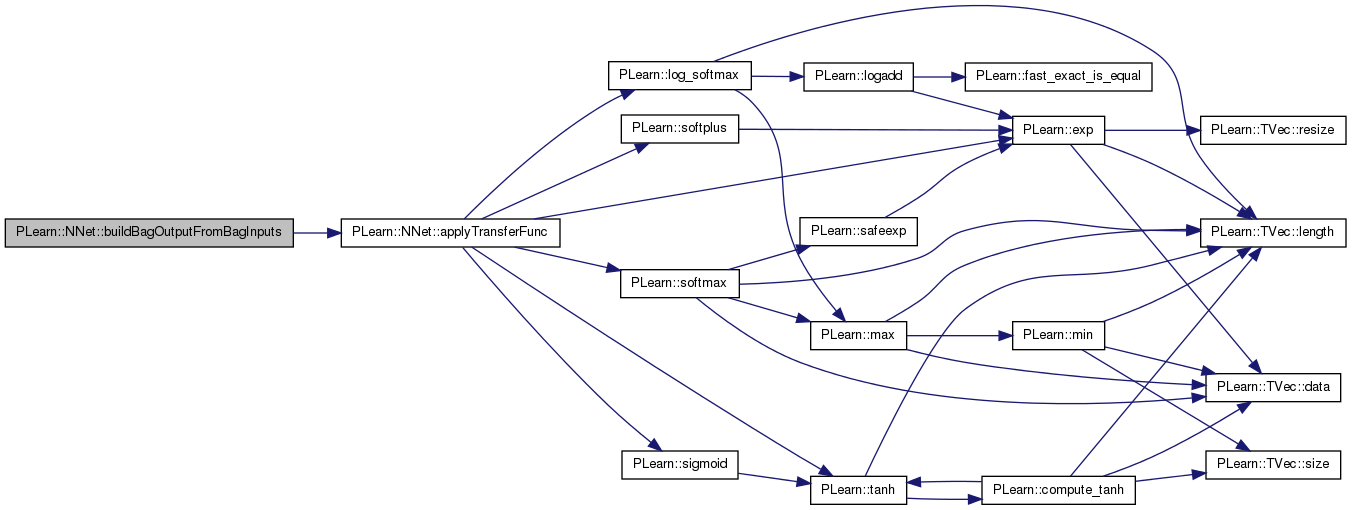

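| void PLearn::NNet::applyTransferFunc | ( | const Var & | before_transfer_func, |

| Var & | output | ||

| ) | [protected] |

Compute the final output from the activations of the output units.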

Definition at line 796 of file NNet.cc.

References PLearn::exp(), interval_maxval, interval_minval, PLearn::log_softmax(), output_transfer_func, PLERROR, PLearn::sigmoid(), PLearn::softmax(), PLearn::softplus(), and PLearn::tanh().

Referenced by buildBagOutputFromBagInputs(), and buildOutputFromInput().

{

size_t p=0;

if(output_transfer_func!="" && output_transfer_func!="none")

{

if(output_transfer_func=="tanh")

output = tanh(before_transfer_func);

else if(output_transfer_func=="sigmoid")

output = sigmoid(before_transfer_func);

else if(output_transfer_func=="softplus")

output = softplus(before_transfer_func);

else if(output_transfer_func=="exp")

output = exp(before_transfer_func);

else if(output_transfer_func=="softmax")

output = softmax(before_transfer_func);

else if (output_transfer_func == "log_softmax")

output = log_softmax(before_transfer_func);

else if ((p=output_transfer_func.find("interval"))!=string::npos)

{

size_t q = output_transfer_func.find(",");

interval_minval = atof(output_transfer_func.substr(p+1,q-(p+1)).c_str());

size_t r = output_transfer_func.find(")");

interval_maxval = atof(output_transfer_func.substr(q+1,r-(q+1)).c_str());

output = interval_minval + (interval_maxval - interval_minval)*sigmoid(before_transfer_func);

}

else

PLERROR("In NNet::applyTransferFunc() -Unknown value for the "

"'output_transfer_func' option: %s",

output_transfer_func.c_str());

}

}
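
The "interval" branch above rescales a sigmoid so that the output lies between two bounds read from the option string. In the listing, `p` is the position of the word `interval`, so the first `substr` call starts inside that word. The sketch below instead reads the numbers between the parentheses, assuming the option is written as `interval(min,max)`. `parseInterval` and `intervalTransfer` are hypothetical stand-alone helpers, not PLearn functions.

```cpp
#include <cassert>
#include <cmath>
#include <cstdlib>
#include <string>

// Hypothetical helper (not part of PLearn): extracts MIN and MAX from a
// string of the assumed form "interval(MIN,MAX)". Returns false when the
// delimiters are missing or out of order.
bool parseInterval(const std::string& spec, double& minval, double& maxval)
{
    const std::string::size_type open  = spec.find('(');
    const std::string::size_type comma = spec.find(',');
    const std::string::size_type close = spec.find(')');
    if (open == std::string::npos || comma == std::string::npos ||
        close == std::string::npos || !(open < comma && comma < close))
        return false;
    minval = std::atof(spec.substr(open + 1, comma - (open + 1)).c_str());
    maxval = std::atof(spec.substr(comma + 1, close - (comma + 1)).c_str());
    return true;
}

// Same mapping as the last line of the "interval" branch:
// minval + (maxval - minval) * sigmoid(activation).
double intervalTransfer(double activation, double minval, double maxval)
{
    assert(minval <= maxval);
    const double s = 1.0 / (1.0 + std::exp(-activation));
    return minval + (maxval - minval) * s;
}
```

With the string `interval(-1,3)`, an activation of 0 maps to the midpoint of the interval, and large positive or negative activations approach the upper and lower bounds respectively.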

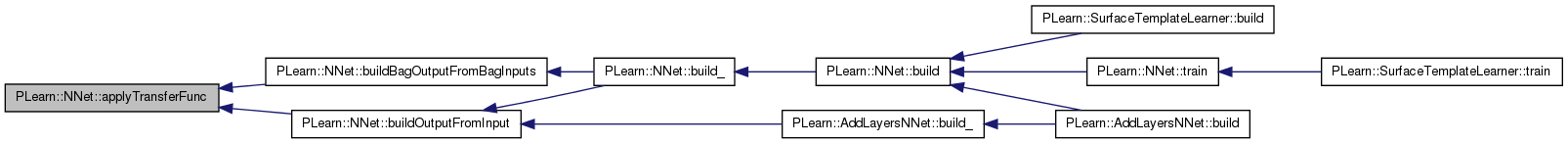

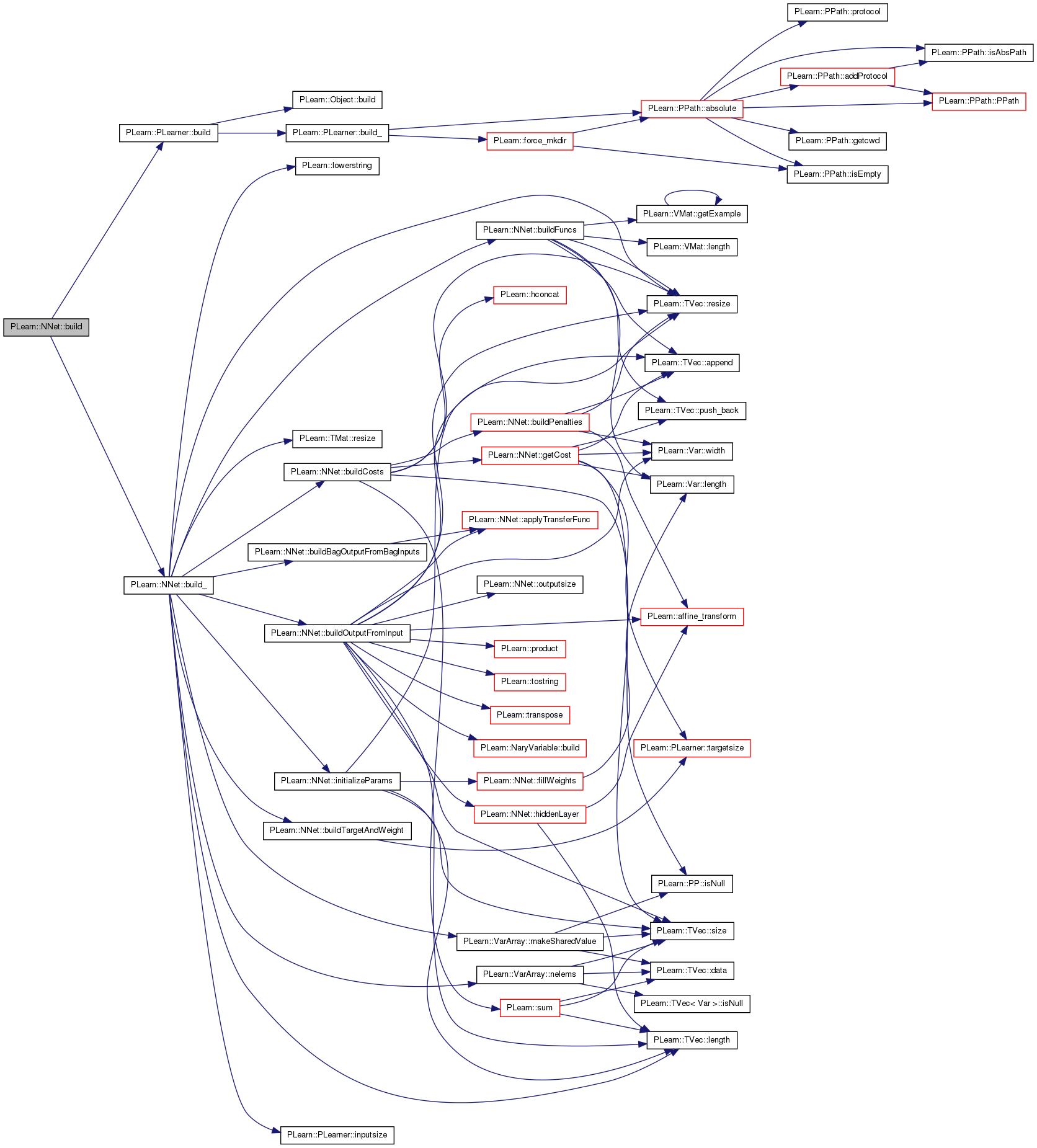

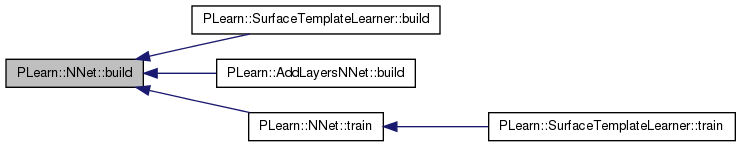

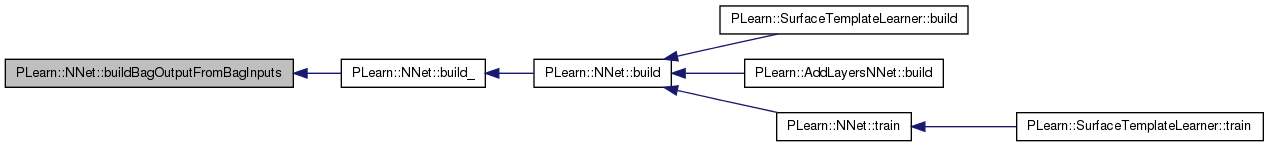

| void PLearn::NNet::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

Definition at line 440 of file NNet.cc.

References PLearn::PLearner::build(), and build_().

Referenced by PLearn::SurfaceTemplateLearner::build(), PLearn::AddLayersNNet::build(), and train().

{

inherited::build();

build_();

}
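
The two-line body above is the usual build()/build_() pattern: each class finishes the parent levels first, then its own level. The sketch below reproduces only that call order with two hypothetical classes, `BaseLearner` and `DerivedNet`, which stand in for PLearner and NNet and contain no PLearn code.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-ins for PLearner and NNet; only the call order matters.
class BaseLearner {
public:
    std::vector<std::string> log;  // records which build_() levels have run
    virtual ~BaseLearner() {}
    virtual void build() { build_(); }
private:
    void build_() { log.push_back("BaseLearner::build_"); }
};

class DerivedNet : public BaseLearner {
    typedef BaseLearner inherited;
public:
    virtual void build()
    {
        inherited::build();  // finish building the parent levels first
        build_();            // then finish building this level
    }
private:
    void build_()
    {
        // The parent's build_() has already run at this point.
        assert(!log.empty());
        log.push_back("DerivedNet::build_");
    }
};
```

Because build_() is private and non-virtual in each class, every level runs exactly its own build_() once per build() call, in base-to-derived order.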

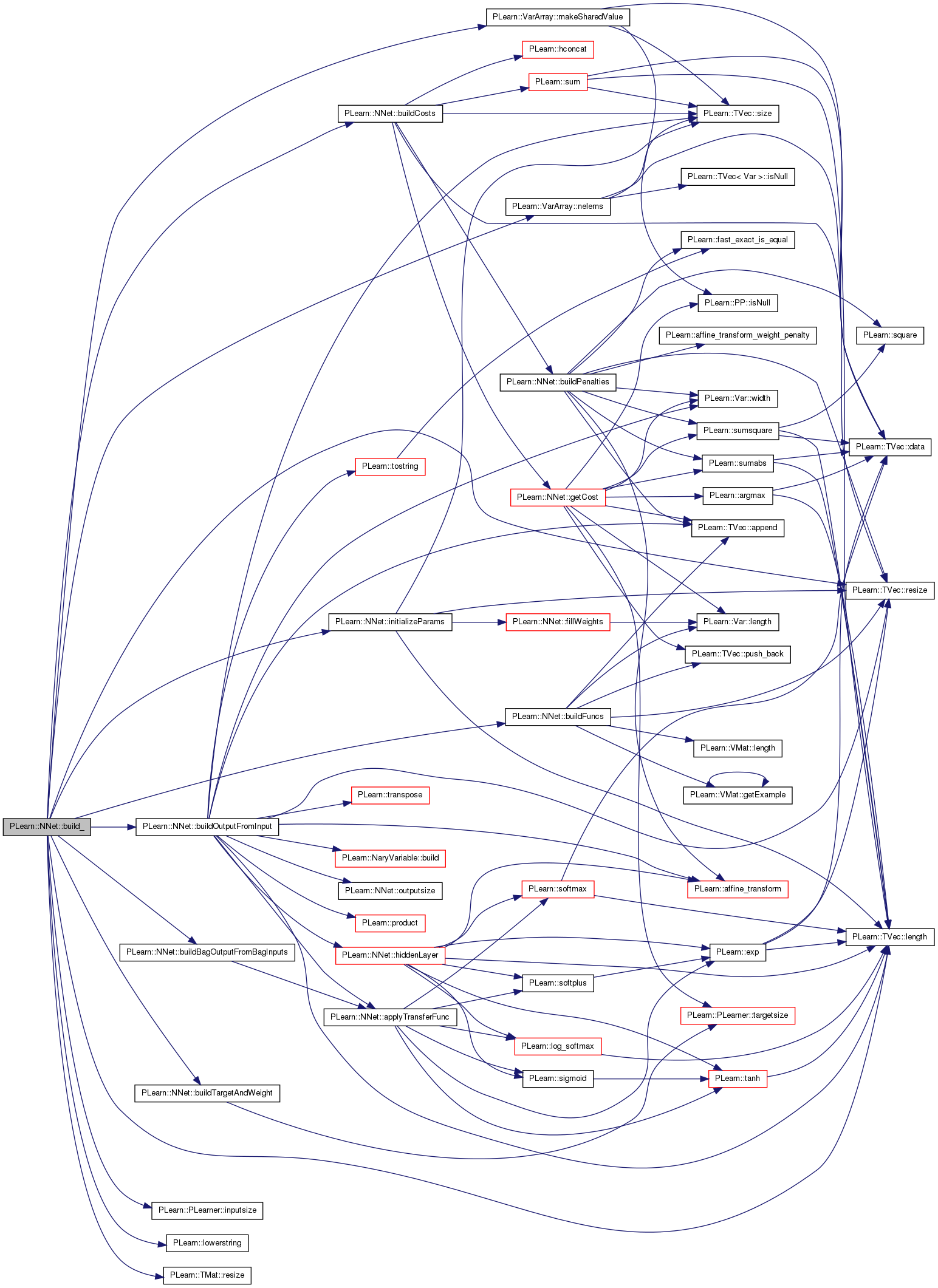

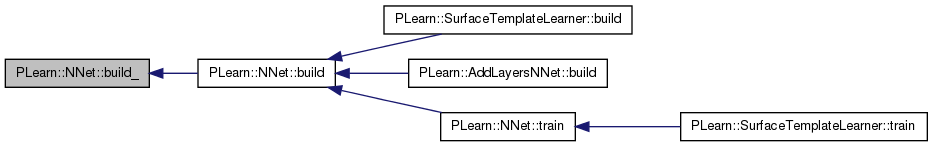

| void PLearn::NNet::build_ | ( | ) | [private] |

**** SUBCLASS WRITING: ****

This method should finish building the object, according to the options that have been set, in *any* situation.

Typical situations include:

You can assume that the parent class' build_() has already been called.

A typical build method will want to know the inputsize(), targetsize() and outputsize(), and may also want to check whether train_set->hasWeights(). All of these methods require a train_set to be set, so the first thing you may want to do is check if(train_set) before doing any heavy building.

Note: build() is always called by setTrainingSet.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

Definition at line 463 of file NNet.cc.

References bag_inputs, bag_size, buildBagOutputFromBagInputs(), buildCosts(), buildFuncs(), buildOutputFromInput(), buildTargetAndWeight(), do_not_change_params, hidden_layer, initializeParams(), input, PLearn::PLearner::inputsize(), PLearn::PLearner::inputsize_, L1_penalty, PLearn::TVec< T >::length(), PLearn::lowerstring(), PLearn::VarArray::makeSharedValue(), max_bag_size, PLearn::VarArray::nelems(), noutputs, operate_on_bags, optimizer, output, params, paramsvalues, penalty_type, PLDEPRECATED, PLERROR, PLWARNING, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), sampleweight, store_bag_inputs, store_bag_size, target, PLearn::PLearner::targetsize_, and PLearn::PLearner::weightsize_.

Referenced by build().

{

/*

* Create Topology Var Graph

*/

// Don't do anything if we don't have a train_set

// It's the only one who knows the inputsize and targetsize anyway...

if(inputsize_>=0 && targetsize_>=0 && weightsize_>=0)

{

// Ensure the number of outputs has been specified

if (noutputs == 0)

PLERROR("NNet: the option 'noutputs' must be specified");

// Initialize input.

input = Var(1, inputsize(), "input");

// Initialize bag stuff.

if (operate_on_bags) {

bag_size = Var(1, 1, "bag_size");

store_bag_size.resize(1);

store_bag_inputs.resize(max_bag_size, inputsize());

}

params.resize(0);

Var before_transfer_func;

// Build main network graph.

buildOutputFromInput(input, hidden_layer, before_transfer_func);

// When operating on bags, use this network to compute the output on a

// whole bag, which also becomes the output of the network.

if (operate_on_bags) {

bag_inputs = Var(max_bag_size, inputsize(), "bag_inputs");

buildBagOutputFromBagInputs(input, before_transfer_func,

bag_inputs, bag_size, output);

}

// Build target and weight variables.

buildTargetAndWeight();

// Build costs.

if( L1_penalty )

{

PLDEPRECATED("Option \"L1_penalty\" deprecated. Please use \"penalty_type = L1\" instead.");

L1_penalty = 0;

penalty_type = "L1";

}

string pt = lowerstring( penalty_type );

if( pt == "l1" )

penalty_type = "L1";

else if( pt == "l1_square" || pt == "l1 square" || pt == "l1square" )

penalty_type = "L1_square";

else if( pt == "l2_square" || pt == "l2 square" || pt == "l2square" )

penalty_type = "L2_square";

else if( pt == "l2" )

{

PLWARNING("L2 penalty not supported, assuming you want L2 square");

penalty_type = "L2_square";

}

else

PLERROR("penalty_type \"%s\" not supported", penalty_type.c_str());

buildCosts(output, target, hidden_layer, before_transfer_func);

// Shared values hack...

if (!do_not_change_params) {

if(paramsvalues.length() == params.nelems())

params << paramsvalues;

else

{

paramsvalues.resize(params.nelems());

initializeParams();

if(optimizer)

optimizer->reset();

}

params.makeSharedValue(paramsvalues);

}

// Build functions.

buildFuncs(operate_on_bags ? bag_inputs : input,

output, target, sampleweight,

operate_on_bags ? bag_size : NULL);

}

}
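
Part of build_() maps the user-supplied `penalty_type` spelling to one of three canonical names. The sketch below isolates that step as a hypothetical free function, `normalizePenaltyType`. It uses the standard library in place of `PLearn::lowerstring`, `PLWARNING` and `PLERROR`, so an unsupported value throws an exception here instead of calling PLERROR.

```cpp
#include <cassert>
#include <cctype>
#include <stdexcept>
#include <string>

// Hypothetical stand-alone version of the normalisation step in build_().
std::string normalizePenaltyType(const std::string& penalty_type)
{
    // Lower-case copy, playing the role of PLearn::lowerstring.
    std::string pt = penalty_type;
    for (std::string::size_type i = 0; i < pt.size(); ++i)
        pt[i] = static_cast<char>(std::tolower(static_cast<unsigned char>(pt[i])));

    if (pt == "l1")
        return "L1";
    if (pt == "l1_square" || pt == "l1 square" || pt == "l1square")
        return "L1_square";
    if (pt == "l2_square" || pt == "l2 square" || pt == "l2square")
        return "L2_square";
    if (pt == "l2")
        return "L2_square";  // plain L2 is unsupported; build_() warns and falls back
    throw std::invalid_argument("penalty_type \"" + penalty_type + "\" not supported");
}

// Example use: accepted spellings collapse to a canonical name.
inline void examplePenaltySpellings()
{
    assert(normalizePenaltyType("L2 Square") == "L2_square");
    assert(normalizePenaltyType("l1") == "L1");
}
```

The deprecated `L1_penalty` flag is handled before this step in the listing: it is cleared and `penalty_type` is set to "L1".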

| void PLearn::NNet::buildBagOutputFromBagInputs | ( | const Var & | input, |

| Var & | before_transfer_func, | ||

| const Var & | bag_inputs, | ||

| const Var & | bag_size, | ||

| Var & | bag_output | ||

| ) | [protected] |

Build the output for a whole bag, from the network defined by the 'input' to 'before_transfer_func' variables.

'before_transfer_func' is modified so as to hold the activations for the bag rather than for individual samples.

Definition at line 449 of file NNet.cc.

References applyTransferFunc().

Referenced by build_().

{

Func in_to_out = Func(input, before_transfer_func);

Var tmp_out = new UnfoldedFuncVariable(bag_inputs, in_to_out, false,

bag_size);

before_transfer_func = new LogAddVariable(tmp_out, bag_size, "per_column");

applyTransferFunc(before_transfer_func, bag_output);

}
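
The bag output is obtained by applying the per-sample network to every row of the bag and then combining the activations with a LogAddVariable. The sketch below shows what such a combination could look like. It assumes that "per_column" means a log-sum-exp down each column over the first `bag_size` rows, which is inferred from the variable's name and arguments and is not confirmed by the listing. `Matrix` and `logAddPerColumn` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

typedef std::vector<std::vector<double> > Matrix;  // one row per bag sample

// Numerically stable log(sum_i exp(a[i][j])) over the first bag_size rows,
// computed independently for each column j. Rows beyond bag_size are ignored.
std::vector<double> logAddPerColumn(const Matrix& activations, std::size_t bag_size)
{
    assert(bag_size >= 1 && bag_size <= activations.size());
    const std::size_t ncols = activations[0].size();
    std::vector<double> result(ncols);
    for (std::size_t j = 0; j < ncols; ++j) {
        // Subtract the column maximum before exponentiating to avoid overflow.
        double maxval = activations[0][j];
        for (std::size_t i = 1; i < bag_size; ++i)
            maxval = std::max(maxval, activations[i][j]);
        double sum = 0.0;
        for (std::size_t i = 0; i < bag_size; ++i)
            sum += std::exp(activations[i][j] - maxval);
        result[j] = maxval + std::log(sum);
    }
    return result;
}
```

Ignoring the rows past `bag_size` mirrors the fact that `bag_inputs` is allocated with `max_bag_size` rows while a given bag may contain fewer samples.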

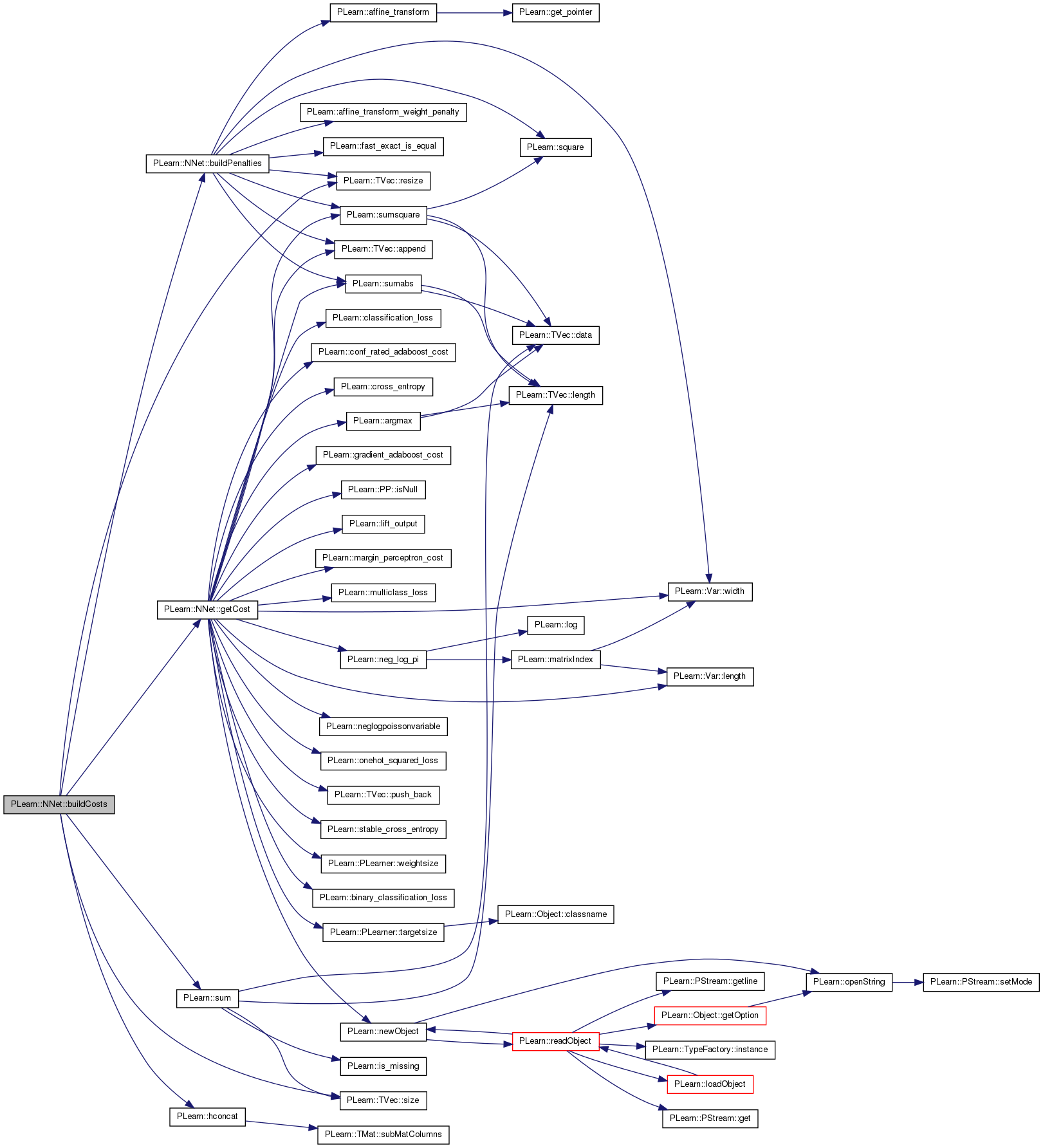

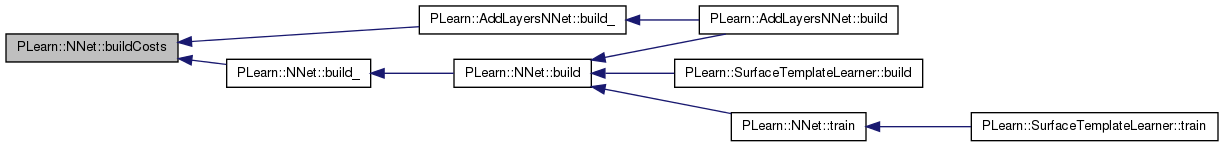

| void PLearn::NNet::buildCosts | ( | const Var & | output, |

| const Var & | target, | ||

| const Var & | hidden_layer, | ||

| const Var & | before_transfer_func | ||

| ) | [protected] |

Build the costs variable from other variables.

Definition at line 576 of file NNet.cc.

References buildPenalties(), cost_funcs, costs, getCost(), PLearn::hconcat(), penalties, PLERROR, PLearn::TVec< T >::resize(), sampleweight, PLearn::TVec< T >::size(), PLearn::sum(), test_costs, training_cost, and PLearn::PLearner::weightsize_.

Referenced by PLearn::AddLayersNNet::build_(), and build_().

{

int ncosts = cost_funcs.size();

if(ncosts<=0)

PLERROR("In NNet::buildCosts - Empty cost_funcs : must at least specify the cost function to optimize!");

costs.resize(ncosts);

for (int k=0; k<ncosts; k++)

costs[k] = getCost(cost_funcs[k], the_output, the_target, before_transfer_func);

/*

* weight and bias decay penalty

*/

// create penalties

buildPenalties(hidden_layer);

test_costs = hconcat(costs);

// Apply penalty to cost.

// If there is no penalty, we still add costs[0] as the first cost, in

// order to keep the same number of costs as if there was a penalty.

if(penalties.size() != 0) {

if (weightsize_>0)

// only multiply by sampleweight if there are weights

training_cost = hconcat(sampleweight*sum(hconcat(costs[0] & penalties))

& (test_costs*sampleweight));

else {

training_cost = hconcat(sum(hconcat(costs[0] & penalties)) & test_costs);

}

}

else {

if(weightsize_>0) {

// only multiply by sampleweight if there are weights

training_cost = hconcat(costs[0]*sampleweight & test_costs*sampleweight);

} else {

training_cost = hconcat(costs[0] & test_costs);

}

}

training_cost->setName("training_cost");

test_costs->setName("test_costs");

the_output->setName("output");

}
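
The four hconcat branches above all build the same layout: a first element made of costs[0] plus every penalty, followed by all test costs, with everything multiplied by the sample weight when the training set has weights. The sketch below restates this on plain numbers. `trainingCost` is a hypothetical function, and scalars stand in for PLearn Vars.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical scalar version of the branches in buildCosts().
std::vector<double> trainingCost(const std::vector<double>& costs,
                                 const std::vector<double>& penalties,
                                 bool has_weight, double sampleweight)
{
    assert(!costs.empty());  // buildCosts() raises an error on empty cost_funcs
    const double w = has_weight ? sampleweight : 1.0;

    // First element: costs[0] plus all penalties (just costs[0] if none).
    double first = costs[0];
    for (std::size_t i = 0; i < penalties.size(); ++i)
        first += penalties[i];

    std::vector<double> result;
    result.push_back(w * first);
    // Remaining elements: every cost in cost_funcs order (the test costs).
    for (std::size_t k = 0; k < costs.size(); ++k)
        result.push_back(w * costs[k]);
    return result;
}
```

The result always has one more element than `costs`, which matches the comment in the listing about keeping the same number of costs whether or not penalties are present.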

| void PLearn::NNet::buildFuncs | ( | const Var & | the_input, |

| const Var & | the_output, | ||

| const Var & | the_target, | ||

| const Var & | the_sampleweight, | ||

| const Var & | the_bag_size | ||

| ) | [protected] |

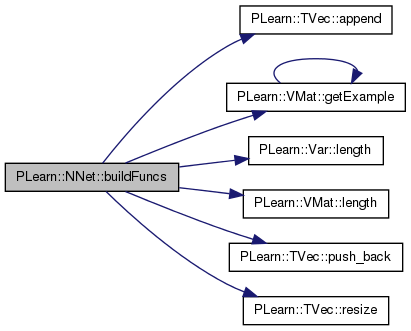

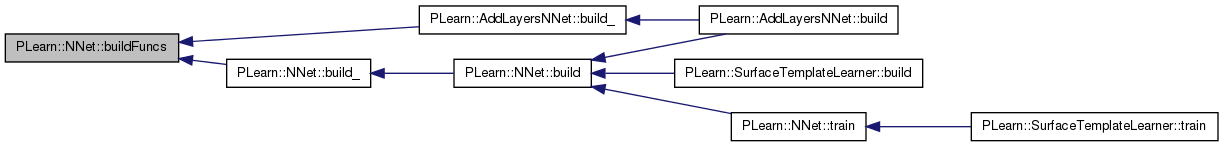

Build the various functions used in the network.

Definition at line 622 of file NNet.cc.

References PLearn::TVec< T >::append(), PLearn::VMat::getExample(), i, input, input_to_output, invars, PLearn::Var::length(), PLearn::VMat::length(), output_and_target_to_cost, PLearn::TVec< T >::push_back(), PLearn::TVec< T >::resize(), target, test_costf, test_costs, and PLearn::PLearner::train_set.

Referenced by PLearn::AddLayersNNet::build_(), and build_().

{

invars.resize(0);

VarArray outvars;

VarArray testinvars;

if (the_input)

{

invars.push_back(the_input);

testinvars.push_back(the_input);

}

if (the_bag_size) {

invars.append(the_bag_size);

testinvars.append(the_bag_size);

}

if (the_output)

outvars.push_back(the_output);

if(the_target)

{

invars.push_back(the_target);

testinvars.push_back(the_target);

outvars.push_back(the_target);

}

if(the_sampleweight)

{

invars.push_back(the_sampleweight);

}

input_to_output = Func(the_input, the_output);

test_costf = Func(testinvars, the_output&test_costs);

test_costf->recomputeParents();

output_and_target_to_cost = Func(outvars, test_costs);

// Since there will be a fprop() in the network, we need to make sure the

// input is valid.

if (train_set && train_set->length() >= the_input->length()) {

Vec input, target;

real weight;

for (int i = 0; i < the_input->length(); i++) {

train_set->getExample(i, input, target, weight);

the_input->matValue(i) << input;

}

}

output_and_target_to_cost->recomputeParents();

}

| void PLearn::NNet::buildOutputFromInput | ( | const Var & | the_input, |

| Var & | hidden_layer, | ||

| Var & | before_transfer_func | ||

| ) | [protected] |

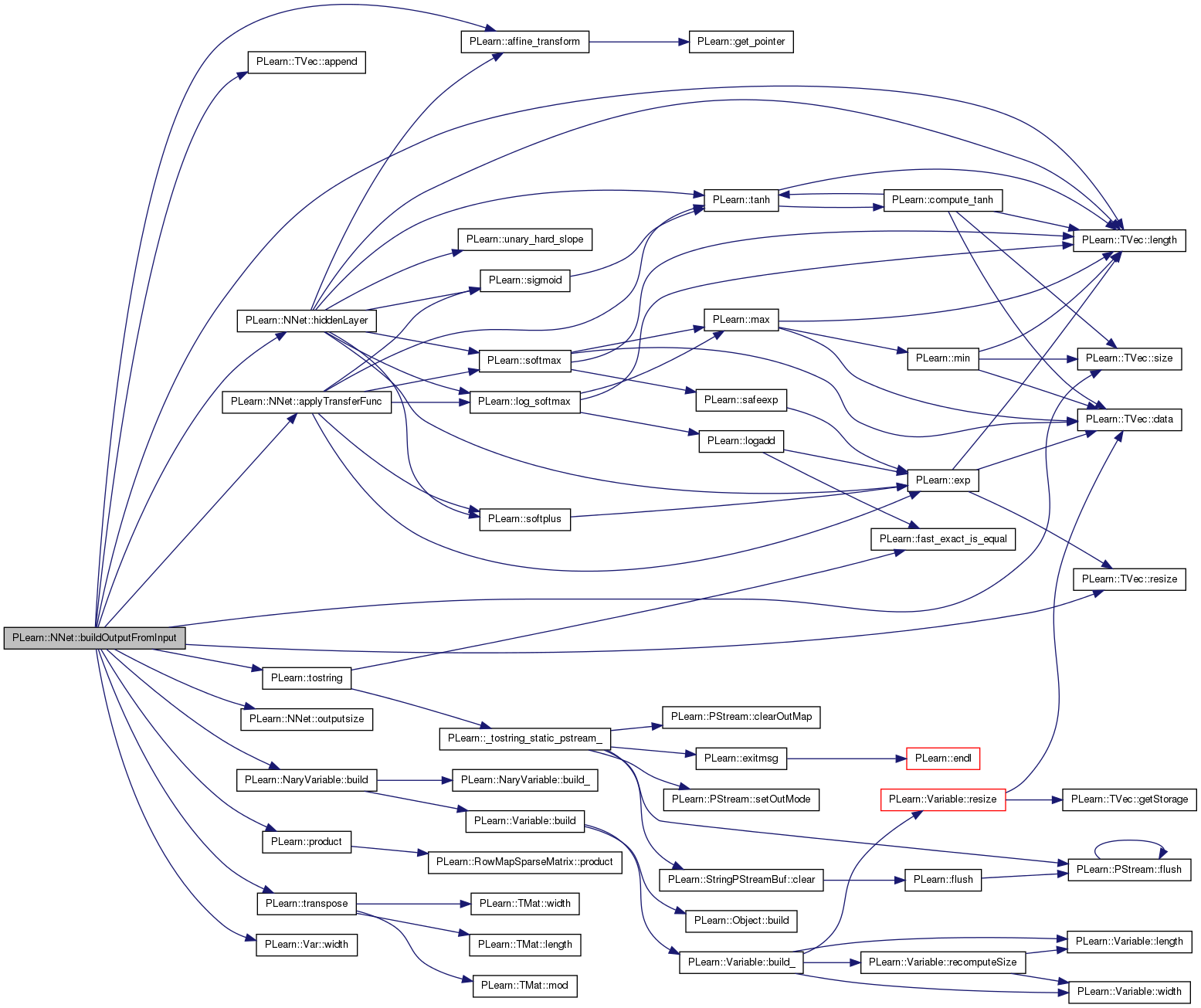

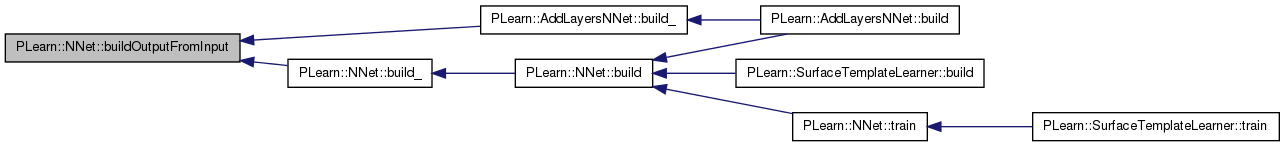

Build the output of the neural network, from the given input.

The hidden layer is returned through the 'hidden_layer' parameter, and the output before the transfer function is applied is returned through the 'before_transfer_func' parameter.

Definition at line 668 of file NNet.cc.

References PLearn::affine_transform(), PLearn::TVec< T >::append(), applyTransferFunc(), PLearn::NaryVariable::build(), direct_in_to_out, first_class_is_junk, first_hidden_layer, first_hidden_layer_is_output, fixed_output_weights, hidden_layer, hidden_transfer_func, hiddenLayer(), i, junk_prob, PLearn::TVec< T >::length(), n_non_params_in_first_hidden_layer, nhidden, nhidden2, outbias, output, outputsize(), params, PLASSERT, PLERROR, PLearn::product(), ratio_rank, rbf_centers, rbf_layer_size, rbf_sigmas, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::tostring(), PLearn::transpose(), transpose_first_hidden_layer, v1, v2, PLearn::NaryVariable::varray, w1, w2, wdirect, PLearn::Var::width(), and wout.

Referenced by PLearn::AddLayersNNet::build_(), and build_().

{

output = the_input;

// First hidden layer.

if (first_hidden_layer)

{

NaryVariable* layer_var = dynamic_cast<NaryVariable*>((Variable*)first_hidden_layer);

if (!layer_var)

PLERROR("In NNet::buildOutputFromInput - 'first_hidden_layer' should be "

"from a subclass of NaryVariable");

if (layer_var->varray.size() < 1)

layer_var->varray.resize(1);

layer_var->varray[0] =

transpose_first_hidden_layer ? transpose(output)

: output; // Here output = NNet input.

layer_var->build(); // make sure everything is consistent and finish the build

if (layer_var->varray.size()<2)

PLERROR("In NNet::buildOutputFromInput - 'first_hidden_layer' should have parameters");

int index_max_param =

layer_var->varray.length() - n_non_params_in_first_hidden_layer;

for (int i = 1; i < index_max_param; i++)

params.append(layer_var->varray[i]);

hidden_layer = transpose_first_hidden_layer ? transpose(layer_var)

: layer_var;

output = hidden_layer;

}

else if(nhidden>0)

{

w1 = Var(1 + the_input->width(), nhidden, "w1");

params.append(w1);

if (hidden_transfer_func == "ratio") {

v1.resize(ratio_rank > 0 ? ratio_rank

: ratio_rank == -1 ? the_input->width()

: 0);

for (int i = 0; i < v1.length(); i++) {

v1[i] = Var(the_input->width(), nhidden, "v1[" + tostring(i) + "]");

params.append(v1[i]);

}

}

hidden_layer = hiddenLayer(output, w1, "default", &v1);

output = hidden_layer;

// TODO BEWARE! This is not the same 'hidden_layer' as before.

}

// second hidden layer

if(nhidden2>0)

{

PLASSERT( !first_hidden_layer_is_output );

w2 = Var(1 + output.width(), nhidden2, "w2");

params.append(w2);

if (hidden_transfer_func == "ratio") {

v2.resize(ratio_rank > 0 ? ratio_rank

: ratio_rank == -1 ? output->width()

: 0);

for (int i = 0; i < v2.length(); i++) {

v2[i] = Var(output->width(), nhidden2, "v2[" + tostring(i) + "]");

params.append(v2[i]);

}

}

output = hiddenLayer(output, w2, "default", &v2);

}

if (nhidden2>0 && nhidden==0 && !first_hidden_layer)

PLERROR("NNet:: can't have nhidden2 (=%d) > 0 while nhidden=0",nhidden2);

if (rbf_layer_size>0)

{

if (first_class_is_junk)

{

rbf_centers = Var(outputsize()-1, rbf_layer_size, "rbf_centers");

rbf_sigmas = Var(outputsize()-1, "rbf_sigmas");

PLERROR("In NNet.cc, the code needs to be completed, rbf_layer isn't declared and thus it doesn't compile with the line below");

// TODO (Also put back the corresponding include).

// output = hconcat(rbf_layer(output,rbf_centers,rbf_sigmas)&junk_prob);

params.append(junk_prob);

}

else

{

rbf_centers = Var(outputsize(), rbf_layer_size, "rbf_centers");

rbf_sigmas = Var(outputsize(), "rbf_sigmas");

PLERROR("In NNet.cc, the code needs to be completed, rbf_layer isn't declared and thus it doesn't compile with the line below");

// output = rbf_layer(output,rbf_centers,rbf_sigmas);

}

params.append(rbf_centers);

params.append(rbf_sigmas);

}

// Output layer before transfer function.

if (!first_hidden_layer_is_output) {

wout = Var(1 + output->width(), outputsize(), "wout");

output = affine_transform(output, wout, true);

output->setName("output_activations");

if (!fixed_output_weights)

params.append(wout);

else

{

outbias = Var(1, output->width(), "outbias");

output = output + outbias;

params.append(outbias);

}

} else {

// Verify we have provided a 'first_hidden_layer' Variable: even though

// one might want to use this option without such a Var, it would be

// simpler in this case to just set 'nhidden' to 0.

if (!first_hidden_layer)

PLERROR("In NNet::buildOutputFromInput - The option "

"'first_hidden_layer_is_output' can only be used in "

"conjunction with a 'first_hidden_layer' Variable");

}

// Direct in-to-out layer.

if(direct_in_to_out)

{

wdirect = Var(the_input->width(), outputsize(), "wdirect");

output += product(the_input, wdirect);

params.append(wdirect);

if (nhidden <= 0)

PLERROR("In NNet::buildOutputFromInput - It seems weird to use direct in-to-out connections if there is no hidden layer anyway");

}

before_transfer_func = output;

applyTransferFunc(before_transfer_func, output);

}
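
The weight matrices created above have sizes that follow directly from the layer widths: each layer matrix has one more row than the width of the layer feeding it, presumably for the bias. The sketch below computes those shapes for the default path only. It assumes no 'first_hidden_layer' Var, no RBF layer, no "ratio" transfer function, fixed_output_weights left false, and outputsize() equal to noutputs. `Shape`, `NetShapes` and `weightShapes` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>

struct Shape {
    std::size_t rows;
    std::size_t cols;
    std::size_t size() const { return rows * cols; }
};

struct NetShapes {
    Shape w1, w2, wout, wdirect;  // an unused matrix keeps shape 0 x 0
    std::size_t total() const
    {
        return w1.size() + w2.size() + wout.size() + wdirect.size();
    }
};

static Shape makeShape(std::size_t rows, std::size_t cols)
{
    Shape s;
    s.rows = rows;
    s.cols = cols;
    return s;
}

// Shapes as created in buildOutputFromInput() on the default path.
NetShapes weightShapes(std::size_t inputsize, std::size_t nhidden,
                       std::size_t nhidden2, std::size_t noutputs,
                       bool direct_in_to_out)
{
    // The listing raises an error in both of these configurations.
    assert(nhidden > 0 || nhidden2 == 0);
    assert(nhidden > 0 || !direct_in_to_out);

    NetShapes s = NetShapes();      // all shapes start at 0 x 0
    std::size_t width = inputsize;  // width of the layer feeding the next one
    if (nhidden > 0) {
        s.w1 = makeShape(1 + width, nhidden);
        width = nhidden;
    }
    if (nhidden2 > 0) {
        s.w2 = makeShape(1 + width, nhidden2);
        width = nhidden2;
    }
    s.wout = makeShape(1 + width, noutputs);
    if (direct_in_to_out)
        s.wdirect = makeShape(inputsize, noutputs);  // no extra row here
    return s;
}
```

For example, 3 inputs, 4 hidden units and 2 outputs give a 4 x 4 `w1` and a 5 x 2 `wout`, so 26 values in total under these assumptions.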

| void PLearn::NNet::buildPenalties | ( | const Var & | hidden_layer | ) | [protected, virtual] |

Fill the cost penalties.

Reimplemented in PLearn::AddLayersNNet.

Definition at line 831 of file NNet.cc.

References PLearn::affine_transform(), PLearn::affine_transform_weight_penalty(), PLearn::TVec< T >::append(), bias_decay, direct_in_to_out_weight_decay, PLearn::fast_exact_is_equal(), input, input_reconstruction_penalty, layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, output_layer_bias_decay, output_layer_weight_decay, params, penalties, penalty_type, predicted_input, PLearn::TVec< T >::resize(), PLearn::square(), PLearn::sumabs(), PLearn::sumsquare(), w1, w2, wdirect, weight_decay, PLearn::Var::width(), wout, and wrec.

Referenced by buildCosts(), and PLearn::AddLayersNNet::buildPenalties().

{

penalties.resize(0); // prevents penalties from being added twice by consecutive builds

if(w1 && (!fast_exact_is_equal(layer1_weight_decay + weight_decay, 0) ||

!fast_exact_is_equal(layer1_bias_decay + bias_decay, 0)))

penalties.append(affine_transform_weight_penalty(w1, (layer1_weight_decay + weight_decay), (layer1_bias_decay + bias_decay), penalty_type));

if(w2 && (!fast_exact_is_equal(layer2_weight_decay + weight_decay, 0) ||

!fast_exact_is_equal(layer2_bias_decay + bias_decay, 0)))

penalties.append(affine_transform_weight_penalty(w2, (layer2_weight_decay + weight_decay), (layer2_bias_decay + bias_decay), penalty_type));

if(wout && (!fast_exact_is_equal(output_layer_weight_decay + weight_decay, 0) ||

!fast_exact_is_equal(output_layer_bias_decay + bias_decay, 0)))

penalties.append(affine_transform_weight_penalty(wout, (output_layer_weight_decay + weight_decay),

(output_layer_bias_decay + bias_decay), penalty_type));

if(wdirect &&

!fast_exact_is_equal(direct_in_to_out_weight_decay + weight_decay, 0))

{

if (penalty_type == "L1_square")

penalties.append(square(sumabs(wdirect))*(direct_in_to_out_weight_decay + weight_decay));

else if (penalty_type == "L1")

penalties.append(sumabs(wdirect)*(direct_in_to_out_weight_decay + weight_decay));

else if (penalty_type == "L2_square")

penalties.append(sumsquare(wdirect)*(direct_in_to_out_weight_decay + weight_decay));

}

if (input_reconstruction_penalty>0)

{

wrec = Var(1 + hidden_layer->width(),input->width(),"wrec");

predicted_input = affine_transform(hidden_layer, wrec, true);

params.append(wrec);

penalties.append(input_reconstruction_penalty*sumsquare(predicted_input - input));

}

}
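
For the direct input-to-output weights, the three penalty types differ only in how the weights are aggregated before being scaled by the decay coefficient. The sketch below restates the `wdirect` branch on a flat vector of weights. `weightPenalty` is a hypothetical function, and `decay` stands for direct_in_to_out_weight_decay + weight_decay.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical scalar version of the wdirect penalty in buildPenalties().
double weightPenalty(const std::vector<double>& weights, double decay,
                     const std::string& penalty_type)
{
    double sum_abs = 0.0;  // plays the role of sumabs(wdirect)
    double sum_sq  = 0.0;  // plays the role of sumsquare(wdirect)
    for (std::size_t i = 0; i < weights.size(); ++i) {
        sum_abs += std::fabs(weights[i]);
        sum_sq  += weights[i] * weights[i];
    }
    if (penalty_type == "L1")
        return decay * sum_abs;
    if (penalty_type == "L1_square")
        return decay * sum_abs * sum_abs;
    // build_() has already normalised penalty_type to one of three names.
    assert(penalty_type == "L2_square");
    return decay * sum_sq;
}
```

Note that "L1_square" squares the sum of absolute values, which is not the same as the sum of squares used by "L2_square".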

| void PLearn::NNet::buildTargetAndWeight | ( | ) | [protected] |

Builds the target and sampleweight variables.

Definition at line 865 of file NNet.cc.

References operate_on_bags, PLERROR, sampleweight, target, PLearn::PLearner::targetsize(), and PLearn::PLearner::weightsize_.

Referenced by PLearn::AddLayersNNet::build_(), and build_().

{

int ts = operate_on_bags ? targetsize() - 1 // Remove bag information.

: targetsize();

target = Var(1, ts, "target");

if(weightsize_>0)

{

if (weightsize_!=1)

PLERROR("In NNet::buildTargetAndWeight - Expected weightsize to be 1 or 0 (or unspecified = -1, meaning 0), got %d",weightsize_);

sampleweight = Var(1, "weight");

}

}

| string PLearn::NNet::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

Definition at line 96 of file NNet.cc.

Referenced by train().

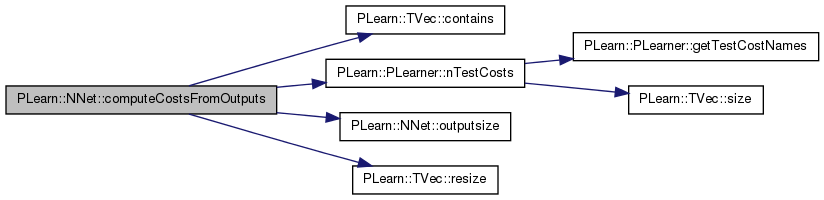

| void PLearn::NNet::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should be defined in subclasses to compute the weighted costs from an already computed output. The costs should correspond to the cost names returned by getTestCostNames().

NOTE: In exotic cases, the cost may also depend on some information in the input, which is why the method also gets to see it.

Implements PLearn::PLearner.

Definition at line 880 of file NNet.cc.

References PLearn::TVec< T >::contains(), cost_funcs, PLearn::PLearner::nTestCosts(), operate_on_bags, output_and_target_to_cost, outputsize(), PLASSERT_MSG, PLERROR, and PLearn::TVec< T >::resize().

{

PLASSERT_MSG( !operate_on_bags, "Not implemented" );

#ifdef BOUNDCHECK

// Stable cross entropy needs the value *before* the transfer function.

if (cost_funcs.contains("stable_cross_entropy") or

(cost_funcs.contains("NLL") and outputsize() == 1))

PLERROR("In NNet::computeCostsFromOutputs - Cannot directly compute stable "

"cross entropy from output and target");

#endif

costsv.resize(nTestCosts());

output_and_target_to_cost->fprop(outputv&targetv, costsv);

}

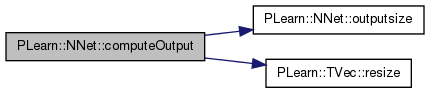

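| void PLearn::NNet::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

*** SUBCLASS WRITING: ***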

This should be defined in subclasses to compute the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 898 of file NNet.cc.

References input_to_output, operate_on_bags, outputsize(), PLERROR, and PLearn::TVec< T >::resize().

{

if (operate_on_bags)

PLERROR("In NNet::computeOutput - Cannot compute output without bag "

"information");

outputv.resize(outputsize());

input_to_output->fprop(inputv,outputv);

}

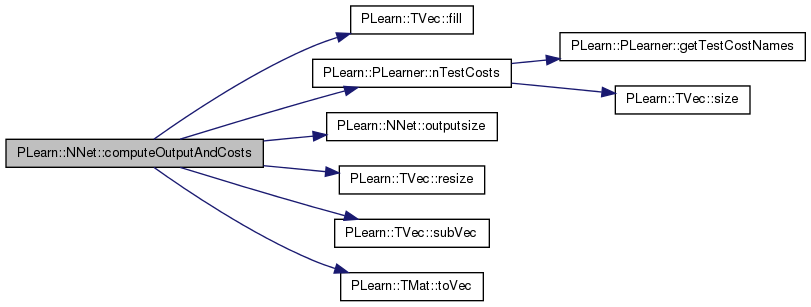

| void PLearn::NNet::computeOutputAndCosts | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | output, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Default calls computeOutput and computeCostsFromOutputs.

You may override this if you have a more efficient way to compute both output and weighted costs at the same time.

Reimplemented from PLearn::PLearner.

Definition at line 910 of file NNet.cc.

References PLearn::TVec< T >::fill(), MISSING_VALUE, PLearn::PLearner::nTestCosts(), operate_on_bags, outputsize(), PLearn::TVec< T >::resize(), store_bag_inputs, store_bag_size, PLearn::TVec< T >::subVec(), PLearn::SumOverBagsVariable::TARGET_COLUMN_FIRST, PLearn::SumOverBagsVariable::TARGET_COLUMN_LAST, test_costf, and PLearn::TMat< T >::toVec().

{

outputv.resize(outputsize());

costsv.resize(nTestCosts());

if (!operate_on_bags)

test_costf->fprop(inputv&targetv, outputv&costsv);

else {

// We can only compute the output once the whole bag has been seen.

int last_target_idx = targetv.length() - 1;

int bag_info = int(round(targetv[last_target_idx]));

if (bag_info & SumOverBagsVariable::TARGET_COLUMN_FIRST)

store_bag_size[0] = 0;

store_bag_inputs(int(round(store_bag_size[0]))) << inputv;

store_bag_size[0]++;

if (bag_info & SumOverBagsVariable::TARGET_COLUMN_LAST)

test_costf->fprop(store_bag_inputs.toVec()

& store_bag_size

& targetv.subVec(0, last_target_idx),

outputv & costsv);

else {

outputv.fill(MISSING_VALUE);

costsv.fill(MISSING_VALUE);

}

}

}
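The bag branch above buffers inputs until a whole bag has been seen. The following is a minimal, PLearn-independent sketch of that buffering pattern. It assumes the flag values 1 (first sample of a bag) and 2 (last sample of a bag); `BagBuffer`, `kFirst` and `kLast` are hypothetical names standing in for `store_bag_inputs`, `store_bag_size` and the `SumOverBagsVariable` constants.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Assumed flag values; the real ones are defined by SumOverBagsVariable.
const int kFirst = 1;  // the sample opens a bag
const int kLast  = 2;  // the sample closes a bag

// Buffers the inputs of the current bag until its last sample has been seen.
struct BagBuffer {
    std::vector<std::vector<double> > inputs;

    // Stores one sample and returns true once the bag is complete,
    // i.e. when output and costs could actually be computed.
    bool push(const std::vector<double>& input, double bag_column) {
        assert(!input.empty());
        const int bag_info = static_cast<int>(std::lround(bag_column));
        if (bag_info & kFirst)
            inputs.clear();           // a new bag starts: drop the previous one
        inputs.push_back(input);
        return (bag_info & kLast) != 0;
    }
};
```

Under these assumptions, a sample flagged 3 (both bits set) forms a bag of size one, so `push` returns true immediately. For every other sample of an unfinished bag, the real method fills `outputv` and `costsv` with MISSING_VALUE.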

| void PLearn::NNet::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares this class' options.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

Definition at line 141 of file NNet.cc.

References PLearn::OptionBase::advanced_level, batch_size, bias_decay, PLearn::OptionBase::buildoption, classification_regularizer, cost_funcs, PLearn::declareOption(), PLearn::PLearner::declareOptions(), direct_in_to_out, direct_in_to_out_weight_decay, do_not_change_params, first_class_is_junk, first_hidden_layer, first_hidden_layer_is_output, fixed_output_weights, hidden_transfer_func, initialization_method, input_reconstruction_penalty, L1_penalty, layer1_bias_decay, layer1_weight_decay, layer2_bias_decay, layer2_weight_decay, PLearn::OptionBase::learntoption, margin, max_bag_size, n_non_params_in_first_hidden_layer, nhidden, nhidden2, PLearn::OptionBase::nosave, noutputs, operate_on_bags, optimizer, outbias, output_layer_bias_decay, output_layer_weight_decay, output_transfer_func, paramsvalues, penalty_type, ratio_rank, rbf_layer_size, transpose_first_hidden_layer, w1, w2, wdirect, weight_decay, wout, and wrec.

Referenced by PLearn::SurfaceTemplateLearner::declareOptions(), and PLearn::AddLayersNNet::declareOptions().

{

declareOption(

ol, "nhidden", &NNet::nhidden, OptionBase::buildoption,

"Number of hidden units in first hidden layer (0 means no hidden layer)\n");

declareOption(

ol, "nhidden2", &NNet::nhidden2, OptionBase::buildoption,

"Number of hidden units in second hidden layer (0 means no hidden layer)\n");

declareOption(

ol, "noutputs", &NNet::noutputs, OptionBase::buildoption,

"Number of output units. This gives this learner its outputsize. It is\n"

"typically of the same dimensionality as the target for regression\n"

"problems. But for classification problems where target is just the\n"

"class number, noutputs is usually of dimensionality number of classes\n"

"(as we want to output a score or probability vector, one per class).\n"

"\n"

"The default value is 0, which is caught at build-time and gives an\n"

"error. If a value of -1 is put, noutputs is set from the targetsize of\n"

"the trainingset the first time setTrainingSet() is called on the\n"

"learner (appropriate for regression scenarios). This allows using the\n"

"learner as a 'template' without knowing in advance the number of\n"

"outputs it should have to handle. Future extensions will cover the\n"

"case of automatically discovering the outputsize for classification.\n");

declareOption(

ol, "weight_decay", &NNet::weight_decay, OptionBase::buildoption,

"Global weight decay for all layers\n");

declareOption(

ol, "bias_decay", &NNet::bias_decay, OptionBase::buildoption,

"Global bias decay for all layers\n");

declareOption(

ol, "layer1_weight_decay", &NNet::layer1_weight_decay, OptionBase::buildoption,

"Additional weight decay for the first hidden layer. Is added to weight_decay.\n");

declareOption(

ol, "layer1_bias_decay", &NNet::layer1_bias_decay, OptionBase::buildoption,

"Additional bias decay for the first hidden layer. Is added to bias_decay.\n");

declareOption(

ol, "layer2_weight_decay", &NNet::layer2_weight_decay, OptionBase::buildoption,

"Additional weight decay for the second hidden layer. Is added to weight_decay.\n");

declareOption(

ol, "layer2_bias_decay", &NNet::layer2_bias_decay, OptionBase::buildoption,

"Additional bias decay for the second hidden layer. Is added to bias_decay.\n");

declareOption(

ol, "output_layer_weight_decay", &NNet::output_layer_weight_decay, OptionBase::buildoption,

"Additional weight decay for the output layer. Is added to 'weight_decay'.\n");

declareOption(

ol, "output_layer_bias_decay", &NNet::output_layer_bias_decay, OptionBase::buildoption,

"Additional bias decay for the output layer. Is added to 'bias_decay'.\n");

declareOption(

ol, "direct_in_to_out_weight_decay", &NNet::direct_in_to_out_weight_decay, OptionBase::buildoption,

"Additional weight decay for the direct in-to-out layer. Is added to 'weight_decay'.\n");

declareOption(

ol, "penalty_type", &NNet::penalty_type,

OptionBase::buildoption,

"Penalty to use on the weights (for weight and bias decay).\n"

"Can be any of:\n"

" - \"L1\": L1 norm,\n"

" - \"L1_square\": square of the L1 norm,\n"

" - \"L2_square\" (default): square of the L2 norm.\n");

declareOption(

ol, "L1_penalty", &NNet::L1_penalty, OptionBase::buildoption,

"Deprecated - You should use \"penalty_type\" instead\n"

"should we use L1 penalty instead of the default L2 penalty on the weights?\n");

declareOption(

ol, "fixed_output_weights", &NNet::fixed_output_weights, OptionBase::buildoption,

"If true then the output weights are not learned. They are initialized to +1 or -1 randomly.\n");

declareOption(

ol, "input_reconstruction_penalty", &NNet::input_reconstruction_penalty, OptionBase::buildoption,

"If >0 then a set of weights will be added from a hidden layer to predict (reconstruct) the inputs\n"

"and the total loss will include an extra term that is the squared input reconstruction error,\n"

"multiplied by the input_reconstruction_penalty factor.\n");

declareOption(

ol, "direct_in_to_out", &NNet::direct_in_to_out, OptionBase::buildoption,

"should we include direct input to output connections?\n");

declareOption(

ol, "rbf_layer_size", &NNet::rbf_layer_size, OptionBase::buildoption,

"If non-zero, add an extra layer which computes N(h(x);mu_i,sigma_i) (Gaussian density) for the\n"

"i-th output unit with mu_i a free vector and sigma_i a free scalar, and h(x) the vector of\n"

"activations of the 'representation' output, i.e. what would be the output layer otherwise. The\n"

"given non-zero value is the number of these 'representation' outputs. Typically this\n"

"makes sense for classification problems, with a softmax output_transfer_func. If the\n"

"first_class_is_junk option is set then the first output (first class) does not get a\n"

"Gaussian density but just a 'pseudo-uniform' density (the single free parameter is the\n"

"value of that density) and in a softmax it makes sure that when h(x) is far from the\n"

"centers mu_i for all the other classes then the first class gets the strongest posterior probability.\n");

declareOption(

ol, "first_class_is_junk", &NNet::first_class_is_junk, OptionBase::buildoption,

"This option is used only when rbf_layer_size>0. If true then the first class is\n"

"treated differently and gets a pre-transfer-function value that is a learned constant, whereas\n"

"the others get a normal centered at mu_i.\n");

declareOption(

ol, "output_transfer_func", &NNet::output_transfer_func, OptionBase::buildoption,

"What transfer function to use for output layer? One of: \n"

" - \"tanh\" \n"

" - \"sigmoid\" \n"

" - \"exp\" \n"

" - \"softplus\" \n"

" - \"softmax\" \n"

" - \"log_softmax\" \n"

" - \"interval(<minval>,<maxval>)\", which stands for\n"

" <minval>+(<maxval>-<minval>)*sigmoid(.).\n"

"An empty string or \"none\" means no output transfer function \n");

declareOption(

ol, "hidden_transfer_func", &NNet::hidden_transfer_func, OptionBase::buildoption,

"What transfer function to use for hidden units? One of \n"

" - \"linear\" \n"

" - \"tanh\" \n"

" - \"sigmoid\" \n"

" - \"exp\" \n"

" - \"softplus\" \n"

" - \"softmax\" \n"

" - \"log_softmax\" \n"

" - \"hard_slope\" \n"

" - \"symm_hard_slope\" \n"

" - \"ratio\": e/(1+e) with e=sqrt(x'V'Vx + softplus(a)^2)\n"

" with a=b+W'x and V a matrix of rank 'ratio_rank'");

declareOption(

ol, "cost_funcs", &NNet::cost_funcs, OptionBase::buildoption,

"A list of cost functions to use\n"

"in the form \"[ cf1; cf2; cf3; ... ]\" where each function is one of: \n"

" - \"mse\" (for regression)\n"

" - \"mse_onehot\" (for classification)\n"

" - \"NLL\" (negative log likelihood -log(p[c]) for classification) \n"

" - \"class_error\" (classification error) \n"

" - \"binary_class_error\" (classification error for a 0-1 binary classifier)\n"

" - \"multiclass_error\" \n"

" - \"cross_entropy\" (for binary classification)\n"

" - \"stable_cross_entropy\" (more accurate backprop and possible regularization, for binary classification)\n"

" - \"margin_perceptron_cost\" (a hard version of the cross_entropy, uses the 'margin' option)\n"

" - \"lift_output\" (not a real cost function, just the output for lift computation)\n"

" - \"conf_rated_adaboost_cost\" (for Confidence-rated Adaboost)\n"

" - \"gradient_adaboost_cost\" (for MarginBoost, see \"Functional \n"

" Gradient Techniques for Combining \n"

" Hypotheses\" by Mason et al.)\n"

" - \"poisson_nll\"\n"

" - \"L1\"\n"

"The FIRST function of the list will be used as \n"

"the objective function to optimize \n"

"(possibly with an added weight decay penalty) \n");

declareOption(

ol, "classification_regularizer", &NNet::classification_regularizer, OptionBase::buildoption,

"Used only in the stable_cross_entropy cost function, to fight overfitting (0<=r<1)\n");

declareOption(

ol, "first_hidden_layer", &NNet::first_hidden_layer, OptionBase::buildoption,

"A user-specified NAry Var that computes the output of the first hidden layer\n"

"from the network input vector and a set of parameters. Its first argument should\n"

"be the network input and the remaining arguments the tunable parameters.\n",

OptionBase::advanced_level);

declareOption(

ol, "first_hidden_layer_is_output",

&NNet::first_hidden_layer_is_output, OptionBase::buildoption,

"If true and a 'first_hidden_layer' Var is provided, then this layer\n"

"will be considered as the NNet output before transfer function.",

OptionBase::advanced_level);

declareOption(

ol, "n_non_params_in_first_hidden_layer",

&NNet::n_non_params_in_first_hidden_layer,

OptionBase::buildoption,

"Number of elements in the 'varray' option of 'first_hidden_layer'\n"

"that are not updated parameters (assumed to be the last elements in\n"

"'varray').",

OptionBase::advanced_level);

declareOption(

ol, "transpose_first_hidden_layer",

&NNet::transpose_first_hidden_layer,

OptionBase::buildoption,

"If true and the 'first_hidden_layer' option is set, this layer will\n"

"be transposed, and the input variable given to this layer will also\n"

"be transposed.", OptionBase::advanced_level);

declareOption(

ol, "margin", &NNet::margin, OptionBase::buildoption,

"Margin requirement, used only with the margin_perceptron_cost cost function.\n"

"It should be positive, and larger values regularize more.\n");

declareOption(

ol, "do_not_change_params", &NNet::do_not_change_params, OptionBase::buildoption,

"If set to 1, the weights won't be loaded nor initialized at build time.");

declareOption(

ol, "optimizer", &NNet::optimizer, OptionBase::buildoption,

"Specify the optimizer to use\n");

declareOption(

ol, "batch_size", &NNet::batch_size, OptionBase::buildoption,

"How many samples to use to estimate the average gradient before updating the weights\n"

"0 is equivalent to specifying training_set->length() \n");

declareOption(

ol, "initialization_method", &NNet::initialization_method, OptionBase::buildoption,

"The method used to initialize the weights:\n"

" - \"normal_linear\" = a normal law with variance 1/n_inputs\n"

" - \"normal_sqrt\" = a normal law with variance 1/sqrt(n_inputs)\n"

" - \"uniform_linear\" = a uniform law in [-1/n_inputs, 1/n_inputs]\n"

" - \"uniform_sqrt\" = a uniform law in [-1/sqrt(n_inputs), 1/sqrt(n_inputs)]\n"

" - \"zero\" = all weights are set to 0\n");

declareOption(

ol, "operate_on_bags", &NNet::operate_on_bags, OptionBase::buildoption,

"If True, then samples are no longer considered as unique entities.\n"

"Instead, each sample belongs to a so-called 'bag', that may contain\n"

"1 or more samples. The last column of the target is assumed to\n"

"provide information about bags (see help of SumOverBagsVariable for\n"

"details on the coding of bags).\n"

"When operating on bags, each bag is considered a training sample.\n"

"The activations a_ci of output units c for each bag sample i are\n"

"combined within each bag, yielding bag activation a_c given by:\n"

" a_c = logadd(a_c1, ..., a_cn)\n"

"In particular, when using the 'softmax' output transfer function,\n"

"this corresponds to computing:\n"

" P(class = c | x_1, ..., x_i, ..., x_n) =\n"

" (\\sum_i exp(a_ci)) / \\sum_c,i exp(a_ci)\n"

"where a_ci is the activation of output node c for the i-th sample\n"

"x_i in the bag.",

OptionBase::advanced_level);

declareOption(

ol, "max_bag_size", &NNet::max_bag_size, OptionBase::buildoption,

"Maximum number of samples in a bag (used with 'operate_on_bags').",

OptionBase::advanced_level);

declareOption(

ol, "ratio_rank", &NNet::ratio_rank, OptionBase::buildoption,

"Rank of matrix V when using the 'ratio' hidden transfer function.\n"

"Use -1 for full rank, and 0 to have no quadratic term.",

OptionBase::advanced_level);

// Learnt options.

declareOption(

ol, "paramsvalues", &NNet::paramsvalues, OptionBase::learntoption,

"The learned parameter vector\n");

// Introspective options. The following are direct views on the individual

// parameters of the NNet. They are marked 'nosave' since they overlap

// with paramsvalues, but are useful for inspecting the NNet structure from

// a Python program.

declareOption(

ol, "w1", &NNet::w1,

OptionBase::learntoption | OptionBase::nosave,

"(Introspection option) bias and weights of first hidden layer");

declareOption(

ol, "w2", &NNet::w2,

OptionBase::learntoption | OptionBase::nosave,

"(Introspection option) bias and weights of second hidden layer");

declareOption(

ol, "wout", &NNet::wout,

OptionBase::learntoption | OptionBase::nosave,

"(Introspection option) bias and weights of output layer");

declareOption(

ol, "outbias", &NNet::outbias,

OptionBase::learntoption | OptionBase::nosave,

"(Introspection option) bias used only if fixed_output_weights");

declareOption(

ol, "wdirect", &NNet::wdirect,

OptionBase::learntoption | OptionBase::nosave,

"(Introspection option) bias and weights for direct in-to-out connection");

declareOption(

ol, "wrec", &NNet::wrec,

OptionBase::learntoption | OptionBase::nosave,

"(Introspection option) input reconstruction weights (optional), from hidden layer to predicted input");

inherited::declareOptions(ol);

}
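The help text of 'operate_on_bags' states that bag activations are combined with logadd and that, with a softmax output, this yields P(class = c | bag) = (sum_i exp(a_ci)) / (sum_c,i exp(a_ci)). The sketch below checks that identity numerically on plain vectors. It is not PLearn code: `logadd` and `bagPosterior` are hypothetical helpers written for this illustration, and `activations[c][i]` is assumed to hold a_ci.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable log(sum_i exp(a_i)).
double logadd(const std::vector<double>& a) {
    assert(!a.empty());
    const double m = *std::max_element(a.begin(), a.end());
    double s = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i)
        s += std::exp(a[i] - m);
    return m + std::log(s);
}

// activations[c][i] is a_ci: output unit c, i-th sample of the bag.
// Returns the softmax over classes of the bag activations a_c = logadd_i(a_ci).
std::vector<double> bagPosterior(
        const std::vector<std::vector<double> >& activations) {
    std::vector<double> bag_act;
    for (std::size_t c = 0; c < activations.size(); ++c)
        bag_act.push_back(logadd(activations[c]));
    const double log_z = logadd(bag_act);   // log of sum over c and i of exp(a_ci)
    std::vector<double> p;
    for (std::size_t c = 0; c < bag_act.size(); ++c)
        p.push_back(std::exp(bag_act[c] - log_z));
    return p;
}
```

Working in the log domain avoids overflow when some activations are large, which is presumably why the combination is expressed with logadd and not as a direct sum of exponentials.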

| static const PPath& PLearn::NNet::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

Definition at line 201 of file NNet.h.

{return w1->matValue;}

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

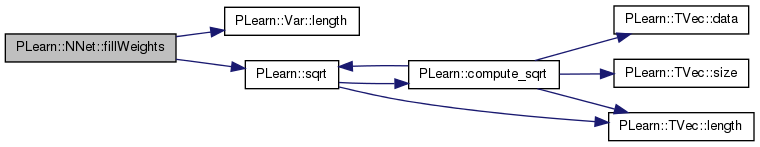

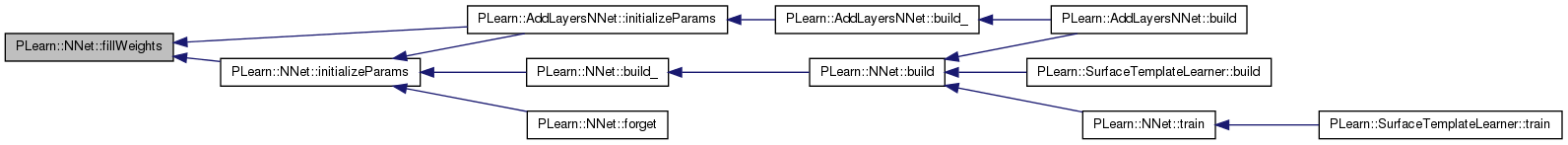

Fill a matrix of weights according to the 'initialization_method' specified.

The 'clear_first_row' boolean indicates whether we should fill the first row with zeros.

Definition at line 940 of file NNet.cc.

References initialization_method, PLearn::Var::length(), PLearn::PLearner::random_gen, and PLearn::sqrt().

Referenced by PLearn::AddLayersNNet::initializeParams(), and initializeParams().

{

if (!weights)

return;

if (initialization_method == "zero")

{

weights->value->clear();

return;

}

real delta;

int is = weights.length();

if (clear_first_row)

is--; // -1 to get the same result as before.

if (initialization_method.find("linear") != string::npos)

delta = 1.0 / real(is);

else

delta = 1.0 / sqrt(real(is));

if (initialization_method.find("normal") != string::npos)

random_gen->fill_random_normal(weights->value, 0, delta);

else

random_gen->fill_random_uniform(weights->value, -delta, delta);

if (clear_first_row)

weights->matValue(0).clear();

}
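The scale `delta` computed above depends only on the method name and on the number of rows of the weight matrix. A small standalone sketch of that computation follows; `initScale` is a hypothetical helper, and the random draws themselves (done through `random_gen` in the real code) are deliberately left out.

```cpp
#include <cassert>
#include <cmath>
#include <string>

// Scale used to draw the initial weights, mirroring the branches above.
// n_rows is the number of rows of the weight matrix; when the first row is
// cleared afterwards, it is not counted.
double initScale(const std::string& method, int n_rows, bool clear_first_row) {
    const int is = clear_first_row ? n_rows - 1 : n_rows;
    assert(is > 0);
    if (method.find("linear") != std::string::npos)
        return 1.0 / is;                                  // "*_linear" methods
    return 1.0 / std::sqrt(static_cast<double>(is));      // "*_sqrt" methods
}
```

For instance, an 11-row matrix whose first row is cleared gives a scale of 1/10 with "normal_linear", while a 16-row matrix with "uniform_sqrt" and no cleared row gives 1/4.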

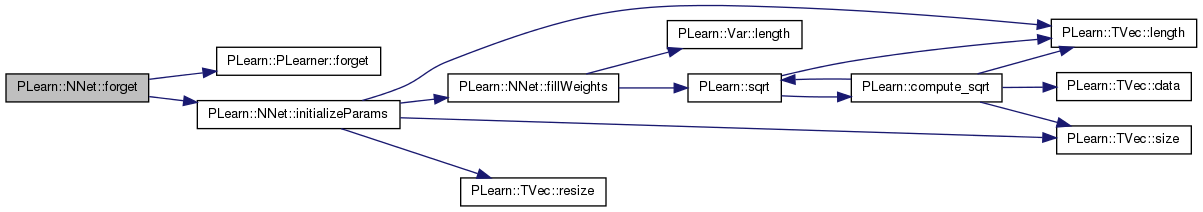

| void PLearn::NNet::forget | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!)

A typical forget() method calls inherited::forget(), re-initializes the learner's parameters, and sets 'stage' back to 0, as the implementation below does.

This method is typically called by build_() once it has finished setting up the parameters, if it deems it useful to set or reset the learner to its fresh state (remember that build may be called after modifying options that do not necessarily require the learner to restart from a fresh state). forget() is also called by setTrainingSet() after it calls build(), so it will generally be called TWICE during setTrainingSet().

Reimplemented from PLearn::PLearner.

Definition at line 969 of file NNet.cc.

References PLearn::PLearner::forget(), initializeParams(), n_training_bags, optimizer, PLearn::PLearner::stage, and PLearn::PLearner::train_set.

{

inherited::forget();

if (train_set) initializeParams();

if(optimizer)

optimizer->reset();

stage = 0;

n_training_bags = -1;

}

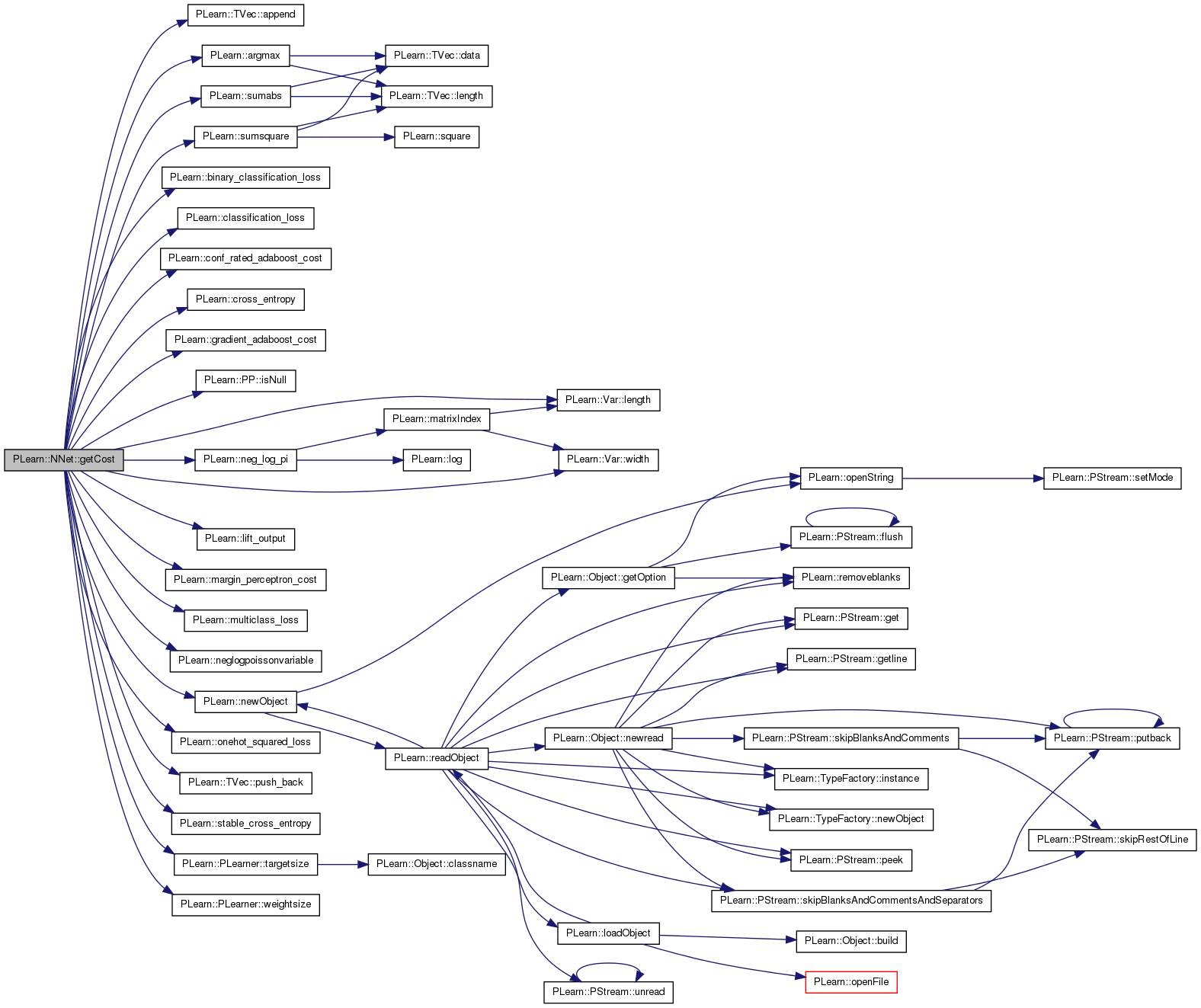

| Var PLearn::NNet::getCost | ( | const string & | costname, |

| const Var & | output, | ||

| const Var & | target, | ||

| const Var & | before_transfer_func | ||

| ) | [protected, virtual] |

Return the cost corresponding to the given cost name.

This method is virtual so that subclasses can implement their own custom costs.

Definition at line 982 of file NNet.cc.

References alpha_adaboost, PLearn::TVec< T >::append(), PLearn::argmax(), PLearn::binary_classification_loss(), c, PLearn::classification_loss(), classification_regularizer, PLearn::conf_rated_adaboost_cost(), PLearn::cross_entropy(), PLearn::gradient_adaboost_cost(), PLearn::PP< T >::isNull(), PLearn::Var::length(), PLearn::lift_output(), margin, PLearn::margin_perceptron_cost(), PLearn::multiclass_loss(), PLearn::neg_log_pi(), PLearn::neglogpoissonvariable(), PLearn::newObject(), PLearn::onehot_squared_loss(), output_transfer_func, params, PLASSERT, PLERROR, PLWARNING, PLearn::TVec< T >::push_back(), sampleweight, PLearn::stable_cross_entropy(), PLearn::sumabs(), PLearn::sumsquare(), PLearn::PLearner::targetsize(), PLearn::PLearner::weightsize(), and PLearn::Var::width().

Referenced by buildCosts().

{

// We don't need to take into account the sampleweight, because it is

// taken care of in stats->update.

if (costname=="mse") {

// The following assert may be useful since 'operator-' on variables

// can be used to do subtractions on Variables of different sizes,

// which should not be the case in a NNet.

PLASSERT( the_output->length() == the_target->length() &&

the_output->width() == the_target->width() );

return sumsquare(the_output - the_target);

} else if (costname=="mse_onehot")

return onehot_squared_loss(the_output, the_target);

else if (costname=="NLL")

{

if (the_output->width() == 1) {

// Assume sigmoid output here!

return stable_cross_entropy(before_transfer_func, the_target);

} else {

if (output_transfer_func == "log_softmax")

return -the_output[the_target];

else

return neg_log_pi(the_output, the_target);

}

}

else if (costname=="class_error")

{

if (the_output->width()==1)

return binary_classification_loss(the_output, the_target);

else {

Var targ = the_target;

if (targetsize() > 1)

// One-hot encoding of target: we need to convert it to an

// index in order to be able to use 'classification_loss'.

targ = argmax(the_target);

return classification_loss(the_output, targ);

}

}

else if (costname=="binary_class_error")

return binary_classification_loss(the_output, the_target);

else if (costname=="multiclass_error")

return multiclass_loss(the_output, the_target);

else if (costname=="cross_entropy")

return cross_entropy(the_output, the_target);

else if(costname=="conf_rated_adaboost_cost")

{

if(output_transfer_func != "sigmoid")

PLWARNING("In NNet::getCost - conf_rated_adaboost_cost expects an output in (0,1)");

alpha_adaboost = Var(1,1); alpha_adaboost->value[0] = 1.0;

params.append(alpha_adaboost);

return conf_rated_adaboost_cost(the_output, the_target, alpha_adaboost);

}

else if (costname=="gradient_adaboost_cost")

{

if(output_transfer_func != "sigmoid")

PLWARNING("In NNet::getCost - gradient_adaboost_cost expects an output in (0,1)");

return gradient_adaboost_cost(the_output, the_target);

}

else if (costname=="stable_cross_entropy") {

Var c = stable_cross_entropy(before_transfer_func, the_target);

PLASSERT( classification_regularizer >= 0 );

if (classification_regularizer > 0) {

// There is a regularizer to add to the cost function.

dynamic_cast<NegCrossEntropySigmoidVariable*>((Variable*) c)->

setRegularizer(classification_regularizer);

}

return c;

}

else if (costname=="margin_perceptron_cost")

return margin_perceptron_cost(the_output,the_target,margin);

else if (costname=="lift_output")

return lift_output(the_output, the_target);

else if (costname=="poisson_nll") {

VarArray the_varray(the_output, the_target);

if (weightsize()>0) {

PLERROR("In NNet::getCost - The weight is used, is this really "

"intended? (see comment in code at the top of this "

"method)");

the_varray.push_back(sampleweight);

}

return neglogpoissonvariable(the_varray);

}

else if (costname == "L1")

return sumabs(the_output - the_target);

else {

// Assume we got a Variable name and its options

Var cost = dynamic_cast<Variable*>(newObject(costname));

if(cost.isNull())

PLERROR("In NNet::getCost - unknown cost name: %s",

costname.c_str());

cost->setParents(the_output & the_target);

cost->build();

return cost;

}

}
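To make the name-based dispatch concrete, here is a scalar sketch of two of the branches above, "mse" and "L1", on plain vectors. It assumes that `sumsquare` and `sumabs` are plain sums over the elements (no averaging); `simpleCost` is a hypothetical function, not part of PLearn.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// "mse" -> sum of squared differences, "L1" -> sum of absolute differences.
// Any other name is rejected, much as the final branch above reports an error.
double simpleCost(const std::string& costname,
                  const std::vector<double>& output,
                  const std::vector<double>& target) {
    const bool is_mse = (costname == "mse");
    if (!is_mse && costname != "L1")
        throw std::invalid_argument("unknown cost name: " + costname);
    // Same size check as the PLASSERT in the "mse" branch.
    assert(output.size() == target.size());
    double total = 0.0;
    for (std::size_t i = 0; i < output.size(); ++i) {
        const double d = output[i] - target[i];
        total += is_mse ? d * d : std::fabs(d);
    }
    return total;
}
```

With output (1, 2) and target (0, 0), this gives 5 for "mse" and 3 for "L1".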

| OptionList & PLearn::NNet::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

| OptionMap & PLearn::NNet::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

| RemoteMethodMap & PLearn::NNet::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

| TVec< string > PLearn::NNet::getTestCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the costs computed by computeCostsFromOutputs.

Implements PLearn::PLearner.

Definition at line 1095 of file NNet.cc.

References cost_funcs.

{

return cost_funcs;

}

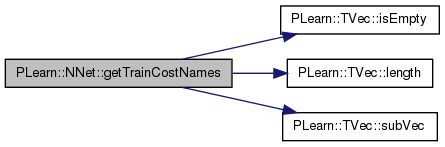

| TVec< string > PLearn::NNet::getTrainCostNames | ( | ) | const [virtual] |

*** SUBCLASS WRITING: ***

This should return the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 1082 of file NNet.cc.

References cost_funcs, PLearn::TVec< T >::isEmpty(), PLearn::TVec< T >::length(), PLASSERT, and PLearn::TVec< T >::subVec().

{

PLASSERT( !cost_funcs.isEmpty() );

int n_costs = cost_funcs.length();

TVec<string> train_costs(n_costs + 1);

train_costs[0] = cost_funcs[0] + "+penalty";

train_costs.subVec(1, n_costs) << cost_funcs;

return train_costs;

}
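The naming rule implemented above (the first cost with a "+penalty" suffix, followed by every cost in `cost_funcs`) can be sketched with standard containers as follows. `trainCostNames` is a hypothetical free function using `std::vector` where the original uses `TVec<string>`.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Builds the training cost names from the configured cost functions.
std::vector<std::string> trainCostNames(
        const std::vector<std::string>& cost_funcs) {
    assert(!cost_funcs.empty());
    std::vector<std::string> names;
    names.push_back(cost_funcs[0] + "+penalty");   // the optimized objective
    names.insert(names.end(), cost_funcs.begin(), cost_funcs.end());
    return names;
}
```

With `cost_funcs` set to NLL and class_error, the result is NLL+penalty, NLL, class_error, so the training costs always contain one more entry than the test costs.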

| virtual Mat PLearn::NNet::getW1 | ( | ) | [inline, virtual] |

| virtual Mat PLearn::NNet::getW2 | ( | ) | [inline, virtual] |

| virtual Mat PLearn::NNet::getWdirect | ( | ) | [inline, virtual] |

| virtual Mat PLearn::NNet::getWout | ( | ) | [inline, virtual] |

| Var PLearn::NNet::hiddenLayer | ( | const Var & | input, |

| const Var & | weights, | ||

| string | transfer_func = "default", |

||

| VarArray * | ratio_quad_weights = NULL |

||

| ) | [protected] |

Return a variable that is the hidden layer corresponding to given input and weights.

If the 'default' transfer_func is used, we use the hidden_transfer_func option.

Definition at line 1103 of file NNet.cc.

References PLearn::affine_transform(), PLearn::exp(), hidden_transfer_func, i, PLearn::TVec< T >::length(), PLearn::log_softmax(), PLASSERT, PLERROR, ratio_rank, PLearn::sigmoid(), PLearn::softmax(), PLearn::softplus(), PLearn::tanh(), and PLearn::unary_hard_slope().

Referenced by PLearn::AddLayersNNet::build_(), and buildOutputFromInput().

{

Var hidden = affine_transform(input, weights, true);

hidden->setName("hidden_layer_activations");

Var result;

if (transfer_func == "default")

transfer_func = hidden_transfer_func;

if(transfer_func=="linear")

result = hidden;

else if(transfer_func=="tanh")

result = tanh(hidden);

else if(transfer_func=="sigmoid")

result = sigmoid(hidden);

else if(transfer_func=="softplus")

result = softplus(hidden);

else if(transfer_func=="exp")

result = exp(hidden);

else if(transfer_func=="softmax")

result = softmax(hidden);

else if (transfer_func == "log_softmax")

result = log_softmax(hidden);

else if(transfer_func=="hard_slope")

result = unary_hard_slope(hidden,0,1);

else if(transfer_func=="symm_hard_slope")

result = unary_hard_slope(hidden,-1,1);

else if (transfer_func == "ratio") {

PLASSERT( ratio_quad_weights );

Var softp = new SoftplusVariable(hidden);

Var before_ratio = softp;

if (ratio_rank != 0) {

// Compute quadratic term.

VarArray quad_terms(ratio_quad_weights->length());

for (int i = 0; i < ratio_quad_weights->length(); i++) {

quad_terms[i] = new SquareVariable(

new ProductVariable(input, (*ratio_quad_weights)[i]));

}

Var sum_quad_terms = new PlusManyVariable(quad_terms);

// Add the softplus term.

Var softp_square = new SquareVariable(softp);

Var total = new PlusVariable(sum_quad_terms, softp_square);

// Take the square root.

before_ratio = new SquareRootVariable(total);

}

// Perform ratio.

result = new DivVariable(before_ratio,

new PlusConstantVariable(before_ratio, 1.0));

}

else

PLERROR("In NNet::hiddenLayer - Unknown value for transfer_func: %s",transfer_func.c_str());

return result;

}
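The "ratio" branch above builds e/(1+e) with e = sqrt(x'V'Vx + softplus(a)^2), or e = softplus(a) when `ratio_rank` is 0. The sketch below evaluates the same formula for a single hidden unit on plain vectors. It is an illustration only: `ratioUnit` and the row-wise layout of `V` are assumptions, whereas the real code operates on Variables and on all hidden units at once.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// softplus(a) = log(1 + exp(a)); not hardened against very large a.
double softplus(double a) { return std::log1p(std::exp(a)); }

// x: input vector; V: one row per rank of the quadratic term (empty means
// ratio_rank == 0); a: the affine activation of the hidden unit.
double ratioUnit(const std::vector<double>& x,
                 const std::vector<std::vector<double> >& V,
                 double a) {
    const double sp = softplus(a);
    double e = sp;                              // no quadratic term
    if (!V.empty()) {
        double quad = 0.0;                      // x'V'Vx = sum_k (V_k . x)^2
        for (std::size_t k = 0; k < V.size(); ++k) {
            assert(V[k].size() == x.size());
            double dot = 0.0;
            for (std::size_t j = 0; j < x.size(); ++j)
                dot += V[k][j] * x[j];
            quad += dot * dot;
        }
        e = std::sqrt(quad + sp * sp);
    }
    return e / (1.0 + e);                       // always in (0, 1)
}
```

Since e is strictly positive, the result always lies strictly between 0 and 1, which makes this transfer function behave like a bounded, sigmoid-like squashing of the activation.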

| void PLearn::NNet::initializeParams | ( | bool | set_seed = true | ) | [protected, virtual] |

Initialize the parameters.

If 'set_seed' is set to false, the seed will not be set in this method (it will be assumed to be already initialized according to the 'seed' option).

Reimplemented in PLearn::AddLayersNNet.

Definition at line 1158 of file NNet.cc.

References direct_in_to_out, fillWeights(), first_hidden_layer, fixed_output_weights, i, PLearn::TVec< T >::length(), nhidden, nhidden2, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), PLearn::PLearner::seed_, PLearn::TVec< T >::size(), v1, v2, w1, w2, wdirect, and wout.

Referenced by build_(), forget(), and PLearn::AddLayersNNet::initializeParams().

{

if (set_seed && seed_ != 0)

random_gen->manual_seed(seed_);

if (nhidden>0) {

if (!first_hidden_layer) {

fillWeights(w1, true);

for (int i = 0; i < v1.length(); i++)

fillWeights(v1[i], true);

}

if (direct_in_to_out)

fillWeights(wdirect, false);

}

if(nhidden2>0) {

fillWeights(w2, true);

for (int i = 0; i < v2.length(); i++)

fillWeights(v2[i], true);

}

if (fixed_output_weights) {

static Vec values;

if (values.size()==0)

{

values.resize(2);

values[0]=-1;

values[1]=1;

}

random_gen->fill_random_discrete(wout->value, values);

wout->matValue(0).clear();

}

else {

fillWeights(wout, true);

}

}
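The `fixed_output_weights` branch above draws every output weight from {-1, +1} and then clears the first row. A standalone sketch of that step is shown below. It substitutes `std::mt19937` for PLearn's `random_gen` and assumes both values are equally likely; `fixedOutputWeights` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Fills a rows x cols matrix with +1 / -1, then zeroes row 0,
// mirroring the fixed_output_weights branch above.
std::vector<std::vector<double> > fixedOutputWeights(std::size_t rows,
                                                     std::size_t cols,
                                                     unsigned seed) {
    assert(rows > 0);
    std::mt19937 gen(seed);                  // stand-in for random_gen
    std::bernoulli_distribution coin(0.5);   // assumed: equal probabilities
    std::vector<std::vector<double> > w(rows, std::vector<double>(cols));
    for (std::size_t r = 0; r < rows; ++r)
        for (std::size_t c = 0; c < cols; ++c)
            w[r][c] = coin(gen) ? 1.0 : -1.0;
    for (std::size_t c = 0; c < cols; ++c)
        w[0][c] = 0.0;                       // same effect as matValue(0).clear()
    return w;
}
```

Seeding the generator explicitly plays the role of the `manual_seed(seed_)` call at the top of the method: the same seed reproduces the same weights.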

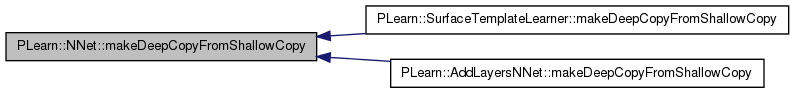

| void PLearn::NNet::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

Definition at line 1208 of file NNet.cc.

References alpha_adaboost, bag_inputs, bag_size, cost_funcs, costs, PLearn::deepCopyField(), first_hidden_layer, hidden_layer, input, input_to_output, invars, junk_prob, PLearn::PLearner::makeDeepCopyFromShallowCopy(), optimizer, outbias, output, output_and_target_to_cost, params, paramsvalues, penalties, predicted_input, rbf_centers, rbf_sigmas, sampleweight, store_bag_inputs, store_bag_size, target, test_costf, test_costs, training_cost, v1, v2, PLearn::varDeepCopyField(), w1, w2, wdirect, wout, and wrec.

Referenced by PLearn::SurfaceTemplateLearner::makeDeepCopyFromShallowCopy(), and PLearn::AddLayersNNet::makeDeepCopyFromShallowCopy().

{

inherited::makeDeepCopyFromShallowCopy(copies);

// protected:

varDeepCopyField(rbf_centers, copies);

varDeepCopyField(rbf_sigmas, copies);

varDeepCopyField(junk_prob, copies);

varDeepCopyField(alpha_adaboost,copies);

varDeepCopyField(output, copies);

varDeepCopyField(predicted_input, copies);

deepCopyField(costs, copies);

deepCopyField(penalties, copies);

varDeepCopyField(training_cost, copies);

varDeepCopyField(test_costs, copies);

deepCopyField(invars, copies);

deepCopyField(params, copies);

varDeepCopyField(bag_inputs, copies);

deepCopyField(store_bag_inputs, copies);

varDeepCopyField(bag_size, copies);

deepCopyField(store_bag_size, copies);

// public:

deepCopyField(paramsvalues, copies);

varDeepCopyField(input, copies);

varDeepCopyField(target, copies);

varDeepCopyField(sampleweight, copies);

varDeepCopyField(w1, copies);

varDeepCopyField(w2, copies);

deepCopyField(v1, copies);

deepCopyField(v2, copies);

varDeepCopyField(wout, copies);

varDeepCopyField(outbias, copies);

varDeepCopyField(wdirect, copies);

varDeepCopyField(wrec, copies);

varDeepCopyField(hidden_layer, copies);

deepCopyField(input_to_output, copies);

deepCopyField(test_costf, copies);

deepCopyField(output_and_target_to_cost, copies);

varDeepCopyField(first_hidden_layer, copies);

deepCopyField(cost_funcs, copies);

deepCopyField(optimizer, copies);

}

| int PLearn::NNet::outputsize | ( | ) | const [virtual] |

SUBCLASS WRITING: override this so that it returns the size of this learner's output, as a function of its inputsize(), targetsize() and set options.

Implements PLearn::PLearner.

Definition at line 1256 of file NNet.cc.

References noutputs.

Referenced by buildOutputFromInput(), computeCostsFromOutputs(), computeOutput(), and computeOutputAndCosts().

{

return noutputs;

}

| void PLearn::NNet::setTrainingSet | ( | VMat | training_set, |

| bool | call_forget = true | ||

| ) | [virtual] |

Overridden to support the case where noutputs==-1, in which case noutputs is set automatically from the targetsize of the training set (correct for the regression case; should be extended to cover classification scenarios in the future as well).

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::SurfaceTemplateLearner.

Definition at line 556 of file NNet.cc.

References n_training_bags, noutputs, PLASSERT, and PLearn::PLearner::setTrainingSet().

Referenced by PLearn::SurfaceTemplateLearner::setTrainingSet().

{

PLASSERT( training_set );

// Automatically set noutputs from targetsize if not already set

if (noutputs < 0)

noutputs = training_set->targetsize();

inherited::setTrainingSet(training_set, call_forget);

//cout << "name = " << name << endl << "targetsize = " << targetsize_ << endl << "weightsize = " << weightsize_ << endl;

// Since the training set probably changed, it is safer to reset

// 'n_training_bags', just in case.

n_training_bags = -1;

}
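The effect of the method above on `noutputs` can be summarized with a toy model. `LearnerSketch` is a hypothetical struct that keeps only the two fields touched here; it does not call into PLearn, and the target size is passed directly where the real code reads it from the training set.

```cpp
#include <cassert>

// Hypothetical stand-in for the learner state touched by setTrainingSet().
struct LearnerSketch {
    int noutputs;
    int n_training_bags;

    explicit LearnerSketch(int n) : noutputs(n), n_training_bags(0) {}

    void setTrainingSet(int targetsize) {
        // Sketch-only precondition: a usable target size is assumed.
        assert(targetsize > 0);
        if (noutputs < 0)
            noutputs = targetsize;   // resolve the "-1 means automatic" setting
        n_training_bags = -1;        // force a recount of bags on the next train()
    }

    int outputsize() const { return noutputs; }
};
```

A learner built with `noutputs` set to -1 thus takes its output size from the first training set it receives, while an explicit value is left untouched.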

| void PLearn::NNet::train | ( | ) | [virtual] |

*** SUBCLASS WRITING: ***

The role of the train method is to bring the learner up to stage==nstages, updating the stats with training costs measured on-line in the process.

TYPICAL CODE:

static Vec input; // static so we don't reallocate/deallocate memory each time...

static Vec target; // (but be careful that static means shared!)

input.resize(inputsize()); // the train_set's inputsize()

target.resize(targetsize()); // the train_set's targetsize()

real weight;

if(!train_stats) // make a default stats collector, in case there's none

train_stats = new VecStatsCollector();

if(nstages<stage) // asking to revert to a previous stage!

forget(); // reset the learner to stage=0

while(stage<nstages)

{

// clear statistics of previous epoch

train_stats->forget();

//... train for 1 stage, and update train_stats,

// using train_set->getSample(input, target, weight);

// and train_stats->update(train_costs)

++stage;

train_stats->finalize(); // finalize statistics for this epoch

}

Implements PLearn::PLearner.

Reimplemented in PLearn::SurfaceTemplateLearner.

Definition at line 1263 of file NNet.cc.

References PLearn::TVec< T >::append(), batch_size, build(), classname(), PLearn::endl(), PLearn::PLearner::expdir, PLearn::VMat::getExample(), i, input, input_to_output, invars, PLearn::PPath::isEmpty(), PLearn::isfile(), PLearn::PP< T >::isNull(), PLearn::TVec< T >::lastElement(), PLearn::VMat::length(), max_bag_size, PLearn::meanOf(), n_training_bags, PLearn::PLearner::nstages, operate_on_bags, optimizer, output_and_target_to_cost, params, PLERROR, PLearn::pout, PLearn::PLearner::report_progress, PLearn::PLearner::setTrainStatsCollector(), PLearn::PLearner::stage, PLearn::sumOverBags(), target, PLearn::SumOverBagsVariable::TARGET_COLUMN_FIRST, test_costf, PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, training_cost, and PLearn::PLearner::verbosity.

Referenced by PLearn::SurfaceTemplateLearner::train().

    {
        // NNet nstages is number of epochs (whole passages through the training set)
        // while optimizer nstages is number of weight updates.
        // So relationship between the 2 depends on whether we are in stochastic,
        // batch or minibatch mode.

        if(!train_set)
            PLERROR("In NNet::train - No training set available");

        if (operate_on_bags && n_training_bags < 0) {
            // Compute the number of bags in the training set.
            int n_train = train_set->length();
            PP<ProgressBar> pb =
                report_progress ? new ProgressBar("Counting bags", n_train)
                                : NULL;
            Vec input, target;
            real weight;
            n_training_bags = 0;
            for (int i = 0; i < n_train; i++) {
                train_set->getExample(i, input, target, weight);
                if (int(round(target.lastElement()))
                        & SumOverBagsVariable::TARGET_COLUMN_FIRST)
                    n_training_bags++;
                if (pb)
                    pb->update(i);
            }
        }

        if(!train_stats)
            setTrainStatsCollector(new VecStatsCollector());
        // PLERROR("In NNet::train, you did not setTrainStatsCollector");

        int n_train = operate_on_bags ? n_training_bags
                                      : train_set->length();

        if(input_to_output.isNull())
        {
            // Net has not been properly built yet (because build was called before the learner had a proper training set)
            build();
            if (input_to_output.isNull())
                PLERROR(
                    "NNet::build was not able to properly build the network.\n"
                    "Please check that your variables have an appropriate value,\n"
                    "that your training set is correctly defined, that its sizes\n"
                    "are consistent, that its targetsize is not -1...");
        }

        // number of samples seen by optimizer before each optimizer update
        int nsamples = batch_size>0 ? batch_size : n_train;
        Func paramf = Func(invars, training_cost); // parameterized function to optimize
        Var totalcost =
            operate_on_bags ? sumOverBags(train_set, paramf, max_bag_size,
                                          nsamples, true)
                            : meanOf(train_set, paramf, nsamples);

        if(optimizer)
        {
            optimizer->setToOptimize(params, totalcost);
            optimizer->build();
        }
        else PLERROR("NNet::train can't train without setting an optimizer first!");

        // number of optimizer stages corresponding to one learner stage (one epoch)
        int optstage_per_lstage = n_train / nsamples;

        PP<ProgressBar> pb;
        if(report_progress)
            pb = new ProgressBar("Training " + classname() + " from stage " + tostring(stage) + " to " + tostring(nstages), nstages-stage);

        // Open/create vmat to save train costs at each epoch.
        VMat costs_per_epoch= 0;
        if(!expdir.isEmpty())
        {
            PPath cpe_path= expdir / "NNet_train_costs.pmat";
            if(isfile(cpe_path))
                costs_per_epoch= new FileVMatrix(cpe_path, true);
            else
            {
                TVec<string> fieldnames(1, "epoch");
                fieldnames.append(train_stats->getFieldNames());
                costs_per_epoch= new FileVMatrix(cpe_path, 0, fieldnames);
            }
        }

        int initial_stage = stage;
        bool early_stop=false;
        while(stage<nstages && !early_stop)
        {
            optimizer->nstages = optstage_per_lstage;
            train_stats->forget();
            optimizer->early_stop = false;
            early_stop = optimizer->optimizeN(*train_stats);
            // optimizer->verifyGradient(1e-6); // Uncomment if you want to check your new Var.
            train_stats->finalize();
            if(verbosity>2)
                pout << "Epoch " << stage << " train objective: " << train_stats->getMean() << endl;
            if(costs_per_epoch)
            {
                Vec v(1, stage);
                v.append(train_stats->getMean());
                costs_per_epoch->appendRow(v);
            }
            ++stage;
            if(pb)
                pb->update(stage-initial_stage);
        }

        if(verbosity>1)
            pout << "EPOCH " << stage << " train objective: " << train_stats->getMean() << endl;

        output_and_target_to_cost->recomputeParents();
        test_costf->recomputeParents();
        // cerr << "totalcost->value = " << totalcost->value << endl;
        // cout << "Result for benchmark is: " << totalcost->value << endl;
    }
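The relationship between learner epochs and optimizer updates in the listing comes down to two integer expressions, `nsamples` and `optstage_per_lstage`. The sketch below reproduces just that arithmetic; the function names `samplesPerUpdate` and `updatesPerEpoch` are hypothetical and do not exist in PLearn.

```cpp
#include <cassert>

// Samples consumed per optimizer update, mirroring 'nsamples' in the listing:
// a positive batch_size selects stochastic (1) or minibatch mode, anything
// else means full batch mode over all n_train examples.
inline int samplesPerUpdate(int batch_size, int n_train) {
    return batch_size > 0 ? batch_size : n_train;
}

// Optimizer updates per learner epoch, mirroring 'optstage_per_lstage'.
// Integer division truncates, exactly as in the listing.
inline int updatesPerEpoch(int batch_size, int n_train) {
    assert(n_train > 0);
    return n_train / samplesPerUpdate(batch_size, n_train);
}
```

With 1000 training examples, a batch_size of 1 gives 1000 updates per epoch, 32 gives 31 (the division truncates), and 0 gives a single full-batch update. A batch_size larger than the training set yields 0 under this arithmetic, which may be worth guarding against when choosing options.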

Reimplemented from PLearn::PLearner.

Reimplemented in PLearn::AddLayersNNet, and PLearn::SurfaceTemplateLearner.

| Var PLearn::NNet::alpha_adaboost [protected] |

Definition at line 63 of file NNet.h.

Referenced by getCost(), and makeDeepCopyFromShallowCopy().

| Var PLearn::NNet::bag_inputs [protected] |

Used to store the inputs in a bag when 'operate_on_bags' is true.

Definition at line 74 of file NNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().

| Var PLearn::NNet::bag_size [protected] |

Used to store the size of a bag when 'operate_on_bags' is true.

Definition at line 80 of file NNet.h.

Referenced by build_(), and makeDeepCopyFromShallowCopy().
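When 'operate_on_bags' is true, train() counts bags by testing a bit flag stored in the last target column of each example. The sketch below illustrates that test on plain numbers. It assumes the "first row of a bag" flag is bit 1 and that 2 marks a last row, so 3 marks a single-row bag; the listing only names SumOverBagsVariable::TARGET_COLUMN_FIRST, so these values and the name `countBags` are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Assumed value of the "first row of a bag" flag (not confirmed by the listing).
const int kTargetColumnFirst = 1;

// Counts bags given the last target column of every example, in order.
// A new bag starts wherever the rounded signal has the "first" bit set.
inline int countBags(const std::vector<double>& bag_signals) {
    int n_bags = 0;
    for (double signal : bag_signals) {
        // Round first: the flag is stored as a real value in the target.
        if (static_cast<int>(std::round(signal)) & kTargetColumnFirst)
            ++n_bags;
    }
    return n_bags;
}
```

Under these assumptions, the signal sequence 1, 0, 2, 3, 1, 2 describes three bags: one of three rows, one of a single row, and one of two rows.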

Definition at line 188 of file NNet.h.

Referenced by declareOptions(), and train().

Definition at line 151 of file NNet.h.

Referenced by buildPenalties(), PLearn::AddLayersNNet::buildPenalties(), declareOptions(), and PLearn::SurfaceTemplateLearner::declareOptions().

Definition at line 159 of file NNet.h.

Referenced by declareOptions(), and getCost().

| TVec<string> PLearn::NNet::cost_funcs |

Cost functions.

Definition at line 183 of file NNet.h.

Referenced by buildCosts(), computeCostsFromOutputs(), declareOptions(), getTestCostNames(), getTrainCostNames(), makeDeepCopyFromShallowCopy(), and PLearn::SurfaceTemplateLearner::SurfaceTemplateLearner().

| VarArray PLearn::NNet::costs [protected] |

Definition at line 66 of file NNet.h.

Referenced by buildCosts(), and makeDeepCopyFromShallowCopy().

Definition at line 170 of file NNet.h.

Referenced by buildOutputFromInput(), PLearn::SurfaceTemplateLearner::declareOptions(), declareOptions(), and initializeParams().

Definition at line 158 of file NNet.h.

Referenced by buildPenalties(), PLearn::SurfaceTemplateLearner::declareOptions(), and declareOptions().

Definition at line 175 of file NNet.h.

Referenced by PLearn::AddLayersNNet::build(), PLearn::AddLayersNNet::build_(), build_(), PLearn::SurfaceTemplateLearner::declareOptions(), and declareOptions().

Definition at line 164 of file NNet.h.

Referenced by buildOutputFromInput(), PLearn::SurfaceTemplateLearner::declareOptions(), and declareOptions().

Definition at line 177 of file NNet.h.

Referenced by PLearn::SurfaceTemplateLearner::build_(), buildOutputFromInput(), PLearn::SurfaceTemplateLearner::declareOptions(), declareOptions(), initializeParams(), makeDeepCopyFromShallowCopy(), PLearn::SurfaceTemplateLearner::setTrainingSet(), PLearn::SurfaceTemplateLearner::test(), and PLearn::SurfaceTemplateLearner::train().

Definition at line 178 of file NNet.h.

Referenced by PLearn::SurfaceTemplateLearner::build(), buildOutputFromInput(), and declareOptions().

Definition at line 161 of file NNet.h.

Referenced by buildOutputFromInput(), PLearn::SurfaceTemplateLearner::declareOptions(), declareOptions(), and initializeParams().

Definition at line 108 of file NNet.h.

Referenced by PLearn::AddLayersNNet::build_(), build_(), buildOutputFromInput(), and makeDeepCopyFromShallowCopy().

Definition at line 172 of file NNet.h.

Referenced by buildOutputFromInput(), PLearn::SurfaceTemplateLearner::declareOptions(), declareOptions(), and hiddenLayer().

Definition at line 191 of file NNet.h.

Referenced by PLearn::AddLayersNNet::build(), PLearn::SurfaceTemplateLearner::declareOptions(), declareOptions(), and fillWeights().

Definition at line 92 of file NNet.h.

Referenced by build_(), PLearn::AddLayersNNet::build_(), buildFuncs(), buildPenalties(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 169 of file NNet.h.

Referenced by buildPenalties(), PLearn::SurfaceTemplateLearner::declareOptions(), and declareOptions().

| Func PLearn::NNet::input_to_output [mutable] |

Definition at line 112 of file NNet.h.

Referenced by buildFuncs(), computeOutput(), makeDeepCopyFromShallowCopy(), and train().

Definition at line 173 of file NNet.h.

Referenced by applyTransferFunc().

Definition at line 173 of file NNet.h.

Referenced by applyTransferFunc().

| VarArray PLearn::NNet::invars [protected] |

Definition at line 70 of file NNet.h.

Referenced by buildFuncs(), makeDeepCopyFromShallowCopy(), and train().

| Var PLearn::NNet::junk_prob [protected] |

Definition at line 62 of file NNet.h.

Referenced by buildOutputFromInput(), and makeDeepCopyFromShallowCopy().

Definition at line 167 of file NNet.h.

Referenced by build_(), PLearn::SurfaceTemplateLearner::declareOptions(), and declareOptions().