
PLearn 0.1


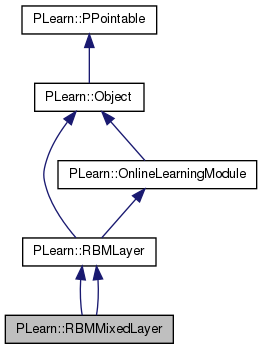

Layer in an RBM formed with the concatenation of other layers.

#include <RBMMixedLayer.h>

Public Member Functions

| RBMMixedLayer () | |

| Default constructor. | |

| RBMMixedLayer (TVec< PP< RBMLayer > > the_sub_layers) | |

| Constructor from the sub_layers. | |

| virtual void | getUnitActivations (int i, PP< RBMParameters > rbmp, int offset=0) |

| Uses "rbmp" to obtain the activations of unit "i" of this layer. | |

| virtual void | getAllActivations (PP< RBMParameters > rbmp, int offset=0) |

| Uses "rbmp" to obtain the activations of all units in this layer. | |

| virtual void | generateSample () |

| Computes a sample and updates the sample field. | |

| virtual void | computeExpectation () |

| Computes the expectation. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient) |

| Back-propagates the output gradient to the input. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMMixedLayer * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

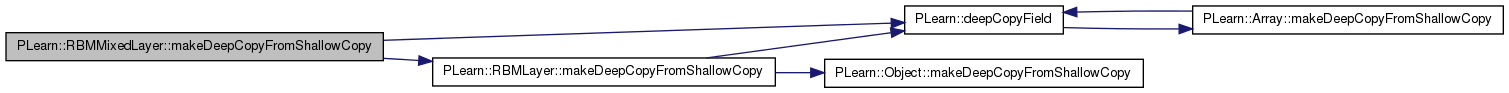

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |


| virtual void | setLearningRate (real the_learning_rate) |

| Sets the learning rate, also in the sub_layers. | |

| virtual void | setMomentum (real the_momentum) |

| Sets the momentum, also in the sub_layers. | |

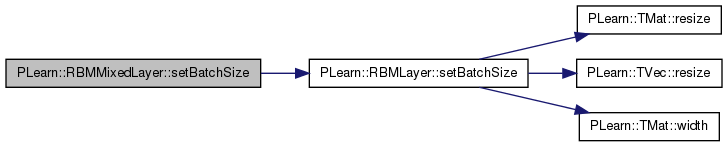

| virtual void | setBatchSize (int the_batch_size) |

| Sets batch_size and resizes activations, expectations, and samples. | |

| virtual void | setExpectation (const Vec &the_expectation) |

| Copies the given expectation into the 'expectation' vector. | |

| virtual void | setExpectationByRef (const Vec &the_expectation) |

| Make the 'expectation' vector point to the given data vector (so no copy is performed). | |

| virtual void | setExpectations (const Mat &the_expectations) |

| Copies the given expectations into the 'expectations' matrix. | |

| virtual void | setExpectationsByRef (const Mat &the_expectations) |

| Make the 'expectations' matrix point to the given data matrix (so no copy is performed). | |

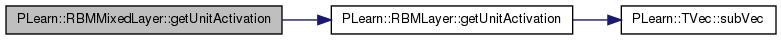

| virtual void | getUnitActivation (int i, PP< RBMConnection > rbmc, int offset=0) |

| Uses "rbmc" to compute the activation of unit "i" of this layer. | |

| virtual void | getAllActivations (PP< RBMConnection > rbmc, int offset=0, bool minibatch=false) |

| Uses "rbmc" to obtain the activations of all units in this layer. | |

| virtual void | expectation_is_not_up_to_date () |


| virtual void | generateSamples () |

| Generates activations.length() samples. | |


| virtual void | computeExpectations () |

| Computes the expectations from the activations. | |

| virtual void | fprop (const Vec &input, Vec &output) const |

| Forward propagation. | |

| virtual void | fprop (const Mat &inputs, Mat &outputs) |

| Batch forward propagation. | |

| virtual void | fprop (const Vec &input, const Vec &rbm_bias, Vec &output) const |

| Forward propagation with the provided bias. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false) |

| Back-propagates the output gradient to the input. | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false) |

| Back-propagate the output gradient to the input, and update parameters. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &rbm_bias, const Vec &output, Vec &input_gradient, Vec &rbm_bias_gradient, const Vec &output_gradient) |

| Back-propagates the output gradient to the input and the bias. | |

| virtual real | fpropNLL (const Vec &target) |

| Computes the negative log-likelihood of target given the internal activations of the layer. | |

| virtual void | fpropNLL (const Mat &targets, const Mat &costs_column) |

| Batch fpropNLL. | |

| virtual void | bpropNLL (const Vec &target, real nll, Vec &bias_gradient) |

| Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations. | |

| virtual void | bpropNLL (const Mat &targets, const Mat &costs_column, Mat &bias_gradients) |

| virtual void | accumulatePosStats (const Vec &pos_values) |

| Accumulates positive phase statistics. | |

| virtual void | accumulateNegStats (const Vec &neg_values) |

| Accumulates negative phase statistics. | |

| virtual void | update () |

| Update parameters according to accumulated statistics. | |

| virtual void | update (const Vec &pos_values, const Vec &neg_values) |

| Update parameters according to one pair of vectors. | |

| virtual void | update (const Mat &pos_values, const Mat &neg_values) |

| Update parameters according to several pairs of vectors. | |

| virtual void | reset () |

| Resets the activations, sample, and expectation fields. | |

| virtual void | clearStats () |

| Resets the statistics and counts. | |

| virtual void | forget () |

| Forgets everything. | |

| virtual real | energy (const Vec &unit_values) const |

| Computes -bias' unit_values (the negative dot product of the bias and unit_values). | |

| virtual real | freeEnergyContribution (const Vec &unit_activations) const |

| Computes -log( sum_h exp(h' unit_activations) ), where h ranges over all possible values of this layer's units. This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed. | |

| virtual void | freeEnergyContributionGradient (const Vec &unit_activations, Vec &unit_activations_gradient, real output_gradient=1, bool accumulate=false) const |

| Computes the gradient of the result of freeEnergyContribution with respect to unit_activations. | |

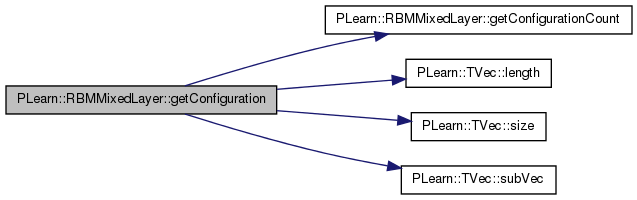

| virtual int | getConfigurationCount () |

| Returns the number of different configurations the layer can be in. | |

| virtual void | getConfiguration (int conf_index, Vec &output) |

| Computes the conf_index-th configuration of the layer. | |


Static Public Member Functions

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |


Public Attributes

| TVec< PP< RBMLayer > > | sub_layers |

| The concatenated RBMLayers composing this layer. | |

Static Public Attributes

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |


Protected Attributes

| TVec< int > | init_positions |

| Initial index of the sub_layers. | |

| TVec< int > | layer_of_unit |

| layer_of_unit[i] is the index of sub_layer containing unit i | |

| int | n_layers |

| Number of sub-layers. | |

Private Types

| typedef RBMLayer | inherited |


Private Member Functions

| void | build_ () |

| This does the actual building. | |


Private Attributes

| Vec | nlls |

| Mat | mat_nlls |

Layer in an RBM formed with the concatenation of other layers.

Definition at line 54 of file DEPRECATED/RBMMixedLayer.h.

| typedef RBMLayer PLearn::RBMMixedLayer::inherited | [private] |

Reimplemented from PLearn::RBMLayer.

Definition at line 56 of file DEPRECATED/RBMMixedLayer.h.

| typedef RBMLayer PLearn::RBMMixedLayer::inherited | [private] |

Reimplemented from PLearn::RBMLayer.

Definition at line 55 of file RBMMixedLayer.h.

| PLearn::RBMMixedLayer::RBMMixedLayer | ( | TVec< PP< RBMLayer > > | the_sub_layers | ) |

Constructor from the sub_layers.

Definition at line 55 of file DEPRECATED/RBMMixedLayer.cc.

References build().

```cpp
    : sub_layers( the_sub_layers )
{
    build();
}
```

| PLearn::RBMMixedLayer::RBMMixedLayer | ( | ) |

Default constructor.

Constructor from the sub_layers.

| string PLearn::RBMMixedLayer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

| static string PLearn::RBMMixedLayer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| OptionList & PLearn::RBMMixedLayer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

| static OptionList& PLearn::RBMMixedLayer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| static RemoteMethodMap& PLearn::RBMMixedLayer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| RemoteMethodMap & PLearn::RBMMixedLayer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

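| static bool PLearn::RBMMixedLayer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| bool PLearn::RBMMixedLayer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.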

| static Object* PLearn::RBMMixedLayer::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

| Object * PLearn::RBMMixedLayer::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

| void PLearn::RBMMixedLayer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

| static void PLearn::RBMMixedLayer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| void PLearn::RBMMixedLayer::accumulateNegStats | ( | const Vec & | neg_values | ) | [virtual] |

Accumulates negative phase statistics.

Reimplemented from PLearn::RBMLayer.

Definition at line 545 of file RBMMixedLayer.cc.

References i, init_positions, n_layers, PLearn::RBMLayer::neg_count, PLearn::RBMLayer::size, sub_layers, and PLearn::TVec< T >::subVec().

```cpp
{
    for( int i=0 ; i<n_layers ; i++ )
    {
        Vec sub_neg_values = neg_values.subVec( init_positions[i],
                                                sub_layers[i]->size );
        sub_layers[i]->accumulateNegStats( sub_neg_values );
    }
    neg_count++;
}
```

| void PLearn::RBMMixedLayer::accumulatePosStats | ( | const Vec & | pos_values | ) | [virtual] |

Accumulates positive phase statistics.

Reimplemented from PLearn::RBMLayer.

Definition at line 534 of file RBMMixedLayer.cc.

References i, init_positions, n_layers, PLearn::RBMLayer::pos_count, PLearn::RBMLayer::size, sub_layers, and PLearn::TVec< T >::subVec().

```cpp
{
    for( int i=0 ; i<n_layers ; i++ )
    {
        Vec sub_pos_values = pos_values.subVec( init_positions[i],
                                                sub_layers[i]->size );
        sub_layers[i]->accumulatePosStats( sub_pos_values );
    }
    pos_count++;
}
```

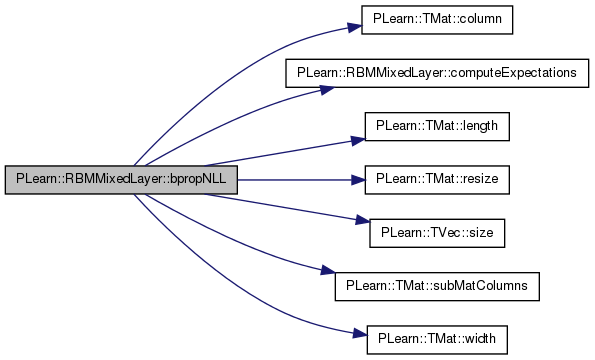

| void PLearn::RBMMixedLayer::bpropNLL | ( | const Mat & | targets, |

| const Mat & | costs_column, | ||

| Mat & | bias_gradients | ||

| ) | [virtual] |

Reimplemented from PLearn::RBMLayer.

Definition at line 475 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::batch_size, PLearn::TMat< T >::column(), computeExpectations(), i, init_positions, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), mat_nlls, n_layers, PLASSERT, PLearn::TMat< T >::resize(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, sub_layers, PLearn::TMat< T >::subMatColumns(), and PLearn::TMat< T >::width().

```cpp
{
    computeExpectations();

    PLASSERT( targets.width() == input_size );
    PLASSERT( targets.length() == batch_size );
    PLASSERT( costs_column.width() == 1 );
    PLASSERT( costs_column.length() == batch_size );
    bias_gradients.resize( batch_size, size );

    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        Mat sub_targets = targets.subMatColumns(begin, size_i);
        Mat sub_bias_gradients = bias_gradients.subMatColumns(begin, size_i);
        // TODO: something else than store mat_nlls...
        sub_layers[i]->bpropNLL( sub_targets, mat_nlls.column(i),
                                 sub_bias_gradients );
    }
}
```

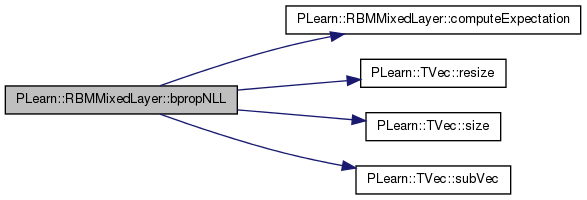

| void PLearn::RBMMixedLayer::bpropNLL | ( | const Vec & | target, |

| real | nll, | ||

| Vec & | bias_gradient | ||

| ) | [virtual] |

Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations.

Reimplemented from PLearn::RBMLayer.

Definition at line 457 of file RBMMixedLayer.cc.

References computeExpectation(), i, init_positions, PLearn::OnlineLearningModule::input_size, n_layers, nlls, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, sub_layers, and PLearn::TVec< T >::subVec().

```cpp
{
    computeExpectation();

    PLASSERT( target.size() == input_size );
    bias_gradient.resize( size );

    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        Vec sub_target = target.subVec(begin, size_i);
        Vec sub_bias_gradient = bias_gradient.subVec(begin, size_i);
        sub_layers[i]->bpropNLL( sub_target, nlls[i], sub_bias_gradient );
    }
}
```

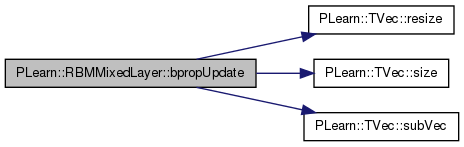

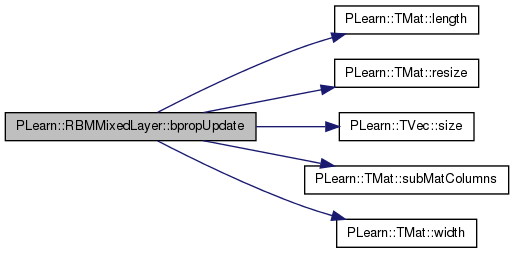

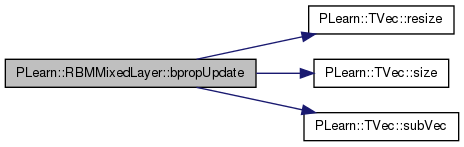

| void PLearn::RBMMixedLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| bool | accumulate = false | ||

| ) | [virtual] |

Back-propagates the output gradient to the input.

Implements PLearn::RBMLayer.

Definition at line 310 of file RBMMixedLayer.cc.

References i, init_positions, n_layers, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, sub_layers, and PLearn::TVec< T >::subVec().

```cpp
{
    PLASSERT( input.size() == size );
    PLASSERT( output.size() == size );
    PLASSERT( output_gradient.size() == size );

    if( accumulate )
    {
        PLASSERT_MSG( input_gradient.size() == size,
                      "Cannot resize input_gradient AND accumulate into it" );
    }
    else
        // Note that, by construction of 'size', the whole gradient vector
        // should be cleared in the calls to sub_layers->bpropUpdate(..) below.
        input_gradient.resize( size );

    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        Vec sub_input = input.subVec( begin, size_i );
        Vec sub_output = output.subVec( begin, size_i );
        Vec sub_input_gradient = input_gradient.subVec( begin, size_i );
        Vec sub_output_gradient = output_gradient.subVec( begin, size_i );
        sub_layers[i]->bpropUpdate( sub_input, sub_output,
                                    sub_input_gradient, sub_output_gradient,
                                    accumulate );
    }
}
```
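The slice-and-delegate pattern used by this bpropUpdate (and by most other methods of the class) can be sketched with standard containers. This is a minimal sketch that assumes each sub-layer's backward rule is supplied as a callback. `SubLayerBprop`, `mixed_bprop`, `sigmoid_bprop`, and `linear_bprop` are hypothetical names, not PLearn API, and the sigmoid and linear rules are only illustrative stand-ins for real sub-layer types.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical per-sub-layer backward rule working on raw slices of length n.
using SubLayerBprop = std::function<void(const double* output,
                                         const double* output_gradient,
                                         double* input_gradient,
                                         std::size_t n, bool accumulate)>;

// Illustrative sigmoid-unit rule: d(out)/d(in) = out * (1 - out).
void sigmoid_bprop(const double* o, const double* og, double* ig,
                   std::size_t n, bool accumulate) {
    for (std::size_t k = 0; k < n; ++k) {
        double g = og[k] * o[k] * (1.0 - o[k]);
        ig[k] = accumulate ? ig[k] + g : g;
    }
}

// Illustrative identity-unit rule: the gradient passes through unchanged.
void linear_bprop(const double*, const double* og, double* ig,
                  std::size_t n, bool accumulate) {
    for (std::size_t k = 0; k < n; ++k)
        ig[k] = accumulate ? ig[k] + og[k] : og[k];
}

// Sub-layer i owns [init_positions[i], init_positions[i] + sizes[i]).
void mixed_bprop(const std::vector<SubLayerBprop>& sub_layers,
                 const std::vector<std::size_t>& init_positions,
                 const std::vector<std::size_t>& sizes,
                 const std::vector<double>& output,
                 const std::vector<double>& output_gradient,
                 std::vector<double>& input_gradient,
                 bool accumulate) {
    std::size_t total = output.size();
    assert(output_gradient.size() == total);
    if (accumulate)
        assert(input_gradient.size() == total);  // cannot resize and accumulate
    else
        input_gradient.resize(total);            // every slice is overwritten below

    for (std::size_t i = 0; i < sub_layers.size(); ++i) {
        std::size_t begin = init_positions[i];
        sub_layers[i](output.data() + begin, output_gradient.data() + begin,
                      input_gradient.data() + begin, sizes[i], accumulate);
    }
}
```

Because the slices are disjoint and their sizes add up to the total size, exactly one sub-layer writes each element of `input_gradient`. This is why the non-accumulating branch can resize the vector without clearing it first.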

| void PLearn::RBMMixedLayer::bpropUpdate | ( | const Mat & | inputs, |

| const Mat & | outputs, | ||

| Mat & | input_gradients, | ||

| const Mat & | output_gradients, | ||

| bool | accumulate = false | ||

| ) | [virtual] |

Back-propagate the output gradient to the input, and update parameters.

Implements PLearn::RBMLayer.

Definition at line 344 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::batch_size, i, init_positions, PLearn::TMat< T >::length(), n_layers, PLASSERT, PLASSERT_MSG, PLearn::TMat< T >::resize(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, sub_layers, PLearn::TMat< T >::subMatColumns(), and PLearn::TMat< T >::width().

```cpp
{
    PLASSERT( inputs.width() == size );
    PLASSERT( outputs.width() == size );
    PLASSERT( output_gradients.width() == size );

    int batch_size = inputs.length();
    PLASSERT( outputs.length() == batch_size );
    PLASSERT( output_gradients.length() == batch_size );

    if( accumulate )
    {
        PLASSERT_MSG( input_gradients.width() == size &&
                      input_gradients.length() == batch_size,
                      "Cannot resize input_gradients and accumulate into it" );
    }
    else
        // Note that, by construction of 'size', the whole gradient vector
        // should be cleared in the calls to sub_layers->bpropUpdate(..) below.
        input_gradients.resize(batch_size, size);

    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        Mat sub_inputs = inputs.subMatColumns( begin, size_i );
        Mat sub_outputs = outputs.subMatColumns( begin, size_i );
        Mat sub_input_gradients =
            input_gradients.subMatColumns( begin, size_i );
        Mat sub_output_gradients =
            output_gradients.subMatColumns( begin, size_i );
        sub_layers[i]->bpropUpdate( sub_inputs, sub_outputs,
                                    sub_input_gradients, sub_output_gradients,
                                    accumulate );
    }
}
```

| void PLearn::RBMMixedLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | rbm_bias, | ||

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| Vec & | rbm_bias_gradient, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

Back-propagates the output gradient to the input and the bias.

Reimplemented from PLearn::RBMLayer.

Definition at line 385 of file RBMMixedLayer.cc.

References i, init_positions, n_layers, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, sub_layers, and PLearn::TVec< T >::subVec().

```cpp
{
    PLASSERT( input.size() == size );
    PLASSERT( rbm_bias.size() == size );
    PLASSERT( output.size() == size );
    PLASSERT( output_gradient.size() == size );
    input_gradient.resize( size );
    rbm_bias_gradient.resize( size );

    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        Vec sub_input = input.subVec( begin, size_i );
        Vec sub_rbm_bias = rbm_bias.subVec( begin, size_i );
        Vec sub_output = output.subVec( begin, size_i );
        Vec sub_input_gradient = input_gradient.subVec( begin, size_i );
        Vec sub_rbm_bias_gradient = rbm_bias_gradient.subVec( begin, size_i);
        Vec sub_output_gradient = output_gradient.subVec( begin, size_i );
        sub_layers[i]->bpropUpdate( sub_input, sub_rbm_bias, sub_output,
                                    sub_input_gradient, sub_rbm_bias_gradient,
                                    sub_output_gradient );
    }
}
```

| void PLearn::RBMMixedLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

Back-propagates the output gradient to the input.

Implements PLearn::RBMLayer.

Definition at line 104 of file DEPRECATED/RBMMixedLayer.cc.

References PLERROR.

```cpp
{
    PLERROR( "RBMMixedLayer::bpropUpdate not implemented yet." );
}
```

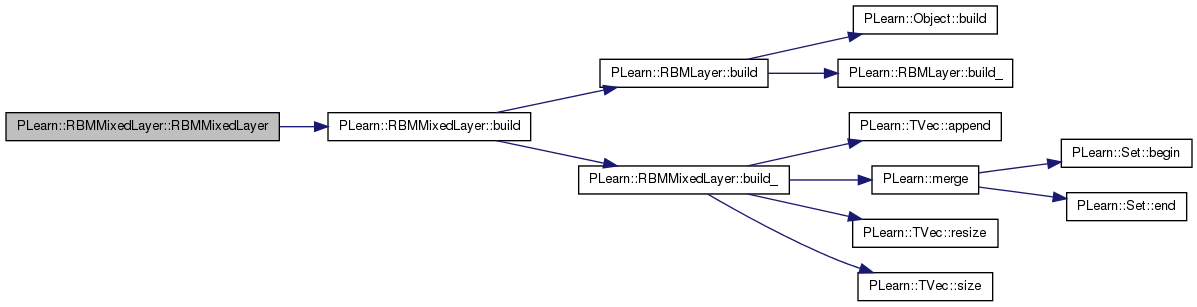

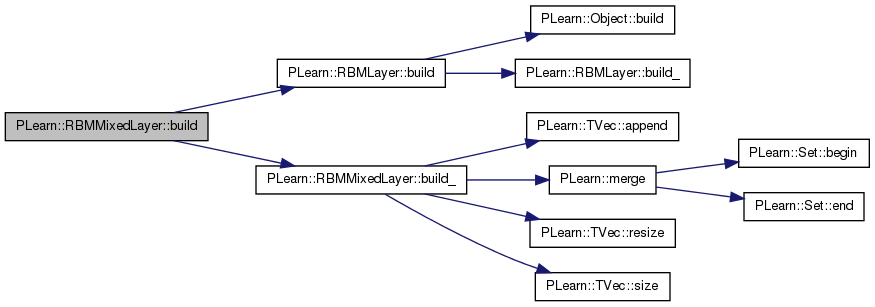

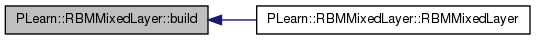

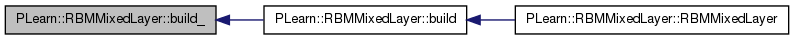

| virtual void PLearn::RBMMixedLayer::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMLayer.

| void PLearn::RBMMixedLayer::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMLayer.

Definition at line 162 of file DEPRECATED/RBMMixedLayer.cc.

References PLearn::RBMLayer::build(), and build_().

Referenced by RBMMixedLayer().

```cpp
{
    inherited::build();
    build_();
}
```

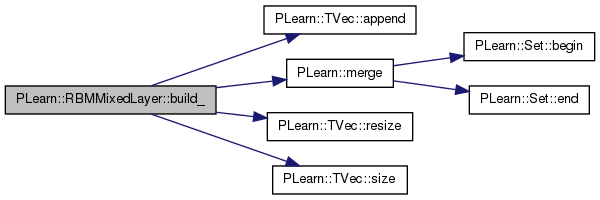

| void PLearn::RBMMixedLayer::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMLayer.

| void PLearn::RBMMixedLayer::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMLayer.

Definition at line 134 of file DEPRECATED/RBMMixedLayer.cc.

References PLearn::RBMLayer::activations, PLearn::TVec< T >::append(), PLearn::RBMLayer::expectation, PLearn::RBMLayer::expectation_is_up_to_date, i, init_positions, layer_of_unit, PLearn::merge(), n_layers, PLearn::TVec< T >::resize(), PLearn::RBMLayer::sample, PLearn::TVec< T >::size(), PLearn::RBMLayer::size, sub_layers, and PLearn::RBMLayer::units_types.

Referenced by build().

```cpp
{
    units_types = "";
    size = 0;
    activations.resize( 0 );
    sample.resize( 0 );
    expectation.resize( 0 );
    expectation_is_up_to_date = false;
    layer_of_unit.resize( 0 );

    n_layers = sub_layers.size();
    init_positions.resize( n_layers );

    for( int i=0 ; i<n_layers ; i++ )
    {
        init_positions[i] = size;

        PP<RBMLayer> cur_layer = sub_layers[i];
        units_types += cur_layer->units_types;
        size += cur_layer->size;

        activations = merge( activations, cur_layer->activations );
        sample = merge( sample, cur_layer->sample );
        expectation = merge( expectation, cur_layer->expectation );
        layer_of_unit.append( TVec<int>( cur_layer->size, i ) );
    }
}
```
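The following is a minimal sketch of the index bookkeeping in build_(), assuming only the list of sub-layer sizes is known. `MixedLayout` and `build_layout` are hypothetical names. The merging of activations, sample, and expectation through PLearn::merge() is not reproduced.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the fields build_() derives from its sub-layers.
struct MixedLayout {
    std::size_t size = 0;                     // total number of units
    std::vector<std::size_t> init_positions;  // first unit of each sub-layer
    std::vector<int> layer_of_unit;           // owning sub-layer of each unit
};

MixedLayout build_layout(const std::vector<std::size_t>& sub_layer_sizes) {
    MixedLayout layout;
    layout.init_positions.resize(sub_layer_sizes.size());
    for (std::size_t i = 0; i < sub_layer_sizes.size(); ++i) {
        layout.init_positions[i] = layout.size;  // sub-layer i starts here
        layout.size += sub_layer_sizes[i];
        // Append sub_layer_sizes[i] copies of i, like TVec<int>( size, i ).
        layout.layer_of_unit.insert(layout.layer_of_unit.end(),
                                    sub_layer_sizes[i], static_cast<int>(i));
    }
    return layout;
}
```

For sub-layers of sizes 3, 2, and 4, this gives a total size of 9, init_positions = {0, 3, 5}, and layer_of_unit = {0, 0, 0, 1, 1, 2, 2, 2, 2}.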

| string PLearn::RBMMixedLayer::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

| virtual string PLearn::RBMMixedLayer::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

| void PLearn::RBMMixedLayer::clearStats | ( | ) | [virtual] |

Resets the statistics and counts.

Reimplemented from PLearn::RBMLayer.

Definition at line 598 of file RBMMixedLayer.cc.

References i, n_layers, PLearn::RBMLayer::neg_count, PLearn::RBMLayer::pos_count, and sub_layers.

Referenced by update().

```cpp
{
    for( int i=0 ; i<n_layers ; i++ )
        sub_layers[i]->clearStats();
    pos_count = 0;
    neg_count = 0;
}
```

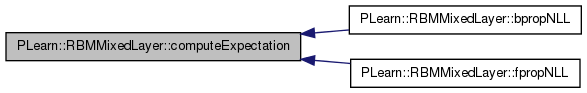

| void PLearn::RBMMixedLayer::computeExpectation | ( | ) | [virtual] |

Computes the expectation.

Implements PLearn::RBMLayer.

Definition at line 93 of file DEPRECATED/RBMMixedLayer.cc.

References PLearn::RBMLayer::expectation_is_up_to_date, i, n_layers, and sub_layers.

Referenced by bpropNLL(), and fpropNLL().

```cpp
{
    if( expectation_is_up_to_date )
        return;

    for( int i=0 ; i<n_layers ; i++ )
        sub_layers[i]->computeExpectation();

    expectation_is_up_to_date = true;
}
```
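computeExpectation() is lazy: it returns immediately while expectation_is_up_to_date is set. The companion method expectation_is_not_up_to_date() clears the flag both in the mixed layer and in every sub-layer. The minimal model below uses hypothetical `SubLayer` and `MixedLayer` structs, with a counter in place of the real computation, to show why both levels must be invalidated together.

```cpp
#include <cassert>
#include <vector>

struct SubLayer {
    bool expectation_is_up_to_date = false;
    int computations = 0;  // counts how often the expensive work actually ran
    void computeExpectation() {
        if (expectation_is_up_to_date) return;
        ++computations;    // a real layer would recompute from its activations here
        expectation_is_up_to_date = true;
    }
    void expectation_is_not_up_to_date() { expectation_is_up_to_date = false; }
};

struct MixedLayer {
    std::vector<SubLayer> sub_layers;
    bool expectation_is_up_to_date = false;
    void computeExpectation() {
        if (expectation_is_up_to_date) return;
        for (auto& s : sub_layers) s.computeExpectation();
        expectation_is_up_to_date = true;
    }
    // Without propagating to the sub-layers, they would keep serving stale values.
    void expectation_is_not_up_to_date() {
        for (auto& s : sub_layers) s.expectation_is_not_up_to_date();
        expectation_is_up_to_date = false;
    }
};
```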

| virtual void PLearn::RBMMixedLayer::computeExpectation | ( | ) | [virtual] |

Computes the expectation.

Implements PLearn::RBMLayer.

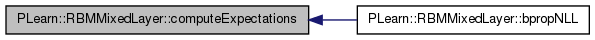

| void PLearn::RBMMixedLayer::computeExpectations | ( | ) | [virtual] |

Computes the expectations from the activations.

Implements PLearn::RBMLayer.

Definition at line 235 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::expectations_are_up_to_date, i, n_layers, and sub_layers.

Referenced by bpropNLL().

```cpp
{
    if( expectations_are_up_to_date )
        return;

    for( int i=0 ; i < n_layers ; i++ )
        sub_layers[i]->computeExpectations();

    expectations_are_up_to_date = true;
}
```

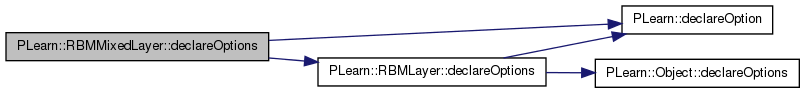

| static void PLearn::RBMMixedLayer::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMLayer.

| void PLearn::RBMMixedLayer::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMLayer.

Definition at line 111 of file DEPRECATED/RBMMixedLayer.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::RBMLayer::declareOptions(), init_positions, layer_of_unit, PLearn::OptionBase::learntoption, n_layers, and sub_layers.

```cpp
{
    declareOption(ol, "sub_layers", &RBMMixedLayer::sub_layers,
                  OptionBase::buildoption,
                  "The concatenated RBMLayers composing this layer.");

    declareOption(ol, "init_positions", &RBMMixedLayer::init_positions,
                  OptionBase::learntoption,
                  " Initial index of the sub_layers.");

    declareOption(ol, "layer_of_unit", &RBMMixedLayer::layer_of_unit,
                  OptionBase::learntoption,
                  "layer_of_unit[i] is the index of sub_layer containing unit"
                  " i.");

    declareOption(ol, "n_layers", &RBMMixedLayer::n_layers,
                  OptionBase::learntoption,
                  "Number of sub-layers.");

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
```

| static const PPath& PLearn::RBMMixedLayer::declaringFile | ( | ) | [inline, static] |


| virtual RBMMixedLayer* PLearn::RBMMixedLayer::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMLayer.

| RBMMixedLayer * PLearn::RBMMixedLayer::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

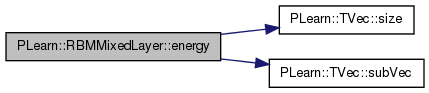

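Computes -bias' unit_values (the negative dot product of the bias and unit_values). The mixed layer obtains it by summing the energies of its sub-layers, each evaluated on its own slice of unit_values.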

Reimplemented from PLearn::RBMLayer.

Definition at line 713 of file RBMMixedLayer.cc.

References i, init_positions, n_layers, PLearn::TVec< T >::size(), sub_layers, and PLearn::TVec< T >::subVec().

```cpp
{
    real energy = 0;
    for ( int i = 0; i < n_layers; ++i ) {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        Vec values = unit_values.subVec( begin, size_i );
        energy += sub_layers[i]->energy(values);
    }
    return energy;
}
```
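Sub-layer $i$ owns the slice $v_{(i)}$ of unit_values that starts at init_positions[i], so the loop above computes the energy as a sum of sub-layer energies. If every sub-layer's energy is its linear bias term, this sum equals the documented $-b^\top v$ for the concatenated bias $b$. A sub-layer whose energy contains other terms contributes those terms unchanged, and the mixed layer adds nothing of its own.

$$
E(v) \;=\; \sum_{i=0}^{n_{\text{layers}}-1} E_i\big(v_{(i)}\big)
\;=\; -\sum_{i=0}^{n_{\text{layers}}-1} b_{(i)}^{\top} v_{(i)}
\;=\; -b^{\top} v
$$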

| void PLearn::RBMMixedLayer::expectation_is_not_up_to_date | ( | ) | [virtual] |

Reimplemented from PLearn::RBMLayer.

Definition at line 192 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::expectation_is_up_to_date, i, n_layers, and sub_layers.

```cpp
{
    for( int i=0 ; i<n_layers ; i++ )
        sub_layers[i]->expectation_is_not_up_to_date();
    expectation_is_up_to_date = false;
}
```

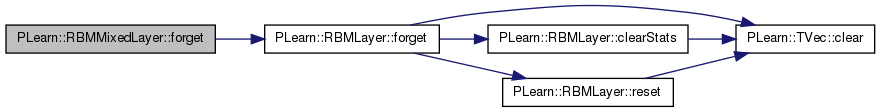

| void PLearn::RBMMixedLayer::forget | ( | ) | [virtual] |

Forgets everything.

Reimplemented from PLearn::RBMLayer.

Definition at line 607 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::forget(), i, n_layers, PLWARNING, PLearn::RBMLayer::random_gen, and sub_layers.

```cpp
{
    inherited::forget();

    if( !random_gen )
    {
        PLWARNING("RBMMixedLayer: cannot forget() without random_gen");
        return;
    }

    for( int i=0; i<n_layers; i++ )
    {
        if( !(sub_layers[i]->random_gen) )
            sub_layers[i]->random_gen = random_gen;
        sub_layers[i]->forget();
    }
}
```
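Before resetting each sub-layer, forget() hands its own random_gen to any sub-layer that has none. The sketch below models that rule with a shared `std::mt19937`. `Rng`, `SubLayer`, and `forget_all` are hypothetical names, and the mixed layer's own inherited::forget() call is omitted.

```cpp
#include <cassert>
#include <memory>
#include <random>
#include <vector>

using Rng = std::shared_ptr<std::mt19937>;

struct SubLayer {
    Rng random_gen;
    bool reset = false;
    void forget() { reset = true; }  // stands in for re-initializing parameters
};

// Returns false and touches nothing when the parent has no generator,
// mirroring the PLWARNING-and-return branch.
bool forget_all(const Rng& random_gen, std::vector<SubLayer>& sub_layers) {
    if (!random_gen) return false;
    for (auto& s : sub_layers) {
        if (!s.random_gen) s.random_gen = random_gen;  // share the pointer, do not copy
        s.forget();
    }
    return true;
}
```

Because the pointer is shared rather than the generator copied, every such sub-layer draws from a single random stream, so one seed on the mixed layer controls their initialization. A sub-layer that already owns a generator keeps it.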

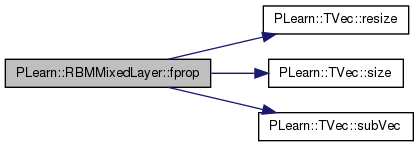

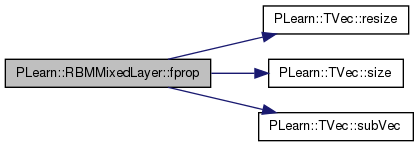

Forward propagation.

Reimplemented from PLearn::RBMLayer.

Definition at line 249 of file RBMMixedLayer.cc.

References i, init_positions, PLearn::OnlineLearningModule::input_size, n_layers, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sub_layers, and PLearn::TVec< T >::subVec().

```cpp
{
    PLASSERT( input.size() == input_size );
    output.resize( output_size );

    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        Vec sub_input = input.subVec(begin, size_i);
        Vec sub_output = output.subVec(begin, size_i);
        sub_layers[i]->fprop( sub_input, sub_output );
    }
}
```

| void PLearn::RBMMixedLayer::fprop | ( | const Vec & | input, |

| const Vec & | rbm_bias, | ||

| Vec & | output | ||

| ) | const [virtual] |

Forward propagation with the provided bias.

Reimplemented from PLearn::RBMLayer.

Definition at line 287 of file RBMMixedLayer.cc.

References i, init_positions, PLearn::OnlineLearningModule::input_size, n_layers, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sub_layers, and PLearn::TVec< T >::subVec().

```cpp
{
    PLASSERT( input.size() == input_size );
    PLASSERT( rbm_bias.size() == input_size );
    output.resize( output_size );

    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        Vec sub_input = input.subVec(begin, size_i);
        Vec sub_rbm_bias = rbm_bias.subVec(begin, size_i);
        Vec sub_output = output.subVec(begin, size_i);
        sub_layers[i]->fprop( sub_input, sub_rbm_bias, sub_output );
    }
}
```

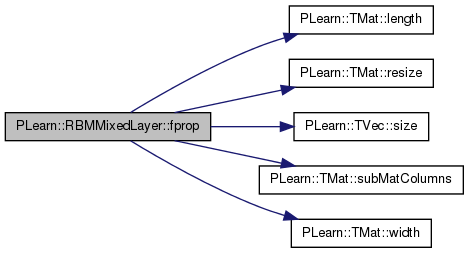

Batch forward propagation.

Reimplemented from PLearn::RBMLayer.

Definition at line 268 of file RBMMixedLayer.cc.

References i, init_positions, PLearn::TMat< T >::length(), n_layers, PLASSERT, PLearn::TMat< T >::resize(), PLearn::TVec< T >::size(), PLearn::RBMLayer::size, sub_layers, PLearn::TMat< T >::subMatColumns(), and PLearn::TMat< T >::width().

```cpp
{
    int mbatch_size = inputs.length();
    PLASSERT( inputs.width() == size );
    outputs.resize( mbatch_size, size );

    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        Mat sub_inputs = inputs.subMatColumns(begin, size_i);
        Mat sub_outputs = outputs.subMatColumns(begin, size_i);

        // GCC bug? This doesn't work:
        // sub_layers[i]->fprop( sub_inputs, sub_outputs );
        sub_layers[i]->OnlineLearningModule::fprop( sub_inputs, sub_outputs );
    }
}
```
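The batch methods take column blocks with subMatColumns(begin, size_i). The code relies on these blocks sharing storage with the parent matrix, so that what each sub-layer writes into sub_outputs ends up in outputs. The sketch below imitates such a view over a row-major `std::vector`. `ColumnBlock` and `sub_mat_columns` are hypothetical helpers, not the PLearn TMat API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Strided view over columns [begin, begin + width) of a row-major matrix.
// It does not own memory: writes through it land in the parent matrix.
struct ColumnBlock {
    double* data;        // address of element (0, begin) in the parent
    std::size_t length;  // number of rows
    std::size_t width;   // number of columns in the block
    std::size_t stride;  // row stride of the parent (its full width)
    double& operator()(std::size_t r, std::size_t c) { return data[r * stride + c]; }
};

ColumnBlock sub_mat_columns(std::vector<double>& m, std::size_t rows,
                            std::size_t cols, std::size_t begin, std::size_t width) {
    assert(begin + width <= cols && m.size() == rows * cols);
    return ColumnBlock{m.data() + begin, rows, width, cols};
}
```

For example, a 2 x 5 output matrix can be split into blocks of widths 3 and 2. Filling each block independently fills every entry of the parent matrix exactly once.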

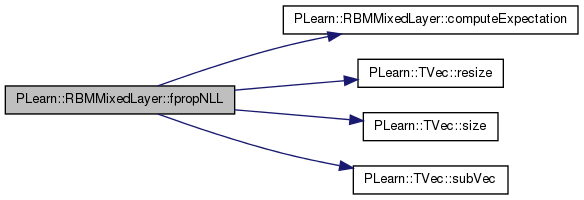

Computes the negative log-likelihood of target given the internal activations of the layer.

Reimplemented from PLearn::RBMLayer.

Definition at line 415 of file RBMMixedLayer.cc.

References computeExpectation(), i, init_positions, PLearn::OnlineLearningModule::input_size, n_layers, nlls, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), sub_layers, and PLearn::TVec< T >::subVec().

```cpp
{
    computeExpectation();

    PLASSERT( target.size() == input_size );
    nlls.resize(n_layers);

    real ret = 0;
    real nlli = 0;
    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        nlli = sub_layers[i]->fpropNLL( target.subVec(begin, size_i));
        nlls[i] = nlli;
        ret += nlli;
    }
    return ret;
}
```
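fpropNLL(const Vec&) adds up the NLL returned by each sub-layer on its slice of target and stores each term in nlls so that bpropNLL() can reuse it. In the sketch below, every sub-layer is assumed to be binomial, with the cross-entropy NLL -sum_k [t_k log p_k + (1 - t_k) log(1 - p_k)] computed from its expectation p. `binomial_nll` and `mixed_nll` are hypothetical names; a real mixed layer dispatches to each sub-layer's own fpropNLL().

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Assumed binomial NLL on the slice [begin, begin + n); needs 0 < p < 1.
double binomial_nll(const std::vector<double>& target,
                    const std::vector<double>& expectation,
                    std::size_t begin, std::size_t n) {
    double nll = 0.0;
    for (std::size_t k = begin; k < begin + n; ++k)
        nll -= target[k] * std::log(expectation[k])
             + (1.0 - target[k]) * std::log(1.0 - expectation[k]);
    return nll;
}

// Same structure as the loop above: total = sum of per-sub-layer terms,
// each kept in nlls for a later backward pass.
double mixed_nll(const std::vector<double>& target,
                 const std::vector<double>& expectation,
                 const std::vector<std::size_t>& init_positions,
                 const std::vector<std::size_t>& sizes,
                 std::vector<double>& nlls) {
    nlls.resize(sizes.size());
    double ret = 0.0;
    for (std::size_t i = 0; i < sizes.size(); ++i) {
        nlls[i] = binomial_nll(target, expectation, init_positions[i], sizes[i]);
        ret += nlls[i];
    }
    return ret;
}
```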

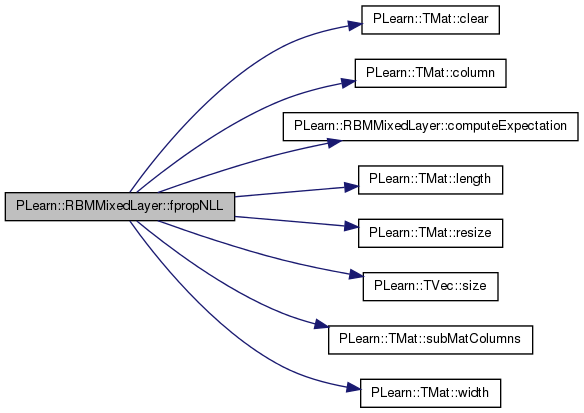

Batch fpropNLL.

Reimplemented from PLearn::RBMLayer.

Definition at line 435 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::batch_size, PLearn::TMat< T >::clear(), PLearn::TMat< T >::column(), computeExpectation(), i, init_positions, PLearn::OnlineLearningModule::input_size, j, PLearn::TMat< T >::length(), mat_nlls, n_layers, PLASSERT, PLearn::TMat< T >::resize(), PLearn::TVec< T >::size(), sub_layers, PLearn::TMat< T >::subMatColumns(), and PLearn::TMat< T >::width().

```cpp
{
    computeExpectation();

    PLASSERT( targets.width() == input_size );
    PLASSERT( targets.length() == batch_size );
    PLASSERT( costs_column.width() == 1 );
    PLASSERT( costs_column.length() == batch_size );

    costs_column.clear();
    mat_nlls.resize(batch_size, n_layers);

    for( int i=0 ; i<n_layers ; i++ )
    {
        int begin = init_positions[i];
        int size_i = sub_layers[i]->size;
        sub_layers[i]->fpropNLL( targets.subMatColumns(begin, size_i),
                                 mat_nlls.column(i) );
        for( int j=0; j < batch_size; ++j )
            costs_column(j,0) += mat_nlls(j, i);
    }
}
```
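In the batch version, mat_nlls holds one row per example and one column per sub-layer, and costs_column receives the row sums. The sketch below assumes a row-major `std::vector` standing in for mat_nlls; `batch_costs` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// mat_nlls is row-major, batch_size x n_layers; returns the per-example cost column.
std::vector<double> batch_costs(const std::vector<double>& mat_nlls,
                                std::size_t batch_size, std::size_t n_layers) {
    assert(mat_nlls.size() == batch_size * n_layers);
    std::vector<double> costs_column(batch_size, 0.0);  // like costs_column.clear()
    for (std::size_t i = 0; i < n_layers; ++i)           // one sub-layer at a time
        for (std::size_t j = 0; j < batch_size; ++j)
            costs_column[j] += mat_nlls[j * n_layers + i];
    return costs_column;
}
```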

| real PLearn::RBMMixedLayer::freeEnergyContribution | ( | const Vec & | unit_activations | ) | const [virtual] |

Computes -log( sum_h exp(h' unit_activations) ), where h ranges over all possible values of this layer's units. This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed.

Reimplemented from PLearn::RBMLayer.

Definition at line 727 of file RBMMixedLayer.cc.

References i, init_positions, n_layers, PLearn::TVec< T >::size(), sub_layers, and PLearn::TVec< T >::subVec().

{

real freeEnergy = 0;

Vec act;

for ( int i = 0; i < n_layers; ++i ) {

int begin = init_positions[i];

int size_i = sub_layers[i]->size;

act = unit_activations.subVec( begin, size_i );

freeEnergy += sub_layers[i]->freeEnergyContribution(act);

}

return freeEnergy;

}
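The following is a minimal sketch of the factorization used above. It assumes, for illustration only, that every sub-layer is binomial (units in {0,1}). Under that assumption, each segment contributes $-\sum_k \log(1 + e^{a_k})$, and splitting the activations into segments gives the same value as treating them as one binomial layer. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable log(1 + exp(x)).
double softplus(double x)
{
    return x > 0 ? x + std::log1p(std::exp(-x)) : std::log1p(std::exp(x));
}

// Free-energy contribution of one binomial (0/1) segment:
// -log sum_h exp(h . a) = -sum_k softplus(a_k), because the sum factorizes.
double binomialContribution(const double* act, std::size_t n)
{
    double r = 0.0;
    for (std::size_t k = 0; k < n; ++k)
        r -= softplus(act[k]);
    return r;
}

// Mixed layer: add up the contributions of the sub-layer segments, as in
// RBMMixedLayer::freeEnergyContribution (here every segment is binomial).
double mixedContribution(const std::vector<double>& unit_activations,
                         const std::vector<std::size_t>& init_positions,
                         const std::vector<std::size_t>& sizes)
{
    assert(init_positions.size() == sizes.size());
    double free_energy = 0.0;
    for (std::size_t i = 0; i < sizes.size(); ++i) {
        assert(init_positions[i] + sizes[i] <= unit_activations.size());
        free_energy += binomialContribution(
            unit_activations.data() + init_positions[i], sizes[i]);
    }
    return free_energy;
}
```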

| void PLearn::RBMMixedLayer::freeEnergyContributionGradient | ( | const Vec & unit_activations, Vec & unit_activations_gradient, real output_gradient = 1, bool accumulate = false | ) | const [virtual] |

Computes the gradient of the result of freeEnergyContribution() with respect to unit_activations.

Optionally, a gradient with respect to freeEnergyContribution can be given through output_gradient.

Reimplemented from PLearn::RBMLayer.

Definition at line 743 of file RBMMixedLayer.cc.

References i, init_positions, n_layers, PLearn::TVec< T >::size(), sub_layers, and PLearn::TVec< T >::subVec().

{

Vec act;

Vec gact;

for ( int i = 0; i < n_layers; ++i ) {

int begin = init_positions[i];

int size_i = sub_layers[i]->size;

act = unit_activations.subVec( begin, size_i );

gact = unit_activations_gradient.subVec( begin, size_i );

sub_layers[i]->freeEnergyContributionGradient(

act, gact, output_gradient, accumulate);

}

}
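The following is a minimal sketch of the gradient computation. It again assumes binomial sub-layers, for which $\partial/\partial a_k\,[-\log(1+e^{a_k})] = -\mathrm{sigmoid}(a_k)$. The incoming output_gradient scales this value, following the chain rule. The semantics of accumulate are also an assumption: here, true adds into the existing gradient instead of overwriting it. As in the loop above, each sub-layer writes only to its own slice of the gradient vector. All function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Gradient of -sum_k softplus(a_k) w.r.t. a, for one binomial segment.
// Assumption: "accumulate" adds into grad instead of overwriting it.
void binomialContributionGradient(const double* act, double* grad,
                                  std::size_t n, double output_gradient,
                                  bool accumulate)
{
    for (std::size_t k = 0; k < n; ++k) {
        const double g = -output_gradient * sigmoid(act[k]);
        grad[k] = accumulate ? grad[k] + g : g;
    }
}

// Mixed layer: each sub-layer fills only its own slice of the gradient.
void mixedContributionGradient(const std::vector<double>& unit_activations,
                               std::vector<double>& unit_activations_gradient,
                               const std::vector<std::size_t>& init_positions,
                               const std::vector<std::size_t>& sizes,
                               double output_gradient = 1.0,
                               bool accumulate = false)
{
    assert(unit_activations_gradient.size() == unit_activations.size());
    for (std::size_t i = 0; i < sizes.size(); ++i) {
        const std::size_t begin = init_positions[i];
        assert(begin + sizes[i] <= unit_activations.size());
        binomialContributionGradient(unit_activations.data() + begin,
                                     unit_activations_gradient.data() + begin,
                                     sizes[i], output_gradient, accumulate);
    }
}
```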

| void PLearn::RBMMixedLayer::generateSample | ( | ) | [virtual] |

Computes a sample and updates the sample field.

Implements PLearn::RBMLayer.

Definition at line 87 of file DEPRECATED/RBMMixedLayer.cc.

References i, n_layers, and sub_layers.

{

for( int i=0 ; i<n_layers ; i++ )

sub_layers[i]->generateSample();

}

| void PLearn::RBMMixedLayer::generateSamples | ( | ) | [virtual] |

Generates activations.length() samples.

Implements PLearn::RBMLayer.

Definition at line 212 of file RBMMixedLayer.cc.

References i, n_layers, and sub_layers.

{

for( int i=0 ; i<n_layers ; i++ )

sub_layers[i]->generateSamples();

}

| void PLearn::RBMMixedLayer::getAllActivations | ( | PP< RBMConnection > rbmc, int offset = 0, bool minibatch = false | ) | [virtual] |

Uses "rbmc" to obtain the activations of all units in this layer.

Unit 0 of this layer corresponds to unit "offset" of "rbmc".

Reimplemented from PLearn::RBMLayer.

Definition at line 179 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::getAllActivations(), i, n_layers, and sub_layers.

{

inherited::getAllActivations( rbmc, offset, minibatch );

for( int i=0 ; i<n_layers ; i++ )

{

if( minibatch )

sub_layers[i]->expectations_are_up_to_date = false;

else

sub_layers[i]->expectation_is_up_to_date = false;

}

}

| void PLearn::RBMMixedLayer::getAllActivations | ( | PP< RBMParameters > rbmp, int offset = 0 | ) | [virtual] |

Uses "rbmp" to obtain the activations of all units in this layer.

Unit 0 of this layer corresponds to unit "offset" of "rbmp".

Implements PLearn::RBMLayer.

Definition at line 77 of file DEPRECATED/RBMMixedLayer.cc.

References PLearn::RBMLayer::expectation_is_up_to_date, i, init_positions, n_layers, and sub_layers.

{

for( int i=0 ; i<n_layers ; i++ )

{

int total_offset = offset + init_positions[i];

sub_layers[i]->getAllActivations( rbmp, total_offset );

}

expectation_is_up_to_date = false;

}

Computes the configuration of the layer with index conf_index and writes it to output.

Reimplemented from PLearn::RBMLayer.

Definition at line 775 of file RBMMixedLayer.cc.

References getConfigurationCount(), i, init_positions, PLearn::TVec< T >::length(), n_layers, PLASSERT, PLearn::TVec< T >::size(), PLearn::RBMLayer::size, sub_layers, and PLearn::TVec< T >::subVec().

{

PLASSERT( output.length() == size );

PLASSERT( conf_index >= 0 && conf_index < getConfigurationCount() );

int conf_i = conf_index;

for ( int i = 0; i < n_layers; ++i ) {

int conf_layer_i = sub_layers[i]->getConfigurationCount();

int begin = init_positions[i];

int size_i = sub_layers[i]->size;

Vec output_i = output.subVec( begin, size_i );

sub_layers[i]->getConfiguration(conf_i % conf_layer_i, output_i);

conf_i /= conf_layer_i;

}

}
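The loop above decodes conf_index as a mixed-radix number. Sub-layer 0 supplies the least significant digit, and the radix of sub-layer i is its configuration count. The following is a minimal sketch of that decoding on plain integers. The name decodeConfiguration is hypothetical. Unlike the original, this sketch returns the per-sub-layer indices rather than writing the configurations themselves.

```cpp
#include <cassert>
#include <vector>

// Decodes conf_index into one configuration index per sub-layer, as in
// RBMMixedLayer::getConfiguration. Sub-layer i has conf_counts[i]
// configurations; sub-layer 0 is the least significant digit.
std::vector<int> decodeConfiguration(int conf_index,
                                     const std::vector<int>& conf_counts)
{
    int total = 1;
    for (int c : conf_counts) {
        assert(c > 0);
        total *= c;   // assumes the product does not overflow
    }
    assert(conf_index >= 0 && conf_index < total);
    std::vector<int> per_layer;
    int conf_i = conf_index;
    for (int c : conf_counts) {
        per_layer.push_back(conf_i % c);   // index handed to sub-layer i
        conf_i /= c;
    }
    return per_layer;
}
```

For example, with configuration counts {2, 3}, index 5 maps to configuration 1 of the first sub-layer and configuration 2 of the second.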

| int PLearn::RBMMixedLayer::getConfigurationCount | ( | ) | [virtual] |

Returns the number of different configurations the layer can be in. If that number cannot be represented, the method returns INFINITE_CONFIGURATIONS instead.

Reimplemented from PLearn::RBMLayer.

Definition at line 760 of file RBMMixedLayer.cc.

References i, PLearn::RBMLayer::INFINITE_CONFIGURATIONS, n_layers, and sub_layers.

Referenced by getConfiguration().

{

int count = 1;

for ( int i = 0; i < n_layers; ++i ) {

int cc_layer_i = sub_layers[i]->getConfigurationCount();

// Avoiding overflow

if ( INFINITE_CONFIGURATIONS/cc_layer_i <= count )

return INFINITE_CONFIGURATIONS;

count *= cc_layer_i;

}

return count;

}
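The count is the product of the sub-layer counts. The guard INFINITE_CONFIGURATIONS/cc_layer_i <= count makes the method saturate before count *= cc_layer_i could overflow. The following is a minimal sketch of the same guard. It assumes the sentinel equals INT_MAX, because the actual value of RBMLayer::INFINITE_CONFIGURATIONS is not shown in this section. The name configurationCount is hypothetical.

```cpp
#include <cassert>
#include <climits>
#include <vector>

// Assumption: sentinel taken to be the largest int for this sketch.
const int INFINITE_CONFIGURATIONS = INT_MAX;

// Product of the sub-layer counts, saturating at the sentinel before the
// multiplication could overflow (same guard as getConfigurationCount).
int configurationCount(const std::vector<int>& sub_counts)
{
    int count = 1;
    for (int cc : sub_counts) {
        assert(cc > 0);
        if (INFINITE_CONFIGURATIONS / cc <= count)
            return INFINITE_CONFIGURATIONS;
        count *= cc;   // safe: count < INFINITE_CONFIGURATIONS / cc here
    }
    return count;
}
```

The guard is slightly conservative: it can report INFINITE_CONFIGURATIONS for a product that would still fit just below the sentinel. A sub-layer that itself reports INFINITE_CONFIGURATIONS makes the whole layer infinite.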

| OptionList & PLearn::RBMMixedLayer::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

| OptionMap & PLearn::RBMMixedLayer::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

| RemoteMethodMap & PLearn::RBMMixedLayer::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMMixedLayer.cc.

| void PLearn::RBMMixedLayer::getUnitActivation | ( | int i, PP< RBMConnection > rbmc, int offset = 0 | ) | [virtual] |

Uses "rbmc" to compute the activation of unit "i" of this layer.

This activation is computed by the "i+offset"-th unit of "rbmc".

Reimplemented from PLearn::RBMLayer.

Definition at line 167 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::getUnitActivation(), i, j, layer_of_unit, and sub_layers.

{

inherited::getUnitActivation( i, rbmc, offset );

int j = layer_of_unit[i];

sub_layers[j]->expectation_is_up_to_date = false;

}

| void PLearn::RBMMixedLayer::getUnitActivations | ( | int i, PP< RBMParameters > rbmp, int offset = 0 | ) | [virtual] |

Uses "rbmp" to obtain the activations of unit "i" of this layer.

This activation vector is computed by the "i+offset"-th unit of "rbmp".

Implements PLearn::RBMLayer.

Definition at line 63 of file DEPRECATED/RBMMixedLayer.cc.

References PLearn::RBMLayer::expectation_is_up_to_date, i, init_positions, j, layer_of_unit, and sub_layers.

{

int j = layer_of_unit[i];

PP<RBMLayer> layer = sub_layers[j]; // sub-layer j contains unit i; indexing by i would select the wrong sub-layer

int sub_index = i - init_positions[j];

int total_offset = offset + init_positions[j];

layer->getUnitActivations( sub_index, rbmp, total_offset );

expectation_is_up_to_date = false;

}
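Locating a unit relies on two index tables. layer_of_unit gives the sub-layer j that owns unit i. init_positions gives the first unit of each sub-layer, from which the local index and the global offset into rbmp follow. The following is a minimal sketch of both tables and the lookup. It assumes init_positions holds the prefix sums of the sub-layer sizes, which is consistent with how the code above slices vectors, but build_() itself is not shown here. The names buildIndex, locateUnit and UnitLocation are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct UnitLocation {
    std::size_t layer;         // j = layer_of_unit[i]
    std::size_t sub_index;     // i - init_positions[j]
    std::size_t total_offset;  // offset + init_positions[j]
};

// Hypothetical helper: derives init_positions (prefix sums of the sub-layer
// sizes) and layer_of_unit (owning sub-layer of every unit) from the sizes.
void buildIndex(const std::vector<std::size_t>& sizes,
                std::vector<std::size_t>& init_positions,
                std::vector<std::size_t>& layer_of_unit)
{
    init_positions.clear();
    layer_of_unit.clear();
    std::size_t pos = 0;
    for (std::size_t j = 0; j < sizes.size(); ++j) {
        init_positions.push_back(pos);
        for (std::size_t k = 0; k < sizes[j]; ++k)
            layer_of_unit.push_back(j);
        pos += sizes[j];
    }
}

// Locates unit i. The owning sub-layer comes from layer_of_unit, so the
// sub-layer is indexed by j, never by the unit index i.
UnitLocation locateUnit(std::size_t i, std::size_t offset,
                        const std::vector<std::size_t>& init_positions,
                        const std::vector<std::size_t>& layer_of_unit)
{
    assert(i < layer_of_unit.size());
    const std::size_t j = layer_of_unit[i];
    return { j, i - init_positions[j], offset + init_positions[j] };
}
```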

| void PLearn::RBMMixedLayer::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::RBMLayer.

Definition at line 169 of file DEPRECATED/RBMMixedLayer.cc.

References PLearn::deepCopyField(), init_positions, layer_of_unit, PLearn::RBMLayer::makeDeepCopyFromShallowCopy(), and sub_layers.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(sub_layers, copies);

deepCopyField(init_positions, copies);

deepCopyField(layer_of_unit, copies);

}

| void PLearn::RBMMixedLayer::reset | ( | ) | [virtual] |

Resets the activations, sample and expectation fields.

Reimplemented from PLearn::RBMLayer.

Definition at line 590 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::expectation_is_up_to_date, i, n_layers, and sub_layers.

{

for( int i=0 ; i<n_layers ; i++ )

sub_layers[i]->reset();

expectation_is_up_to_date = false;

}

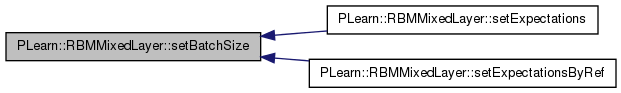

| void PLearn::RBMMixedLayer::setBatchSize | ( | int | the_batch_size | ) | [virtual] |

Sets batch_size and resizes activations, expectations, and samples.

Reimplemented from PLearn::RBMLayer.

Definition at line 89 of file RBMMixedLayer.cc.

References i, n_layers, PLearn::RBMLayer::setBatchSize(), and sub_layers.

Referenced by setExpectations(), and setExpectationsByRef().

{

inherited::setBatchSize( the_batch_size );

for( int i = 0; i < n_layers; i++ )

sub_layers[i]->setBatchSize( the_batch_size );

}

| void PLearn::RBMMixedLayer::setExpectation | ( | const Vec & | the_expectation | ) | [virtual] |

Copy the given expectation into the 'expectation' vector.

Reimplemented from PLearn::RBMLayer.

Definition at line 99 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::expectation, PLearn::RBMLayer::expectation_is_up_to_date, i, n_layers, and sub_layers.

{

expectation << the_expectation;

expectation_is_up_to_date=true;

for( int i = 0; i < n_layers; i++ )

sub_layers[i]->expectation_is_up_to_date=true;

}

| void PLearn::RBMMixedLayer::setExpectationByRef | ( | const Vec & | the_expectation | ) | [virtual] |

Make the 'expectation' vector point to the given data vector (so no copy is performed).

Reimplemented from PLearn::RBMLayer.

Definition at line 110 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::expectation, PLearn::RBMLayer::expectation_is_up_to_date, i, init_positions, n_layers, sub_layers, and PLearn::TVec< T >::subVec().

{

expectation = the_expectation;

expectation_is_up_to_date=true;

// Rearrange pointers

for( int i = 0; i < n_layers; i++ )

{

int init_pos = init_positions[i];

PP<RBMLayer> layer = sub_layers[i];

int layer_size = layer->size;

layer->setExpectationByRef( expectation.subVec(init_pos, layer_size) );

}

}
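setExpectationByRef copies nothing. Each sub-layer is re-pointed at its slice of the caller's buffer, so a later write to that buffer is immediately visible through the sub-layers. The following is a minimal sketch of that aliasing. It uses a small non-owning view as a stand-in for a PLearn Vec produced by subVec(), which shares storage with its parent. VecView, SubLayer and this free setExpectationByRef are hypothetical illustrations, not PLearn types. The sketch also omits the mixed layer's own expectation field.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal non-owning view, standing in for a Vec obtained with subVec().
struct VecView {
    double* data;
    std::size_t size;
    double& operator[](std::size_t k) const { assert(k < size); return data[k]; }
};

// Hypothetical sub-layer that only stores a view of its expectation.
struct SubLayer {
    std::size_t size;
    VecView expectation;
};

// Mirrors RBMMixedLayer::setExpectationByRef: every sub-layer is re-pointed
// at its own slice of the given buffer, so no element is copied.
void setExpectationByRef(VecView the_expectation,
                         std::vector<SubLayer>& sub_layers,
                         const std::vector<std::size_t>& init_positions)
{
    assert(init_positions.size() == sub_layers.size());
    for (std::size_t i = 0; i < sub_layers.size(); ++i) {
        assert(init_positions[i] + sub_layers[i].size <= the_expectation.size);
        sub_layers[i].expectation =
            VecView{ the_expectation.data + init_positions[i], sub_layers[i].size };
    }
}
```

The trade-off is the usual one for views: setting the expectation becomes O(number of sub-layers) instead of O(size), but the caller's buffer must outlive the layer's use of it.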

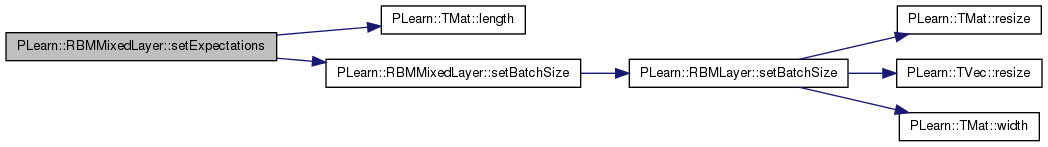

| void PLearn::RBMMixedLayer::setExpectations | ( | const Mat & | the_expectations | ) | [virtual] |

Copy the given expectations into the 'expectations' matrix.

Reimplemented from PLearn::RBMLayer.

Definition at line 130 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::batch_size, PLearn::RBMLayer::expectations, PLearn::RBMLayer::expectations_are_up_to_date, i, PLearn::TMat< T >::length(), n_layers, setBatchSize(), and sub_layers.

{

batch_size = the_expectations.length();

setBatchSize( batch_size );

expectations << the_expectations;

expectations_are_up_to_date=true;

for( int i = 0; i < n_layers; i++ )

sub_layers[i]->expectations_are_up_to_date=true;

}

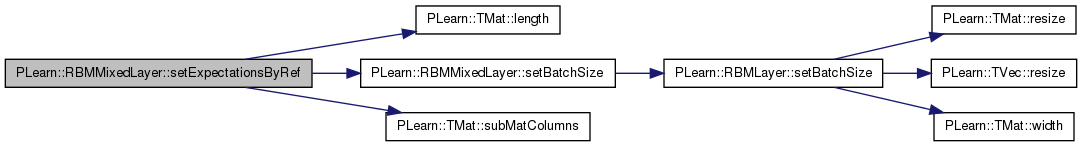

| void PLearn::RBMMixedLayer::setExpectationsByRef | ( | const Mat & | the_expectations | ) | [virtual] |

Make the 'expectations' matrix point to the given data matrix (so no copy is performed).

Reimplemented from PLearn::RBMLayer.

Definition at line 143 of file RBMMixedLayer.cc.

References PLearn::RBMLayer::batch_size, PLearn::RBMLayer::expectations, PLearn::RBMLayer::expectations_are_up_to_date, i, init_positions, PLearn::TMat< T >::length(), n_layers, setBatchSize(), sub_layers, and PLearn::TMat< T >::subMatColumns().

{

batch_size = the_expectations.length();

setBatchSize( batch_size );

expectations = the_expectations;

expectations_are_up_to_date=true;

// Rearrange pointers

for( int i = 0; i < n_layers; i++ )

{

int init_pos = init_positions[i];

PP<RBMLayer> layer = sub_layers[i];

int layer_size = layer->size;

layer->setExpectationsByRef(expectations.subMatColumns(init_pos,

layer_size));

}

}
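In the batch case, each sub-layer receives a block of columns (subMatColumns) rather than a contiguous range. The rows of such a view are not adjacent in memory, so the view must remember the parent's row stride. The following is a minimal sketch of a column-block view over a row-major buffer. MatView and this free subMatColumns are hypothetical stand-ins for PLearn's Mat and TMat::subMatColumns().

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal non-owning row-major matrix view.
struct MatView {
    double* data;
    std::size_t length;  // number of rows (batch_size)
    std::size_t width;   // number of columns in this view
    std::size_t stride;  // distance between consecutive rows in memory
    double& operator()(std::size_t r, std::size_t c) const {
        assert(r < length && c < width);
        return data[r * stride + c];
    }
};

// Columns [begin, begin + n) of m, without copying: same rows, same stride,
// shifted start pointer and reduced width.
MatView subMatColumns(const MatView& m, std::size_t begin, std::size_t n)
{
    assert(begin + n <= m.width);
    return MatView{ m.data + begin, m.length, n, m.stride };
}
```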

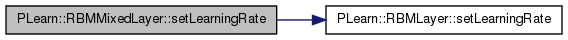

| void PLearn::RBMMixedLayer::setLearningRate | ( | real | the_learning_rate | ) | [virtual] |

Sets the learning rate, also in the sub_layers.

Reimplemented from PLearn::RBMLayer.

Definition at line 67 of file RBMMixedLayer.cc.

References i, n_layers, PLearn::RBMLayer::setLearningRate(), and sub_layers.

{

inherited::setLearningRate( the_learning_rate );

for( int i=0 ; i<n_layers ; i++ )

sub_layers[i]->setLearningRate( the_learning_rate );

}

| void PLearn::RBMMixedLayer::setMomentum | ( | real | the_momentum | ) | [virtual] |

Sets the momentum, also in the sub_layers.

Reimplemented from PLearn::RBMLayer.

Definition at line 78 of file RBMMixedLayer.cc.

References i, n_layers, PLearn::RBMLayer::setMomentum(), and sub_layers.

{

inherited::setMomentum( the_momentum );

for( int i=0 ; i<n_layers ; i++ )

sub_layers[i]->setMomentum( the_momentum );

}
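setLearningRate and setMomentum follow the same composite pattern. Each sets the value on the mixed layer through the inherited method, then forwards it to every sub-layer, so nested mixed layers are also reached. The following is a minimal sketch of that pattern with a hypothetical two-class hierarchy. Layer and MixedLayer are not the PLearn classes.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Hypothetical minimal layer interface (not the PLearn class hierarchy).
struct Layer {
    double learning_rate = 0.0;
    double momentum = 0.0;
    virtual ~Layer() = default;
    virtual void setLearningRate(double lr) { learning_rate = lr; }
    virtual void setMomentum(double m) { momentum = m; }
};

// Composite: sets its own value, then forwards it to every sub-layer.
struct MixedLayer : Layer {
    std::vector<std::shared_ptr<Layer>> sub_layers;
    void setLearningRate(double lr) override {
        Layer::setLearningRate(lr);                 // inherited::setLearningRate
        for (auto& layer : sub_layers) layer->setLearningRate(lr);
    }
    void setMomentum(double m) override {
        Layer::setMomentum(m);                      // inherited::setMomentum
        for (auto& layer : sub_layers) layer->setMomentum(m);
    }
};
```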

Update parameters according to one pair of vectors.

Reimplemented from PLearn::RBMLayer.

Definition at line 564 of file RBMMixedLayer.cc.

References i, init_positions, n_layers, PLearn::TVec< T >::size(), sub_layers, and PLearn::TVec< T >::subVec().

{

for( int i=0 ; i<n_layers ; i++ )

{

int begin = init_positions[i];

int size_i = sub_layers[i]->size;

Vec sub_pos_values = pos_values.subVec( begin, size_i );

Vec sub_neg_values = neg_values.subVec( begin, size_i );

sub_layers[i]->update( sub_pos_values, sub_neg_values );

}

}
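update(pos_values, neg_values) hands each sub-layer the slice of both vectors that starts at its init_position. The following is a minimal sketch of that dispatch. It assumes, for illustration only, that a sub-layer's update is the plain contrastive-divergence bias step bias += learning_rate * (pos - neg) with no momentum. The actual sub-layer update rules are not shown in this section. BiasLayer and mixedUpdate are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sub-layer holding only a bias vector. Assumption: its update
// is bias += learning_rate * (pos - neg), with no momentum.
struct BiasLayer {
    std::vector<double> bias;
    double learning_rate;
    void update(const double* pos, const double* neg) {
        for (std::size_t k = 0; k < bias.size(); ++k)
            bias[k] += learning_rate * (pos[k] - neg[k]);
    }
};

// Mirrors RBMMixedLayer::update(pos_values, neg_values): each sub-layer
// receives the slices of both vectors that start at its init_position.
void mixedUpdate(const std::vector<double>& pos_values,
                 const std::vector<double>& neg_values,
                 std::vector<BiasLayer>& sub_layers,
                 const std::vector<std::size_t>& init_positions)
{
    assert(pos_values.size() == neg_values.size());
    assert(init_positions.size() == sub_layers.size());
    for (std::size_t i = 0; i < sub_layers.size(); ++i) {
        const std::size_t begin = init_positions[i];
        assert(begin + sub_layers[i].bias.size() <= pos_values.size());
        sub_layers[i].update(pos_values.data() + begin, neg_values.data() + begin);
    }
}
```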

Update parameters according to several pairs of vectors.

Reimplemented from PLearn::RBMLayer.

Definition at line 577 of file RBMMixedLayer.cc.

References i, init_positions, n_layers, PLearn::TVec< T >::size(), sub_layers, and PLearn::TMat< T >::subMatColumns().

{

for( int i=0 ; i<n_layers ; i++ )

{

int begin = init_positions[i];

int size_i = sub_layers[i]->size;

Mat sub_pos_values = pos_values.subMatColumns( begin, size_i );

Mat sub_neg_values = neg_values.subMatColumns( begin, size_i );

sub_layers[i]->update( sub_pos_values, sub_neg_values );

}

}

| void PLearn::RBMMixedLayer::update | ( | ) | [virtual] |

Update parameters according to accumulated statistics.

Reimplemented from PLearn::RBMLayer.

Definition at line 556 of file RBMMixedLayer.cc.

References clearStats(), i, n_layers, and sub_layers.

{

for( int i=0 ; i<n_layers ; i++ )

sub_layers[i]->update();

clearStats();

}

static StaticInitializer PLearn::RBMMixedLayer::_static_initializer_ [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 99 of file DEPRECATED/RBMMixedLayer.h.

TVec< int > PLearn::RBMMixedLayer::init_positions [protected] |

Initial index of the sub_layers.

Definition at line 110 of file DEPRECATED/RBMMixedLayer.h.

Referenced by accumulateNegStats(), accumulatePosStats(), bpropNLL(), bpropUpdate(), build_(), declareOptions(), energy(), fprop(), fpropNLL(), freeEnergyContribution(), freeEnergyContributionGradient(), getAllActivations(), getConfiguration(), getUnitActivations(), makeDeepCopyFromShallowCopy(), setExpectationByRef(), setExpectationsByRef(), and update().

TVec< int > PLearn::RBMMixedLayer::layer_of_unit [protected] |

layer_of_unit[i] is the index of the sub-layer containing unit i.

Definition at line 113 of file DEPRECATED/RBMMixedLayer.h.

Referenced by build_(), declareOptions(), getUnitActivation(), getUnitActivations(), and makeDeepCopyFromShallowCopy().

Mat PLearn::RBMMixedLayer::mat_nlls [mutable, private] |

Definition at line 249 of file RBMMixedLayer.h.

Referenced by bpropNLL(), and fpropNLL().

int PLearn::RBMMixedLayer::n_layers [protected] |

Number of sub-layers.

Definition at line 116 of file DEPRECATED/RBMMixedLayer.h.

Referenced by accumulateNegStats(), accumulatePosStats(), bpropNLL(), bpropUpdate(), build_(), clearStats(), computeExpectation(), computeExpectations(), declareOptions(), energy(), expectation_is_not_up_to_date(), forget(), fprop(), fpropNLL(), freeEnergyContribution(), freeEnergyContributionGradient(), generateSample(), generateSamples(), getAllActivations(), getConfiguration(), getConfigurationCount(), reset(), setBatchSize(), setExpectation(), setExpectationByRef(), setExpectations(), setExpectationsByRef(), setLearningRate(), setMomentum(), and update().

Vec PLearn::RBMMixedLayer::nlls [mutable, private] |

Definition at line 248 of file RBMMixedLayer.h.

Referenced by bpropNLL(), and fpropNLL().

The concatenated RBMLayers composing this layer.

Definition at line 62 of file DEPRECATED/RBMMixedLayer.h.

Referenced by accumulateNegStats(), accumulatePosStats(), bpropNLL(), bpropUpdate(), build_(), clearStats(), computeExpectation(), computeExpectations(), declareOptions(), energy(), expectation_is_not_up_to_date(), forget(), fprop(), fpropNLL(), freeEnergyContribution(), freeEnergyContributionGradient(), generateSample(), generateSamples(), getAllActivations(), getConfiguration(), getConfigurationCount(), getUnitActivation(), getUnitActivations(), makeDeepCopyFromShallowCopy(), reset(), setBatchSize(), setExpectation(), setExpectationByRef(), setExpectations(), setExpectationsByRef(), setLearningRate(), setMomentum(), and update().

1.7.4
