PLearn 0.1

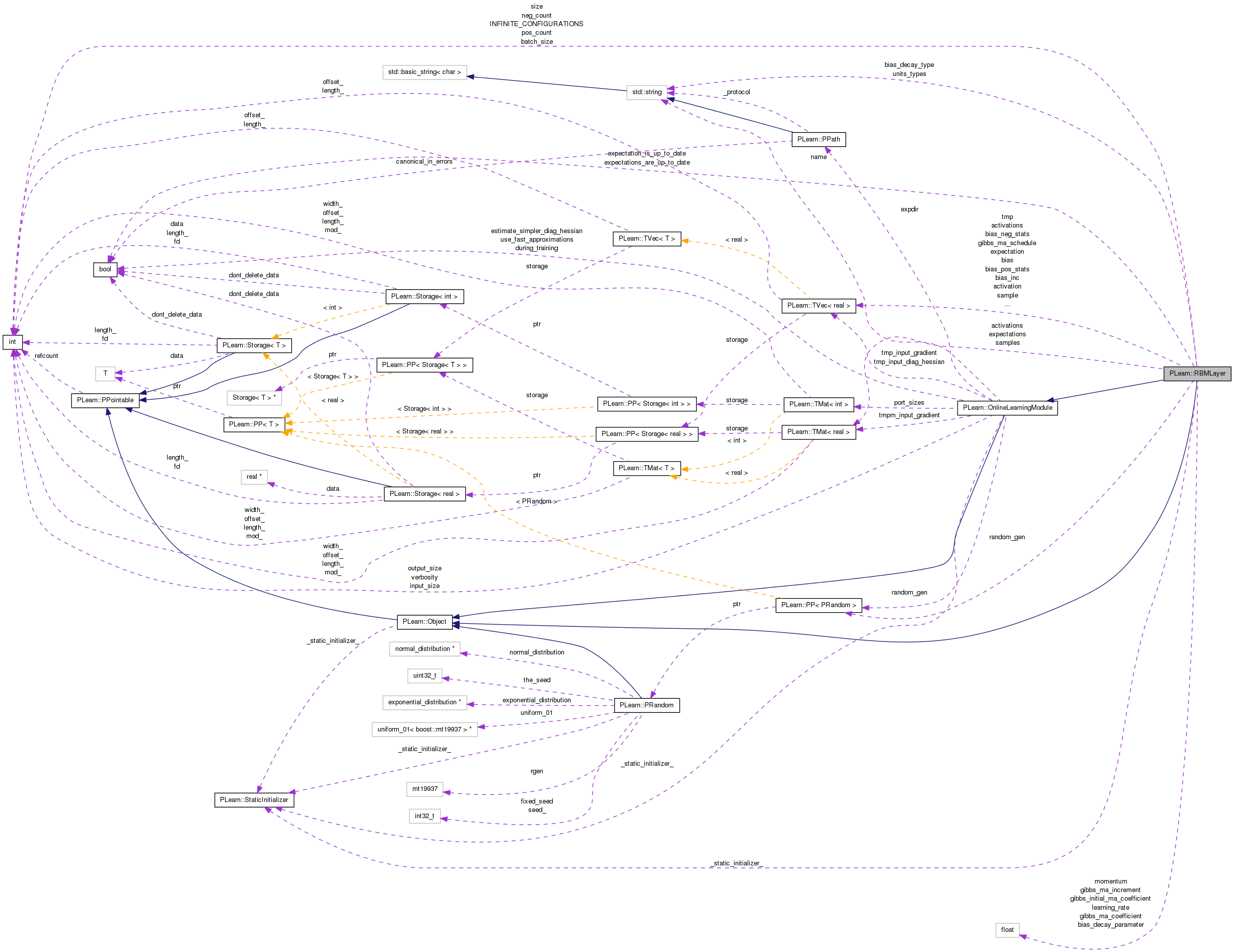

Virtual class for a layer in an RBM.

#include <RBMLayer.h>

Public Member Functions | |

| RBMLayer () | |

| Default constructor. | |

| virtual void | getUnitActivations (int i, PP< RBMParameters > rbmp, int offset=0)=0 |

| Uses "rbmp" to obtain the activations of unit "i" of this layer. | |

| virtual void | getAllActivations (PP< RBMParameters > rbmp, int offset=0)=0 |

| Uses "rbmp" to obtain the activations of all units in this layer. | |

| virtual void | generateSample ()=0 |

| generate a sample, and update the sample field | |

| virtual void | computeExpectation ()=0 |

| compute the expectation | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient)=0 |

| back-propagates the output gradient to the input | |

| virtual void | reset () |

| resets activations, sample and expectation fields | |

| string | getUnitsTypes () |

| return units_types | |

| virtual RBMLayer * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

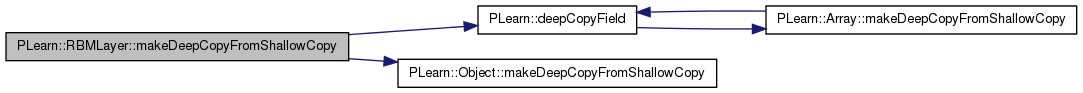

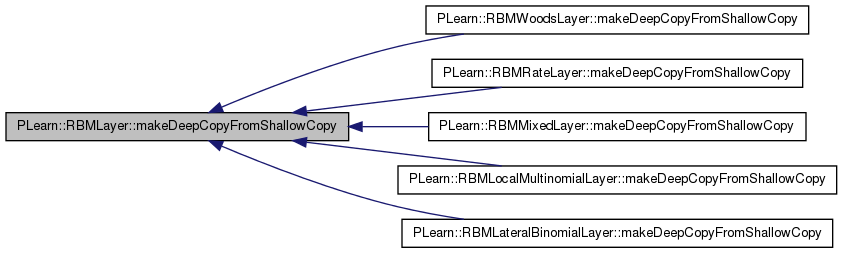

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| RBMLayer (real the_learning_rate=0.) | |

| Default constructor. | |

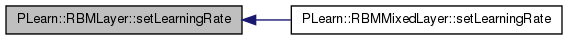

| virtual void | setLearningRate (real the_learning_rate) |

| Sets the learning rate. | |

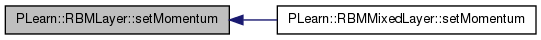

| virtual void | setMomentum (real the_momentum) |

| Sets the momentum. | |

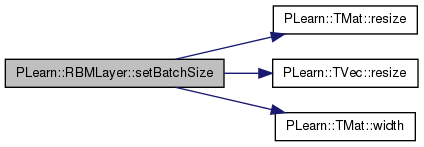

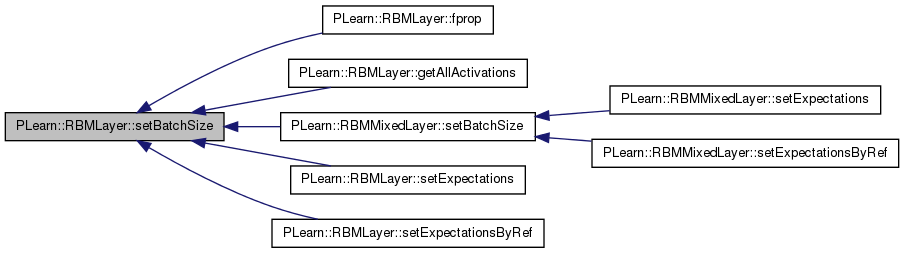

| virtual void | setBatchSize (int the_batch_size) |

| Sets batch_size and resizes activations, expectations, and samples. | |

| virtual void | setExpectation (const Vec &the_expectation) |

| Copy the given expectation in the 'expectation' vector. | |

| virtual void | setExpectationByRef (const Vec &the_expectation) |

| Make the 'expectation' vector point to the given data vector (so no copy is performed). | |

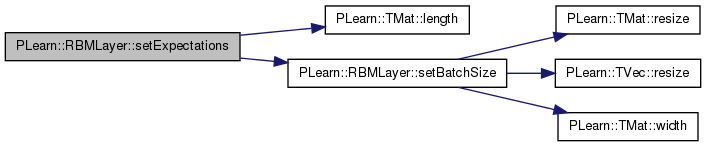

| virtual void | setExpectations (const Mat &the_expectations) |

| Copy the given expectations in the 'expectations' matrix. | |

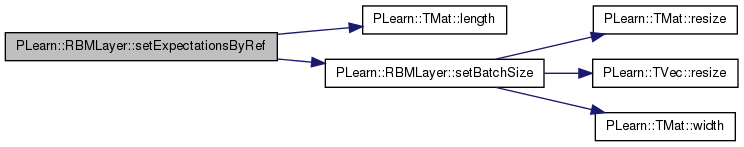

| virtual void | setExpectationsByRef (const Mat &the_expectations) |

| Make the 'expectations' matrix point to the given data matrix (so no copy is performed). | |

| const Mat & | getExpectations () |

| Accessor to the 'expectations' matrix. | |

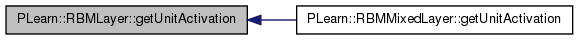

| virtual void | getUnitActivation (int i, PP< RBMConnection > rbmc, int offset=0) |

| Uses "rbmc" to compute the activation of unit "i" of this layer. | |

| virtual void | getAllActivations (PP< RBMConnection > rbmc, int offset=0, bool minibatch=false) |

| Uses "rbmc" to obtain the activations of all units in this layer. | |

| virtual void | expectation_is_not_up_to_date () |

| virtual void | generateSample ()=0 |

| generate a sample, and update the sample field | |

| virtual void | generateSamples ()=0 |

| Generate a mini-batch set of samples. | |

| virtual void | computeExpectation ()=0 |

| Compute expectation. | |

| virtual void | computeExpectations ()=0 |

| Compute expectations (mini-batch). | |

| virtual void | fprop (const Vec &input, Vec &output) const |

| Adds the bias to the input, treats the result as the activation, then computes the expectation. | |

| virtual void | fprop (const Mat &inputs, Mat &outputs) |

| Mini-batch fprop. | |

| virtual void | fprop (const Vec &input, const Vec &rbm_bias, Vec &output) const |

| computes the expectation given the conditional input and the given bias | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false)=0 |

| back-propagates the output gradient to the input, and update the bias (and possibly the quadratic term) | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false)=0 |

| Back-propagate the output gradient to the input, and update parameters. | |

| virtual void | bpropUpdate (const Vec &input, const Vec &rbm_bias, const Vec &output, Vec &input_gradient, Vec &rbm_bias_gradient, const Vec &output_gradient) |

| back-propagates the output gradient to the input and the bias | |

| virtual real | fpropNLL (const Vec &target) |

| Computes the negative log-likelihood of target given the internal activations of the layer. | |

| virtual void | fpropNLL (const Mat &targets, const Mat &costs_column) |

| virtual real | fpropNLL (const Vec &target, const Vec &cost_weights) |

| virtual void | bpropNLL (const Vec &target, real nll, Vec &bias_gradient) |

| Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations. | |

| virtual void | bpropNLL (const Mat &targets, const Mat &costs_column, Mat &bias_gradients) |

| virtual void | accumulatePosStats (const Vec &pos_values) |

| Accumulates positive phase statistics. | |

| virtual void | accumulatePosStats (const Mat &pos_values) |

| virtual void | accumulateNegStats (const Vec &neg_values) |

| Accumulates negative phase statistics. | |

| virtual void | accumulateNegStats (const Mat &neg_values) |

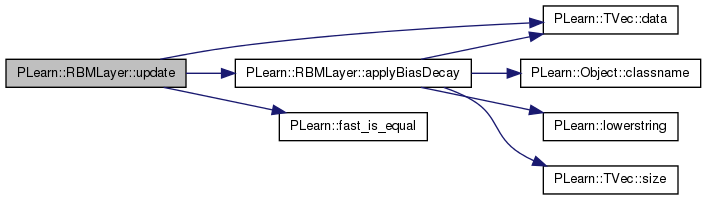

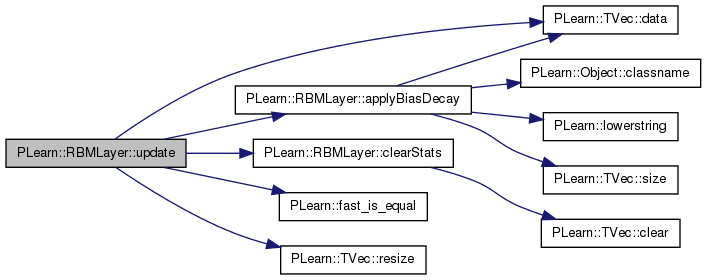

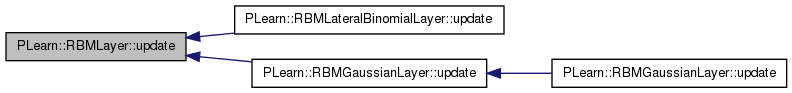

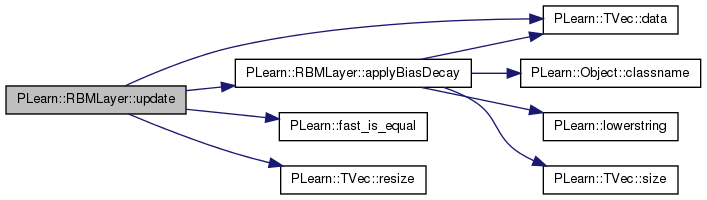

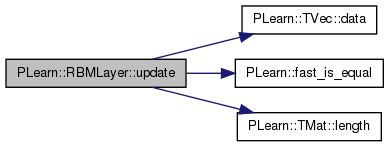

| virtual void | update () |

| Update parameters according to accumulated statistics. | |

| virtual void | update (const Vec &grad) |

| Updates parameters according to the given gradient. | |

| virtual void | update (const Mat &grad) |

| virtual void | update (const Vec &pos_values, const Vec &neg_values) |

| Update parameters according to one pair of vectors. | |

| virtual void | update (const Mat &pos_values, const Mat &neg_values) |

| Update parameters according to one pair of matrices. | |

| virtual void | updateCDandGibbs (const Mat &pos_values, const Mat &cd_neg_values, const Mat &gibbs_neg_values, real background_gibbs_update_ratio) |

| virtual void | updateGibbs (const Mat &pos_values, const Mat &gibbs_neg_values) |

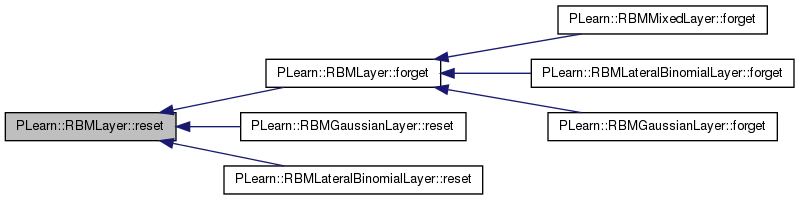

| virtual void | reset () |

| resets activations, sample and expectation fields | |

| virtual void | clearStats () |

| resets the statistics and counts | |

| virtual void | forget () |

| forgets everything | |

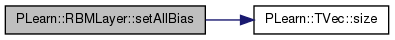

| virtual void | setAllBias (const Vec &rbm_bias) |

| Set the internal bias values to rbm_bias. | |

| virtual void | bpropCD (Vec &bias_gradient) |

| Computes the contrastive divergence gradient with respect to the bias (or activations, which is equivalent). | |

| virtual void | bpropCD (const Vec &pos_values, const Vec &neg_values, Vec &bias_gradient) |

| Computes the contrastive divergence gradient with respect to the bias (or activations, which is equivalent), given the positive and negative phase values. | |

| virtual real | energy (const Vec &unit_values) const |

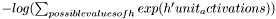

| virtual real | freeEnergyContribution (const Vec &unit_activations) const |

| Computes the contribution of this layer to the free energy. This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed. | |

| virtual void | freeEnergyContributionGradient (const Vec &unit_activations, Vec &unit_activations_gradient, real output_gradient=1, bool accumulate=false) const |

| Computes the gradient of the result of freeEnergyContribution with respect to unit_activations. | |

| virtual int | getConfigurationCount () |

| Returns the number of different configurations the layer can be in. | |

| virtual void | getConfiguration (int conf_index, Vec &output) |

| Computes the conf_index configuration of the layer. | |

| virtual void | applyBiasDecay () |

| Applies the bias decay. | |

| virtual void | addBiasDecay (Vec &bias_gradient) |

| Adds the bias decay to the bias gradients. | |

| virtual void | addBiasDecay (Mat &bias_gradients) |

| Adds the bias decay to the bias gradients. | |

| virtual RBMLayer * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| int | size |

| Number of units. | |

| string | units_types |

| Each character of this string describes the type of an up unit: | |

| PP< PRandom > | random_gen |

| Random number generator. | |

| Vec | activations |

| Values that determine the distribution: the activation value (before the sigmoid) for a binomial input, or the pair (mu, sigma) for a Gaussian unit. | |

| Vec | sample |

| Contains a sample of the random variable in this layer: | |

| Vec | expectation |

| Contains the expected value of the random variable in this layer: | |

| bool | expectation_is_up_to_date |

| Flags whether the expectation was computed from the most recently computed activation values. | |

| real | learning_rate |

| Learning rate. | |

| real | momentum |

| Momentum. | |

| string | bias_decay_type |

| Type of decay applied to the biases. | |

| real | bias_decay_parameter |

| Bias decay parameter. | |

| Vec | gibbs_ma_schedule |

| Background Gibbs chain options. Each element of this vector is a number of updates after which the moving average coefficient is incremented (by incrementing its inverse sigmoid by gibbs_ma_increment). | |

| real | gibbs_ma_increment |

| real | gibbs_initial_ma_coefficient |

| Vec | bias |

| real | gibbs_ma_coefficient |

| used for Gibbs chain methods only | |

| int | batch_size |

| Size of batches when using mini-batch. | |

| Vec | activation |

activation value:  | |

| Mat | activations |

| Mat | samples |

| bool | expectations_are_up_to_date |

| Indicate whether expectations were computed based on most recently computed values of activations. | |

| Vec | bias_pos_stats |

| Accumulates positive contribution to the gradient of bias. | |

| Vec | bias_neg_stats |

| Accumulates negative contribution to the gradient of bias. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

| static const int | INFINITE_CONFIGURATIONS = 0x7fffffff |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

| static void | declareMethods (RemoteMethodMap &rmm) |

| Declares the class Methods. | |

Protected Attributes | |

| Vec | bias_inc |

| Stores the momentum of the gradient. | |

| Vec | ones |

| A vector containing only ones, used to compute mini-batch updates efficiently. | |

| int | pos_count |

| Count of positive examples. | |

| int | neg_count |

| Count of negative examples. | |

| Mat | expectations |

| Expectations for mini-batch operations. | |

| Vec | tmp |

Private Types | |

| typedef Object | inherited |

| typedef OnlineLearningModule | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

| void | build_ () |

| This does the actual building. | |

Virtual class for a layer in an RBM.

Definition at line 57 of file DEPRECATED/RBMLayer.h.

typedef Object PLearn::RBMLayer::inherited [private] |

Reimplemented from PLearn::Object.

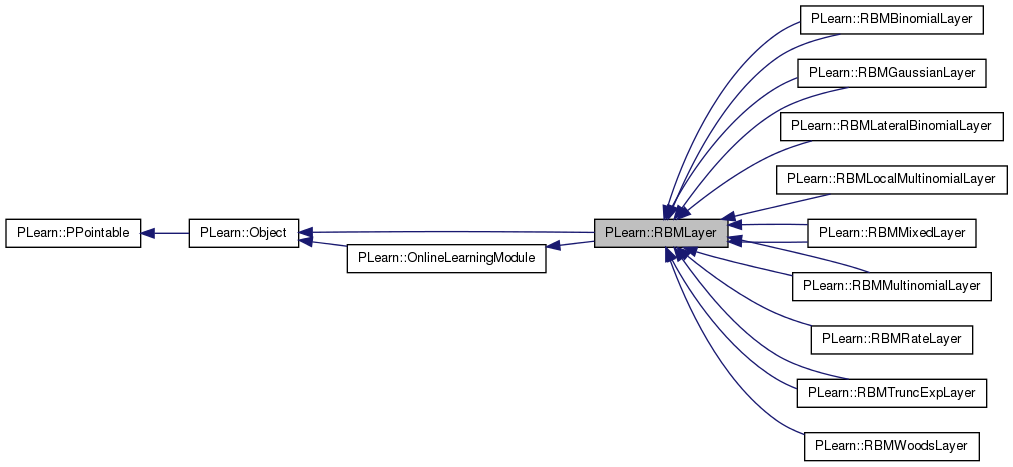

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 59 of file DEPRECATED/RBMLayer.h.

typedef OnlineLearningModule PLearn::RBMLayer::inherited [private] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 58 of file RBMLayer.h.

| PLearn::RBMLayer::RBMLayer | ( | ) |

Default constructor.

Definition at line 52 of file DEPRECATED/RBMLayer.cc.

:

size(-1),

expectation_is_up_to_date(false)

{

}

| PLearn::RBMLayer::RBMLayer | ( | real | the_learning_rate = 0. | ) |

Default constructor.

Definition at line 54 of file RBMLayer.cc.

:

learning_rate(the_learning_rate),

momentum(0.),

size(-1),

bias_decay_type("none"),

bias_decay_parameter(0),

gibbs_ma_increment(0.1),

gibbs_initial_ma_coefficient(0.1),

batch_size(0),

expectation_is_up_to_date(false),

expectations_are_up_to_date(false),

pos_count(0),

neg_count(0)

{

}

| string PLearn::RBMLayer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 50 of file DEPRECATED/RBMLayer.cc.

| static string PLearn::RBMLayer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

| OptionList & PLearn::RBMLayer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 50 of file DEPRECATED/RBMLayer.cc.

| static OptionList& PLearn::RBMLayer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

| static RemoteMethodMap& PLearn::RBMLayer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

| RemoteMethodMap & PLearn::RBMLayer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 50 of file DEPRECATED/RBMLayer.cc.

| static bool PLearn::RBMLayer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

| bool PLearn::RBMLayer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 50 of file DEPRECATED/RBMLayer.cc.

| static void PLearn::RBMLayer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

| void PLearn::RBMLayer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 50 of file DEPRECATED/RBMLayer.cc.

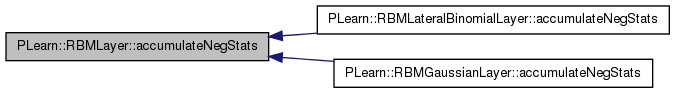

| void PLearn::RBMLayer::accumulateNegStats | ( | const Vec & | neg_values | ) | [virtual] |

Accumulates negative phase statistics.

Reimplemented in PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, and PLearn::RBMMixedLayer.

Definition at line 439 of file RBMLayer.cc.

References bias_neg_stats, and neg_count.

Referenced by PLearn::RBMLateralBinomialLayer::accumulateNegStats(), and PLearn::RBMGaussianLayer::accumulateNegStats().

{

bias_neg_stats += neg_values;

neg_count++;

}

| void PLearn::RBMLayer::accumulateNegStats | ( | const Mat & | neg_values | ) | [virtual] |

Reimplemented in PLearn::RBMLateralBinomialLayer.

Definition at line 444 of file RBMLayer.cc.

References bias_neg_stats, i, PLearn::TMat< T >::length(), and neg_count.

{

for (int i=0;i<neg_values.length();i++)

bias_neg_stats += neg_values(i);

neg_count+=neg_values.length();

}

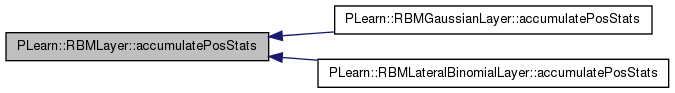

| void PLearn::RBMLayer::accumulatePosStats | ( | const Vec & | pos_values | ) | [virtual] |

Accumulates positive phase statistics.

Reimplemented in PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, and PLearn::RBMMixedLayer.

Definition at line 424 of file RBMLayer.cc.

References bias_pos_stats, and pos_count.

Referenced by PLearn::RBMGaussianLayer::accumulatePosStats(), and PLearn::RBMLateralBinomialLayer::accumulatePosStats().

{

bias_pos_stats += pos_values;

pos_count++;

}

| void PLearn::RBMLayer::accumulatePosStats | ( | const Mat & | pos_values | ) | [virtual] |

Reimplemented in PLearn::RBMLateralBinomialLayer.

Definition at line 429 of file RBMLayer.cc.

References bias_pos_stats, i, PLearn::TMat< T >::length(), and pos_count.

{

for (int i=0;i<pos_values.length();i++)

bias_pos_stats += pos_values(i);

pos_count+=pos_values.length();

}
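The two overloads above differ only in how many examples they add at once: the `Mat` version adds each row to the running sum and advances the count by the number of rows. A minimal sketch of that bookkeeping, assuming `std::vector` in place of PLearn's `Vec` and `Mat`; the `PosStats` struct and its `accumulate` method are hypothetical names used for illustration only:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the layer's positive-phase statistics.
struct PosStats {
    std::vector<double> bias_pos_stats; // running sum of positive-phase values
    int pos_count = 0;                  // number of examples accumulated so far

    explicit PosStats(std::size_t size) : bias_pos_stats(size, 0.0) {}

    // Single-example version: add the vector and count one example.
    void accumulate(const std::vector<double>& pos_values) {
        assert(pos_values.size() == bias_pos_stats.size());
        for (std::size_t i = 0; i < pos_values.size(); ++i)
            bias_pos_stats[i] += pos_values[i];
        ++pos_count;
    }

    // Mini-batch version: one row per example, so the count grows by the
    // number of rows.
    void accumulate(const std::vector<std::vector<double>>& pos_values) {
        for (const auto& row : pos_values)
            accumulate(row);
    }
};
```

Accumulating a batch of n rows leaves the sketch in the same state as n single-example calls, which is the property the averaged gradient in bpropCD() relies on.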

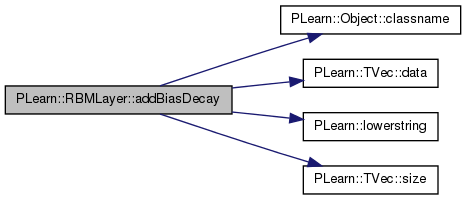

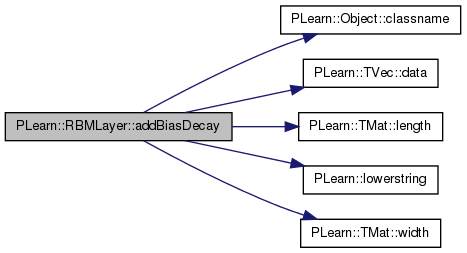

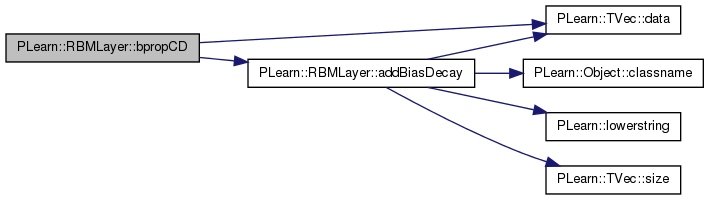

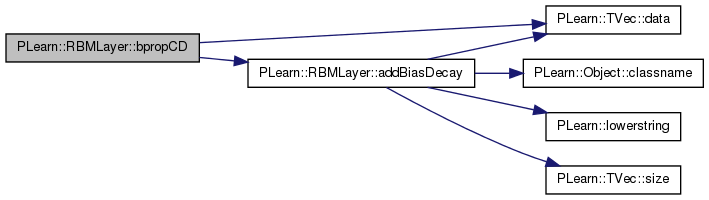

| void PLearn::RBMLayer::addBiasDecay | ( | Vec & | bias_gradient | ) | [virtual] |

Adds the bias decay to the bias gradients.

Definition at line 818 of file RBMLayer.cc.

References b, bias, bias_decay_parameter, bias_decay_type, PLearn::Object::classname(), PLearn::TVec< T >::data(), i, learning_rate, PLearn::lowerstring(), PLASSERT, PLERROR, PLearn::TVec< T >::size(), and size.

Referenced by bpropCD(), and PLearn::RBMGaussianLayer::bpropNLL().

{

PLASSERT(bias_gradient.size()==size);

real *bg = bias_gradient.data();

real *b = bias.data();

bias_decay_type = lowerstring(bias_decay_type);

if (bias_decay_type=="none")

{}

else if (bias_decay_type=="negative") // Pushes the biases towards -\infty

for( int i=0 ; i<size ; i++ )

bg[i] += learning_rate * bias_decay_parameter;

else if (bias_decay_type=="l2") // L2 penalty on the biases

for (int i=0 ; i<size ; i++ )

bg[i] += learning_rate * bias_decay_parameter * b[i];

else

PLERROR("RBMLayer::addBiasDecay(string) bias_decay_type %s is not in"

" the list, in subclass %s\n",bias_decay_type.c_str(),classname().c_str());

}
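The three branches of the listing above can be sketched outside PLearn as follows. This is an illustration under stated assumptions, not the library's code: `std::vector<double>` replaces `Vec`, an exception replaces `PLERROR`, the decay type is assumed to be lowercase already, and the function name `add_bias_decay` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Adds the decay term to an existing bias gradient.
//   "none"     : gradient is left untouched
//   "negative" : constant term learning_rate * decay_parameter
//   "l2"       : term proportional to the bias itself
void add_bias_decay(std::vector<double>& bias_gradient,
                    const std::vector<double>& bias,
                    const std::string& decay_type,
                    double learning_rate,
                    double decay_parameter)
{
    assert(bias_gradient.size() == bias.size());
    if (decay_type == "none")
        return;
    const bool l2 = (decay_type == "l2");
    if (!l2 && decay_type != "negative")
        throw std::invalid_argument("unknown bias decay type: " + decay_type);
    for (std::size_t i = 0; i < bias.size(); ++i)
        bias_gradient[i] += learning_rate * decay_parameter * (l2 ? bias[i] : 1.0);
}
```

With a learning rate of 0.5, a decay parameter of 0.5 and a bias of 2.0, the `"l2"` branch adds 0.5 to the gradient, while the `"negative"` branch adds 0.25 regardless of the bias value.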

| void PLearn::RBMLayer::addBiasDecay | ( | Mat & | bias_gradients | ) | [virtual] |

Adds the bias decay to the bias gradients.

Definition at line 840 of file RBMLayer.cc.

References b, bias, bias_decay_parameter, bias_decay_type, PLearn::Object::classname(), PLearn::TVec< T >::data(), i, learning_rate, PLearn::TMat< T >::length(), PLearn::lowerstring(), PLASSERT, PLERROR, size, and PLearn::TMat< T >::width().

{

PLASSERT(bias_gradients.width()==size);

if (bias_decay_type=="none")

return;

real avg_lr = learning_rate / bias_gradients.length();

for(int k=0; k<bias_gradients.length(); k++)

{

real *bg = bias_gradients[k];

real *b = bias.data();

bias_decay_type = lowerstring(bias_decay_type);

if (bias_decay_type=="negative") // Pushes the biases towards -\infty

for( int i=0 ; i<size ; i++ )

bg[i] += avg_lr * bias_decay_parameter;

else if (bias_decay_type=="l2") // L2 penalty on the biases

for (int i=0 ; i<size ; i++ )

bg[i] += avg_lr * bias_decay_parameter * b[i];

else

PLERROR("RBMLayer::addBiasDecay(string) bias_decay_type %s is not in"

" the list, in subclass %s\n",bias_decay_type.c_str(),classname().c_str());

}

}
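The mini-batch version divides the learning rate by the number of rows, so the decay added across the whole batch sums to the amount the single-vector version adds once. A minimal sketch of the `"negative"` branch only, assuming a vector of rows in place of `Mat`; the function name `add_negative_decay_batch` is hypothetical:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// "negative" decay on a mini-batch of bias gradients: every entry of every
// row receives (learning_rate / number_of_rows) * decay_parameter.
void add_negative_decay_batch(std::vector<std::vector<double>>& bias_gradients,
                              double learning_rate,
                              double decay_parameter)
{
    if (bias_gradients.empty())
        return;
    const double avg_lr =
        learning_rate / static_cast<double>(bias_gradients.size());
    for (auto& row : bias_gradients) {
        // All rows are expected to have the layer's width.
        assert(row.size() == bias_gradients.front().size());
        for (double& g : row)
            g += avg_lr * decay_parameter;
    }
}
```

For a batch of 4 rows, a learning rate of 1.0 and a decay parameter of 0.5, each entry grows by 0.125, and the four rows together contribute 0.5 per unit.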

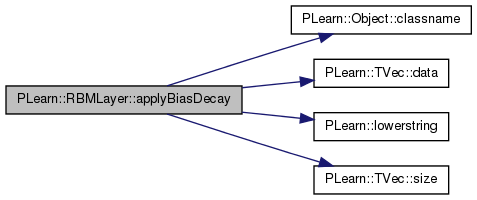

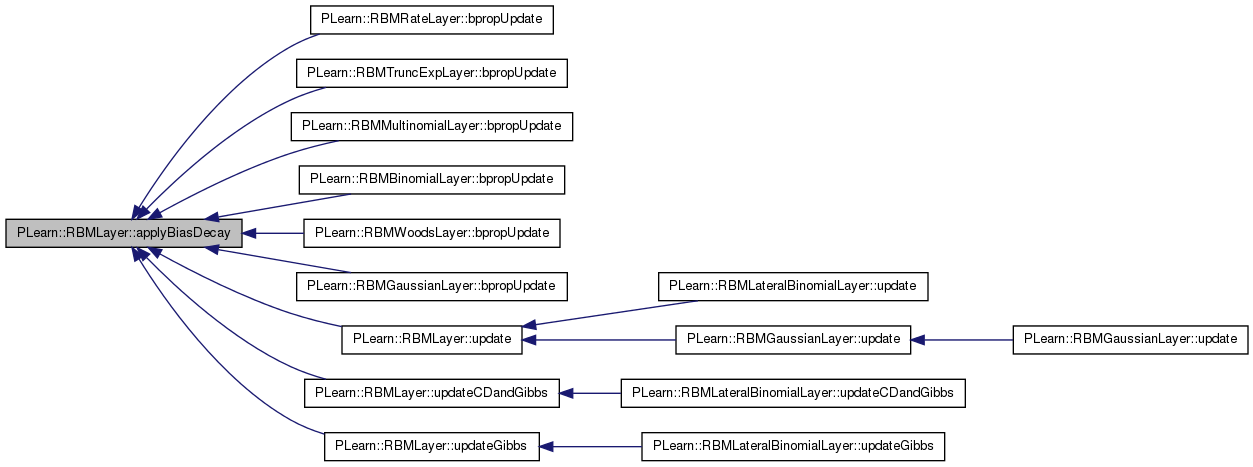

| void PLearn::RBMLayer::applyBiasDecay | ( | ) | [virtual] |

Applies the bias decay.

Definition at line 866 of file RBMLayer.cc.

References b, bias, bias_decay_parameter, bias_decay_type, PLearn::Object::classname(), PLearn::TVec< T >::data(), i, learning_rate, PLearn::lowerstring(), PLASSERT, PLERROR, PLearn::TVec< T >::size(), and size.

Referenced by PLearn::RBMRateLayer::bpropUpdate(), PLearn::RBMTruncExpLayer::bpropUpdate(), PLearn::RBMMultinomialLayer::bpropUpdate(), PLearn::RBMBinomialLayer::bpropUpdate(), PLearn::RBMWoodsLayer::bpropUpdate(), PLearn::RBMGaussianLayer::bpropUpdate(), update(), updateCDandGibbs(), and updateGibbs().

{

PLASSERT(bias.size()==size);

real* b = bias.data();

bias_decay_type = lowerstring(bias_decay_type);

if (bias_decay_type=="none")

{}

else if (bias_decay_type=="negative") // Pushes the biases towards -\infty

for( int i=0 ; i<size ; i++ )

b[i] -= learning_rate * bias_decay_parameter;

else if (bias_decay_type=="l2") // L2 penalty on the biases

bias *= (1 - learning_rate * bias_decay_parameter);

else

PLERROR("RBMLayer::applyBiasDecay(string) bias_decay_type %s is not in"

" the list, in subclass %s\n",bias_decay_type.c_str(),classname().c_str());

}
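The `"l2"` branch above multiplies the whole bias vector by `(1 - learning_rate * bias_decay_parameter)`, so repeated calls shrink the biases geometrically. A small worked sketch, assuming `std::vector<double>` in place of `Vec`; the helper `apply_l2_decay` and its `steps` parameter are hypothetical and only serve to show the repeated effect:

```cpp
#include <cassert>
#include <vector>

// Applies the "l2" decay `steps` times. Each application scales every bias
// by (1 - learning_rate * decay_parameter).
void apply_l2_decay(std::vector<double>& bias,
                    double learning_rate,
                    double decay_parameter,
                    int steps)
{
    assert(steps >= 0);
    const double factor = 1.0 - learning_rate * decay_parameter;
    for (int s = 0; s < steps; ++s)
        for (double& b : bias)
            b *= factor;
}
```

With a learning rate of 0.5 and a decay parameter of 1.0 the factor is 0.5, so a bias of 8.0 becomes 1.0 after three applications.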

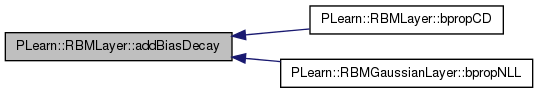

| void PLearn::RBMLayer::bpropCD | ( | Vec & | bias_gradient | ) | [virtual] |

Computes the contrastive divergence gradient with respect to the bias (or activations, which is equivalent).

Note that bpropCD does not call clearStats().

Definition at line 756 of file RBMLayer.cc.

References addBiasDecay(), bias_neg_stats, bias_pos_stats, PLearn::TVec< T >::data(), i, neg_count, pos_count, and size.

{

// grad = -bias_pos_stats/pos_count + bias_neg_stats/neg_count

real* bg = bias_gradient.data();

real* bps = bias_pos_stats.data();

real* bns = bias_neg_stats.data();

for( int i=0 ; i<size ; i++ )

bg[i] = -bps[i]/pos_count + bns[i]/neg_count;

addBiasDecay(bias_gradient);

}

| void PLearn::RBMLayer::bpropCD | ( | const Vec & | pos_values, |

| const Vec & | neg_values, | ||

| Vec & | bias_gradient | ||

| ) | [virtual] |

Computes the contrastive divergence gradient with respect to the bias (or activations, which is equivalent), given the positive and negative phase values.

Definition at line 771 of file RBMLayer.cc.

References addBiasDecay(), PLearn::TVec< T >::data(), i, and size.

{

// grad = -pos_values + neg_values

real* bg = bias_gradient.data();

real* bps = pos_values.data();

real* bns = neg_values.data();

for( int i=0 ; i<size ; i++ )

bg[i] = -bps[i] + bns[i];

addBiasDecay(bias_gradient);

}
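Both bpropCD() overloads compute the same quantity: the negative-phase average minus the positive-phase average, with the second overload acting as the case where each average comes from a single example. A minimal sketch of the statistics-based form, assuming `std::vector<double>` in place of `Vec` and leaving out the addBiasDecay() call; the function name `cd_bias_gradient` is hypothetical:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// grad[i] = -pos_stats[i] / pos_count + neg_stats[i] / neg_count
std::vector<double> cd_bias_gradient(const std::vector<double>& pos_stats,
                                     int pos_count,
                                     const std::vector<double>& neg_stats,
                                     int neg_count)
{
    assert(pos_stats.size() == neg_stats.size());
    // The listing above divides without checking; this sketch makes the
    // assumption of non-empty statistics explicit.
    assert(pos_count > 0 && neg_count > 0);
    std::vector<double> grad(pos_stats.size());
    for (std::size_t i = 0; i < grad.size(); ++i)
        grad[i] = -pos_stats[i] / pos_count + neg_stats[i] / neg_count;
    return grad;
}
```

For example, positive statistics `{4, 2}` over 2 examples and negative statistics `{1, 3}` over 4 examples give the gradient `{-1.75, -0.25}`. Passing counts of 1 reproduces the second overload's `-pos_values + neg_values`.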

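| void PLearn::RBMLayer::bpropNLL | ( | const Vec & | target, |

| real | nll, | ||

| Vec & | bias_gradient | ||

| ) | [virtual] |

Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations.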

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, and PLearn::RBMWoodsLayer.

Definition at line 408 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

| void PLearn::RBMLayer::bpropNLL | ( | const Mat & | targets, |

| const Mat & | costs_column, | ||

| Mat & | bias_gradients | ||

| ) | [virtual] |

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, and PLearn::RBMWoodsLayer.

Definition at line 414 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

| virtual void PLearn::RBMLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient | ||

| ) | [pure virtual] |

back-propagates the output gradient to the input

Implemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, and PLearn::RBMTruncExpLayer.

| virtual void PLearn::RBMLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| bool | accumulate = false |

||

| ) | [pure virtual] |

back-propagates the output gradient to the input, and update the bias (and possibly the quadratic term)

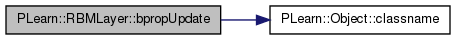

Reimplemented from PLearn::OnlineLearningModule.

Implemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

| virtual void PLearn::RBMLayer::bpropUpdate | ( | const Mat & | inputs, |

| const Mat & | outputs, | ||

| Mat & | input_gradients, | ||

| const Mat & | output_gradients, | ||

| bool | accumulate = false |

||

| ) | [pure virtual] |

Back-propagate the output gradient to the input, and update parameters.

Reimplemented from PLearn::OnlineLearningModule.

Implemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

| void PLearn::RBMLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | rbm_bias, | ||

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| Vec & | rbm_bias_gradient, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

back-propagates the output gradient to the input and the bias

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, and PLearn::RBMWoodsLayer.

Definition at line 356 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

{

PLERROR("In RBMLayer::bpropUpdate(): not implemented in subclass %s",

this->classname().c_str());

}

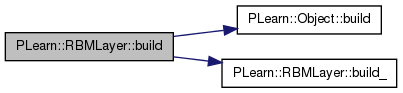

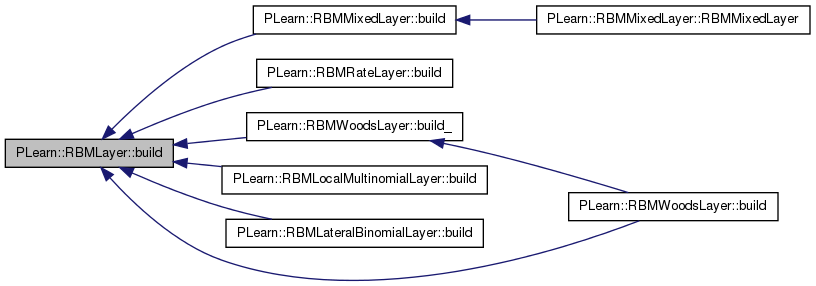

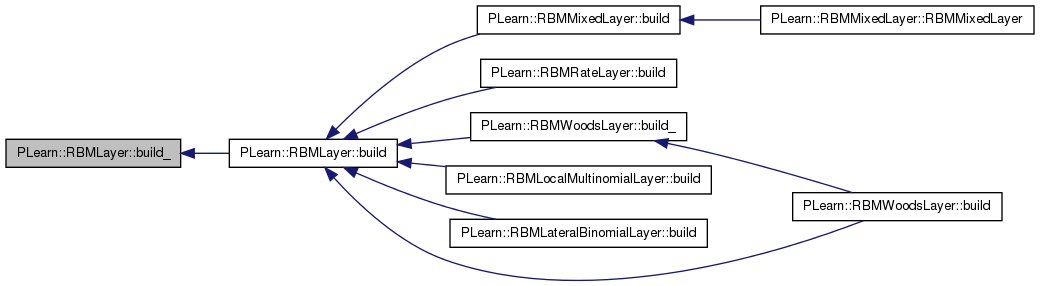

| virtual void PLearn::RBMLayer::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

| void PLearn::RBMLayer::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 99 of file DEPRECATED/RBMLayer.cc.

References PLearn::Object::build(), and build_().

Referenced by PLearn::RBMWoodsLayer::build(), PLearn::RBMRateLayer::build(), PLearn::RBMMixedLayer::build(), PLearn::RBMLocalMultinomialLayer::build(), PLearn::RBMLateralBinomialLayer::build(), and PLearn::RBMWoodsLayer::build_().

{

inherited::build();

build_();

}
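The build()/build_() convention described above can be sketched with two plain classes. This is an illustration of the calling pattern only, not PLearn's `Object` machinery: the `Base` and `Layer` types and the `ready` and `base_builds` members are hypothetical.

```cpp
#include <cassert>

// Stand-in for the parent class: build() is virtual, build_() is private
// and non-virtual.
struct Base {
    int base_builds = 0;
    virtual ~Base() = default;
    virtual void build() { build_(); }
private:
    void build_() { ++base_builds; }
};

struct Layer : Base {
    typedef Base inherited;
    int size = -1;      // option field; -1 means "not set yet"
    bool ready = false; // set once the layer has a usable size

    // Post-constructor: parent first, then this class's own build_().
    void build() override {
        inherited::build();
        build_();
    }
private:
    // Does the actual building; returns early while size is still unset.
    void build_() {
        if (size <= 0)
            return;
        ready = true;
    }
};
```

Calling `build()` on a default-constructed `Layer` does nothing beyond the parent's work; after setting `size` and calling `build()` again, the layer becomes ready, which is the "callable again at later times" behavior the description asks for.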

| void PLearn::RBMLayer::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 89 of file DEPRECATED/RBMLayer.cc.

References random_gen, and size.

Referenced by build().

{

if( size <= 0 )

return;

if( !random_gen )

random_gen = new PRandom();

random_gen->build();

}

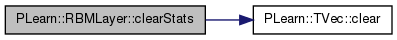

| void PLearn::RBMLayer::clearStats | ( | ) | [virtual] |

resets the statistics and counts

Reimplemented in PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, and PLearn::RBMMixedLayer.

Definition at line 80 of file RBMLayer.cc.

References bias_neg_stats, bias_pos_stats, PLearn::TVec< T >::clear(), gibbs_initial_ma_coefficient, gibbs_ma_coefficient, neg_count, and pos_count.

Referenced by PLearn::RBMLateralBinomialLayer::clearStats(), PLearn::RBMGaussianLayer::clearStats(), forget(), and update().

{

bias_pos_stats.clear();

bias_neg_stats.clear();

pos_count = 0;

neg_count = 0;

gibbs_ma_coefficient = gibbs_initial_ma_coefficient;

}

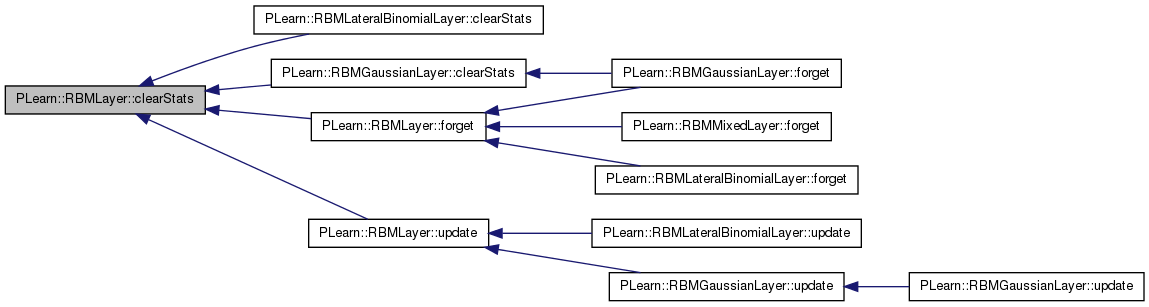

| virtual void PLearn::RBMLayer::computeExpectation | ( | ) | [pure virtual] |

Compute expectation.

Implemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

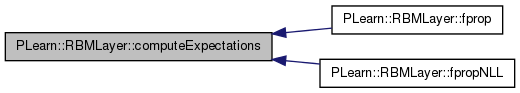

Referenced by declareMethods(), and fprop().

| virtual void PLearn::RBMLayer::computeExpectations | ( | ) | [pure virtual] |

Compute expectations (mini-batch).

Implemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Referenced by fprop(), and fpropNLL().

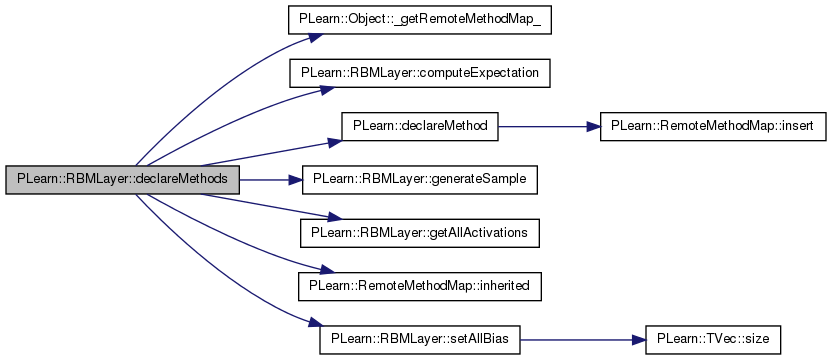

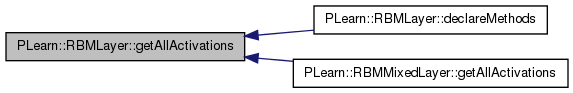

| void PLearn::RBMLayer::declareMethods | ( | RemoteMethodMap & | rmm | ) | [static, protected] |

Declares the class Methods.

Reimplemented from PLearn::Object.

Definition at line 157 of file RBMLayer.cc.

References PLearn::Object::_getRemoteMethodMap_(), computeExpectation(), PLearn::declareMethod(), generateSample(), getAllActivations(), PLearn::RemoteMethodMap::inherited(), and setAllBias().

{

// Make sure that inherited methods are declared

rmm.inherited(inherited::_getRemoteMethodMap_());

declareMethod(rmm, "setAllBias", &RBMLayer::setAllBias,

(BodyDoc("Set the biases values"),

ArgDoc ("bias", "the vector of biases")));

declareMethod(rmm, "generateSample", &RBMLayer::generateSample,

(BodyDoc("Generate a sample, and update the sample field")));

declareMethod(rmm, "getAllActivations", &RBMLayer::getAllActivations,

(BodyDoc("Uses 'rbmc' to obtain the activations of all units in this layer. \n"

"Unit 0 of this layer corresponds to unit 'offset' of 'rbmc'."),

ArgDoc("PP<RBMConnection> rbmc", "RBM Connection"),

ArgDoc("int offset", "Offset"),

ArgDoc("bool minibatch", "Use minibatch")));

declareMethod(rmm, "computeExpectation", &RBMLayer::computeExpectation,

(BodyDoc("Compute expectation.")));

}

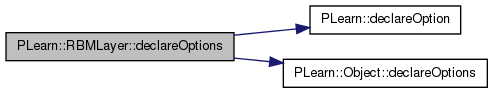

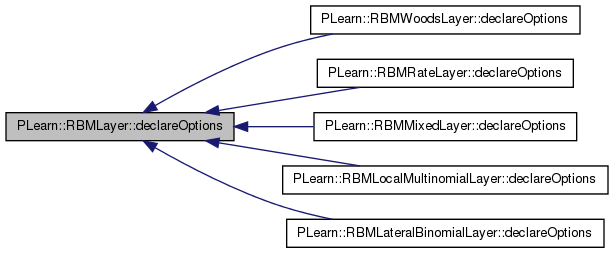

| void PLearn::RBMLayer::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 66 of file DEPRECATED/RBMLayer.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::Object::declareOptions(), PLearn::OptionBase::learntoption, random_gen, size, and units_types.

Referenced by PLearn::RBMWoodsLayer::declareOptions(), PLearn::RBMRateLayer::declareOptions(), PLearn::RBMMixedLayer::declareOptions(), PLearn::RBMLocalMultinomialLayer::declareOptions(), and PLearn::RBMLateralBinomialLayer::declareOptions().

{

declareOption(ol, "units_types", &RBMLayer::units_types,

OptionBase::learntoption,

"Each character of this string describes the type of an"

" up unit:\n"

" - 'l' if the energy function of this unit is linear\n"

" (binomial or multinomial unit),\n"

" - 'q' if it is quadratic (for a gaussian unit).\n");

declareOption(ol, "random_gen", &RBMLayer::random_gen,

OptionBase::buildoption,

"Random generator.");

declareOption(ol, "size", &RBMLayer::size,

OptionBase::buildoption,

"Numer of units.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::RBMLayer::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 322 of file RBMLayer.h.

| static const PPath& PLearn::RBMLayer::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 135 of file DEPRECATED/RBMLayer.h.

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 50 of file DEPRECATED/RBMLayer.cc.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, and PLearn::RBMWoodsLayer.

Definition at line 787 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

{

PLERROR("RBMLayer::energy(Vec) not implemented in subclass %s\n",classname().c_str());

return 0;

}

| void PLearn::RBMLayer::expectation_is_not_up_to_date | ( | ) | [virtual] |

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 297 of file RBMLayer.cc.

References expectation_is_up_to_date.

{

expectation_is_up_to_date = false;

}

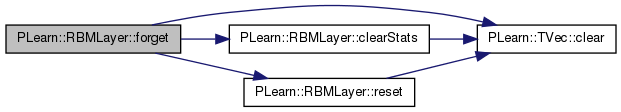

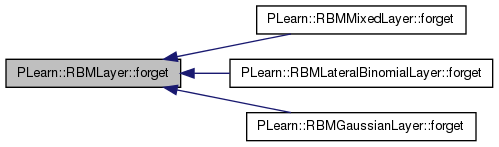

| void PLearn::RBMLayer::forget | ( | ) | [virtual] |

forgets everything

Implements PLearn::OnlineLearningModule.

Reimplemented in PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, and PLearn::RBMMixedLayer.

Definition at line 89 of file RBMLayer.cc.

References bias, PLearn::TVec< T >::clear(), clearStats(), and reset().

Referenced by PLearn::RBMMixedLayer::forget(), PLearn::RBMLateralBinomialLayer::forget(), and PLearn::RBMGaussianLayer::forget().

{

bias.clear();

reset();

clearStats();

}

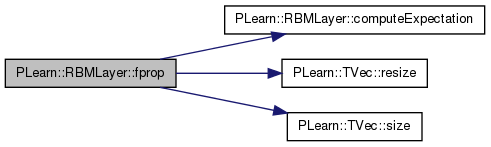

Adds the bias to the input, treats the result as the activation, then computes the expectation.

Reimplemented from PLearn::OnlineLearningModule.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 313 of file RBMLayer.cc.

References activation, bias, computeExpectation(), expectation, expectation_is_up_to_date, PLearn::OnlineLearningModule::input_size, PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::size().

{

// Note: inefficient.

// Yes it's ugly, blame the const plague

RBMLayer* This = const_cast<RBMLayer*>(this);

PLASSERT( input.size() == This->input_size );

output.resize( This->output_size );

This->activation << input;

This->activation += bias;

This->expectation_is_up_to_date = false;

This->computeExpectation();

output << This->expectation;

}
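
The three steps in the listing above (copy the input into the activation, add the bias, compute the expectation) can be sketched outside PLearn as follows. This is only an illustration: it uses `std::vector` instead of `Vec`, and the logistic sigmoid is an assumption standing in for `computeExpectation()`, which is pure virtual in this class. The names `sigmoidSketch` and `fpropSketch` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for computeExpectation() in a layer of binary units:
// the expectation of each unit is the logistic sigmoid of its activation.
inline double sigmoidSketch(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// activation = input + bias, then output = expectation(activation).
std::vector<double> fpropSketch(const std::vector<double>& input,
                                const std::vector<double>& bias)
{
    assert(input.size() == bias.size());  // plays the role of the PLASSERT
    std::vector<double> output(input.size());
    for (std::size_t i = 0; i < input.size(); ++i) {
        const double activation = input[i] + bias[i];
        output[i] = sigmoidSketch(activation);
    }
    return output;
}
```

Unlike the original, the sketch keeps no internal state, so it does not need the `const_cast` workaround.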

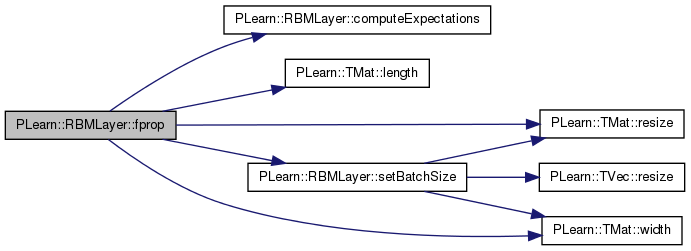

Mini-batch fprop.

Default implementation raises an error. SOON TO BE DEPRECATED, USE fprop(const TVec<Mat*>& ports_value)

Reimplemented from PLearn::OnlineLearningModule.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMMixedLayer, and PLearn::RBMWoodsLayer.

Definition at line 332 of file RBMLayer.cc.

References activations, bias, computeExpectations(), expectations, expectations_are_up_to_date, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), PLearn::OnlineLearningModule::output_size, PLASSERT, PLearn::TMat< T >::resize(), setBatchSize(), and PLearn::TMat< T >::width().

{

// Note: inefficient.

PLASSERT( inputs.width() == input_size );

int mbatch_size = inputs.length();

outputs.resize(mbatch_size, output_size);

setBatchSize(mbatch_size);

activations << inputs;

for (int k = 0; k < mbatch_size; k++)

activations(k) += bias;

expectations_are_up_to_date = false;

computeExpectations();

outputs << expectations;

}
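
The row-wise bias addition in the mini-batch listing above can be illustrated with a small standalone sketch. It assumes a matrix stored as one `std::vector` per sample; `MatSketch` and `addBiasToRowsSketch` are hypothetical names, not part of PLearn.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using MatSketch = std::vector<std::vector<double>>;  // one row per sample

// Adds the same bias vector to every row of a mini-batch of inputs,
// as the loop "activations(k) += bias" does in the listing.
MatSketch addBiasToRowsSketch(const MatSketch& inputs,
                              const std::vector<double>& bias)
{
    MatSketch activations = inputs;  // element-wise copy of the inputs
    for (auto& row : activations) {
        assert(row.size() == bias.size());
        for (std::size_t i = 0; i < row.size(); ++i)
            row[i] += bias[i];
    }
    return activations;
}
```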

| void PLearn::RBMLayer::fprop | ( | const Vec & input, const Vec & rbm_bias, Vec & output | ) | const [virtual] |

Computes the expectation given the conditional input and the given bias.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, and PLearn::RBMWoodsLayer.

Definition at line 349 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

Computes the negative log-likelihood of target given the internal activations of the layer.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, and PLearn::RBMWoodsLayer.

Definition at line 365 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

Referenced by fpropNLL().

{

PLERROR("In RBMLayer::fpropNLL(): not implemented in subclass %s",

this->classname().c_str());

return REAL_MAX;

}

Reimplemented in PLearn::RBMBinomialLayer.

Definition at line 372 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

{

PLERROR("weighted version of RBMLayer::fpropNLL not implemented in subclass %s",

this->classname().c_str());

return REAL_MAX;

}

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, and PLearn::RBMWoodsLayer.

Definition at line 380 of file RBMLayer.cc.

References activation, activations, batch_size, PLearn::Object::classname(), computeExpectations(), expectation, expectation_is_up_to_date, expectations, fpropNLL(), PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), PLASSERT, PLWARNING, PLearn::TVec< T >::resize(), PLearn::selectRows(), tmp, and PLearn::TMat< T >::width().

{

PLWARNING("batch version of RBMLayer::fpropNLL may not be optimized in subclass %s",

this->classname().c_str());

PLASSERT( targets.width() == input_size );

PLASSERT( targets.length() == batch_size );

PLASSERT( costs_column.width() == 1 );

PLASSERT( costs_column.length() == batch_size );

Mat tmp;

tmp.resize(1,input_size);

Vec target;

target.resize(input_size);

computeExpectations();

expectation_is_up_to_date = false;

for (int k=0;k<batch_size;k++) // loop over minibatch

{

selectRows(expectations, TVec<int>(1, k), tmp );

expectation << tmp;

selectRows( activations, TVec<int>(1, k), tmp );

activation << tmp;

selectRows( targets, TVec<int>(1, k), tmp );

target << tmp;

costs_column(k,0) = fpropNLL( target );

}

}

Computes this layer's contribution to the free energy from unit_activations. This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, and PLearn::RBMWoodsLayer.

Definition at line 793 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

{

PLERROR("RBMLayer::freeEnergyContribution(Vec) not implemented in subclass %s\n",classname().c_str());

return 0;

}
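
The base class only raises an error here, so the actual computation lives in the subclasses. As a hedged illustration, suppose the quantity is -log of the sum of exp(h · unit_activations) over all states h of a layer of binary units (an assumption, since the formula is not reproduced on this page). That sum factorizes over units, giving minus the sum of softplus(a_i). The sketch below checks the closed form against brute-force enumeration; all function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable log(1 + exp(a)).
inline double softplusSketch(double a)
{
    return a > 0.0 ? a + std::log1p(std::exp(-a)) : std::log1p(std::exp(a));
}

// Closed form for binary units:
// -log( sum over h in {0,1}^n of exp(h . a) ) = -sum_i softplus(a_i)
double binaryFreeEnergyContributionSketch(const std::vector<double>& unit_activations)
{
    double result = 0.0;
    for (double a : unit_activations)
        result -= softplusSketch(a);
    return result;
}

// Brute-force version that enumerates all 2^n states; only for checking.
double bruteForceSketch(const std::vector<double>& a)
{
    assert(a.size() < 20);
    double sum = 0.0;
    for (unsigned long conf = 0; conf < (1ul << a.size()); ++conf) {
        double dot = 0.0;
        for (std::size_t i = 0; i < a.size(); ++i)
            if (conf & (1ul << i)) dot += a[i];
        sum += std::exp(dot);
    }
    return -std::log(sum);
}
```

The closed form costs O(n) instead of O(2^n), which is why a per-layer override is preferable to enumeration.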

| void PLearn::RBMLayer::freeEnergyContributionGradient | ( | const Vec & unit_activations, Vec & unit_activations_gradient, real output_gradient = 1, bool accumulate = false | ) | const [virtual] |

Computes the gradient of the result of freeEnergyContribution with respect to unit_activations.

Optionally, a gradient with respect to freeEnergyContribution can be given.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, and PLearn::RBMWoodsLayer.

Definition at line 799 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

{

PLERROR("RBMLayer::freeEnergyContributionGradient(Vec, Vec) not implemented in subclass %s\n",classname().c_str());

}

| virtual void PLearn::RBMLayer::generateSample | ( | ) | [pure virtual] |

generate a sample, and update the sample field

Implemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Referenced by declareMethods().

| virtual void PLearn::RBMLayer::generateSamples | ( | ) | [pure virtual] |

Generate a mini-batch set of samples.

Implemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

| void PLearn::RBMLayer::getAllActivations | ( | PP< RBMConnection > rbmc, int offset = 0, bool minibatch = false | ) | [virtual] |

Uses "rbmc" to obtain the activations of all units in this layer.

Unit 0 of this layer corresponds to unit "offset" of "rbmc".

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 282 of file RBMLayer.cc.

References activation, activations, bias, expectation_is_up_to_date, expectations_are_up_to_date, PLearn::TVec< T >::length(), setBatchSize(), and size.

{

if (minibatch) {

rbmc->computeProducts( offset, size, activations );

activations += bias;

setBatchSize(activations.length());

} else {

rbmc->computeProduct( offset, size, activation );

activation += bias;

}

expectation_is_up_to_date = false;

expectations_are_up_to_date = false;

}
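
To make the role of "offset" concrete, here is a minimal standalone sketch of the non-minibatch branch. It assumes that `computeProduct(start, length, out)` fills `out` with entries `start` to `start+length-1` of the weight matrix times the connection's current input; that reading is an assumption based on the description above. `ConnectionSketch`, `productSketch` and `allActivationsSketch` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for an RBMConnection: a dense weight matrix and the
// current input vector. productSketch(start, length) returns the entries
// start .. start+length-1 of weights * input.
struct ConnectionSketch {
    std::vector<std::vector<double>> weights;  // one row per output unit
    std::vector<double> input;

    std::vector<double> productSketch(std::size_t start, std::size_t length) const
    {
        assert(start + length <= weights.size());
        std::vector<double> out(length, 0.0);
        for (std::size_t r = 0; r < length; ++r) {
            const std::vector<double>& row = weights[start + r];
            assert(row.size() == input.size());
            for (std::size_t c = 0; c < row.size(); ++c)
                out[r] += row[c] * input[c];
        }
        return out;
    }
};

// Unit 0 of the layer corresponds to unit "offset" of the connection.
std::vector<double> allActivationsSketch(const ConnectionSketch& rbmc,
                                         const std::vector<double>& bias,
                                         std::size_t offset = 0)
{
    std::vector<double> activation = rbmc.productSketch(offset, bias.size());
    for (std::size_t i = 0; i < bias.size(); ++i)
        activation[i] += bias[i];
    return activation;
}
```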

| virtual void PLearn::RBMLayer::getAllActivations | ( | PP< RBMParameters > rbmp, int offset = 0 | ) | [pure virtual] |

Uses "rbmp" to obtain the activations of all units in this layer.

Unit 0 of this layer corresponds to unit "offset" of "rbmp".

Implemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, and PLearn::RBMTruncExpLayer.

Referenced by declareMethods(), and PLearn::RBMMixedLayer::getAllActivations().

Computes the conf_index configuration of the layer.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, and PLearn::RBMWoodsLayer.

Definition at line 813 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

{

PLERROR("RBMLayer::getConfiguration(int, Vec) not implemented in subclass %s\n",classname().c_str());

}

| int PLearn::RBMLayer::getConfigurationCount | ( | ) | [virtual] |

Returns the number of different configurations the layer can be in.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, and PLearn::RBMWoodsLayer.

Definition at line 807 of file RBMLayer.cc.

References PLearn::Object::classname(), and PLERROR.

{

PLERROR("RBMLayer::getConfigurationCount() not implemented in subclass %s\n",classname().c_str());

return 0;

}
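
Both configuration methods raise an error in the base class. As a rough illustration of what a subclass might provide, the sketch below enumerates the states of a layer of binary units: there are 2^size configurations, and configuration `conf_index` sets unit i to bit i of the index. The bit ordering and the function names are assumptions made for this example, not taken from PLearn.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Number of joint states of `size` binary units (assumes size is small).
unsigned long configurationCountSketch(std::size_t size)
{
    assert(size < 8 * sizeof(unsigned long));
    return 1ul << size;
}

// Fills `output` with the conf_index-th state: unit i takes bit i of the index.
void configurationSketch(unsigned long conf_index, std::vector<double>& output)
{
    assert(conf_index < configurationCountSketch(output.size()));
    for (std::size_t i = 0; i < output.size(); ++i)
        output[i] = ((conf_index >> i) & 1ul) ? 1.0 : 0.0;
}
```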

| const Mat & PLearn::RBMLayer::getExpectations | ( | ) |

Accessor to the 'expectations' matrix.

Definition at line 306 of file RBMLayer.cc.

References expectations.

{

return this->expectations;

}

| void PLearn::RBMLayer::getUnitActivation | ( | int i, PP< RBMConnection > rbmc, int offset = 0 | ) | [virtual] |

Uses "rbmc" to compute the activation of unit "i" of this layer.

This activation is computed by the "i+offset"-th unit of "rbmc".

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 270 of file RBMLayer.cc.

References activation, bias, expectation_is_up_to_date, expectations_are_up_to_date, i, and PLearn::TVec< T >::subVec().

Referenced by PLearn::RBMMixedLayer::getUnitActivation().

{

Vec act = activation.subVec(i,1);

rbmc->computeProduct( i+offset, 1, act );

act[0] += bias[i];

expectation_is_up_to_date = false;

expectations_are_up_to_date = false;

}

| virtual void PLearn::RBMLayer::getUnitActivations | ( | int i, PP< RBMParameters > rbmp, int offset = 0 | ) | [pure virtual] |

Uses "rbmp" to obtain the activations of unit "i" of this layer.

This activation vector is computed by the "i+offset"-th unit of "rbmp".

Implemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, and PLearn::RBMTruncExpLayer.

| string PLearn::RBMLayer::getUnitsTypes | ( | ) | [inline] |

| void PLearn::RBMLayer::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMRateLayer, PLearn::RBMTruncExpLayer, and PLearn::RBMWoodsLayer.

Definition at line 106 of file DEPRECATED/RBMLayer.cc.

References activations, PLearn::deepCopyField(), expectation, PLearn::Object::makeDeepCopyFromShallowCopy(), random_gen, and sample.

Referenced by PLearn::RBMWoodsLayer::makeDeepCopyFromShallowCopy(), PLearn::RBMRateLayer::makeDeepCopyFromShallowCopy(), PLearn::RBMMixedLayer::makeDeepCopyFromShallowCopy(), PLearn::RBMLocalMultinomialLayer::makeDeepCopyFromShallowCopy(), and PLearn::RBMLateralBinomialLayer::makeDeepCopyFromShallowCopy().

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(random_gen, copies);

deepCopyField(activations, copies);

deepCopyField(sample, copies);

deepCopyField(expectation, copies);

}

| void PLearn::RBMLayer::reset | ( | ) | [virtual] |

resets activations, sample and expectation fields

Reimplemented in PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, and PLearn::RBMMixedLayer.

Definition at line 58 of file DEPRECATED/RBMLayer.cc.

References activations, PLearn::TVec< T >::clear(), expectation, expectation_is_up_to_date, and sample.

Referenced by forget(), PLearn::RBMGaussianLayer::reset(), and PLearn::RBMLateralBinomialLayer::reset().

{

activations.clear();

sample.clear();

expectation.clear();

expectation_is_up_to_date = false;

}

| void PLearn::RBMLayer::setAllBias | ( | const Vec & | rbm_bias | ) | [virtual] |

Set the internal bias values to rbm_bias.

Definition at line 707 of file RBMLayer.cc.

References bias, PLASSERT, PLearn::TVec< T >::size(), and size.

Referenced by declareMethods().

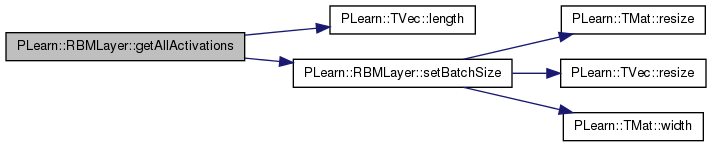

| void PLearn::RBMLayer::setBatchSize | ( | int | the_batch_size | ) | [virtual] |

Sets batch_size and resizes activations, expectations, and samples.

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 255 of file RBMLayer.cc.

References activations, batch_size, expectations, PLASSERT, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), samples, size, and PLearn::TMat< T >::width().

Referenced by fprop(), getAllActivations(), PLearn::RBMMixedLayer::setBatchSize(), setExpectations(), and setExpectationsByRef().

{

batch_size = the_batch_size;

PLASSERT( activations.width() == size );

activations.resize( batch_size, size );

PLASSERT( expectations.width() == size );

expectations.resize( batch_size, size );

PLASSERT( samples.width() == size );

samples.resize( batch_size, size );

}

| void PLearn::RBMLayer::setExpectation | ( | const Vec & | the_expectation | ) | [virtual] |

Copy the given expectation into the 'expectation' vector.

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 716 of file RBMLayer.cc.

References expectation, and expectation_is_up_to_date.

{

expectation << the_expectation;

expectation_is_up_to_date=true;

}

| void PLearn::RBMLayer::setExpectationByRef | ( | const Vec & | the_expectation | ) | [virtual] |

Make the 'expectation' vector point to the given data vector (so no copy is performed).

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 725 of file RBMLayer.cc.

References expectation, and expectation_is_up_to_date.

{

expectation = the_expectation;

expectation_is_up_to_date=true;

}
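
The difference between setExpectation (element-wise copy with `<<`) and setExpectationByRef (assignment that shares the underlying data) can be illustrated with a small standalone sketch. `VecSketch` is a hypothetical handle type built on `std::shared_ptr`; it only mimics the copy-versus-share distinction described above and is not PLearn's `Vec`.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical vector handle whose "pointTo" shares storage, loosely
// modelled on the copy-vs-reference distinction described above.
struct VecSketch {
    std::shared_ptr<std::vector<double>> storage;

    explicit VecSketch(std::size_t n)
        : storage(std::make_shared<std::vector<double>>(n, 0.0)) {}

    // Element-wise copy into the existing storage (the "<<" case).
    void copyFrom(const VecSketch& other)
    {
        assert(storage->size() == other.storage->size());
        *storage = *other.storage;
    }

    // Share the other vector's storage; no element is copied (the "=" case).
    void pointTo(const VecSketch& other) { storage = other.storage; }
};
```

In the sketch, a later write to the source is visible through a handle set with `pointTo` but not through one filled with `copyFrom`.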

| void PLearn::RBMLayer::setExpectations | ( | const Mat & | the_expectations | ) | [virtual] |

Copy the given expectations into the 'expectations' matrix.

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 734 of file RBMLayer.cc.

References batch_size, expectations, expectations_are_up_to_date, PLearn::TMat< T >::length(), and setBatchSize().

{

batch_size = the_expectations.length();

setBatchSize( batch_size );

expectations << the_expectations;

expectations_are_up_to_date=true;

}

| void PLearn::RBMLayer::setExpectationsByRef | ( | const Mat & | the_expectations | ) | [virtual] |

Make the 'expectations' matrix point to the given data matrix (so no copy is performed).

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 745 of file RBMLayer.cc.

References batch_size, expectations, expectations_are_up_to_date, PLearn::TMat< T >::length(), and setBatchSize().

{

batch_size = the_expectations.length();

setBatchSize( batch_size );

expectations = the_expectations;

expectations_are_up_to_date=true;

}

| void PLearn::RBMLayer::setLearningRate | ( | real | the_learning_rate | ) | [virtual] |

Sets the learning rate.

Reimplemented from PLearn::OnlineLearningModule.

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 239 of file RBMLayer.cc.

References learning_rate.

Referenced by PLearn::RBMMixedLayer::setLearningRate().

{

learning_rate = the_learning_rate;

}

| void PLearn::RBMLayer::setMomentum | ( | real | the_momentum | ) | [virtual] |

Sets the momentum.

Reimplemented in PLearn::RBMMixedLayer.

Definition at line 247 of file RBMLayer.cc.

References momentum.

Referenced by PLearn::RBMMixedLayer::setMomentum().

{

momentum = the_momentum;

}

| void PLearn::RBMLayer::update | ( | const Vec & | grad | ) | [virtual] |

Updates parameters according to the given gradient.

Reimplemented in PLearn::RBMLateralBinomialLayer.

Definition at line 494 of file RBMLayer.cc.

References applyBiasDecay(), b, bias, bias_inc, PLearn::TVec< T >::data(), PLearn::fast_is_equal(), i, learning_rate, momentum, and size.

{

real* b = bias.data();

real* gb = grad.data();

real* binc = momentum==0?0:bias_inc.data();

for( int i=0 ; i<size ; i++ )

{

if( fast_is_equal( momentum, 0.) )

{

// update the bias: bias -= learning_rate * input_gradient

b[i] -= learning_rate * gb[i];

}

else

{

// The update rule becomes:

// bias_inc = momentum * bias_inc - learning_rate * input_gradient

// bias += bias_inc

binc[i] = momentum * binc[i] - learning_rate * gb[i];

b[i] += binc[i];

}

}

applyBiasDecay();

}
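
The gradient update with optional momentum shown above can be sketched in isolation as follows. The sketch uses `std::vector`, leaves out `applyBiasDecay()`, and resizes `bias_inc` itself, which the listing above does not do; `BiasStateSketch` and `gradientUpdateSketch` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct BiasStateSketch {
    std::vector<double> bias;
    std::vector<double> bias_inc;  // only used when momentum != 0
};

// One gradient step on the bias, with optional momentum.
void gradientUpdateSketch(BiasStateSketch& s, const std::vector<double>& grad,
                          double learning_rate, double momentum)
{
    assert(grad.size() == s.bias.size());
    if (momentum == 0.0) {
        // bias -= learning_rate * gradient
        for (std::size_t i = 0; i < s.bias.size(); ++i)
            s.bias[i] -= learning_rate * grad[i];
        return;
    }
    // bias_inc = momentum * bias_inc - learning_rate * gradient
    // bias += bias_inc
    s.bias_inc.resize(s.bias.size(), 0.0);
    for (std::size_t i = 0; i < s.bias.size(); ++i) {
        s.bias_inc[i] = momentum * s.bias_inc[i] - learning_rate * grad[i];
        s.bias[i] += s.bias_inc[i];
    }
}
```

With momentum, repeated steps in the same gradient direction grow larger, since each increment carries over a fraction of the previous one.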

| void PLearn::RBMLayer::update | ( | ) | [virtual] |

Update parameters according to accumulated statistics.

Reimplemented in PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, and PLearn::RBMMixedLayer.

Definition at line 454 of file RBMLayer.cc.

References applyBiasDecay(), b, bias, bias_inc, bias_neg_stats, bias_pos_stats, clearStats(), PLearn::TVec< T >::data(), PLearn::fast_is_equal(), i, learning_rate, momentum, neg_count, pos_count, PLearn::TVec< T >::resize(), and size.

Referenced by PLearn::RBMLateralBinomialLayer::update(), and PLearn::RBMGaussianLayer::update().

{

// bias += learning_rate * (bias_pos_stats/pos_count

// - bias_neg_stats/neg_count)

real pos_factor = learning_rate / pos_count;

real neg_factor = -learning_rate / neg_count;

real* b = bias.data();

real* bps = bias_pos_stats.data();

real* bns = bias_neg_stats.data();

if( fast_is_equal( momentum, 0.) )

{

// no need to use bias_inc

for( int i=0 ; i<size ; i++ )

b[i] += pos_factor * bps[i] + neg_factor * bns[i];

}

else

{

// ensure that bias_inc has the right size

bias_inc.resize( size );

// The update rule becomes:

// bias_inc = momentum * bias_inc

// + learning_rate * (bias_pos_stats/pos_count

// - bias_neg_stats/neg_count)

// bias += bias_inc

real* binc = bias_inc.data();

for( int i=0 ; i<size ; i++ )

{

binc[i] = momentum*binc[i] + pos_factor*bps[i] + neg_factor*bns[i];

b[i] += binc[i];

}

}

applyBiasDecay();

clearStats();

}
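
A standalone sketch of the statistics-based update without momentum, under the assumption that `pos_stats` and `neg_stats` hold sums accumulated over `pos_count` and `neg_count` samples. The listing above divides by both counts without checking them; the `assert` below is an addition of this sketch. `StatsSketch` and `statsUpdateSketch` are hypothetical names, and bias decay is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct StatsSketch {
    std::vector<double> bias;
    std::vector<double> pos_stats;  // sum of positive-phase values
    std::vector<double> neg_stats;  // sum of negative-phase values
    int pos_count = 0;
    int neg_count = 0;
};

// bias += learning_rate * (pos_stats / pos_count - neg_stats / neg_count)
void statsUpdateSketch(StatsSketch& s, double learning_rate)
{
    assert(s.pos_count > 0 && s.neg_count > 0);  // avoid dividing by zero
    const double pos_factor = learning_rate / s.pos_count;
    const double neg_factor = -learning_rate / s.neg_count;
    for (std::size_t i = 0; i < s.bias.size(); ++i)
        s.bias[i] += pos_factor * s.pos_stats[i] + neg_factor * s.neg_stats[i];

    // Equivalent of clearStats(): start a fresh accumulation.
    s.pos_stats.assign(s.bias.size(), 0.0);
    s.neg_stats.assign(s.bias.size(), 0.0);
    s.pos_count = 0;
    s.neg_count = 0;
}
```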

Update parameters according to one pair of vectors.

Reimplemented in PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, and PLearn::RBMMixedLayer.

Definition at line 547 of file RBMLayer.cc.

References applyBiasDecay(), b, bias, bias_inc, PLearn::TVec< T >::data(), PLearn::fast_is_equal(), i, learning_rate, momentum, PLearn::TVec< T >::resize(), and size.

{

// bias += learning_rate * (pos_values - neg_values)

real* b = bias.data();

real* pv = pos_values.data();

real* nv = neg_values.data();

if( fast_is_equal( momentum, 0.) )

{

for( int i=0 ; i<size ; i++ )

b[i] += learning_rate * ( pv[i] - nv[i] );

}

else

{

bias_inc.resize( size );

real* binc = bias_inc.data();

for( int i=0 ; i<size ; i++ )

{

binc[i] = momentum*binc[i] + learning_rate*( pv[i] - nv[i] );

b[i] += binc[i];

}

}

applyBiasDecay();

}

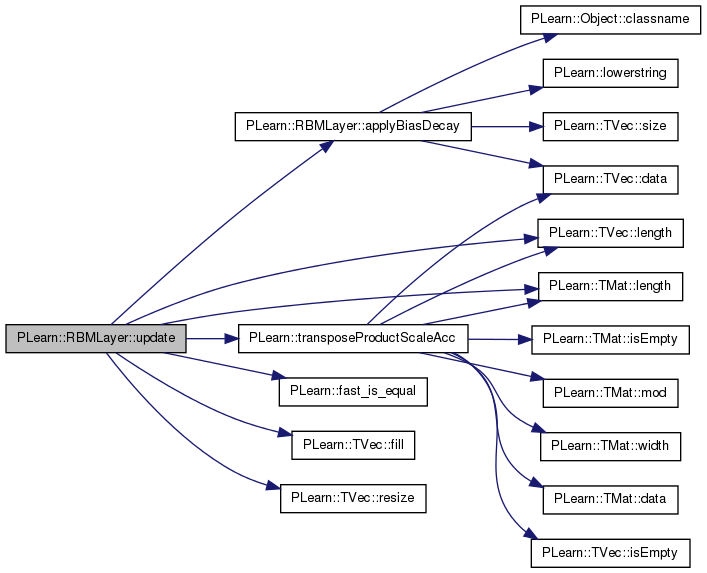

Update parameters according to one pair of matrices.

Reimplemented in PLearn::RBMGaussianLayer, PLearn::RBMLateralBinomialLayer, and PLearn::RBMMixedLayer.

Definition at line 574 of file RBMLayer.cc.

References applyBiasDecay(), bias, PLearn::fast_is_equal(), PLearn::TVec< T >::fill(), learning_rate, PLearn::TVec< T >::length(), PLearn::TMat< T >::length(), momentum, n, ones, PLASSERT, PLERROR, PLearn::TVec< T >::resize(), and PLearn::transposeProductScaleAcc().

{

// bias += learning_rate * (pos_values - neg_values)

int n = pos_values.length();

PLASSERT( neg_values.length() == n );

if (ones.length() < n) {

ones.resize(n);

ones.fill(1);

} else if (ones.length() > n)

// No need to fill with ones since we are only shrinking the vector.

ones.resize(n);

// We take the average gradient over the mini-batch.

real avg_lr = learning_rate / n;

if( fast_is_equal( momentum, 0.) )

{

transposeProductScaleAcc(bias, pos_values, ones, avg_lr, real(1));

transposeProductScaleAcc(bias, neg_values, ones, -avg_lr, real(1));

}

else

{

PLERROR("RBMLayer::update - Not implemented yet with momentum");

/*

bias_inc.resize( size );

real* binc = bias_inc.data();

for( int i=0 ; i<size ; i++ )

{

binc[i] = momentum*binc[i] + learning_rate*( pv[i] - nv[i] );

b[i] += binc[i];

}

*/

}

applyBiasDecay();

}
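
In the momentum-free branch above, the two `transposeProductScaleAcc` calls appear to add the column sums of `pos_values` and subtract those of `neg_values`, each scaled by `learning_rate / n`. The sketch below writes that out with explicit loops, assuming one row per sample; `MatSketch` and `batchUpdateSketch` are hypothetical names, and bias decay is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using MatSketch = std::vector<std::vector<double>>;  // one row per sample

// bias += (learning_rate / n) * (column sums of pos - column sums of neg)
void batchUpdateSketch(std::vector<double>& bias, const MatSketch& pos_values,
                       const MatSketch& neg_values, double learning_rate)
{
    const std::size_t n = pos_values.size();
    assert(n > 0 && neg_values.size() == n);
    const double avg_lr = learning_rate / static_cast<double>(n);
    for (std::size_t k = 0; k < n; ++k) {
        assert(pos_values[k].size() == bias.size());
        assert(neg_values[k].size() == bias.size());
        for (std::size_t i = 0; i < bias.size(); ++i)
            bias[i] += avg_lr * (pos_values[k][i] - neg_values[k][i]);
    }
}
```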

| void PLearn::RBMLayer::update | ( | const Mat & | grad | ) | [virtual] |

Definition at line 520 of file RBMLayer.cc.

References b, batch_size, bias, bias_inc, PLearn::TVec< T >::data(), PLearn::fast_is_equal(), grad, i, learning_rate, PLearn::TMat< T >::length(), momentum, and size.

{

int batch_size = grad.length();

real* b = bias.data();

real* binc = momentum==0?0:bias_inc.data();

real avg_lr = learning_rate / (real)batch_size;

for( int isample=0; isample<batch_size; isample++)

for( int i=0 ; i<size ; i++ )

{

if( fast_is_equal( momentum, 0.) )

{

// update the bias: bias -= learning_rate * input_gradient

b[i] -= avg_lr * grad(isample,i);

}

else

{

// The update rule becomes:

// bias_inc = momentum * bias_inc - learning_rate * input_gradient

// bias += bias_inc

binc[i] = momentum * binc[i] - avg_lr * grad(isample,i);

b[i] += binc[i];

}

}

}
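The two branches of this gradient-based update can be illustrated without PLearn types. The sketch below is an assumption-laden rewrite: `Batch` and `updateBiasFromGradient` are hypothetical names, and `bias_inc` is resized inside the function for safety. It keeps the loop structure of the listing, so the momentum term is applied once per sample rather than once per mini-batch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: one sample per row.
using Batch = std::vector<std::vector<double>>;

void updateBiasFromGradient(std::vector<double>& bias,
                            std::vector<double>& bias_inc,
                            const Batch& grad,
                            double learning_rate,
                            double momentum)
{
    const std::size_t batch_size = grad.size();
    assert(batch_size > 0);
    bias_inc.resize(bias.size(), 0.0);  // new entries start at zero

    const double avg_lr = learning_rate / static_cast<double>(batch_size);

    for (std::size_t s = 0; s < batch_size; ++s) {
        assert(grad[s].size() == bias.size());
        for (std::size_t i = 0; i < bias.size(); ++i) {
            if (momentum == 0.0) {
                // Plain gradient step: bias -= avg_lr * gradient.
                bias[i] -= avg_lr * grad[s][i];
            } else {
                // bias_inc = momentum * bias_inc - avg_lr * gradient
                // bias    += bias_inc
                bias_inc[i] = momentum * bias_inc[i] - avg_lr * grad[s][i];
                bias[i] += bias_inc[i];
            }
        }
    }
}
```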

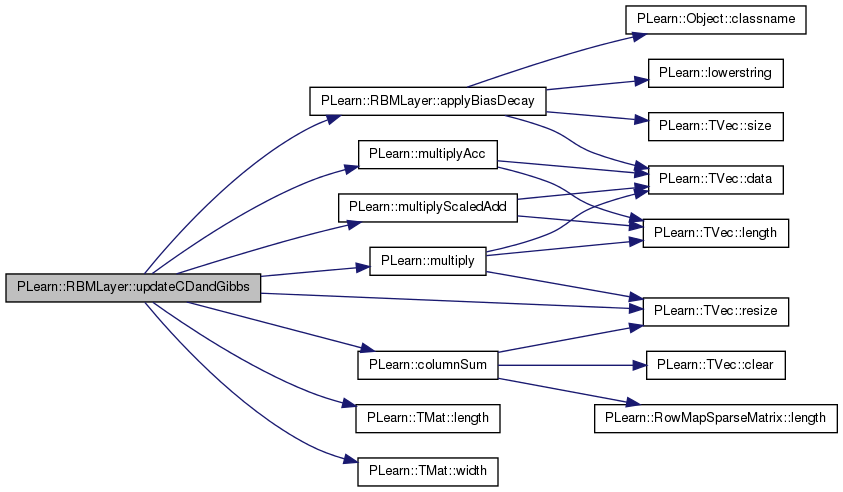

| void PLearn::RBMLayer::updateCDandGibbs | ( | const Mat & | pos_values, |

| const Mat & | cd_neg_values, | ||

| const Mat & | gibbs_neg_values, | ||

| real | background_gibbs_update_ratio | ||

| ) | [virtual] |

Reimplemented in PLearn::RBMLateralBinomialLayer.

Definition at line 617 of file RBMLayer.cc.

References applyBiasDecay(), bias, bias_neg_stats, PLearn::columnSum(), gibbs_ma_coefficient, learning_rate, PLearn::TMat< T >::length(), PLearn::multiply(), PLearn::multiplyAcc(), PLearn::multiplyScaledAdd(), neg_count, PLASSERT, PLearn::TVec< T >::resize(), size, tmp, and PLearn::TMat< T >::width().

Referenced by PLearn::RBMLateralBinomialLayer::updateCDandGibbs().

{

PLASSERT(pos_values.width()==size);

PLASSERT(cd_neg_values.width()==size);

PLASSERT(gibbs_neg_values.width()==size);

int minibatch_size=gibbs_neg_values.length();

PLASSERT(pos_values.length()==minibatch_size);

PLASSERT(cd_neg_values.length()==minibatch_size);

real normalize_factor=1.0/minibatch_size;

// neg_stats <-- gibbs_chain_statistics_forgetting_factor * neg_stats

// +(1-gibbs_chain_statistics_forgetting_factor)

// * meanoverrows(gibbs_neg_values)

tmp.resize(size);

columnSum(gibbs_neg_values,tmp);

if (neg_count==0)

multiply(tmp,normalize_factor,bias_neg_stats);

else

multiplyScaledAdd(tmp,gibbs_ma_coefficient,

normalize_factor*(1-gibbs_ma_coefficient),

bias_neg_stats);

neg_count++;

// delta w = lrate * ( meanoverrows(pos_values)

// - ( background_gibbs_update_ratio*neg_stats

// +(1-background_gibbs_update_ratio)

// * meanoverrows(cd_neg_values) ) )

columnSum(pos_values,tmp);

multiplyAcc(bias, tmp, learning_rate*normalize_factor);

multiplyAcc(bias, bias_neg_stats,

-learning_rate*background_gibbs_update_ratio);

columnSum(cd_neg_values, tmp);

multiplyAcc(bias, tmp,

-learning_rate*(1-background_gibbs_update_ratio)*normalize_factor);

applyBiasDecay();

}
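Read as a formula, the listing above keeps a moving average of the Gibbs-chain statistics and then mixes it with the CD negative statistics when moving the bias. The sketch below restates that under stated assumptions: `BiasState`, `columnMean`, and `updateCDandGibbsSketch` are hypothetical names, `std::vector` replaces `Mat`/`Vec`, and bias decay is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Batch = std::vector<std::vector<double>>;  // one sample per row

// Hypothetical container for the fields the listing touches.
struct BiasState {
    std::vector<double> bias;
    std::vector<double> bias_neg_stats;
    double gibbs_ma_coefficient;
    int neg_count;
};

// Mean of each column of a batch with `width` columns.
std::vector<double> columnMean(const Batch& m, std::size_t width)
{
    assert(!m.empty());
    std::vector<double> mean(width, 0.0);
    for (const auto& row : m) {
        assert(row.size() == width);
        for (std::size_t j = 0; j < width; ++j)
            mean[j] += row[j];
    }
    for (double& v : mean)
        v /= static_cast<double>(m.size());
    return mean;
}

void updateCDandGibbsSketch(BiasState& s, const Batch& pos_values,
                            const Batch& cd_neg_values,
                            const Batch& gibbs_neg_values,
                            double learning_rate,
                            double background_gibbs_update_ratio)
{
    const std::size_t size = s.bias.size();
    assert(pos_values.size() == gibbs_neg_values.size());
    assert(cd_neg_values.size() == gibbs_neg_values.size());

    const std::vector<double> gibbs_mean = columnMean(gibbs_neg_values, size);
    const std::vector<double> pos_mean = columnMean(pos_values, size);
    const std::vector<double> cd_mean = columnMean(cd_neg_values, size);

    // Moving average of the Gibbs statistics; the first call just copies.
    s.bias_neg_stats.resize(size, 0.0);
    const double c = s.gibbs_ma_coefficient;
    for (std::size_t j = 0; j < size; ++j)
        s.bias_neg_stats[j] = (s.neg_count == 0)
            ? gibbs_mean[j]
            : c * s.bias_neg_stats[j] + (1.0 - c) * gibbs_mean[j];
    ++s.neg_count;

    // Positive phase minus a blend of the two negative-phase estimates.
    const double r = background_gibbs_update_ratio;
    for (std::size_t j = 0; j < size; ++j)
        s.bias[j] += learning_rate
            * (pos_mean[j] - (r * s.bias_neg_stats[j] + (1.0 - r) * cd_mean[j]));
}
```

Setting `background_gibbs_update_ratio` to 0 reduces the bias step to the plain CD difference of means, while 1 uses only the moving-average statistics.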

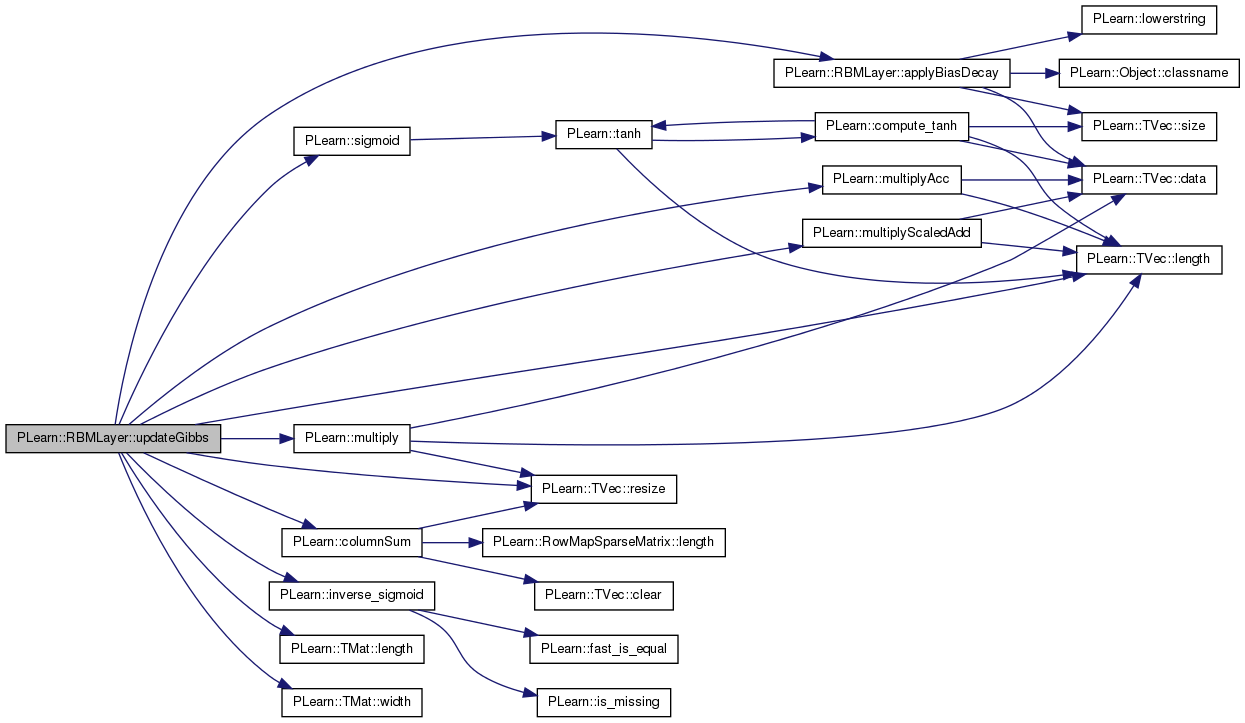

| void PLearn::RBMLayer::updateGibbs | ( | const Mat & | pos_values, |

| const Mat & | gibbs_neg_values | ||

| ) | [virtual] |

Reimplemented in PLearn::RBMLateralBinomialLayer.

Definition at line 662 of file RBMLayer.cc.

References applyBiasDecay(), bias, bias_neg_stats, PLearn::columnSum(), gibbs_ma_coefficient, gibbs_ma_increment, gibbs_ma_schedule, i, PLearn::inverse_sigmoid(), learning_rate, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::multiply(), PLearn::multiplyAcc(), PLearn::multiplyScaledAdd(), neg_count, PLASSERT, PLearn::TVec< T >::resize(), PLearn::sigmoid(), size, tmp, and PLearn::TMat< T >::width().

Referenced by PLearn::RBMLateralBinomialLayer::updateGibbs().

{

int minibatch_size = pos_values.length();

PLASSERT(pos_values.width()==size);

PLASSERT(gibbs_neg_values.width()==size);

PLASSERT(minibatch_size==gibbs_neg_values.length());

// neg_stats <-- gibbs_chain_statistics_forgetting_factor * neg_stats

// +(1-gibbs_chain_statistics_forgetting_factor)

// * meanoverrows(gibbs_neg_values)

tmp.resize(size);

real normalize_factor=1.0/minibatch_size;

columnSum(gibbs_neg_values,tmp);

if (neg_count==0)

multiply(tmp, normalize_factor, bias_neg_stats);

else // bias_neg_stats <-- tmp*(1-gibbs_chain_statistics_forgetting_factor)/minibatch_size

// +gibbs_chain_statistics_forgetting_factor*bias_neg_stats

multiplyScaledAdd(tmp,gibbs_ma_coefficient,

normalize_factor*(1-gibbs_ma_coefficient),

bias_neg_stats);

neg_count++;

bool increase_ma=false;

for (int i=0;i<gibbs_ma_schedule.length();i++)

if (gibbs_ma_schedule[i]==neg_count*minibatch_size)

{

increase_ma=true;

break;

}

if (increase_ma)

gibbs_ma_coefficient = sigmoid(gibbs_ma_increment + inverse_sigmoid(gibbs_ma_coefficient));

// delta w = lrate * ( meanoverrows(pos_values) - neg_stats )

columnSum(pos_values,tmp);

multiplyAcc(bias, tmp, learning_rate*normalize_factor);

multiplyAcc(bias, bias_neg_stats, -learning_rate);

applyBiasDecay();

}
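The scheduled change to gibbs_ma_coefficient in the listing above adds gibbs_ma_increment in logit space, which keeps the coefficient strictly between 0 and 1. A small standalone sketch of that step follows; `sigmoid`, `inverseSigmoid`, `scheduleHit`, and `bumpMaCoefficient` are written here for illustration and are assumed to behave like the PLearn helpers of similar name, not copied from them.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Logit: the inverse of sigmoid on the open interval (0, 1).
double inverseSigmoid(double p)
{
    assert(p > 0.0 && p < 1.0);
    return std::log(p / (1.0 - p));
}

// True when the number of samples seen so far matches a schedule entry,
// as in the test gibbs_ma_schedule[i] == neg_count * minibatch_size.
bool scheduleHit(const std::vector<long>& schedule, long samples_seen)
{
    for (long entry : schedule)
        if (entry == samples_seen)
            return true;
    return false;
}

// Shift the moving-average coefficient by `increment` in logit space.
double bumpMaCoefficient(double coefficient, double increment)
{
    return sigmoid(increment + inverseSigmoid(coefficient));
}
```

With a positive increment the coefficient moves toward 1, so the negative statistics are averaged over a longer history as training proceeds.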

static StaticInitializer PLearn::RBMLayer::_static_initializer_ [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMBinomialLayer, PLearn::RBMGaussianLayer, PLearn::RBMMixedLayer, PLearn::RBMMultinomialLayer, PLearn::RBMTruncExpLayer, PLearn::RBMLateralBinomialLayer, PLearn::RBMLocalMultinomialLayer, PLearn::RBMRateLayer, and PLearn::RBMWoodsLayer.

Definition at line 135 of file DEPRECATED/RBMLayer.h.

activation value:

Definition at line 104 of file RBMLayer.h.

Referenced by PLearn::RBMLateralBinomialLayer::computeExpectation(), PLearn::RBMWoodsLayer::computeExpectation(), PLearn::RBMRateLayer::computeExpectation(), PLearn::RBMLocalMultinomialLayer::computeExpectation(), fprop(), fpropNLL(), PLearn::RBMBinomialLayer::fpropNLL(), PLearn::RBMWoodsLayer::fpropNLL(), PLearn::RBMRateLayer::fpropNLL(), getAllActivations(), getUnitActivation(), PLearn::RBMBinomialLayer::RBMBinomialLayer(), PLearn::RBMGaussianLayer::RBMGaussianLayer(), PLearn::RBMMultinomialLayer::RBMMultinomialLayer(), and PLearn::RBMTruncExpLayer::RBMTruncExpLayer().

Values that determine the distribution: the activation value (before the sigmoid) for a binomial unit, or the pair (mu, sigma) for a Gaussian unit.

Definition at line 81 of file DEPRECATED/RBMLayer.h.

Referenced by PLearn::RBMMixedLayer::build_(), PLearn::RBMMultinomialLayer::computeExpectations(), PLearn::RBMLocalMultinomialLayer::computeExpectations(), PLearn::RBMRateLayer::computeExpectations(), PLearn::RBMGaussianLayer::computeExpectations(), PLearn::RBMBinomialLayer::computeExpectations(), PLearn::RBMLateralBinomialLayer::computeExpectations(), PLearn::RBMWoodsLayer::computeExpectations(), PLearn::RBMTruncExpLayer::computeExpectations(), fprop(), fpropNLL(), PLearn::RBMBinomialLayer::fpropNLL(), PLearn::RBMWoodsLayer::fpropNLL(), PLearn::RBMTruncExpLayer::generateSamples(), getAllActivations(), makeDeepCopyFromShallowCopy(), reset(), and setBatchSize().

Definition at line 105 of file RBMLayer.h.

Size of batches when using mini-batch.

Definition at line 101 of file RBMLayer.h.

Referenced by PLearn::RBMLateralBinomialLayer::bpropNLL(), PLearn::RBMBinomialLayer::bpropNLL(), PLearn::RBMMultinomialLayer::bpropNLL(), PLearn::RBMWoodsLayer::bpropNLL(), PLearn::RBMMixedLayer::bpropNLL(), PLearn::RBMLocalMultinomialLayer::bpropNLL(), PLearn::RBMGaussianLayer::bpropNLL(), PLearn::RBMMixedLayer::bpropUpdate(), PLearn::RBMMultinomialLayer::computeExpectations(), PLearn::RBMLocalMultinomialLayer::computeExpectations(), PLearn::RBMRateLayer::computeExpectations(), PLearn::RBMGaussianLayer::computeExpectations(), PLearn::RBMBinomialLayer::computeExpectations(), PLearn::RBMLateralBinomialLayer::computeExpectations(), PLearn::RBMWoodsLayer::computeExpectations(), PLearn::RBMTruncExpLayer::computeExpectations(), PLearn::RBMLateralBinomialLayer::fprop(), PLearn::RBMMultinomialLayer::fpropNLL(), fpropNLL(), PLearn::RBMLateralBinomialLayer::fpropNLL(), PLearn::RBMBinomialLayer::fpropNLL(), PLearn::RBMWoodsLayer::fpropNLL(), PLearn::RBMGaussianLayer::fpropNLL(), PLearn::RBMMixedLayer::fpropNLL(), PLearn::RBMLocalMultinomialLayer::fpropNLL(), PLearn::RBMWoodsLayer::generateSamples(), PLearn::RBMBinomialLayer::generateSamples(), PLearn::RBMTruncExpLayer::generateSamples(), PLearn::RBMLateralBinomialLayer::generateSamples(), PLearn::RBMMultinomialLayer::generateSamples(), PLearn::RBMRateLayer::generateSamples(), PLearn::RBMLocalMultinomialLayer::generateSamples(), PLearn::RBMGaussianLayer::generateSamples(), setBatchSize(), PLearn::RBMMixedLayer::setExpectations(), setExpectations(), PLearn::RBMMixedLayer::setExpectationsByRef(), setExpectationsByRef(), PLearn::RBMGaussianLayer::update(), and update().

Definition at line 93 of file RBMLayer.h.

Referenced by addBiasDecay(), applyBiasDecay(), PLearn::RBMLateralBinomialLayer::bpropUpdate(), PLearn::RBMRateLayer::bpropUpdate(), PLearn::RBMTruncExpLayer::bpropUpdate(), PLearn::RBMMultinomialLayer::bpropUpdate(), PLearn::RBMBinomialLayer::bpropUpdate(), PLearn::RBMWoodsLayer::bpropUpdate(), PLearn::RBMGaussianLayer::bpropUpdate(), PLearn::RBMLocalMultinomialLayer::bpropUpdate(), PLearn::RBMLateralBinomialLayer::energy(), PLearn::RBMGaussianLayer::energy(), PLearn::RBMBinomialLayer::energy(), PLearn::RBMLocalMultinomialLayer::energy(), PLearn::RBMMultinomialLayer::energy(), PLearn::RBMRateLayer::energy(), PLearn::RBMWoodsLayer::energy(), forget(), PLearn::RBMMultinomialLayer::fprop(), PLearn::RBMWoodsLayer::fprop(), PLearn::RBMBinomialLayer::fprop(), PLearn::RBMGaussianLayer::fprop(), PLearn::RBMRateLayer::fprop(), fprop(), PLearn::RBMLocalMultinomialLayer::fprop(), PLearn::RBMTruncExpLayer::fprop(), PLearn::RBMLateralBinomialLayer::fprop(), getAllActivations(), getUnitActivation(), PLearn::RBMBinomialLayer::RBMBinomialLayer(), PLearn::RBMGaussianLayer::RBMGaussianLayer(), PLearn::RBMMultinomialLayer::RBMMultinomialLayer(), PLearn::RBMTruncExpLayer::RBMTruncExpLayer(), setAllBias(), update(), updateCDandGibbs(), and updateGibbs().

Bias decay parameter.

Definition at line 79 of file RBMLayer.h.

Referenced by addBiasDecay(), and applyBiasDecay().

Type of decay applied to the biases.

Definition at line 76 of file RBMLayer.h.

Referenced by addBiasDecay(), and applyBiasDecay().

Vec PLearn::RBMLayer::bias_inc [protected] |

Stores the momentum of the gradient.

Definition at line 339 of file RBMLayer.h.

Referenced by PLearn::RBMLateralBinomialLayer::bpropUpdate(), PLearn::RBMRateLayer::bpropUpdate(), PLearn::RBMTruncExpLayer::bpropUpdate(), PLearn::RBMMultinomialLayer::bpropUpdate(), PLearn::RBMWoodsLayer::bpropUpdate(), PLearn::RBMBinomialLayer::bpropUpdate(), PLearn::RBMLocalMultinomialLayer::bpropUpdate(), PLearn::RBMGaussianLayer::bpropUpdate(), PLearn::RBMLateralBinomialLayer::build_(), and update().

Accumulates negative contribution to the gradient of bias.

Definition at line 336 of file RBMLayer.h.

Referenced by accumulateNegStats(), bpropCD(), clearStats(), PLearn::RBMBinomialLayer::RBMBinomialLayer(), PLearn::RBMGaussianLayer::RBMGaussianLayer(), PLearn::RBMMultinomialLayer::RBMMultinomialLayer(), PLearn::RBMTruncExpLayer::RBMTruncExpLayer(), update(), updateCDandGibbs(), and updateGibbs().

Accumulates positive contribution to the gradient of bias.

Definition at line 334 of file RBMLayer.h.