PLearn 0.1

Select the M "best" of N trained PLearners based on a train cost.

#include <BestAveragingPLearner.h>

Public Member Functions | |

| BestAveragingPLearner () | |

| Default constructor. | |

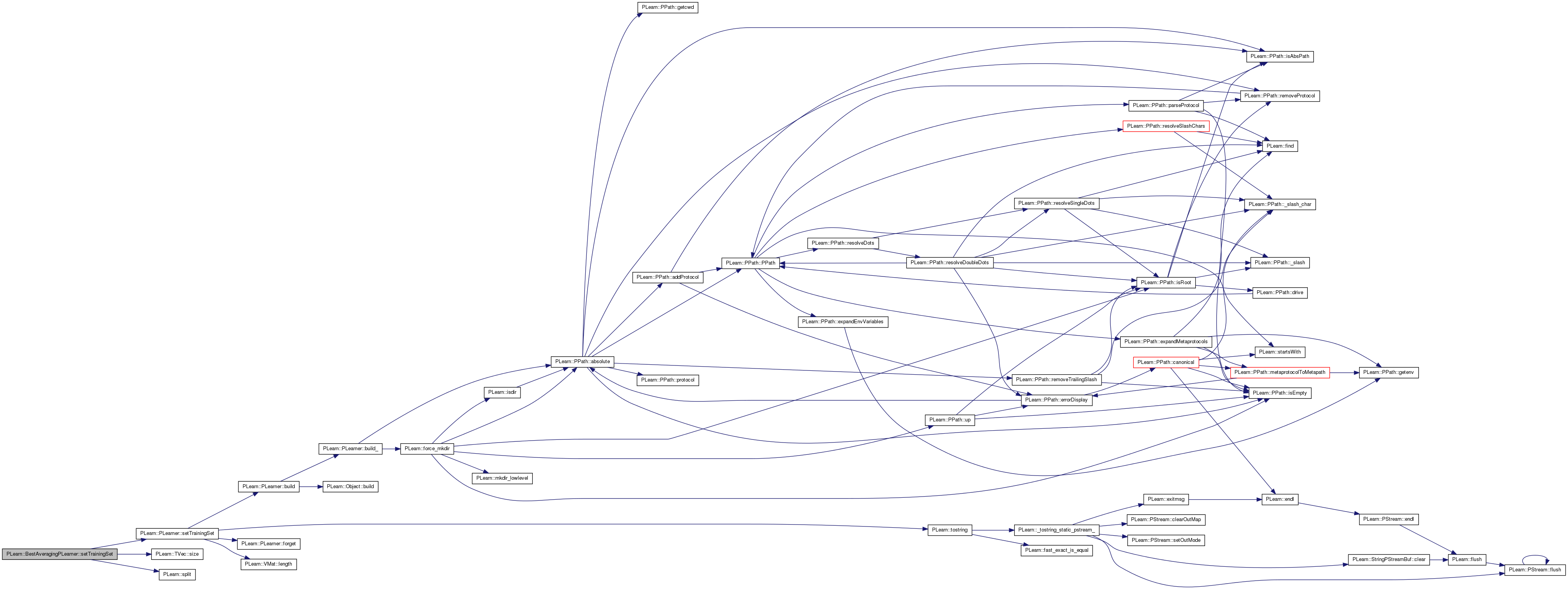

| virtual void | setTrainingSet (VMat training_set, bool call_forget=true) |

| Forwarded to inner learners. | |

| virtual void | setTrainStatsCollector (PP< VecStatsCollector > statscol) |

| New train-stats collectors are instantiated for sublearners. | |

| virtual void | setExperimentDirectory (const PPath &the_expdir) |

| Forwarded to inner learners (suffixed with '/learner_i') | |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

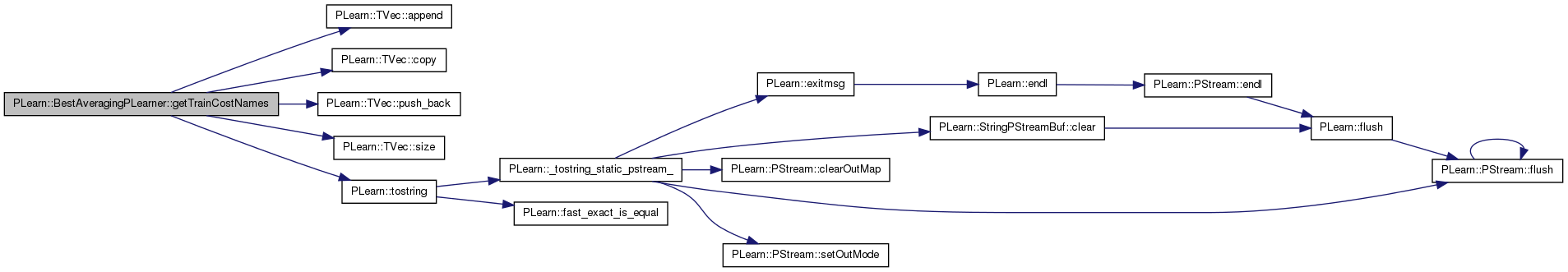

| virtual TVec< std::string > | getTrainCostNames () const |

| The train costs are simply the concatenation of all sublearners' train costs. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual BestAveragingPLearner * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| TVec< PP< PLearner > > | m_learner_set |

| The set of all learners to train, given in extension. | |

| PP< PLearner > | m_learner_template |

| If 'learner_set' is not specified, a template PLearner used to instantiate 'learner_set'. | |

| int32_t | m_initial_seed |

| If learners are instantiated from 'learner_template', the initial seed value to set into the learners before building them. | |

| string | m_seed_option |

| Use in conjunction with 'initial_seed'; option name pointing to the seed to be initialized. | |

| int | m_total_learner_num |

| Total number of learners to instantiate from learner_template (if 'learner_set' is not specified). | |

| int | m_best_learner_num |

| Number of BEST train-time learners to keep and average at compute-output time. | |

| string | m_comparison_statspec |

| Statistic specification to use to compare the training performance between learners. | |

| PP< Splitter > | m_splitter |

| Optional splitter that can be used to create the individual training sets for the learners. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| int | m_cached_outputsize |

| Cached outputsize, determined from the inner learners. | |

| Vec | m_learner_train_costs |

| List of train cost values for each learner in 'learner_set'. | |

| TVec< PP< PLearner > > | m_best_learners |

| Learners that have been found to be the best and are being kept. | |

| Vec | m_output_buffer |

| Buffer for the computed output of an inner model. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Select the M "best" of N trained PLearners based on a train cost.

This PLearner takes N raw models (themselves PLearners) and trains them all on the same data (or on various splits given by an optional Splitter), then selects the M "best" models based on a train cost. At compute-output time, it outputs the arithmetic mean of the outputs of the selected models (which works fine for regression).

The train costs of this learner are simply the concatenation of the train costs of all sublearners. The following costs are appended: the cost 'selected_i', for 0 <= i < M, holds the index (between 0 and N-1) of the i-th selected model.

For now, the only test cost of this learner is 'mse'.

Definition at line 64 of file BestAveragingPLearner.h.

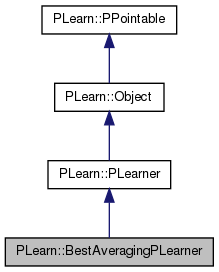

typedef PLearner PLearn::BestAveragingPLearner::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 66 of file BestAveragingPLearner.h.

| PLearn::BestAveragingPLearner::BestAveragingPLearner | ( | ) |

Default constructor.

Definition at line 66 of file BestAveragingPLearner.cc.

: m_initial_seed(-1), m_seed_option("seed"), m_total_learner_num(0), m_best_learner_num(0), m_cached_outputsize(-1) { }

| string PLearn::BestAveragingPLearner::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 64 of file BestAveragingPLearner.cc.

| OptionList & PLearn::BestAveragingPLearner::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 64 of file BestAveragingPLearner.cc.

| RemoteMethodMap & PLearn::BestAveragingPLearner::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 64 of file BestAveragingPLearner.cc.

| bool PLearn::BestAveragingPLearner::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 64 of file BestAveragingPLearner.cc.

| Object * PLearn::BestAveragingPLearner::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 64 of file BestAveragingPLearner.cc.

| void PLearn::BestAveragingPLearner::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 64 of file BestAveragingPLearner.cc.

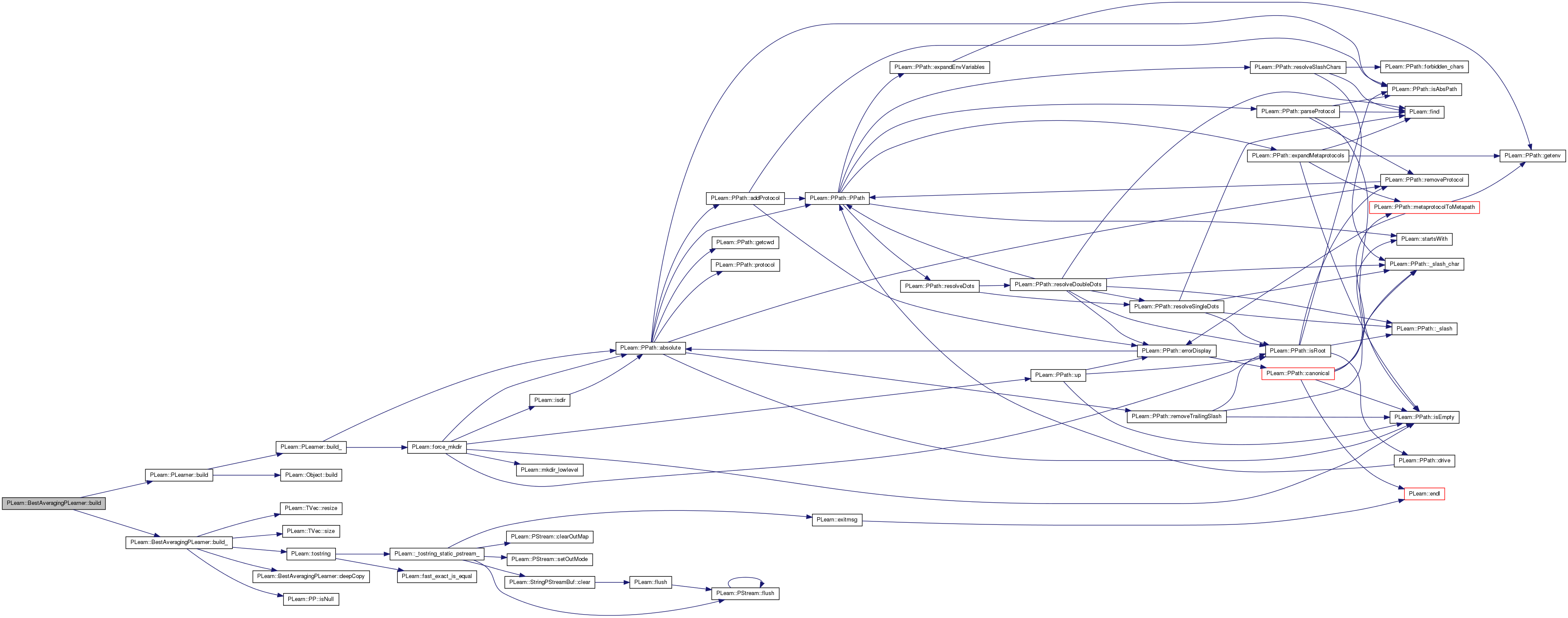

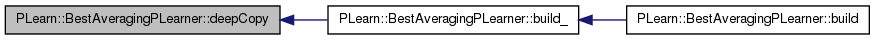

| void PLearn::BestAveragingPLearner::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 213 of file BestAveragingPLearner.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

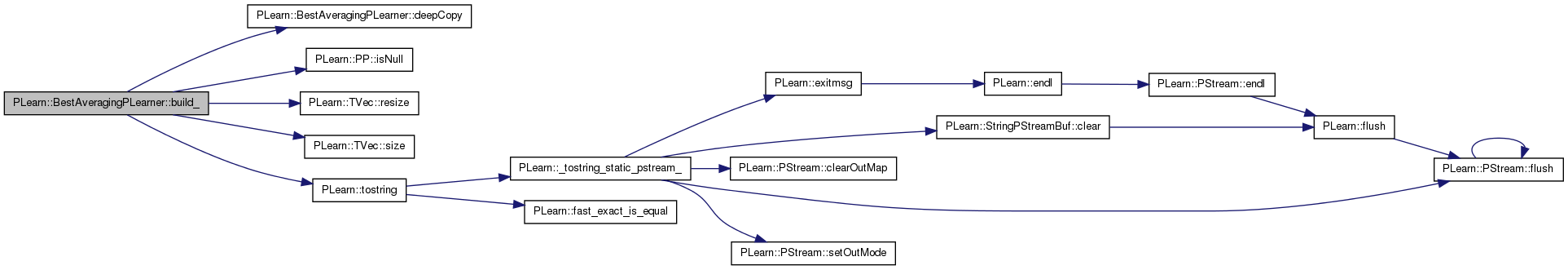

| void PLearn::BestAveragingPLearner::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 173 of file BestAveragingPLearner.cc.

References deepCopy(), i, PLearn::PP< T >::isNull(), m_best_learner_num, m_initial_seed, m_learner_set, m_learner_template, m_seed_option, m_total_learner_num, N, PLERROR, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), and PLearn::tostring().

Referenced by build().

{

if (! m_learner_set.size() && m_learner_template.isNull())

PLERROR("%s: one of 'learner_set' or 'learner_template' must be specified",

__FUNCTION__);

// If both 'learner_set' and 'learner_template' are specified, the former

// silently overrides the latter. Reason: after instantiation of

// learner_template, stuff is put into learner_set as a result.

if (! m_learner_set.size() && m_learner_template) {

// Sanity check on other options

if (m_total_learner_num < 1)

PLERROR("%s: 'total_learner_num' must be strictly positive",

__FUNCTION__);

const int N = m_total_learner_num;

int32_t cur_seed = m_initial_seed;

m_learner_set.resize(N);

for (int i=0 ; i<N ; ++i) {

PP<PLearner> new_learner = PLearn::deepCopy(m_learner_template);

new_learner->setOption(m_seed_option, tostring(cur_seed));

new_learner->build();

new_learner->forget();

m_learner_set[i] = new_learner;

if (cur_seed > 0)

++cur_seed;

}

}

// Some more sanity checking

if (m_best_learner_num < 1)

PLERROR("%s: 'best_learner_num' must be strictly positive", __FUNCTION__);

if (m_best_learner_num > m_learner_set.size())

PLERROR("%s: 'best_learner_num' (=%d) must not be larger than the total "

"number of learners (=%d)", __FUNCTION__, m_best_learner_num,

m_learner_set.size());

}
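The seed bookkeeping in the loop above can be isolated as a small stand-alone sketch. It uses only the standard library; `learner_seeds` is a hypothetical helper name, not a PLearn function, and it reproduces only the seed sequence (no deep copy, `build()` or `forget()`).

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of PLearn): returns the seed that each of the
// `total_learner_num` template copies would receive, mirroring the loop in build_().
std::vector<std::int32_t> learner_seeds(std::int32_t initial_seed, int total_learner_num)
{
    assert(total_learner_num >= 1);   // build_() reports a PLERROR in this case
    std::vector<std::int32_t> seeds;
    std::int32_t cur_seed = initial_seed;
    for (int i = 0; i < total_learner_num; ++i) {
        seeds.push_back(cur_seed);
        if (cur_seed > 0)             // non-positive seeds are reused as-is
            ++cur_seed;
    }
    return seeds;
}
```

With `initial_seed` 5 and three learners this gives the seeds 5, 6, 7, whereas the default `initial_seed` of -1 hands -1 to every learner.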

| string PLearn::BestAveragingPLearner::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 64 of file BestAveragingPLearner.cc.

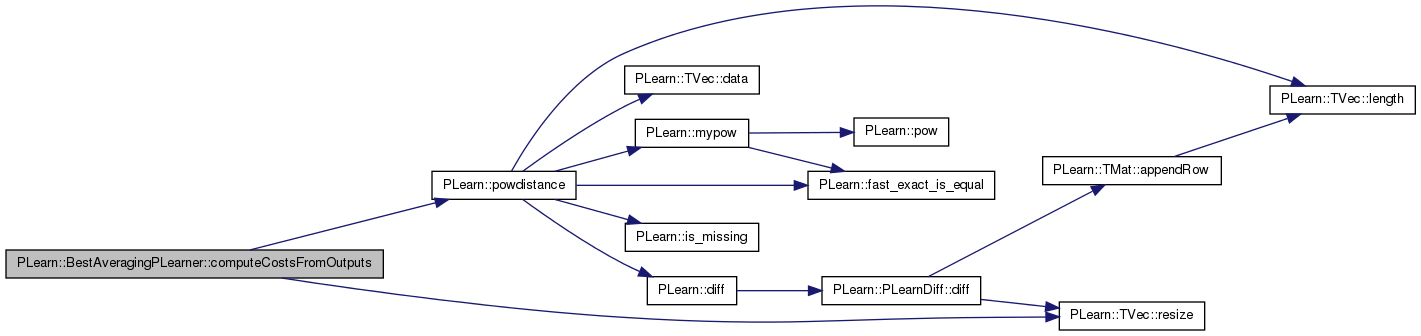

| void PLearn::BestAveragingPLearner::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 380 of file BestAveragingPLearner.cc.

References PLearn::powdistance(), and PLearn::TVec< T >::resize().

{

// For now, only MSE is computed...

real mse = powdistance(output, target);

costs.resize(1);

costs[0] = mse;

}

| void PLearn::BestAveragingPLearner::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 363 of file BestAveragingPLearner.cc.

References PLearn::TVec< T >::fill(), i, m_best_learners, m_output_buffer, n, outputsize(), PLearn::TVec< T >::resize(), and PLearn::TVec< T >::size().

{

output.resize(outputsize());

output.fill(0.0);

m_output_buffer.resize(outputsize());

// Basic strategy: accumulate into output, then divide by number of

// learners (take unweighted arithmetic mean). Works fine as long as we

// don't accumulate millions of terms...

for (int i=0, n=m_best_learners.size() ; i<n ; ++i) {

m_best_learners[i]->computeOutput(input, m_output_buffer);

output += m_output_buffer;

}

output /= m_best_learners.size();

}
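The accumulate-then-divide strategy above can be sketched without PLearn types. This is only an illustration: `average_outputs` is a hypothetical name, and plain `std::vector<double>` stands in for `Vec`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the loop in computeOutput(): `outputs` holds one
// output vector per selected learner; the result is their unweighted mean.
std::vector<double> average_outputs(const std::vector<std::vector<double>>& outputs)
{
    assert(!outputs.empty());         // assumption of this sketch
    std::vector<double> mean(outputs.front().size(), 0.0);
    for (const std::vector<double>& out : outputs) {
        assert(out.size() == mean.size());
        for (std::size_t k = 0; k < mean.size(); ++k)
            mean[k] += out[k];        // accumulate into the result
    }
    for (double& v : mean)
        v /= static_cast<double>(outputs.size());
    return mean;
}
```

The sketch asserts that at least one learner is present; the listing above contains no such guard before dividing by `m_best_learners.size()`.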

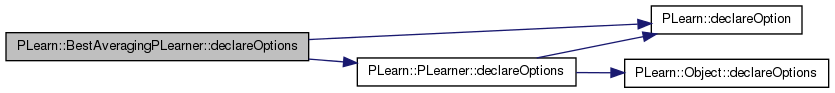

| void PLearn::BestAveragingPLearner::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 74 of file BestAveragingPLearner.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), PLearn::OptionBase::learntoption, m_best_learner_num, m_best_learners, m_cached_outputsize, m_comparison_statspec, m_initial_seed, m_learner_set, m_learner_template, m_learner_train_costs, m_seed_option, m_splitter, and m_total_learner_num.

{

//##### Build Options ###################################################

declareOption(

ol, "learner_set", &BestAveragingPLearner::m_learner_set,

OptionBase::buildoption,

"The set of all learners to train, given in extension. If this option\n"

"is specified, the learner template (see below) is ignored. Note that\n"

"these objects ARE NOT deep-copied before being trained.\n");

declareOption(

ol, "learner_template", &BestAveragingPLearner::m_learner_template,

OptionBase::buildoption,

"If 'learner_set' is not specified, a template PLearner used to\n"

"instantiate 'learner_set'. When instantiation is being carried out,\n"

"the seed is set sequentially from 'initial_seed'. The instantiation\n"

"sequence is as follows:\n"

"\n"

"- (1) template is deep-copied\n"

"- (2) seed and expdir are set\n"

"- (3) build() is called\n"

"- (4) forget() is called\n"

"\n"

"The expdir is set from the BestAveragingPLearner's expdir (if any)\n"

"by suffixing '/learner_i'.\n");

declareOption(

ol, "initial_seed", &BestAveragingPLearner::m_initial_seed,

OptionBase::buildoption,

"If learners are instantiated from 'learner_template', the initial seed\n"

"value to set into the learners before building them. The seed is\n"

"incremented by one from that starting point for each successive learner\n"

"that is being instantiated. If this value is <= 0, it is used as-is\n"

"without being incremented.\n");

declareOption(

ol, "seed_option", &BestAveragingPLearner::m_seed_option,

OptionBase::buildoption,

"Use in conjunction with 'initial_seed'; option name pointing to the\n"

"seed to be initialized. The default is just 'seed', which is the\n"

"PLearner option name for the seed, and is adequate if the\n"

"learner_template is \"shallow\", such as NNet. This option is useful if\n"

"the learner_template is a complex learner (e.g. HyperLearner) and the\n"

"seed must actually be set inside one of the inner learners. In the\n"

"particular case of HyperLearner, one could use 'learner.seed' as the\n"

"value for this option.\n");

declareOption(

ol, "total_learner_num", &BestAveragingPLearner::m_total_learner_num,

OptionBase::buildoption,

"Total number of learners to instantiate from learner_template (if\n"

"'learner_set' is not specified.\n");

declareOption(

ol, "best_learner_num", &BestAveragingPLearner::m_best_learner_num,

OptionBase::buildoption,

"Number of BEST train-time learners to keep and average at\n"

"compute-output time.\n");

declareOption(

ol, "comparison_statspec", &BestAveragingPLearner::m_comparison_statspec,

OptionBase::buildoption,

"Statistic specification to use to compare the training performance\n"

"between learners. For example, if all learners have a 'mse' measure,\n"

"this would be \"E[mse]\". It is assumed that all learners make available\n"

"the statistic under the same name.\n");

declareOption(

ol, "splitter", &BestAveragingPLearner::m_splitter,

OptionBase::buildoption,

"Optional splitter that can be used to create the individual training\n"

"sets for the learners. If this is specified, it is assumed that the\n"

"splitter returns a number of splits equal to the number of learners.\n"

"Each split is used as a learner's training set. If not specified,\n"

"all learners receive the same training set (passed to setTrainingSet)\n");

//##### Learnt Options ##################################################

declareOption(

ol, "cached_outputsize", &BestAveragingPLearner::m_cached_outputsize,

OptionBase::learntoption,

"Cached outputsize, determined from the inner learners");

declareOption(

ol, "learner_train_costs", &BestAveragingPLearner::m_learner_train_costs,

OptionBase::learntoption,

"List of train costs values for each learner in 'learner_set'");

declareOption(

ol, "best_learners", &BestAveragingPLearner::m_best_learners,

OptionBase::learntoption,

"Learners that have been found to be the best and are being kept");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::BestAveragingPLearner::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 195 of file BestAveragingPLearner.h.

| BestAveragingPLearner * PLearn::BestAveragingPLearner::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 64 of file BestAveragingPLearner.cc.

Referenced by build_().

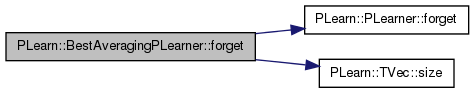

| void PLearn::BestAveragingPLearner::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 305 of file BestAveragingPLearner.cc.

References PLearn::PLearner::forget(), i, m_learner_set, n, and PLearn::TVec< T >::size().

{

inherited::forget();

for (int i=0, n=m_learner_set.size() ; i<n ; ++i)

m_learner_set[i]->forget();

}

| OptionList & PLearn::BestAveragingPLearner::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 64 of file BestAveragingPLearner.cc.

| OptionMap & PLearn::BestAveragingPLearner::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 64 of file BestAveragingPLearner.cc.

| RemoteMethodMap & PLearn::BestAveragingPLearner::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 64 of file BestAveragingPLearner.cc.

| TVec< string > PLearn::BestAveragingPLearner::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 390 of file BestAveragingPLearner.cc.

{

return TVec<string>(1, "mse");

}

| TVec< string > PLearn::BestAveragingPLearner::getTrainCostNames | ( | ) | const [virtual] |

The train costs are simply the concatenation of all sublearners' train costs.

Implements PLearn::PLearner.

Definition at line 396 of file BestAveragingPLearner.cc.

References PLearn::TVec< T >::append(), c, PLearn::TVec< T >::copy(), i, j, m, m_best_learner_num, m_learner_set, n, PLearn::TVec< T >::push_back(), PLearn::TVec< T >::size(), and PLearn::tostring().

Referenced by setTrainStatsCollector().

{

TVec<string> c;

for (int i=0, n=m_learner_set.size() ; i<n ; ++i) {

TVec<string> learner_costs = m_learner_set[i]->getTrainCostNames().copy();

for (int j=0, m=learner_costs.size() ; j<m ; ++j)

learner_costs[j] = "learner"+tostring(i)+'_'+learner_costs[j];

c.append(learner_costs);

}

for (int i=0 ; i<m_best_learner_num ; ++i)

c.push_back("selected_" + tostring(i));

return c;

}
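To make the naming scheme concrete, here is a stand-alone sketch of the same construction. `train_cost_names` and its parameters are hypothetical; `sub_costs[i]` plays the role of `m_learner_set[i]->getTrainCostNames()`.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for getTrainCostNames(): prefixes each sublearner's
// cost names with "learner<i>_" and appends one "selected_<i>" per kept learner.
std::vector<std::string> train_cost_names(
    const std::vector<std::vector<std::string>>& sub_costs, int best_learner_num)
{
    assert(best_learner_num >= 0);
    std::vector<std::string> names;
    for (std::size_t i = 0; i < sub_costs.size(); ++i)
        for (const std::string& cost : sub_costs[i])
            names.push_back("learner" + std::to_string(i) + "_" + cost);
    for (int i = 0; i < best_learner_num; ++i)
        names.push_back("selected_" + std::to_string(i));
    return names;
}
```

For two sublearners with costs {mse} and {mse, nll} and M = 1, the result is learner0_mse, learner1_mse, learner1_nll, selected_0.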

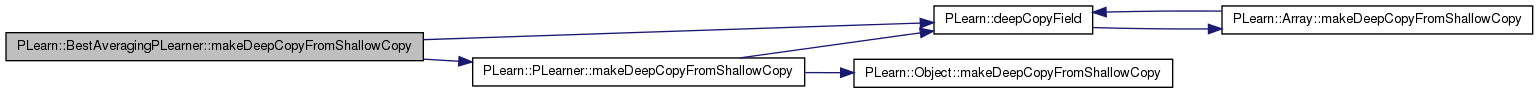

| void PLearn::BestAveragingPLearner::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 220 of file BestAveragingPLearner.cc.

References PLearn::deepCopyField(), m_best_learners, m_learner_set, m_learner_template, m_learner_train_costs, m_output_buffer, m_splitter, and PLearn::PLearner::makeDeepCopyFromShallowCopy().

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(m_learner_set, copies);

deepCopyField(m_learner_template, copies);

deepCopyField(m_splitter, copies);

deepCopyField(m_learner_train_costs, copies);

deepCopyField(m_best_learners, copies);

deepCopyField(m_output_buffer, copies);

}

| int PLearn::BestAveragingPLearner::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 286 of file BestAveragingPLearner.cc.

References i, m_cached_outputsize, m_learner_set, n, PLERROR, and PLearn::TVec< T >::size().

Referenced by computeOutput().

{

// If the outputsize has not already been determined, get it from the inner

// learners.

if (m_cached_outputsize < 0) {

for (int i=0, n=m_learner_set.size() ; i<n ; ++i) {

int cur_outputsize = m_learner_set[i]->outputsize();

if (m_cached_outputsize < 0)

m_cached_outputsize = cur_outputsize;

else if (m_cached_outputsize != cur_outputsize)

PLERROR("%s: all inner learners must have the same outputsize; "

"learner %d has an outputsize of %d, contrarily to %d for "

"the previous learners", __FUNCTION__, i, cur_outputsize,

m_cached_outputsize);

}

}

return m_cached_outputsize;

}

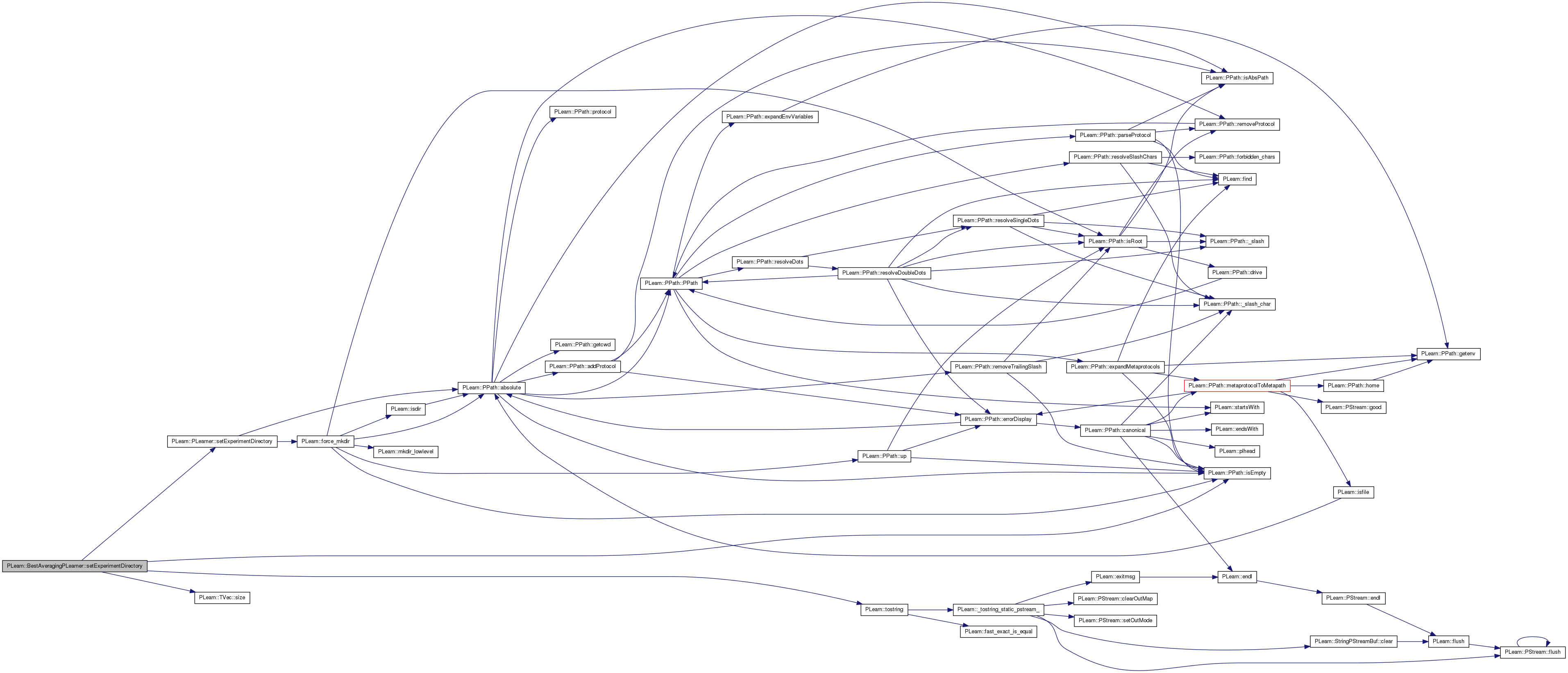

| void PLearn::BestAveragingPLearner::setExperimentDirectory | ( | const PPath & | the_expdir | ) | [virtual] |

Forwarded to inner learners (suffixed with '/learner_i')

Reimplemented from PLearn::PLearner.

Definition at line 275 of file BestAveragingPLearner.cc.

References i, PLearn::PPath::isEmpty(), m_learner_set, n, PLearn::PLearner::setExperimentDirectory(), PLearn::TVec< T >::size(), and PLearn::tostring().

{

inherited::setExperimentDirectory(the_expdir);

if (! the_expdir.isEmpty()) {

for (int i=0, n=m_learner_set.size() ; i<n ; ++i)

m_learner_set[i]->setExperimentDirectory(

the_expdir / "learner_" + tostring(i));

}

}
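The per-learner directory layout can be illustrated with plain strings. This sketch assumes that the `PPath` `/` operator joins components with a `/` separator, as the '/learner_i' wording in the description suggests; `learner_expdirs` is a hypothetical helper name.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical helper: lists the experiment directory handed to each inner
// learner. An empty expdir forwards nothing, as in the listing above.
std::vector<std::string> learner_expdirs(const std::string& expdir, int n_learners)
{
    assert(n_learners >= 0);
    std::vector<std::string> dirs;
    if (expdir.empty())
        return dirs;
    for (int i = 0; i < n_learners; ++i)
        dirs.push_back(expdir + "/learner_" + std::to_string(i));
    return dirs;
}
```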

| void PLearn::BestAveragingPLearner::setTrainingSet | ( | VMat | training_set, |

| bool | call_forget = true |

||

| ) | [virtual] |

Forwarded to inner learners.

Reimplemented from PLearn::PLearner.

Definition at line 233 of file BestAveragingPLearner.cc.

References i, m_learner_set, m_splitter, n, PLERROR, PLearn::PLearner::setTrainingSet(), PLearn::TVec< T >::size(), and PLearn::split().

{

inherited::setTrainingSet(training_set, call_forget);

// Make intelligent use of splitter if any

if (m_splitter) {

m_splitter->setDataSet(training_set);

// Splitter should return exactly the same number of splits as there

// are inner learners

if (m_splitter->nsplits() != m_learner_set.size())

PLERROR("%s: splitter should return exactly the same number of splits (=%d) "

"as there are inner learners (=%d)", __FUNCTION__,

m_splitter->nsplits(), m_learner_set.size());

for (int i=0, n=m_learner_set.size() ; i<n ; ++i) {

TVec<VMat> split = m_splitter->getSplit(i);

if (split.size() != 1)

PLERROR("%s: split %d should return exactly 1 VMat (returned %d)",

__FUNCTION__, i, split.size());

m_learner_set[i]->setTrainingSet(split[0], call_forget);

}

}

else {

for (int i=0, n=m_learner_set.size() ; i<n ; ++i)

m_learner_set[i]->setTrainingSet(training_set, call_forget);

}

}

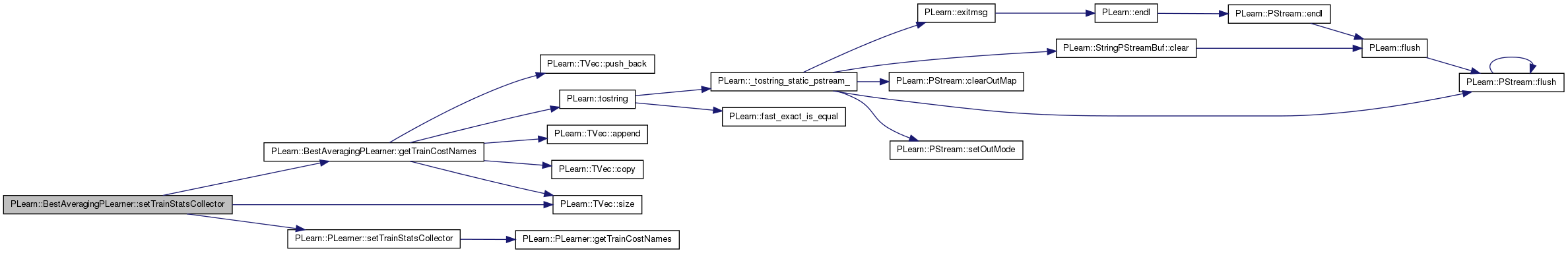

| void PLearn::BestAveragingPLearner::setTrainStatsCollector | ( | PP< VecStatsCollector > | statscol | ) | [virtual] |

New train-stats collectors are instantiated for sublearners.

Reimplemented from PLearn::PLearner.

Definition at line 263 of file BestAveragingPLearner.cc.

References getTrainCostNames(), i, m_learner_set, n, PLearn::PLearner::setTrainStatsCollector(), and PLearn::TVec< T >::size().

{

inherited::setTrainStatsCollector(statscol);

for (int i=0, n=m_learner_set.size() ; i<n ; ++i) {

// Set the statistic names so we can call getStat on the VSC.

PP<VecStatsCollector> vsc = new VecStatsCollector;

vsc->setFieldNames(m_learner_set[i]->getTrainCostNames());

m_learner_set[i]->setTrainStatsCollector(vsc);

}

}

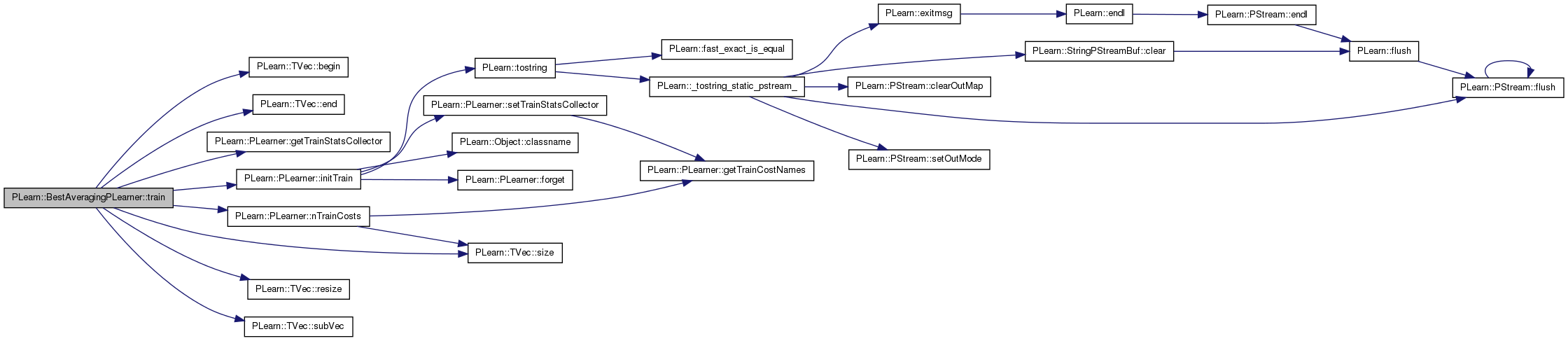

| void PLearn::BestAveragingPLearner::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 313 of file BestAveragingPLearner.cc.

References PLearn::TVec< T >::begin(), PLearn::TVec< T >::end(), PLearn::PLearner::getTrainStatsCollector(), i, PLearn::PLearner::initTrain(), m_best_learner_num, m_best_learners, m_comparison_statspec, m_learner_set, m_learner_train_costs, N, PLearn::PLearner::nTrainCosts(), PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::TVec< T >::subVec(), and PLearn::PLearner::verbosity.

{

if (! initTrain())

return;

const int N = m_learner_set.size();

m_learner_train_costs.resize(N);

TVec< pair<real, int> > model_scores(N);

PP<ProgressBar> pb(verbosity?

new ProgressBar("Training sublearners of BestAveragingPLearner",N) : 0);

// Basic idea is to train all sublearners, then sample the train statistic

// used for comparison, and fill out the member variable 'm_best_learners'.

// Finally we collect the expectation of their sublearners train statistics

// (to give this learner's train statistics)

// Actual train-cost vector

Vec traincosts(nTrainCosts());

int pos_traincost = 0;

for (int i=0 ; i<N ; ++i) {

if (pb)

pb->update(i);

m_learner_set[i]->train();

PP<VecStatsCollector> trainstats = m_learner_set[i]->getTrainStatsCollector();

real cur_comparison = trainstats->getStat(m_comparison_statspec);

m_learner_train_costs[i] = cur_comparison;

model_scores[i] = make_pair(cur_comparison, i);

Vec cur_traincosts = trainstats->getMean();

traincosts.subVec(pos_traincost, cur_traincosts.size()) << cur_traincosts;

pos_traincost += cur_traincosts.size();

}

// Find the M best train costs

sort(model_scores.begin(), model_scores.end());

PLASSERT( m_best_learner_num <= model_scores.size() );

m_best_learners.resize(m_best_learner_num);

for (int i=0 ; i<m_best_learner_num ; ++i) {

m_best_learners[i] = m_learner_set[model_scores[i].second];

traincosts[pos_traincost++] = model_scores[i].second;

}

// Accumulate into train statscollector

PLASSERT( getTrainStatsCollector() );

getTrainStatsCollector()->update(traincosts);

}
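The selection step can be sketched on its own with the standard library. `select_best` is a hypothetical name; `train_costs[i]` stands for the value of the comparison statistic for learner i.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical stand-in for the selection in train(): returns the indices of
// the `best_learner_num` learners with the smallest comparison statistic.
std::vector<int> select_best(const std::vector<double>& train_costs, int best_learner_num)
{
    assert(best_learner_num >= 0 &&
           static_cast<std::size_t>(best_learner_num) <= train_costs.size());
    std::vector<std::pair<double, int>> scores;
    for (std::size_t i = 0; i < train_costs.size(); ++i)
        scores.push_back(std::make_pair(train_costs[i], static_cast<int>(i)));
    // Pairs compare by cost first, then by index, so ties keep the lower index.
    std::sort(scores.begin(), scores.end());
    std::vector<int> selected;
    for (int i = 0; i < best_learner_num; ++i)
        selected.push_back(scores[i].second);
    return selected;
}
```

Because the pairs are sorted in ascending order, a lower value of the comparison statistic is treated as better, so the statistic named in 'comparison_statspec' should be a cost rather than a score to maximize.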

StaticInitializer PLearn::BestAveragingPLearner::_static_initializer_ [static] |

Reimplemented from PLearn::PLearner.

Definition at line 195 of file BestAveragingPLearner.h.

int PLearn::BestAveragingPLearner::m_best_learner_num |

Number of BEST train-time learners to keep and average at compute-output time.

Definition at line 125 of file BestAveragingPLearner.h.

Referenced by build_(), declareOptions(), getTrainCostNames(), and train().

TVec< PP<PLearner> > PLearn::BestAveragingPLearner::m_best_learners [protected] |

Learners that have been found to be the best and are being kept.

Definition at line 213 of file BestAveragingPLearner.h.

Referenced by computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

int PLearn::BestAveragingPLearner::m_cached_outputsize [mutable, protected] |

Cached outputsize, determined from the inner learners.

Definition at line 207 of file BestAveragingPLearner.h.

Referenced by declareOptions(), and outputsize().

string PLearn::BestAveragingPLearner::m_comparison_statspec |

Statistic specification to use to compare the training performance between learners.

For example, if all learners have a 'mse' measure, this would be "E[mse]". It is assumed that all learners make available the statistic under the same name.

Definition at line 133 of file BestAveragingPLearner.h.

Referenced by declareOptions(), and train().

int32_t PLearn::BestAveragingPLearner::m_initial_seed |

If learners are instantiated from 'learner_template', the initial seed value to set into the learners before building them.

The seed is incremented by one from that starting point for each successive learner that is being instantiated. If this value is <= 0, it is used as-is without being incremented.

Definition at line 101 of file BestAveragingPLearner.h.

Referenced by build_(), and declareOptions().

TVec< PP<PLearner> > PLearn::BestAveragingPLearner::m_learner_set |

The set of all learners to train, given in extension.

If this option is specified, the learner template (see below) is ignored. Note that these objects ARE NOT deep-copied before being trained.

Definition at line 76 of file BestAveragingPLearner.h.

Referenced by build_(), declareOptions(), forget(), getTrainCostNames(), makeDeepCopyFromShallowCopy(), outputsize(), setExperimentDirectory(), setTrainingSet(), setTrainStatsCollector(), and train().

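PP<PLearner> PLearn::BestAveragingPLearner::m_learner_template |

If 'learner_set' is not specified, a template PLearner used to instantiate 'learner_set'.

When instantiation is being carried out, the seed is set sequentially from 'initial_seed'. The instantiation sequence is as follows:

- (1) template is deep-copied
- (2) seed and expdir are set
- (3) build() is called
- (4) forget() is called

The expdir is set from the BestAveragingPLearner's expdir (if any) by suffixing '/learner_i'.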

Definition at line 92 of file BestAveragingPLearner.h.

Referenced by build_(), declareOptions(), and makeDeepCopyFromShallowCopy().

Vec PLearn::BestAveragingPLearner::m_learner_train_costs [protected] |

List of train cost values for each learner in 'learner_set'.

Definition at line 210 of file BestAveragingPLearner.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::BestAveragingPLearner::m_output_buffer [mutable, protected] |

Buffer for the computed output of an inner model.

Definition at line 216 of file BestAveragingPLearner.h.

Referenced by computeOutput(), and makeDeepCopyFromShallowCopy().

string PLearn::BestAveragingPLearner::m_seed_option |

Use in conjunction with 'initial_seed'; option name pointing to the seed to be initialized.

The default is just 'seed', which is the PLearner option name for the seed, and is adequate if the learner_template is "shallow", such as NNet. This option is useful if the learner_template is a complex learner (e.g. HyperLearner) and the seed must actually be set inside one of the inner learners. In the particular case of HyperLearner, one could use 'learner.seed' as the value for this option.

Definition at line 113 of file BestAveragingPLearner.h.

Referenced by build_(), and declareOptions().

PP<Splitter> PLearn::BestAveragingPLearner::m_splitter |

Optional splitter that can be used to create the individual training sets for the learners.

If this is specified, it is assumed that the splitter returns a number of splits equal to the number of learners. Each split is used as a learner's training set. If not specified, all learners receive the same training set (passed to setTrainingSet).

Definition at line 142 of file BestAveragingPLearner.h.

Referenced by declareOptions(), makeDeepCopyFromShallowCopy(), and setTrainingSet().

int PLearn::BestAveragingPLearner::m_total_learner_num |

Total number of learners to instantiate from learner_template (if 'learner_set' is not specified).

Definition at line 119 of file BestAveragingPLearner.h.

Referenced by build_(), and declareOptions().

1.7.4
