PLearn 0.1

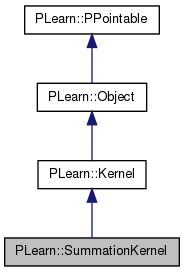

Kernel computing the sum of other kernels.

#include <SummationKernel.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | SummationKernel () | Default constructor. |
| virtual void | setDataForKernelMatrix (VMat the_data) | Distribute the data to the terms (sub-kernels) of the summation, subsetting the inputs if required. |
| virtual void | addDataForKernelMatrix (const Vec &newRow) | Distribute the new row to the terms (sub-kernels) of the summation, subsetting the inputs if required. |
| virtual real | evaluate (const Vec &x1, const Vec &x2) const | Compute K(x1,x2). |
| virtual real | evaluate_i_x (int i, const Vec &x, real) const | Evaluate a test example x against a training example given by its index. |
| virtual void | evaluate_all_i_x (const Vec &x, const Vec &k_xi_x, real squared_norm_of_x=-1, int istart=0) const | Fill k_xi_x with K(x_i, x), for all i from istart to istart + k_xi_x.length() - 1. |
| virtual void | computeGramMatrix (Mat K) const | Compute the Gram matrix by calling each sub-kernel's computeGramMatrix() and summing the results. |
| virtual void | computeGramMatrixDerivative (Mat &KD, const string &kernel_param, real epsilon=1e-6) const | Directly compute the derivative of the Gram matrix with respect to a hyperparameter (faster than finite differences). |
| virtual string | classname () const | |
| virtual OptionList & | getOptionList () const | |
| virtual OptionMap & | getOptionMap () const | |
| virtual RemoteMethodMap & | getRemoteMethodMap () const | |
| virtual SummationKernel * | deepCopy (CopiesMap &copies) const | |
| virtual void | build () | Post-constructor. |
| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) | Transforms a shallow copy into a deep copy. |

Static Public Member Functions

| Type | Member | Description |
|---|---|---|
| static string | _classname_ () | |
| static OptionList & | _getOptionList_ () | |
| static RemoteMethodMap & | _getRemoteMethodMap_ () | |
| static Object * | _new_instance_for_typemap_ () | |
| static bool | _isa_ (const Object *o) | |
| static void | _static_initialize_ () | |
| static const PPath & | declaringFile () | |

Public Attributes

| Type | Member | Description |
|---|---|---|
| TVec< Ker > | m_terms | Individual kernels to add to produce the final result. |
| TVec< TVec< int > > | m_input_indexes | Optionally, specifies which individual input variables should be routed to each kernel. |

Static Public Attributes

| Type | Member | Description |
|---|---|---|
| static StaticInitializer | _static_initializer_ | |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| static void | declareOptions (OptionList &ol) | Declares the class options. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| TVec< Vec > | m_input_buf1 | Input buffers for kernel evaluation when input subsetting is needed. |
| TVec< Vec > | m_input_buf2 | Second input buffer (see m_input_buf1). |
| Vec | m_eval_buf | Temporary buffer for kernel evaluation on the whole training dataset. |
| Mat | m_gram_buf | Temporary buffer for Gram matrix accumulation. |

Private Types

| Type | Member | Description |
|---|---|---|
| typedef Kernel | inherited | |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| void | build_ () | This does the actual building. |

Kernel computing the sum of other kernels.

This kernel computes the sum of several sub-kernel objects. It can also split its input vector into parts and send each part to a different kernel, so that each kernel operates on a subset of the input variables.

Definition at line 54 of file SummationKernel.h.
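A minimal standalone sketch of the value that evaluate() computes, K(x1, x2) = sum over i of K_i(x1[S_i], x2[S_i]), where S_i is the index list for term i and an empty S_i means "use the complete vector". The Vec and KernelFn types, select_elements, summation_kernel and dot are illustrative stand-ins, not PLearn's API; plain std::vector and std::function replace PLearn's Vec and Ker.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical stand-ins for PLearn's Vec and Kernel: a plain vector and a
// callable K(x1, x2). These are illustrative types, not the PLearn API.
using Vec = std::vector<double>;
using KernelFn = std::function<double(const Vec&, const Vec&)>;

// Gather x[idx[0]], x[idx[1]], ... into a new vector
// (the role PLearn::selectElements plays in the listings of this page).
Vec select_elements(const Vec& x, const std::vector<int>& idx) {
    Vec out;
    out.reserve(idx.size());
    for (int j : idx)
        out.push_back(x[static_cast<std::size_t>(j)]);
    return out;
}

// K(x1, x2) = sum_i K_i(x1[S_i], x2[S_i]) with S_i = input_indexes[i];
// an empty (or absent) index list routes the complete vectors to term i.
double summation_kernel(const std::vector<KernelFn>& terms,
                        const std::vector<std::vector<int>>& input_indexes,
                        const Vec& x1, const Vec& x2) {
    const bool split_inputs = !input_indexes.empty();
    double value = 0.0;
    for (std::size_t i = 0; i < terms.size(); ++i) {
        if (split_inputs && !input_indexes[i].empty())
            value += terms[i](select_elements(x1, input_indexes[i]),
                              select_elements(x2, input_indexes[i]));
        else
            value += terms[i](x1, x2);
    }
    return value;
}

// Linear kernel <a, b>, used only to illustrate the sum.
double dot(const Vec& a, const Vec& b) {
    double s = 0.0;
    for (std::size_t k = 0; k < a.size(); ++k)
        s += a[k] * b[k];
    return s;
}
```

With two linear-kernel terms and input_indexes = {{0}, {}}, the first term sees only variable 0 and the second sees the full vector: for x1 = (1, 2) and x2 = (3, 4) the sum is 1*3 + (1*3 + 2*4) = 14.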

| typedef Kernel PLearn::SummationKernel::inherited | [private] |

Reimplemented from PLearn::Kernel.

Definition at line 56 of file SummationKernel.h.

| PLearn::SummationKernel::SummationKernel | ( | ) |

| string PLearn::SummationKernel::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::Kernel.

Definition at line 53 of file SummationKernel.cc.

| OptionList & PLearn::SummationKernel::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::Kernel.

Definition at line 53 of file SummationKernel.cc.

| RemoteMethodMap & PLearn::SummationKernel::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::Kernel.

Definition at line 53 of file SummationKernel.cc.

| bool PLearn::SummationKernel::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::Kernel.

Definition at line 53 of file SummationKernel.cc.

| Object * PLearn::SummationKernel::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SummationKernel.cc.

| void PLearn::SummationKernel::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::Kernel.

Definition at line 53 of file SummationKernel.cc.

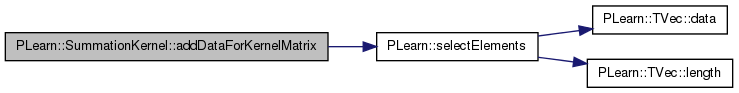

| void PLearn::SummationKernel::addDataForKernelMatrix | ( | const Vec & | newRow | ) | [virtual] |

Distribute the new row to the terms (sub-kernels) of the summation, subsetting the inputs if required.

Reimplemented from PLearn::Kernel.

Definition at line 145 of file SummationKernel.cc.

References i, n, and PLearn::selectElements().

    {
        inherited::addDataForKernelMatrix(newRow);
        bool split_inputs = m_input_indexes.size() > 0;
        for (int i=0, n=m_terms.size() ; i<n ; ++i) {
            if (split_inputs && m_input_indexes[i].size() > 0) {
                selectElements(newRow, m_input_indexes[i], m_input_buf1[i]);
                m_terms[i]->addDataForKernelMatrix(m_input_buf1[i]);
            }
            else
                m_terms[i]->addDataForKernelMatrix(newRow);
        }
    }

| void PLearn::SummationKernel::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::Kernel.

Definition at line 89 of file SummationKernel.cc.

    {
        // ### Nothing to add here, simply calls build_
        inherited::build();
        build_();
    }

| void PLearn::SummationKernel::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::Kernel.

Definition at line 99 of file SummationKernel.cc.

References i, n, N, and PLERROR.

    {
        // Preallocate buffers for kernel evaluation
        const int N = m_input_indexes.size();
        m_input_buf1.resize(N);
        m_input_buf2.resize(N);
        for (int i=0 ; i<N ; ++i) {
            const int M = m_input_indexes[i].size();
            m_input_buf1[i].resize(M);
            m_input_buf2[i].resize(M);
        }

        // Kernel is symmetric only if all terms are
        is_symmetric = true;
        for (int i=0, n=m_terms.size() ; i<n ; ++i) {
            if (! m_terms[i])
                PLERROR("SummationKernel::build_: kernel for term[%d] is not specified",i);
            is_symmetric = is_symmetric && m_terms[i]->is_symmetric;
        }

        if (m_input_indexes.size() > 0 && m_terms.size() != m_input_indexes.size())
            PLERROR("SummationKernel::build_: if 'input_indexes' is specified "
                    "it must have the same size (%d) as 'terms'; found %d elements",
                    m_terms.size(), m_input_indexes.size());
    }
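The two checks in build_() can be summarized as a small standalone function: the sum is flagged symmetric only when every term is, and a non-empty input_indexes must have exactly one entry per term. TermInfo and check_summation are hypothetical names used only for this sketch, and C++ exceptions stand in for PLERROR.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical minimal description of a sub-kernel: only the property that
// build_() inspects. This is not the PLearn Kernel class.
struct TermInfo {
    bool is_symmetric;
};

// Returns whether the summation is symmetric; throws where build_() would
// call PLERROR (missing term, or input_indexes size mismatch).
bool check_summation(const std::vector<const TermInfo*>& terms,
                     const std::vector<std::vector<int>>& input_indexes) {
    bool symmetric = true;
    for (std::size_t i = 0; i < terms.size(); ++i) {
        if (terms[i] == nullptr)
            throw std::invalid_argument("kernel for term[" + std::to_string(i) +
                                        "] is not specified");
        symmetric = symmetric && terms[i]->is_symmetric;
    }
    if (!input_indexes.empty() && input_indexes.size() != terms.size())
        throw std::invalid_argument(
            "'input_indexes' must have the same size as 'terms'");
    return symmetric;
}
```

A single asymmetric term is enough to make the whole sum asymmetric, since K(x, y) - K(y, x) is the sum of the per-term differences and only vanishes for all inputs when each term's difference does (in general).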

| string PLearn::SummationKernel::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SummationKernel.cc.

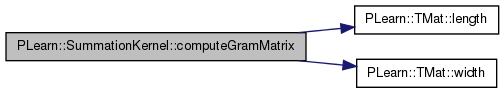

| void PLearn::SummationKernel::computeGramMatrix | ( | Mat | K | ) | const [virtual] |

Compute the Gram matrix by calling each sub-kernel's computeGramMatrix() and summing the results.

Reimplemented from PLearn::Kernel.

Definition at line 221 of file SummationKernel.cc.

References i, PLearn::TMat< T >::length(), n, and PLearn::TMat< T >::width().

    {
        // Assume that K has the right size; will have error in subkernels
        // evaluation if not the right size in any case.
        // resize() takes (length, width); for a square Gram matrix both
        // orders give the same shape.
        m_gram_buf.resize(K.length(), K.width());
        for (int i=0, n=m_terms.size() ; i<n ; ++i) {
            if (i==0)
                m_terms[i]->computeGramMatrix(K);
            else {
                m_terms[i]->computeGramMatrix(m_gram_buf);
                K += m_gram_buf;
            }
        }
    }
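The accumulation strategy of computeGramMatrix() can be shown in isolation: the first term fills K directly, and each later term fills a scratch buffer (m_gram_buf in the class) that is then added to K. This sketch assumes a row-major std::vector<double> in place of PLearn's Mat, and a hypothetical GramFn hook in place of each sub-kernel's computeGramMatrix().

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Row-major n x n matrix stored flat (illustrative stand-in for PLearn's Mat).
using Matrix = std::vector<double>;
// Hypothetical per-term hook standing in for a sub-kernel's
// computeGramMatrix(): overwrites an n x n matrix with that term's Gram matrix.
using GramFn = std::function<void(Matrix&, std::size_t)>;

// Same strategy as computeGramMatrix(): term 0 writes straight into K, and
// every later term writes into a reusable buffer that is added to K.
// Unlike the original, K is (re)sized here instead of by the caller.
void sum_gram(const std::vector<GramFn>& terms, Matrix& K, std::size_t n) {
    K.assign(n * n, 0.0);
    Matrix buf(n * n, 0.0);  // plays the role of m_gram_buf
    for (std::size_t i = 0; i < terms.size(); ++i) {
        if (i == 0) {
            terms[i](K, n);
        } else {
            terms[i](buf, n);
            for (std::size_t k = 0; k < n * n; ++k)
                K[k] += buf[k];
        }
    }
}
```

For two points, summing a constant-one Gram matrix and the identity gives [[2, 1], [1, 2]]. Writing the first term directly into K saves one buffer fill and one matrix addition per call.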

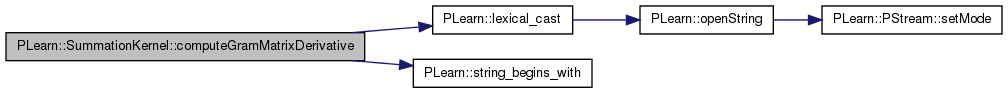

| void PLearn::SummationKernel::computeGramMatrixDerivative | ( | Mat & | KD, | const string & | kernel_param, | real | epsilon = 1e-6 | ) | const [virtual] |

Directly compute the derivative of the Gram matrix with respect to a hyperparameter (faster than finite differences).

Reimplemented from PLearn::Kernel.

Definition at line 239 of file SummationKernel.cc.

References i, PLearn::lexical_cast(), PLERROR, and PLearn::string_begins_with().

    {
        // Find which term we want to compute the derivative for
        if (string_begins_with(kernel_param, "terms[")) {
            string::size_type rest = kernel_param.find("].");
            if (rest == string::npos)
                PLERROR("%s: malformed hyperparameter name for computing derivative '%s'",
                        __FUNCTION__, kernel_param.c_str());
            string sub_param = kernel_param.substr(rest+2);
            string term_index = kernel_param.substr(6,rest-6); // len("terms[") == 6
            int i = lexical_cast<int>(term_index);
            if (i < 0 || i >= m_terms.size())
                PLERROR("%s: out of bounds access to term %d when computing derivative\n"
                        "for kernel parameter '%s'; only %d terms (0..%d) are available\n"
                        "in the SummationKernel", __FUNCTION__, i, kernel_param.c_str(),
                        m_terms.size(), m_terms.size()-1);
            m_terms[i]->computeGramMatrixDerivative(KD, sub_param, epsilon);
        }
        else
            inherited::computeGramMatrixDerivative(KD, kernel_param, epsilon);

        // Compare against finite differences
        // Mat KD1;
        // Kernel::computeGramMatrixDerivative(KD1, kernel_param, epsilon);
        // cerr << "Kernel hyperparameter: " << kernel_param << endl;
        // cerr << "Analytic derivative (15th row):" << endl
        //      << KD(15) << endl
        //      << "Finite differences:" << endl
        //      << KD1(15) << endl;
    }
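Because K is the sum of the K_j and a hyperparameter of term i appears only in K_i, the derivative of K with respect to that hyperparameter equals the derivative of K_i alone, which is why the method can forward the request to m_terms[i]. The name parsing can be sketched on its own; split_term_param and the example name "terms[1].sigma" are hypothetical, and where the original falls back to the inherited finite-difference implementation for names without the "terms[" prefix, this sketch simply throws.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <utility>

// Split "terms[<i>].<sub_param>" into the term index i and the name that is
// forwarded to term i, using the same prefix and "]." search as
// computeGramMatrixDerivative(). Throws on a name without the prefix, a
// malformed name, or an index outside [0, n_terms).
std::pair<int, std::string> split_term_param(const std::string& kernel_param,
                                             int n_terms) {
    const std::string prefix = "terms[";
    if (kernel_param.compare(0, prefix.size(), prefix) != 0)
        // The original delegates such names to the inherited code instead.
        throw std::invalid_argument("not a per-term hyperparameter: " + kernel_param);
    const std::string::size_type rest = kernel_param.find("].");
    if (rest == std::string::npos)
        throw std::invalid_argument("malformed hyperparameter name: " + kernel_param);
    // std::stoi throws std::invalid_argument if the index text is not a number.
    const int i = std::stoi(kernel_param.substr(prefix.size(), rest - prefix.size()));
    if (i < 0 || i >= n_terms)
        throw std::out_of_range("term index out of bounds in: " + kernel_param);
    return {i, kernel_param.substr(rest + 2)};
}
```

For example, "terms[1].sigma" with two terms yields index 1 and the forwarded name "sigma", while "terms[5].sigma" is rejected as out of bounds.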

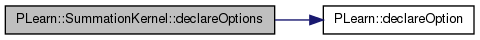

| void PLearn::SummationKernel::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::Kernel.

Definition at line 64 of file SummationKernel.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), m_input_indexes, and m_terms.

    {
        declareOption(
            ol, "terms", &SummationKernel::m_terms, OptionBase::buildoption,
            "Individual kernels to add to produce the final result. The\n"
            "hyperparameters of kernel i can be accessed under the option names\n"
            "'terms[i].hyperparam', e.g. by GaussianProcessRegressor.\n");

        declareOption(
            ol, "input_indexes", &SummationKernel::m_input_indexes,
            OptionBase::buildoption,
            "Optionally, one can specify which of the individual input variables\n"
            "should be routed to each kernel. The format is a vector of vectors: for\n"
            "each kernel in 'terms', one must list the INDEXES in the original input\n"
            "vector (zero-based) that should be passed to that kernel. If a list of\n"
            "indexes is empty for a given kernel, it means that the COMPLETE input\n"
            "vector should be passed to the kernel.\n");

        // Now call the parent class' declareOptions
        inherited::declareOptions(ol);
    }

| static const PPath& PLearn::SummationKernel::declaringFile | ( | ) | [inline, static] |

| SummationKernel * PLearn::SummationKernel::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::Kernel.

Definition at line 53 of file SummationKernel.cc.

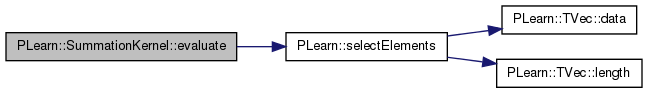

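| real PLearn::SummationKernel::evaluate | ( | const Vec & | x1, | const Vec & | x2 | ) | const [virtual] |

Compute K(x1,x2).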

Implements PLearn::Kernel.

Definition at line 162 of file SummationKernel.cc.

References i, n, and PLearn::selectElements().

    {
        real kernel_value = 0.0;
        bool split_inputs = m_input_indexes.size() > 0;
        for (int i=0, n=m_terms.size() ; i<n ; ++i) {
            if (split_inputs && m_input_indexes[i].size() > 0) {
                selectElements(x1, m_input_indexes[i], m_input_buf1[i]);
                selectElements(x2, m_input_indexes[i], m_input_buf2[i]);
                kernel_value += m_terms[i]->evaluate(m_input_buf1[i],
                                                     m_input_buf2[i]);
            }
            else
                kernel_value += m_terms[i]->evaluate(x1,x2);
        }
        return kernel_value;
    }

| void PLearn::SummationKernel::evaluate_all_i_x | ( | const Vec & | x, |

| const Vec & | k_xi_x, | ||

| real | squared_norm_of_x = -1, |

||

| int | istart = 0 |

||

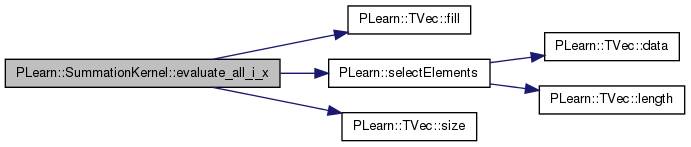

| ) | const [virtual] |

Fill k_xi_x with K(x_i, x), for all i from istart to istart + k_xi_x.length() - 1.

Reimplemented from PLearn::Kernel.

Definition at line 200 of file SummationKernel.cc.

References PLearn::TVec< T >::fill(), i, n, PLearn::selectElements(), and PLearn::TVec< T >::size().

    {
        // In the .cc definition, the squared_norm_of_x parameter is named sq_norm_of_x.
        k_xi_x.fill(0.0);
        m_eval_buf.resize(k_xi_x.size());
        bool split_inputs = m_input_indexes.size() > 0;
        for (int i=0, n=m_terms.size() ; i<n ; ++i) {
            // Note: if we slice x, we cannot rely on sq_norm_of_x any more...
            if (split_inputs && m_input_indexes[i].size() > 0) {
                selectElements(x, m_input_indexes[i], m_input_buf1[i]);
                m_terms[i]->evaluate_all_i_x(m_input_buf1[i], m_eval_buf, -1, istart);
            }
            else
                m_terms[i]->evaluate_all_i_x(x, m_eval_buf, sq_norm_of_x, istart);
            k_xi_x += m_eval_buf;
        }
    }
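A standalone sketch of the evaluate_all_i_x() loop, focusing on the cached squared norm: a term that receives the full x can reuse sq_norm_of_x, while a term that receives a subset is passed -1 ("unknown"), because the squared norm of a subset of x generally differs from that of x itself. EvalAllFn and sum_eval_all are hypothetical names; the -1 sentinel follows the squared_norm_of_x = -1 default in the declaration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

using Vec = std::vector<double>;
// Hypothetical per-term hook: fills out[j] with K_i(x_j, x) for the training
// examples covered by `out`; sq_norm is a cached ||x||^2, or -1 if unknown.
using EvalAllFn = std::function<void(const Vec& x, Vec& out, double sq_norm)>;

// Sum the per-term rows into k_xi_x. A term that only sees a subset of x is
// given -1 instead of sq_norm_of_x, since ||x||^2 is not the squared norm of
// the subset.
void sum_eval_all(const std::vector<EvalAllFn>& terms,
                  const std::vector<std::vector<int>>& input_indexes,
                  const Vec& x, double sq_norm_of_x, Vec& k_xi_x) {
    const bool split_inputs = !input_indexes.empty();
    Vec buf(k_xi_x.size());  // per-term scratch row, like m_eval_buf
    std::fill(k_xi_x.begin(), k_xi_x.end(), 0.0);
    for (std::size_t i = 0; i < terms.size(); ++i) {
        if (split_inputs && !input_indexes[i].empty()) {
            Vec sub;
            for (int j : input_indexes[i])
                sub.push_back(x[static_cast<std::size_t>(j)]);
            terms[i](sub, buf, -1.0);
        } else {
            terms[i](x, buf, sq_norm_of_x);
        }
        for (std::size_t j = 0; j < k_xi_x.size(); ++j)
            k_xi_x[j] += buf[j];
    }
}
```

The alternative, recomputing the squared norm of each subset, would also be correct; passing -1 simply leaves that decision to the sub-kernel.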

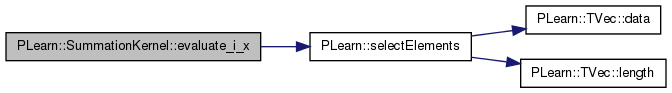

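| real PLearn::SummationKernel::evaluate_i_x | ( | int | i, | const Vec & | x, | real | | ) | const [virtual] |

Evaluate a test example x against a training example given by its index.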

Reimplemented from PLearn::Kernel.

Definition at line 182 of file SummationKernel.cc.

References i, n, and PLearn::selectElements().

    {
        // In the .cc definition, the training-example index is named j.
        real kernel_value = 0.0;
        bool split_inputs = m_input_indexes.size() > 0;
        for (int i=0, n=m_terms.size() ; i<n ; ++i) {
            if (split_inputs && m_input_indexes[i].size() > 0) {
                selectElements(x, m_input_indexes[i], m_input_buf1[i]);
                kernel_value += m_terms[i]->evaluate_i_x(j, m_input_buf1[i]);
            }
            else
                kernel_value += m_terms[i]->evaluate_i_x(j, x);
        }
        return kernel_value;
    }

| OptionList & PLearn::SummationKernel::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SummationKernel.cc.

| OptionMap & PLearn::SummationKernel::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SummationKernel.cc.

| RemoteMethodMap & PLearn::SummationKernel::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 53 of file SummationKernel.cc.

| void PLearn::SummationKernel::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::Kernel.

Definition at line 276 of file SummationKernel.cc.

References PLearn::deepCopyField().

    {
        inherited::makeDeepCopyFromShallowCopy(copies);
        deepCopyField(m_terms, copies);
        deepCopyField(m_input_indexes, copies);
        deepCopyField(m_input_buf1, copies);
        deepCopyField(m_input_buf2, copies);
        deepCopyField(m_eval_buf, copies);   // scratch buffer: do not share it with the original
        deepCopyField(m_gram_buf, copies);
    }

| void PLearn::SummationKernel::setDataForKernelMatrix | ( | VMat | the_data | ) | [virtual] |

Distribute the data to the terms (sub-kernels) of the summation, subsetting the inputs if required.

Reimplemented from PLearn::Kernel.

Definition at line 128 of file SummationKernel.cc.

    {
        inherited::setDataForKernelMatrix(the_data);
        bool split_inputs = m_input_indexes.size() > 0;
        for (int i=0, n=m_terms.size() ; i<n ; ++i) {
            if (split_inputs && m_input_indexes[i].size() > 0) {
                VMat sub_inputs = new SelectColumnsVMatrix(the_data, m_input_indexes[i]);
                m_terms[i]->setDataForKernelMatrix(sub_inputs);
            }
            else
                m_terms[i]->setDataForKernelMatrix(the_data);
        }
    }
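setDataForKernelMatrix() hands each term a SelectColumnsVMatrix, i.e. a column subset of the training data. The sketch below models that idea as a non-copying view over a row-major dataset; Dataset and SelectColumnsView are hypothetical stand-ins, and the assumption that SelectColumnsVMatrix likewise reads through to the underlying VMat rather than copying it is ours.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major dataset: a hypothetical stand-in for a VMat.
struct Dataset {
    std::size_t length, width;
    std::vector<double> data;  // length * width values, row by row
    double at(std::size_t r, std::size_t c) const { return data[r * width + c]; }
};

// Column-subset view: row r, column c of the view is row r, column cols[c] of
// the source dataset. Values are read through the pointer, never copied.
struct SelectColumnsView {
    const Dataset* source;
    std::vector<int> cols;
    std::size_t length() const { return source->length; }
    std::size_t width() const { return cols.size(); }
    double at(std::size_t r, std::size_t c) const {
        return source->at(r, static_cast<std::size_t>(cols[c]));
    }
};
```

For a 2 x 3 dataset with rows (1, 2, 3) and (4, 5, 6), a view with cols = {2, 0} reads the rows (3, 1) and (6, 4).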

| StaticInitializer PLearn::SummationKernel::_static_initializer_ | [static] |

Reimplemented from PLearn::Kernel.

Definition at line 116 of file SummationKernel.h.

| Vec PLearn::SummationKernel::m_eval_buf | [mutable, protected] |

Temporary buffer for kernel evaluation on the whole training dataset.

Definition at line 130 of file SummationKernel.h.

| Mat PLearn::SummationKernel::m_gram_buf | [mutable, protected] |

Temporary buffer for Gram matrix accumulation.

Definition at line 133 of file SummationKernel.h.

| TVec< Vec > PLearn::SummationKernel::m_input_buf1 | [protected] |

Input buffers for kernel evaluation in cases where subsetting is needed.

Definition at line 126 of file SummationKernel.h.

| TVec< Vec > PLearn::SummationKernel::m_input_buf2 | [protected] |

Definition at line 127 of file SummationKernel.h.

| TVec< TVec< int > > PLearn::SummationKernel::m_input_indexes |

Optionally, one can specify which of the individual input variables should be routed to each kernel.

The format is a vector of vectors: for each kernel in 'terms', one must list the INDEXES in the original input vector (zero-based) that should be passed to that kernel. If a list of indexes is empty for a given kernel, it means that the COMPLETE input vector should be passed to the kernel.

Definition at line 76 of file SummationKernel.h.

Referenced by declareOptions().

| TVec< Ker > PLearn::SummationKernel::m_terms |

Individual kernels to add to produce the final result.

The hyperparameters of kernel i can be accessed under the option names 'terms[i].hyperparam', e.g. by GaussianProcessRegressor.

Definition at line 66 of file SummationKernel.h.

Referenced by declareOptions().

1.7.4
