PLearn 0.1

Stores and learns the parameters between two linear layers of an RBM.

#include <RBMSparse1DMatrixConnection.h>

Public Member Functions | |

| RBMSparse1DMatrixConnection (real the_learning_rate=0) | |

| Default constructor. | |

| virtual void | accumulatePosStats (const Mat &down_values, const Mat &up_values) |

| virtual void | accumulateNegStats (const Mat &down_values, const Mat &up_values) |

| virtual void | computeProducts (int start, int length, Mat &activations, bool accumulate=false) const |

| Computes the activation vectors of "length" units, starting from "start", and stores (or adds) them into "activations". | |

| virtual void | fprop (const Vec &input, const Mat &rbm_weights, Vec &output) const |

| Given the input and the connection weights, computes the output (resizing it if necessary). Not implemented in this class. | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false) |

| Soon to be deprecated; use bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient) instead. | |

| virtual void | bpropAccUpdate (const TVec< Mat * > &ports_value, const TVec< Mat * > &ports_gradient) |

| Perform a back propagation step (also updating parameters according to the provided gradient). | |

| virtual void | update (const Mat &pos_down_values, const Mat &pos_up_values, const Mat &neg_down_values, const Mat &neg_up_values) |

| Updates the weights from positive- and negative-phase values; not implemented when momentum is non-zero. | |

| virtual void | forget () |

| Resets the parameters to the state they would be in before training starts. | |

| virtual int | nParameters () const |

| Returns the number of parameters. | |

| virtual Mat | getWeights () const |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMSparse1DMatrixConnection * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| int | filter_size |

| bool | enforce_positive_weights |

| int | step_size |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

| static void | declareMethods (RemoteMethodMap &rmm) |

| Declare the methods that are remote-callable. | |

Private Types | |

| typedef RBMMatrixConnection | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

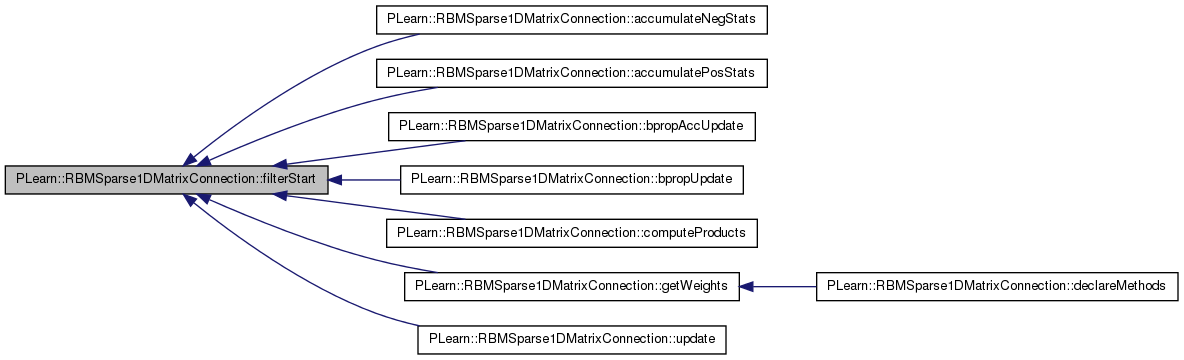

| int | filterStart (int idx) const |

| int | filterSize (int idx) const |

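Stores and learns the parameters between two linear layers of an RBM. The connection is sparse and one-dimensional: up unit i is connected only to the filter_size consecutive down units starting at filterStart(i) = i * step_size, so the weight matrix stores one row of filter_size weights per up unit.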

Definition at line 53 of file RBMSparse1DMatrixConnection.h.
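The connectivity implied by filterStart(), filterSize() and the step computed in build_() can be written out as follows. This is a restatement of the method listings on this page, with assumed notation: $f$ = filter_size, $s$ = step_size, $W$ the up_size x $f$ weight matrix, $x_k$ and $h_k$ row $k$ of the down and up values, and up_size > 1.

$$s = \left\lfloor \frac{\text{down\_size} - f}{\text{up\_size} - 1} \right\rfloor, \qquad a_{ki} = \sum_{j=0}^{f-1} W_{ij}\, x_{k,\,si+j}, \qquad b_{kl} = \sum_{i \,:\, si \le l < si+f} W_{i,\,l-si}\, h_{ki}$$

$$\tilde W_{il} = \begin{cases} W_{i,\,l-si} & \text{if } si \le l < si+f, \\ 0 & \text{otherwise,} \end{cases}$$

where $a_{ki}$ is the upward activation (computeProducts() with going_up), $b_{kl}$ the downward one, and $\tilde W$ the dense up_size x down_size matrix returned by getWeights().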

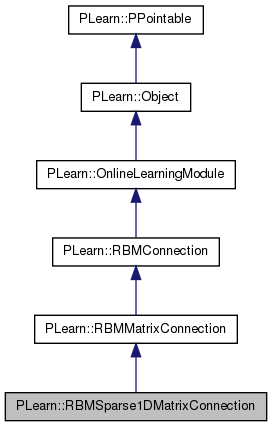

| typedef RBMMatrixConnection PLearn::RBMSparse1DMatrixConnection::inherited | [private] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 55 of file RBMSparse1DMatrixConnection.h.

| PLearn::RBMSparse1DMatrixConnection::RBMSparse1DMatrixConnection | ( | real | the_learning_rate = 0 | ) |

Default constructor.

Definition at line 52 of file RBMSparse1DMatrixConnection.cc.

```cpp
:
    filter_size(-1),
    enforce_positive_weights(false)
{
}
```

| string PLearn::RBMSparse1DMatrixConnection::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

| OptionList & PLearn::RBMSparse1DMatrixConnection::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

| RemoteMethodMap & PLearn::RBMSparse1DMatrixConnection::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

| bool PLearn::RBMSparse1DMatrixConnection::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

| Object * PLearn::RBMSparse1DMatrixConnection::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

| void PLearn::RBMSparse1DMatrixConnection::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

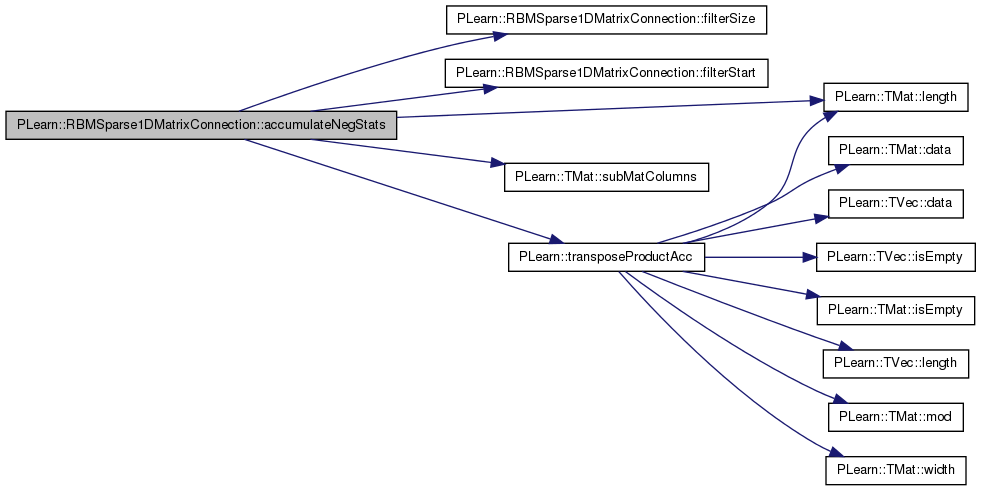

| void PLearn::RBMSparse1DMatrixConnection::accumulateNegStats | ( | const Mat & down_values, const Mat & up_values | ) | [virtual] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 165 of file RBMSparse1DMatrixConnection.cc.

References filterSize(), filterStart(), i, PLearn::TMat< T >::length(), PLearn::RBMConnection::neg_count, PLASSERT, PLearn::TMat< T >::subMatColumns(), PLearn::transposeProductAcc(), PLearn::RBMConnection::up_size, and PLearn::RBMMatrixConnection::weights_neg_stats.

```cpp
{
    int mbs=down_values.length();
    PLASSERT(up_values.length()==mbs);

    // weights_neg_stats += up_values * down_values'
    // (column i of up_values holds up unit i's value for each sample in the batch)
    for ( int i=0; i<up_size; i++)
        transposeProductAcc( weights_neg_stats(i),
                             down_values.subMatColumns( filterStart(i), filterSize(i) ),
                             up_values.column(i).toVec() );

    neg_count+=mbs;
}
```

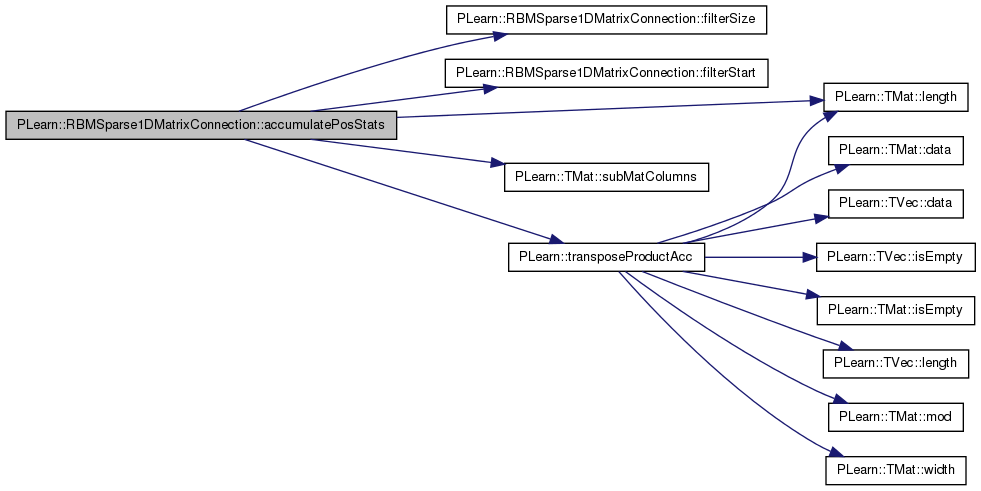

| void PLearn::RBMSparse1DMatrixConnection::accumulatePosStats | ( | const Mat & down_values, const Mat & up_values | ) | [virtual] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 152 of file RBMSparse1DMatrixConnection.cc.

References filterSize(), filterStart(), i, PLearn::TMat< T >::length(), PLASSERT, PLearn::RBMConnection::pos_count, PLearn::TMat< T >::subMatColumns(), PLearn::transposeProductAcc(), PLearn::RBMConnection::up_size, and PLearn::RBMMatrixConnection::weights_pos_stats.

```cpp
{
    int mbs=down_values.length();
    PLASSERT(up_values.length()==mbs);

    // weights_pos_stats += up_values * down_values'
    // (column i of up_values holds up unit i's value for each sample in the batch)
    for ( int i=0; i<up_size; i++)
        transposeProductAcc( weights_pos_stats(i),
                             down_values.subMatColumns( filterStart(i), filterSize(i) ),
                             up_values.column(i).toVec() );

    pos_count+=mbs;
}
```
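Both accumulation methods add, for every up unit, the batch sum of (up value) x (down values in that unit's window). A minimal sketch with plain loops, assuming a hypothetical `Mat` stand-in (a vector of rows, not PLearn's `Mat`) and a hypothetical function name `accumulateWindowStats`; the returned batch size plays the role of the `pos_count`/`neg_count` increment.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: one std::vector per row (sample).
using Mat = std::vector<std::vector<double>>;

// stats(i, j) += sum_k up(k, i) * down(k, i * step_size + j)
// Returns the mini-batch size, which the caller adds to its sample counter.
std::size_t accumulateWindowStats(Mat& stats, const Mat& down, const Mat& up,
                                  std::size_t step_size) {
    const std::size_t mbs = down.size();
    assert(up.size() == mbs);  // same check as PLASSERT in the listing
    for (std::size_t i = 0; i < stats.size(); ++i) {
        const std::size_t start = i * step_size;  // filterStart(i)
        for (std::size_t j = 0; j < stats[i].size(); ++j)
            for (std::size_t k = 0; k < mbs; ++k)
                stats[i][j] += up[k][i] * down[k][start + j];
    }
    return mbs;
}
```

With two up units, a window of 2 and a step of 1, unit 0 accumulates statistics over down units 0 and 1, and unit 1 over down units 1 and 2.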

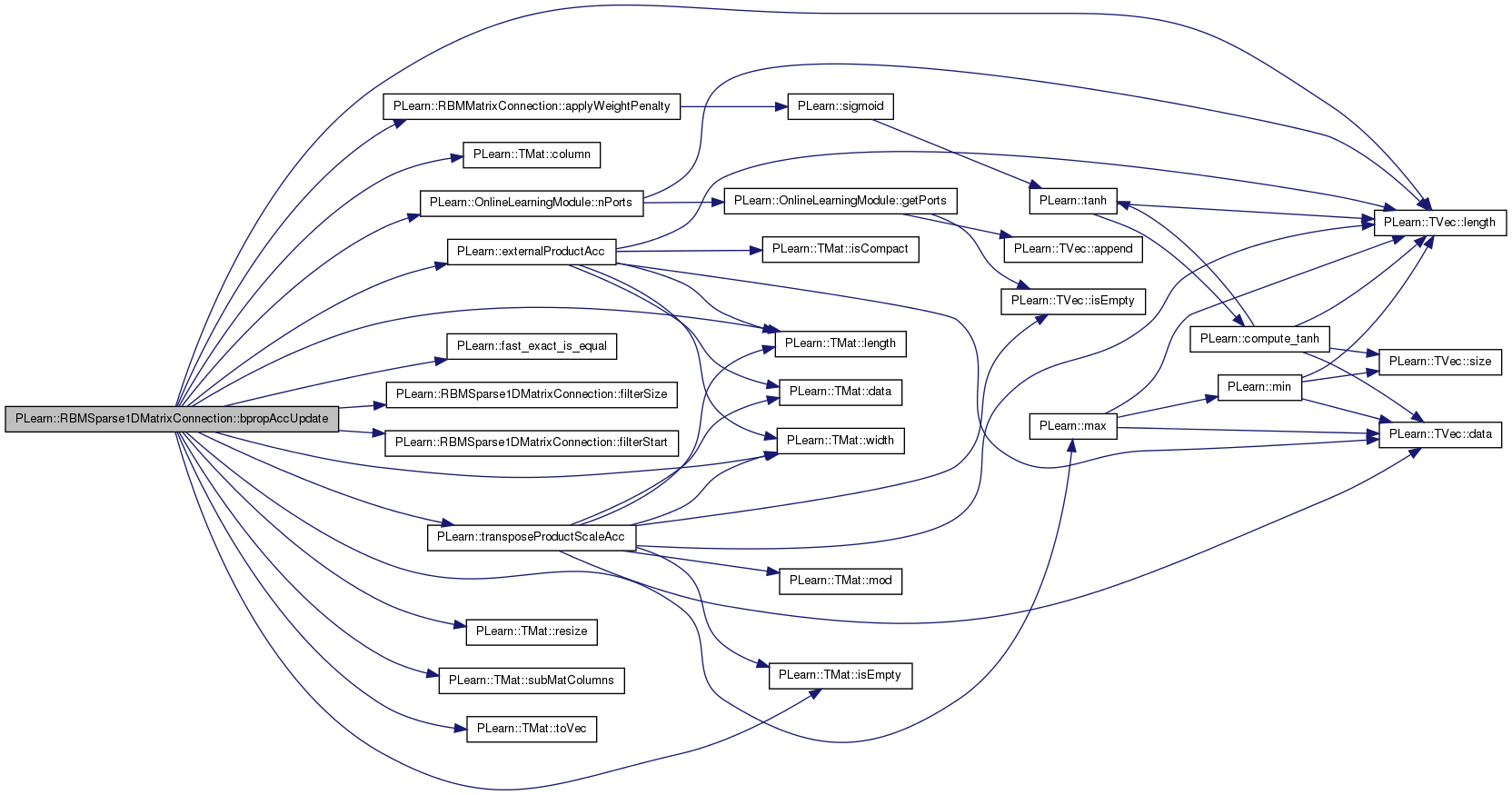

| void PLearn::RBMSparse1DMatrixConnection::bpropAccUpdate | ( | const TVec< Mat * > & ports_value, const TVec< Mat * > & ports_gradient | ) | [virtual] |

Perform a back propagation step (also updating parameters according to the provided gradient).

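The matrices in 'ports_value' must be the same as the ones given in a previous call to 'fprop' (and thus they should in particular contain the result of the fprop computation). However, they are not necessarily the same as the ones given in the LAST call to 'fprop': if there is a need to store an internal module state, this should be done using a specific port to store this state. Each Mat* pointer in the 'ports_gradient' vector can be one of: a full matrix, which is a gradient provided to the module; an empty matrix (length 0, with the same width as the corresponding matrix in 'ports_value'), which is a gradient to compute and accumulate into; or a NULL pointer, which is a gradient that is not needed.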

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 278 of file RBMSparse1DMatrixConnection.cc.

References PLearn::RBMMatrixConnection::applyWeightPenalty(), PLearn::TMat< T >::column(), PLearn::RBMConnection::down_size, enforce_positive_weights, PLearn::externalProductAcc(), PLearn::fast_exact_is_equal(), filter_size, filterSize(), filterStart(), i, PLearn::TMat< T >::isEmpty(), j, PLearn::RBMMatrixConnection::L1_penalty_factor, PLearn::RBMMatrixConnection::L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::max(), PLearn::OnlineLearningModule::nPorts(), PLASSERT, PLCHECK_MSG, PLERROR, PLearn::TMat< T >::resize(), PLearn::TMat< T >::subMatColumns(), PLearn::TMat< T >::toVec(), PLearn::transposeProductScaleAcc(), PLearn::RBMConnection::up_size, PLearn::RBMMatrixConnection::weights, and PLearn::TMat< T >::width().

```cpp
{
    //TODO: add weights as port?
    PLASSERT( ports_value.length() == nPorts()
              && ports_gradient.length() == nPorts() );

    Mat* down = ports_value[0];
    //Mat* up = ports_value[1];
    Mat* down_grad = ports_gradient[0];
    Mat* up_grad = ports_gradient[1];

    PLASSERT( down && !down->isEmpty() );
    //PLASSERT( up && !up->isEmpty() );

    int batch_size = down->length();
    //PLASSERT( up->length() == batch_size );

    // If we have up_grad
    if( up_grad && !up_grad->isEmpty() )
    {
        // down_grad should not be provided
        PLASSERT( !down_grad || down_grad->isEmpty() );
        PLASSERT( up_grad->length() == batch_size );
        PLASSERT( up_grad->width() == up_size );

        bool compute_down_grad = false;
        if( down_grad && down_grad->isEmpty() )
        {
            compute_down_grad = true;
            PLASSERT( down_grad->width() == down_size );
            down_grad->resize(batch_size, down_size);
        }

        for (int i=0; i<up_size; i++) {
            int filter_start= filterStart(i), length= filterSize(i);

            // propagate gradient
            // input_gradients = output_gradient * weights
            if( compute_down_grad )
                externalProductAcc( down_grad->subMatColumns( filter_start, length ),
                                    up_grad->column(i).toVec(),
                                    weights(i));

            // update weights
            // weights -= learning_rate/n * output_gradients' * inputs
            transposeProductScaleAcc( weights(i),
                                      down->subMatColumns( filter_start, length ),
                                      up_grad->column(i).toVec(),
                                      -learning_rate / batch_size, real(1));

            if( enforce_positive_weights )
                for (int j=0; j<filter_size; j++)
                    weights(i,j)= max( real(0), weights(i,j) );
        }
    }
    else if( down_grad && !down_grad->isEmpty() )
    {
        PLERROR("down-up gradient not implemented in RBMSparse1DMatrixConnection::bpropAccUpdate.");
        PLASSERT( down_grad->length() == batch_size );
        PLASSERT( down_grad->width() == down_size );
    }
    else
        PLCHECK_MSG( false,
                     "Unknown port configuration" );

    if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))
        applyWeightPenalty();
}
```
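The port handling in bpropAccUpdate() reduces to a small decision on the two gradient ports. A minimal sketch of that dispatch, assuming a hypothetical `Shape` type standing in for a `Mat*` (only its dimensions matter) and assuming a matrix counts as empty when its length or width is 0, as `TMat::isEmpty()` is taken to behave here; the names `Action` and `dispatch` are hypothetical.

```cpp
#include <cassert>

// Hypothetical stand-in for a gradient port: only the matrix shape matters here.
struct Shape {
    int length;
    int width;
};

// Assumed equivalent of TMat::isEmpty(): no rows or no columns.
bool isEmpty(const Shape& m) { return m.length == 0 || m.width == 0; }

enum class Action { UpdateOnly, UpdateAndComputeDownGrad, Unsupported };

// Mirrors the branches of bpropAccUpdate():
// - a full up_grad triggers the weight update;
// - an empty down_grad additionally requests the down gradient;
// - a full down_grad without up_grad (PLERROR in the listing) and every other
//   configuration (PLCHECK_MSG in the listing) are rejected.
Action dispatch(const Shape* down_grad, const Shape* up_grad, int down_size) {
    if (up_grad && !isEmpty(*up_grad)) {
        assert(!down_grad || isEmpty(*down_grad));  // down_grad must not be provided
        if (down_grad) {
            assert(down_grad->width == down_size);  // empty, but correctly shaped
            return Action::UpdateAndComputeDownGrad;
        }
        return Action::UpdateOnly;
    }
    return Action::Unsupported;
}
```

Requesting the down gradient therefore means passing an empty matrix of width down_size, which the method resizes to batch_size rows before accumulating into it.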

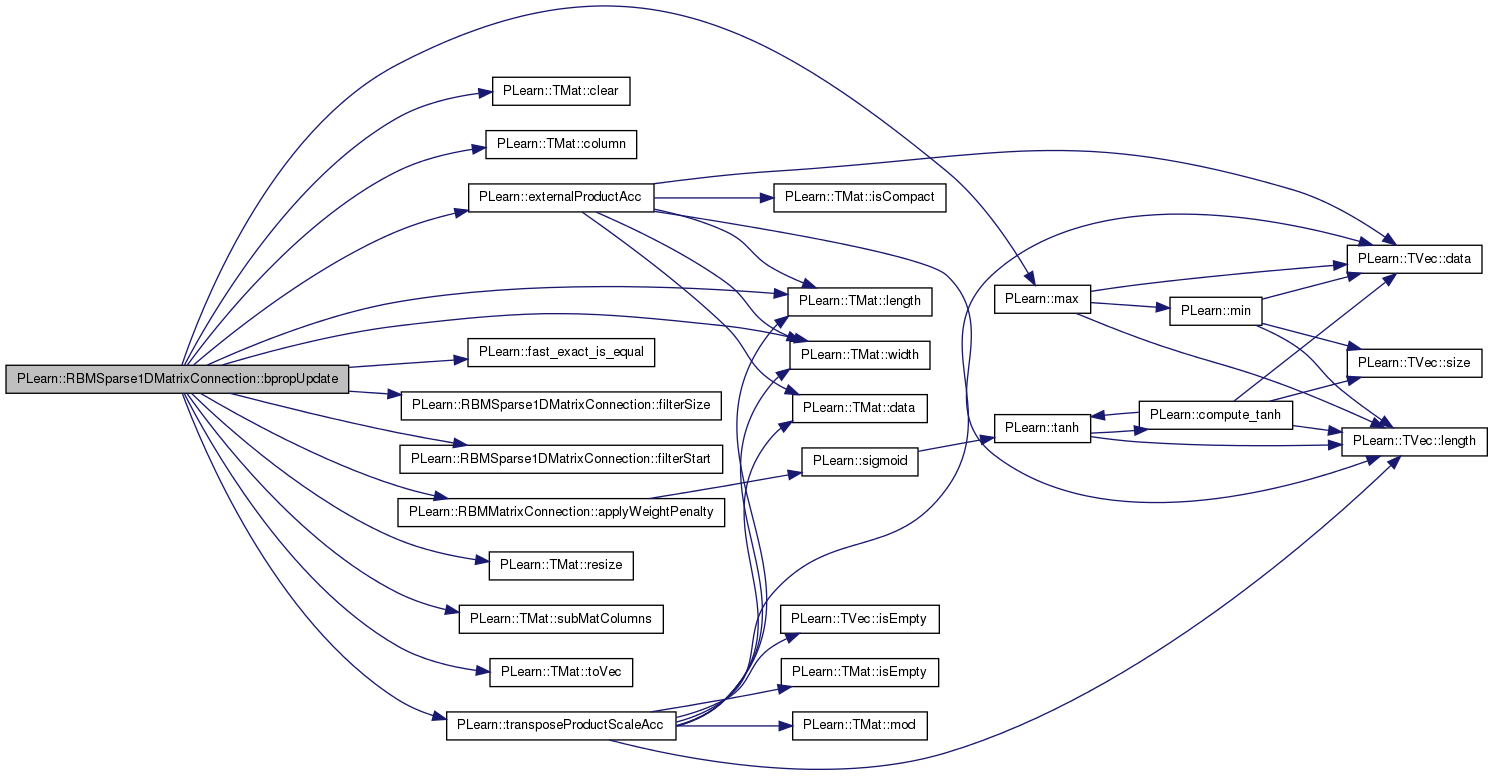

| void PLearn::RBMSparse1DMatrixConnection::bpropUpdate | ( | const Mat & inputs, const Mat & outputs, Mat & input_gradients, const Mat & output_gradients, bool accumulate = false | ) | [virtual] |

Soon to be deprecated; use bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient) instead.

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 232 of file RBMSparse1DMatrixConnection.cc.

References PLearn::RBMMatrixConnection::applyWeightPenalty(), PLearn::TMat< T >::clear(), PLearn::TMat< T >::column(), PLearn::RBMConnection::down_size, enforce_positive_weights, PLearn::externalProductAcc(), PLearn::fast_exact_is_equal(), filter_size, filterSize(), filterStart(), i, j, PLearn::RBMMatrixConnection::L1_penalty_factor, PLearn::RBMMatrixConnection::L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLearn::max(), PLASSERT, PLASSERT_MSG, PLearn::TMat< T >::resize(), PLearn::TMat< T >::subMatColumns(), PLearn::TMat< T >::toVec(), PLearn::transposeProductScaleAcc(), PLearn::RBMConnection::up_size, PLearn::RBMMatrixConnection::weights, and PLearn::TMat< T >::width().

```cpp
{
    PLASSERT( inputs.width() == down_size );
    PLASSERT( outputs.width() == up_size );
    PLASSERT( output_gradients.width() == up_size );

    if( accumulate )
        PLASSERT_MSG( input_gradients.width() == down_size &&
                      input_gradients.length() == inputs.length(),
                      "Cannot resize input_gradients and accumulate into it" );
    else {
        input_gradients.resize(inputs.length(), down_size);
        input_gradients.clear();
    }

    for (int i=0; i<up_size; i++) {
        int filter_start= filterStart(i), length= filterSize(i);

        // input_gradients = output_gradient * weights
        externalProductAcc( input_gradients.subMatColumns( filter_start, length ),
                            output_gradients.column(i).toVec(),
                            weights(i));

        // weights -= learning_rate/n * output_gradients' * inputs
        transposeProductScaleAcc( weights(i),
                                  inputs.subMatColumns( filter_start, length ),
                                  output_gradients.column(i).toVec(),
                                  -learning_rate / inputs.length(), real(1));

        if( enforce_positive_weights )
            for (int j=0; j<filter_size; j++)
                weights(i,j)= max( real(0), weights(i,j) );
    }

    if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))
        applyWeightPenalty();
}
```
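Per up unit, bpropUpdate() first propagates the output gradient into that unit's window of the input gradient, then takes an averaged gradient step on the unit's weights and optionally clips them at zero. A minimal sketch of that loop, assuming a hypothetical `Mat` stand-in (vector of rows), a hypothetical function name `windowedBpropUpdate`, a zero-initialized `input_grad` (the non-accumulating case), and no L1/L2 penalty.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: one std::vector per row (sample).
using Mat = std::vector<std::vector<double>>;

// For each up unit i with window start s_i = i * step_size:
//   input_grad(k, s_i + j) += out_grad(k, i) * W(i, j)
//   W(i, j) -= learning_rate / n * sum_k out_grad(k, i) * input(k, s_i + j)
void windowedBpropUpdate(Mat& W, const Mat& input, const Mat& out_grad,
                         Mat& input_grad, std::size_t step_size,
                         double learning_rate, bool enforce_positive) {
    const std::size_t n = input.size();
    assert(out_grad.size() == n && input_grad.size() == n);
    for (std::size_t i = 0; i < W.size(); ++i) {
        const std::size_t start = i * step_size;
        // Propagate with the weights as they are before this unit's update.
        for (std::size_t k = 0; k < n; ++k)
            for (std::size_t j = 0; j < W[i].size(); ++j)
                input_grad[k][start + j] += out_grad[k][i] * W[i][j];
        // Gradient step averaged over the mini-batch, then optional clipping.
        for (std::size_t j = 0; j < W[i].size(); ++j) {
            double g = 0.0;
            for (std::size_t k = 0; k < n; ++k)
                g += out_grad[k][i] * input[k][start + j];
            W[i][j] -= learning_rate / static_cast<double>(n) * g;
            if (enforce_positive) W[i][j] = std::max(0.0, W[i][j]);
        }
    }
}
```

Because each unit's propagation uses its weights before that unit's update, the order inside the loop matters; swapping the two steps would propagate through already-updated weights.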

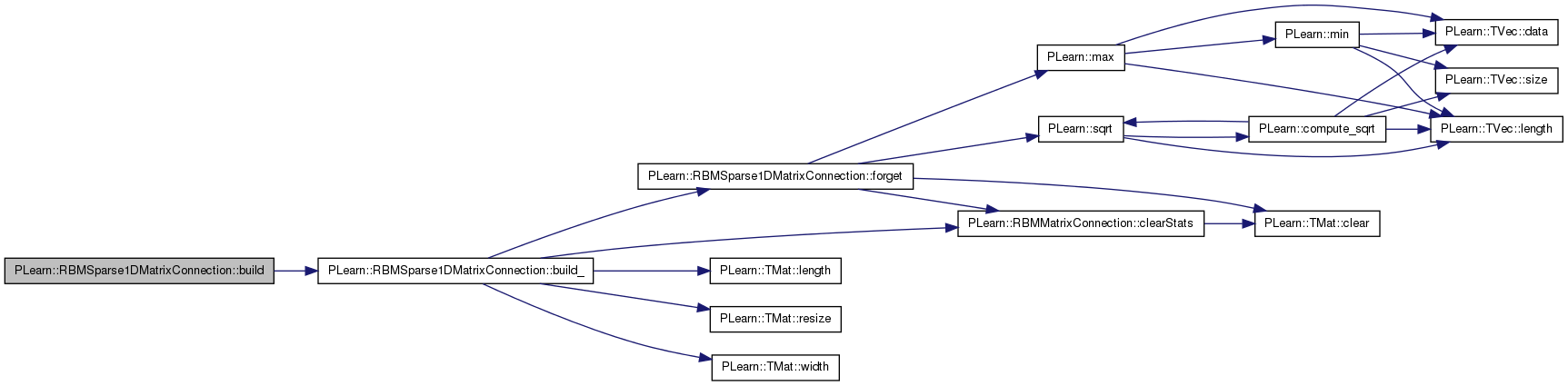

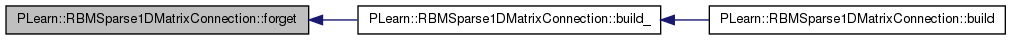

| void PLearn::RBMSparse1DMatrixConnection::build | ( | ) | [virtual] |

Post-constructor.

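The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again later, after modifying some option fields to change the "architecture" of the object. Note that this class calls RBMConnection::build() directly instead of inherited::build(), so RBMMatrixConnection::build() is not called.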

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 124 of file RBMSparse1DMatrixConnection.cc.

References build_().

```cpp
{
    RBMConnection::build();
    build_();
}
```

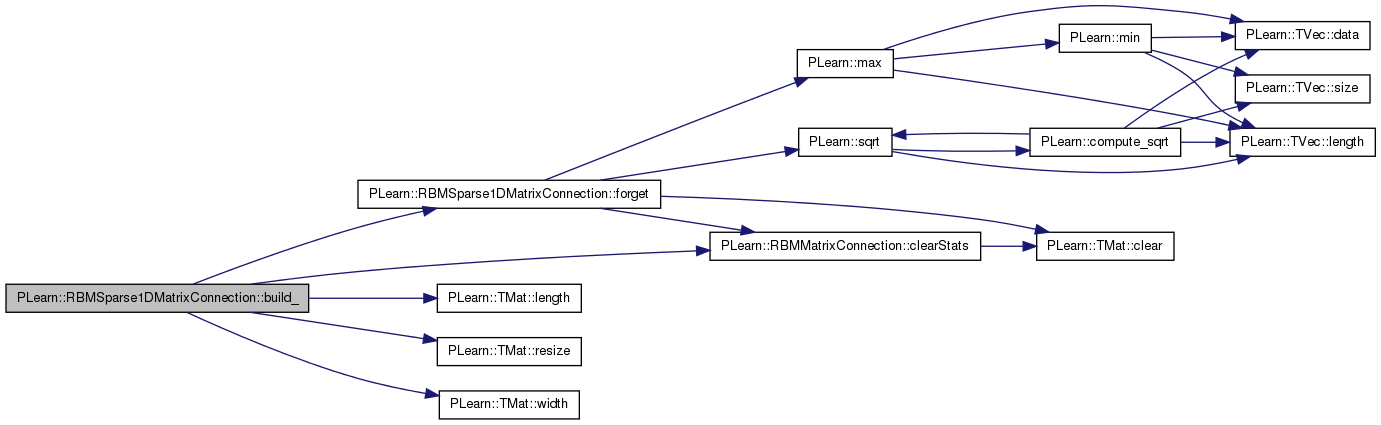

| void PLearn::RBMSparse1DMatrixConnection::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 90 of file RBMSparse1DMatrixConnection.cc.

References PLearn::RBMMatrixConnection::clearStats(), PLearn::RBMConnection::down_size, filter_size, forget(), PLearn::TMat< T >::length(), PLearn::RBMConnection::momentum, PLASSERT, PLearn::TMat< T >::resize(), step_size, PLearn::RBMConnection::up_size, PLearn::RBMMatrixConnection::weights, PLearn::RBMMatrixConnection::weights_inc, PLearn::RBMMatrixConnection::weights_neg_stats, PLearn::RBMMatrixConnection::weights_pos_stats, and PLearn::TMat< T >::width().

Referenced by build().

```cpp
{
    if( up_size <= 0 || down_size <= 0 )
        return;

    if( filter_size < 0 )
        filter_size = down_size;

    step_size = (int)((real)(down_size-filter_size)/(real)(up_size-1));

    PLASSERT( filter_size <= down_size );

    bool needs_forget = false; // do we need to reinitialize the parameters?
    if( weights.length() != up_size ||
        weights.width() != filter_size )
    {
        weights.resize( up_size, filter_size );
        needs_forget = true;
    }

    weights_pos_stats.resize( up_size, filter_size );
    weights_neg_stats.resize( up_size, filter_size );

    if( momentum != 0. )
        weights_inc.resize( up_size, filter_size );

    if( needs_forget ) {
        forget();
    }

    clearStats();
}
```
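The window layout set up by build_() can be checked in isolation. A minimal sketch, assuming hypothetical names `computeStepSize` and `windowStart`; the `up_size <= 1` guard is an addition of this sketch, since the listing divides by (up_size - 1) unconditionally, which is a division by zero when there is a single up unit.

```cpp
#include <cassert>

// step_size = (int)((down_size - filter_size) / (up_size - 1)), as in build_().
// filter_size == -1 (or any negative value) means "use the full input width".
int computeStepSize(int down_size, int up_size, int filter_size) {
    if (filter_size < 0) filter_size = down_size;
    assert(filter_size <= down_size);
    if (up_size <= 1) return 0;  // guard added in this sketch only
    return static_cast<int>(static_cast<double>(down_size - filter_size) /
                            static_cast<double>(up_size - 1));
}

// First down unit seen by up unit idx, as in filterStart().
int windowStart(int idx, int step_size) { return step_size * idx; }
```

For example, with down_size = 10, up_size = 4 and filter_size = 4, the step is 2 and the last window covers down units 6 to 9. With down_size = 11, the step is truncated to 2 as well, so down unit 10 is never connected to any up unit.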

| string PLearn::RBMSparse1DMatrixConnection::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

| void PLearn::RBMSparse1DMatrixConnection::computeProducts | ( | int | start, |

| int | length, | ||

| Mat & | activations, | ||

| bool | accumulate = false |

||

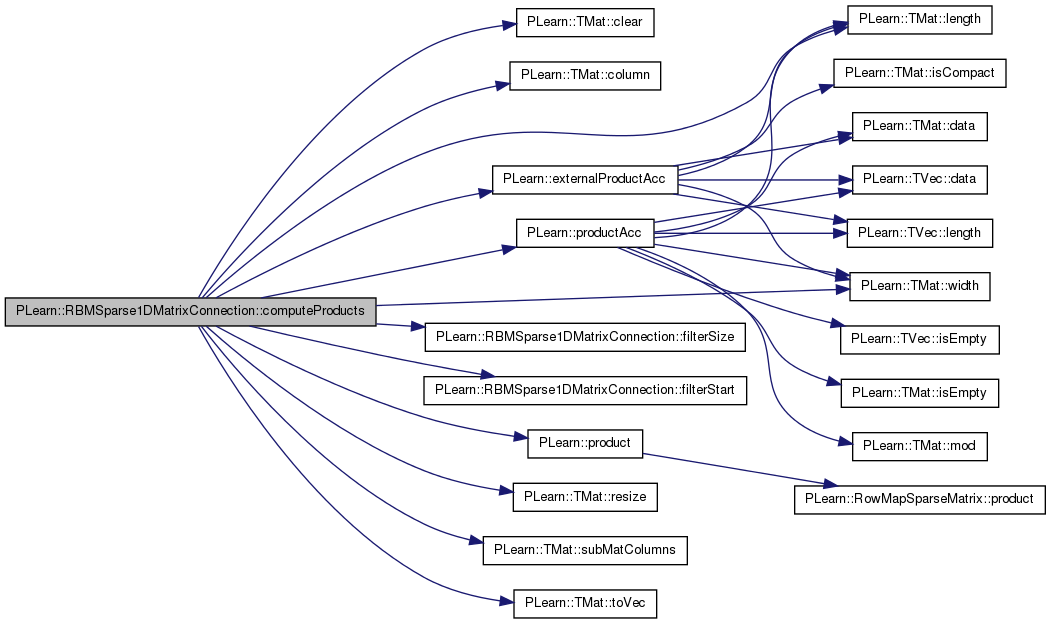

| ) | const [virtual] |

Computes the activation vectors of "length" units, starting from "start", and stores (or adds) them into "activations".

"start" indexes an up unit if "going_up", else a down unit.

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 181 of file RBMSparse1DMatrixConnection.cc.

References PLearn::TMat< T >::clear(), PLearn::TMat< T >::column(), PLearn::RBMConnection::down_size, PLearn::externalProductAcc(), filterSize(), filterStart(), PLearn::RBMConnection::going_up, i, PLearn::RBMConnection::inputs_mat, PLearn::TMat< T >::length(), PLASSERT, PLearn::product(), PLearn::productAcc(), PLearn::TMat< T >::resize(), PLearn::TMat< T >::subMatColumns(), PLearn::TMat< T >::toVec(), PLearn::RBMConnection::up_size, PLearn::RBMMatrixConnection::weights, and PLearn::TMat< T >::width().

```cpp
{
    PLASSERT( activations.width() == length );
    activations.resize(inputs_mat.length(), length);

    if( going_up )
    {
        PLASSERT( start+length <= up_size );
        // activations(k, i-start) += sum_j weights(i,j) inputs_mat(k, j)
        if( accumulate )
            for (int i=start; i<start+length; i++)
                productAcc( activations.column(i-start).toVec(),
                            inputs_mat.subMatColumns( filterStart(i), filterSize(i) ),
                            weights(i) );
        else
            for (int i=start; i<start+length; i++)
                product( activations.column(i-start).toVec(),
                         inputs_mat.subMatColumns( filterStart(i), filterSize(i) ),
                         weights(i) );
    }
    else
    {
        PLASSERT( start+length <= down_size );
        if( !accumulate )
            activations.clear();

        // activations(k, i-start) += sum_j weights(j,i) inputs_mat(k, j)
        Mat all_activations(inputs_mat.length(), down_size);
        all_activations.subMatColumns( start, length ) << activations;
        for (int i=0; i<up_size; i++)
        {
            externalProductAcc( all_activations.subMatColumns( filterStart(i), filterSize(i) ),
                                inputs_mat.column(i).toVec(),
                                weights(i) );
        }
        activations << all_activations.subMatColumns( start, length );
    }
}
```
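Going up, each up unit reads only its own window of down values; going down, each up unit scatters its contribution back into that window, so down units covered by several overlapping windows receive several terms. A minimal sketch of both directions over all units (start = 0, length = all), assuming a hypothetical `Mat` stand-in and hypothetical function names `upActivations` and `downActivations`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: one std::vector per row (sample).
using Mat = std::vector<std::vector<double>>;

// Going up: act(k, i) = sum_j W(i, j) * down(k, i * step + j)
Mat upActivations(const Mat& W, const Mat& down, std::size_t step) {
    Mat act(down.size(), std::vector<double>(W.size(), 0.0));
    for (std::size_t k = 0; k < down.size(); ++k)
        for (std::size_t i = 0; i < W.size(); ++i)
            for (std::size_t j = 0; j < W[i].size(); ++j)
                act[k][i] += W[i][j] * down[k][i * step + j];
    return act;
}

// Going down: up unit i adds up(k, i) * W(i, j) to down unit i * step + j.
Mat downActivations(const Mat& W, const Mat& up, std::size_t step,
                    std::size_t down_size) {
    Mat act(up.size(), std::vector<double>(down_size, 0.0));
    for (std::size_t k = 0; k < up.size(); ++k) {
        assert(up[k].size() == W.size());
        for (std::size_t i = 0; i < W.size(); ++i)
            for (std::size_t j = 0; j < W[i].size(); ++j)
                act[k][i * step + j] += up[k][i] * W[i][j];
    }
    return act;
}
```

With two windows of width 2 and step 1 over three down units, the middle down unit receives contributions from both up units.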

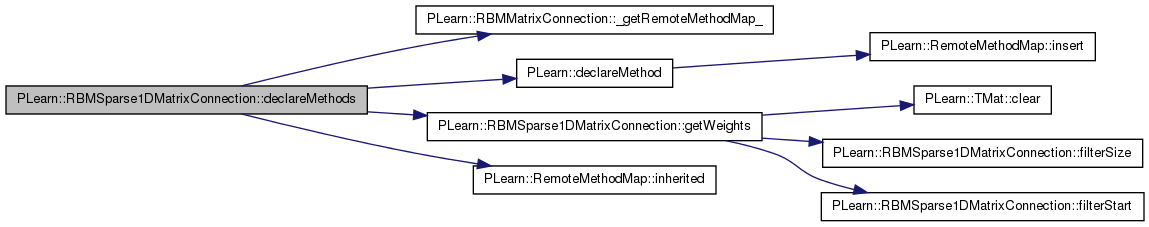

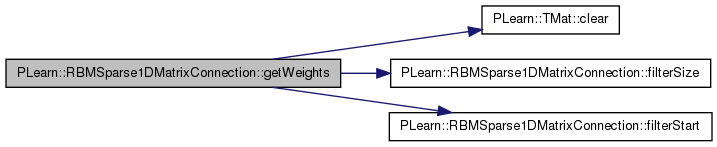

| void PLearn::RBMSparse1DMatrixConnection::declareMethods | ( | RemoteMethodMap & | rmm | ) | [static, protected] |

Declare the methods that are remote-callable.

Reimplemented from PLearn::RBMConnection.

Definition at line 79 of file RBMSparse1DMatrixConnection.cc.

References PLearn::RBMMatrixConnection::_getRemoteMethodMap_(), PLearn::declareMethod(), getWeights(), and PLearn::RemoteMethodMap::inherited().

```cpp
{
    // Insert a backpointer to remote methods; note that this is different from
    // declareOptions().
    rmm.inherited(inherited::_getRemoteMethodMap_());

    declareMethod(
        rmm, "getWeights", &RBMSparse1DMatrixConnection::getWeights,
        (BodyDoc("Returns the full weights (including 0s).\n"),
         RetDoc ("Matrix of weights (n_hidden x input_size)")));
}
```

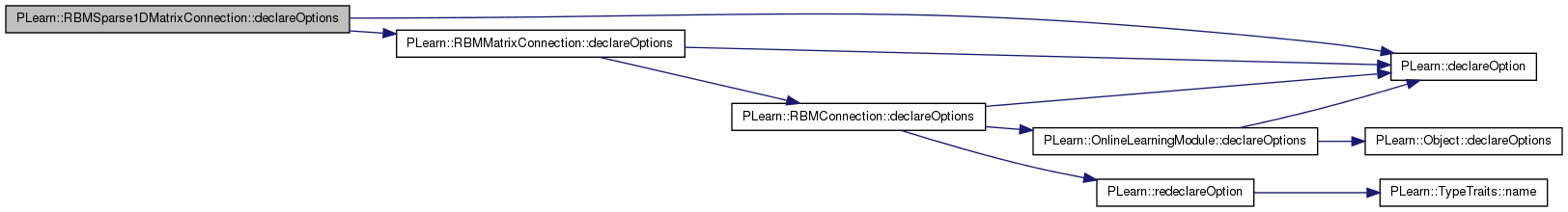

| void PLearn::RBMSparse1DMatrixConnection::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 58 of file RBMSparse1DMatrixConnection.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::RBMMatrixConnection::declareOptions(), enforce_positive_weights, filter_size, PLearn::OptionBase::learntoption, and step_size.

```cpp
{
    declareOption(ol, "filter_size", &RBMSparse1DMatrixConnection::filter_size,
                  OptionBase::buildoption,
                  "Length of each filter. If -1 then input_size is taken (RBMMatrixConnection).");

    declareOption(ol, "enforce_positive_weights", &RBMSparse1DMatrixConnection::enforce_positive_weights,
                  OptionBase::buildoption,
                  "Whether or not to enforce having positive weights.");

    declareOption(ol, "step_size", &RBMSparse1DMatrixConnection::step_size,
                  OptionBase::learntoption,
                  "Step between each filter.");

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
```

| static const PPath& PLearn::RBMSparse1DMatrixConnection::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 118 of file RBMSparse1DMatrixConnection.h.


| RBMSparse1DMatrixConnection * PLearn::RBMSparse1DMatrixConnection::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

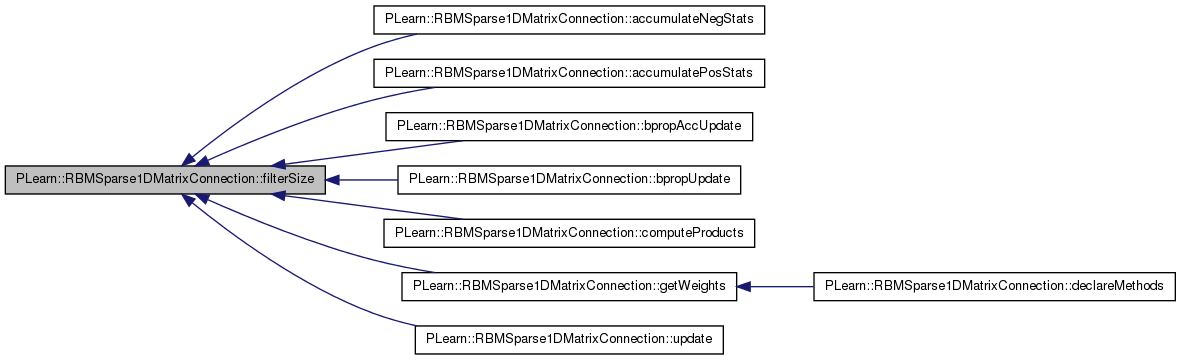

| int PLearn::RBMSparse1DMatrixConnection::filterSize | ( | int | idx | ) | const [private] |

Definition at line 135 of file RBMSparse1DMatrixConnection.cc.

References filter_size.

Referenced by accumulateNegStats(), accumulatePosStats(), bpropAccUpdate(), bpropUpdate(), computeProducts(), getWeights(), and update().

```cpp
{
    return filter_size;
}
```

| int PLearn::RBMSparse1DMatrixConnection::filterStart | ( | int | idx | ) | const [private] |

Definition at line 130 of file RBMSparse1DMatrixConnection.cc.

References step_size.

Referenced by accumulateNegStats(), accumulatePosStats(), bpropAccUpdate(), bpropUpdate(), computeProducts(), getWeights(), and update().

```cpp
{
    return step_size*idx;
}
```

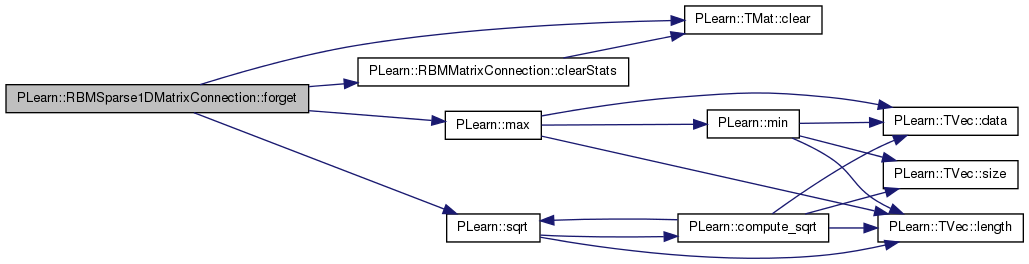

| void PLearn::RBMSparse1DMatrixConnection::forget | ( | ) | [virtual] |

Resets the parameters to the state they would be in before training starts.

In this class, build_() calls forget() only when the weight matrix has to be resized to up_size x filter_size.

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 397 of file RBMSparse1DMatrixConnection.cc.

References PLearn::TMat< T >::clear(), PLearn::RBMMatrixConnection::clearStats(), d, enforce_positive_weights, filter_size, PLearn::RBMConnection::initialization_method, PLearn::RBMMatrixConnection::L2_n_updates, PLearn::max(), PLWARNING, PLearn::OnlineLearningModule::random_gen, PLearn::sqrt(), PLearn::RBMConnection::up_size, and PLearn::RBMMatrixConnection::weights.

Referenced by build_().

```cpp
{
    clearStats();

    if( initialization_method == "zero" )
        weights.clear();
    else
    {
        if( !random_gen ) {
            PLWARNING( "RBMSparse1DMatrixConnection: cannot forget() without"
                       " random_gen" );
            return;
        }

        real d = 1. / max( filter_size, up_size );
        if( initialization_method == "uniform_sqrt" )
            d = sqrt( d );

        if( enforce_positive_weights )
            random_gen->fill_random_uniform( weights, real(0), d );
        else
            random_gen->fill_random_uniform( weights, -d, d );
    }

    L2_n_updates = 0;
}
```
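The initialization bound used by forget() depends only on the shape of the sparse weight matrix. A minimal sketch with the standard `<random>` library instead of PLearn's `random_gen`, assuming a hypothetical `Mat` stand-in and a hypothetical function name `initWeights`; it omits the clearStats() call and the L2_n_updates reset. Note that `std::uniform_real_distribution` draws from a half-open interval.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <random>
#include <string>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: one std::vector per row.
using Mat = std::vector<std::vector<double>>;

// "zero" clears the weights; any other method draws them uniformly with bound
// d = 1 / max(filter_size, up_size), square-rooted for "uniform_sqrt",
// over [0, d) if enforce_positive is set and over [-d, d) otherwise.
void initWeights(Mat& W, const std::string& method, bool enforce_positive,
                 std::mt19937& rng) {
    if (method == "zero") {
        for (auto& row : W) std::fill(row.begin(), row.end(), 0.0);
        return;
    }
    const int up_size = static_cast<int>(W.size());
    const int filter_size = up_size > 0 ? static_cast<int>(W[0].size()) : 0;
    assert(up_size > 0 && filter_size > 0);
    double d = 1.0 / std::max(filter_size, up_size);
    if (method == "uniform_sqrt") d = std::sqrt(d);
    std::uniform_real_distribution<double> dist(enforce_positive ? 0.0 : -d, d);
    for (auto& row : W)
        for (double& w : row) w = dist(rng);
}
```

For a 2 x 4 weight matrix with "uniform_sqrt", the bound is sqrt(1/4) = 0.5. Because the bound uses filter_size rather than down_size, the initial weights of a sparse connection can be larger than those of a dense one with the same layers.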

| void PLearn::RBMSparse1DMatrixConnection::fprop | ( | const Vec & input, const Mat & rbm_weights, Vec & output | ) | const [virtual] |

Given the input and the connection weights, computes the output (resizing it if necessary). Not implemented in this class: it raises PLERROR.

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 223 of file RBMSparse1DMatrixConnection.cc.

References PLERROR.

```cpp
{
    PLERROR("RBMSparse1DMatrixConnection::fprop not implemented.");
}
```

| OptionList & PLearn::RBMSparse1DMatrixConnection::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

| OptionMap & PLearn::RBMSparse1DMatrixConnection::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

| RemoteMethodMap & PLearn::RBMSparse1DMatrixConnection::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 50 of file RBMSparse1DMatrixConnection.cc.

| Mat PLearn::RBMSparse1DMatrixConnection::getWeights | ( | ) | const [virtual] |

Definition at line 140 of file RBMSparse1DMatrixConnection.cc.

References PLearn::TMat< T >::clear(), PLearn::RBMConnection::down_size, filterSize(), filterStart(), i, PLearn::RBMConnection::up_size, w, and PLearn::RBMMatrixConnection::weights.

Referenced by declareMethods().

```cpp
{
    Mat w( up_size, down_size);
    w.clear();
    for ( int i=0; i<up_size; i++)
        w(i).subVec( filterStart(i), filterSize(i) ) << weights(i);
    return w;
}
```
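getWeights() expands the compact up_size x filter_size matrix into a dense up_size x down_size one, copying row i into columns filterStart(i) onward and leaving everything else at zero. A minimal sketch of the same expansion, assuming a hypothetical `Mat` stand-in and a hypothetical function name `expandWeights`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: one std::vector per row.
using Mat = std::vector<std::vector<double>>;

// Dense matrix whose row i holds W(i, :) starting at column i * step, zeros elsewhere.
Mat expandWeights(const Mat& W, std::size_t step, std::size_t down_size) {
    Mat full(W.size(), std::vector<double>(down_size, 0.0));
    for (std::size_t i = 0; i < W.size(); ++i) {
        const std::size_t start = i * step;
        assert(start + W[i].size() <= down_size);  // window must fit in the input
        std::copy(W[i].begin(), W[i].end(),
                  full[i].begin() + static_cast<std::ptrdiff_t>(start));
    }
    return full;
}
```

This dense form is convenient for inspection or plotting, but it is not what the class stores or updates.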

| int PLearn::RBMSparse1DMatrixConnection::nParameters | ( | ) | const [virtual] |

Returns the number of parameters, i.e. the number of entries in the up_size x filter_size weight matrix.

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 422 of file RBMSparse1DMatrixConnection.cc.

References PLearn::TMat< T >::size(), and PLearn::RBMMatrixConnection::weights.

```cpp
{
    return weights.size();
}
```
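Since only the windows are stored, the parameter count scales with filter_size rather than down_size. A small worked comparison, assuming `TMat::size()` returns length x width; the function names are hypothetical, and the sizes in the test (100 up units, 784 down units, windows of 25) are arbitrary illustration values.

```cpp
#include <cassert>

// Weights stored by the sparse connection: one filter_size-long row per up unit.
long sparseParameterCount(long up_size, long filter_size) {
    assert(up_size >= 0 && filter_size >= 0);
    return up_size * filter_size;
}

// Entries of the equivalent dense up_size x down_size matrix (as from getWeights()).
long denseParameterCount(long up_size, long down_size) {
    assert(up_size >= 0 && down_size >= 0);
    return up_size * down_size;
}
```

With 100 up units over 784 down units and windows of 25, the sparse connection stores 2500 weights instead of 78400.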

| void PLearn::RBMSparse1DMatrixConnection::update | ( | const Mat & pos_down_values, const Mat & pos_up_values, const Mat & neg_down_values, const Mat & neg_up_values | ) | [virtual] |

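Updates the weights from positive- and negative-phase values, using the gradient averaged over the mini-batch. Only the momentum == 0 case is implemented; a non-zero momentum raises PLERROR.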

Reimplemented from PLearn::RBMMatrixConnection.

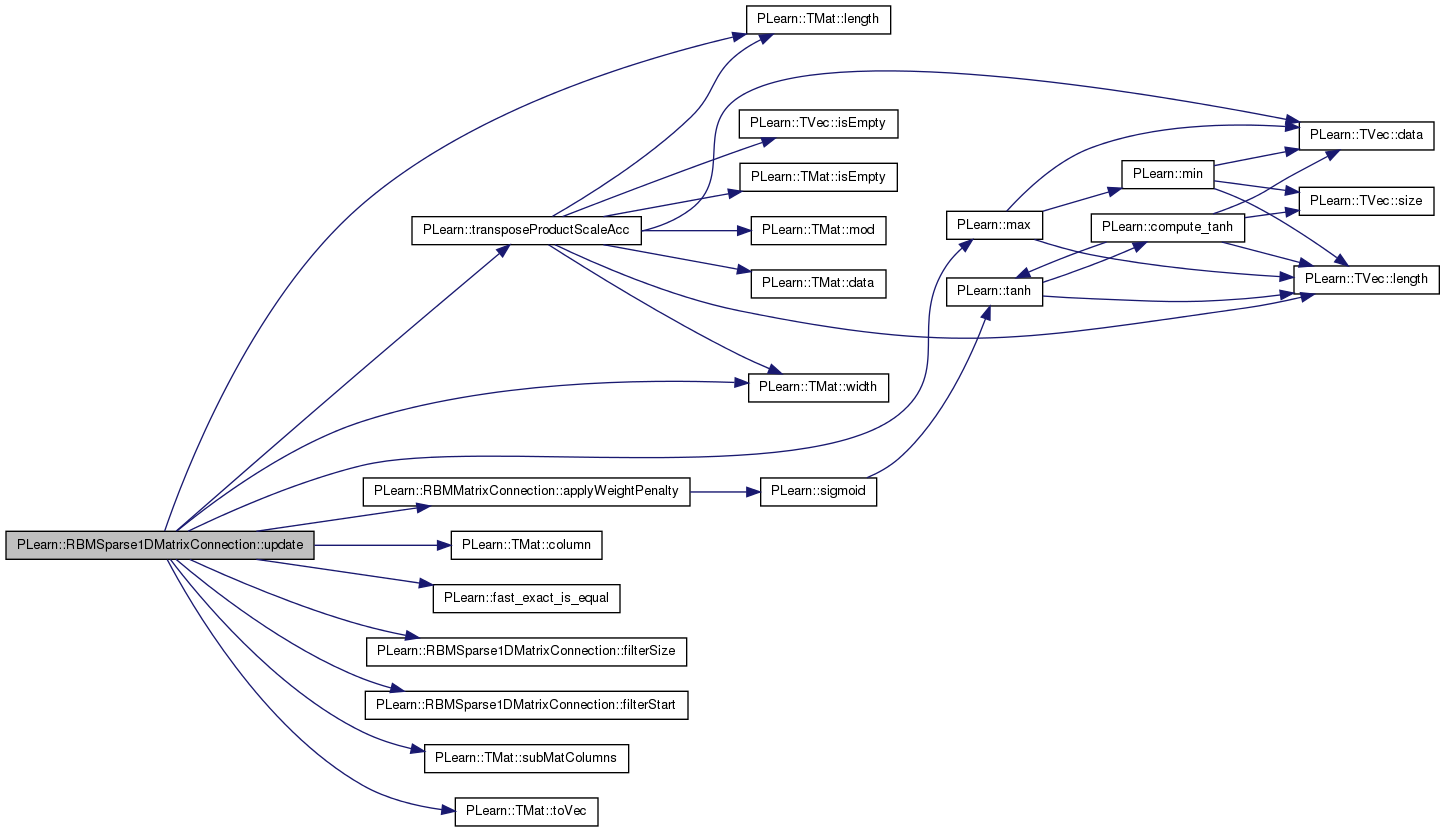

Definition at line 349 of file RBMSparse1DMatrixConnection.cc.

References PLearn::RBMMatrixConnection::applyWeightPenalty(), PLearn::TMat< T >::column(), PLearn::RBMConnection::down_size, enforce_positive_weights, PLearn::fast_exact_is_equal(), filter_size, filterSize(), filterStart(), i, j, PLearn::RBMMatrixConnection::L1_penalty_factor, PLearn::RBMMatrixConnection::L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLearn::max(), PLearn::RBMConnection::momentum, PLASSERT, PLERROR, PLearn::TMat< T >::subMatColumns(), PLearn::TMat< T >::toVec(), PLearn::transposeProductScaleAcc(), PLearn::RBMConnection::up_size, PLearn::RBMMatrixConnection::weights, and PLearn::TMat< T >::width().

```cpp
{
    // weights += learning_rate * ( h_0 v_0' - h_1 v_1' );
    // or:
    // weights[i][j] += learning_rate * (h_0[i] v_0[j] - h_1[i] v_1[j]);

    PLASSERT( pos_up_values.width() == weights.length() );
    PLASSERT( neg_up_values.width() == weights.length() );
    PLASSERT( pos_down_values.width() == down_size );
    PLASSERT( neg_down_values.width() == down_size );

    if( momentum == 0. )
    {
        // We use the average gradient over a mini-batch.
        real avg_lr = learning_rate / pos_down_values.length();

        for (int i=0; i<up_size; i++) {
            int filter_start= filterStart(i), length= filterSize(i);

            transposeProductScaleAcc( weights(i),
                                      pos_down_values.subMatColumns( filter_start, length ),
                                      pos_up_values.column(i).toVec(),
                                      avg_lr, real(1));

            transposeProductScaleAcc( weights(i),
                                      neg_down_values.subMatColumns( filter_start, length ),
                                      neg_up_values.column(i).toVec(),
                                      -avg_lr, real(1));

            if( enforce_positive_weights )
                for (int j=0; j<filter_size; j++)
                    weights(i,j)= max( real(0), weights(i,j) );
        }
    }
    else
        PLERROR("RBMSparse1DMatrixConnection::update minibatch with momentum - Not implemented");

    if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))
        applyWeightPenalty();
}
```
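With zero momentum, update() applies a contrastive-divergence style step restricted to each window: the positive-phase term is added and the negative-phase term subtracted, both scaled by learning_rate divided by the number of positive-phase samples. A minimal sketch with plain loops, assuming a hypothetical `Mat` stand-in and a hypothetical function name `cdUpdate`, and omitting the L1/L2 penalty.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: one std::vector per row (sample).
using Mat = std::vector<std::vector<double>>;

// W(i, j) += lr / n * sum_k pos_up(k, i) * pos_down(k, s_i + j)
// W(i, j) -= lr / n * sum_k neg_up(k, i) * neg_down(k, s_i + j)
// with s_i = i * step and n the number of positive-phase samples.
void cdUpdate(Mat& W, const Mat& pos_down, const Mat& pos_up,
              const Mat& neg_down, const Mat& neg_up,
              std::size_t step, double learning_rate, bool enforce_positive) {
    assert(pos_up.size() == pos_down.size() && neg_up.size() == neg_down.size());
    const double avg_lr = learning_rate / static_cast<double>(pos_down.size());
    for (std::size_t i = 0; i < W.size(); ++i) {
        const std::size_t start = i * step;
        for (std::size_t j = 0; j < W[i].size(); ++j) {
            double pos = 0.0, neg = 0.0;
            for (std::size_t k = 0; k < pos_down.size(); ++k)
                pos += pos_up[k][i] * pos_down[k][start + j];
            for (std::size_t k = 0; k < neg_down.size(); ++k)
                neg += neg_up[k][i] * neg_down[k][start + j];
            W[i][j] += avg_lr * pos;  // positive phase
            W[i][j] -= avg_lr * neg;  // negative phase
            if (enforce_positive) W[i][j] = std::max(0.0, W[i][j]);
        }
    }
}
```

Clipping happens after both phases are applied, so a weight is only zeroed when the combined step makes it negative.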

| StaticInitializer PLearn::RBMSparse1DMatrixConnection::_static_initializer_ | [static] |

Reimplemented from PLearn::RBMMatrixConnection.

Definition at line 118 of file RBMSparse1DMatrixConnection.h.

| bool PLearn::RBMSparse1DMatrixConnection::enforce_positive_weights |

Definition at line 62 of file RBMSparse1DMatrixConnection.h.

Referenced by bpropAccUpdate(), bpropUpdate(), declareOptions(), forget(), and update().

| int PLearn::RBMSparse1DMatrixConnection::filter_size |

Definition at line 60 of file RBMSparse1DMatrixConnection.h.

Referenced by bpropAccUpdate(), bpropUpdate(), build_(), declareOptions(), filterSize(), forget(), and update().

| int PLearn::RBMSparse1DMatrixConnection::step_size |

Definition at line 66 of file RBMSparse1DMatrixConnection.h.

Referenced by build_(), declareOptions(), and filterStart().

1.7.4
