PLearn 0.1

Stores and learns the parameters between two linear layers of an RBM.

#include <RBMMatrixConnection.h>

Public Member Functions | |

| RBMMatrixConnection (real the_learning_rate=0) | |

| Default constructor. | |

| virtual void | accumulatePosStats (const Vec &down_values, const Vec &up_values) |

| Accumulates positive phase statistics to *_pos_stats. | |

| virtual void | accumulatePosStats (const Mat &down_values, const Mat &up_values) |

| virtual void | accumulateNegStats (const Vec &down_values, const Vec &up_values) |

| Accumulates negative phase statistics to *_neg_stats. | |

| virtual void | accumulateNegStats (const Mat &down_values, const Mat &up_values) |

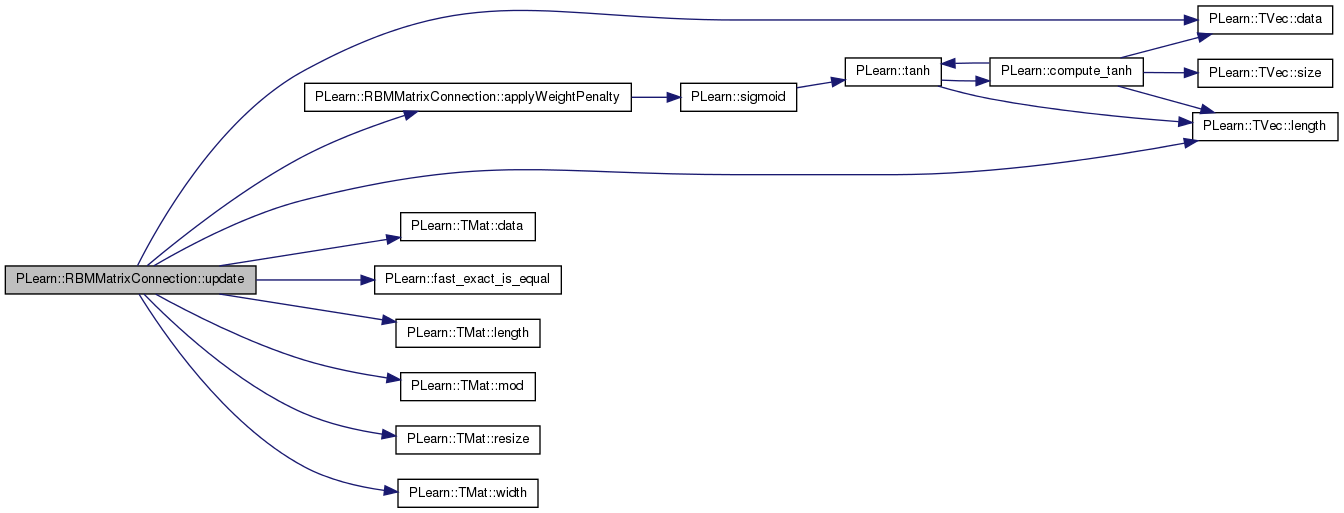

| virtual void | update () |

| Updates parameters according to contrastive divergence gradient. | |

| virtual void | update (const Vec &pos_down_values, const Vec &pos_up_values, const Vec &neg_down_values, const Vec &neg_up_values) |

| Updates parameters according to contrastive divergence gradient, not using the statistics but the explicit values passed. | |

| virtual void | update (const Mat &pos_down_values, const Mat &pos_up_values, const Mat &neg_down_values, const Mat &neg_up_values) |

| Not implemented. | |

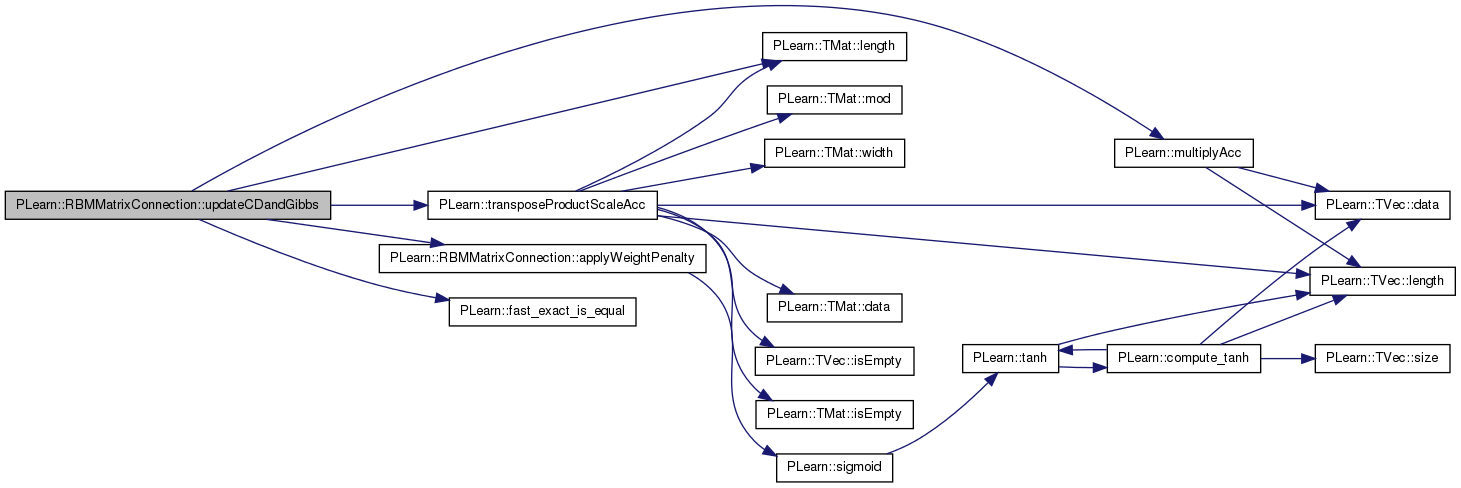

| virtual void | updateCDandGibbs (const Mat &pos_down_values, const Mat &pos_up_values, const Mat &cd_neg_down_values, const Mat &cd_neg_up_values, const Mat &gibbs_neg_down_values, const Mat &gibbs_neg_up_values, real background_gibbs_update_ratio) |

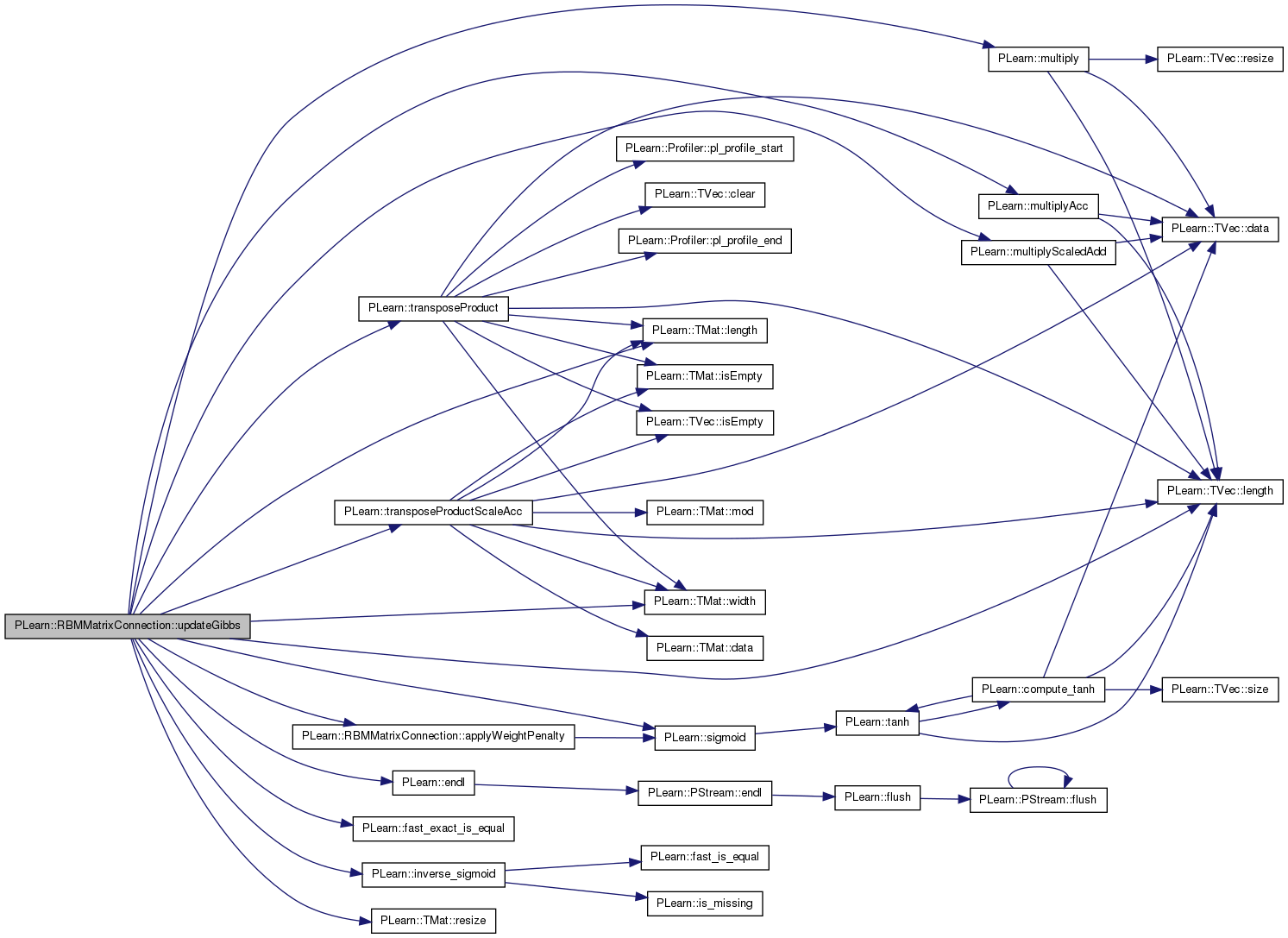

| virtual void | updateGibbs (const Mat &pos_down_values, const Mat &pos_up_values, const Mat &gibbs_neg_down_values, const Mat &gibbs_neg_up_values) |

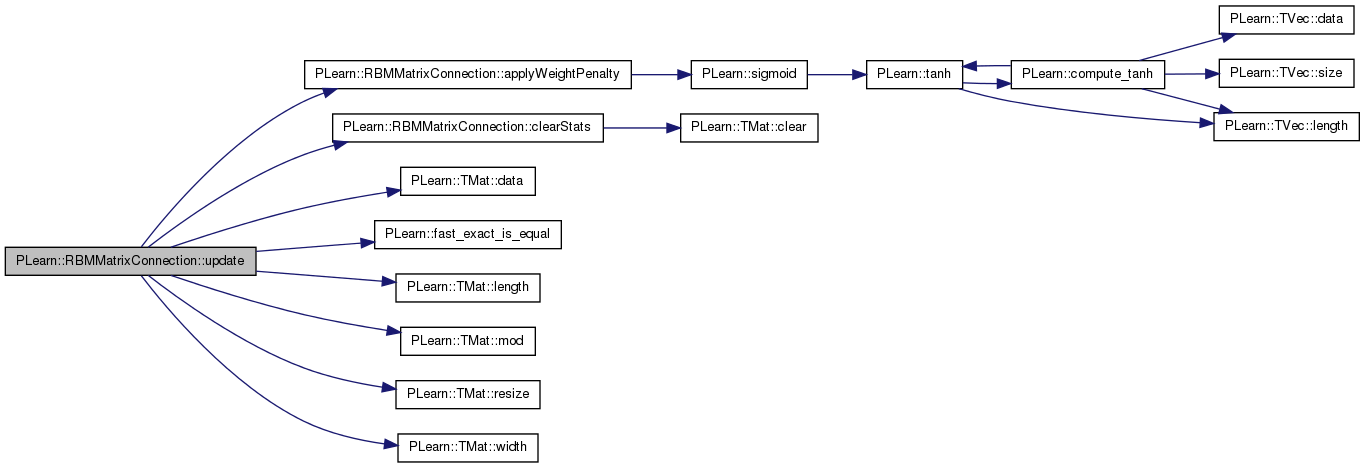

| virtual void | clearStats () |

| Clears all accumulated statistics. | |

| virtual void | computeProduct (int start, int length, const Vec &activations, bool accumulate=false) const |

| Computes the activation vector of "length" units, starting at "start", and stores it in (or adds it to) "activations". | |

| virtual void | computeProducts (int start, int length, Mat &activations, bool accumulate=false) const |

| Same as 'computeProduct' but for mini-batches. | |

| virtual void | fprop (const Vec &input, const Mat &rbm_weights, Vec &output) const |

| Given the input and the connection weights, computes the output (possibly resizing it appropriately). | |

| virtual void | getAllWeights (Mat &rbm_weights) const |

| provide the internal weight values (not a copy) | |

| virtual void | setAllWeights (const Mat &rbm_weights) |

| set the internal weight values to rbm_weights (not a copy) | |

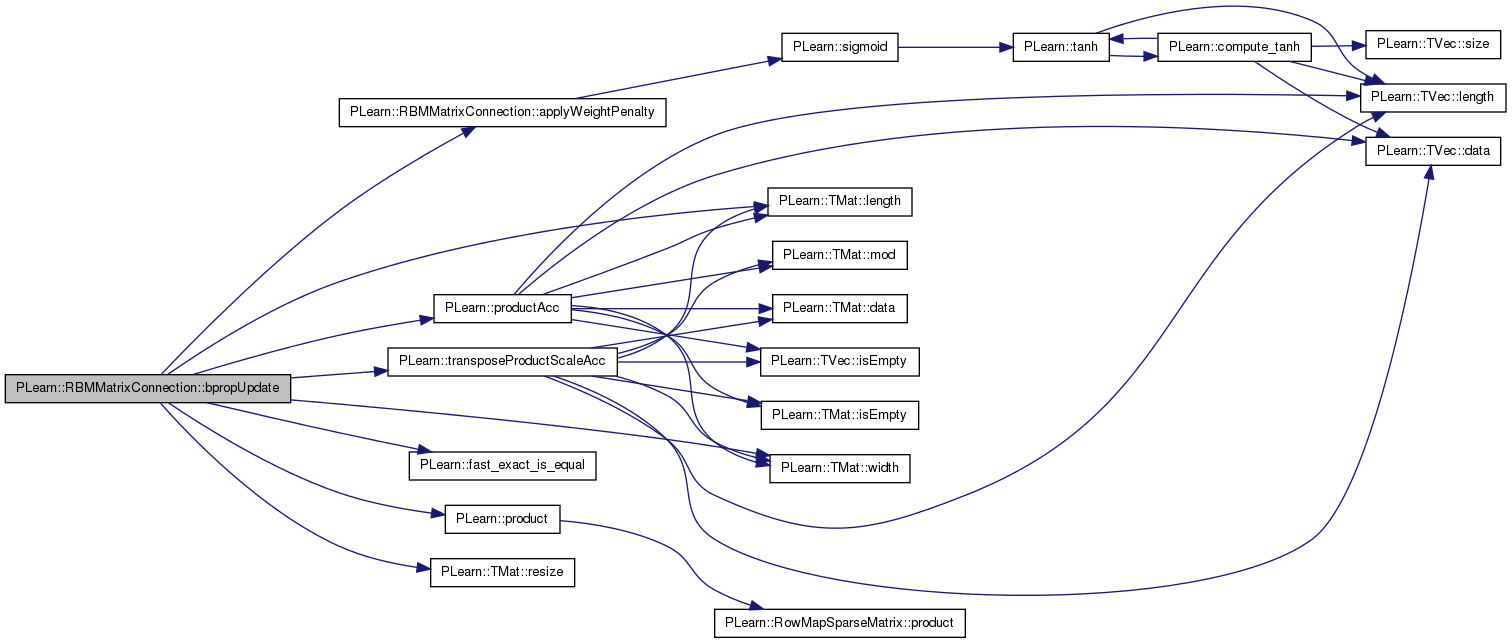

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false) |

| This version also provides the input gradient. | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false) |

| SOON TO BE DEPRECATED, USE bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient) | |

| virtual void | bpropAccUpdate (const TVec< Mat * > &ports_value, const TVec< Mat * > &ports_gradient) |

| Perform a back propagation step (also updating parameters according to the provided gradient). | |

| virtual void | petiteCulotteOlivierUpdate (const Vec &input, const Mat &rbm_weights, const Vec &output, Vec &input_gradient, Mat &rbm_weights_gradient, const Vec &output_gradient, bool accumulate=false) |

| back-propagates the output gradient to the input and the weights (the weights are not updated) | |

| virtual void | petiteCulotteOlivierCD (Mat &weights_gradient, bool accumulate=false) |

| Computes the contrastive divergence gradient with respect to the weights. Note that bpropCD does not call clearStats(). | |

| virtual void | petiteCulotteOlivierCD (const Vec &pos_down_values, const Vec &pos_up_values, const Vec &neg_down_values, const Vec &neg_up_values, Mat &weights_gradient, bool accumulate=false) |

| Computes the contrastive divergence gradient with respect to the weights given the positive and negative phase values. | |

| virtual void | applyWeightPenalty () |

| Applies penalty (decay) on weights. | |

| virtual void | addWeightPenalty (Mat weights, Mat weight_gradients) |

| Adds penalty (decay) gradient. | |

| virtual void | forget () |

| Resets the parameters to the state they would be in before training starts. | |

| virtual int | nParameters () const |

| Returns the number of parameters. | |

| virtual Vec | makeParametersPointHere (const Vec &global_parameters) |

| Make the parameters data be sub-vectors of the given global_parameters. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMMatrixConnection * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| Vec | gibbs_ma_schedule |

| Background Gibbs chain options. Each element of this vector is a number of updates after which the moving average coefficient is incremented (by incrementing its inverse sigmoid by gibbs_ma_increment). | |

| real | gibbs_ma_increment |

| real | gibbs_initial_ma_coefficient |

| real | L1_penalty_factor |

| Optional (default=0) factor of L1 regularization term. | |

| real | L2_penalty_factor |

| Optional (default=0) factor of L2 regularization term. | |

| real | L2_decrease_constant |

| real | L2_shift |

| string | L2_decrease_type |

| int | L2_n_updates |

| Mat | weights |

| Matrix containing unit-to-unit weights (up_size × down_size) | |

| real | gibbs_ma_coefficient |

| used for Gibbs chain methods only | |

| Mat | weights_pos_stats |

| Accumulates positive contribution to the weights' gradient. | |

| Mat | weights_neg_stats |

| Accumulates negative contribution to the weights' gradient. | |

| Mat | weights_inc |

| Used if momentum != 0. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Private Types | |

| typedef RBMConnection | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Stores and learns the parameters between two linear layers of an RBM.

Definition at line 53 of file RBMMatrixConnection.h.

typedef RBMConnection PLearn::RBMMatrixConnection::inherited [private] |

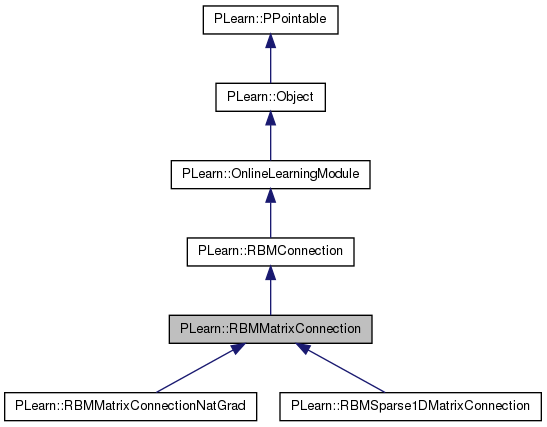

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 55 of file RBMMatrixConnection.h.

| PLearn::RBMMatrixConnection::RBMMatrixConnection | ( | real | the_learning_rate = 0 | ) |

Default constructor.

Definition at line 52 of file RBMMatrixConnection.cc.

:

inherited(the_learning_rate),

gibbs_ma_increment(0.1),

gibbs_initial_ma_coefficient(0.1),

L1_penalty_factor(0),

L2_penalty_factor(0),

L2_decrease_constant(0),

L2_shift(100),

L2_decrease_type("one_over_t"),

L2_n_updates(0)

{

}

| string PLearn::RBMMatrixConnection::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

| OptionList & PLearn::RBMMatrixConnection::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

| RemoteMethodMap & PLearn::RBMMatrixConnection::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

Referenced by PLearn::RBMSparse1DMatrixConnection::declareMethods().

| bool PLearn::RBMMatrixConnection::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

| Object * PLearn::RBMMatrixConnection::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

| void PLearn::RBMMatrixConnection::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

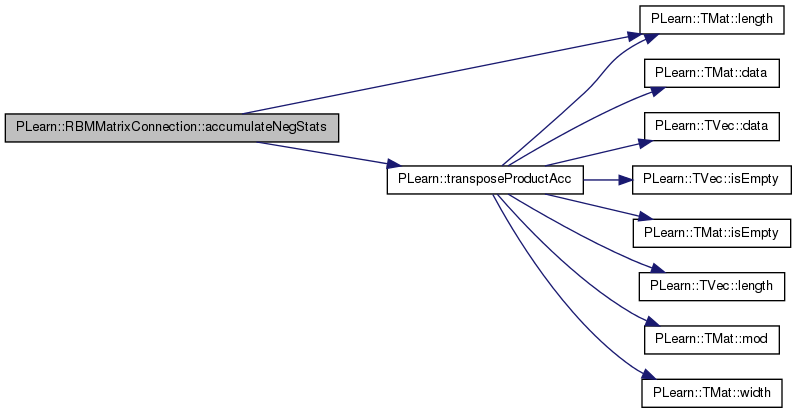

| void PLearn::RBMMatrixConnection::accumulateNegStats | ( | const Mat & | down_values, |

| const Mat & | up_values | ||

| ) | [virtual] |

Implements PLearn::RBMConnection.

Reimplemented in PLearn::RBMSparse1DMatrixConnection.

Definition at line 216 of file RBMMatrixConnection.cc.

References PLearn::TMat< T >::length(), PLearn::RBMConnection::neg_count, PLASSERT, PLearn::transposeProductAcc(), and weights_neg_stats.

{

int mbs=down_values.length();

PLASSERT(up_values.length()==mbs);

// weights_neg_stats += up_values' * down_values

transposeProductAcc(weights_neg_stats, up_values, down_values);

neg_count+=mbs;

}

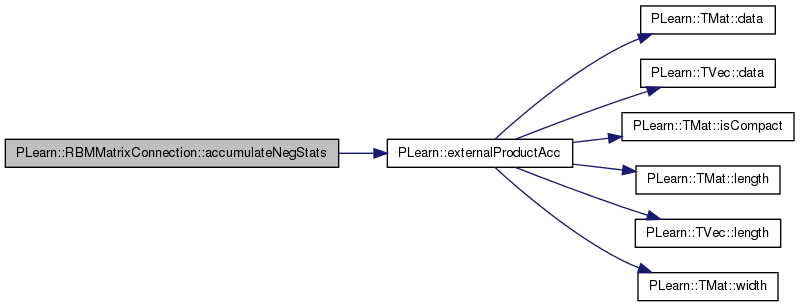

| void PLearn::RBMMatrixConnection::accumulateNegStats | ( | const Vec & | down_values, |

| const Vec & | up_values | ||

| ) | [virtual] |

Accumulates negative phase statistics to *_neg_stats.

Implements PLearn::RBMConnection.

Definition at line 207 of file RBMMatrixConnection.cc.

References PLearn::externalProductAcc(), PLearn::RBMConnection::neg_count, and weights_neg_stats.

{

// weights_neg_stats += up_values * down_values'

externalProductAcc( weights_neg_stats, up_values, down_values );

neg_count++;

}

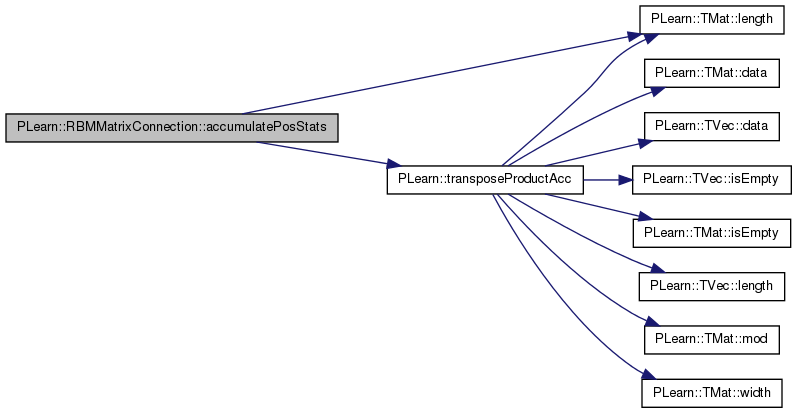

| void PLearn::RBMMatrixConnection::accumulatePosStats | ( | const Mat & | down_values, |

| const Mat & | up_values | ||

| ) | [virtual] |

Implements PLearn::RBMConnection.

Reimplemented in PLearn::RBMSparse1DMatrixConnection.

Definition at line 194 of file RBMMatrixConnection.cc.

References PLearn::TMat< T >::length(), PLASSERT, PLearn::RBMConnection::pos_count, PLearn::transposeProductAcc(), and weights_pos_stats.

{

int mbs=down_values.length();

PLASSERT(up_values.length()==mbs);

// weights_pos_stats += up_values' * down_values

transposeProductAcc(weights_pos_stats, up_values, down_values);

pos_count+=mbs;

}

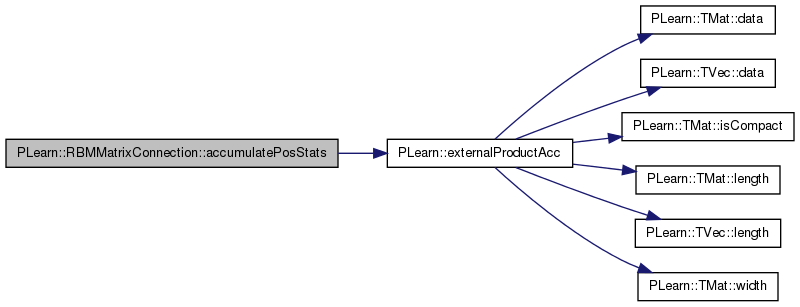

| void PLearn::RBMMatrixConnection::accumulatePosStats | ( | const Vec & | down_values, |

| const Vec & | up_values | ||

| ) | [virtual] |

Accumulates positive phase statistics to *_pos_stats.

Implements PLearn::RBMConnection.

Definition at line 185 of file RBMMatrixConnection.cc.

References PLearn::externalProductAcc(), PLearn::RBMConnection::pos_count, and weights_pos_stats.

{

// weights_pos_stats += up_values * down_values'

externalProductAcc( weights_pos_stats, up_values, down_values );

pos_count++;

}
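As a rough illustration of the accumulation methods above, the sketch below uses a small hypothetical `Matrix` struct (a stand-in, not PLearn's `Mat`) and two hypothetical helpers, `accumulateOuter` and `accumulateBatch`. It assumes that each row of a mini-batch matrix holds one example, so the batch update equals the sum of the per-example outer products.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: dense, row-major storage.
struct Matrix {
    std::size_t rows, cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0) {}
    double& operator()(std::size_t i, std::size_t j) { return data[i * cols + j]; }
    double operator()(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// Single example: stats += up * down' (a rank-one update).
void accumulateOuter(Matrix& stats, const std::vector<double>& up,
                     const std::vector<double>& down) {
    assert(stats.rows == up.size() && stats.cols == down.size());
    for (std::size_t i = 0; i < up.size(); ++i)
        for (std::size_t j = 0; j < down.size(); ++j)
            stats(i, j) += up[i] * down[j];
}

// Mini-batch: stats += up' * down, where row k of each matrix is example k.
// Returns the number of examples, which the caller adds to its counter.
std::size_t accumulateBatch(Matrix& stats, const Matrix& up, const Matrix& down) {
    assert(up.rows == down.rows);
    assert(stats.rows == up.cols && stats.cols == down.cols);
    for (std::size_t k = 0; k < up.rows; ++k)
        for (std::size_t i = 0; i < up.cols; ++i)
            for (std::size_t j = 0; j < down.cols; ++j)
                stats(i, j) += up(k, i) * down(k, j);
    return up.rows;
}
```

Keeping the example count alongside the accumulated sums lets a later update step turn them into averages.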

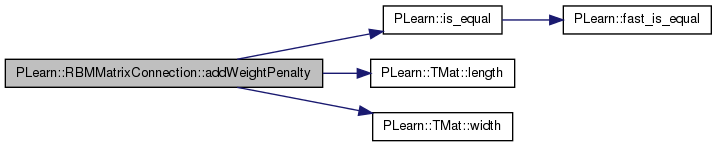

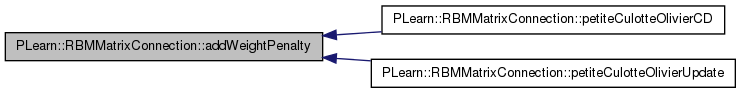

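| void PLearn::RBMMatrixConnection::addWeightPenalty | ( | Mat | weights, |

| Mat | weight_gradients | ||

| ) | [virtual] |

Adds penalty (decay) gradient.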

Definition at line 889 of file RBMMatrixConnection.cc.

References i, PLearn::is_equal(), j, L1_penalty_factor, L2_decrease_constant, L2_penalty_factor, L2_shift, PLearn::TMat< T >::length(), PLASSERT_MSG, and PLearn::TMat< T >::width().

Referenced by petiteCulotteOlivierCD(), and petiteCulotteOlivierUpdate().

{

// Add penalty (decay) gradient.

real delta_L1 = L1_penalty_factor;

real delta_L2 = L2_penalty_factor;

PLASSERT_MSG( is_equal(L2_decrease_constant, 0) && is_equal(L2_shift, 100),

"L2 decrease not implemented in this method" );

for( int i=0; i<weights.length(); i++)

{

real* w_ = weights[i];

real* gw_ = weight_gradients[i];

for( int j=0; j<weights.width(); j++ )

{

if( delta_L2 != 0. )

gw_[j] += delta_L2*w_[j];

if( delta_L1 != 0. )

{

if( w_[j] > 0 )

gw_[j] += delta_L1;

else if( w_[j] < 0 )

gw_[j] -= delta_L1;

}

}

}

}
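The penalty gradient computed above can be sketched on flat vectors as follows. This is a minimal illustration, not the library's code: `addPenaltyGradient` is a hypothetical name, and the penalty is assumed to be `l1 * |w| + 0.5 * l2 * w^2` per weight, which matches the option descriptions for `L1_penalty_factor` and `L2_penalty_factor`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Adds d/dw [ l1 * |w| + 0.5 * l2 * w^2 ] to each gradient entry.
// At w == 0 the L1 term contributes nothing, as in the listing above.
void addPenaltyGradient(const std::vector<double>& weights,
                        std::vector<double>& gradients,
                        double l1, double l2) {
    assert(weights.size() == gradients.size());
    for (std::size_t k = 0; k < weights.size(); ++k) {
        const double w = weights[k];
        gradients[k] += l2 * w;          // L2 term: l2 * w
        if (w > 0)
            gradients[k] += l1;          // L1 term: +l1 for positive weights
        else if (w < 0)
            gradients[k] -= l1;          // L1 term: -l1 for negative weights
    }
}
```

Unlike applyWeightPenalty(), this variant only adds to a gradient buffer and leaves the weights untouched.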

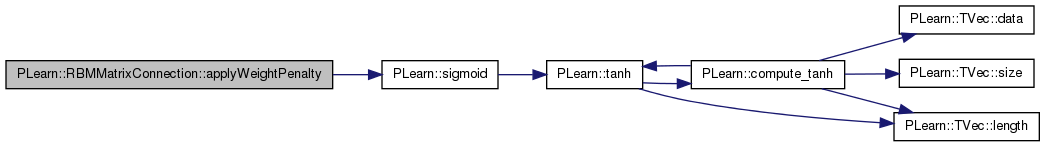

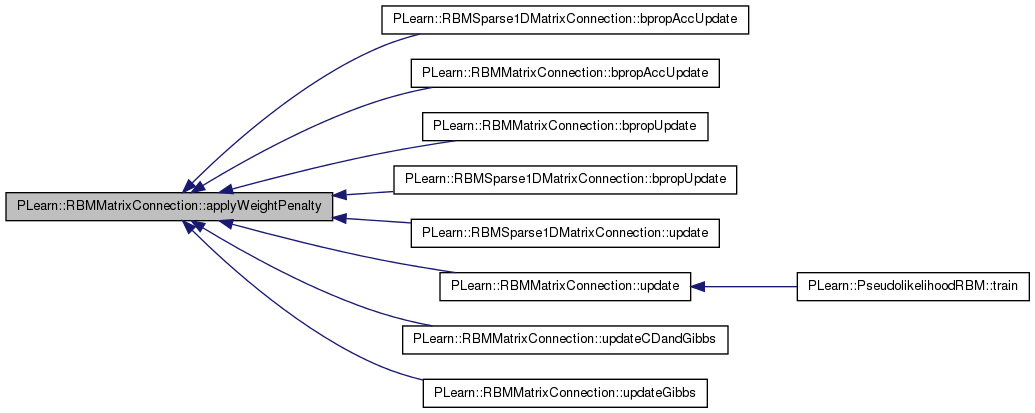

| void PLearn::RBMMatrixConnection::applyWeightPenalty | ( | ) | [virtual] |

Applies penalty (decay) on weights.

Definition at line 851 of file RBMMatrixConnection.cc.

References PLearn::RBMConnection::down_size, i, j, L1_penalty_factor, L2_decrease_constant, L2_decrease_type, L2_n_updates, L2_penalty_factor, L2_shift, PLearn::RBMConnection::learning_rate, PLERROR, PLearn::sigmoid(), PLearn::RBMConnection::up_size, and weights.

Referenced by PLearn::RBMSparse1DMatrixConnection::bpropAccUpdate(), bpropAccUpdate(), bpropUpdate(), PLearn::RBMSparse1DMatrixConnection::bpropUpdate(), PLearn::RBMSparse1DMatrixConnection::update(), update(), updateCDandGibbs(), and updateGibbs().

{

// Apply penalty (decay) on weights.

real delta_L1 = learning_rate * L1_penalty_factor;

real delta_L2 = learning_rate * L2_penalty_factor;

if (L2_decrease_type == "one_over_t")

delta_L2 /= (1 + L2_decrease_constant * L2_n_updates);

else if (L2_decrease_type == "sigmoid_like")

delta_L2 *= sigmoid((L2_shift - L2_n_updates) * L2_decrease_constant);

else

PLERROR("In RBMMatrixConnection::applyWeightPenalty - Invalid value "

"for L2_decrease_type: %s", L2_decrease_type.c_str());

for( int i=0; i<up_size; i++)

{

real* w_ = weights[i];

for( int j=0; j<down_size; j++ )

{

if( delta_L2 != 0. )

w_[j] *= (1 - delta_L2);

if( delta_L1 != 0. )

{

if( w_[j] > delta_L1 )

w_[j] -= delta_L1;

else if( w_[j] < -delta_L1 )

w_[j] += delta_L1;

else

w_[j] = 0.;

}

}

}

if (delta_L2 > 0)

L2_n_updates++;

}
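The per-weight effect of the decay step above can be sketched in isolation. The helper name `penalizeWeight` is hypothetical. Here `delta_l1` and `delta_l2` stand for the penalty factors already multiplied by the learning rate (and, for L2, by the decrease schedule), as in the listing.

```cpp
#include <cassert>

// Applies one decay step to a single weight:
// first an L2 shrink, then an L1 soft-threshold toward zero.
double penalizeWeight(double w, double delta_l1, double delta_l2) {
    assert(delta_l1 >= 0.0);
    if (delta_l2 != 0.0)
        w *= (1.0 - delta_l2);           // multiplicative L2 decay
    if (delta_l1 != 0.0) {
        if (w > delta_l1)
            w -= delta_l1;               // move positive weights down
        else if (w < -delta_l1)
            w += delta_l1;               // move negative weights up
        else
            w = 0.0;                     // weights within the threshold snap to zero
    }
    return w;
}
```

The soft-threshold is what lets the L1 term produce exact zeros, whereas the L2 shrink only scales a weight and never zeroes it.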

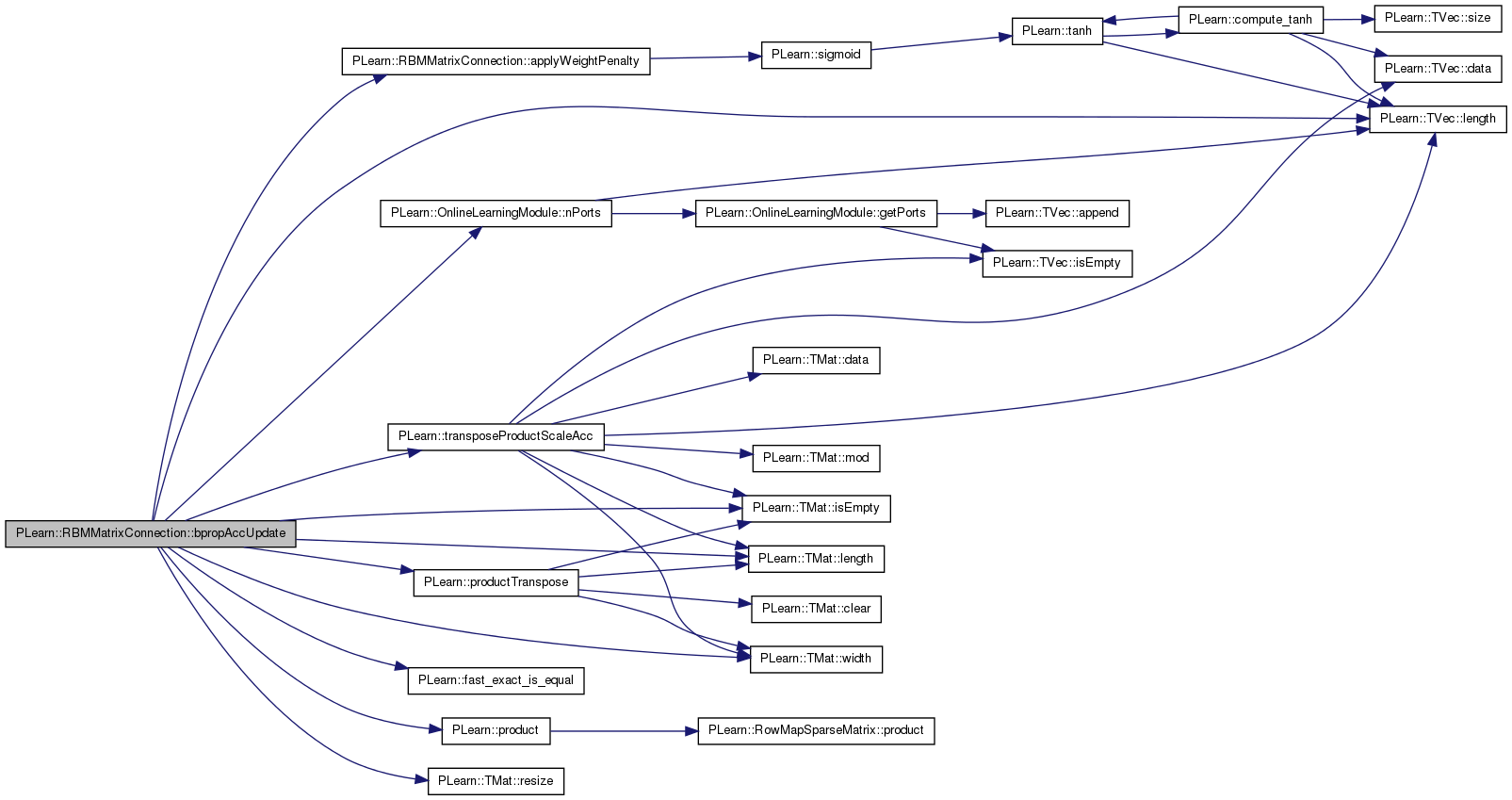

| void PLearn::RBMMatrixConnection::bpropAccUpdate | ( | const TVec< Mat * > & | ports_value, |

| const TVec< Mat * > & | ports_gradient | ||

| ) | [virtual] |

Perform a back propagation step (also updating parameters according to the provided gradient).

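The matrices in 'ports_value' must be the same as the ones given in a previous call to 'fprop' (and thus they should in particular contain the result of the fprop computation). However, they are not necessarily the same as the ones given in the LAST call to 'fprop': if there is a need to store an internal module state, this should be done using a specific port to store this state.

Judging from the implementation below, each Mat* pointer in the 'ports_gradient' vector can be one of: a non-empty matrix (a gradient that is provided and must be back-propagated), an empty matrix (a gradient that is requested and will be computed), or a null pointer (a gradient that is neither provided nor requested).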

Reimplemented from PLearn::OnlineLearningModule.

Reimplemented in PLearn::RBMSparse1DMatrixConnection.

Definition at line 706 of file RBMMatrixConnection.cc.

References applyWeightPenalty(), PLearn::RBMConnection::down_size, PLearn::fast_exact_is_equal(), PLearn::TMat< T >::isEmpty(), L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::OnlineLearningModule::nPorts(), PLASSERT, PLCHECK_MSG, PLearn::product(), PLearn::productTranspose(), PLearn::TMat< T >::resize(), PLearn::transposeProductScaleAcc(), PLearn::RBMConnection::up_size, weights, and PLearn::TMat< T >::width().

{

//TODO: add weights as port?

PLASSERT( ports_value.length() == nPorts()

&& ports_gradient.length() == nPorts() );

Mat* down = ports_value[0];

Mat* up = ports_value[1];

Mat* down_grad = ports_gradient[0];

Mat* up_grad = ports_gradient[1];

PLASSERT( down && !down->isEmpty() );

PLASSERT( up && !up->isEmpty() );

int batch_size = down->length();

PLASSERT( up->length() == batch_size );

// If we have up_grad

if( up_grad && !up_grad->isEmpty() )

{

// down_grad should not be provided

PLASSERT( !down_grad || down_grad->isEmpty() );

PLASSERT( up_grad->length() == batch_size );

PLASSERT( up_grad->width() == up_size );

// If we want down_grad

if( down_grad && down_grad->isEmpty() )

{

PLASSERT( down_grad->width() == down_size );

down_grad->resize(batch_size, down_size);

// down_grad = up_grad * weights

product(*down_grad, *up_grad, weights);

}

// weights -= learning_rate/n * up_grad' * down

transposeProductScaleAcc(weights, *up_grad, *down,

-learning_rate/batch_size, real(1));

}

else if( down_grad && !down_grad->isEmpty() )

{

PLASSERT( down_grad->length() == batch_size );

PLASSERT( down_grad->width() == down_size );

// If we want up_grad

if( up_grad && up_grad->isEmpty() )

{

PLASSERT( up_grad->width() == up_size );

up_grad->resize(batch_size, up_size);

// up_grad = down_grad * weights'

productTranspose(*up_grad, *down_grad, weights);

}

// weights -= learning_rate/n * up' * down_grad

transposeProductScaleAcc(weights, *up, *down_grad,

-learning_rate/batch_size, real(1));

}

else

PLCHECK_MSG( false,

"Unknown port configuration" );

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}
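The port handling in the listing above can be summarized with a small sketch. The names `PortState`, `classifyPort` and `updateDrivenByUpGradient` are hypothetical, and a `std::vector<double>` stands in for a `Mat`. The three states are inferred from the null and `isEmpty()` checks in the code, not from a documented contract.

```cpp
#include <cassert>
#include <vector>

using Grad = std::vector<double>;  // hypothetical stand-in for PLearn's Mat

enum class PortState { NotNeeded, ToCompute, Provided };

// Null pointer: gradient not needed. Empty: to be computed. Non-empty: provided.
PortState classifyPort(const Grad* grad) {
    if (!grad)
        return PortState::NotNeeded;
    return grad->empty() ? PortState::ToCompute : PortState::Provided;
}

// Returns true when the weight update is driven by the up-side gradient,
// false when it is driven by the down-side gradient.
bool updateDrivenByUpGradient(const Grad* down_grad, const Grad* up_grad) {
    const PortState down = classifyPort(down_grad);
    const PortState up = classifyPort(up_grad);
    // Exactly one side may supply a gradient; any other combination is
    // rejected in the listing (PLASSERT / "Unknown port configuration").
    assert((down == PortState::Provided) != (up == PortState::Provided));
    return up == PortState::Provided;
}
```

Under this reading, the side that supplies a gradient drives the weight update, and the opposite side receives a computed gradient only when it passes an empty matrix.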

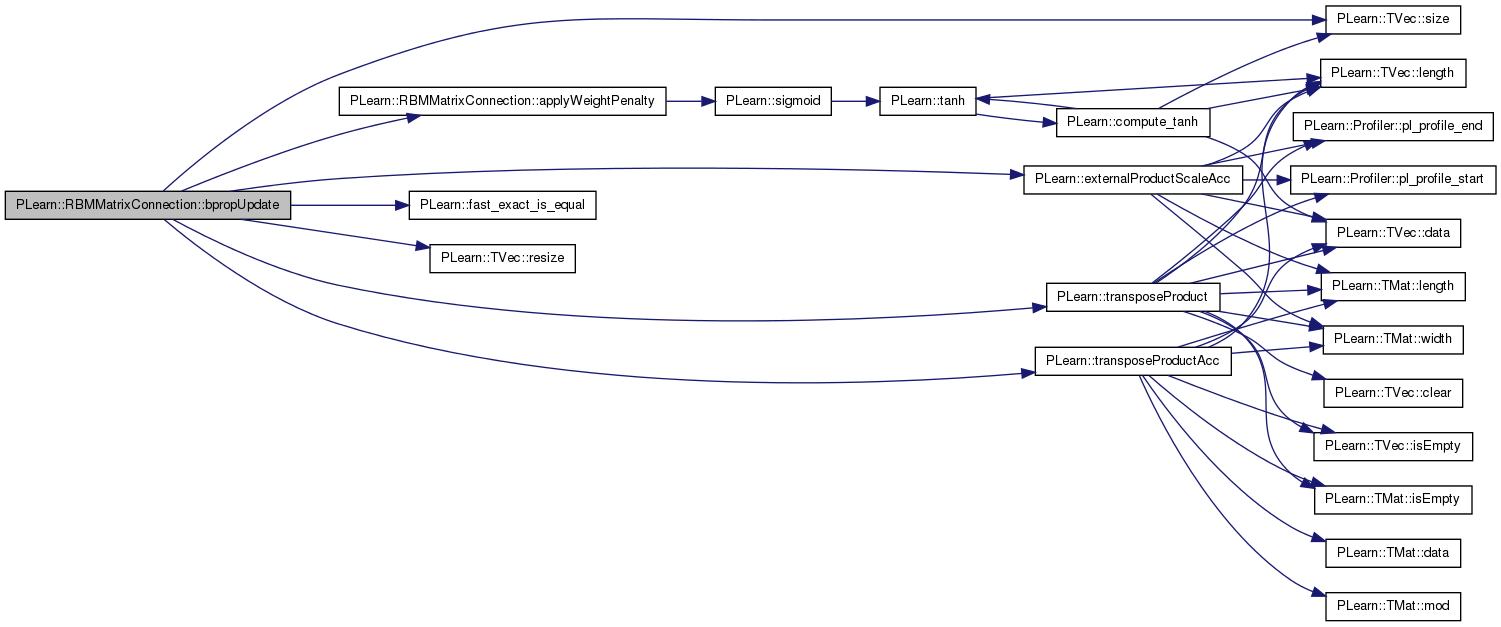

| void PLearn::RBMMatrixConnection::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

This version also provides the input gradient.

N.B. The default implementation in the super-class just raises a PLERROR.

Reimplemented from PLearn::OnlineLearningModule.

Definition at line 596 of file RBMMatrixConnection.cc.

References applyWeightPenalty(), PLearn::RBMConnection::down_size, PLearn::externalProductScaleAcc(), PLearn::fast_exact_is_equal(), L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::transposeProduct(), PLearn::transposeProductAcc(), PLearn::RBMConnection::up_size, and weights.

{

PLASSERT( input.size() == down_size );

PLASSERT( output.size() == up_size );

PLASSERT( output_gradient.size() == up_size );

if( accumulate )

{

PLASSERT_MSG( input_gradient.size() == down_size,

"Cannot resize input_gradient AND accumulate into it" );

// input_gradient += weights' * output_gradient

transposeProductAcc( input_gradient, weights, output_gradient );

}

else

{

input_gradient.resize( down_size );

// input_gradient = weights' * output_gradient

transposeProduct( input_gradient, weights, output_gradient );

}

// weights -= learning_rate * output_gradient * input'

externalProductScaleAcc( weights, output_gradient, input, -learning_rate );

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}
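The single-example back-propagation step above can be sketched as follows, with a hypothetical `Matrix` struct standing in for `Mat` and a hypothetical helper `backpropAndUpdate`. As in the listing, the input gradient is computed from the weights before they are modified. The weight penalty step is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: dense, row-major, up_size x down_size.
struct Matrix {
    std::size_t rows, cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0) {}
    double& operator()(std::size_t i, std::size_t j) { return data[i * cols + j]; }
    double operator()(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// Returns input_gradient = W' * output_gradient, then applies
// W -= learning_rate * output_gradient * input'.
std::vector<double> backpropAndUpdate(Matrix& w,
                                      const std::vector<double>& input,
                                      const std::vector<double>& output_gradient,
                                      double learning_rate) {
    assert(input.size() == w.cols);            // down_size
    assert(output_gradient.size() == w.rows);  // up_size
    std::vector<double> input_gradient(w.cols, 0.0);
    for (std::size_t i = 0; i < w.rows; ++i)
        for (std::size_t j = 0; j < w.cols; ++j)
            input_gradient[j] += w(i, j) * output_gradient[i];
    for (std::size_t i = 0; i < w.rows; ++i)
        for (std::size_t j = 0; j < w.cols; ++j)
            w(i, j) -= learning_rate * output_gradient[i] * input[j];
    return input_gradient;
}
```

The mini-batch variant follows the same pattern but divides the learning rate by the number of rows in the batch.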

| void PLearn::RBMMatrixConnection::bpropUpdate | ( | const Mat & | inputs, |

| const Mat & | outputs, | ||

| Mat & | input_gradients, | ||

| const Mat & | output_gradients, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

SOON TO BE DEPRECATED, USE bpropAccUpdate(const TVec<Mat*>& ports_value, const TVec<Mat*>& ports_gradient)

Reimplemented from PLearn::OnlineLearningModule.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 628 of file RBMMatrixConnection.cc.

References applyWeightPenalty(), PLearn::RBMConnection::down_size, PLearn::fast_exact_is_equal(), L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLASSERT, PLASSERT_MSG, PLearn::product(), PLearn::productAcc(), PLearn::TMat< T >::resize(), PLearn::transposeProductScaleAcc(), PLearn::RBMConnection::up_size, weights, and PLearn::TMat< T >::width().

{

PLASSERT( inputs.width() == down_size );

PLASSERT( outputs.width() == up_size );

PLASSERT( output_gradients.width() == up_size );

if( accumulate )

{

PLASSERT_MSG( input_gradients.width() == down_size &&

input_gradients.length() == inputs.length(),

"Cannot resize input_gradients and accumulate into it" );

// input_gradients += output_gradient * weights

productAcc(input_gradients, output_gradients, weights);

}

else

{

input_gradients.resize(inputs.length(), down_size);

// input_gradients = output_gradient * weights

product(input_gradients, output_gradients, weights);

}

// weights -= learning_rate/n * output_gradients' * inputs

transposeProductScaleAcc(weights, output_gradients, inputs,

-learning_rate / inputs.length(), real(1));

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}

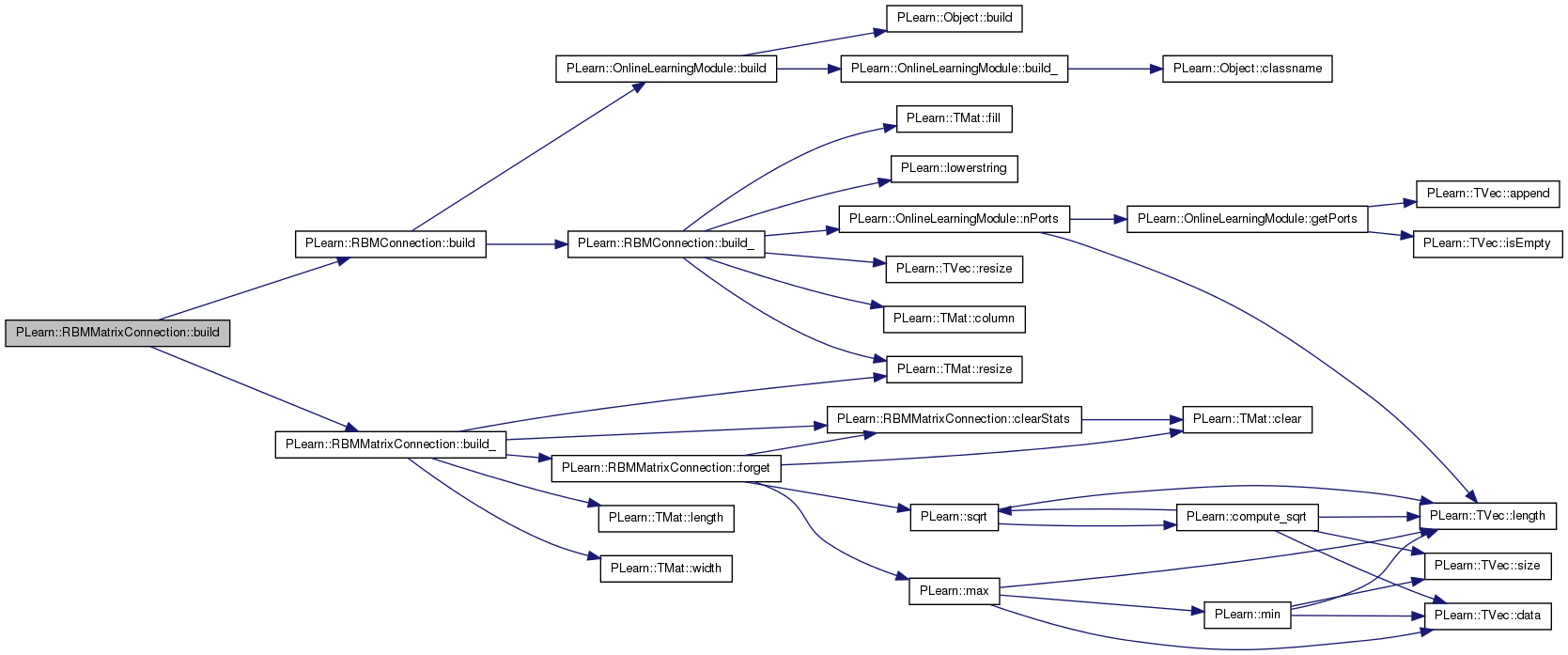

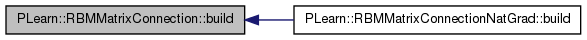

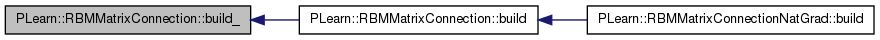

| void PLearn::RBMMatrixConnection::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 168 of file RBMMatrixConnection.cc.

References PLearn::RBMConnection::build(), and build_().

Referenced by PLearn::RBMMatrixConnectionNatGrad::build().

{

inherited::build();

build_();

}

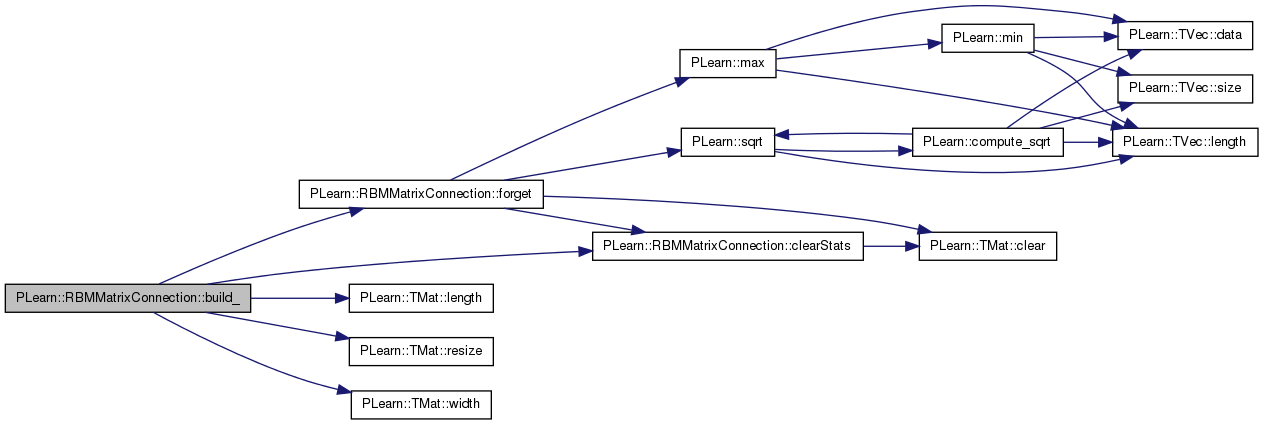

| void PLearn::RBMMatrixConnection::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 142 of file RBMMatrixConnection.cc.

References clearStats(), PLearn::RBMConnection::down_size, forget(), PLearn::TMat< T >::length(), PLearn::RBMConnection::momentum, PLearn::TMat< T >::resize(), PLearn::RBMConnection::up_size, weights, weights_inc, weights_neg_stats, weights_pos_stats, and PLearn::TMat< T >::width().

Referenced by build().

{

if( up_size <= 0 || down_size <= 0 )

return;

bool needs_forget = false; // do we need to reinitialize the parameters?

if( weights.length() != up_size ||

weights.width() != down_size )

{

weights.resize( up_size, down_size );

needs_forget = true;

}

weights_pos_stats.resize( up_size, down_size );

weights_neg_stats.resize( up_size, down_size );

if( momentum != 0. )

weights_inc.resize( up_size, down_size );

if( needs_forget )

forget();

clearStats();

}

| string PLearn::RBMMatrixConnection::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

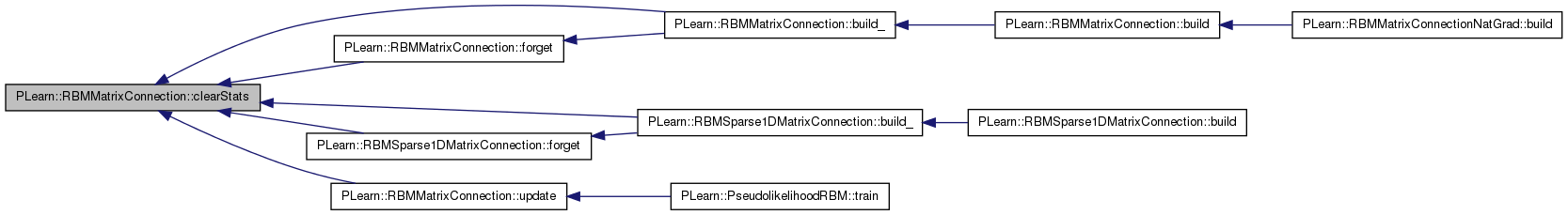

| void PLearn::RBMMatrixConnection::clearStats | ( | ) | [virtual] |

Clears all accumulated statistics.

Implements PLearn::RBMConnection.

Definition at line 482 of file RBMMatrixConnection.cc.

References PLearn::TMat< T >::clear(), gibbs_initial_ma_coefficient, gibbs_ma_coefficient, PLearn::RBMConnection::neg_count, PLearn::RBMConnection::pos_count, weights_neg_stats, and weights_pos_stats.

Referenced by build_(), PLearn::RBMSparse1DMatrixConnection::build_(), PLearn::RBMSparse1DMatrixConnection::forget(), forget(), and update().

{

weights_pos_stats.clear();

weights_neg_stats.clear();

pos_count = 0;

neg_count = 0;

gibbs_ma_coefficient = gibbs_initial_ma_coefficient;

}

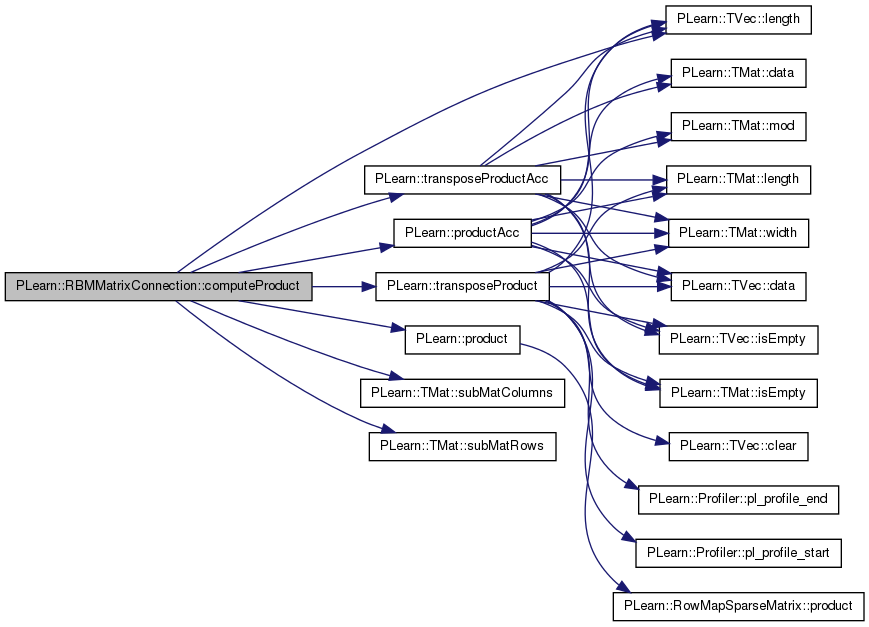

| void PLearn::RBMMatrixConnection::computeProduct | ( | int | start, |

| int | length, | ||

| const Vec & | activations, | ||

| bool | accumulate = false |

||

| ) | const [virtual] |

Computes the activation vector of "length" units, starting at "start", and stores it in (or adds it to) "activations".

"start" indexes an up unit if "going_up", else a down unit.

Implements PLearn::RBMConnection.

Definition at line 496 of file RBMMatrixConnection.cc.

References PLearn::RBMConnection::down_size, PLearn::RBMConnection::going_up, PLearn::RBMConnection::input_vec, PLearn::TVec< T >::length(), PLASSERT, PLearn::product(), PLearn::productAcc(), PLearn::TMat< T >::subMatColumns(), PLearn::TMat< T >::subMatRows(), PLearn::transposeProduct(), PLearn::transposeProductAcc(), PLearn::RBMConnection::up_size, and weights.

{

PLASSERT( activations.length() == length );

if( going_up )

{

PLASSERT( start+length <= up_size );

// activations[i-start] += sum_j weights(i,j) input_vec[j]

if( accumulate )

productAcc( activations,

weights.subMatRows(start,length),

input_vec );

else

product( activations,

weights.subMatRows(start,length),

input_vec );

}

else

{

PLASSERT( start+length <= down_size );

// activations[i-start] += sum_j weights(j,i) input_vec[j]

if( accumulate )

transposeProductAcc( activations,

weights.subMatColumns(start,length),

input_vec );

else

transposeProduct( activations,

weights.subMatColumns(start,length),

input_vec );

}

}
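The two directions handled above differ only in whether a block of rows or a block of columns of the weight matrix is used. The sketch below illustrates this with a hypothetical `Matrix` struct and a hypothetical helper `computeActivations`. It returns a fresh vector rather than writing into a caller-supplied one, and it omits the `accumulate` flag.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for PLearn's Mat: dense, row-major, up_size x down_size.
struct Matrix {
    std::size_t rows, cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0) {}
    double& operator()(std::size_t i, std::size_t j) { return data[i * cols + j]; }
    double operator()(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// going_up:  act[i] = sum_j w(start + i, j) * input[j]   (rows of w, input is "down")
// otherwise: act[i] = sum_j w(j, start + i) * input[j]   (columns of w, input is "up")
std::vector<double> computeActivations(const Matrix& w,
                                       const std::vector<double>& input,
                                       std::size_t start, std::size_t length,
                                       bool going_up) {
    std::vector<double> act(length, 0.0);
    if (going_up) {
        assert(start + length <= w.rows && input.size() == w.cols);
        for (std::size_t i = 0; i < length; ++i)
            for (std::size_t j = 0; j < w.cols; ++j)
                act[i] += w(start + i, j) * input[j];
    } else {
        assert(start + length <= w.cols && input.size() == w.rows);
        for (std::size_t i = 0; i < length; ++i)
            for (std::size_t j = 0; j < w.rows; ++j)
                act[i] += w(j, start + i) * input[j];
    }
    return act;
}
```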

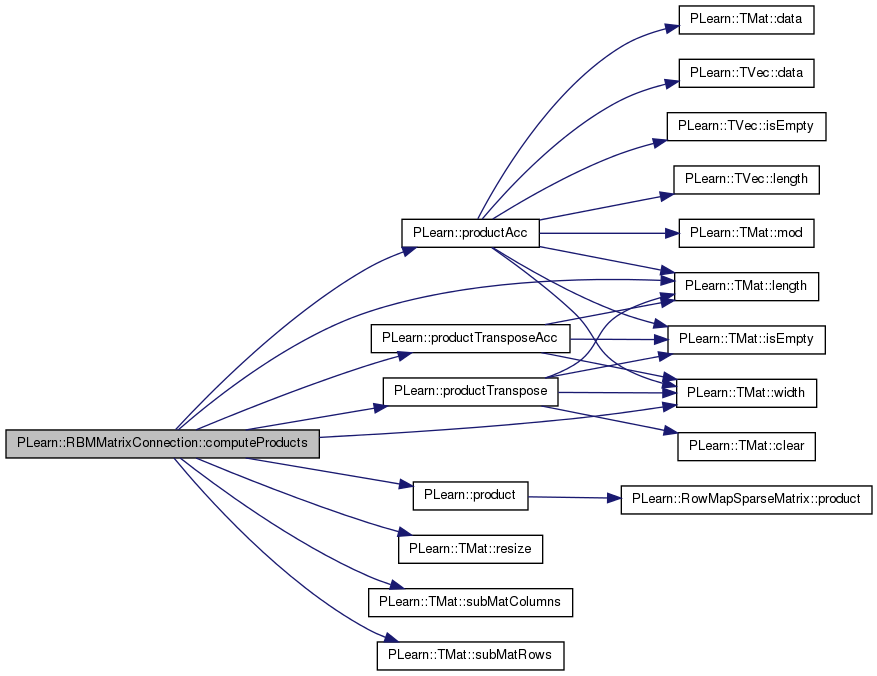

| void PLearn::RBMMatrixConnection::computeProducts | ( | int | start, |

| int | length, | ||

| Mat & | activations, | ||

| bool | accumulate = false |

||

| ) | const [virtual] |

Same as 'computeProduct' but for mini-batches.

Implements PLearn::RBMConnection.

Reimplemented in PLearn::RBMSparse1DMatrixConnection.

Definition at line 533 of file RBMMatrixConnection.cc.

References PLearn::RBMConnection::down_size, PLearn::RBMConnection::going_up, PLearn::RBMConnection::inputs_mat, PLearn::TMat< T >::length(), PLASSERT, PLearn::product(), PLearn::productAcc(), PLearn::productTranspose(), PLearn::productTransposeAcc(), PLearn::TMat< T >::resize(), PLearn::TMat< T >::subMatColumns(), PLearn::TMat< T >::subMatRows(), PLearn::RBMConnection::up_size, weights, and PLearn::TMat< T >::width().

{

PLASSERT( activations.width() == length );

activations.resize(inputs_mat.length(), length);

if( going_up )

{

PLASSERT( start+length <= up_size );

// activations(k, i-start) += sum_j weights(i,j) inputs_mat(k, j)

if( accumulate )

productTransposeAcc(activations,

inputs_mat,

weights.subMatRows(start,length));

else

productTranspose(activations,

inputs_mat,

weights.subMatRows(start,length));

}

else

{

PLASSERT( start+length <= down_size );

// activations(k, i-start) += sum_j weights(j,i) inputs_mat(k, j)

if( accumulate )

productAcc(activations,

inputs_mat,

weights.subMatColumns(start,length) );

else

product(activations,

inputs_mat,

weights.subMatColumns(start,length) );

}

}

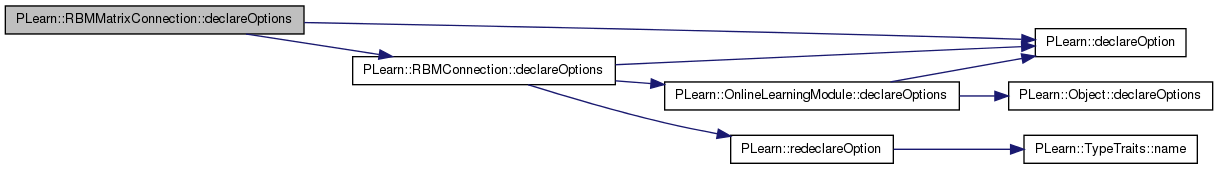

| void PLearn::RBMMatrixConnection::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 65 of file RBMMatrixConnection.cc.

References PLearn::OptionBase::advanced_level, PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::RBMConnection::declareOptions(), gibbs_initial_ma_coefficient, gibbs_ma_increment, gibbs_ma_schedule, L1_penalty_factor, L2_decrease_constant, L2_decrease_type, L2_n_updates, L2_penalty_factor, L2_shift, PLearn::OptionBase::learntoption, and weights.

Referenced by PLearn::RBMSparse1DMatrixConnection::declareOptions(), and PLearn::RBMMatrixConnectionNatGrad::declareOptions().

{

declareOption(ol, "weights", &RBMMatrixConnection::weights,

OptionBase::learntoption,

"Matrix containing unit-to-unit weights (up_size x"

" down_size)");

declareOption(ol, "gibbs_ma_schedule", &RBMMatrixConnection::gibbs_ma_schedule,

OptionBase::buildoption,

"Each element of this vector is a number of updates after which\n"

"the moving average coefficient is incremented (by incrementing\n"

"its inverse sigmoid by gibbs_ma_increment). After the last\n"

"increase has been made, the moving average coefficient stays constant.\n");

declareOption(ol, "gibbs_ma_increment",

&RBMMatrixConnection::gibbs_ma_increment,

OptionBase::buildoption,

"The increment in the inverse sigmoid of the moving "

"average coefficient\n"

"to apply after the number of updates reaches an element "

"of the gibbs_ma_schedule.\n");

declareOption(ol, "gibbs_initial_ma_coefficient",

&RBMMatrixConnection::gibbs_initial_ma_coefficient,

OptionBase::buildoption,

"Initial moving average coefficient for the negative phase "

"statistics in the Gibbs chain.\n");

declareOption(ol, "L1_penalty_factor",

&RBMMatrixConnection::L1_penalty_factor,

OptionBase::buildoption,

"Optional (default=0) factor of L1 regularization term, i.e.\n"

"minimize L1_penalty_factor * sum_{ij} |weights(i,j)| "

"during training.\n");

declareOption(ol, "L2_penalty_factor",

&RBMMatrixConnection::L2_penalty_factor,

OptionBase::buildoption,

"Optional (default=0) factor of L2 regularization term, i.e.\n"

"minimize 0.5 * L2_penalty_factor * sum_{ij} weights(i,j)^2 "

"during training.\n");

declareOption(ol, "L2_decrease_constant",

&RBMMatrixConnection::L2_decrease_constant,

OptionBase::buildoption,

"Parameter of the L2 penalty decrease (see L2_decrease_type).",

OptionBase::advanced_level);

declareOption(ol, "L2_shift",

&RBMMatrixConnection::L2_shift,

OptionBase::buildoption,

"Parameter of the L2 penalty decrease (see L2_decrease_type).",

OptionBase::advanced_level);

declareOption(ol, "L2_decrease_type",

&RBMMatrixConnection::L2_decrease_type,

OptionBase::buildoption,

"The kind of L2 decrease that is being applied. The decrease\n"

"consists in scaling the L2 penalty by a factor that depends on the\n"

"number 't' of times this penalty has been used to modify the\n"

"weights of the connection. It can be one of:\n"

" - 'one_over_t': 1 / (1 + t * L2_decrease_constant)\n"

" - 'sigmoid_like': sigmoid((L2_shift - t) * L2_decrease_constant)",

OptionBase::advanced_level);

declareOption(ol, "L2_n_updates",

&RBMMatrixConnection::L2_n_updates,

OptionBase::learntoption,

"Number of times that weights have been changed by the L2 penalty\n"

"update rule.");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}
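The two decrease schedules described in the `L2_decrease_type` option can be sketched as a standalone function. The name `l2DecreaseFactor` is hypothetical. The formulas are taken from the option help text above, and an unknown type is reported with an exception here rather than with PLERROR.

```cpp
#include <cassert>
#include <cmath>
#include <stdexcept>
#include <string>

// Scaling factor applied to the L2 penalty after t penalty updates.
double l2DecreaseFactor(const std::string& type, int t,
                        double constant, double shift) {
    assert(t >= 0);
    if (type == "one_over_t")
        return 1.0 / (1.0 + t * constant);
    if (type == "sigmoid_like")
        // sigmoid(x) = 1 / (1 + exp(-x)), with x = (shift - t) * constant
        return 1.0 / (1.0 + std::exp(-(shift - t) * constant));
    throw std::invalid_argument("invalid L2_decrease_type: " + type);
}
```

With the constructor default `L2_decrease_constant = 0`, the 'one_over_t' formula gives a constant factor of 1, so the penalty is not decreased over time.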

| static const PPath& PLearn::RBMMatrixConnection::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 270 of file RBMMatrixConnection.h.


| RBMMatrixConnection * PLearn::RBMMatrixConnection::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

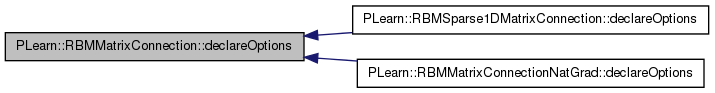

| void PLearn::RBMMatrixConnection::forget | ( | ) | [virtual] |

Resets the parameters to the state they would be in before training starts.

Note that this method is necessarily called from build().

Implements PLearn::OnlineLearningModule.

Reimplemented in PLearn::RBMSparse1DMatrixConnection.

Definition at line 921 of file RBMMatrixConnection.cc.

References PLearn::TMat< T >::clear(), clearStats(), d, PLearn::RBMConnection::down_size, PLearn::RBMConnection::initialization_method, L2_n_updates, PLearn::max(), PLWARNING, PLearn::OnlineLearningModule::random_gen, PLearn::sqrt(), PLearn::RBMConnection::up_size, and weights.

Referenced by build_().

{

clearStats();

if( initialization_method == "zero" )

weights.clear();

else

{

if( !random_gen )

{

PLWARNING( "RBMMatrixConnection: cannot forget() without"

" random_gen" );

return;

}

//random_gen->manual_seed(1827);

real d = 1. / max( down_size, up_size );

if( initialization_method == "uniform_sqrt" )

d = sqrt( d );

random_gen->fill_random_uniform( weights, -d, d );

}

L2_n_updates = 0;

}
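The initialization range used by forget() can be illustrated outside PLearn as follows. This is a minimal sketch assuming a row-major weight buffer and the standard `<random>` generators in place of PLearn's `random_gen`; `init_bound` and `init_weights` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Bound d of the uniform range [-d, d]: 1 / max(down_size, up_size),
// or its square root when the "uniform_sqrt" method is selected.
double init_bound(int down_size, int up_size, bool use_sqrt)
{
    assert(down_size > 0 && up_size > 0);
    const double d = 1.0 / std::max(down_size, up_size);
    return use_sqrt ? std::sqrt(d) : d;
}

// Fills an up_size x down_size weight buffer with uniform draws.
// std::mt19937 is an assumption standing in for PLearn's random_gen.
std::vector<double> init_weights(int down_size, int up_size,
                                 bool use_sqrt, unsigned seed)
{
    const double d = init_bound(down_size, up_size, use_sqrt);
    std::mt19937 gen(seed);
    std::uniform_real_distribution<double> dist(-d, d);
    std::vector<double> w(static_cast<std::size_t>(up_size) * down_size);
    for (double& x : w)
        x = dist(gen);
    return w;
}
```

For a 4-by-16 connection the plain bound is 1/16, while "uniform_sqrt" widens it to 1/4.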

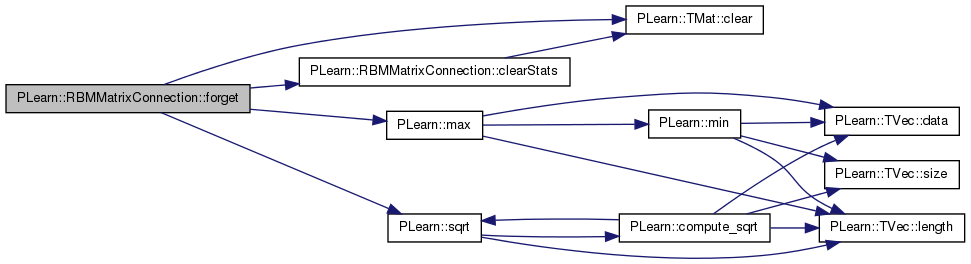

| void PLearn::RBMMatrixConnection::fprop | ( | const Vec & | input, |

| const Mat & | rbm_weights, | ||

| Vec & | output | ||

| ) | const [virtual] |

Given the input and the connection weights, computes the output (possibly resizing it appropriately).

Reimplemented in PLearn::RBMSparse1DMatrixConnection.

Definition at line 571 of file RBMMatrixConnection.cc.

References PLearn::product().

{

product( output, rbm_weights, input );

}
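The product computed by fprop() is a plain matrix-vector product, output = rbm_weights * input. A minimal sketch, assuming a row-major buffer with one row per up unit (consistent with the size assertions elsewhere in this class); `fprop_dense` is a hypothetical stand-in, not PLearn's `product()`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for product(output, rbm_weights, input):
// weights is a row-major up_size x down_size matrix.
std::vector<double> fprop_dense(const std::vector<double>& weights,
                                std::size_t up_size, std::size_t down_size,
                                const std::vector<double>& input)
{
    assert(weights.size() == up_size * down_size);
    assert(input.size() == down_size);
    std::vector<double> output(up_size, 0.0);
    for (std::size_t i = 0; i < up_size; ++i)
        for (std::size_t j = 0; j < down_size; ++j)
            output[i] += weights[i * down_size + j] * input[j];
    return output;
}
```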

| void PLearn::RBMMatrixConnection::getAllWeights | ( | Mat & | rbm_weights | ) | const [virtual] |

Provides the internal weight values (not a copy).

Reimplemented from PLearn::RBMConnection.

Definition at line 580 of file RBMMatrixConnection.cc.

References weights.

{

rbm_weights = weights;

}

| OptionList & PLearn::RBMMatrixConnection::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

| OptionMap & PLearn::RBMMatrixConnection::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

| RemoteMethodMap & PLearn::RBMMatrixConnection::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 50 of file RBMMatrixConnection.cc.

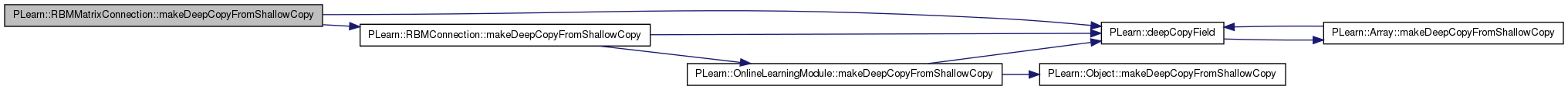

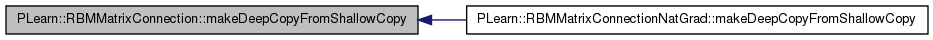

| void PLearn::RBMMatrixConnection::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad.

Definition at line 175 of file RBMMatrixConnection.cc.

References PLearn::deepCopyField(), PLearn::RBMConnection::makeDeepCopyFromShallowCopy(), weights, weights_inc, weights_neg_stats, and weights_pos_stats.

Referenced by PLearn::RBMMatrixConnectionNatGrad::makeDeepCopyFromShallowCopy().

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(weights, copies);

deepCopyField(weights_pos_stats, copies);

deepCopyField(weights_neg_stats, copies);

deepCopyField(weights_inc, copies);

}

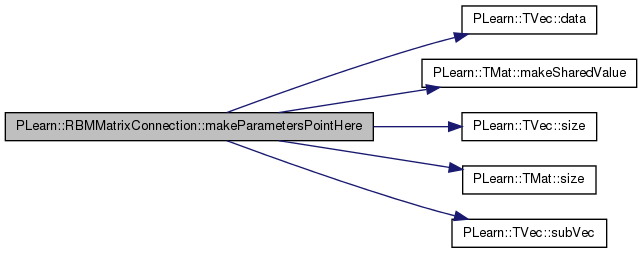

Make the parameters data be sub-vectors of the given global_parameters.

The argument should have size >= nParameters(). The result is a Vec that starts just after this object's parameters end, i.e. result = global_parameters.subVec(nParameters(), global_parameters.size()-nParameters()). This makes it easy to chain calls of this method on multiple RBMParameters.

Implements PLearn::RBMConnection.

Definition at line 968 of file RBMMatrixConnection.cc.

References PLearn::TVec< T >::data(), m, PLearn::TMat< T >::makeSharedValue(), n, PLERROR, PLearn::TVec< T >::size(), PLearn::TMat< T >::size(), PLearn::TVec< T >::subVec(), and weights.

{

int n=weights.size();

int m = global_parameters.size();

if (m<n)

PLERROR("RBMMatrixConnection::makeParametersPointHere: argument has length %d, should be longer than nParameters()=%d",m,n);

real* p = global_parameters.data();

weights.makeSharedValue(p,n);

return global_parameters.subVec(n,m-n);

}
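The chaining pattern described above can be sketched with raw pointers. This is an illustration only: `ParamView` and `ToyConnection` are hypothetical types standing in for `Vec` and the connection class, and the toy method only records where its parameters start.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>

// Hypothetical non-owning view over a contiguous parameter buffer.
struct ParamView {
    double*     data;
    std::size_t size;
};

// Hypothetical connection that owns no storage: its weights alias a
// slice of a global parameter buffer.
struct ToyConnection {
    std::size_t n_params;
    double*     weights;

    explicit ToyConnection(std::size_t n) : n_params(n), weights(nullptr) {}

    // Points the weights at the head of global and returns the remainder,
    // so that the next object can claim its own slice.
    ParamView makeParametersPointHere(ParamView global)
    {
        if (global.size < n_params)
            throw std::length_error("global parameter buffer is too short");
        weights = global.data;
        return ParamView{global.data + n_params, global.size - n_params};
    }
};
```

Calling the method on one object after another, each time passing the view returned by the previous call, lays all parameters out contiguously in the shared buffer.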

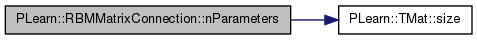

| int PLearn::RBMMatrixConnection::nParameters | ( | ) | const [virtual] |

Returns the number of parameters.

Implements PLearn::RBMConnection.

Reimplemented in PLearn::RBMSparse1DMatrixConnection.

Definition at line 958 of file RBMMatrixConnection.cc.

References PLearn::TMat< T >::size(), and weights.

{

return weights.size();

}

| void PLearn::RBMMatrixConnection::petiteCulotteOlivierCD | ( | const Vec & | pos_down_values, |

| const Vec & | pos_up_values, | ||

| const Vec & | neg_down_values, | ||

| const Vec & | neg_up_values, | ||

| Mat & | weights_gradient, | ||

| bool | accumulate = false | ||

| ) | [virtual] |

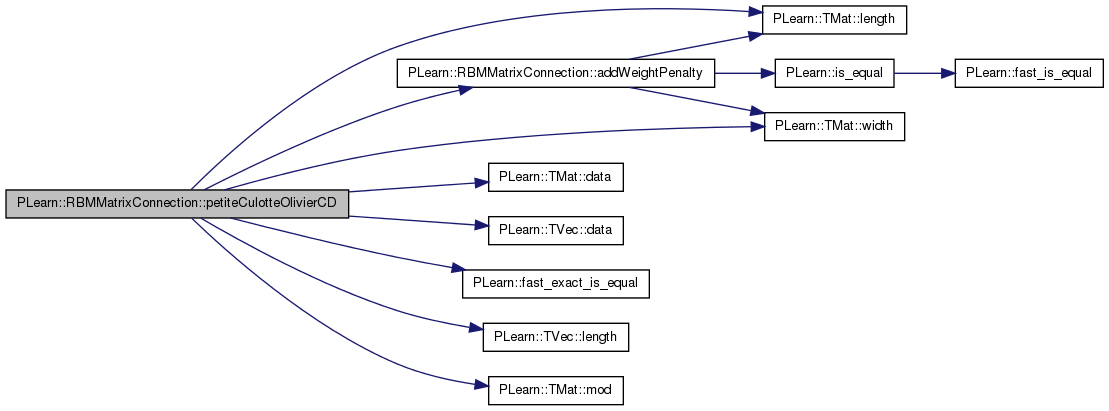

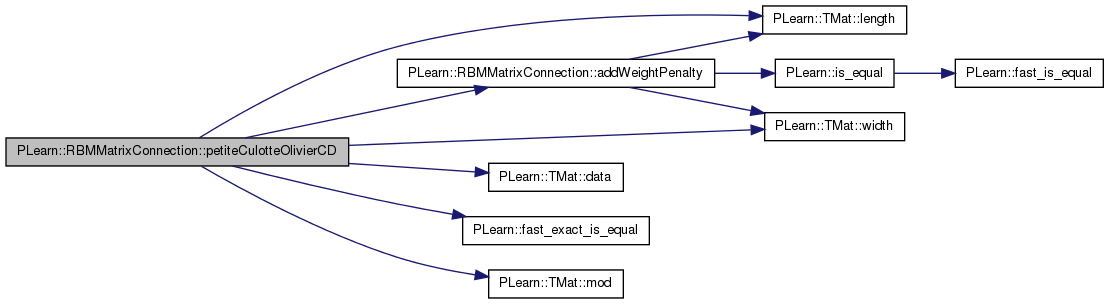

Computes the contrastive divergence gradient with respect to the weights given the positive and negative phase values.

Reimplemented from PLearn::RBMConnection.

Definition at line 809 of file RBMMatrixConnection.cc.

References addWeightPenalty(), PLearn::TMat< T >::data(), PLearn::TVec< T >::data(), PLearn::fast_exact_is_equal(), i, j, L1_penalty_factor, L2_penalty_factor, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::TMat< T >::mod(), PLASSERT, w, weights, and PLearn::TMat< T >::width().

{

int l = weights.length();

int w = weights.width();

PLASSERT( pos_up_values.length() == l );

PLASSERT( neg_up_values.length() == l );

PLASSERT( pos_down_values.length() == w );

PLASSERT( neg_down_values.length() == w );

real* w_i = weights_gradient.data();

real* puv_i = pos_up_values.data();

real* nuv_i = neg_up_values.data();

real* pdv = pos_down_values.data();

real* ndv = neg_down_values.data();

int w_mod = weights_gradient.mod();

if(accumulate)

{

for( int i=0 ; i<l ; i++, w_i += w_mod, puv_i++, nuv_i++ )

for( int j=0 ; j<w ; j++ )

w_i[j] += *nuv_i * ndv[j] - *puv_i * pdv[j] ;

}

else

{

for( int i=0 ; i<l ; i++, w_i += w_mod, puv_i++, nuv_i++ )

for( int j=0 ; j<w ; j++ )

w_i[j] = *nuv_i * ndv[j] - *puv_i * pdv[j] ;

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

addWeightPenalty(weights, weights_gradient);

}
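Ignoring the optional weight penalty, the non-accumulating branch of the listing above computes the outer-product difference neg_up * neg_down' - pos_up * pos_down'. A minimal standalone sketch, assuming a row-major result with one row per up unit; `cd_weight_gradient` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: contrastive divergence gradient with respect to the
// weights, returned as a row-major (up units) x (down units) buffer.
std::vector<double> cd_weight_gradient(const std::vector<double>& pos_down,
                                       const std::vector<double>& pos_up,
                                       const std::vector<double>& neg_down,
                                       const std::vector<double>& neg_up)
{
    assert(pos_down.size() == neg_down.size());
    assert(pos_up.size() == neg_up.size());
    const std::size_t l = pos_up.size();   // number of up units
    const std::size_t w = pos_down.size(); // number of down units
    std::vector<double> grad(l * w);
    for (std::size_t i = 0; i < l; ++i)
        for (std::size_t j = 0; j < w; ++j)
            grad[i * w + j] = neg_up[i] * neg_down[j]
                            - pos_up[i] * pos_down[j];
    return grad;
}
```

The sign convention is that of a gradient to be descended: the negative phase enters with a plus sign and the positive phase with a minus sign.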

| void PLearn::RBMMatrixConnection::petiteCulotteOlivierCD | ( | Mat & | weights_gradient, |

| bool | accumulate = false | ||

| ) | [virtual] |

Computes the contrastive divergence gradient with respect to the weights, using the accumulated statistics. Note that bpropCD does not call clearStats().

Reimplemented from PLearn::RBMConnection.

Definition at line 777 of file RBMMatrixConnection.cc.

References addWeightPenalty(), PLearn::TMat< T >::data(), PLearn::fast_exact_is_equal(), i, j, L1_penalty_factor, L2_penalty_factor, PLearn::TMat< T >::length(), PLearn::TMat< T >::mod(), PLearn::RBMConnection::neg_count, PLearn::RBMConnection::pos_count, w, weights, weights_neg_stats, weights_pos_stats, and PLearn::TMat< T >::width().

{

int l = weights_gradient.length();

int w = weights_gradient.width();

real* w_i = weights_gradient.data();

real* wps_i = weights_pos_stats.data();

real* wns_i = weights_neg_stats.data();

int w_mod = weights_gradient.mod();

int wps_mod = weights_pos_stats.mod();

int wns_mod = weights_neg_stats.mod();

if(accumulate)

{

for( int i=0 ; i<l ; i++, w_i+=w_mod, wps_i+=wps_mod, wns_i+=wns_mod )

for( int j=0 ; j<w ; j++ )

w_i[j] += wns_i[j]/pos_count - wps_i[j]/neg_count;

}

else

{

for( int i=0 ; i<l ; i++, w_i+=w_mod, wps_i+=wps_mod, wns_i+=wns_mod )

for( int j=0 ; j<w ; j++ )

w_i[j] = wns_i[j]/pos_count - wps_i[j]/neg_count;

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

addWeightPenalty(weights, weights_gradient);

}

| void PLearn::RBMMatrixConnection::petiteCulotteOlivierUpdate | ( | const Vec & | input, |

| const Mat & | rbm_weights, | ||

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| Mat & | rbm_weights_gradient, | ||

| const Vec & | output_gradient, | ||

| bool | accumulate = false |

||

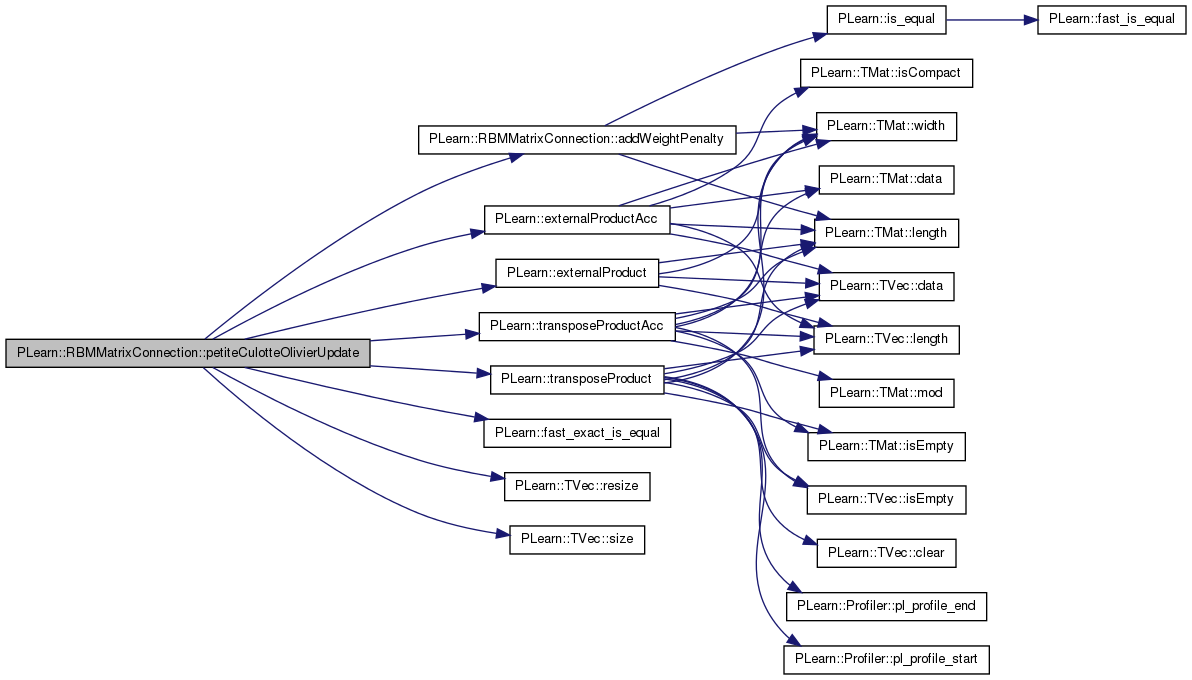

| ) | [virtual] |

back-propagates the output gradient to the input and the weights (the weights are not updated)

Reimplemented from PLearn::RBMConnection.

Definition at line 661 of file RBMMatrixConnection.cc.

References addWeightPenalty(), PLearn::RBMConnection::down_size, PLearn::externalProduct(), PLearn::externalProductAcc(), PLearn::fast_exact_is_equal(), L1_penalty_factor, L2_penalty_factor, PLASSERT, PLASSERT_MSG, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::transposeProduct(), PLearn::transposeProductAcc(), and PLearn::RBMConnection::up_size.

{

PLASSERT( input.size() == down_size );

PLASSERT( output.size() == up_size );

PLASSERT( output_gradient.size() == up_size );

if( accumulate )

{

PLASSERT_MSG( input_gradient.size() == down_size,

"Cannot resize input_gradient AND accumulate into it" );

// input_gradient += rbm_weights' * output_gradient

transposeProductAcc( input_gradient, rbm_weights, output_gradient );

// rbm_weights_gradient += output_gradient' * input

externalProductAcc( rbm_weights_gradient, output_gradient,

input);

}

else

{

input_gradient.resize( down_size );

// input_gradient = rbm_weights' * output_gradient

transposeProduct( input_gradient, rbm_weights, output_gradient );

// rbm_weights_gradient = output_gradient' * input

externalProduct( rbm_weights_gradient, output_gradient,

input);

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

addWeightPenalty(rbm_weights, rbm_weights_gradient);

}
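Leaving the weight penalty aside, the non-accumulating branch above amounts to one transposed matrix-vector product and one outer product. A minimal sketch with plain vectors, assuming row-major storage; `BpropResult` and `bprop_dense` are hypothetical names, not PLearn API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type holding both gradients.
struct BpropResult {
    std::vector<double> input_gradient;   // size down_size
    std::vector<double> weights_gradient; // row-major up_size x down_size
};

BpropResult bprop_dense(const std::vector<double>& weights,
                        std::size_t up_size, std::size_t down_size,
                        const std::vector<double>& input,
                        const std::vector<double>& output_gradient)
{
    assert(weights.size() == up_size * down_size);
    assert(input.size() == down_size);
    assert(output_gradient.size() == up_size);
    BpropResult r;
    r.input_gradient.assign(down_size, 0.0);
    r.weights_gradient.assign(up_size * down_size, 0.0);
    for (std::size_t i = 0; i < up_size; ++i)
        for (std::size_t j = 0; j < down_size; ++j) {
            // input_gradient = weights' * output_gradient
            r.input_gradient[j] += weights[i * down_size + j] * output_gradient[i];
            // weights_gradient = output_gradient * input'
            r.weights_gradient[i * down_size + j] = output_gradient[i] * input[j];
        }
    return r;
}
```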

| void PLearn::RBMMatrixConnection::setAllWeights | ( | const Mat & | rbm_weights | ) | [virtual] |

set the internal weight values to rbm_weights (not a copy)

Reimplemented from PLearn::RBMConnection.

Definition at line 588 of file RBMMatrixConnection.cc.

References weights.

{

weights = rbm_weights;

}

| void PLearn::RBMMatrixConnection::update | ( | const Mat & | pos_down_values, |

| const Mat & | pos_up_values, | ||

| const Mat & | neg_down_values, | ||

| const Mat & | neg_up_values | ||

| ) | [virtual] |

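Mini-batch version of the contrastive divergence update, using the explicit values passed (one example per row). Only the case momentum == 0 is implemented; calling it with a non-zero momentum raises a PLERROR.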

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 339 of file RBMMatrixConnection.cc.

References applyWeightPenalty(), PLearn::fast_exact_is_equal(), L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLearn::RBMConnection::momentum, PLASSERT, PLERROR, PLearn::transposeProductScaleAcc(), weights, and PLearn::TMat< T >::width().

{

// weights += learning_rate * ( h_0 v_0' - h_1 v_1' );

// or:

// weights[i][j] += learning_rate * (h_0[i] v_0[j] - h_1[i] v_1[j]);

PLASSERT( pos_up_values.width() == weights.length() );

PLASSERT( neg_up_values.width() == weights.length() );

PLASSERT( pos_down_values.width() == weights.width() );

PLASSERT( neg_down_values.width() == weights.width() );

if( momentum == 0. )

{

// We use the average gradient over a mini-batch.

real avg_lr = learning_rate / pos_down_values.length();

transposeProductScaleAcc(weights, pos_up_values, pos_down_values,

avg_lr, real(1));

transposeProductScaleAcc(weights, neg_up_values, neg_down_values,

-avg_lr, real(1));

}

else

{

PLERROR("RBMMatrixConnection::update minibatch with momentum - Not implemented");

/*

// ensure that weights_inc has the right size

weights_inc.resize( l, w );

// The update rule becomes:

// weights_inc = momentum * weights_inc

// + learning_rate * ( h_0 v_0' - h_1 v_1' );

// weights += weights_inc;

real* winc_i = weights_inc.data();

int winc_mod = weights_inc.mod();

for( int i=0 ; i<l ; i++, w_i += w_mod, winc_i += winc_mod,

puv_i++, nuv_i++ )

for( int j=0 ; j<w ; j++ )

{

winc_i[j] = momentum * winc_i[j]

+ learning_rate * (*puv_i * pdv[j] - *nuv_i * ndv[j]);

w_i[j] += winc_i[j];

}

*/

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}
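The momentum-free mini-batch branch above averages the contrastive divergence gradient over the examples of the batch. A standalone sketch of that arithmetic, assuming one example per row and equal batch sizes for both phases; `Matrix` and `cd_minibatch_update` are hypothetical names, and the weight penalty is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical mini-batch container: one example per row.
using Matrix = std::vector<std::vector<double>>;

// weights is a row-major up_size x down_size buffer, updated in place with
// weights += (learning_rate / n) * (pos_up' * pos_down - neg_up' * neg_down)
void cd_minibatch_update(std::vector<double>& weights,
                         std::size_t up_size, std::size_t down_size,
                         const Matrix& pos_down, const Matrix& pos_up,
                         const Matrix& neg_down, const Matrix& neg_up,
                         double learning_rate)
{
    const std::size_t n = pos_down.size();
    assert(n > 0 && pos_up.size() == n);
    assert(neg_down.size() == n && neg_up.size() == n);
    assert(weights.size() == up_size * down_size);
    const double avg_lr = learning_rate / n; // average over the mini-batch
    for (std::size_t k = 0; k < n; ++k) {
        assert(pos_down[k].size() == down_size && neg_down[k].size() == down_size);
        assert(pos_up[k].size() == up_size && neg_up[k].size() == up_size);
        for (std::size_t i = 0; i < up_size; ++i)
            for (std::size_t j = 0; j < down_size; ++j)
                weights[i * down_size + j] +=
                    avg_lr * (pos_up[k][i] * pos_down[k][j]
                            - neg_up[k][i] * neg_down[k][j]);
    }
}
```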

| void PLearn::RBMMatrixConnection::update | ( | const Vec & | pos_down_values, |

| const Vec & | pos_up_values, | ||

| const Vec & | neg_down_values, | ||

| const Vec & | neg_up_values | ||

| ) | [virtual] |

Updates parameters according to contrastive divergence gradient, not using the statistics but the explicit values passed.

Reimplemented from PLearn::RBMConnection.

Definition at line 284 of file RBMMatrixConnection.cc.

References applyWeightPenalty(), PLearn::TVec< T >::data(), PLearn::TMat< T >::data(), PLearn::fast_exact_is_equal(), i, j, L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TVec< T >::length(), PLearn::TMat< T >::length(), PLearn::TMat< T >::mod(), PLearn::RBMConnection::momentum, PLASSERT, PLearn::TMat< T >::resize(), w, weights, weights_inc, and PLearn::TMat< T >::width().

{

// weights += learning_rate * ( h_0 v_0' - h_1 v_1' );

// or:

// weights[i][j] += learning_rate * (h_0[i] v_0[j] - h_1[i] v_1[j]);

int l = weights.length();

int w = weights.width();

PLASSERT( pos_up_values.length() == l );

PLASSERT( neg_up_values.length() == l );

PLASSERT( pos_down_values.length() == w );

PLASSERT( neg_down_values.length() == w );

real* w_i = weights.data();

real* puv_i = pos_up_values.data();

real* nuv_i = neg_up_values.data();

real* pdv = pos_down_values.data();

real* ndv = neg_down_values.data();

int w_mod = weights.mod();

if( momentum == 0. )

{

for( int i=0 ; i<l ; i++, w_i += w_mod, puv_i++, nuv_i++ )

for( int j=0 ; j<w ; j++ )

w_i[j] += learning_rate * (*puv_i * pdv[j] - *nuv_i * ndv[j]);

}

else

{

// ensure that weights_inc has the right size

weights_inc.resize( l, w );

// The update rule becomes:

// weights_inc = momentum * weights_inc

// + learning_rate * ( h_0 v_0' - h_1 v_1' );

// weights += weights_inc;

real* winc_i = weights_inc.data();

int winc_mod = weights_inc.mod();

for( int i=0 ; i<l ; i++, w_i += w_mod, winc_i += winc_mod,

puv_i++, nuv_i++ )

for( int j=0 ; j<w ; j++ )

{

winc_i[j] = momentum * winc_i[j]

+ learning_rate * (*puv_i * pdv[j] - *nuv_i * ndv[j]);

w_i[j] += winc_i[j];

}

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}
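The momentum branch above keeps a running increment that is decayed by the momentum and then added to the weights. A minimal sketch of one such step, without the weight penalty; `MomentumState` and `cd_momentum_step` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical state: weights and their running increment, both stored as
// row-major (up units) x (down units) buffers.
struct MomentumState {
    std::vector<double> weights;
    std::vector<double> weights_inc;
};

// One update step:
//   weights_inc = momentum * weights_inc
//               + learning_rate * (pos_up pos_down' - neg_up neg_down')
//   weights    += weights_inc
void cd_momentum_step(MomentumState& s,
                      const std::vector<double>& pos_down,
                      const std::vector<double>& pos_up,
                      const std::vector<double>& neg_down,
                      const std::vector<double>& neg_up,
                      double learning_rate, double momentum)
{
    const std::size_t l = pos_up.size();
    const std::size_t w = pos_down.size();
    assert(neg_up.size() == l && neg_down.size() == w);
    assert(s.weights.size() == l * w);
    s.weights_inc.resize(l * w, 0.0); // keeps old values, zero-fills new ones
    for (std::size_t i = 0; i < l; ++i)
        for (std::size_t j = 0; j < w; ++j) {
            double& inc = s.weights_inc[i * w + j];
            inc = momentum * inc
                + learning_rate * (pos_up[i] * pos_down[j]
                                 - neg_up[i] * neg_down[j]);
            s.weights[i * w + j] += inc;
        }
}
```

Repeating the same step shows the effect of momentum: with a constant gradient, successive increments grow towards learning_rate * gradient / (1 - momentum).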

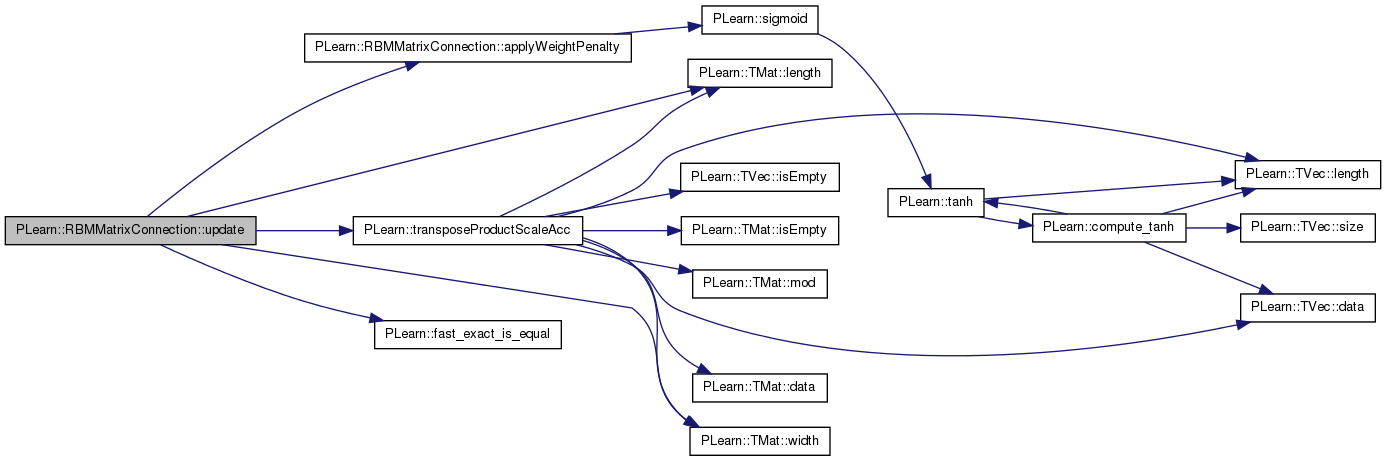

| void PLearn::RBMMatrixConnection::update | ( | ) | [virtual] |

Updates parameters according to contrastive divergence gradient.

Implements PLearn::RBMConnection.

Definition at line 229 of file RBMMatrixConnection.cc.

References applyWeightPenalty(), clearStats(), PLearn::TMat< T >::data(), PLearn::fast_exact_is_equal(), i, j, L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLearn::TMat< T >::mod(), PLearn::RBMConnection::momentum, PLearn::RBMConnection::neg_count, PLearn::RBMConnection::pos_count, PLearn::TMat< T >::resize(), w, weights, weights_inc, weights_neg_stats, weights_pos_stats, and PLearn::TMat< T >::width().

Referenced by PLearn::PseudolikelihoodRBM::train().

{

// updates parameters

//weights += learning_rate * (weights_pos_stats/pos_count

// - weights_neg_stats/neg_count)

real pos_factor = learning_rate / pos_count;

real neg_factor = -learning_rate / neg_count;

int l = weights.length();

int w = weights.width();

real* w_i = weights.data();

real* wps_i = weights_pos_stats.data();

real* wns_i = weights_neg_stats.data();

int w_mod = weights.mod();

int wps_mod = weights_pos_stats.mod();

int wns_mod = weights_neg_stats.mod();

if( momentum == 0. )

{

// no need to use weights_inc

for( int i=0 ; i<l ; i++, w_i+=w_mod, wps_i+=wps_mod, wns_i+=wns_mod )

for( int j=0 ; j<w ; j++ )

w_i[j] += pos_factor * wps_i[j] + neg_factor * wns_i[j];

}

else

{

// ensure that weights_inc has the right size

weights_inc.resize( l, w );

// The update rule becomes:

// weights_inc = momentum * weights_inc

// + learning_rate * (weights_pos_stats/pos_count

// - weights_neg_stats/neg_count);

// weights += weights_inc;

real* winc_i = weights_inc.data();

int winc_mod = weights_inc.mod();

for( int i=0 ; i<l ; i++, w_i += w_mod, wps_i += wps_mod,

wns_i += wns_mod, winc_i += winc_mod )

for( int j=0 ; j<w ; j++ )

{

winc_i[j] = momentum * winc_i[j]

+ pos_factor * wps_i[j] + neg_factor * wns_i[j];

w_i[j] += winc_i[j];

}

}

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

clearStats();

}

| void PLearn::RBMMatrixConnection::updateCDandGibbs | ( | const Mat & | pos_down_values, |

| const Mat & | pos_up_values, | ||

| const Mat & | cd_neg_down_values, | ||

| const Mat & | cd_neg_up_values, | ||

| const Mat & | gibbs_neg_down_values, | ||

| const Mat & | gibbs_neg_up_values, | ||

| real | background_gibbs_update_ratio | ||

| ) | [virtual] |

Reimplemented from PLearn::RBMConnection.

Definition at line 394 of file RBMMatrixConnection.cc.

References applyWeightPenalty(), PLearn::fast_exact_is_equal(), gibbs_ma_coefficient, L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLearn::multiplyAcc(), PLearn::RBMConnection::neg_count, PLearn::transposeProductScaleAcc(), weights, and weights_neg_stats.

{

real normalize_factor = 1.0/pos_down_values.length();

// neg_stats <-- gibbs_chain_statistics_forgetting_factor * neg_stats

// +(1-gibbs_chain_statistics_forgetting_factor)

// * gibbs_neg_up_values'*gibbs_neg_down_values/minibatch_size

if (neg_count==0)

transposeProductScaleAcc(weights_neg_stats, gibbs_neg_up_values,

gibbs_neg_down_values,

normalize_factor, real(0));

else

transposeProductScaleAcc(weights_neg_stats,

gibbs_neg_up_values,

gibbs_neg_down_values,

normalize_factor*(1-gibbs_ma_coefficient),

gibbs_ma_coefficient);

neg_count++;

// delta w = lrate * ( pos_up_values'*pos_down_values

// - ( background_gibbs_update_ratio*neg_stats

// +(1-background_gibbs_update_ratio)

// * cd_neg_up_values'*cd_neg_down_values/minibatch_size))

transposeProductScaleAcc(weights, pos_up_values, pos_down_values,

learning_rate*normalize_factor, real(1));

multiplyAcc(weights, weights_neg_stats,

-learning_rate*background_gibbs_update_ratio);

transposeProductScaleAcc(weights, cd_neg_up_values, cd_neg_down_values,

-learning_rate*(1-background_gibbs_update_ratio)*normalize_factor,

real(1));

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}
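The negative statistics in the listing above are maintained as an exponential moving average of the per-batch Gibbs statistics, with the first batch used as the initial value. A minimal sketch of that bookkeeping, following the comments in the listing; `update_neg_stats` is a hypothetical name and `batch_mean` stands for the already normalized product gibbs_neg_up_values' * gibbs_neg_down_values / minibatch_size.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: moving-average update of the negative statistics.
//   first call : neg_stats = batch_mean
//   afterwards : neg_stats = c * neg_stats + (1 - c) * batch_mean
void update_neg_stats(std::vector<double>& neg_stats,
                      const std::vector<double>& batch_mean,
                      double ma_coefficient, int& neg_count)
{
    assert(neg_stats.size() == batch_mean.size());
    assert(ma_coefficient >= 0.0 && ma_coefficient <= 1.0);
    for (std::size_t k = 0; k < neg_stats.size(); ++k) {
        if (neg_count == 0)
            neg_stats[k] = batch_mean[k];
        else
            neg_stats[k] = ma_coefficient * neg_stats[k]
                         + (1.0 - ma_coefficient) * batch_mean[k];
    }
    ++neg_count;
}
```

The weight update then subtracts a blend of these averaged statistics and the ordinary CD negative statistics, weighted by background_gibbs_update_ratio.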

| void PLearn::RBMMatrixConnection::updateGibbs | ( | const Mat & | pos_down_values, |

| const Mat & | pos_up_values, | ||

| const Mat & | gibbs_neg_down_values, | ||

| const Mat & | gibbs_neg_up_values | ||

| ) | [virtual] |

Reimplemented from PLearn::RBMConnection.

Definition at line 434 of file RBMMatrixConnection.cc.

References applyWeightPenalty(), PLearn::endl(), PLearn::fast_exact_is_equal(), gibbs_ma_coefficient, gibbs_ma_increment, gibbs_ma_schedule, i, PLearn::inverse_sigmoid(), L1_penalty_factor, L2_penalty_factor, PLearn::RBMConnection::learning_rate, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), PLearn::multiply(), PLearn::multiplyAcc(), PLearn::multiplyScaledAdd(), PLearn::RBMConnection::neg_count, PLearn::TMat< T >::resize(), PLearn::sigmoid(), PLearn::transposeProduct(), PLearn::transposeProductScaleAcc(), weights, weights_neg_stats, and PLearn::TMat< T >::width().

{

int minibatch_size = pos_down_values.length();

real normalize_factor = 1.0/minibatch_size;

// neg_stats <-- gibbs_chain_statistics_forgetting_factor * neg_stats

// +(1-gibbs_chain_statistics_forgetting_factor)

// * gibbs_neg_up_values'*gibbs_neg_down_values

static Mat tmp;

tmp.resize(weights.length(),weights.width());

transposeProduct(tmp, gibbs_neg_up_values, gibbs_neg_down_values);

if (neg_count==0)

multiply(weights_neg_stats,tmp,normalize_factor);

else

multiplyScaledAdd(tmp,gibbs_ma_coefficient,

normalize_factor*(1-gibbs_ma_coefficient),

weights_neg_stats);

neg_count++;

bool increase_ma=false;

for (int i=0;i<gibbs_ma_schedule.length();i++)

if (gibbs_ma_schedule[i]==neg_count*minibatch_size)

{

increase_ma=true;

break;

}

if (increase_ma)

{

gibbs_ma_coefficient = sigmoid(gibbs_ma_increment + inverse_sigmoid(gibbs_ma_coefficient));

cout << "new coefficient = " << gibbs_ma_coefficient << " at example " << neg_count*minibatch_size << endl;

}

// delta w = lrate * ( pos_up_values'*pos_down_values/minibatch_size - neg_stats )

transposeProductScaleAcc(weights, pos_up_values, pos_down_values,

learning_rate*normalize_factor, real(1));

multiplyAcc(weights, weights_neg_stats, -learning_rate);

if(!fast_exact_is_equal(L1_penalty_factor,0) || !fast_exact_is_equal(L2_penalty_factor,0))

applyWeightPenalty();

}
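The schedule logic above raises the moving-average coefficient in logit space, which keeps it strictly between 0 and 1. A small sketch of the two pieces involved; `schedule_hit` and `bump_ma_coefficient` are hypothetical names that mirror the loop over gibbs_ma_schedule and the sigmoid/inverse_sigmoid expression.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// True when the number of examples seen so far matches a schedule entry.
bool schedule_hit(const std::vector<int>& schedule, long examples_seen)
{
    for (std::size_t i = 0; i < schedule.size(); ++i)
        if (schedule[i] == examples_seen)
            return true;
    return false;
}

// sigmoid(increment + inverse_sigmoid(coefficient)), written out explicitly.
double bump_ma_coefficient(double coefficient, double increment)
{
    assert(coefficient > 0.0 && coefficient < 1.0);
    const double logit = std::log(coefficient / (1.0 - coefficient));
    return 1.0 / (1.0 + std::exp(-(logit + increment)));
}
```

For example, starting from 0.5 and incrementing the logit by log(3) gives a coefficient of about 0.75; a positive increment always moves the coefficient closer to 1, so older Gibbs statistics are forgotten more slowly.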

Reimplemented from PLearn::RBMConnection.

Reimplemented in PLearn::RBMMatrixConnectionNatGrad, and PLearn::RBMSparse1DMatrixConnection.

Definition at line 270 of file RBMMatrixConnection.h.

Definition at line 67 of file RBMMatrixConnection.h.

Referenced by clearStats(), and declareOptions().

used for Gibbs chain methods only

Definition at line 86 of file RBMMatrixConnection.h.

Referenced by clearStats(), updateCDandGibbs(), and updateGibbs().

Definition at line 66 of file RBMMatrixConnection.h.

Referenced by declareOptions(), and updateGibbs().

Background Gibbs chain options. Each element of this vector is a number of updates after which the moving average coefficient is incremented (by incrementing its inverse sigmoid by gibbs_ma_increment).

After the last increase has been made, the moving average coefficient stays constant.

Definition at line 65 of file RBMMatrixConnection.h.

Referenced by declareOptions(), and updateGibbs().

Optional (default=0) factor of L1 regularization term.

Definition at line 72 of file RBMMatrixConnection.h.

Referenced by addWeightPenalty(), applyWeightPenalty(), PLearn::RBMSparse1DMatrixConnection::bpropAccUpdate(), bpropAccUpdate(), bpropUpdate(), PLearn::RBMSparse1DMatrixConnection::bpropUpdate(), declareOptions(), petiteCulotteOlivierCD(), petiteCulotteOlivierUpdate(), PLearn::RBMSparse1DMatrixConnection::update(), update(), updateCDandGibbs(), and updateGibbs().

Definition at line 77 of file RBMMatrixConnection.h.

Referenced by addWeightPenalty(), applyWeightPenalty(), and declareOptions().

Definition at line 79 of file RBMMatrixConnection.h.

Referenced by applyWeightPenalty(), and declareOptions().

Definition at line 80 of file RBMMatrixConnection.h.

Referenced by applyWeightPenalty(), declareOptions(), PLearn::RBMSparse1DMatrixConnection::forget(), and forget().

Optional (default=0) factor of L2 regularization term.

Definition at line 75 of file RBMMatrixConnection.h.

Referenced by addWeightPenalty(), applyWeightPenalty(), PLearn::RBMSparse1DMatrixConnection::bpropAccUpdate(), bpropAccUpdate(), bpropUpdate(), PLearn::RBMSparse1DMatrixConnection::bpropUpdate(), declareOptions(), petiteCulotteOlivierCD(), petiteCulotteOlivierUpdate(), PLearn::RBMSparse1DMatrixConnection::update(), update(), updateCDandGibbs(), and updateGibbs().

Definition at line 78 of file RBMMatrixConnection.h.

Referenced by addWeightPenalty(), applyWeightPenalty(), and declareOptions().

Matrix containing unit-to-unit weights (output_size × input_size, i.e. up_size rows by down_size columns).

Definition at line 83 of file RBMMatrixConnection.h.

Referenced by applyWeightPenalty(), PLearn::RBMSparse1DMatrixConnection::bpropAccUpdate(), bpropAccUpdate(), PLearn::RBMSparse1DMatrixConnection::bpropUpdate(), PLearn::RBMMatrixConnectionNatGrad::bpropUpdate(), bpropUpdate(), PLearn::RBMSparse1DMatrixConnection::build_(), build_(), computeProduct(), PLearn::RBMSparse1DMatrixConnection::computeProducts(), computeProducts(), declareOptions(), PLearn::RBMSparse1DMatrixConnection::forget(), forget(), getAllWeights(), PLearn::DenoisingRecurrentNet::getDynamicConnectionsWeightMatrix(), PLearn::DenoisingRecurrentNet::getDynamicReconstructionConnectionsWeightMatrix(), PLearn::DenoisingRecurrentNet::getInputConnectionsWeightMatrix(), PLearn::DenoisingRecurrentNet::getTargetConnectionsWeightMatrix(), PLearn::RBMSparse1DMatrixConnection::getWeights(), makeDeepCopyFromShallowCopy(), makeParametersPointHere(), PLearn::RBMSparse1DMatrixConnection::nParameters(), nParameters(), petiteCulotteOlivierCD(), setAllWeights(), PLearn::PseudolikelihoodRBM::train(), PLearn::RBMSparse1DMatrixConnection::update(), PLearn::RBMMatrixConnectionNatGrad::update(), update(), updateCDandGibbs(), and updateGibbs().

Used if momentum != 0.

Definition at line 99 of file RBMMatrixConnection.h.

Referenced by build_(), PLearn::RBMSparse1DMatrixConnection::build_(), makeDeepCopyFromShallowCopy(), and update().

Accumulates negative contribution to the weights' gradient.

Definition at line 96 of file RBMMatrixConnection.h.

Referenced by PLearn::RBMSparse1DMatrixConnection::accumulateNegStats(), accumulateNegStats(), build_(), PLearn::RBMSparse1DMatrixConnection::build_(), clearStats(), makeDeepCopyFromShallowCopy(), petiteCulotteOlivierCD(), update(), updateCDandGibbs(), and updateGibbs().

Accumulates positive contribution to the weights' gradient.

Definition at line 93 of file RBMMatrixConnection.h.

Referenced by accumulatePosStats(), PLearn::RBMSparse1DMatrixConnection::accumulatePosStats(), build_(), PLearn::RBMSparse1DMatrixConnection::build_(), clearStats(), makeDeepCopyFromShallowCopy(), petiteCulotteOlivierCD(), and update().

1.7.4
