PLearn 0.1

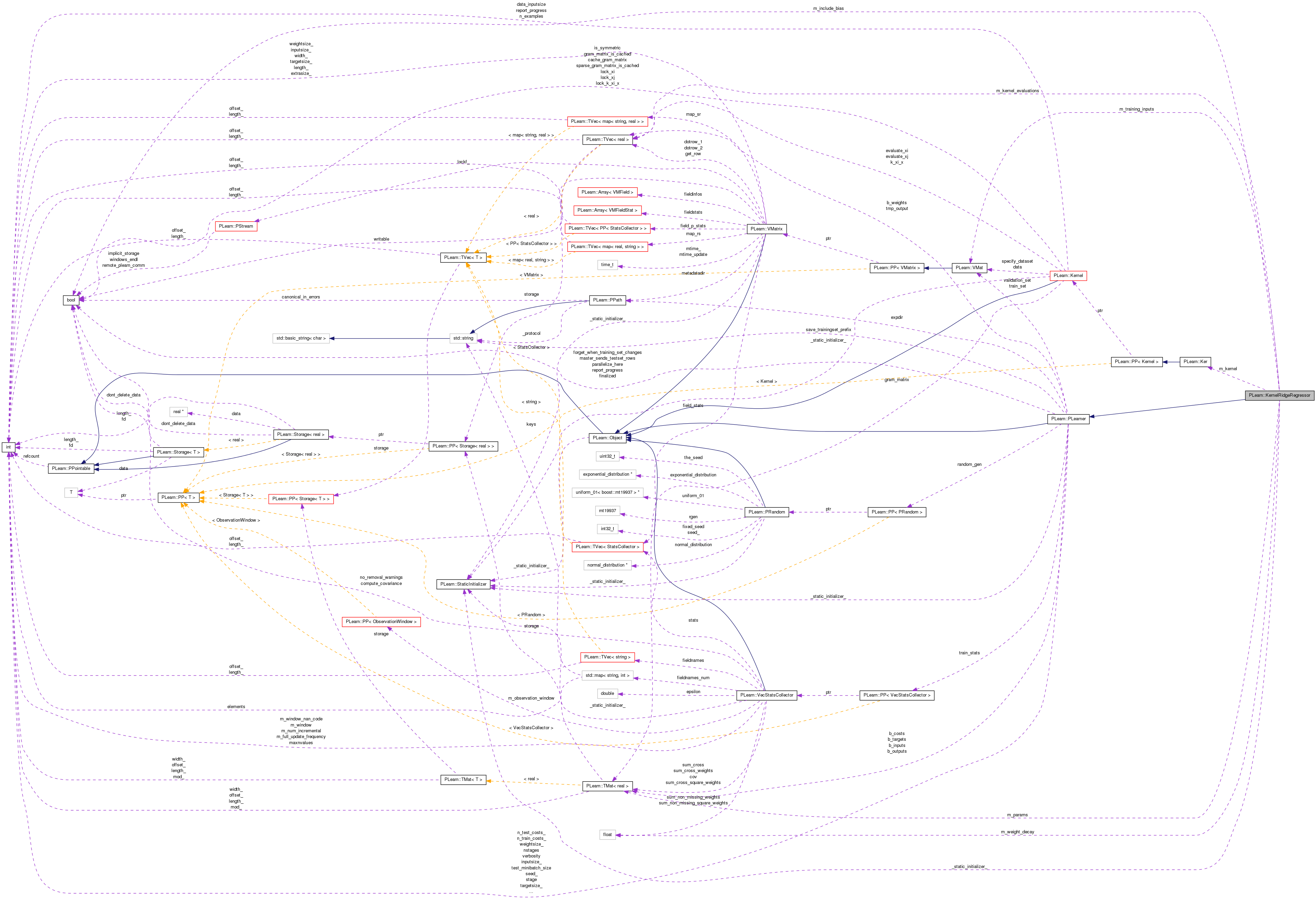

Implements a 'kernelized' version of linear ridge regression.

#include <KernelRidgeRegressor.h>

Public Member Functions | |

| KernelRidgeRegressor () | |

| Default constructor. | |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual KernelRidgeRegressor * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| bool | m_include_bias |

| Whether to include a bias term in the regression (true by default) | |

| Ker | m_kernel |

| Kernel to use for the computation. | |

| real | m_weight_decay |

| Weight decay coefficient (default = 0) | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| Mat | m_params |

| Vector of learned parameters, determined from the equation (M + lambda I)^-1 y (don't forget that y can be a matrix for multivariate output problems) | |

| VMat | m_training_inputs |

| Saved version of the training set inputs, which must be kept along for carrying out kernel evaluations with the test point. | |

| Vec | m_kernel_evaluations |

| Buffer for kernel evaluations at test time. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Implements a 'kernelized' version of linear ridge regression.

Given a kernel K(x,y) = phi(x)'phi(y), where phi(x) is the projection of a vector x into feature space, this class implements a version of the ridge estimator, giving the prediction at x as

f(x) = k(x)'(M + lambda I)^-1 y,

where x is the test vector where to estimate the response, k(x) is the vector of kernel evaluations between the test vector and the elements of the training set, namely

k(x) = (K(x,x1), K(x,x2), ..., K(x,xN))',

M is the Gram matrix on the elements of the training set, i.e. the matrix whose element (i,j) is equal to K(xi, xj), lambda is the weight decay coefficient, and y is the vector of training-set targets.

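The main disadvantage of this learner is that its training time is O(N^3) in the number N of training examples, because an N x N linear system must be solved. When the learner is saved, the training inputs must be saved with it, along with an additional vector of learned parameters whose length is that of the training set (a matrix with one column per target for multivariate output problems).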
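The estimator above can be read as a training step followed by a prediction step. The block below restates it in that form; the symbol \alpha is introduced here for illustration only and corresponds to the learned parameters (M + lambda I)^-1 y described on this page.

```latex
\alpha = (M + \lambda I)^{-1} y,
\qquad
f(x) = k(x)^{\top} \alpha = \sum_{i=1}^{N} \alpha_i \, K(x, x_i)
```

Computing \alpha once costs O(N^3); each prediction afterwards needs only the N kernel evaluations K(x, x_i), which is why the training inputs have to be kept with the model.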

Definition at line 77 of file KernelRidgeRegressor.h.

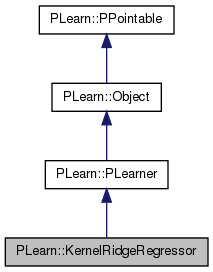

typedef PLearner PLearn::KernelRidgeRegressor::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 79 of file KernelRidgeRegressor.h.

| PLearn::KernelRidgeRegressor::KernelRidgeRegressor | ( | ) |

Default constructor.

Definition at line 76 of file KernelRidgeRegressor.cc.

: m_include_bias(true), m_weight_decay(0.0) { }

| string PLearn::KernelRidgeRegressor::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 73 of file KernelRidgeRegressor.cc.

| OptionList & PLearn::KernelRidgeRegressor::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 73 of file KernelRidgeRegressor.cc.

| RemoteMethodMap & PLearn::KernelRidgeRegressor::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 73 of file KernelRidgeRegressor.cc.

| bool PLearn::KernelRidgeRegressor::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 73 of file KernelRidgeRegressor.cc.

| Object * PLearn::KernelRidgeRegressor::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 73 of file KernelRidgeRegressor.cc.

| void PLearn::KernelRidgeRegressor::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 73 of file KernelRidgeRegressor.cc.

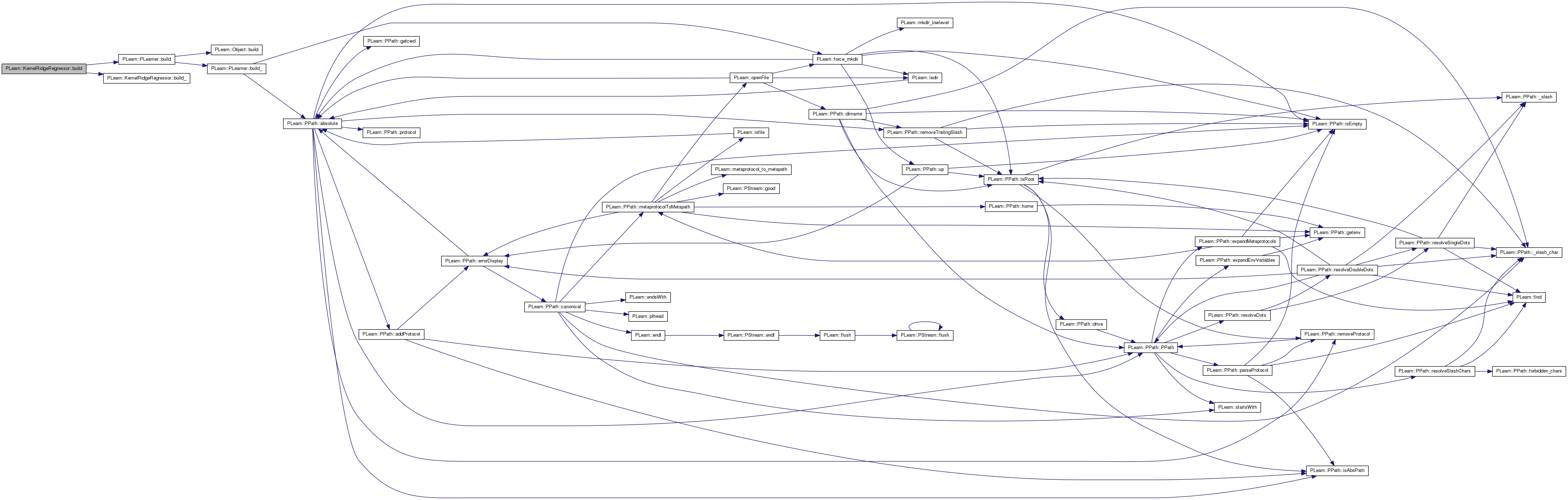

| void PLearn::KernelRidgeRegressor::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 122 of file KernelRidgeRegressor.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}

| void PLearn::KernelRidgeRegressor::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 111 of file KernelRidgeRegressor.cc.

References m_kernel, m_training_inputs, and PLERROR.

Referenced by build().

{

if (! m_kernel)

PLERROR("KernelRidgeRegressor::build_: 'kernel' option must be specified");

// If we are reloading the model, set the training inputs into the kernel

if (m_training_inputs)

m_kernel->setDataForKernelMatrix(m_training_inputs);

}

| string PLearn::KernelRidgeRegressor::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 73 of file KernelRidgeRegressor.cc.

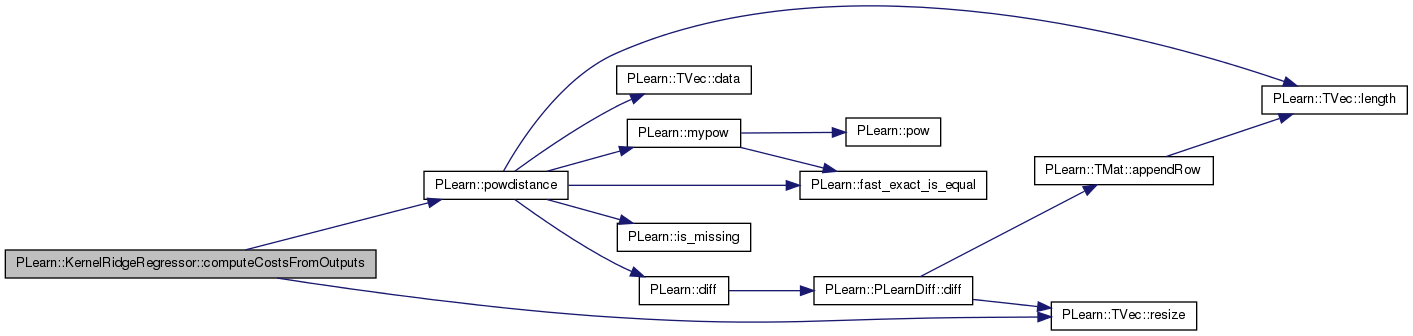

| void PLearn::KernelRidgeRegressor::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 199 of file KernelRidgeRegressor.cc.

References PLearn::powdistance(), and PLearn::TVec< T >::resize().

{

costs.resize(1);

real squared_loss = powdistance(output,target);

costs[0] = squared_loss;

}
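As a rough illustration of the single cost computed above, the following standalone sketch sums the squared differences between a prediction and its target. It uses std::vector rather than PLearn's Vec, and the function name squared_distance is hypothetical; it only stands in for what the powdistance(output, target) call is used for here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sum of squared differences between a prediction and its target.
// Hypothetical stand-in for the squared loss stored in costs[0].
double squared_distance(const std::vector<double>& output,
                        const std::vector<double>& target)
{
    assert(output.size() == target.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < output.size(); ++i) {
        const double diff = output[i] - target[i];
        sum += diff * diff;
    }
    return sum;
}
```

For example, an output of (1, 2) against a target of (0, 4) gives 1 + 4 = 5.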

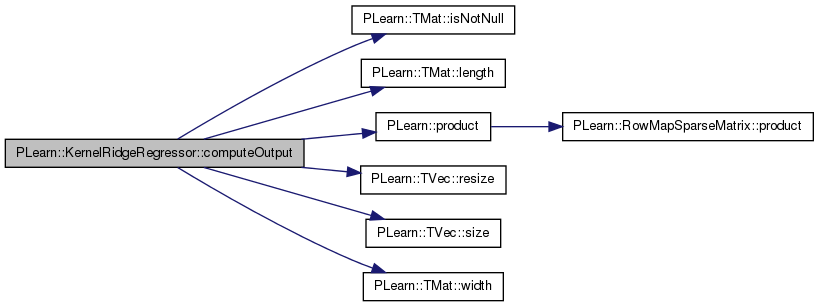

| void PLearn::KernelRidgeRegressor::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 184 of file KernelRidgeRegressor.cc.

References PLearn::TMat< T >::isNotNull(), PLearn::TMat< T >::length(), m_kernel, m_kernel_evaluations, m_params, m_training_inputs, PLASSERT, PLearn::product(), PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), and PLearn::TMat< T >::width().

{

PLASSERT( m_kernel && m_params.isNotNull() && m_training_inputs );

PLASSERT( output.size() == m_params.width() );

m_kernel_evaluations.resize(m_params.length());

m_kernel->evaluate_all_x_i(input, m_kernel_evaluations);

// Finally compute k(x,x_i) * (M + \lambda I)^-1 y

product(Mat(1, output.size(), output),

Mat(1, m_kernel_evaluations.size(), m_kernel_evaluations),

m_params);

}
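The prediction step above multiplies the row vector of kernel evaluations k(x) by the learned parameter matrix. The sketch below reproduces that product outside PLearn, under stated assumptions: it uses std::vector containers, a Gaussian kernel chosen purely as an example of a similarity kernel, and hypothetical names (Vector, Matrix, gaussian_kernel, predict) that are not part of the library.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>;  // row-major: Matrix[i] is row i

// Example similarity kernel (assumption): closer vectors give higher values.
double gaussian_kernel(const Vector& a, const Vector& b, double sigma)
{
    assert(a.size() == b.size());
    double sq = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double d = a[i] - b[i];
        sq += d * d;
    }
    return std::exp(-sq / (2.0 * sigma * sigma));
}

// output = k(x)' * params, where params has one row per training example
// and one column per target dimension.
Vector predict(const Matrix& training_inputs, const Matrix& params,
               const Vector& x, double sigma)
{
    assert(training_inputs.size() == params.size());
    const std::size_t n = params.size();
    const std::size_t t = n ? params[0].size() : 0;
    Vector output(t, 0.0);
    for (std::size_t i = 0; i < n; ++i) {
        // Kernel evaluation between the test point and training example i.
        const double k_i = gaussian_kernel(x, training_inputs[i], sigma);
        for (std::size_t j = 0; j < t; ++j)
            output[j] += k_i * params[i][j];
    }
    return output;
}
```

Because params has one column per target, the same loop covers both scalar and multivariate outputs.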

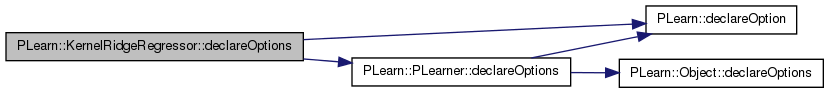

| void PLearn::KernelRidgeRegressor::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 82 of file KernelRidgeRegressor.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), PLearn::OptionBase::learntoption, m_include_bias, m_kernel, m_params, m_training_inputs, and m_weight_decay.

{

declareOption(ol, "kernel", &KernelRidgeRegressor::m_kernel,

OptionBase::buildoption,

"Kernel to use for the computation. This must be a similarity kernel\n"

"(i.e. closer vectors give higher kernel evaluations).");

declareOption(ol, "weight_decay", &KernelRidgeRegressor::m_weight_decay,

OptionBase::buildoption,

"Weight decay coefficient (default = 0)");

declareOption(ol, "include_bias", &KernelRidgeRegressor::m_include_bias,

OptionBase::buildoption,

"Whether to include a bias term in the regression (true by default)");

declareOption(ol, "params", &KernelRidgeRegressor::m_params,

OptionBase::learntoption,

"Vector of learned parameters, determined from the equation\n"

" (M + lambda I)^-1 y");

declareOption(ol, "training_inputs", &KernelRidgeRegressor::m_training_inputs,

OptionBase::learntoption,

"Saved version of the training set, which must be kept along for\n"

"carrying out kernel evaluations with the test point");

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::KernelRidgeRegressor::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 137 of file KernelRidgeRegressor.h.

| KernelRidgeRegressor * PLearn::KernelRidgeRegressor::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 73 of file KernelRidgeRegressor.cc.

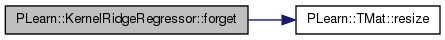

| void PLearn::KernelRidgeRegressor::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 144 of file KernelRidgeRegressor.cc.

References m_params, m_training_inputs, and PLearn::TMat< T >::resize().

{

m_params.resize(0,0);

m_training_inputs = 0;

}

| OptionList & PLearn::KernelRidgeRegressor::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 73 of file KernelRidgeRegressor.cc.

| OptionMap & PLearn::KernelRidgeRegressor::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 73 of file KernelRidgeRegressor.cc.

| RemoteMethodMap & PLearn::KernelRidgeRegressor::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 73 of file KernelRidgeRegressor.cc.

| TVec< string > PLearn::KernelRidgeRegressor::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 208 of file KernelRidgeRegressor.cc.

{

return TVec<string>(1, "mse");

}

| TVec< string > PLearn::KernelRidgeRegressor::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 214 of file KernelRidgeRegressor.cc.

{

return TVec<string>(1, "mse");

}

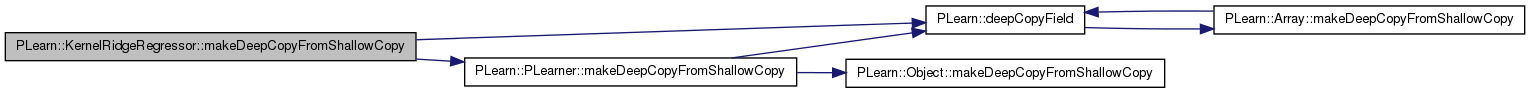

| void PLearn::KernelRidgeRegressor::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 129 of file KernelRidgeRegressor.cc.

References PLearn::deepCopyField(), m_kernel, m_params, m_training_inputs, and PLearn::PLearner::makeDeepCopyFromShallowCopy().

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField(m_kernel, copies);

deepCopyField(m_params, copies);

deepCopyField(m_training_inputs, copies);

}

| int PLearn::KernelRidgeRegressor::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 139 of file KernelRidgeRegressor.cc.

References PLearn::PLearner::targetsize().

{

return targetsize();

}

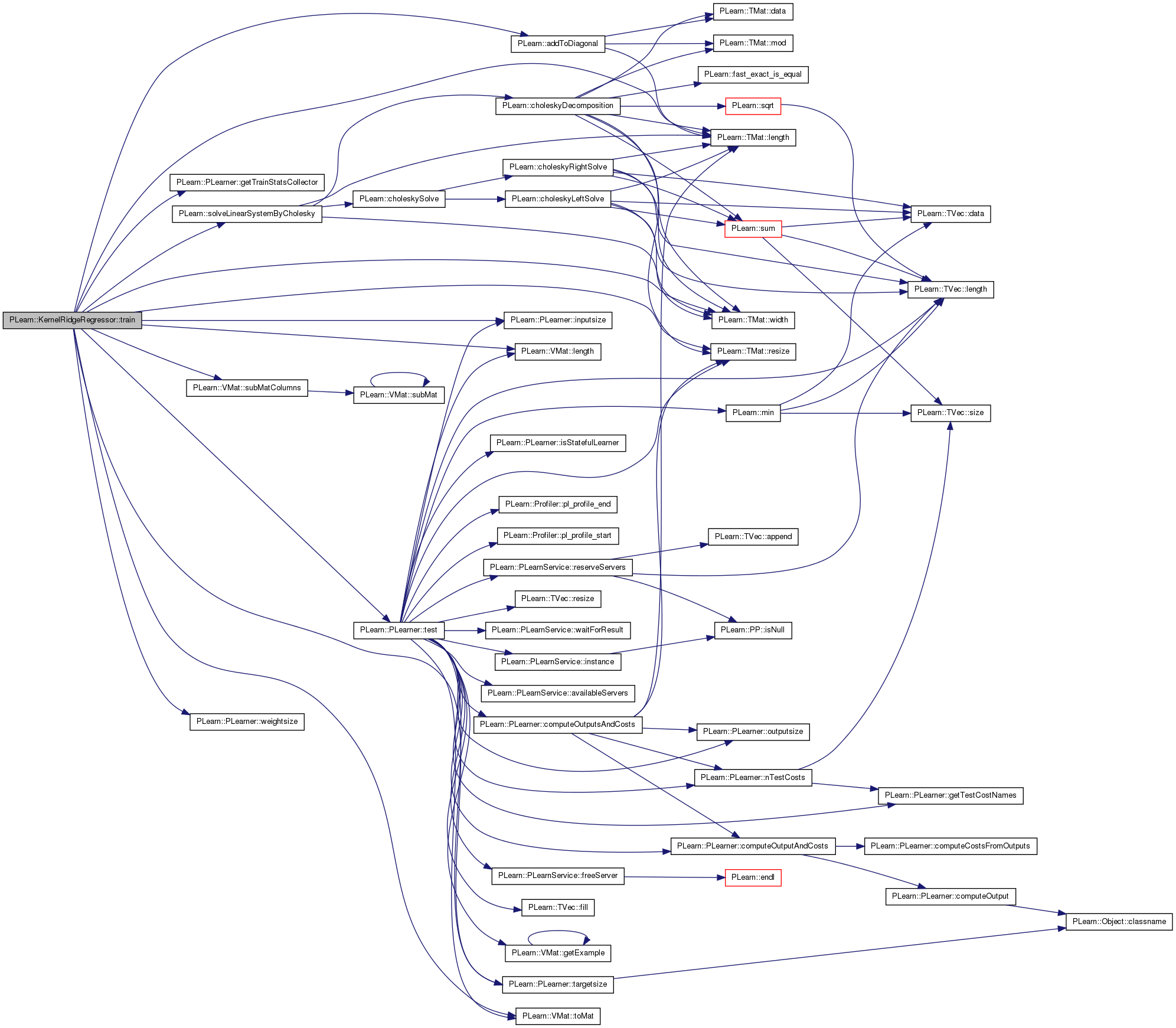

| void PLearn::KernelRidgeRegressor::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 150 of file KernelRidgeRegressor.cc.

References PLearn::addToDiagonal(), PLearn::PLearner::getTrainStatsCollector(), PLearn::PLearner::inputsize(), PLearn::TMat< T >::length(), PLearn::VMat::length(), m_kernel, m_params, m_training_inputs, m_weight_decay, PLASSERT, PLERROR, PLearn::TMat< T >::resize(), PLearn::solveLinearSystemByCholesky(), PLearn::VMat::subMatColumns(), PLearn::PLearner::targetsize(), PLearn::PLearner::test(), PLearn::VMat::toMat(), PLearn::PLearner::train_set, PLearn::PLearner::weightsize(), and PLearn::TMat< T >::width().

{

PLASSERT( m_kernel );

if (! train_set)

PLERROR("KernelRidgeRegressor::train: the training set must be specified");

int inputsize = train_set->inputsize() ;

int targetsize = train_set->targetsize();

int weightsize = train_set->weightsize();

if (inputsize < 0 || targetsize < 0 || weightsize < 0)

PLERROR("KernelRidgeRegressor::train: inconsistent inputsize/targetsize/weightsize "

"(%d/%d/%d) in training set", inputsize, targetsize, weightsize);

if (weightsize > 0)

PLERROR("KernelRidgeRegressor::train: observations weights are not currently supported");

m_training_inputs = train_set.subMatColumns(0, inputsize).toMat();

Mat targets = train_set.subMatColumns(inputsize, targetsize).toMat();

// Compute Gram Matrix and add weight decay to diagonal

m_kernel->setDataForKernelMatrix(m_training_inputs);

Mat gram_mat(m_training_inputs.length(), m_training_inputs.length());

m_kernel->computeGramMatrix(gram_mat);

addToDiagonal(gram_mat, m_weight_decay);

// Compute parameters

m_params.resize(targets.length(), targets.width());

solveLinearSystemByCholesky(gram_mat, targets, m_params);

// Compute train error if there is a train_stats_collector. There is

// probably an analytic formula, but ...

if (getTrainStatsCollector())

test(train_set, getTrainStatsCollector());

}
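The core of the training step above is: add the weight decay to the diagonal of the Gram matrix, then solve the resulting symmetric system for the parameters. The following standalone sketch shows one way to do this with a plain Cholesky factorization. It is not PLearn's solveLinearSystemByCholesky; the names Vector, Matrix, solve_by_cholesky and fit_params are hypothetical, and the Gram matrix is assumed to be already computed.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>;  // row-major: Matrix[i] is row i

// Solve A * X = B for X, with A symmetric positive definite (N x N)
// and B of size N x T (one column per target dimension).
Matrix solve_by_cholesky(const Matrix& a, const Matrix& b)
{
    const std::size_t n = a.size();
    assert(b.size() == n);
    const std::size_t t = n ? b[0].size() : 0;

    // Factor A = L * L', with L lower triangular.
    Matrix l(n, Vector(n, 0.0));
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j <= i; ++j) {
            double sum = a[i][j];
            for (std::size_t k = 0; k < j; ++k)
                sum -= l[i][k] * l[j][k];
            if (i == j) {
                assert(sum > 0.0);  // fails if A is not positive definite
                l[i][i] = std::sqrt(sum);
            } else {
                l[i][j] = sum / l[j][j];
            }
        }
    }

    Matrix x(b);
    for (std::size_t c = 0; c < t; ++c) {
        // Forward substitution: L * z = b (z overwrites column c of x).
        for (std::size_t i = 0; i < n; ++i) {
            double sum = x[i][c];
            for (std::size_t k = 0; k < i; ++k)
                sum -= l[i][k] * x[k][c];
            x[i][c] = sum / l[i][i];
        }
        // Back substitution: L' * x = z.
        for (std::size_t i = n; i-- > 0; ) {
            double sum = x[i][c];
            for (std::size_t k = i + 1; k < n; ++k)
                sum -= l[k][i] * x[k][c];
            x[i][c] = sum / l[i][i];
        }
    }
    return x;
}

// params = (M + lambda I)^-1 y, with the Gram matrix M passed by value
// so that the weight decay can be added to its diagonal.
Matrix fit_params(Matrix gram, const Matrix& targets, double weight_decay)
{
    for (std::size_t i = 0; i < gram.size(); ++i)
        gram[i][i] += weight_decay;
    return solve_by_cholesky(gram, targets);
}
```

In this sketch a weight decay of 0 leaves a Gram matrix that is only guaranteed to be positive semi-definite, so the factorization can fail (for instance when two training points coincide); a small positive value avoids that.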

StaticInitializer PLearn::KernelRidgeRegressor::_static_initializer_ [static] |

Reimplemented from PLearn::PLearner.

Definition at line 137 of file KernelRidgeRegressor.h.

bool PLearn::KernelRidgeRegressor::m_include_bias |

Whether to include a bias term in the regression (true by default)

Definition at line 85 of file KernelRidgeRegressor.h.

Referenced by declareOptions().

Ker PLearn::KernelRidgeRegressor::m_kernel |

Kernel to use for the computation.

This must be a similarity kernel (i.e. closer vectors give higher kernel evaluations).

Definition at line 89 of file KernelRidgeRegressor.h.

Referenced by build_(), computeOutput(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::KernelRidgeRegressor::m_kernel_evaluations [mutable, protected] |

Buffer for kernel evaluations at test time.

Definition at line 158 of file KernelRidgeRegressor.h.

Referenced by computeOutput().

Mat PLearn::KernelRidgeRegressor::m_params [protected] |

Vector of learned parameters, determined from the equation (M + lambda I)^-1 y (don't forget that y can be a matrix for multivariate output problems)

Definition at line 151 of file KernelRidgeRegressor.h.

Referenced by computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

VMat PLearn::KernelRidgeRegressor::m_training_inputs [protected] |

Saved version of the training set inputs, which must be kept along for carrying out kernel evaluations with the test point.

Definition at line 155 of file KernelRidgeRegressor.h.

Referenced by build_(), computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

real PLearn::KernelRidgeRegressor::m_weight_decay |

Weight decay coefficient (default = 0)

Definition at line 92 of file KernelRidgeRegressor.h.

Referenced by declareOptions(), and train().
