PLearn 0.1

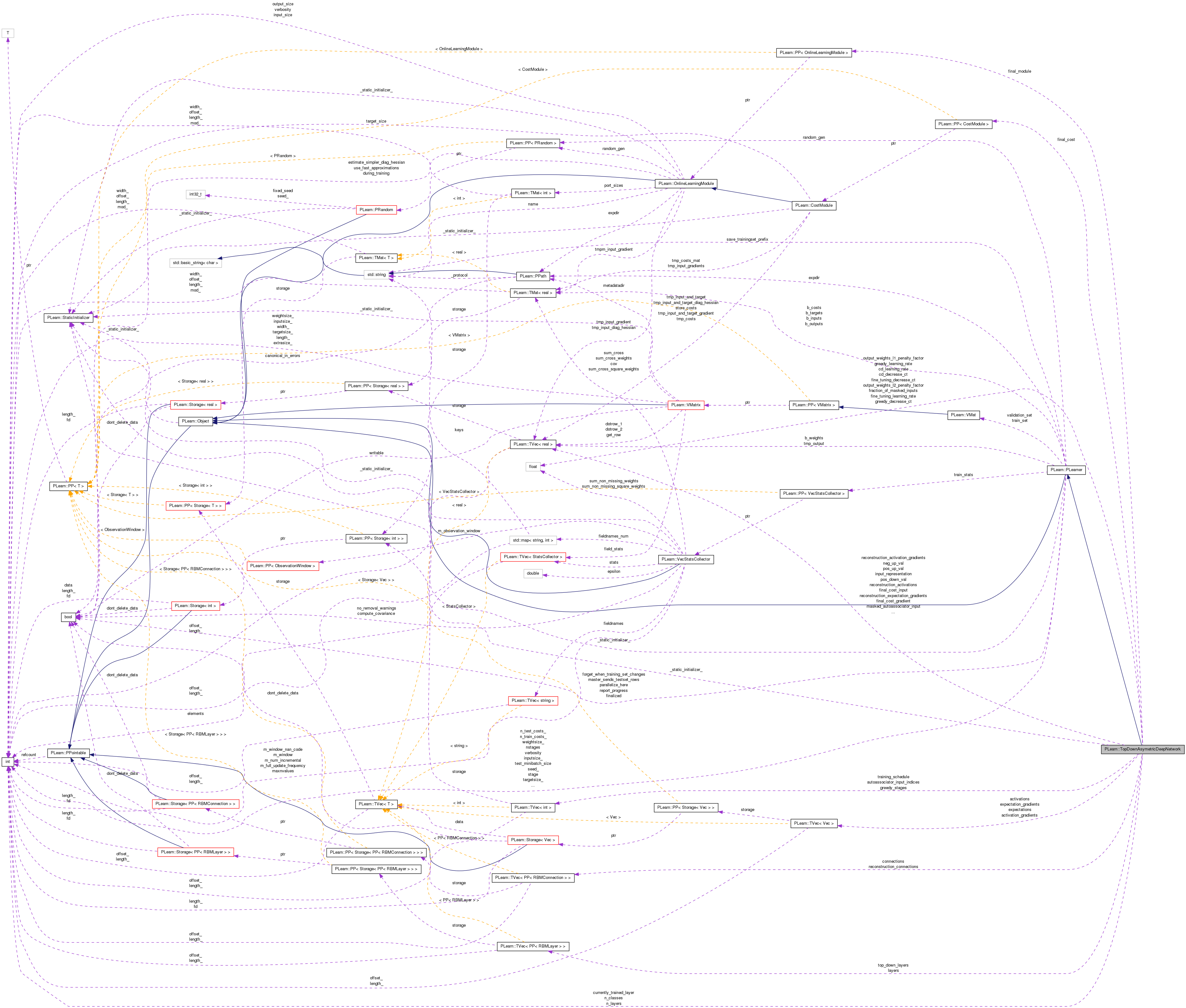

Neural net, trained layer-wise in a greedy but focused fashion using autoassociators/RBMs and a supervised non-parametric gradient.

#include <TopDownAsymetricDeepNetwork.h>

Public Member Functions | |

| TopDownAsymetricDeepNetwork () | |

| Default constructor. | |

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

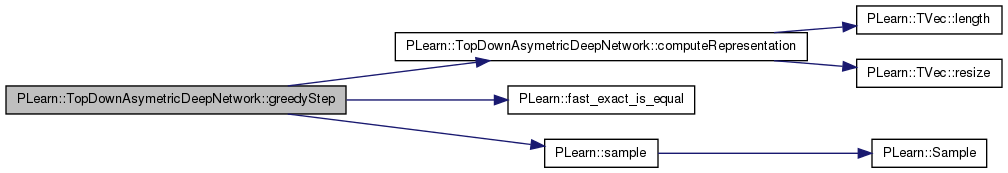

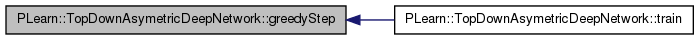

| void | greedyStep (const Vec &input, const Vec &target, int index, Vec train_costs, int stage) |

| void | fineTuningStep (const Vec &input, const Vec &target, Vec &train_costs) |

| void | computeRepresentation (const Vec &input, Vec &representation, int layer) const |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual TopDownAsymetricDeepNetwork * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finish building the object; just call inherited::build followed by build_() | |

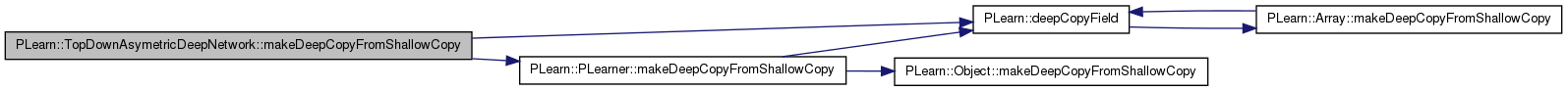

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| real | cd_learning_rate |

| Contrastive divergence learning rate. | |

| real | cd_decrease_ct |

| Contrastive divergence decrease constant. | |

| real | greedy_learning_rate |

| The learning rate used during the autoassociator gradient descent training. | |

| real | greedy_decrease_ct |

| The decrease constant of the learning rate used during the autoassociator gradient descent training. | |

| real | fine_tuning_learning_rate |

| The learning rate used during the fine tuning gradient descent. | |

| real | fine_tuning_decrease_ct |

| The decrease constant of the learning rate used during fine tuning gradient descent. | |

| TVec< int > | training_schedule |

| Number of examples to use during each phase of greedy pre-training. | |

| TVec< PP< RBMLayer > > | layers |

| The layers of units in the network for bottom up inference. | |

| TVec< PP< RBMLayer > > | top_down_layers |

| The layers of units in the network for top down inference. | |

| TVec< PP< RBMConnection > > | connections |

| The weights of the connections between the layers. | |

| TVec< PP< RBMConnection > > | reconstruction_connections |

| The reconstruction weights of the autoassociators. | |

| int | n_classes |

| Number of classes. | |

| real | output_weights_l1_penalty_factor |

| Output weights l1_penalty_factor. | |

| real | output_weights_l2_penalty_factor |

| Output weights l2_penalty_factor. | |

| real | fraction_of_masked_inputs |

| Fraction of the autoassociators' random input components that are masked, i.e. unused to reconstruct the input. | |

| int | n_layers |

| Number of layers. | |
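The masking controlled by fraction_of_masked_inputs can be pictured with a small standalone sketch. It follows the greedyStep() branch that shuffles the component indices and zeroes the first round(fraction * size) of them, but it assumes plain std::vector<double> inputs and a std::mt19937 generator in place of PLearn's Vec and PRandom; the function name maskInput is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <numeric>
#include <random>
#include <vector>

// Hypothetical helper: zeroes a random subset of round(fraction * size)
// input components, as the masking branch of greedyStep() does before the
// bottom-up fprop of the autoassociator.
std::vector<double> maskInput(const std::vector<double>& input,
                              double fraction, std::mt19937& rng) {
    assert(fraction >= 0.0 && fraction <= 1.0);
    std::vector<int> indices(input.size());
    std::iota(indices.begin(), indices.end(), 0);        // 0, 1, ..., size-1
    std::shuffle(indices.begin(), indices.end(), rng);   // random order
    std::vector<double> masked(input);
    const long n_masked = std::lround(fraction * input.size());
    for (long j = 0; j < n_masked; ++j)
        masked[indices[j]] = 0.0;                        // masked component
    return masked;
}
```

In greedyStep() the reconstruction error is still measured against the unmasked input_representation, so only the encoder sees the masked copy.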

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| TVec< Vec > | activations |

| Stores the activations of the input and hidden layers (at the input of the layers) | |

| TVec< Vec > | expectations |

| Stores the expectations of the input and hidden layers (at the output of the layers) | |

| TVec< Vec > | activation_gradients |

| Stores the gradient of the cost wrt the activations of the input and hidden layers (at the input of the layers) | |

| TVec< Vec > | expectation_gradients |

| Stores the gradient of the cost wrt the expectations of the input and hidden layers (at the output of the layers) | |

| Vec | reconstruction_activations |

| Reconstruction activations. | |

| Vec | reconstruction_activation_gradients |

| Reconstruction activation gradients. | |

| Vec | reconstruction_expectation_gradients |

| Reconstruction expectation gradients. | |

| Vec | input_representation |

| Example representation. | |

| Vec | masked_autoassociator_input |

| Perturbed input for current layer. | |

| TVec< int > | autoassociator_input_indices |

| Indices of input components for current layer. | |

| Vec | pos_down_val |

| Positive down statistic. | |

| Vec | pos_up_val |

| Positive up statistic. | |

| Vec | neg_down_val |

| Negative down statistic. | |

| Vec | neg_up_val |

| Negative up statistic. | |

| Vec | final_cost_input |

| Input of cost function. | |

| Vec | final_cost_value |

| Cost value. | |

| Vec | final_cost_gradient |

| Cost gradient on output layer. | |

| TVec< int > | greedy_stages |

| Stages of the different greedy phases. | |

| int | currently_trained_layer |

| Currently trained layer (1 means the first hidden layer, n_layers means the output layer) | |

| PP< OnlineLearningModule > | final_module |

| Output layer of neural net. | |

| PP< CostModule > | final_cost |

| Cost on output layer of neural net. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

| void | build_layers_and_connections () |

| void | build_output_layer_and_cost () |

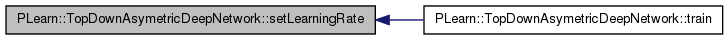

| void | setLearningRate (real the_learning_rate) |

Neural net, trained layer-wise in a greedy but focused fashion using autoassociators/RBMs and a supervised non-parametric gradient.

It is heavily inspired by the StackedAutoassociators class and can use the same RBMLayer and RBMConnection components.

Definition at line 67 of file TopDownAsymetricDeepNetwork.h.
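As a rough picture of the greedy-then-fine-tune schedule, the sketch below lays out the training phases from training_schedule (one entry per connection, n_layers-1 in total, as build_() requires) and nstages (the number of fine-tuning steps, per the training_schedule option description). Phase and planPhases are hypothetical names, and the assumption that the phases run strictly one after another is not something this page states.

```cpp
#include <cassert>
#include <vector>

// One training phase: which connection is trained greedily (-1 for the
// supervised fine-tuning phase) and how many examples the phase consumes.
struct Phase {
    int layer;        // index i of connections[i], or -1 for fine-tuning
    int n_examples;   // examples to process in this phase
};

// Hypothetical helper: training_schedule[i] examples train connection i
// (layers[i] -> layers[i+1]); afterwards `nstages` fine-tuning examples
// update the whole network.
std::vector<Phase> planPhases(const std::vector<int>& training_schedule,
                              int nstages) {
    assert(nstages >= 0);
    std::vector<Phase> phases;
    for (int i = 0; i < static_cast<int>(training_schedule.size()); ++i) {
        assert(training_schedule[i] >= 0);
        phases.push_back({i, training_schedule[i]});
    }
    phases.push_back({-1, nstages});
    return phases;
}
```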

typedef PLearner PLearn::TopDownAsymetricDeepNetwork::inherited [private] |

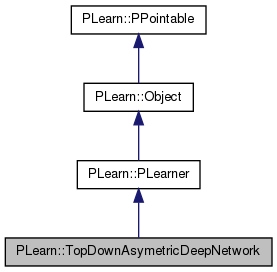

Reimplemented from PLearn::PLearner.

Definition at line 69 of file TopDownAsymetricDeepNetwork.h.

| PLearn::TopDownAsymetricDeepNetwork::TopDownAsymetricDeepNetwork | ( | ) |

Default constructor.

Definition at line 59 of file TopDownAsymetricDeepNetwork.cc.

References PLearn::PLearner::nstages, and PLearn::PLearner::random_gen.

```cpp
:
    cd_learning_rate( 0. ),
    cd_decrease_ct( 0. ),
    greedy_learning_rate( 0. ),
    greedy_decrease_ct( 0. ),
    fine_tuning_learning_rate( 0. ),
    fine_tuning_decrease_ct( 0. ),
    n_classes( -1 ),
    output_weights_l1_penalty_factor(0),
    output_weights_l2_penalty_factor(0),
    fraction_of_masked_inputs( 0 ),
    n_layers( 0 ),
    currently_trained_layer( 0 )
{
    // random_gen will be initialized in PLearner::build_()
    random_gen = new PRandom();
    nstages = 0;
}
```

| string PLearn::TopDownAsymetricDeepNetwork::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

| OptionList & PLearn::TopDownAsymetricDeepNetwork::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

| RemoteMethodMap & PLearn::TopDownAsymetricDeepNetwork::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

| bool PLearn::TopDownAsymetricDeepNetwork::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

| Object * PLearn::TopDownAsymetricDeepNetwork::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

| void PLearn::TopDownAsymetricDeepNetwork::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

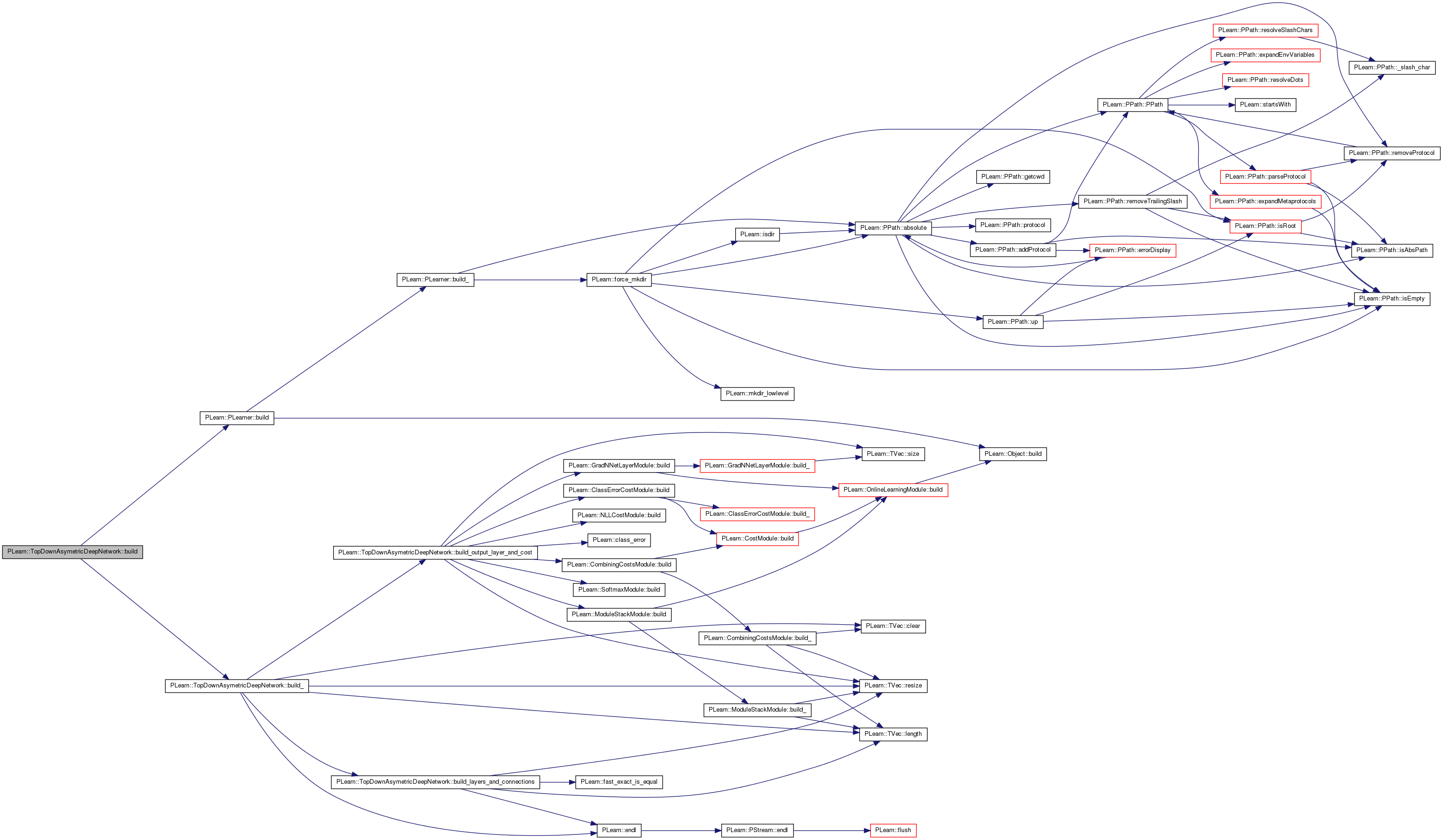

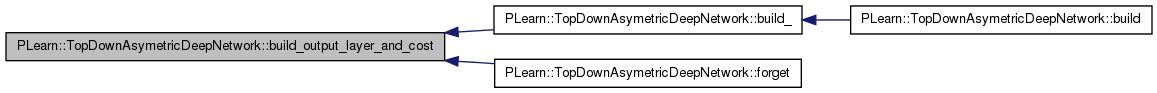

| void PLearn::TopDownAsymetricDeepNetwork::build | ( | ) | [virtual] |

Finish building the object; just call inherited::build followed by build_()

Reimplemented from PLearn::PLearner.

Definition at line 448 of file TopDownAsymetricDeepNetwork.cc.

References PLearn::PLearner::build(), and build_().

```cpp
{
    inherited::build();
    build_();
}
```

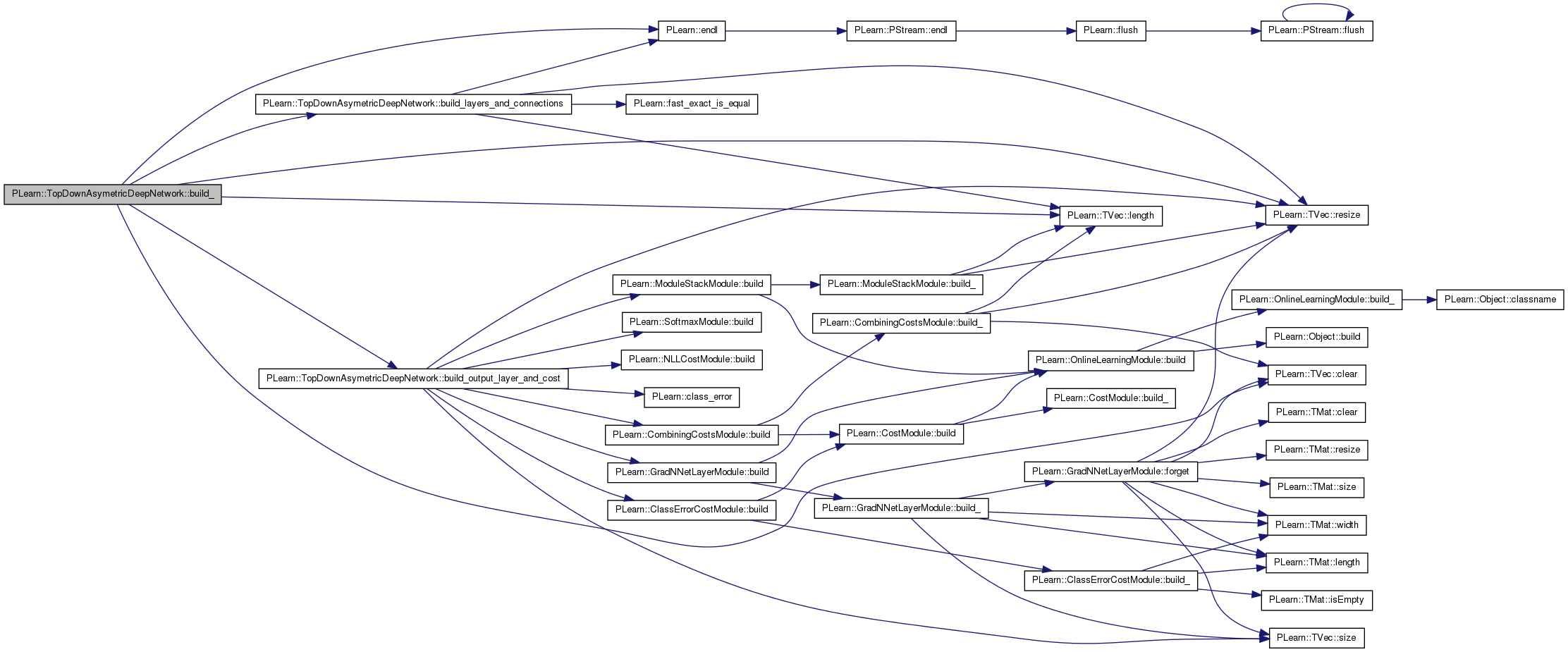

| void PLearn::TopDownAsymetricDeepNetwork::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 204 of file TopDownAsymetricDeepNetwork.cc.

References build_layers_and_connections(), build_output_layer_and_cost(), PLearn::TVec< T >::clear(), currently_trained_layer, PLearn::endl(), final_cost, final_module, greedy_stages, PLearn::PLearner::inputsize_, layers, PLearn::TVec< T >::length(), n_classes, n_layers, PLERROR, PLearn::TVec< T >::resize(), PLearn::PLearner::stage, PLearn::PLearner::targetsize_, training_schedule, and PLearn::PLearner::weightsize_.

Referenced by build().

```cpp
{
    // ### This method should do the real building of the object,
    // ### according to set 'options', in *any* situation.
    // ### Typical situations include:
    // ### - Initial building of an object from a few user-specified options
    // ### - Building of a "reloaded" object: i.e. from the complete set of
    // ### all serialised options.
    // ### - Updating or "re-building" of an object after a few "tuning"
    // ### options have been modified.
    // ### You should assume that the parent class' build_() has already been
    // ### called.

    MODULE_LOG << "build_() called" << endl;

    if(inputsize_ > 0 && targetsize_ > 0)
    {
        // Initialize some learnt variables
        n_layers = layers.length();

        if( n_classes <= 0 )
            PLERROR("TopDownAsymetricDeepNetwork::build_() - \n"
                    "n_classes should be > 0.\n");

        if( weightsize_ > 0 )
            PLERROR("TopDownAsymetricDeepNetwork::build_() - \n"
                    "usage of weighted samples (weight size > 0) is not\n"
                    "implemented yet.\n");

        if( training_schedule.length() != n_layers-1 )
            PLERROR("TopDownAsymetricDeepNetwork::build_() - \n"
                    "training_schedule should have %d elements.\n",
                    n_layers-1);

        if(greedy_stages.length() == 0)
        {
            greedy_stages.resize(n_layers-1);
            greedy_stages.clear();
        }

        if(stage > 0)
            currently_trained_layer = n_layers;
        else
        {
            currently_trained_layer = n_layers-1;
            while(currently_trained_layer>1
                  && greedy_stages[currently_trained_layer-1] <= 0)
                currently_trained_layer--;
        }

        build_layers_and_connections();

        if( !final_module || !final_cost )
            build_output_layer_and_cost();
    }
}
```
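The choice of currently_trained_layer above (1 for the first hidden layer, n_layers for the output layer) can be checked in isolation. This sketch restates that logic with std::vector<int> standing in for TVec<int>; currentlyTrainedLayer is a hypothetical name.

```cpp
#include <cassert>
#include <vector>

// Mirrors build_(): once fine-tuning has started (stage > 0) the output
// layer (n_layers) is current; otherwise walk down from the last hidden
// layer to the deepest one whose greedy phase has already seen examples.
int currentlyTrainedLayer(int stage, const std::vector<int>& greedy_stages,
                          int n_layers) {
    assert(static_cast<int>(greedy_stages.size()) == n_layers - 1);
    if (stage > 0)
        return n_layers;
    int layer = n_layers - 1;
    while (layer > 1 && greedy_stages[layer - 1] <= 0)
        --layer;
    return layer;
}
```

A fresh learner (all greedy stages at 0) therefore resumes at layer 1, and a reloaded one resumes at the deepest layer that had started training.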

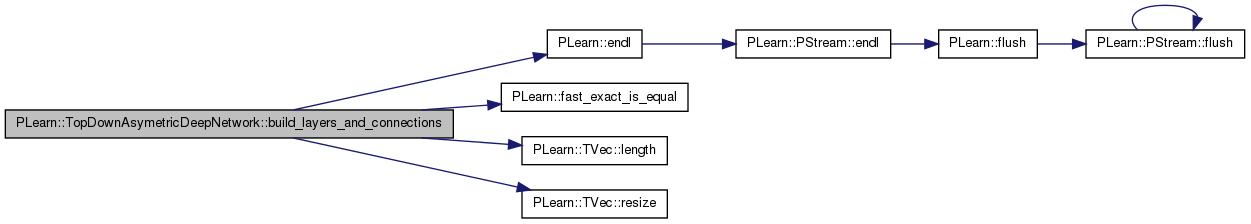

| void PLearn::TopDownAsymetricDeepNetwork::build_layers_and_connections | ( | ) | [private] |

Definition at line 309 of file TopDownAsymetricDeepNetwork.cc.

References activation_gradients, activations, connections, PLearn::endl(), expectation_gradients, expectations, PLearn::fast_exact_is_equal(), fraction_of_masked_inputs, greedy_learning_rate, i, PLearn::PLearner::inputsize_, layers, PLearn::TVec< T >::length(), n_layers, PLERROR, PLearn::PLearner::random_gen, reconstruction_connections, PLearn::TVec< T >::resize(), and top_down_layers.

Referenced by build_().

```cpp
{
    MODULE_LOG << "build_layers_and_connections() called" << endl;

    if( connections.length() != n_layers-1 )
        PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() - \n"
                "there should be %d connections.\n",
                n_layers-1);

    if( !fast_exact_is_equal( greedy_learning_rate, 0 )
        && reconstruction_connections.length() != n_layers-1 )
        PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() - \n"
                "there should be %d reconstruction connections.\n",
                n_layers-1);

    if( !( reconstruction_connections.length() == 0
           || reconstruction_connections.length() == n_layers-1 ) )
        PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() - \n"
                "there should be either 0 or %d reconstruction connections.\n",
                n_layers-1);

    if(top_down_layers.length() != n_layers
       && top_down_layers.length() != 0)
        PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() - \n"
                "there should be either 0 or %d top_down_layers.\n",
                n_layers);

    if(layers[0]->size != inputsize_)
        PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() - \n"
                "layers[0] should have a size of %d.\n",
                inputsize_);

    if(top_down_layers[0]->size != inputsize_)
        PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() - \n"
                "top_down_layers[0] should have a size of %d.\n",
                inputsize_);

    if( fraction_of_masked_inputs < 0 )
        PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections()"
                " - \n"
                "fraction_of_masked_inputs should be > or equal to 0.\n");

    activations.resize( n_layers );
    expectations.resize( n_layers );
    activation_gradients.resize( n_layers );
    expectation_gradients.resize( n_layers );

    for( int i=0 ; i<n_layers-1 ; i++ )
    {
        if( layers[i]->size != connections[i]->down_size )
            PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() "
                    "- \n"
                    "connections[%i] should have a down_size of %d.\n",
                    i, layers[i]->size);

        if( top_down_layers[i]->size != connections[i]->down_size )
            PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() "
                    "- \n"
                    "top_down_layers[%i] should have a size of %d.\n",
                    i, connections[i]->down_size);

        if( connections[i]->up_size != layers[i+1]->size )
            PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() "
                    "- \n"
                    "connections[%i] should have an up_size of %d.\n",
                    i, layers[i+1]->size);

        if( connections[i]->up_size != top_down_layers[i+1]->size )
            PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() "
                    "- \n"
                    "top_down_layers[%i] should have a size of %d.\n",
                    i+1, connections[i]->up_size);

        if( reconstruction_connections.length() != 0 )
        {
            if( layers[i+1]->size != reconstruction_connections[i]->down_size )
                PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() "
                        "- \n"
                        "reconstruction_connections[%i] should have a down_size of "
                        "%d.\n",
                        i, layers[i+1]->size);

            if( reconstruction_connections[i]->up_size != layers[i]->size )
                PLERROR("TopDownAsymetricDeepNetwork::build_layers_and_connections() "
                        "- \n"
                        "reconstruction_connections[%i] should have an up_size of "
                        "%d.\n",
                        i, layers[i]->size);
        }

        if( !(layers[i]->random_gen) )
        {
            layers[i]->random_gen = random_gen;
            layers[i]->forget();
        }

        if( !(top_down_layers[i]->random_gen) )
        {
            top_down_layers[i]->random_gen = random_gen;
            top_down_layers[i]->forget();
        }

        if( !(connections[i]->random_gen) )
        {
            connections[i]->random_gen = random_gen;
            connections[i]->forget();
        }

        if( reconstruction_connections.length() != 0
            && !(reconstruction_connections[i]->random_gen) )
        {
            reconstruction_connections[i]->random_gen = random_gen;
            reconstruction_connections[i]->forget();
        }

        activations[i].resize( layers[i]->size );
        expectations[i].resize( layers[i]->size );
        activation_gradients[i].resize( layers[i]->size );
        expectation_gradients[i].resize( layers[i]->size );
    }

    if( !(layers[n_layers-1]->random_gen) )
    {
        layers[n_layers-1]->random_gen = random_gen;
        layers[n_layers-1]->forget();
    }

    if( !(top_down_layers[n_layers-1]->random_gen) )
    {
        top_down_layers[n_layers-1]->random_gen = random_gen;
        top_down_layers[n_layers-1]->forget();
    }

    activations[n_layers-1].resize( layers[n_layers-1]->size );
    expectations[n_layers-1].resize( layers[n_layers-1]->size );
    activation_gradients[n_layers-1].resize( layers[n_layers-1]->size );
    expectation_gradients[n_layers-1].resize( layers[n_layers-1]->size );
}
```
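The core shape rules enforced above can be restated without PLearn types. In this sketch ConnShape and checkShapes are hypothetical, layer sizes are plain ints, and only the bottom-up connections are checked; the top-down layers and reconstruction connections follow the same pattern, with reconstruction_connections[i] mapping layers[i+1] back down to layers[i].

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical plain-data stand-in for an RBMConnection's shape.
struct ConnShape {
    int down_size;
    int up_size;
};

// Returns an empty string when the shapes are consistent, otherwise a
// message describing the first mismatch found.
std::string checkShapes(const std::vector<int>& layer_sizes,
                        const std::vector<ConnShape>& connections,
                        int inputsize) {
    const int n_layers = static_cast<int>(layer_sizes.size());
    assert(n_layers >= 1);
    if (static_cast<int>(connections.size()) != n_layers - 1)
        return "wrong number of connections";
    if (layer_sizes[0] != inputsize)
        return "layers[0] must match inputsize";
    for (int i = 0; i < n_layers - 1; ++i) {
        if (connections[i].down_size != layer_sizes[i])
            return "connections[" + std::to_string(i) + "] down_size mismatch";
        if (connections[i].up_size != layer_sizes[i + 1])
            return "connections[" + std::to_string(i) + "] up_size mismatch";
    }
    return "";
}
```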

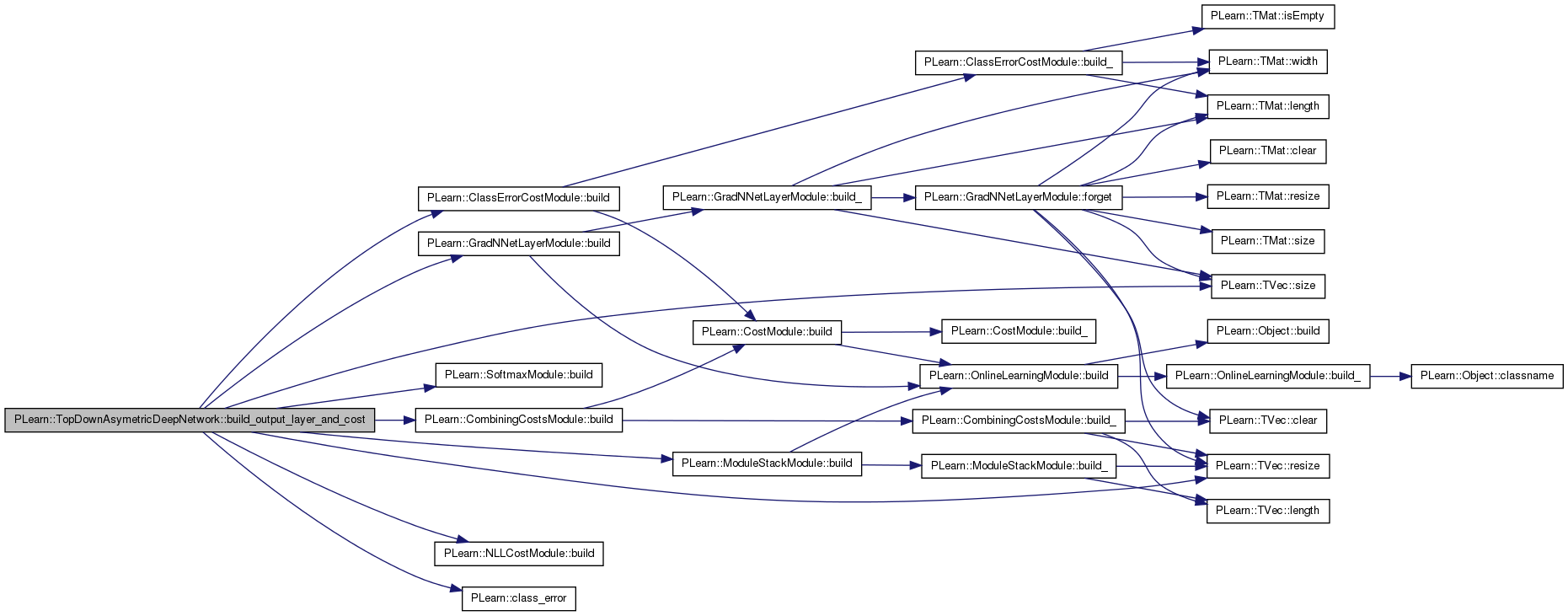

| void PLearn::TopDownAsymetricDeepNetwork::build_output_layer_and_cost | ( | ) | [private] |

Definition at line 261 of file TopDownAsymetricDeepNetwork.cc.

References PLearn::GradNNetLayerModule::build(), PLearn::SoftmaxModule::build(), PLearn::ModuleStackModule::build(), PLearn::ClassErrorCostModule::build(), PLearn::CombiningCostsModule::build(), PLearn::NLLCostModule::build(), PLearn::class_error(), PLearn::CombiningCostsModule::cost_weights, final_cost, final_module, PLearn::OnlineLearningModule::input_size, PLearn::GradNNetLayerModule::L1_penalty_factor, PLearn::GradNNetLayerModule::L2_penalty_factor, layers, PLearn::ModuleStackModule::modules, n_classes, n_layers, PLearn::OnlineLearningModule::output_size, output_weights_l1_penalty_factor, output_weights_l2_penalty_factor, PLearn::OnlineLearningModule::random_gen, PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), and PLearn::CombiningCostsModule::sub_costs.

Referenced by build_(), and forget().

```cpp
{
    GradNNetLayerModule* gnl = new GradNNetLayerModule();
    gnl->input_size = layers[n_layers-1]->size;
    gnl->output_size = n_classes;
    gnl->L1_penalty_factor = output_weights_l1_penalty_factor;
    gnl->L2_penalty_factor = output_weights_l2_penalty_factor;
    gnl->random_gen = random_gen;
    gnl->build();

    SoftmaxModule* sm = new SoftmaxModule();
    sm->input_size = n_classes;
    sm->random_gen = random_gen;
    sm->build();

    ModuleStackModule* msm = new ModuleStackModule();
    msm->modules.resize(2);
    msm->modules[0] = gnl;
    msm->modules[1] = sm;
    msm->random_gen = random_gen;
    msm->build();
    final_module = msm;

    final_module->forget();

    NLLCostModule* nll = new NLLCostModule();
    nll->input_size = n_classes;
    nll->random_gen = random_gen;
    nll->build();

    ClassErrorCostModule* class_error = new ClassErrorCostModule();
    class_error->input_size = n_classes;
    class_error->random_gen = random_gen;
    class_error->build();

    CombiningCostsModule* comb_costs = new CombiningCostsModule();
    comb_costs->cost_weights.resize(2);
    comb_costs->cost_weights[0] = 1;
    comb_costs->cost_weights[1] = 0;
    comb_costs->sub_costs.resize(2);
    comb_costs->sub_costs[0] = nll;
    comb_costs->sub_costs[1] = class_error;
    comb_costs->build();

    final_cost = comb_costs;
    final_cost->forget();
}
```
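To make the output stage concrete, here is a standalone sketch of what the stacked modules compute for one example, written with std::vector<double> instead of PLearn modules: a softmax over the linear scores z, the negative log-likelihood of the target class, and the 0/1 class error, combined with the weights [1, 0] set above. The names softmax, Costs and finalCosts are hypothetical, the L1/L2 weight penalties are omitted, and treating the combined cost as the weighted sum of the sub-costs is an assumption about CombiningCostsModule.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Softmax over the linear outputs of the final layer.
std::vector<double> softmax(const std::vector<double>& z) {
    assert(!z.empty());
    const double zmax = *std::max_element(z.begin(), z.end());
    std::vector<double> p(z.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < z.size(); ++i) {
        p[i] = std::exp(z[i] - zmax);   // shift by the max for stability
        sum += p[i];
    }
    for (double& v : p) v /= sum;
    return p;
}

struct Costs { double nll, class_error, combined; };

// Per-example costs; combined = 1 * NLL + 0 * class_error (cost_weights).
Costs finalCosts(const std::vector<double>& z, int target) {
    const std::vector<double> p = softmax(z);
    assert(target >= 0 && target < static_cast<int>(p.size()));
    const int argmax = static_cast<int>(
        std::max_element(p.begin(), p.end()) - p.begin());
    Costs c;
    c.nll = -std::log(p[target]);
    c.class_error = (argmax == target) ? 0.0 : 1.0;
    c.combined = 1.0 * c.nll + 0.0 * c.class_error;
    return c;
}
```

Under that assumption the class error is still reported but contributes nothing to the gradient, so fine-tuning minimizes the NLL alone.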

| string PLearn::TopDownAsymetricDeepNetwork::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

Referenced by train().

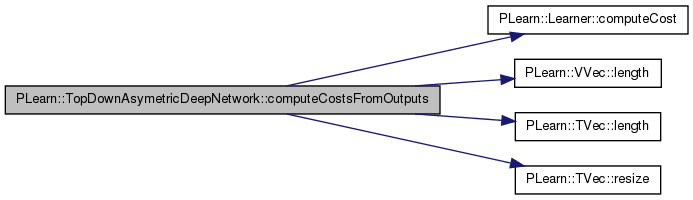

| void PLearn::TopDownAsymetricDeepNetwork::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 985 of file Learner.cc.

References PLearn::Learner::computeCost(), PLearn::VVec::length(), PLearn::TVec< T >::length(), PLERROR, PLearn::TVec< T >::resize(), PLearn::Learner::tmp_input, PLearn::Learner::tmp_target, and PLearn::Learner::tmp_weight.

```cpp
{
    tmp_input.resize(input.length());
    tmp_input << input;
    tmp_target.resize(target.length());
    tmp_target << target;
    computeCost(input, target, output, costs);

    int nw = weight.length();
    if(nw>0)
    {
        tmp_weight.resize(nw);
        tmp_weight << weight;
        if(nw==1) // a single scalar weight
            costs *= tmp_weight[0];
        else if(nw==costs.length()) // one weight per cost element
            costs *= tmp_weight;
        else
            PLERROR("In computeCostsFromOutputs: don't know how to handle cost-weight vector of length %d while cost vector has length %d", nw, costs.length());
    }
}
```
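The weighting rule in this listing can be isolated as a small standalone function. applyCostWeights is a hypothetical name, and std::invalid_argument stands in for PLERROR. Note that build_() rejects weightsize_ > 0, so for this learner the weight vector is expected to be empty and the costs pass through unchanged.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// One weight scales every cost, one weight per cost scales element-wise,
// and any other length is an error.
void applyCostWeights(std::vector<double>& costs,
                      const std::vector<double>& weight) {
    const std::size_t nw = weight.size();
    if (nw == 0)
        return;                                   // unweighted example
    if (nw == 1) {
        for (double& c : costs) c *= weight[0];   // single scalar weight
    } else if (nw == costs.size()) {
        for (std::size_t i = 0; i < nw; ++i) costs[i] *= weight[i];
    } else {
        throw std::invalid_argument("cost-weight vector length mismatch");
    }
}
```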

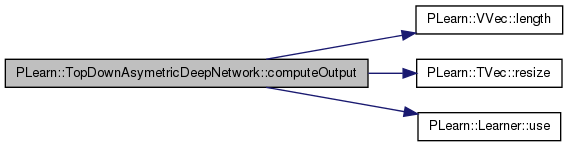

| void PLearn::TopDownAsymetricDeepNetwork::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 975 of file Learner.cc.

References PLearn::VVec::length(), PLearn::TVec< T >::resize(), PLearn::Learner::tmp_input, and PLearn::Learner::use().

```cpp
{
    tmp_input.resize(input.length());
    tmp_input << input;
    use(tmp_input,output);
}
```

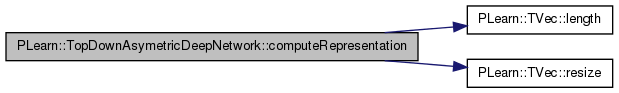

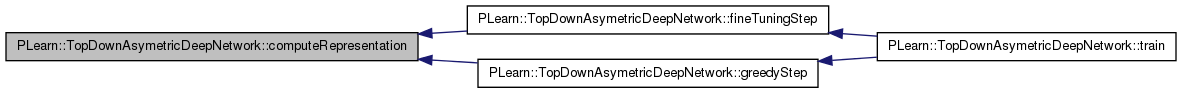

| void PLearn::TopDownAsymetricDeepNetwork::computeRepresentation | ( | const Vec & | input, |

| Vec & | representation, | ||

| int | layer | ||

| ) | const |

Definition at line 825 of file TopDownAsymetricDeepNetwork.cc.

References activations, connections, expectations, i, layers, PLearn::TVec< T >::length(), and PLearn::TVec< T >::resize().

Referenced by fineTuningStep(), and greedyStep().

```cpp
{
    if(layer == 0)
    {
        representation.resize(input.length());
        expectations[0] << input;
        representation << input;
        return;
    }

    expectations[0] << input;
    for( int i=0 ; i<layer; i++ )
    {
        connections[i]->fprop( expectations[i], activations[i+1] );
        layers[i+1]->fprop(activations[i+1],expectations[i+1]);
    }
    representation.resize(expectations[layer].length());
    representation << expectations[layer];
}
```
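The loop above alternates a connection fprop (input expectations to next-layer activations) with a layer fprop (activations to expectations). A minimal sketch of the same bottom-up pass, assuming dense affine connections and sigmoid units, which is only one possible choice of RBMConnection and RBMLayer; the helper names affine and computeRepresentation here are standalone stand-ins, not PLearn API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;   // W[j][k] connects input unit k to unit j

// Hypothetical dense connection: activation = W * x + b.
Vec affine(const Mat& W, const Vec& b, const Vec& x) {
    assert(b.size() == W.size());
    Vec a(b);
    for (std::size_t j = 0; j < W.size(); ++j) {
        assert(W[j].size() == x.size());
        for (std::size_t k = 0; k < x.size(); ++k) a[j] += W[j][k] * x[k];
    }
    return a;
}

// Propagates `input` through the first `layer` connections with sigmoid
// units; layer == 0 returns the input unchanged.
Vec computeRepresentation(const Vec& input, const std::vector<Mat>& weights,
                          const std::vector<Vec>& biases, int layer) {
    assert(layer >= 0 && layer <= static_cast<int>(weights.size()));
    Vec expectation = input;
    for (int i = 0; i < layer; ++i) {
        Vec activation = affine(weights[i], biases[i], expectation);   // connection fprop
        for (double& v : activation) v = 1.0 / (1.0 + std::exp(-v));  // layer fprop
        expectation = activation;
    }
    return expectation;
}
```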

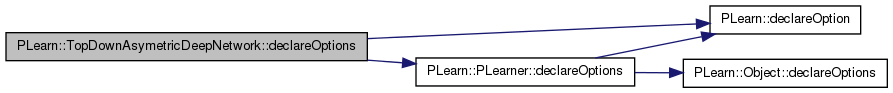

| void PLearn::TopDownAsymetricDeepNetwork::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 78 of file TopDownAsymetricDeepNetwork.cc.

References PLearn::OptionBase::buildoption, cd_decrease_ct, cd_learning_rate, connections, PLearn::declareOption(), PLearn::PLearner::declareOptions(), final_cost, final_module, fine_tuning_decrease_ct, fine_tuning_learning_rate, fraction_of_masked_inputs, greedy_decrease_ct, greedy_learning_rate, greedy_stages, layers, PLearn::OptionBase::learntoption, n_classes, n_layers, output_weights_l1_penalty_factor, output_weights_l2_penalty_factor, reconstruction_connections, top_down_layers, and training_schedule.

```cpp
{
    declareOption(ol, "cd_learning_rate",
                  &TopDownAsymetricDeepNetwork::cd_learning_rate,
                  OptionBase::buildoption,
                  "The learning rate used during the RBM "
                  "contrastive divergence training.\n");

    declareOption(ol, "cd_decrease_ct",
                  &TopDownAsymetricDeepNetwork::cd_decrease_ct,
                  OptionBase::buildoption,
                  "The decrease constant of the learning rate used during "
                  "the RBMs' contrastive\n"
                  "divergence training. When a hidden layer has finished "
                  "its training,\n"
                  "the learning rate is reset to its initial value.\n");

    declareOption(ol, "greedy_learning_rate",
                  &TopDownAsymetricDeepNetwork::greedy_learning_rate,
                  OptionBase::buildoption,
                  "The learning rate used during the autoassociator "
                  "gradient descent training.\n");

    declareOption(ol, "greedy_decrease_ct",
                  &TopDownAsymetricDeepNetwork::greedy_decrease_ct,
                  OptionBase::buildoption,
                  "The decrease constant of the learning rate used during "
                  "the autoassociator\n"
                  "gradient descent training. When a hidden layer has finished "
                  "its training,\n"
                  "the learning rate is reset to its initial value.\n");

    declareOption(ol, "fine_tuning_learning_rate",
                  &TopDownAsymetricDeepNetwork::fine_tuning_learning_rate,
                  OptionBase::buildoption,
                  "The learning rate used during the fine tuning "
                  "gradient descent.\n");

    declareOption(ol, "fine_tuning_decrease_ct",
                  &TopDownAsymetricDeepNetwork::fine_tuning_decrease_ct,
                  OptionBase::buildoption,
                  "The decrease constant of the learning rate used during "
                  "fine tuning\n"
                  "gradient descent.\n");

    declareOption(ol, "training_schedule",
                  &TopDownAsymetricDeepNetwork::training_schedule,
                  OptionBase::buildoption,
                  "Number of examples to use during each phase "
                  "of greedy pre-training.\n"
                  "The number of fine-tuning steps is defined by nstages.\n"
        );

    declareOption(ol, "layers", &TopDownAsymetricDeepNetwork::layers,
                  OptionBase::buildoption,
                  "The layers of units in the network. The first element\n"
                  "of this vector should be the input layer and the\n"
                  "subsequent elements should be the hidden layers. The\n"
                  "output layer should not be included in layers.\n"
                  "These layers will be used only for bottom up inference.\n");

    declareOption(ol, "top_down_layers",
                  &TopDownAsymetricDeepNetwork::top_down_layers,
                  OptionBase::buildoption,
                  "The layers of units used for top down inference during\n"
                  "greedy training of an RBM/autoencoder.");

    declareOption(ol, "connections", &TopDownAsymetricDeepNetwork::connections,
                  OptionBase::buildoption,
                  "The weights of the connections between the layers");

    declareOption(ol, "reconstruction_connections",
                  &TopDownAsymetricDeepNetwork::reconstruction_connections,
                  OptionBase::buildoption,
                  "The reconstruction weights of the autoassociators");

    declareOption(ol, "n_classes",
                  &TopDownAsymetricDeepNetwork::n_classes,
                  OptionBase::buildoption,
                  "Number of classes.");

    declareOption(ol, "output_weights_l1_penalty_factor",
                  &TopDownAsymetricDeepNetwork::output_weights_l1_penalty_factor,
                  OptionBase::buildoption,
                  "Output weights l1_penalty_factor.\n");

    declareOption(ol, "output_weights_l2_penalty_factor",
                  &TopDownAsymetricDeepNetwork::output_weights_l2_penalty_factor,
                  OptionBase::buildoption,
                  "Output weights l2_penalty_factor.\n");

    declareOption(ol, "fraction_of_masked_inputs",
                  &TopDownAsymetricDeepNetwork::fraction_of_masked_inputs,
                  OptionBase::buildoption,
                  "Fraction of the autoassociators' random input components "
                  "that are\n"
                  "masked, i.e. unused to reconstruct the input.\n");

    declareOption(ol, "greedy_stages",
                  &TopDownAsymetricDeepNetwork::greedy_stages,
                  OptionBase::learntoption,
                  "Number of training samples seen in the different greedy "
                  "phases.\n"
        );

    declareOption(ol, "n_layers", &TopDownAsymetricDeepNetwork::n_layers,
                  OptionBase::learntoption,
                  "Number of layers"
        );

    declareOption(ol, "final_module",
                  &TopDownAsymetricDeepNetwork::final_module,
                  OptionBase::learntoption,
                  "Output layer of neural net"
        );

    declareOption(ol, "final_cost",
                  &TopDownAsymetricDeepNetwork::final_cost,
                  OptionBase::learntoption,
                  "Cost on output layer of neural net"
        );

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
```
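The *_decrease_ct options set an inverse-time decay of the learning rate. greedyStep() computes it as greedy_learning_rate/(1 + greedy_decrease_ct * this_stage); the sketch below restates that formula as a hypothetical standalone function. Treating t as the number of examples seen in the current phase is an assumption, consistent with the option text saying the rate is reset when a hidden layer finishes training; applying the same form to cd_decrease_ct and fine_tuning_decrease_ct is also an assumption, since their use is not shown on this page.

```cpp
#include <cassert>

// Inverse-time decay: lr = lr0 / (1 + decrease_ct * t).
// A decrease constant of exactly 0 keeps the rate constant, mirroring the
// fast_exact_is_equal( greedy_decrease_ct, 0 ) test in greedyStep().
double decayedLearningRate(double lr0, double decrease_ct, long t) {
    assert(t >= 0);
    if (decrease_ct == 0.0)
        return lr0;
    return lr0 / (1.0 + decrease_ct * static_cast<double>(t));
}
```

With decrease_ct = 0.01, the rate has halved after 100 examples and dropped to a tenth after 900.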

| static const PPath& PLearn::TopDownAsymetricDeepNetwork::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 180 of file TopDownAsymetricDeepNetwork.h.


| TopDownAsymetricDeepNetwork * PLearn::TopDownAsymetricDeepNetwork::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

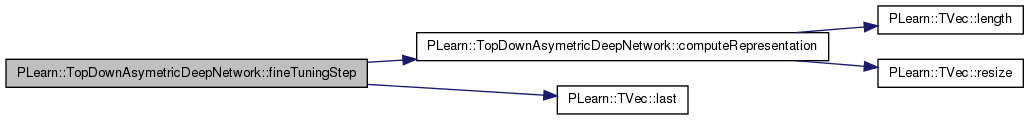

| void PLearn::TopDownAsymetricDeepNetwork::fineTuningStep | ( | const Vec & | input, |

| const Vec & | target, | ||

| Vec & | train_costs | ||

| ) |

Definition at line 791 of file TopDownAsymetricDeepNetwork.cc.

References activation_gradients, activations, computeRepresentation(), connections, expectation_gradients, expectations, final_cost, final_cost_gradient, final_cost_input, final_cost_value, final_module, i, input_representation, PLearn::TVec< T >::last(), layers, and n_layers.

Referenced by train().

```cpp
{
    // Get example representation
    computeRepresentation(input, input_representation,
                          n_layers-1);

    final_module->fprop( input_representation, final_cost_input );
    final_cost->fprop( final_cost_input, target, final_cost_value );

    final_cost->bpropUpdate( final_cost_input, target,
                             final_cost_value[0],
                             final_cost_gradient );
    final_module->bpropUpdate( input_representation,
                               final_cost_input,
                               expectation_gradients[ n_layers-1 ],
                               final_cost_gradient );

    train_costs.last() = final_cost_value[0];

    for( int i=n_layers-1 ; i>0 ; i-- )
    {
        layers[i]->bpropUpdate( activations[i],
                                expectations[i],
                                activation_gradients[i],
                                expectation_gradients[i] );

        connections[i-1]->bpropUpdate( expectations[i-1],
                                       activations[i],
                                       expectation_gradients[i-1],
                                       activation_gradients[i] );
    }
}
```
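The loop in fineTuningStep() alternates layers[i]->bpropUpdate() and connections[i-1]->bpropUpdate(). The sketch below shows one such step for a sigmoid layer fed by a dense linear connection trained with plain SGD; that layer type, the bias placement, and the name backwardStep are assumptions for illustration, since RBMLayer and RBMConnection subclasses can differ.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;   // W[j][k]: weight from input unit k to unit j

// Given dC/d(expectation_i), returns dC/d(expectation_{i-1}) and applies
// an SGD update to the connection weights W and the layer biases b.
Vec backwardStep(const Vec& input,        // expectations[i-1]
                 const Vec& output,       // expectations[i] (sigmoid outputs)
                 const Vec& output_grad,  // expectation_gradients[i]
                 Mat& W, Vec& b, double lr) {
    const std::size_t n_out = output.size(), n_in = input.size();
    assert(output_grad.size() == n_out && W.size() == n_out && b.size() == n_out);
    // Sigmoid layer: dC/d(act) = dC/d(exp) * s * (1 - s).
    Vec act_grad(n_out);
    for (std::size_t j = 0; j < n_out; ++j)
        act_grad[j] = output_grad[j] * output[j] * (1.0 - output[j]);
    // Linear connection: input gradient uses the weights before the update.
    Vec input_grad(n_in, 0.0);
    for (std::size_t j = 0; j < n_out; ++j) {
        assert(W[j].size() == n_in);
        for (std::size_t k = 0; k < n_in; ++k)
            input_grad[k] += W[j][k] * act_grad[j];
    }
    for (std::size_t j = 0; j < n_out; ++j) {
        for (std::size_t k = 0; k < n_in; ++k)
            W[j][k] -= lr * act_grad[j] * input[k];
        b[j] -= lr * act_grad[j];
    }
    return input_grad;
}
```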

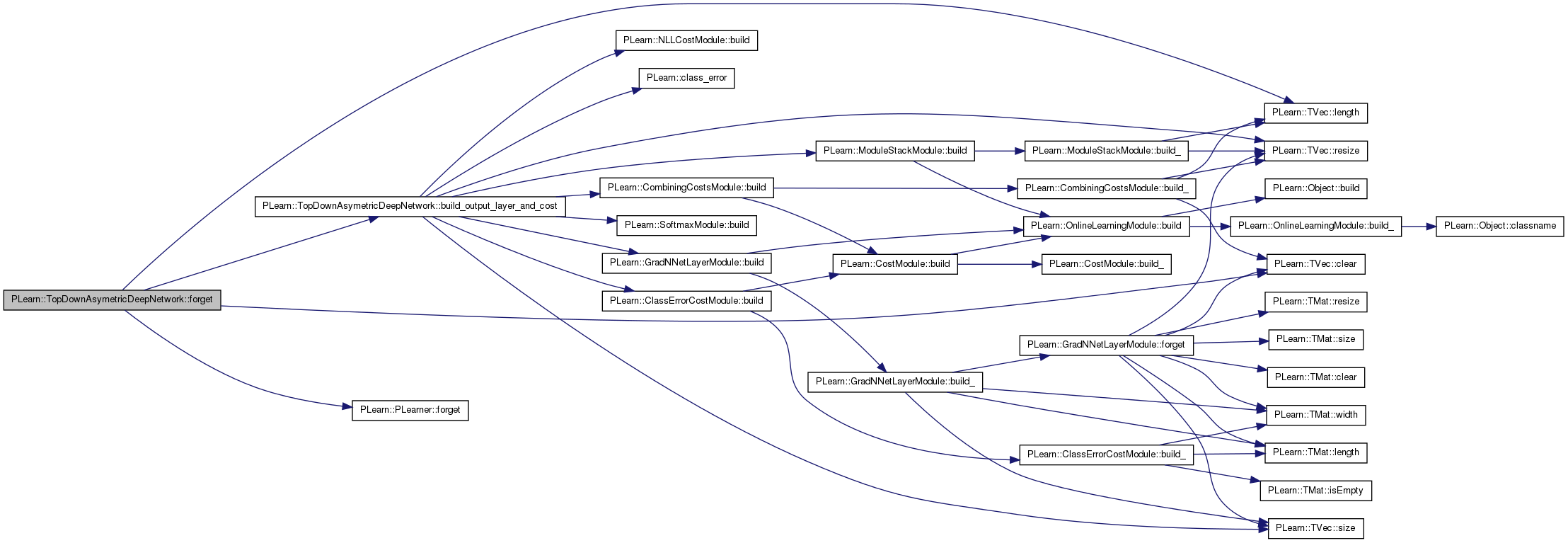

| void PLearn::TopDownAsymetricDeepNetwork::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 499 of file TopDownAsymetricDeepNetwork.cc.

References build_output_layer_and_cost(), PLearn::TVec< T >::clear(), connections, PLearn::PLearner::forget(), greedy_stages, i, layers, PLearn::TVec< T >::length(), n_layers, reconstruction_connections, PLearn::PLearner::stage, and top_down_layers.

```cpp
{
    inherited::forget();

    for( int i=0 ; i<n_layers ; i++ )
        layers[i]->forget();

    for( int i=0 ; i<n_layers ; i++ )
        top_down_layers[i]->forget();

    for( int i=0 ; i<n_layers-1 ; i++ )
        connections[i]->forget();

    for( int i=0; i<reconstruction_connections.length(); i++)
        reconstruction_connections[i]->forget();

    build_output_layer_and_cost();

    stage = 0;
    greedy_stages.clear();
}
```

| OptionList & PLearn::TopDownAsymetricDeepNetwork::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

| OptionMap & PLearn::TopDownAsymetricDeepNetwork::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

| RemoteMethodMap & PLearn::TopDownAsymetricDeepNetwork::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 57 of file TopDownAsymetricDeepNetwork.cc.

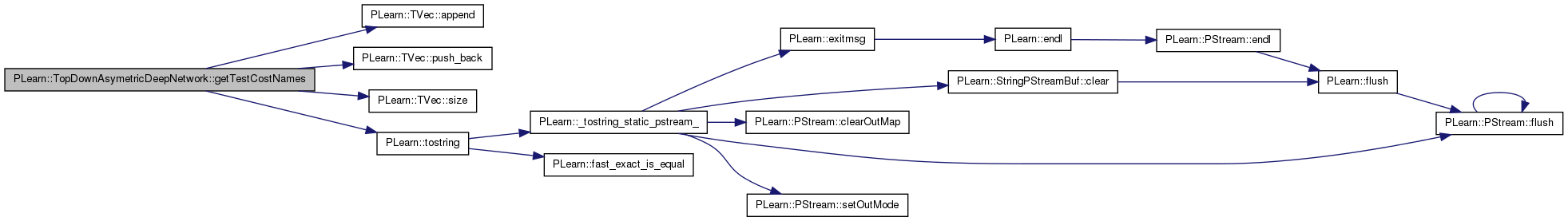

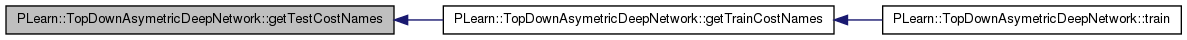

| TVec< string > PLearn::TopDownAsymetricDeepNetwork::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 891 of file TopDownAsymetricDeepNetwork.cc.

References PLearn::TVec< T >::append(), i, layers, PLearn::TVec< T >::push_back(), PLearn::TVec< T >::size(), and PLearn::tostring().

Referenced by getTrainCostNames().

```cpp
{
    // Return the names of the costs computed by computeCostsFromOutputs
    // (these may or may not be exactly the same as what's returned by
    // getTrainCostNames).

    TVec<string> cost_names(0);

    for( int i=0; i<layers.size()-1; i++)
        cost_names.push_back("reconstruction_error_" + tostring(i+1));

    cost_names.append( "class_error" );

    return cost_names;
}
```

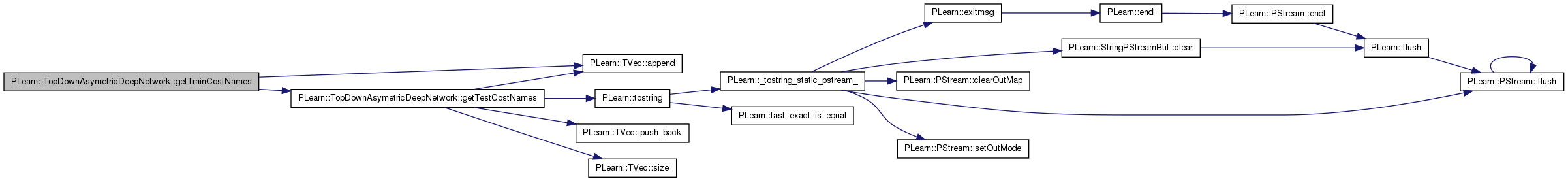

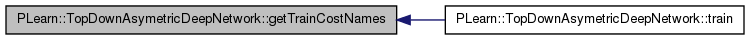

| TVec< string > PLearn::TopDownAsymetricDeepNetwork::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 907 of file TopDownAsymetricDeepNetwork.cc.

References PLearn::TVec< T >::append(), and getTestCostNames().

Referenced by train().

```cpp
{
    TVec<string> cost_names = getTestCostNames();
    cost_names.append( "NLL" );
    return cost_names;
}
```
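For a network whose layers vector has n_layers entries, the two functions above yield n_layers-1 reconstruction errors, then class_error, then (for training only) NLL. This standalone sketch rebuilds those lists with std::vector<std::string> in place of TVec<string>; testCostNames and trainCostNames are hypothetical names.

```cpp
#include <cassert>
#include <string>
#include <vector>

std::vector<std::string> testCostNames(int n_layers) {
    assert(n_layers >= 1);
    std::vector<std::string> names;
    for (int i = 0; i < n_layers - 1; ++i)   // one per greedy phase
        names.push_back("reconstruction_error_" + std::to_string(i + 1));
    names.push_back("class_error");
    return names;
}

std::vector<std::string> trainCostNames(int n_layers) {
    std::vector<std::string> names = testCostNames(n_layers);
    names.push_back("NLL");   // written by fineTuningStep() via train_costs.last()
    return names;
}
```

The indices line up with greedyStep(), which stores the reconstruction error of phase index in train_costs[index], i.e. under reconstruction_error_(index+1).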

| void PLearn::TopDownAsymetricDeepNetwork::greedyStep | ( | const Vec & | input, |

| const Vec & | target, | ||

| int | index, | ||

| Vec | train_costs, | ||

| int | stage | ||

| ) |

Definition at line 651 of file TopDownAsymetricDeepNetwork.cc.

References activations, autoassociator_input_indices, cd_decrease_ct, cd_learning_rate, computeRepresentation(), connections, expectations, PLearn::fast_exact_is_equal(), fraction_of_masked_inputs, greedy_decrease_ct, greedy_learning_rate, input_representation, j, layers, masked_autoassociator_input, n_layers, neg_down_val, neg_up_val, PLASSERT, pos_down_val, pos_up_val, PLearn::PLearner::random_gen, reconstruction_activation_gradients, reconstruction_activations, reconstruction_connections, reconstruction_expectation_gradients, PLearn::sample(), and top_down_layers.

Referenced by train().

{

PLASSERT( index < n_layers );

real lr;

// Get example representation

computeRepresentation(input, input_representation,

index);

// Autoassociator learning

if( !fast_exact_is_equal( greedy_learning_rate, 0 ) )

{

if( !fast_exact_is_equal( greedy_decrease_ct , 0 ) )

lr = greedy_learning_rate/(1 + greedy_decrease_ct

* this_stage);

else

lr = greedy_learning_rate;

if( fraction_of_masked_inputs > 0 )

random_gen->shuffleElements(autoassociator_input_indices);

top_down_layers[index]->setLearningRate( lr );

connections[index]->setLearningRate( lr );

reconstruction_connections[index]->setLearningRate( lr );

layers[index+1]->setLearningRate( lr );

if( fraction_of_masked_inputs > 0 )

{

masked_autoassociator_input << input_representation;

for( int j=0 ; j < round(fraction_of_masked_inputs*layers[index]->size) ; j++)

masked_autoassociator_input[ autoassociator_input_indices[j] ] = 0;

connections[index]->fprop( masked_autoassociator_input, activations[index+1]);

}

else

connections[index]->fprop(input_representation,

activations[index+1]);

layers[index+1]->fprop(activations[index+1], expectations[index+1]);

reconstruction_connections[ index ]->fprop( expectations[index+1],

reconstruction_activations);

top_down_layers[ index ]->fprop( reconstruction_activations,

top_down_layers[ index ]->expectation);

top_down_layers[ index ]->activation << reconstruction_activations;

top_down_layers[ index ]->setExpectationByRef(

top_down_layers[ index ]->expectation);

real rec_err = top_down_layers[ index ]->fpropNLL(

input_representation);

train_costs[index] = rec_err;

top_down_layers[ index ]->bpropNLL(

input_representation, rec_err,

reconstruction_activation_gradients);

}

// RBM learning

if( !fast_exact_is_equal( cd_learning_rate, 0 ) )

{

connections[index]->setAsDownInput( input_representation );

layers[index+1]->getAllActivations( connections[index] );

layers[index+1]->computeExpectation();

layers[index+1]->generateSample();

// accumulate positive stats using the expectation

// we deep-copy because the value will change during negative phase

pos_down_val = expectations[index];

pos_up_val << layers[index+1]->expectation;

// down propagation, starting from a sample of layers[index+1]

connections[index]->setAsUpInput( layers[index+1]->sample );

top_down_layers[index]->getAllActivations( connections[index] );

top_down_layers[index]->computeExpectation();

top_down_layers[index]->generateSample();

// negative phase

connections[index]->setAsDownInput( top_down_layers[index]->sample );

layers[index+1]->getAllActivations( connections[index] );

layers[index+1]->computeExpectation();

// accumulate negative stats

// no need to deep-copy because the values won't change before update

neg_down_val = top_down_layers[index]->sample;

neg_up_val = layers[index+1]->expectation;

}

// Update hidden layer bias and weights

if( !fast_exact_is_equal( greedy_learning_rate, 0 ) )

{

top_down_layers[ index ]->update(reconstruction_activation_gradients);

reconstruction_connections[ index ]->bpropUpdate(

expectations[index+1],

reconstruction_activations,

reconstruction_expectation_gradients,

reconstruction_activation_gradients);

layers[ index+1 ]->bpropUpdate(

activations[index+1],

expectations[index+1],

// reused

reconstruction_activation_gradients,

reconstruction_expectation_gradients);

if( fraction_of_masked_inputs > 0 )

connections[ index ]->bpropUpdate(

masked_autoassociator_input,

activations[index+1],

reconstruction_expectation_gradients, //reused

reconstruction_activation_gradients);

else

connections[ index ]->bpropUpdate(

input_representation,

activations[index+1],

reconstruction_expectation_gradients, //reused

reconstruction_activation_gradients);

}

// RBM updates

if( !fast_exact_is_equal( cd_learning_rate, 0 ) )

{

if( !fast_exact_is_equal( cd_decrease_ct , 0 ) )

lr = cd_learning_rate/(1 + cd_decrease_ct

* this_stage);

else

lr = cd_learning_rate;

top_down_layers[index]->setLearningRate( lr );

connections[index]->setLearningRate( lr );

layers[index+1]->setLearningRate( lr );

top_down_layers[index]->update( pos_down_val, neg_down_val );

connections[index]->update( pos_down_val, pos_up_val,

neg_down_val, neg_up_val );

layers[index+1]->update( pos_up_val, neg_up_val );

}

}
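The RBM branch of greedyStep() follows the usual one-step contrastive divergence (CD-1) pattern. The positive statistics come from the input and the hidden expectation. A hidden sample is then propagated down through top_down_layers[index], and the negative statistics come from that visible sample and the resulting hidden expectation. The sketch below shows a generic CD-1 update for binomial units with a dense weight matrix. It is an illustration under stated assumptions, not PLearn's RBMConnection/RBMLayer code: the unit type, the W[h][v] weight layout and all function names are hypothetical, and the positive visible statistic is taken to be the input itself.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>; // W[h][v]: hidden unit h, visible unit v

static double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// p(h = 1 | v) for binomial hidden units.
static Vector upExpectation(const Matrix& W, const Vector& c, const Vector& v)
{
    Vector p(c.size());
    for (std::size_t h = 0; h < c.size(); ++h) {
        double a = c[h];
        for (std::size_t j = 0; j < v.size(); ++j)
            a += W[h][j] * v[j];
        p[h] = sigmoid(a);
    }
    return p;
}

// p(v = 1 | h) for binomial visible (top-down) units.
static Vector downExpectation(const Matrix& W, const Vector& b, const Vector& hid)
{
    Vector p(b.size());
    for (std::size_t j = 0; j < b.size(); ++j) {
        double a = b[j];
        for (std::size_t h = 0; h < hid.size(); ++h)
            a += W[h][j] * hid[h];
        p[j] = sigmoid(a);
    }
    return p;
}

static Vector sampleBinomial(const Vector& p, std::mt19937& rng)
{
    std::uniform_real_distribution<double> u(0.0, 1.0);
    Vector s(p.size());
    for (std::size_t i = 0; i < p.size(); ++i)
        s[i] = (u(rng) < p[i]) ? 1.0 : 0.0;
    return s;
}

// One CD-1 update. b plays the role of the top-down (visible) biases,
// c that of the hidden biases of layers[index+1] (assumed correspondence).
void cd1Step(Matrix& W, Vector& b, Vector& c, const Vector& v0,
             double lr, std::mt19937& rng)
{
    assert(W.size() == c.size() && b.size() == v0.size());
    const Vector pos_up = upExpectation(W, c, v0);           // positive phase
    const Vector h0 = sampleBinomial(pos_up, rng);           // hidden sample
    const Vector neg_down = sampleBinomial(downExpectation(W, b, h0), rng);
    const Vector neg_up = upExpectation(W, c, neg_down);     // negative phase

    // All statistics are computed before any parameter changes.
    for (std::size_t h = 0; h < c.size(); ++h) {
        for (std::size_t j = 0; j < b.size(); ++j)
            W[h][j] += lr * (pos_up[h] * v0[j] - neg_up[h] * neg_down[j]);
        c[h] += lr * (pos_up[h] - neg_up[h]);
    }
    for (std::size_t j = 0; j < b.size(); ++j)
        b[j] += lr * (v0[j] - neg_down[j]);
}
```

As in greedyStep(), the four statistics are gathered first and the biases and weights are updated afterwards.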

void PLearn::TopDownAsymetricDeepNetwork::makeDeepCopyFromShallowCopy(CopiesMap& copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 455 of file TopDownAsymetricDeepNetwork.cc.

References activation_gradients, activations, autoassociator_input_indices, connections, PLearn::deepCopyField(), expectation_gradients, expectations, final_cost, final_cost_gradient, final_cost_input, final_cost_value, final_module, greedy_stages, input_representation, layers, PLearn::PLearner::makeDeepCopyFromShallowCopy(), masked_autoassociator_input, neg_down_val, neg_up_val, pos_down_val, pos_up_val, reconstruction_activation_gradients, reconstruction_activations, reconstruction_connections, reconstruction_expectation_gradients, top_down_layers, and training_schedule.

{

inherited::makeDeepCopyFromShallowCopy(copies);

// deepCopyField(, copies);

// Public options

deepCopyField(training_schedule, copies);

deepCopyField(layers, copies);

deepCopyField(top_down_layers, copies);

deepCopyField(connections, copies);

deepCopyField(reconstruction_connections, copies);

// Protected options

deepCopyField(activations, copies);

deepCopyField(expectations, copies);

deepCopyField(activation_gradients, copies);

deepCopyField(expectation_gradients, copies);

deepCopyField(reconstruction_activations, copies);

deepCopyField(reconstruction_activation_gradients, copies);

deepCopyField(reconstruction_expectation_gradients, copies);

deepCopyField(input_representation, copies);

deepCopyField(masked_autoassociator_input, copies);

deepCopyField(autoassociator_input_indices, copies);

deepCopyField(pos_down_val, copies);

deepCopyField(pos_up_val, copies);

deepCopyField(neg_down_val, copies);

deepCopyField(neg_up_val, copies);

deepCopyField(final_cost_input, copies);

deepCopyField(final_cost_value, copies);

deepCopyField(final_cost_gradient, copies);

deepCopyField(greedy_stages, copies);

deepCopyField(final_module, copies);

deepCopyField(final_cost, copies);

}
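makeDeepCopyFromShallowCopy() is called on a member-wise copy. It replaces every field that would otherwise share storage with the original by a deep copy. As far as we understand PLearn's copy machinery, `copies` maps already-copied objects to their copies. This means two fields that alias one object in the original still alias a single new object afterwards. The sketch below illustrates that idea with std::shared_ptr, a hypothetical CopyMemo and a hypothetical ToyLearner. It does not reproduce PLearn's deepCopyField() or CopiesMap implementation.

```cpp
#include <cassert>
#include <memory>
#include <unordered_map>
#include <vector>

using Buffer = std::vector<double>;
using BufferPtr = std::shared_ptr<Buffer>;
// Maps each original object to its copy (similar in spirit to a CopiesMap).
using CopyMemo = std::unordered_map<const Buffer*, BufferPtr>;

// Deep-copies one field, reusing the copy if this object was already copied.
BufferPtr deepCopyBuffer(const BufferPtr& src, CopyMemo& copies)
{
    if (!src)
        return nullptr;
    auto it = copies.find(src.get());
    if (it != copies.end())
        return it->second;
    BufferPtr dst = std::make_shared<Buffer>(*src);
    copies[src.get()] = dst;
    return dst;
}

// Hypothetical learner with two fields that may alias the same storage.
struct ToyLearner {
    BufferPtr activations;
    BufferPtr expectations;

    // After a member-wise (shallow) copy, replace each shared field by a deep copy.
    void makeDeepCopyFromShallowCopy(CopyMemo& copies)
    {
        activations = deepCopyBuffer(activations, copies);
        expectations = deepCopyBuffer(expectations, copies);
    }
};
```

After `ToyLearner b = a; CopyMemo m; b.makeDeepCopyFromShallowCopy(m);`, writing to b's buffers no longer affects a. If a's two fields were aliased, b's fields are aliased too.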

int PLearn::TopDownAsymetricDeepNetwork::outputsize() const [virtual]

Returns the size of this learner's output, which may depend on its inputsize(), targetsize() and set options.

Implements PLearn::PLearner.

Definition at line 492 of file TopDownAsymetricDeepNetwork.cc.

References n_classes.

{

// if(currently_trained_layer < n_layers)

// return layers[currently_trained_layer]->size;

return n_classes;

}

void PLearn::TopDownAsymetricDeepNetwork::setLearningRate(real the_learning_rate) [private]

Definition at line 916 of file TopDownAsymetricDeepNetwork.cc.

References connections, final_cost, final_module, i, layers, n_layers, and top_down_layers.

Referenced by train().

{

for( int i=0 ; i<n_layers-1 ; i++ )

{

layers[i]->setLearningRate( the_learning_rate );

top_down_layers[i]->setLearningRate( the_learning_rate );

connections[i]->setLearningRate( the_learning_rate );

}

layers[n_layers-1]->setLearningRate( the_learning_rate );

top_down_layers[n_layers-1]->setLearningRate( the_learning_rate );

final_module->setLearningRate( the_learning_rate );

final_cost->setLearningRate( the_learning_rate );

}

void PLearn::TopDownAsymetricDeepNetwork::train() [virtual]

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 521 of file TopDownAsymetricDeepNetwork.cc.

References autoassociator_input_indices, cd_learning_rate, classname(), currently_trained_layer, PLearn::TVec< T >::data(), PLearn::endl(), PLearn::fast_exact_is_equal(), PLearn::TVec< T >::fill(), final_cost_gradient, final_cost_input, final_cost_value, fine_tuning_decrease_ct, fine_tuning_learning_rate, fineTuningStep(), fraction_of_masked_inputs, PLearn::VMat::getExample(), getTrainCostNames(), greedy_learning_rate, greedy_stages, greedyStep(), i, input_representation, PLearn::PLearner::inputsize(), j, PLearn::TVec< T >::last(), layers, PLearn::VMat::length(), PLearn::TVec< T >::length(), masked_autoassociator_input, MISSING_VALUE, n_classes, n_layers, neg_down_val, neg_up_val, PLearn::PLearner::nstages, pos_down_val, pos_up_val, reconstruction_activation_gradients, reconstruction_activations, reconstruction_expectation_gradients, PLearn::PLearner::report_progress, PLearn::TVec< T >::resize(), PLearn::sample(), setLearningRate(), PLearn::PLearner::stage, PLearn::TVec< T >::subVec(), PLearn::PLearner::targetsize(), PLearn::tostring(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, and training_schedule.

{

MODULE_LOG << "train() called " << endl;

MODULE_LOG << " training_schedule = " << training_schedule << endl;

Vec input( inputsize() );

Vec target( targetsize() );

real weight; // unused

TVec<string> train_cost_names = getTrainCostNames() ;

Vec train_costs( train_cost_names.length() );

train_costs.fill(MISSING_VALUE) ;

int nsamples = train_set->length();

int sample;

PP<ProgressBar> pb;

// clear stats of previous epoch

train_stats->forget();

int init_stage;

/***** initial greedy training *****/

for( int i=0 ; i<n_layers-1 ; i++ )

{

MODULE_LOG << "Training connection weights between layers " << i

<< " and " << i+1 << endl;

int end_stage = training_schedule[i];

int* this_stage = greedy_stages.subVec(i,1).data();

init_stage = *this_stage;

MODULE_LOG << " stage = " << *this_stage << endl;

MODULE_LOG << " end_stage = " << end_stage << endl;

MODULE_LOG << " greedy_learning_rate = " << greedy_learning_rate << endl;

MODULE_LOG << " cd_learning_rate = " << cd_learning_rate << endl;

if( report_progress && *this_stage < end_stage )

pb = new ProgressBar( "Training layer "+tostring(i)

+" of "+classname(),

end_stage - init_stage );

train_costs.fill(MISSING_VALUE);

reconstruction_activations.resize(layers[i]->size);

reconstruction_activation_gradients.resize(layers[i]->size);

reconstruction_expectation_gradients.resize(layers[i]->size);

input_representation.resize(layers[i]->size);

pos_down_val.resize(layers[i]->size);

pos_up_val.resize(layers[i+1]->size);

neg_down_val.resize(layers[i]->size);

neg_up_val.resize(layers[i+1]->size);

if( fraction_of_masked_inputs > 0 )

{

masked_autoassociator_input.resize(layers[i]->size);

autoassociator_input_indices.resize(layers[i]->size);

for( int j=0 ; j < autoassociator_input_indices.length() ; j++ )

autoassociator_input_indices[j] = j;

}

for( ; *this_stage<end_stage ; (*this_stage)++ )

{

sample = *this_stage % nsamples;

train_set->getExample(sample, input, target, weight);

greedyStep( input, target, i, train_costs, *this_stage);

train_stats->update( train_costs );

if( pb )

pb->update( *this_stage - init_stage + 1 );

}

}

/***** fine-tuning by gradient descent *****/

if( stage < nstages )

{

MODULE_LOG << "Fine-tuning all parameters, by gradient descent" << endl;

MODULE_LOG << " stage = " << stage << endl;

MODULE_LOG << " nstages = " << nstages << endl;

MODULE_LOG << " fine_tuning_learning_rate = " <<

fine_tuning_learning_rate << endl;

init_stage = stage;

if( report_progress && stage < nstages )

pb = new ProgressBar( "Fine-tuning parameters of all layers of "

+ classname(),

nstages - init_stage );

setLearningRate( fine_tuning_learning_rate );

train_costs.fill(MISSING_VALUE);

final_cost_input.resize(n_classes);

final_cost_value.resize(2); // Should be resized anyways

final_cost_gradient.resize(n_classes);

input_representation.resize(layers.last()->size);

for( ; stage<nstages ; stage++ )

{

sample = stage % nsamples;

if( !fast_exact_is_equal( fine_tuning_decrease_ct, 0. ) )

setLearningRate( fine_tuning_learning_rate

/ (1. + fine_tuning_decrease_ct * stage ) );

train_set->getExample( sample, input, target, weight );

fineTuningStep( input, target, train_costs);

train_stats->update( train_costs );

if( pb )

pb->update( stage - init_stage + 1 );

}

}

train_stats->finalize();

MODULE_LOG << " train costs = " << train_stats->getMean() << endl;

// Update currently_trained_layer

if(stage > 0)

currently_trained_layer = n_layers;

else

{

currently_trained_layer = n_layers-1;

while(currently_trained_layer>1

&& greedy_stages[currently_trained_layer-1] <= 0)

currently_trained_layer--;

}

}
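train() first runs the greedy phases layer by layer. Each phase has its own counter in greedy_stages and its own end stage in training_schedule. It then fine-tunes all parameters until stage reaches nstages. In both phases, the example index is the stage modulo the training set length. The sketch below is a hypothetical re-enactment of just that loop structure, with no learning. It returns the (layer, example index) pairs visited, using -1 for fine-tuning steps. The function name and the marker constant are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

constexpr int kFineTuning = -1; // hypothetical marker for fine-tuning steps

// Re-enacts the loops of train(), advancing the stage counters in place.
std::vector<std::pair<int, int>> trainingOrder(
    const std::vector<int>& training_schedule, // end stage of each greedy phase
    std::vector<int>& greedy_stages,           // current stage of each greedy phase
    int& stage, int nstages, int nsamples)
{
    assert(nsamples > 0 && training_schedule.size() == greedy_stages.size());
    std::vector<std::pair<int, int>> order;
    // Greedy layer-wise phases: each layer keeps its own stage counter.
    for (std::size_t i = 0; i < training_schedule.size(); ++i)
        for (; greedy_stages[i] < training_schedule[i]; ++greedy_stages[i])
            order.emplace_back(static_cast<int>(i), greedy_stages[i] % nsamples);
    // Fine-tuning of all parameters, driven by the global stage counter.
    for (; stage < nstages; ++stage)
        order.emplace_back(kFineTuning, stage % nsamples);
    return order;
}
```

Because the counters are stored in the learner, calling train() again after raising training_schedule or nstages resumes where the previous call stopped. With unchanged settings, a second call does no work.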

Reimplemented from PLearn::PLearner.

Definition at line 180 of file TopDownAsymetricDeepNetwork.h.

TVec<Vec> PLearn::TopDownAsymetricDeepNetwork::activation_gradients [mutable, protected]

Stores the gradient of the cost with respect to the activations of the input and hidden layers (at the input of the layers).

Definition at line 203 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_layers_and_connections(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

TVec<Vec> PLearn::TopDownAsymetricDeepNetwork::activations [mutable, protected]

Stores the activations of the input and hidden layers (at the input of the layers).

Definition at line 194 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_layers_and_connections(), computeRepresentation(), fineTuningStep(), greedyStep(), and makeDeepCopyFromShallowCopy().

TVec<int> PLearn::TopDownAsymetricDeepNetwork::autoassociator_input_indices [mutable, protected]

Indices of the input components of the current layer. They are shuffled in greedyStep() to choose which components are masked.

Definition at line 226 of file TopDownAsymetricDeepNetwork.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Contrastive divergence decrease constant.

Definition at line 78 of file TopDownAsymetricDeepNetwork.h.

Referenced by declareOptions(), and greedyStep().

Contrastive divergence learning rate.

Definition at line 75 of file TopDownAsymetricDeepNetwork.h.

Referenced by declareOptions(), greedyStep(), and train().

The weights of the connections between the layers.

Definition at line 106 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_layers_and_connections(), computeRepresentation(), declareOptions(), fineTuningStep(), forget(), greedyStep(), makeDeepCopyFromShallowCopy(), and setLearningRate().

Currently trained layer (1 means the first hidden layer, n_layers means the output layer).

Definition at line 249 of file TopDownAsymetricDeepNetwork.h.
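At the end of train(), currently_trained_layer is set to n_layers once fine-tuning has started (stage > 0). Otherwise it starts from n_layers-1 and moves down past every greedy phase whose counter in greedy_stages is still 0, stopping at 1. A stand-alone sketch of that rule (the function name is hypothetical):

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-alone version of the update at the end of train().
int currentlyTrainedLayer(int stage, int n_layers,
                          const std::vector<int>& greedy_stages)
{
    assert(n_layers >= 2);
    if (stage > 0)
        return n_layers; // fine-tuning has started: output layer
    int layer = n_layers - 1;
    // Walk down past greedy phases that have not been run yet.
    while (layer > 1 && greedy_stages[layer - 1] <= 0)
        --layer;
    return layer;
}
```

For a four-layer network whose last greedy phase has not started (greedy_stages = {10, 10, 0}), the result is 2 before fine-tuning and 4 after it.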

TVec<Vec> PLearn::TopDownAsymetricDeepNetwork::expectation_gradients [mutable, protected]

Stores the gradient of the cost with respect to the expectations of the input and hidden layers (at the output of the layers).

Definition at line 208 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_layers_and_connections(), fineTuningStep(), and makeDeepCopyFromShallowCopy().

TVec<Vec> PLearn::TopDownAsymetricDeepNetwork::expectations [mutable, protected]

Stores the expectations of the input and hidden layers (at the output of the layers).

Definition at line 198 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_layers_and_connections(), computeRepresentation(), fineTuningStep(), greedyStep(), and makeDeepCopyFromShallowCopy().

PP<CostModule> PLearn::TopDownAsymetricDeepNetwork::final_cost [protected]

Cost on the output layer of the neural net.

Definition at line 255 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_(), build_output_layer_and_cost(), declareOptions(), fineTuningStep(), makeDeepCopyFromShallowCopy(), and setLearningRate().

Vec PLearn::TopDownAsymetricDeepNetwork::final_cost_gradient [mutable, protected]

Cost gradient on the output layer.

Definition at line 242 of file TopDownAsymetricDeepNetwork.h.

Referenced by fineTuningStep(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::TopDownAsymetricDeepNetwork::final_cost_input [mutable, protected]

Input of the cost function.

Definition at line 238 of file TopDownAsymetricDeepNetwork.h.

Referenced by fineTuningStep(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::TopDownAsymetricDeepNetwork::final_cost_value [mutable, protected]

Cost value.

Definition at line 240 of file TopDownAsymetricDeepNetwork.h.

Referenced by fineTuningStep(), makeDeepCopyFromShallowCopy(), and train().

Output layer of the neural net.

Definition at line 252 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_(), build_output_layer_and_cost(), declareOptions(), fineTuningStep(), makeDeepCopyFromShallowCopy(), and setLearningRate().

The decrease constant of the learning rate used during fine-tuning gradient descent.

Definition at line 93 of file TopDownAsymetricDeepNetwork.h.

Referenced by declareOptions(), and train().

The learning rate used during fine-tuning gradient descent.

Definition at line 89 of file TopDownAsymetricDeepNetwork.h.

Referenced by declareOptions(), and train().

Fraction of the autoassociators' input components that are randomly masked, i.e. set to 0 in the autoassociator's input so that they cannot be used to reconstruct the input.

Definition at line 122 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_layers_and_connections(), declareOptions(), greedyStep(), and train().
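In greedyStep(), masking works in three steps. It shuffles autoassociator_input_indices, which train() initialises to 0, 1, ..., size-1. It copies input_representation into masked_autoassociator_input. It then sets the first round(fraction_of_masked_inputs * size) shuffled components to 0. The sketch below reproduces that procedure with the standard library. std::shuffle and std::mt19937 stand in for PLearn's random_gen->shuffleElements(), and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <random>
#include <vector>

// Like the initialisation in train(): indices 0, 1, ..., n-1.
std::vector<int> identityIndices(std::size_t n)
{
    std::vector<int> idx(n);
    std::iota(idx.begin(), idx.end(), 0);
    return idx;
}

// Zeroes round(fraction * size) randomly chosen components of a copy of input.
std::vector<double> maskInput(const std::vector<double>& input,
                              std::vector<int>& indices, // reshuffled in place
                              double fraction, std::mt19937& rng)
{
    assert(indices.size() == input.size());
    assert(fraction >= 0.0 && fraction <= 1.0);
    std::shuffle(indices.begin(), indices.end(), rng);
    std::vector<double> masked = input; // copy of the clean representation
    const auto n_masked = static_cast<std::size_t>(
        std::lround(fraction * static_cast<double>(input.size())));
    for (std::size_t j = 0; j < n_masked; ++j)
        masked[static_cast<std::size_t>(indices[j])] = 0.0;
    return masked;
}
```

Only the encoder sees the masked vector. The reconstruction cost is still measured against the unmasked input_representation.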

The decrease constant of the learning rate used during the autoassociator gradient descent training.

When a hidden layer has finished its training, the learning rate is reset to its initial value.

Definition at line 86 of file TopDownAsymetricDeepNetwork.h.

Referenced by declareOptions(), and greedyStep().
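The decrease constants implement the decay lr_t = lr_0 / (1 + ct * t), where ct is greedy_decrease_ct, cd_decrease_ct or fine_tuning_decrease_ct. For greedy and CD training, t is the per-layer counter greedy_stages[i], which is why the rate restarts for each hidden layer. Fine-tuning uses the global stage instead. A minimal sketch (the function name is hypothetical):

```cpp
#include <cassert>

// Decayed learning rate lr_0 / (1 + ct * t). A zero decrease constant keeps
// the rate constant, as in greedyStep() and train().
double decayedLearningRate(double initial_lr, double decrease_ct, int t)
{
    assert(t >= 0);
    if (decrease_ct == 0.0)
        return initial_lr;
    return initial_lr / (1.0 + decrease_ct * static_cast<double>(t));
}
```

For instance, with greedy_learning_rate = 0.1 and greedy_decrease_ct = 0.01, the rate drops to 0.05 after 100 greedy stages of a layer. It starts again at 0.1 for a layer whose counter is 0.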

The learning rate used during the autoassociator gradient descent training.

Definition at line 81 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_layers_and_connections(), declareOptions(), greedyStep(), and train().

TVec<int> PLearn::TopDownAsymetricDeepNetwork::greedy_stages [protected]

Stages reached so far by each greedy (layer-wise) pre-training phase.

Definition at line 245 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::TopDownAsymetricDeepNetwork::input_representation [mutable, protected]

Representation of the current example at the layer being trained, as computed by computeRepresentation().

Definition at line 220 of file TopDownAsymetricDeepNetwork.h.

Referenced by fineTuningStep(), greedyStep(), makeDeepCopyFromShallowCopy(), and train().

The layers of units in the network for bottom-up inference.

Definition at line 100 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_(), build_layers_and_connections(), build_output_layer_and_cost(), computeRepresentation(), declareOptions(), fineTuningStep(), forget(), getTestCostNames(), greedyStep(), makeDeepCopyFromShallowCopy(), setLearningRate(), and train().

Vec PLearn::TopDownAsymetricDeepNetwork::masked_autoassociator_input [mutable, protected]

Masked (perturbed) input for the current layer.

Definition at line 223 of file TopDownAsymetricDeepNetwork.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Number of classes.

Definition at line 112 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_(), build_output_layer_and_cost(), declareOptions(), outputsize(), and train().

Number of layers.

Definition at line 127 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_(), build_layers_and_connections(), build_output_layer_and_cost(), declareOptions(), fineTuningStep(), forget(), greedyStep(), setLearningRate(), and train().

Vec PLearn::TopDownAsymetricDeepNetwork::neg_down_val [protected]

Negative down statistic.

Definition at line 233 of file TopDownAsymetricDeepNetwork.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::TopDownAsymetricDeepNetwork::neg_up_val [protected]

Negative up statistic.

Definition at line 235 of file TopDownAsymetricDeepNetwork.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

L1 penalty factor applied to the output weights.

Definition at line 115 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_output_layer_and_cost(), and declareOptions().

L2 penalty factor applied to the output weights.

Definition at line 118 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_output_layer_and_cost(), and declareOptions().

Vec PLearn::TopDownAsymetricDeepNetwork::pos_down_val [protected]

Positive down statistic.

Definition at line 229 of file TopDownAsymetricDeepNetwork.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::TopDownAsymetricDeepNetwork::pos_up_val [protected]

Positive up statistic.

Definition at line 231 of file TopDownAsymetricDeepNetwork.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::TopDownAsymetricDeepNetwork::reconstruction_activation_gradients [mutable, protected]

Reconstruction activation gradients.

Definition at line 214 of file TopDownAsymetricDeepNetwork.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

Vec PLearn::TopDownAsymetricDeepNetwork::reconstruction_activations [mutable, protected]

Reconstruction activations.

Definition at line 211 of file TopDownAsymetricDeepNetwork.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

The reconstruction weights of the autoassociators.

Definition at line 109 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_layers_and_connections(), declareOptions(), forget(), greedyStep(), and makeDeepCopyFromShallowCopy().
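The autoassociator branch of greedyStep() has three parts. It encodes with connections[index] and layers[index+1]. It decodes with reconstruction_connections[index] and top_down_layers[index]. It scores the reconstruction with top_down_layers[index]->fpropNLL() against the unmasked input_representation. The sketch below assumes binomial (sigmoid) units, for which that NLL is the cross-entropy. The layer types are options of the learner, so other choices give a different cost. Encoder and decoder weights are separate matrices here because reconstruction_connections is a separate member from connections. Function names and the weight layout are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>; // row r holds the weights into output unit r

static double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// out = sigmoid(M * in + bias)
static Vector affineSigmoid(const Matrix& M, const Vector& bias, const Vector& in)
{
    Vector out(bias.size());
    for (std::size_t r = 0; r < bias.size(); ++r) {
        double a = bias[r];
        for (std::size_t k = 0; k < in.size(); ++k)
            a += M[r][k] * in[k];
        out[r] = sigmoid(a);
    }
    return out;
}

// Cross-entropy reconstruction cost of an autoassociator with untied weights.
// encoder_input may be masked; target is the clean representation.
double reconstructionNLL(const Matrix& W, const Vector& c,  // encoder
                         const Matrix& R, const Vector& b,  // decoder (top-down)
                         const Vector& encoder_input, const Vector& target)
{
    assert(encoder_input.size() == target.size() && b.size() == target.size());
    const Vector h = affineSigmoid(W, c, encoder_input); // bottom-up expectation
    const Vector r = affineSigmoid(R, b, h);             // top-down reconstruction
    double nll = 0.0;
    for (std::size_t i = 0; i < target.size(); ++i)
        nll -= target[i] * std::log(r[i]) + (1.0 - target[i]) * std::log(1.0 - r[i]);
    return nll;
}
```

With all weights and biases at zero, every reconstructed component equals 0.5. The cost is therefore n log 2 for an n-dimensional input with components in [0, 1], whatever the encoder input.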

Vec PLearn::TopDownAsymetricDeepNetwork::reconstruction_expectation_gradients [mutable, protected]

Reconstruction expectation gradients.

Definition at line 217 of file TopDownAsymetricDeepNetwork.h.

Referenced by greedyStep(), makeDeepCopyFromShallowCopy(), and train().

The layers of units in the network for top-down inference.

Definition at line 103 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_layers_and_connections(), declareOptions(), forget(), greedyStep(), makeDeepCopyFromShallowCopy(), and setLearningRate().

Number of examples to use during each phase of greedy pre-training.

The number of fine-tuning steps is defined by nstages.

Definition at line 97 of file TopDownAsymetricDeepNetwork.h.

Referenced by build_(), declareOptions(), makeDeepCopyFromShallowCopy(), and train().

1.7.4
