|

PLearn 0.1

|

#include <GradientOptimizer.h>

Public Member Functions | |

| GradientOptimizer () | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual GradientOptimizer * | deepCopy (CopiesMap &copies) const |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy (this) into a deep copy by deep-copying every member that needs it. | |

| virtual void | build () |

| Post-constructor. | |

| virtual bool | optimizeN (VecStatsCollector &stats_coll) |

| Main optimization method, to be defined in subclasses. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

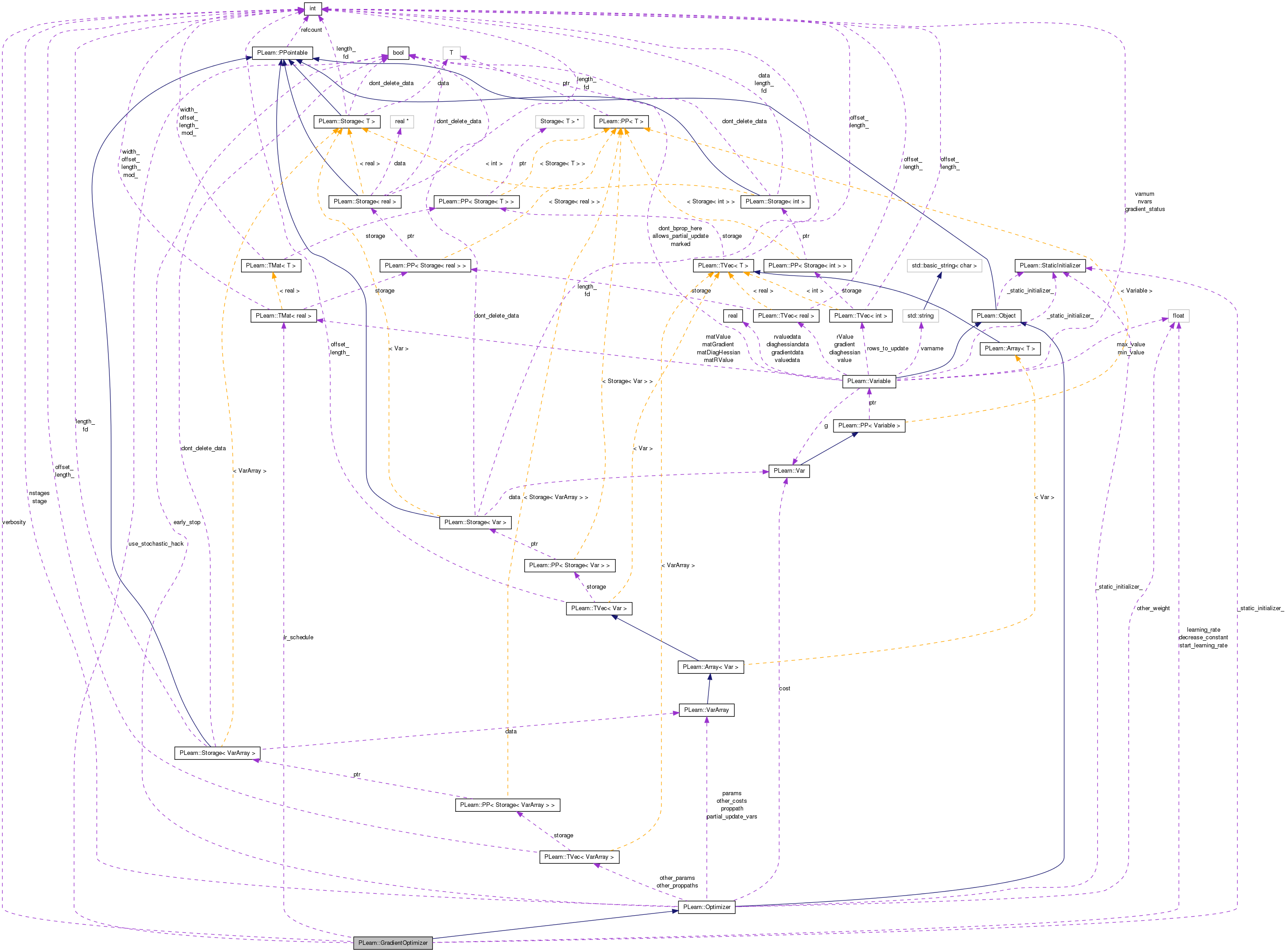

| real | learning_rate |

| Gradient-descent-specific parameters (directly modifiable by the user). | |

| real | start_learning_rate |

| real | decrease_constant |

| bool | use_stochastic_hack |

| Indication that a stochastic hack to accelerate stochastic gradient descent should be used. | |

| Mat | lr_schedule |

| int | verbosity |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declare options (data fields) for the class. | |

Private Types | |

| typedef Optimizer | inherited |

Private Member Functions | |

| void | build_ () |

| Object-specific post-constructor. | |

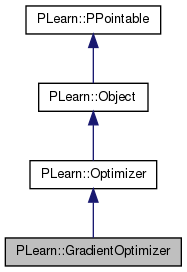

Definition at line 55 of file GradientOptimizer.h.

| typedef Optimizer PLearn::GradientOptimizer::inherited [private] |

Reimplemented from PLearn::Optimizer.

Definition at line 57 of file GradientOptimizer.h.

| PLearn::GradientOptimizer::GradientOptimizer | ( | ) |

Definition at line 72 of file GradientOptimizer.cc.

    : learning_rate(0.),
      start_learning_rate(1e-2),
      decrease_constant(0),
      use_stochastic_hack(false),
      verbosity(0)
    {}

| string PLearn::GradientOptimizer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::Optimizer.

Definition at line 70 of file GradientOptimizer.cc.

| OptionList & PLearn::GradientOptimizer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::Optimizer.

Definition at line 70 of file GradientOptimizer.cc.

| RemoteMethodMap & PLearn::GradientOptimizer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::Optimizer.

Definition at line 70 of file GradientOptimizer.cc.

| bool PLearn::GradientOptimizer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::Optimizer.

Definition at line 70 of file GradientOptimizer.cc.

| Object * PLearn::GradientOptimizer::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 70 of file GradientOptimizer.cc.

| void PLearn::GradientOptimizer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::Optimizer.

Definition at line 70 of file GradientOptimizer.cc.

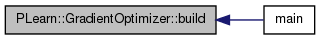

| virtual void PLearn::GradientOptimizer::build | ( | ) | [inline, virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after some option fields have been modified to change the "architecture" of the object.

Reimplemented from PLearn::Optimizer.

Definition at line 105 of file GradientOptimizer.h.

Referenced by main().

    {
        inherited::build();
        build_();
    }

| void PLearn::GradientOptimizer::build_ | ( | ) | [inline, private] |

Object-specific post-constructor.

This method should be redefined in subclasses and do the actual building of the object according to previously set option fields. Constructors can just set option fields, and then call build_. This method is NOT virtual, and will typically be called only from three places: a constructor, the public virtual build() method, and possibly the public virtual read method (which calls its parent's read). build_() can assume that its parent's build_() has already been called.

Reimplemented from PLearn::Optimizer.

Definition at line 111 of file GradientOptimizer.h.

{}
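
The build()/build_() convention described above can be illustrated without PLearn. The following is a minimal sketch, not PLearn code: `BaseSketch`, `DerivedSketch`, `n_units`, `weights` and `log` are hypothetical names introduced for illustration. Only the calling pattern (a public virtual build() that calls inherited::build() and then the class's own non-virtual build_()) is taken from the text.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for a PLearn-style base class (not the real Optimizer).
class BaseSketch {
public:
    virtual ~BaseSketch() {}
    // Public virtual post-constructor: the root class only runs its own build_().
    virtual void build() { build_(); }
    std::vector<std::string> log;  // records the order in which build_() methods run
private:
    // Non-virtual, object-specific part of the build.
    void build_() { log.push_back("BaseSketch::build_"); }
};

class DerivedSketch : public BaseSketch {
    typedef BaseSketch inherited;
public:
    int n_units = 0;               // "option field", set by the user before build()
    std::vector<double> weights;   // state derived from the option fields
    virtual void build() {
        inherited::build();        // parents first...
        build_();                  // ...then this class
    }
private:
    // Can assume the parent's build_() has already been called.
    void build_() {
        weights.assign(static_cast<std::size_t>(n_units), 0.0);
        log.push_back("DerivedSketch::build_");
    }
};
```

Because build_() only reads option fields and rebuilds derived state, calling build() again after changing `n_units` resizes `weights` accordingly, which is the "callable again at later times" property mentioned for build().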

| string PLearn::GradientOptimizer::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 70 of file GradientOptimizer.cc.

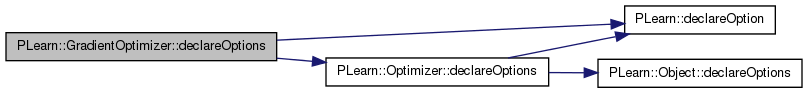

| void PLearn::GradientOptimizer::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declare options (data fields) for the class.

Redefine this in subclasses: call declareOption(...) for each option, and then call inherited::declareOptions(ol). Call the inherited method AT THE END so that the options are listed in a consistent order (from most recently defined to least recently defined).

    void MyDerivedClass::declareOptions(OptionList& ol)
    {
        declareOption(ol, "inputsize", &MyDerivedClass::inputsize_,
                      OptionBase::buildoption,
                      "The size of the input; it must be provided");
        declareOption(ol, "weights", &MyDerivedClass::weights,
                      OptionBase::learntoption,
                      "The learned model weights");
        inherited::declareOptions(ol);
    }

| ol | List of options that is progressively being constructed for the current class. |

Reimplemented from PLearn::Optimizer.

Definition at line 81 of file GradientOptimizer.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::Optimizer::declareOptions(), decrease_constant, learning_rate, PLearn::OptionBase::learntoption, lr_schedule, start_learning_rate, use_stochastic_hack, and verbosity.

    {
        declareOption(
            ol, "start_learning_rate", &GradientOptimizer::start_learning_rate,
            OptionBase::buildoption,
            "The initial learning rate\n");
        declareOption(
            ol, "learning_rate", &GradientOptimizer::learning_rate,
            OptionBase::learntoption,
            "The current learning rate\n");
        declareOption(
            ol, "decrease_constant", &GradientOptimizer::decrease_constant,
            OptionBase::buildoption,
            "The learning rate decrease constant \n");
        declareOption(
            ol, "lr_schedule", &GradientOptimizer::lr_schedule,
            OptionBase::buildoption,
            "Fixed schedule instead of decrease_constant. This matrix has 2 columns: iteration_threshold \n"
            "and learning_rate_factor. As soon as the iteration number goes above the iteration_threshold,\n"
            "the corresponding learning_rate_factor is applied (multiplied) to the start_learning_rate to\n"
            "obtain the learning_rate.\n");
        declareOption(
            ol, "use_stochastic_hack", &GradientOptimizer::use_stochastic_hack,
            OptionBase::buildoption,
            "Indication that a stochastic hack to accelerate stochastic gradient descent should be used.\n"
            "Be aware that it will not take into account minimum and maximum values in variables.\n"
            );
        declareOption(
            ol, "verbosity", &GradientOptimizer::verbosity,
            OptionBase::buildoption,
            "Controls the amount of output. If zero, does not print anything.\n"
            "If 'verbosity'=V, print the current cost and learning rate if\n"
            "\n"
            " stage % V == 0\n"
            "\n"
            "i.e. every V stages. (Default=0)\n");
        inherited::declareOptions(ol);
    }

| static const PPath& PLearn::GradientOptimizer::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::Optimizer.

Definition at line 100 of file GradientOptimizer.h.

| GradientOptimizer * PLearn::GradientOptimizer::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::Optimizer.

Definition at line 70 of file GradientOptimizer.cc.

| OptionList & PLearn::GradientOptimizer::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 70 of file GradientOptimizer.cc.

| OptionMap & PLearn::GradientOptimizer::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 70 of file GradientOptimizer.cc.

| RemoteMethodMap & PLearn::GradientOptimizer::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 70 of file GradientOptimizer.cc.

| virtual void PLearn::GradientOptimizer::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [inline, virtual] |

Transforms a shallow copy (this) into a deep copy by deep-copying every member that needs it.

This needs to be overridden by every class that adds "complex" data members to the class, such as Vec, Mat, PP<Something>, etc. Typical implementation:

    void CLASS_OF_THIS::makeDeepCopyFromShallowCopy(CopiesMap& copies)
    {
        inherited::makeDeepCopyFromShallowCopy(copies);
        deepCopyField(complex_data_member1, copies);
        deepCopyField(complex_data_member2, copies);
        ...
    }

| copies | A map used by the deep-copy mechanism to keep track of already-copied objects. |

Reimplemented from PLearn::Optimizer.

Definition at line 102 of file GradientOptimizer.h.

{ inherited::makeDeepCopyFromShallowCopy(copies); }
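
To show why the deep-copy mechanism threads a map of already-copied objects through every call, here is a minimal self-contained sketch. It does not use PLearn's actual CopiesMap or deepCopyField: `BufferSketch`, `CopiesMapSketch`, `deepCopyFieldSketch` and `ModelSketch` are hypothetical names, and `std::shared_ptr` stands in for PLearn's reference-counted pointers. The idea illustrated is that two fields sharing one object before the copy should share one (new) object after it.

```cpp
#include <map>
#include <memory>
#include <vector>

struct BufferSketch { std::vector<double> data; };

// Hypothetical stand-in for a copies map: address of the original -> its copy.
typedef std::map<const void*, std::shared_ptr<void> > CopiesMapSketch;

// Deep-copy one field, reusing the copy if this object was already copied.
std::shared_ptr<BufferSketch> deepCopyFieldSketch(
    const std::shared_ptr<BufferSketch>& src, CopiesMapSketch& copies)
{
    if (!src)
        return nullptr;
    CopiesMapSketch::iterator it = copies.find(src.get());
    if (it != copies.end())
        return std::static_pointer_cast<BufferSketch>(it->second);
    std::shared_ptr<BufferSketch> copy = std::make_shared<BufferSketch>(*src);
    copies[src.get()] = copy;
    return copy;
}

struct ModelSketch {
    std::shared_ptr<BufferSketch> a, b;  // may point to the same buffer

    ModelSketch deepCopy(CopiesMapSketch& copies) const {
        ModelSketch m(*this);            // shallow copy: pointers still shared
        m.a = deepCopyFieldSketch(a, copies);  // then deep-copy each complex member
        m.b = deepCopyFieldSketch(b, copies);
        return m;
    }
};
```

Without the map, `a` and `b` would each get a separate copy and the aliasing present in the original object would be silently lost.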

| bool PLearn::GradientOptimizer::optimizeN | ( | VecStatsCollector & | stats_coll | ) | [virtual] |

Main optimization method, to be defined in subclasses.

Returns true iff no further optimization is possible. This implementation runs nstages stages and always returns false.

Implements PLearn::Optimizer.

Definition at line 129 of file GradientOptimizer.cc.

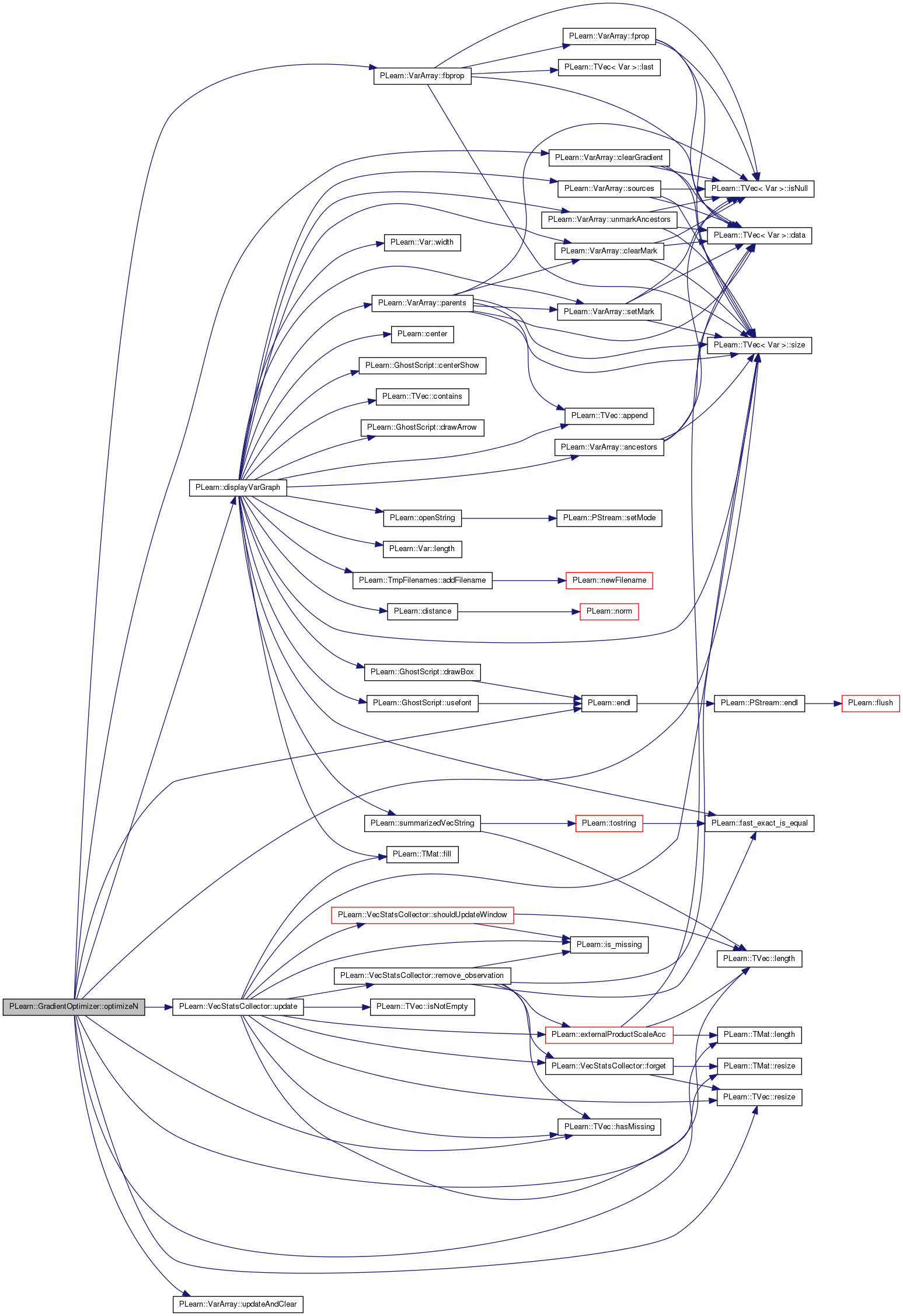

References PLearn::VarArray::clearGradient(), PLearn::Optimizer::cost, decrease_constant, PLearn::displayVarGraph(), PLearn::endl(), PLearn::VarArray::fbprop(), PLearn::TVec< T >::hasMissing(), i, j, learning_rate, PLearn::TMat< T >::length(), PLearn::TVec< T >::length(), lr_schedule, n, PLearn::SumOfVariable::nsamples, PLearn::Optimizer::nstages, PLearn::Optimizer::other_costs, PLearn::Optimizer::other_params, PLearn::Optimizer::other_proppaths, PLearn::Optimizer::other_weight, PLearn::Optimizer::params, PLearn::Optimizer::partial_update_vars, PLERROR, PLearn::Optimizer::proppath, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::Optimizer::stage, start_learning_rate, PLearn::VecStatsCollector::update(), PLearn::VarArray::updateAndClear(), use_stochastic_hack, and verbosity.

Referenced by main().

    {
        // Big hack for the special case of stochastic gradient, to avoid doing an
        // explicit update (temporarily change the gradient fields of the
        // parameters to point to the parameters themselves, so that gradients are
        // "accumulated" directly in the parameters, thus updating them!
        SumOfVariable* sumofvar = dynamic_cast<SumOfVariable*>((Variable*)cost);
        Array<Mat> oldgradientlocations;
        bool stochastic_hack = use_stochastic_hack && sumofvar!=0 && sumofvar->nsamples==1;
        //stochastic_hack=false;
        if(stochastic_hack)
        {
            // make the gradient and values fields of parameters point to the same
            // place, so that when the descendants of the parameter Var's do a
            // bprop this automatically increments the parameters (by the right
            // amount since we set the cost->gradient to -learning_rate).
            int n = params.size();
            oldgradientlocations.resize(n);
            for(int i=0; i<n; i++)
                oldgradientlocations[i] = params[i]->defineGradientLocation(params[i]->matValue);
        }
        else
            params.clearGradient();
        if(other_costs.length() != 0)
        {
            for(int i=0; i<other_params.length(); i++)
                other_params[i].clearGradient();
        }
        // Big hack for the special case of stochastic gradient, to avoid doing an explicit update
        // (temporarily change the gradient fields of the parameters to point to the parameters themselves,
        // so that gradients are "accumulated" directly in the parameters, thus updating them!
        int stage_max = stage + nstages; // the stage to reach
        int current_schedule = 0;
        int n_schedules = lr_schedule.length();
        if (n_schedules>0)
            while (current_schedule+1 < n_schedules && stage > lr_schedule(current_schedule,0))
                current_schedule++;
        while (stage < stage_max)
        {
            if (n_schedules>0)
            {
                while (current_schedule+1 < n_schedules && stage > lr_schedule(current_schedule,0))
                    current_schedule++;
                learning_rate = start_learning_rate * lr_schedule(current_schedule,1);
            }
            else
                learning_rate = start_learning_rate/(1.0+decrease_constant*stage);
            if(other_costs.length() != 0)
            {
                for(int i=0; i<other_costs.length(); i++)
                {
                    other_proppaths[i].clearGradient();
                    other_costs[i]->gradient[0] = -learning_rate*other_weight;
                    static bool display_var_graph_before_fbprop=false;
                    if (display_var_graph_before_fbprop)
                        displayVarGraph(other_proppaths[i], true, 333);
                    //displayVarGraph(other_proppaths[i], true, 333);
                    other_proppaths[i].fbprop();
                    //displayVarGraph(other_proppaths[i], true, 333);
    #ifdef BOUNDCHECK
                    int np = other_params[i].size();
                    for(int j=0; j<np; j++)
                        if (other_params[i][j]->value.hasMissing())
                            PLERROR("parameter updated with NaN");
    #endif
                    static bool display_var_graph=false;
                    if (display_var_graph)
                        displayVarGraph(proppath, true, 333);
                    // // Debugging of negative NLL bug...
                    // if (cost->value[0] <= 0) {
                    //     displayVarGraph(proppath, true, 333);
                    //     cerr << "Negative NLL cost vector = " << cost << endl;
                    //     PLERROR("Negative NLL encountered in optimization");
                    // }
                    // set params += -learning_rate * params.gradient
                    other_params[i].updateAndClear();
                }
            }
            proppath.clearGradient();
            cost->gradient[0] = -learning_rate;
            static bool display_var_graph_before_fbprop=false;
            if (display_var_graph_before_fbprop)
                displayVarGraph(proppath, true, 333);
            proppath.fbprop();
    #ifdef BOUNDCHECK
            int np = params.size();
            for(int i=0; i<np; i++)
                if (params[i]->value.hasMissing())
                    PLERROR("parameter updated with NaN");
    #endif
            static bool display_var_graph=false;
            if (display_var_graph)
                displayVarGraph(proppath, true, 333);
            // // Debugging of negative NLL bug...
            // if (cost->value[0] <= 0) {
            //     displayVarGraph(proppath, true, 333);
            //     cerr << "Negative NLL cost vector = " << cost << endl;
            //     PLERROR("Negative NLL encountered in optimization");
            // }
            // set params += -learning_rate * params.gradient
            if(!stochastic_hack)
                params.updateAndClear();
            else
                if(partial_update_vars.length() != 0)
                    for(int i=0; i<partial_update_vars.length(); i++)
                        partial_update_vars[i]->clearRowsToUpdate();
            if (verbosity > 0 && stage % verbosity == 0) {
                MODULE_LOG << "Stage " << stage << ": " << cost->value
                           << "\tlr=" << learning_rate
                           << endl;
            }
            stats_coll.update(cost->value);
            ++stage;
        }
        if(stochastic_hack) // restore the gradients as they previously were...
        {
            int n = params.size();
            for(int i=0; i<n; i++)
                params[i]->defineGradientLocation(oldgradientlocations[i]);
        }
        return false;
    }

| StaticInitializer PLearn::GradientOptimizer::_static_initializer_ [static] |

Reimplemented from PLearn::Optimizer.

Definition at line 100 of file GradientOptimizer.h.

| real PLearn::GradientOptimizer::decrease_constant |

Definition at line 67 of file GradientOptimizer.h.

Referenced by declareOptions(), and optimizeN().

| real PLearn::GradientOptimizer::learning_rate |

Gradient-descent-specific parameters (directly modifiable by the user).

Definition at line 63 of file GradientOptimizer.h.

Referenced by declareOptions(), and optimizeN().

| Mat PLearn::GradientOptimizer::lr_schedule |

Definition at line 76 of file GradientOptimizer.h.

Referenced by declareOptions(), and optimizeN().

| real PLearn::GradientOptimizer::start_learning_rate |

Definition at line 66 of file GradientOptimizer.h.

Referenced by declareOptions(), main(), and optimizeN().

| bool PLearn::GradientOptimizer::use_stochastic_hack |

Indication that a stochastic hack to accelerate stochastic gradient descent should be used.

Definition at line 70 of file GradientOptimizer.h.

Referenced by declareOptions(), and optimizeN().

| int PLearn::GradientOptimizer::verbosity |

Definition at line 78 of file GradientOptimizer.h.

Referenced by declareOptions(), and optimizeN().

1.7.4
