PLearn 0.1

Model made of RBMs linked through time.

#include <DynamicallyLinkedRBMsModel.h>

Public Member Functions | |

| DynamicallyLinkedRBMsModel () | |

| Default constructor. | |

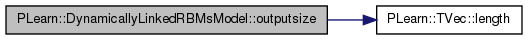

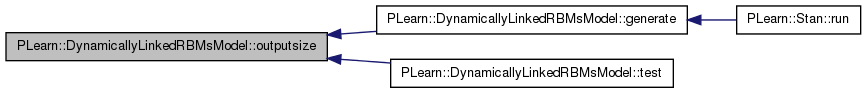

| virtual int | outputsize () const |

| Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and set options). | |

| virtual void | forget () |

| (Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!). | |

| virtual void | train () |

| The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process. | |

| void | setLearningRate (real the_learning_rate) |

| Sets the learning rate of all layers and connections. | |

| virtual void | computeOutput (const Vec &input, Vec &output) const |

| Computes the output from the input. | |

| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const |

| Computes the costs from already computed output. | |

| virtual TVec< std::string > | getTestCostNames () const |

| Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). | |

| void | generate (int t, int n) |

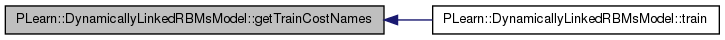

| virtual TVec< std::string > | getTrainCostNames () const |

| Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. | |

| void | partition (TVec< double > part, TVec< double > periode, TVec< double > vel) const |

| Use the partition. | |

| void | clamp_units (const Vec layer_vector, PP< RBMLayer > layer, TVec< int > symbol_sizes) const |

| Clamps the layer units based on a layer vector. | |

| void | clamp_units (const Vec layer_vector, PP< RBMLayer > layer, TVec< int > symbol_sizes, const Vec original_mask, Vec &formated_mask) const |

| Clamps the layer units based on a layer vector and provides the associated mask in the correct format. | |

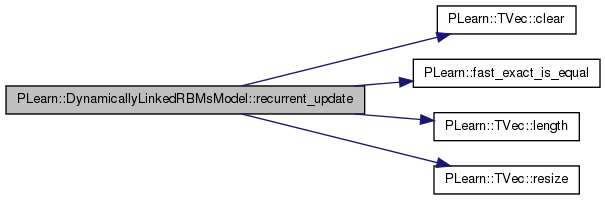

| void | recurrent_update () |

| Updates both the RBM parameters and the dynamic connections in the recurrent tuning phase, after the visible units have been clamped. | |

| virtual void | test (VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs=0, VMat testcosts=0) const |

| Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts. | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual DynamicallyLinkedRBMsModel * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Finishes building the object by calling inherited::build() followed by build_(). | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| real | recurrent_net_learning_rate |

| The learning rate used during the recurrent phase. | |

| Vec | target_layers_weights |

| The training weights of each target layer. | |

| bool | use_target_layers_masks |

| Whether a mask indicating which targets to predict is present in the input part of the VMatrix dataset. | |

| real | end_of_sequence_symbol |

| Value of the first input component that marks an end-of-sequence delimiter row. | |

| PP< RBMLayer > | input_layer |

| The input layer of the model. | |

| TVec< PP< RBMLayer > > | target_layers |

| The target layers of the model. | |

| PP< RBMLayer > | hidden_layer |

| The hidden layer of the model. | |

| PP< RBMLayer > | hidden_layer2 |

| The second hidden layer of the model (optional). | |

| PP< RBMConnection > | dynamic_connections |

| The RBMConnection linking the first hidden layer to itself across consecutive time steps (optional). | |

| PP< RBMConnection > | hidden_connections |

| The RBMConnection between the first and second hidden layers (optional). | |

| PP< RBMConnection > | input_connections |

| The RBMConnection from input_layer to hidden_layer. | |

| TVec< PP< RBMConnection > > | target_connections |

| The RBMConnections from the last hidden layer (hidden_layer2 if present, otherwise hidden_layer) to each target layer. | |

| TVec< int > | target_layers_n_of_target_elements |

| Number of elements in the target part of a VMatrix associated with each target layer. | |

| TVec< int > | input_symbol_sizes |

| Number of symbols for each input field of train_set (-1 for real-valued fields). | |

| TVec< TVec< int > > | target_symbol_sizes |

| Number of symbols for each target field of train_set, grouped by target layer (-1 for real-valued fields). | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| AutoVMatrix * | data |

| Stores external data. | |

| Vec | bias_gradient |

| Stores bias gradient. | |

| Vec | visi_bias_gradient |

| Stores the visible bias gradient. | |

| Vec | hidden_gradient |

| Stores hidden gradient of dynamic connections. | |

| Vec | hidden_temporal_gradient |

| Stores hidden gradient of dynamic connections coming from time t+1. | |

| TVec< Vec > | hidden_list |

| List of hidden layer values, one per time step. | |

| TVec< Vec > | hidden_act_no_bias_list |

| TVec< Vec > | hidden2_list |

| List of second hidden layer values, one per time step. | |

| TVec< Vec > | hidden2_act_no_bias_list |

| TVec< TVec< Vec > > | target_prediction_list |

| List of target prediction values, per target layer and time step. | |

| TVec< TVec< Vec > > | target_prediction_act_no_bias_list |

| TVec< Vec > | input_list |

| List of input values. | |

| TVec< TVec< Vec > > | targets_list |

| List of target values. | |

| Mat | nll_list |

| Matrix of the NLL of each sample in a sequence (rows) for each target layer (columns). | |

| TVec< TVec< Vec > > | masks_list |

| List of all targets' masks. | |

| Vec | dynamic_act_no_bias_contribution |

| Contribution of dynamic weights to hidden layer activation. | |

Private Types | |

| typedef PLearner | inherited |

Private Member Functions | |

| void | build_ () |

| This does the actual building. | |

Model made of RBMs linked through time.

Definition at line 61 of file DynamicallyLinkedRBMsModel.h.

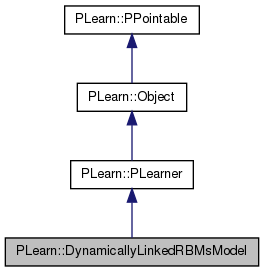

typedef PLearner PLearn::DynamicallyLinkedRBMsModel::inherited [private] |

Reimplemented from PLearn::PLearner.

Definition at line 63 of file DynamicallyLinkedRBMsModel.h.

| PLearn::DynamicallyLinkedRBMsModel::DynamicallyLinkedRBMsModel | ( | ) |

Default constructor.

Definition at line 74 of file DynamicallyLinkedRBMsModel.cc.

References PLearn::PLearner::random_gen.

:

//rbm_learning_rate( 0.01 ),

recurrent_net_learning_rate( 0.01),

use_target_layers_masks( false ),

end_of_sequence_symbol( -1000 )

//rbm_nstages( 0 ),

{

random_gen = new PRandom();

}

| string PLearn::DynamicallyLinkedRBMsModel::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

| OptionList & PLearn::DynamicallyLinkedRBMsModel::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

| RemoteMethodMap & PLearn::DynamicallyLinkedRBMsModel::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

| bool PLearn::DynamicallyLinkedRBMsModel::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

| Object * PLearn::DynamicallyLinkedRBMsModel::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

| void PLearn::DynamicallyLinkedRBMsModel::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

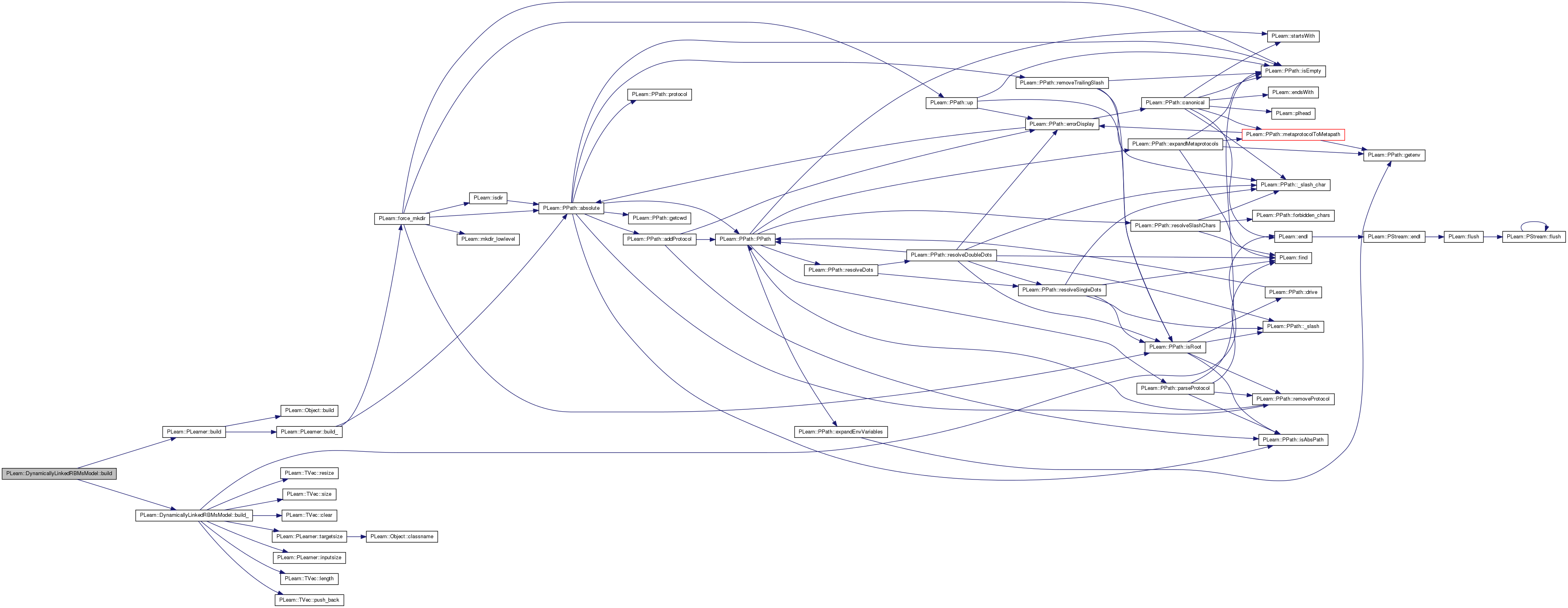

| void PLearn::DynamicallyLinkedRBMsModel::build | ( | ) | [virtual] |

Finishes building the object by calling inherited::build() followed by build_().

Reimplemented from PLearn::PLearner.

Definition at line 376 of file DynamicallyLinkedRBMsModel.cc.

References PLearn::PLearner::build(), and build_().

{

inherited::build();

build_();

}
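build() follows the usual PLearn two-phase pattern: the public virtual build() first lets the parent class build itself, then calls the class's own private build_(). The sketch below illustrates only that call order, using hypothetical stand-in classes (BaseLearner and DerivedModel are not PLearn types); the real PLearner machinery does considerably more.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for PLearn::PLearner.
class BaseLearner {
public:
    virtual ~BaseLearner() = default;
    // Public and virtual: (re)builds the object from its current options.
    virtual void build() { build_(); }
    std::string log;  // records which build_() ran, in order
private:
    // Private and non-virtual: builds only what this class declares.
    void build_() { log += "base;"; }
};

// Hypothetical stand-in for DynamicallyLinkedRBMsModel.
class DerivedModel : public BaseLearner {
    typedef BaseLearner inherited;
public:
    void build() override {
        inherited::build();  // the parent builds its own part first
        build_();            // then this class builds its part
    }
private:
    void build_() {
        // As the comment in build_() says, the parent's build_() has
        // already been called at this point.
        assert(!log.empty());
        log += "derived;";
    }
};
```

Keeping build_() private and non-virtual means each class builds exactly its own members once, whichever subclass triggers the rebuild.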

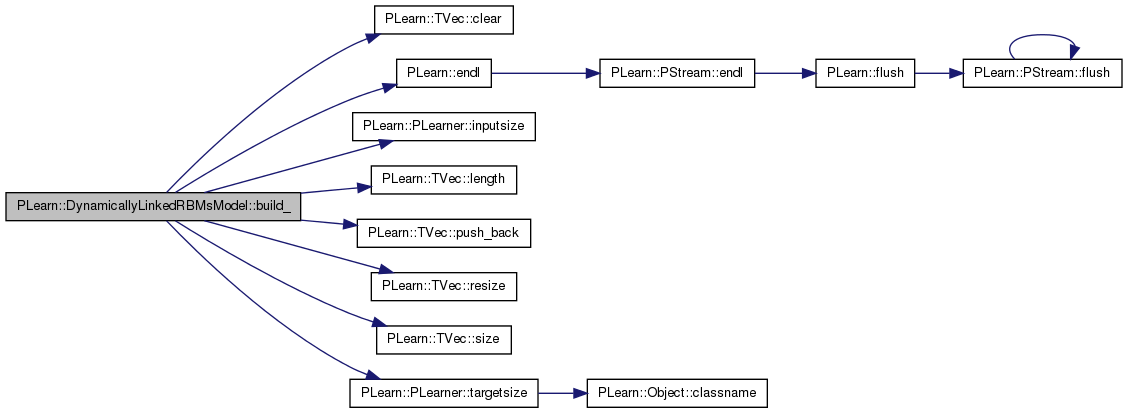

| void PLearn::DynamicallyLinkedRBMsModel::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 190 of file DynamicallyLinkedRBMsModel.cc.

References PLearn::TVec< T >::clear(), dynamic_connections, PLearn::endl(), hidden_connections, hidden_layer, hidden_layer2, i, input_connections, input_layer, input_symbol_sizes, PLearn::PLearner::inputsize(), PLearn::TVec< T >::length(), PLASSERT, PLERROR, PLearn::TVec< T >::push_back(), PLearn::PLearner::random_gen, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), target_connections, target_layers, target_layers_n_of_target_elements, target_layers_weights, target_symbol_sizes, PLearn::PLearner::targetsize(), PLearn::PLearner::train_set, and use_target_layers_masks.

Referenced by build().

{

// ### This method should do the real building of the object,

// ### according to set 'options', in *any* situation.

// ### Typical situations include:

// ### - Initial building of an object from a few user-specified options

// ### - Building of a "reloaded" object: i.e. from the complete set of

// ### all serialised options.

// ### - Updating or "re-building" of an object after a few "tuning"

// ### options have been modified.

// ### You should assume that the parent class' build_() has already been

// ### called.

MODULE_LOG << "build_() called" << endl;

if(train_set)

{

PLASSERT( target_layers_weights.length() == target_layers.length() );

PLASSERT( target_connections.length() == target_layers.length() );

PLASSERT( target_layers.length() > 0 );

PLASSERT( input_layer );

PLASSERT( hidden_layer );

PLASSERT( input_connections );

// Parsing symbols in input

int input_layer_size = 0;

input_symbol_sizes.resize(0);

PP<Dictionary> dict;

int inputsize_without_masks = inputsize()

- ( use_target_layers_masks ? targetsize() : 0 );

for(int i=0; i<inputsize_without_masks; i++)

{

dict = train_set->getDictionary(i);

if(dict)

{

if( dict->size() == 0 )

PLERROR("DynamicallyLinkedRBMsModel::build_(): dictionary "

"of field %d is empty", i);

input_symbol_sizes.push_back(dict->size());

// Adjust size to include one-hot vector

input_layer_size += dict->size();

}

else

{

input_symbol_sizes.push_back(-1);

input_layer_size++;

}

}

if( input_layer->size != input_layer_size )

PLERROR("DynamicallyLinkedRBMsModel::build_(): input_layer->size %d "

"should be %d", input_layer->size, input_layer_size);

// Parsing symbols in target

int tar_layer = 0;

int tar_layer_size = 0;

target_symbol_sizes.resize(target_layers.length());

for( int tar_layer=0; tar_layer<target_layers.length();

tar_layer++ )

target_symbol_sizes[tar_layer].resize(0);

target_layers_n_of_target_elements.resize( targetsize() );

target_layers_n_of_target_elements.clear();

for( int tar=0; tar<targetsize(); tar++)

{

if( tar_layer >= target_layers.length() )

PLERROR("DynamicallyLinkedRBMsModel::build_(): target layers "

"do not cover all targets.");

dict = train_set->getDictionary(tar+inputsize());

if(dict)

{

if( use_target_layers_masks )

PLERROR("DynamicallyLinkedRBMsModel::build_(): masks for "

"symbolic targets are not implemented.");

if( dict->size() == 0 )

PLERROR("DynamicallyLinkedRBMsModel::build_(): dictionary "

"of field %d is empty", tar);

target_symbol_sizes[tar_layer].push_back(dict->size());

target_layers_n_of_target_elements[tar_layer]++;

tar_layer_size += dict->size();

}

else

{

target_symbol_sizes[tar_layer].push_back(-1);

target_layers_n_of_target_elements[tar_layer]++;

tar_layer_size++;

}

if( target_layers[tar_layer]->size == tar_layer_size )

{

tar_layer++;

tar_layer_size = 0;

}

}

if( tar_layer != target_layers.length() )

PLERROR("DynamicallyLinkedRBMsModel::build_(): target layers "

"do not cover all targets.");

// Building weights and layers

if( !input_layer->random_gen )

{

input_layer->random_gen = random_gen;

input_layer->forget();

}

if( !hidden_layer->random_gen )

{

hidden_layer->random_gen = random_gen;

hidden_layer->forget();

}

input_connections->down_size = input_layer->size;

input_connections->up_size = hidden_layer->size;

if( !input_connections->random_gen )

{

input_connections->random_gen = random_gen;

input_connections->forget();

}

input_connections->build();

if( dynamic_connections )

{

dynamic_connections->down_size = hidden_layer->size;

dynamic_connections->up_size = hidden_layer->size;

if( !dynamic_connections->random_gen )

{

dynamic_connections->random_gen = random_gen;

dynamic_connections->forget();

}

dynamic_connections->build();

}

if( hidden_layer2 )

{

if( !hidden_layer2->random_gen )

{

hidden_layer2->random_gen = random_gen;

hidden_layer2->forget();

}

PLASSERT( hidden_connections );

hidden_connections->down_size = hidden_layer->size;

hidden_connections->up_size = hidden_layer2->size;

if( !hidden_connections->random_gen )

{

hidden_connections->random_gen = random_gen;

hidden_connections->forget();

}

hidden_connections->build();

}

for( int tar_layer = 0; tar_layer < target_layers.length(); tar_layer++ )

{

PLASSERT( target_layers[tar_layer] );

PLASSERT( target_connections[tar_layer] );

if( !target_layers[tar_layer]->random_gen )

{

target_layers[tar_layer]->random_gen = random_gen;

target_layers[tar_layer]->forget();

}

if( hidden_layer2 )

target_connections[tar_layer]->down_size = hidden_layer2->size;

else

target_connections[tar_layer]->down_size = hidden_layer->size;

target_connections[tar_layer]->up_size = target_layers[tar_layer]->size;

if( !target_connections[tar_layer]->random_gen )

{

target_connections[tar_layer]->random_gen = random_gen;

target_connections[tar_layer]->forget();

}

target_connections[tar_layer]->build();

}

}

}
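The size bookkeeping in build_() can be sketched on plain integers. In this hypothetical sketch, each field is described by its dictionary size, with -1 standing for a real-valued field as in input_symbol_sizes and target_symbol_sizes; layerSizeFromFields() and groupTargets() are illustrative names, not PLearn functions. The sketch's out-of-range check uses >=, because an index equal to the number of layers already points past the last target layer.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Units needed by a layer for the given fields: one unit per real-valued
// field (size -1), one one-hot unit per symbol for a symbolic field.
int layerSizeFromFields(const std::vector<int>& symbol_sizes) {
    int size = 0;
    for (int ss : symbol_sizes) {
        if (ss == 0)
            throw std::runtime_error("dictionary of a field is empty");
        size += (ss < 0) ? 1 : ss;
    }
    return size;
}

// Assigns consecutive target fields to target layers: a layer is complete
// once the units of its fields add up to its size. Returns, per layer, the
// number of target fields it covers (cf. target_layers_n_of_target_elements).
std::vector<int> groupTargets(const std::vector<int>& target_symbol_sizes,
                              const std::vector<int>& layer_sizes) {
    assert(!layer_sizes.empty());
    std::vector<int> n_elements(layer_sizes.size(), 0);
    std::size_t layer = 0;
    int units = 0;
    for (int ss : target_symbol_sizes) {
        // An index equal to the number of layers is already out of range.
        if (layer >= layer_sizes.size())
            throw std::runtime_error("target layers do not cover all targets");
        n_elements[layer]++;
        units += (ss < 0) ? 1 : ss;
        if (units == layer_sizes[layer]) {
            ++layer;
            units = 0;
        }
    }
    if (layer != layer_sizes.size())
        throw std::runtime_error("target layers do not cover all targets");
    return n_elements;
}
```

For example, a symbolic target with 3 symbols followed by two real targets fills target layers of sizes 3 and 2 with 1 and 2 fields respectively.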

| void PLearn::DynamicallyLinkedRBMsModel::clamp_units | ( | const Vec | layer_vector, |

| PP< RBMLayer > | layer, | ||

| TVec< int > | symbol_sizes | ||

| ) | const |

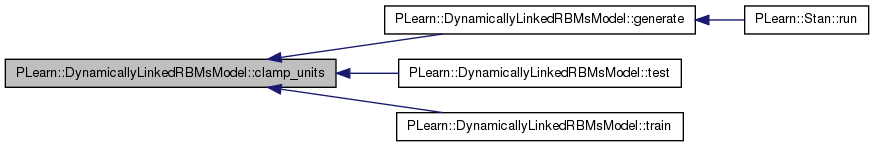

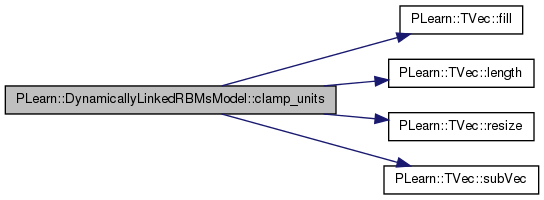

Clamps the layer units based on a layer vector.

Definition at line 767 of file DynamicallyLinkedRBMsModel.cc.

References i, and PLearn::TVec< T >::length().

Referenced by generate(), test(), and train().

{

int it = 0;

int ss = -1;

for(int i=0; i<layer_vector.length(); i++)

{

ss = symbol_sizes[i];

// If input is a real ...

if(ss < 0)

{

layer->expectation[it++] = layer_vector[i];

}

else // ... or a symbol

{

// Convert to one-hot vector

layer->expectation.subVec(it,ss).clear();

layer->expectation[it+(int)layer_vector[i]] = 1;

it += ss;

}

}

layer->setExpectation( layer->expectation );

}
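clamp_units() copies real-valued fields unchanged and expands each symbolic field into a one-hot block whose width is the field's symbol count. A minimal standalone sketch of that encoding, assuming std::vector<double> in place of PLearn's Vec and returning the expectation vector instead of writing it into an RBMLayer (clampUnits is a hypothetical name):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// symbol_sizes[i] < 0 marks a real-valued field; otherwise field i holds a
// symbol index in [0, symbol_sizes[i]) that is expanded to a one-hot block.
std::vector<double> clampUnits(const std::vector<double>& layer_vector,
                               const std::vector<int>& symbol_sizes,
                               int layer_size) {
    assert(layer_vector.size() == symbol_sizes.size());
    std::vector<double> expectation(layer_size, 0.0);  // blocks start cleared
    int it = 0;
    for (std::size_t i = 0; i < layer_vector.size(); ++i) {
        int ss = symbol_sizes[i];
        if (ss < 0) {
            assert(it < layer_size);
            expectation[it++] = layer_vector[i];  // real: copy as-is
        } else {
            int symbol = static_cast<int>(layer_vector[i]);
            assert(symbol >= 0 && symbol < ss && it + ss <= layer_size);
            expectation[it + symbol] = 1.0;       // symbol: one-hot
            it += ss;
        }
    }
    assert(it == layer_size);
    return expectation;
}
```

For example, a real field 0.5 followed by symbol 2 out of 3 yields the expectation (0.5, 0, 0, 1).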

| void PLearn::DynamicallyLinkedRBMsModel::clamp_units | ( | const Vec | layer_vector, |

| PP< RBMLayer > | layer, | ||

| TVec< int > | symbol_sizes, | ||

| const Vec | original_mask, | ||

| Vec & | formated_mask | ||

| ) | const |

Clamps the layer units based on a layer vector and provides the associated mask in the correct format.

Definition at line 792 of file DynamicallyLinkedRBMsModel.cc.

References PLearn::TVec< T >::fill(), i, PLearn::TVec< T >::length(), PLASSERT, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::subVec().

{

int it = 0;

int ss = -1;

PLASSERT( original_mask.length() == layer_vector.length() );

formated_mask.resize(layer->size);

for(int i=0; i<layer_vector.length(); i++)

{

ss = symbol_sizes[i];

// If input is a real ...

if(ss < 0)

{

formated_mask[it] = original_mask[i];

layer->expectation[it++] = layer_vector[i];

}

else // ... or a symbol

{

// Convert to one-hot vector

layer->expectation.subVec(it,ss).clear();

formated_mask.subVec(it,ss).fill(original_mask[i]);

layer->expectation[it+(int)layer_vector[i]] = 1;

it += ss;

}

}

layer->setExpectation( layer->expectation );

}
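The masked overload additionally widens the per-field mask to one value per layer unit, so every unit of a one-hot block shares its field's mask value; generate() later multiplies each target prediction element-wise by this formatted mask. A hedged sketch on plain std::vector<double>, with hypothetical function names formatMask and applyMask:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Expands a per-field mask to a per-unit mask: width 1 for a real-valued
// field (symbol size < 0), width symbol_sizes[i] for a symbolic field.
std::vector<double> formatMask(const std::vector<double>& original_mask,
                               const std::vector<int>& symbol_sizes,
                               int layer_size) {
    assert(original_mask.size() == symbol_sizes.size());
    std::vector<double> formatted(layer_size, 0.0);
    int it = 0;
    for (std::size_t i = 0; i < original_mask.size(); ++i) {
        int width = symbol_sizes[i] < 0 ? 1 : symbol_sizes[i];
        assert(it + width <= layer_size);
        for (int k = 0; k < width; ++k)
            formatted[it + k] = original_mask[i];
        it += width;
    }
    assert(it == layer_size);
    return formatted;
}

// Element-wise product, as in "prediction *= mask": masked-out units become 0.
void applyMask(std::vector<double>& prediction, const std::vector<double>& mask) {
    assert(prediction.size() == mask.size());
    for (std::size_t j = 0; j < prediction.size(); ++j)
        prediction[j] *= mask[j];
}
```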

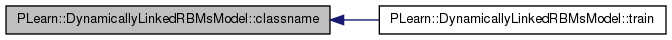

| string PLearn::DynamicallyLinkedRBMsModel::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

Referenced by train().

| void PLearn::DynamicallyLinkedRBMsModel::computeCostsFromOutputs | ( | const Vec & | input, |

| const Vec & | output, | ||

| const Vec & | target, | ||

| Vec & | costs | ||

| ) | const [virtual] |

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 954 of file DynamicallyLinkedRBMsModel.cc.

References PLERROR.

{

PLERROR("DynamicallyLinkedRBMsModel::computeCostsFromOutputs(): this is a "

"dynamic, generative model, that can only compute negative "

"log-likelihood costs for a whole VMat");

}

| void PLearn::DynamicallyLinkedRBMsModel::computeOutput | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 947 of file DynamicallyLinkedRBMsModel.cc.

References PLERROR.

{

PLERROR("DynamicallyLinkedRBMsModel::computeOutput(): this is a dynamic, "

"generative model, that can only compute negative log-likelihood "

"costs for a whole VMat");

}

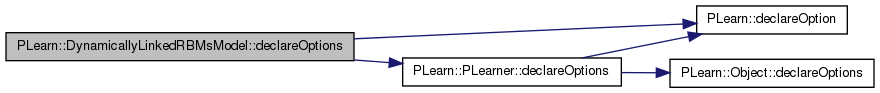

| void PLearn::DynamicallyLinkedRBMsModel::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::PLearner.

Definition at line 84 of file DynamicallyLinkedRBMsModel.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), dynamic_connections, end_of_sequence_symbol, hidden_connections, hidden_layer, hidden_layer2, input_connections, input_layer, input_symbol_sizes, PLearn::OptionBase::learntoption, recurrent_net_learning_rate, target_connections, target_layers, target_layers_n_of_target_elements, target_layers_weights, target_symbol_sizes, and use_target_layers_masks.

{

// declareOption(ol, "rbm_learning_rate", &DynamicallyLinkedRBMsModel::rbm_learning_rate,

// OptionBase::buildoption,

// "The learning rate used during RBM contrastive "

// "divergence learning phase.\n");

declareOption(ol, "recurrent_net_learning_rate",

&DynamicallyLinkedRBMsModel::recurrent_net_learning_rate,

OptionBase::buildoption,

"The learning rate used during the recurrent phase.\n");

// declareOption(ol, "rbm_nstages", &DynamicallyLinkedRBMsModel::rbm_nstages,

// OptionBase::buildoption,

// "Number of epochs for rbm phase.\n");

declareOption(ol, "target_layers_weights",

&DynamicallyLinkedRBMsModel::target_layers_weights,

OptionBase::buildoption,

"The training weights of each target layer.\n");

declareOption(ol, "use_target_layers_masks",

&DynamicallyLinkedRBMsModel::use_target_layers_masks,

OptionBase::buildoption,

"Indication that a mask indicating which target to predict\n"

"is present in the input part of the VMatrix dataset.\n");

declareOption(ol, "end_of_sequence_symbol",

&DynamicallyLinkedRBMsModel::end_of_sequence_symbol,

OptionBase::buildoption,

"Value of the first input component for end-of-sequence "

"delimiter.\n");

declareOption(ol, "input_layer", &DynamicallyLinkedRBMsModel::input_layer,

OptionBase::buildoption,

"The input layer of the model.\n");

declareOption(ol, "target_layers", &DynamicallyLinkedRBMsModel::target_layers,

OptionBase::buildoption,

"The target layers of the model.\n");

declareOption(ol, "hidden_layer", &DynamicallyLinkedRBMsModel::hidden_layer,

OptionBase::buildoption,

"The hidden layer of the model.\n");

declareOption(ol, "hidden_layer2", &DynamicallyLinkedRBMsModel::hidden_layer2,

OptionBase::buildoption,

"The second hidden layer of the model (optional).\n");

declareOption(ol, "dynamic_connections",

&DynamicallyLinkedRBMsModel::dynamic_connections,

OptionBase::buildoption,

"The RBMConnection between the first hidden layers, "

"through time (optional).\n");

declareOption(ol, "hidden_connections",

&DynamicallyLinkedRBMsModel::hidden_connections,

OptionBase::buildoption,

"The RBMConnection between the first and second "

"hidden layers (optional).\n");

declareOption(ol, "input_connections",

&DynamicallyLinkedRBMsModel::input_connections,

OptionBase::buildoption,

"The RBMConnection from input_layer to hidden_layer.\n");

declareOption(ol, "target_connections",

&DynamicallyLinkedRBMsModel::target_connections,

OptionBase::buildoption,

"The RBMConnections from the last hidden layer to each target layer.\n");

/*

declareOption(ol, "",

&DynamicallyLinkedRBMsModel::,

OptionBase::buildoption,

"");

*/

declareOption(ol, "target_layers_n_of_target_elements",

&DynamicallyLinkedRBMsModel::target_layers_n_of_target_elements,

OptionBase::learntoption,

"Number of elements in the target part of a VMatrix associated\n"

"to each target layer.\n");

declareOption(ol, "input_symbol_sizes",

&DynamicallyLinkedRBMsModel::input_symbol_sizes,

OptionBase::learntoption,

"Number of symbols for each symbolic field of train_set.\n");

declareOption(ol, "target_symbol_sizes",

&DynamicallyLinkedRBMsModel::target_symbol_sizes,

OptionBase::learntoption,

"Number of symbols for each symbolic field of train_set.\n");

/*

declareOption(ol, "", &DynamicallyLinkedRBMsModel::,

OptionBase::learntoption,

"");

*/

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static const PPath& PLearn::DynamicallyLinkedRBMsModel::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::PLearner.

Definition at line 218 of file DynamicallyLinkedRBMsModel.h.


| DynamicallyLinkedRBMsModel * PLearn::DynamicallyLinkedRBMsModel::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::PLearner.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

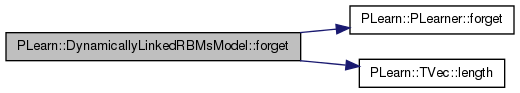

| void PLearn::DynamicallyLinkedRBMsModel::forget | ( | ) | [virtual] |

(Re-)initializes the PLearner in its fresh state (that state may depend on the 'seed' option) and sets 'stage' back to 0 (this is the stage of a fresh learner!).

Reimplemented from PLearn::PLearner.

Definition at line 432 of file DynamicallyLinkedRBMsModel.cc.

References dynamic_connections, PLearn::PLearner::forget(), hidden_connections, hidden_layer, hidden_layer2, i, input_connections, input_layer, PLearn::TVec< T >::length(), PLearn::PLearner::stage, target_connections, and target_layers.

{

inherited::forget();

input_layer->forget();

hidden_layer->forget();

input_connections->forget();

if( dynamic_connections )

dynamic_connections->forget();

if( hidden_layer2 )

{

hidden_layer2->forget();

hidden_connections->forget();

}

for( int i=0; i<target_layers.length(); i++ )

{

target_layers[i]->forget();

target_connections[i]->forget();

}

stage = 0;

}

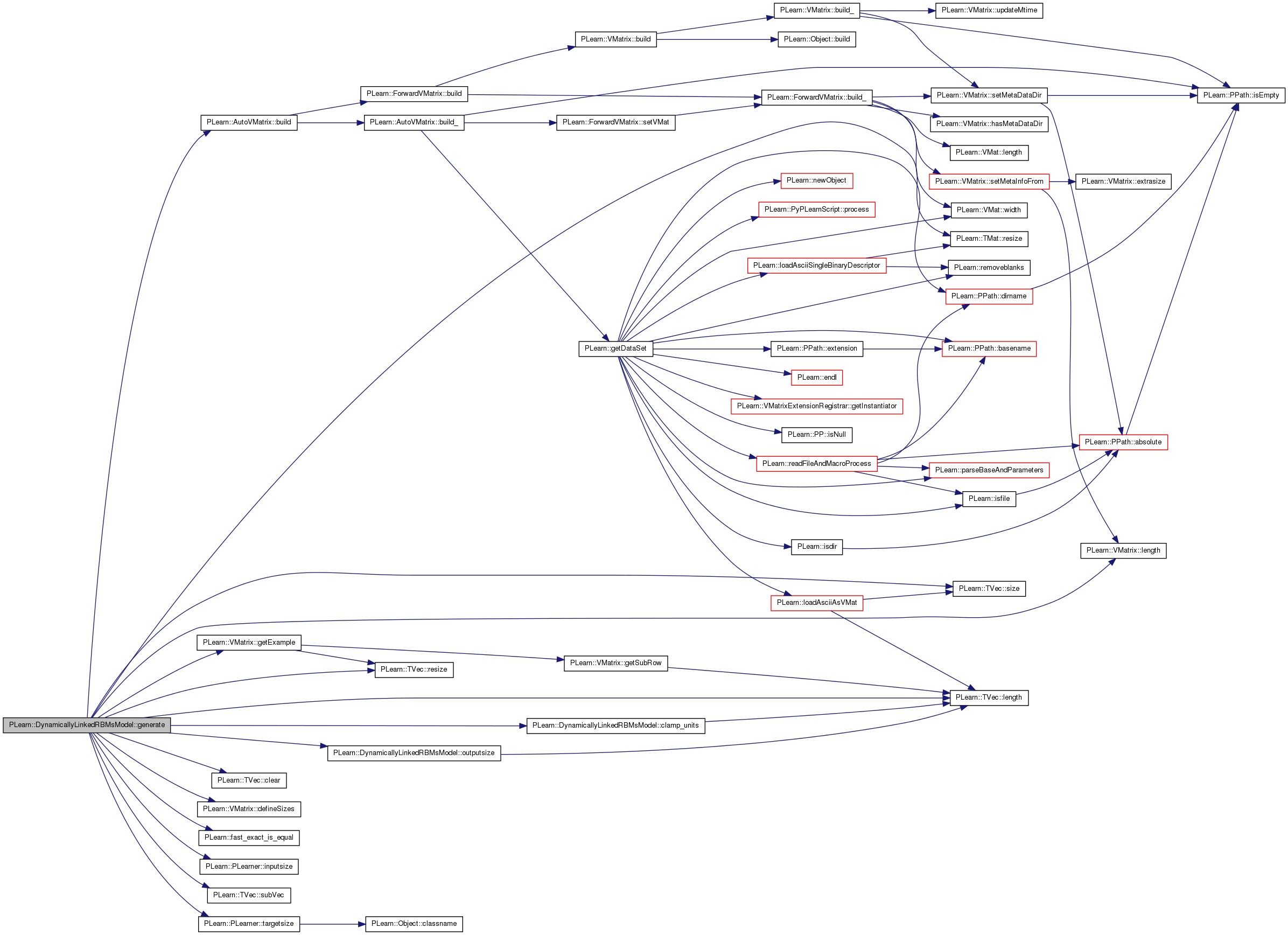

Definition at line 1263 of file DynamicallyLinkedRBMsModel.cc.

References PLearn::AutoVMatrix::build(), clamp_units(), PLearn::TVec< T >::clear(), data, PLearn::VMatrix::defineSizes(), dynamic_act_no_bias_contribution, dynamic_connections, end_of_sequence_symbol, PLearn::fast_exact_is_equal(), PLearn::AutoVMatrix::filename, PLearn::VMatrix::getExample(), hidden2_act_no_bias_list, hidden2_list, hidden_act_no_bias_list, hidden_connections, hidden_layer, hidden_layer2, hidden_list, i, input_connections, input_layer, input_list, input_symbol_sizes, PLearn::PLearner::inputsize(), j, PLearn::TVec< T >::length(), PLearn::VMatrix::length(), masks_list, nll_list, outputsize(), PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::TVec< T >::subVec(), target_connections, target_layers, target_layers_n_of_target_elements, target_layers_weights, target_prediction_act_no_bias_list, target_prediction_list, target_symbol_sizes, targets_list, PLearn::PLearner::targetsize(), and use_target_layers_masks.

Referenced by PLearn::Stan::run().

{

//PPath* the_filename = "/home/stan/Documents/recherche_maitrise/DDBN_bosendorfer/data/generate/scoreGen.amat";

data = new AutoVMatrix();

data->filename = "/home/stan/Documents/recherche_maitrise/DDBN_bosendorfer/data/listData/target_tm12_input_t_tm12_tp12/scoreGen_tar_tm12__in_tm12_tp12.amat";

//data->filename = "/home/stan/Documents/recherche_maitrise/DDBN_bosendorfer/create_data/scoreGenSuitePerf.amat";

data->defineSizes(208,16,0);

//data->inputsize = 21;

//data->targetsize = 0;

//data->weightsize = 0;

data->build();

int len = data->length();

int tarSize = outputsize();

int partTarSize;

Vec input;

Vec target;

real weight;

Vec output(outputsize());

output.clear();

/*Vec costs(nTestCosts());

costs.clear();

Vec n_items(nTestCosts());

n_items.clear();*/

int r,r2;

int ith_sample_in_sequence = 0;

int inputsize_without_masks = inputsize()

- ( use_target_layers_masks ? targetsize() : 0 );

int sum_target_elements = 0;

for (int i = 0; i < len; i++)

{

data->getExample(i, input, target, weight);

if(i>n)

{

for (int k = 1; k <= t; k++)

{

if(k<=i){

partTarSize = outputsize();

for( int tar=0; tar < target_layers.length(); tar++ )

{

input.subVec(inputsize_without_masks-(tarSize*(t-k))-partTarSize-1,target_layers[tar]->size) << target_prediction_list[tar][ith_sample_in_sequence-k];

partTarSize -= target_layers[tar]->size;

}

}

}

}

/*

for (int k = 1; k <= t; k++)

{

partTarSize = outputsize();

for( int tar=0; tar < target_layers.length(); tar++ )

{

if(i>=t){

input.subVec(inputsize_without_masks-(tarSize*(t-k))-partTarSize-1,target_layers[tar]->size) << target_prediction_list[tar][ith_sample_in_sequence-k];

partTarSize -= target_layers[tar]->size;

}

}

}

*/

if( fast_exact_is_equal(input[0],end_of_sequence_symbol) )

{

/* ith_sample_in_sequence = 0;

hidden_list.resize(0);

hidden_act_no_bias_list.resize(0);

hidden2_list.resize(0);

hidden2_act_no_bias_list.resize(0);

target_prediction_list.resize(0);

target_prediction_act_no_bias_list.resize(0);

input_list.resize(0);

targets_list.resize(0);

nll_list.resize(0,0);

masks_list.resize(0);*/

continue;

}

// Resize internal variables

hidden_list.resize(ith_sample_in_sequence+1);

hidden_act_no_bias_list.resize(ith_sample_in_sequence+1);

if( hidden_layer2 )

{

hidden2_list.resize(ith_sample_in_sequence+1);

hidden2_act_no_bias_list.resize(ith_sample_in_sequence+1);

}

input_list.resize(ith_sample_in_sequence+1);

input_list[ith_sample_in_sequence].resize(input_layer->size);

targets_list.resize( target_layers.length() );

target_prediction_list.resize( target_layers.length() );

target_prediction_act_no_bias_list.resize( target_layers.length() );

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

targets_list[tar].resize( ith_sample_in_sequence+1);

targets_list[tar][ith_sample_in_sequence].resize(

target_layers[tar]->size);

target_prediction_list[tar].resize(

ith_sample_in_sequence+1);

target_prediction_act_no_bias_list[tar].resize(

ith_sample_in_sequence+1);

}

}

nll_list.resize(ith_sample_in_sequence+1,target_layers.length());

if( use_target_layers_masks )

{

masks_list.resize( target_layers.length() );

for( int tar=0; tar < target_layers.length(); tar++ )

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

masks_list[tar].resize( ith_sample_in_sequence+1 );

}

// Forward propagation

// Fetch right representation for input

clamp_units(input.subVec(0,inputsize_without_masks),

input_layer,

input_symbol_sizes);

input_list[ith_sample_in_sequence] << input_layer->expectation;

// Fetch right representation for target

sum_target_elements = 0;

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

if( use_target_layers_masks )

{

clamp_units(target.subVec(

sum_target_elements,

target_layers_n_of_target_elements[tar]),

target_layers[tar],

target_symbol_sizes[tar],

input.subVec(

inputsize_without_masks

+ sum_target_elements,

target_layers_n_of_target_elements[tar]),

masks_list[tar][ith_sample_in_sequence]

);

}

else

{

clamp_units(target.subVec(

sum_target_elements,

target_layers_n_of_target_elements[tar]),

target_layers[tar],

target_symbol_sizes[tar]);

}

targets_list[tar][ith_sample_in_sequence] <<

target_layers[tar]->expectation;

}

sum_target_elements += target_layers_n_of_target_elements[tar];

}

input_connections->fprop( input_list[ith_sample_in_sequence],

hidden_act_no_bias_list[ith_sample_in_sequence]);

if( ith_sample_in_sequence > 0 && dynamic_connections )

{

dynamic_connections->fprop(

hidden_list[ith_sample_in_sequence-1],

dynamic_act_no_bias_contribution );

hidden_act_no_bias_list[ith_sample_in_sequence] +=

dynamic_act_no_bias_contribution;

}

hidden_layer->fprop( hidden_act_no_bias_list[ith_sample_in_sequence],

hidden_list[ith_sample_in_sequence] );

if( hidden_layer2 )

{

hidden_connections->fprop(

hidden_list[ith_sample_in_sequence],

hidden2_act_no_bias_list[ith_sample_in_sequence]);

hidden_layer2->fprop(

hidden2_act_no_bias_list[ith_sample_in_sequence],

hidden2_list[ith_sample_in_sequence]

);

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_connections[tar]->fprop(

hidden2_list[ith_sample_in_sequence],

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence]

);

target_layers[tar]->fprop(

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence],

target_prediction_list[tar][

ith_sample_in_sequence] );

if( use_target_layers_masks )

target_prediction_list[tar][ ith_sample_in_sequence] *=

masks_list[tar][ith_sample_in_sequence];

}

}

}

else

{

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_connections[tar]->fprop(

hidden_list[ith_sample_in_sequence],

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence]

);

target_layers[tar]->fprop(

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence],

target_prediction_list[tar][

ith_sample_in_sequence] );

if( use_target_layers_masks )

target_prediction_list[tar][ ith_sample_in_sequence] *=

masks_list[tar][ith_sample_in_sequence];

}

}

}

sum_target_elements = 0;

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_layers[tar]->activation <<

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence];

target_layers[tar]->activation += target_layers[tar]->bias;

target_layers[tar]->setExpectation(

target_prediction_list[tar][

ith_sample_in_sequence]);

nll_list(ith_sample_in_sequence,tar) =

target_layers[tar]->fpropNLL(

targets_list[tar][ith_sample_in_sequence] );

/*costs[tar] += nll_list(ith_sample_in_sequence,tar);

// Normalize by the number of things to predict

if( use_target_layers_masks )

{

n_items[tar] += sum(

input.subVec( inputsize_without_masks

+ sum_target_elements,

target_layers_n_of_target_elements[tar]) );

}

else

n_items[tar]++;*/

}

if( use_target_layers_masks )

sum_target_elements +=

target_layers_n_of_target_elements[tar];

}

ith_sample_in_sequence++;

}

/*

ith_sample_in_sequence = 0;

hidden_list.resize(0);

hidden_act_no_bias_list.resize(0);

hidden2_list.resize(0);

hidden2_act_no_bias_list.resize(0);

target_prediction_list.resize(0);

target_prediction_act_no_bias_list.resize(0);

input_list.resize(0);

targets_list.resize(0);

nll_list.resize(0,0);

masks_list.resize(0);

*/

//Vec tempo;

//TVec<real> tempo;

//tempo.resize(visible_layer->size);

ofstream myfile;

myfile.open ("/home/stan/Documents/recherche_maitrise/DDBN_bosendorfer/data/generate/test.txt");

for (int i = 0; i < target_prediction_list[0].length() ; i++ ){

for( int tar=0; tar < target_layers.length(); tar++ )

{

for (int j = 0; j < target_prediction_list[tar][i].length() ; j++ ){

if(i>n){

myfile << target_prediction_list[tar][i][j] << " ";

}

else{

myfile << targets_list[tar][i][j] << " ";

}

}

}

myfile << "\n";

}

myfile.close();

}
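The tail of generate() above writes one line per time step to a text file. For steps i <= n it copies the ground-truth values from targets_list, and for steps i > n it writes the model's predictions from target_prediction_list, concatenating all target layers on each line. The sketch below reproduces only that output logic, under stated assumptions: Seq and write_primed_sequence are hypothetical names, nested std::vector stands in for PLearn's TVec< TVec<Vec> > (indexed [layer][time][unit]), and the function writes to any std::ostream instead of the hard-coded file path.

```cpp
#include <cstddef>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for TVec< TVec<Vec> >: [layer][time][unit].
using Seq = std::vector<std::vector<std::vector<double>>>;

// Writes one line per time step, concatenating all target layers.
// Steps 0..n are copied from the ground truth; steps after n come from
// the predictions, mirroring the `if(i>n)` test in generate().
void write_primed_sequence(std::ostream& out, const Seq& targets,
                           const Seq& predictions, int n) {
    const std::size_t length = predictions.empty() ? 0 : predictions[0].size();
    for (std::size_t i = 0; i < length; ++i) {
        for (std::size_t tar = 0; tar < predictions.size(); ++tar) {
            const Seq& src = (static_cast<int>(i) > n) ? predictions : targets;
            for (double v : src[tar][i]) out << v << " ";
        }
        out << "\n";
    }
}
```

Passing a std::ostringstream makes the output easy to inspect; passing a std::ofstream reproduces the file written by the listing.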

| OptionList & PLearn::DynamicallyLinkedRBMsModel::getOptionList | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

| OptionMap & PLearn::DynamicallyLinkedRBMsModel::getOptionMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

| RemoteMethodMap & PLearn::DynamicallyLinkedRBMsModel::getRemoteMethodMap | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 71 of file DynamicallyLinkedRBMsModel.cc.

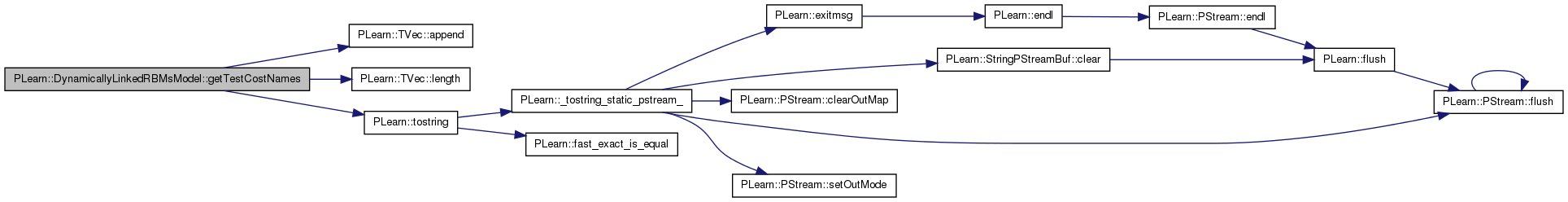

| TVec< string > PLearn::DynamicallyLinkedRBMsModel::getTestCostNames | ( | ) | const [virtual] |

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 1250 of file DynamicallyLinkedRBMsModel.cc.

References PLearn::TVec< T >::append(), i, PLearn::TVec< T >::length(), target_layers, and PLearn::tostring().

Referenced by getTrainCostNames().

{

TVec<string> cost_names(0);

for( int i=0; i<target_layers.length(); i++ )

cost_names.append("target" + tostring(i) + ".NLL");

return cost_names;

}
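getTestCostNames() builds one cost name per target layer, and getTrainCostNames() simply forwards to it, so training and test statistics share the same names. The sketch below reproduces the naming scheme with the standard library only; make_test_cost_names is a hypothetical helper, its argument stands in for target_layers.length(), and std::to_string stands in for PLearn::tostring().

```cpp
#include <string>
#include <vector>

// One NLL cost per target layer, named "target<i>.NLL".
std::vector<std::string> make_test_cost_names(int n_target_layers) {
    std::vector<std::string> names;
    for (int i = 0; i < n_target_layers; ++i)
        names.push_back("target" + std::to_string(i) + ".NLL");
    return names;
}
```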

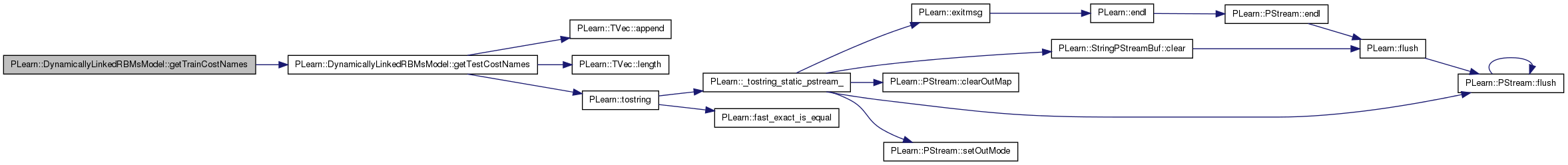

| TVec< string > PLearn::DynamicallyLinkedRBMsModel::getTrainCostNames | ( | ) | const [virtual] |

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 1258 of file DynamicallyLinkedRBMsModel.cc.

References getTestCostNames().

Referenced by train().

{

return getTestCostNames();

}

| void PLearn::DynamicallyLinkedRBMsModel::makeDeepCopyFromShallowCopy | ( | CopiesMap & | copies | ) | [virtual] |

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 383 of file DynamicallyLinkedRBMsModel.cc.

References bias_gradient, PLearn::deepCopyField(), dynamic_act_no_bias_contribution, dynamic_connections, hidden2_act_no_bias_list, hidden2_list, hidden_act_no_bias_list, hidden_connections, hidden_gradient, hidden_layer, hidden_layer2, hidden_list, hidden_temporal_gradient, input_connections, input_layer, input_list, input_symbol_sizes, PLearn::PLearner::makeDeepCopyFromShallowCopy(), masks_list, nll_list, target_connections, target_layers, target_layers_n_of_target_elements, target_prediction_act_no_bias_list, target_prediction_list, target_symbol_sizes, targets_list, and visi_bias_gradient.

{

inherited::makeDeepCopyFromShallowCopy(copies);

deepCopyField( input_layer, copies);

deepCopyField( target_layers , copies);

deepCopyField( hidden_layer, copies);

deepCopyField( hidden_layer2 , copies);

deepCopyField( dynamic_connections , copies);

deepCopyField( hidden_connections , copies);

deepCopyField( input_connections , copies);

deepCopyField( target_connections , copies);

deepCopyField( target_layers_n_of_target_elements, copies);

deepCopyField( input_symbol_sizes, copies);

deepCopyField( target_symbol_sizes, copies);

deepCopyField( bias_gradient , copies);

deepCopyField( visi_bias_gradient , copies);

deepCopyField( hidden_gradient , copies);

deepCopyField( hidden_temporal_gradient , copies);

deepCopyField( hidden_list , copies);

deepCopyField( hidden_act_no_bias_list , copies);

deepCopyField( hidden2_list , copies);

deepCopyField( hidden2_act_no_bias_list , copies);

deepCopyField( target_prediction_list , copies);

deepCopyField( target_prediction_act_no_bias_list , copies);

deepCopyField( input_list , copies);

deepCopyField( targets_list , copies);

deepCopyField( nll_list , copies);

deepCopyField( masks_list , copies);

deepCopyField( dynamic_act_no_bias_contribution, copies);

// deepCopyField(, copies);

//PLERROR("DynamicallyLinkedRBMsModel::makeDeepCopyFromShallowCopy(): "

//"not implemented yet");

}
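makeDeepCopyFromShallowCopy() calls deepCopyField() on every member that holds pointers or vectors, always passing the same copies map. The sketch below illustrates why sharing that map matters, under simplifying assumptions: Layer, CopyMap, deep_copy_field and Model are hypothetical, std::shared_ptr replaces PLearn's PP smart pointer, and a std::map keyed by the original object's address plays the role of CopiesMap. An object reached through two fields is copied only once, so the aliasing between fields survives in the copy.

```cpp
#include <map>
#include <memory>

// Hypothetical component standing in for an RBM layer or connection.
struct Layer {
    double learning_rate = 0.0;
};

// Maps each original object to its copy (the role played by CopiesMap).
using CopyMap = std::map<const Layer*, std::shared_ptr<Layer>>;

// Replaces `field` by a deep copy, reusing an existing copy when the same
// original object was already copied through another field.
void deep_copy_field(std::shared_ptr<Layer>& field, CopyMap& copies) {
    if (!field) return;  // optional component not set
    auto it = copies.find(field.get());
    if (it != copies.end()) {
        field = it->second;
        return;
    }
    auto copy = std::make_shared<Layer>(*field);
    copies[field.get()] = copy;
    field = copy;
}

// A model with two fields that may point to the same layer.
struct Model {
    std::shared_ptr<Layer> hidden_layer;
    std::shared_ptr<Layer> hidden_layer_alias;

    // Call on a shallow copy: afterwards the copy owns its own layers.
    void make_deep_copy_from_shallow_copy(CopyMap& copies) {
        deep_copy_field(hidden_layer, copies);
        deep_copy_field(hidden_layer_alias, copies);
    }
};
```

Without the shared map, each field would get its own copy and the two fields of the deep copy would no longer refer to the same layer.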

| int PLearn::DynamicallyLinkedRBMsModel::outputsize | ( | ) | const [virtual] |

Returns the size of this learner's output, (which typically may depend on its inputsize(), targetsize() and set options).

Implements PLearn::PLearner.

Definition at line 424 of file DynamicallyLinkedRBMsModel.cc.

References i, PLearn::TVec< T >::length(), and target_layers.

Referenced by generate(), and test().

{

int out_size = 0;

for( int i=0; i<target_layers.length(); i++ )

out_size += target_layers[i]->size;

return out_size;

}
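outputsize() is the sum of the target layer sizes because the output vector concatenates the prediction of every target layer; test() fills it layer by layer using a running offset (sum_target_layers_size). A small sketch of both computations, with plain int vectors in place of target_layers; output_size and output_offsets are hypothetical names.

```cpp
#include <vector>

// Size of the concatenated output: sum of all target layer sizes.
int output_size(const std::vector<int>& target_layer_sizes) {
    int out_size = 0;
    for (int s : target_layer_sizes) out_size += s;
    return out_size;
}

// Starting offset of each target layer inside the output vector.
std::vector<int> output_offsets(const std::vector<int>& target_layer_sizes) {
    std::vector<int> offsets;
    int offset = 0;
    for (int s : target_layer_sizes) {
        offsets.push_back(offset);
        offset += s;
    }
    return offsets;
}
```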

| void PLearn::DynamicallyLinkedRBMsModel::partition | ( | TVec< double > part, TVec< double > periode, TVec< double > vel | ) | const |

Use the partition.

| void PLearn::DynamicallyLinkedRBMsModel::recurrent_update | ( | ) |

Updates both the RBM parameters and the dynamic connections in the recurrent tuning phase, after the visible units have been clamped.

Definition at line 843 of file DynamicallyLinkedRBMsModel.cc.

References bias_gradient, PLearn::TVec< T >::clear(), dynamic_connections, PLearn::fast_exact_is_equal(), hidden2_act_no_bias_list, hidden2_list, hidden_act_no_bias_list, hidden_connections, hidden_gradient, hidden_layer, hidden_layer2, hidden_list, hidden_temporal_gradient, i, input_connections, input_list, PLearn::TVec< T >::length(), masks_list, nll_list, PLearn::TVec< T >::resize(), target_connections, target_layers, target_layers_weights, target_prediction_act_no_bias_list, target_prediction_list, targets_list, use_target_layers_masks, and visi_bias_gradient.

Referenced by train().

{

hidden_temporal_gradient.resize(hidden_layer->size);

hidden_temporal_gradient.clear();

for(int i=hidden_list.length()-1; i>=0; i--){

if( hidden_layer2 )

hidden_gradient.resize(hidden_layer2->size);

else

hidden_gradient.resize(hidden_layer->size);

hidden_gradient.clear();

if(use_target_layers_masks)

{

for( int tar=0; tar<target_layers.length(); tar++)

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_layers[tar]->activation << target_prediction_act_no_bias_list[tar][i];

target_layers[tar]->activation += target_layers[tar]->bias;

target_layers[tar]->setExpectation(target_prediction_list[tar][i]);

target_layers[tar]->bpropNLL(targets_list[tar][i],nll_list(i,tar),bias_gradient);

bias_gradient *= target_layers_weights[tar];

bias_gradient *= masks_list[tar][i];

target_layers[tar]->update(bias_gradient);

if( hidden_layer2 )

target_connections[tar]->bpropUpdate(hidden2_list[i],target_prediction_act_no_bias_list[tar][i],

hidden_gradient, bias_gradient,true);

else

target_connections[tar]->bpropUpdate(hidden_list[i],target_prediction_act_no_bias_list[tar][i],

hidden_gradient, bias_gradient,true);

}

}

}

else

{

for( int tar=0; tar<target_layers.length(); tar++)

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_layers[tar]->activation << target_prediction_act_no_bias_list[tar][i];

target_layers[tar]->activation += target_layers[tar]->bias;

target_layers[tar]->setExpectation(target_prediction_list[tar][i]);

target_layers[tar]->bpropNLL(targets_list[tar][i],nll_list(i,tar),bias_gradient);

bias_gradient *= target_layers_weights[tar];

target_layers[tar]->update(bias_gradient);

if( hidden_layer2 )

target_connections[tar]->bpropUpdate(hidden2_list[i],target_prediction_act_no_bias_list[tar][i],

hidden_gradient, bias_gradient,true);

else

target_connections[tar]->bpropUpdate(hidden_list[i],target_prediction_act_no_bias_list[tar][i],

hidden_gradient, bias_gradient,true);

}

}

}

if (hidden_layer2)

{

hidden_layer2->bpropUpdate(

hidden2_act_no_bias_list[i], hidden2_list[i],

bias_gradient, hidden_gradient);

hidden_connections->bpropUpdate(

hidden_list[i],

hidden2_act_no_bias_list[i],

hidden_gradient, bias_gradient);

}

if(i!=0 && dynamic_connections )

{

hidden_gradient += hidden_temporal_gradient;

hidden_layer->bpropUpdate(

hidden_act_no_bias_list[i], hidden_list[i],

hidden_temporal_gradient, hidden_gradient);

dynamic_connections->bpropUpdate(

hidden_list[i-1],

hidden_act_no_bias_list[i], // Here, it should be cond_bias, but doesn't matter

hidden_gradient, hidden_temporal_gradient);

hidden_temporal_gradient << hidden_gradient;

input_connections->bpropUpdate(

input_list[i],

hidden_act_no_bias_list[i],

visi_bias_gradient, hidden_temporal_gradient);// Here, it should be activations - cond_bias, but doesn't matter

}

else

{

hidden_layer->bpropUpdate(

hidden_act_no_bias_list[i], hidden_list[i],

hidden_temporal_gradient, hidden_gradient); // Not really temporal gradient, but this is the final iteration...

input_connections->bpropUpdate(

input_list[i],

hidden_act_no_bias_list[i],

visi_bias_gradient, hidden_temporal_gradient);// Here, it should be activations - cond_bias, but doesn't matter

}

}

}
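recurrent_update() performs backpropagation through time: it walks the stored sequence from the last step to the first, adds the gradient arriving from step i+1 (hidden_temporal_gradient) to the gradient coming from the target layers, pushes the sum through the hidden layer, and sends part of it back through dynamic_connections to step i-1. The sketch below shows that backward loop on a hypothetical scalar model (one input unit, one sigmoid hidden unit, one linear output, squared error instead of the target layers' NLL); TinyRnn, Grads, forward_pass and backward_pass are illustrative names, not PLearn classes. Unlike the listing, which updates each module in place through bpropUpdate() as it goes, the sketch accumulates gradients so they can be checked against finite differences.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical scalar recurrent model.
struct TinyRnn {
    double u = 0.5;   // input -> hidden (like input_connections)
    double w = -0.3;  // hidden(t-1) -> hidden(t) (like dynamic_connections)
    double b = 0.1;   // hidden bias
    double v = 0.8;   // hidden -> output (like target_connections)
    double c = 0.0;   // output bias
};

struct Grads { double u = 0, w = 0, b = 0, v = 0, c = 0; };

static double sigmoid(double a) { return 1.0 / (1.0 + std::exp(-a)); }

// Forward pass: stores hidden values in h, returns summed squared error.
double forward_pass(const TinyRnn& m, const std::vector<double>& x,
                    const std::vector<double>& t, std::vector<double>& h) {
    h.assign(x.size(), 0.0);
    double loss = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        double a = m.u * x[i] + m.b + (i > 0 ? m.w * h[i - 1] : 0.0);
        h[i] = sigmoid(a);
        double y = m.v * h[i] + m.c;
        loss += 0.5 * (y - t[i]) * (y - t[i]);
    }
    return loss;
}

// Backward pass from the last step to the first; `temporal` holds
// dLoss/dh(i) coming from step i+1 (the role of hidden_temporal_gradient).
Grads backward_pass(const TinyRnn& m, const std::vector<double>& x,
                    const std::vector<double>& t, const std::vector<double>& h) {
    Grads g;
    double temporal = 0.0;
    for (int i = static_cast<int>(x.size()) - 1; i >= 0; --i) {
        double dy = (m.v * h[i] + m.c) - t[i];  // output-layer gradient
        g.v += dy * h[i];
        g.c += dy;
        double dh = m.v * dy + temporal;        // add gradient from step i+1
        double da = dh * h[i] * (1.0 - h[i]);   // through the sigmoid
        g.u += da * x[i];
        g.b += da;
        if (i > 0) g.w += da * h[i - 1];        // recurrent weight
        temporal = m.w * da;                    // sent back to step i-1
    }
    return g;
}
```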

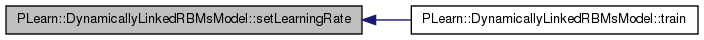

| void PLearn::DynamicallyLinkedRBMsModel::setLearningRate | ( | real | the_learning_rate | ) |

Sets the learning rate of all layers and connections.

Definition at line 823 of file DynamicallyLinkedRBMsModel.cc.

References dynamic_connections, hidden_connections, hidden_layer, hidden_layer2, i, input_connections, input_layer, PLearn::TVec< T >::length(), target_connections, and target_layers.

Referenced by train().

{

input_layer->setLearningRate( the_learning_rate );

hidden_layer->setLearningRate( the_learning_rate );

input_connections->setLearningRate( the_learning_rate );

if( dynamic_connections )

dynamic_connections->setLearningRate( the_learning_rate ); //HUGO: multiply by dynamic_connections_learning_weight;

if( hidden_layer2 )

{

hidden_layer2->setLearningRate( the_learning_rate );

hidden_connections->setLearningRate( the_learning_rate );

}

for( int i=0; i<target_layers.length(); i++ )

{

target_layers[i]->setLearningRate( the_learning_rate );

target_connections[i]->setLearningRate( the_learning_rate );

}

}
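setLearningRate() pushes a single rate to every layer and connection, skipping the optional parts (dynamic_connections, and hidden_layer2 with hidden_connections) when they are not set. The HUGO comment suggests scaling the dynamic connections' rate by a dynamic_connections_learning_weight, which this listing does not do. A sketch of the same pattern, assuming hypothetical Component objects held in std::shared_ptr:

```cpp
#include <memory>
#include <vector>

// Hypothetical trainable component (layer or connection).
struct Component {
    double learning_rate = 0.0;
    void setLearningRate(double lr) { learning_rate = lr; }
};

using ComponentPtr = std::shared_ptr<Component>;

// Applies one learning rate to every required component and to every
// optional component that is actually present (non-null).
void set_learning_rate(double lr, const std::vector<ComponentPtr>& required,
                       const std::vector<ComponentPtr>& optional) {
    for (const auto& c : required) c->setLearningRate(lr);
    for (const auto& c : optional)
        if (c) c->setLearningRate(lr);
}
```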

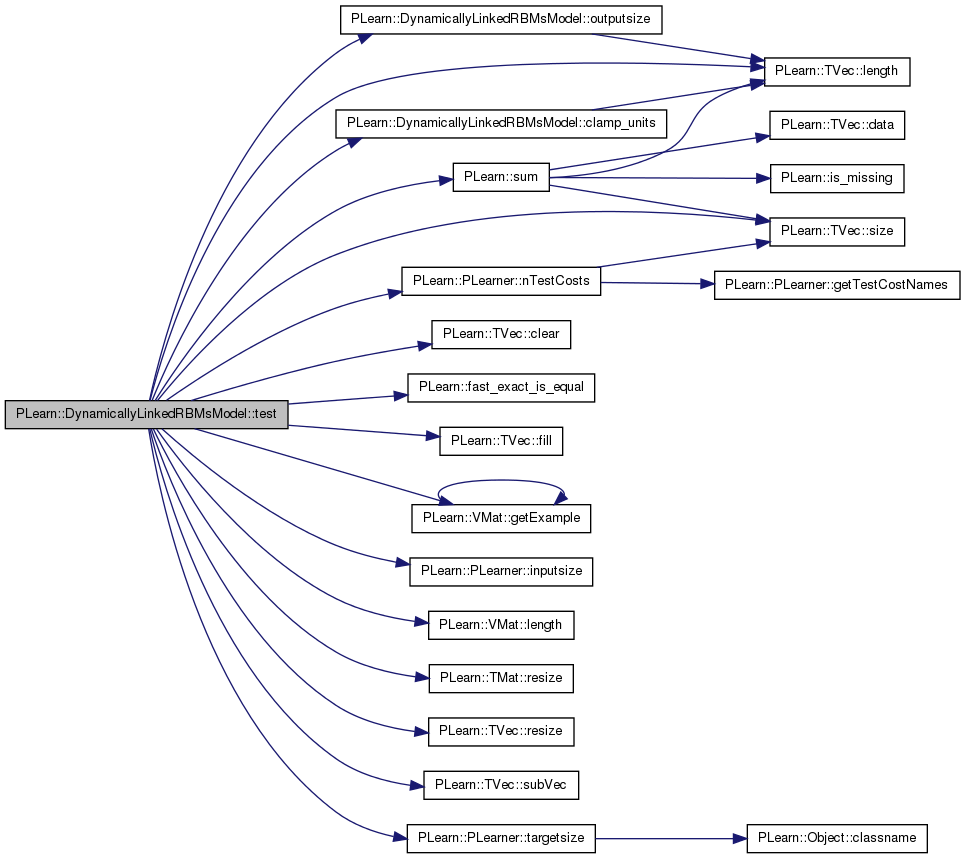

| void PLearn::DynamicallyLinkedRBMsModel::test | ( | VMat testset, PP< VecStatsCollector > test_stats, VMat testoutputs = 0, VMat testcosts = 0 | ) | const [virtual] |

Performs test on testset, updating test cost statistics, and optionally filling testoutputs and testcosts.

The default version repeatedly calls computeOutputAndCosts or computeCostsOnly. Note that neither test_stats->forget() nor test_stats->finalize() is called, so that you should call them yourself (respectively before and after calling this method) if you don't plan to accumulate statistics.

Reimplemented from PLearn::PLearner.

Definition at line 962 of file DynamicallyLinkedRBMsModel.cc.

References clamp_units(), PLearn::TVec< T >::clear(), dynamic_act_no_bias_contribution, dynamic_connections, end_of_sequence_symbol, PLearn::fast_exact_is_equal(), PLearn::TVec< T >::fill(), PLearn::VMat::getExample(), hidden2_act_no_bias_list, hidden2_list, hidden_act_no_bias_list, hidden_connections, hidden_layer, hidden_layer2, hidden_list, i, input_connections, input_layer, input_list, input_symbol_sizes, PLearn::PLearner::inputsize(), PLearn::TVec< T >::length(), PLearn::VMat::length(), masks_list, MISSING_VALUE, nll_list, PLearn::PLearner::nTestCosts(), outputsize(), PLearn::PLearner::report_progress, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), PLearn::TVec< T >::subVec(), PLearn::sum(), target_connections, target_layers, target_layers_n_of_target_elements, target_layers_weights, target_prediction_act_no_bias_list, target_prediction_list, target_symbol_sizes, targets_list, PLearn::PLearner::targetsize(), and use_target_layers_masks.

{

int len = testset.length();

Vec input;

Vec target;

real weight;

Vec output(outputsize());

output.clear();

Vec costs(nTestCosts());

costs.clear();

Vec n_items(nTestCosts());

n_items.clear();

PP<ProgressBar> pb;

if (report_progress)

pb = new ProgressBar("Testing learner", len);

if (len == 0) {

// Empty test set: we give -1 cost arbitrarily.

costs.fill(-1);

test_stats->update(costs);

}

int ith_sample_in_sequence = 0;

int inputsize_without_masks = inputsize()

- ( use_target_layers_masks ? targetsize() : 0 );

int sum_target_elements = 0;

for (int i = 0; i < len; i++)

{

testset.getExample(i, input, target, weight);

if( fast_exact_is_equal(input[0],end_of_sequence_symbol) )

{

ith_sample_in_sequence = 0;

hidden_list.resize(0);

hidden_act_no_bias_list.resize(0);

hidden2_list.resize(0);

hidden2_act_no_bias_list.resize(0);

target_prediction_list.resize(0);

target_prediction_act_no_bias_list.resize(0);

input_list.resize(0);

targets_list.resize(0);

nll_list.resize(0,0);

masks_list.resize(0);

if (testoutputs)

{

output.fill(end_of_sequence_symbol);

testoutputs->putOrAppendRow(i, output);

}

continue;

}

// Resize internal variables

hidden_list.resize(ith_sample_in_sequence+1);

hidden_act_no_bias_list.resize(ith_sample_in_sequence+1);

if( hidden_layer2 )

{

hidden2_list.resize(ith_sample_in_sequence+1);

hidden2_act_no_bias_list.resize(ith_sample_in_sequence+1);

}

input_list.resize(ith_sample_in_sequence+1);

input_list[ith_sample_in_sequence].resize(input_layer->size);

targets_list.resize( target_layers.length() );

target_prediction_list.resize( target_layers.length() );

target_prediction_act_no_bias_list.resize( target_layers.length() );

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

targets_list[tar].resize( ith_sample_in_sequence+1);

targets_list[tar][ith_sample_in_sequence].resize(

target_layers[tar]->size);

target_prediction_list[tar].resize(

ith_sample_in_sequence+1);

target_prediction_act_no_bias_list[tar].resize(

ith_sample_in_sequence+1);

}

}

nll_list.resize(ith_sample_in_sequence+1,target_layers.length());

if( use_target_layers_masks )

{

masks_list.resize( target_layers.length() );

for( int tar=0; tar < target_layers.length(); tar++ )

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

masks_list[tar].resize( ith_sample_in_sequence+1 );

}

// Forward propagation

// Fetch right representation for input

clamp_units(input.subVec(0,inputsize_without_masks),

input_layer,

input_symbol_sizes);

input_list[ith_sample_in_sequence] << input_layer->expectation;

// Fetch right representation for target

sum_target_elements = 0;

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

if( use_target_layers_masks )

{

clamp_units(target.subVec(

sum_target_elements,

target_layers_n_of_target_elements[tar]),

target_layers[tar],

target_symbol_sizes[tar],

input.subVec(

inputsize_without_masks

+ sum_target_elements,

target_layers_n_of_target_elements[tar]),

masks_list[tar][ith_sample_in_sequence]

);

}

else

{

clamp_units(target.subVec(

sum_target_elements,

target_layers_n_of_target_elements[tar]),

target_layers[tar],

target_symbol_sizes[tar]);

}

targets_list[tar][ith_sample_in_sequence] <<

target_layers[tar]->expectation;

}

sum_target_elements += target_layers_n_of_target_elements[tar];

}

input_connections->fprop( input_list[ith_sample_in_sequence],

hidden_act_no_bias_list[ith_sample_in_sequence]);

if( ith_sample_in_sequence > 0 && dynamic_connections )

{

dynamic_connections->fprop(

hidden_list[ith_sample_in_sequence-1],

dynamic_act_no_bias_contribution );

hidden_act_no_bias_list[ith_sample_in_sequence] +=

dynamic_act_no_bias_contribution;

}

hidden_layer->fprop( hidden_act_no_bias_list[ith_sample_in_sequence],

hidden_list[ith_sample_in_sequence] );

if( hidden_layer2 )

{

hidden_connections->fprop(

hidden_list[ith_sample_in_sequence],

hidden2_act_no_bias_list[ith_sample_in_sequence]);

hidden_layer2->fprop(

hidden2_act_no_bias_list[ith_sample_in_sequence],

hidden2_list[ith_sample_in_sequence]

);

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_connections[tar]->fprop(

hidden2_list[ith_sample_in_sequence],

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence]

);

target_layers[tar]->fprop(

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence],

target_prediction_list[tar][

ith_sample_in_sequence] );

if( use_target_layers_masks )

target_prediction_list[tar][ ith_sample_in_sequence] *=

masks_list[tar][ith_sample_in_sequence];

}

}

}

else

{

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_connections[tar]->fprop(

hidden_list[ith_sample_in_sequence],

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence]

);

target_layers[tar]->fprop(

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence],

target_prediction_list[tar][

ith_sample_in_sequence] );

if( use_target_layers_masks )

target_prediction_list[tar][ ith_sample_in_sequence] *=

masks_list[tar][ith_sample_in_sequence];

}

}

}

if (testoutputs)

{

int sum_target_layers_size = 0;

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

output.subVec(sum_target_layers_size,target_layers[tar]->size)

<< target_prediction_list[tar][ ith_sample_in_sequence ];

}

sum_target_layers_size += target_layers[tar]->size;

}

testoutputs->putOrAppendRow(i, output);

}

sum_target_elements = 0;

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_layers[tar]->activation <<

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence];

target_layers[tar]->activation += target_layers[tar]->bias;

target_layers[tar]->setExpectation(

target_prediction_list[tar][

ith_sample_in_sequence]);

nll_list(ith_sample_in_sequence,tar) =

target_layers[tar]->fpropNLL(

targets_list[tar][ith_sample_in_sequence] );

costs[tar] += nll_list(ith_sample_in_sequence,tar);

// Normalize by the number of things to predict

if( use_target_layers_masks )

{

n_items[tar] += sum(

input.subVec( inputsize_without_masks

+ sum_target_elements,

target_layers_n_of_target_elements[tar]) );

}

else

n_items[tar]++;

}

if( use_target_layers_masks )

sum_target_elements +=

target_layers_n_of_target_elements[tar];

}

ith_sample_in_sequence++;

if (report_progress)

pb->update(i);

}

for(int i=0; i<costs.length(); i++)

{

if( !fast_exact_is_equal(target_layers_weights[i],0) )

costs[i] /= n_items[i];

else

costs[i] = MISSING_VALUE;

}

if (testcosts)

testcosts->putOrAppendRow(0, costs);

if (test_stats)

test_stats->update(costs, weight);

ith_sample_in_sequence = 0;

hidden_list.resize(0);

hidden_act_no_bias_list.resize(0);

hidden2_list.resize(0);

hidden2_act_no_bias_list.resize(0);

target_prediction_list.resize(0);

target_prediction_act_no_bias_list.resize(0);

input_list.resize(0);

targets_list.resize(0);

nll_list.resize(0,0);

masks_list.resize(0);

}
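At the end of test(), each target's accumulated NLL is divided by n_items, the number of things that were predicted: the sum of that target's mask entries when use_target_layers_masks is set, otherwise one per time step. Targets whose entry in target_layers_weights is zero are reported as MISSING_VALUE. The sketch below reproduces that normalization on precomputed per-step values; mean_costs is a hypothetical helper, and MISSING_VALUE is assumed here to be a quiet NaN.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Assumed stand-in for PLearn's MISSING_VALUE.
const double kMissing = std::numeric_limits<double>::quiet_NaN();

// nll[t][tar]: NLL of target layer tar at step t.
// mask_sum[t][tar]: sum of that target's mask entries at step t
// (only read when use_masks is true; otherwise each step counts once).
std::vector<double> mean_costs(const std::vector<std::vector<double>>& nll,
                               const std::vector<std::vector<double>>& mask_sum,
                               const std::vector<double>& weights,
                               bool use_masks) {
    std::vector<double> costs(weights.size(), 0.0);
    std::vector<double> n_items(weights.size(), 0.0);
    for (std::size_t t = 0; t < nll.size(); ++t)
        for (std::size_t tar = 0; tar < weights.size(); ++tar) {
            if (weights[tar] == 0.0) continue;  // target ignored
            costs[tar] += nll[t][tar];
            n_items[tar] += use_masks ? mask_sum[t][tar] : 1.0;
        }
    for (std::size_t tar = 0; tar < weights.size(); ++tar)
        costs[tar] = (weights[tar] != 0.0) ? costs[tar] / n_items[tar] : kMissing;
    return costs;
}
```

As in the listing, nothing guards against n_items being zero, so a target whose mask is zero everywhere yields a NaN or infinite cost.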

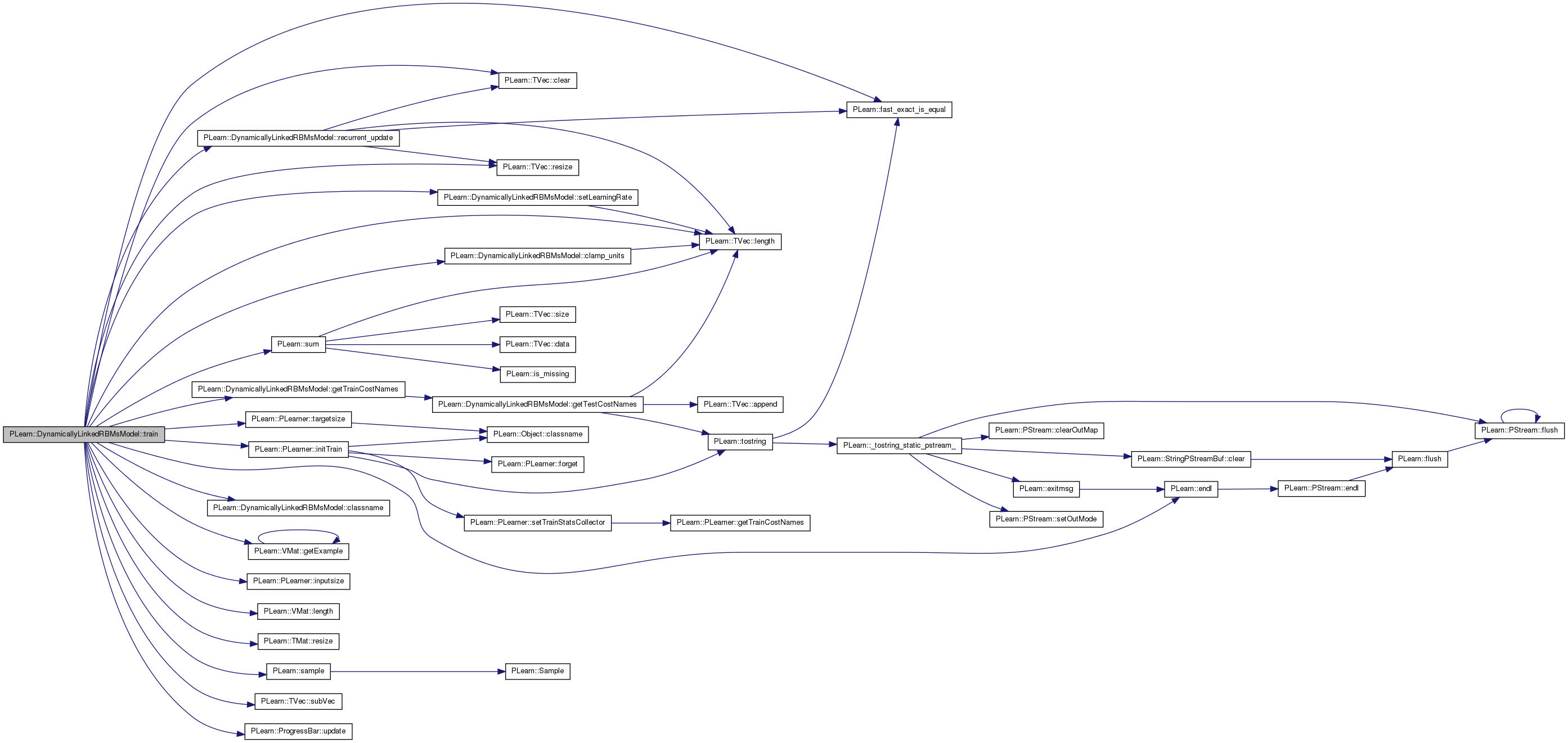

| void PLearn::DynamicallyLinkedRBMsModel::train | ( | ) | [virtual] |

The role of the train method is to bring the learner up to stage==nstages, updating the train_stats collector with training costs measured on-line in the process.

Implements PLearn::PLearner.

Definition at line 456 of file DynamicallyLinkedRBMsModel.cc.

References clamp_units(), classname(), PLearn::TVec< T >::clear(), dynamic_act_no_bias_contribution, dynamic_connections, end_of_sequence_symbol, PLearn::endl(), PLearn::fast_exact_is_equal(), PLearn::VMat::getExample(), getTrainCostNames(), hidden2_act_no_bias_list, hidden2_list, hidden_act_no_bias_list, hidden_connections, hidden_layer, hidden_layer2, hidden_list, i, PLearn::PLearner::initTrain(), input_connections, input_layer, input_list, input_symbol_sizes, PLearn::PLearner::inputsize(), PLearn::TVec< T >::length(), PLearn::VMat::length(), masks_list, MISSING_VALUE, nll_list, PLearn::PLearner::nstages, recurrent_net_learning_rate, recurrent_update(), PLearn::PLearner::report_progress, PLearn::TMat< T >::resize(), PLearn::TVec< T >::resize(), PLearn::sample(), setLearningRate(), PLearn::PLearner::stage, PLearn::TVec< T >::subVec(), PLearn::sum(), target_connections, target_layers, target_layers_n_of_target_elements, target_layers_weights, target_prediction_act_no_bias_list, target_prediction_list, target_symbol_sizes, targets_list, PLearn::PLearner::targetsize(), PLearn::PLearner::train_set, PLearn::PLearner::train_stats, PLearn::ProgressBar::update(), use_target_layers_masks, and PLearn::PLearner::verbosity.

{

MODULE_LOG << "train() called " << endl;

Vec input( inputsize() );

Vec target( targetsize() );

real weight = 0; // Unused

Vec train_costs( getTrainCostNames().length() );

train_costs.clear();

Vec train_n_items( getTrainCostNames().length() );

if( !initTrain() )

{

MODULE_LOG << "train() aborted" << endl;

return;

}

ProgressBar* pb = 0;

// clear stats of previous epoch

train_stats->forget();

/***** RBM training phase *****/

// if(rbm_stage < rbm_nstages)

// {

// }

/***** Recurrent phase *****/

if( stage >= nstages )

return;

if( stage < nstages )

{

MODULE_LOG << "Training the whole model" << endl;

int init_stage = stage;

//int end_stage = max(0,nstages-(rbm_nstages + dynamic_nstages));

int end_stage = nstages;

MODULE_LOG << " stage = " << stage << endl;

MODULE_LOG << " end_stage = " << end_stage << endl;

MODULE_LOG << " recurrent_net_learning_rate = " << recurrent_net_learning_rate << endl;

if( report_progress && stage < end_stage )

pb = new ProgressBar( "Recurrent training phase of "+classname(),

end_stage - init_stage );

setLearningRate( recurrent_net_learning_rate );

int ith_sample_in_sequence = 0;

int inputsize_without_masks = inputsize()

- ( use_target_layers_masks ? targetsize() : 0 );

int sum_target_elements = 0;

while(stage < end_stage)

{

/*

TMat<real> U,V;//////////crap James

TVec<real> S;

U.resize(hidden_layer->size,hidden_layer->size);

V.resize(hidden_layer->size,hidden_layer->size);

S.resize(hidden_layer->size);

U << dynamic_connections->weights;

SVD(U,dynamic_connections->weights,S,V);

S.fill(-0.5);

productScaleAcc(dynamic_connections->bias,dynamic_connections->weights,S,1,0);

*/

train_costs.clear();

train_n_items.clear();

for(int sample=0 ; sample<train_set->length() ; sample++ )

{

train_set->getExample(sample, input, target, weight);

if( fast_exact_is_equal(input[0],end_of_sequence_symbol) )

{

//update

recurrent_update();

ith_sample_in_sequence = 0;

hidden_list.resize(0);

hidden_act_no_bias_list.resize(0);

hidden2_list.resize(0);

hidden2_act_no_bias_list.resize(0);

target_prediction_list.resize(0);

target_prediction_act_no_bias_list.resize(0);

input_list.resize(0);

targets_list.resize(0);

nll_list.resize(0,0);

masks_list.resize(0);

continue;

}

// Resize internal variables

hidden_list.resize(ith_sample_in_sequence+1);

hidden_act_no_bias_list.resize(ith_sample_in_sequence+1);

if( hidden_layer2 )

{

hidden2_list.resize(ith_sample_in_sequence+1);

hidden2_act_no_bias_list.resize(ith_sample_in_sequence+1);

}

input_list.resize(ith_sample_in_sequence+1);

input_list[ith_sample_in_sequence].resize(input_layer->size);

targets_list.resize( target_layers.length() );

target_prediction_list.resize( target_layers.length() );

target_prediction_act_no_bias_list.resize( target_layers.length() );

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

targets_list[tar].resize( ith_sample_in_sequence+1);

targets_list[tar][ith_sample_in_sequence].resize(

target_layers[tar]->size);

target_prediction_list[tar].resize(

ith_sample_in_sequence+1);

target_prediction_act_no_bias_list[tar].resize(

ith_sample_in_sequence+1);

}

}

nll_list.resize(ith_sample_in_sequence+1,target_layers.length());

if( use_target_layers_masks )

{

masks_list.resize( target_layers.length() );

for( int tar=0; tar < target_layers.length(); tar++ )

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

masks_list[tar].resize( ith_sample_in_sequence+1 );

}

// Forward propagation

// Fetch right representation for input

clamp_units(input.subVec(0,inputsize_without_masks),

input_layer,

input_symbol_sizes);

input_list[ith_sample_in_sequence] << input_layer->expectation;

// Fetch right representation for target

sum_target_elements = 0;

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

if( use_target_layers_masks )

{

clamp_units(target.subVec(

sum_target_elements,

target_layers_n_of_target_elements[tar]),

target_layers[tar],

target_symbol_sizes[tar],

input.subVec(

inputsize_without_masks

+ sum_target_elements,

target_layers_n_of_target_elements[tar]),

masks_list[tar][ith_sample_in_sequence]

);

}

else

{

clamp_units(target.subVec(

sum_target_elements,

target_layers_n_of_target_elements[tar]),

target_layers[tar],

target_symbol_sizes[tar]);

}

targets_list[tar][ith_sample_in_sequence] <<

target_layers[tar]->expectation;

}

sum_target_elements += target_layers_n_of_target_elements[tar];

}

input_connections->fprop( input_list[ith_sample_in_sequence],

hidden_act_no_bias_list[ith_sample_in_sequence]);

if( ith_sample_in_sequence > 0 && dynamic_connections )

{

dynamic_connections->fprop(

hidden_list[ith_sample_in_sequence-1],

dynamic_act_no_bias_contribution );

hidden_act_no_bias_list[ith_sample_in_sequence] +=

dynamic_act_no_bias_contribution;

}

hidden_layer->fprop( hidden_act_no_bias_list[ith_sample_in_sequence],

hidden_list[ith_sample_in_sequence] );

if( hidden_layer2 )

{

hidden_connections->fprop(

hidden_list[ith_sample_in_sequence],

hidden2_act_no_bias_list[ith_sample_in_sequence]);

hidden_layer2->fprop(

hidden2_act_no_bias_list[ith_sample_in_sequence],

hidden2_list[ith_sample_in_sequence]

);

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_connections[tar]->fprop(

hidden2_list[ith_sample_in_sequence],

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence]

);

target_layers[tar]->fprop(

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence],

target_prediction_list[tar][

ith_sample_in_sequence] );

if( use_target_layers_masks )

target_prediction_list[tar][ ith_sample_in_sequence] *=

masks_list[tar][ith_sample_in_sequence];

}

}

}

else

{

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_connections[tar]->fprop(

hidden_list[ith_sample_in_sequence],

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence]

);

target_layers[tar]->fprop(

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence],

target_prediction_list[tar][

ith_sample_in_sequence] );

if( use_target_layers_masks )

target_prediction_list[tar][ ith_sample_in_sequence] *=

masks_list[tar][ith_sample_in_sequence];

}

}

}

sum_target_elements = 0;

for( int tar=0; tar < target_layers.length(); tar++ )

{

if( !fast_exact_is_equal(target_layers_weights[tar],0) )

{

target_layers[tar]->activation <<

target_prediction_act_no_bias_list[tar][

ith_sample_in_sequence];

target_layers[tar]->activation += target_layers[tar]->bias;

target_layers[tar]->setExpectation(

target_prediction_list[tar][

ith_sample_in_sequence]);

nll_list(ith_sample_in_sequence,tar) =

target_layers[tar]->fpropNLL(

targets_list[tar][ith_sample_in_sequence] );

train_costs[tar] += nll_list(ith_sample_in_sequence,tar);

// Normalize by the number of things to predict

if( use_target_layers_masks )

{

train_n_items[tar] += sum(

input.subVec( inputsize_without_masks

+ sum_target_elements,

target_layers_n_of_target_elements[tar]) );

}

else

train_n_items[tar]++;

}

if( use_target_layers_masks )

sum_target_elements +=

target_layers_n_of_target_elements[tar];

}

ith_sample_in_sequence++;

}

if( pb )

pb->update( stage + 1 - init_stage);

for(int i=0; i<train_costs.length(); i++)

{

if( !fast_exact_is_equal(target_layers_weights[i],0) )

train_costs[i] /= train_n_items[i];

else

train_costs[i] = MISSING_VALUE;

}

if(verbosity>0)

cout << "mean costs at stage " << stage <<

" = " << train_costs << endl;

stage++;

train_stats->update(train_costs);

}

if( pb )

{

delete pb;

pb = 0;

}

}

train_stats->finalize();

}
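train() and test() read the data set as one flat stream of rows, in which a row whose first input component equals end_of_sequence_symbol marks the end of a sequence; at that row train() calls recurrent_update() and clears all per-sequence lists. A minimal sketch of the same segmentation, with rows as std::vector<double> and split_sequences a hypothetical helper:

```cpp
#include <vector>

using Row = std::vector<double>;

// Splits a flat stream of rows into sequences. A delimiter row closes the
// current sequence (where train() calls recurrent_update()) and resets it.
std::vector<std::vector<Row>> split_sequences(const std::vector<Row>& rows,
                                              double end_of_sequence_symbol) {
    std::vector<std::vector<Row>> sequences;
    std::vector<Row> current;
    for (const Row& r : rows) {
        if (!r.empty() && r[0] == end_of_sequence_symbol) {
            sequences.push_back(current);
            current.clear();
            continue;
        }
        current.push_back(r);
    }
    if (!current.empty()) sequences.push_back(current);  // trailing rows
    return sequences;
}
```

The sketch returns rows after the last delimiter as a final sequence; in train() such rows never reach recurrent_update(), because the update is only triggered by a delimiter row.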

Reimplemented from PLearn::PLearner.

Definition at line 218 of file DynamicallyLinkedRBMsModel.h.

Vec PLearn::DynamicallyLinkedRBMsModel::bias_gradient [mutable, protected]

Stores bias gradient.

Definition at line 234 of file DynamicallyLinkedRBMsModel.h.

Referenced by makeDeepCopyFromShallowCopy(), and recurrent_update().

AutoVMatrix* PLearn::DynamicallyLinkedRBMsModel::data [protected]

Stores external data.

Definition at line 231 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate().

Vec PLearn::DynamicallyLinkedRBMsModel::dynamic_act_no_bias_contribution [mutable, protected]

Contribution of dynamic weights to hidden layer activation.

Definition at line 270 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), test(), and train().

The RBMConnection between the first hidden layers, through time.

Definition at line 100 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), forget(), generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), setLearningRate(), test(), and train().

Value of the first input component for end-of-sequence delimiter.

Definition at line 85 of file DynamicallyLinkedRBMsModel.h.

Referenced by declareOptions(), generate(), test(), and train().

TVec< Vec > PLearn::DynamicallyLinkedRBMsModel::hidden2_act_no_bias_list [mutable, protected]

Definition at line 251 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().

TVec< Vec > PLearn::DynamicallyLinkedRBMsModel::hidden2_list [mutable, protected]

List of second hidden layers values.

Definition at line 250 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().

TVec< Vec > PLearn::DynamicallyLinkedRBMsModel::hidden_act_no_bias_list [mutable, protected]

Definition at line 247 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().

The RBMConnection between the first and second hidden layers (optional).

Definition at line 103 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), forget(), generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), setLearningRate(), test(), and train().

Vec PLearn::DynamicallyLinkedRBMsModel::hidden_gradient [mutable, protected]

Stores hidden gradient of dynamic connections.

Definition at line 240 of file DynamicallyLinkedRBMsModel.h.

Referenced by makeDeepCopyFromShallowCopy(), and recurrent_update().

The hidden layer of the model.

Definition at line 94 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), forget(), generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), setLearningRate(), test(), and train().

The second hidden layer of the model (optional).

Definition at line 97 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), forget(), generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), setLearningRate(), test(), and train().

TVec< Vec > PLearn::DynamicallyLinkedRBMsModel::hidden_list [mutable, protected]

List of hidden layer values.

Definition at line 246 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().
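The `*_list` members hold one vector per time step of the current sequence. The sketch below shows that layout for a recurrent hidden layer. It rests on assumptions not taken from the PLearn source: the hidden pre-activation at step t is the input contribution plus a dynamic contribution from the hidden values at step t-1, the activation without bias is stored separately (as the name `hidden_act_no_bias_list` suggests), and units are sigmoid. Matrices are row-major `std::vector`s, and `matvec` and `forward_sequence` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: Mat[i][j]

// Computes W * x for a row-major matrix W.
Vec matvec(const Mat& W, const Vec& x)
{
    Vec y(W.size(), 0.0);
    for (std::size_t i = 0; i < W.size(); ++i) {
        assert(W[i].size() == x.size());
        for (std::size_t j = 0; j < x.size(); ++j)
            y[i] += W[i][j] * x[j];
    }
    return y;
}

// Hypothetical forward pass over one sequence. Afterwards,
// hidden_list[t] and hidden_act_no_bias_list[t] hold the values for step t.
void forward_sequence(const std::vector<Vec>& input_list,
                      const Mat& W_in, const Mat& W_dyn, const Vec& bias,
                      std::vector<Vec>& hidden_act_no_bias_list,
                      std::vector<Vec>& hidden_list)
{
    const std::size_t T = input_list.size();
    const std::size_t H = bias.size();
    assert(W_in.size() == H && W_dyn.size() == H);
    hidden_act_no_bias_list.assign(T, Vec(H, 0.0));
    hidden_list.assign(T, Vec(H, 0.0));
    for (std::size_t t = 0; t < T; ++t) {
        Vec act = matvec(W_in, input_list[t]);
        if (t > 0) {
            // Dynamic (through-time) contribution from the previous step.
            const Vec dyn = matvec(W_dyn, hidden_list[t - 1]);
            for (std::size_t i = 0; i < H; ++i)
                act[i] += dyn[i];
        }
        hidden_act_no_bias_list[t] = act;
        for (std::size_t i = 0; i < H; ++i)
            hidden_list[t][i] = 1.0 / (1.0 + std::exp(-(act[i] + bias[i])));
    }
}
```

Keeping the no-bias activations alongside the outputs lets a later backward pass reuse them without recomputing the matrix products.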

Vec PLearn::DynamicallyLinkedRBMsModel::hidden_temporal_gradient [mutable, protected]

Stores the hidden gradient of the dynamic connections coming from time t+1.

Definition at line 243 of file DynamicallyLinkedRBMsModel.h.

Referenced by makeDeepCopyFromShallowCopy(), and recurrent_update().
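In backpropagation through time, the gradient reaching the hidden values at step t combines the gradient computed at step t with a term coming back from step t+1 through the dynamic connections. The sketch below shows only that accumulation. It assumes a row-major dynamic weight matrix `W_dyn` used as "pre-activation at t+1 += W_dyn * hidden at t", so the temporal term is `W_dyn^T` times the pre-activation gradient at t+1. The names are hypothetical, and this is not PLearn's `recurrent_update()`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // row-major: W[i][j]

// Returns W^T * g for a row-major matrix W.
Vec transpose_matvec(const Mat& W, const Vec& g)
{
    assert(W.size() == g.size());
    Vec y(W.empty() ? 0 : W[0].size(), 0.0);
    for (std::size_t i = 0; i < W.size(); ++i)
        for (std::size_t j = 0; j < y.size(); ++j)
            y[j] += W[i][j] * g[i];
    return y;
}

// Hypothetical: total gradient on the hidden values at step t, i.e. the
// gradient computed at step t plus the temporal gradient from step t+1.
Vec total_hidden_gradient(const Vec& hidden_gradient,
                          const Mat& W_dyn,
                          const Vec& next_preactivation_gradient)
{
    const Vec hidden_temporal_gradient =
        transpose_matvec(W_dyn, next_preactivation_gradient);
    assert(hidden_temporal_gradient.size() == hidden_gradient.size());
    Vec total(hidden_gradient.size());
    for (std::size_t i = 0; i < total.size(); ++i)
        total[i] = hidden_gradient[i] + hidden_temporal_gradient[i];
    return total;
}
```

Processing steps from the last one backwards lets each step's pre-activation gradient feed the temporal term of the step before it.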

The RBMConnection from input_layer to hidden_layer.

Definition at line 106 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), forget(), generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), setLearningRate(), test(), and train().

The input layer of the model.

Definition at line 88 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), forget(), generate(), makeDeepCopyFromShallowCopy(), setLearningRate(), test(), and train().

TVec< Vec > PLearn::DynamicallyLinkedRBMsModel::input_list [mutable, protected]

List of input values.

Definition at line 258 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().

Number of symbols for each symbolic field of train_set.

Definition at line 118 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), generate(), makeDeepCopyFromShallowCopy(), test(), and train().
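Symbol counts like these determine how each field of a row is expanded into layer units. The sketch below assumes one-hot encoding: a field with k symbols occupies k units, and the unit at the symbol's index is set to 1. As a further assumption for this sketch only, a negative size marks a real-valued field that is copied into a single unit. The function name `encode_fields` is hypothetical and does not reproduce `clamp_units()`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical encoder: expands one row of field values into layer units.
// Assumption: symbol_sizes[i] > 0 means field i is a symbol index in
// [0, symbol_sizes[i]) and is one-hot encoded over that many units;
// a negative size means field i is real-valued and copied as one unit.
std::vector<double> encode_fields(const std::vector<double>& fields,
                                  const std::vector<int>& symbol_sizes)
{
    assert(fields.size() == symbol_sizes.size());
    std::vector<double> units;
    for (std::size_t i = 0; i < fields.size(); ++i) {
        const int ss = symbol_sizes[i];
        if (ss < 0) {
            units.push_back(fields[i]);
        } else {
            const int symbol = static_cast<int>(fields[i]);
            assert(symbol >= 0 && symbol < ss);
            std::vector<double> one_hot(static_cast<std::size_t>(ss), 0.0);
            one_hot[static_cast<std::size_t>(symbol)] = 1.0;
            units.insert(units.end(), one_hot.begin(), one_hot.end());
        }
    }
    return units;
}
```

For example, fields `{2, 0.25}` with sizes `{3, -1}` become the four units `{0, 0, 1, 0.25}`.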

TVec< TVec< Vec > > PLearn::DynamicallyLinkedRBMsModel::masks_list [mutable, protected]

List of all targets' masks.

Definition at line 267 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().

Mat PLearn::DynamicallyLinkedRBMsModel::nll_list [mutable, protected]

List of the negative log-likelihoods (NLL) of the input samples in a sequence.

Definition at line 264 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().
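Each entry can be read as the negative log-likelihood of an observed symbol under a predicted distribution, -log p(observed symbol). The sketch below assumes, beyond what this page states, that each target layer yields a probability vector over symbols and that the matrix is indexed as (time step, target layer). `symbol_nll` and `sequence_nll` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Probs = std::vector<double>;

// NLL of the observed symbol under a predicted probability vector
// (assumed non-negative and summing to 1).
double symbol_nll(const Probs& predicted_probs, std::size_t observed_symbol)
{
    assert(observed_symbol < predicted_probs.size());
    assert(predicted_probs[observed_symbol] > 0.0);
    return -std::log(predicted_probs[observed_symbol]);
}

// Hypothetical layout: nll[t][k] is the NLL of target layer k at step t.
std::vector<std::vector<double>> sequence_nll(
    const std::vector<std::vector<Probs>>& predictions,      // [t][k] -> probs
    const std::vector<std::vector<std::size_t>>& observed)   // [t][k] -> symbol
{
    assert(predictions.size() == observed.size());
    std::vector<std::vector<double>> nll(predictions.size());
    for (std::size_t t = 0; t < predictions.size(); ++t) {
        assert(predictions[t].size() == observed[t].size());
        for (std::size_t k = 0; k < predictions[t].size(); ++k)
            nll[t].push_back(symbol_nll(predictions[t][k], observed[t][k]));
    }
    return nll;
}
```

Summing a column over time steps then gives the NLL of the whole sequence for that target layer.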

The learning rate used during the recurrent phase.

Definition at line 72 of file DynamicallyLinkedRBMsModel.h.

Referenced by declareOptions(), and train().

The RBMConnection from each target layer to hidden_layer.

Definition at line 109 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), forget(), generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), setLearningRate(), test(), and train().

The target layers of the model.

Definition at line 91 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), forget(), generate(), getTestCostNames(), makeDeepCopyFromShallowCopy(), outputsize(), recurrent_update(), setLearningRate(), test(), and train().

Number of elements in the target part of a VMatrix associated with each target layer.

Definition at line 115 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), generate(), makeDeepCopyFromShallowCopy(), test(), and train().
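Given these per-layer element counts, the target part of a row can be cut into consecutive slices, one per target layer. The sketch below assumes the slices appear in target-layer order with no gaps. `split_targets` is a hypothetical name, and `std::vector` stands in for PLearn's `Vec`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical: splits the target part of one row into one slice per target
// layer, where n_elements[k] is the number of target elements of layer k.
std::vector<std::vector<double>> split_targets(const std::vector<double>& target_part,
                                               const std::vector<int>& n_elements)
{
    std::vector<std::vector<double>> slices;
    std::size_t offset = 0;
    for (int n : n_elements) {
        assert(n >= 0 && offset + static_cast<std::size_t>(n) <= target_part.size());
        auto first = target_part.begin() + static_cast<std::ptrdiff_t>(offset);
        slices.emplace_back(first, first + n);
        offset += static_cast<std::size_t>(n);
    }
    assert(offset == target_part.size());  // every element belongs to some layer
    return slices;
}
```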

The training weight of each target layer.

Definition at line 78 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), generate(), recurrent_update(), test(), and train().
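One natural reading of these weights, assumed here rather than confirmed by this page, is that each target layer's cost is scaled by its weight before the costs are summed into a single training objective. `weighted_cost` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical: combines per-target-layer costs into one training cost.
double weighted_cost(const std::vector<double>& layer_costs,
                     const std::vector<double>& target_layers_weights)
{
    assert(layer_costs.size() == target_layers_weights.size());
    double total = 0.0;
    for (std::size_t k = 0; k < layer_costs.size(); ++k)
        total += target_layers_weights[k] * layer_costs[k];
    return total;
}
```

Setting a weight to 0 removes that layer's cost from the objective without changing the model's structure.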

TVec< TVec< Vec > > PLearn::DynamicallyLinkedRBMsModel::target_prediction_act_no_bias_list [mutable, protected]

Definition at line 255 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().

TVec< TVec< Vec > > PLearn::DynamicallyLinkedRBMsModel::target_prediction_list [mutable, protected]

List of target prediction values.

Definition at line 254 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().

Number of symbols for each symbolic field of train_set.

Definition at line 121 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), generate(), makeDeepCopyFromShallowCopy(), test(), and train().

TVec< TVec< Vec > > PLearn::DynamicallyLinkedRBMsModel::targets_list [mutable, protected]

List of target values.

Definition at line 261 of file DynamicallyLinkedRBMsModel.h.

Referenced by generate(), makeDeepCopyFromShallowCopy(), recurrent_update(), test(), and train().

Indicates whether a mask specifying which targets to predict is present in the input part of the VMatrix dataset.

Definition at line 82 of file DynamicallyLinkedRBMsModel.h.

Referenced by build_(), declareOptions(), generate(), recurrent_update(), test(), and train().
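When such a mask is present, it can exclude some targets from the cost. The sketch below assumes, hypothetically, a per-element 0/1 mask aligned with the target vector. It uses squared error as a stand-in cost so that masked-out elements contribute nothing. It illustrates masking in general and does not reproduce PLearn's mask handling or its actual costs. `masked_squared_error` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical: squared error restricted to elements whose mask is nonzero.
double masked_squared_error(const std::vector<double>& prediction,
                            const std::vector<double>& target,
                            const std::vector<double>& mask)
{
    assert(prediction.size() == target.size() && target.size() == mask.size());
    double cost = 0.0;
    for (std::size_t i = 0; i < target.size(); ++i) {
        if (mask[i] != 0.0) {
            const double diff = prediction[i] - target[i];
            cost += diff * diff;
        }
    }
    return cost;
}
```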

Vec PLearn::DynamicallyLinkedRBMsModel::visi_bias_gradient [mutable, protected]

Stores the bias gradient.

Definition at line 237 of file DynamicallyLinkedRBMsModel.h.

Referenced by makeDeepCopyFromShallowCopy(), and recurrent_update().

1.7.4