PLearn 0.1

Layer in an RBM formed with Gaussian units.

#include <RBMGaussianLayer.h>

Public Member Functions | |

| RBMGaussianLayer () | |

| Default constructor. | |

| RBMGaussianLayer (int the_size) | |

| Constructor from the number of units. | |

| virtual void | getUnitActivations (int i, PP< RBMParameters > rbmp, int offset=0) |

| Uses "rbmp" to obtain the activations of unit "i" of this layer. | |

| virtual void | getAllActivations (PP< RBMParameters > rbmp, int offset=0) |

| Uses "rbmp" to obtain the activations of all units in this layer. | |

| virtual void | generateSample () |

| compute a sample, and update the sample field | |

| virtual void | computeExpectation () |

| compute the expectation | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient) |

| back-propagates the output gradient to the input | |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual RBMGaussianLayer * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

| RBMGaussianLayer (real the_learning_rate=0.) | |

| Default constructor. | |

| RBMGaussianLayer (int the_size, real the_learning_rate=0.) | |

| Constructor from the number of units. | |

| RBMGaussianLayer (int the_size, real the_learning_rate=0., bool do_share_quad_coeff=false) | |

| Constructor from the number of units, with an additional option (do_share_quad_coeff). | |

| virtual void | generateSample () |

| compute a sample, and update the sample field | |

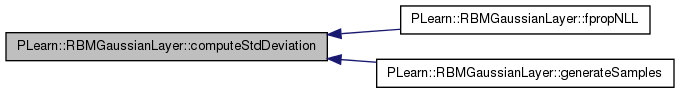

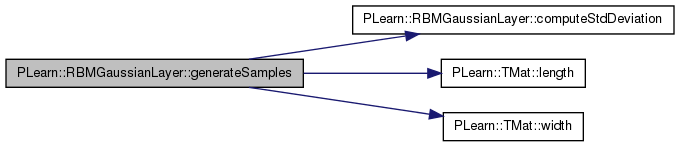

| virtual void | generateSamples () |

| Generate a mini-batch set of samples. | |

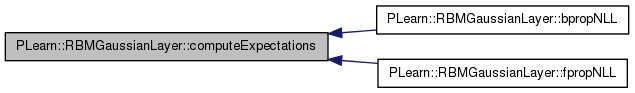

| virtual void | computeExpectation () |

| compute the expectation | |

| virtual void | computeExpectations () |

| compute the batch expectation | |

| virtual void | computeStdDeviation () |

| compute the standard deviation | |

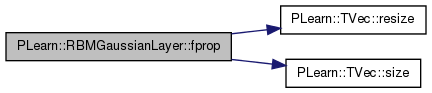

| virtual void | fprop (const Vec &input, Vec &output) const |

| forward propagation | |

| virtual void | bpropUpdate (const Vec &input, const Vec &output, Vec &input_gradient, const Vec &output_gradient, bool accumulate=false) |

| back-propagates the output gradient to the input | |

| virtual void | bpropUpdate (const Mat &inputs, const Mat &outputs, Mat &input_gradients, const Mat &output_gradients, bool accumulate=false) |

| Back-propagate the output gradient to the input, and update parameters. | |

| virtual void | accumulatePosStats (const Vec &pos_values) |

| Accumulates positive phase statistics. | |

| virtual void | accumulateNegStats (const Vec &neg_values) |

| Accumulates negative phase statistics. | |

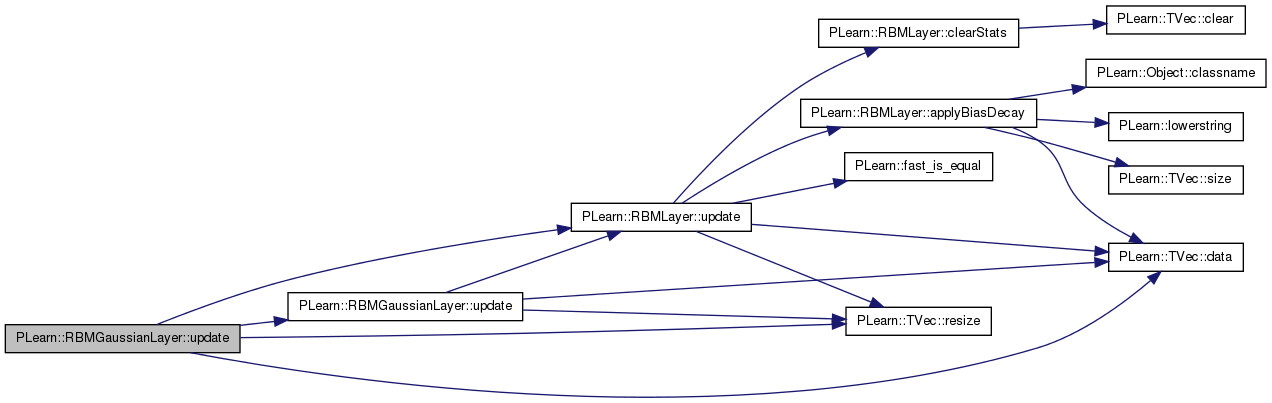

| virtual void | update () |

| Update parameters according to accumulated statistics. | |

| virtual void | update (const Vec &pos_values, const Vec &neg_values) |

| Update parameters according to one pair of vectors. | |

| virtual void | update (const Mat &pos_values, const Mat &neg_values) |

| Batch version. | |

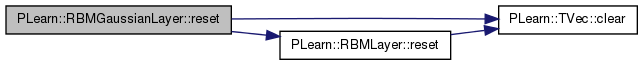

| virtual void | reset () |

| resets activations, sample, expectation and sigma fields | |

| virtual void | clearStats () |

| resets the statistics and counts | |

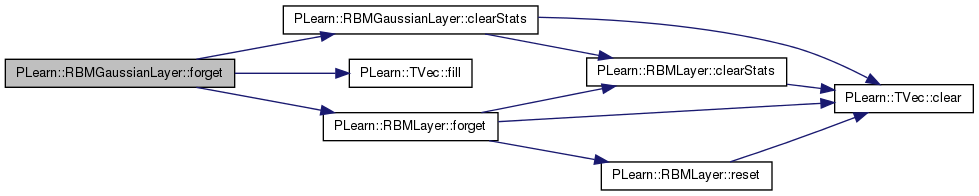

| virtual void | forget () |

| forgets everything | |

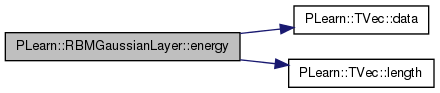

| virtual real | energy (const Vec &unit_values) const |

| compute quad_coeff.^2' unit_values.^2 - bias' unit_values | |

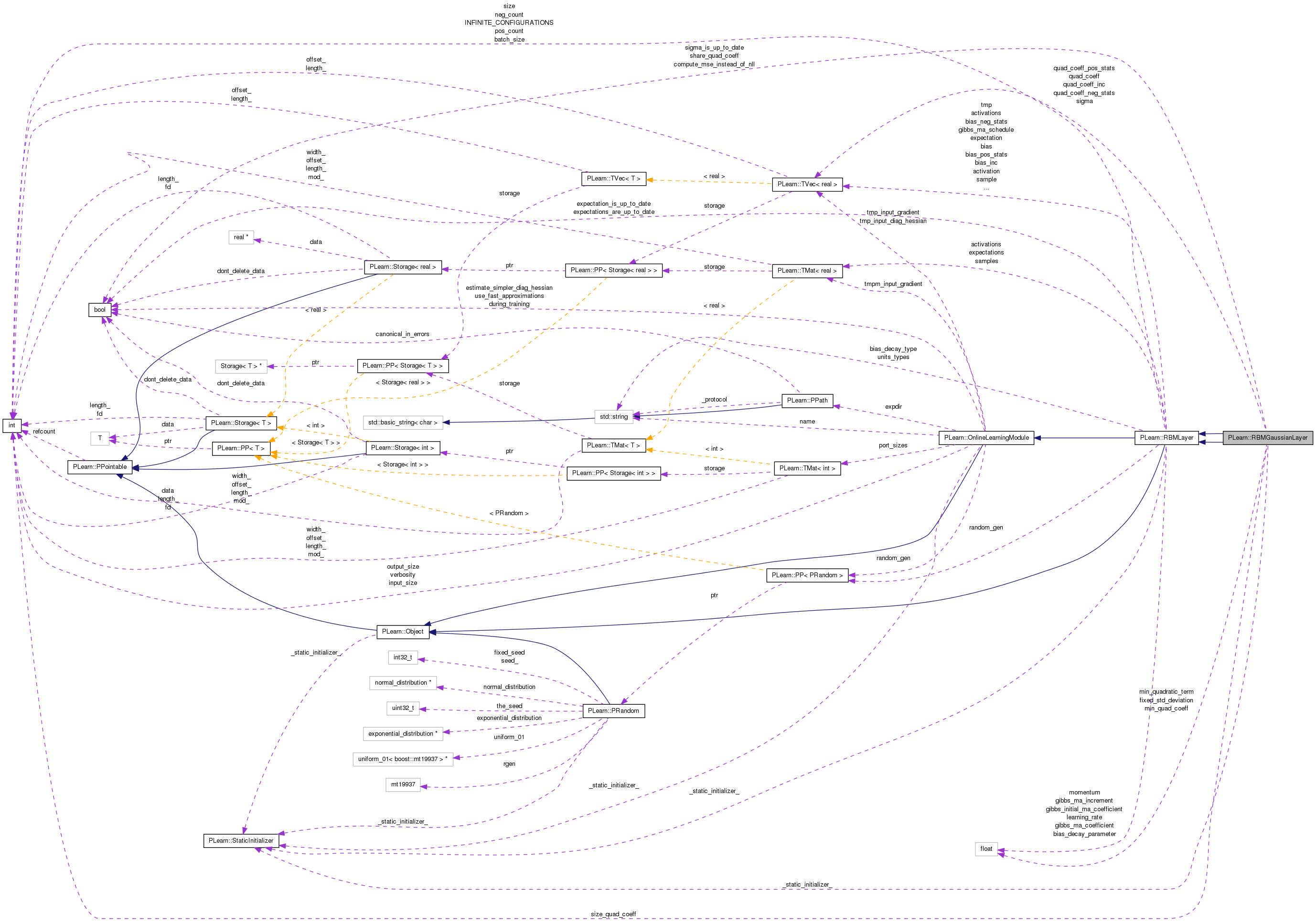

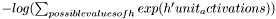

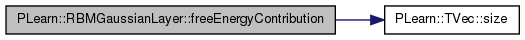

| virtual real | freeEnergyContribution (const Vec &unit_activations) const |

| Computes $\sum_{i=0}^{n-1}\left(\log q_i - \frac{a_i^2}{4 q_i^2}\right) - \frac{n}{2}\log\pi$, where $a_i$ is unit_activations[i], $q_i$ is the quadratic coefficient of unit $i$ and $n$ is size. This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed. | |

| virtual int | getConfigurationCount () |

| Returns the number of different configurations the layer can be in. | |

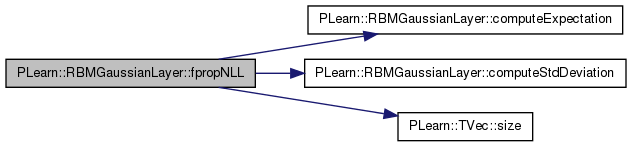

| virtual real | fpropNLL (const Vec &target) |

| Computes the negative log-likelihood of target given the internal activations of the layer. | |

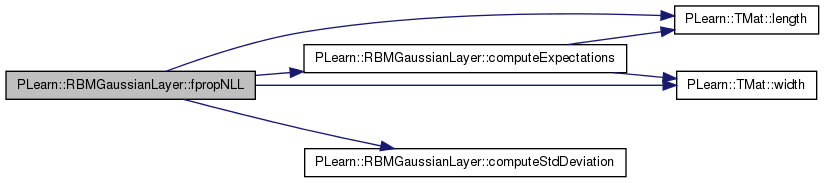

| virtual void | fpropNLL (const Mat &targets, const Mat &costs_column) |

| virtual void | bpropNLL (const Vec &target, real nll, Vec &bias_gradient) |

| Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations. | |

| virtual void | bpropNLL (const Mat &targets, const Mat &costs_column, Mat &bias_gradients) |

Static Public Member Functions | |

| static string | _classname_ () |

| static OptionList & | _getOptionList_ () |

| static RemoteMethodMap & | _getRemoteMethodMap_ () |

| static Object * | _new_instance_for_typemap_ () |

| static bool | _isa_ (const Object *o) |

| static void | _static_initialize_ () |

| static const PPath & | declaringFile () |

Public Attributes | |

| real | min_quadratic_term |

| real | min_quad_coeff |

| bool | share_quad_coeff |

| int | size_quad_coeff |

| Number of units when share_quad_coeff is False or 1 when share_quad_coeff is True. | |

| real | fixed_std_deviation |

| bool | compute_mse_instead_of_nll |

| Indication that fpropNLL should compute the MSE instead of the NLL. | |

Static Public Attributes | |

| static StaticInitializer | _static_initializer_ |

Static Protected Member Functions | |

| static void | declareOptions (OptionList &ol) |

| Declares the class options. | |

Protected Attributes | |

| Vec | quad_coeff |

| Quadratic coefficients. | |

| Vec | quad_coeff_pos_stats |

| Vec | quad_coeff_neg_stats |

| Vec | quad_coeff_inc |

| Vec | sigma |

| Stores the standard deviations. | |

| bool | sigma_is_up_to_date |

Private Types | |

| typedef RBMLayer | inherited |

Private Member Functions | |

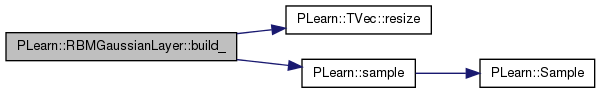

| void | build_ () |

| This does the actual building. | |

Layer in an RBM formed with Gaussian units.

Definition at line 53 of file DEPRECATED/RBMGaussianLayer.h.

typedef RBMLayer PLearn::RBMGaussianLayer::inherited [private] |

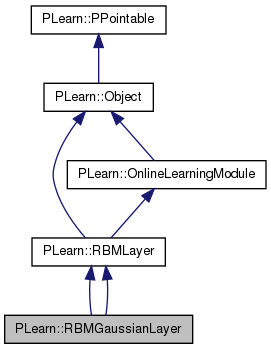

Reimplemented from PLearn::RBMLayer.

Definition at line 55 of file DEPRECATED/RBMGaussianLayer.h.

typedef RBMLayer PLearn::RBMGaussianLayer::inherited [private] |

Reimplemented from PLearn::RBMLayer.

Definition at line 54 of file RBMGaussianLayer.h.

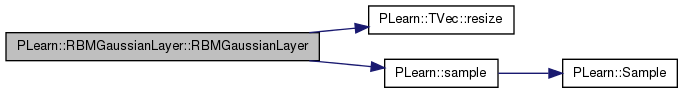

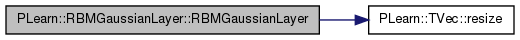

| PLearn::RBMGaussianLayer::RBMGaussianLayer | ( | ) |

| PLearn::RBMGaussianLayer::RBMGaussianLayer | ( | int | the_size | ) |

Constructor from the number of units.

Definition at line 55 of file DEPRECATED/RBMGaussianLayer.cc.

References PLearn::TVec< T >::resize(), and PLearn::sample().

{

size = the_size;

units_types = string( the_size, 'q' );

activations.resize( 2*the_size );

sample.resize( the_size );

expectation.resize( the_size );

expectation_is_up_to_date = false;

}

| PLearn::RBMGaussianLayer::RBMGaussianLayer | ( | real | the_learning_rate = 0. | ) |

Default constructor.

Definition at line 53 of file RBMGaussianLayer.cc.

:

inherited( the_learning_rate ),

min_quad_coeff( 0. ),

share_quad_coeff( false ),

size_quad_coeff( 0 ),

fixed_std_deviation( -1 ),

compute_mse_instead_of_nll( false ),

sigma_is_up_to_date( false )

{

}

| PLearn::RBMGaussianLayer::RBMGaussianLayer | ( | int | the_size, |

| real | the_learning_rate = 0. |

||

| ) |

Constructor from the number of units.

Definition at line 64 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::activation, PLearn::RBMLayer::bias, PLearn::RBMLayer::bias_neg_stats, PLearn::RBMLayer::bias_pos_stats, PLearn::RBMLayer::expectation, PLearn::TVec< T >::resize(), PLearn::RBMLayer::sample, and PLearn::RBMLayer::size.

:

inherited( the_learning_rate ),

min_quad_coeff( 0. ),

share_quad_coeff( false ),

size_quad_coeff( 0 ),

fixed_std_deviation( -1 ),

compute_mse_instead_of_nll( false ),

quad_coeff( the_size, 1. ), // or 1./M_SQRT2 ?

quad_coeff_pos_stats( the_size ),

quad_coeff_neg_stats( the_size ),

sigma( the_size ),

sigma_is_up_to_date( false )

{

size = the_size;

activation.resize( the_size );

sample.resize( the_size );

expectation.resize( the_size );

bias.resize( the_size );

bias_pos_stats.resize( the_size );

bias_neg_stats.resize( the_size );

}

| PLearn::RBMGaussianLayer::RBMGaussianLayer | ( | int | the_size, |

| real | the_learning_rate = 0., |

||

| bool | do_share_quad_coeff = false |

||

| ) |

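Constructor from the number of units, with an additional option: when do_share_quad_coeff is true, all units share a single quadratic coefficient (size_quad_coeff = 1); otherwise each unit has its own.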

Definition at line 86 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::activation, PLearn::RBMLayer::bias, PLearn::RBMLayer::bias_neg_stats, PLearn::RBMLayer::bias_pos_stats, PLearn::RBMLayer::expectation, quad_coeff, PLearn::TVec< T >::resize(), PLearn::RBMLayer::sample, share_quad_coeff, sigma, PLearn::RBMLayer::size, and size_quad_coeff.

:

inherited( the_learning_rate ),

min_quad_coeff( 0. ),

fixed_std_deviation( -1 ),

compute_mse_instead_of_nll( false ),

quad_coeff_pos_stats( the_size ),

quad_coeff_neg_stats( the_size ),

sigma_is_up_to_date( false )

{

size = the_size;

activation.resize( the_size );

sample.resize( the_size );

expectation.resize( the_size );

bias.resize( the_size );

bias_pos_stats.resize( the_size );

bias_neg_stats.resize( the_size );

share_quad_coeff = do_share_quad_coeff;

if ( share_quad_coeff )

size_quad_coeff=1;

else

size_quad_coeff=size;

quad_coeff=Vec(size_quad_coeff,1.);

sigma=Vec(size_quad_coeff);

}
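The effect of do_share_quad_coeff on the coefficient storage can be illustrated with a minimal, self-contained sketch. The struct QuadCoeffs and its coeff() helper are hypothetical stand-ins written for this example, not PLearn code; std::vector<double> replaces PLearn's Vec and double replaces real. In the listing above, sigma is sized the same way as quad_coeff.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the quadratic-coefficient storage of
// RBMGaussianLayer (not the PLearn class): either one coefficient shared by
// all units, or one coefficient per unit.
struct QuadCoeffs {
    bool share_quad_coeff;          // mirrors do_share_quad_coeff
    int size_quad_coeff;            // 1 if shared, otherwise the layer size
    std::vector<double> quad_coeff; // every coefficient starts at 1

    QuadCoeffs(int size, bool do_share_quad_coeff)
        : share_quad_coeff(do_share_quad_coeff),
          size_quad_coeff(do_share_quad_coeff ? 1 : size),
          quad_coeff(static_cast<std::size_t>(size_quad_coeff), 1.0) {
        assert(size > 0);
    }

    // Coefficient that applies to unit i.
    double coeff(int i) const {
        return share_quad_coeff ? quad_coeff[0]
                                : quad_coeff[static_cast<std::size_t>(i)];
    }
};
```

Routing every lookup through one helper keeps the shared and per-unit cases in a single code path; the PLearn listings instead branch on share_quad_coeff explicitly in each method.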

| string PLearn::RBMGaussianLayer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

| static string PLearn::RBMGaussianLayer::_classname_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| OptionList & PLearn::RBMGaussianLayer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

| static OptionList& PLearn::RBMGaussianLayer::_getOptionList_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| RemoteMethodMap & PLearn::RBMGaussianLayer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

| static RemoteMethodMap& PLearn::RBMGaussianLayer::_getRemoteMethodMap_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| bool PLearn::RBMGaussianLayer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

| static bool PLearn::RBMGaussianLayer::_isa_ | ( | const Object * | o | ) | [static] |

Reimplemented from PLearn::RBMLayer.

| static Object* PLearn::RBMGaussianLayer::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

| Object * PLearn::RBMGaussianLayer::_new_instance_for_typemap_ | ( | ) | [static] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

| void PLearn::RBMGaussianLayer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

| static void PLearn::RBMGaussianLayer::_static_initialize_ | ( | ) | [static] |

Reimplemented from PLearn::RBMLayer.

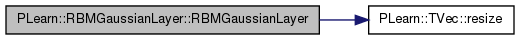

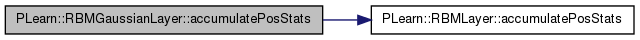

| void PLearn::RBMGaussianLayer::accumulateNegStats | ( | const Vec & | neg_values | ) | [virtual] |

Accumulates negative phase statistics.

Reimplemented from PLearn::RBMLayer.

Definition at line 467 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::accumulateNegStats(), fixed_std_deviation, i, quad_coeff, quad_coeff_neg_stats, share_quad_coeff, and PLearn::RBMLayer::size.

{

if ( fixed_std_deviation <= 0 )

{

if (share_quad_coeff)

for( int i=0 ; i<size ; i++ )

{

real x_i = neg_values[i];

quad_coeff_neg_stats[i] += 2 * quad_coeff[0] * x_i * x_i;

}

else

for( int i=0 ; i<size ; i++ )

{

real x_i = neg_values[i];

quad_coeff_neg_stats[i] += 2 * quad_coeff[i] * x_i * x_i;

}

}

inherited::accumulateNegStats( neg_values );

}

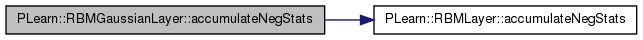

| void PLearn::RBMGaussianLayer::accumulatePosStats | ( | const Vec & | pos_values | ) | [virtual] |

Accumulates positive phase statistics.

Reimplemented from PLearn::RBMLayer.

Definition at line 446 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::accumulatePosStats(), fixed_std_deviation, i, quad_coeff, quad_coeff_pos_stats, share_quad_coeff, and PLearn::RBMLayer::size.

{

if ( fixed_std_deviation <= 0 )

{

if (share_quad_coeff)

for( int i=0 ; i<size ; i++ )

{

real x_i = pos_values[i];

quad_coeff_pos_stats[i] += 2 * quad_coeff[0] * x_i * x_i;

}

else

for( int i=0 ; i<size ; i++ )

{

real x_i = pos_values[i];

quad_coeff_pos_stats[i] += 2 * quad_coeff[i] * x_i * x_i;

}

}

inherited::accumulatePosStats( pos_values );

}
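When the standard deviation is learned (fixed_std_deviation <= 0), the statistic added for unit i in both accumulatePosStats() and accumulateNegStats() is 2 a_i x_i², which is the derivative of the quadratic energy term a_i² x_i² with respect to a_i. A minimal sketch of that loop; the function name accumulateQuadStats is hypothetical, and std::vector<double> stands in for Vec.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper mirroring the loop in accumulatePosStats (and
// accumulateNegStats): adds d(a_i^2 x_i^2)/d(a_i) = 2 a_i x_i^2 per unit.
// The statistics vector keeps one entry per unit even when the quadratic
// coefficient is shared, as in the listing above.
void accumulateQuadStats(const std::vector<double>& values,
                         const std::vector<double>& quad_coeff,
                         bool share_quad_coeff,
                         std::vector<double>& stats) {
    assert(stats.size() == values.size() && !quad_coeff.empty());
    for (std::size_t i = 0; i < values.size(); ++i) {
        const double a_i = share_quad_coeff ? quad_coeff[0] : quad_coeff[i];
        const double x_i = values[i];
        stats[i] += 2.0 * a_i * x_i * x_i;
    }
}
```

The positive and negative accumulators differ only in which values are passed in; the later parameter update presumably uses the difference between the two accumulators.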

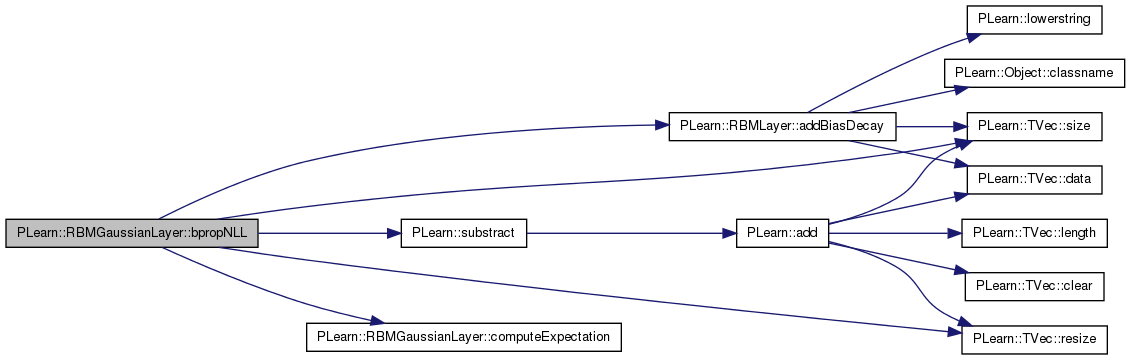

| void PLearn::RBMGaussianLayer::bpropNLL | ( | const Vec & | target, |

| real | nll, | ||

| Vec & | bias_gradient | ||

| ) | [virtual] |

Computes the gradient of the negative log-likelihood of target with respect to the layer's bias, given the internal activations.

Reimplemented from PLearn::RBMLayer.

Definition at line 832 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::addBiasDecay(), compute_mse_instead_of_nll, computeExpectation(), PLearn::RBMLayer::expectation, PLearn::OnlineLearningModule::input_size, PLASSERT, PLearn::TVec< T >::resize(), PLearn::TVec< T >::size(), and PLearn::substract().

{

computeExpectation();

PLASSERT( target.size() == input_size );

bias_gradient.resize( size );

// bias_gradient = expectation - target

substract(expectation, target, bias_gradient);

if( compute_mse_instead_of_nll )

bias_gradient *= 2.;

addBiasDecay(bias_gradient);

}
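The "expectation - target" gradient follows from the model, assuming the activation is input + bias as in fprop(): the NLL term for unit i is a_i²(t_i − e_i)² plus terms that do not depend on b_i, and e_i = (input_i + b_i)/(2a_i²), so the derivative with respect to b_i is −2a_i²(t_i − e_i) · 1/(2a_i²) = e_i − t_i. A hedged sketch that leaves out addBiasDecay(); the function name nllBiasGradient is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of bpropNLL without bias decay.
std::vector<double> nllBiasGradient(const std::vector<double>& expectation,
                                    const std::vector<double>& target,
                                    bool compute_mse_instead_of_nll) {
    assert(expectation.size() == target.size());
    std::vector<double> grad(target.size());
    for (std::size_t i = 0; i < target.size(); ++i) {
        grad[i] = expectation[i] - target[i];  // expectation - target
        if (compute_mse_instead_of_nll)
            grad[i] *= 2.0;                    // same doubling as the listing
    }
    return grad;
}
```

In the MSE branch, 2(e_i − t_i) is the derivative of (t_i − e_i)² with respect to the expectation rather than the bias; the listing applies it to the bias unchanged.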

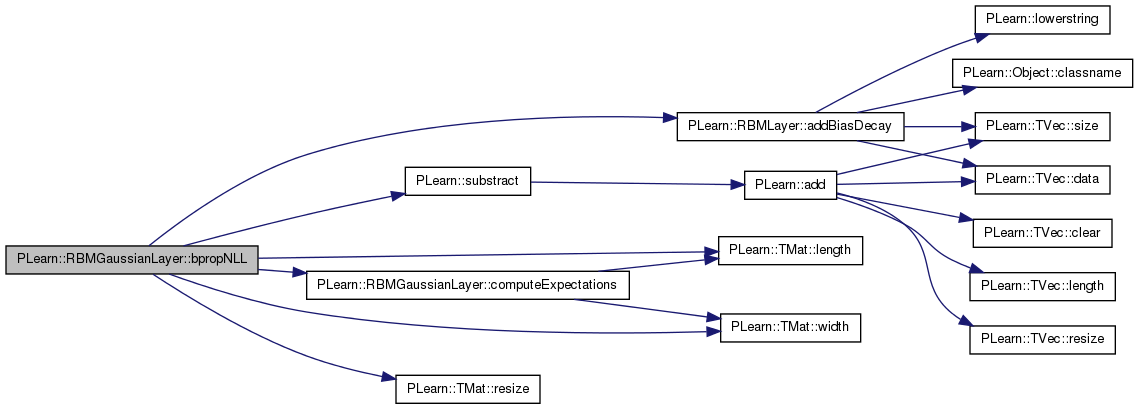

| void PLearn::RBMGaussianLayer::bpropNLL | ( | const Mat & | targets, |

| const Mat & | costs_column, | ||

| Mat & | bias_gradients | ||

| ) | [virtual] |

Reimplemented from PLearn::RBMLayer.

Definition at line 848 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::addBiasDecay(), PLearn::RBMLayer::batch_size, compute_mse_instead_of_nll, computeExpectations(), PLearn::RBMLayer::expectations, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), PLASSERT, PLearn::TMat< T >::resize(), PLearn::substract(), and PLearn::TMat< T >::width().

{

computeExpectations();

PLASSERT( targets.width() == input_size );

PLASSERT( targets.length() == batch_size );

PLASSERT( costs_column.width() == 1 );

PLASSERT( costs_column.length() == batch_size );

bias_gradients.resize( batch_size, size );

// bias_gradients = expectations - targets

substract(expectations, targets, bias_gradients);

if( compute_mse_instead_of_nll )

bias_gradients *= 2.;

addBiasDecay(bias_gradients);

}

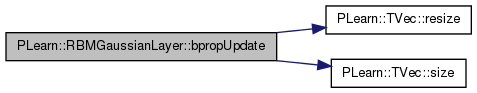

| void PLearn::RBMGaussianLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient | ||

| ) | [virtual] |

back-propagates the output gradient to the input

Implements PLearn::RBMLayer.

Definition at line 108 of file DEPRECATED/RBMGaussianLayer.cc.

References i, PLASSERT, PLearn::TVec< T >::resize(), and PLearn::TVec< T >::size().

{

PLASSERT( input.size() == 2 * size );

PLASSERT( output.size() == size );

PLASSERT( output_gradient.size() == size );

input_gradient.resize( 2 * size ) ;

for( int i=0 ; i<size ; ++i ) {

input_gradient[2*i] = output_gradient[i] ;

input_gradient[2*i+1] = 0. ;

}

}
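In the deprecated class, activations holds 2*size entries. Judging from generateSample() and computeExpectation(), entry 2i is the mean of unit i and entry 2i+1 its standard deviation. Because the output is just the mean, the gradient is copied to the even positions and the odd positions receive zero. A minimal sketch of that layout; the function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the DEPRECATED bpropUpdate. The input holds
// interleaved (mean, standard deviation) pairs, so only the mean entries
// (even indices) receive gradient.
std::vector<double> interleavedInputGradient(
        const std::vector<double>& input,
        const std::vector<double>& output_gradient) {
    const std::size_t size = output_gradient.size();
    assert(input.size() == 2 * size);
    std::vector<double> input_gradient(2 * size, 0.0);
    for (std::size_t i = 0; i < size; ++i)
        input_gradient[2 * i] = output_gradient[i];  // d output_i / d mean_i = 1
    return input_gradient;
}
```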

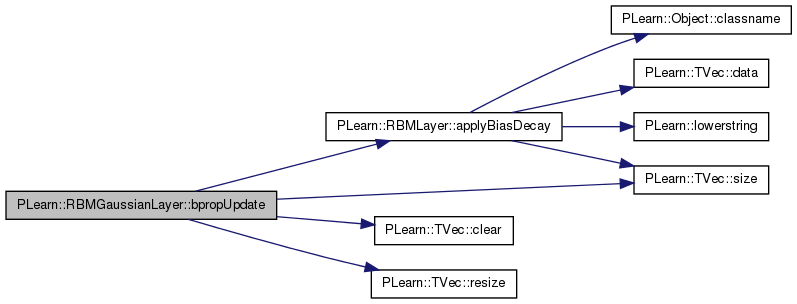

| void PLearn::RBMGaussianLayer::bpropUpdate | ( | const Vec & | input, |

| const Vec & | output, | ||

| Vec & | input_gradient, | ||

| const Vec & | output_gradient, | ||

| bool | accumulate = false |

||

| ) | [virtual] |

back-propagates the output gradient to the input

Implements PLearn::RBMLayer.

Definition at line 240 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::applyBiasDecay(), PLearn::RBMLayer::bias, PLearn::RBMLayer::bias_inc, PLearn::TVec< T >::clear(), i, PLearn::RBMLayer::learning_rate, PLearn::RBMLayer::momentum, PLASSERT, PLASSERT_MSG, quad_coeff, PLearn::TVec< T >::resize(), share_quad_coeff, PLearn::RBMLayer::size, and PLearn::TVec< T >::size().

{

PLASSERT( input.size() == size );

PLASSERT( output.size() == size );

PLASSERT( output_gradient.size() == size );

if( accumulate )

{

PLASSERT_MSG( input_gradient.size() == size,

"Cannot resize input_gradient AND accumulate into it" );

}

else

{

input_gradient.resize( size );

input_gradient.clear();

}

if( momentum != 0. )

{

bias_inc.resize( size );

//quad_coeff_inc.resize( size );//quad_coeff_inc.resize( 1 );

}

// real two_lr = 2 * learning_rate;

real a_i = quad_coeff[0];

for( int i=0 ; i<size ; ++i )

{

if(!share_quad_coeff)

a_i = quad_coeff[i];

real in_grad_i = output_gradient[i] / (2 * a_i * a_i);

input_gradient[i] += in_grad_i;

if( momentum == 0. )

{

// bias -= learning_rate * input_gradient

bias[i] -= learning_rate * in_grad_i;

/* For the moment, we do not want to change the quadratic

coefficient during the gradient descent phase.

// update the quadratic coefficient:

// a_i -= learning_rate * out_grad_i * (b_i + input_i) / a_i^3

// (or a_i -= 2 * learning_rate * in_grad_i * (b_i + input_i) / a_i

a_i -= two_lr * in_grad_i * (bias[i] + input[i])

/ a_i;

if( a_i < min_quad_coeff )

a_i = min_quad_coeff;

*/

}

else

{

// bias_inc = momentum * bias_inc - learning_rate * input_gradient

// bias += bias_inc

bias_inc[i] = momentum * bias_inc[i] - learning_rate * in_grad_i;

bias[i] += bias_inc[i];

/*

// The update rule becomes:

// a_inc_i = momentum * a_i_inc - learning_rate * out_grad_i

// * (b_i + input_i) / a_i^3

// a_i += a_inc_i

quad_coeff_inc[i] = momentum * quad_coeff_inc[i]

- two_lr * in_grad_i * (bias[i] + input[i])

/ a_i;

a_i += quad_coeff_inc[i];

if( a_i < min_quad_coeff )

a_i = min_quad_coeff;

*/

}

}

applyBiasDecay();

}
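Because the output is (input_i + bias_i)/(2a_i²), the gradient with respect to both input_i and bias_i is output_gradient_i/(2a_i²); the listing adds it to input_gradient and takes a plain or momentum step on the bias, leaving the quadratic coefficients untouched. The sketch below covers only the non-accumulating case and omits applyBiasDecay(); the function name and the explicit parameters are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the vector bpropUpdate (non-accumulating case,
// bias decay omitted). Because output_i = (input_i + bias_i) / (2 a_i^2),
// the gradient w.r.t. both input_i and bias_i is output_gradient_i / (2 a_i^2).
void gaussianBpropUpdate(const std::vector<double>& output_gradient,
                         const std::vector<double>& quad_coeff,
                         bool share_quad_coeff,
                         double learning_rate, double momentum,
                         std::vector<double>& bias,
                         std::vector<double>& bias_inc,
                         std::vector<double>& input_gradient) {
    const std::size_t n = output_gradient.size();
    assert(bias.size() == n && !quad_coeff.empty());
    input_gradient.assign(n, 0.0);
    if (momentum != 0.0)
        bias_inc.resize(n, 0.0);  // keeps previous increments if present
    for (std::size_t i = 0; i < n; ++i) {
        const double a_i = share_quad_coeff ? quad_coeff[0] : quad_coeff[i];
        const double in_grad_i = output_gradient[i] / (2.0 * a_i * a_i);
        input_gradient[i] += in_grad_i;
        if (momentum == 0.0) {
            bias[i] -= learning_rate * in_grad_i;          // plain step
        } else {
            bias_inc[i] = momentum * bias_inc[i] - learning_rate * in_grad_i;
            bias[i] += bias_inc[i];                         // momentum step
        }
    }
}
```

With accumulate = true, the original instead requires input_gradient to already have the right size and adds into it without clearing.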

| virtual void PLearn::RBMGaussianLayer::bpropUpdate | ( | const Mat & | inputs, |

| const Mat & | outputs, | ||

| Mat & | input_gradients, | ||

| const Mat & | output_gradients, | ||

| bool | accumulate = false |

||

| ) | [inline, virtual] |

Back-propagate the output gradient to the input, and update parameters.

Implements PLearn::RBMLayer.

Definition at line 111 of file RBMGaussianLayer.h.

References PLASSERT_MSG.

{

PLASSERT_MSG(false, "Not implemented");

}

| void PLearn::RBMGaussianLayer::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMLayer.

Definition at line 149 of file DEPRECATED/RBMGaussianLayer.cc.

{

inherited::build();

build_();

}

| virtual void PLearn::RBMGaussianLayer::build | ( | ) | [virtual] |

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::RBMLayer.

| void PLearn::RBMGaussianLayer::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMLayer.

Definition at line 136 of file DEPRECATED/RBMGaussianLayer.cc.

References PLearn::TVec< T >::resize(), and PLearn::sample().

{

if( size < 0 )

size = int(units_types.size());

if( size != (int) units_types.size() )

units_types = string( size, 'q' );

activations.resize( 2*size );

sample.resize( size );

expectation.resize( size );

expectation_is_up_to_date = false;

}

| void PLearn::RBMGaussianLayer::build_ | ( | ) | [private] |

This does the actual building.

Reimplemented from PLearn::RBMLayer.

| string PLearn::RBMGaussianLayer::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

| virtual string PLearn::RBMGaussianLayer::classname | ( | ) | const [virtual] |

Reimplemented from PLearn::Object.

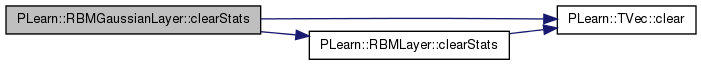

| void PLearn::RBMGaussianLayer::clearStats | ( | ) | [virtual] |

resets the statistics and counts

Reimplemented from PLearn::RBMLayer.

Definition at line 324 of file RBMGaussianLayer.cc.

References PLearn::TVec< T >::clear(), PLearn::RBMLayer::clearStats(), quad_coeff_neg_stats, and quad_coeff_pos_stats.

Referenced by forget().

{

quad_coeff_pos_stats.clear();

quad_coeff_neg_stats.clear();

inherited::clearStats();

}

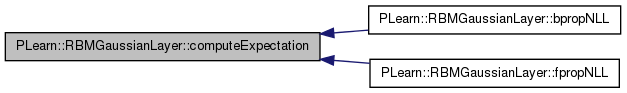

| void PLearn::RBMGaussianLayer::computeExpectation | ( | ) | [virtual] |

compute the expectation

Implements PLearn::RBMLayer.

Definition at line 97 of file DEPRECATED/RBMGaussianLayer.cc.

References i.

Referenced by bpropNLL(), and fpropNLL().

{

if( expectation_is_up_to_date )

return;

for( int i=0 ; i<size ; i++ )

expectation[i] = activations[2*i];

expectation_is_up_to_date = true;

}

| virtual void PLearn::RBMGaussianLayer::computeExpectation | ( | ) | [virtual] |

compute the expectation

Implements PLearn::RBMLayer.

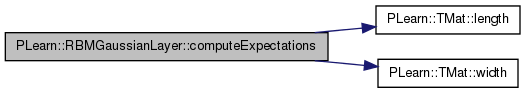

| void PLearn::RBMGaussianLayer::computeExpectations | ( | ) | [virtual] |

compute the batch expectation

Implements PLearn::RBMLayer.

Definition at line 176 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::activations, PLearn::RBMLayer::batch_size, PLearn::RBMLayer::expectations, PLearn::RBMLayer::expectations_are_up_to_date, i, PLearn::TMat< T >::length(), PLASSERT, quad_coeff, share_quad_coeff, PLearn::RBMLayer::size, and PLearn::TMat< T >::width().

Referenced by bpropNLL(), and fpropNLL().

{

if( expectations_are_up_to_date )

return;

PLASSERT( expectations.width() == size

&& expectations.length() == batch_size );

if(share_quad_coeff)

{

real a_i = quad_coeff[0];

for (int k = 0; k < batch_size; k++)

for (int i = 0 ; i < size ; i++)

{

expectations(k, i) = activations(k, i) / (2 * a_i * a_i) ;

}

}

else

for (int k = 0; k < batch_size; k++)

for (int i = 0 ; i < size ; i++)

{

real a_i = quad_coeff[i];

expectations(k, i) = activations(k, i) / (2 * a_i * a_i) ;

}

expectations_are_up_to_date = true;

}

| void PLearn::RBMGaussianLayer::computeStdDeviation | ( | ) | [virtual] |

compute the standard deviation

Definition at line 204 of file RBMGaussianLayer.cc.

References M_SQRT2, quad_coeff, share_quad_coeff, sigma, sigma_is_up_to_date, and PLearn::RBMLayer::size.

Referenced by fpropNLL(), and generateSamples().

{

if( sigma_is_up_to_date )

return;

// sigma = 1 / (sqrt(2) * quad_coeff[i])

if(share_quad_coeff)

sigma[0] = 1 / (M_SQRT2 * quad_coeff[0]);

else

for( int i=0 ; i<size ; i++ )

sigma[i] = 1 / (M_SQRT2 * quad_coeff[i]);

sigma_is_up_to_date = true;

}

| void PLearn::RBMGaussianLayer::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMLayer.

Definition at line 125 of file DEPRECATED/RBMGaussianLayer.cc.

{

/*

declareOption(ol, "size", &RBMGaussianLayer::size,

OptionBase::buildoption,

"Number of units.");

*/

// Now call the parent class' declareOptions

inherited::declareOptions(ol);

}

| static void PLearn::RBMGaussianLayer::declareOptions | ( | OptionList & | ol | ) | [static, protected] |

Declares the class options.

Reimplemented from PLearn::RBMLayer.

| static const PPath& PLearn::RBMGaussianLayer::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 98 of file DEPRECATED/RBMGaussianLayer.h.

| static const PPath& PLearn::RBMGaussianLayer::declaringFile | ( | ) | [inline, static] |

Reimplemented from PLearn::RBMLayer.

Definition at line 170 of file RBMGaussianLayer.h.

| RBMGaussianLayer * PLearn::RBMGaussianLayer::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMLayer.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

| virtual RBMGaussianLayer* PLearn::RBMGaussianLayer::deepCopy | ( | CopiesMap & | copies | ) | const [virtual] |

Reimplemented from PLearn::RBMLayer.

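| real PLearn::RBMGaussianLayer::energy | ( | const Vec & | unit_values | ) | const [virtual] |

compute quad_coeff.^2' unit_values.^2 - bias' unit_values (a single shared coefficient is used for every unit when share_quad_coeff is true)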

Reimplemented from PLearn::RBMLayer.

Definition at line 685 of file RBMGaussianLayer.cc.

References a, b, PLearn::RBMLayer::bias, PLearn::TVec< T >::data(), i, PLearn::TVec< T >::length(), PLASSERT, quad_coeff, share_quad_coeff, PLearn::RBMLayer::size, and PLearn::RBMLayer::tmp.

{

PLASSERT( unit_values.length() == size );

real en = 0.;

real tmp;

if (size > 0)

{

real* v = unit_values.data();

real* a = quad_coeff.data();

real* b = bias.data();

if(share_quad_coeff)

for(register int i=0; i<size; i++)

{

tmp = a[0]*v[i];

en += tmp*tmp - b[i]*v[i];

}

else

for(register int i=0; i<size; i++)

{

tmp = a[i]*v[i];

en += tmp*tmp - b[i]*v[i];

}

}

return en;

}
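The energy of a configuration v is E(v) = Σ_i (a_i² v_i² − b_i v_i), where a is quad_coeff and b is bias. A standalone sketch of the same loop; the function name gaussianEnergy is hypothetical and std::vector<double> replaces Vec.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of energy(): sum_i (a_i v_i)^2 - b_i v_i, where
// a = quad_coeff (a single entry when shared) and b = bias.
double gaussianEnergy(const std::vector<double>& unit_values,
                      const std::vector<double>& quad_coeff,
                      const std::vector<double>& bias,
                      bool share_quad_coeff) {
    assert(bias.size() == unit_values.size() && !quad_coeff.empty());
    double en = 0.0;
    for (std::size_t i = 0; i < unit_values.size(); ++i) {
        const double a_i = share_quad_coeff ? quad_coeff[0] : quad_coeff[i];
        const double t = a_i * unit_values[i];
        en += t * t - bias[i] * unit_values[i];
    }
    return en;
}
```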

| void PLearn::RBMGaussianLayer::forget | ( | ) | [virtual] |

forgets everything

Reimplemented from PLearn::RBMLayer.

Definition at line 332 of file RBMGaussianLayer.cc.

References clearStats(), PLearn::TVec< T >::fill(), fixed_std_deviation, PLearn::RBMLayer::forget(), M_SQRT2, and quad_coeff.

{

clearStats();

if( fixed_std_deviation > 0 )

quad_coeff.fill( 1 / ( M_SQRT2 * fixed_std_deviation ) );

else

quad_coeff.fill( 1. );

inherited::forget();

}
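forget() picks the quadratic coefficient so that computeStdDeviation(), which uses σ = 1/(√2 a), reproduces fixed_std_deviation: a = 1/(√2 σ_fixed) gives 1/(√2 a) = σ_fixed. Equivalently, the variance is σ² = 1/(2a²), the same factor that divides the activation in fprop(). A sketch of the round trip, with hypothetical function names; std::sqrt(2.0) replaces M_SQRT2, which is a POSIX extension rather than standard C++.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical: initial quadratic coefficient chosen by forget().
double initialQuadCoeff(double fixed_std_deviation) {
    if (fixed_std_deviation > 0)
        return 1.0 / (std::sqrt(2.0) * fixed_std_deviation);
    return 1.0;  // learned standard deviation: start from a = 1
}

// Hypothetical: standard deviation as computed by computeStdDeviation().
double stdDeviationFromQuadCoeff(double a) {
    return 1.0 / (std::sqrt(2.0) * a);
}
```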

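| void PLearn::RBMGaussianLayer::fprop | ( | const Vec & | input, |

| Vec & | output | ||

| ) | const [virtual] |

forward propagation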

Reimplemented from PLearn::RBMLayer.

Definition at line 219 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::bias, i, PLearn::OnlineLearningModule::input_size, PLearn::OnlineLearningModule::output_size, PLASSERT, quad_coeff, PLearn::TVec< T >::resize(), share_quad_coeff, PLearn::TVec< T >::size(), and PLearn::RBMLayer::size.

{

PLASSERT( input.size() == input_size );

output.resize( output_size );

if(share_quad_coeff)

{

real a_i = quad_coeff[0];

for( int i=0 ; i<size ; i++ )

{

output[i] = (input[i] + bias[i]) / (2 * a_i * a_i);

}

}

else

for( int i=0 ; i<size ; i++ )

{

real a_i = quad_coeff[i];

output[i] = (input[i] + bias[i]) / (2 * a_i * a_i);

}

}
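Assuming the input from the other layer enters the energy linearly, the energy of unit i is a_i² x² − c_i x with c_i = input_i + b_i. Completing the square, exp(−a_i² x² + c_i x) is proportional to a Gaussian with mean c_i/(2a_i²) and variance 1/(2a_i²), which is why fprop() divides by 2a_i². A standalone sketch; the function name gaussianFprop is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of fprop(): output_i = (input_i + bias_i) / (2 a_i^2),
// the mean of the conditional Gaussian of unit i.
std::vector<double> gaussianFprop(const std::vector<double>& input,
                                  const std::vector<double>& bias,
                                  const std::vector<double>& quad_coeff,
                                  bool share_quad_coeff) {
    assert(input.size() == bias.size() && !quad_coeff.empty());
    std::vector<double> output(input.size());
    for (std::size_t i = 0; i < input.size(); ++i) {
        const double a_i = share_quad_coeff ? quad_coeff[0] : quad_coeff[i];
        output[i] = (input[i] + bias[i]) / (2.0 * a_i * a_i);
    }
    return output;
}
```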

| real PLearn::RBMGaussianLayer::fpropNLL | ( | const Vec & | target | ) | [virtual] |

Computes the negative log-likelihood of target given the internal activations of the layer.

Reimplemented from PLearn::RBMLayer.

Definition at line 729 of file RBMGaussianLayer.cc.

References compute_mse_instead_of_nll, computeExpectation(), computeStdDeviation(), PLearn::RBMLayer::expectation, i, PLearn::OnlineLearningModule::input_size, Log2Pi, pl_log, PLASSERT, quad_coeff, share_quad_coeff, sigma, PLearn::TVec< T >::size(), and PLearn::RBMLayer::size.

{

PLASSERT( target.size() == input_size );

computeExpectation();

computeStdDeviation();

real ret = 0;

if( compute_mse_instead_of_nll )

{

real r;

for( int i=0 ; i<size ; i++ )

{

r = (target[i] - expectation[i]);

ret += r * r;

}

}

else

{

if(share_quad_coeff)

for( int i=0 ; i<size ; i++ )

{

real r = (target[i] - expectation[i]) * quad_coeff[0];

ret += r * r + pl_log(sigma[0]);

}

else

for( int i=0 ; i<size ; i++ )

{

// ret += (target[i]-expectation[i])^2/(2 sigma[i]^2)

// + log(sqrt(2*Pi) * sigma[i])

real r = (target[i] - expectation[i]) * quad_coeff[i];

ret += r * r + pl_log(sigma[i]);

}

ret += 0.5*size*Log2Pi;

}

return ret;

}
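With σ_i = 1/(√2 a_i), the term a_i²(t_i − e_i)² equals (t_i − e_i)²/(2σ_i²), so the non-MSE branch adds the standard Gaussian negative log-density (t_i − e_i)²/(2σ_i²) + log σ_i + ½ log 2π for each unit. A sketch for the per-unit case; the function name is hypothetical, std::log stands in for pl_log, and log(2π) is computed directly instead of using Log2Pi.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the (non-MSE) fpropNLL with per-unit coefficients.
double gaussianNLL(const std::vector<double>& target,
                   const std::vector<double>& expectation,
                   const std::vector<double>& quad_coeff) {
    assert(target.size() == expectation.size() &&
           target.size() == quad_coeff.size());
    const double pi = std::acos(-1.0);
    double ret = 0.0;
    for (std::size_t i = 0; i < target.size(); ++i) {
        const double sigma_i = 1.0 / (std::sqrt(2.0) * quad_coeff[i]);
        const double r = (target[i] - expectation[i]) * quad_coeff[i];
        ret += r * r + std::log(sigma_i);  // (t-e)^2/(2 sigma^2) + log sigma
    }
    ret += 0.5 * static_cast<double>(target.size()) * std::log(2.0 * pi);
    return ret;
}
```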

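| void PLearn::RBMGaussianLayer::fpropNLL | ( | const Mat & | targets, |

| const Mat & | costs_column | ||

| ) | [virtual] |

Reimplemented from PLearn::RBMLayer.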

Definition at line 767 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::batch_size, compute_mse_instead_of_nll, computeExpectations(), computeStdDeviation(), PLearn::RBMLayer::expectation, PLearn::RBMLayer::expectations, i, PLearn::OnlineLearningModule::input_size, PLearn::TMat< T >::length(), Log2Pi, pl_log, PLASSERT, quad_coeff, share_quad_coeff, sigma, PLearn::RBMLayer::size, and PLearn::TMat< T >::width().

{

PLASSERT( targets.width() == input_size );

PLASSERT( targets.length() == batch_size );

PLASSERT( costs_column.width() == 1 );

PLASSERT( costs_column.length() == batch_size );

computeExpectations();

computeStdDeviation();

real nll;

real *expectation, *target;

if( compute_mse_instead_of_nll )

{

for (int k=0;k<batch_size;k++) // loop over minibatch

{

nll = 0;

expectation = expectations[k];

target = targets[k];

real r;

for( register int i=0 ; i<size ; i++ ) // loop over outputs

{

r = (target[i] - expectation[i]);

nll += r * r;

}

costs_column(k,0) = nll;

}

}

else

{

if(share_quad_coeff)

for (int k=0;k<batch_size;k++) // loop over minibatch

{

nll = 0;

expectation = expectations[k];

target = targets[k];

for( register int i=0 ; i<size ; i++ ) // loop over outputs

{

real r = (target[i] - expectation[i]) * quad_coeff[0];

nll += r * r + pl_log(sigma[0]);

}

nll += 0.5*size*Log2Pi;

costs_column(k,0) = nll;

}

else

for (int k=0;k<batch_size;k++) // loop over minibatch

{

nll = 0;

expectation = expectations[k];

target = targets[k];

for( register int i=0 ; i<size ; i++ ) // loop over outputs

{

// nll += (target[i]-expectation[i])^2/(2 sigma[i]^2)

// + log(sqrt(2*Pi) * sigma[i])

real r = (target[i] - expectation[i]) * quad_coeff[i];

nll += r * r + pl_log(sigma[i]);

}

nll += 0.5*size*Log2Pi;

costs_column(k,0) = nll;

}

}

}

| real PLearn::RBMGaussianLayer::freeEnergyContribution | ( | const Vec & | unit_activations | ) | const [virtual] |

Computes $\sum_{i=0}^{n-1}\left(\log q_i - \frac{a_i^2}{4 q_i^2}\right) - \frac{n}{2}\log\pi$, where $a_i$ is unit_activations[i], $q_i$ is the quadratic coefficient of unit $i$ (quad_coeff[0] for every unit when share_quad_coeff is true) and $n$ is size.

This quantity is used for computing the free energy of a sample x in the OTHER layer of an RBM, from which unit_activations was computed.

Reimplemented from PLearn::RBMLayer.

Definition at line 712 of file RBMGaussianLayer.cc.

References i, LogPi, pl_log, PLASSERT, quad_coeff, share_quad_coeff, PLearn::TVec< T >::size(), and PLearn::RBMLayer::size.

{

PLASSERT( unit_activations.size() == size );

// result = \sum_{i=0}^{size-1} (-(a_i/(2 q_i))^2 + log(q_i)) - n/2 log(Pi)

real result = -0.5 * size * LogPi;

for (int i=0; i<size; i++)

{

real a_i = unit_activations[i];

real q_i = share_quad_coeff ? quad_coeff[0] : quad_coeff[i];

result += pl_log(q_i);

result -= a_i * a_i / (4 * q_i * q_i);

}

return result;

}
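The closed form comes from the Gaussian integral: for one unit with quadratic coefficient q and activation a, −log ∫ exp(a x − q² x²) dx = −½ log π + log q − a²/(4q²). The sketch below evaluates that per-unit term and, for illustration only, a crude midpoint-rule approximation of the same integral to check it numerically. Both function names are hypothetical, and std::log stands in for pl_log.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical: free-energy contribution of one Gaussian unit with
// activation a and quadratic coefficient q (q > 0).
double unitFreeEnergy(double a, double q) {
    assert(q > 0);
    const double pi = std::acos(-1.0);
    return -0.5 * std::log(pi) + std::log(q) - a * a / (4.0 * q * q);
}

// Hypothetical numerical check: -log of a midpoint-rule approximation of
// the integral of exp(a x - q^2 x^2) over [lo, hi].
double unitFreeEnergyNumeric(double a, double q, double lo, double hi,
                             int steps) {
    const double h = (hi - lo) / steps;
    double sum = 0.0;
    for (int k = 0; k < steps; ++k) {
        const double x = lo + (k + 0.5) * h;
        sum += std::exp(a * x - q * q * x * x);
    }
    return -std::log(sum * h);
}
```

Summing unitFreeEnergy over all units gives the quantity computed by freeEnergyContribution(), whose `-0.5 * size * LogPi` initialisation accounts for the −½ log π of every unit at once.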

| void PLearn::RBMGaussianLayer::generateSample | ( | ) | [virtual] |

compute a sample, and update the sample field

Implements PLearn::RBMLayer.

Definition at line 90 of file DEPRECATED/RBMGaussianLayer.cc.

References i, and PLearn::sample().

{

for( int i=0 ; i<size ; i++ )

sample[i] = random_gen->gaussian_mu_sigma( activations[2*i],

activations[2*i + 1] );

}

| virtual void PLearn::RBMGaussianLayer::generateSample | ( | ) | [virtual] |

compute a sample, and update the sample field

Implements PLearn::RBMLayer.

void PLearn::RBMGaussianLayer::generateSamples() [virtual]

Generate a mini-batch set of samples.

Implements PLearn::RBMLayer.

Definition at line 130 of file RBMGaussianLayer.cc.

References PLearn::RBMLayer::batch_size, computeStdDeviation(), PLearn::RBMLayer::expectations, PLearn::RBMLayer::expectations_are_up_to_date, PLearn::TMat< T >::length(), PLASSERT, PLASSERT_MSG, PLCHECK_MSG, PLearn::RBMLayer::random_gen, PLearn::RBMLayer::samples, share_quad_coeff, sigma, PLearn::RBMLayer::size, and PLearn::TMat< T >::width().

{

PLASSERT_MSG(random_gen,

"random_gen should be initialized before generating samples");

PLCHECK_MSG(expectations_are_up_to_date, "Expectations should be computed "

"before calling generateSamples()");

computeStdDeviation();

PLASSERT( samples.width() == size && samples.length() == batch_size );

if(share_quad_coeff)

for (int k = 0; k < batch_size; k++)

for (int i=0 ; i<size ; i++)

samples(k, i) = random_gen->gaussian_mu_sigma( expectations(k, i), sigma[0] );

else

for (int k = 0; k < batch_size; k++)

for (int i=0 ; i<size ; i++)

samples(k, i) = random_gen->gaussian_mu_sigma( expectations(k, i), sigma[i] );

}
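generateSamples() draws every entry of the mini-batch independently from a normal distribution centred on the matching expectation. The only branch decides whether the single shared standard deviation sigma[0] or the per-unit sigma[i] is used. The sketch below makes several assumptions:

- a row-major std::vector replaces Mat;
- std::normal_distribution replaces random_gen->gaussian_mu_sigma();
- generate_samples is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical stand-in for generateSamples(): expectations is a row-major
// batch_size x size matrix; sigma has 1 entry if shared, else `size` entries.
std::vector<double> generate_samples(const std::vector<double>& expectations,
                                     std::size_t batch_size, std::size_t size,
                                     const std::vector<double>& sigma,
                                     bool share_quad_coeff, std::mt19937& rng)
{
    assert(expectations.size() == batch_size * size);
    assert(sigma.size() == (share_quad_coeff ? std::size_t{1} : size));
    std::vector<double> samples(batch_size * size);
    for (std::size_t k = 0; k < batch_size; ++k)      // loop over the mini-batch
        for (std::size_t i = 0; i < size; ++i) {      // loop over units
            const double sd = share_quad_coeff ? sigma[0] : sigma[i];
            std::normal_distribution<double> dist(expectations[k * size + i], sd);
            samples[k * size + i] = dist(rng);
        }
    return samples;
}
```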

void PLearn::RBMGaussianLayer::getAllActivations(PP< RBMParameters > rbmp, int offset = 0) [virtual]

Uses "rbmp" to obtain the activations of all units in this layer.

Unit 0 of this layer corresponds to unit "offset" of "rbmp".

Implements PLearn::RBMLayer.

Definition at line 77 of file DEPRECATED/RBMGaussianLayer.cc.

{

rbmp->computeUnitActivations( offset, size, activations );

/* static FILE * f = fopen("mu_sigma.txt" , "wt") ;

if (activations.size() > 2)

fprintf(f , "%0.8f %0.8f %0.8f %0.8f \n" , activations[0] , activations[1] ,

activations[2], activations[3]) ;

else

fprintf(f , "%0.8f %0.8f\n" , activations[0] , activations[1]) ;

*/

expectation_is_up_to_date = false;

}

virtual int PLearn::RBMGaussianLayer::getConfigurationCount() [inline, virtual]

Returns the number of different configurations the layer can be in.

Reimplemented from PLearn::RBMLayer.

Definition at line 151 of file RBMGaussianLayer.h.

{

return INFINITE_CONFIGURATIONS;

}

OptionList& PLearn::RBMGaussianLayer::getOptionList() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

virtual OptionList& PLearn::RBMGaussianLayer::getOptionList() const [virtual]

Reimplemented from PLearn::Object.

OptionMap& PLearn::RBMGaussianLayer::getOptionMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

virtual OptionMap& PLearn::RBMGaussianLayer::getOptionMap() const [virtual]

Reimplemented from PLearn::Object.

RemoteMethodMap& PLearn::RBMGaussianLayer::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::Object.

Definition at line 49 of file DEPRECATED/RBMGaussianLayer.cc.

virtual RemoteMethodMap& PLearn::RBMGaussianLayer::getRemoteMethodMap() const [virtual]

Reimplemented from PLearn::Object.

void PLearn::RBMGaussianLayer::getUnitActivations(int i, PP< RBMParameters > rbmp, int offset = 0) [virtual]

Uses "rbmp" to obtain the activations of unit "i" of this layer.

This activation vector is computed by the "i+offset"-th unit of "rbmp".

Implements PLearn::RBMLayer.

Definition at line 67 of file DEPRECATED/RBMGaussianLayer.cc.

References PLearn::TVec< T >::subVec().

{

Vec activation = activations.subVec( 2*i, 2 );

rbmp->computeUnitActivations( i+offset, 1, activation );

expectation_is_up_to_date = false;

}

void PLearn::RBMGaussianLayer::makeDeepCopyFromShallowCopy(CopiesMap& copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::RBMLayer.

Definition at line 156 of file DEPRECATED/RBMGaussianLayer.cc.

{

inherited::makeDeepCopyFromShallowCopy(copies);

}

virtual void PLearn::RBMGaussianLayer::makeDeepCopyFromShallowCopy(CopiesMap& copies) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::RBMLayer.

void PLearn::RBMGaussianLayer::reset() [virtual]

resets activations, sample, expectation and sigma fields

Reimplemented from PLearn::RBMLayer.

Definition at line 317 of file RBMGaussianLayer.cc.

References PLearn::TVec< T >::clear(), PLearn::RBMLayer::reset(), sigma, and sigma_is_up_to_date.

{

inherited::reset();

sigma.clear();

sigma_is_up_to_date = false;

}

void PLearn::RBMGaussianLayer::update(const Vec& pos_values, const Vec& neg_values) [virtual]

Update parameters according to one pair of vectors.

Reimplemented from PLearn::RBMLayer.

Definition at line 558 of file RBMGaussianLayer.cc.

References a, PLearn::TVec< T >::data(), fixed_std_deviation, i, PLearn::RBMLayer::learning_rate, min_quad_coeff, PLearn::RBMLayer::momentum, quad_coeff, quad_coeff_inc, PLearn::TVec< T >::resize(), share_quad_coeff, sigma_is_up_to_date, PLearn::RBMLayer::size, PLearn::RBMLayer::update(), and update().

{

// quad_coeff[i] -= learning_rate * 2 * quad_coeff[i] * (pos_values[i]^2

// - neg_values[i]^2)

if ( fixed_std_deviation <= 0 )

{

real two_lr = 2 * learning_rate;

real* a = quad_coeff.data();

real* pv = pos_values.data();

real* nv = neg_values.data();

if( momentum == 0. )

{

if (share_quad_coeff)

{

real update=0;

for( int i=0 ; i<size ; i++ )

{

update += two_lr * a[0] * (nv[i]*nv[i] - pv[i]*pv[i]);

}

a[0] += update/(real)size;

if( a[0] < min_quad_coeff )

a[0] = min_quad_coeff;

}

else

for( int i=0 ; i<size ; i++ )

{

a[i] += two_lr * a[i] * (nv[i]*nv[i] - pv[i]*pv[i]);

if( a[i] < min_quad_coeff )

a[i] = min_quad_coeff;

}

}

else

{

if(share_quad_coeff)

{

quad_coeff_inc.resize( 1 );

// Fetch the data pointer only after resize(), which may reallocate

real* ainc = quad_coeff_inc.data();

for( int i=0 ; i<size ; i++ )

{

ainc[0] = momentum*ainc[0]

+ two_lr * a[0] * (nv[i]*nv[i] - pv[i]*pv[i]);

ainc[0] /= (real)size;

a[0] += ainc[0];

}

if( a[0] < min_quad_coeff )

a[0] = min_quad_coeff;

}

else

{

quad_coeff_inc.resize( size );

real* ainc = quad_coeff_inc.data();

for( int i=0 ; i<size ; i++ )

{

ainc[i] = momentum*ainc[i]

+ two_lr * a[i] * (nv[i]*nv[i] - pv[i]*pv[i]);

a[i] += ainc[i];

if( a[i] < min_quad_coeff )

a[i] = min_quad_coeff;

}

}

}

// We will need to recompute sigma

sigma_is_up_to_date = false;

}

// update the bias

inherited::update( pos_values, neg_values );

}
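The quadratic-coefficient step above can be read as contrastive-divergence gradient descent if each unit's energy contains a $q_i^2 h_i^2$ term. That is an assumption, since the energy is not shown here. The derivative of that term with respect to $q_i$ is $2 q_i h_i^2$. This gives $q_i \leftarrow q_i + 2\,\eta\,q_i\,(n_i^2 - p_i^2)$, followed by clamping at min_quad_coeff. Here $p_i$ and $n_i$ are pos_values[i] and neg_values[i], and $\eta$ is learning_rate. The following momentum-free sketch uses std::vector, and update_quad_coeff is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the momentum-free quad_coeff step in update(pos, neg).
// quad_coeff has 1 entry when shared, otherwise one entry per unit.
void update_quad_coeff(std::vector<double>& quad_coeff,
                       const std::vector<double>& pos_values,
                       const std::vector<double>& neg_values,
                       double learning_rate, double min_quad_coeff,
                       bool share_quad_coeff)
{
    const std::size_t size = pos_values.size();
    assert(neg_values.size() == size && size > 0 && !quad_coeff.empty());
    const double two_lr = 2.0 * learning_rate;
    if (share_quad_coeff) {
        double step = 0.0;
        for (std::size_t i = 0; i < size; ++i) {
            const double nv = neg_values[i], pv = pos_values[i];
            step += two_lr * quad_coeff[0] * (nv * nv - pv * pv);
        }
        // average the per-unit contributions, then clamp from below
        quad_coeff[0] = std::max(quad_coeff[0] + step / static_cast<double>(size),
                                 min_quad_coeff);
    } else {
        assert(quad_coeff.size() == size);
        for (std::size_t i = 0; i < size; ++i) {
            const double nv = neg_values[i], pv = pos_values[i];
            quad_coeff[i] += two_lr * quad_coeff[i] * (nv * nv - pv * pv);
            quad_coeff[i] = std::max(quad_coeff[i], min_quad_coeff);
        }
    }
}
```

A unit whose squared value is larger in the positive phase than in the negative phase sees its coefficient shrink, which widens its distribution (since $\sigma_i$ decreases with $q_i$).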

void PLearn::RBMGaussianLayer::update(const Mat& pos_values, const Mat& neg_values) [virtual]

Batch version.

Reimplemented from PLearn::RBMLayer.

Definition at line 628 of file RBMGaussianLayer.cc.

References a, PLearn::RBMLayer::batch_size, PLearn::TVec< T >::data(), fixed_std_deviation, i, PLearn::RBMLayer::learning_rate, PLearn::TMat< T >::length(), min_quad_coeff, PLearn::RBMLayer::momentum, PLASSERT, PLCHECK_MSG, quad_coeff, share_quad_coeff, sigma_is_up_to_date, PLearn::RBMLayer::size, PLearn::RBMLayer::update(), update(), and PLearn::TMat< T >::width().

{

PLASSERT( pos_values.width() == size );

PLASSERT( neg_values.width() == size );

int batch_size = pos_values.length();

PLASSERT( neg_values.length() == batch_size );

// quad_coeff[i] -= learning_rate * 2 * quad_coeff[i] * (pos_values[i]^2

// - neg_values[i]^2)

if ( fixed_std_deviation <= 0 )

{

real two_lr = 2 * learning_rate / batch_size;

real* a = quad_coeff.data();

if( momentum == 0. )

{

if (share_quad_coeff)

for( int k=0; k<batch_size; k++ )

{

real *pv_k = pos_values[k];

real *nv_k = neg_values[k];

real update=0;

for( int i=0; i<size; i++ )

{

update += two_lr * a[0] * (nv_k[i]*nv_k[i] - pv_k[i]*pv_k[i]);

}

a[0] += update/(real)size;

if( a[0] < min_quad_coeff )

a[0] = min_quad_coeff;

}

else

for( int k=0; k<batch_size; k++ )

{

real *pv_k = pos_values[k];

real *nv_k = neg_values[k];

for( int i=0; i<size; i++ )

{

a[i] += two_lr * a[i] * (nv_k[i]*nv_k[i] - pv_k[i]*pv_k[i]);

if( a[i] < min_quad_coeff )

a[i] = min_quad_coeff;

}

}

}

else

PLCHECK_MSG( false,

"momentum and minibatch are not compatible yet" );

// We will need to recompute sigma

sigma_is_up_to_date = false;

}

// Update the bias

inherited::update( pos_values, neg_values );

}

void PLearn::RBMGaussianLayer::update() [virtual]

Update parameters according to accumulated statistics.

Reimplemented from PLearn::RBMLayer.

Definition at line 487 of file RBMGaussianLayer.cc.

References a, PLearn::TVec< T >::data(), fixed_std_deviation, i, PLearn::RBMLayer::learning_rate, min_quad_coeff, PLearn::RBMLayer::momentum, PLearn::RBMLayer::neg_count, PLearn::RBMLayer::pos_count, quad_coeff, quad_coeff_inc, quad_coeff_neg_stats, quad_coeff_pos_stats, PLearn::TVec< T >::resize(), share_quad_coeff, sigma_is_up_to_date, PLearn::RBMLayer::size, and PLearn::RBMLayer::update().

Referenced by update().

{

// quad_coeff -= learning_rate * (quad_coeff_pos_stats/pos_count

// - quad_coeff_neg_stats/neg_count)

if ( fixed_std_deviation <= 0 )

{

real pos_factor = -learning_rate / pos_count;

real neg_factor = learning_rate / neg_count;

real* a = quad_coeff.data();

real* aps = quad_coeff_pos_stats.data();

real* ans = quad_coeff_neg_stats.data();

if( momentum == 0. )

{

if(share_quad_coeff)

{

real update=0;

for( int i=0 ; i<size ; i++ )

{

update += pos_factor * aps[i] + neg_factor * ans[i];

}

a[0] += update/(real)size;

if( a[0] < min_quad_coeff )

a[0] = min_quad_coeff;

}

else

for( int i=0 ; i<size ; i++ )

{

a[i] += pos_factor * aps[i] + neg_factor * ans[i];

if( a[i] < min_quad_coeff )

a[i] = min_quad_coeff;

}

}

else

{

if(share_quad_coeff)

{

quad_coeff_inc.resize( 1 );

real* ainc = quad_coeff_inc.data();

for( int i=0 ; i<size ; i++ )

{

ainc[0] = momentum*ainc[0] + pos_factor*aps[i] + neg_factor*ans[i];

ainc[0] /= (real)size;

a[0] += ainc[0];

}

if( a[0] < min_quad_coeff )

a[0] = min_quad_coeff;

}

else

{

quad_coeff_inc.resize( size );

real* ainc = quad_coeff_inc.data();

for( int i=0 ; i<size ; i++ )

{

ainc[i] = momentum*ainc[i] + pos_factor*aps[i] + neg_factor*ans[i];

a[i] += ainc[i];

if( a[i] < min_quad_coeff )

a[i] = min_quad_coeff;

}

}

}

// We will need to recompute sigma

sigma_is_up_to_date = false;

}

// will update the bias, and clear the statistics

inherited::update();

}
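update() applies the same kind of step from accumulated statistics: $q_i \leftarrow q_i - \eta\,(\mathrm{pos}_i/\text{pos\_count} - \mathrm{neg}_i/\text{neg\_count})$. With a nonzero momentum, it routes the step through a velocity stored in quad_coeff_inc. The sketch below covers only the per-unit branch, and update_from_stats is a hypothetical name. It also initialises the velocity to zero; that is an assumption of the sketch, since PLearn's resize() behaviour is not documented here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the per-unit branch of update(): quad_coeff[i] moves by
// -lr * pos_stats[i] / pos_count + lr * neg_stats[i] / neg_count, optionally
// through a momentum velocity, and is then clamped at min_quad_coeff.
void update_from_stats(std::vector<double>& quad_coeff,
                       std::vector<double>& quad_coeff_inc,
                       const std::vector<double>& pos_stats,
                       const std::vector<double>& neg_stats,
                       double pos_count, double neg_count,
                       double learning_rate, double momentum,
                       double min_quad_coeff)
{
    const std::size_t size = quad_coeff.size();
    assert(pos_stats.size() == size && neg_stats.size() == size);
    assert(pos_count > 0.0 && neg_count > 0.0);
    const double pos_factor = -learning_rate / pos_count;
    const double neg_factor = learning_rate / neg_count;
    if (momentum != 0.0)
        quad_coeff_inc.resize(size, 0.0);  // new velocity entries start at zero
    for (std::size_t i = 0; i < size; ++i) {
        double step = pos_factor * pos_stats[i] + neg_factor * neg_stats[i];
        if (momentum != 0.0) {
            quad_coeff_inc[i] = momentum * quad_coeff_inc[i] + step;
            step = quad_coeff_inc[i];
        }
        quad_coeff[i] = std::max(quad_coeff[i] + step, min_quad_coeff);
    }
}
```

Calling it twice with the same statistics and momentum 0.5 applies steps of -0.1 and then -0.15. This shows the velocity carrying half of the previous step forward.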

static StaticInitializer PLearn::RBMGaussianLayer::_static_initializer_ [static]

Reimplemented from PLearn::RBMLayer.

Definition at line 98 of file DEPRECATED/RBMGaussianLayer.h.

Indication that fpropNLL should compute the MSE instead of the NLL.

bpropNLL will also give the appropriate gradient. You may want to set fixed_std_deviation to 1 in this case.

Definition at line 72 of file RBMGaussianLayer.h.

Referenced by bpropNLL(), and fpropNLL().

Definition at line 67 of file RBMGaussianLayer.h.

Referenced by accumulateNegStats(), accumulatePosStats(), forget(), and update().

Definition at line 59 of file RBMGaussianLayer.h.

Referenced by update().

Definition at line 60 of file DEPRECATED/RBMGaussianLayer.h.

Vec PLearn::RBMGaussianLayer::quad_coeff [protected]

Quadratic coefficients.

Definition at line 181 of file RBMGaussianLayer.h.

Referenced by accumulateNegStats(), accumulatePosStats(), bpropUpdate(), computeExpectations(), computeStdDeviation(), energy(), forget(), fprop(), fpropNLL(), freeEnergyContribution(), RBMGaussianLayer(), and update().

Vec PLearn::RBMGaussianLayer::quad_coeff_inc [protected]

Definition at line 186 of file RBMGaussianLayer.h.

Referenced by update().

Vec PLearn::RBMGaussianLayer::quad_coeff_neg_stats [protected]

Definition at line 185 of file RBMGaussianLayer.h.

Referenced by accumulateNegStats(), clearStats(), and update().

Vec PLearn::RBMGaussianLayer::quad_coeff_pos_stats [protected]

Definition at line 184 of file RBMGaussianLayer.h.

Referenced by accumulatePosStats(), clearStats(), and update().

Definition at line 61 of file RBMGaussianLayer.h.

Referenced by accumulateNegStats(), accumulatePosStats(), bpropUpdate(), computeExpectations(), computeStdDeviation(), energy(), fprop(), fpropNLL(), freeEnergyContribution(), generateSamples(), RBMGaussianLayer(), and update().

Vec PLearn::RBMGaussianLayer::sigma [protected]

Stores the standard deviations.

Definition at line 189 of file RBMGaussianLayer.h.

Referenced by computeStdDeviation(), fpropNLL(), generateSamples(), RBMGaussianLayer(), and reset().

bool PLearn::RBMGaussianLayer::sigma_is_up_to_date [protected]

Definition at line 190 of file RBMGaussianLayer.h.

Referenced by computeStdDeviation(), reset(), and update().

Number of units when share_quad_coeff is false, or 1 when share_quad_coeff is true.

Definition at line 65 of file RBMGaussianLayer.h.

Referenced by RBMGaussianLayer().

1.7.4
