
PLearn 0.1


Kind of SoftMax.

#include <SoftSoftMaxVariable.h>

Public Member Functions | |

| SoftSoftMaxVariable () | |

| ### declare public option fields (such as build options) here Start your comments with Doxygen-compatible comments such as //! | |

| SoftSoftMaxVariable (Variable *input1, Variable *input2) | |

| Constructor initializing from two input variables. | |

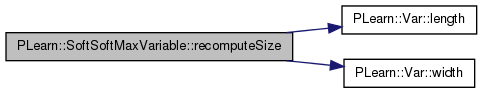

| virtual void | recomputeSize (int &l, int &w) const |

| Recomputes the length l and width w that this variable should have, according to its parent variables. | |

| virtual void | fprop () |

| compute output given input | |

| virtual void | bprop () |

| virtual string | classname () const |

| virtual OptionList & | getOptionList () const |

| virtual OptionMap & | getOptionMap () const |

| virtual RemoteMethodMap & | getRemoteMethodMap () const |

| virtual SoftSoftMaxVariable * | deepCopy (CopiesMap &copies) const |

| virtual void | build () |

| Post-constructor. | |

| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) |

| Transforms a shallow copy into a deep copy. | |

Static Public Member Functions

| Type | Member | Description |
|---|---|---|
| `static string` | `_classname_ ()` | SoftSoftMaxVariable. |
| `static OptionList &` | `_getOptionList_ ()` | |
| `static RemoteMethodMap &` | `_getRemoteMethodMap_ ()` | |
| `static Object *` | `_new_instance_for_typemap_ ()` | |
| `static bool` | `_isa_ (const Object *o)` | |
| `static void` | `_static_initialize_ ()` | |
| `static const PPath &` | `declaringFile ()` | |

Static Public Attributes

| Type | Member | Description |
|---|---|---|
| `static StaticInitializer` | `_static_initializer_` | |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| `static void` | `declareOptions (OptionList &ol)` | Declares the class options. |

Private Types

| Type | Member | Description |
|---|---|---|
| `typedef BinaryVariable` | `inherited` | |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| `void` | `build_ ()` | This does the actual building. |

Private Attributes

| Type | Member | Description |
|---|---|---|
| `Mat` | `logH_mat` | |

Kind of SoftMax.

Definition at line 58 of file SoftSoftMaxVariable.h.
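The page gives no formula for this "kind of SoftMax". Judging from the commented-out reference loop kept in bprop() (where the second input U is called A) and from fprop() forcing the diagonal of U to zero, the output for example i and coordinate j appears to be the following. This is an inference from the code, not a documented definition. X is the n × d first input and U the d × d second input. With U = 0 the expression reduces to an ordinary row-wise softmax.

$$
H_{ij} = \frac{\exp(X_{ij})}{\sum_{k=1}^{d} \exp(X_{ik} + U_{jk})}, \qquad U_{jj} = 0,
$$

so that

$$
\log H_{ij} = X_{ij} - \log \sum_{k=1}^{d} \exp(X_{ik} + U_{jk}),
$$

and, for $k \neq j$,

$$
\frac{\partial H_{ij}}{\partial X_{ik}} = \frac{\partial H_{ij}}{\partial U_{jk}} = -H_{ij}^{2}\,\exp(X_{ik} + U_{jk} - X_{ij}), \qquad \frac{\partial H_{ij}}{\partial X_{ij}} = H_{ij}\,(1 - H_{ij}).
$$

These derivatives match the `temp` term and the `k==j` branch of the commented-out loop term by term.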

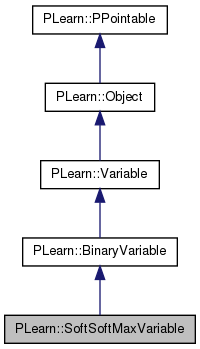

`typedef BinaryVariable PLearn::SoftSoftMaxVariable::inherited` [private]

Reimplemented from PLearn::BinaryVariable.

Definition at line 60 of file SoftSoftMaxVariable.h.

`PLearn::SoftSoftMaxVariable::SoftSoftMaxVariable ()` [inline]

Default constructor; usually does nothing.

Definition at line 72 of file SoftSoftMaxVariable.h.

```cpp
{}
```

`string PLearn::SoftSoftMaxVariable::_classname_ ()` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 51 of file SoftSoftMaxVariable.cc.

`OptionList & PLearn::SoftSoftMaxVariable::_getOptionList_ ()` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 51 of file SoftSoftMaxVariable.cc.

`RemoteMethodMap & PLearn::SoftSoftMaxVariable::_getRemoteMethodMap_ ()` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 51 of file SoftSoftMaxVariable.cc.

`bool PLearn::SoftSoftMaxVariable::_isa_ (const Object *o)` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 51 of file SoftSoftMaxVariable.cc.

`Object * PLearn::SoftSoftMaxVariable::_new_instance_for_typemap_ ()` [static]

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftSoftMaxVariable.cc.

`void PLearn::SoftSoftMaxVariable::_static_initialize_ ()` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 51 of file SoftSoftMaxVariable.cc.

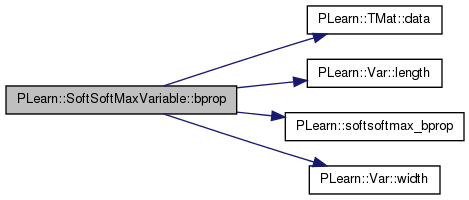

`void PLearn::SoftSoftMaxVariable::bprop ()` [virtual]

Implements PLearn::Variable.

Definition at line 285 of file SoftSoftMaxVariable.cc.

References d, PLearn::TMat< T >::data(), PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, PLearn::Var::length(), logH_mat, PLearn::Variable::matGradient, n, PLearn::softsoftmax_bprop(), and PLearn::Var::width().

```cpp
{
    int n = input1->matValue.length();
    int d = input1->matValue.width();
    const real* const X = input1->matValue.data();
    const real* const U = input2->matValue.data();
    // const real* const H = matValue.data();
    // For numerical reasons we use logH, computed and stored during fprop,
    // rather than H, which is in matValue.
    const real* const logH = logH_mat.data();
    const real* const H_gr = matGradient.data();
    real* const X_gr = input1->matGradient.data();
    real* const U_gr = input2->matGradient.data();
    softsoftmax_bprop(n, d, X, U, logH, H_gr,
                      X_gr, U_gr);

    /*
    Mat X = input1->matValue,
        A = input2->matValue,
        grad_X = input1->matGradient,
        grad_A = input2->matGradient;
    real temp;
    // for each example of X
    for (int n=0; n<X.length(); n++)
        // each coordinate of an example // corresponds to the gradient
        for (int k=0; k<X.width(); k++)
            // same example, also a coordinate // corresponds to an example
            for (int j=0; j<X.width(); j++)
            {
                temp = matGradient(n,j)*matValue(n,j)*matValue(n,j)*safeexp(X(n,k)+A(j,k))/safeexp(X(n,j));
                if(k==j)
                    grad_X(n,k) += matGradient(n,j)*matValue(n,k)*(1.-matValue(n,k));
                else
                    grad_X(n,k) -= temp;
                grad_A(j,k) -= temp;
            }
    */
}
```
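To make the commented-out reference loop concrete, here is a stand-alone C++ sketch of the same gradient, written against contiguous row-major arrays with the same argument order as the softsoftmax_bprop() call above (n, d, X, U, logH, H_gr, X_gr, U_gr). The helpers `ssm_forward` and `ssm_backward` are hypothetical, not PLearn functions, and `double` stands in for PLearn's `real`. The forward definition is inferred from the reference loop, and the diagonal of U is assumed to be pinned to 0 (as fprop() enforces), so unlike the reference loop, which also updates `grad_A(j,j)`, the sketch leaves the diagonal gradient untouched. The term H(i,j)² exp(X(i,k) + U(j,k) - X(i,j)) is evaluated as a single exp() of a log-domain sum, which is one plausible reason for passing logH rather than H.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical forward pass (assumed definition, row-major, U(j,j) == 0):
//   logH(i,j) = X(i,j) - log sum_k exp(X(i,k) + U(j,k))
void ssm_forward(int n, int d, const double* X, const double* U, double* logH) {
    assert(n >= 0 && d > 0);
    for (int i = 0; i < n; ++i)
        for (int j = 0; j < d; ++j) {
            double m = -INFINITY;  // max exponent, for a stable log-sum-exp
            for (int k = 0; k < d; ++k) m = std::fmax(m, X[i*d + k] + U[j*d + k]);
            double s = 0.0;
            for (int k = 0; k < d; ++k) s += std::exp(X[i*d + k] + U[j*d + k] - m);
            logH[i*d + j] = X[i*d + j] - m - std::log(s);
        }
}

// Hypothetical backward pass: accumulates dL/dX into X_gr and dL/dU into U_gr,
// given H_gr = dL/dH. The diagonal of U is treated as fixed (no gradient).
void ssm_backward(int n, int d, const double* X, const double* U,
                  const double* logH, const double* H_gr,
                  double* X_gr, double* U_gr) {
    for (int i = 0; i < n; ++i)
        for (int j = 0; j < d; ++j) {
            const double g = H_gr[i*d + j];
            const double lh = logH[i*d + j];
            const double h = std::exp(lh);
            for (int k = 0; k < d; ++k) {
                if (k == j) {
                    // dH(i,j)/dX(i,j) = H(1 - H), valid because U(j,j) == 0
                    X_gr[i*d + j] += g * h * (1.0 - h);
                } else {
                    // H^2 * exp(X(i,k) + U(j,k) - X(i,j)), computed in the log domain
                    const double t = g * std::exp(2.0*lh + X[i*d + k] + U[j*d + k] - X[i*d + j]);
                    X_gr[i*d + k] -= t;
                    U_gr[j*d + k] -= t;
                }
            }
        }
}
```

Checking such a backward pass against central finite differences of a weighted sum of H is a cheap way to validate it before trusting it inside a larger graph.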

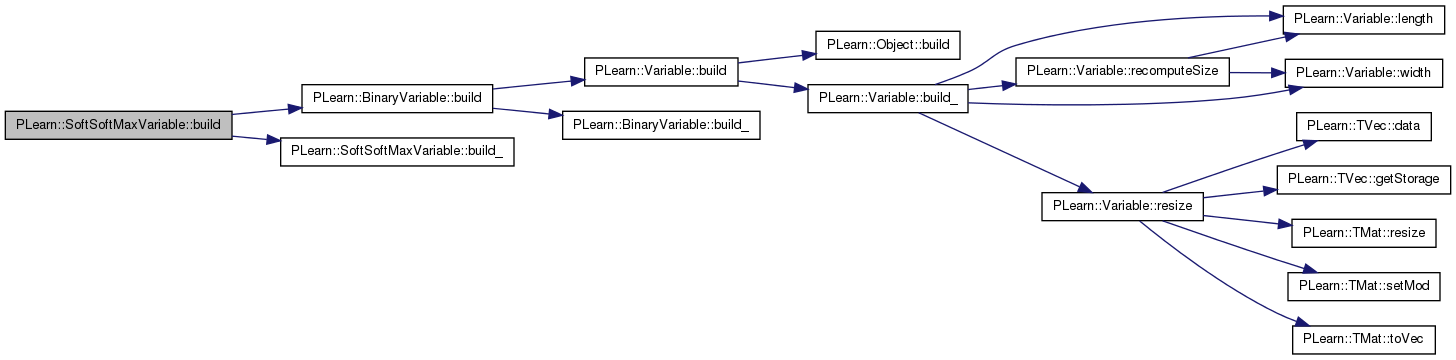

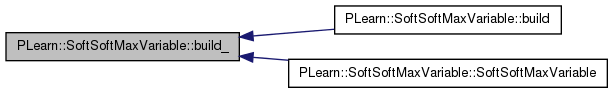

`void PLearn::SoftSoftMaxVariable::build ()` [virtual]

Post-constructor.

The normal implementation should simply call inherited::build(), then this class's build_(). This method should be callable again at later times, after modifying some option fields to change the "architecture" of the object.

Reimplemented from PLearn::BinaryVariable.

Definition at line 336 of file SoftSoftMaxVariable.cc.

References PLearn::BinaryVariable::build(), and build_().

```cpp
{
    inherited::build();
    build_();
}
```

`void PLearn::SoftSoftMaxVariable::build_ ()` [private]

This does the actual building.

Reimplemented from PLearn::BinaryVariable.

Definition at line 379 of file SoftSoftMaxVariable.cc.

Referenced by build(), and SoftSoftMaxVariable().

```cpp
{
    // ### This method should do the real building of the object,
    // ### according to set 'options', in *any* situation.
    // ### Typical situations include:
    // ###  - Initial building of an object from a few user-specified options
    // ###  - Building of a "reloaded" object: i.e. from the complete set of
    // ###    all serialised options.
    // ###  - Updating or "re-building" of an object after a few "tuning"
    // ###    options have been modified.
    // ### You should assume that the parent class' build_() has already been
    // ### called.
}
```
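The build()/build_() split can be illustrated with a minimal stand-alone C++ sketch. The classes `Base` and `Derived` are hypothetical, not PLearn types; the sketch only assumes what the reference lines state, namely that build() calls inherited::build() and then build_(), and that build_() is also called from the constructor. Keeping build_() private and non-virtual means each constructor runs exactly its own class's building step (virtual dispatch does not reach derived classes during base construction anyway), while the virtual build() rebuilds the whole chain, parent first.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical base class: records which build_() ran, in order.
struct Base {
    std::vector<std::string>* trace;
    explicit Base(std::vector<std::string>* t) : trace(t) {
        assert(trace != nullptr);
        build_();                 // builds only Base's own part
    }
    virtual ~Base() = default;
    virtual void build() { build_(); }   // public, re-callable entry point
private:
    void build_() { trace->push_back("Base::build_"); }
};

// Hypothetical derived class following the same pattern as SoftSoftMaxVariable.
struct Derived : Base {
    typedef Base inherited;
    explicit Derived(std::vector<std::string>* t) : Base(t) {
        build_();                 // Base part already built by Base's constructor
    }
    void build() override {
        inherited::build();       // parent's building first...
        build_();                 // ...then this class's own part
    }
private:
    void build_() { trace->push_back("Derived::build_"); }
};
```

Constructing a `Derived` and later calling build() through a `Base&` both record `Base::build_` followed by `Derived::build_`, so each building step runs once per (re)build.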

`string PLearn::SoftSoftMaxVariable::classname () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftSoftMaxVariable.cc.

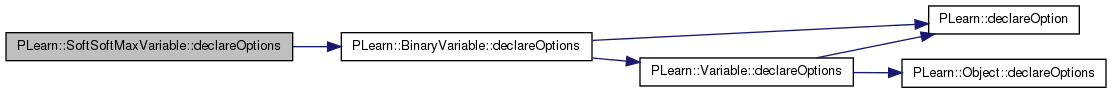

`void PLearn::SoftSoftMaxVariable::declareOptions (OptionList &ol)` [static, protected]

Declares the class options.

Reimplemented from PLearn::BinaryVariable.

Definition at line 359 of file SoftSoftMaxVariable.cc.

References PLearn::BinaryVariable::declareOptions().

```cpp
{
    // ### Declare all of this object's options here.
    // ### For the "flags" of each option, you should typically specify
    // ### one of OptionBase::buildoption, OptionBase::learntoption or
    // ### OptionBase::tuningoption. If you don't provide one of these three,
    // ### this option will be ignored when loading values from a script.
    // ### You can also combine flags, for example with OptionBase::nosave:
    // ### (OptionBase::buildoption | OptionBase::nosave)

    // ### ex:
    // declareOption(ol, "myoption", &SoftSoftMaxVariable::myoption,
    //               OptionBase::buildoption,
    //               "Help text describing this option");
    // ...

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
```

`static const PPath& PLearn::SoftSoftMaxVariable::declaringFile ()` [inline, static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 96 of file SoftSoftMaxVariable.h.


`SoftSoftMaxVariable * PLearn::SoftSoftMaxVariable::deepCopy (CopiesMap &copies) const` [virtual]

Reimplemented from PLearn::BinaryVariable.

Definition at line 51 of file SoftSoftMaxVariable.cc.

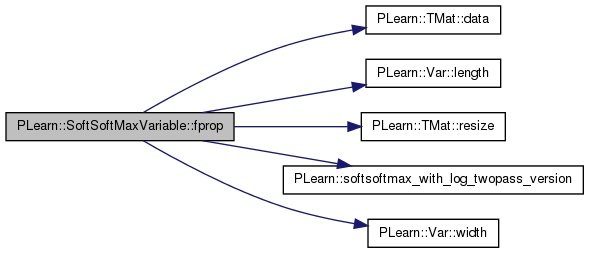

`void PLearn::SoftSoftMaxVariable::fprop ()` [virtual]

Computes the output given the inputs.

Implements PLearn::Variable.

Definition at line 255 of file SoftSoftMaxVariable.cc.

References d, PLearn::TMat< T >::data(), i, PLearn::BinaryVariable::input1, PLearn::BinaryVariable::input2, PLearn::Var::length(), logH_mat, PLearn::Variable::matValue, n, PLERROR, PLearn::TMat< T >::resize(), PLearn::softsoftmax_with_log_twopass_version(), and PLearn::Var::width().

```cpp
{
    if(input1->matValue.isNotContiguous() || input2->matValue.isNotContiguous())
        PLERROR("SoftSoftMaxVariable input matrices must be contiguous.");
    int n = input1->matValue.length();
    int d = input1->matValue.width();
    if(input2->matValue.length()!=d || input2->matValue.width()!=d)
        PLERROR("SoftSoftMaxVariable second input matrix (U) must be a square matrix of width and length matching the width of first input matrix");

    // make sure U's diagonal is 0
    // (Umat shares its storage with input2->matValue, so U is modified in place)
    Mat Umat = input2->matValue;
    for(int i=0; i<d; i++)
        Umat(i,i) = 0;

    const real* const X = input1->matValue.data();
    const real* const U = input2->matValue.data();
    real* const H = matValue.data();
    logH_mat.resize(n,d);
    real* const logH = logH_mat.data();
    softsoftmax_with_log_twopass_version(n, d, X, U, logH, H);

    // perr << "Twopass version: " << endl << matValue << endl;
    // softsoftmax_fprop_singlepass_version(n, d, X, U, H);
    // perr << "Singlepass version: " << endl << matValue << endl;
    // perr << "--------------------------------------" << endl;
}
```
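The following stand-alone C++ sketch shows one way to compute this forward pass stably in two passes. It rests on several assumptions: the definition H(i,j) = exp(X(i,j)) / Σ_k exp(X(i,k) + U(j,k)) is inferred from the commented-out gradient loop in bprop(); the "two passes" are taken to be the usual log-sum-exp max pass followed by a sum pass; `double` stands in for PLearn's `real`; and the name `softsoftmax_fprop_sketch` is hypothetical. It is not the code of PLearn's softsoftmax_with_log_twopass_version().

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-alone sketch, NOT PLearn's softsoftmax_with_log_twopass_version().
// Assumed definition (inferred from the commented-out loop in bprop()):
//   logH(i,j) = X(i,j) - log sum_k exp(X(i,k) + U(j,k)),  H = exp(logH)
// Storage is row-major and contiguous: X, logH and H are n x d, U is d x d.
// The caller is assumed to have zeroed U's diagonal, as fprop() does.
void softsoftmax_fprop_sketch(int n, int d, const double* X, const double* U,
                              double* logH, double* H) {
    assert(n >= 0 && d > 0);
    for (int i = 0; i < n; ++i) {
        const double* x = X + i * d;          // row i of X
        for (int j = 0; j < d; ++j) {
            const double* u = U + j * d;      // row j of U
            // Pass 1: largest exponent, so that no exp() below can overflow.
            double m = -INFINITY;
            for (int k = 0; k < d; ++k) m = std::fmax(m, x[k] + u[k]);
            // Pass 2: sum of the shifted exponentials (each term is <= 1).
            double s = 0.0;
            for (int k = 0; k < d; ++k) s += std::exp(x[k] + u[k] - m);
            logH[i * d + j] = x[j] - (m + std::log(s));
            H[i * d + j] = std::exp(logH[i * d + j]);
        }
    }
}
```

For a single example X = {1, 2, 3} with U = 0, H equals the ordinary softmax, approximately {0.090, 0.245, 0.665}. Shifting by the maximum keeps inputs around 1000 from overflowing exp(), and keeping logH alongside H mirrors what fprop() stores in logH_mat for bprop().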

`OptionList & PLearn::SoftSoftMaxVariable::getOptionList () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftSoftMaxVariable.cc.

`OptionMap & PLearn::SoftSoftMaxVariable::getOptionMap () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftSoftMaxVariable.cc.

`RemoteMethodMap & PLearn::SoftSoftMaxVariable::getRemoteMethodMap () const` [virtual]

Reimplemented from PLearn::Object.

Definition at line 51 of file SoftSoftMaxVariable.cc.

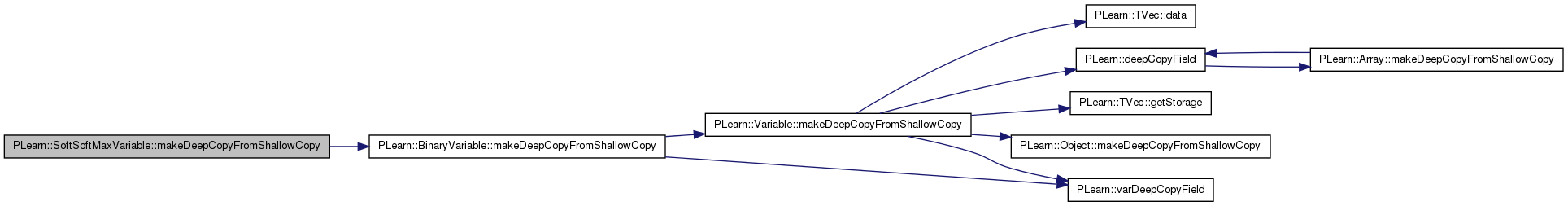

`void PLearn::SoftSoftMaxVariable::makeDeepCopyFromShallowCopy (CopiesMap &copies)` [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::BinaryVariable.

Definition at line 342 of file SoftSoftMaxVariable.cc.

References PLearn::BinaryVariable::makeDeepCopyFromShallowCopy(), and PLERROR.

```cpp
{
    inherited::makeDeepCopyFromShallowCopy(copies);

    // ### Call deepCopyField on all "pointer-like" fields
    // ### that you wish to be deepCopied rather than
    // ### shallow-copied.
    // ### ex:
    // deepCopyField(trainvec, copies);

    // ### If you want to deepCopy a Var field:
    // varDeepCopyField(somevariable, copies);

    // ### Remove this line when you have fully implemented this method.
    PLERROR("SoftSoftMaxVariable::makeDeepCopyFromShallowCopy not fully (correctly) implemented yet!");
}
```
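As written, makeDeepCopyFromShallowCopy() always ends in PLERROR, so deep-copying a SoftSoftMaxVariable fails. Following the template comments, the missing step would presumably be a `deepCopyField(logH_mat, copies);` call for the class's only private attribute, logH_mat; this is an assumption, not verified against PLearn. The stand-alone sketch below illustrates, with hypothetical types `SharedMat`, `CopiesMapSketch` and `deepCopyFieldSketch` (none of them PLearn's), why a field whose storage is shared by shallow copies needs this treatment and what the copies map is for.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <vector>

// Hypothetical stand-in for a matrix handle whose storage is shared by copies,
// the situation of a Mat field such as logH_mat after a shallow copy. Not PLearn's TMat.
struct SharedMat {
    std::shared_ptr<std::vector<double>> storage;
};

// Hypothetical analogue of CopiesMap: maps each original storage block to its clone,
// so fields that shared storage before the deep copy still share it afterwards.
using CopiesMapSketch = std::map<const void*, std::shared_ptr<std::vector<double>>>;

// Replaces m's storage with a private clone, reusing an earlier clone if one exists.
void deepCopyFieldSketch(SharedMat& m, CopiesMapSketch& copies) {
    if (!m.storage) return;                      // nothing to copy
    const void* key = m.storage.get();
    auto it = copies.find(key);
    if (it != copies.end()) {                    // already cloned: share the clone
        m.storage = it->second;
        return;
    }
    auto clone = std::make_shared<std::vector<double>>(*m.storage);
    assert(clone->size() == m.storage->size());
    copies[key] = clone;
    m.storage = clone;
}
```

After a plain copy, writes through either handle are visible through both; after deepCopyFieldSketch(), the copy owns its data, and a second copy of the same source resolved through the same map ends up sharing that one clone rather than creating another.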

`void PLearn::SoftSoftMaxVariable::recomputeSize (int &l, int &w) const` [virtual]

Recomputes the length l and width w that this variable should have, according to its parent variables.

This is used, for example, by sizeprop(). The default version simply returns the current dimensions, so make sure to override it in subclasses if this is not appropriate.

Reimplemented from PLearn::Variable.

Definition at line 243 of file SoftSoftMaxVariable.cc.

References PLearn::BinaryVariable::input1, PLearn::Var::length(), and PLearn::Var::width().

```cpp
{
    // ### usual code to put here is:
    if (input1) {
        l = input1->length(); // the computed length of this Var
        w = input1->width();  // the computed width
    } else
        l = w = 0;
}
```

`StaticInitializer PLearn::SoftSoftMaxVariable::_static_initializer_` [static]

Reimplemented from PLearn::BinaryVariable.

Definition at line 96 of file SoftSoftMaxVariable.h.

`Mat PLearn::SoftSoftMaxVariable::logH_mat` [private]

Definition at line 129 of file SoftSoftMaxVariable.h.

1.7.4
