PLearn 0.1

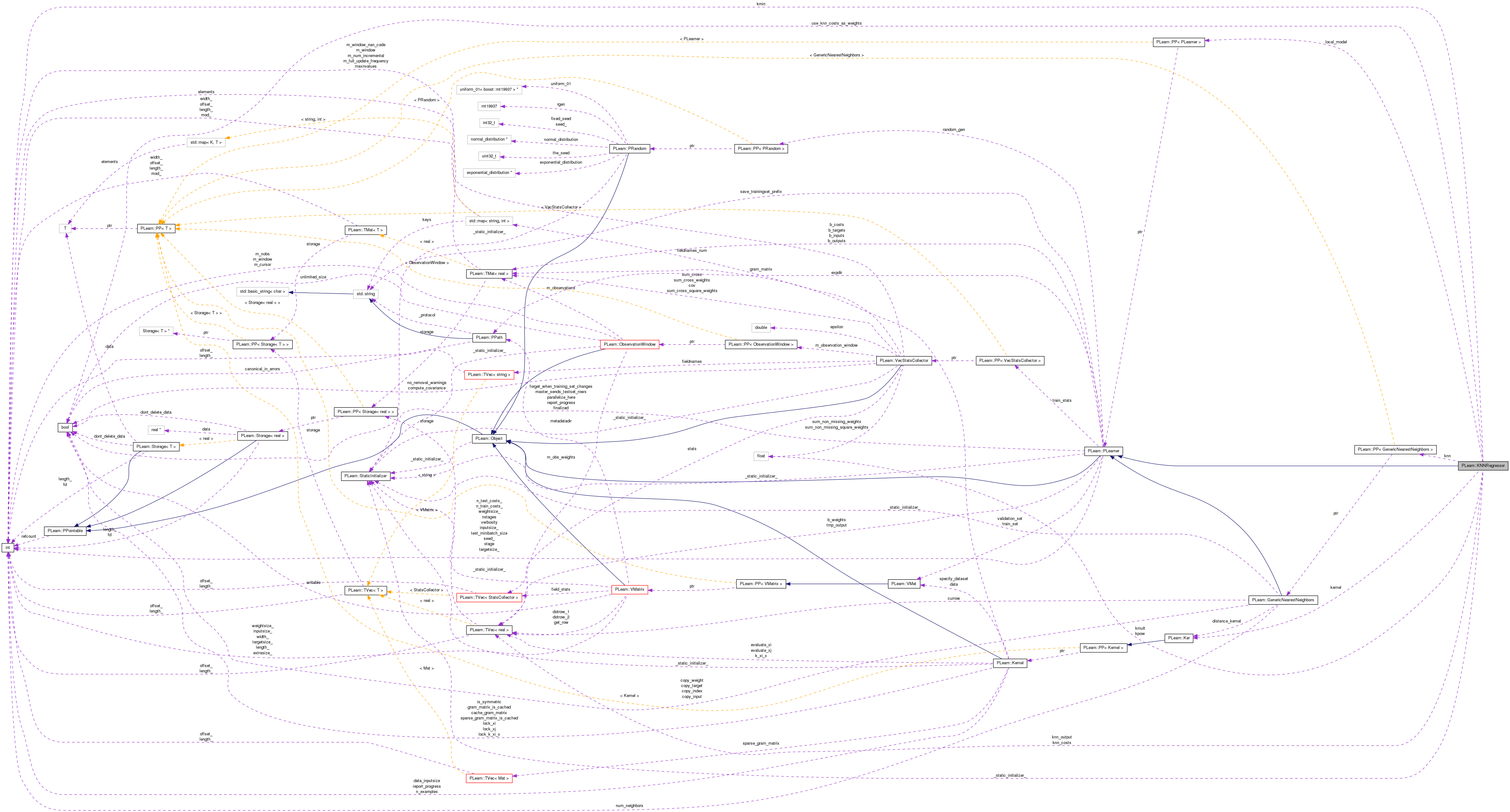

This class provides a simple multivariate regressor based upon an enclosed K-nearest-neighbors finder (derived from GenericNearestNeighbors; specified with the 'knn' option).

#include <KNNRegressor.h>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | KNNRegressor () | Default constructor. |
| virtual void | build () | Simply calls inherited::build() then build_(). |
| virtual void | makeDeepCopyFromShallowCopy (CopiesMap &copies) | Transforms a shallow copy into a deep copy. |
| virtual string | classname () const | |
| virtual OptionList & | getOptionList () const | |
| virtual OptionMap & | getOptionMap () const | |
| virtual RemoteMethodMap & | getRemoteMethodMap () const | |
| virtual KNNRegressor * | deepCopy (CopiesMap &copies) const | |
| virtual void | setTrainingSet (VMat training_set, bool call_forget=true) | Overridden to call knn->setTrainingSet. |
| virtual int | outputsize () const | Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and options). |
| virtual void | forget () | Forwarded to knn. |
| virtual void | train () | Forwarded to knn. |
| virtual void | computeOutput (const Vec &input, Vec &output) const | Computes the output from the input. |
| virtual void | computeCostsFromOutputs (const Vec &input, const Vec &output, const Vec &target, Vec &costs) const | Computes the costs from already computed output. |
| virtual bool | computeConfidenceFromOutput (const Vec &input, const Vec &output, real probability, TVec< pair< real, real > > &intervals) const | Delegates to the local model if one is specified; not implemented otherwise. (One could easily return the standard error of the mean, weighted by the kernel measure; still to do.) |
| virtual TVec< std::string > | getTestCostNames () const | Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method). |
| virtual TVec< std::string > | getTrainCostNames () const | Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats. |

Static Public Member Functions

| Type | Member | Description |
|---|---|---|
| static string | _classname_ () | |
| static OptionList & | _getOptionList_ () | |
| static RemoteMethodMap & | _getRemoteMethodMap_ () | |
| static Object * | _new_instance_for_typemap_ () | |
| static bool | _isa_ (const Object *o) | |
| static void | _static_initialize_ () | |
| static const PPath & | declaringFile () | |

Public Attributes

| Type | Member | Description |
|---|---|---|
| PP< GenericNearestNeighbors > | knn | The K-nearest-neighbors finder to use (default is an ExhaustiveNearestNeighbors with an EpanechnikovKernel, lambda=1). |
| int | kmin | Minimum number of neighbors to use (default=5). |
| real | kmult | Multiplicative factor on n^kpow to determine the number of neighbors to use (default=0). |
| real | kpow | Power of the number of training examples n used to determine the number of neighbors (default=0.5). |
| bool | use_knn_costs_as_weights | Whether to weigh each of the K neighbors by the kernel evaluations obtained from the costs coming out of the 'knn' object (default=true). |
| Ker | kernel | If specified, the 'use_knn_costs_as_weights' option is disregarded and this kernel is used to weight the observations. |
| PP< PLearner > | local_model | Train a local regression model from the K neighbors, weighted by the kernel evaluations. |

Static Public Attributes

| Type | Member | Description |
|---|---|---|
| static StaticInitializer | _static_initializer_ | |

Static Protected Member Functions

| Type | Member | Description |
|---|---|---|
| static void | declareOptions (OptionList &ol) | Declares this class' options. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| Vec | knn_output | Internal use: temporary buffer for knn output. |
| Vec | knn_costs | Internal use: temporary buffer for knn costs. |

Private Types

| Type | Member | Description |
|---|---|---|
| typedef PLearner | inherited | |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| void | build_ () | This does the actual building. |

This class provides a simple multivariate regressor based upon an enclosed K-nearest-neighbors finder (derived from GenericNearestNeighbors; specified with the 'knn' option).

The class contains several options to determine the number of neighbors to use (K). This number always overrides the option 'num_neighbors' that may have been specified in the GenericNearestNeighbors utility object. The number of neighbors is computed as

K = max(kmin, kmult*(n^kpow)),

where 'kmin', 'kmult', and 'kpow' are options, and 'n' is the number of examples in the training set.
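The rule above can be sketched as a free function. `numNeighbors` is a hypothetical helper, not part of PLearn; it mirrors the expression used in `setTrainingSet()`, including the conversion of `kmult*n^kpow` to `int` (which truncates) before taking the maximum with `kmin`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper, not a PLearn API. It mirrors the expression
//   max(kmin, int(kmult * pow(double(n), double(kpow))))
// used in KNNRegressor::setTrainingSet().
int numNeighbors(int n, int kmin, double kmult, double kpow)
{
    // build_() rejects kmin <= 0, so assume a valid configuration here.
    assert(n >= 0 && kmin > 0);
    // The int conversion truncates kmult * n^kpow toward zero.
    const int k_from_n =
        static_cast<int>(kmult * std::pow(static_cast<double>(n), kpow));
    return std::max(kmin, k_from_n);
}
```

With the defaults (kmin=5, kmult=0), `numNeighbors(1000, 5, 0.0, 0.5)` is 5 whatever n is. With kmult=1 it becomes int(sqrt(1000)) = 31, while `numNeighbors(10, 5, 1.0, 0.5)` falls back to kmin = 5 because int(sqrt(10)) = 3.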

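The only cost output by this class is 'mse', computed by computeCostsFromOutputs as powdistance(output, target, 2), i.e. the sum of squared differences between the output and the target.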

If the option 'use_knn_costs_as_weights' is true (the default), the costs coming from the 'knn' object are assumed to be kernel evaluations for each nearest neighbor. These are used as weights to combine the neighbors' targets (a weighted average, unless a 'local_model' is given). (NOTE: it is important to use a kernel that computes a SIMILARITY MEASURE, and not a DISTANCE MEASURE; the default EpanechnikovKernel has the proper behavior.) If the option is false, equal weights are used (equivalent to a rectangular window). Alternatively, a different weighting kernel may be specified with the 'kernel' option; when set, it takes precedence over 'use_knn_costs_as_weights'.
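The weighting rules can be sketched without PLearn types as follows. The `Neighbor` struct, `neighborWeight`, and `weightedAverage` are hypothetical names, and the kernel is passed as a `std::function` for illustration rather than as a PLearn `Ker`. The precedence (explicit kernel, then knn cost, then uniform weight), the treatment of missing weights as zero, the multiplication with the existing sample weight, and the all-zeros output when the total weight falls below 1e-6 follow `computeOutput()`; the fallback for all-zero knn costs is omitted here.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical neighbor record: what the knn object reports per neighbor.
struct Neighbor {
    std::vector<double> input;
    std::vector<double> target;
    double weight;    // sample weight from the training set
    double knn_cost;  // kernel evaluation reported by the knn object
};

using Kernel = std::function<double(const std::vector<double>&,
                                    const std::vector<double>&)>;

// Weight precedence: explicit kernel, then knn cost, then uniform.
double neighborWeight(const Neighbor& nb, const std::vector<double>& x,
                      const Kernel& kernel, bool use_knn_costs_as_weights)
{
    double w = 1.0;  // rectangular window
    if (kernel)
        w = kernel(nb.input, x);
    else if (use_knn_costs_as_weights)
        w = nb.knn_cost;
    if (std::isnan(w))  // missing weights count as zero
        w = 0.0;
    return w * nb.weight;  // patch the existing sample weight
}

// Weighted average of the neighbors' targets.
std::vector<double> weightedAverage(const std::vector<Neighbor>& nbs,
                                    const std::vector<double>& x,
                                    const Kernel& kernel,
                                    bool use_knn_costs_as_weights)
{
    assert(!nbs.empty());
    const std::size_t d = nbs.front().target.size();
    std::vector<double> w(nbs.size());
    double total = 0.0;
    for (std::size_t i = 0; i < nbs.size(); ++i) {
        w[i] = neighborWeight(nbs[i], x, kernel, use_knn_costs_as_weights);
        total += w[i];
    }
    std::vector<double> out(d, 0.0);
    if (total < 1e-6)  // same threshold as computeOutput()
        return out;
    for (std::size_t i = 0; i < nbs.size(); ++i)
        for (std::size_t j = 0; j < d; ++j)
            out[j] += nbs[i].target[j] * (w[i] / total);
    return out;
}
```

For two neighbors with targets 1 and 3 and knn costs 1 and 3, the cost-weighted output is 2.5, whereas uniform weights give 2.0.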

A local weighted regression model may be trained at each test point by specifying a 'local_model'. For instance, to perform local linear regression, you may use a LinearRegressor for this purpose.
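To illustrate what a local model does at a single test point, the following sketch fits a weighted straight line to one-dimensional neighbors and evaluates it at the query. It is a hand-written weighted least-squares solver standing in for a LinearRegressor, not PLearn code; `localLinearPredict` is a hypothetical name and the degeneracy threshold is an arbitrary choice for the sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: weighted least-squares fit of y = a + b*x on the
// K neighbors (x, y, w), evaluated at the test point x0.
double localLinearPredict(const std::vector<double>& x,
                          const std::vector<double>& y,
                          const std::vector<double>& w, double x0)
{
    assert(x.size() == y.size() && x.size() == w.size());
    double sw = 0, swx = 0, swy = 0, swxx = 0, swxy = 0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        sw += w[i];
        swx += w[i] * x[i];
        swy += w[i] * y[i];
        swxx += w[i] * x[i] * x[i];
        swxy += w[i] * x[i] * y[i];
    }
    assert(sw > 0);
    // Solve the 2x2 weighted normal equations for intercept a and slope b.
    const double denom = sw * swxx - swx * swx;
    // Degenerate case (all neighbors share one x): use the weighted mean.
    if (std::fabs(denom) < 1e-12)
        return swy / sw;
    const double b = (sw * swxy - swx * swy) / denom;
    const double a = (swy - b * swx) / sw;
    return a + b * x0;
}
```

Unlike the plain weighted average, the fitted line can extrapolate: neighbors lying exactly on y = 2x + 1 yield 9 at x0 = 4, whatever their (positive) weights.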

Definition at line 86 of file KNNRegressor.h.

typedef PLearner PLearn::KNNRegressor::inherited [private]

Reimplemented from PLearn::PLearner.

Definition at line 88 of file KNNRegressor.h.

PLearn::KNNRegressor::KNNRegressor ( )

Default constructor.

Definition at line 92 of file KNNRegressor.cc.

```cpp
: knn(new ExhaustiveNearestNeighbors(new EpanechnikovKernel(), false)),
  kmin(5), kmult(0.0), kpow(0.5),
  use_knn_costs_as_weights(true), kernel(), local_model()
{ }
```

string PLearn::KNNRegressor::_classname_ ( ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 90 of file KNNRegressor.cc.

OptionList & PLearn::KNNRegressor::_getOptionList_ ( ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 90 of file KNNRegressor.cc.

RemoteMethodMap & PLearn::KNNRegressor::_getRemoteMethodMap_ ( ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 90 of file KNNRegressor.cc.

bool PLearn::KNNRegressor::_isa_ ( const Object * o ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 90 of file KNNRegressor.cc.

Object * PLearn::KNNRegressor::_new_instance_for_typemap_ ( ) [static]

Reimplemented from PLearn::Object.

Definition at line 90 of file KNNRegressor.cc.

void PLearn::KNNRegressor::_static_initialize_ ( ) [static]

Reimplemented from PLearn::PLearner.

Definition at line 90 of file KNNRegressor.cc.

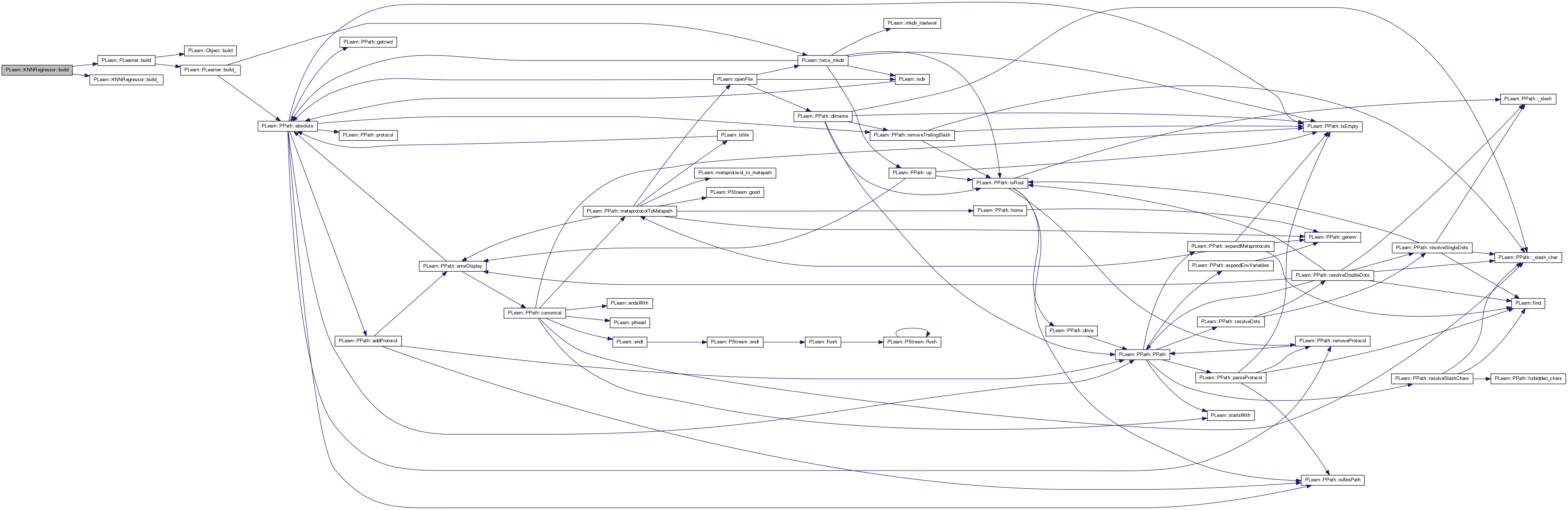

void PLearn::KNNRegressor::build ( ) [virtual]

Simply calls inherited::build() then build_().

Reimplemented from PLearn::PLearner.

Definition at line 155 of file KNNRegressor.cc.

References PLearn::PLearner::build(), and build_().

```cpp
{
    inherited::build();
    build_();
}
```

void PLearn::KNNRegressor::build_ ( ) [private]

This does the actual building.

Reimplemented from PLearn::PLearner.

Definition at line 145 of file KNNRegressor.cc.

References kmin, knn, and PLERROR.

Referenced by build().

```cpp
{
    if (!knn)
        PLERROR("KNNRegressor::build_: the 'knn' option must be specified");

    if (kmin <= 0)
        PLERROR("KNNRegressor::build_: the 'kmin' option must be strictly positive");
}
```

string PLearn::KNNRegressor::classname ( ) const [virtual]

Reimplemented from PLearn::Object.

Definition at line 90 of file KNNRegressor.cc.

bool PLearn::KNNRegressor::computeConfidenceFromOutput ( const Vec & input, const Vec & output, real probability, TVec< pair< real, real > > & intervals ) const [virtual]

Delegates to the local model if one is specified; otherwise it is not implemented and returns false (for now; to be fixed). One could easily return the standard error of the mean, weighted by the kernel measure; this is still to do.

Reimplemented from PLearn::PLearner.

Definition at line 302 of file KNNRegressor.cc.

References local_model.

```cpp
{
    if (! local_model)
        return false;

    // Assume that the local model has been trained; don't re-train it
    return local_model->computeConfidenceFromOutput(input, output, probability, intervals);
}
```
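The description above mentions, as a to-do, returning the standard error of the kernel-weighted mean when no local model is given. The sketch below is one possible reading of that idea for a scalar target and is not PLearn behavior: it uses the weighted mean, the weighted (biased) variance, and the effective sample size n_eff = (Σw)²/Σw², and it takes the normal quantile `z` as an argument instead of deriving it from `probability`. `weightedMeanInterval` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical: symmetric interval mean +/- z * SE for a scalar target,
// where SE^2 = weighted variance / effective sample size.
std::pair<double, double> weightedMeanInterval(const std::vector<double>& y,
                                               const std::vector<double>& w,
                                               double z)
{
    assert(y.size() == w.size() && !y.empty());
    double sw = 0, sww = 0, swy = 0;
    for (std::size_t i = 0; i < y.size(); ++i) {
        sw += w[i];
        sww += w[i] * w[i];
        swy += w[i] * y[i];
    }
    assert(sw > 0);
    const double mean = swy / sw;
    double var = 0;  // weighted (biased) variance around the weighted mean
    for (std::size_t i = 0; i < y.size(); ++i)
        var += w[i] * (y[i] - mean) * (y[i] - mean);
    var /= sw;
    const double n_eff = sw * sw / sww;  // effective sample size
    const double se = std::sqrt(var / n_eff);
    return {mean - z * se, mean + z * se};
}
```

With equal weights this reduces to the usual standard error based on the population variance; other variance estimators (for example, with a small-sample correction) would be equally reasonable choices.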

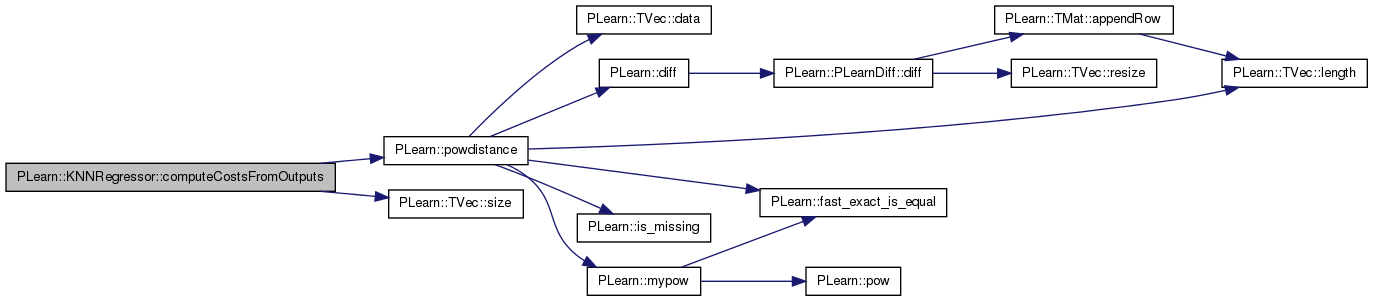

void PLearn::KNNRegressor::computeCostsFromOutputs ( const Vec & input, const Vec & output, const Vec & target, Vec & costs ) const [virtual]

Computes the costs from already computed output.

Implements PLearn::PLearner.

Definition at line 295 of file KNNRegressor.cc.

References PLASSERT, PLearn::powdistance(), and PLearn::TVec< T >::size().

```cpp
{
    PLASSERT( costs.size() == 1 );
    costs[0] = powdistance(output,target,2);
}
```
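For reference, here is a standalone equivalent of the single cost, assuming PLearn's `powdistance(a, b, 2)` returns the sum of squared differences without taking a root. Under that assumption, the 'mse' cost of a multivariate target is summed, not averaged, over output dimensions. `squaredErrorCost` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical standalone equivalent of powdistance(output, target, 2):
// the sum over output dimensions of the squared differences.
double squaredErrorCost(const std::vector<double>& output,
                        const std::vector<double>& target)
{
    assert(output.size() == target.size());
    double cost = 0.0;
    for (std::size_t i = 0; i < output.size(); ++i) {
        const double diff = output[i] - target[i];
        cost += diff * diff;
    }
    return cost;
}
```

For output (1, 2) and target (3, 5) this gives 4 + 9 = 13.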

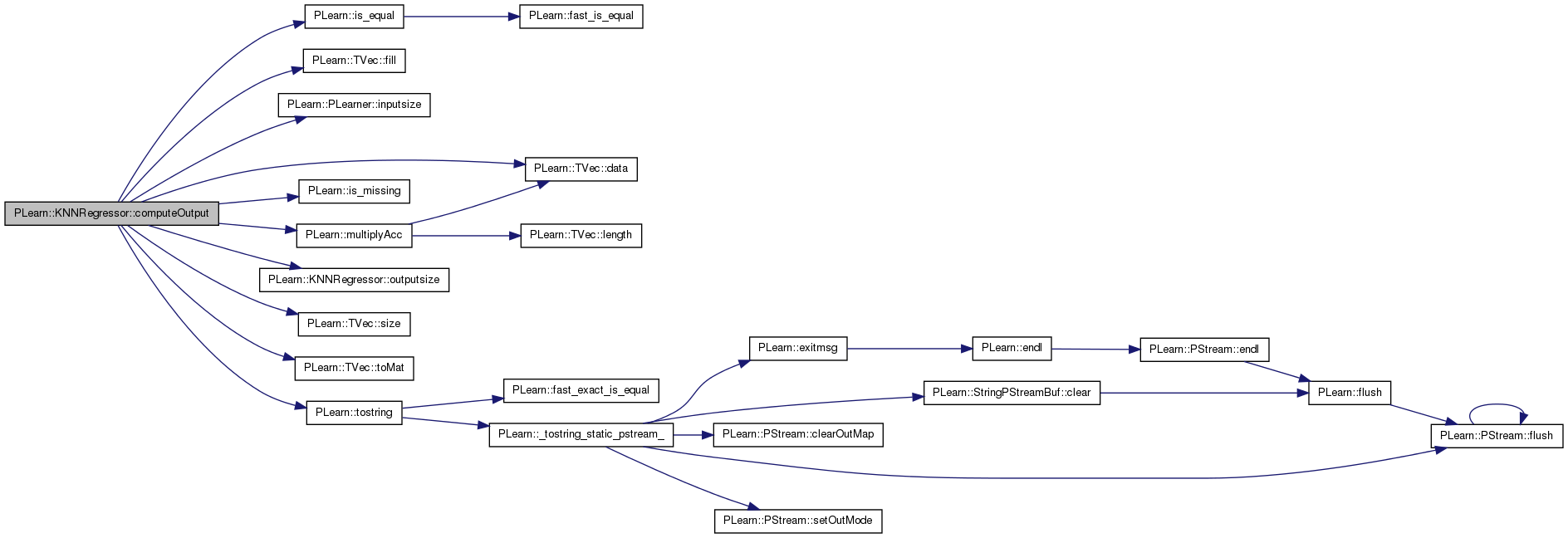

void PLearn::KNNRegressor::computeOutput ( const Vec & input, Vec & output ) const [virtual]

Computes the output from the input.

Reimplemented from PLearn::PLearner.

Definition at line 212 of file KNNRegressor.cc.

References PLearn::TVec< T >::data(), PLearn::TVec< T >::fill(), i, PLearn::PLearner::inputsize(), PLearn::is_equal(), PLearn::is_missing(), kernel, knn, knn_costs, knn_output, local_model, PLearn::multiplyAcc(), n, outputsize(), PLASSERT, PLWARNING, PLearn::PStream::pretty_ascii, PLearn::TVec< T >::size(), PLearn::TVec< T >::toMat(), PLearn::tostring(), use_knn_costs_as_weights, and w.

```cpp
{
    PLASSERT( output.size() == outputsize() );

    // Start by computing the nearest neighbors
    Vec knn_targets;  // not used by knn
    knn->computeOutputAndCosts(input, knn_targets, knn_output, knn_costs);

    // A little sanity checking on the knn costs: make sure that they are not
    // all zero, as this certainly indicates a wrong kernel
    bool has_non_zero_costs = false;
    for (int i=0, n=knn_costs.size() ; i<n && !has_non_zero_costs ; ++i)
        has_non_zero_costs = !is_missing(knn_costs[i]) && !is_equal(knn_costs[i], 0.0);
    if (! has_non_zero_costs) {
        string input_str = tostring(input, PStream::pretty_ascii);
        PLWARNING("KNNRegressor::computeOutput: all %d neighbors have zero similarity with\n"
                  "input vector %s;\n"
                  "check the similarity kernel bandwidth. Replacing them by uniform weights.",
                  knn_costs.size(), input_str.c_str());
        knn_costs.fill(1.0);
    }

    // For each neighbor, the KNN object outputs the following:
    // 1) input vector
    // 2) target vector
    // 3) the weight (in all cases)
    // We shall patch the weight of each neighbor (observation) to reflect
    // the effect of the kernel weighting
    const int inputsize = input.size();
    const int outputsize = output.size();
    const int weightoffset = inputsize+outputsize;
    const int rowwidth = weightoffset+1;
    real* knn_output_data = knn_output.data();
    real total_weight = 0.0;
    for (int i=0, n=knn->num_neighbors; i<n; ++i, knn_output_data += rowwidth) {
        real w;
        if (kernel) {
            Vec cur_input(inputsize, knn_output_data);
            w = kernel(cur_input, input);
        }
        else if (use_knn_costs_as_weights)
            w = knn_costs[i];
        else
            w = 1.0;
        if (is_missing(w))
            w = 0.0;

        // Patch the existing weight
        knn_output_data[weightoffset] *= w;
        total_weight += knn_output_data[weightoffset];
    }

    // If total weight is too small, make the output all zeros
    if (total_weight < 1e-6) {
        output.fill(0.0);
        return;
    }

    // Now compute the output per se
    if (! local_model) {
        // If no local model was requested, simply perform a weighted
        // average of the nearest-neighbors
        output.fill(0.0);
        knn_output_data = knn_output.data();
        for (int i=0, n=knn->num_neighbors; i<n; ++i, knn_output_data+=rowwidth) {
            Vec cur_output(outputsize, knn_output_data+inputsize);
            multiplyAcc(output, cur_output,
                        knn_output_data[weightoffset] / total_weight);
        }
    }
    else {
        // Reinterpret knn_output as a training set and use local model
        Mat training_data = knn_output.toMat(knn->num_neighbors, rowwidth);
        VMat training_set(training_data);
        training_set->defineSizes(inputsize, outputsize, 1 /* weightsize */);
        local_model->setTrainingSet(training_set, true /* forget */);
        local_model->setTrainStatsCollector(new VecStatsCollector());
        local_model->train();
        local_model->computeOutput(input,output);
    }
}
```
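The flat `knn_output` buffer holds one row per neighbor, laid out as [input | target | weight]; this is why the loops advance the data pointer by `rowwidth` and read the weight at `weightoffset`. The sketch below reproduces that indexing and the fallback to uniform weights when every knn cost is zero or missing, using `std::vector<double>` in place of PLearn's `Vec` and NaN for missing values. `uniformIfAllZero` and `neighborWeightAt` are hypothetical names, and the exact comparison with 0.0 stands in for PLearn's tolerance-based `is_equal`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Replace all-zero (or all-missing) knn costs by uniform weights, as
// computeOutput() does after its warning. Returns true if it did so.
bool uniformIfAllZero(std::vector<double>& knn_costs)
{
    for (double c : knn_costs)
        if (!std::isnan(c) && c != 0.0)
            return false;  // at least one usable cost: keep them all
    for (double& c : knn_costs)
        c = 1.0;
    return true;
}

// Read the sample weight of neighbor i from a flat buffer whose rows are
// laid out as [input (inputsize) | target (targetsize) | weight (1)].
double neighborWeightAt(const std::vector<double>& knn_output,
                        std::size_t i, std::size_t inputsize,
                        std::size_t targetsize)
{
    const std::size_t weightoffset = inputsize + targetsize;
    const std::size_t rowwidth = weightoffset + 1;
    assert((i + 1) * rowwidth <= knn_output.size());
    return knn_output[i * rowwidth + weightoffset];
}
```

With two-dimensional inputs and a scalar target, each row has width 4 and the weight of neighbor i sits at index 4*i + 3.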

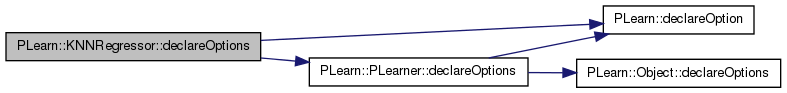

void PLearn::KNNRegressor::declareOptions ( OptionList & ol ) [static, protected]

Declares this class' options.

Reimplemented from PLearn::PLearner.

Definition at line 102 of file KNNRegressor.cc.

References PLearn::OptionBase::buildoption, PLearn::declareOption(), PLearn::PLearner::declareOptions(), kernel, kmin, kmult, knn, kpow, local_model, and use_knn_costs_as_weights.

```cpp
{
    declareOption(
        ol, "knn", &KNNRegressor::knn, OptionBase::buildoption,
        "The K-nearest-neighbors finder to use (default is an\n"
        "ExhaustiveNearestNeighbors with an EpanechnikovKernel, lambda=1)");

    declareOption(
        ol, "kmin", &KNNRegressor::kmin, OptionBase::buildoption,
        "Minimum number of neighbors to use (default=5)");

    declareOption(
        ol, "kmult", &KNNRegressor::kmult, OptionBase::buildoption,
        "Multiplicative factor on n^kpow to determine number of neighbors to\n"
        "use (default=0)");

    declareOption(
        ol, "kpow", &KNNRegressor::kpow, OptionBase::buildoption,
        "Power of the number of training examples to determine number of\n"
        "neighbors (default=0.5)");

    declareOption(
        ol, "use_knn_costs_as_weights", &KNNRegressor::use_knn_costs_as_weights,
        OptionBase::buildoption,
        "Whether to weigh each of the K neighbors by the kernel evaluations,\n"
        "obtained from the costs coming out of the 'knn' object (default=true)");

    declareOption(
        ol, "kernel", &KNNRegressor::kernel, OptionBase::buildoption,
        "Disregard the 'use_knn_costs_as_weights' option, and use this kernel\n"
        "to weight the observations. If this object is not specified\n"
        "(default), and the 'use_knn_costs_as_weights' is false, the\n"
        "rectangular kernel is used.");

    declareOption(
        ol, "local_model", &KNNRegressor::local_model, OptionBase::buildoption,
        "Train a local regression model from the K neighbors, weighted by\n"
        "the kernel evaluations. This is carried out at each test point.");

    // Now call the parent class' declareOptions
    inherited::declareOptions(ol);
}
```

static const PPath& PLearn::KNNRegressor::declaringFile ( ) [inline, static]

Reimplemented from PLearn::PLearner.

Definition at line 144 of file KNNRegressor.h.


KNNRegressor * PLearn::KNNRegressor::deepCopy ( CopiesMap & copies ) const [virtual]

Reimplemented from PLearn::PLearner.

Definition at line 90 of file KNNRegressor.cc.

void PLearn::KNNRegressor::forget ( ) [virtual]

Forwarded to knn.

Reimplemented from PLearn::PLearner.

Definition at line 200 of file KNNRegressor.cc.

OptionList & PLearn::KNNRegressor::getOptionList ( ) const [virtual]

Reimplemented from PLearn::Object.

Definition at line 90 of file KNNRegressor.cc.

OptionMap & PLearn::KNNRegressor::getOptionMap ( ) const [virtual]

Reimplemented from PLearn::Object.

Definition at line 90 of file KNNRegressor.cc.

RemoteMethodMap & PLearn::KNNRegressor::getRemoteMethodMap ( ) const [virtual]

Reimplemented from PLearn::Object.

Definition at line 90 of file KNNRegressor.cc.

TVec< string > PLearn::KNNRegressor::getTestCostNames ( ) const [virtual]

Returns the names of the costs computed by computeCostsFromOutputs (and thus the test method).

Implements PLearn::PLearner.

Definition at line 314 of file KNNRegressor.cc.

```cpp
{
    static TVec<string> costs(1);
    costs[0] = "mse";
    return costs;
}
```

TVec< string > PLearn::KNNRegressor::getTrainCostNames ( ) const [virtual]

Returns the names of the objective costs that the train method computes and for which it updates the VecStatsCollector train_stats.

Implements PLearn::PLearner.

Definition at line 321 of file KNNRegressor.cc.

```cpp
{
    return TVec<string>();
}
```

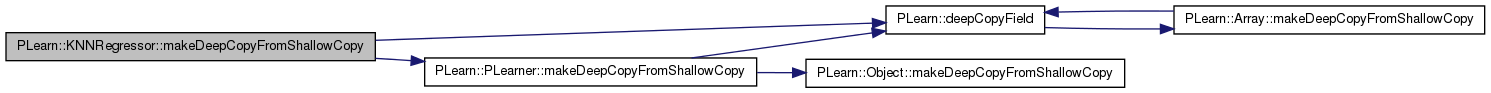

void PLearn::KNNRegressor::makeDeepCopyFromShallowCopy ( CopiesMap & copies ) [virtual]

Transforms a shallow copy into a deep copy.

Reimplemented from PLearn::PLearner.

Definition at line 162 of file KNNRegressor.cc.

References PLearn::deepCopyField(), kernel, knn, knn_costs, knn_output, local_model, and PLearn::PLearner::makeDeepCopyFromShallowCopy().

```cpp
{
    deepCopyField(knn_output, copies);
    deepCopyField(knn_costs, copies);
    deepCopyField(knn, copies);
    deepCopyField(kernel, copies);
    deepCopyField(local_model, copies);
    inherited::makeDeepCopyFromShallowCopy(copies);
}
```

int PLearn::KNNRegressor::outputsize ( ) const [virtual]

Returns the size of this learner's output (which typically depends on its inputsize(), targetsize() and options).

Implements PLearn::PLearner.

Definition at line 173 of file KNNRegressor.cc.

References PLearn::PLearner::train_set.

Referenced by computeOutput().

```cpp
{
    return train_set->targetsize();
}
```

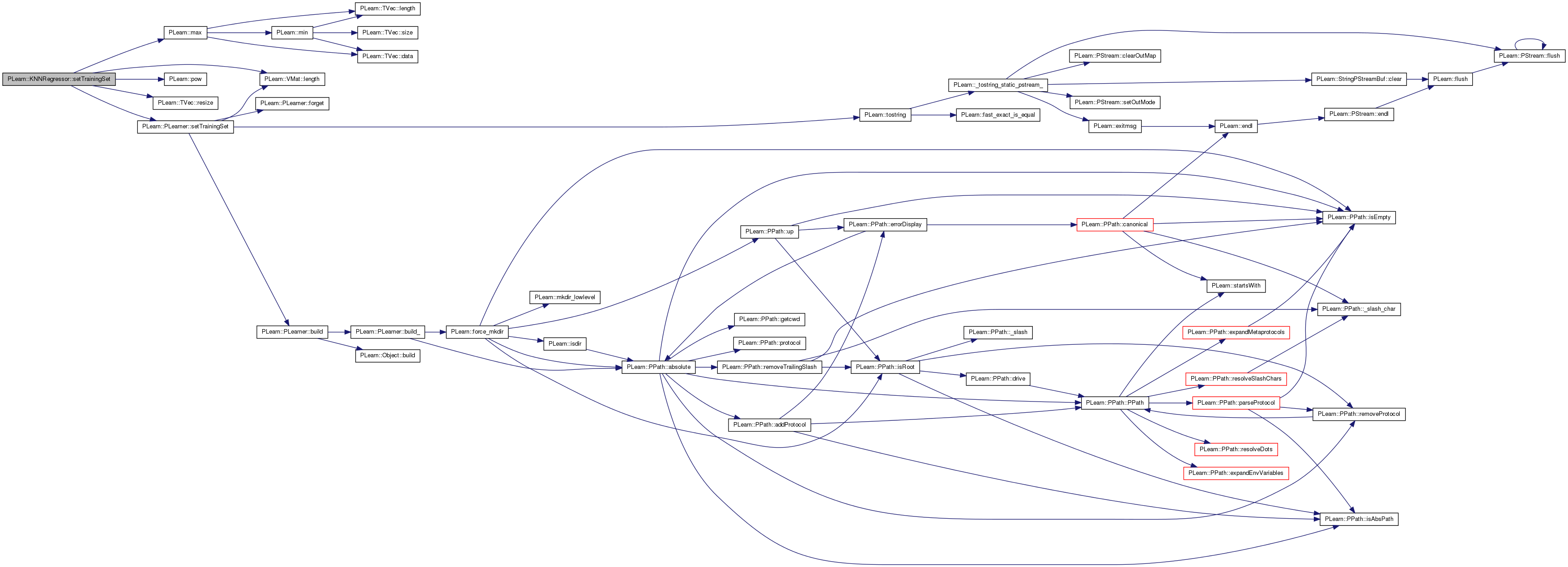

void PLearn::KNNRegressor::setTrainingSet ( VMat training_set, bool call_forget = true ) [virtual]

Overridden to call knn->setTrainingSet.

Reimplemented from PLearn::PLearner.

Definition at line 179 of file KNNRegressor.cc.

References kmin, kmult, knn, knn_costs, knn_output, kpow, PLearn::VMat::length(), PLearn::max(), n, PLASSERT, PLearn::pow(), PLearn::TVec< T >::resize(), and PLearn::PLearner::setTrainingSet().

```cpp
{
    PLASSERT( knn );
    inherited::setTrainingSet(training_set,call_forget);

    // Now we carry out a little bit of tweaking on the embedded knn:
    // - ask to report input+target+weight
    // - set number of neighbors
    // - set training set (which performs a build if necessary)
    int n = training_set.length();
    int num_neighbors = max(kmin, int(kmult*pow(double(n), double(kpow))));
    knn->num_neighbors = num_neighbors;
    knn->copy_input = true;
    knn->copy_target = true;
    knn->copy_weight = true;
    knn->copy_index = false;
    knn->setTrainingSet(training_set,call_forget);

    knn_costs.resize(knn->nTestCosts());
    knn_output.resize(knn->outputsize());
}
```

void PLearn::KNNRegressor::train ( ) [virtual]

Forwarded to knn.

Reimplemented from PLearn::PLearner.

Definition at line 144 of file KNNRegressor.h.

Ker PLearn::KNNRegressor::kernel

Disregard the 'use_knn_costs_as_weights' option, and use this kernel to weight the observations.

If this object is not specified (the default) and 'use_knn_costs_as_weights' is false, the rectangular kernel is used.

Definition at line 123 of file KNNRegressor.h.

Referenced by computeOutput(), declareOptions(), and makeDeepCopyFromShallowCopy().

int PLearn::KNNRegressor::kmin

Minimum number of neighbors to use (default=5).

Definition at line 105 of file KNNRegressor.h.

Referenced by build_(), declareOptions(), and setTrainingSet().

real PLearn::KNNRegressor::kmult

Multiplicative factor on n^kpow to determine the number of neighbors to use (default=0).

Definition at line 109 of file KNNRegressor.h.

Referenced by declareOptions(), and setTrainingSet().

PP< GenericNearestNeighbors > PLearn::KNNRegressor::knn

The K-nearest-neighbors finder to use (default is an ExhaustiveNearestNeighbors with an EpanechnikovKernel, lambda=1).

Definition at line 102 of file KNNRegressor.h.

Referenced by build_(), computeOutput(), declareOptions(), forget(), makeDeepCopyFromShallowCopy(), setTrainingSet(), and train().

Vec PLearn::KNNRegressor::knn_costs [mutable, protected]

Internal use: temporary buffer for knn costs.

Definition at line 95 of file KNNRegressor.h.

Referenced by computeOutput(), makeDeepCopyFromShallowCopy(), and setTrainingSet().

Vec PLearn::KNNRegressor::knn_output [mutable, protected]

Internal use: temporary buffer for knn output.

Definition at line 92 of file KNNRegressor.h.

Referenced by computeOutput(), makeDeepCopyFromShallowCopy(), and setTrainingSet().

real PLearn::KNNRegressor::kpow

Power of the number of training examples used to determine the number of neighbors (default=0.5).

Definition at line 113 of file KNNRegressor.h.

Referenced by declareOptions(), and setTrainingSet().

PP< PLearner > PLearn::KNNRegressor::local_model

Train a local regression model from the K neighbors, weighted by the kernel evaluations.

This is carried out at each test point.

Definition at line 127 of file KNNRegressor.h.

Referenced by computeConfidenceFromOutput(), computeOutput(), declareOptions(), and makeDeepCopyFromShallowCopy().

bool PLearn::KNNRegressor::use_knn_costs_as_weights

Whether to weigh each of the K neighbors by the kernel evaluations obtained from the costs coming out of the 'knn' object (default=true).

Definition at line 117 of file KNNRegressor.h.

Referenced by computeOutput(), and declareOptions().

1.7.4
